Abstract

While Shannon’s mutual information has widespread applications in many disciplines, for practical applications it is often difficult to calculate its value accurately for high-dimensional variables because of the curse of dimensionality. This article focuses on effective approximation methods for evaluating mutual information in the context of neural population coding. For large but finite neural populations, we derive several information-theoretic asymptotic bounds and approximation formulas that remain valid in high-dimensional spaces. We prove that optimizing the population density distribution based on these approximation formulas is a convex optimization problem that allows efficient numerical solutions. Numerical simulation results confirmed that our asymptotic formulas were highly accurate for approximating mutual information for large neural populations. In special cases, the approximation formulas are exactly equal to the true mutual information. We also discuss techniques of variable transformation and dimensionality reduction to facilitate computation of the approximations.

1. Introduction

Shannon’s mutual information (MI) provides a quantitative characterization of the association between two random variables by measuring how much knowing one of the variables reduces uncertainty about the other (Shannon, 1948). Information theory has become a useful tool for neuroscience research (Rieke, Warland, de Ruyter van Steveninck, & Bialek, 1997; Borst & Theunissen, 1999; Pouget, Dayan, & Zemel, 2000; Laughlin & Sejnowski, 2003; Brown, Kass, & Mitra, 2004; Quiroga & Panzeri, 2009), with applications to various problems such as sensory coding in the visual system (Eckhorn & Pöpel, 1975; Optican & Richmond, 1987; Atick & Redlich, 1990; McClurkin, Gawne, Optican, & Richmond, 1991; Atick, Li, & Redlich, 1992; Becker & Hinton, 1992; Van Hateren, 1992; Gawne & Richmond, 1993; Tovee, Rolls, Treves, & Bellis, 1993; Bell & Sejnowski, 1997; Lewis & Zhaoping, 2006) and the auditory system (Chechik et al., 2006; Gourévitch & Eggermont, 2007; Chase & Young, 2005).

One major problem encountered in practical applications of information theory is that the exact value of mutual information is often hard to compute in high-dimensional spaces. For example, suppose we want to calculate the mutual information between a random stimulus variable that requires many parameters to specify and the elicited noisy responses of a large population of neurons. In order to accurately evaluate the mutual information between the stimuli and the responses, one has to average over all possible stimulus patterns and over all possible response patterns of the whole population. This averaging quickly leads to a combinatorial explosion as either the stimulus dimension or the population size increases. This problem occurs not only when one computes MI numerically for a given theoretical model but also when one estimates MI empirically from experimental data.

Even when the input and output dimensions are not that high, an MI estimate from experimental data tends to have a positive bias due to limited sample size (Miller, 1955; Treves & Panzeri, 1995). For example, a perfectly flat joint probability distribution implies zero MI, but an empirical joint distribution with fluctuations due to finite data size appears to suggest a positive MI. The error may get much worse as the input and output dimensions increase because a reliable estimate of MI may require exponentially more data points to fill the space of the joint distribution. Various asymptotic expansion methods have been proposed to reduce the bias in an MI estimate (Miller, 1955; Carlton, 1969; Treves & Panzeri, 1995; Victor, 2000; Paninski, 2003). Other estimators of MI have also been studied, such as those based on k-nearest neighbor (Kraskov, Stögbauer, & Grassberger, 2004) and minimal spanning trees (Khan et al., 2007). However, it is not easy for these methods to handle the general situation with high-dimensional inputs and high-dimensional outputs.
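The positive bias described above can be reproduced with a small sketch. It assumes a plug-in (histogram) estimator on two independent, uniformly distributed discrete variables, so the true MI is exactly zero; the bin count, sample size, and function name `plugin_mi` are illustrative choices, and the first-order bias term (Bx − 1)(By − 1)/(2n) is the standard leading correction of the Miller (1955) type, quoted here only as a rough guide.

```python
import numpy as np

def plugin_mi(counts):
    """Plug-in (maximum-likelihood) MI estimate in nats from a 2D count table."""
    p_xy = counts / counts.sum()
    p_x = p_xy.sum(axis=1, keepdims=True)
    p_y = p_xy.sum(axis=0, keepdims=True)
    mask = p_xy > 0  # empty cells contribute 0 * ln 0 = 0
    return float(np.sum(p_xy[mask] * np.log(p_xy[mask] / (p_x @ p_y)[mask])))

rng = np.random.default_rng(0)
n_bins, n_samples = 10, 1000

# X and Y are independent and uniform, so the true MI is exactly zero.
x = rng.integers(n_bins, size=n_samples)
y = rng.integers(n_bins, size=n_samples)
counts = np.zeros((n_bins, n_bins))
np.add.at(counts, (x, y), 1)

mi_hat = plugin_mi(counts)
# Leading-order bias of the plug-in estimate when all bins are occupied.
bias_first_order = (n_bins - 1) ** 2 / (2 * n_samples)
print(f"plug-in MI = {mi_hat:.4f} nats, first-order bias = {bias_first_order:.4f} nats")
```

Because the plug-in estimate is a KL divergence between the empirical joint and the product of its marginals, it cannot be negative, which is why finite-sample fluctuations always push it upward.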

For numerical computation of MI for a given theoretical model, one useful approach is Monte Carlo sampling, a convergent method that may potentially reach arbitrary accuracy (Yarrow, Challis, & Series, 2012). However, its stochastic and inefficient computational scheme makes it unsuitable for many applications. For instance, to optimize the distribution of a neural population for a given set of stimuli, one may want to slightly alter the population parameters and see how the perturbation affects the MI, but a tiny change of MI can be easily drowned out by the inherent noise in the Monte Carlo method.

An alternative approach is to use information-theoretic bounds and approximations to simplify calculations. For example, the Cramér-Rao lower bound (Rao, 1945) tells us that the inverse of Fisher information (FI) is a lower bound to the mean square decoding error of any unbiased decoder. Fisher information is useful for many applications partly because it is often much easier to calculate than MI (see e.g., Zhang, Ginzburg, McNaughton, & Sejnowski, 1998; Zhang & Sejnowski, 1999; Abbott & Dayan, 1999; Bethge, Rotermund, & Pawelzik, 2002; Harper & McAlpine, 2004; Toyoizumi, Aihara, & Amari, 2006).

A link between MI and FI has been studied by several researchers (Clarke & Barron, 1990; Rissanen, 1996; Brunel & Nadal, 1998; Sompolinsky, Yoon, Kang, & Shamir, 2001). Clarke and Barron (1990) first derived an asymptotic formula between the relative entropy and FI for parameter estimation from independent and identically distributed (i.i.d.) observations with suitable smoothness conditions. Rissanen (1996) generalized it in the framework of stochastic complexity for model selection. Brunel and Nadal (1998) presented an asymptotic relationship between the MI and FI in the limit of a large number of neurons. The method was extended to discrete inputs by Kang and Sompolinsky (2001). More general discussions about this also appeared in other papers (e.g., Ganguli & Simoncelli, 2014; Wei & Stocker, 2015). However, for finite population size, the asymptotic formula may lead to large errors, especially for high-dimensional inputs, as detailed in sections 2.2 and 4.1.

In this article, our main goal is to improve FI approximations to MI for finite neural populations, especially for high-dimensional inputs. Another goal is to discuss how to use these approximations to optimize neural population coding. We will present several information-theoretic bounds and approximation formulas and discuss the conditions under which they are established in section 2, with detailed proofs given in the appendix. We also discuss how our approximation formulas are related to other statistical estimators and information-theoretic bounds, such as the Cramér-Rao bound and van Trees’ Bayesian Cramér-Rao bound (see section 3). In order to better apply the approximation formulas in high-dimensional input space, we propose some useful techniques in section 4, including variable transformation and dimensionality reduction, which may greatly reduce the computational complexity for practical applications. Finally, in section 5, we discuss how to use the approximation formulas for optimizing information transfer for neural population coding.

2. Bounds and Approximations for Mutual Information in Neural Population Coding

2.1. Mutual Information and Notations.

Suppose the input x is a K-dimensional vector, x = (x1, x2, …, xK)T, and the outputs of N neurons are denoted by a vector, r = (r1, r2, …, rN)T. In this article, we denote random variables by uppercase letters (e.g., random variables X and R) in contrast to their vector values x and r. The MI I(X; R) (denoted as I below) between X and R is defined by Cover and Thomas (2006):

| (2.1) |

where , , , , and the integration symbol ∫ is for the continuous variables and can be replaced by the summation symbol ∑ for discrete variables. The probability density function (p.d.f.) of r, p(r), satisfies

| (2.2) |

The MI I in equation 2.1 may also be expressed equivalently as

| (2.3) |

where H(X) is the entropy of random variable X,

| (2.4) |

and ⟨·⟩ denotes expectation:

| (2.5) |

| (2.6) |

| (2.7) |

Next, we introduce the following notations,

| (2.8) |

| (2.9) |

| (2.10) |

and

| (2.11) |

| (2.12) |

where det (·) denotes the matrix determinant, and

| (2.13) |

| (2.14) |

| (2.15) |

Here J(x) is the FI matrix, which is symmetric and positive-semidefinite, and ′ and ″ denote the first and second derivatives with respect to x, respectively; that is, l′(r∣x) = ∂l (r∣x) /∂x and q″(x) = ∂2 ln p (x)/∂x∂xT. If p(r∣x) is twice differentiable for x, then

| (2.16) |

We denote the Kullback-Leibler (KL) divergence as

| (2.17) |

and define

| (2.18) |

as the ω neighborhoods of x and its complementary set as

| (2.19) |

where ω is a positive number.

2.2. Information-Theoretic Asymptotic Bounds and Approximations.

In the large N limit, Brunel and Nadal (1998) proposed an asymptotic relationship I ~ IF between MI and FI and gave a proof in the case of one-dimensional input. Another proof is given by Sompolinsky et al. (2001), although there appears to be an error in their proof when a replica trick is used (see equation B1 in their paper; their equation B5 does not follow directly from the replica trick). For large but finite N, I ≃ IF is usually a good approximation as long as the inputs are low dimensional. For high-dimensional inputs, the approximation may no longer be valid. For example, suppose p(r∣x) is a normal distribution with mean ATx and covariance matrix IN and p(x) is a normal distribution with mean μ and covariance matrix ∑,

| (2.20) |

where A = [a1, a2, …, aN] is a deterministic K × N matrix and IN is the N × N identity matrix. The MI I is given by (see Verdu, 1986; Guo, Shamai, & Verdu, 2005, for details)

| (2.21) |

If rank (J(x)) < K, then IF = −∞. Notice that here, J(x) = AAT. When a = a1 = … = aN and ∑ = IK, by equation 2.21 and the matrix determinant lemma, we have

| (2.22) |

and by equation 2.11,

| (2.23) |

which is obviously incorrect as an approximation to I. For high-dimensional inputs, the determinant det (J(x)) may become close to zero in practical applications. When the FI matrix J(x) becomes degenerate, the regularity condition ensuring the Cramér-Rao paradigm of statistics is violated (Amari & Nakahara, 2005), in which case using IF as a proxy for I incurs large errors.

In the following, we will show that IG is a better approximation of I for high-dimensional inputs. For instance, for the above example, we can verify that

| (2.24) |

which is exactly equal to the MI I given in equation 2.21.
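The degenerate example above can be checked numerically. The sketch below assumes the following readings of the notation, which are our assumptions rather than quotations: IF = ½⟨ln det(J(x)/(2πe))⟩ + H(X), IG = ½⟨ln det(G(x)/(2πe))⟩ + H(X), G(x) = J(x) + P(x), and P(x) = −∂² ln p(x)/∂x∂xT, together with the standard gaussian-channel formula I = ½ ln det(IN + AT∑A). The function names and the sizes K and N are illustrative.

```python
import numpy as np

def gaussian_channel_mi(A, Sigma):
    """Exact MI (nats) for r = A^T x + n, n ~ N(0, I_N), x ~ N(mu, Sigma)."""
    N = A.shape[1]
    return 0.5 * np.linalg.slogdet(np.eye(N) + A.T @ Sigma @ A)[1]

def fisher_approximations(A, Sigma):
    """Return (I_F, I_G) under the assumed definitions stated in the lead-in."""
    K = A.shape[0]
    J = A @ A.T                      # Fisher information matrix, J(x) = A A^T
    P = np.linalg.inv(Sigma)         # P(x) = -d^2 ln p(x) / dx dx^T for a normal prior
    H = 0.5 * np.linalg.slogdet(2 * np.pi * np.e * Sigma)[1]   # entropy of X
    c = K * np.log(2 * np.pi * np.e)
    I_G = 0.5 * (np.linalg.slogdet(J + P)[1] - c) + H
    if np.linalg.matrix_rank(J) < K:
        I_F = -np.inf                # det J = 0, so ln det J diverges
    else:
        I_F = 0.5 * (np.linalg.slogdet(J)[1] - c) + H
    return I_F, I_G

K, N = 5, 50
a = np.ones((K, 1)) / np.sqrt(K)     # unit-norm column, a^T a = 1
A_degenerate = np.tile(a, (1, N))    # a_1 = ... = a_N, so rank(J) = 1 < K
Sigma = np.eye(K)

I = gaussian_channel_mi(A_degenerate, Sigma)
I_F, I_G = fisher_approximations(A_degenerate, Sigma)
print(I, I_G, I_F)
```

With a unit-norm a and ∑ = IK, both I and IG reduce to ½ ln(1 + N), whereas IF diverges because J(x) has rank 1.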

2.2.1. Regularity Conditions.

First, we consider the following regularity conditions for p(x) and p(r∣x):

C1: p(x) and p(r∣x) are twice continuously differentiable for almost every , where is a convex set; G(x) is positive definite, and ∥ G−1 (x)∥ = O(N^(−1)), where ∥·∥ denotes the Frobenius norm of a matrix. The following conditions hold:

| (2.25a) |

| (2.25b) |

| (2.25c) |

| (2.25d) |

and there exists an ω = ω (x) > 0 for such that

| (2.25e) |

where O indicates the big-O notation.

C2: The following condition is satisfied,

| (2.26a) |

for , and there exists η > 1 such that

| (2.26b) |

for all ϵ ∈ (0,1/2), and with p(x) > 0, where denotes the probability of r given x.

The regularity conditions C1 and C2 are needed to prove theorems in later sections. They are expressed in mathematical forms that are convenient for our proofs, although their meanings may seem opaque at first glance. In the following, we will examine these conditions more closely. We will use specific examples to make interpretations of these conditions more transparent.

Remark 1. In this article, we assume that the probability distributions p(x) and p(r∣x) are piecewise twice continuously differentiable. This is because we need to use Fisher information to approximate mutual information, and Fisher information requires derivatives that make sense only for continuous variables. Therefore, the methods developed in this article apply only to continuous input variables or stimulus variables. For discrete input variables, we need alternative methods for approximating MI, which we will address in a separate publication.

Conditions 2.25a and 2.25b state that the first and the second derivatives of q(x) = ln p(x) have finite values for any given . These two conditions are easily satisfied by commonly encountered probability distributions because they only require finite derivatives within , the set of allowable inputs, and derivatives do not need to be finitely bounded.

Remark 2. Conditions 2.25c to 2.26a constrain how the first and the second derivatives of l(r∣x) = ln p(r∣x) scale with N, the number of neurons. These conditions are easily met when p(r∣x) is conditionally independent or when the noises of different neurons are independent, that is, .

We emphasize that it is possible to satisfy these conditions even when p(r∣x) is not independent or when the noises are correlated, as we show later. Here we first examine these conditions closely, assuming independence. For simplicity, our demonstration that follows is based on a one-dimensional input variable (K = 1). The conclusions are readily generalizable to higher-dimensional inputs (K > 1) because K is fixed and does not affect the scaling with N.

Assuming independence, we have with l(rn∣x) = ln p(rn∣x), and the left-hand side of equation 2.25c becomes

| (2.27) |

where the final result contains only two terms with even numbers of duplicated indices, while all other terms in the expansion vanish because any unmatched or lone index k (from n1, n2, n3, n4) should yield a vanishing average:

| (2.28) |

Thus, condition 2.25c is satisfied as long as ⟨l′(rn∣x)2⟩rn∣x and ⟨l′(rn∣x)4⟩rn∣x are bounded by some finite numbers, say, a and b, respectively, because now equation 2.27 should scale as N^(−2) (aN(N – 1) + bN) = O(1). For instance, a gaussian distribution always meets this requirement because the averages of the second and fourth powers are proportional to the second and fourth moments, which are both finite. Note that the argument above works even if ⟨l′(rn∣x)4⟩rn∣x is not finitely bounded but scales as O(N).
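The index-counting argument can be verified exactly on a toy case. In the sketch below, independent ±1 variables stand in for the zero-mean terms l′(rn∣x); this substitution is purely illustrative. For such variables the fourth moment of the sum is 3N(N − 1) + N: the paired-index terms plus the all-equal-index terms. The combinatorial factor 3 does not affect the scaling with N.

```python
from itertools import product

def fourth_moment_of_sum(N):
    """Exact <(u_1 + ... + u_N)^4> for independent u_n = +/-1 with equal probability."""
    total = sum(sum(signs) ** 4 for signs in product((-1, 1), repeat=N))
    return total / 2 ** N

for N in (2, 4, 8, 12):
    exact = fourth_moment_of_sum(N)
    # 3 * N * (N - 1) paired-index terms (each <u^2><u^2> = 1) plus N terms <u^4> = 1.
    predicted = 3 * N * (N - 1) + N
    # After multiplying by N^(-2) the moment stays bounded, i.e., it is O(1).
    print(N, exact, predicted, exact / N ** 2)
```

Terms containing a lone index vanish because each variable has zero mean, so only the two kinds of terms counted above survive the average.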

Similarly, under the assumption of independence, the left-hand side of equation 2.25d becomes

| (2.29) |

where, in the second step, the only remaining terms are the squares, while all other terms in the expansion with n ≠ m have vanished because ⟨l″(rn∣x) – ⟨l″(rn∣x)⟩rn∣x⟩rn∣x = 0. Thus, condition 2.25d is satisfied as long as ⟨l″(rn∣x)⟩rn∣x and ⟨l″(rn∣x)2⟩rn∣x are bounded so that equation 2.29 scales as N^(−2) · N = N^(−1).

Condition 2.25e is easily satisfied under the assumption of independence. It is easy to show that this condition holds when l″(rn∣x) is bounded.

Condition 2.26a can be examined with similar arguments used for equations 2.27 and 2.29. Assuming independence, we rewrite the left-hand side of equation 2.26a as

| (2.30) |

where z = 2(m + 1) ≥ 4 is an even number. Any term in the expansion with an unmatched index nk should vanish, as in the cases of equations 2.27 and 2.29. When ⟨l″ (rn∣x)⟩rn∣x and ⟨l″(rn∣x)2⟩rn∣x are bounded, the leading term with respect to scaling with N is the product of squares, as shown at the end of equation 2.30, because all the other nonvanishing terms increase more slowly with N. Thus equation 2.30 should scale as N^(−z) · N^(m+1) = N^(−m−1), which trivially satisfies condition 2.26a.

In summary, conditions 2.25c to 2.26a are easy to meet when p(r∣x) is independent. It is sufficient to satisfy these conditions when the averages of the first and second derivatives of l(r∣x) = ln p(r∣x), as well as the averages of their powers, are bounded by finite numbers for all the neurons.

Remark 3. For neurons with correlated noises, if there exists an invertible transformation that maps r to such that becomes conditionally independent, then conditions C1 and C2 are easily met in the space of the new variables by the discussion in remark 2. This situation is best illustrated by the familiar example of a population of neurons with correlated noises that obey a multivariate gaussian distribution:

| (2.31) |

where ∑ is an N × N invertible covariance matrix, and g = (g1(x; θ1),…, gN(x; θN)) describes the mean responses with θn being the parameter vector. Using the following transformation,

| (2.32) |

| (2.33) |

we obtain the independent distribution:

| (2.34) |
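A whitening transformation of this kind can be sketched as follows. We assume the map multiplies both the responses and the mean responses by the symmetric inverse square root of ∑, which is one standard choice and may differ in detail from equations 2.32 and 2.33. The constant-correlation covariance, the parameter values, and the slope vector `dg` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
N, a, c = 6, 2.0, 0.3

# Assumed constant-correlation covariance: variance a on the diagonal, a*c off it.
Sigma = a * ((1 - c) * np.eye(N) + c * np.ones((N, N)))

# Symmetric inverse square root Sigma^{-1/2} from the eigendecomposition.
evals, evecs = np.linalg.eigh(Sigma)
W = evecs @ np.diag(evals ** -0.5) @ evecs.T

# Whitened noise covariance W Sigma W^T should be the identity.
whitened_cov = W @ Sigma @ W.T

# Hypothetical tuning-curve slopes dg_n/dx at one stimulus value (K = 1).
dg = rng.normal(size=N)
J_original = dg @ np.linalg.solve(Sigma, dg)   # g'^T Sigma^{-1} g'
J_whitened = (W @ dg) @ (W @ dg)               # sum of independent per-unit terms
print(np.allclose(whitened_cov, np.eye(N)), J_original, J_whitened)
```

The Fisher information is the same before and after whitening, but in the new variables it is a plain sum over units, which is the form used in the scaling arguments of remark 2.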

In the special case when the correlation coefficient between any pair of neurons is a constant c, −1 < c < 1, the noise covariance can be written as

| (2.35) |

where a > 0 is a constant, IN is the N × N identity matrix, and . The desired transformation in equations 2.32 and 2.33 is given explicitly by

| (2.36) |

where

| (2.37) |

The new response variables defined in equations 2.32 and 2.33 now read:

| (2.38) |

| (2.39) |

Now we have the derivatives:

| (2.40) |

| (2.41) |

where and are finite as long as ∂gn/∂x and ∂2gn/∂x2 are finite. Conditions C1 and C2 are satisfied when the derivatives and their powers are finitely bounded as shown before.

The example above shows explicitly that it is possible to meet conditions C1 and C2 even when the noises of different neurons are correlated. More generally, if a nonlinear transformation exists that maps correlated random variables into independent variables, then by similar argument, conditions C1 and C2 are satisfied when the derivatives of the log likelihood functions and their powers in the new variables are finitely bounded. Even when the desired transformation does not exist or is unknown, it does not necessarily imply that conditions C1 and C2 must be violated.

While the exact mathematical conditions for the existence of the desired transformation are unclear, let us consider a specific example. If a joint probability density function can be morphed smoothly and reversibly into a flat or constant density in a cube (hypercube), which is a special case of an independent distribution, then this morphing is the desired transformation. Here we may replace the flat distribution by any known independent distribution and the argument above should still work. So the desired transformation may exist under rather general conditions.

For correlated random variables, one may use algorithms such as independent component analysis to find an invertible linear mapping that makes the new random variables as independent as possible (Bell & Sejnowski, 1997) or use neural networks to find related nonlinear mappings (Huang & Zhang, 2017). These methods do not directly apply to the problem of testing conditions C1 and C2 because they work for a given network size N and further development is needed to address the scaling behavior in the large network limit N → ∞.

Finally, we note that the value of the MI of the transformed independent variables is the same as the MI of the original correlated variables because of the invariance of MI under invertible transformation of marginal variables. A related discussion is in theorem 3, which involves a transformation of the input variables rather than a transformation of the output variables as needed here.

Remark 4. Condition 2.26b is satisfied if a positive number δ and a positive integer m exist such that

| (2.42) |

for all , where

| (2.43) |

and A < B means that the matrix A – B is negative definite. A proof is as follows.

First note that in equation 2.43, if η → 1 or m → ∞, then . Following Markov’s inequality, condition C2 and equation A.19 in the appendix, for the complementary set of , , we have

| (2.44) |

where

| (2.45) |

Define the set

| (2.46) |

Then it follows from Markov’s inequality and equation 2.42 that

| (2.47) |

Hence, we get

which yields condition 2.26b.

Condition 2.42 is satisfied if there exists a positive number ς such that

| (2.48) |

for all and . This is because

| (2.49) |

Here notice that det (G (x))^(1/2) = O (N^(K/2)) (see equation A.23).

Inequality 2.48 holds if p(r∣x) is conditionally independent, namely, , with

| (2.50) |

for all and . Consider the inequality where the equality holds when . If there is only one extreme point at for , then generally it is easy to find a set that satisfies equation 2.50, so that equation 2.26b holds.

2.2.2. Asymptotic Bounds and Approximations for Mutual Information.

Let

| (2.51) |

and it follows from conditions C1 and C2 that

| (2.52) |

Moreover, if p(r∣x) is conditionally independent, then by an argument similar to the discussion in remark 2, we can verify that the condition ξ = O (N^(−1)) is easily met.

In the following we state several conclusions about the MI; their proofs are given in the appendix.

Lemma 1. If condition C1 holds, then the MI I has an asymptotic upper bound for integer N,

| (2.53) |

Moreover, if equations 2.25c and 2.25d are replaced by

| (2.54a) |

| (2.54b) |

for some τ ∈ (0,1), where o indicates the little-o notation, then the MI has the following asymptotic upper bound for integer N:

| (2.55) |

Lemma 2. If conditions C1 and C2 hold and ξ = O (N^(−1)), then the MI has an asymptotic lower bound for integer N,

| (2.56) |

Moreover, if condition C1 holds but equations 2.25c and 2.25d are replaced by 2.54a and 2.54b, and inequality 2.26b in C2 also holds for η > 0, then the MI has the following asymptotic lower bound for integer N:

| (2.57) |

Theorem 1. If conditions C1 and C2 hold and ξ = O (N^(−1)), then the MI has the following asymptotic equality for integer N:

| (2.58) |

For more relaxed conditions, suppose condition C1 holds but equations 2.25c and 2.25d are replaced by 2.54a and 2.54b, and inequality 2.26b in C2 also holds for η > 0, then the MI has an asymptotic equality for integer N:

| (2.59) |

Theorem 2. Suppose J(x) and G(x) are symmetric and positive-definite. Let

| (2.60) |

| (2.61) |

Then

| (2.62) |

where Tr (·) denotes the matrix trace; moreover, if P(x) is positive-semidefinite, then

| (2.63) |

But if

| (2.64) |

for some β > 0, then

| (2.65) |

Remark 5. In general, we need only to assume that p(x) and p(r∣x) are piecewise twice continuously differentiable for . In this case, lemmas 1 and 2 and theorem 1 can still be established. For more general cases, such as discrete or continuous inputs, we have also derived a general approximation formula for MI from which we can easily derive the formula for IG (this will be discussed in a separate paper).

2.3. Approximations of Mutual Information in Neural Populations with Finite Size.

In the preceding section, we provided several bounds, including both lower and upper bounds, and asymptotic relationships for the true MI in the large N (network size) limit. Now, we discuss effective approximations to the true MI in the case of finite N. Here we consider only the case of continuous inputs (we will discuss the case of discrete inputs in another paper).

Theorem 1 tells us that under suitable conditions, we can use IG to approximate I for a large but finite N (e.g., N ⪢ K), that is,

| (2.66) |

Moreover, by theorem 2, we know that if ς ≈ 0 with positive-semidefinite P(x) or ς1 ≈ 0 holds (see equations 2.60 and 2.64), then by equations 2.63, 2.65, and 2.66, we have

| (2.67) |

Define

| (2.68) |

| (2.69) |

where is positive-definite and Q (x) is a symmetric matrix depending on x and ∥Q (x) ∥ = O(1). Suppose . If we replace IG by in theorem 1, then we can prove equations 2.58 and 2.59 in a manner similar to the proof of that theorem. Consider a special case where ∥P(x)∥ → 0, det (J(x)) = O(1) (e.g., rank (J(x)) < K) and ∥G−1 (x) ∥ ≠ O(N^(−1)); then we can no longer use the asymptotic formulas in theorem 1. However, if we substitute for G(x) by choosing an appropriate Q (x) such that is positive-definite and , then we can use equations 2.58 and 2.59 as the asymptotic formulas.

If we assume G(x) and are positive-definite and

| (2.70) |

then similar to the proof of theorem 2, we have

| (2.71) |

and

For large N, we usually have .

It is more convenient to redefine the following quantities:

| (2.72) |

| (2.73) |

| (2.74) |

and

| (2.75) |

Notice that if p(x) is twice differentiable for x and

| (2.76) |

then

| (2.77) |

For example, if p(x) is a normal distribution, , then

| (2.78) |

Similar to the proof of theorem 2, we can prove that

| (2.79) |

where

| (2.80) |

We find that IG is often a good approximation of MI I even for relatively small N. However, we cannot guarantee that P(x) is always positive-semidefinite in equation 2.14, and as a consequence, it may happen that det (G(x)) is very small for small N, G(x) is not positive-definite, and ln (det (G(x))) is not a real number. In this case, IG is not a good approximation to I but IG+ is still a good approximation. Generally, if P(x) is always positive-semidefinite, then IG or IG+ is a better approximation than IF, especially when p(x) is close to a normal distribution.

In the following, we give an example of 1D inputs. High-dimensional inputs are discussed in section 4.1.

2.3.1. A Numerical Comparison for 1D Stimuli.

Consider the Poisson neuron model (see equation 5.7 in section 5.1 for details), in which the tuning curve of the nth neuron, f (x; θn), takes the form of a circular normal or von Mises distribution

| (2.81) |

where x ∈ [−T/2, T/2), θn ∈ [−Tθ/2, Tθ/2], n ϵ {1,2, …, N}, with T = π, Tθ = 1, σf = 0.5, and A = 20, and the centers θ1, θ2, …, θN of the N neurons are uniformly spaced on the interval [−Tθ/2, Tθ/2], that is, θn = (n – 1) dθ – Tθ/2, with dθ = Tθ/(N – 1) and N ≥ 2. Suppose the distribution p(x) of the 1D continuous input x (K = 1) has the form

| (2.82) |

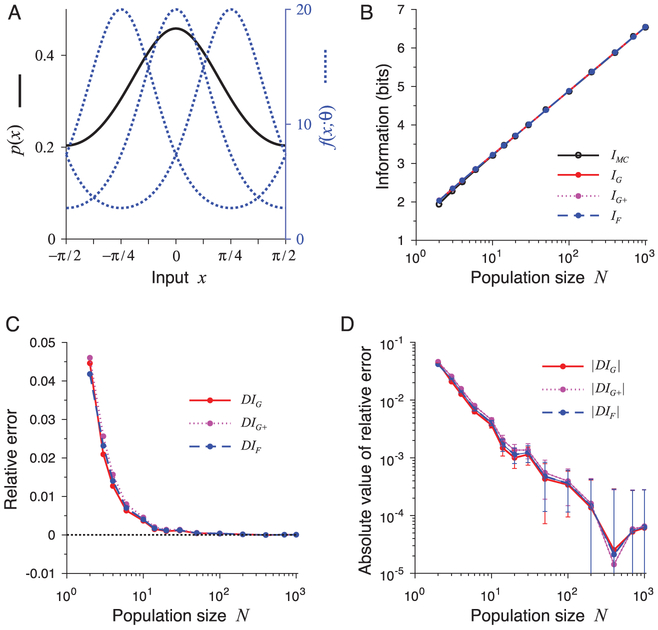

where σp is a constant set to π/4 and Z is the normalization constant. Figure 1A shows graphs of the input distribution p(x) and the tuning curves f (x; θ) with different centers θ = −π/4, 0, π/4.

Figure 1:

A comparison of approximations IMC, IG, IG+, and IF for one-dimensional input stimuli. All of them were almost equally good, even for small population size N. (A) The stimulus distribution p(x) and tuning curves f (x; θ) with different centers θ = −π/4, 0, π/4. (B) The values of IMC, IG, IG+, and IF all increase with neuron number N. (C) The relative errors DIG, DIG+, and DIF for the results in panel B. (D) The absolute values of the relative errors ∣DIG∣, ∣DIG+∣, and ∣DIF∣, with error bars showing standard deviations of repeated trials.

To evaluate the precision of the approximation formulas, we use Monte Carlo (MC) simulation to approximate MI I. For MC simulation, we first sample an input xj by the distribution p(x), then generate the neural response rj by the conditional distribution p(rj∣xj), where j = 1, 2, …, jmax. The value of MI by MC simulation is calculated by

| (2.83) |

where p(rj) is given by

| (2.84) |

and xm = (m – 1) T/M – T/2 for m ϵ {1,2, …, M}.

To evaluate the accuracy of MC simulation, we compute the standard deviation,

| (2.85) |

where

| (2.86) |

| (2.87) |

and Γj,i ϵ {1,2, …, jmax} is the (j, i)th entry of the matrix with samples taken randomly from the integer set {1, 2, …, jmax} by a uniform distribution. Here we set jmax = 5 × 10^5, imax = 100, and M = 10^3.
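The Monte Carlo procedure and its bootstrap standard deviation can be sketched as below. Several ingredients are assumptions made only for illustration: the exact tuning-curve formula in `rates`, the gaussian-shaped prior weights `w`, the discretization of the stimulus onto the grid xm for both sampling and marginalization, and the much smaller values of jmax, imax, and M, chosen so the sketch runs quickly. The estimator averages ln(p(rj∣xj)/p(rj)) over samples, with p(rj) obtained by summing over the grid.

```python
import numpy as np

rng = np.random.default_rng(0)
N, T, T_theta, sigma_f, A_peak = 10, np.pi, 1.0, 0.5, 20.0
M, j_max, i_max = 200, 2000, 50          # far smaller than the values in the text

theta = np.linspace(-T_theta / 2, T_theta / 2, N)       # tuning-curve centers
x_grid = np.arange(M) * T / M - T / 2                    # x_m = (m - 1) T / M - T / 2

def rates(x):
    """Assumed von Mises-shaped tuning curves; returns an array of shape (len(x), N)."""
    d = 2 * np.pi * (x[:, None] - theta[None, :]) / T
    return A_peak * np.exp((np.cos(d) - 1) / sigma_f ** 2)

# Assumed prior: a gaussian bump of width sigma_p, normalized on the grid.
sigma_p = np.pi / 4
w = np.exp(-x_grid ** 2 / (2 * sigma_p ** 2))
w /= w.sum()

f_grid = rates(x_grid)                                   # (M, N)
idx = rng.choice(M, size=j_max, p=w)                     # stimulus indices x_j
r = rng.poisson(f_grid[idx])                             # Poisson responses r_j, (j_max, N)

# ln p(r_j | x_m) up to the term -sum_n ln r_n!, which cancels in the ratio below.
loglik = r @ np.log(f_grid).T - f_grid.sum(axis=1)[None, :]     # (j_max, M)
log_joint = loglik + np.log(w)[None, :]
peak = log_joint.max(axis=1, keepdims=True)
log_p_r = peak[:, 0] + np.log(np.exp(log_joint - peak).sum(axis=1))   # ln p(r_j)

terms = loglik[np.arange(j_max), idx] - log_p_r          # ln of p(r_j | x_j) / p(r_j)
I_mc = terms.mean()

# Bootstrap standard deviation: resample the j_max terms with replacement i_max times.
boot = rng.integers(j_max, size=(j_max, i_max))
I_std = terms[boot].mean(axis=0).std()
print(f"I_MC = {I_mc:.3f} +/- {I_std:.3f} nats")
```

Subtracting the row maximum before exponentiating keeps the marginal p(rj) numerically stable when the population is large and the likelihoods are sharply peaked.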

For different N ∈ {2, 3, 4, 6, 10, 14, 20, 30, 50, 100, 200,400, 700, 1000}, we compare IMC with IG, IG+, and IF, which are illustrated in Figures 1B to 1D. Here we define the relative error of approximation, for example, for IG, as

| (2.88) |

and the relative standard deviation

| (2.89) |

Figure 1B shows how the values of IMC, IG, IG+, and IF change with neuron number N, and Figures 1C and 1D show their relative errors and the absolute values of the relative errors with respect to IMC. From Figures 1B to 1D, we can see that the values of IG, IG+, and IF are all very close to one another and the absolute values of their relative errors are all very small. The absolute values are less than 1% when N ≥ 10 and less than 0.1% when N ≥ 100. However, for the high-dimensional inputs, there will be a big difference between IG, IG+, and IF in many cases (see section 4.1 for more details).

3. Statistical Estimators and Neural Population Decoding

Given the neural response r elicited by the input x, we may infer or estimate the input x from the response. This procedure is sometimes referred to as decoding from the response. We need to choose an efficient estimator or a function that maps the response r to an estimate of the true stimulus x. The maximum likelihood (ML) estimator defined by

| (3.1) |

is known to be efficient in the large N limit. According to the Cramér-Rao lower bound (Rao, 1945), we have the following relationship between the covariance matrix of any unbiased estimator and the FI matrix J (x),

| (3.2) |

where is an unbiased estimation of x from the response r, and A ≥ B means that matrix A – B is positive-semidefinite. Thus,

| (3.3) |

The MI between X and is given by

| (3.4) |

where is the entropy of random variable and is its conditional entropy of random variable given X. Since the maximum entropy probability distribution is gaussian, satisfies

| (3.5) |

Therefore, from equations 3.4 and 3.5, we get

| (3.6) |

The data processing inequality (Cover & Thomas, 2006) states that postprocessing cannot increase information, so that we have

| (3.7) |

Here we cannot directly obtain I ≥ IF as in Brunel and Nadal (1998) when and . The simulation results in Figure 1 also show that IF is not a lower bound of I.

For biased estimators, the van Trees’ Bayesian Cramér-Rao bound (Van Trees & Bell, 2007) provides a lower bound:

| (3.8) |

It follows from equations 2.75, 3.6, and 3.8 that

| (3.9) |

| (3.10) |

| (3.11) |

We may also regard decoding as Bayesian inference. By Bayes’ rule,

| (3.12) |

According to Bayesian decision theory, if we know the response r, then from the prior p(x) and the likelihood p(r∣x) we can infer an estimate of the true stimulus x, for example,

| (3.13) |

which is also called maximum a posteriori (MAP) estimation.
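For a linear gaussian model, the MAP estimate has a closed form that makes the roles of the likelihood and the prior explicit. The sketch below assumes r = ATx + n with unit-variance independent noise and a normal prior with covariance ∑; it also assumes G = J + P, which is our reading of the notation in section 2. All sizes and parameter values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
K, N = 3, 40
A = rng.normal(size=(K, N))                    # r = A^T x + n, n ~ N(0, I_N)
mu = np.zeros(K)
Sigma = np.diag([1.0, 0.5, 2.0])               # prior covariance of x

x_true = rng.multivariate_normal(mu, Sigma)
r = A.T @ x_true + rng.normal(size=N)

J = A @ A.T                                    # Fisher information of the likelihood
P = np.linalg.inv(Sigma)                       # curvature of -ln p(x)
G = J + P                                      # curvature of -ln p(x | r)

# Setting the gradient of ln p(r|x) + ln p(x) to zero gives a linear system.
x_map = np.linalg.solve(G, A @ r + P @ mu)

# Gradient of the log posterior at the MAP estimate (should vanish).
grad = A @ (r - A.T @ x_map) - P @ (x_map - mu)
print(x_true, x_map, np.abs(grad).max())
```

In this special case the posterior is itself normal with covariance equal to the inverse of G, so the same matrix that enters IG also sets the spread of the decoded stimulus around the MAP estimate.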

Consider a loss function for estimation,

| (3.14) |

which is minimized when p(x∣r) reaches its maximum. Now the conditional risk is

| (3.15) |

and the overall risk is

| (3.16) |

Then it follows from equations 2.3 and 3.16 that

| (3.17) |

Comparing equations 2.12, 2.66, and 3.17, we find

| (3.18) |

Hence, maximizing MI I (or IG) means minimizing the overall risk Ro for a fixed H(X). Therefore, we can get the optimal Bayesian inference via optimizing MI I (or IG).

By the Cramér-Rao lower bound, we know that the inverse of the FI matrix, J−1(x), reflects the accuracy of decoding (see equation 3.2). P(x) provides some knowledge about the prior distribution p(x); for example, P−1 (x) is the covariance matrix of input x when p(x) is a normal distribution. ∥P(x)∥ is small for a flat prior (poor prior) and large for a sharp prior (good prior). Hence, if the prior p(x) is flat or poor and the knowledge about the model is rich, then the MI I is governed by the knowledge of the model, which results in a small ς1 (see equation 2.64) and I ≃ IG ≃ IF. Otherwise, the prior knowledge has a great influence on MI I, which results in a large ς1 and I ≃ IG ≄ IF.

4. Variable Transformation and Dimensionality Reduction in Neural Population Coding

For low-dimensional input x and large N, both IG and IF are good approximations of MI I, but for high-dimensional input x, a large value of ς1 may lead to a large error of IF, in which case IG (or IG+ ) is a better approximation. It is difficult to directly apply the approximation formula I ≃ IG when we do not have an explicit expression of p (x) or P (x). For many applications, we do not need to know the exact value of IG and care only about the value of ⟨ln (det (G(x)))⟩x (see section 5). From equations 2.12, 2.22, and 2.78, we know that if p (x) is close to a normal distribution, we can easily approximate P (x) and H(X) to obtain ⟨ln (det (G(x)))⟩x and IG. When p (x) is not a normal distribution, we can employ a technique of variable transformation to make it closer to a normal distribution, as discussed below.

4.1. Variable Transformation.

Suppose is an invertible and differentiable mapping:

| (4.1) |

, and . Let denote the p.d.f. of random variable and

| (4.2) |

Then we have the following conclusions, the proofs of which are given in the appendix.

Theorem 3. The MI is invariant under invertible transformations. More specifically, for the above invertible transformation T, the MI I(X; R) in equation 2.1 is equal to

| (4.3) |

Furthermore, suppose and fulfill the conditions C1, C2 and ξ = O (N−1). Then we have

| (4.4) |

| (4.5) |

where is the entropy of random variable and satisfies

| (4.6) |

and DT (x) denotes the Jacobian matrix of T (x),

| (4.7) |
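The invariance stated in theorem 3 can be checked numerically in a simple setting. The sketch below assumes a linear-gaussian model r = Aᵀx + n with unit-variance noise and a gaussian prior, for which the MI has the closed form ½ ln det(I + ΣJ); the linear map y = Bx stands in for a general invertible T, and all variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
K, N = 3, 50

A = rng.normal(size=(K, N))            # hypothetical encoding matrix
L = rng.normal(size=(K, K))
Sigma_x = L @ L.T + np.eye(K)          # prior covariance of x
J_x = A @ A.T                          # Fisher information for unit noise variance

def gaussian_mi(J, Sigma):
    """MI (nats) of a linear-gaussian channel: 0.5 * ln det(I + Sigma J)."""
    sign, logdet = np.linalg.slogdet(np.eye(J.shape[0]) + Sigma @ J)
    return 0.5 * logdet

# Invertible linear map y = B x, whose Jacobian is B itself.
B = rng.normal(size=(K, K)) + 3.0 * np.eye(K)
B_inv = np.linalg.inv(B)

Sigma_y = B @ Sigma_x @ B.T            # covariance of the transformed input
J_y = B_inv.T @ J_x @ B_inv            # Fisher information with respect to y

mi_x = gaussian_mi(J_x, Sigma_x)
mi_y = gaussian_mi(J_y, Sigma_y)
print(mi_x, mi_y)                      # the two values agree up to rounding
```

The Fisher information picks up the inverse Jacobian on both sides while the prior covariance picks up the Jacobian, so the two factors cancel inside the determinant.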

Corollary 1. Suppose p(r∣x) is a normal distribution,

| (4.8) |

where y = f (BT x) = (y1, y2, …, yK)T, for k = 1, 2, …, K, A is a deterministic K × N matrix, B = [b1, b2, …, bK] is a deterministic invertible matrix, and fk is an invertible and differentiable function. If Y also has a normal distribution, , then

| (4.9) |

where

| (4.10) |

| (4.11) |

| (4.12) |

Remark 6. From corollary 1 and equation 2.78, we know that the approximation accuracy for IG ≃ I(X; R) is improved when we employ an invertible transformation on the input random variable X to make the new random variable Y closer to a normal distribution (see section 4.3).

Consider the eigendecompositions of AAT and ∑f as given by

| (4.13) |

| (4.14) |

where UA and Uf are K × K orthogonal matrices; and are K × K eigenvalue matrices; and and . Then by equations 2.11 and 4.9, we have

| (4.15) |

| (4.16) |

and

| (4.17) |

Now consider two special cases. If , then by equation 4.17, we get

| (4.18) |

If UA = Uf, then

| (4.19) |

Here , . The FI matrices J(x) and P−1(x) become degenerate when and .

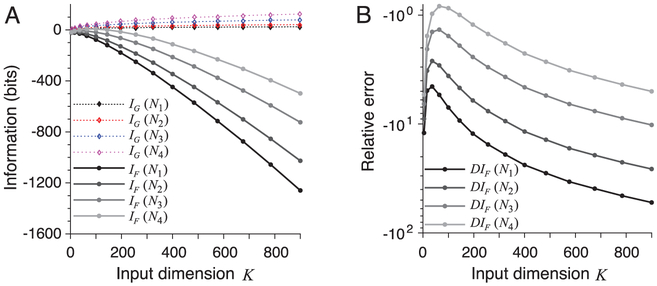

From equations 4.18 and 4.19, we see that if either J(x) or P−1(x) becomes degenerate, then (IF – IG) → −∞. This may happen for high-dimensional stimuli. For a specific example, consider a random matrix A defined as follows. We first generate K × N elements Ak,n (k = 1, 2, …, K; n = 1, 2, …, N) from the standard normal distribution 𝒩(0, 1). Then each column of matrix A is normalized by . We randomly sample M (set to 2 × 10⁴) image patches of size ω × ω from Olshausen’s natural image data set (Olshausen & Field, 1996) as the inputs. Each input image patch is centered by subtracting its mean: . Then let for ∀m ∈ {1, 2, …, M}. Define the matrix X = [x1, x2, …, xM] and compute the eigendecomposition

| (4.20) |

where Ux is a K × K orthogonal matrix and is a K × K eigenvalue matrix with . Define

| (4.21) |

Then

| (4.22) |

The distribution of random variable Y can be approximated by a normal distribution (see section 4.3 for more details). When , we have

| (4.23) |

| (4.24) |

| (4.25) |

The error of approximation IF is given by

| (4.26) |

and the relative error for IF is

| (4.27) |

Figure 2A shows how the values of IG and IF vary with the input dimension K = ω × ω and the number of neurons N (with ω = 2, 4, 6, …, 30 and N = 10⁴, 2 × 10⁴, 5 × 10⁴, 10⁵). The relative error DIF is shown in Figure 2B. The absolute value of the relative error tends to decrease with N but may grow quite large as K increases. In Figure 2B, the largest absolute value of the relative error ∣DIF∣ is greater than 5000%, which occurs when K = 900 and N = 10⁴. Even the smallest ∣DIF∣ is still greater than 80%, which occurs when K = 100 and N = 10⁵. In this example, IF is a poor approximation of MI I, whereas IG and IG+ are strictly equal to the true MI I across all parameters.

Figure 2:

A comparison of approximations IG and IF for different input dimensions. Here IG is always equal to the true MI with IG = IG+ = I(X; R), whereas IF always has nonzero errors. (A) The values IG and IF vary with input dimension K = ω² with ω = 2, 4, 6, …, 30, and the number of neurons N = Ni with N1 = 10⁴, N2 = 2 × 10⁴, N3 = 5 × 10⁴, N4 = 10⁵. (B) The relative error DIF changes with input dimension K for different N.
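The qualitative behavior reported in this example can be reproduced with a small synthetic sketch. It is not the experiment above: it assumes a linear-gaussian model with unit noise variance, unit-norm columns of A, and a gaussian prior whose covariance has a power-law spectrum as a rough stand-in for natural image statistics. The function `compare_approximations` and all parameter values are hypothetical.

```python
import numpy as np

TWO_PI_E = 2.0 * np.pi * np.e

def logdet(M):
    """Log-determinant of a matrix with positive determinant."""
    sign, value = np.linalg.slogdet(M)
    return value

def compare_approximations(K, N, rng):
    # Random encoding matrix with unit-norm columns (normalization is assumed).
    A = rng.normal(size=(K, N))
    A /= np.linalg.norm(A, axis=0, keepdims=True)
    J = A @ A.T                                   # Fisher information, unit noise variance

    # Synthetic prior covariance with a power-law eigenvalue spectrum.
    eigvals = 1.0 / np.arange(1, K + 1) ** 2
    U, _ = np.linalg.qr(rng.normal(size=(K, K)))
    Sigma = (U * eigvals) @ U.T
    Sigma_inv = (U / eigvals) @ U.T               # plays the role of P for a gaussian prior

    h_x = 0.5 * logdet(TWO_PI_E * Sigma)          # entropy of the gaussian prior
    i_true = 0.5 * logdet(np.eye(K) + Sigma @ J)  # exact MI of the gaussian channel
    i_g = h_x + 0.5 * logdet((J + Sigma_inv) / TWO_PI_E)
    i_f = h_x + 0.5 * logdet(J / TWO_PI_E)
    return i_true, i_g, i_f

rng = np.random.default_rng(0)
for K in (4, 16, 64):
    i_true, i_g, i_f = compare_approximations(K, 1000, rng)
    print(f"K={K:3d}  I={i_true:.3f}  I_G={i_g:.3f}  I_F={i_f:.3f}  "
          f"relative error of I_F={(i_f - i_true) / i_true:.2f}")
```

Under these assumptions IG matches the exact MI for every K, while IF falls increasingly far below it as the small eigenvalues of the prior covariance accumulate.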

4.2. Dimensionality Reduction for Asymptotic Approximations.

Suppose x = (x1, … xK)T is partitioned into two sets of components, with

| (4.28) |

| (4.29) |

where , , K1 + K2 = K, K ≥ 2, K1 ≥ 1 and K2 ≥ 1.

Then by Fubini’s theorem, the MI I in equation 2.1 can be written as

| (4.30) |

where p(x1, x2) = p(x) and p(r∣x1, x2) = p(r∣x).

First define

| (4.31a) |

| (4.31b) |

where i, j ϵ {1, 2}, and

| (4.32a) |

| (4.32b) |

Then we have the following results, the proofs of which are given in the appendix.

Theorem 4. Suppose matrices G (x), G1, 1 (x), and G2,2 (x) are positive-definite. If the matrix satisfies

| (4.33) |

with

| (4.34) |

then we have

| (4.35) |

with strict equality if and only if

| (4.36) |

where

| (4.37) |
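The block structure behind this kind of bound can be checked numerically. The sketch below assumes that the matrix Ax has the form G2,2^(−1/2) G2,1 G1,1^(−1) G1,2 G2,2^(−1/2), which is an assumption consistent with the eigenvalue range 0 ≤ λk < 1 used in the proof; it verifies the Schur-complement identity ln det G = ln det G1,1 + ln det G2,2 + ln det(I − Ax) on a random positive-definite matrix.

```python
import numpy as np

rng = np.random.default_rng(1)
K1, K2 = 3, 2
K = K1 + K2

# Random symmetric positive-definite matrix standing in for G(x).
M = rng.normal(size=(K, 4 * K))
G = M @ M.T / (4 * K) + 0.1 * np.eye(K)

G11, G12 = G[:K1, :K1], G[:K1, K1:]
G21, G22 = G[K1:, :K1], G[K1:, K1:]

def logdet(mat):
    sign, value = np.linalg.slogdet(mat)
    return value

# Symmetric inverse square root of G22 via its eigendecomposition.
w, V = np.linalg.eigh(G22)
G22_inv_sqrt = (V / np.sqrt(w)) @ V.T

# Assumed form of A_x; its eigenvalues lie in [0, 1).
A_x = G22_inv_sqrt @ G21 @ np.linalg.solve(G11, G12) @ G22_inv_sqrt
lam = np.linalg.eigvalsh((A_x + A_x.T) / 2.0)

full = logdet(G)
blocks = logdet(G11) + logdet(G22)
correction = np.log1p(-lam).sum()      # ln det(I - A_x) <= 0

print(full, blocks + correction)       # equal up to rounding
print(blocks - full)                   # nonnegative gap from dropping the coupling
```

When the off-diagonal blocks vanish, Ax = 0 and the correction term disappears, which is the equality case.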

Theorem 5. Suppose matrices G (x), G1,1 (x) and P2,2 (x) are positive-definite. If the matrix is positive-semidefinite and satisfies

| (4.38) |

with

| (4.39) |

| (4.40) |

then we have

| (4.41) |

with strict equality if and only if

| (4.42) |

where

| (4.43) |

Corollary 2. If the random variables X1 and X2 are independent so that is a normal distribution, and G (x), G1,1 (x), P1,1 (x) and P2,2 (x) are all positive-definite and satisfy equation 4.38, then we have

| (4.44) |

| (4.45) |

with strict equality if and only if

| (4.46) |

where

| (4.47a) |

| (4.47b) |

| (4.47c) |

Remark 7. Sometimes we are concerned only with calculating the determinant of matrix G(x) with a given p(x). Theorems 4 and 5 provide a dimensionality reduction method for computing G (x) or det (G (x)), by which we need only compute G1,1 (x) and G2,2 (x) separately. To apply the approximation 4.35, we do not need to strictly require ∣Tr (⟨Ax⟩x)∣ ⪡ 1. Instead we need to require only

| (4.48) |

Similarly, the inequality ∣Tr (⟨Bx⟩x)∣ ⪡ 1 can be substituted by

| (4.49) |

By equation 4.44 and the second mean value theorem for integrals, we get

| (4.50) |

for some fixed . When ∥∑x2∥ is small, should be close to the mean: . It follows from theorem 1 and corollary 2 that the approximate relationship holds. However, equation 4.50 implies that is determined only by the first component x1. Hence, there is little impact on information transfer by the minor component (i.e., x2) for the high-dimensional input x. In other words, the information transfer is mainly determined by the first component x1, and we can omit the minor component x2.

4.3. Further Discussion.

Suppose x is a zero-mean vector; if it is not, then let x ← x – ⟨x⟩x. The covariance matrix of x is given by

| (4.51) |

where U is a K × K orthogonal matrix whose kth column is the eigenvector uk of ∑x and ∑ is a diagonal matrix whose diagonal elements are the corresponding eigenvalues— with σ1 ≥ σ2 ≥ … ≥ σK > 0. With the whitening transformation,

| (4.52) |

the covariance matrix of becomes an identity matrix:

| (4.53) |

By the central limit theorem, the distribution of random variable should be closer to a normal distribution than the distribution of the original random variable X; that is, . Using the asymptotic expansion of Laplace’s method (MacKay, 2003), we get

| (4.54) |

| (4.55) |

In principal component analysis (PCA), the data set is modeled by a multivariate gaussian. By a PCA-like whitening transformation equation 4.52, we can use the approximation 4.55 with Laplace’s method, which requires only that the peak be close to the mean and the random variable does not need to be an exact gaussian distribution.
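A minimal sketch of a PCA-like whitening step follows. It assumes the transformation takes the form x̃ = Σ^(−1/2) Uᵀ x, with the diagonal of Σ holding the eigenvalues of the sample covariance; the synthetic Laplace-distributed data and all variable names are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(2)
K, M = 4, 5000

# Correlated, non-gaussian synthetic inputs; each column is one sample.
mixing = rng.normal(size=(K, K))
X = mixing @ rng.laplace(size=(K, M))
X = X - X.mean(axis=1, keepdims=True)          # enforce zero mean

# Eigendecomposition of the sample covariance, eigenvalues in descending order.
Sigma_x = X @ X.T / M
sigma, U = np.linalg.eigh(Sigma_x)
order = np.argsort(sigma)[::-1]
sigma, U = sigma[order], U[:, order]

# Whitening: rotate onto the eigenvectors, then rescale each axis.
X_white = (U / np.sqrt(sigma)).T @ X
cov_white = X_white @ X_white.T / M

print(np.round(cov_white, 6))                  # close to the identity matrix
```

Whitening removes second-order structure only; how close the whitened variable is to a normal distribution still depends on the data.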

By theorem 3, we have

| (4.56) |

where

| (4.57) |

| (4.58) |

| (4.59) |

| (4.60) |

| (4.61) |

Given a K × K orthogonal matrix , we define

| (4.62) |

Then it follows from equations 4.56 to 4.62 that

| (4.63) |

where

| (4.64) |

| (4.65) |

| (4.66) |

Suppose y is partitioned into two sets of components, and

| (4.67) |

| (4.68) |

where K1 + K2 = K, K ≥ 2, K1 ≥ 1 and K2 ≥ 1. Let

| (4.69) |

where

| (4.70) |

When K ⪢ 1, suppose we can find an orthogonal matrix B and K1 that satisfy condition 4.38 in theorem 5 or condition 4.49—that is,

| (4.71) |

| (4.72) |

| (4.73) |

Here matrix By is positive-semidefinite because

| (4.74) |

where

| (4.75) |

and a (r) is a K1-dimensional random vector that satisfies

| (4.76) |

| (4.77) |

Assuming that J1,1 (y) is positive-definite, and ∥J1,2(y)∥ = ∥J2,1(y)∥ = O (N), we have

| (4.78) |

and

| (4.79) |

Hence, if

| (4.80) |

| (4.81) |

then equation 4.71 holds. Notice that the matrix is positive-semidefinite, which is similar to equation 4.74 and . Hence, if

| (4.82) |

then equations 4.80 and 4.81 hold, and so does equation 4.71.

5. Optimization of Information Transfer in Neural Population Coding

5.1. Population Density Distribution of Parameters in Neural Populations.

If p(r∣x) is conditionally independent, we can write

| (5.1) |

where denotes a -dimensional vector for parameters of the nth neuron, and p(rn∣x; θn) is the conditional p.d.f. of the output rn given x. With the definition in equation 2.13, we have the following proposition.

Proposition 1. If p(r∣x) is conditionally independent as in equation 5.1, we have

| (5.2) |

where

| (5.3) |

, , and p(θ) is the population density function of parameter vector θ:

| (5.4) |

with δ (·) being the Dirac delta function.

Proof.

| (5.5) |

□

Remark 8. Proposition 1 shows that J(x) can be regarded as a function of the population density of parameters, p(θ). If the p.d.f. of the input p(x) is given, we can find an appropriate p(θ) to maximize MI I.

For a neuron model with Poisson spikes, we have

| (5.6) |

| (5.7) |

where f (x; θn) is the tuning curve of the nth neuron, n = 1, 2, …, N. Now we have

| (5.8) |

| (5.9) |

Similarly, for a neuron response model with gaussian noise, we have

| (5.10) |

| (5.11) |

where σ is a constant standard deviation. Now we get

| (5.12) |
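The population-density view of the Fisher information can be sketched for a one-dimensional input. The code below assumes the standard single-neuron results J = f′²/f for Poisson spike counts in a unit time window and J = f′²/σ² for additive gaussian noise; the gaussian-shaped tuning curve, its parameters, and the function names are hypothetical. It checks that summing over individual neurons agrees with N times the density-weighted sum over distinct parameter values.

```python
import numpy as np

def tuning(x, centers, width=0.5, peak=10.0, base=0.5):
    """Hypothetical gaussian-shaped tuning curves f(x; theta), theta = center."""
    return base + peak * np.exp(-0.5 * ((x - centers) / width) ** 2)

def tuning_grad(x, centers, width=0.5, peak=10.0):
    """Derivative of the tuning curves with respect to x."""
    return -peak * (x - centers) / width**2 * np.exp(-0.5 * ((x - centers) / width) ** 2)

def fisher_poisson(x, centers):
    """Population Fisher information for independent Poisson neurons."""
    return np.sum(tuning_grad(x, centers) ** 2 / tuning(x, centers))

def fisher_gaussian(x, centers, noise_std=1.0):
    """Population Fisher information for independent gaussian-noise neurons."""
    return np.sum(tuning_grad(x, centers) ** 2) / noise_std**2

# A population of N neurons drawn from three parameter values.
unique_centers = np.array([-1.0, 0.0, 1.0])
counts = np.array([20, 50, 30])
centers = np.repeat(unique_centers, counts)
N = counts.sum()
alpha = counts / N                     # discrete population density p(theta)

x0 = 0.3
J_sum = fisher_poisson(x0, centers)
S_k = tuning_grad(x0, unique_centers) ** 2 / tuning(x0, unique_centers)
J_density = N * np.sum(alpha * S_k)    # N * sum_k alpha_k S(x; theta_k)

print(J_sum, J_density, fisher_gaussian(x0, centers))
```

Because the density enters linearly, changing the weights `alpha` reshapes J(x) without touching the per-neuron terms, which is what makes p(θ) a convenient optimization variable.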

5.2. Optimal Population Distribution for Neural Population Coding.

Suppose p(x) and p(r∣x) fulfill conditions C1 and C2 and equation 5.1. Following the discussion in section 2.2, we define the following objective for maximizing MI I,

| (5.13) |

or, equivalently,

| (5.14) |

where

| (5.15) |

| (5.16) |

| (5.17) |

Here P (x) is given in equation 2.15, and it generally can be substituted by P+ (see equation 2.78).

When ς1 ≈ 0 (see equation 2.64), the objective function, equation 5.13, can be reduced to

| (5.18) |

or, equivalently,

| (5.19) |

The constraint condition for p(θ) is given by

| (5.20) |

However, without further constraints on the neural populations, especially a limit on the peak firing rate, the capacity of the system may grow indefinitely: I(X; R) → ∞. The most common limitation on neural populations is the energy or power constraint. For neuron models with Poisson noise or gaussian noise, a useful constraint is a limitation on the peak power,

| (5.21) |

where Emax > 0 is the peak power. Under this constraint, maximizing IG[p(θ)] or IF[p(θ)] for independent neurons will result in maxx ∣ f (x; θn)∣ = Emax for ∀n = 1,2, …, N.

Another constraint is a limitation on average power. For Poisson neurons given in equation 5.7,

| (5.22) |

which can also be written as

| (5.23) |

and for gaussian noise neurons given in equation 5.11,

| (5.24) |

where Eavg > 0 is the maximum average energy cost.

In equation 5.15, we can approximate the continuous integral by a discrete summation for numerical computation,

| (5.25) |

where the positive integer K1 ≤ N denotes the number of subclasses in the neural population and

| (5.26) |

If we do not know the specific form of p(x) but have M samples, x1, x2, …, xM, which are i.i.d. samples drawn from the distribution p(x), then we can approximate the integral in equation 5.13 by the sample average:

| (5.27) |

Optimizing the objective 5.13 or 5.18 is a convex optimization problem (see the appendix for a proof).

Proposition 2. The functions IG[p(θ)] and IF [p(θ)] are concave in p(θ).

Remark 9. For a low-dimensional input x, we may use equation 5.18 or 5.19 as the objective. Since IG[p(θ)] and IF[p(θ)] are concave functions of p(θ), we can directly use efficient numerical methods to get the optimal solution for small K. However, for high-dimensional input x, we need to use other methods (e.g., Huang & Zhang, 2017).
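The discretized, sample-averaged objective and its concavity can be illustrated with a short sketch. It assumes G(x) = N Σk αk S(x; θk) + P with weights αk on the probability simplex, uses random positive-semidefinite matrices in place of real S(x; θk), takes P as a fixed matrix, and drops terms that do not depend on α; all names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)
M, n_classes, K = 20, 6, 2     # samples of x, neuron subclasses, input dimension
N = 100                        # population size

# Hypothetical matrices S(x_m; theta_k): one PSD K x K matrix per sample and subclass.
V = rng.normal(size=(M, n_classes, K, 3))
S = V @ np.swapaxes(V, -1, -2)             # shape (M, n_classes, K, K)
P = 0.5 * np.eye(K)                        # stand-in for P(x), taken constant here

def objective(alpha):
    """Sample average of 0.5 * ln det(G(x_m)), omitting alpha-independent terms."""
    G = N * np.einsum("k,mkij->mij", alpha, S) + P
    sign, logdet = np.linalg.slogdet(G)    # batched over the M samples
    return 0.5 * logdet.mean()

# Check the concavity inequality along a segment between two densities.
a = rng.dirichlet(np.ones(n_classes))
b = rng.dirichlet(np.ones(n_classes))
concave_ok = all(
    objective(t * a + (1 - t) * b) >= t * objective(a) + (1 - t) * objective(b) - 1e-12
    for t in np.linspace(0.0, 1.0, 11)
)
print(concave_ok)
```

Since the feasible set is a simplex and the objective is concave, any local maximum found by a standard constrained solver is also a global one.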

5.3. Necessary and Sufficient Conditions for Optimal Population Distribution.

Applying the method of Lagrange multipliers for the optimization problems 5.13 and 5.20 yields

| (5.28) |

where λ1 is a constant and λ2(θ) is a function of θ. According to Karush-Kuhn-Tucker (KKT) conditions (Boyd & Vandenberghe, 2004), we have

| (5.29) |

and the necessary condition for optimal population density,

| (5.30) |

It follows from equations 5.29 and 5.30 that

| (5.31) |

| (5.32) |

Since IG[p(θ)] is a concave function of p(θ), equations 5.31 and 5.32 are the necessary and sufficient conditions for the optimization problems 5.13 and 5.20.
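The structure of such optimality conditions can be made concrete for the simplified objective with G(x) = Σk αk S(x; θk), that is, without the prior term. In this assumed setting the gradient with respect to αk is ½⟨Tr(G⁻¹ Sk)⟩, and the weighted sum Σk αk ∂/∂αk equals K/2 exactly, so at an optimum every active component has gradient K/2. The multiplicative update shown is a classical scheme from optimal experimental design, swapped in here for illustration; it is not taken from this article.

```python
import numpy as np

rng = np.random.default_rng(4)
M, n_classes, K = 30, 5, 2

# Hypothetical PSD matrices S(x_m; theta_k).
V = rng.normal(size=(M, n_classes, K, 3))
S = V @ np.swapaxes(V, -1, -2)             # shape (M, n_classes, K, K)

def objective(alpha):
    G = np.einsum("k,mkij->mij", alpha, S)
    sign, logdet = np.linalg.slogdet(G)
    return 0.5 * logdet.mean()

def gradient(alpha):
    """d(objective)/d(alpha_k) = 0.5 * mean_m Tr(G_m^{-1} S_mk)."""
    G = np.einsum("k,mkij->mij", alpha, S)
    G_inv = np.linalg.inv(G)
    return 0.5 * np.einsum("mij,mkji->k", G_inv, S) / M

alpha = np.full(n_classes, 1.0 / n_classes)
grad = gradient(alpha)
weighted_sum = float(alpha @ grad)         # equals K / 2 for any feasible alpha

# One multiplicative step; it preserves nonnegativity and the sum-to-one constraint.
alpha_new = alpha * grad / (0.5 * K)

print(weighted_sum, alpha_new.sum())
print(objective(alpha), objective(alpha_new))
```

Components whose gradient exceeds K/2 gain weight under this update and those below lose weight, which mirrors the complementary-slackness pattern of the KKT conditions.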

5.4. Channel Capacity for Neural Population Coding.

If p(x) is unknown, then by Jensen’s inequality, we have

| (5.33) |

and the equality holds if and only if p(x)−1 det (G(x))1/2 is a constant. Thus,

| (5.34) |

| (5.35) |

assuming .

Let us consider a specific example. Suppose J(x) = J0 is a constant matrix; then it follows from equation 2.12 that

| (5.36) |

According to the maximum entropy probability distribution, we know that maximizing H(X) results in a uniformly distributed p(x). Hence we have G(x) = J0, and p*(x) coincides with the uniform distribution (see equation 5.35). In this case, the maximum IG[p*(x)] can be regarded as the channel capacity for this neural population.

If we consider a constraint on random variables X and assume that the covariance matrix of X is ∑0 and satisfies

| (5.37) |

then it follows from the maximum entropy probability distribution that

| (5.38) |

and the equality holds if and only if the p.d.f. of the input is a normal distribution: . Hence,

| (5.39) |

where IG[p*(x)] is the channel capacity of neural population. Here the equality holds if and only if , which is consistent with equation 5.37.

Furthermore, if ς1 ≈ 0 (see equation 2.64), we have

| (5.40) |

Similarly, we also get

| (5.41) |

| (5.42) |

assuming . Here IF[p*(x)] is the channel capacity of the neural population. The distribution p*(x) coincides with the Jeffreys prior in Bayesian probability (Jeffreys, 1961). In this case, if we suppose the covariance matrix of X is ∑0, then similar to equations 5.38 and 5.39, we can get the channel capacity

| (5.43) |

with .

For another example, consider the Poisson neuron model given in equation 5.7 and suppose the input x is one dimension, K = 1. It follows from equations 5.8 and 5.42 that

| (5.44) |

If p(θ) = δ(θ – θ0), equation 5.44 becomes

| (5.45) |
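For a single Poisson tuning curve in one dimension, a Jeffreys-type density can be computed directly on a grid. The sketch below assumes J(x) = f′(x)²/f(x), so that p*(x) ∝ ∣f′(x)∣/√f(x), and uses a hypothetical sigmoidal tuning curve on a finite interval; for a monotonic f the normalizer has the closed form 2(√f(b) − √f(a)), which serves as a check. The capacity estimate uses ln ∫ √(J(x)/(2πe)) dx, the value attained at equality in the Jensen bound.

```python
import numpy as np

def f(x):
    """Hypothetical monotonic tuning curve (mean spike count)."""
    return 1.0 + 20.0 / (1.0 + np.exp(-x))

def df(x):
    s = 1.0 / (1.0 + np.exp(-x))
    return 20.0 * s * (1.0 - s)

def trapezoid(y, x):
    """Composite trapezoidal rule on a grid."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))

a, b = -6.0, 6.0
x = np.linspace(a, b, 4001)

sqrt_J = np.abs(df(x)) / np.sqrt(f(x))      # square root of the Fisher information
Z = trapezoid(sqrt_J, x)                    # normalizing constant
p_star = sqrt_J / Z                         # Jeffreys-type density on [a, b]

Z_exact = 2.0 * (np.sqrt(f(b)) - np.sqrt(f(a)))
capacity = np.log(Z) - 0.5 * np.log(2.0 * np.pi * np.e)   # nats

print(Z, Z_exact, capacity)
```

The density concentrates where the tuning curve changes quickly relative to its noise level, that is, where ∣f′∣/√f is large.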

Atick and Redlich (1990) presented a redundancy measure to approximate Barlow’s optimality principle:

| (5.46) |

where C(R) is the channel capacity. Here for neural population coding, we have C(R) ≃ IG[p*(x)] and I(X; R) ≃ IG (or C(R) ≃ IF[p*(x)] and I(X; R) ≃ IF). Hence, we can minimize this redundancy measure by choosing an appropriate J(x) to maximize IG (or IF) and simultaneously satisfy equation 5.35 (or 5.42) (see Huang & Zhang, 2017, for further details).

6. Discussion

In this article, we have derived several information-theoretic bounds and approximations for effective approximation of MI in the context of neural population coding for large but finite population size. We have found some regularity conditions under which the asymptotic bounds and approximations hold. Generally these regularity conditions are easy to meet. Special examples that satisfy these conditions include the cases when the likelihood function p(r∣x) for the neural population responses is conditionally independent or has correlated noises with a multivariate gaussian distribution. Under the general regularity conditions, we have derived several asymptotic bounds and approximations of MI for a neural population and found some relationships among different approximations.

How to choose among these different asymptotic approximations of MI in a neural population with finite size N? For a flat prior distribution p(x), we have IG ≃ IF; that is, the two approximations IG and IF are about equally valid. For a sharply peaked prior distribution p(x), IG is generally a better approximation to MI I than IF. Under suitable conditions (e.g., cases C1 and C2) for low-dimensional inputs, IG and IF are good approximations of MI I not only for large N but also for small N. For high-dimensional inputs, the FI matrix J(x) (see equation 2.11) or matrix P−1(x) (see equation 2.15) often becomes degenerate, which causes a large error between IF and MI I. Hence, in this situation, IG is a better approximation to MI I than IF. For more convenient computation of the approximation, we have also introduced the approximation formula IG+ which may substitute for IG as a proxy of MI I. For some special cases (see corollary 1), IG and IG+ are strictly equal to the true MI I. Our simulation results for the one-dimensional case show that the approximations IG, IG+, and IF are all highly precise compared with the true MI I, even for small N (see Figure 1).

These approximation formulas satisfy additional constraints. By the Cramér-Rao lower bound, we know that IF is related to the covariance matrix of an unbiased estimator (see equation 3.3). By van Trees’ Bayesian Cramér-Rao bound, we get a link between IG+ and the covariance matrix of a biased estimator (see equation 3.9). From the point of view of neural population decoding and Bayesian inference, there is a connection between MI (or IG) and MAP (see equation 3.17).

For more efficient calculation of the approximation IG (or IG+ ) for high-dimensional inputs, we propose to apply an invertible transformation on the input variable so as to make the new variable closer to a normal distribution (see section 4.1). Another useful technique is dimensionality reduction, which effectively approximates MI by further reducing the computational complexity for high-dimensional inputs. We found that IF could lead to huge errors as a proxy of the true MI I for high-dimensional inputs even when IG and IG+ are strictly equal to the true MI I.

These approximation formulas are potentially useful for optimization problems of information transfer in neural population coding. We have proven that optimizing the population density distribution of parameters p(θ) is a convex optimization problem and have found a set of necessary and sufficient conditions. The approximation formulas are also useful for discussion of the channel capacity of neural population coding (see section 5.4).

Information theory is a powerful tool for neuroscience and other disciplines, including diverse fields such as physics, information and communication technology, machine learning, computer vision, and bioinformatics. Finding effective approximation methods for computing MI is a key for many practical applications of information theory. Generally the FI matrix is easier to evaluate or approximate than MI. This is because calculation of MI involves averaging over both the input variable x and the output variable r (see equation 2.1), and typically p(r) also needs to be calculated from p(r∣x) by another average over x (see equation 2.2). By contrast, the FI matrix J(x) involves averaging over r only (see equation 2.13). Furthermore, it is often easier to find analytical forms of FI for specific models such as a population of tuning curves with Poisson spike statistics. Taking into account the computational efficiency, for practical applications we suggest using IG or IG+ as a proxy of the true MI I for most cases. These approximations could be very useful even when we do not need to know the exact value of MI. For example, for some optimization and learning problems, we only need to know how MI is affected by the conditional p.d.f. or likelihood function p(r∣x). In such situations, we may easily solve for the optimal parameters using the approximation formulas (Huang & Zhang, 2017; Huang, Huang, & Zhang, 2017). Further discussions of the applications will be given in separate publications.

Acknowledgments

This work was supported by an NIH grant R01 DC013698.

Appendix: The Proofs

We consider a Taylor expansion of around x. If is twice differentiable for , then by condition C1 we get

| (A.1) |

where

| (A.2) |

| (A.3) |

| (A.4) |

| (A.5) |

and

| (A.6) |

By condition C1, we know that the matrix B1 + B2 is continuous and symmetric for and ∥B1 + B2∥ = O(1). By the definition of continuous functions, we can prove the following: for any ϵ ∈ (0, 1), there is an ε ∈ (0, ω) such that for all ,

| (A.7) |

where

| (A.8) |

Hence,

| (A.9) |

Here , ε is a function of r, ε = ε (r) = O (1), and

| (A.10) |

We define the sets

| (A.11) |

where

| (A.12) |

1(·) denotes an indicator random variable,

| (A.13) |

and

| (A.14) |

For all , we have ; then

| (A.15) |

It follows from equations A.3 and A.6 that

| (A.16) |

and

| (A.17) |

and it follows from condition C1 that

| (A.18) |

Combining conditions C1 and C2 and equations A.3, A.4, and A.6, we find

| (A.19) |

together with the power mean inequality,

| (A.20) |

where , m0 ∈ {1, 2}. Notice that ∥G−1 (x)∥ = O (N−1). Here we note that for all conformable matrices A and B,

| (A.21) |

By equation 2.25c, we have

| (A.22) |

Then it follows from equations 2.25b and A.22 that

| (A.23) |

A.1 Proof of Lemma 1. It follows from equation A.1 that

| (A.24) |

For , according to the definitions in equations A.13 and A.14, we have

| (A.25) |

Then by condition C1, we get

| (A.26) |

where is a positive constant and . By equations A.9, A.17, and A.24, we get

| (A.27) |

where , the last step in equation A.27 follows from Jensen’s inequality, and

| (A.28) |

Integrating by parts yields

| (A.29) |

and

| (A.30) |

for some δ > 0.

Then from equation A.27, we get

| (A.31) |

where

| (A.32) |

Here, notice that

| (A.33) |

and

| (A.34) |

Hence, from the consideration above, we find

| (A.35) |

Since ϵ is arbitrary, let it go to zero. Thus, combining equations A.24 and A.35 yields

| (A.36) |

Considering

| (A.37) |

and combining equations 2.3 and A.36, we immediately get equation 2.53.

On the other hand, by conditions 2.54a and 2.54b, we have

| (A.38) |

Similarly we can get equation 2.55. This completes the proof of lemma 1. □

A.2 Proof of Lemma 2. Define the sets

| (A.39) |

and

| (A.40) |

where , assuming ϵ ∈ (0, 1/2) and p(x) > 0.

Then by Markov’s inequality, we have

| (A.41) |

and by equation 2.26b,

| (A.42) |

Consider the following equality:

| (A.43) |

For the last term in equation A.43, Jensen’s inequality implies that

| (A.44) |

For the first term in equation A.43, it follows from equations A.40 and A.9 that

| (A.45) |

The last term, equation A.45, is upper-bounded by

| (A.46) |

| (A.47) |

Equation A.46 is equal to

| (A.48) |

Equation A.47 is equal to

| (A.49a) |

| (A.49b) |

where

| (A.50) |

Notice that

| (A.51) |

and

| (A.52) |

Then by equation A.19, we get

| (A.53) |

| (A.54) |

and by equation 2.51,

| (A.55) |

Hence, we have

| (A.56) |

and by Cauchy-Schwarz inequality and equation A.53, the term A.49b is upper-bounded by

| (A.57) |

Since ϵ is arbitrary, we can let it go to zero. Then, taking everything together, we get

| (A.58) |

Putting equation A.58 into 2.3 yields 2.56.

On the other hand, we have

| (A.59) |

| (A.60) |

For equation A.60, it follows from Jensen’s inequality that

| (A.61) |

and

| (A.62) |

where

| (A.63) |

Similarly we can get equation 2.57. □

A.3 Proof of Theorem 1. By lemmas 1 and 2, we immediately get equation 2.58. The proof of equation 2.59 is similar. □

A.4 Proof of Theorem 2. First, we have

| (A.64) |

Since J(x) and G(x) are symmetric and positive-definite, IK + Ψ(x) is also symmetric and positive-definite. The eigendecomposition of Ψ(x) is given by

| (A.65) |

where is an orthogonal matrix and the matrix is a K × K diagonal matrix with K real numbers on the diagonal, λ1 ≥ λ2 ≥ … ≥ λK > −1. Then we have

| (A.66) |

and

| (A.67) |

Notice that ln(1 + x) ≤ x for ∀x ∈ (−1, ∞). It follows from equations A.64 and A.67 that

| (A.68) |

From equations 2.12, 2.11, and A.68, we obtain equation 2.62.
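The elementary inequality driving this step, ln det(IK + Ψ) ≤ Tr(Ψ), is easy to confirm numerically. The sketch assumes Ψ = J^(−1/2) P J^(−1/2), so that ln det G = ln det J + ln det(IK + Ψ) for G = J + P; the random matrices are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(5)
K = 4

R = rng.normal(size=(K, K))
J = R @ R.T + K * np.eye(K)                # positive-definite stand-in for J(x)
Q = rng.normal(size=(K, K))
P = Q @ Q.T                                # positive-semidefinite stand-in for P(x)

# Symmetric inverse square root of J.
w, V = np.linalg.eigh(J)
J_inv_sqrt = (V / np.sqrt(w)) @ V.T

Psi = J_inv_sqrt @ P @ J_inv_sqrt          # assumed form of Psi(x)
lam = np.linalg.eigvalsh((Psi + Psi.T) / 2.0)

logdet_term = np.log1p(lam).sum()          # ln det(I + Psi) = sum_k ln(1 + lambda_k)
trace_term = np.trace(Psi)                 # sum_k lambda_k

logdet_G = np.linalg.slogdet(J + P)[1]
logdet_J = np.linalg.slogdet(J)[1]

print(logdet_term, trace_term)             # first value does not exceed the second
print(logdet_G, logdet_J + logdet_term)    # equal up to rounding
```

The gap between the two terms is small when all eigenvalues of Ψ are close to zero, which corresponds to the regime where the prior contributes little relative to the Fisher information.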

If P(x) is positive-semidefinite, then λ1 ≥ λ2 ≥ … ≥ λK ≥ 0, ς ≥ 0 and ⟨ln (det (IK + Ψ(x)))⟩x ≥ 0. Hence we can get equation 2.63.

On the other hand, it follows from equations 2.64 and A.67 and the power mean inequality that

| (A.69) |

Let for ∀k ∈ {1, 2, …, K}. Then

| (A.70) |

Notice that . Then by equation A.69, we have

| (A.71) |

From equations 2.12, 2.11, A.68, A.70, and A.71, we immediately get equation 2.65. □

A.5 Proof of Theorem 3. Considering the change of variables theorem, for any real-valued function f and invertible transformation T, we have

| (A.72) |

and for p(x) and ,

| (A.73) |

Then it follows from equations 4.2, A.72, and A.73 that

| (A.74) |

Substituting equations A.73 and A.74 into 2.1, we can directly obtain equation 4.3. Moreover, if and fulfill conditions C1, C2 and ξ = O (N−1), then by theorem 1, we immediately obtain equation 4.4. □

A.6 Proof of Corollary 1. It follows from equation 2.21 and theorem 3 that

| (A.75) |

and

| (A.76) |

Here notice that

| (A.77) |

and

| (A.78) |

Hence, combining equations A.75 to A.78, we can immediately obtain equation 4.9. □

A.7 Proof of Theorem 4. First, we have

| (A.79) |

Then by the eigendecomposition of Ax, we have

| (A.80) |

where Ux and Λx are K2 × K2 eigenvector matrix and eigenvalue matrix, respectively. Since G (x), G1,1 (x), and G2,2 (x) are positive-definite, then IK – Ax is also positive-definite and Ax is positive-semidefinite, with 0 ≤ (Λx)k,k = λk < 1 for ∀k ∈ {1, 2, …, K2}. Moreover, it follows from equation 4.33 that

| (A.81) |

Then by equation A.81, we have

| (A.82) |

Substituting equation A.82 into A.79 and then combining with equation 2.12, we get equation 4.35.

If equation 4.36 holds, then Ax = 0 and IG = IG1. Conversely, if IG = IG1, then

| (A.83) |

Ax = 0, and equation 4.36 holds. □

A.8 Proof of Theorem 5. Similar to equation A.79, we have

| (A.84) |

Similar to equation A.65, the eigendecomposition of Bx is given by

| (A.85) |

where Ux and Λx are K2 × K2 eigenvector matrix and eigenvalue matrix, respectively. If the matrix Bx is positive-semidefinite and satisfies equation 4.38, then (Λx)k,k = λk ≥ 0 for ∀k ∈ {1, 2, …, K2} and

| (A.86) |

Substituting equation A.86 into A.84, we immediately get equation 4.41. If Cx = 0, then ln (det(IK2 + Bx)) = 0 and IG = IG2. Conversely, if IG = IG2, then ln (det(IK2 + Bx)) = 0, Bx = 0 and Cx = 0. □

A.9 Proof of Corollary 2. Notice that

| (A.87) |

and the matrices

| (A.88) |

| (A.89) |

are positive-semidefinite, and the proof is similar to equation 4.74. Then by theorem 5, we immediately get equation 4.41. Substituting equation A.87 into 4.41 yields equation 4.44 with strict equality if and only if Cx = 0. □

A.10 Proof of Proposition 2. By writing p(θ) as a sum of two density functions p1(θ) and p2(θ),

| (A.90) |

we have

| (A.91) |

where 0 ≤ α ≤ 1 and

| (A.92) |

| (A.93) |

Using the Minkowski determinant inequality and the inequality of weighted arithmetic and geometric means, we find

| (A.94) |

It follows from equations A.91 and A.94 that

| (A.95) |

where the equality holds if and only if G1(x) = G2(x). Thus ln (det (G(x))) is concave about p(θ). Therefore IG[p(θ)] is a concave function about p(θ). Similarly, we can prove that IF[p(θ)] is also a concave function about p(θ). □

Contributor Information

Wentao Huang, Department of Biomedical Engineering, Johns Hopkins University School of Medicine, Baltimore, MD 21205, U.S.A., and Cognitive and Intelligent Lab and Information Science Academy of China Electronics Technology Group Corporation, Beijing 100846, China.

Kechen Zhang, Department of Biomedical Engineering, Johns Hopkins University School of Medicine, Baltimore, MD 21205, U.S.A.

References

- Abbott LF, & Dayan P (1999). The effect of correlated variability on the accuracy of a population code. Neural Comput., 11(1), 91–101.

- Amari S, & Nakahara H (2005). Difficulty of singularity in population coding. Neural Comput., 17(4), 839–858.

- Atick JJ, Li ZP, & Redlich AN (1992). Understanding retinal color coding from first principles. Neural Comput., 4(4), 559–572.

- Atick JJ, & Redlich AN (1990). Towards a theory of early visual processing. Neural Comput., 2(3), 308–320.

- Becker S, & Hinton GE (1992). Self-organizing neural network that discovers surfaces in random-dot stereograms. Nature, 355(6356), 161–163.

- Bell AJ, & Sejnowski TJ (1997). The “independent components” of natural scenes are edge filters. Vision Res., 37(23), 3327–3338.

- Bethge M, Rotermund D, & Pawelzik K (2002). Optimal short-term population coding: When Fisher information fails. Neural Comput., 14(10), 2317–2351.

- Borst A, & Theunissen FE (1999). Information theory and neural coding. Nat. Neurosci., 2(11), 947–957.

- Boyd S, & Vandenberghe L (2004). Convex optimization. Cambridge: Cambridge University Press.

- Brown EN, Kass RE, & Mitra PP (2004). Multiple neural spike train data analysis: State-of-the-art and future challenges. Nat. Neurosci., 7(5), 456–461.

- Brunel N, & Nadal JP (1998). Mutual information, Fisher information, and population coding. Neural Comput., 10(7), 1731–1757.

- Carlton A (1969). On the bias of information estimates. Psychological Bulletin, 71(2), 108.

- Chase SM, & Young ED (2005). Limited segregation of different types of sound localization information among classes of units in the inferior colliculus. Journal of Neuroscience, 25(33), 7575–7585.

- Chechik G, Anderson MJ, Bar-Yosef O, Young ED, Tishby N, & Nelken I (2006). Reduction of information redundancy in the ascending auditory pathway. Neuron, 51(3), 359–368.

- Clarke BS, & Barron AR (1990). Information-theoretic asymptotics of Bayes methods. IEEE Trans. Inform. Theory, 36(3), 453–471.

- Cover TM, & Thomas JA (2006). Elements of information theory (2nd ed.). New York: Wiley-Interscience.

- Eckhorn R, & Pöpel B (1975). Rigorous and extended application of information theory to the afferent visual system of the cat. II. Experimental results. Biological Cybernetics, 17(1), 7–17. [DOI] [PubMed] [Google Scholar]

- Ganguli D, & Simoncelli EP (2014). Efficient sensory encoding and Bayesian inference with heterogeneous neural populations. Neural Comput., 26(10), 2103–2134. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gawne TJ, & Richmond BJ (1993). How independent are the messages carried by adjacent inferior temporal cortical neurons? Journal of Neuroscience, 13(7), 2758–2771. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gourévitch B, & Eggermont JJ (2007). Evaluating information transfer between auditory cortical neurons. Journal of Neurophysiology, 97(3), 2533–2543. [DOI] [PubMed] [Google Scholar]

- Guo DN, Shamai S, & Verdu S (2005). Mutual information and minimum mean-square error in gaussian channels. IEEE Trans. Inform. Theory, 51(4), 1261–1282. [Google Scholar]

- Harper NS, & McAlpine D (2004). Optimal neural population coding of an auditory spatial cue. Nature, 430(7000), 682–686. [DOI] [PubMed] [Google Scholar]

- Huang W, Huang X, & Zhang K (2017). Information-theoretic interpretation of tuning curves for multiple motion directions. In Proceedings of the 51st Annual Conference on Information Sciences and Systems (pp. 1–4). Piscataway, NJ: IEEE. [Google Scholar]

- Huang W, & Zhang K (2017). An information-theoretic framework for fast and robust unsupervised learning via neural population infomax. In Proceedings of the 5th International Conference on Learning Representations (ICLR). arXiv:1611.01886. [Google Scholar]

- Jeffreys H (1961). Theory of probability (3rd ed.). New York: Oxford University Press.

- Kang K, & Sompolinsky H (2001). Mutual information of population codes and distance measures in probability space. Phys. Rev. Lett., 86(21), 4958–4961.

- Khan S, Bandyopadhyay S, Ganguly AR, Saigal S, Erickson DJ III, Protopopescu V, & Ostrouchov G (2007). Relative performance of mutual information estimation methods for quantifying the dependence among short and noisy data. Physical Review E, 76(2), 026209.

- Kraskov A, Stögbauer H, & Grassberger P (2004). Estimating mutual information. Physical Review E, 69(6), 066138.

- Laughlin SB, & Sejnowski TJ (2003). Communication in neuronal networks. Science, 301(5641), 1870–1874.

- Lewis A, & Zhaoping L (2006). Are cone sensitivities determined by natural color statistics? J. Vis., 6(3), 285–302.

- MacKay DJC (2003). Information theory, inference and learning algorithms. Cambridge: Cambridge University Press.

- McClurkin JW, Gawne TJ, Optican LM, & Richmond BJ (1991). Lateral geniculate neurons in behaving primates. II. Encoding of visual information in the temporal shape of the response. Journal of Neurophysiology, 66(3), 794–808.

- Miller GA (1955). Note on the bias of information estimates. In Quastler H (Ed.), Information theory in psychology: Problems and methods II-B (pp. 95–100). Glencoe, IL: Free Press.

- Olshausen BA, & Field DJ (1996). Emergence of simple-cell receptive field properties by learning a sparse code for natural images. Nature, 381(6583), 607–609.

- Optican LM, & Richmond BJ (1987). Temporal encoding of two-dimensional patterns by single units in primate inferior temporal cortex. III. Information theoretic analysis. Journal of Neurophysiology, 57(1), 162–178.

- Paninski L (2003). Estimation of entropy and mutual information. Neural Comput., 15(6), 1191–1253.

- Pouget A, Dayan P, & Zemel R (2000). Information processing with population codes. Nat. Rev. Neurosci., 1(2), 125–132.

- Quiroga R, & Panzeri S (2009). Extracting information from neuronal populations: Information theory and decoding approaches. Nat. Rev. Neurosci., 10(3), 173–185.

- Rao CR (1945). Information and accuracy attainable in the estimation of statistical parameters. Bulletin of the Calcutta Mathematical Society, 37(3), 81–91.

- Rieke F, Warland D, de Ruyter van Steveninck R, & Bialek W (1997). Spikes: Exploring the neural code. Cambridge, MA: MIT Press.

- Rissanen JJ (1996). Fisher information and stochastic complexity. IEEE Trans. Inform. Theory, 42(1), 40–47.

- Shannon C (1948). A mathematical theory of communication. Bell System Technical Journal, 27, 379–423, 623–656.

- Sompolinsky H, Yoon H, Kang KJ, & Shamir M (2001). Population coding in neuronal systems with correlated noise. Phys. Rev. E, 64(5), 051904.

- Tovee MJ, Rolls ET, Treves A, & Bellis RP (1993). Information encoding and the responses of single neurons in the primate temporal visual cortex. Journal of Neurophysiology, 70(2), 640–654.

- Toyoizumi T, Aihara K, & Amari S (2006). Fisher information for spike-based population decoding. Phys. Rev. Lett., 97(9), 098102.

- Treves A, & Panzeri S (1995). The upward bias in measures of information derived from limited data samples. Neural Comput., 7(2), 399–407.

- Van Hateren JH (1992). Real and optimal neural images in early vision. Nature, 360(6399), 68–70.

- Van Trees HL, & Bell KL (2007). Bayesian bounds for parameter estimation and nonlinear filtering/tracking. Hoboken, NJ: Wiley.

- Verdu S (1986). Capacity region of Gaussian CDMA channels: The symbol synchronous case. In Proc. 24th Allerton Conf. Communication, Control and Computing (pp. 1025–1034). Piscataway, NJ: IEEE.

- Victor JD (2000). Asymptotic bias in information estimates and the exponential (bell) polynomials. Neural Comput., 12(12), 2797–2804.

- Wei X-X, & Stocker AA (2015). Mutual information, Fisher information, and efficient coding. Neural Comput., 28, 305–326.

- Yarrow S, Challis E, & Series P (2012). Fisher and Shannon information in finite neural populations. Neural Comput., 24(7), 1740–1780.

- Zhang K, Ginzburg I, McNaughton BL, & Sejnowski TJ (1998). Interpreting neuronal population activity by reconstruction: Unified framework with application to hippocampal place cells. J. Neurophysiol., 79(2), 1017–1044.

- Zhang K, & Sejnowski TJ (1999). Neuronal tuning: To sharpen or broaden? Neural Comput., 11(1), 75–84.