SUMMARY

Humans have remarkable scale-invariant visual capabilities. For example, our orientation discrimination sensitivity is largely constant over more than two orders of magnitude of variation in stimulus spatial frequency (SF). Orientation-selective V1 neurons are likely to contribute to orientation discrimination. However, because neurons at any V1 location have a limited range of receptive field (RF) sizes, we predict that at low SFs, V1 neurons will carry little orientation information. If this were the case, what could account for the high behavioral sensitivity at low SFs? Using optical imaging in behaving macaques, we show that, as predicted, V1 orientation-tuned responses drop rapidly with decreasing SF. However, we reveal a surprising coarse-scale signal that corresponds to the projection of the luminance layout of low SF stimuli onto V1’s retinotopic map. This homeomorphic and distributed representation, which carries high-quality orientation information, is likely to contribute to our striking scale-invariant visual capabilities.

In Brief

Benvenuti et al. describe a novel retinotopic representation of low spatial-frequency luminance stimuli in V1 of behaving macaques. This distributed representation could solve the “aperture problem” for computation of orientation of low spatial-frequency stimuli by single V1 neurons.

INTRODUCTION

In natural visual scenes, relevant information regarding pattern geometry (e.g., contour orientation) is distributed over a wide range of spatial scales. Similarly, the spatial scale of the retinal image of an object can vary dramatically with its distance from the observer. Therefore, to support our interactions with complex natural environments, our visual system must be able to finely discriminate visual patterns over a broad range of spatial frequencies (SFs). Behavioral measurements suggest that this is indeed the case (Burr and Wijesundra, 1991; Jamar and Koenderink, 1983). For example, we can discriminate between gratings whose orientation differs by less than one degree, and this sensitivity is only modestly affected by changes in SF and size over more than two orders of magnitude (Burr and Wijesundra, 1991). What are the neural mechanisms that underlie these remarkable scale-invariant capabilities?

Neurons in primate primary visual cortex (V1) are selective to the orientation of contrast edges within their receptive fields (RFs) (Hubel and Wiesel, 1968). These orientation-selective responses are likely to play an important role in pattern discrimination. However, due to the limited spatial spread of the geniculo-cortical projections to V1 (Blasdel and Lund, 1983) and the connections between the input and output layers within V1 (Angelucci et al., 2002; Levitt and Lund, 2002; Lund et al., 2003), at any location in V1, neurons have a limited range of RF sizes. As a consequence, when the spatial scale of an oriented stimulus exceeds this range of RF sizes at the corresponding location, individual V1 neurons should not be able to represent edge orientation reliably (Figure 1A). Surprisingly, this fundamental “aperture problem” for the computation of orientation, phase and SF of large-scale static patterns has not received the same attention as the aperture problem for the computation of direction and speed of large-scale moving patterns (Adelson and Movshon, 1982; Marr, 1982). Therefore, our first goal was to test the prediction that the quality of orientation-selective signals in macaque V1 deteriorates for low SF stimuli.

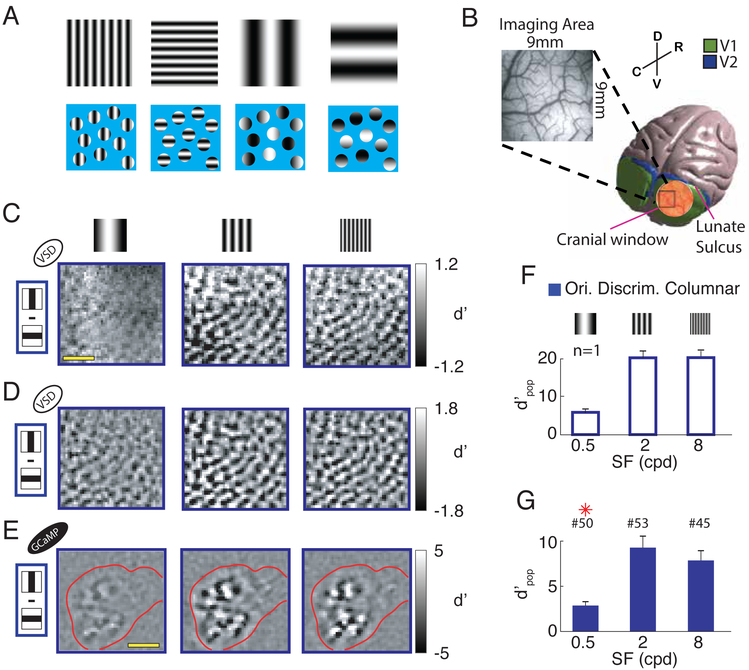

Figure 1. Discriminability of columnar-scale orientation-tuned responses to orthogonal gratings as a function of spatial frequency.

(A) Schematic demonstration of the “aperture problem” for low SF stimuli. The four gratings on the top row (two orientations for medium and low SFs, respectively) are re-displayed in the bottom row behind a blue mask with small circular apertures, each one representing the RF of a V1 neuron. At low SFs, the oriented contrast pattern in each individual aperture carries limited information about orientation. However, orientation information can be obtained by combining information about the relative positions of apertures with bright and dark patterns. (B) 3D model of the macaque brain with a superimposed image of the cranial window (to scale). The inset shows a picture of the vasculature in an imaging area. Spatial reference abbreviations: R=rostral, C=caudal, D=dorsal, V=ventral. (C) Pixel-by-pixel discriminability (d′ map) across responses to two orthogonal gratings, for three stimulus SFs (0.5, 2 and 8 cpd), from an example session. Amplitude expressed in signed d′. (D) Similar to C but isolating the columnar response component with bandpass filtering at 0.8-3 cycles per mm. (E) Similar to D but in a different monkey, using calcium imaging. Red contour represents the cortical area in which the calcium indicator GCaMP6f is expressed. Scale bar in C and E - 2mm. (F) Overall discriminability (d′pop; see Methods) of columnar responses to grating stimuli with orthogonal orientations, at the three SFs, in the same example session as in C and D. Error-bars indicate bootstrapped standard errors. (G) Similar to F but summary across sessions. Error-bars indicate standard-error of the mean across sessions. Red asterisks signal significant difference with respect to the central SF condition (two-sample t-test, P<0.05). The number of sessions per SF is indicated above the corresponding bar. All figures and text refer to VSD imaging unless explicitly noted otherwise. Ori. Discrim – orientation discrimination. 
Here and in subsequent figures, open bars indicate results from an example session while solid bars indicate summary across sessions.

If the quality of orientation signals carried by individual V1 neurons drops at low SFs, what could account for the high behavioral sensitivity in orientation discrimination at low SFs? Here we explore the possibility that information regarding the orientation of low SF stimuli is encoded by the collective pattern of population responses in V1 rather than by the activity of individual V1 neurons (Figure 1A). In addition to orientation, V1 neurons are also tuned to SF (De Valois et al., 1982). However, most V1 neurons continue to respond (albeit weakly) to the onset of visual patterns with SFs that are much lower than their preferred SF (De Valois et al., 1982). Because each neuron has access only to a small portion of the stimulus (Figure 1A), these responses must be driven by the abrupt change in the local mean luminance within the neuron’s receptive field. As discussed above, these responses are likely to be non-selective for orientation. While these untuned luminance-related responses are relatively small in single neurons, they may be large at the population level because they are shared by most neurons (in contrast to the response to a stimulus that is optimal for a small subset of V1 neurons but elicits weak or no response from the vast majority of the other V1 neurons). Such an untuned luminance-related population response could provide a substrate for a distributed representation of orientation at low SFs. Therefore, our second goal was to determine whether there is a robust V1 population response to low SF stimuli.

V1 neurons are topographically organized so that neurons with similar response properties are clustered together. At a large (millimeters) scale, V1 neurons are organized based on their receptive field location into a map of visual/retinal space (“retinotopic map”) (Van Essen and Newsome, 1984; Hubel and Wiesel, 1974). At a finer (submillimeter) scale, neurons with similar preferred orientation are organized into a map of orientation columns (Blasdel, 1992; Bonhoeffer and Grinvald, 1991; Hubel and Wiesel, 1968). We hypothesized that variations in the level of the untuned luminance-related population responses across V1’s retinotopic map provide useful information regarding the orientation of low SF stimuli. A central goal of the current study was to test this hypothesis.

If there are systematic variations in the amplitude of the untuned luminance-related population response across V1’s retinotopic map, downstream circuits could extract orientation information from these variations by performing computations on this luminance-retinotopic representation that are similar to those that V1 neurons perform on the retinal image. A downstream mechanism that relies on the spatial pattern of V1 population responses at the retinotopic scale (rather than the tuning properties of individual V1 neurons) could also account for our ability to discriminate the orientation of second-order patterns created by low SF modulations in the local contrast of high SF textures (Chubb and Sperling, 1988; Landy and Graham, 2004; Lin and Wilson, 1996). Our final goal was to compare the V1 retinotopic representation of stimuli with large-scale variations in luminance to the representation of visual stimuli with large-scale variations in local contrast.

RESULTS

Sensitivity of orientation selective V1 responses as a function of stimulus SF

Due to the limited range of V1 neurons’ RF sizes at a given visual field eccentricity, we predicted that the sensitivity of orientation-selective V1 responses would drop as the stimulus SF decreases. To test this prediction, we used wide-field imaging of voltage-sensitive dye (VSD) signals (Chen et al., 2006; Seidemann et al., 2002; Shoham et al., 1999), which measure the aggregate membrane potential changes in the superficial cortical layers. We imaged a V1 area (~9×9 mm², corresponding to ~2×2 degrees of visual angle (dva), at an eccentricity of ~2-4 dva) (Figure 1B), while monkeys viewed large sinusoidal gratings (6×6 dva²) flashed at two orthogonal orientations (0°, 90°), two opposite phases (0°, 180°) and three SFs (0.5, 2 and 8 cycles per dva (cpd)).
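As a rough worked example, the imaging geometry above implies an average cortical magnification M and a corresponding cortical spatial frequency for each stimulus SF. This is an approximation that assumes a single, isotropic magnification factor and therefore ignores the strong anisotropy of the retinotopic map described in the Figure 3B legend:

```latex
M \approx \frac{9\,\mathrm{mm}}{2\,\mathrm{dva}} = 4.5\,\mathrm{mm/dva},
\qquad
f_{\mathrm{cortex}} = \frac{f_{\mathrm{stim}}}{M}:
\quad \frac{0.5}{4.5} \approx 0.11, \quad \frac{2}{4.5} \approx 0.44, \quad \frac{8}{4.5} \approx 1.8\ \mathrm{cycles/mm}
```

Under this approximation, a 0.5 cpd grating spans roughly one cycle across the ~9 mm imaged area (0.11 cycles/mm × 9 mm ≈ 1 cycle).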

Because V1 neurons are organized in cortical columns based on their orientation preference (Blasdel, 1992; Bonhoeffer and Grinvald, 1991; Hubel and Wiesel, 1968), the quality of the population response at the columnar scale can serve as a proxy for the average quality of the orientation signal at the single neuron level.

We first investigated how the orientation-tuned columnar-scale signal changes with stimulus SF (Figure 1C). As a reference, we measured responses to stimuli with medium SF (2 cpd, which is close to the average preferred SF of V1 neurons in our imaging area). We compared the responses to medium SF stimuli with the responses to stimuli with four-fold lower and four-fold higher SFs (0.5 and 8 cpd, respectively).

For every stimulus SF, we measured the preference, strength and reliability of the orientation tuned response across the imaged cortical area, by calculating the pixel-by-pixel signed d′ (see Methods) across responses to orthogonal visual gratings. As expected, these “d′ maps” display a patchy texture representing the columnar response layout with an average cortical periodicity of ~1.2 cyc/mm (Chen et al., 2012).
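The exact d′ definition is given in the Methods and is not reproduced in this section. As an illustrative sketch only, the Python/NumPy code below assumes the common pooled-standard-deviation form of signed d′, computed independently at each pixel from single-trial response maps, and isolates a columnar-scale component with an ideal (hard-edged) Fourier band-pass at 0.8-3 cycles/mm, the band quoted in the Figure 1D legend. The function names, the array layout (trials × rows × columns), the filter shape, and the choice to filter the d′ map (rather than the responses) are assumptions.

```python
import numpy as np

def signed_dprime_map(resp_a, resp_b):
    """Pixel-by-pixel signed d' between two stimulus conditions.

    resp_a, resp_b: single-trial response maps with shape (n_trials, ny, nx).
    Assumed pooled-SD form: (mean_a - mean_b) / sqrt((var_a + var_b) / 2).
    """
    mean_diff = resp_a.mean(axis=0) - resp_b.mean(axis=0)
    pooled_sd = np.sqrt((resp_a.var(axis=0, ddof=1) + resp_b.var(axis=0, ddof=1)) / 2.0)
    return mean_diff / pooled_sd

def bandpass_map(img, pixel_mm, low_cpmm=0.8, high_cpmm=3.0):
    """Keep only spatial frequencies between low_cpmm and high_cpmm (cycles/mm)."""
    ny, nx = img.shape
    fy = np.fft.fftfreq(ny, d=pixel_mm)  # cycles/mm along rows
    fx = np.fft.fftfreq(nx, d=pixel_mm)  # cycles/mm along columns
    FY, FX = np.meshgrid(fy, fx, indexing="ij")
    radius = np.hypot(FX, FY)
    mask = (radius >= low_cpmm) & (radius <= high_cpmm)  # ideal annulus, also removes DC
    return np.real(np.fft.ifft2(np.fft.fft2(img) * mask))

# Hypothetical usage on a 9 mm field sampled with 128 pixels per side:
# columnar_map = bandpass_map(signed_dprime_map(resp_0deg, resp_90deg), pixel_mm=9.0 / 128)
```

A hard-edged mask is the simplest choice; a smoothly tapered band-pass would reduce ringing in the filtered map but would be equally hypothetical here.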

As predicted, the quality of the columnar-scale orientation signal was high at medium and high SFs but dropped dramatically at low SF, as evidenced by the smaller difference between the bright and dark patches in Figure 1C,D (left panels). Similar results were obtained using wide-field calcium imaging with the virally expressed calcium indicator GCaMP6f, a signal that is more closely related to the pooled spiking response in V1 (Seidemann et al., 2016) (Figure 1E).

To obtain a quantitative measure of the overall orientation discriminability of the columnar-scale neural responses, we developed a simple linear orientation decoder, which pools the single-trial responses with weights equal to the thresholded d′ maps in Figure 1D (see Methods). The population discriminability (d′pop) is the signal-to-noise ratio of the pooled signals for discriminating between the two orthogonal orientations (with cross validation; see Methods).
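A minimal sketch of such a pooling decoder, under stated assumptions: the weights are a signed d′ map computed on training trials and thresholded (the threshold of 1.0 is hypothetical), each held-out trial is pooled into a single number by a weighted sum over pixels, and d′pop is the d′ between the pooled signals for the two orientations, averaged over a two-fold split. The actual thresholding rule and cross-validation scheme are described in the Methods; the function names here are illustrative.

```python
import numpy as np

def signed_dprime_map(resp_a, resp_b):
    """Per-pixel signed d' (assumed pooled-SD form); inputs are (n_trials, ny, nx)."""
    diff = resp_a.mean(axis=0) - resp_b.mean(axis=0)
    sd = np.sqrt((resp_a.var(axis=0, ddof=1) + resp_b.var(axis=0, ddof=1)) / 2.0)
    return diff / sd

def scalar_dprime(x, y):
    """d' between two 1-D samples of pooled single-trial signals."""
    return (x.mean() - y.mean()) / np.sqrt((x.var(ddof=1) + y.var(ddof=1)) / 2.0)

def dprime_pop(resp_a, resp_b, threshold=1.0, seed=0):
    """Two-fold cross-validated d' of a weighted-pooling orientation decoder."""
    rng = np.random.default_rng(seed)
    halves_a = np.array_split(rng.permutation(len(resp_a)), 2)
    halves_b = np.array_split(rng.permutation(len(resp_b)), 2)
    scores = []
    for train, test in ((0, 1), (1, 0)):
        # Weights: training-set d' map, with weakly selective pixels zeroed out.
        w = signed_dprime_map(resp_a[halves_a[train]], resp_b[halves_b[train]])
        w[np.abs(w) < threshold] = 0.0
        # Pool each held-out trial into one number (weighted sum over pixels).
        pooled_a = np.tensordot(resp_a[halves_a[test]], w, axes=([1, 2], [0, 1]))
        pooled_b = np.tensordot(resp_b[halves_b[test]], w, axes=([1, 2], [0, 1]))
        scores.append(scalar_dprime(pooled_a, pooled_b))
    return float(np.mean(scores))
```

Because the weights are learned on one half of the trials and evaluated on the other, a positive d′pop cannot arise merely from fitting noise in the test trials. If no pixel passes the threshold, all weights are zero and d′pop is undefined; a real implementation would need to handle that case.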

Consistent with our predictions and with the quality of the maps in Figure 1C,D and E, we find that the overall discriminability of the columnar-scale orientation signal is exceedingly high at medium and high SFs and drops dramatically at low SF (Figure 1F,G). In 53 VSD sessions from three hemispheres of two macaque monkeys, the average columnar-scale orientation discriminability was maximal at medium SF, decreased slightly at high SF (15% average decrease), but dropped dramatically at low SF (70% average decrease) (Figure 1G; see Figure S1 for results from individual monkeys). These results support our prediction that the quality of orientation-tuned V1 responses should drop rapidly when the stimulus SF is decreased from the optimal value. In addition, to the best of our knowledge, these results are the first demonstration of reliable decoding of stimulus orientation based on single-trial columnar-scale responses in macaque V1 (see Figure S2 for examples of single-trial columnar responses).

Strong untuned neural population response to low SF stimuli

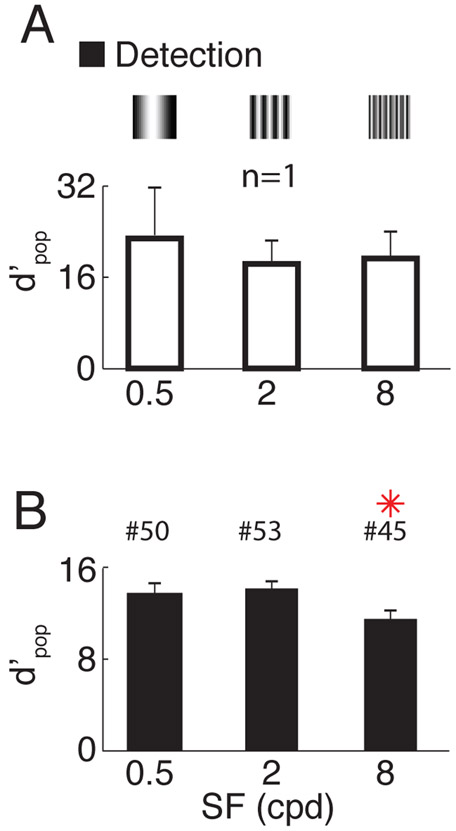

The drop in the quality of orientation tuned V1 responses at low SF is not due to a decrease in the overall neural response to low SF stimuli. As discussed in the introduction, V1 population responses may contain a large untuned response to low SF stimuli. To test this possibility, we quantified the overall detectability of the stimulus based on the same neural responses (the discriminability between the responses to a grating and a blank stimulus; see Methods) and found no significant decrease in detectability for low SF compared to the medium SF condition, and a decrease for high SF in one monkey (Figure 2 and Figure S4). These results show that there are strong untuned population responses to low SF stimuli in V1. Our next goal was to examine the spatial variations of these responses at the retinotopic scale in V1.

Figure 2. Detectability of responses to grating stimuli as a function of stimulus spatial frequency.

(A) Overall discriminability between responses to gratings vs. blank, at the three different stimulus SFs, in the same example session as in Figure 1. Error-bars indicate bootstrapped standard errors. (B) Similar to A but summary across sessions. Error-bars indicate standard-error of the mean across sessions. Red asterisks signal significant difference with respect to the central SF condition (two-sample t-test, P<0.05). The number of sessions per SF is indicated above the corresponding bar.

Luminance-retinotopic V1 representations of low SF stimuli

How can we be highly sensitive to orientation at low SFs if individual V1 neurons and columns carry low quality information about orientation? We hypothesized that a new emergent neural population signal at the retinotopic scale encodes orientation information of low SF patterns. The large untuned neural population response at low SF (Figure 2) could provide the substrate for such a signal. To test this possibility, we carefully analyzed the spatial layout of the evoked VSD responses to low SF gratings.

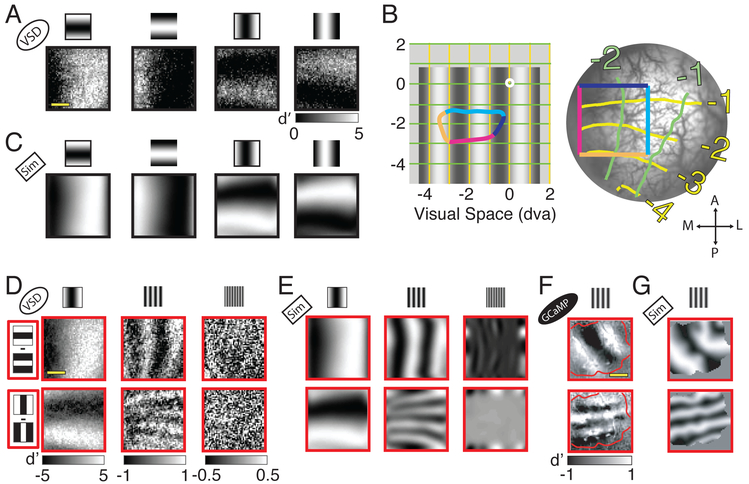

Consistent with our hypothesis, we found a surprising coarse modulation of V1 population responses that varies with the orientation and phase of low SF stimuli (Figure 3A). Each grating elicits a spatial modulation of the V1 population response that resembles the projection of the stimulus’ luminance pattern onto the retinotopic map of the imaged area.

Figure 3. Layout of luminance-retinotopic responses to grating stimuli as a function of stimulus orientation, phase and spatial frequency.

(A) Each panel displays the difference (in signed d′) between neural responses to a low SF (0.5 cpd) grating stimulus and a blank stimulus. The stimuli are the four combinations of two opposite phases and two orthogonal orientations (see cartoon on top). Same example session as in Figure 1. (B) Representation of visual space in the imaged area. Left - The central white dot indicates the fixation point. Bottom-left – 0.5 cpd grating. The colored outline indicates the projection to visual space of the colored rectangle on the right panel (which represents the imaged area). The yellow and green lines represent visual space coordinates. Right - Image of the cortex within the cranial window with retinotopic coordinates superimposed. Brain orientation spatial references: A=anterior, P=posterior, M=medial, L=lateral. The V1/V2 border is located several mm anterior to the imaged area and is approximately parallel to the dark blue edge of the colored square. Consistent with previous studies (e.g., Blasdel and Campbell, 2001), cortical magnification in the direction parallel to the V1/V2 border (and normal to ocular dominance columns in this region of V1) is much larger than in the direction normal to the V1/V2 border (and parallel to ocular dominance columns). This is why the projection of the square from the cortex to the visual field is strongly anisotropic. (C) Simulation (Sim) of the luminance-retinotopic signal presented in A under the assumption of stronger V1 population response to the dark stimulus regions. The average correlation between the predicted and observed patterns is 0.80. Because the prediction under the assumption of bright dominance is of opposite polarity to the prediction under the assumption of dark dominance, the average correlation between the observed response and the bright-dominance prediction is equal in magnitude and opposite in sign to the correlation with the dark-dominance prediction. 
(D) Mean differences in neural responses divided by their standard deviations (signed d’ values) to grating stimuli with opposite phase angles, for the two orthogonal orientations (rows) and the three SFs (columns). (E) Simulation of the luminance-retinotopic signal presented in D. Average correlation between the predicted and observed pattern is 0.93 at 0.5 cpd, 0.39 at 2 cpd and 0.02 at 8 cpd. (F) Similar to D but in another example experiment and only for the medium SF condition (2cpd), using calcium imaging. Red contour represents cortical area in which the calcium indicator is expressed. (G) Simulation of the luminance-retinotopic signal presented in F. Average correlation between the predicted and observed patterns is 0.62. Scale bar in A, D and F - 2mm. Note that because of the anisotropy in the retinotopic map, the spatial frequency of the predicted pattern is higher for vertical than for horizontal stimuli.

To test whether the observed response corresponds to the retinotopic projection of the stimulus’ spatial luminance pattern, we used a VSD imaging protocol to measure the precise retinotopic coordinates in each V1 imaged area (Yang et al., 2007) (Figure 3B). We then projected the visual grating from visual space to the cortex, testing two possible polarities, where V1 response is either positively or negatively correlated with the local luminance at the corresponding retinotopic location (i.e., stronger response to bright or dark stimulus regions, respectively). We found that the predicted pattern of responses assuming stronger V1 population responses to the dark regions of the stimulus (Figure 3C) closely matches the observed responses (Figure 3A).
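The projection step can be sketched as follows, assuming the retinotopic measurement yields, for each cortical pixel, the visual-field coordinates (in degrees) of its receptive field. The grating's luminance is evaluated at those coordinates, and the sign is flipped for the dark-dominance hypothesis. The function names, the orientation convention (0° taken here as vertical bars, i.e., luminance varying along the horizontal visual axis) and the linear, anisotropic retinotopic map used in the example lines are hypothetical. The sketch also omits the cortical blurring discussed in the next paragraph.

```python
import numpy as np

def predicted_luminance_map(ecc_x_deg, ecc_y_deg, sf_cpd, ori_deg, phase_deg,
                            dark_dominant=True):
    """Grating luminance at each cortical pixel's retinotopic location."""
    theta = np.deg2rad(ori_deg)
    # Position along the grating's modulation axis (0 deg = vertical bars, assumed).
    u = ecc_x_deg * np.cos(theta) + ecc_y_deg * np.sin(theta)
    luminance = np.cos(2 * np.pi * sf_cpd * u + np.deg2rad(phase_deg))
    # Dark dominance: stronger response where luminance is low, hence the sign flip.
    return -luminance if dark_dominant else luminance

def map_correlation(a, b):
    """Pearson correlation between two maps, computed over all pixels."""
    return np.corrcoef(a.ravel(), b.ravel())[0, 1]

# Hypothetical linear, anisotropic retinotopic map over a 9 x 9 mm window (64 x 64 pixels).
ymm, xmm = np.mgrid[0:64, 0:64] * (9.0 / 64)
ecc_x = 2.0 + xmm / 6.0   # deg; 6 mm per deg along this cortical axis (assumed)
ecc_y = -2.0 + ymm / 3.0  # deg; 3 mm per deg along the other axis (assumed)
pred_dark = predicted_luminance_map(ecc_x, ecc_y, 0.5, 0.0, 0.0, dark_dominant=True)
pred_bright = predicted_luminance_map(ecc_x, ecc_y, 0.5, 0.0, 0.0, dark_dominant=False)
```

Because the two predictions differ only in sign, the correlation of any observed map with the bright-dominance prediction is exactly the negative of its correlation with the dark-dominance prediction, as noted in the Figure 3C legend.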

Our next goal was to investigate the luminance-retinotopic signal in the response to the other stimulus SFs. To better visualize the luminance-retinotopic signal, we calculated the pixel-by-pixel signed d′ across responses to stimuli with the same orientation and SF but opposite stimulus phase. The results from the example VSD experiment resemble the projection of the stimulus to the retinotopic map for the low and medium SF conditions; however, the amplitude of the luminance-retinotopic signal drops rapidly as SF increases and approaches zero for the high SF condition (Figure 3D). This drop in amplitude with increasing SF is an expected by-product of the cortical point image (CPI) and can be simulated by convolving the projection of the stimulus to the retinotopic map with a 2D Gaussian with the diameter of the subthreshold CPI (Palmer et al., 2012) (Figure 3E). Using the same approach, we were also able to reveal the spatiotemporal dynamics of the luminance-retinotopic signal to low SF drifting gratings (Suppl. Movie S1). Finally, we used widefield GCaMP imaging in one monkey to confirm that the luminance-retinotopic signal is not unique to subthreshold responses (Figure 3F,G).
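The blurring step can be sketched as a convolution with an isotropic 2D Gaussian, applied here through its Fourier transfer function. For a cortical sinusoid with spatial frequency k (cycles/mm), a unit-area Gaussian with standard deviation σ scales the amplitude by exp(−2π²σ²k²), so higher stimulus SFs, which map to higher cortical frequencies, are attenuated much more strongly. The relation between σ and the CPI diameter, the σ value itself, and the uniform 4.5 mm/dva magnification (a rough average implied by the ~9 mm to ~2 dva imaging geometry, ignoring anisotropy) are placeholder assumptions for illustration.

```python
import numpy as np

def gaussian_blur_fft(img, sigma_mm, pixel_mm):
    """Circularly convolve a cortical map with an isotropic 2D Gaussian of std sigma_mm."""
    ny, nx = img.shape
    fy = np.fft.fftfreq(ny, d=pixel_mm)  # cycles/mm along rows
    fx = np.fft.fftfreq(nx, d=pixel_mm)  # cycles/mm along columns
    FY, FX = np.meshgrid(fy, fx, indexing="ij")
    # Fourier transform of a unit-area Gaussian with standard deviation sigma_mm.
    transfer = np.exp(-2 * np.pi**2 * sigma_mm**2 * (FX**2 + FY**2))
    return np.real(np.fft.ifft2(np.fft.fft2(img) * transfer))

def expected_attenuation(cortical_cpmm, sigma_mm):
    """Amplitude gain applied to a cortical sinusoid of the given frequency."""
    return np.exp(-2 * np.pi**2 * sigma_mm**2 * np.asarray(cortical_cpmm) ** 2)

# Placeholder parameters (assumptions): uniform magnification and Gaussian std.
magnification_mm_per_deg = 4.5
sigma_mm = 1.0
stim_sf_cpd = np.array([0.5, 2.0, 8.0])
gains = expected_attenuation(stim_sf_cpd / magnification_mm_per_deg, sigma_mm)
```

With these placeholder values the gain falls steeply from 0.5 to 8 cpd; the precise numbers depend entirely on the assumed σ and magnification.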

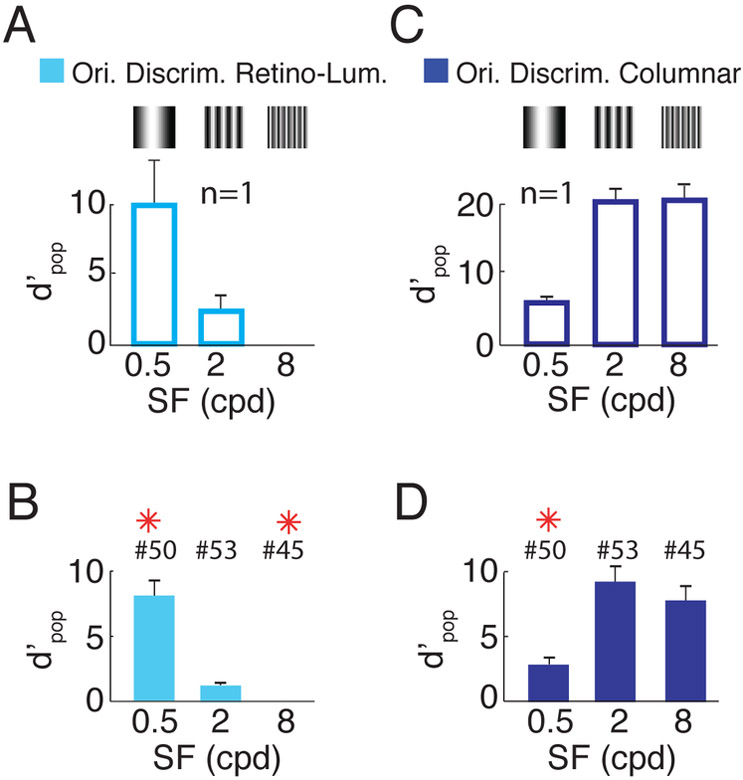

Because the luminance-retinotopic signal reflects the geometry of the visual stimulus, it implicitly encodes orientation information that could be extracted by downstream mechanisms and contribute to orientation discrimination. To quantify the quality of the orientation information encoded in these signals, we developed a linear orientation decoder similar to the columnar decoder described above, but one that uses only the luminance-retinotopic signals (see Methods). The average orientation discriminability based on the luminance-retinotopic signal in the example experiment is strong for low SF stimuli; this discriminability drops by 84% at medium SF and is near zero at high SF (Figure 4A). Similar results are seen in the summary across all imaging sessions (Figure 4B). While direct comparison between the quality of the luminance-retinotopic and columnar signals (Figure 4C,D) is not warranted (since these signals could be affected by different sources of measurement noise), the relative discriminability as a function of SF within each signal shows a complementary trend, consistent with the possibility that the luminance-retinotopic signals contribute to pattern discrimination at low SF.

Figure 4. Discriminability based on luminance-retinotopic and columnar responses to orthogonal gratings as a function of spatial frequency.

(A) Overall discriminability based on luminance-retinotopic signals between grating stimuli with orthogonal orientations, at the three SFs, for the same example session as in Figures 1 and 2. Error-bars indicate bootstrapped standard errors. (B) Similar to A but averaged across sessions. Same format as Figure 1G. The bars and error bars for 8 cpd in A and B are too small to see. (C,D) Same as A,B but for columnar signals (reproduced from Figure 1F,G). See Figures S1 and S4 for results from individual monkeys. Ori. Discrim – orientation discrimination. Retino-Lum – luminance-retinotopic.

Retinotopic representations of low SF second order stimuli in V1

Our results demonstrate that the luminance signal creates a blurred homeomorphic representation of the retinal image at the level of the retinotopic map in V1. Downstream circuits in V2 and other extrastriate areas, which have larger RFs, could extract orientation information from large-scale variations in V1 responses by performing computations on this luminance-retinotopic representation similar to those that V1 neurons perform on the retinal image. Could such a mechanism be useful for discriminating other types of visual stimuli?

Similar large-scale variations in V1 population responses at the retinotopic scale could be produced by a different class of visual stimuli - patterns created by low SF modulations of the stimulus’ contrast (e.g., the patterns in Figure 5A where the contrast of a high SF texture is modulated by a low SF sinusoid). These patterns are considered “second order” because their local mean luminance is constant while their contrast is varying over space (Landy and Graham, 2004).

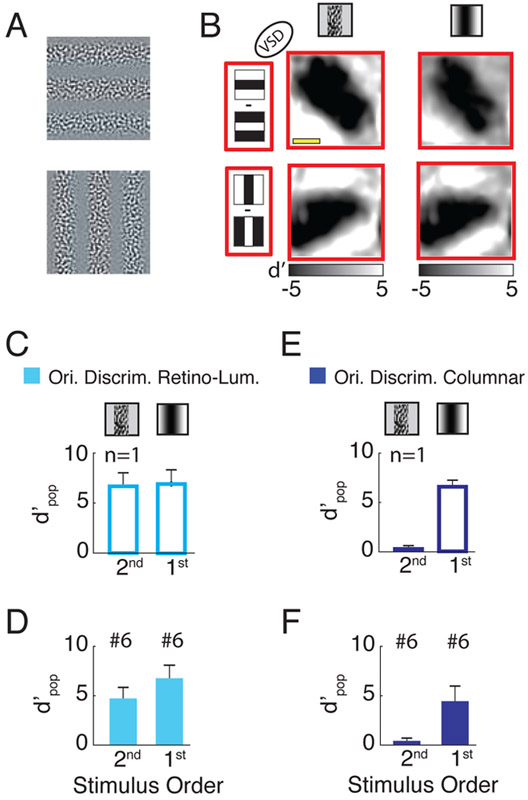

Figure 5. Discriminability of neural responses to second- and first-order gratings with orthogonal orientations.

(A) Example of second-order grating stimuli with orthogonal orientations (the amplitude is clipped for display purposes). (B) Difference (d′ map) across responses to two low SF (0.5 cpd) grating stimuli with opposite phases for the two orthogonal orientations (rows). The two columns represent responses to second- and first-order stimuli, respectively, when the high-contrast region in the second-order stimulus overlaps the dark region in the first-order stimulus. The average correlation between the responses to first- and second-order stimuli is 0.96. (C) Discriminability of the luminance-retinotopic (cyan) responses to second- and first-order stimuli with orthogonal orientations and low SF (same session as in B). (D) Similar to C but averaged across 6 sessions. (E,F) Similar to C and D but for the columnar signals. Scale bar in B - 2mm.

Since the responses of V1 neurons increase monotonically with contrast, such second-order stimuli are expected to drive V1 neurons strongly at the retinotopic locations overlapping the high-contrast portion of the stimulus, and to elicit weak or no response at the regions overlapping the zero-contrast portion of the stimulus. Indeed, a different type of second-order stimulus (one in which a mixture of drifting square-wave gratings is viewed through complementary elongated apertures) was used by Blasdel and Campbell (2001) to measure the organization of the retinotopic map in V1 of anesthetized macaques.

Note that these second-order stimuli with large-scale variations in local contrast are fundamentally different from the low SF first-order stimuli discussed so far, where local contrast is effectively constant (and near zero) over space and the observed modulations in V1 responses are due to variations in local luminance.

Because the responses to second order stimuli are likely to be similar to the luminance-retinotopic responses described above, a downstream mechanism that relies on the spatial pattern of V1 population responses at the retinotopic scale (rather than the tuning properties of individual V1 neurons and columns) could also account for our ability to discriminate the orientation of second-order stimuli. Such a downstream mechanism could also account for the selectivity of some extrastriate neurons to second-order stimuli (El-Shamayleh and Movshon, 2011; Li et al., 2014).

In the second-order stimuli in Figure 5A, the local orientation of the texture is random and independent of the orientation of the global contrast modulation; thus, we expect individual V1 neurons and columns to carry no information regarding the global orientation. On the other hand, as discussed above, we expect these stimuli to elicit clear retinotopic-scale modulation of V1 population responses due to the spatial variations in contrast. Our final goal was to test these predictions.

Our measurements of V1 responses to second-order gratings confirm these predictions and show that low SF (0.5 cpd) second-order gratings evoke retinotopic responses similar to those evoked by low SF first-order gratings (Figure 5B). A summary of the results shows high-quality orientation-selective signals at the retinotopic scale and virtually no orientation-selective signals at the columnar scale (Figure 5C-F). These results provide further support for the notion that orientation-tuned responses at the single neuron/column level in V1 are not necessary for the orientation discrimination of large-scale stimuli.

DISCUSSION

Here we report that the quality of orientation-selective columnar responses to grating stimuli drops quickly when their SF is decreased (Figure 1). This phenomenon, which is expected given the limited size of V1 RFs, creates an “aperture problem” for the computation of orientation of low SF static stimuli. However, we show that there is a large untuned component of the population response at low SFs (Figure 2). This untuned population response produces a blurred homeomorphic representation of the retinal image in the cortex, with stronger response in the retinotopic areas that correspond to the darker stimulus areas (Figure 3). This distributed representation of the stimulus’ local luminance is surprising because neural activity in V1 has generally been assumed to represent primarily local contrast (i.e., local variations in luminance rather than the average local luminance itself).

The observed luminance-retinotopic response is probably related to the imbalance between ON and OFF responses in V1. OFF responses (driven by a decrease in local luminance) have been reported to be stronger than the ON responses (driven by an increase in local luminance) in monkey V1 as well as in other species (Jin et al., 2008; Kremkow et al., 2016; Lee et al., 2016; Xing et al., 2010; Yeh et al., 2009; Zurawel et al., 2014). However, such luminance-retinotopic signals could be used by downstream mechanisms even if the ON/OFF signals were balanced as long as downstream circuits take into account the polarity of the luminance preference of V1 cells.

We demonstrate that this luminance-retinotopic signal carries high-quality single-trial orientation information (Figure 4). The luminance-retinotopic signal is not restricted to the subthreshold responses measured with VSDI: we show that these responses can also be detected using widefield calcium imaging, a signal strongly related to the aggregated spiking activity of pyramidal neurons in the superficial cortical layers (Seidemann et al., 2016). These results show that the luminance-retinotopic signals are transmitted from V1 to downstream cortical areas. Therefore, neurons in downstream visual areas, with larger RFs, could extract orientation information about low SF stimuli by performing computations on this retinotopic representation similar to those that V1 neurons perform on the retinal image (i.e., linear spatial filtering).
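One possible form of such a downstream readout is sketched below, purely as an illustration. A hypothetical downstream unit applies a quadrature pair of sinusoidal templates to the V1 response map, with the templates defined in visual-space coordinates through each cortical pixel's retinotopic position, and orientation is read out as the candidate orientation with the larger energy. Squaring and summing the two linear filter outputs (an energy model) is a choice made here so that the readout does not depend on stimulus phase. This mechanism is not specified in the text, and all names and parameters are assumptions.

```python
import numpy as np

def oriented_energy(resp_map, ecc_x_deg, ecc_y_deg, sf_cpd, ori_deg):
    """Energy of a quadrature pair of linear filters applied in retinotopic coordinates."""
    theta = np.deg2rad(ori_deg)
    u = ecc_x_deg * np.cos(theta) + ecc_y_deg * np.sin(theta)
    m = resp_map - resp_map.mean()  # remove the spatially uniform (untuned) component
    even = np.sum(m * np.cos(2 * np.pi * sf_cpd * u))  # linear filter 1
    odd = np.sum(m * np.sin(2 * np.pi * sf_cpd * u))   # linear filter 2 (90 deg phase shift)
    return even**2 + odd**2

def classify_orientation(resp_map, ecc_x_deg, ecc_y_deg, sf_cpd, candidates=(0.0, 90.0)):
    """Return the candidate orientation whose filter pair yields the most energy."""
    energies = [oriented_energy(resp_map, ecc_x_deg, ecc_y_deg, sf_cpd, o)
                for o in candidates]
    return candidates[int(np.argmax(energies))]
```

Each filter output is a weighted sum of V1 activity, so it relies only on the retinotopic layout of the population response and not on the orientation tuning of individual pixels.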

A similar pooling mechanism can explain how the visual system computes the orientation of second order contrast-modulated stimuli (i.e., large-scale contrast modulations of a fine-grained texture) (Chubb and Sperling, 1988; Landy and Graham, 2004). Humans can easily discriminate the orientation of such second order stimuli (Lin and Wilson, 1996) even though in these stimuli, global orientation is independent of the local texture.

Here we show that, as expected, V1 columnar responses carry little or no information regarding the global orientation of second order contrast-modulation stimuli. On the other hand, we show that such stimuli produce robust modulations at the retinotopic scale in V1. These modulations could serve as a homeomorphic representation of the global contrast modulations of the second order stimuli, similar to the luminance-retinotopic signals that we reveal in response to low-SF first order stimuli.

Our results support the idea that orientation-tuned responses at the single neuron/column level in V1 are not necessary for pattern discrimination of large-scale stimuli. They also suggest that a downstream mechanism that extracts orientation (and possibly other coarse shape and size information (Michel et al., 2013)) from retinotopic-scale modulations of V1 population responses (independent of local orientation) could provide useful orientation information for first- as well as second-order stimuli at low SFs.

Overall, our results suggest that two independent and complementary modes of pattern representation co-exist in V1: an explicit representation of moderate and high SF contrast edges by orientation-tuned neurons and columns, and an implicit representation of moderate and low SF luminance features, where information is represented by the relative level of population activity across the V1 retinotopic map. Such a multi-scale and multi-modal representation is likely to occur in the representation of other visual features (e.g., phase and SF) and other sensory modalities. The interplay between these two modes of representation could explain our striking ability to discriminate patterns over very different spatial scales.

STAR METHODS

CONTACT FOR REAGENT AND RESOURCE SHARING

Further information and requests for resources and reagents should be directed to and will be fulfilled by the Lead Contact, Eyal Seidemann eyal@austin.utexas.edu.

EXPERIMENTAL MODEL AND SUBJECT DETAILS

Macaque monkey

We used four adult (6-year-old) male rhesus monkeys (Macaca mulatta) in this study: monkeys BA, AP, LO and HO. All monkeys were used for the VSD imaging experiments, and monkey LO was also used for the calcium imaging experiments. Monkeys BA and HO were purchased from Covance (San Antonio), and the other two monkeys from the University of Louisiana. All monkeys were housed at the UT Animal Resources Center (https://research.utexas.edu/arc/) in Austin, TX, USA. The monkeys weighed between 7 and 9 kg, were healthy and had not been used in previous procedures. All procedures were approved by the University of Texas Institutional Animal Care and Use Committee and conformed to National Institutes of Health standards. Our general experimental procedures in behaving macaque monkeys have been described in detail previously (Chen et al., 2006, 2008).

METHOD DETAILS

Behavioral task and visual stimulus

Four adult male macaque monkeys were trained to perform a visual fixation task. Each trial began when a fixation spot appeared at the center of a monitor in front of the monkey. The monkey had to shift its gaze to the fixation point and maintain fixation within a small window (less than 2 dva full width) for at least 1500 ms to receive a reward. Eye position was monitored with an infrared eye tracker (Dr. Bouis or EyeLink). After the monkey had maintained fixation for an initial 1000 ms, a large grating stimulus (6×6 dva²) was flashed 5 times at 5 Hz (60 ms on, 140 ms off) in the VSD imaging sessions and 4 times at 4 Hz (100 ms on, 150 ms off) in the calcium imaging sessions. The stimulus was centered at the position corresponding to the receptive field of the imaged neural population (identified by measuring the retinotopy over the imaging area; Yang et al., 2007). All stimuli were presented binocularly.
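As a quick check on the timing in this paragraph, the sketch below computes flash onset and offset times for both imaging protocols. The `FlashProtocol` class is a hypothetical illustration, not part of the authors' released code. It assumes that the 1000 ms initial fixation applies to both protocols, that times are measured from fixation acquisition, and that one flash cycle is simply on-time plus off-time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FlashProtocol:
    """Flash-train timing in ms (values taken from the Methods text)."""
    fixation_ms: int  # initial fixation period before the first flash
    n_flashes: int
    on_ms: int
    off_ms: int

    @property
    def rate_hz(self) -> float:
        # One flash cycle lasts on + off ms, so the rate is 1000 / cycle.
        return 1000.0 / (self.on_ms + self.off_ms)

    def onsets_offsets(self):
        """(onset, offset) of each flash in ms, measured from fixation acquisition."""
        cycle = self.on_ms + self.off_ms
        return [
            (self.fixation_ms + i * cycle, self.fixation_ms + i * cycle + self.on_ms)
            for i in range(self.n_flashes)
        ]


VSD = FlashProtocol(fixation_ms=1000, n_flashes=5, on_ms=60, off_ms=140)
CALCIUM = FlashProtocol(fixation_ms=1000, n_flashes=4, on_ms=100, off_ms=150)

print(VSD.rate_hz, VSD.onsets_offsets()[-1])          # 5.0 (1800, 1860)
print(CALCIUM.rate_hz, CALCIUM.onsets_offsets()[-1])  # 4.0 (1750, 1850)
```

The 200 ms and 250 ms cycles reproduce the stated 5 Hz and 4 Hz flash rates.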

We used two main stimulation protocols: the 3-spatial-frequency (3-SF) protocol and the second order protocol. In the 3-SF protocol, the grating stimulus was presented with all combinations of the following parameters: two orientations = [0, 90°], three spatial frequencies = [0.5, 2, 8 cpd] and two phases = [0, 180°]. In the second order protocol, we used all combinations of two stimulus types (first order or second order), two orientations = [0, 90°], one spatial frequency = [0.5 cpd] and two phases = [0, 180°]. To generate Supp. Movie S1, we presented a drifting luminance grating with the following parameters: SF = 0.5 cpd; temporal frequency = 4 cycles/s; duration = 0.5 s; orientation = [0, 90°]. For every condition, we recorded 10 successful trials. All conditions were randomly interleaved. The mean luminance of the screen was maintained at 30 cd/m², and all stimuli were presented at 100% contrast. The second order grating patterns (Figure 4A) were generated by modulating the contrast of a noise carrier pattern. The carrier was generated by filtering zero-mean binary random noise (values −1 or 1) with an isotropic band-pass filter with a center spatial frequency of 4 cpd and a bandwidth of two octaves.
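A minimal sketch of the factorial condition sets and the randomized interleaving described above. The helper names are hypothetical, and the blank condition used later for the detectability analysis is not listed in this paragraph, so it is omitted here.

```python
import itertools
import random


def three_sf_conditions():
    """(orientation deg, SF cpd, phase deg) for every 3-SF condition."""
    return list(itertools.product((0, 90), (0.5, 2.0, 8.0), (0, 180)))


def second_order_conditions():
    """(stimulus type, orientation deg, SF cpd, phase deg) for the second order protocol."""
    kinds = ("first_order", "second_order")
    return [(k, o, 0.5, p) for k, o, p in itertools.product(kinds, (0, 90), (0, 180))]


def trial_sequence(conditions, trials_per_condition=10, seed=None):
    """Randomly interleaved list containing each condition trials_per_condition times.

    Hypothetical helper: repeating trials lost to fixation breaks is not modelled.
    """
    rng = random.Random(seed)
    seq = [c for c in conditions for _ in range(trials_per_condition)]
    rng.shuffle(seq)
    return seq


print(len(three_sf_conditions()), len(second_order_conditions()))  # 12 8
```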

Optical imaging with voltage and calcium indicators

Each animal was implanted with a metal head post and a metal recording chamber located over the dorsal portion of V1, a region representing the lower contralateral visual field at eccentricities of 1–5 dva (Figures 1B & 3B). A craniotomy and durotomy were performed to obtain a chronic cranial window. A transparent artificial dura made of silicone protected the brain while allowing optical access for imaging. For VSD imaging, in every session we stained the cortical surface within the cranial window with a voltage-sensitive dye (#RH1838 or #RH1691; Shoham et al., 1999) for two hours before imaging began. For calcium imaging, we used a viral-based method (Seidemann et al., 2016) to express GCaMP6f in V1 of one of the three monkeys after the completion of the VSD experiments. Epi-fluorescence imaging was performed using the following filter sets: GCaMP, excitation 470/24 nm, dichroic 505 nm, emission 515 nm cutoff glass filter; VSD, excitation 620/30 nm, dichroic 660 nm, emission 665 nm cutoff glass filter. Illumination was provided by an LED light source (X-Cite120LED) for GCaMP imaging or a QTH lamp (Zeiss) for VSD imaging. Data acquisition was time-locked to the animal's heartbeat. Imaging was performed at 20 Hz for GCaMP and at 100 Hz for VSD. Imaging data were collected at a resolution of 512×512 pixels over a cortical area of ~9×9 mm² (pixel size ~18×18 µm²).
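The quoted pixel size and frame intervals follow directly from the numbers in this paragraph; a short check (the helper names are illustrative):

```python
def pixel_size_um(field_mm: float, n_pixels: int) -> float:
    """Side length (µm) of one pixel when n_pixels span field_mm of cortex."""
    return field_mm * 1000.0 / n_pixels


def frame_interval_ms(rate_hz: float) -> float:
    """Time between successive frames at a given acquisition rate."""
    return 1000.0 / rate_hz


print(round(pixel_size_um(9.0, 512), 1))               # 17.6 (quoted as ~18 µm)
print(frame_interval_ms(100), frame_interval_ms(20))   # 10.0 50.0 (VSD, GCaMP)
```

At 100 Hz, one 200 ms cycle of the 5 Hz VSD flash train therefore spans 20 frames.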

Data Analysis

We recorded V1 responses using VSD imaging in 4 hemispheres of 3 fixating monkeys, using two main visual stimulation protocols. In addition, we performed pilot calcium imaging experiments in one of these three monkeys and a pilot VSD imaging experiment using a low-SF drifting grating in a fourth monkey. For the 3-SF protocol, we recorded 58 sessions from 3 hemispheres of 2 monkeys. We excluded 5 of these 58 sessions because of low signal-to-noise quality. The remaining 53 sessions comprised 20 sessions from monkey AP and 33 sessions from monkey BA. In 8 sessions in monkey BA we presented only the low and medium SF gratings, and in 3 sessions in monkey BA we presented only the high and medium SF gratings. For the second order protocol, we recorded 6 sessions from 2 hemispheres of 2 monkeys (4 from monkey AP and 2 from monkey LO). The VSD image frames captured on each trial were filtered in time (FFT at 5 Hz; Chen et al., 2012), resulting in a single response image for each trial. For every session, outlier trials were removed (see Chen et al., 2012) prior to quantitative analysis.
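The temporal filtering step ("FFT at 5 Hz") collapses each trial's frame sequence into one image. One plausible reading, sketched below under that assumption, is to take the magnitude of the 5 Hz Fourier coefficient at every pixel, which matches the 5 Hz flash rate of the VSD protocol. Chen et al. (2012) describe the actual procedure; whether the published pipeline kept the magnitude, a phase-aligned component, or something else is not stated here, and the function names are hypothetical.

```python
import cmath
import math


def fourier_component(trace, fs_hz, f_hz):
    """Complex amplitude of the f_hz component of one pixel's time course.

    Single-bin DFT scaled by 2/N, so abs() gives the sinusoid's amplitude when
    the window spans a whole number of cycles (e.g. 100 frames at 100 Hz for 5 Hz).
    """
    n = len(trace)
    acc = sum(x * cmath.exp(-2j * math.pi * f_hz * k / fs_hz) for k, x in enumerate(trace))
    return 2.0 * acc / n


def response_image(frames, fs_hz=100.0, f_hz=5.0):
    """Collapse one trial (list of 2-D frames) into a single image of 5 Hz amplitudes."""
    n_rows, n_cols = len(frames[0]), len(frames[0][0])
    return [
        [abs(fourier_component([fr[i][j] for fr in frames], fs_hz, f_hz)) for j in range(n_cols)]
        for i in range(n_rows)
    ]
```

Over a whole number of cycles, a constant offset contributes nothing to the 5 Hz bin, so slow baseline differences between pixels drop out of the response image.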

Discriminability maps

The mean and standard deviation of the response image at each pixel location were then calculated separately for each condition in each session. Recall that for each spatial frequency there were four conditions: 2 orientations [0°, 90°] × 2 phases [0°, 180°]. Thus, for each spatial frequency, we computed a mean-response image for each combination of orientation and phase, $m_{0,0}(x,y)$, $m_{0,180}(x,y)$, $m_{90,0}(x,y)$, $m_{90,180}(x,y)$, as well as a corresponding SD-response image, $\sigma_{0,0}(x,y)$, $\sigma_{0,180}(x,y)$, $\sigma_{90,0}(x,y)$, $\sigma_{90,180}(x,y)$. Unless noted otherwise, the first subscript indicates the orientation of the grating and the second its phase. Finally, we calculated maps of the signed discriminability at each pixel location for different pairs of conditions. For example, the discriminability map for the two orientations, when both phases are 0°, is given by

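$$d'_{0\,\mathrm{vs}\,90}(x,y) = \frac{m_{0,0}(x,y) - m_{90,0}(x,y)}{\sqrt{\left[\sigma_{0,0}^{2}(x,y) + \sigma_{90,0}^{2}(x,y)\right]/2}} \qquad (1)$$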

Such d′ maps for orthogonal orientations are shown in Figures 1C,D,E, and those for opposite phases in Figures 2D,F. For display purposes, the d′ maps from the VSD example experiments in Figures 1, 2 & 4 were slightly cropped (to ~7×7 mm²) because of lower SNR close to the edge of the imaging area. All quantitative analyses were performed on uncropped maps.
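The pixel-wise signed d′ described here can be sketched as follows, assuming the standard form with the root-mean-square of the two standard deviations in the denominator. Images are plain nested lists and the function name is hypothetical.

```python
import math
import statistics


def dprime_map(trials_a, trials_b):
    """Pixel-wise signed d' between two conditions.

    trials_a, trials_b: lists of 2-D response images (one per trial).
    d'(x, y) = (mean_a - mean_b) / sqrt((sd_a**2 + sd_b**2) / 2), sample SDs.
    """
    n_rows, n_cols = len(trials_a[0]), len(trials_a[0][0])
    out = []
    for i in range(n_rows):
        row = []
        for j in range(n_cols):
            a = [t[i][j] for t in trials_a]
            b = [t[i][j] for t in trials_b]
            pooled_var = (statistics.variance(a) + statistics.variance(b)) / 2.0
            diff = statistics.fmean(a) - statistics.fmean(b)
            # A pixel with zero variance in both conditions has no defined d'.
            row.append(diff / math.sqrt(pooled_var) if pooled_var > 0 else math.nan)
        out.append(row)
    return out
```

The sign is kept, so a map comparing opposite phases shows where each phase drives stronger activity.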

Retinotopic and columnar decoding

For every imaging session, we calculated the overall orientation discriminability (d′pop) of neural responses at each stimulus SF, based on the columnar (band-pass filtered at 0.8-3 cyc/mm) and on the retinotopic (low-pass filtered at 0-0.8 cyc/mm) response components, separately. We calculated the overall detectability (discriminability between responses to a stimulus and the blank) based on the unfiltered responses. Mean-response and SD-response images were again computed as described above.
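The separation into retinotopic and columnar components can be sketched as an ideal radial filter in the 2-D Fourier domain. This is an assumption: the text gives only the pass bands (0–0.8 and 0.8–3 cyc/mm), not the filter shape, so a hard-edged filter stands in for whatever the original MATLAB code used. A naive separable DFT keeps the sketch dependency-free; full 512×512 images would call for an FFT library. Function names are hypothetical.

```python
import cmath
import math


def _dft(seq, inverse=False):
    """Naive 1-D discrete Fourier transform; the inverse includes the 1/n factor."""
    n = len(seq)
    sign = 1.0 if inverse else -1.0
    out = []
    for k in range(n):
        acc = sum(v * cmath.exp(sign * 2j * math.pi * k * t / n) for t, v in enumerate(seq))
        out.append(acc / n if inverse else acc)
    return out


def _dft2(img, inverse=False):
    """2-D DFT computed separably: along rows, then along columns."""
    rows = [_dft(r, inverse) for r in img]
    cols = [_dft(list(c), inverse) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]


def _freqs(n, pixel_mm):
    """Signed spatial frequency (cycles/mm) of each DFT index."""
    return [(k if k <= n // 2 else k - n) / (n * pixel_mm) for k in range(n)]


def radial_band(img, pixel_mm, lo_cpmm, hi_cpmm):
    """Keep only components with lo <= radial SF < hi (ideal filter); return a real image."""
    spec = _dft2(img)
    fy, fx = _freqs(len(img), pixel_mm), _freqs(len(img[0]), pixel_mm)
    for i, fyi in enumerate(fy):
        for j, fxj in enumerate(fx):
            if not (lo_cpmm <= math.hypot(fyi, fxj) < hi_cpmm):
                spec[i][j] = 0j
    return [[v.real for v in row] for row in _dft2(spec, inverse=True)]


def retinotopic_component(img, pixel_mm):
    return radial_band(img, pixel_mm, 0.0, 0.8)  # 0-0.8 cyc/mm, including the mean


def columnar_component(img, pixel_mm):
    return radial_band(img, pixel_mm, 0.8, 3.0)  # 0.8-3 cyc/mm
```

With the real ~18 µm pixels, the Nyquist limit is roughly 28 cyc/mm, so both bands are far below it; a toy check can use much coarser pixels.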

To compute overall orientation discriminability at the retinotopic scale, we first computed the orientation discriminability for each of the four possible pairings of phases of the vertical and horizontal gratings and then averaged these discriminabilities:

$$d'_{pop} = \frac{1}{4}\left(d'_{0,0} + d'_{0,180} + d'_{180,0} + d'_{180,180}\right) \qquad (2)$$

Note that in this equation only, the first subscript indicates the phase of the horizontal grating and the second subscript the phase of the vertical grating.

Discriminabilities were measured using a simple linear decoding approach. For example, consider the discriminability when the phases of the vertical and horizontal gratings are both zero. To obtain more accurate estimates of the mean-response maps, we assumed symmetry and combined the maps for opposite phases:

| (3) |

| (4) |

From these two mean-response maps for the two orientations, we computed a matched template for the discrimination,

| (5) |

as well as an estimate of the standard deviation at each matched template pixel location,

| (6) |

From these two functions we computed a normalized matched template that down-weights unreliable pixel locations:

| (7) |

This normalized template was applied to all trials in which both oriented stimuli had phase zero. From the means and standard deviations of the normalized template responses we computed $d'_{0,0}$. To avoid overfitting, the normalized matched template applied to each trial was computed with that trial left out. Equivalent calculations were used for the other discriminabilities in equation (2).
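The leave-one-out matched-template decoder described above can be sketched as follows. Two simplifications are assumptions: the template is the per-pixel mean difference divided by the pooled per-pixel variance (the prose says only that unreliable pixels are down-weighted), and the opposite-phase symmetry averaging is skipped. Response images are flattened to lists of floats, and all names are hypothetical.

```python
import statistics


def _template(a, b, eps=1e-12):
    """Assumed normalized matched template: per-pixel mean difference / pooled variance."""
    w = []
    for p in range(len(a[0])):
        xa = [t[p] for t in a]
        xb = [t[p] for t in b]
        var = (statistics.variance(xa) + statistics.variance(xb)) / 2.0
        w.append((statistics.fmean(xa) - statistics.fmean(xb)) / (var + eps))
    return w


def _score(trial, w):
    """Template response: weighted sum over pixels (one scalar per trial)."""
    return sum(wi * xi for wi, xi in zip(w, trial))


def loo_dprime(trials_a, trials_b):
    """d' of template responses; each trial is scored by a template built without it.

    trials_a, trials_b: lists of flattened response images (lists of floats).
    """
    ra = [_score(t, _template(trials_a[:k] + trials_a[k + 1:], trials_b))
          for k, t in enumerate(trials_a)]
    rb = [_score(t, _template(trials_a, trials_b[:k] + trials_b[k + 1:]))
          for k, t in enumerate(trials_b)]
    pooled_sd = ((statistics.variance(ra) + statistics.variance(rb)) / 2.0) ** 0.5
    return (statistics.fmean(ra) - statistics.fmean(rb)) / pooled_sd
```

Swapping the two conditions flips both the template and the mean difference, so the result is unchanged: a matched template is indifferent to which condition is labeled first.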

We note that the luminance-retinotopic signals can also be decoded using a phase-invariant decoder that first combines signals at each orientation from two energy filters, each based on a quadrature pair of linear filters (as in the model of phase-invariant complex cells (Adelson and Bergen, 1985)) before taking the difference between the output for the two orientations (data not shown).
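A toy version of the phase-invariant alternative: each orientation's energy is the sum of squared projections onto a quadrature pair (cosine- and sine-phase templates), in the spirit of the Adelson and Bergen (1985) energy model, and the decision variable is the difference of the two energies. Here the templates are gratings on a small pixel grid; in the real analysis they would presumably be the cortical (retinotopic) projections of the stimuli, so treat this as an illustration under that assumption. Names are hypothetical.

```python
import math


def grating(n, cycles, orientation, phase_deg):
    """n x n cosine grating; 'horizontal' varies along rows (y), 'vertical' along columns (x)."""
    ph = math.radians(phase_deg)
    k = 2 * math.pi * cycles / n
    if orientation == "horizontal":
        return [[math.cos(k * y + ph) for x in range(n)] for y in range(n)]
    return [[math.cos(k * x + ph) for x in range(n)] for y in range(n)]


def dot(a, b):
    """Inner product of two images of equal size."""
    return sum(u * v for ra, rb in zip(a, b) for u, v in zip(ra, rb))


def energy(response, n, cycles, orientation):
    """Quadrature-pair energy: squared projections onto 0 deg and 90 deg phase templates."""
    even = grating(n, cycles, orientation, 0)
    odd = grating(n, cycles, orientation, 90)
    return dot(response, even) ** 2 + dot(response, odd) ** 2


def orientation_signal(response, n, cycles):
    """Phase-invariant decision variable: > 0 favours horizontal, < 0 vertical."""
    return energy(response, n, cycles, "horizontal") - energy(response, n, cycles, "vertical")
```

Because cos² + sin² = 1, a matching grating yields the same energy at every phase, which is what makes this decoder phase-invariant.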

To compute overall orientation discriminability at the columnar scale, we averaged the mean-response images across the two phases, because columnar-scale (orientation) responses do not depend on phase:

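$$m_{0}(x,y) = \frac{m_{0,0}(x,y) + m_{0,180}(x,y)}{2} \qquad (8)$$

$$m_{90}(x,y) = \frac{m_{90,0}(x,y) + m_{90,180}(x,y)}{2} \qquad (9)$$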

The single normalized matched template is thus given by

| (10) |

This template was applied to all trials (at the given SF), and from the means and standard deviations of the template responses we computed d′pop. Again, to avoid overfitting, the template applied to each trial was computed with that trial left out.

To estimate the overall detectability at the retinotopic scale we used the same method as for orientation discrimination except the normalized templates were based on the difference between the unfiltered mean responses to the grating stimuli and the blank stimulus.

In all cases, the standard error of d′pop within an experiment was estimated by bootstrap resampling (1000 resamples) of the trials from the two conditions.
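A simplified bootstrap for the standard error of d′pop. It resamples already-computed scalar template responses within each condition; a full reimplementation would likely also recompute the leave-one-out template on every resample, which is not done here. Function names are hypothetical.

```python
import random
import statistics


def dprime(ra, rb):
    """d' between two sets of scalar template responses (pooled sample SD)."""
    pooled_sd = ((statistics.variance(ra) + statistics.variance(rb)) / 2.0) ** 0.5
    return (statistics.fmean(ra) - statistics.fmean(rb)) / pooled_sd


def bootstrap_se(ra, rb, n_boot=1000, seed=0):
    """Bootstrap SE of d': resample trials with replacement within each condition."""
    rng = random.Random(seed)
    boots = []
    for _ in range(n_boot):
        sa = rng.choices(ra, k=len(ra))
        sb = rng.choices(rb, k=len(rb))
        boots.append(dprime(sa, sb))
    return statistics.stdev(boots)
```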

Retinotopic responses simulation

We characterized the retinotopy of each cranial window in a separate experiment using the protocol described in Yang et al. (2007) (Figure 2B). Predicted luminance-retinotopic and second order responses were simulated by projecting the visual grating stimuli onto the retinotopic coordinates of the imaging area in each session and convolving the result with a 2D Gaussian filter (sigma = 1.5 mm) to simulate the blurring effect of the membrane-potential cortical point image (Palmer et al., 2012). For the luminance predictions, we tested both possible polarities and found that the observed V1 response is consistent with stronger V1 activity in response to the dark regions of the grating. The retinotopic coordinates of the imaging area in each session were estimated by aligning the image of the vasculature in that session with the image of the vasculature in the retinotopy characterization experiment.
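The simulation can be sketched in two stages: sample the grating at the visual-field position of each cortical pixel, then blur with a Gaussian of sigma = 1.5 mm and flip the sign so that dark regions predict stronger activity. The sigma and the dark-dominant polarity come from the text; the `to_visual` callable (a stand-in for the retinotopic map measured in each window), the linear toy map used in the check, the 3-sigma kernel truncation and the edge renormalization are all assumptions, and the function names are hypothetical.

```python
import math


def project_grating(n, pixel_mm, sf_cpd, axis_deg, phase_deg, to_visual):
    """Luminance contrast (-1..1) of a grating at the visual location of each cortical pixel.

    to_visual(x_mm, y_mm) -> (x_deg, y_deg) is a placeholder for the measured
    retinotopic map; axis_deg is the direction along which luminance varies.
    """
    th, ph = math.radians(axis_deg), math.radians(phase_deg)
    img = []
    for i in range(n):
        row = []
        for j in range(n):
            xd, yd = to_visual(j * pixel_mm, i * pixel_mm)
            u = xd * math.cos(th) + yd * math.sin(th)  # position along the modulation axis (deg)
            row.append(math.cos(2 * math.pi * sf_cpd * u + ph))
        img.append(row)
    return img


def gaussian_blur(img, sigma_px):
    """Separable Gaussian blur truncated at 3 sigma, renormalized at the image edges."""
    r = int(math.ceil(3 * sigma_px))
    kernel = [math.exp(-0.5 * (d / sigma_px) ** 2) for d in range(-r, r + 1)]

    def blur_rows(rows):
        out = []
        for row in rows:
            n = len(row)
            new_row = []
            for c in range(n):
                acc = wsum = 0.0
                for d in range(max(-r, -c), min(r, n - 1 - c) + 1):
                    w = kernel[d + r]
                    acc += w * row[c + d]
                    wsum += w
                new_row.append(acc / wsum)
            out.append(new_row)
        return out

    def transpose(m):
        return [list(col) for col in zip(*m)]

    return transpose(blur_rows(transpose(blur_rows(img))))


def predicted_response(stim, pixel_mm, sigma_mm=1.5, dark_dominant=True):
    """Blur by the cortical point image; with dark_dominant, darker regions predict more activity."""
    blurred = gaussian_blur(stim, sigma_mm / pixel_mm)
    sign = -1.0 if dark_dominant else 1.0
    return [[sign * v for v in row] for row in blurred]
```

The blur removes fine structure (for example, columnar-scale modulations) while preserving the coarse luminance layout of a low-SF grating projected onto the map.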

Detection of the cortical area in which the calcium indicator GCaMP6f is expressed

In the calcium imaging example d′ maps (Figures 1E and 2F), a red contour outlines the area in which the calcium indicator GCaMP6f was expressed. To estimate this area, we first averaged the d′ maps comparing evoked responses with the blank. Second, we smoothed the amplitude of the resulting map over space using a 2D Gaussian filter (sigma = 2 pixels). Third, we extracted the contour at which d′ equals 4.
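The three-step estimate of the GCaMP6f expression area can be sketched as averaging, smoothing and thresholding; the boolean mask below is the region inside the d′ = 4 contour. Reading "amplitude" as the absolute value, truncating the Gaussian at 3 sigma, and renormalizing at the image edges are assumptions, and the names are hypothetical.

```python
import math


def _smooth(img, sigma_px):
    """Separable Gaussian smoothing, truncated at 3 sigma and renormalized at the edges."""
    r = int(math.ceil(3 * sigma_px))
    k = [math.exp(-0.5 * (d / sigma_px) ** 2) for d in range(-r, r + 1)]

    def along_rows(m):
        out = []
        for row in m:
            n = len(row)
            new_row = []
            for c in range(n):
                ds = range(max(-r, -c), min(r, n - 1 - c) + 1)
                new_row.append(sum(k[d + r] * row[c + d] for d in ds) / sum(k[d + r] for d in ds))
            out.append(new_row)
        return out

    once = [list(col) for col in zip(*along_rows(img))]   # smooth along x, then transpose
    return [list(col) for col in zip(*along_rows(once))]  # smooth along y, transpose back


def expression_mask(dprime_maps, sigma_px=2.0, threshold=4.0):
    """True inside the d' = threshold contour of the smoothed, averaged evoked-vs-blank map."""
    n_rows, n_cols = len(dprime_maps[0]), len(dprime_maps[0][0])
    mean_map = [[sum(m[i][j] for m in dprime_maps) / len(dprime_maps) for j in range(n_cols)]
                for i in range(n_rows)]
    # Assumption: "amplitude" is read as the absolute value of the averaged map.
    smoothed = _smooth([[abs(v) for v in row] for row in mean_map], sigma_px)
    return [[v >= threshold for v in row] for row in smoothed]
```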

QUANTIFICATION AND STATISTICAL ANALYSIS

Statistics were performed using custom MATLAB software.

Population discriminability

The signal-to-noise ratio between neural responses to two different stimulus conditions (population discriminability, d′pop) was estimated for each experiment by calculating d′ across single-trial responses, each pooled across pixels with pixel-specific weights (so that each response image was converted to a scalar value; see Method Details above). Because the weights were calculated from the same data to which they were applied, each trial was pooled with weights estimated from all other trials (leave-one-out cross-validation). The use of this procedure is mentioned in the Results and Methods sections.

Population discriminability examples

In population discriminability examples (e.g., Figure 1F), error bars indicate bootstrap standard errors (1000 resamples), as specified in the figure legends.

Average population discriminability

Group data (e.g., Figure 1G) are reported as mean ± SEM, as specified in the figure legends. The number of experiments averaged for each condition is reported above each bar of each bar plot (preceded by a “#” symbol). Significant differences across conditions were assessed using a two-sample t-test across the experiments' population discriminabilities (P < 0.05, two-sided, MATLAB function “ttest2.m”), as specified in the figure legends, and are indicated in the figures by a red asterisk above the corresponding bar.
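For readers reproducing the group statistics outside MATLAB, the sketch below computes the pooled-variance two-sample t statistic (the equal-variance form, which is the default of MATLAB's `ttest2`) and a two-sided p-value by numerically integrating Student's t density with Simpson's rule. It is a dependency-free illustration with hypothetical function names; in practice a library routine such as SciPy's `scipy.stats.ttest_ind` would be the usual choice.

```python
import math
import statistics


def ttest2_pooled(x, y):
    """Two-sample t statistic with pooled variance (equal-variance assumption) and its df."""
    nx, ny = len(x), len(y)
    df = nx + ny - 2
    sp2 = ((nx - 1) * statistics.variance(x) + (ny - 1) * statistics.variance(y)) / df
    t = (statistics.fmean(x) - statistics.fmean(y)) / math.sqrt(sp2 * (1 / nx + 1 / ny))
    return t, df


def t_pdf(u, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c) * (1 + u * u / df) ** (-(df + 1) / 2)


def two_sided_p(t, df, steps=2000):
    """P(|T| >= |t|), from Simpson integration of the density on [0, |t|] (steps must be even)."""
    a = abs(t)
    h = a / steps
    s = t_pdf(0, df) + t_pdf(a, df)
    for i in range(1, steps):
        s += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = s * h / 3  # P(0 <= T <= |t|)
    return max(0.0, 1.0 - 2.0 * area)
```

A difference would be marked significant when `two_sided_p(*ttest2_pooled(x, y)) < 0.05`, where `x` and `y` are the per-experiment d′pop values for two conditions.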

Strategy for randomization and/or stratification

The order of the visual stimuli was randomly shuffled during experiments.

Inclusion and Exclusion Criteria of any Data

Of the 58 sessions recorded with the 3-SF protocol, we excluded 5 because of low signal-to-noise quality. A session was excluded if the population discriminability (d′pop) of its columnar responses to orthogonal gratings at 2 cpd was lower than 2.

DATA AND SOFTWARE AVAILABILITY

The dataset and custom MATLAB code are available on Mendeley Data (doi:10.17632/5hr36rp3rz.2).

Supplementary Material

Movie S1. Time course of luminance-retinotopic responses to two low-SF grating stimuli drifting in orthogonal directions (related to Figure 3). The central panel displays an image of the entire cranial window in the right hemisphere of monkey HO. Scale bar: 2 mm. Brain orientation references (top left): M, medial; L, lateral; R, rostral; C, caudal. Movie sequence: (1) cortical areas (V1, V2 and V4) and anatomical references (V1/V2 border, lunate sulcus) are labeled in red. (2) The labels disappear and the time course of the luminance-retinotopic response to the first stimulus (vertical drifting grating; SF: 0.5 cpd; TF: 4 cycles/s; size: 6 dva²) is presented (elapsed time shown at the top of the panel). (3) The anatomical labels are presented again. (4) The time course of the response to the second stimulus (horizontal drifting grating) is presented. Response colors represent the pixel-by-pixel discriminability (d′ map) between VSD luminance-retinotopic responses (after low-pass spatial filtering, <0.8 cyc/mm) to opposite stimulus phases. For each time frame, we calculated the d′ map with respect to the time frame of the same stimulus condition in which the stimulus phase was opposite (half a cycle later, +125 ms). Only pixels with d′ < −0.8 or d′ > 0.8 are shown (color bar on the right). The top-right inset shows the time course of the visual stimulus in the corresponding region of the visual field (bottom-left portion of the visual field).

KEY RESOURCES TABLE

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Chemicals, Peptides, and Recombinant Proteins | | |
| Voltage Sensitive Dyes | Optical Imaging, Ltd, Israel | #RH1838, #RH1691 |
| Experimental Models: Organisms/Strains | | |
| Rhesus Monkeys | Covance San Antonio and University of Louisiana | (BA) D290; (HO) D291; (AP) A11N156; (LO) A11N115 |
| Recombinant DNA | | |
| AAV1-CaMKIIa-NES-GCaMP6f | Karl Deisseroth Lab, Stanford | N/A |
| AAV1-CaMKIIamGCaMP6f | Karl Deisseroth Lab, Stanford | N/A |
| Software and Algorithms | | |
| MATLAB | MathWorks | RRID:SCR_001622 |
| Dataset and Matlab custom codes to reproduce results | Mendeley Data | doi:10.17632/5hr36rp3rz.2 |

Highlights.

V1 neurons carry poor orientation information at low spatial frequencies (SF)

This study reveals a retinotopic representation of low SF luminance stimuli in V1

This distributed representation carries high quality orientation information

This signal is likely to contribute to our scale-invariant visual capabilities

ACKNOWLEDGMENTS

We thank Tihomir Cakic, Kelly Todd and members of Seidemann laboratory for their assistance with this project. This work was supported by NIH grants EY016454 to ES and EY024662 to WSG and ES.

Footnotes

DECLARATION OF INTEREST

The authors declare no competing interests.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

REFERENCES

- Adelson E, and Movshon J (1982). Phenomenal coherence of moving visual patterns. Nature 300, 523–525.

- Adelson EH, and Bergen JR (1985). Spatiotemporal energy models for the perception of motion. J. Opt. Soc. Am. A 2, 284–299.

- Angelucci A, Levitt JB, and Lund JS (2002). Anatomical origins of the classical receptive field of single neurons in macaque visual cortical area V1. Prog. Brain Res 136, 373–388.

- Blasdel GG (1992). Orientation selectivity, preference, and continuity in monkey striate cortex. J. Neurosci 12, 3139–3161.

- Blasdel GG, and Campbell D (2001). Functional retinotopy of monkey visual cortex. J. Neurosci 21, 8286–8301.

- Blasdel GG, and Lund JS (1983). Termination of afferent axons in macaque striate cortex. J. Neurosci 3, 1389–1413.

- Bonhoeffer T, and Grinvald A (1991). Iso-orientation domains in cat visual cortex are arranged in pinwheel-like patterns. Nature 353, 429–431.

- Burr DC, and Wijesundra S-A (1991). Orientation discrimination depends on spatial frequency. Vision Res. 31, 1449–1452.

- Chen Y, Geisler WS, and Seidemann E (2006). Optimal decoding of correlated neural population responses in the primate visual cortex. Nat. Neurosci 9, 1412–1420.

- Chen Y, Geisler WS, and Seidemann E (2008). Optimal temporal decoding of neural population responses in a reaction-time visual detection task. J. Neurophysiol 99, 1366–1379.

- Chen Y, Palmer CR, and Seidemann E (2012). The relationship between voltage-sensitive dye imaging signals and spiking activity of neural populations in primate V1. J. Neurophysiol 107, 3281–3295.

- Chubb C, and Sperling G (1988). Drift-balanced random stimuli: a general basis for studying non-Fourier motion perception. J. Opt. Soc. Am. A 5, 1986–2007.

- El-Shamayleh Y, and Movshon JA (2011). Neuronal Responses to Texture-Defined Form in Macaque Visual Area V2. J. Neurosci 31, 8543–8555.

- Van Essen DC, and Newsome WT (1984). The visual field representation in the striate cortex of the macaque monkey: Asymmetries, anisotropies, and individual variability. Vision Res. 24, 429–448.

- Hubel D, and Wiesel T (1968). Receptive fields and functional architecture of monkey striate cortex. J. Physiol 195, 215–243.

- Hubel DH, and Wiesel TN (1974). Uniformity of monkey striate cortex: a parallel relationship between field size, scatter, and magnification factor. J. Comp. Neurol 158, 295–305.

- Jamar JHT, and Koenderink JJ (1983). Sine-wave gratings: Scale invariance and spatial integration at suprathreshold contrast. Vision Res. 23, 805–810.

- Jin JZ, Weng C, Yeh CI, Gordon JA, Ruthazer ES, Stryker MP, Swadlow HA, and Alonso JM (2008). On and off domains of geniculate afferents in cat primary visual cortex. Nat. Neurosci 11, 88–94.

- Kremkow J, Jin J, Wang Y, and Alonso JM (2016). Principles underlying sensory map topography in primary visual cortex. Nature 533, 52–57.

- Landy MS, and Graham N (2004). Visual Perception of Texture. Vis. Neurosci 10003, 1106–1118.

- Lee K-S, Huang X, and Fitzpatrick D (2016). Topology of ON and OFF inputs in visual cortex enables an invariant columnar architecture. Nature 533, 90–94.

- Levitt JB, and Lund JS (2002). The spatial extent over which neurons in macaque striate cortex pool visual signals. Vis. Neurosci 19, 439–452.

- Li G, Yao Z, Wang Z, Yuan N, Talebi V, Tan J, Wang Y, Zhou Y, and Baker CL (2014). Form-Cue Invariant Second-Order Neuronal Responses to Contrast Modulation in Primate Area V2. J. Neurosci 34, 12081–12092.

- Lin LM, and Wilson HR (1996). Fourier and non-fourier pattern discrimination compared. Vision Res. 36, 1907–1918.

- Lund JS, Angelucci A, and Bressloff PC (2003). Anatomical Substrates for Functional Columns in Macaque Monkey Primary Visual Cortex. Cereb. Cortex 13, 15–24.

- Marr D (1982). Vision: A computational investigation into the human representation and processing of visual information. The MIT Press.

- Michel MM, Chen Y, Geisler WS, and Seidemann E (2013). An illusion predicted by V1 population activity implicates cortical topography in shape perception. Nat. Neurosci 16, 1477–1483.

- Palmer CR, Chen Y, and Seidemann E (2012). Uniform spatial spread of population activity in primate parafoveal V1. J. Neurophysiol 107, 1857–1867.

- Seidemann E, Arieli A, Grinvald A, and Slovin H (2002). Dynamics of depolarization and hyperpolarization in the frontal cortex and saccade goal. Science 295, 862–865.

- Seidemann E, Chen Y, Bai Y, Chen SC, Mehta P, Kajs BL, Geisler WS, and Zemelman BV (2016). Calcium imaging with genetically encoded indicators in behaving primates. Elife 5, 1–19.

- Shoham D, Glaser DE, Arieli A, Kenet T, Wijnbergen C, Toledo Y, Hildesheim R, and Grinvald A (1999). Imaging cortical dynamics at high spatial and temporal resolution with novel blue voltage-sensitive dyes. Neuron 24, 791–802.

- De Valois RL, Albrecht DG, and Thorell LG (1982). Spatial frequency selectivity of cells in macaque visual cortex. Vision Res. 22, 545–559.

- Xing D, Yeh CI, and Shapley RM (2010). Generation of black-dominant responses in V1 cortex. J. Neurosci 30, 13504–13512.

- Yang Z, Heeger DJ, and Seidemann E (2007). Rapid and Precise Retinotopic Mapping of the Visual Cortex Obtained by Voltage-Sensitive Dye Imaging in the Behaving Monkey. J. Neurophysiol 98, 1002–1014.

- Yeh CI, Xing D, and Shapley RM (2009). “Black” Responses Dominate Macaque Primary Visual Cortex V1. J. Neurosci 29, 11753–11760.

- Zurawel G, Ayzenshtat I, Zweig S, Shapley R, and Slovin H (2014). A Contrast and Surface Code Explains Complex Responses to Black and White Stimuli in V1. J. Neurosci 34, 14388–14402.
