Abstract

Background:

Measurement of cognitive behavioural therapy (CBT) competency is often resource intensive. A popular emerging alternative to independent observers’ ratings is using other perspectives for rating competency.

Aims:

This pilot study compared ratings of CBT competency from four perspectives (patient, therapist, supervisor and independent observer) using the Cognitive Therapy Scale (CTS).

Method:

Patients (n = 12, 75% female, mean age 30.5 years) and therapists (n = 5, female, mean age 26.6 years) completed the CTS after therapy sessions, and a clinical supervisor and independent observers rated recordings of the same sessions.

Results:

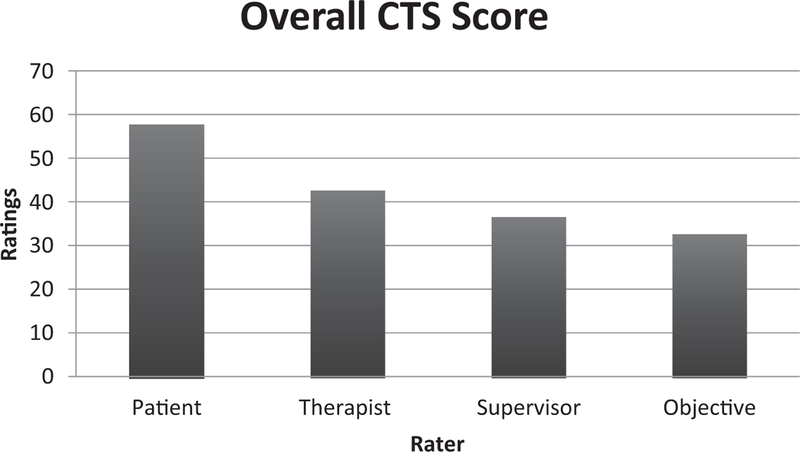

Analyses of variance revealed that therapist average CTS competency ratings were not different from supervisor ratings, and supervisor ratings were not different from independent observer ratings; however, therapist ratings were higher than independent observer ratings and patient ratings were higher than all other raters.

Conclusions:

Raters differed in competency ratings. Implications for potential use and adaptation of CBT competency measurement methods to enhance training and implementation are discussed.

Keywords: Cognitive behavioural therapy, competence ratings

Introduction

Measurement of cognitive behavioural therapy (CBT) competency is designed to reveal how well therapists deliver CBT. The results can serve many important functions in research and clinical training contexts and have the potential to optimize training and dissemination of CBT. However, competency measures are typically resource intensive, relying on objective raters, which is not often feasible in practice settings. As a result, more efficient methods for assessing CBT competence are being explored.

An alternative approach to observer ratings is to garner the perspectives of clinical supervisors, therapists and patients (Muse and McManus, 2013). Supervisors may be especially well qualified to evaluate therapist competency as they have a strong understanding of important contextual factors including therapist variables (e.g. therapist background/skills) and patient variables (e.g. treatment plan targets). This added context may allow supervisors to more accurately evaluate therapists’ abilities. Alternatively, this information may produce demand characteristics that could inflate competency ratings. Additionally, supervisors are often involved with treatment planning, especially with trainees, which probably leads to over-estimation of competency scores.

Therapists’ self-reported competency has potential benefits including that therapists do not need to review recorded therapy sessions before rating competency and it encourages the therapist to reflect on the session, potentially leading to training opportunities to promote skill growth. However, research suggests that therapists have difficulty evaluating their own competence (Brosan et al., 2008), particularly when they have yet to develop skills in a specific intervention (Mathieson et al., 2009). Indeed, therapists often either over- or under-estimate their own competency compared with supervisor or independent observer ratings.

Utilizing patients’ perspectives is another low resource option for measuring CBT competency, as patients, like therapists, can complete the ratings immediately following the session. However, patients’ limited CBT knowledge may impact ratings as they may have difficulty both understanding specific CBT components and rating the competency of their therapist. Patients may also experience bias in their rating of competency due to their therapeutic relationship and perceived implications that ratings may have for continued treatment (Muse and McManus, 2013).

In summary, each perspective has potential strengths and weaknesses (Muse and McManus, 2013). Insight into the relations among these perspectives will help determine if competency measurement methods that require fewer resources can be adopted to increase the likelihood of utilization in the context of enhancing training and implementation of CBT, especially for therapists early in training. For example, if therapist and patient competency ratings agree with those of objective raters or supervisors, more resource-intensive competency evaluation methods may not be necessary. In contrast, if objective rater, supervisor, therapist and patient ratings fail to agree, alternative methods may be required for the feasible evaluation of CBT competency.

The present study

Few studies have examined multiple perspectives (i.e. independent observer, supervisor, trainee therapists and patient) of competency evaluation and no current studies have examined all perspectives together. There is limited consensus regarding optimal competency measures and methods (e.g. Mathieson et al., 2009). The aim of the current study was to collect exploratory pilot data to replicate and build on previous studies by comparing ratings of therapists’ competency from four different perspectives.

Method

Participants and raters

Patients were recruited at a psychology training clinic and all procedures were approved by the university’s Institutional Review Board. Patients (n = 12) were mainly female (75%), Caucasian (75%), with a mean age of 30.5 years (SD = 13.05), and primary presenting problems included major depressive disorder (58.3%) and/or anxiety disorders (41.7%). Five trainee therapists enrolled in the university’s CBT practicum participated in the study. The trainee therapists had bachelor’s degrees and were in their fourth to sixth year of doctoral training. The sample of therapists was entirely female and primarily Caucasian (n = 3, 60%), with an average age of 26.6 years (SD = 1.34). Prior to enrolling in the CBT training practicum, four therapists (80%) had exposure to CBT through reading CBT research articles and two therapists (40%) had attended a CBT workshop.

Objective raters consisted of two CBT experts, one advanced doctoral student, and two bachelor-level research assistants. The objective raters had over 30 years of combined experience in CBT and received more than 25 hours of training together on the Cognitive Therapy Scale (CTS). Inter-rater reliability has been found to be variable for the CTS (Muse and McManus, 2013); accordingly, the current study adopted a group consensus rating approach, in which the raters determined the score for each item together rather than individually (Simons et al., 2010). The objective rater group was led by the senior CBT researchers. The supervisor was a licensed psychologist with over eight years of CBT experience. Objective raters and the supervisor conducted CTS training together, but rated sessions independently.

Measures

Cognitive Therapy Scale (CTS; Young and Beck, 1980). The CTS is an 11-item measure of CBT skill competency using a seven-point response format ranging from 0 (poor) to 6 (excellent). With permission from Dr A. T. Beck, the wording of the CTS items was adapted to reflect the view of the client and to create a self-report version for the therapist.
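To make the scoring arithmetic concrete, the following is a minimal sketch of how a CTS total score could be computed from the 11 item ratings. It assumes the total is the simple sum of the items (giving a possible range of 0 to 66); the function name `cts_total` and the example ratings are hypothetical and are not taken from the study.

```python
from typing import Sequence

N_ITEMS = 11                  # number of CTS items, per the measure description
MIN_SCORE, MAX_SCORE = 0, 6   # seven-point response format: 0 (poor) to 6 (excellent)


def cts_total(item_scores: Sequence[int]) -> int:
    """Sum the 11 item ratings into a total score (assumed range 0-66)."""
    if len(item_scores) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} item scores, got {len(item_scores)}")
    for score in item_scores:
        if not MIN_SCORE <= score <= MAX_SCORE:
            raise ValueError(f"item score {score} outside {MIN_SCORE}-{MAX_SCORE}")
    return sum(item_scores)


# Hypothetical ratings for one session, invented for illustration only.
example_ratings = [4, 3, 5, 4, 4, 3, 5, 4, 3, 4, 4]
print(cts_total(example_ratings))
```

The same function would apply to any of the four rater perspectives, since all of them used the same 11-item, 0 to 6 format.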

Procedure

Both patients and therapists completed ratings immediately following the session and supervisor and objective raters rated later using video recordings. Ratings were made for the second session of treatment only for each patient as it is the first standard, non-introductory session where CBT content would be introduced and the therapist’s strategy for change could be assessed. Patients were informed their responses would be anonymous.

Results

CTS total score

An ANOVA conducted on the CTS total scale score revealed significant differences among raters: F(3,44) = 45.24, p < .001. Post-hoc results indicated that supervisor scores did not differ significantly from objective raters’ scores and that therapist scores did not differ significantly from supervisor scores; all other means were significantly different. Observed power was calculated as .98 or higher for all analyses. Of note, the full range of the CTS scale was not utilized by raters (e.g. no zeroes were given).
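As an illustration of where the reported degrees of freedom come from, the sketch below computes a one-way ANOVA F statistic by hand. It assumes a between-groups design with four rater groups of 12 ratings each, which is what F(3,44) suggests (4 − 1 = 3 and 48 − 4 = 44); the study's actual analysis software and model are not specified. The function `one_way_anova` and all ratings shown are hypothetical, so the printed F value will not match the reported one.

```python
from statistics import mean
from typing import Dict, List, Tuple


def one_way_anova(groups: Dict[str, List[float]]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way between-groups ANOVA."""
    scores = [x for g in groups.values() for x in g]
    grand_mean = mean(scores)
    # Between-groups sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups.values())
    # Within-groups sum of squares: spread of ratings around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups.values() for x in g)
    df_between = len(groups) - 1
    df_within = len(scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical CTS totals (0-66), invented for illustration; NOT the study data.
ratings = {
    "patient":    [60, 58, 62, 55, 61, 59, 63, 57, 60, 62, 58, 61],
    "therapist":  [45, 42, 48, 40, 44, 46, 43, 47, 41, 45, 44, 46],
    "supervisor": [40, 38, 43, 36, 41, 39, 42, 37, 40, 44, 38, 41],
    "observer":   [36, 34, 39, 33, 37, 35, 38, 32, 36, 40, 34, 37],
}

f_stat, df_b, df_w = one_way_anova(ratings)
print(f"F({df_b},{df_w}) = {f_stat:.2f}")
```

With four groups of 12 ratings the degrees of freedom come out as (3, 44) regardless of the values entered; only the F statistic depends on the data.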

Discussion

This pilot study compared CBT competency ratings from four perspectives and found that therapist CTS ratings were not significantly different from supervisor ratings and supervisor ratings were not significantly different from independent observer ratings; however, therapist ratings were significantly higher than independent observer ratings and patient ratings were significantly higher than those of all other raters (see Fig. 1). These results indicate that different raters have varying perceptions of CBT competency and that using less resource-intensive raters (i.e. therapists or patients) may not be a viable option for the CTS with the limited adaptations made. Given the lack of agreement among ratings from different perspectives, should one be given privilege over the others?

Figure 1. Average CTS ratings across raters.

This is a difficult question as each perspective may be providing different and useful information; however, current competency ratings may not fully measure what they intend to measure. The CTS and other competency measures are vulnerable to issues of validity and reliability. Measures focused on assessing adherence to CBT or whether or not the clinician is utilizing specific components prescribed by CBT may be ideal. Alternatively, efforts to increase the specificity of the CTS could optimize its psychometric performance. Recent research has started this process by addressing the limitations of the CTS: providing behavior-based anchors, updating the areas of competency based on up-to-date research, and reducing the number of inferences required of assessors (Muse et al., 2017). The Assessment of Core CBT Skills (ACCS; https://www.accs-scale.co.uk/) shows initial promise in addressing problems with the CTS and has potential to be used by the therapists themselves, reducing the resources needed for competency measurement. Future research should continue to work on validating and refining competency measures like the ACCS that are less resource intensive in order to enhance training and, in turn, increase accessibility to empirically based treatments, allowing for better patient outcomes.

Limitations

While the current study has a number of strengths (e.g. four rater perspectives within the same study, using the same rating scale), there are several noteworthy limitations. Primarily, this was a pilot sample consisting of a small number of therapists in training and patients, and future studies should replicate these findings with a larger sample and a wider variety of therapist experience. These pilot data provide initial evidence suggesting that overall there are differences between raters and that using the CTS in this modified manner is probably not a feasible solution for reducing the resources needed for competency ratings. Second, the therapists were trainees and therefore findings may not generalize to more experienced therapists. However, competency ratings are often used for training purposes and this sample is informative for that purpose. Third, patients in this study varied in diagnosis, which may affect how competency was rated; however, this is more generalizable to what is seen in community settings. Fourth, the independent observer group ranged in experience with CBT. However, the consensus format, in which the objective group rated items through discussion, allows for a more thorough examination of each item where there may be disagreement (Simons et al., 2010). Additionally, future research should focus on rating several sessions for each therapist and patient dyad to have a more comprehensive view of competency rather than a one-time snapshot.

Another limitation of the current study is that the supervisor trained on the CTS with the objective raters. This introduces a third variable that may explain why the supervisor’s ratings align with those of the objective raters. Additionally, the supervisor and observers rated therapist competency after the end of treatment and the supervisor knew the treatment outcome, which may have affected competency ratings. Ideally, ratings would happen as sessions occur, something that may not be possible in many clinics due to time constraints; this delay may actually affect ratings more than is currently known. Therapists and patients were not trained on the CTS, which may have had an impact on their ratings as well. Overall, these pilot data provide preliminary insight into issues with current competency rating systems.

Conclusion

In conclusion, this study adds to the growing literature investigating CBT competency measures. Results showed that ratings of therapists’ competency differed. Although the current study used the CTS, many of the same issues exist for related and revised versions of competency measures used in CBT training. Future research is needed to examine if differing views of competency can provide useful information for training and if new competency measures or methods (e.g. ACCS) can be developed and fine-tuned to increase validity and reliability in order to enhance training of new CBT therapists.

Acknowledgements

We thank Dr A.T. Beck for allowing us to modify the CTS to examine this important question. We also thank the therapists and patients for participating in this study.

Financial support: This research was supported in part by a large research grant from the Institute for Scholarship in the Liberal Arts at the University of Notre Dame to Anne D. Simons.

Footnotes

Conflicts of interest: The authors identify no conflicts of interest.

Ethical statement: The authors assert that all procedures contributing to this work comply with the ethical standards of the relevant national and institutional committees on human experimentation and with the Helsinki Declaration of 1975, and its most recent revision.

Contributor Information

David C. Rozek, University of Utah

Jamie L. Serrano, VA Roseburg Healthcare System

Brigid R. Marriott, University of Missouri-Columbia

Kelli S. Scott, Indiana University Bloomington

L. Brian Hickman, University of Washington St Louis

Brittany M. Brothers, Indiana University Bloomington

Cara C. Lewis, Kaiser Permanente Washington Health Research Institute, Indiana University Bloomington and University of Washington

Anne D. Simons, University of Oregon and Oregon Research Institute

References

- Brosan L, Reynolds S and Moore RG (2008). Self-evaluation of cognitive therapy performance: do therapists know how competent they are? Behavioural and Cognitive Psychotherapy, 36, 581–587. doi: 10.1017/S1352465808004438

- Mathieson FM, Barnfield T and Beaumont G (2009). Are we as good as we think we are? Self-assessment versus other forms of assessment of competence in psychotherapy. The Cognitive Behaviour Therapist, 2, 43–50. doi: 10.1017/S1754470X08000081

- Muse K and McManus F (2013). A systematic review of methods for assessing competence in cognitive-behavioural therapy. Clinical Psychology Review, 33, 484–499. doi: 10.1016/j.cpr.2013.01.010

- Muse K, McManus F, Rakovshik S and Thwaites R (2017). Development and psychometric evaluation of the Assessment of Core CBT Skills (ACCS): an observation-based tool for assessing cognitive behavioral therapy competence. Psychological Assessment, 5, 542–555.

- Simons AD, Padesky CA, Montemarano J, Lewis CC, Murakami J, Lamb K, DeVinney S, Reid M, Smith DA and Beck AT (2010). Training and dissemination of cognitive behavior therapy for depression in adults: a preliminary examination of therapist competence and client outcomes. Journal of Consulting and Clinical Psychology, 5, 751–756. doi: 10.1037/a0020569

- Young J and Beck AT (1980). Cognitive Therapy Scale: Rating Manual. Unpublished manuscript, Center for Cognitive Therapy, University of Pennsylvania, Philadelphia, PA.
