Abstract

There is growing interest in the potential for complex systems perspectives in evaluation. This reflects a move away from interest in linear chains of cause-and-effect, towards considering health as an outcome of interlinked elements within a connected whole. Although systems-based approaches have a long history, their concrete implications for health decisions are still being assessed. Similarly, the implications of systems perspectives for the conduct of systematic reviews require further consideration. Such reviews underpin decisions about the implementation of effective interventions, and are a crucial part of the development of guidelines. Although they are tried and tested as a means of synthesising evidence on the effectiveness of interventions, their applicability to the synthesis of evidence about complex interventions and complex systems requires further investigation. This paper, one of a series of papers commissioned by the WHO, sets out the concrete methodological implications of a complexity perspective for the conduct of systematic reviews. It focuses on how review questions can be framed within a complexity perspective, and on the implications for the evidence that is reviewed. It proposes criteria which can be used to determine whether or not a complexity perspective will add value to a review or an evidence-based guideline, and describes how to operationalise key aspects of complexity as concrete research questions. Finally, it shows how these questions map onto specific types of evidence, with a focus on the role of qualitative and quantitative evidence, and other types of information.

Keywords: complex interventions, systems, systematic reviews, public health

Summary box.

There is little guidance on the implications of complex systems for reviewing evidence or for developing guidelines as a basis for recommendations for practice and policy.

Key aspects of complex systems include interactions between interventions and the system itself; emergent properties; and positive and negative feedback loops.

These and other aspects of complexity can be framed as specific review questions, and evidence can be sought for each of them.

Systematic reviewers can use this new guidance to consider whether a systems perspective will be of value to them, and how it can be operationalised.

It is also important to note that a ‘full systems’ perspective is not necessarily appropriate for all reviews (or even many reviews).

Introduction

A complexity perspective

Recent years have seen a rapid rise in interest in complex interventions, perhaps because interventions themselves are becoming more complex, along with their evaluations.1 2 Complexity is a concept underpinned by a set of theories used to understand the dynamic nature of interventions and systems.3 Complexity theory has been increasingly used within the health sector to explore the ways in which interactions between component parts of an intervention or system give rise to dynamic and emergent behaviours.4 5 Interventions are often defined as ‘complex’ in terms of their being (1) multicomponent (ie, the intervention itself may comprise multiple components that may interact in synergistic or dissynergistic ways); (2) non-linear (they may not bring about their effects via simple linear causal pathways); and (3) context-dependent (they are not standardised, but may work best if tailored to local contexts).1 There is a range of methodological guidance on reviewing evidence on complex interventions,6–8 and new tools have emerged to help reviewers and guideline developers to deal with complexity, such as the Intervention Complexity Assessment Tool for Systematic Reviews (iCAT_SR), which aims to help reviewers to categorise levels of intervention complexity.9

The academic focus is often on clearly described ‘interventions’, which are typically sets of professional behaviours, practices or ways of organising a service. However, a different perspective has gained traction.10 This sees interventions not as discrete, bounded activities, but as interconnected ‘events in systems’.11 12 The current paper (one of a series, commissioned by WHO, exploring the implications of complexity for systematic reviews and guideline development) differentiates between these perspectives, focusing on how evidence may be synthesised. The aim is that the paper will be of relevance to guideline development at the global level but, like other papers in the series, will also be relevant to other contexts, such as developing evidence-based guidance at the national or subnational level.

Complex interventions and complex systems

The term ‘complex intervention’ is often used to describe both health service and public health interventions, including psychological, educational, behavioural and organisational interventions. Examples include health promotion interventions (eg, sexual health education),1 public health legislation (eg, Smokefree legislation)1 and organisational interventions (eg, stroke units, which involve multicomponent packages of care).13 These interventions have implicit conceptual boundaries, representing a flexible but common set of practices, often linked by an explicit or implicit theory about how they work.14 15

This ‘complex interventions perspective’ can be differentiated from a complex systems perspective, sometimes referred to as ‘systems thinking’.16 This has a long history in other fields.17 What differentiates the two perspectives is a move away from focusing on ‘packages’ of activities, with the idea that the intervention is external to the target population, towards (in Hawe et al’s words) ‘a focus on the dynamic properties of the context into which the intervention is introduced.’11 In a systems perspective, complexity arises from the relationships and interactions between a system’s agents (eg, people or groups that interact with each other and their environment) and its context. A systems perspective conceives of the intervention as being part of the system, and emphasises changes and interconnections within the system itself. It does not carry the implication of a separate intervention intervening as if from outside the system. Thus, reviewing evidence from a systems perspective requires ‘consideration of the ways in which processes and outcomes at all points within a system drive change. Instead of asking whether an intervention works to fix a problem, researchers should aim to identify if and how it contributes to reshaping a system in favourable ways’.10 Notably, a systems perspective can be adopted in relation to individual-level (eg, interventions targeting individual eating behaviours), population-level (eg, an intervention delivered to a wider population, such as a mass media campaign) and/or system-level (eg, interventions designed to change food environments, such as high streets) interventions.10 In all of these cases, the focus of a systems perspective is on how the intervention interacts with and impacts on the system as a whole.

These differences are clarified in a later paper in this series (Rehfuess et al 18):

We broadly [distinguish] between interventions targeting individuals (eg, diagnosis, treatment, or preventative measures addressed at individuals), interventions targeting populations, and interventions targeting the health system or context. Population-level interventions encompass those concerned with whole populations or population groups as defined by their age, sex, risk factor profile or other characteristic; they are often implemented in specific settings or organisations (eg, school health programmes). System-level interventions specifically re-design the context in which health-relevant behaviours occur; they are often implemented through geographical jurisdictions from national to local levels (eg, laws and regulations regarding the taxation, sale and use of tobacco products). Health system interventions represent a specific type of system-level intervention and often result in complex re-arrangements across multiple health system building blocks (eg, task shifting as a process of delegating specific health service tasks from medical doctors or nurses to less specialised health workers).

Hawe et al 11 give the examples of schools, communities and worksites as complex ecological systems, which can be theorised in three dimensions: (1) their constituent activity settings (eg, clubs, festivals, assemblies, classrooms); (2) the social networks that connect the people and the settings; and (3) time. They also note the need to understand the dynamics of the whole system, not just the intervention or the individuals within it, and to understand that ‘the most significant aspect of the complexity possibly lies not in the intervention per se (multi-faceted as it might be), but in the context or setting into which the intervention is introduced and with which the intervention interacts’.11 Not all definitions of complex systems are in agreement, but box 1 identifies some key characteristics and points of difference. There is of course no distinct boundary between the two perspectives, and the choice of which perspective to adopt is (and should be) led by users’ needs. Sometimes it may be useful to analyse interventions as if they were packages of interconnecting components, acting externally upon a pre-existing system; at other times, it may be more productive (in terms of producing useful, actionable evidence) to conceive of them as ‘events in systems’; or to treat interventions as subsystems within a larger system (such as the Sure Start intervention in the UK which aimed to support families with young children in deprived communities1). In these instances, an intervention may be conceptualised as an ‘entry point’ into a system—a means by which to understand how a system adapts and changes in response to internal and external events.

Box 1. Overview of a complex systems perspective.

Interactions between components of complex interventions

A focus on the functioning of the whole system, rather than on parts of the system or solely on the interventions within it (as opposed to a focus on the characteristics of the intervention, such as interactions between its components, in the case of a complex intervention).11

Interactions of interventions with context

The interactions between an intervention and the system within which it takes effect.11

System adaptivity

How the system itself adapts to the introduction of an intervention.11 12 For example: ‘A complex system is one that is adaptive to changes in its local environment, is composed of other complex systems (for example, the human body), and behaves in a non-linear fashion (change in outcome is not proportional to change in input). Complex systems include primary care, hospitals, and schools. Interventions in these settings may be simple or complicated, but the complex systems approach makes us consider the wider ramifications of intervening and to be aware of the interaction that occurs between components of the intervention as well as between the intervention and the context in which it is implemented’.12

Non-linearity

Interactions between individuals, between levels (eg, interactions between effects at the individual, neighbourhood, community, societal level), and interactions between different parts of the system.12 44 By comparison, discussions of complex interventions tend to focus more on interactions between components of the intervention, and the levels or groups which the intervention is ‘targeted at’ (as opposed to interactions between the levels or groups).1

Emergent properties

These are properties or behaviours which arise from interactions between parts of a system. These properties are not seen in any one part of a complex system, nor are they summations of individual parts (community empowerment,19 44 social exclusion and income inequality are noted emergent properties relevant to population health). Obesity has also been used as an example of emergence: individual exercise patterns are linked to the risk of obesity, but obesity is also a determinant of individual exercise patterns.45 46 Outcomes should therefore be measured at multiple levels within the complex system.12

Feedback loops

Mechanisms by which change is either amplified (positive or reinforcing feedback) or lessened (negative or balancing feedback).

Multiple outcomes and dependencies

When outcomes from one individual (or community) may be affected by outcomes from another (see handwashing example in the main text).

There are several implications of adopting a systems perspective. One implication noted by Shiell et al 12 is that interventions, whatever their perceived level of complexity (simple or complex), can bring about wider changes in systems. For example, legislation (which may be conceptualised as either simple or complex) can bring about changes in social systems; in the case of the UK, banning smoking in public places resulted in changes in the pattern and nature of smoking, drinking and socialising, as well as changes in health outcomes. 19

There are many potential sources of complexity to be considered in both complex interventions and complex systems perspectives. Some of these are described in box 1 and table 1. Diez-Roux also notes that complex systems are characterised by dependencies: that is, outcomes from one individual (or community) may be affected by outcomes from another.20 One example comes from drinking water, sanitation and hand hygiene (ie, ‘WASH’) interventions, which are protective against enteric infections. In this case, most of the protective effects come from ‘herd protection’ (ie, an emergent property of a system), which occurs when an infectious disease intervention provides indirect protection to non-recipients, due to the reduction in environmental contamination.21

Table 1.

How do aspects of complex systems map onto review questions and inclusion criteria?

| Aspect of complexity of interest | Why this is relevant | Examples of potential research question(s) | What sort of evidence may answer this question? (note: non-exhaustive list) | Types of study to search for (eg, study designs) | Examples |
|---|---|---|---|---|---|
| What ‘is’ the system? How can it be described?10 11 | It is helpful to have a theoretical model of how the system works: what the main influences on outcomes are and how they interconnect. This can help with scoping the review. | What are the main influences on the health problem? How are they created and maintained? How do these influences interconnect? Where might one intervene in the system? | Potentially any sort of evidence may be helpful: background theoretical literature; epidemiological and other evidence on the determinants (eg, of childhood obesity). This can be presented as a conceptual diagram of the system or part of the system. | Theoretical papers; previous systematic reviews of the causes of the problem; epidemiological studies (eg, cohort studies examining risk factors for obesity); policy documents; network analysis studies showing the nature of social and other systems.47 | Cochrane review of audit and feedback mechanisms (shows the use of a theory of change). Tools: logic model 46 23 24 |
| Interactions between components of complex interventions.1 | Users may wish to know which components are essential for effectiveness (ie, to bring about system change) and which less so; some components may dampen intervention effects (through dissynergies). | Effectiveness question: What is the independent and combined effect of the individual components? Process question: How do the components work alone and in combination to produce effects? (How do they interact to produce outcomes?) | Evidence of the independent effects of components of the intervention and synergistic/dissynergistic interactions between those components may be available in the form of either quantitative or qualitative data.47 | Studies with multiple arms, for example, factorial designs (eg, randomised controlled trials of multicomponent interventions). Studies with different configurations of components, to permit indirect comparisons between studies47; ‘tracer studies’ to understand how the intervention works at various levels of the system; social network analyses to understand the role of actors and/or networks; modelling studies, drawing on different types of data to understand how various domains have interacted. | Changing prescribing practice involves interactions between pharmacists and the organisations in which they are located.1 |
| Interactions of interventions with context and adaptation.48 49 | Complex interventions can legitimately adapt to their context: the same intervention can look different in different contexts, or it may need to be delivered in a context-specific manner. | 1. For a research question about implementation: (How and why) does the implementation of this intervention vary across contexts? 2. For an effectiveness review: Do the effects of the intervention appear to be context-dependent? | 1. Process evaluations; studies which describe the implementation of the intervention. 2. Effectiveness studies from a range of contexts: individual studies conducted in a range of contexts. | 1. For example, qualitative studies; case studies. 2. Trials or other effectiveness studies from different contexts; multicentre trials, with stratified reporting of findings; other quantitative studies that provide evidence of moderating effects of context. | Community-based interventions to address depression may legitimately vary between contexts: the form of the intervention varies, but the underlying theory and objectives remain the same.50 |
| System adaptivity (how does the system change?).11 | Systems may adapt to (accommodate or assimilate) new interventions, which may affect their effectiveness. (Note that systems are often embedded within other systems and can coevolve.) | (How) does the system change when the intervention is introduced? Which aspects of the system are affected (see the CICI framework51)? Does this potentiate or dampen its effects? | As above; process evaluations; possibly policy analysis examining change in the system over time, depending on the intervention. | Qualitative studies; case studies; quantitative longitudinal data; possibly historical data; effectiveness studies providing evidence of differential effects across different contexts; system modelling (eg, agent-based modelling). | The introduction of a tax on sugar-sweetened beverages (SSBs) may affect individual consumption; manufacturers may reformulate SSBs to avoid the tax, and may also reformulate food products.52 |
| Emergent properties.46 | Where effects emerge from synergies within the system, such as from interactions between parts of the system or between individuals or groups within the system. | What are the effects (anticipated and unanticipated) which follow from this system change? | Qualitative research is often a source of evidence on unanticipated effects, including adverse effects; any quantitative evaluation may also produce such evidence. | Prospective quantitative evaluations; qualitative studies; retrospective studies (eg, case–control studies, surveys) may also help identify less common effects; dose–response evaluations of impacts at aggregate level in individual studies or across studies included within systematic reviews (see suggested examples). | Herd immunity in relation to vaccinating individuals53; population-health outcomes for sanitation interventions at household level (threshold effects); emergence of new social norms (eg, in smoking, following new tobacco control measures).54 |
| Non-linearity and phase changes.53 1 55 | Where the effect or the scale of the effect does not appear to be directly related to the cause. May explain why intervention effects suddenly appear or disappear. | How do effects change over time? (Changes may be due to biological (genetic drift in virulence factors), ecological (changes in habitat creating or constraining new/different space for vectors or disease), epidemiological (changes in disease patterns by age, cause, location and so on) or social factors (changing social norms around gender and behaviours)). | Longitudinal quantitative data (eg, interrupted time series studies); qualitative data. | Mainly prospective quantitative studies, including ITS studies; dose–response evaluations of impacts at aggregate level in individual studies or across studies included within systematic reviews (see above; might fit in either place). | Use of quantitative time series methods alongside qualitative ‘story telling’ to identify phases/evolution in social care policy in England.56 |
| Positive (reinforcing) and negative (balancing) feedback loops.20 | These can potentiate or reduce the effects of interventions: for example, feedback between behavioural and environmental features within the system, as when availability of healthy food promotes healthy diets, creating demand. | What explains change in the effectiveness of the intervention over time? Are the effects of an intervention damped/suppressed by other aspects of the system (eg, contextual influences)? | Potentially quantitative or qualitative data. | Qualitative studies of factors that enable or inhibit implementation of interventions; quantitative studies of moderators of effectiveness; long-term longitudinal studies; development of conceptual diagrams to illustrate potential feedback loops, and to help identify ways in which they may be identified empirically. | Provision of cycling lanes encourages more cycling;57 in theory, cyclists may feel safer because there are larger numbers of visible cyclists, so more people cycle (positive/reinforcing feedback). |
| Multiple (health and non-health) outcomes and dependencies.22 | Changes in systems can produce a range of health and non-health outcomes, both anticipated and unanticipated, with no single ‘primary’ outcome.58 Outcomes from one individual (or level) may be affected by outcomes from another. | What changes in processes and outcomes follow the introduction of this system change? At what levels in the system are they experienced? | Quantitative and qualitative data (eg, qualitative data have been used to identify unanticipated adverse effects59). Examination of specific pathways and many outcomes along the overall theory of change and over time (including lag effects across one or several decades) would be useful. | Quantitative studies tracking change in the system over time; qualitative research exploring effects of the change in individuals, families, communities and so on. | Many social programmes produce changes in health outcomes, but also non-health outcomes (eg, employment, education) at individual, family, community and city levels.60–63 |

CICI, Context and Implementation of Complex Interventions; ITS, Interrupted Time Series.
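The effectiveness question in the second row of table 1 (the independent and combined effects of intervention components) can be illustrated with the arithmetic of a 2×2 factorial design, one of the study types listed there. The Python sketch below is a minimal illustration only: the cell means are hypothetical, higher outcome values are assumed to be better, and the class and function names are our own rather than part of any review tool. The interaction contrast compares the effect of component A when component B is present with its effect when B is absent; for a beneficial outcome, a positive value suggests synergy and a negative value suggests dissynergy.

```python
from dataclasses import dataclass


@dataclass
class FactorialMeans:
    """Hypothetical outcome means for the four arms of a 2x2 factorial trial.

    Higher values are assumed to be better (eg, % meeting a target).
    """
    neither: float  # control arm: neither component A nor component B
    a_only: float   # component A delivered alone
    b_only: float   # component B delivered alone
    both: float     # components A and B delivered together


def main_effect_a(m: FactorialMeans) -> float:
    # Effect of adding A, averaged over the two levels of B
    return ((m.a_only - m.neither) + (m.both - m.b_only)) / 2


def main_effect_b(m: FactorialMeans) -> float:
    # Effect of adding B, averaged over the two levels of A
    return ((m.b_only - m.neither) + (m.both - m.a_only)) / 2


def interaction(m: FactorialMeans) -> float:
    # Effect of A when B is present minus effect of A when B is absent
    return (m.both - m.b_only) - (m.a_only - m.neither)


def classify_interaction(m: FactorialMeans, tol: float = 1e-9) -> str:
    # Assumes higher outcome values are better
    i = interaction(m)
    if abs(i) <= tol:
        return "additive"
    return "synergistic" if i > 0 else "dissynergistic"


# Hypothetical cell means, not taken from any study
trial = FactorialMeans(neither=10.0, a_only=14.0, b_only=13.0, both=20.0)
print(main_effect_a(trial), main_effect_b(trial), interaction(trial))  # prints: 5.5 4.5 3.0
print(classify_interaction(trial))  # prints: synergistic
```

In a review, such contrasts would normally be estimated together with their uncertainty from trial data (for example, as the interaction term in a regression model), rather than read off point estimates as here.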

For those developing guidelines, the above issues will often be explored during the scoping stage (see the WHO Handbook for Guideline Development, section 2.7: http://www.who.int/publications/guidelines/handbook_2nd_ed.pdf). At this stage it may be useful to consider whether producing the guideline will involve summarising the evidence on a specific complex intervention, or will go beyond this to take a complex systems approach. If it restricts itself to consideration of a complex intervention, it will be necessary to decide which characteristics of complexity may be most relevant to this task. Scoping the guideline requires considering the interventions, the individuals and/or populations, and the potential benefits and harms.

We conclude this section by emphasising that not every systematic review needs to consider all aspects of complexity. Where complexity is taken into account in a review, this needs to be done pragmatically, by considering whether it will enhance the review’s usefulness to decision makers. We want to avoid simply encouraging every review to be as complex (and potentially confusing, and impractical) as possible. A pragmatic balance therefore needs to be struck between appropriately and accurately representing the complexity of the intervention and/or system being evaluated, and producing useful guidance or guidelines. Box 2 may help with striking this balance.

Box 2. Will a complex systems perspective be useful for my systematic review?

To answer this, first consider the priority questions for the review.

What do the review users want to know about?

If users only want to know about the effects of the intervention on individual-level outcomes, they may not be interested in a wider system perspective (although that may simply be because they are not aware that this could be useful). If they only want to know about population-level effects, but are less interested in interactions between levels, or between the intervention and its context, then again a systems perspective may not be of interest (but that does not mean that it is not important; see other questions below).

Other questions to consider.

At what level(s) does the intervention have its effects?

If the intervention involves changes to wider structures or systems which affect health (eg, through regulation, healthcare reorganisation, the introduction of new policies or through the reorganisation of services), then a systems perspective may be helpful. This could involve considering the outcomes of the intervention at different levels: for example, the individual level, the family level, the community level, the organisational level and the societal level. It could also consider how effects at each of these levels interact. A systems perspective may be of particular value in evaluating the implementation of an intervention.

Does the intervention affect the context into which it is introduced?

Public health interventions often interact with their context; a systematic review could explore the extent to which this is the case. For example, some interventions do not only change individual-level outcomes, but also social norms: Smokefree legislation affects smoking rates, but it also affects the wider acceptability of smoking in public places. This, in turn, may affect individual smoking rates.

Through which processes and mechanisms does the intervention bring about changes?

Users may want to know about the processes and mechanisms by which outcomes are produced by an intervention. From a systems perspective, this involves consideration of system-level mechanisms—in other words, by what means does the intervention change the wider system (its structures and processes) to bring about change?

Taking account of complexity: why we need to think about theory, system properties and context

These complementary perspectives have implications for developing appropriate, answerable research questions for systematic reviews. For example, if the focus is on the intervention, then research questions are more likely to focus on the individual and interactive effects of components of the intervention. The pathways between the intervention and its outcomes will also be of interest. However, if the focus is on the system, or on the interaction between the intervention and the wider system, then the research questions may focus on whether and how the system adapts to the intervention (what has been referred to as ‘what happens’ questions, rather than ‘what works’ questions10 22); describing and analysing feedback loops between different parts of the system; and describing how effects are produced within different parts and at different levels of the system. Complex interventions adapt to the system within which they are introduced, and they may change the system itself. This may even be their purpose, for example in the case of health system interventions (note also that a health system can itself be seen as a subsystem of a much wider social system).

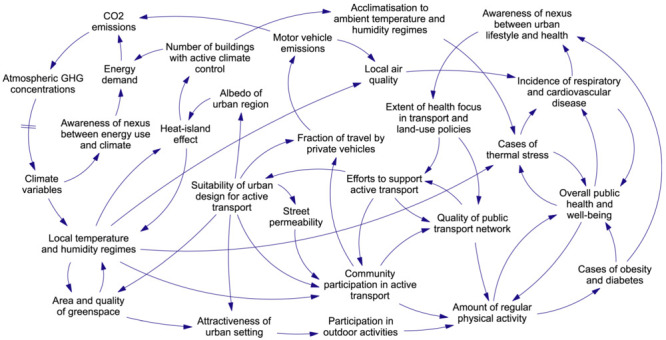

The review process is likely to start by describing the boundaries of the system. This can be done using a graphical display of the relationships between elements of the system. Such displays have variously been referred to as conceptual frameworks/diagrams or causal loop diagrams (figure 1 shows, in simplified form, the interactions between humans and their environments, and how these influence health outcomes). Here, we adopt ‘conceptual framework’ as the most generic term; such frameworks can be thought of as the review’s logic model.

Figure 1.

Causal loop diagram of human health and climate change (Proust et al 44). GHG, Greenhouse gas.
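A causal loop diagram such as figure 1 can be treated as a signed directed graph, in which each link is marked + (the effect moves in the same direction as the cause) or - (the opposite direction). In system dynamics, a closed loop is reinforcing when the product of its link signs is positive (an even number of negative links) and balancing when it is negative. The Python sketch below applies that rule. The variables and link signs are hypothetical: the reinforcing loop paraphrases the cycling example in table 1, and the balancing loop (crowding on cycle lanes deterring cycling) is an invented illustration, not a finding from the literature.

```python
from typing import Dict, List, Tuple

# Signed links of a causal loop diagram: (cause, effect) -> +1 or -1.
# +1: effect changes in the same direction as cause; -1: opposite direction.
Links = Dict[Tuple[str, str], int]


def loop_polarity(loop: List[str], links: Links) -> str:
    """Classify a closed loop as 'reinforcing' or 'balancing'.

    `loop` lists variables in causal order; the last variable is assumed
    to link back to the first. Raises KeyError if a link is missing.
    """
    sign = 1
    for i, cause in enumerate(loop):
        effect = loop[(i + 1) % len(loop)]
        sign *= links[(cause, effect)]
    return "reinforcing" if sign > 0 else "balancing"


# Hypothetical links, loosely based on the cycling example in table 1
links: Links = {
    ("people cycling", "visible cyclists"): +1,
    ("visible cyclists", "perceived safety"): +1,
    ("perceived safety", "people cycling"): +1,
    # Invented balancing loop: more cyclists -> more crowding -> fewer cyclists
    ("people cycling", "crowding on cycle lanes"): +1,
    ("crowding on cycle lanes", "people cycling"): -1,
}

print(loop_polarity(["people cycling", "visible cyclists", "perceived safety"], links))  # prints: reinforcing
print(loop_polarity(["people cycling", "crowding on cycle lanes"], links))  # prints: balancing
```

Identifying the loops themselves, and agreeing the sign of each link, is the substantive task and relies on theory, evidence and stakeholder input; the code only makes the polarity rule explicit.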

Developing a conceptual framework like this can be done through a combination of literature reviews, stakeholder input and discussions within the review team. In one example, relating to the causal pathways linking crime, fear of crime and mental health, the conceptual framework was developed from a review of existing theory.23 Conceptual frameworks have been shown to be a useful means of (1) thinking through complexity upfront, (2) prioritising research questions and (3) making methodological choices in response to these decisions. Templates for such conceptual frameworks/diagrams can facilitate the development of a logic model.24 Certainly an initial illustration of factors and processes can help reviewers refine the research questions and the review’s inclusion criteria. This initial illustration may remain unchanged (an a priori logic model), or it may be subject to modifications as the evidence synthesis progresses (a staged or iterative logic model).25

From a guideline developer’s perspective, it may be helpful to start the guideline scoping phase by considering the system boundaries. Systems are potentially huge, and for pragmatic reasons it may be best to focus on only part of the system. For example, in the case of childhood obesity, the focus may be restricted to the marketing of unhealthy foods. The boundaries can and should be determined in consultation with stakeholders, and they should also be closely related to the review question.

The role of theory

The conceptual framework in figure 1 is similar to using ‘explanatory’ theory to depict a system. This is different from a process-orientated logic model or analytical framework which usually corresponds to the ‘theory of change’ (usually quite linear) of a complex intervention. Explanatory theory sheds light on the nature of the problem and helps to identify a range of factors that may be modifiable.26 Conceptual frameworks like this describe the inter-relationships within the wider system or subsystems. These graphical displays are themselves representations of initial hypotheses, or sets of linked hypotheses, about the processes involved. As such, they can be used to help generate specific research questions (see below).

System properties

The literature refers to a number of common properties of complexity. Some of the most frequently mentioned are defined in table 1. In producing a systems-oriented systematic review, the reviewer should first consider where the intervention of interest is located with respect to the wider system. This does not require analysing the whole system. Second, the reviewer should consider whether any system-level characteristics (such as feedback loops, non-linearities and interactions between intervention components) are relevant, and why. Not all these effects will be relevant to every systematic review; the effects may be small and/or the system-level effects in question may have limited explanatory power in some cases. Evidence-to-decision frameworks (such as the WHO-INTEGRATE framework described elsewhere in this series18) will be of particular value here, as they ensure that all factors or criteria of relevance in a given guideline development or other health decision-making process are considered in a systematic way.

The role of context

Complex health interventions are often characterised by their sensitivity to context: ‘one size does not fit all’, and such interventions often interact with, and sometimes adapt to, the context within which they are implemented, which may have implications for the effectiveness, acceptability and sustainability of the intervention itself.27 28 In the case of health research, any aspect of an individual’s life could in principle be described as their ‘context’, such as their location within any social, spatial, physical or cultural space. Moreover, the same or similar contexts may affect people in quite different ways. Consider, for example, studies of employment and health (such as the Whitehall studies in UK civil servants), which show that even in broadly similar contexts, employees’ health can be affected by subtly different employment grades and different levels of control over their working environment.29

However, in defining a research question for a systematic review, it is important to think pragmatically about context and to identify which aspects of context are likely to matter most, for example those likely to have a significant moderating effect on an intervention. This decision can be informed by existing theory, and by the use of conceptual diagrams and logic models to reveal potentially important contextual elements in different parts of a system. It can also be informed by users’ needs.27

Not all information on context will come from empirical studies. For example, information on the political context within which a policy intervention is implemented may be found in policy documents and through media analyses.30 There is also a growing evidence base on implementation, including systematic reviews examining how guidelines are implemented; this literature identifies complexity as an important barrier to implementation.31 32

Context often acts as a moderator of the effects of complex interventions; however, it can also be part of the intervention itself. For example, some public health interventions explicitly aim to change contexts, such as smoke-free legislation, which restricts smoking in public places such as bars and restaurants.33 In fact, many policy interventions are like this, in that they involve changes over time in social, economic, health or other systems. Wells et al 14 refer to this as a ‘blurred intervention’. The role of context is dealt with in detail in another paper in this series, which describes how context is currently handled within existing systematic review tools and methods, and outlines good practice in the use of context within systematic reviews and guidelines.34

A worked example: childhood obesity and system properties

Both the determinants and the consequences of childhood obesity are complex. There is intergenerational transmission of obesity risk, with obesity in adults being perpetuated into future generations through multiple mechanisms, social as well as biological. These pathways span the life course, from undernutrition or overnutrition during fetal development, through childhood and into adulthood, and incorporate unhealthy diets and inadequate physical activity. The physical and psychological consequences of childhood obesity are wide-ranging, likely to last into adulthood, and affect social and health capital and the economy. A systems approach to childhood obesity sees it as embedded within the wider political, institutional and cultural system. It addresses from the outset both life-course and environmental considerations, including appropriate infant and young child feeding, and a child’s daily food environment (eg, as affected by the marketing and advertising of products, or by the quality of, and access to, school and preschool foods) and physical activity environment.

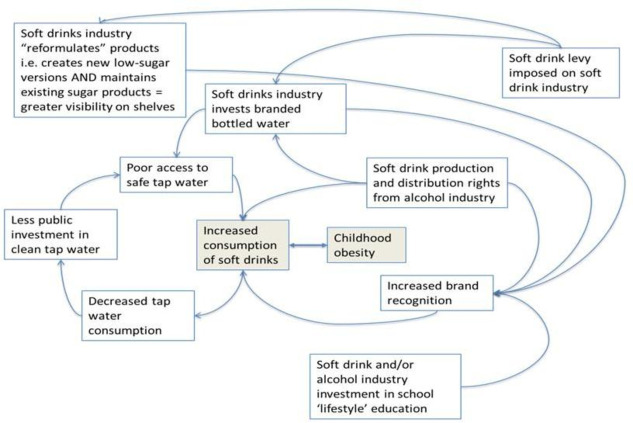

Refining the question could start, for example, by drawing a model (or referring to an existing one) that synthesises the evidence on determinants, such as the one reproduced in figure 2. This can help visualise the underlying characteristics and relations of the system and show how they inter-relate to produce childhood obesity. Through this lens, childhood obesity can be conceptualised as an emergent property of a complex system, rather than the result of individual lifestyle choices. Visualising these relations can also help unpack feedback loops and how they might suppress or potentiate the effect of an intervention.

Figure 2.

Conceptual framework: soft drinks consumption and childhood obesity in countries with limited access to safe drinking water.

Take, for example, excessive soft drink consumption, a well-established factor in childhood obesity. A systems perspective allows one to move away from a linear ‘cause (soft drink consumption) and effect (excess weight gain)’ approach, towards understanding the range of factors contributing to soft drink consumption and how they might interact to reinforce this behaviour. Crucially, it also helps to define the boundaries of this complex problem (and therefore the boundaries of the system) by prompting the question ‘what else is happening in this picture?’ In this (incomplete, rough-sketch) conceptual framework (figure 2), issues such as water access and safety may come into play, as may who is producing and marketing soft drinks (not always a soft drink company). The boundaries thus extend beyond the individual child to include municipal actors and laws, corporate players, and even industries we would not automatically include (such as the alcohol industry) when thinking of causal pathways between soft drink consumption and childhood obesity. Understanding the relations between components of a system therefore requires acknowledging the system’s context and culture.

Figure 2 also illustrates an example of adaptivity, or ‘self-organisation’, where the system finds ways to diversify and evolve. For example, in response to a government levy on the soft drink industry, the industry will adapt in a number of ways, including by reformulating its products. Evidence shows, however, that this is most often not a matter of substitution (actually removing high-sugar drinks) but rather of creating low-sugar alternatives, adding to the overall offer. This adaptation makes the system more resilient to external shocks (such as a soft drink levy).

Implications of a systems perspective for framing the research question and types of evidence included

Assuming that complexity is a relevant concern for a systematic review, the next step is to turn that ‘concern about complexity’ (or a specific aspect of complexity) into one or more research questions. Again, caution is necessary, because not every element of complexity need be the focus of a research question. For example, feedback loops may be relevant to whether and how an intervention works, so they may be important to ‘bear in mind’ when reviewing evidence rather than being a main focus of the review. Reviews adopting a complex systems perspective are likely to be interested in a wide range of questions relating to the characteristics of complexity summarised above. In particular, questions about how an intervention works, and about the interactions between intervention components and between intervention and context, are likely to be relevant. There may still be an overall ‘what works?’ question to which the PICO framework (patient, population or problem; intervention; comparison; outcomes) can be applied,35 but additional questions may need to be framed, and these may require a wide range of evidence to answer. A series of different syntheses or reviews may therefore be undertaken, each of which may draw on a method-specific question formulation framework.27 36

Lumping versus splitting

Squires et al 37 note the importance of considering how broad the scope of a review should be, an issue often framed as ‘lumping’ versus ‘splitting’. ‘Splitters’ argue that it is only appropriate to combine highly similar studies; for example, studies should be comparable in terms of their design, population, interventions, outcomes and context. ‘Lumpers’, however, argue that a systematic review should aim to identify the common generalisable features within broadly similar interventions, unless there are good grounds not to. Squires et al 37 suggest taking a lumping approach whenever possible, as it allows the generalisability and consistency of research findings to be assessed across a wider range of settings, study populations and behaviours.37 It could also be argued that it is in the very nature of complex interventions, and of interventions in complex systems, that individual studies will vary in terms of context, population and, of course, the intervention itself. It is therefore uncommon to have groups of very homogeneous studies that can be ‘split’. By contrast, lumping allows decision makers to see how findings have varied across different population groups and contexts, and describing context clearly can help users assess the potential generalisability of research findings. (A later paper in this series describes meta-analytical approaches to lumping and splitting.38)

A more ambitious perspective would go beyond thinking just of lumping and splitting, and would acknowledge that no single study—or methodological approach—is likely to contain the breadth of evidence required to model a complex system adequately. Mixed methods reviews and studies—which blend the power of statistical aggregation with qualitative explanation—are likely to be the most useful approach.36

Table 1 gives examples of how different aspects of complexity may be framed as research questions, and the implications for systematic review inclusion criteria. In general, the reviewer or guideline developer needs to start by thinking about the scope of their review or guideline. Is the focus solely on effectiveness? Or on exposures (does X cause Y)? Or on process and implementation? Are users likely to be interested in the adaptivity of the intervention and the system surrounding it? Are they interested in variations in effects across contexts? Which components of the intervention appear to matter, and which do not?

Choices about what sort of evidence to include in order to answer these questions then require further decisions about how to synthesise and appraise that evidence.36 39 They will also influence how one might assess the overall confidence in a body of evidence (see the papers in this series on evidence-to-decision frameworks18 and on considerations of complexity in rating certainty of evidence39).

Finally, it should be noted that synthesising complex sets of evidence can be methodologically challenging and resource-intensive. Not every review, even one that aims to take a systems perspective, will be able to address all the aspects of complexity in table 1, and in many cases, particularly where resources are limited, a more straightforward review approach will be appropriate. Reviewers may therefore need to prioritise the aspects likely to be most important to users and focus resources on these. Where a systems perspective is likely to be of value, however, authors will need to consider how best to include relevant evidence as far as their resources permit; considering users’ priorities alongside table 1 may help here. As part of the guideline development process, several reviews may also be conducted, addressing different aspects of complexity. For example, a systematic review of effectiveness might be conducted alongside a review exploring the processes and mechanisms by which the intervention brings about change within a particular system, and/or exploring issues of acceptability and feasibility.27

How users can help shape the review question(s)

It is standard practice to involve review users in defining the review question(s). However, there are different types of decision maker (or ‘stakeholder’) to consider, and they may have different priorities: for example, different views about the primary and secondary outcomes and about which aspects of complexity matter most. Decision makers may also have particular biases; some may not want specific outcomes or phenomena of interest to be considered. In public health, for example, stakeholders with vested interests, and sometimes policymakers, may be keen for individual-level interventions, outcomes or populations to be addressed in a research project, but less interested in interventions that act at the population level. Some unhealthy commodity industries, for instance, are often most accepting of evidence about individual-level informational interventions (such as education and the provision of information), which are known to be only weakly effective, and less accepting of evidence about population-level structural interventions, such as marketing restrictions, which are generally more effective.40 Guidance on how to obtain user input into framing review questions can be found elsewhere.35 41

Having a specific decision-making context or a specific decision maker in mind can help focus the review question and the review itself (Booth et al 27). This may be particularly true of reviews with a complexity focus, which may themselves risk becoming overly complex. One simple application of this is to acknowledge that in many cases the evidence for a defined magnitude of effect is imperfect, yet it is also possible (based on theory, observation, natural experiments and experience) to be confident that a proposed intervention, compared with doing nothing, will not have an effect in the wrong direction and will do some good (see also the concept of quality of evidence for a ‘non-null effect’ in a later paper in this series (Montgomery et al 39)). Considering this wider prior evidence base alongside any effectiveness evidence from randomised controlled trials or quasi-experiments allows the decision maker to reach an appropriate decision by weighing the evidence on both (or all) sides of a decision.36 42

Value of information approaches

The concept of ‘Value of Information’ (VOI) may be helpful in focusing the review. VOI frameworks describe the anticipated value of the new information that would be generated by conducting a new piece of research (such as a new systematic review).43 The potential value of new research lies in reducing some aspect of decision-maker uncertainty. The implication for systematic reviews is that, by assessing in advance what new evidence would be needed to reduce that uncertainty, the review question can be more closely tailored to users’ needs. VOI approaches usually rely on formal (quantitative) estimation of the value of new evidence, but even without such estimation it is useful to identify the main areas of uncertainty for different types of decision maker and to explore what sort of evidence would be needed to reduce them. In the case of reviews of evidence on complexity, such an exercise may show that producing new synthesised evidence about, say, feedback loops would have little impact on a decision, so this aspect could be left out of the review. This can be a helpful way of focusing the development of new guidelines, by asking: ‘What area of decision-maker uncertainty is the guideline aiming to reduce?’ and ‘What new evidence would be most useful to review, to reduce that uncertainty?’
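To make the formal VOI idea more concrete, the following is a minimal sketch of one standard VOI quantity, the expected value of perfect information (EVPI): the expected net benefit of choosing the best option once uncertainty is fully resolved, minus the expected net benefit of the best option chosen under current uncertainty. It assumes a small set of discrete scenarios with known probabilities; the decision options, probabilities and net-benefit values are all hypothetical and are not taken from any study. If EVPI is close to zero, further evidence is unlikely to change the decision. Examining a single uncertain aspect, such as a feedback loop, would call for partial EVPI, which this sketch does not implement.

```python
"""Minimal EVPI sketch over discrete, hypothetical scenarios."""
import math
from dataclasses import dataclass
from typing import Mapping, Sequence


@dataclass(frozen=True)
class Scenario:
    """One possible 'state of the world' (eg, a true effect size)."""
    probability: float
    # Net benefit of each decision option if this scenario is true.
    net_benefit: Mapping[str, float]


def _check(scenarios: Sequence[Scenario]) -> None:
    # Probabilities must form a proper distribution over the scenarios.
    if not math.isclose(sum(s.probability for s in scenarios), 1.0):
        raise ValueError("scenario probabilities must sum to 1")
    options = set(scenarios[0].net_benefit)
    if any(set(s.net_benefit) != options for s in scenarios):
        raise ValueError("every scenario must list the same decision options")


def value_current_information(scenarios: Sequence[Scenario]) -> float:
    """Expected net benefit of the single best option chosen now, under uncertainty."""
    options = scenarios[0].net_benefit.keys()
    return max(
        sum(s.probability * s.net_benefit[d] for s in scenarios) for d in options
    )


def value_perfect_information(scenarios: Sequence[Scenario]) -> float:
    """Expected net benefit if the true scenario were known before choosing."""
    return sum(s.probability * max(s.net_benefit.values()) for s in scenarios)


def evpi(scenarios: Sequence[Scenario]) -> float:
    """Expected value of perfect information (never negative)."""
    _check(scenarios)
    return value_perfect_information(scenarios) - value_current_information(scenarios)


# Hypothetical example: implement an intervention or do nothing, with three
# possible true effects. All numbers are invented for illustration only.
EXAMPLE_SCENARIOS = [
    Scenario(0.3, {"implement": 100.0, "do_nothing": 0.0}),  # large benefit
    Scenario(0.5, {"implement": 20.0, "do_nothing": 0.0}),   # small benefit
    Scenario(0.2, {"implement": -50.0, "do_nothing": 0.0}),  # net harm
]

if __name__ == "__main__":
    print(f"EVPI = {evpi(EXAMPLE_SCENARIOS):.2f}")
```

Running it prints `EVPI = 10.00`. With perfect information the decision maker would implement only in the two beneficial scenarios (expected net benefit 40), whereas under current uncertainty the best single choice, implementing, has an expected net benefit of 30. In practice VOI analyses usually work with continuous parameter distributions and simulation rather than a handful of discrete scenarios.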

The degree of consultation with users may depend on the review topic and its political or other sensitivities. For highly uncertain, politically sensitive topics with little clear evidence, more input is likely to be needed, involving all potential stakeholders (or their representatives) in decisions about the framing of the question. For ‘simpler’, less contentious reviews, this may be less crucial. In addition, any recommendation about an intervention does not depend on the evidence alone; other criteria must also be taken into consideration. A subsequent paper in this series shows how the WHO-INTEGRATE evidence-to-decision framework helps with this process,18 based on six structural criteria (Balance of health benefits and harms; Acceptability; Health equity, equality and non-discrimination; Societal impact; Financial and economic considerations; and Feasibility and health system considerations). A seventh criterion, Quality of evidence, is a meta-criterion that applies to each of the six structural criteria; all seven criteria influence the strength of a guideline recommendation. Each criterion may apply at the individual level, the population level, the system level or several of these.

Conclusions

In reviewing evidence and developing guidelines, it may be helpful at the beginning of the process to explicitly consider whether to take a ‘complex interventions’ or a ‘complex systems’ focus. Both may be of value at different stages of the review. The decision should be taken with reference to users’ needs and available resources. Box 2 and table 1 may help with making this decision and in assessing the implications for what type of evidence to include. It should also be noted that this is an evolving field and what is now needed are concrete examples of complex systems-oriented systematic reviews. These will help clarify the feasibility and resource requirements for such reviews. Subsequent papers in this series will consider in more detail the practical steps which are likely to be involved, and the contribution made by different types of qualitative and quantitative evidence.

Acknowledgments

We acknowledge the contributions through participation in discussions and meetings of Lorenzo Moja, Jonathon Simon, Asha George, Mauricio Bellerferri and Neena Raina.

Footnotes

Handling editor: Soumyadeep Bhaumik

Contributors: All authors contributed to discussions to decide on the content, and/or contributed examples and to revising and/or writing or reviewing the text, and approved the final version. MP is the guarantor.

Funding: Funding provided by the World Health Organization Department of Maternal, Newborn, Child and Adolescent Health through grants received from the United States Agency for International Development and the Norwegian Agency for Development Cooperation.

Competing interests: None declared.

Patient consent: Not required.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: No additional data are available.

References

- 1. Craig P, Dieppe P, Macintyre S, et al. Developing and evaluating complex interventions: the new Medical Research Council guidance. BMJ 2008;337:a1655. 10.1136/bmj.a1655

- 2. Moore GF, Audrey S, Barker M, et al. Process evaluation of complex interventions: Medical Research Council guidance. BMJ 2015;350:h1258. 10.1136/bmj.h1258

- 3. Byrne D, Callaghan G. Complexity theory and the social sciences: the state of the art. Abingdon: Routledge, 2014.

- 4. Rickles D, Hawe P, Shiell A. A simple guide to chaos and complexity. J Epidemiol Community Health 2007;61:933–7. 10.1136/jech.2006.054254

- 5. Matheson A, Walton M, Gray R, et al. Evaluating a community-based public health intervention using a complex systems approach. J Public Health 2017:1–8. 10.1093/pubmed/fdx117

- 6. Guise JM, Chang C, Butler M, et al. AHRQ series on complex intervention systematic reviews-paper 1: an introduction to a series of articles that provide guidance and tools for reviews of complex interventions. J Clin Epidemiol 2017;90:6–10. 10.1016/j.jclinepi.2017.06.011

- 7. Tugwell P, Knottnerus JA, Idzerda L. Complex interventions-how should systematic reviews of their impact differ from reviews of simple or complicated interventions? J Clin Epidemiol 2013;66:1195–6. 10.1016/j.jclinepi.2013.09.003

- 8. Anderson L, Petticrew M, Chandler J. Introduction: systematic reviews of complex interventions.

- 9. Lewin S, Hendry M, Chandler J, et al. Assessing the complexity of interventions within systematic reviews: development, content and use of a new tool (iCAT_SR). BMC Med Res Methodol 2017;17:76. 10.1186/s12874-017-0349-x

- 10. Rutter H, Savona N, Glonti K, et al. The need for a complex systems model of evidence for public health. Lancet 2017;390:2602–4. 10.1016/S0140-6736(17)31267-9

- 11. Hawe P, Shiell A, Riley T. Theorising interventions as events in systems. Am J Community Psychol 2009;43:267–76. 10.1007/s10464-009-9229-9

- 12. Shiell A, Hawe P, Gold L. Complex interventions or complex systems? Implications for health economic evaluation. BMJ 2008;336:1281–3. 10.1136/bmj.39569.510521.AD

- 13. Langhorne P, Pollock A, Stroke Unit Trialists' Collaboration. What are the components of effective stroke unit care? Age Ageing 2002;31:365–71. 10.1093/ageing/31.5.365

- 14. Wells M, Williams B, Treweek S, et al. Intervention description is not enough: evidence from an in-depth multiple case study on the untold role and impact of context in randomised controlled trials of seven complex interventions. Trials 2012;13:95. 10.1186/1745-6215-13-95

- 15. May C, Finch T, Mair F, et al. Understanding the implementation of complex interventions in health care: the normalization process model. BMC Health Serv Res 2007;7:148. 10.1186/1472-6963-7-148

- 16. Meadows D. Thinking in systems: a primer. London: Earthscan, 2008.

- 17. Leischow SJ, Best A, Trochim WM, et al. Systems thinking to improve the public's health. Am J Prev Med 2008;35:196–203. 10.1016/j.amepre.2008.05.014

- 18. Rehfuess EA, Stratil JM, Scheel IB, et al. The WHO-INTEGRATE evidence to decision framework version 1.0: integrating WHO norms and values and a complexity perspective. BMJ Glob Health 2019;4(Suppl 1):i90–i110. 10.1136/bmjgh-2018-000844

- 19. Gruer L, Tursan d'Espaignet E, Haw S, et al. Smoke-free legislation: global reach, impact and remaining challenges. Public Health 2012;126:227–9. 10.1016/j.puhe.2011.12.005

- 20. Diez Roux AV. Complex systems thinking and current impasses in health disparities research. Am J Public Health 2011;101:1627–34. 10.2105/AJPH.2011.300149

- 21. Fuller JA, Eisenberg JN. Herd protection from drinking water, sanitation, and hygiene interventions. Am J Trop Med Hyg 2016;95:1201–10. 10.4269/ajtmh.15-0677

- 22. Petticrew M. Time to rethink the systematic review catechism. Syst Rev 2015;4:1.

- 23. Lorenc T, Petticrew M, Whitehead M, et al. Crime, fear of crime and mental health: synthesis of theory and systematic reviews of interventions and qualitative evidence. Public Health Research 2014;2:1–398. 10.3310/phr02020

- 24. Rohwer A, Pfadenhauer L, Burns J. Logic models help make sense of complexity in systematic reviews and health technology assessments. J Clin Epidemiol 2017;83:37–47.

- 25. Rehfuess EA, Booth A, Brereton L, et al. Towards a taxonomy of logic models in systematic reviews and health technology assessments: a priori, staged, and iterative approaches. Res Synth Methods 2018;9:1–12. 10.1002/jrsm.1254

- 26. Green J. The role of theory in evidence-based health promotion practice. Health Educ Res 2000;15:125–9. 10.1093/her/15.2.125

- 27. Booth A, Noyes J, Flemming K, et al. Formulating questions to explore complex interventions within qualitative evidence synthesis. BMJ Glob Health 2019;4(Suppl 1):i33–i39. 10.1136/bmjgh-2018-001107

- 28. Petticrew M, Moore L. What is this thing called context. NIHR Briefing paper, 2015.

- 29. Marmot MG, Stansfeld S, Patel C, et al. Health inequalities among British civil servants: the Whitehall II study. The Lancet 1991;337:1387–93. 10.1016/0140-6736(91)93068-K

- 30. Shepperd S, Lewin S, Straus S, et al. Can we systematically review studies that evaluate complex interventions? PLoS Med 2009;6:e1000086. 10.1371/journal.pmed.1000086

- 31. Fitzgerald A, Lethaby A, Cikalo M. Review of systematic reviews exploring the implementation/uptake of guidelines. York Health Economics Consortium, 2014. https://www.nice.org.uk/guidance/ph56/evidence/evidence-review-2-431762366

- 32. Moullin JC, Sabater-Hernández D, Fernandez-Llimos F, et al. A systematic review of implementation frameworks of innovations in healthcare and resulting generic implementation framework. Health Res Policy Syst 2015;13:16. 10.1186/s12961-015-0005-z

- 33. Haw SJ, Gruer L, Amos A, et al. Legislation on smoking in enclosed public places in Scotland: how will we evaluate the impact? J Public Health 2006;28:24–30. 10.1093/pubmed/fdi080

- 34. Booth A, Moore G, Flemming K, et al. Taking account of context in systematic reviews and guidelines considering a complexity perspective. BMJ Glob Health 2019;4(Suppl 1):i18–i32. 10.1136/bmjgh-2018-000840

- 35. Higgins J, Green S. Cochrane Handbook for Systematic Reviews of Interventions, Version 5.1.0. The Cochrane Collaboration, 2011. www.cochrane-handbook.org

- 36. Noyes J, Booth A, Moore G, et al. Synthesising quantitative and qualitative evidence to inform guidelines on complex interventions: Clarifying the purposes, designs and outlining some methods. BMJ Glob Health 2019;4(Suppl 1):i64–i77. 10.1136/bmjgh-2018-000893

- 37. Squires JE, Valentine JC, Grimshaw JM. Systematic reviews of complex interventions: framing the review question. J Clin Epidemiol 2013;66:1215–22. 10.1016/j.jclinepi.2013.05.013

- 38. Higgins JPT, López-López JA, Becker BJ, et al. Synthesising quantitative evidence in systematic reviews of complex health interventions. BMJ Glob Health 2019;4(Suppl 1):i49–i63. 10.1136/bmjgh-2018-000858

- 39. Montgomery P, Movsisyan A, Grant SP, et al. Considerations of complexity in rating certainty of evidence in systematic reviews: a primer on using the GRADE approach in global health. BMJ Glob Health 2019;4(Suppl 1):i78–i89. 10.1136/bmjgh-2018-000848

- 40. Babor T, Caetano R, Casswell S. No ordinary commodity. Oxford University Press, 2010.

- 41. Gough D, Oliver S, Thomas J. An introduction to systematic reviews. London: Sage Publications Ltd, 2012.

- 42. Fischer AJ, Threlfall A, Meah S, et al. The appraisal of public health interventions: an overview. J Public Health 2013;35:488–94. 10.1093/pubmed/fdt076

- 43. Claxton K, Sculpher M, Drummond M. A rational framework for decision making by the National Institute For Clinical Excellence (NICE). The Lancet 2002;360:711–5. 10.1016/S0140-6736(02)09832-X

- 44. Proust K, Newell B, Brown H, et al. Human health and climate change: leverage points for adaptation in urban environments. Int J Environ Res Public Health 2012;9:2134–58. 10.3390/ijerph9062134

- 45. Scott K, George AS, Harvey SA, et al. Negotiating power relations, gender equality, and collective agency: are village health committees transformative social spaces in northern India? Int J Equity Health 2017;16:84. 10.1186/s12939-017-0580-4

- 46. Anderson LM, Petticrew M, Rehfuess E, et al. Using logic models to capture complexity in systematic reviews. Res Synth Methods 2011;2:33–42. 10.1002/jrsm.32

- 47. Petticrew M, Rehfuess E, Noyes J, et al. Synthesizing evidence on complex interventions: how meta-analytical, qualitative, and mixed-method approaches can contribute. J Clin Epidemiol 2013;66:1230–43. 10.1016/j.jclinepi.2013.06.005

- 48. Orton L, Halliday E, Collins M, et al. Putting context centre stage: evidence from a systems evaluation of an area based empowerment initiative in England. Crit Public Health 2017;27:477–89. 10.1080/09581596.2016.1250868

- 49. Craig P, Di Ruggiero E, Frolich K. Taking account of context in population health intervention research: guidance for producers, users and funders of research. National Institute for Health Research, 2018.

- 50. Hawe P, Shiell A, Riley T. Complex interventions: how "out of control" can a randomised controlled trial be? BMJ 2004;328:1561–3. 10.1136/bmj.328.7455.1561

- 51. Pfadenhauer LM, Gerhardus A, Mozygemba K, et al. Making sense of complexity in context and implementation: the Context and Implementation of Complex Interventions (CICI) framework. Implement Sci 2017;12:21. 10.1186/s13012-017-0552-5

- 52. Evans C. How successful will the sugar levy be in improving diet and reducing inequalities in health? Perspect Public Health 2018;138:85–86. 10.1177/1757913917750966

- 53. Halloran ME, Hudgens MG. Estimating population effects of vaccination using large, routinely collected data. Stat Med 2018;37:294–301. 10.1002/sim.7392

- 54. Rooke C, Amos A, Highet G, et al. Smoking spaces and practices in pubs, bars and clubs: young adults and the English smokefree legislation. Health Place 2013;19:108–15. 10.1016/j.healthplace.2012.10.009

- 55. Johnston LM, Matteson CL, Finegood DT. Systems science and obesity policy: a novel framework for analyzing and rethinking population-level planning. Am J Public Health 2014;104:1270–8. 10.2105/AJPH.2014.301884

- 56. Hayes P. Complexity theory and evaluation in public management. Public Management Review 2008;10:401–19.

- 57. Tin Tin S, Woodward A, Thornley S, et al. Cyclists' attitudes toward policies encouraging bicycle travel: findings from the Taupo Bicycle Study in New Zealand. Health Promot Int 2010;25:54–62. 10.1093/heapro/dap041

- 58. Petticrew M, Shemilt I, Lorenc T. Alcohol advertising and public health: do narrow perspectives lead to narrow conclusions? Journal of Epidemiology & Community Health 2017;71.

- 59. Roberts H, Curtis K, Liabo K, et al. Putting public health evidence into practice: increasing the prevalence of working smoke alarms in disadvantaged inner city housing. J Epidemiol Community Health 2004;58:280–5. 10.1136/jech.2003.007948

- 60. Petticrew M. Public health evaluation: epistemological challenges to evidence production and use. Evidence & Policy: A Journal of Research, Debate and Practice 2013;9:87–95. 10.1332/174426413X663742

- 61. Savigny D, Adam T, eds. Systems thinking for health systems strengthening: alliance for health policy and systems research. WHO, 2009.

- 62. Matheson A. Reducing social inequalities in obesity: complexity and power relationships. J Public Health 2016;38:197–9. 10.1093/pubmed/fdv197

- 63. Galea S, Riddle M, Kaplan GA. Causal thinking and complex system approaches in epidemiology. Int J Epidemiol 2010;39:97–106. 10.1093/ije/dyp296