Abstract

There remains little consensus about whether there exist meaningful individual differences in syntactic processing and, if so, what explains them. We argue that this partially reflects the fact that few psycholinguistic studies of individual differences include multiple constructs, multiple measures per construct, or tests for reliable measures. Here, we replicated three major syntactic phenomena in the psycholinguistic literature: use of verb distributional statistics, difficulty of object-versus subject-extracted relative clauses, and resolution of relative clause attachment ambiguities. We examine whether any individual differences in these phenomena could be predicted by language experience or general cognitive abilities (phonological ability, verbal working memory capacity, inhibitory control, perceptual speed). We find correlations between individual differences and offline, but not online, syntactic phenomena. Condition effects on reading time were not consistent within individuals, limiting their ability to correlate with other measures. We suggest that this might explain controversy over individual differences in language processing.

Keywords: syntax, self-paced reading, sentence comprehension, individual differences, reliability

In Lee Cronbach’s famous presidential address to the American Psychological Association Annual Convention in 1957, he described an optimistic vision of the future of psychology in which the best of the correlational and experimental traditions joined forces as the united discipline. A complete theory of human behavior, he argued, requires the modeling of individual variability along with the prediction of an individual’s response to varying conditions. The usefulness of such a united approach is especially clear in the domains of applied psychology: It would be best to provide an intervention that is tuned to the particular needs of each individual (Pellegrino, Baxter, & Glaser, 1999). Since that 1957 address, psychologists have taken up the challenge of the united discipline. In their 1999 review, Pellegrino, Baxter, and Glaser chart the progress of the field, focusing on the intersections of cognitive psychology and psychometrics that follow directly from Cronbach’s initial interests, focusing first on “aptitude-treatment interactions”, or the relationship between a student’s intellectual abilities and expertise on one hand, and educational materials and instructional methods on the other.

A specific case of this type of investigation is what we will call “reader-text interactions”. Substantial prior work has revealed that the time required to read a sentence or text is a function of both the individual reader and the text being read: Researchers in individual differences and educational psychology have identified important sources of variation in reading and comprehension skill (e.g., Kuperman & Van Dyke, 2011; Perfetti & Hart, 2002), and work in cognitive psychology and psycholinguistics has identified the types of words, sentences, and texts that are more difficult for comprehenders (e.g., some syntactic structures are more difficult to process; Just & Carpenter, 1992; Waters & Caplan, 1996; Gibson, 1998). What is less clear is whether and how reader and text characteristics interact: Are difficult sentences equally challenging for all readers? And, conversely, does variation in reading skill affect the comprehension of all linguistic materials, or just especially difficult ones?

Here, we investigate reader-text interactions in the domain of syntactic processing. We first consider whether there is evidence for such interactions—that is, are some types of sentences consistently more difficult for specific types of readers? Then, to the extent that we observe such differences, we consider what might explain them.

The Relevance of Reader-Text Interactions

The potential for reader-text interactions in syntactic processing is relevant to several broader issues in psychology. First, if variability in readers interacts with properties of texts, that can provide insights into the underlying mechanisms of language processing (for a discussion of how individual differences contribute to more general theoretical development, see Vogel & Awh, 2008). As we review below, theories of language processing make different claims about why some texts are more difficult to process. Consequently, they also imply different hypotheses about which individual differences are likely to modulate syntactic processing. For instance, theories that attribute the difficulty of some syntactic structures to comprehenders’ relative inexperience with them predict that individual differences in language experience might drive differences in syntactic processing. By contrast, in accounts in which some syntactic structures are difficult because of the demands they place on memory, it is individual differences in memory capabilities that are most likely to relate to individual differences in syntactic processing.

Second, whether and which individual differences exist in syntactic processing speak to broader, fundamental questions about the architecture of the mind and language processing system. For example, as we review in greater detail below, some theories (e.g., Waters & Caplan, 2003) propose that language processing is divided into initial, automatic stages and later, interpretive stages, with only the latter subject to individual differences in working memory and other cognitive abilities. Studying individual differences in both online and offline processing allows us to test this theoretical claim. Similarly, another central question in psychology is the extent to which cognitive systems are modular rather than driven by domain-general systems (see Fodor, 1983). By understanding whether variability in the capacity of domain general systems like working memory and executive function is associated with syntactic processing, we can better understand the overall architecture of the mind: To what degree is language (and other motor and perceptual systems) modular, and to what degree does it recruit domain-general systems? It also provides an opportunity to understand why characteristics like high working memory are associated with positive outcomes in more complex domains like reading comprehension.

Finally, and most broadly, reader-text interactions exemplify one of the central questions of the united discipline envisioned by Cronbach: How do the skills and abilities identified by psychometricians intersect with the cognitive-processing effects discovered by experimentalists? Aptitude-treatment interactions have been reported in some educational domains. For instance, learners with greater prior knowledge learn better from different types of texts (McNamara, Kintsch, Songer, & Kintsch, 1996) and feedback (Hausmann, Vuong, Towle, Fraundorf, Murray, & Connelly, 2013) than do low-knowledge learners. Indeed, several reader-text interactions have been reported within the language processing literature. For instance, slower overall readers show larger effects of word frequency (Seidenberg, 1985), and readers with greater linguistic experience may be less sensitive to word difficulty and correspondingly more sensitive to discourse-level factors (e.g., the introduction of new concepts; Stine-Morrow, Soederberg Miller, Gagne, & Hertzog, 2008). Most relevant for the present paper, readers with greater linguistic knowledge are also more efficient at resolving syntactic ambiguity (Traxler & Tooley, 2007). On the other hand, a review of the learning-styles hypothesis—that certain learners do best under one instructional method and other learners do best with a different method—has found little evidence to date in favor of such an interaction; instead, the most well-established mnemonic effects appear to apply across learners (Pashler, McDaniel, Rohrer, & Bjork, 2008). Thus, there is a need to investigate in other domains whether the cognitive-processing effects discovered by experimentalists are consistent across individuals, and whether the important skills and abilities identified in psychometrics apply across tasks and materials.

Assessing Reader-Text Interactions

Given the applied and theoretical relevance of potential reader-text interactions, it is not surprising that they have been studied by both educational psychologists and cognitive psychologists. While educational psychologists have investigated reader-text interactions with the goal of promoting learning in young readers (e.g. Coté, Goldman, & Saul, 1998) and comprehension among students (e.g., McNamara et al., 1996), a complementary literature grew in cognitive psychology as theories of reading began to include ideas about individual differences in cognitive abilities. An influential example is Just and Carpenter (1992), who proposed, and reviewed evidence, that differences in capacity between individuals correlate with differences in reading ability. Since then, psycholinguists have employed individual differences to promote both memory-capacity theories of language comprehension (e.g., Fedorenko, Gibson, & Rohde, 2006; 2007; Gibson, 1998; 2000), competing experience-based theories (MacDonald & Christiansen, 2002, discussed in greater detail below), and a number of other explanations that combine language-specific and domain-general mechanisms (e.g., Farmer, Fine, Misyak, & Christiansen, 2017; Novick, Trueswell, & Thompson-Schill, 2010; Payne, Grison, Gao, Christianson, Morrow & Stine-Morrow, 2014; Swets, Desmet, Hambrick, & Ferreira, 2007; Van Dyke, Johns, & Kukona, 2014; Engelhardt, Nigg, & Ferreira, 2017).

As the individual differences approach in psycholinguistics has continued to grow in popularity in recent years, it is important to take a step back and assess its progress toward the united discipline. These psycholinguistic investigations are nested within the experimental approach, investigating language-processing effects that have been previously shown across subjects using controlled linguistic stimuli. So, the question is whether these investigations live up to the ideals of the correlational approach. Here, we describe several methodological demands identified by the correlational approach and discuss how these constraints may have contributed to a lack of consensus regarding individual differences in syntactic processing.

First, a critical insight from measurement theory is that two variables can be observed to correlate only to the degree that there is meaningful variation in those individual variables and to the degree that such variation is reliably measured (Spearman, 1904). If there are genuine, stable individual differences in syntactic processing, those individuals who show large syntactic-processing effects on one subset of items should also show large effects on another, similar subset. By contrast, a failure to observe such correlations would suggest that either (a) there are not consistent individual differences in syntactic processing or (b) such differences exist, but our methods cannot reliably detect them.

For instance, consider a scenario in which all readers read a syntactically complex sentence 300 ms more slowly than a syntactically simple sentence. In this case, there is clearly a text effect—one sentence is more difficult than another—but there is no reader-text interaction because all readers found the complex sentence more difficult than the simple sentence to the same degree. In this scenario, it would be impossible for any other construct (such as verbal working memory) to explain individual differences in syntactic processing because such variation was not observed to begin with.

Unfortunately, while past investigations of individual differences in syntactic processing have sometimes used measures of working memory and other cognitive abilities that have been normed for their reliability, researchers have only rarely assessed whether we observe meaningful variation across individuals in the syntactic processing effects themselves (but see Swets, et al., 2007 for one application of psychometric principles to syntactic processing). Thus, before we ask why individuals might differ in syntactic processing, it is first necessary to establish that such individual differences exist at all. If we cannot observe consistent individual differences in syntactic processing to begin with, differences in online syntactic processing cannot be expected to relate to any other measure.

Second, individual differences are best assessed with multiple measures. “Perhaps the most valuable trading of goods the correlator can offer,” Cronbach (1957) states, “…is his multivariate conception of the world. No experimenter would deny that situations and responses are multifaceted, but rarely are his procedures designed for a systematic multivariate analysis” (p. 676). A strength of the multivariate approach is that it deals explicitly with measurement error: Observed performance on almost any single task reflects not only the construct of interest but also measurement error, which includes both random error and non-random error from other constructs (Bollen, 1989). Consider reading span (Daneman & Carpenter, 1980), which has been used as the single measure of verbal working memory capacity in several influential psycholinguistic studies of individual differences (e.g., Just & Carpenter, 1992; MacDonald, Just, & Carpenter, 1992; Pearlmutter & MacDonald, 1995). The reading span task purports to measure verbal working memory capacity because it requires participants to remember particular words while reading sentences, but it might also be influenced by participants’ knowledge of specific lexical items (Engle, Nations, & Cantor, 1990; MacDonald & Christiansen, 2002). These confounds make it difficult to interpret a high or low score on any single measure. But, including multiple measures of a single construct allows researchers to assess the degree of common variance between them and use composite scores within a construct; for instance, a composite score for verbal working memory can be created by administering both a reading span and an operation span task. Unfortunately, not all psycholinguistic studies have used multiple measures (or indicators) for any given factor.

Further, the psychometric approach implies that multiple constructs should be measured simultaneously in order to tease apart their effects. A challenge for studying individual differences is that many potential explanatory constructs, such as verbal working memory and linguistic experience, might be intercorrelated (e.g., MacDonald & Christiansen, 2002), making it more challenging to attribute effects to any one construct in particular. In order to demonstrate that a specific construct—say, linguistic experience—is the one that drives differences in online syntactic processing, it is important to also measure other competing constructs and to show that it is specifically linguistic experience, and not (for example) verbal working memory or inhibitory control, that relates to individual differences in processing. However, many psycholinguistic studies have examined only one or two of these constructs within a single investigation; for instance, a study may measure verbal working memory but not reading experience, or vice versa.

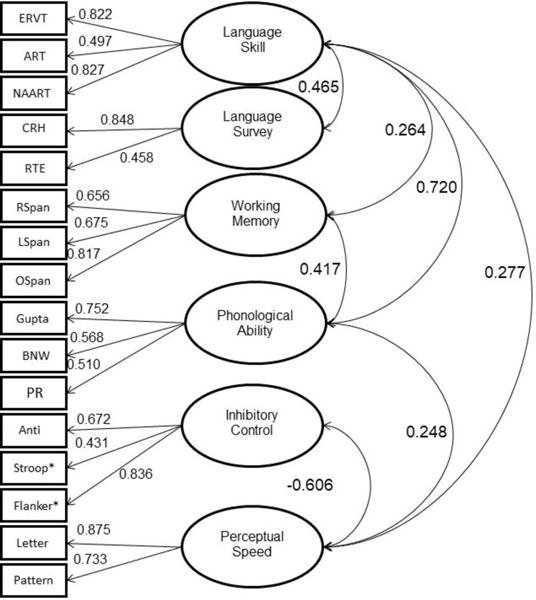

In the current study, we aim to address these three issues by (1) assessing multiple constructs—both domain-general and language-specific—within individuals, (2) including multiple measures of each construct (e.g., multiple span tasks to create a composite measure of verbal working memory), and (3) assessing whether our data include consistent individual differences in the predictor variables and in the syntactic processing effects, both in initial processing and in subsequent comprehension. And, to the extent we find such differences, we attempt to tease apart competing theoretical explanations of them by examining multiple constructs that have been separately proposed to account for individual differences in syntactic processing: language experience, phonological ability, verbal working memory, inhibitory control, and processing speed. We review these proposed explanations below.

What Might Account for Individual Differences in Syntactic Processing?

Language experience

Experience-based accounts propose that individual differences in syntactic processing, even those that are correlated with domain-general abilities, are better explained as differences in exposure to various structures (MacDonald & Christiansen, 2002). This claim about individual differences is consistent with broader theories of language comprehension that posit a strong influence of experience on syntactic processing more generally. For example, constraint-based theories of language comprehension (Altmann & Steedman, 1988; MacDonald, 1994; MacDonald, Pearlmutter, & Seidenberg, 1994; Spivey-Knowlton, Trueswell, & Tanenhaus, 1993) propose that language comprehension is fast and accurate because it incorporates numerous probabilistic constraints, including syntactic ones, that comprehenders have learned through their experience with language. Experience-based theories are supported by demonstrations that syntactic structures are read more quickly when they are more frequent or predictable, as determined from either global statistics or those of particular verbs (Garnsey, Pearlmutter, Myers, & Lotocky, 1997; MacDonald & Christiansen, 2002), and even when memory demands are equated (e.g., Levy, Fedorenko, Breen, & Gibson, 2012). Experience-based accounts are further supported by evidence that online processing of initially difficult structures can be facilitated on the basis of recent laboratory-provided experience with the structures, including both trial-to-trial changes (Arai, van Gompel, & Scheepers, 2007; Thothathiri & Snedeker, 2008; Tooley, Traxler, & Swaab, 2009; Traxler, 2008) and changes over the course of one or more experimental sessions (Farmer, Fine, Yan, Cheimariou, & Jaeger, 2014; Fine, Qian, Jaeger, & Jacobs, 2010; Fine, Jaeger, Farmer, & Qian, 2013; Wells, Christiansen, Race, Acheson, & MacDonald, 2009), and even for structures that are only marginally grammatical (Luka & Barsalou, 2005; Luka & Choi, 2012) or that were previously unfamiliar to the comprehender (Kaschak, 2006; Kaschak & Glenberg, 2004; Fraundorf & Jaeger, 2016).

The examples thus far discuss differences between syntactic structures but within individuals. But, the claim that syntactic processing is guided by relative experience with different structures also suggests that processing could be influenced by differences among individuals in their relevant linguistic experience: Some individuals may come into the reading task with substantially more or less of the experience that was experimentally manipulated in some of the experiments described above. Thus, for instance, computational simulations suggest that rare, difficult structures are less disruptive for more experienced readers, who have more experience with these uncommon structures (MacDonald & Christiansen, 2002). This prediction has been supported by recent studies in the spoken language processing domain, which have found that individuals with higher vocabulary or higher literacy show facilitation in online, anticipatory language processing in the visual world (e.g. Borovsky, Elman, & Fernald, 2012; Mishra, Singh, Pandey, & Huettig, 2012; Rommers, Meyer, & Huettig, 2015). There is still relatively little comparable work in the written modality, but Traxler and Tooley (2007) found that individuals with greater knowledge were less affected by temporary syntactic ambiguity in their online processing.

Phonological ability

Phonological abilities have long been hypothesized to be a major factor in determining reading ability, particularly in acquisition or among poor readers (e.g. Byrne & Letz, 1983; Read & Ruyter, 1985; Sawyer & Fox, 1991; Wagner, Torgesen, & Rashotte, 1999). Experimental manipulations of phonological interference in text (Baddeley, Eldrige, & Lewis, 1981; Keller, Carpenter, & Just, 2003; Kennison, 2004; McCutchen, Bell, France, & Perfetti, 1991, to name a few) also suggest a role of phonology in offline syntactic comprehension even among skilled adult readers.

However, fewer studies have investigated effects of phonology during initial, on-line syntactic processing, and those that have yielded mixed evidence. Acheson and MacDonald (2011) found that sentences with embedded relative clauses were made more difficult by phonological overlap between the head noun of the relative clause and a noun embedded within it (e.g. baker and banker) and between the relative clause verb and main clause verb (e.g. sought and bought). This overlap effect was larger for object-extracted relative clauses (ORCs; 1a), which are typically more difficult in general, relative to subject-extracted relative clauses (SRCs; 1b), perhaps because in some theoretical accounts, phonological representations could be used to maintain the non-canonical ordering of agent and patient in the ORC (that is, the sought baker precedes the seeking banker; MacDonald & Christiansen, 2002).

-

(1a)

The baker that the banker sought bought the house.

-

(1b)

The baker that sought the banker bought the house.

However, Kush, Johns, and Van Dyke (2015) present data that suggest that these effects are the result of encoding interference rather than interference with syntactic integration. Indeed, some theories (e.g., McElree, Foraker, & Dyer, 2003; Martin & McElree, 2008) propose that maintaining serial order is not necessary for comprehension because previous constituents can be directly accessed in memory. Thus, while Van Dyke et al. (2014) found that reading times were related both to vocabulary and to non-verbal memory for serial order, they found no effects of phonological ability.

Whether variation between individuals in phonological ability plays a role in processing is a point of controversy, but it is possible that individual differences between individuals in phonological ability could also influence syntactic processing ability—especially for structures where it may be important to maintain serial order to arrive at the correct meaning of the sentence.

Verbal working memory capacity

As we introduced briefly above, capacity constraints in verbal working memory have figured prominently in research on reader-text interactions. Some theories have proposed that syntactic structures are difficult to process to the extent that they impose greater demands on memory (Fedorenko et al., 2006, 2007; Gibson, 1998, 2000; Just & Carpenter, 1992; King & Just, 1991). For instance, in both the ORC (1a) and SRC (1b) above, the relative pronoun that introduces a dependency in which the relative pronoun must eventually be co-indexed with a syntactic gap in the relative clause. In the ORC, this integration occurs later (at sought, the reader must recall it was the baker who was sought) and requires a longer-distance memory retrieval than in the SRC, in which the gap occurs immediately after that. It has been argued (Gibson, 1998, 2000) that these memory demands explain why ORCs are understood more slowly and less accurately. Thus, differences between individuals in their ability to store and retrieve these dependencies may be associated with how much more difficult they find ORCs.

Other theories suggest a second reason that memory abilities may be important to online language processing. Just and Carpenter (1992) propose that individuals differ in their total capacity to consider multiple sources of information; as a result, individuals with lower memory capacity may also be less able to use additional constraints such as semantic plausibility or referential contexts to help resolve a syntactic ambiguity.

Many studies have evaluated both of these predictions by directly relating syntactic processing to individual differences in measures of verbal working memory. These studies have often used complex span tasks in which participants receive sets of items to store and remember while completing a concurrent or interleaved processing task. For instance, participants may read sentences while remembering particular words from the sentences (Daneman & Carpenter, 1980). It has sometimes been reported that readers with lower scores on complex span tasks have greater difficulty with online processing of challenging syntactic structures, such as the object-extracted relative clauses described above (King & Just, 1991). However, Waters and Caplan (1996) point out that low-span readers in these studies performed worse overall and were not differentially more affected by syntactic difficulty. Moreover, studies have revealed inconsistent results as to whether low-span participants are actually more or less influenced by semantic and pragmatic information; some results suggest that low-but not high-span subjects see a benefit in online processing when helpful pragmatic cues are present (King & Just, 1991), and others suggest exactly the reverse (Just & Carpenter, 1992; Long & Prat, 2008; Pearlmutter & MacDonald, 1995; Traxler, Williams, Blozis, & Morris, 2005).

As a result, Caplan and Waters (1999) propose that online, automatic language processing and later interpretive processes tap separate resources and that only later, post-interpretive processes are assessed by complex span tasks and other working memory measures. For instance, differences in verbal working memory significantly relate to performance on object-extracted relative clauses in offline comprehension accuracy but not in online reading time, even when the measures come from the same participants reading the same sentences (Caplan, DeDe, Waters, Michaud, & Tripodis, 2011; Waters & Caplan, 2005). Indeed, although it is unclear whether such measures correspond to online reading, complex span performance correlates with offline syntactic processing, as well as reading comprehension more generally (Daneman & Merikle, 1996). For instance, Swets et al. (2007) found that working memory— even when measured using non-verbal complex span tasks—was significantly associated with how participants would interpret a syntactically ambiguous relative clause in offline comprehension questions (see also Payne et al., 2014).

Inhibitory control

Differences in working memory relate closely to another construct that has been proposed to drive individual differences in language processing: attentional control. Recent work (Novick et al., 2010) has examined syntactic processing as a function of domain-general inhibitory control, or the ability to resolve conflict between competing internal representations. Inhibitory control may be necessary for syntactic processing because the interpretation that comprehenders initially favor sometimes turns out to be wholly wrong and needs revision. This possibility is suggested by evidence that an initial misparse, even when later ruled out syntactically (Christianson, Hollingworth, Halliwell, & Ferreira, 2001) or revised by a speaker (Lau & Ferreira, 2005), is not always fully suppressed and may continue to influence readers’ eventual, offline interpretations. Indeed, online competition may even arise from syntactic structures that are never supported globally but that are coherent in the local syntactic context (Tabor, Galantucci, & Richardson, 2004).

In addition to the demands of revising the syntactic structure of a sentence, inhibitory control may be necessary for resolving competition between similar constituents online as the sentence unfolds. For example, the online processing difficulty of object-extracted relative clauses may be amplified by semantic (Gordon, Hendrick, & Johnson, 2001) or phonological (Acheson & MacDonald, 2011) similarity between the referents in the sentence. These findings are consistent with theories, both of language comprehension specifically (Lewis, Vasishth, & Van Dyke, 2006) and of memory more generally (Nairne, 2002), in which the primary determinant of short-term remembering is not a fixed storage capacity but rather the degree of interference between items to be remembered.

Thus, differences in the ability to suppress irrelevant information and resolve competition might lead to differences in the speed and accuracy of comprehension, and such correlations have been observed (Novick, Trueswell, & Thompson-Schill, 2005). More generally, the ability to suppress incorrect or irrelevant information has been argued to contribute to many aspects of language comprehension ability (Gernsbacher, 1993). Differences in inhibitory control might even account for effects previously attributed to working memory capacity—measures of inhibitory control often correlate with complex span task performance, and individual differences in performance on such tasks have sometimes been attributed in whole (Engle, 2002) or in part (Unsworth & Engle, 2007) to differences in inhibitory control. Indeed, it has been proposed that working memory span performance correlates with language comprehension and other complex activities because each of these activities relies on general attentional control processes (for review, see Kane, Conway, Hambrick, & Engle, 2007).

Perceptual speed

The final construct explored here is perceptual speed, or how quickly one is able to process perceptual stimuli (in the visual domain, within the current study), an ability that falls under the more general construct of processing speed (Salthouse, 1996). The inclusion of this basic ability is intended to capture and control for shared aspects of the reading task and other cognitive tasks that result from rapid visual processing of on-screen stimuli. For instance, perceptual speed has been proposed as one of the core abilities that support working memory (see Jarrold & Towse, 2006, for review), so controlling for perceptual speed would allow us to examine other aspects of working memory that may relate more to sentence processing. In addition, perceptual speed itself has been implicated in individual differences in language processing, although most frequently as an explanation for age-related changes in cognition (e.g., Salthouse, 1996; Caplan et al., 2011). Nevertheless, individual differences in processing speed may also explain some of the variability within an age group.

Current study

In the current study, we examined the contributions of both domain-specific and domain-general mechanisms to online and offline syntactic processing. We selected three syntactic constructions that have been relevant in the psycholinguistic literature in motivating both general theories of language processing and specifically those of individual differences. Our choice of constructions also allowed us to measure both online processing and offline comprehension, which provide insight into potential differences between interpretative and post-interpretive mechanisms. Critically, we also measured the internal consistency of each of these measures: Do we, in fact, observe consistent individual differences such that (for instance) some subjects consistently find ORCs easier to read than do other subjects? As we note above, although many studies have sought to relate verbal working memory and other such constructs to online sentence processing, researchers have not always assessed directly tested whether there are genuine individual differences in sentence processing to begin with.

To the extent that we do observe individual differences in syntactic processing, we also assessed individual differences in five other constructs (language experience, phonological ability, verbal working memory, inhibitory control, and perceptual speed) that might explain those differences in syntactic processing. All constructs were measured within the same set of participants, allowing for their effects to be distinguished and compared. Further, each of these constructs was assessed with multiple tasks, which allows us to create composite measures and mitigate task-specific effects.

Finally, we applied linear mixed-effects regression to relate these individual differences to syntactic processing. One potential challenge in distinguishing the influences of, say, verbal working memory and language experience is that, with a relatively large number of predictors and too few observations, regression models tend to capitalize on chance aspects of the data rather than yield generalizable results (the problem of overfitting; Babyak, 2004). Linear mixed-effects models reduce this problem because the unit of analysis is the reading time on an individual word or the response to an individual comprehension question, rather than an average of all of a participant’s reading times or responses. Thus, thousands of observations are available to the regression model. (For further discussion of linear mixed-effects models and other solutions to the study of individual differences in reading, see Matsuki, Kuperman, & Van Dyke, 2016).

Below, we detail each of these three syntactic structures and their corresponding processing measures.

Structures of interest

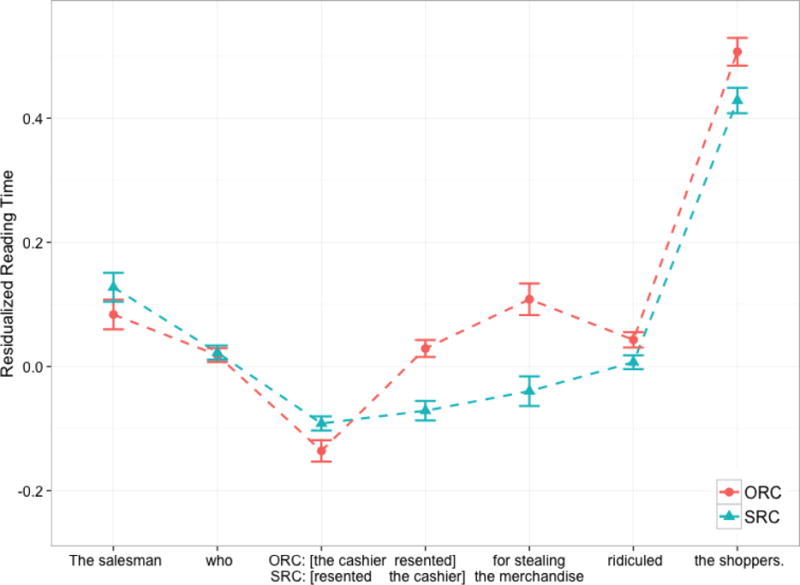

Relative clause extraction

First, we tested differences in reading and comprehending object-extracted versus subject-extracted relative clauses, a hallmark syntactic phenomenon that has contributed to numerous theories of syntactic processing. As reviewed above, within a participant, ORCs are typically more demanding and are read more slowly than SRCs within the relative clause; to preview, we replicate this well-established effect in our own data. Our interest, however, was whether there were differences across participants in the degree to which ORCs were relatively more difficult than SRCs. Thus, we took as a measure of individual differences the degree to which each participant read the syntactically difficult ORCs more slowly than the syntactically simpler SRCs.

Verb bias

We also examined a second widely-studied phenomenon in syntactic processing: the online use of verb distributional statistics in the sentential complement structure. In sentence (2), a temporary ambiguity between a direct object and sentential complement reading is introduced. In (2a), the ambiguity is resolved early: The complementizer that signals that the main verb accepted takes a sentential complement in which the contract is the subject. In (2b), removing the complementizer makes the contract temporarily ambiguous between the subject of the sentential complement (the player accepted some fact about the contract) and the direct object of accepted (the contract is what the player accepted).

-

(2a)

The basketball player accepted that the contract required him to play every game.

-

(2b)

The basketball player accepted the contract required him to play every game.

In general, the verb accepted is more likely to take a direct object than a sentential complement. Correspondingly, in the ambiguous version, readers slow down when the sentence is disambiguated to the sentential complement structure (at the verb required), suggesting they had initially favored the direct object interpretation that is consistent with the distributional statistics of accepted. However, other verbs, such as acknowledged, take a sentential complement more than a direct object; for these verbs, there is no benefit to disambiguating the structure with that, suggesting that readers already favor the sentential complement interpretation (Fine et al., 2010; Garnsey et al., 1997; Wilson & Garnsey, 2009; but see Kennison, 2001). Thus, our dependent measure of interest was individual differences in magnitude of this verb bias x ambiguity interaction, which indexes the influence of these distributional statistics on online syntactic processing. The use of verb bias is of interest not only because it is another cue that is available during online processing, but also because the learning of these biases provides evidence for how processing is shaped through experience with the language environment (for further discussion, see Ryskin, Qi, Duff, & Brown-Schmidt, 2017).

Attachment ambiguity

Finally, we examined the resolution of globally ambiguous relative clause attachments, such as (3) below:

-

(3)

The maid of the princess who scratched herself in public was terribly embarrassed.

The relative clause who scratched herself in public could modify either the maid or the princess. No syntactic information within the sentence resolves this ambiguity, but attaching the relative clause to the second noun (low attachment) is more common than attaching to the first noun (high attachment) in English (Rayner, Carlson, & Frazier, 1983), though not in all languages (Cuetos & Mitchell, 1988).

For these items, our interest was purely in participants’ offline syntactic processing (in contrast to Payne et al., 2014). Specifically, we queried whether participants arrived at the low attachment or high attachment reading, as revealed by offline probe questions, such as Did the princess scratch herself? Note that a “yes” answer to this question, taken alone, might reflect either a genuine low-attachment preference or a simple bias to affirm whichever interpretation is presented. However, as detailed in the Method and Results sections, we varied the question type across items, which allowed us to obtain a measure of participants’ low-attachment preference that was independent of a bias to respond “yes”; this measure of low-attachment preference then served as the key individual-difference variable for these items.

Research Questions

For each of these structures, we considered three questions. Our first question was simply whether we in fact observe consistent individual variation in each of the syntactic processing effects described above. That is, are there some individuals who are consistently advantaged at reading ORCs relative to other individuals? Do some individuals consistently show a stronger low-attachment preference than others? As we note above, a critical first step is to establish that individual differences exist and have been reliably measured before considering what other constructs might explain those differences.

Where we found that individuals do vary significantly in their syntactic processing, our second question was determining which individual differences, if any, relate to this variability: Are they domain-specific influences such as linguistic experience, or are they more domain-general abilities such as verbal working memory or executive function?

Finally, we considered whether the relationship between sentence processing and any of the individual differences here is present only in online processing, only in offline comprehension, or in both. Caplan and Waters (1999) propose that there are different constraints on online versus post-interpretive processing, and that only the latter is sensitive to differences in capacity between individuals; however, direct tests of this claim have still been relatively sparse in the literature.

Method

Participants

One hundred and thirty-three subjects participated for course credit or a cash honorarium. The study was advertised to the campus community and was thus biased toward younger adults and university students. Of the 133 participants, 10 did not provide any demographic information: Nine did not show up for the second session, in which the questionnaire was given, and one declined to complete the questionnaire. Of the 123 participants with demographic information, 78 (63%) were female. Participants’ ages ranged from 18 to 67 years (M = 20.94 years; SD = 5.37; median = 20 years; 94.3% under age 30). Our sample had only slightly more years of formal education than the nationwide mean (M =13.3 years completed; SD = 1.91; median = 12 years; range = [12, 19]; versus a nationwide mean of 12.9 years according to the United Nations Development Programme, 2014). Most participants (87%) indicated that they had completed at least “some college,” and of the 16 remaining responses, 10 came from University students participating for course credit, who presumably did in fact have some college education.

All participants reported that they were native speakers of English who had not been exposed to any other languages before the age of 5 and that they had normal or corrected-to-normal vision and hearing.

Of the total 133 participants that took part in the study, two were excluded entirely for not following directions on the processing speed tasks. An additional 14 participants were excluded from the regression analyses for having incomplete data (running out of time during a session or not arriving for the second session), leaving a total of 117 participants included in the analyses.

Materials

Critical stimuli for the self-paced moving window task consisted of 80 sentences with DO- or SC-bias verbs, 32 unambiguous subject-modifying relative clause sentences manipulated for extraction type, and 20 globally ambiguous relative clause sentences. We describe each of these stimulus types in detail below.

Use of verb bias

The online use of verb bias was tested using 80 critical sentences taken from Lee, Lu, and Garnsey (2013). Each sentence included a matrix subject, followed either by a DO-bias verb (40 sentences) or by a SC-bias verb (40 sentences), and then followed by a sentential complement. Each sentence had 2 versions that differed from each other solely in whether the sentential complement was headed by the complementizer that. Example sentences are presented in (4) below; they were otherwise identical in word frequency, length, and order. (Emphasis is added here for illustration purposes only and was not presented to participants.).

-

(4a)

DO-biased verb: The club members understood (that) the bylaws would be applied to everyone.

-

(4b)

SC-biased verb: The ticket agent admitted (that) the mistake might be hard to correct.

In the version without that, the role of the post-verbal noun was temporarily ambiguous between the direct object of the verb and the subject of a sentential complement. This ambiguity persisted until the next word (e.g., would in 4a or might in 4b), which disambiguated the sentence towards a sentential complement structure. In the version with the complementizer, the post-verbal noun was unambiguously the subject of a sentential complement.

Lee et al. (2013) controlled the character length and Francis-Kucera log word frequency of the post-verbal noun across verb type. Although the post-verbal noun was intended in all cases to be highly plausible as a direct object of the verb, plausibility as a direct object was rated as slightly higher after DO-bias verbs than after SC-bias verbs in a norming study conducted on a 7-point scale (6.4 and 6.1 respectively; 1: highly implausible, 7: highly plausible). For details of these norms, see Lee et al. (2013).

We used self-paced reading times to measure participants’ online processing of the verb bias items. Reaction times under 200 milliseconds were dropped and remaining times were log transformed. Only reaction times for correct trials were included in further analyses. For both sentence types, the critical region of analysis consisted of the embedded verb and the word immediately afterward, such as would be or might be, underlined in (4a) and (4b) above (“the disambiguation region” following Garnsey et al., 1997).

To measure offline comprehension, we created a YES-NO comprehension question for each sentence measuring participants’ understanding of its general meaning (e.g., Did the ticket agent think the mistake would be a problem?). The questions did not probe whether the participant arrived at the direct object or sentential complement interpretation.

Subject-versus object-extracted relative clauses

Processing of subject-versus object-extracted relative clauses was examined using 32 critical items taken from Gibson, Desmet, Grodner, Watson, and Ko (2005). Critical items began with a subject noun phrase, which was modified by a relative clause, and then continued with the verb phrase of the main clause of the sentence. Each item was manipulated for relative clause extraction site as shown in (5) below. The antecedent noun (in this case, reporter) was the subject of the relative clause in the SRC condition, and it was the object of the relative clause in the ORC condition.

-

(5a)

SRC: The reporter who attacked the senator on Tuesday ignored the president.

-

(5b)

ORC: The reporter who the senator attacked on Tuesday ignored the president.

Because the order of the words in the relative clause differed across extraction type, self-paced reading times were analyzed for a combined region including all of the relevant words (following Gibson et al., 2005). This region is underlined above and consisted of the relative pronoun who, the noun phrase, and the verb. Note that the conditions varied only in the order of these words; thus, word frequency and word length was controlled across conditions.

For each item, a YES-NO comprehension question was also created to assess offline comprehension. In half of the items, the questions required identifying the subject and object of the relative clause correctly (e.g., Did the reporter attack the senator?/Did the senator attack the reporter?). In the other half, the questions asked about main clauses (e.g., Did the reporter ignore the president?/Did the senator ignore the president?). This distinction allowed us to probe whether any difficulties in interpreting the ORCs were driven by difficulty in interpreting the relative clause in particular as opposed to the sentence more broadly.

Offline resolution of relative clause attachment ambiguities

To test offline judgments of relative clause attachment, we used 20 relative clause sentences taken from Swets et al. (2007). Each sentence contained a complex noun phrase modified by a relative clause, which was followed by the verb phrase of the main clause. The complex noun phrase included two animate nouns that were linked by the preposition of. Relative clauses contained a reflexive pronoun that could refer to either noun of the complex noun phrase, thus creating an attachment ambiguity. An example sentence is presented in (3), reproduced below.

-

(3)

The maid of the princess who scratched herself in public was terribly embarrassed.

For each item, we created a YES-NO question asking explicitly about relative clause attachment. In half of the items, a YES response indicated a low attachment interpretation (e.g., Did the princess scratch herself?); in the other half, a YES response indicated a high attachment interpretation (e.g., Did the maid scratch herself?). This design allowed us to apply signal-detection analyses (Green & Swets, 1996; Macmillan & Creelman, 2005; Murayama, Sakaki, Yan, & Smith, 2014) to separate participants’ potential response bias (any overall tendency to answer yes to all questions) from their low-attachment preference (an increase in yes responses specifically when that response indicates a low-attachment reading, termed sensitivity in the signal-detection framework).

List construction

Two lists were constructed by counterbalancing the complementizer presence-absence pairings for each of the 80 verb bias sentences and the SRC-ORC pairings for each of the 32 unambiguous relative clause sentences across lists. The stimuli for the 20 globally ambiguous relative sentences were identical across lists. In addition to these 132 experimental sentences, each list contained 80 filler sentences of various structures. The filler sentences were constructed to include a variety of grammatical structures and thereby disguise the structures of interest. Seventeen fillers were passive sentences (e.g., The terrifying monster was killed by the heroic knight), twenty-three were simple transitive sentences (The motivational speaker fixed the projector before her lecture), six included infinitive clauses (The game show contestant expected to win), four were simple intransitive sentences (The four kids shrieked when the monster appeared on screen), three were ditransitive sentences (The friendly man lent sugar to the neighbor next door), eight were conjoined sentences (Tania was accepted to graduate school and Steve passed the bar exam), sixteen used the sentential-complement structure but with a post-verbal noun phrase that was implausible as a direct object of the verb (eleven with the complementizer that and five without; e.g., The housewife hoped the antiques were valuable), two used the past progressive (The experienced flight attendant was giving instructions to a group of trainees), and one was an existential (There is an old house on the street whose roof was fixed).

Because the filler sentences were not constructed to be syntactically difficult or confusing, the comprehension questions did not specifically probe the syntax of the sentences but rather their general semantic content (e.g., Did Steve fail the bar exam?). For half of the fillers, the correct answer to the comprehension question was true; for the other half, it was false.

All participants saw the experimental and distracter sentences in the same, pseudo-randomized order. This design was motivated by our goal of measuring differences between individuals in their language processing, which requires minimizing extraneous sources of variability between participants. Differences in the experimental procedure (e.g., item ordering) across participants introduce additional, irrelevant between-participants variance that cannot be explained by the constructs of interest. By contrast, presenting items in the same order to all participants, although it confounds variance in item properties with serial position, crucially reduces the variance between participants in their experience in the experiment, and the goal of the present study was to explain variance between individuals rather than between items. (See Swets et al., 2007, for another example of an application of this principle to language processing studies.)

Procedure

All tasks, including the self-paced reading task, were completed on a Macintosh desktop computer running MATLAB software and the Psychophysics Toolbox (Brainard, 1997; Kleiner, Brainard, & Pelli, 2007; Pelli, 1997) and CogToolbox (Fraundorf et al., 2014). Participants sat approximately 750 mm from the screen.

Participants completed a total of 16 tasks (described individually in detail below) over two experimental sessions 24 hours apart. All participants completed the tasks in the same order to minimize experimental variability between individuals. First, participants completed a self-paced moving-window reading task designed to measure syntactic processing. Participants then completed a battery of tasks measuring the other individual differences of interest. On the first day, these tasks included, in order, three measures of verbal working memory (Reading Span, Listening Span, and Operation Span), two measures of perceptual speed (Letter Comparison and Pattern Comparison), three measures of inhibitory control (Antisaccade, Stroop, and Flanker), and two of five measures of language experience (vocabulary and Author Recognition Test). On the second day, participants completed a third language experience task (North American Adult Reading Test), three measures of phonological ability (Pseudoword Repetition, Phoneme Reversal, and Blending Nonwords), and finally the two remaining language experience measures (Comparative Reading Habits and Reading Time Estimates questionnaires). Between tasks, the list of tasks was displayed on the screen with checkmarks beside the completed tasks to indicate subjects’ progress. Participants were encouraged to take breaks between tasks as needed.

Self-paced moving window

Syntactic processing was assessed through a self-paced moving-window reading task (Just, Carpenter, & Woolley, 1982). The first word of a sentence was displayed on the screen, with each remaining word in the sentence replaced by a number of dashes equal to the character length of the word (e.g., chair would be replaced with -----). When the participant pressed the space bar, the next word was displayed and the previous word was replaced by dashes. Sentences were aligned with the left edge of the screen and displayed equidistant from the top and bottom of the display. All sentences occupied only a single line of text on the screen.

After participants read the last word of a sentence, the sentence disappeared, and a comprehension question was presented in its entirety. Participants answered yes or no by pressing one of two keys on the keyboard.

Between trials, the serial position of the upcoming trial was displayed for 750 ms in the same screen position as the first word of each sentence. Participants were given a rest period every 40 trials. This task lasted approximately forty-five minutes.

Reading Span

As in all variants of the Reading Span task (Daneman & Carpenter, 1980), participants read sentences while remembering material for a memory test. In the reading portion of the task, participants saw a sentence defining a common noun either truthfully, as in (6a), or falsely, as in (6b). Sentences were taken from Stine and Hindman (1994). Approximately half of the sentences were true and half were false.

-

(6a)

An article of clothing that is worn on the foot is a sock.

-

(6b)

A part of the body that is attached to the shoulder is the toe.

Each sentence was displayed in its entirety in the center of the screen. Participants read the sentence aloud, and then pressed the space bar. The sentence disappeared and was replaced with the prompt “Is this true?” Participants pressed one of two keys on the keyboard to judge the sentence as true or false.

One goal was to obtain measures of complex span performance that were less influenced by participants’ linguistic experience, which otherwise might explain any potential relation between verbal working memory and sentence processing (Engle et al., 1990; Macdonald & Christiansen, 2002). For instance, one way that language experience could influence span scores is by speeding the processing (sentence-reading) component of the task: If all participants saw the sentences for the same amount of time, those participants who could read the sentences more quickly would have more time remaining to implement rehearsal strategies (Friedman & Miyake, 2004). Indeed, allowing participants time to implement strategies in this way reduces the predictive power of complex span tasks (Friedman & Miyake, 2004; McCabe, 2010; Unsworth, Redick, Heitz, Broadway, & Engle, 2009). Thus, we followed the procedure of Unsworth, Heitz, Schrock, and Engle (2005) to reduce the influence of language processing speed by introducing an initial calibration phase to the task. During the initial calibration phase, participants performed only the processing (semantic judgment) task on 15 sentences and did not perform the memory storage task described below. Participants had unlimited time to read each sentence and make the judgment, and they received feedback on their accuracy afterwards. This procedure was designed to assess each participant’s reading speed. We then controlled for reading speed in the main task by giving participants a response deadline that was based on their speed in the calibration phase. In the Results section, we provide evidence that these procedures successfully deconfounded Reading Span scores from language experience.

A second way that language experience might influence complex-span performance is by facilitating processing of the to-be-remembered items. In some versions of the Reading Span task (such as the original version by Daneman & Carpenter, 1980), the to-be-remembered items are the final words of the sentences in the processing task. However, participants’ ability to remember such words is influenced by their familiarity or experience with the lexical items themselves (Engle et al., 1990). We thus instead adopted the procedure of Unsworth and colleagues by asking participants to remember letters, which all participants should find highly familiar and easy to process. The letters were randomly chosen from the set F, H, J, K, L, N, P, Q, R, S, T, Y, with the constraint that no letter ever appeared twice within the same trial. After each sentence in the main task, the to-be-remembered letter was displayed in caps in the center of the screen for 800 ms.

We also took two other steps to reduce participants’ ability to implement strategies. First, participants were required to read the sentence aloud and to press the space bar immediately after doing so; the program displayed a warning if participants were too slow at reading the sentences. Past work has established that stricter pacing of complex span tasks increases their predictive power (Friedman & Miyake, 2004; McCabe, 2010; Unsworth et al., 2009). Second, to prevent participants from neglecting the reading task in favor of rehearsing the to-be-remembered items, participants were instructed that their primary goal was to maintain at least 85% accuracy on the reading portion of the task. After each test phase, participants saw their cumulative accuracy on the processing task (i.e., their accuracy in judging the sentences as true or false) and received a warning whenever it dropped below 85% (Unsworth et al., 2005).

After completing the calibration procedure, participants proceeded to the main task. Participants continued to read sentences and judge them as true or false, but the maximum time allowed to read a sentence and make the semantic judgment was now set as the participant’s mean reading time in the calibration phase plus 2.5 standard deviations (Unsworth et al., 2005). If participants took longer than this time, “TOO SLOW!” displayed on the screen for 1000 ms, the sentence was counted as an error, and the computer proceeded to the next sentence. Participants did not receive feedback on their processing accuracy during the main task. After a predetermined number of sentences and letters, participants proceeded to the test phase of each trial, in which they were required to type the to-be-remembered letters in the order in which they had been presented.

Within the main task, participants first completed two practice trials at span length two (that is, two sentences and a total of two to-be-remembered letters). The critical trials consisted of two trials each at span lengths two to six, for a total of ten trials. A common procedure for complex span tasks has been for participants to start at the shortest span length and progress towards the longest span length, with the task ending if participants do not meet some criterion level of performance. However, researchers have raised several concerns with this procedure. First, performance typically decreases over repeated memory tests (the phenomenon of proactive interference). Presenting spans in ascending order confounds span length with the amount of proactive interference, and so variability in complex span performance could actually reflect variability in susceptibility to proactive interference (Lustig, May, & Hasher, 2001, but see Salthouse & Pink, 2008). Second, concluding the task early reduces the data collected from each participant. Participants may succeed or fail at a particular span lengths for reasons other than their putative verbal working memory abilities, such as the idiosyncratic difficulty of particular sentences (Conway, Kane, Bunting, Hambrick, Wilhelm, & Engle, 2005). Thus, even if a participant does not completely succeed at a given span length, performance at longer spans can still be revealing of their verbal working memory ability. Consequently, we presented the spans in a random order and required all participants to complete all spans.

Scoring was performed according to the partial-credit unit scoring procedure recommended by Conway and colleagues (2005). Trials on which participants remembered all of the items were scored as 1 point. Trials on which participants remembered some but not all items were scored as the proportion of items the participants did remember. This procedure makes use of all of the information available about participants’ performance and incorporates the fact that, for instance, remembering five out of six items represents somewhat better performance than remembering one out of six items. In a comparison of several scoring systems, Conway and colleagues found this procedure to produce the most normal distribution of scores.

Operation Span

The Operation Span task (Turner & Engle, 1989; Unsworth et al., 2005) was also intended to measure verbal memory and generally followed a similar procedure to the reading span task, except that the processing component of the task involved verifying the solutions to equations such as (7).

-

(7)

(6 × 4) − 2 = ?

In the processing portion of the Operation Span task, participants silently read the equation and pressed the space bar when finished. The equation was erased and a probe (such as 22) displayed on the screen; participants pressed one of two keys to judge whether or not the probe was the correct answer to the equation. Equations were generated according to the procedure of Unsworth et al. (2005). Specifically, the three numbers were always digits between 1 and 9. The first two digits were multiplied or divided together; then, a third digit was added or subtracted. These digits were selected semi-randomly such that the final answer was always a positive integer. Approximately half the test probes were true, and half were false. False probes were generated from the true answer by adding or subtracting a random number between one and nine, with the constraint that the resulting probe was always a positive integer.

As in the Reading Span procedure described above, participants first completed 15 equations in a calibration phase, which involved only the processing component of the task, in order to set the response deadline of the main task. The to-be-remembered items in the main task were the same set of letters used in the reading span task. Participants completed one practice trial at span length two and one at span length three, followed by three critical trials each at span lengths three to seven (for a total of 15 critical trials). As in the Reading Span task, the critical trials were presented in random order.

Listening Span

The Listening Span task generally followed the same procedure as the Reading Span task. However, rather than reading printed sentences aloud, participants listened to pre-recorded sentences spoken by a female native speaker of American English. The prompt to judge the sentence as true or false appeared immediately after the recorded sentence ended. Because the recorded sentence had an identical duration for all participants, calibration of the response deadline was based only on the latency to respond to the prompt. The to-be-remembered letters were also spoken aloud by the same recorded speaker. The task followed the same procedure as the Reading Span task in all other aspects.

Stimulus sentences were also taken from Stine and Hindman (1994) but comprised a different set of sentences than used in the Reading Span task. There were two practice trials at span length two, followed by two critical trials each at span lengths two to six, again presented in random order.

Letter Comparison

The Letter Comparison task followed Salthouse and Babcock (1991). Participants judged, as quickly as possible, whether two arrays of consonant letters were identical. Trials were presented in six blocks: two blocks comparing three-letter arrays, two blocks comparing six-letter arrays, and two blocks comparing nine-letter arrays. For practice, participants first completed two trials with three-letter arrays, in which one trial contained a match and the other contained a mismatch. Then, during each block, participants were given 20 seconds to complete as many comparison trials as possible, pressing one key for matching arrays and another for mismatching arrays. On mismatching trials, only one letter differed between the arrays. The dependent measure was the total number of correct answers provided within the duration of the critical blocks.

Pattern Comparison

The procedure of the Pattern Comparison task was the same as Letter Comparison, except that participants compared arrays of line segments rather than letters (Salthouse & Babcock, 1991). Blocks of three-, six-, and nine-segment arrays were presented in an order identical to that in the letter comparison task, with the dependent measure being the number of correct answers provided within this time.

Vocabulary

One word was displayed at the top of the screen in capital letters, followed by five other words (in lower case) and DON’T KNOW. Participants pressed one of the keys 1-5 on the keyboard to indicate which word was closest in meaning to the word at the top, or they pressed 6 if they did not know. There was one practice item, followed by two critical blocks of 24 items each. Participants had six minutes to complete each block; all participants completed the task within this time limit. All items were taken from the Extended Range Vocabulary Test of the Kit of Factor-Referenced Cognitive Tests (Ekstrom, French, Harman, & Dermen, 1976). Following the procedure recommended by Ekstrom et al. (1976), the dependent measure was the number of correct responses minus a penalty of 0.25 for each incorrect guess. Responses of DON’T KNOW were not penalized.

Author Recognition Test

The Author Recognition Test (ART) was developed as a measure of exposure to print materials (Stanovich & West, 1989). We used an updated and slightly lengthened version of the task developed by Acheson, Wells, and MacDonald (2008), which included the names of 65 authors’ names and 65 foil names, and adapted that version of the task for the computer. Participants saw names presented one at a time in a random order. For each name, the participant clicked one of two response buttons that appeared at the bottom of the screen reading Author and Don’t know. Participants were told that there was a penalty for guessing, so they were encouraged to only respond with Author if they were sure, and to otherwise choose Don’t know. Participants received one point for each correctly identified author, they lost one point for each foil name that they identified as an author, and there was no change to the score if they selected Don’t know.

North American Adult Reading Test

The North American Adult Reading Test (NAART) was developed as a way to estimate pre-morbid IQ in brain trauma patients (Blair & Spreen, 1989). Participants received a list of 61 words with irregular spellings, presented one at a time at increasing difficulty. The participants’ task was to correctly pronounce each word aloud. Correct pronunciations, determined by Merriam-Webster’s online dictionary, were given one point. Any incorrect response was given zero points with no partial credit. Table 1 displays inter-rater reliability for the NAART and for the other tasks discussed below that require manual scoring.

Table 1.

Inter-rater reliability for tasks requiring subjective scoring.

| Task | Nsubjects | Nratings | Match proportion | Cohen’s κ |

|---|---|---|---|---|

| NAART | 100 | 6100 | 0.884 | 0.765 |

| Stroop accuracy | 36 | 7200 | 0.980 | 0.816 |

| Pseudoword Repetition | 12 | 1056 | 0.863 | 0.848 |

| Blending Nonwords | 96 | 2304 | 0.954 | 0.900 |

| Phoneme Reversal | 107 | 2354 | 0.992 | 0.981 |

Note. Nsubjects is the number of subjects that were scored by two raters, and Nratings is the total number of trials with two ratings for these subjects. Match proportion is the proportion of Nratings that were the same across both raters. Cohen’s κ provides a correction for chance agreement among raters (Cohen, 1960).

Comparative Reading Habits (CRH) survey

Participants answered five questions comparing their own self-reported reading habits to what they perceive to be the norm for their fellow college students (Acheson et al., 2008).

Reading Time Estimate (RTE) survey

Participants estimated how many hours in a typical week they read various types of materials, including fiction, newspapers, and online materials (Acheson et al., 2008).

Stroop

Following Stroop (1935) and Brown-Schmidt (2009), the Stroop task consisted of two phases. In the first, no-conflict phase, participants named the color of squares displayed one at a time on the screen. The possible colors were red, blue, green, yellow, purple, and orange. Before beginning the task, participants viewed a screen that displayed all six of the possible colors and their names. During the task, participants spoke aloud the name of the color of the square and then pressed a key to advance to the next trial; the key press was used to record participants’ response time for the trial.

In the second, conflict phase, participants performed the same task, except that the colored squares were replaced by the English names of colors (e.g., red printed in blue). Again, participants’ task was to name the color that the word appeared in, rather than read the word aloud.

Each phase contained 100 trials. There was no practice block in either phase, but the first and last trials in each phase were excluded from analysis to account for extreme reaction times attributed to beginning and ending the task.

Participants’ responses were recorded and coded for accuracy. Trials were coded as errors if the participant produced the incorrect color name, did not name a color at all, produced a filled pause such as uh or um (Fraundorf & Watson, 2013; Maclay & Osgood, 1959), or began speaking an incorrect color name before correcting themselves (e.g. gree-blue). Accuracy was generally high even in the conflict phase (M = 94%), and all participants obtained accuracy of 74% or greater.

The dependent measure was the difference in median response time between the conflict (second) phase and the no-conflict (first) phase. Because response times were positively skewed, as is typical in response time tasks (e.g., Van Zandt, 2000), response times were first log-transformed before conflict scores were calculated. Only correct trials were analyzed.

Antisaccade

Following the procedures of Kane, Bleckley, Conway, and Engle (2001), participants needed to look in the opposite direction of an anti-predictive cue in order to identify a letter briefly flashed on the opposite side of the screen. Each trial began with a fixation cross that lasted 200, 600, 1000, 1400, 1800, or 2200 ms; this duration varied across trials in order to prevent participants from anticipating the onset of the target. A cue (the equality sign =) then flashed one line of text below the fixation point, at either 11.3 degrees of visual angle to the left or to the right. The cue was visible for 100 ms, disappeared for 50 ms, and reappeared for 100 ms. The target display was then presented at the opposite location (e.g., if the cue appeared on the left, the target appeared on the right) on the same line of text as the fixation point. The target display consisted of a forward mask (the letter H) for 50 ms, then the target letter itself (B, P, or R) for 100 ms, and then a backward mask (the numeral 8). The backward mask remained on the screen until participants indicated the identity of the target by pressing the 1, 2, or 3 key on the keyboard. All of the characters subtended 2 degrees of visual angle vertically on the screen. There was a 400 ms interval between trials.

Participants first completed 18 trials in a response-mapping phase to practice the mapping between letters and response keys. In this phase, no cue appeared, and the masks and target appeared in the center of the screen. The response mapping was followed by 52 practice trials of the full task. During the practice trials only, participants received feedback in the form of a 175 Hz tone for 500 ms in response to incorrect responses. There was no feedback for a correct response. The practice trials were followed by 72 critical trials. Each possible combination of target identity (B, P, R), target location, and fixation duration was represented twice, and the trials were presented in random order. The dependent measure was the proportion of trials in the critical block on which participants responded correctly.

Flanker

Participants completed a version of the “flankers” response competition paradigm (Eriksen & Eriksen, 1974; see Eriksen, 1995 for review) in which a visually-presented target item is flanked either by congruent items that facilitate correct responding or by incongruent items that inhibit correct responding. In this particular implementation, participants indicated the direction of an arrow that was flanked by four arrows of the same (< < < < <) or different (> > < > >) direction. The incongruent items are thought to activate the incorrect response, making selecting the correct response more difficult, as reflected in longer response latencies (Eriksen, 1995). Similar to the Stroop analysis, the dependent measure was the difference between the median of log-transformed reaction times in the incongruent versus congruent trials.

Pseudoword Repetition

Following Gupta (2003), participants listened to recordings of pseudowords that were phonotactically legal in English (e.g., ginstabular), spoken by a female native speaker of American English. After each recording ended, a green dot appeared on the center of the screen and participants attempted to repeat the pseudoword they had just heard. When participants had finished repeating the word, they pressed a key and, after a 100 ms delay, the next trial began. To ensure that participants attempted to produce each word, participants could not end the trial before at least 1000 ms had elapsed; this time point was signaled by the dot on the screen turning blue. There were four critical blocks, each with 18 words: six two-syllable words, six four-syllable words, and six seven-syllable words. Before the main task, participants also completed six practice trials, two at each syllable length. Materials were taken from Gupta (2003).

Participants were awarded one point for each correctly repeated syllable from the onset of the word; correctly repeated syllables that occurred after an erroneous syllable did not earn points. For example, repeating ginstabular as ginstabcular would score two points; the first two syllables were repeated correctly, but the fourth syllable, while correct, occurred after an error in the third syllable. Some trials (7%) could not be coded because of problems in the recordings, usually because the participant pressed the key before completing the word; for this reason, the dependent measure used was the proportion of points earned out of the points possible on the coded trials only.

Blending Nonwords

Blending Nonwords is a task from the Comprehensive Test of Phonological Processing (CTOPP; Wagner et al., 1999). On each trial, participants heard a list of phonemes or syllables and were asked to combine these elements into one pseudoword, or “nonword”. For instance, if the participants heard/h/,/ε/, and/t/, they would need to produce/hεt/as one word. The number of elements ranged from two to eight. Participants were given six practice trials and eighteen critical trials, and the dependent measure was the proportion of correct responses. Following the CTOPP procedure, responses were scored as either fully correct or incorrect, with no partial credit.

Phoneme Reversal

In the Phoneme Reversal task (CTOPP; Wagner et al., 1999), participants heard a pseudoword and were asked to repeat the word and then pronounce it backwards, creating a real English word. For instance, if the participants heard/stu:b/, they would need to produce the word boots. Participants were given four practice trials and eighteen critical trials, and the dependent measure was the proportion of correct responses. Following the CTOPP procedure, responses were scored as either fully correct or incorrect, with no partial credit.

Results

As we reviewed above, interpreting any relationship between self-paced reading times and the other constructs requires establishing that the measures are reliable (consistent). It is also critical to demonstrate that the measures are valid (measuring what they intend to measure). We thus first discuss the reliability and validity of, in turn, (a) the measures of verbal working memory, perceptual speed, inhibitory control, language experience, and phonological ability and (b) individual differences in syntactic processing in the self-paced reading task. Finally, we turn to whether any individual differences in syntactic processing—should we observe any—can be explained by the other cognitive constructs.

Individual Differences

Mean performance on all 16 individual difference measures across the five domains is summarized in Table 2.

Table 2.

Summary of task performance on measures of individual differences.

| Construct | Task | Measure | Min. | Mean | Max. | SD |

|---|---|---|---|---|---|---|

| Language experience | ART | Number correct with penalty | −9 | 10 | 47 | 11.570 |

| ERVT | 1 | 17.180 | 36.750 | 7.740 | ||

| CRH | Sum of Likert responses | 5 | 22 | 33 | 5.326 | |

| NAART | Number correct | 0.283 | 0.560 | 0.885 | 0.125 | |

| RTE | Hours per week | 5 | 20 | 63 | 10.695 | |

| Verbal | Listening | Score with | 5.633 | 8.943 | 10 | 0.954 |

| working | Operation | partial credit | 1.852 | 10.588 | 15 | 3.435 |

| memory span | Reading | 2.367 | 6.749 | 10 | 1.757 | |

| Inhibitory control | Anti. Acc. | Proportion correct responses (all conflict trials) | 0.264 | 0.717 | 0.986 | 0.192 |

| Anti. RT | Log median reaction time for correct responses (all conflict trials) | −2.043 | −0.559 | 0.270 | 0.328 | |

| Flanker | Difference in log | −0.254 | 0.151 | 0.344 | 0.069 | |

| Stroop | median reaction time of correct responses for conflict and no-conflict trials | −0.153 | 0.193 | 0.514 | 0.135 | |

| Phonological ability | Pseudo. Rep. | Proportion correct | 0.487 | 0.801 | 0.949 | 0.077 |

| BNW | 0.167 | 0.646 | 1 | 0.176 | ||

| PR | 0.182 | 0.687 | 1 | 0.176 | ||

| Perceptual | Letter | Number correct | 41 | 73 | 405 | 34.305 |

| speed | Pattern | within time limit | 46 | 84 | 398 | 31.378 |