Abstract

The aim of this study was to assess the parameters of choice, such as duration, intensity, rate, polarity, number of sweeps, window length, stimulated ear, fundamental frequency, first formant, and second formant, from previously published speech ABR studies. To identify candidate articles, five databases were assessed using the following keyword descriptors: speech ABR, ABR-speech, speech auditory brainstem response, auditory evoked potential to speech, speech-evoked brainstem response, and complex sounds. The search identified 1288 articles published between 2005 and 2015. After filtering the total number of papers according to the inclusion and exclusion criteria, 21 studies were selected. Analyzing the protocol details used in 21 studies suggested that there is no consensus to date on a speech-ABR protocol and that the parameters of analysis used are quite variable between studies. This inhibits the wider generalization and extrapolation of data across languages and studies.

Keywords: Auditory brainstem response, speech, speech evoked ABR, central auditory processing, speech perception

INTRODUCTION

The ability to communicate is essential to the quality of life of humans and hearing is part of this process. Exposure to auditory stimuli, as well as the anatomical and functional integrity of the peripheral and central auditory system, is a pre-requisite for the acquisition and development of normal language.

Auditory-evoked potentials (AEPs) offer an objective way to assess how information is processed in the brainstem and the auditory cortex. The auditory brainstem response (ABR) is an AEP that carries information about the electrical activity of the auditory brainstem. Using ABR, it is possible to evaluate the functionality of the auditory system, estimate electrophysiological hearing levels, detect neural signal anomalies, and better evaluate clinical cases in which the available audiological information is incomplete [1, 2].

Until recently, ABR testing was conducted using only non-verbal stimuli, such as clicks, chirps, or tone-bursts. Although transient-stimulus ABR protocols are accepted as the clinical routine today, the exact mechanisms of verbal coding and decoding in the brainstem are not well described. To gain a better understanding of these mechanisms, recent instruments offer speech-evoked ABR protocols. Using verbal stimuli in the ABR electrophysiological evaluation may reveal how a verbal stimulus is processed in the brainstem and which structures participate in this process [3].

Speech ABR protocols use syllables as stimuli, where various combinations of consonants and vowels are mixed [4]. According to Rana and Barman, [5] consonant discrimination is performed by three fundamental components: (i) pitch (a source characteristic conveyed by the fundamental frequency); (ii) sound formants (filter characteristics conveyed by the selective enhancement and attenuation of harmonics); and (iii) the timing of major acoustic landmarks. All of these components are important to speech perception, communication, and proper development of linguistic skills.

The perception of vocal stimuli appears to begin in the brainstem, which significantly contributes to the important processes of reading and speech acquisition [6–8]. A practical method to investigate the characteristics of these processes is to use verbal stimuli for the estimation of hearing threshold to allow the identification of subtle auditory processing complications, which can be associated with various communication skills. Usually these deficits are not clearly apparent in ABR responses from transient stimuli; thus, speech ABR assessment offers a potential advantage in early identification of auditory processing impairments in very young children [9]. Speech ABR can be used as an objective measure of hearing function, since it is not heavily influenced by variables, such as attention, motivation, alertness/fatigue, and co-existing disorders, including language and learning or attention deficits, which often influence behavioral assessments [10].

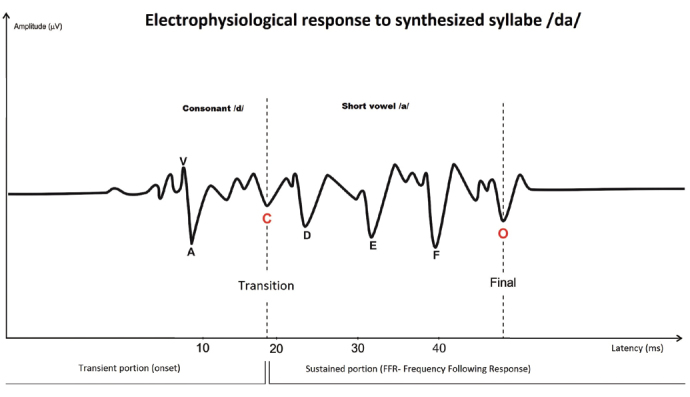

The computer-synthesized syllable /da/, which was designed by Skoe and Kraus [4], is the speech ABR stimulus most widely used in clinical practice. Synthesized speech offers better control of the acoustic parameters of the stimulus and ensures the stability and quality of the signal that is presented to the patient [11]. The stimulus is composed of the consonant /d/ and the short vowel /a/. Stimulation with the /da/ syllable elicits a response characterized by seven wave peaks called V, A, C, D, E, F, and O. Waves V and A reflect the onset of the response; wave C the transition region; waves D, E, and F the periodic region (frequency-following response); and wave O the offset of the response [4, 12–14]. The human brainstem frequency-following response (FFR) registers phase-locked neural activity to cyclical auditory stimuli. Galbraith et al. [15] showed that the FFR can be elicited by word stimuli, and that when speech-evoked FFRs are played back as auditory stimuli, they are heard as intelligible speech.

Speech ABR response appears to mature by the age of 5 years [16]; thus, it can be used in young children contributing to the differential diagnosis of diseases presenting similar symptoms [2]. According to Sinha and Basavaraj, [17] the major uses of speech ABR include the diagnosis and categorization of children with learning disabilities in different subgroups; assessment of the effects of aging on the central auditory processing of speech; and assessment of the effects of central auditory hearing deficits in subjects using hearing aids or cochlear implants.

Speech ABR is an emerging field, and several laboratories in different countries are committed to investigating the clinical boundaries of this procedure. However, there is no consensus regarding a particular clinical protocol or a particular set of values for the protocol settings. To support a possible proposal for such a consensus, the present study analyzed the parameters that have been used clinically in various speech ABR protocols through a systematic review covering a 10-year period. The review collected the following information:

Stimulus and response characteristics (duration, intensity, rate, polarity, sweeps, display window, stimulated ear, F0, first formant, and second formant)

Condition of the patient at the time of assessment

Software and equipment used

MATERIALS AND METHODS

This study is based on a systematic literature review conducted from June 2015 to September 2015. Articles were selected from queries from the following databases:

US National Library of Medicine National Institutes of Health (PubMed)

Scientific Electronic Library Online (Scielo)

Latin American and Caribbean Health Sciences (Lilacs)

The Search Engine Tool for Scientific (Scopus)

ISI Web of Science (Web of Science)

To define the search terms, the broader term “speech-ABR” was chosen, since it includes both transient and sustained responses from the auditory brainstem and midbrain [4]. Terms related only to sustained responses (i.e., FFR) were not considered. The keywords (query terms) were restricted to English, according to the Medical Subject Headings (MeSH). The query terms are shown below with the Boolean operator “OR”.

Speech OR ABR

Speech OR auditory OR brainstem OR response

Auditory OR evoked OR potential OR speech

“Speech-evoked” OR brainstem OR response

Complex OR sounds

The study was conducted by two reviewers independently. Eventual disagreements were resolved through discussion. The article selection procedure followed the inclusion and exclusion criteria provided below:

Inclusion criteria

Articles published in the last 10 years (2005–2015)

Original articles

Exclusion criteria

Experiments on animals

Case studies

Literature reviews

Editorials

Articles not published in English

Studies using in-house hardware or software

The following data were collected from each article:

Type of equipment

Type of software

Stimulus and response characteristics (duration, intensity, rate, polarity, sweeps, display window, stimulated ear, F0, first formant, and second formant)

Condition of the patient during the assessment

RESULTS

The combined queries identified 1288 studies, which were filtered in three consecutive phases as described below:

Elimination of duplicates generated from overlapping data across different databases (n = 887)

Elimination of articles referring to experimental animal studies, non-English articles, case studies, editorials, or literature reviews (n = 377)

Elimination after reading the complete article (n = 3)

Overall, 21 articles were included in this review. The data extracted from each article are presented in alphabetical order (Table 1).
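The screening arithmetic can be checked with a short sketch. The counts are taken from the three elimination phases listed above; the variable names are illustrative only and are not part of the original study.

```python
# Study counts reported for the three screening phases.
identified = 1288
excluded = {
    "duplicates across databases": 887,
    "animal, non-English, case study, editorial, or review": 377,
    "eliminated after full-text reading": 3,
}

# Subtract each phase in turn and report how many records remain.
remaining = identified
for reason, count in excluded.items():
    remaining -= count
    print(f"after removing {reason}: {remaining}")

included = remaining
```

Under these counts, the number of records left after the third phase matches the 21 studies reported in the abstract and listed in Table 1.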

Table 1.

Description of the analysis parameters used in the speech ABR articles

| Article | No. | Equipment | Software | Stimulated ear | Condition | Stimulus | Duration (ms) | Number of sweeps | Intensity | Polarity | Rate (ms) | Window (ms) | F0 (Hz) | F1 (Hz) | F2 (Hz) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Anderson et al. 2013 [33] | 1 | Biologic Navigator Pro | - | RE | - | /da/ | 40 | 2 × 3000 | 80.3 dB SPL | Alternate | 10.9 | 85.33 | 103–125 | 220–720 | 1700–1240 |
| Ahadi et al. 2014 [19] | 2 | Biologic Navigator Pro | BioMARK | RE/LE/BIN | - | /da/ | 40 | 2 × 3000 | 80 dB SPL | Alternate | 10.9 | 85.33 | 103–125 | 220–720 | 1700–1240 |
| Akhoun et al. 2008 (clinical) [32] | 3 | Centor USB (Racia-Alvar) | - | RE | - | /ba/ | - | 2 × 1500 | 60 dB SL | Alternate | 11.1 | 80 | - | 700 | 1200 |
| Akhoun et al. 2008 (journal) [35] | 4 | Nicolet | - | RE/LE | - | /ba/ | 60 | 2 × 1500 | - | Alternate | 11.1 | 80 | - | - | - |
| Bellier et al. 2015 [28] | 5 | BrainAmp EEG | Neurobehavioral Systems | BIN | Watch a movie | /ba/ | 180 | 3000 | 80 dB SPL | Alternate | 2.78 | - | 100 | 400–720 | 1700–1240 |
| Clinard et al. 2013 [20] | 6 | Biologic Navigator Pro | BioMARK | RE | - | /da/ | 40 | 2 × 3000 | 80 dB SPL | Alternate | 10.9 | 54 | 103–125 | 220–720 | 1700–1240 |
| Elkabariti et al. 2014 [23] | 7 | Biologic Navigator Pro | BioMARK | RE/LE | - | /da/ | 40 | 2 × 3000 | 80 dB SPL | Alternate | 10.9 | 60 | - | - | - |
| Fujihira et al. 2015 [31] | 8 | EEG 1200, Nihon Kohden | - | RE | Sleep or awake | /da/ | 170 | 2 × 2000 | 70 dB SPL | Condensation and rarefaction | - | - | 100 | 400–720 | 1700–1240 |
| Hornickel et al. 2012 [25] | 9 | NeuroScan Acquire 4.3 | NeuroScan Stim 2 | RE | Allowed to watch a movie | /da/ | 170 | 2 × 3000 | 80 dB SPL | Alternate | - | 230 | 100 | 400–720 | 1700–1240 |
| Hornickel et al. 2013 [26] | 10 | NeuroScan Acquire 4.3 | NeuroScan Stim 2 | RE | Allowed to watch a movie | /da/ | 170 | 2 × 3000 | 80 dB SPL | Alternate | - | 230 | 100 | 400–720 | 1700–1240 |
| Karawani et al. 2010 [18] | 11 | Biologic Navigator Pro | BioMARK | RE | - | /da/ | 40 | 2 × 3000 | 80 dB SPL | Alternate | 10.9 | 85.33 | 103–125 | 220–720 | 1700–1240 |
| Kouni et al. 2013 [39] | 12 | - | - | - | - | /baba/ | - | 3 × 1000 | 80 dB SPL | Alternate | - | 60 | - | - | - |
| Mamo et al. 2015 [30] | 13 | Neuroscan 4.3 | - | Ear with best threshold | Sleep or watch a movie | /da/ | 170 | 3 × 2000 | 80 dB SPL | Alternate | 3.9 | - | 100 | 400–720 | 1700–1240 |
| Rana et al. 2011 [5] | 14 | Biologic Navigator Pro | BioMARK | RE/LE | - | /da/ | 40 | 2 × 3000 | 80 dB SPL | Alternate | 9.1 | 74.67 | 103–125 | 220–720 | 1700–1240 |
| Rocha et al. 2010 [34] | 15 | Audera | - | RE | - | /da/ | 40 | 2 × 1000 | 75 dB SPL | - | 11 | 50 | - | - | - |
| Rocha-Muniz et al. 2012 [21] | 16 | Biologic Navigator Pro | BioMARK | RE | - | /da/ | 40 | 3 × 1000 | - | - | 10.9 | 74.67 | 103–125 | 455–720 | 1700–1240 |
| Rocha-Muniz et al. 2014 [22] | 17 | Biologic Navigator Pro | BioMARK | RE | - | /da/ | 40 | 3 × 1000 | - | Alternate | 10.9 | 74.67 | 103–125 | 455–720 | 1700–1240 |
| Sinha et al. 2010 [17] | 18 | Intelligent Hearing Systems | - | MON | - | /da/ | 40 | 2000 | 80 dB nHL | Alternate | 7.1 | - | 103–121 | 220–729 | - |
| Song et al. 2006 [29] | 19 | Neuroscan | - | RE | Allowed to watch a movie | /da/ | 40 | 3 × 1000 | 80.3 dB SPL | Alternate | 11.1 | 70 | - | - | - |
| Song et al. 2011 [27] | 20 | Study 1: NeuroScan Acquire 4.3; Study 2: Biologic Navigator Pro | Study 1: NeuroScan Stim 2; Study 2: BioMARK | Study 1: RE; Study 2: RE | Allowed to watch a movie | Study 1: /da/; Study 2: /da/ | Study 1: 170; Study 2: 40 | Study 1: 6300; Study 2: 2 × 3000 | Study 1: 80.3 dB SPL; Study 2: 80.3 dB SPL | Study 1: Alternate; Study 2: Alternate | Study 1: 4.35; Study 2: 10.9 | Study 1: 40; Study 2: 74.67 | Study 1: 100; Study 2: 103–125 | Study 1: 400–720; Study 2: - | Study 1: 1700–1240; Study 2: - |
| Tahaei et al. 2014 [24] | 21 | Biologic Navigator Pro | BioMARK | RE | - | /da/ | 40 | 2 × 3000 | 80 dB SPL | Alternate | 10.9 | 85.33 | 105–125 | 455–720 | 1700–1222 |

Legend: RE: right ear; LE: left ear; BIN: binaural; MON: monaural; SPL: sound pressure level; SL: sensation level; nHL: normal hearing level; ABR: auditory brainstem response; dB: decibel; 2 × 3000: two blocks of 3000 sweeps each; -: not reported

DISCUSSION

Hardware & Software

The first parameter extracted from the data refers to the hardware used for speech ABR data acquisition and storage. Navigator Pro (Biologic, Natus, Pleasanton, California, USA) and NeuroScan (Compumedics, Inc.; Charlotte, NC, USA) were the most cited, appearing in 42.9% and 19% of the articles, respectively.

Regarding the software packages, 38.1% of the studies used BioMARK (Biological Marker of Auditory Processing, Natus Medical, Inc.) [5, 18–24], while 9.5% of the studies used Neuroscan Stim2 (Compumedics) [25, 26]. Song et al. [27] utilized both pieces of equipment (Biologic Navigator Pro and Neuroscan) and both software packages (NeuroScan Stim 2 and BioMARK).
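As a reading aid, the percentages quoted throughout this discussion correspond to study counts out of the 21 included articles. The sketch below converts counts to percentages; the counts are tallied here from Table 1 (an assumption of this sketch, with Song et al. [27] kept separate because it used both systems), and the function name `share` is hypothetical.

```python
TOTAL_STUDIES = 21  # articles included in the review

def share(count, total=TOTAL_STUDIES):
    """Return count/total as a percentage rounded to one decimal place."""
    return round(100 * count / total, 1)

# Counts tallied from Table 1 for this illustration.
tallies = {
    "Biologic Navigator Pro": 9,
    "NeuroScan": 4,
    "BioMARK": 8,
    "NeuroScan Stim 2": 2,
}

for label, count in tallies.items():
    print(f"{label}: {count}/{TOTAL_STUDIES} = {share(count)}%")
```

The same conversion applies to the other parameters discussed below, for example 13 of 21 studies for right-ear stimulation.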

Condition of the patient

The majority of articles (66.7%) did not describe the patient’s condition at the time of assessment; only seven studies (33.3%) provided this information. In five studies (23.8%), the patient was allowed to watch a movie with subtitles (no sound) or with a decreased sound intensity [28, 29]. Mamo et al. [30] offered the subjects the choice to sleep or to watch a movie. Fujihira and Shiraishi [31] instructed the subjects to relax and not move the body to minimize myogenic artifacts.

Laterality effects

Most studies (61.9%) performed speech ABR testing only in the right ear [18, 20–22, 24–27, 29, 31–34]. This choice is explained by the fact that the right ear has an advantage in the encoding of speech because of the contralateral projection of information to the left hemisphere. Sinha and Basavaraj [17] presented the stimulus monaurally, without specifying the stimulated ear. Mamo et al. [30] reported that the stimulus was presented to the ear with better hearing.

In 14.3% of the articles, the assessment was performed monaurally on both ears [5, 23, 35]. Data in the literature suggest that even if right ear stimulation can contribute to better speech processing, left ear stimulation has significant effects on the same processes; however, the corresponding speech ABR responses are characterized by lower amplitudes.

Monaural vs binaural stimulation

Skoe and Kraus [4] suggested that monaural stimulation is the preferred protocol in children, while binaural stimulation is more realistic than monaural stimulation in adults. Binaural hearing conveys information about everyday listening environments, such as differences in the timing and intensity of a sound at the two ears (interaural time and interaural level differences) and the location of sound sources [36]. Bellier et al. [28] showed better results when the stimulus was presented binaurally. Ahadi et al. [19] evoked responses with three different protocols: monaural right, monaural left, and binaural. The data suggested that the amplitude of the speech ABR response depends on the stimulation modality (monaural, binaural, etc.) and that binaural stimulation permits a better response without significant changes in the response acquisition time.

Polarity of the stimulus

Polarity was found to be the most consistent parameter across speech ABR studies. In 90.5% of the studies, the use of an alternating stimulus polarity to collect speech ABR responses was reported; this information was missing in only two studies [21, 34]. The consensus on the use of alternating polarity is probably related to the fact that this stimulus modality minimizes artifacts and suppresses the cochlear microphonic [37]. However, data in the literature show that the stimulus polarity does not affect the latency of the various peaks of speech ABR, and in this context, it may be preferable to record data using a single rather than alternating polarity sequences [38].
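The rationale for alternating polarity can be illustrated with an idealized sketch. It assumes, purely for illustration, that a recorded sweep is the sum of a polarity-invariant neural component and a component that inverts with the stimulus (such as the cochlear microphonic or stimulus artifact). The sampling rate, amplitudes, and frequencies are hypothetical values, not taken from any of the reviewed studies, and real responses also contain neural components that follow the stimulus fine structure and are attenuated by this averaging.

```python
import math

FS_HZ = 10_000      # illustrative sampling rate
N_SAMPLES = 400     # 40 ms at FS_HZ

def neural(i):
    """Polarity-invariant component (hypothetical 100 Hz, amplitude 0.2)."""
    return 0.2 * math.sin(2 * math.pi * 100 * i / FS_HZ)

def artifact(i):
    """Component that follows the stimulus waveform (hypothetical 700 Hz)."""
    return 1.0 * math.sin(2 * math.pi * 700 * i / FS_HZ)

def sweep(polarity):
    """One idealized sweep; polarity is +1 (condensation) or -1 (rarefaction)."""
    return [neural(i) + polarity * artifact(i) for i in range(N_SAMPLES)]

condensation = sweep(+1)
rarefaction = sweep(-1)

# Averaging the two polarities cancels the inverting component.
averaged = [(c + r) / 2 for c, r in zip(condensation, rarefaction)]

residual = max(abs(a - neural(i)) for i, a in enumerate(averaged))
print(f"largest residual artifact after averaging: {residual:.2e}")
```

In this toy model the inverting component cancels to within floating-point error, while the polarity-invariant component is preserved.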

Stimulus presentation rate

The most frequently reported stimulus presentation rate was 10.9 ms, in 38.1% of the papers [18–24, 33], followed by 11.1 ms in 14.3% of the articles [29, 32, 35]. Rocha et al. [34] used a value of 11 ms. Song et al. [27] used two presentation rates: 4.35 ms and 10.9 ms. In 19% of the studies, specific information on the stimulus presentation rate was not included [25, 26, 31, 39].

Length of the stimulus

There is an inverse relationship between the presentation rate and the stimulus duration; hence, the longer the stimulus, the lower the presentation rate. The most frequently mentioned durations were 40 ms in 57.1% of the papers, followed by 170 ms in 19% of the papers [5, 17–26, 29–31, 33, 34]. Song et al. [27] used both stimulus durations and concluded that both short (40 ms) and long (170 ms) stimuli reliably reflect the coding of speech in the brainstem.
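The inverse relationship between rate and duration can be made concrete with a small sketch. It assumes that the reported rate values (for example 10.9 and 4.35) denote stimuli per second, the usual convention for presentation rate; that reading is an assumption of this sketch, since Table 1 labels the column in ms. The function names and the 50 ms minimum gap are hypothetical.

```python
def silent_gap_ms(rate_per_s, duration_ms):
    """Silence between the end of one stimulus and the onset of the next."""
    onset_to_onset_ms = 1000.0 / rate_per_s
    return onset_to_onset_ms - duration_ms

def max_rate_per_s(duration_ms, min_gap_ms):
    """Highest rate that still leaves min_gap_ms of silence after each stimulus."""
    return 1000.0 / (duration_ms + min_gap_ms)

# Short and long /da/ stimuli with the rates paired with them in Song et al. [27].
print(round(silent_gap_ms(10.9, 40), 1))   # gap after the 40 ms stimulus
print(round(silent_gap_ms(4.35, 170), 1))  # gap after the 170 ms stimulus

# With a fixed (hypothetical) 50 ms gap, a longer stimulus forces a lower rate.
print(round(max_rate_per_s(40, 50), 2), round(max_rate_per_s(170, 50), 2))
```

Under this assumption, both stimulus lengths leave a silent interval of roughly 50 to 60 ms, which is consistent with the longer stimulus being paired with the lower rate.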

The values for the recording window length were 40–230 ms; however, most studies used windows in the 70–85 ms range. In 19% of the studies, no information on the window was included [17, 28, 30, 31].

Stimulus intensity

Most studies (66.7%) used a stimulus with an intensity around 80 dB sound pressure level (SPL); however, other values, such as 80 dB nHL, 75 dB SPL, 70 dB SPL, and 60 dB SPL, were also used [5, 18–20, 23–30, 33, 39]. These findings demonstrate that the speech stimulus, which is considered to be a complex sound, is presented in the 60–85 dB SPL range, as in the normal communication process. Therefore, the evaluation should be performed at an audible stimulus intensity that is comfortable for the patient. However, one should not rule out the possibility of a speech ABR evaluation at lower stimulus intensities, depending on the purpose of the research. Akhoun et al. [32] observed the response time of the onset and offset portions of the speech ABR while the stimulus intensity was varied in steps of 10 dB from 0 dB SPL to 60 dB SPL. Furthermore, it was reported that, in accordance with the behavior of the click-evoked ABR, speech ABR responses present an increased latency associated with decreased stimulus intensity.

One vs more consonant-vowels in the stimulus

In 95.2% of the articles [5, 17–35], a single consonant-vowel (CV) syllable was used as the stimulus; only Kouni et al. [39] used a stimulus with two consonant-vowel syllables, /baba/. According to the authors, this stimulus was chosen because of the familiarity of Greek individuals with the word (baba = dad). A majority of the studies (80.9%) used the single syllable /da/, which is justified for several reasons: it is considered to be a universal syllable, allowing its use in individuals of different nationalities, and it presents an opportunity to evaluate cortical responses from bilingual children. Three articles [28, 32, 35] used the syllable /ba/ as the stimulus. Akhoun et al. [32] suggested that /ba/ can be equally effective in speech ABR assessment because it maintains the characteristics of neural responses, i.e., the initial portion representing the consonant /b/ and the frequency-following response representing the vowel /a/.

Fundamental frequency and formants of the stimulus

According to the literature, the F0 value has to lie between 80 Hz and 300 Hz [4]. In 38% of the articles [5, 18–22, 33], the F0 used was in the 103–125 Hz range. Speech may contain spectral information above 10.0 kHz; therefore, the speech stimulus to be used in the evaluation of speech ABR should be selected carefully to ensure that the responses encoded in the brainstem can be captured. However, the consonant to vowel distinction usually occurs below 3.0 kHz [4].

Regarding the parameters of the first formant (F1), F1 was described in the 400–720 Hz and 220–720 Hz ranges in 28.6% and 28.6% of the studies, respectively. For the second formant (F2), the most commonly reported values were in the 1700–1240 Hz range, in 61.9% of the studies.

Stimulus sweeps

Transient and speech stimuli differ in the number of sweeps they require. For transient stimuli, it is well established that an average of 1000–2000 sweeps will provide solid ABR responses. This is not the case with speech stimuli; to generate robust and replicable responses, an average of 4000–6000 sweeps is required [4]. In 47.6% of the papers [5, 18–20, 23–26, 30, 33], speech ABR responses were elicited by averaging a total of 6000 sweeps, in two blocks containing 3000 sweeps each. However, ABR responses were generated from <4000 sweeps, <3000 sweeps, and only 2000 sweeps in 42.3%, 33.3%, and 9.5% of the articles, respectively.
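The effect of the sweep count can be sketched with the standard signal-averaging model. This model assumes that the evoked response is identical on every sweep and that the background noise is uncorrelated across sweeps, so the residual noise falls with the square root of the number of sweeps; these are idealizations, and the function names below are hypothetical.

```python
import math

def residual_noise(noise_sd, n_sweeps):
    """Noise standard deviation remaining after averaging n_sweeps sweeps."""
    return noise_sd / math.sqrt(n_sweeps)

def snr_gain_db(n_from, n_to):
    """Amplitude SNR change, in dB, when going from n_from to n_to sweeps."""
    return 20 * math.log10(math.sqrt(n_to / n_from))

# Residual noise relative to a single sweep (noise_sd = 1.0).
for n in (1000, 2000, 4000, 6000):
    print(n, round(residual_noise(1.0, n), 4))

# Gain from averaging 6000 sweeps instead of 2000.
print(round(snr_gain_db(2000, 6000), 2), "dB")
```

Under these assumptions, tripling the number of sweeps from 2000 to 6000 improves the signal-to-noise ratio by a factor of about 1.7 (a little under 5 dB), at the cost of a threefold longer recording.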

CONCLUSION

The analysis of the speech ABR protocol parameters used within the last decade has underlined the lack of consensus on a fixed procedural setup. This may come as a surprise, considering that FFR data to sound stimuli were generated by Daly et al. [40] as early as 1976. Clinical studies using speech ABR assessment over the last decade have set many protocol variables quite differently. The data of this review suggest that standardization procedures are essential to extend the current findings on a global scale and across several different languages.

The majority of studies have used the following parameters. These can be the basis of a proposed protocol consensus:

Stimulus = syllable /da/

A stimulus duration of 40 ms

Right ear monaural stimulation

Alternating stimuli with an amplitude of 80 dB SPL

A stimulus presentation rate of 10.9 ms

A visualization window of 70–85 ms

Averaged speech-ABR responses composed of 6000 sweeps under the control of the BioMARK™ software.
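The parameter set listed above can be written down as a small configuration object. This is only a sketch of how such a proposed protocol might be recorded for comparison between studies; the class and field names are hypothetical, the rate is stored with the value as reported in this review, and the two-block split follows the 2 × 3000 sweep scheme described in the Discussion.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SpeechABRProtocol:
    """Most frequently reported speech ABR settings (illustrative container)."""
    stimulus: str = "/da/"
    duration_ms: float = 40.0
    ear: str = "right"
    mode: str = "monaural"
    polarity: str = "alternating"
    intensity_db_spl: float = 80.0
    rate: float = 10.9                 # value as reported in the review
    window_ms: tuple = (70.0, 85.0)    # range of visualization windows
    total_sweeps: int = 6000
    blocks: int = 2
    software: str = "BioMARK"

    def sweeps_per_block(self):
        """Number of sweeps averaged in each recording block."""
        return self.total_sweeps // self.blocks

protocol = SpeechABRProtocol()
print(protocol)
print("sweeps per block:", protocol.sweeps_per_block())
```

A frozen dataclass is used so that the settings cannot be modified accidentally once a protocol has been defined.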

Figure 1.

Representation of the electrophysiological response to the synthesized syllable /da/ from a normal-hearing subject; personal file of the investigator, from an assessment performed with BioMARK™ software

Footnotes

Peer-review: Externally peer-reviewed.

Author contributions: Concept - M.D.S., M.F.C.S.; Design - M.D.S., M.F.C.S., S.H.; Supervision - M.D.S., M.F.C.S., S.H., P.H.S.; Resource - M.D.S., C.D., T.A.D.; Materials - M.D.S., C.D.; Data Collection and/or Processing - M.D.S., C.D., T.A.D., L.R.B.; Analysis and/or Interpretation - M.D.S., C.D., M.F.C.S.; Literature Search - M.D.S., C.D., T.A.D.; Writing - M.D.S., M.F.C.S., S.H.; Critical Reviews - M.D.S., M.F.C.S., S.H.

Conflict of Interest: No conflict of interest was declared by the authors.

Financial Disclosure: The authors declared that this study has received no financial support.

REFERENCES

- 1. Sanfins A, Plancha CE, Overstrom EW, Albertini DF. Meiotic spindle morphogenesis in in vivo and in vitro matured mouse oocytes: insights into the relationship between nuclear and cytoplasmic quality. Hum Reprod. 2004;19:2889–99. doi: 10.1093/humrep/deh528.

- 2. Sanfins MD, Colella-Santos MF. A review of the Clinical Applicability of Speech-Evoked Auditory Brainstem Responses. J Hear Science. 2016;6:9–16.

- 3. Blackburn CC, Sachs MB. The representation of steady-state vowel /eh/ in the discharge patterns of cat anteroventral cochlear nucleus neurons. J Neurophysiol. 1990;63:1191–212. doi: 10.1152/jn.1990.63.5.1191.

- 4. Skoe E, Kraus N. Auditory brainstem response to complex sounds: a tutorial. Ear Hear. 2010;31:302–24. doi: 10.1097/AUD.0b013e3181cdb272.

- 5. Rana B, Barman A. Correlation between speech-evoked auditory brainstem responses and transient evoked otoacoustic emissions. J Laryngol Otol. 2011;125:911–6. doi: 10.1017/S0022215111001241.

- 6. Hornickel J, Skoe E, Nicol T, Zecker S, Kraus N. Subcortical differentiation of stop consonants relates to reading and speech-in-noise perception. Proc Natl Acad Sci U S A. 2009;106:13022–7. doi: 10.1073/pnas.0901123106.

- 7. Dhar S, Abel R, Hornickel J, Nicol T, Skoe E, Zhao W, et al. Exploring the relationship between physiological measures of cochlear and brainstem function. Clin Neurophysiol. 2009;120:959–66. doi: 10.1016/j.clinph.2009.02.172.

- 8. Basu M, Krishnan A, Weber-Fox C. Brainstem correlates of temporal auditory processing in children with specific language impairment. Dev Sci. 2010;13:77–91. doi: 10.1111/j.1467-7687.2009.00849.x.

- 9. Kraus N, Hornickel J. cABR: A Biological Probe of Auditory Processing. In: Geffner DS, Ross-Swain D, editors. Auditory processing disorders: assessment, management, and treatment. San Diego: Plural Publishing; 2013. pp. 159–83.

- 10. Baran JA. Test battery considerations. In: Musiek FE, Chermak GD, editors. Handbook of (central) auditory processing disorders. San Diego: Plural Publishing; 2007. pp. 163–92.

- 11. Kent RD, Read C. The acoustic analysis of speech. Delmar: Cengage Learning; 2002.

- 12. Johnson KL, Nicol TG, Kraus N. Brain stem response to speech: a biological marker of auditory processing. Ear Hear. 2005;26:424–34. doi: 10.1097/01.aud.0000179687.71662.6e.

- 13. Sanfins MD, Borges LR, Ubiali T, Donadon C, Diniz Hein TA, Hatzopoulos S, et al. Speech-evoked brainstem response in normal adolescent and children speakers of Brazilian Portuguese. Int J Pediatr Otorhinolaryngol. 2016;90:12–9. doi: 10.1016/j.ijporl.2016.08.024.

- 14. Sanfins MD, Borges LR, Ubiali T, Colella-Santos MF. Speech-evoked auditory brainstem response in the differential diagnosis of scholastic difficulties. Braz J Otorhinolaryngol. 2017;83:112–6. doi: 10.1016/j.bjorl.2015.05.014.

- 15. Galbraith GC, Arbagey PW, Branski R, Comerci N, Rector PM. Intelligible speech encoded in the human brain stem frequency-following response. Neuroreport. 1995;6:2363–7. doi: 10.1097/00001756-199511270-00021.

- 16. Johnson KL, Nicol T, Zecker SG, Kraus N. Developmental plasticity in the human auditory brainstem. J Neurosci. 2008;28:4000–7. doi: 10.1523/JNEUROSCI.0012-08.2008.

- 17. Sinha SK, Basavaraj V. Speech evoked auditory brainstem responses: a new tool to study brainstem encoding of speech sounds. Indian J Otolaryngol Head Neck Surg. 2010;62:395–9.

- 18. Karawani H, Banai K. Speech-evoked brainstem responses in Arabic and Hebrew speakers. Int J Audiol. 2010;49:844–9.

- 19. Ahadi M, Pourbakht A, Jafari AH, Jalaie S. Effects of stimulus presentation mode and subcortical laterality in speech-evoked auditory brainstem responses. Int J Audiol. 2014;53:243–9.

- 20. Clinard CG, Tremblay KL. Aging degrades the neural encoding of simple and complex sounds in the human brainstem. J Am Acad Audiol. 2013;24:590–9. doi: 10.3766/jaaa.24.7.7.

- 21. Rocha-Muniz CN, Befi-Lopes DM, Schochat E. Investigation of auditory processing disorder and language impairment using the speech-evoked auditory brainstem response. Hear Res. 2012;294:143–52. doi: 10.1016/j.heares.2012.08.008.

- 22. Rocha-Muniz CN, Befi-Lopes DM, Schochat E. Sensitivity, specificity and efficiency of speech-evoked ABR. Hear Res. 2014;317:15–22. doi: 10.1016/j.heares.2014.09.004.

- 23. Elkabariti RH, Khalil LH, Husein R, Talaat HS. Speech evoked auditory brainstem response findings in children with epilepsy. Int J Pediatr Otorhinolaryngol. 2014;78:1277–80. doi: 10.1016/j.ijporl.2014.05.010.

- 24. Tahaei AA, Ashayeri H, Pourbakht A, Kamali M. Speech evoked auditory brainstem response in stuttering. Scientifica (Cairo). 2014;2014:328646. doi: 10.1155/2014/328646.

- 25. Hornickel J, Knowles E, Kraus N. Test-retest consistency of speech-evoked auditory brainstem responses in typically-developing children. Hear Res. 2012;284:52–8. doi: 10.1016/j.heares.2011.12.005.

- 26. Hornickel J, Lin D, Kraus N. Speech-evoked auditory brainstem responses reflect familial and cognitive influences. Dev Sci. 2013;16:101–10. doi: 10.1111/desc.12009.

- 27. Song JH, Nicol T, Kraus N. Test-retest reliability of the speech-evoked auditory brainstem response. Clin Neurophysiol. 2011;122:346–55. doi: 10.1016/j.clinph.2010.07.009.

- 28. Bellier L, Veuillet E, Vesson JF, Bouchet P, Caclin A, Thai-Van H. Speech Auditory Brainstem Response through hearing aid stimulation. Hear Res. 2015;325:49–54. doi: 10.1016/j.heares.2015.03.004.

- 29. Song JH, Banai K, Russo NM, Kraus N. On the relationship between speech- and nonspeech-evoked auditory brainstem responses. Audiol Neurootol. 2006;11:233–41. doi: 10.1159/000093058.

- 30. Mamo SK, Grose JH, Buss E. Speech-evoked ABR: Effects of age and simulated neural temporal jitter. Hear Res. 2016;333:201–9. doi: 10.1016/j.heares.2015.09.005.

- 31. Fujihira H, Shiraishi K. Correlations between word intelligibility under reverberation and speech auditory brainstem responses in elderly listeners. Clin Neurophysiol. 2015;126:96–102. doi: 10.1016/j.clinph.2014.05.001.

- 32. Akhoun I, Gallégo S, Moulin A, Ménard M, Veuillet E, Berger-Vachon C, et al. The temporal relationship between speech auditory brainstem responses and the acoustic pattern of the phoneme /ba/ in normal-hearing adults. Clin Neurophysiol. 2008;119:922–33. doi: 10.1016/j.clinph.2007.12.010.

- 33. Anderson S, Parbery-Clark A, White-Schwoch T, Kraus N. Auditory brainstem response to complex sounds predicts self-reported speech-in-noise performance. J Speech Lang Hear Res. 2013;56:31–43. doi: 10.1044/1092-4388(2012/12-0043).

- 34. Rocha CN, Filippini R, Moreira RR, Neves IF, Schochat E. Brainstem auditory evoked potential with speech stimulus. Pro-Fono. 2010;22:479–84. doi: 10.1590/S0104-56872010000400020.

- 35. Akhoun I, Moulin A, Jeanvoine A, Menard M, Buret F, Vollaire C, Scorretti R, et al. Speech auditory brainstem response (speech ABR) characteristics depending on recording conditions, and hearing status: an experimental parametric study. J Neurosci Methods. 2008;175:196–205. doi: 10.1016/j.jneumeth.2008.07.026.

- 36. Haywood NR, Undurraga JA, Marquardt T, McAlpine D. A comparison of two objective measures of binaural processing: the interaural phase modulation following response and the binaural interaction component. Trends Hear. 2015;19:2331216515619039. doi: 10.1177/2331216515619039.

- 37. Gorga M, Abbas P, Worthington D. Stimulus calibration in ABR measurements. In: Jacobson J, editor. The Auditory Brainstem Response. San Diego: College-Hill Press; 1985.

- 38. Kumar K, Bhat JS, D’Costa PE, Srivastava M, Kalaiah MK. Effect of stimulus polarity on speech evoked auditory brainstem response. Audiol Res. 2014;3:e8. doi: 10.4081/audiores.2013.e8.

- 39. Kouni SN, Giannopoulos S, Ziavra N, Koutsojannis C. Brainstem auditory evoked potentials with the use of acoustic clicks and complex verbal sounds in young adults with learning disabilities. Am J Otolaryngol. 2013;34:646–51. doi: 10.1016/j.amjoto.2013.07.004.

- 40. Daly DM, Roesen RJ, Moushegian G. The frequency-following responses in subjects with profound unilateral hearing loss. Electroencephalogr Clin Neurophysiol. 1976;40:132–42. doi: 10.1016/0013-4694(76)90158-9.