Abstract

Annotation of foot-contact and foot-off events is the initial step in post-processing for most quantitative gait analysis workflows. If clean force plate strikes are present, the events can be automatically detected. Otherwise, annotation of gait events is performed manually, since reliable automatic tools are not available. Automatic annotation methods have been proposed for normal gait, but are usually based on heuristics of the coordinates and velocities of motion capture markers placed on the feet. These heuristics do not generalize to pathological gait due to greater variability in kinematics and anatomy of patients, as well as the presence of assistive devices. In this paper, we use a data-driven approach to predict foot-contact and foot-off events from kinematic and marker time series in children with normal and pathological gait. Through analysis of 9092 gait cycle measurements we build a predictive model using Long Short-Term Memory (LSTM) artificial neural networks. The best-performing model identifies foot-contact and foot-off events with average errors of 10 and 13 milliseconds, respectively, outperforming popular heuristic-based approaches. We conclude that the accuracy of our approach is sufficient for most clinical and research applications in the pediatric population. Moreover, the LSTM architecture supports real-time prediction, which opens up applications in real-time control of active assistive devices, orthoses, or prostheses. We provide the model, usage examples, and the training code in an open-source package.

1 Introduction

One of the key elements in analysis of gait is the quantitative assessment of gait parameters collected in a reproducible setting. Modern gait laboratories are equipped with motion capture systems that allow experimenters to track trajectories of markers positioned on a subject’s body. After collecting such data, experimenters fit a musculoskeletal model with associated markers and reconstruct body movement. This procedure allows computation of joint angles over time using inverse kinematics. These data are used in a variety of applications, ranging from basic scientific studies about the nature of human movement to clinical assessments used for planning treatments and assessing outcomes.

To make quantitative comparisons, it is conventional to align gait data to predefined landmarks in the gait cycle. These landmarks allow segmentation of the time series into comparable gait cycles and sub-cycles. The standard and well-established parameters of alignment are the foot-contact event (the instant in time when the foot contacts the floor) and the foot-off event (the instant in time when the foot leaves the floor). Whenever the gait data include unambiguous measurement of ground reaction forces, these events can be automatically defined. However, clean force plate data is often missing, especially when individuals with orthopaedic or neurological impairments are being tested [1, 2].

When ground reaction forces are not available, detection can be done automatically in the case of normal gait using basic functions of the markers, such as the velocity of the heel marker or the distance between the heel and the pelvis [3]. In practice, most of these methods have been validated only for normal gait, and automatic detection in clinics is uncommon.

In pathological gait, signals from force plates can be corrupted due to partial contact of the foot with the force plate, the presence of the contralateral foot on the force plate, short step length or foot drop, or the presence of assistive devices, such as walkers. For example, vertical acceleration has been shown to be an inappropriate indicator of events in toe walking [4]. Moreover, not all clinics are equipped with force plates, and pathological kinematics, especially of the foot and ankle, introduce significant problems for automatic techniques. Consequently, researchers have sought alternatives to force-plate data using inertial measurement units [5–7], and have developed event detection algorithms appropriate for these systems [8–13]. These solutions require additional hardware, extra time for placing the hardware on the subject, and additional analysis.

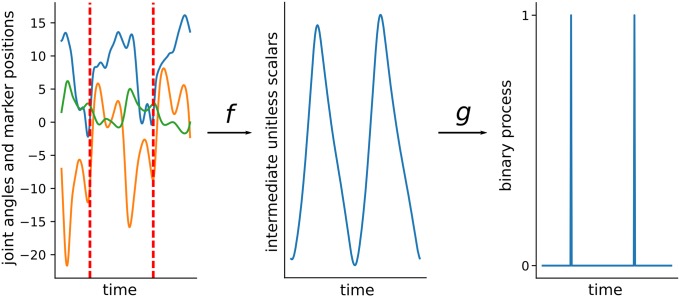

In practice, events in pathological gait are annotated manually from the kinematic data collected with a motion capture system. This motivates the search for algorithms automating the time-consuming annotation. Most available algorithms have a similar structure (Fig 1), and can be divided into three categories: coordinate-based, velocity-based, and probabilistic. Coordinate-based methods [14, 15] exploit distances between body parts. Velocity-based methods [9, 14, 16–20] use velocities of markers. Probabilistic methods [21–23] use parametric probabilistic models with manual or data-driven parameter tuning. These algorithms are not widely adopted, rely on heuristics designed for normal or treadmill gait, and commonly fail in the situations encountered in clinics.

Fig 1. Most of the available algorithms can be decomposed into two steps.

In the first step, a multivariate time series of the kinematics and marker trajectories (left plot, with only three variables displayed) is mapped to a univariate time series (middle plot) with some function f. In the second step, a peak detection algorithm g is applied to convert the univariate time series into a binary time series (1 at an event and 0 otherwise).

In this paper we describe an event detection algorithm that does not require force plates and is applicable to a wide range of pathological gait patterns. The algorithm combines elements of coordinate-based, velocity-based, and probabilistic approaches through a data-driven model. We create a time series model, using coordinate- and velocity-based features to predict the likelihood of an event in a series of frames. To that end, we use a dataset of 9092 annotated motion capture trials of the gait of children seen in the Center for Gait and Motion Analysis at Gillette Children’s Specialty Healthcare.

There are potential clinical, research-related, and economic benefits of automatic gait event detection. From the economic perspective, methods of event detection that use only marker trajectories can reduce the cost of human annotators or of additional hardware such as accelerometers or force plates. From the clinical perspective, automatic annotation provides more consistent data and reduces variability both within and between annotators. From the research perspective, the ability to accurately and efficiently extract multiple gait cycles from a trial reduces the time for annotation and allows the identification of more gait cycles, generating more data for further analysis.

We are releasing the model in order to stimulate further research and enable practical use. The ready-to-use software is published as a GitHub repository (https://github.com/kidzik/event-detector).

2 Methods

2.1 Data

The participants comprising the dataset were patients visiting Gillette Children’s Specialty Healthcare Center for Gait and Motion Analysis between 1994 and 2017. The participants ranged in age from 4 to 19 years. The dataset contains 18153 trials, of which 9092 have annotated gait events (see Table 1 for details). Of the patient population, 73% have a diagnosis of cerebral palsy. The remaining 27% are a mix of neurological (e.g. myelomeningocele), orthopaedic (e.g. patellofemoral syndrome), developmental (e.g. idiopathic toe walking and idiopathic torsion), and genetic disorders.

Table 1. Distributions of features of patients in the dataset.

| | training set | test set |
|---|---|---|
| age (years) | 11.4 (sd = 6.2) | 11.0 (sd = 4.5) |
| weight (kg) | 35.7 (sd = 17.7) | 35.9 (sd = 16.7) |
| height (cm) | 135.7 (sd = 21.6) | 135.6 (sd = 21.4) |
| leg length (cm) | 70.3 (sd = 14.0) | 70.6 (sd = 12.8) |
| walking speed (m/s) | 0.84 (sd = 0.28) | 0.85 (sd = 0.29) |

Patients were asked to walk approximately 15 meters. Each leg is treated separately, yielding 18184 observations with annotations. For every observation the available data consist of trajectories of markers placed on the foot, shank, thigh, and pelvis, and rotations of the pelvis, hip, knee, ankle and foot, expressed as Euler angles computed using the plug-in-gait biomechanical model [24, 25]. We refer to these sequences as kinematic time series.

Estimation of the joint angles was performed by tracking the markers attached to the body, defining segmental coordinate systems, and computing rotations between segments. Data were collected at 120 Hz with a VICON system.

During each patient’s visit, multiple trials were collected. The trials were processed for clinical use by trained technicians. In general, a single stride was identified from each trial, delineated by foot-contact and foot-off events for both legs.

The study was approved by the University of Minnesota Institutional Review Board (IRB) and it was granted a waiver. Patients, and guardians, where appropriate, gave informed written consent at the clinical visit for their data to be included. In accordance with IRB guidelines, all patient data was deidentified prior to any analysis.

2.2 Data considerations

We designed the algorithm taking into account potential variability in the motion capture protocols. We assume that most of the clinics have a kinematic model producing similar output for joint angles, so we use many of these signals, but we reduce the set of marker trajectory signals to only a few of the most commonly used markers.

Although the input data is close to the data used in existing algorithms, the advantage of our approach comes from the data-driven nature of the algorithm: instead of deriving heuristics related to particular markers or joint angles, we build a statistical model that finds a relation between gait events and multivariate time series of marker and kinematic data.

2.3 Data preprocessing

For each leg r ∈ {left, right} we track observations of five bodies: hip, knee, ankle, toes, and pelvis. In each frame, positions of the hip, knee, ankle, and toes are stored relative to the pelvis in the same frame. Positions of the pelvis are stored relative to the pelvis in the first frame. In each trial we sample nr vectors composed of the 3-dimensional position of each body, the 3-dimensional velocities, and the 3-dimensional acceleration of the pelvis. Input observations are stored as matrices $X_r \in \mathbb{R}^{n_r \times 33}$.
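As an illustration of the preprocessing described above, the following minimal sketch assembles a 33-column feature matrix with NumPy. The function name `build_features`, the column ordering (15 positions, 15 velocities, 3 pelvis accelerations), and the use of finite differences for the derivatives are assumptions made for this example and are not taken from the released code.

```python
import numpy as np

FRAME_RATE_HZ = 120.0  # capture rate reported in Section 2.1


def build_features(hip, knee, ankle, toes, pelvis, frame_rate=FRAME_RATE_HZ):
    """Assemble an (n_frames, 33) matrix from five (n_frames, 3) position arrays.

    Assumed column layout (hypothetical, for illustration only):
    0-14 positions, 15-29 velocities, 30-32 pelvis acceleration.
    """
    pelvis = np.asarray(pelvis, dtype=float)
    dt = 1.0 / frame_rate
    # Hip, knee, ankle and toes are expressed relative to the pelvis
    # in the same frame.
    leg = [np.asarray(b, dtype=float) - pelvis for b in (hip, knee, ankle, toes)]
    # The pelvis is expressed relative to its position in the first frame.
    pelvis_rel = pelvis - pelvis[0]
    positions = np.hstack(leg + [pelvis_rel])                    # (n, 15)
    # Finite-difference derivatives (an assumption of this sketch).
    velocities = np.gradient(positions, dt, axis=0)              # (n, 15)
    pelvis_acc = np.gradient(velocities[:, 12:15], dt, axis=0)   # (n, 3)
    return np.hstack([positions, velocities, pelvis_acc])
```

Under these assumptions the column count works out to 5 × 3 + 5 × 3 + 3 = 33, which matches the input dimension stated in Section 2.6.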

We annotate foot-contact and foot-off events and we treat these manual annotations as the ground truth. For clarity, here we focus on the foot-contact event; the analysis of the foot-off event is analogous. For each frame we encode a gait event as 1 and a non-event as 0. As a result, each trial consisting of nr frames is encoded as a vector of nr binary values, i.e. $Y_r \in \{0, 1\}^{n_r}$. Our task can now be defined as the prediction of the vector Yr from the observed Xr. Formally, we are looking for a mapping function f such that $\hat{Y}_r = f(X_r)$, and we want to optimize the mapping so that the prediction $\hat{Y}_{r,t}$ is as close as possible to the observed data Yr,t for t ∈ {1, …, nr}.
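The binary encoding of annotations can be sketched as follows. The helper name `encode_events` and the use of 0-based frame indices are assumptions of this example.

```python
import numpy as np


def encode_events(event_frames, n_frames):
    """Return a length-n_frames vector with 1 at annotated frames, 0 elsewhere.

    event_frames holds 0-based frame indices (an assumption of this sketch).
    """
    y = np.zeros(n_frames, dtype=np.int8)
    y[np.asarray(event_frames, dtype=int)] = 1
    return y
```

For example, `encode_events([3, 7], 10)` marks frames 3 and 7 and leaves the remaining eight frames at 0, which illustrates how sparse the events are within a trial.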

2.4 Sequence to sequence mapping

We use the framework of sequence to sequence mapping [26]. In this framework, we are finding a function between one time series and another as represented by function f in Fig 1. To approximate the function we use a Long Short-Term Memory Network (LSTM) [27] due to its high flexibility. Thanks to the large size of our dataset, we can train this complex network architecture efficiently.

We build predictive models for foot-contact and foot-off events separately. Events in the output signal occur sparsely. That is, in Yr, the occurrence of a 1 (gait event) is rare compared to the occurrence of a 0 (non-event portion of the gait cycle). For this reason we need to balance the data or choose an objective function which accounts for the sample imbalance. Following machine learning literature [28], we choose to use a weighted binary cross-entropy, penalizing a misclassified 1 with a 100:1 proportion,

$$H(Y_r, \hat{Y}_r) = -\sum_{t=1}^{n_r} \left[ 100\, Y_{r,t} \log \hat{Y}_{r,t} + (1 - Y_{r,t}) \log\left(1 - \hat{Y}_{r,t}\right) \right] \tag{1}$$

where Yr stands for the observed data and $\hat{Y}_r = f(X_r)$ is the model prediction.

If we find f minimizing H(Yr, f(Xr)), the real-valued process f(Xr) can be interpreted as the likelihood of an event. Peaks of sufficient magnitude in this process will correspond to predicted events.
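To make the 100:1 weighting concrete, here is a minimal NumPy sketch of one common form of weighted binary cross-entropy. The function name, the clipping constant `eps`, and the choice to sum (rather than average) over frames are assumptions of this example; the released training code may differ.

```python
import numpy as np


def weighted_bce(y_true, y_pred, pos_weight=100.0, eps=1e-7):
    """Weighted binary cross-entropy summed over the frames of one trial.

    A missed event (true 1) is penalized pos_weight times more than a
    false alarm on a non-event frame (true 0).
    """
    y_true = np.asarray(y_true, dtype=float)
    # Clip predictions away from 0 and 1 so the logarithms stay finite.
    y_pred = np.clip(np.asarray(y_pred, dtype=float), eps, 1.0 - eps)
    per_frame = -(pos_weight * y_true * np.log(y_pred)
                  + (1.0 - y_true) * np.log(1.0 - y_pred))
    return float(per_frame.sum())
```

With this form, predicting 0.1 on an event frame costs about 100 times as much as predicting 0.9 on a non-event frame, so the optimizer cannot minimize the loss by predicting 0 everywhere.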

For training the network we use sequences of fixed length q = 128 frames. Let n0 be an event in Yr. We use sequences [n0 − Z, n0 − Z + q − 1] where Z is a random integer sampled uniformly from {1, 2, …, q/2}. We apply this random shift Z in order to simulate starting from different points, which makes the procedure more robust to various setups.
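The window sampling can be sketched with the standard library alone. The function name `sample_window` is hypothetical, and the sketch does not handle windows that would extend past the beginning or end of a trial.

```python
import random

Q = 128  # fixed training sequence length, in frames


def sample_window(event_frame, q=Q, rng=random):
    """Return (start, end) frame indices of a q-frame window, inclusive.

    The window starts Z frames before the event, with Z drawn uniformly
    from {1, ..., q/2}, so the event falls in the first half of the window.
    """
    z = rng.randint(1, q // 2)  # randint includes both endpoints
    start = event_frame - z
    return start, start + q - 1
```

Passing a seeded `random.Random` instance as `rng` makes the sampling reproducible.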

2.5 Evaluation framework

We use cross-entropy as a differentiable function that helps to identify the regions of interest. However, the practical usefulness of the method is better measured by the average number of frames between the predicted annotations and the true annotations. Let Er be the set of true events and $\hat{E}_r$ the predicted set of events. Let S be the set of trials from the test set T on which the numbers of predicted and true annotations are equal. We define two measures:

coverage = |S|/|T|,

time = average time (measured by the number of frames) between true and predicted annotations on S, i.e. the minimum average absolute difference between pairs of values from Er and $\hat{E}_r$.

Neither of these measures is smooth, so we cannot use them directly in training. However, note that if we optimize Eq (1) to 0, then our estimate $\hat{Y}_{r,t}$ matches the true Yr,t exactly, and therefore both coverage and time are also optimized.
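The two measures can be sketched in plain Python as below. The function name, the exclusion of trials without any true event, and the choice to average per trial before averaging over trials are assumptions of this example. Pairing the sorted true and predicted frames is used because, for events on a single time axis, it minimizes the average absolute difference over all one-to-one pairings.

```python
def coverage_and_time(true_events, pred_events, frame_rate=120.0):
    """Compute coverage and mean timing error over a list of trials.

    true_events, pred_events: one list of event frames per trial.
    Returns (coverage, mean error in frames, mean error in milliseconds).
    """
    per_trial_errors = []
    for true, pred in zip(true_events, pred_events):
        # A trial counts toward S only if the numbers of true and predicted
        # events agree (trials with no true event are skipped here, which is
        # an assumption of this sketch).
        if len(true) != len(pred) or not true:
            continue
        errs = [abs(t - p) for t, p in zip(sorted(true), sorted(pred))]
        per_trial_errors.append(sum(errs) / len(errs))
    coverage = len(per_trial_errors) / len(true_events)
    if per_trial_errors:
        mean_frames = sum(per_trial_errors) / len(per_trial_errors)
    else:
        mean_frames = float("nan")
    return coverage, mean_frames, 1000.0 * mean_frames / frame_rate
```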

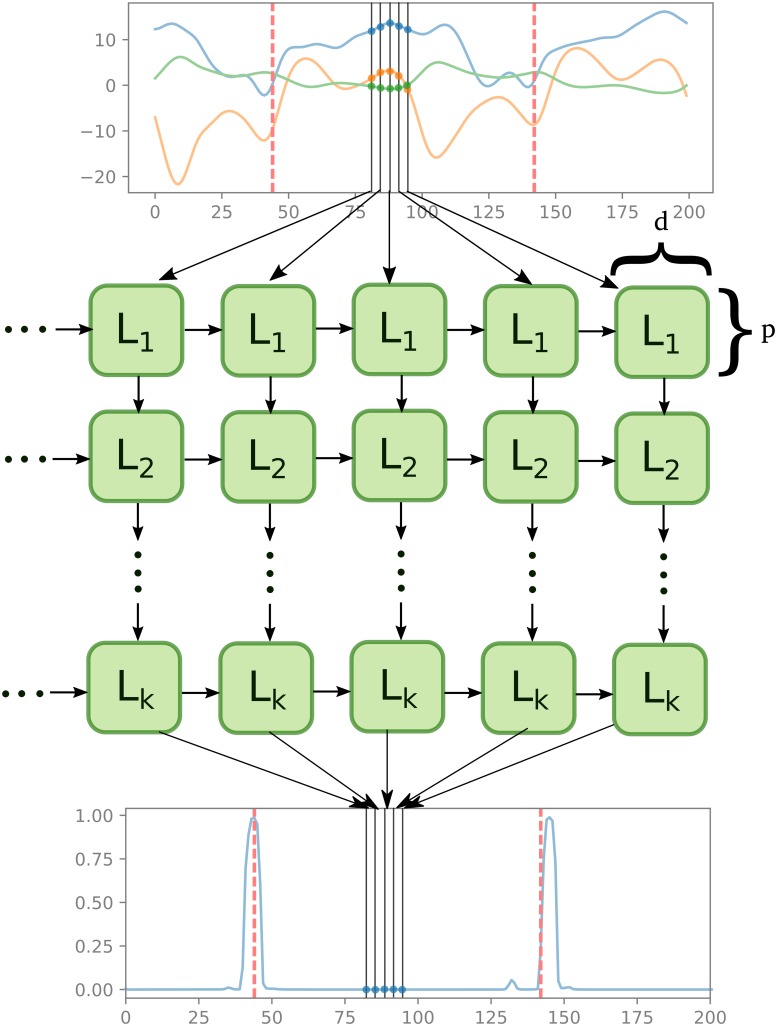

2.6 Network architecture

Our pipeline can be described in the framework we presented earlier (Fig 1). First, we construct a neural network for approximating likelihood of an event. Second, we apply a peak detection algorithm for identifying the actual events.

After a preliminary assessment of various parameter constellations, we test the following architectures of the network (Fig 2):

- d = 33 dimensional time series input (as described in Section 2.3),

- (k − 1) LSTM layers mapping the input to a p-dimensional time series,

- LSTM layer mapping the previous layer to a 2-dimensional time series,

where k ∈ {1, 2, 3} and p ∈ {16, 32, 64}.

Fig 2. We approximate the mapping from the multivariate time series of kinematics and marker trajectories (top plot with only 3-dimensional time series for illustration) to the likelihood of an event (bottom plot) as a multi-layer neural network with LSTM layers (middle part of the figure).

Each cell takes as input values from the previous time-step in the same level cell, and values from the current time-step in the higher level cell. Red dashed vertical lines represent the true event, and are used for training. Note that all LSTM cells Li in every horizontal level are the same. Parameter d corresponds to the output dimension of each cell and p to the size of the hidden layer.
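To give a sense of the size of the architectures in this grid, the sketch below enumerates the layer dimensions for each (k, p) and counts trainable weights. The helper names are hypothetical, and the count assumes a standard LSTM cell with four gates and bias terms and no peephole connections; the released model may be configured differently.

```python
from itertools import product

INPUT_DIM = 33   # d, the input dimension from Section 2.3
OUTPUT_DIM = 2   # dimension of the final LSTM layer


def lstm_params(n_in, n_out):
    """Weights of a standard LSTM layer: four gates, each with input
    weights, recurrent weights and a bias."""
    return 4 * (n_out * (n_in + n_out) + n_out)


def layer_dims(k, p):
    """Input dimension, then (k - 1) hidden layers of size p, then output."""
    return [INPUT_DIM] + [p] * (k - 1) + [OUTPUT_DIM]


def describe_grid():
    """List (k, p, layer dimensions, parameter count) for the whole grid."""
    rows = []
    for k, p in product((1, 2, 3), (16, 32, 64)):
        dims = layer_dims(k, p)
        n_params = sum(lstm_params(a, b) for a, b in zip(dims, dims[1:]))
        rows.append((k, p, dims, n_params))
    return rows
```

Note that for k = 1 there is no hidden layer, so p has no effect and the three corresponding grid entries describe the same network.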

We split our dataset into training (81%), validation (9%), and test (10%) sets. The validation set was selected randomly in each experiment. In the training phase we use cross-validation to choose the best combination of parameters (k, p) and the right set of features, where the best combination is chosen by comparing the predictions on the validation set. Once we establish the best combination, we retrain the network on the training and validation sets together and then compare our method with the two other algorithms using the test set. Due to the high correlation of within-subject trials, we split the dataset on the patient level.
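A patient-level split can be sketched as follows. The function name, the fixed seed, and the decision to apply the 81/9/10 proportions to patients rather than to trials are assumptions of this example.

```python
import random


def split_by_patient(trial_patient_ids, fractions=(0.81, 0.09, 0.10), seed=0):
    """Assign trials to train/val/test so that no patient spans two sets.

    trial_patient_ids: one patient identifier per trial.
    Returns a dict mapping set name to a list of trial indices.
    """
    patients = sorted(set(trial_patient_ids))
    random.Random(seed).shuffle(patients)
    n_train = round(fractions[0] * len(patients))
    n_val = round(fractions[1] * len(patients))
    groups = {
        "train": set(patients[:n_train]),
        "val": set(patients[n_train:n_train + n_val]),
        "test": set(patients[n_train + n_val:]),
    }
    split = {name: [] for name in groups}
    for trial_idx, pid in enumerate(trial_patient_ids):
        for name, members in groups.items():
            if pid in members:
                split[name].append(trial_idx)
                break
    return split
```

Splitting on patient identifiers rather than on trials keeps the correlated trials of one child from appearing on both sides of the train/test boundary.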

2.7 Peak detection

We use an elementary peak detection algorithm, which defines a local maximum as a maximum point between two local minima [29]. More precisely, let X(t) be a time series where t ∈ Ω = {1, 2, 3, …, T}, for some T. Let δ > 0 be some threshold for discriminating peaks. We call a point t0 a local maximum if and only if there exist points tl ∈ Ω and tr ∈ Ω with tl < t0 < tr such that: (i) X(tl) + δ < X(t0), (ii) X(tr) + δ < X(t0), and (iii) X(t0) is the maximum point on the interval [tl, tr]. We define local minima analogously.

Points following the above definition can be found using an algorithm linear in time (O(n)), which scans through all the points one by one. For details on implementation and properties of this approach please refer to [30].

For our analysis, we normalize the outputs to the [0, 1] interval and choose δ = 0.5. Our preliminary analysis indicated that the threshold δ has little effect on the results.
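The single-pass scan can be sketched as below. This is an illustrative implementation written to follow the definition in this section, in the spirit of the scheme referenced in [30]; it is not the code used in the study, and the function name `find_peaks_delta` is hypothetical. It starts by looking for a minimum, so that every reported maximum has a point at least δ lower on each side.

```python
def find_peaks_delta(values, delta=0.5):
    """Return indices of local maxima that stand out by more than delta.

    One pass over the series: alternate between tracking a running
    minimum and a running maximum, switching state whenever the signal
    moves more than delta away from the tracked extremum.
    """
    maxima = []
    mn, mx = float("inf"), float("-inf")
    mx_pos = None
    looking_for_max = False
    for i, v in enumerate(values):
        if v > mx:
            mx, mx_pos = v, i
        if v < mn:
            mn = v
        if looking_for_max:
            if v < mx - delta:
                # Dropped by more than delta: the tracked maximum is a peak.
                maxima.append(mx_pos)
                mn = v
                looking_for_max = False
        elif v > mn + delta:
            # Rose by more than delta above the running minimum:
            # start tracking a new candidate maximum from here.
            mx, mx_pos = v, i
            looking_for_max = True
    return maxima
```

Each sample is visited once, so the running time is linear in the length of the series.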

3 Experiments

3.1 Existing methods

We compare our method with two methods introduced in [14], coordinate-based and velocity-based algorithms. The coordinate-based method leverages the fact that at the foot-contact event, the distance in anterior-posterior direction between the heel and sacrum is maximized, whereas in the foot-off event the distance in anterior-posterior direction between the toe and the sacrum is minimized.

The velocity-based method uses the observation that at the foot-contact event the anterior-posterior component of the velocity of the heel marker changes from anterior to posterior. Similarly, the anterior-posterior component of the toe marker velocity changes from posterior to anterior at the foot-off event.
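The two heuristics can be sketched on anterior-posterior (AP) coordinates as follows. The function names are hypothetical, and two simplifications are assumed: marker positions are taken relative to the sacrum in both rules, and velocities are plain forward differences without filtering. These choices are for illustration and are not necessarily those of [14] or of our benchmark implementation.

```python
import numpy as np


def _relative(marker_ap, sacrum_ap):
    """AP position of a marker relative to the sacrum, frame by frame."""
    return np.asarray(marker_ap, dtype=float) - np.asarray(sacrum_ap, dtype=float)


def coordinate_based(heel_ap, toe_ap, sacrum_ap):
    """Foot-contact at maxima of heel-sacrum distance, foot-off at minima
    of toe-sacrum distance (both along the AP axis)."""
    heel, toe = _relative(heel_ap, sacrum_ap), _relative(toe_ap, sacrum_ap)
    fc = [i for i in range(1, len(heel) - 1) if heel[i - 1] < heel[i] >= heel[i + 1]]
    fo = [i for i in range(1, len(toe) - 1) if toe[i - 1] > toe[i] <= toe[i + 1]]
    return fc, fo


def velocity_based(heel_ap, toe_ap, sacrum_ap):
    """Foot-contact where heel velocity turns from anterior to posterior,
    foot-off where toe velocity turns from posterior to anterior."""
    heel_v = np.diff(_relative(heel_ap, sacrum_ap))
    toe_v = np.diff(_relative(toe_ap, sacrum_ap))
    fc = [i for i in range(1, len(heel_v)) if heel_v[i - 1] > 0 >= heel_v[i]]
    fo = [i for i in range(1, len(toe_v)) if toe_v[i - 1] < 0 <= toe_v[i]]
    return fc, fo
```

On noise-free data the two rules in this sketch select the same frames, because a sign change of the relative velocity is an extremum of the relative position; on real marker data the results may differ depending on how the signals are filtered and differentiated.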

To ensure a fair comparison between the benchmark methods and our approach, we attempted to maximize the performance of the benchmarks. To this end, we used the following two techniques. First, in the peak detection algorithm described in Section 2.7 we tried different values of the parameter δ. Second, we shifted all predictions ahead by k frames (this shift k is unrelated to the number of layers k in Section 2.6). Our analysis showed no sensitivity to the parameter δ, while k = 3 improved prediction in both algorithms. We used δ = 0.5 and k = 3 for the analysis.

While multiple other peak detection algorithms are available, none are commonly used in pathological gait. Comparison in [31] suggests that there is no clearly superior algorithm, and available algorithms yield comparable results.

4 Results

Our comparisons show a substantial advantage of our method over the two selected heuristic-based algorithms. Both coordinate-based and velocity-based methods fail to detect any events in between 7% and 16% of cases. In contrast, our algorithm fails to detect foot-contact in less than 1% of cases and foot-off in less than 5% of cases. Manual investigation of these failures did not reveal any clear pattern distinguishing them.

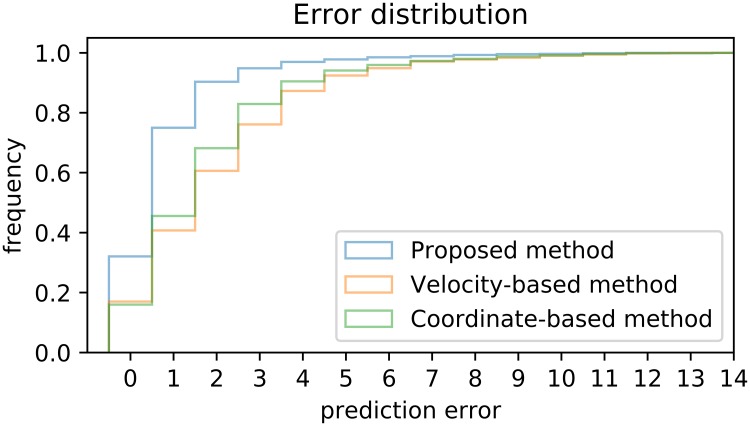

Regarding the error in the cases where events were identified, our algorithm predicts the foot-contact within an average of 1.2 frames (∼10.0 ms) of the human expert identification (Table 2). This is a substantial improvement compared to 2.5 frames (∼20.8 ms) for the velocity-based algorithm, and 2.2 frames (∼18.3 ms) for the coordinate-based algorithm. Another important feature of the algorithm is the variability of the error: the risk of an error greater than a given threshold is consistently lower for our algorithm than for the velocity-based or coordinate-based algorithms (Fig 3).
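As a worked check of the frame-to-millisecond conversion, assuming the 120 Hz capture rate stated in Section 2.1:

```latex
\Delta t_{\mathrm{ms}} = \frac{n_{\mathrm{frames}}}{120\ \mathrm{Hz}} \times 1000, \qquad
\frac{1.2}{120} \times 1000 = 10.0, \quad
\frac{2.2}{120} \times 1000 \approx 18.3, \quad
\frac{2.5}{120} \times 1000 \approx 20.8 .
```

One frame therefore corresponds to about 8.3 ms.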

Table 2.

Performance of the three algorithms on two measures on predicting foot-contact (FC) and foot-off (FO): Coverage corresponds to the frequency of detecting the event (larger is better), time is the average error in milliseconds from the ground truth as defined in Section 2.5 (smaller is better).

| | coverage, FC | coverage, FO | time (ms), FC | time (ms), FO |
|---|---|---|---|---|
| Coordinate-based | 90% | 84% | 18.3 | 16.7 |
| Velocity-based | 93% | 90% | 20.8 | 18.3 |
| Proposed method | 99% | 95% | 10.0 | 12.5 |

Fig 3. Comparison of sensitivity (true positive ratio) of three methods as a function of error tolerance (number of frames from the observed event within which we count the prediction as correct).

Our method is off by at most 3 frames in 95% of cases, whereas the coordinate-based algorithm only attains this level of accuracy in 83% of cases.
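A curve of this kind can be computed with a short routine such as the one below. The function name and the rule of matching each true event to its nearest predicted event are assumptions of this sketch, not a description of how Fig 3 was produced.

```python
def sensitivity_curve(true_events, pred_events, tolerances=range(0, 6)):
    """Fraction of true events with a prediction within each tolerance.

    true_events, pred_events: lists of event frames.
    Returns a dict mapping tolerance (in frames) to sensitivity.
    """
    hits = {tol: 0 for tol in tolerances}
    for t in true_events:
        # Distance to the nearest prediction, or None if there is none.
        nearest = min((abs(t - p) for p in pred_events), default=None)
        for tol in tolerances:
            if nearest is not None and nearest <= tol:
                hits[tol] += 1
    n = len(true_events)
    return {tol: hits[tol] / n for tol in tolerances}
```

Usage: with true events at frames 10, 50, 90 and 130 and predictions at 10, 52 and 95, the nearest-prediction errors are 0, 2, 5 and 35 frames, so the sensitivity rises from 0.25 at tolerance 0 to 0.75 at tolerance 5.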

Note that the foot-off detection is consistently worse than the foot-contact detection. We expect that this is due to factors commonly recognized by human annotators. The most likely such factor is that, for many children with severe neurological and orthopaedic impairments, there is no “clean” foot-off event, but rather the toe is gradually dragged off the floor. This results in an ambiguous event, corrupting both the human gold standard and the algorithm's estimate of the event. Further investigation is needed to determine the sources of this effect.

5 Discussion

We compared three approaches to detect gait events: velocity-based, coordinate-based, and our machine learning approach derived from combining kinematic characteristics. We built a neural network leveraging a large dataset of the kinematics of children with cerebral palsy. Results indicate that the new model is superior to the heuristic-based models.

The model can reduce engineering costs and can allow more precise data processing. First, automatic annotation of gait events can save around 30 minutes per trial, amounting to 150 hours of engineering time yearly in a clinic with only one patient visit per day. Second, thanks to this reduced cost, more data can be annotated. In many clinics, every trial consists of over 6 steps, but only 1–2 gait cycles are extracted, so over 60% of the data is ignored for both clinical reporting and research. Third, as an automatic method, our algorithm is free from human errors and biases, which further contributes to the improvement of the workflow. Finally, accurate real-time event detection opens up intriguing possibilities including gait training, real-time feedback, tuning of electrical stimulation devices, or designing better assistive, orthotic, and prosthetic devices [32].

Results from [31], where three human annotators were asked to annotate events of 50 subjects, suggest that our algorithm surpasses human accuracy. In that study, annotations compared pair-wise are within two frames 84% of the time and within three frames 92% of the time. A direct comparison using our algorithm on Bruening’s dataset would be necessary to prove superiority.

We release the model as part of this publication to stimulate further research and enable its use in practice. Our Python [33] implementation is based on the TensorFlow library [34]. It requires Biomechanics Toolkit [35] and runs on Linux (https://github.com/kidzik/event-detector). It can be used as-is, taking .c3d files as input and outputting annotated .c3d files. Moreover, relying on the principles of transfer learning [36], the trained network might be further tuned for a specific population using a much smaller training set.

While our method is directly applicable in practice for the data presented in this study, the training relies on a large dataset, which can be constraining for wider adoption in specific clinical scenarios. Methods for decreasing both the sample size and the number of required features can further simplify our approach. First, expert-informed features, such as the ones used in heuristic-based approaches, could greatly reduce the required data. Second, to reduce the required amount of data, one can apply principles of transfer learning [28] by tuning our model for a specific population. This can be achieved by fixing the low-level features (the first layers of the network) and retraining only the last few layers.

Acknowledgments

Our research was supported by the Mobilize Center, a National Institutes of Health Big Data to Knowledge (BD2K) Center of Excellence through Grant U54EB020405.

Data Availability

The dataset analyzed in this study was shared by Gillette Specialty Healthcare under a data-sharing agreement between Stanford University and Gillette Specialty Healthcare. These data are not publicly available due to restrictions on sharing patient health information but are available from the authors on reasonable request and with permission of Gillette Specialty Healthcare. Researchers interested in the data used for this study are asked to contact Joyce Trost, Manager of Research Administration (JTrost@Gillettechildrens.com).

Funding Statement

The study was funded by the Mobilize Center, National Institutes of Health Big Data to Knowledge (BD2K) Center of Excellence supported through Grant U54EB020405.

References

- 1. Fellin RE, Rose WC, Royer TD, Davis IS. Comparison of methods for kinematic identification of footstrike and toe-off during overground and treadmill running. Journal of Science and Medicine in Sport. 2010;13(6):646–650. doi:10.1016/j.jsams.2010.03.006

- 2. Caderby T, Yiou E, Peyrot N, Bonazzi B, Dalleau G. Detection of swing heel-off event in gait initiation using force-plate data. Gait & Posture. 2013;37(3):463–466. doi:10.1016/j.gaitpost.2012.08.011

- 3. Alton F, Baldey L, Caplan S, Morrissey M. A kinematic comparison of overground and treadmill walking. Clinical Biomechanics. 1998;13(6):434–440. doi:10.1016/S0268-0033(98)00012-6

- 4. Hsue B, Miller F, Su F, Henley J, Church C. Gait timing event determination using kinematic data for the toe walking children with cerebral palsy. Journal of Biomechanics. 2007;40(2):S529. doi:10.1016/S0021-9290(07)70519-5

- 5. Kuo YL, Culhane KM, Thomason P, Tirosh O, Baker R. Measuring distance walked and step count in children with cerebral palsy: an evaluation of two portable activity monitors. Gait & Posture. 2009;29(2):304–310. doi:10.1016/j.gaitpost.2008.09.014

- 6. Yang CC, Hsu YL. A review of accelerometry-based wearable motion detectors for physical activity monitoring. Sensors. 2010;10(8):7772–7788. doi:10.3390/s100807772

- 7. Appelboom G, Camacho E, Abraham ME, Bruce SS, Dumont EL, Zacharia BE, et al. Smart wearable body sensors for patient self-assessment and monitoring. Archives of Public Health. 2014;72(1):28. doi:10.1186/2049-3258-72-28

- 8. Fourati H. Heterogeneous data fusion algorithm for pedestrian navigation via foot-mounted inertial measurement unit and complementary filter. IEEE Transactions on Instrumentation and Measurement. 2015;64(1):221–229. doi:10.1109/TIM.2014.2335912

- 9. Jasiewicz JM, Allum JH, Middleton JW, Barriskill A, Condie P, Purcell B, et al. Gait event detection using linear accelerometers or angular velocity transducers in able-bodied and spinal-cord injured individuals. Gait & Posture. 2006;24(4):502–509. doi:10.1016/j.gaitpost.2005.12.017

- 10. Mannini A, Genovese V, Sabatini AM. Online decoding of hidden Markov models for gait event detection using foot-mounted gyroscopes. IEEE Journal of Biomedical and Health Informatics. 2014;18(4):1122–1130. doi:10.1109/JBHI.2013.2293887

- 11. Olsen E, Haubro Andersen P, Pfau T. Accuracy and precision of equine gait event detection during walking with limb and trunk mounted inertial sensors. Sensors. 2012;12(6):8145–8156. doi:10.3390/s120608145

- 12. Hannink J, Kautz T, Pasluosta CF, Gaßmann KG, Klucken J, Eskofier BM. Sensor-based gait parameter extraction with deep convolutional neural networks. IEEE Journal of Biomedical and Health Informatics. 2017;21(1):85–93. doi:10.1109/JBHI.2016.2636456

- 13. Hannink J, Kautz T, Pasluosta CF, Barth J, Schülein S, Gaßmann KG, et al. Mobile stride length estimation with deep convolutional neural networks. IEEE Journal of Biomedical and Health Informatics. 2018;22(2):354–362. doi:10.1109/JBHI.2017.2679486

- 14. Zeni J, Richards J, Higginson J. Two simple methods for determining gait events during treadmill and overground walking using kinematic data. Gait & Posture. 2008;27(4):710–714. doi:10.1016/j.gaitpost.2007.07.007

- 15. Dingwell J, Cusumano J, Cavanagh P, Sternad D. Local dynamic stability versus kinematic variability of continuous overground and treadmill walking. Journal of Biomechanical Engineering. 2001;123(1):27–32. doi:10.1115/1.1336798

- 16. O’Connor CM, Thorpe SK, O’Malley MJ, Vaughan CL. Automatic detection of gait events using kinematic data. Gait & Posture. 2007;25(3):469–474. doi:10.1016/j.gaitpost.2006.05.016

- 17. Schache AG, Blanch PD, Rath DA, Wrigley TV, Starr R, Bennell KL. A comparison of overground and treadmill running for measuring the three-dimensional kinematics of the lumbo–pelvic–hip complex. Clinical Biomechanics. 2001;16(8):667–680. doi:10.1016/S0268-0033(01)00061-4

- 18. Hreljac A, Marshall RN. Algorithms to determine event timing during normal walking using kinematic data. Journal of Biomechanics. 2000;33(6):783–786. doi:10.1016/S0021-9290(00)00014-2

- 19. Ghoussayni S, Stevens C, Durham S, Ewins D. Assessment and validation of a simple automated method for the detection of gait events and intervals. Gait & Posture. 2004;20(3):266–272. doi:10.1016/j.gaitpost.2003.10.001

- 20. Karčnik T. Using motion analysis data for foot-floor contact detection. Medical and Biological Engineering and Computing. 2003;41(5):509–512. doi:10.1007/BF02345310

- 21. Skelly MM, Chizeck HJ. Real-time gait event detection for paraplegic FES walking. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2001;9(1):59–68. doi:10.1109/7333.918277

- 22. Lauer RT, Smith BT, Betz RR. Application of a neuro-fuzzy network for gait event detection using electromyography in the child with cerebral palsy. IEEE Transactions on Biomedical Engineering. 2005;52(9):1532–1540. doi:10.1109/TBME.2005.851527

- 23. Miller A. Gait event detection using a multilayer neural network. Gait & Posture. 2009;29(4):542–545. doi:10.1016/j.gaitpost.2008.12.003

- 24. Davis RB III, Ounpuu S, Tyburski D, Gage JR. A gait analysis data collection and reduction technique. Human Movement Science. 1991;10(5):575–587. doi:10.1016/0167-9457(91)90046-Z

- 25. Kadaba MP, Ramakrishnan H, Wootten M. Measurement of lower extremity kinematics during level walking. Journal of Orthopaedic Research. 1990;8(3):383–392. doi:10.1002/jor.1100080310

- 26. Sutskever I, Vinyals O, Le QV. Sequence to sequence learning with neural networks. In: Advances in Neural Information Processing Systems; 2014. p. 3104–3112.

- 27. Hochreiter S, Schmidhuber J. Long short-term memory. Neural Computation. 1997;9(8):1735–1780. doi:10.1162/neco.1997.9.8.1735

- 28. Goodfellow I, Bengio Y, Courville A. Deep learning. vol. 1. MIT Press, Cambridge; 2016.

- 29. Henrich N, d’Alessandro C, Doval B, Castellengo M. On the use of the derivative of electroglottographic signals for characterization of nonpathological phonation. The Journal of the Acoustical Society of America. 2004;115(3):1321–1332. doi:10.1121/1.1646401

- 30. Billauer E. peakdet: Peak detection using MATLAB. Eli Billauer’s home page. 2008.

- 31. Bruening DA, Ridge ST. Comparison of Automated Event Detection Algorithms in Pathological Gait. 2014.

- 32. Lambrecht S, Harutyunyan A, Tanghe K, Afschrift M, De Schutter J, Jonkers I. Real-Time Gait Event Detection Based on Kinematic Data Coupled to a Biomechanical Model. Sensors. 2017;17(4):671. doi:10.3390/s17040671

- 33. Van Rossum G, Drake FL Jr. Python tutorial. Centrum voor Wiskunde en Informatica, Amsterdam, The Netherlands; 1995.

- 34. Abadi M, Barham P, Chen J, Chen Z, Davis A, Dean J, et al. TensorFlow: A System for Large-Scale Machine Learning. In: OSDI. vol. 16; 2016. p. 265–283.

- 35. Barre A, Armand S. Biomechanical ToolKit: Open-source framework to visualize and process biomechanical data. Computer Methods and Programs in Biomedicine. 2014;114(1):80–87. doi:10.1016/j.cmpb.2014.01.012

- 36. Pan SJ, Yang Q. A survey on transfer learning. IEEE Transactions on Knowledge and Data Engineering. 2010;22(10):1345–1359. doi:10.1109/TKDE.2009.191
