Abstract

Child listeners have particular difficulty with speech perception when competing speech noise is present; this challenge is often attributed to their immature top-down processing abilities. The purpose of this study was to determine if the effects of competing speech noise on speech-sound processing vary with age. Cortical auditory evoked potentials (CAEPs) were measured during an active speech-syllable discrimination task in 58 normal-hearing participants (age 7–25 years). Speech syllables were presented in quiet and embedded in competing speech noise (4-talker babble, +15 dB signal-to-noise ratio; SNR). While noise was expected to similarly reduce amplitude and delay latencies of N1 and P2 peaks in all listeners, it was hypothesized that effects of noise on the P3b peak would be inversely related to age due to the maturation of top-down processing abilities throughout childhood. Consistent with previous work, results showed that a +15 dB SNR reduces amplitudes and delays latencies of CAEPs for listeners of all ages, affecting speech-sound processing, delaying stimulus evaluation, and causing a reduction in behavioral speech-sound discrimination. Contrary to expectations, findings suggest that competing speech noise at a +15 dB SNR may have similar effects on various stages of speech-sound processing for listeners of all ages. Future research directions should examine how more difficult listening conditions (poorer SNRs) might affect results across ages.

1. Introduction

Competing noise has a more negative effect on speech perception in children than adults (Bradley & Sato, 2008; Corbin, Bonino, Buss, & Leibold, 2016; Elliott, 1979; Etymotic Research, 2005; Johnson, 2000; Neuman, Wroblewski, Hajicek, & Rubinstein, 2010; Rashid, Leensen, & Dreschler, 2016; Talarico et al., 2006). These additional difficulties can include reductions in speech recognition (Neuman et al., 2010), increased listening effort (Howard, Munro, & Plack, 2010), and limited ability to use spatial cues (Wightman, Callahan, Lutfi, Kistler, & Oh, 2003) – consequences that are particularly concerning because children spend much of their time in noisy classroom environments where they are expected to listen and learn. Understanding the processes engaged in speech-in-noise perception could assist in identifying children who struggle more than would be expected based on their age.

At the most basic level, competing noise can interfere with the sensory representation of the signal in the peripheral auditory system (French & Steinberg, 1947; Kidd, Mason, Deliwala, Woods, & Colburn, 1994). When difficulty with competing speech noise is greater than would be expected based on peripheral masking of the signal (Freyman, Balakrishnan, & Helfer, 2001), it is referred to as informational or central masking, as the additional masking is thought to arise from confusion caused by processing speech in both the signal and masker. Speech-on-speech masking is experienced across ages; however, younger listeners exhibit an even greater detriment than older children and adults (Hall, Grose, Buss, & Dev, 2002). This phenomenon has been attributed to immaturity of top-down processing abilities like auditory working memory, attention, and linguistic strategies (Leibold, 2017). Unfortunately, the variability inherent in speech recognition performance for children limits our ability to dissociate how competing maskers affect the sensory representation and subsequent top-down processing of the speech signal of interest.

Cortical auditory-evoked potentials (CAEPs) offer a unique opportunity to assess the effect of competing maskers on speech perception across development, as they allow us to measure encoding of the speech stimulus at various processing stages. For instance, the N1, P2, and P3b peaks can be elicited using a simple speech discrimination task and used to represent the sensory representation (Näätänen, Kujala, & Winkler, 2011; Näätänen & Picton, 1987), classification (Crowley & Colrain, 2004; Tremblay, Ross, Inoue, McClannahan, & Collet, 2014), and evaluation of a signal (Polich, 2007; Sutton, Braren, Zubin, & John, 1965), respectively. When background noise, either competing noise or competing speech, is combined with a speech signal, N1 and P2 peaks recorded from speech sound onset are generally reduced in amplitude and delayed in latency for adults (Billings, Bennett, Molis, & Leek, 2011; Billings, McMillan, Penman, & Gille, 2013; Billings & Grush, 2016; Kaplan-Neeman, Kishon-Rabin, Henkin, & Muchnik, 2006; Parbery-Clark, Marmel, Bair, & Kraus, 2011; Whiting, Martin, & Stapells, 1998; Zendel, Tremblay, Belleville, & Peretz, 2015) and school-age children1 (Almeqbel & McMahon, 2015; Anderson, Chandrasekaran, Yi, & Kraus, 2010; Cunningham, Nicol, Zecker, Bradlow, & Kraus, 2001; Hassaan, 2015; Hayes, Warrier, Nicol, Zecker, & Kraus, 2003; Sharma, Purdy, & Kelly, 2014; Warrier, Johnson, Hayes, Nicol, & Kraus, 2004). Consistent with effects of competing noise on N1 and P2, the P3b peak is generally delayed and reduced in amplitude for adult listeners when competing noise is present (Bennett, Billings, Molis, & Leek, 2012; Koerner, Zhang, Nelson, Wang, & Zou, 2017; Whiting et al., 1998). Far less is known about the effect of competing noise on P3b in child listeners. 
To date, the only study to examine the effect of competing noise on P3b in children reported delayed latencies of the P3b to tone-burst stimuli with competing noise presented in the contralateral ear (Ubiali, Sanfins, Borges, & Colella-Santos, 2016). While these findings were generally consistent with noise-effects on speech-evoked CAEPs measured in adult listeners, there has yet to be a direct examination of the effect of noise on speech-evoked P3b peaks in children.

Importantly, active engagement with the listening task can alter earlier stages of sensory processing as reflected by N1 and P2 (Billings et al., 2011; Hillyard, Hink, Schwent, & Picton, 1973; Picton & Hillyard, 1974; Zendel et al., 2015). For instance, when adult listeners direct attention to the speech sounds, N1 amplitudes are larger and P2 latencies are delayed compared to when they listen passively (Billings et al., 2011; Zendel et al., 2015). These findings suggest that arousal and attentional processes active when listening to speech in noise (Salvi et al., 2002) might compensate for the degraded neural encoding present in earlier processing stages. Only one study has examined the effects of competing noise on speech-evoked N1 and P3b in the same listeners. Whiting and colleagues (1998) used steady-state, broadband noise to examine how amplitude and latency of N1 and P3b speech-evoked CAEPs in adult listeners respond to worsening SNRs. Their results showed little or no reduction in amplitudes of N1 and P3b peaks until the competing noise was the same level or higher than the speech. Unlike amplitude changes, however, latency of the two CAEPs responded differently to worsening SNR. That is, the N1 showed latency delays in conditions as favorable as +20 dB SNR while P3b latencies remained unchanged until the more detrimental +5 dB SNR condition (Whiting et al., 1998). Because P3b is thought to be reflective of top-down processing mechanisms like attention and memory-related operations (see Polich, 2007 for an overview), this resiliency to competing noise suggests that the P3b might be useful in understanding compensatory mechanisms that aid in speech-in-noise perception, as it reflects the behavioral psychometric function of speech discrimination quite well (Whiting et al., 1998).

It is unclear if the resilience of P3b but not N1 in the presence of competing noise is present at an early age. That is, previous studies with children that measure CAEPs elicited with speech embedded in competing noise have only been completed using passive paradigms where children were instructed to ignore the stimuli. Because top-down processing abilities associated with speech-in-noise perception, such as selective attention and working memory, develop throughout childhood (Gomes, Molholm, Christodoulou, Ritter, & Cowan, 2000; Vuontela et al., 2003), it is plausible that adult-like resiliency of the P3b might follow a similar developmental time course. Similarly, it is unknown if effects of noise on CAEPs vary across development, as most previous studies that have evaluated child and adult differences have used age as a categorical variable – averaging data from children across a broad range of development. Because CAEP morphology continues to develop through adolescence (Polich, Ladish, & Burns, 1990; Ponton, Eggermont, Kwong, & Don, 2000), any age-related variation in changes to amplitude or latency in the presence of competing noise might be overlooked when averaging across ages. The only study to directly examine age effects on noise-induced changes to CAEP failed to find an age effect between 8- and 13-year-olds (Anderson et al., 2010); however, that study used a passive paradigm to elicit CAEPs. It is possible that any age-related effects might be related to developing top-down processing abilities and thus, not measurable using a passive listening task.

The purpose of this study was to examine the effect of competing speech noise on N1, P2, and P3b peaks using an active listening task in listeners from 7 to 25 years of age. Our goal was to determine if the effects of competing noise on various stages of speech-sound processing vary as a function of age when listeners are engaged with a speech processing task, and thus, utilizing top-down processing skills. Consistent with previous literature, we expected that the presence of background noise would generally reduce the amplitude and delay the latency of CAEPs. Additionally, due to the maturation of top-down processing of speech-in-noise perception across childhood (Leibold & Buss, 2013; Wightman & Kistler, 2005), we hypothesized that the effects of noise on P3b amplitude and latency might be negatively related with age. This was expected to result in a greater influence of age on noise effects in the P3b than the earlier N1 and P2 peaks, with the youngest listeners showing greater degradation (i.e., reduced amplitude, delayed latency) of the P3b peak in noise than mature adult listeners.

2. Methods

2.1. Participants

Fifty-eight participants (28 females) aged 7 to 25.9 years (M = 16.6, SD = 5.55) were recruited from the university and medical center communities. Each age (years) was represented by three participants, with a fourth participant at age 20 years. The percentage of female participants in each age group was as follows: 7–8 years (50%), 9–10 years (17%), 11–12 years (17%), 13–14 years (33%), 15–16 years (83%), 17–18 years (67%), 19–20 years (43%), 21–22 years (67%), 23–25 years (56%). Eligibility for enrollment in the study was verified by the participant or their parent. Exclusion criteria included any history of neuropsychological concerns, suspected or diagnosed attention deficits, or current use of medications known to affect the central nervous system (e.g., stimulants, antidepressants). Participants had normal hearing as verified by a standard hearing screening at 20 dB HL for octave frequencies from 1000 to 8000 Hz. All participants exhibited average or above-average intelligence as measured by the Kaufman Brief Intelligence Test-II (Kaufman & Kaufman, 2004). Participants were compensated for their participation. Informed consent and assent were obtained according to the procedures required by the Institutional Review Board at Vanderbilt University.

2.2. CAEP stimuli

Naturally-spoken digital samples of the syllables /da/ and /ga/ vocalized by the same female talker (Shannon, Jensvold, Padilla, Robert, & Wang, 1999) were used as the stimuli. Speech syllables were trimmed to 411 ms using a free digital audio editor (Audacity, version 2.0.2; Audacity Team, http://audacity.sourceforge.net/). These consonants were selected because they differ by one important phonetic feature known to be susceptible to competing noise – place of articulation (Miller & Nicely, 1955) and are similarly affected by competing speech noise (Parikh & Loizou, 2005). Because spectral differences between these two consonants are primarily isolated to the steepness of the second and third formant transitions of the consonant burst (Stevens & Blumstein, 1978), discriminating these two syllables in competing speech noise was expected to be difficult enough to require top-down processing – even for listeners with normal hearing.

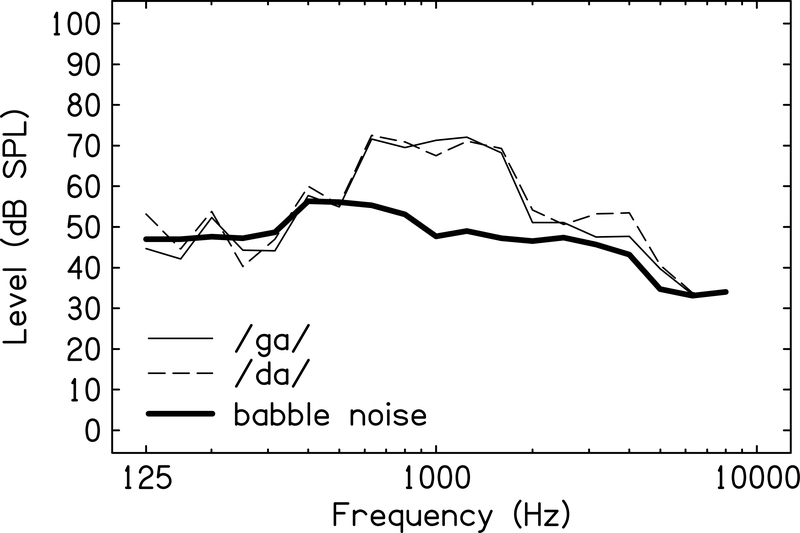

Competing speech noise included three female talkers and one male talker reading aloud (i.e., Four Talker Noise, Auditec, 1971). The noise level was adjusted relative to the average level of the speech syllables to create a +15 dB SNR, the level recommended by the American National Standard for Classroom Acoustics (ANSI, 2010)2. Long-term average intensity of concatenated speech syllable stimuli and the competing speech noise were calibrated using a Larson-Davis sound pressure level meter (Model 824) measured with a 2 cc, artificial ear coupler (AEC202). Left and right ear channels were calibrated separately. Figure 1 shows one-third octave band levels, as measured in the coupler, of the speech tokens and the competing speech noise that were used to elicit CAEPs.

Figure 1. Spectra of Stimuli.

One-third octave band levels for the syllables /ga/ and /da/ and the competing speech noise used as CAEP stimuli. Levels were adjusted to reflect an average overall level for the speech syllables of 75 dB SPL and an overall level of 60 dB SPL for the competing speech noise (+15 dB signal-to-noise ratio).
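The level relationship described above (speech at 75 dB SPL, noise at 60 dB SPL, hence +15 dB SNR) can be illustrated with a minimal sketch. It assumes the SNR is set digitally from the root-mean-square (RMS) levels of the two signals; the study itself calibrated levels acoustically with a sound level meter and coupler, so the function names and toy signals below are hypothetical and serve only to show the arithmetic.

```python
import math

def rms(samples):
    """Root-mean-square level of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def snr_db(speech, noise):
    """Signal-to-noise ratio in dB, based on RMS levels."""
    return 20.0 * math.log10(rms(speech) / rms(noise))

def noise_gain_for_snr(speech, noise, target_snr_db):
    """Linear gain for `noise` so that the speech-to-noise ratio hits the target."""
    return 10.0 ** ((snr_db(speech, noise) - target_snr_db) / 20.0)

# Toy signals (hypothetical, not the study's stimuli)
speech = [math.sin(2 * math.pi * 5 * n / 1000) for n in range(1000)]
noise = [0.5 * math.sin(2 * math.pi * 13 * n / 1000) for n in range(1000)]

gain = noise_gain_for_snr(speech, noise, target_snr_db=15.0)
scaled_noise = [gain * s for s in noise]
achieved_snr = snr_db(speech, scaled_noise)  # approximately 15 dB

# The same relationship expressed in dB SPL, using the levels from the caption
snr_from_levels = 75 - 60
```

Working in dB makes the relationship additive: lowering the noise by the difference between the current and target SNR yields the desired ratio.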

2.3. Procedures

All CAEP testing was conducted in a sound-dampened room in a single visit lasting less than two hours. While sitting quietly in a cushioned chair, the participant completed testing under four conditions: Quiet-Ignore (QI), Noise-Ignore (NI), Quiet-Attend (QA), Noise-Attend (NA). Using a Latin Squares design, each participant was assigned to one of four testing orders: QI-NI-QA-NA, NI-QI-NA-QA, QA-NA-QI-NI, NA-QA-NI-QI. The Quiet conditions consisted of speech syllables presented without competing speech noise while the Noise conditions included competing speech noise at +15 dB SNR. Consistent with previous studies in adults and children (Kaplan-Neeman et al., 2006; Sharma et al., 2014; Zendel et al., 2015), stimuli were presented to each participant binaurally using insert earphones (EARTone 3A). The level of the speech syllables remained constant at 75 dB SPL across Quiet and Noise conditions. Competing speech noise was presented through the earphones continuously throughout each recording session. The testing in each condition lasted 6–9 minutes. The washout interval between periods was about one to three minutes.

2.3.1. Stimuli, Response, and Data Capture

CAEPs were recorded using a 128-channel Geodesic sensor net (EGI, Inc., Eugene, OR) with electrodes embedded in soft electrolytic sponges. Prior to application, the net was soaked in warm saline solution. The electrode impedances were kept at or below 50 kOhms. The use of high-impedance amplifiers allowed for collection of high-quality data without having to abrade the scalp, thus minimizing any discomfort and reducing infection risks. A 250 Hz sampling rate was used, and all electrodes were referenced to electrode Cz during data collection.

The syllables were presented using an oddball paradigm, where the deviant stimulus appeared infrequently among more frequent presentations of the standard stimulus. Consistent with previous research in this area (Bennett et al., 2012; Martin & Stapells, 2005; Whiting et al., 1998), deviant stimuli comprised 20% of the trials. A total of 200 trials were presented within each condition (Quiet and Noise). Assignment of speech syllables to the standard and deviant roles was counterbalanced across participants – that is, half of participants were assigned /da/ as the deviant stimulus while the other half were assigned /ga/. Stimuli were presented and responses were captured using an automated presentation program (E-prime, PST, Inc., Pittsburgh, PA) with an inter-stimulus interval (ISI; offset-to-onset) randomly varying in increments of 1 ms between 1400 and 2400 ms. The relatively slow presentation rate maximized the likelihood of detecting the N1 peak in child participants (Gilley, Sharma, Dorman, & Martin, 2005).
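The trial structure described above can be sketched as follows. This is an illustration only: the study used E-prime, and the text does not state whether any ordering constraints (for example, a minimum number of standards between deviants) were imposed, so the simple shuffle here is an assumption, as are the function name and the seed.

```python
import random

def make_oddball_sequence(n_trials=200, deviant_prop=0.20,
                          isi_range_ms=(1400, 2400), seed=0):
    """Return a list of (stimulus_label, isi_ms) tuples for one condition."""
    rng = random.Random(seed)
    n_deviant = round(n_trials * deviant_prop)
    labels = ["deviant"] * n_deviant + ["standard"] * (n_trials - n_deviant)
    rng.shuffle(labels)  # assumption: unconstrained random order
    # randint is inclusive at both ends, so ISIs vary in 1-ms steps
    return [(label, rng.randint(*isi_range_ms)) for label in labels]

trials = make_oddball_sequence()
n_deviants = sum(1 for label, _ in trials if label == "deviant")
isis = [isi for _, isi in trials]
```

With the reported parameters, each condition contains 40 deviant and 160 standard trials.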

During the Attend tests, participants were instructed to indicate which stimulus (standard or deviant) was presented on each trial by pressing the corresponding button on a response pad. Assignment of stimuli to buttons was counterbalanced across participants. Both accuracy and speed were emphasized. To allow child participants to become familiar with the task, a practice session lasting less than one minute was completed in quiet. All participants demonstrated performance better than 80% correct syllable identification before proceeding to the main task. The Ignore testing was used to measure passive sensory processing of the speech syllable. During the Ignore tests, participants were asked to watch a silent movie and ignore any sounds they heard through the earphones. Test conditions (Attend and Ignore) were counterbalanced across participants to account for fatigue effects. For the purposes of this report, only the data obtained during active listening in the Attend conditions will be discussed.

3. Data Analysis

3.1. Preliminary processing of CAEP data

The EEG data were filtered offline using a 0.1–30 Hz bandpass filter. Segmentation of the CAEP data for each trial was performed automatically using the Net Station 5.3 software (EGI, Inc. Eugene, OR), starting 100 ms prior to each stimulus onset and continuing for 800 ms post-syllable onset. Next, data within each trial were screened for electrodes with poor signal quality and movement artifacts using an automated screening algorithm in Net Station. That is, any electrode with measured voltage >200 μV during a trial was marked as bad. Data for electrodes with poor signal quality within a trial were reconstructed using spherical spline interpolation procedures. Trials were discarded if any of the following criteria were met: (1) more than 10% of the electrodes were deemed bad, (2) voltage >140 μV for electrodes 8, 126, 26, and 127 (interpreted as an eye blink), or (3) voltage >55 μV for electrodes 125 and 128 (considered to reflect eye movements). Automated artifact removal was confirmed by manual verification by an examiner blinded to the participant’s age, session type (Attend vs. Ignore), and recording condition (Quiet vs. Noise). Following artifact screening, individual CAEPs were averaged, re-referenced to an average reference, and baseline-corrected by subtracting the average microvolt value across the 100-ms pre-stimulus interval from the post-stimulus segment.
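The segmentation, trial-rejection, and baseline-correction rules above can be sketched in a few lines. This is not the Net Station algorithm: the sketch assumes each threshold is applied to the maximum absolute voltage per electrode within a trial and that exceeding the threshold on any listed electrode triggers rejection. It also omits filtering, spline interpolation, and re-referencing. All function names are hypothetical.

```python
SAMPLE_RATE_HZ = 250          # sampling rate reported in the text
PRE_MS, POST_MS = 100, 800    # epoch limits reported in the text

def ms_to_samples(ms, rate_hz=SAMPLE_RATE_HZ):
    """Convert a duration in ms to a whole number of samples."""
    return round(ms * rate_hz / 1000)

def baseline_correct(epoch_uv, n_pre):
    """Subtract the mean of the pre-stimulus samples from the whole epoch."""
    baseline = sum(epoch_uv[:n_pre]) / n_pre
    return [v - baseline for v in epoch_uv]

def trial_is_rejected(peak_abs_uv):
    """peak_abs_uv maps electrode number -> max absolute voltage (uV) in a trial."""
    n_bad = sum(1 for v in peak_abs_uv.values() if v > 200.0)
    if n_bad / len(peak_abs_uv) > 0.10:                         # criterion 1
        return True
    if any(peak_abs_uv[ch] > 140.0 for ch in (8, 26, 126, 127)):  # criterion 2
        return True
    if any(peak_abs_uv[ch] > 55.0 for ch in (125, 128)):        # criterion 3
        return True
    return False

n_pre = ms_to_samples(PRE_MS)    # 25 samples
n_post = ms_to_samples(POST_MS)  # 200 samples

# Toy epoch (hypothetical values): flat 2 uV baseline, then 5 uV
corrected = baseline_correct([2.0] * n_pre + [5.0] * n_post, n_pre)

clean_trial = {ch: 10.0 for ch in range(1, 129)}
blink_trial = dict(clean_trial)
blink_trial[8] = 150.0  # exceeds the blink threshold on electrode 8
```

At a 250 Hz sampling rate, each epoch spans 225 samples (25 pre-stimulus and 200 post-stimulus).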

3.2. Derivation of CAEP variables

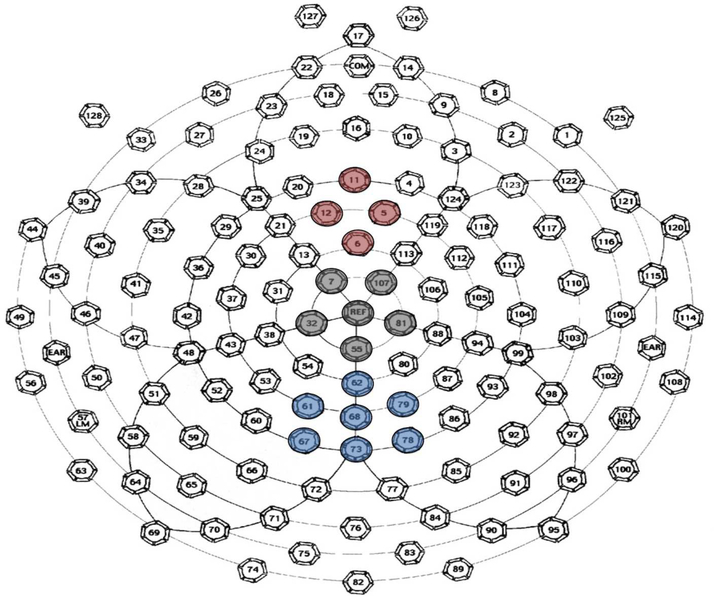

Amplitude and latency values for N1, P2, and P3b peaks were obtained separately for each electrode cluster shown in Figure 2. Voltage was averaged across individual electrodes within each electrode cluster to represent traditional Fz, Cz, and Pz locations (Polich, 2007). These voltages within each cluster were highly correlated across all participants (Fz, r = .780; Cz, r = .755; Pz, r = .856). Latency of peaks for each participant in each condition was defined using pre-specified time windows based on the grand average waveforms in quiet and in noise (N1 Quiet: 68–132 ms, N1 Noise: 100–180 ms; P2 Quiet: 148–240 ms, P2 Noise: 168–280 ms; P3b Quiet: 300–600 ms, P3b Noise: 332–700 ms). Actual time windows used to calculate adaptive mean amplitude were smaller than pre-specified time windows used to locate peak amplitude and were centered around peak latencies specific to each individual’s average waveform (±20 ms relative to the peak latency for N1 and P2; ±52 ms for P3b). Peak latency and adaptive mean amplitude values were automatically calculated using Microsoft Excel for each peak within the two conditions. We chose to use mean amplitude, rather than peak amplitude, because it accommodates variations in trial numbers across participants and provides a stable measure less susceptible to measurement noise (Luck, 2014).
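The adaptive mean amplitude measure described above can be illustrated with a minimal sketch. The study computed these values in Microsoft Excel; the function below is a hypothetical re-expression of the same two steps (locate the peak in the pre-specified window, then average within a narrower window centered on that peak), and the toy waveform is invented for illustration.

```python
def adaptive_mean_amplitude(waveform_uv, times_ms, window_ms,
                            half_width_ms, polarity):
    """Return (peak_latency_ms, mean amplitude within +/- half_width_ms of the peak)."""
    lo, hi = window_ms
    candidates = [i for i, t in enumerate(times_ms) if lo <= t <= hi]
    pick = min if polarity == "negative" else max
    peak_idx = pick(candidates, key=lambda i: waveform_uv[i])
    peak_t = times_ms[peak_idx]
    around = [v for v, t in zip(waveform_uv, times_ms)
              if abs(t - peak_t) <= half_width_ms]
    return peak_t, sum(around) / len(around)

# Toy averaged waveform sampled every 4 ms (250 Hz), -100 to 796 ms:
# a triangular negativity of -5 uV centered at 100 ms
times_ms = list(range(-100, 800, 4))
waveform_uv = [-max(0.0, 5.0 - abs(t - 100) / 8) for t in times_ms]

# N1 in quiet: search 68-132 ms, then average +/- 20 ms around the peak
n1_latency, n1_amplitude = adaptive_mean_amplitude(
    waveform_uv, times_ms, window_ms=(68, 132),
    half_width_ms=20, polarity="negative")
```

Because the averaging window follows each individual's peak latency, the measure tolerates latency differences across participants while remaining less sensitive to single-sample noise than a raw peak value.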

Figure 2. Electrode Map.

Map of the 128-channel Geodesic Sensor Net (EGI, Inc). Colors indicate electrode clusters used for analysis (Fz = red, Cz = grey, Pz = blue).

N1 and P2 peak latencies were defined using the minimum and maximum voltages of the Cz waveform from the standard stimulus, respectively. On average, 98.2 (SD = 23.5) standard artifact-free trials were included per condition for each participant. Peak latency of the P3b was identified using responses to deviant stimuli at Cz and Pz electrode clusters. On average, 26.0 (SD = 5.5) deviant artifact-free trials were included per condition for each participant. Adaptive mean amplitude of the P3b was based on the time window around the peak latency to the deviant stimulus. Because the goal was to measure noise-induced change in the P3b peak, participants were included only if they demonstrated a measurable P3b peak in quiet. Using 0.5 μV as our criterion for minimum detectable amplitude of the P3b peak (Gustafson, Key, Hornsby, & Bess, 2018; Tacikowski & Nowicka, 2010), we measured the magnitude of the P3b in quiet (deviant amplitude minus standard amplitude within the same time window) for each listener. Because previously published studies have measured P3b at Cz or Pz with comparable results (Pearce, Crowell, Tokioka, & Pacheco, 1989; Polich et al., 1990), we used the P3b at the Cz location (if 0.5 μV criterion was met) for participants who showed absent P3b responses at the Pz location (n=6). This present/absent criterion was not applied to data for P3b peaks in noise. All statistical analyses were conducted on the P3b peak measured from the response to deviant stimuli.
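The P3b inclusion rule described above can be sketched as a small decision function. The text does not say whether the 0.5 μV criterion is inclusive, so the `>=` comparison is an assumption, and the function and variable names are hypothetical.

```python
P3B_MIN_UV = 0.5  # minimum detectable P3b magnitude reported in the text

def select_p3b_cluster(amps_uv):
    """amps_uv maps cluster name -> (deviant amplitude, standard amplitude) in quiet.

    Returns "Pz" if the deviant-minus-standard difference meets the criterion
    there, otherwise "Cz" if it meets the criterion there, otherwise None.
    """
    for cluster in ("Pz", "Cz"):
        deviant, standard = amps_uv[cluster]
        if deviant - standard >= P3B_MIN_UV:  # assumption: inclusive threshold
            return cluster
    return None

# Hypothetical listeners
pz_present = select_p3b_cluster({"Pz": (3.0, 1.0), "Cz": (2.0, 1.0)})
cz_fallback = select_p3b_cluster({"Pz": (1.2, 1.0), "Cz": (2.0, 1.0)})
no_p3b = select_p3b_cluster({"Pz": (1.2, 1.0), "Cz": (1.1, 1.0)})
```

Checking Pz first mirrors the order described in the text, with Cz used only when the Pz response is absent.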

A reviewer blinded to participant age visually examined each response to confirm the expected N1-P2 morphology and accuracy of the automatically calculated peak latency and resulting adaptive mean amplitude of the N1, P2, and P3b responses. Five participants (one seven-, two eight-, and two nine-year-olds) were excluded from all visual representation and statistical analyses due to immature waveform morphology (i.e., P1-N250 instead of N1-P2 waveforms). Manual confirmation used the following criteria when evaluating if automatically selected peak latencies corresponded to the specific peak of interest: (1) N1 peaks were represented as the maxima of the first negative-going deflection after the start of the prespecified N1 time windows, (2) P2 peak latencies reflected the first positive peak following the N1 response, and (3) P3b peaks were characterized by the first peak maximum following the P2 response. This manual confirmation led to peak latency changes for 21 waveforms (6.73%)2. N1 data from the noise condition were removed from statistical analyses for one additional participant (age 14 years) because no clear N1 peak was present. Data in the noise condition for two additional participants (9- and 16-years-old) were excluded from the P3b data set due to chance-level behavioral performance on the speech discrimination task. The final sample for N1 and P2 peaks included 53 participants in quiet, with 52 and 53 participants showing N1 and P2 peaks in noise, respectively. The final sample for P3b adaptive mean amplitude included 53 participants in quiet and 51 participants in noise.

3.3. Statistical Analyses

3.3.1. Behavioral responses

To verify that the participants were actively engaged in the speech discrimination task during the CAEP test, median reaction time (ms) of response selection and accuracy (percent correct) of the deviant stimulus detection were examined. Separate repeated-measures analyses of covariance models for accuracy and response time were used to estimate the effects of stimulus (Standard vs. Deviant) and condition (Quiet vs. Noise) accounting for age as a covariate. Graphical figures and Pearson correlation coefficients were used to characterize the relationships among reaction time, accuracy, and participant age.

3.3.2. CAEP Responses

We analyzed amplitude and latency of each CAEP with a linear mixed-effects model using PROC MIXED and customized SAS code (Version 9.4, SAS Institute, Cary, NC). Linear mixed-effects models include both fixed and random effects to account for individual differences and correlation of repeated measures. For each CAEP, the model’s fixed effects represented mean response as a curvilinear function of the participant’s age and the experimental condition (Quiet vs. Noise). Participant age was centered at 17 years to facilitate interpretation of the model’s intercept coefficient. Because the effects of age on CAEPs are curvilinear (Polich et al., 1990), we included linear, quadratic, and cubic terms for age in each model. Under the assumption that the shape and location of the response curve may depend on the experimental condition, interaction terms (age × condition, age² × condition, age³ × condition) were included as fixed effects. Period effects (which may represent systematic effects of fatigue or practice) were also included in the model. In summary, the model included a single individual-specific random effect and 10 fixed effects (degrees of freedom): Intercept (1), Condition (1), Age (3), Condition × Age (3), period effects (2). We calculated the condition number of the matrix of observed values for these 10 variables to assess if multicollinearity was an issue for our data (Belsley, Kuh, & Welsch, 2005). A high condition number (e.g., >20) would indicate that two or more variables in our models are highly related (Dudek, 2005). The matrix for these data resulted in a condition number of 4.53 suggesting that multicollinearity was not a problem. Goodness of fit of each model was evaluated in terms of empirical distributions of residuals (total residuals, within-subject residuals, between-subject residuals) and the correlation between predicted and observed values.
The choice of assumptions about the variance-covariance structure was not in question because only paired values were available for each dependent variable. The linear mixed-effects model was fit separately for each amplitude and latency of each peak (N1, P2, P3b). The parameter estimates (two variance components and 10 regression coefficients) were used to compute graphical representations of the mean response curves for Quiet, Noise, and Quiet minus Noise, along with 95% confidence bands, estimates of the area between the two curves, and tests of two a priori null hypotheses (i.e., that there is no effect of competing noise on amplitude or latency, and that any effect of noise is the same across age). Parameter estimate tables can be found in Appendix A.
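The fixed-effects structure described above can be made concrete with a minimal sketch of one design-matrix row. This does not reproduce the SAS analysis: it only shows how the 10 fixed-effect columns could be laid out. The coding of the two period columns is not specified in the text, so the three-level `period` argument and its indicator coding are hypothetical placeholders.

```python
AGE_CENTER_YEARS = 17.0  # centering value reported in the text

def fixed_effect_row(age_years, noise, period):
    """Build one row of fixed-effect predictors.

    noise:  0 for Quiet, 1 for Noise
    period: 0, 1, or 2 (hypothetical coding giving two indicator columns)
    """
    a = age_years - AGE_CENTER_YEARS
    age_terms = [a, a ** 2, a ** 3]                    # Age (3 df)
    interactions = [noise * term for term in age_terms]  # Condition x Age (3 df)
    period_terms = [1.0 if period == k else 0.0 for k in (1, 2)]  # period (2 df)
    return [1.0, float(noise)] + age_terms + interactions + period_terms

reference_row = fixed_effect_row(17.0, noise=0, period=0)
child_noise_row = fixed_effect_row(7.0, noise=1, period=1)
```

Centering age at 17 years means the intercept corresponds to the predicted response of a 17-year-old in quiet at the reference period, since every other column is zero for that row.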

4. Results

4.1. Speech-Sound Behavioral Discrimination

Mean data for the speech-sound discrimination performance are shown in Table 1. Age was correlated with accuracy (0.31 ≤ r ≤ 0.47) and response time (−0.64 ≤ r ≤ −0.57), showing that children performed less accurately and responded more slowly than adults in each condition and for each stimulus type (p-values: 0.027 to <0.001). Therefore, age was included as a covariate in further analyses.

Table 1.

Sample mean and standard deviations for accuracy and response time data in the behavioral speech-sound discrimination task.

| Condition | Stimulus | Accuracy (%), Mean | Accuracy (%), SD | Response Time (ms), Mean | Response Time (ms), SD |
|---|---|---|---|---|---|
| Quiet | Standard | 98.96 | 0.99 | 436.76 | 98.43 |
| Quiet | Deviant | 93.78 | 5.64 | 511.08 | 113.35 |
| Noise | Standard | 98.59 | 1.42 | 489.51 | 111.04 |
| Noise | Deviant | 92.55 | 6.51 | 557.42 | 106.25 |

Note that mean accuracy was excellent across all conditions when collapsed across age. In the model for mean accuracy, there was a main effect of Stimulus, F(1,49) = 22.489, p < .001, ηp² = .315, but not of Condition, p = .301. Specifically, syllable identification for standard stimuli was more accurate than for deviant stimuli. The Stimulus × Age interaction was significant, F(1,49) = 5.060, p = .029, ηp² = .094. That is, the differences in accuracy between Stimuli (deviant < standard) were greatest for the youngest listeners and smallest for the oldest listeners. No other interactions were statistically significant (all p > .05).

The model for median response time indicated that responses were faster in the quiet compared to the noise condition, F(1,49) = 5.381, p = .025, ηp² = .099, and that syllable identification for standard stimuli was quicker than for deviant stimuli, F(1,49) = 22.714, p < .001, ηp² = .315. There were no statistically significant interactions (.086 ≤ p ≤ .546).

4.2. Effect of Noise on CAEPs

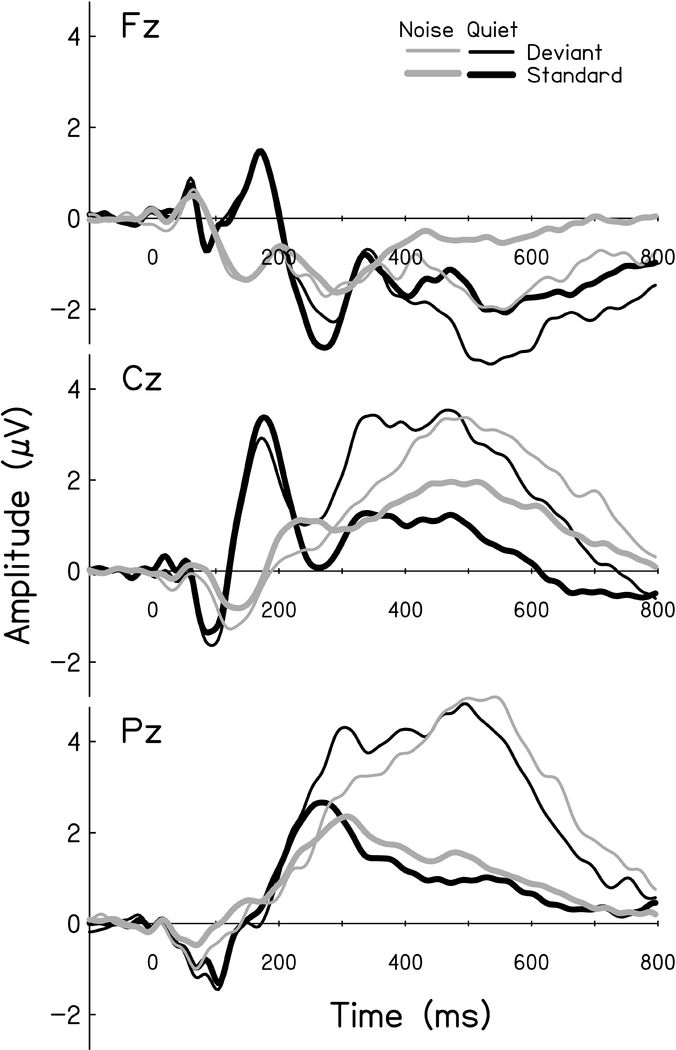

Grand average waveforms (Quiet, n=53; Noise, n=51) to standard and deviant stimuli at each electrode cluster for quiet and noise are shown in Figure 3. Listeners with chance-level performance in noise were excluded from the Noise waveform. As expected, the grand average N1 and P2 peaks at the Cz electrode cluster appear diminished in amplitude and delayed in latency in noise as compared to the quiet condition. Although present at both Cz and Pz electrode clusters, P3b peaks appear maximal at the expected Pz location in quiet and noise conditions.

Figure 3. Grand Average Waveforms.

Grand average waveforms (Quiet, n = 53; Noise, n=51) for responses to standard (thick lines) and deviant (thin lines) stimuli at the three electrode clusters. Responses obtained in Noise (gray) show reduced amplitude and increased latency when compared to those obtained in Quiet (black).

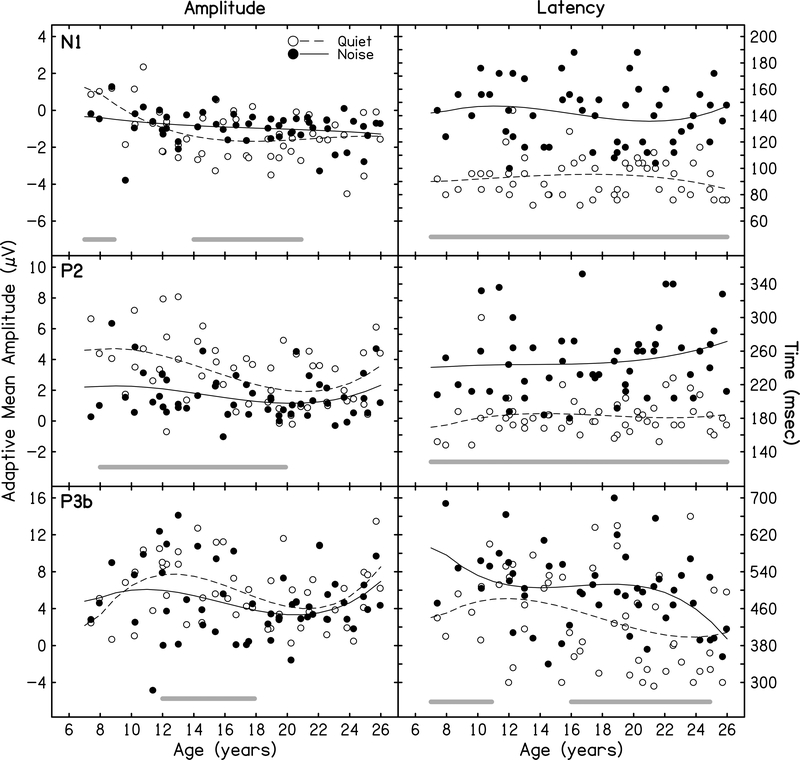

Recall that we expected competing speech noise to reduce amplitude and delay latency of CAEPs. As expected, model analyses confirmed differences between quiet and noise conditions for N1 amplitude (p = 0.015), N1 latency (p < .001), P2 amplitude (p < .001), P2 latency (p < .001), and P3b latency (p < 0.001). Specifically, N1 and P2 amplitudes were smaller and N1, P2, and P3b latencies were delayed in the noise compared to the quiet condition. No significant difference between quiet and noise conditions was found for P3b amplitude (p = 0.082). This pattern of results is shown in Figure 4, which illustrates individual data, model estimates of the mean response curves, and age-ranges of statistically significant differences between modeled data in quiet and in noise as a function of age. Differences are based on 95% confidence intervals of the quiet-minus-noise model.

Figure 4. CAEP Data in Quiet and Noise.

Adaptive mean amplitude (left panels) and peak latency (right panels) for N1, P2, and P3b in quiet (open circles) and noise (filled circles) conditions as a function of age. Model estimates for quiet (dashed lines) and noise (solid lines) are overlaid on these raw data. Shaded lines below raw data show regions of statistically significant differences between quiet and noise. With the exception of N1 amplitude, general amplitude reductions and latency delays can be seen across ages.

4.3. Variation of Effects of Noise across Age

Model analyses examining whether the effects of noise varied across age showed that only N1 amplitude exhibited a noise-induced change that depended on age (p = 0.029). Visualization of this age-by-noise interaction using Figure 4 (top, left panel) shows that, unlike older children and adults, children ages 7–8 years showed more negative N1 amplitudes in noise than in the quiet condition. The effect of noise was not different across age for N1 latency (p = 0.411), P2 amplitude (p = 0.233), P2 latency (p = 0.560), P3b amplitude (p = 0.304), or P3b latency (p = 0.148).

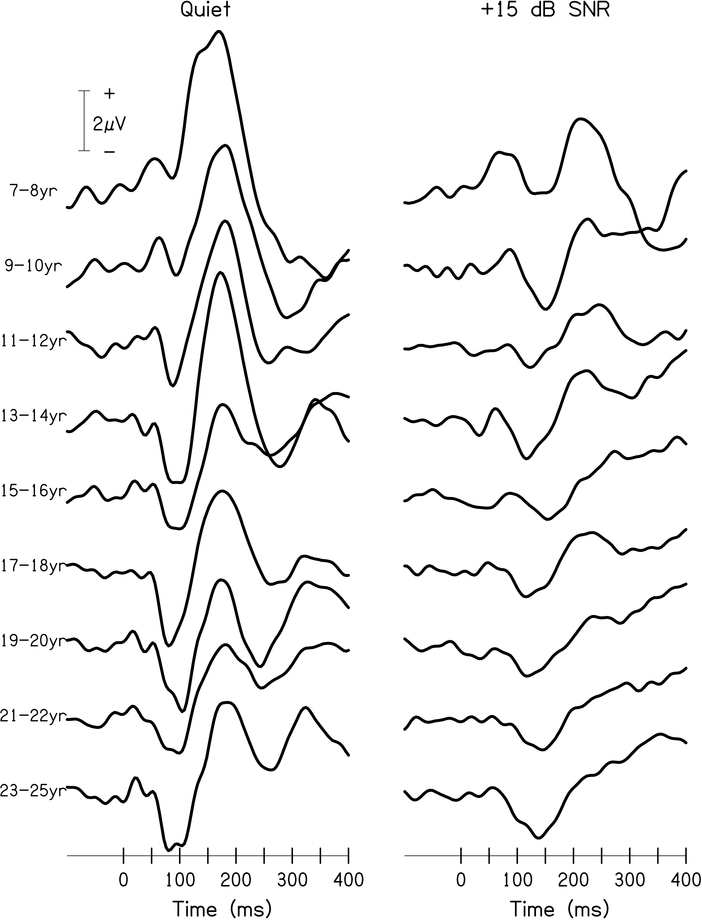

4.4. Exploratory Ad-Hoc Analysis

We did not anticipate the increase in N1 amplitude in noise for 7- to 8-year-old children. Further examination of the data suggests that this effect could be due to variations in morphology for the youngest listeners. For instance, the raw data presented in Figure 4 shows that the peak N1 amplitude for some 7- to 10-year-old listeners is positive. Figure 5 shows average waveforms to the standard stimuli at the Cz electrode cluster in quiet and in noise by two- or three-year age increments. It appears that the morphology might not be fully matured for some of the youngest listeners in quiet (e.g., bifurcation of a single, large peak rather than a true negativity prior to P2 [Ponton et al., 2000]). Rather than the baseline-to-peak measurement approach used here, measurement of peak-to-peak amplitude (i.e., N1-P2) might prove more sensitive to noise effects when examining responses with incomplete maturation of morphology. Therefore, model analyses were also conducted on the N1-P2 peak-to-peak amplitude to determine if the effect of age on noise-induced change in the N1 amplitude persists with a measurement method that might account for noise-related changes to other portions of the CAEP response with the potential to influence the N1 amplitude (e.g., P2). Peak amplitudes, rather than adaptive mean amplitudes, of the N1 and P2 peaks were used to derive this variable. Only participants with measurable N1 and P2 peaks were included in the N1-P2 peak-to-peak amplitude data set; therefore, the final sample included 53 participants in quiet and 52 participants in noise. Consistent with other CAEP parameters, N1-P2 peak-to-peak amplitudes were reduced in noise compared to quiet (p<0.001). Contrary to the model conducted on adaptive mean amplitude of the N1 peak, there was no age-related difference in the effect of noise on N1-P2 peak-to-peak amplitude (p=0.694).

Figure 5. CAEP waveforms by Age.

Average waveforms for responses to standard stimuli at the Cz electrode cluster in quiet (left panel) and in competing speech noise at +15 dB SNR (right panel), separated by age. Morphology of the N1-P2 response from 7- to 10-year-old listeners shows incomplete maturation but appears relatively mature by 11–12 years of age.

5. Discussion

The purpose of this study was to examine the effect of competing speech noise on speech-evoked CAEPs from listeners ages 7 to 25 years. In particular, we were interested in assessing whether the detrimental effect of speech-on-speech masking depends on age at various stages of speech-sound processing. We used N1, P2, and P3b peaks to evaluate processing from sensory representation to voluntary evaluation of speech sounds embedded in speech noise. Based on prior studies, we anticipated that these three CAEPs would show detrimental effects of competing speech noise. Furthermore, we hypothesized that listeners of all ages would show similar effects of noise for the N1 response but that effects of noise on P3b latency would be inversely related to age due to prolonged maturation of top-down processing. Results showed that +15 dB SNR of competing speech noise causes reductions in N1 and P2 amplitudes and delays in N1, P2, and P3b latencies. Contrary to our prediction, 7- to 25-year-old listeners were not differentially affected by +15 dB SNR at any stage of speech processing measured using CAEPs.

In this study, the presence of +15 dB SNR competing speech noise caused small but statistically significant reductions in speech discrimination performance and increases in response time (e.g., 0.37–1.23% reduction in accuracy, 40–50 ms increase in response time). The associated findings of reduced amplitude and delayed latencies of CAEPs are consistent with previous work (Billings et al., 2013; Katayama & Polich, 1998; Picton, 1992; Whiting et al., 1998; Zendel et al., 2015). Specifically, reduced amplitude of the N1 and P2 peaks suggests that competing speech noise reduces the robust encoding of the gross auditory signal (combination of competing speech and target speech information) in the auditory cortex. Furthermore, shifts in P3b latency suggest a delay in the stimulus evaluation process. Overall, this reduction and delay of synchronized neural activity from sensory representation to stimulus evaluation might suggest that even a relatively modest amount of competing noise (+15 dB SNR) had detrimental effects on multiple stages of neural processing across childhood into adulthood. Alternatively, these noise-induced changes may also reflect compensatory mechanisms within the auditory system to minimize the introduction of potentially irrelevant information (i.e., competing noise). This compensation hypothesis was posed by Anderson and colleagues (2010), who showed that speech-in-noise perception was better in children who showed stable N1 peak amplitude between quiet and noise conditions compared to children who showed an increase in N1 peak amplitude with competing noise. Adult data, however, are inconsistent with this hypothesis, as better speech-in-noise perception has been found in listeners who show more robust N1 amplitude in noise than those with smaller N1 amplitudes in noise (Bidelman & Howell, 2016; Parbery-Clark et al., 2011). Further research is needed to explore how noise-induced changes to speech-evoked CAEPs relate to behavioral speech-in-noise performance in children.

P3b amplitudes were the only outcome not significantly different between the quiet and noise conditions. The lack of evidence of a reduction in P3b amplitude is consistent with results from Whiting and colleagues (1998), which showed that P3b amplitude remains robust and unchanged until more detrimental SNRs (e.g., 0 dB SNR). Their work also demonstrated that significant changes in P3b amplitude overlap with large degradations in behavioral performance on the speech discrimination task for adult listeners. Current results extend this finding to children, showing little change in P3b amplitude when behavioral performance is >90% correct (Table 1).

Recall that Whiting and colleagues (1998) showed greater effects of competing noise on N1 compared to P3b in adult listeners in positive SNR conditions. Based on the immature top-down processing of speech in children (Leibold & Buss, 2013; Wightman & Kistler, 2005), we expected that children might not demonstrate adult-like resiliency of the P3b in the presence of competing speech noise. Contrary to our prediction, effects of competing noise on P3b were not different across ages. This finding might suggest that compensatory processes engaged in active speech discrimination are mature by seven years of age. Alternatively, it could be argued that the +15 dB SNR competing speech condition was not challenging enough to cause significant age-related effects on top-down processes involved in speech sound discrimination. P3b amplitudes in adults are related to the level of difficulty of the task or degree of confidence in the response (Katayama & Polich, 1998; Zakrzewski, Wisniewski, Iyer, & Simpson, 2018); however, these relationships between P3b amplitude and behavioral performance have yet to be evaluated in children. Further research is warranted to investigate whether age-related effects of competing noise at various stages of speech sound processing can be detected in more detrimental listening conditions.

Although participants reported normal hearing and parents of younger participants reported no current ear infections or recent illnesses, we cannot confirm that participants had normal hearing thresholds below 1000 Hz or healthy middle ear function on the day of testing. Undetected middle ear dysfunction or low-frequency hearing loss could have affected CAEP responses (Hyde, 1997) or speech discrimination performance (Keogh, Kei, Driscoll, & Khan, 2010). Although it is unknown what effect middle ear dysfunction or low-frequency hearing loss has on speech-elicited CAEPs, it is reasonable to assume that any effect on speech discrimination was minimal (e.g., excellent performance shown in Table 1).

Initial evaluation of N1 adaptive peak amplitude suggested there was an age-related effect of competing noise on the N1 amplitude, with younger children showing larger (i.e., more negative) N1 amplitudes in noise than in quiet while older children and adults showed the opposite. Further analysis of the CAEP response using N1-P2 peak-to-peak amplitude suggests that the age-related effect of noise on N1 amplitude was potentially a byproduct of the incomplete maturation of morphology in quiet for children 7–10 years of age. The morphology in our youngest listeners is consistent with previous studies and is thought to reflect incomplete myelination and prolonged neuronal refractory periods (Gilley et al., 2005; Surwillo, 1981). Interestingly, these effects of immature central auditory processing in young listeners were evident in the quiet condition, but responses in noise appear to show N1 responses similar to those of adult listeners (see Figure 5). We speculate that the appearance of mature morphology in the noise condition might be attributed to the amplitude reduction of P2 measured in the presence of competing noise. That is, because the recorded CAEP reflects the summed activity of multiple components (Luck, 2014), the reduced positivity of the P2 in noise might have allowed the summed activity to better reflect the N1 negative deflection in the competing noise condition when compared to the quiet condition. When using alternative CAEP measures (i.e., N1-P2 peak-to-peak amplitude measure), +15 dB SNR of competing speech noise caused an overall reduction in CAEP amplitude that was not different across age. It is unclear which measurement technique, adaptive peak amplitude or peak-to-peak amplitude, is more reflective of early sensory encoding of speech in noise for child listeners. If we are to use the N1 and P2 peaks to understand processes underlying the difficulty children experience with speech in noise, further research is needed to determine whether peak amplitude and peak-to-peak amplitude show similar or different relationships with behavioral speech-in-noise performance.
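The component-summation argument can be illustrated with a toy simulation. The sketch below, which is not an analysis of the study data, models the recorded waveform as the sum of a negative N1-like Gaussian and an overlapping positive P2-like Gaussian. All latencies, widths, gains, and the N1 search window are arbitrary assumptions chosen only for illustration.

```python
import numpy as np


def gaussian(t, center_ms, width_ms):
    """Unit-height Gaussian used as a stand-in for one ERP component."""
    return np.exp(-0.5 * ((t - center_ms) / width_ms) ** 2)


def n1_trough(p2_gain_uv, n1_gain_uv=2.0):
    """Most negative value of the summed waveform in a hypothetical N1 window.

    The underlying N1 component is identical on every call; only the
    overlapping P2 component is scaled.
    """
    t = np.arange(0.0, 400.0, 1.0)                      # time in ms
    n1_component = -n1_gain_uv * gaussian(t, 110.0, 25.0)
    p2_component = p2_gain_uv * gaussian(t, 180.0, 50.0)
    recorded = n1_component + p2_component               # summed activity
    window = (t >= 80.0) & (t < 160.0)                   # assumed N1 window
    return float(recorded[window].min())


trough_large_p2 = n1_trough(p2_gain_uv=6.0)  # larger P2, as assumed for quiet
trough_small_p2 = n1_trough(p2_gain_uv=3.0)  # reduced P2, as assumed for noise
print(trough_large_p2, trough_small_p2)
```

Under these assumptions, the measured N1 trough is more negative when the P2 component is smaller, even though the N1 component itself has not changed. This is one way a baseline-to-peak N1 measure could appear larger in noise without any change in N1 generation.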

6. Summary

This study assessed the effect of noise on active speech-sound discrimination at various processing stages in listeners 7–25 years of age by measuring CAEPs to speech syllables in quiet and in +15 dB SNR of competing speech noise. Consistent with previous work, results showed that +15 dB SNR reduces amplitudes and delays latencies in CAEPs for children and adults, affecting early stages of speech-sound processing, delaying stimulus evaluation, and causing a reduction in behavioral speech-sound discrimination. Contrary to expectations, our findings suggest that children and adults may exhibit similar effects of +15 dB SNR competing speech noise at various stages of speech-sound discrimination. Further research is needed to explore whether more difficult listening conditions yield significant age-related effects of competing speech noise on various stages of speech processing.

Supplementary Material

Highlights:

An active auditory oddball paradigm elicited N1, P2, and P3b in 7–25 year-olds

Competing speech noise reduced amplitude and delayed latency of CAEPs

Effects of competing speech noise compared to quiet were not different across age

Acknowledgements

This work was supported by the National Institutes of Health (grant numbers UL1TR000445, U54HD083211, and UL1TR002489), the Council of Academic Programs in Communication Sciences and Disorders, and the Dan and Margaret Maddox Charitable Trust. Support for this work was also provided by Dorita Jones, Erna Hrstic, and Javier Santos, who assisted with data collection; by Dr. Julie Taylor, who provided statistical consultation during the study planning process; and by Dr. Paul W. Stewart, who provided statistical consultation, statistical computations, analysis, and manuscript text and edits.

Abbreviations:

- CAEP: cortical auditory evoked potential

- SNR: signal-to-noise ratio

- ISI: inter-stimulus interval

Footnotes

Declarations of interest: none

For the purposes of this study, we have identified peaks in previous research by examining the reported waveforms and labeling the first and second positive deflections as P1/P2, respectively, and the first negative deflection as N1.

Pilot data suggested that +10 dB SNR would yield minimal or absent N1-P2 responses in 7- to 10-year-old children. To ensure that present responses in noise were obtained across the age range, we chose to use a more favorable SNR that is representative of classroom acoustic recommendations.

Data requiring manual changes to peak latency values were dispersed across the age range (e.g., age range for changes spanned from 12–20 years, 10–25 years, and 10–23 years for N1, P2, and P3b, respectively) suggesting that prespecified windows were generally appropriate for automatic peak selection.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Almeqbel A, & McMahon C (2015). Objective measurement of high-level auditory cortical function in children. International Journal of Pediatric Otorhinolaryngology, 79(7), 1055–1062.

- Anderson S, Chandrasekaran B, Yi HG, & Kraus N (2010). Cortical-evoked potentials reflect speech-in-noise perception in children. European Journal of Neuroscience, 32(8), 1407–1413. 10.1111/j.1460-9568.2010.07409.x

- ANSI. (2010). Acoustical Performance Criteria, Design Requirements, and Guidelines for Schools, Part 1: Permanent Schools. ANSI/ASA S12.60–2010, 1–28.

- Auditec. Four-talker babble (1971). St. Louis, MO.

- Belsley DA, Kuh E, & Welsch RE (2005). Regression diagnostics: Identifying influential data and sources of collinearity (Vol. 571). John Wiley & Sons.

- Bennett KO, Billings CJ, Molis MR, & Leek MR (2012). Neural encoding and perception of speech signals in informational masking. Ear and Hearing, 32(2), 231.

- Bidelman GM, & Howell M (2016). Functional changes in inter- and intra-hemispheric cortical processing underlying degraded speech perception. NeuroImage, 124, 581–590. 10.1016/j.neuroimage.2015.09.020

- Billings CJ, Bennett KO, Molis MR, & Leek MR (2011). Cortical encoding of signals in noise: effects of stimulus type and recording paradigm. Ear and Hearing, 32(1), 53.

- Billings CJ, & Grush LD (2016). Signal type and signal-to-noise ratio interact to affect cortical auditory evoked potentials. The Journal of the Acoustical Society of America, 140(2), EL221–EL226.

- Billings CJ, McMillan GP, Penman TM, & Gille SM (2013). Predicting perception in noise using cortical auditory evoked potentials. JARO - Journal of the Association for Research in Otolaryngology, 14(6), 891–903.

- Bradley JS, & Sato H (2008). The intelligibility of speech in elementary school classrooms. The Journal of the Acoustical Society of America, 123(4), 2078–2086.

- Corbin NE, Bonino AY, Buss E, & Leibold LJ (2016). Development of open-set word recognition in children: Speech-shaped noise and two-talker speech maskers. Ear and Hearing, 37(1), 55–63.

- Crowley KE, & Colrain IM (2004). A review of the evidence for P2 being an independent component process: age, sleep and modality. Clinical Neurophysiology, 115(4), 732–744.

- Cunningham J, Nicol T, Zecker SG, Bradlow A, & Kraus N (2001). Neurobiologic responses to speech in noise in children with learning problems: Deficits and strategies for improvement. Clinical Neurophysiology, 112(5), 758–767.

- Dudek H (2005). The Relationship between a Condition Number and Coefficients of Variation. Przegląd Statystyczny, 1(52), 75–85.

- Elliott LL (1979). Performance of children aged 9 to 17 years on a test of speech intelligibility in noise using sentence material with controlled word predictability. The Journal of the Acoustical Society of America, 66(3), 651–653.

- Etymotic Research. (2005). Bamford-Kowal-Bench Speech in Noise Test (Version 1.03).

- French NR, & Steinberg JC (1947). Factors Governing the Intelligibility of Speech Sounds. The Journal of the Acoustical Society of America, 19(1), 90–119. 10.1121/1.1916407

- Freyman RL, Balakrishnan U, & Helfer KS (2001). Spatial release from informational masking in speech recognition. The Journal of the Acoustical Society of America, 109(5), 2112–2122.

- Gilley PM, Sharma A, Dorman M, & Martin K (2005). Developmental changes in refractoriness of the cortical auditory evoked potential. Clinical Neurophysiology, 116(3), 648–657.

- Gomes H, Molholm S, Christodoulou C, Ritter W, & Cowan N (2000). The Development of Auditory Attention in Children. Frontiers in Bioscience, 5, 108–120. 10.1093/toxsci/kfs057

- Gustafson SJ, Key AP, Hornsby BW, & Bess FH (2018). Fatigue Related to Speech Processing in Children With Hearing Loss: Behavioral, Subjective, and Electrophysiological Measures. Journal of Speech, Language, and Hearing Research, 61(4), 1000–1011.

- Hall JW, Grose JH, Buss E, & Dev MB (2002). Spondee recognition in a two-talker masker and a speech-shaped noise masker in adults and children. Ear and Hearing, 23(2), 159–165.

- Hassaan MR (2015). Auditory evoked cortical potentials with competing noise in children with auditory figure ground deficit. Hearing, Balance and Communication, 13(1), 15–23.

- Hayes EA, Warrier CM, Nicol TG, Zecker SG, & Kraus N (2003). Neural plasticity following auditory training in children with learning problems. Clinical Neurophysiology, 114(4), 673–684.

- Hillyard S, Hink R, Schwent V, & Picton T (1973). Electrical signs of selective attention in the human brain. Science, 182, 177–182.

- Howard CS, Munro KJ, & Plack CJ (2010). Listening effort at signal-to-noise ratios that are typical of the school classroom. International Journal of Audiology, 49(12), 928–932.

- Hyde M (1997). The N1 response and its applications. Audiology and Neurotology, 2(5), 281–307.

- Johnson CE (2000). Children’s Phoneme Identification in Reverberation and Noise. Journal of Speech, Language, and Hearing Research, 43(February), 144–157.

- Kaplan-Neeman R, Kishon-Rabin L, Henkin Y, & Muchnik C (2006). Identification of syllables in noise: Electrophysiological and behavioral correlates. The Journal of the Acoustical Society of America, 120(2), 926–933.

- Katayama J, & Polich J (1998). Stimulus context determines P3a and P3b. Psychophysiology, 35(1), 23–33.

- Kaufman AS, & Kaufman NL (2004). Kaufman brief intelligence test (2nd ed.). Circle Pines, MN: American Guidance Services.

- Keogh T, Kei J, Driscoll C, & Khan A (2010). Children with minimal conductive hearing impairment: Speech comprehension in noise. Audiology and Neurotology, 15(1), 27–35.

- Kidd G, Mason CR, Deliwala PS, Woods WS, & Colburn HS (1994). Reducing informational masking by sound segregation. The Journal of the Acoustical Society of America, 95(6), 3475–3480. 10.1121/1.410023

- Koerner TK, Zhang Y, Nelson PB, Wang B, & Zou H (2017). Neural indices of phonemic discrimination and sentence-level speech intelligibility in quiet and noise: A P3 study. Hearing Research, 350(April), 58–67. 10.1016/j.heares.2017.04.009

- Leibold LJ (2017). Speech Perception in Complex Acoustic Environments: Developmental Effects. Journal of Speech Language and Hearing Research, 60(10), 3001. 10.1044/2017_JSLHR-H-17-0070

- Leibold LJ, & Buss E (2013). Children’s identification of consonants in a speech-shaped noise or a two-talker masker. Journal of Speech, Language, and Hearing Research, 56(4), 1144–1155.

- Luck SJ (2014). An introduction to the event-related potential technique. MIT press.

- Martin BA, & Stapells DR (2005). Effects of low-pass noise masking on auditory event-related potentials to speech. Ear and Hearing, 26(2), 195–213.

- Miller GA, & Nicely PE (1955). An analysis of perceptual confusions among some English consonants. The Journal of the Acoustical Society of America, 27(2), 338–352.

- Näätänen R, Kujala T, & Winkler I (2011). Auditory processing that leads to conscious perception: A unique window to central auditory processing opened by the mismatch negativity and related responses. Psychophysiology, 48(1), 4–22. 10.1111/j.1469-8986.2010.01114.x

- Näätänen R, & Picton T (1987). The N1 Wave of the Human Electric and Magnetic Response to Sound: A Review and an Analysis of the Component Structure. Psychophysiology, 24(4), 375–425. 10.1111/j.1469-8986.1987.tb00311.x

- Neuman AC, Wroblewski M, Hajicek J, & Rubinstein A (2010). Combined effects of noise and reverberation on speech recognition performance of normal-hearing children and adults. Ear and Hearing, 31(3), 336–344.

- Parbery-Clark A, Marmel F, Bair J, & Kraus N (2011). What subcortical-cortical relationships tell us about processing speech in noise. European Journal of Neuroscience, 33(3), 549–557.

- Parikh G, & Loizou PC (2005). The influence of noise on vowel and consonant cues. The Journal of the Acoustical Society of America, 118(6), 3874–3888.

- Pearce JW, Crowell DH, Tokioka A, & Pacheco GP (1989). Childhood developmental changes in the auditory P300. Journal of Child Neurology, 4(2), 100–106.

- Picton TW (1992). The P300 wave of the human event-related potential. Journal of Clinical Neurophysiology, 9, 456–456.

- Picton TW, & Hillyard SA (1974). Human auditory evoked potentials. II: Effects of attention. Electroencephalography and Clinical Neurophysiology, 36, 191–200.

- Polich J (2007). Updating P300: An integrative theory of P3a and P3b. Clinical Neurophysiology, 118(10), 2128–2148.

- Polich J, Ladish C, & Burns T (1990). Normal variation of P300 in children: Age, memory span, and head size. International Journal of Psychophysiology, 9, 237–248.

- Ponton CW, Eggermont JJ, Kwong B, & Don M (2000). Maturation of human central auditory system activity: evidence from multi-channel evoked potentials. Clinical Neurophysiology, 111(2), 220–236.

- Rashid M, Leensen MJ, & Dreschler W (2016). Application of the online hearing screening test “Earcheck”: Speech intelligibility in noise in teenagers and young adults. Noise and Health, 18(85), 312. 10.4103/1463-1741.195807

- Salvi RJ, Lockwood AH, Frisina RD, Coad ML, Wack DS, & Frisina DR (2002). PET imaging of the normal human auditory system: responses to speech in quiet and in background noise. Hearing Research, 170(1–2), 96–106.

- Shannon RV, Jensvold A, Padilla M, Robert ME, & Wang X (1999). Consonant recordings for speech testing. The Journal of the Acoustical Society of America, 106, L71–L74.

- Sharma M, Purdy SC, & Kelly AS (2014). The contribution of speech-evoked cortical auditory evoked potentials to the diagnosis and measurement of intervention outcomes in children with auditory processing disorder. Seminars in Hearing, 35(1), 51–64.

- Stevens KN, & Blumstein SE (1978). Invariant cues for place of articulation in stop consonants. The Journal of the Acoustical Society of America, 64(5), 1358.

- Surwillo WW (1981). Recovery of the cortical evoked potential from auditory stimulation in children and adults. Developmental Psychobiology, 14(1), 1–12.

- Sutton S, Braren M, Zubin J, & John ER (1965). Evoked-potential correlates of stimulus uncertainty. Science, 150(3700), 1187–1188.

- Tacikowski P, & Nowicka A (2010). Allocation of attention to self-name and self-face: An ERP study. Biological Psychology, 84(2), 318–324.

- Talarico M, Abdilla G, Aliferis M, Balazic I, Giaprakis I, Stefanakis T, Paolini AG (2006). Effect of age and cognition on childhood speech in noise perception abilities. Audiology and Neurotology, 12(1), 13–19.

- Tremblay KL, Ross B, Inoue K, McClannahan K, & Collet G (2014). Is the auditory evoked P2 response a biomarker of learning? Frontiers in Systems Neuroscience, 8, 1–13.

- Ubiali T, Sanfins MD, Borges LR, & Colella-Santos MF (2016). Contralateral noise stimulation delays P300 latency in school-aged children. PLoS ONE, 11(2), 1–14. 10.1371/journal.pone.0148360

- Vuontela V, Steenari M-R, Carlson S, Koivisto J, Fjällberg M, & Aronen ET (2003). Audiospatial and visuospatial working memory in 6–13 year old school children. Learning & Memory, 10(1), 74–81.

- Warrier CM, Johnson KL, Hayes EA, Nicol T, & Kraus N (2004). Learning impaired children exhibit timing deficits and training-related improvements in auditory cortical responses to speech in noise. Experimental Brain Research, 157(4), 431–441.

- Whiting KA, Martin BA, & Stapells DR (1998). The Effect of Broadband Noise Masking on Cortical Event-Related Potentials to Speech Sounds /ba/ and /da/. Ear & Hearing, 19(3), 218–231.

- Wightman FL, Callahan MR, Lutfi RA, Kistler DJ, & Oh E (2003). Children’s detection of pure-tone signals: Informational masking with contralateral maskers. The Journal of the Acoustical Society of America, 113(6), 3297–3305.

- Wightman FL, & Kistler DJ (2005). Informational masking of speech in children: Effects of ipsilateral and contralateral distracters. The Journal of the Acoustical Society of America, 118(5), 3164–3176.

- Zakrzewski AC, Wisniewski MG, Iyer N, & Simpson BD (2018). Confidence tracks sensory-and decision-related ERP dynamics during auditory detection. Brain and Cognition.

- Zendel BR, Tremblay C-D, Belleville S, & Peretz I (2015). The Impact of Musicianship on the Cortical Mechanisms Related to Separating Speech from Background Noise. Journal of Cognitive Neuroscience, 27(5), 1044–1059.
