Abstract

In this paper, we tackle a general but interesting cross-modality feature learning question in the remote sensing community: can a limited amount of highly discriminative (e.g., hyperspectral) training data improve the performance of a classification task that uses a large amount of poorly discriminative (e.g., multispectral) data? Traditional semi-supervised manifold alignment methods do not perform sufficiently well on such problems, because hyperspectral data are far more expensive to collect on a large scale than multispectral data, given the trade-off between time and efficiency. To this end, we propose a novel semi-supervised cross-modality learning framework, called learnable manifold alignment (LeMA). LeMA learns a joint graph structure directly from the data instead of using a given fixed graph defined by a Gaussian kernel function. With the learned graph, we can further capture the data distribution by graph-based label propagation, which enables finding a more accurate decision boundary. Additionally, an optimization strategy based on the alternating direction method of multipliers (ADMM) is designed to solve the proposed model. Extensive experiments on two hyperspectral-multispectral datasets demonstrate the superiority and effectiveness of the proposed method in comparison with several state-of-the-art methods.

Keywords: Cross-modality, Graph learning, Hyperspectral, Manifold alignment, Multispectral, Remote sensing, Semi-supervised learning

1. Introduction

Multispectral (MS) imagery has been receiving increasing interest in urban applications (e.g. large-scale land-cover mapping (Huang et al., 2014, Hong et al., 2016), building localization (Kang et al., 2018)), agriculture (Yang et al., 2013), and mineral products (Van der Meer et al., 2014), as operational optical broadband (multispectral) satellites (e.g. Sentinel-2 and Landsat-8 (Yokoya et al., 2017)) make multispectral imagery openly available on a global scale. In general, a reliable classifier needs to be trained on a large number of labeled, discriminative, and high-quality samples. Unfortunately, labeling data, in particular large-scale data, is laborious and time-consuming. A natural alternative is to exploit large amounts of unlabeled data, which leads to semi-supervised learning. On the other hand, MS data fails to spectrally discriminate similar classes due to its broad spectral bandwidth. A simple remedy is to improve the data quality by fusing highly discriminative hyperspectral (HS) data (Yokoya et al., 2017). Although such data is expensive to collect, a small amount of it can reasonably be expected to be available. These two points motivate a question related to transfer learning and cross-modality learning: can a limited amount of HS training data that partially overlaps the MS data improve the performance of a classification task using large-coverage MS testing data?

Over the past decades, land-cover and land-use classification of optical remote sensing imagery has received increasing attention in unsupervised (Hong et al., 2017, Li et al., 2014, Tarabalka et al., 2009), supervised (Zhang et al., 2012, Hong et al., 2018), and semi-supervised (Xia et al., 2014, Tuia et al., 2014) settings. To the best of our knowledge, the classification ability of unsupervised learning (or dimensionality reduction) remains limited because label information is missing. By fully considering the intra-class and inter-class variability given by the labels, supervised learning is able to perform the classification task better. In practice, however, a limited number of labeled samples usually prevents the trained classifier from reaching a high classification performance, and may lead to failure in some challenging classification or transfer tasks owing to the lack of generalization and representation ability. Alternatively, semi-supervised learning draws on plenty of unlabeled data in the learning process, which helps to better capture the distribution of the different categories and thus to find an accurate decision boundary.

On the other hand, considerable work related to transfer learning (TL) or domain adaptation (DA) has been successfully developed and applied in the remote sensing community (Bruzzone and Marconcini, 2010, Banerjee et al., 2015, Matasci et al., 2015, Tuia et al., 2016, Samat et al., 2016, Samat et al., 2017). According to the different transferred objects, the TL or DA approaches can be roughly categorized into three groups, including parameter adaptation, instance-based transfer, and feature-based alignment or representation.

The seminal work on parameter adaptation was presented in Khosla et al., 2012, Woodcock et al., 2001, aiming to transfer an existing classifier (or its parameters) trained on the source domain to the target domain. In contrast, instance-based techniques transfer knowledge by reweighting (Jiang and Zhai, 2007) or resampling (Sugiyama et al., 2008) the samples of the source domain towards those of the target domain. A similar idea based on active learning (Samat et al., 2016) has also been proposed to address this issue, by selecting the most informative samples in the target domain to replace those samples of the source domain that do not match the data distribution of the target domain (Persello and Bruzzone, 2012).

For the final group of feature-based alignment or representation, manifold alignment (MA) is one of the most popular semi-supervised learning frameworks (Wang et al., 2011) that facilitate transfer learning. MA has been successfully applied to various tasks in the remote sensing community, e.g. classification (Tuia et al., 2016), data visualization (Liao et al., 2016), multi-modality data analysis (Tuia et al., 2014), etc. The key idea of MA is to learn a common (or shared) subspace in which different data can be aligned to obtain a joint feature representation. Existing MA methods can be roughly categorized into unsupervised, supervised, and semi-supervised approaches. The unsupervised approach usually fails to align multimodal data sufficiently well, as the corresponding low-dimensional embeddings may be quite diverse (Wang and Mahadevan, 2009). In the supervised case, aligning only the limited number of training samples to learn a common subspace leads to weak transferability. By preserving a joint manifold structure created from both labeled and unlabeled data, semi-supervised alignment allows different data sources to be better transformed into the common subspace (Wang and Mahadevan, 2011).

Although the joint manifold structure used in conventional semi-supervised MA approaches can relate features or instances, poor connections between the common subspace and label information still prevent the low-dimensional feature representation from being more discriminative. More importantly, in most graph-based semi-supervised learning algorithms (e.g. graph-based label propagation (GLP) (Zhu et al., 2003) and semi-supervised manifold alignment (S-SMA) (Tuia et al., 2014, Wang and Mahadevan, 2011)), the topology of unlabeled samples is merely given by a fixed Gaussian kernel function, which is computed in the original space rather than in the common space. This makes it difficult to adaptively transfer unlabeled samples into the learned common subspace, particularly for multimodal data with different numbers of dimensions. To address these issues, we propose learnable manifold alignment (LeMA), which learns the graph in a data-driven way directly from the common subspace. This makes the multimodal data comparable and improves the explainability of the learned common subspace, which in turn results in better transferability. More specifically, our contributions can be summarized as follows:

- We propose a novel semi-supervised cross-modality learning framework called learnable manifold alignment (LeMA) for large-scale land-cover classification. Spectrally poor MS data and spectrally rich HS data are considered as two different modalities for this task, where the spatial extent of the former is a true superset of that of the latter.
- Unlike joint feature learning, in which the model is both trained and tested on complete HS-MS correspondences, LeMA learns an aligned feature subspace from the labeled HS-MS correspondences and partially unlabeled MS data, and allows out-of-sample data to be identified using either MS data or HS data. Such a learned subspace is a good fit for our case of cross-modality learning.
- Instead of directly computing the graph structure with a Gaussian kernel function, LeMA exploits a data-driven graph learning method in order to strengthen its transfer and generalization abilities.
- An optimization framework based on the alternating direction method of multipliers (ADMM) is designed to solve the proposed model quickly and effectively.

The remainder of this paper is organized as follows. Section 2 elaborates on our motivation and presents the LeMA methodology and the corresponding optimization algorithm. In Section 3, we present the experimental results on two HS-MS datasets over the University of Houston and Chikusei areas, together with a qualitative and quantitative analysis. Section 4 concludes with a summary.

2. Learnable Manifold Alignment (LeMA)

In this section, we first cast the cross-modality learning problem and state our motivation. We then formulate the proposed methodology and describe an ADMM-based optimization algorithm to solve it.

2.1. Problem statement and motivation

For many high-level data analysis tasks in the remote sensing community, such as land-cover classification, data collection plays an important role, since information-rich training samples make it easier to find an optimal decision boundary.

There is, however, a typical bottleneck in collecting a large amount of labeled and discriminative data. Although MS data is available at a global scale from the Sentinel-2 and Landsat-8 satellites, it cannot identify and discriminate materials at a sufficient level of accuracy because of its poor spectral information. In contrast, HS data is characterized by rich spectral information but can only be acquired over very small areas, due to the limitations of imaging sensors. This naturally leads us to jointly utilize HS and MS bi-modal data, and specifically to the following interesting and challenging question: can a limited amount of HS training data contribute to the classification of large-scale MS data?

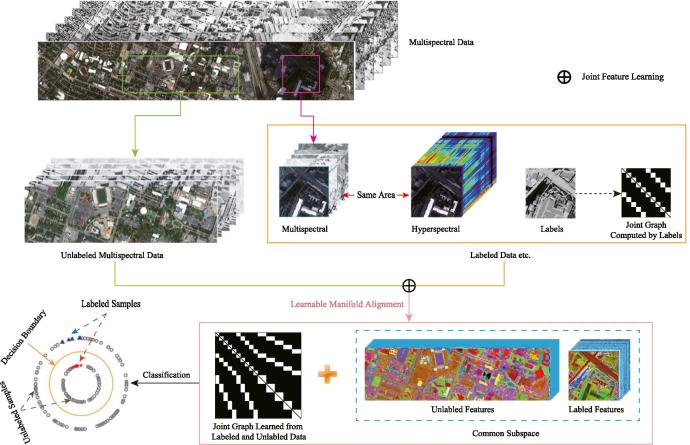

A feasible solution to this issue can be divided into two parts: (1) cross-modality learning: learning a common subspace in which the features are expected to absorb the different properties of the HS and MS modalities and in which the HS and MS data can be transferred to each other; (2) semi-supervised learning: embedding the massive unlabeled MS samples, which are available in large quantities and easy to collect, so as to learn a more discriminative feature representation. Fig. 1 illustrates the workflow of LeMA.

Fig. 1.

An illustration of the proposed LeMA method.

2.2. Problem formulation

To effectively model the aforementioned issue, we intend to develop a joint learning framework which better learns a discriminative common subspace from high-quality HS data and low-quality MS data. Intuitively, such a common subspace can be shaped by selectively absorbing the benefits of both high-quality data with more details and low-quality data with more structural information. Therefore, following a popular joint learning framework (Ji and Ye, 2009), we formulate the common subspace learning problem as

| (1) |

where and is the label matrix represented by one-hot encoding, and and stand respectively for the data from hyperspectral and multispectral domains, and are respectively the common subspace projection and the linear projection to bridge the common subspace and label information. stands for a joint Laplacian matrix, is an adjacency matrix and . is generally used to measure the similarity between samples. With the orthogonal constraint (), the global optimal solutions with respect to the variables and can be theoretically guaranteed (Ji and Ye, 2009).

Algorithm 1

Learnable Manifold Alignment (LeMA)

The first term of Eq. (1) is a fidelity term, and the regularization term parameterized by aims to achieve a reliable generalization of the proposed model. The third term acts as supervised manifold alignment (SMA) (Wang et al., 2011). We refer to the proposed framework for joint common subspace learning as CoSpace.
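
To make the three terms concrete, the following NumPy sketch evaluates an objective of this general shape. The notation is an assumption made only for illustration, since the symbols are not fixed by the text above: `Y` is a one-hot label matrix, `X` a data matrix with one sample per column, `Theta` the subspace projection, `P` the regression matrix, `L` a graph Laplacian, and `alpha`, `beta` the two weights; the factors of 1/2 are likewise assumed.

```python
import numpy as np

def cospace_objective(Y, X, P, Theta, L, alpha, beta):
    """Evaluate fidelity + Tikhonov penalty + graph alignment (assumed form)."""
    H = Theta @ X  # subspace features, one column per sample
    fidelity = 0.5 * np.linalg.norm(Y - P @ H, "fro") ** 2
    penalty = 0.5 * alpha * np.linalg.norm(P, "fro") ** 2
    alignment = 0.5 * beta * np.trace(H @ L @ H.T)
    return fidelity + penalty + alignment

# Toy problem: two samples joined by a single edge of weight 1.
X = np.eye(2)
Theta = np.eye(2)
P = np.zeros((2, 2))
Y = np.zeros((2, 2))
L = np.array([[1.0, -1.0], [-1.0, 1.0]])
value = cospace_objective(Y, X, P, Theta, L, alpha=0.1, beta=1.0)
```

In this toy case only the alignment term is non-zero, so the value reflects how far apart the two connected samples sit in the subspace.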

To further exploit the information of unlabeled samples, we extend the CoSpace in Eq. (1) to LeMA by learning a joint Laplacian matrix, which can be formulated as follows with extra constraints related to necessary conditions of :

| (2) |

where , and represents the unlabeled MS samples and controls the scale. Note that a feasible and effective way to choose the unlabeled data with respect to the variable is to group all samples other than the training samples into a number of landmarks (cluster centers). These landmarks are used as the unlabeled data, which takes the available information fully into account while effectively reducing the computational cost. Because a clustering technique is applied to the unlabeled data, we empirically set the ratio of labeled to unlabeled data to approximately 1:1.
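
The landmark selection can be sketched as follows. The clustering algorithm is not named in the text, so plain k-means (Lloyd iterations) is used here as an assumption; the function name `kmeans_landmarks`, the fixed iteration count, and the toy data are hypothetical.

```python
import numpy as np

def kmeans_landmarks(X, n_landmarks, n_iter=20, seed=0):
    """Cluster the columns of X and return the centers as landmarks."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    start = rng.choice(X.shape[1], size=n_landmarks, replace=False)
    centers = X[:, start].copy()
    for _ in range(n_iter):
        # Squared distance from every sample to every center: shape (N, n_landmarks).
        d2 = ((X[:, :, None] - centers[:, None, :]) ** 2).sum(axis=0)
        assign = d2.argmin(axis=1)
        for j in range(n_landmarks):
            members = X[:, assign == j]
            if members.shape[1] > 0:  # keep the old center if a cluster empties
                centers[:, j] = members.mean(axis=1)
    return centers

# Six unlabeled 2-D samples (one per column) reduced to two landmarks.
unlabeled = np.array([[0.0, 0.0, 1.0, 10.0, 10.0, 11.0],
                      [0.0, 1.0, 0.0, 10.0, 11.0, 10.0]])
landmarks = kmeans_landmarks(unlabeled, n_landmarks=2)
```

Choosing `n_landmarks` close to the number of labeled samples would correspond to the roughly 1:1 ratio mentioned above.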

The model in Eq. (2) can be simplified by optimizing the adjacency matrix () instead of directly solving a hard optimization problem of , then we have

| (3) |

where is defined as a pairwise Euclidean distance matrix: . denotes the Schur-Hadamard (termwise) product.
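
The step from a Laplacian smoothness term to a weighted sum over the adjacency matrix relies on a standard identity: for a symmetric adjacency matrix W with Laplacian L = D - W and features F, tr(F L Fᵀ) equals half the sum of the entries of the termwise product of W with the pairwise squared distances. The sketch below checks this numerically; all variable names are assumptions for illustration.

```python
import numpy as np

def laplacian(W):
    """Graph Laplacian L = D - W with D the diagonal degree matrix."""
    return np.diag(W.sum(axis=1)) - W

def pairwise_sq_dists(F):
    """Z[i, j] = ||f_i - f_j||^2 for the columns f_i of F."""
    sq = np.sum(F ** 2, axis=0)
    Z = sq[:, None] + sq[None, :] - 2.0 * (F.T @ F)
    return np.maximum(Z, 0.0)  # clip tiny negative round-off

rng = np.random.default_rng(0)
F = rng.standard_normal((3, 6))      # six samples in a 3-D subspace
A = rng.random((6, 6))
W = 0.5 * (A + A.T)                  # symmetric, non-negative weights
np.fill_diagonal(W, 0.0)

smoothness = np.trace(F @ laplacian(W) @ F.T)
sparsity = 0.5 * np.sum(W * pairwise_sq_dists(F))  # termwise product, then sum
```

Because the distances are non-negative, the right-hand side acts as a weighted sum of the entries of W, which is why it can be read as a sparsity penalty on the graph.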

Algorithm 2

Solving the subproblem for

Using Eq. (3), we can equivalently convert the optimization problem of smooth manifold in (2) to that of graph sparsity

| (4) |

where can be interpreted as a weighted -norm of which enforces weighted sparsity.

We further explain the relationship between the proposed LeMA model and our motivation in an easily understandable way. In general, we aim to find a common subspace by learning a pair of projections ( and ) corresponding to the two modalities (e.g., MS and HS), respectively. To effectively improve the discriminative ability of the learned subspace, we connect the subspace with the label information by jointly estimating the regression coefficient and the common projections , as formulated in Eq. (1). Moreover, the alignment behavior of different modalities can be represented by ’s connectivity: if the sample and the sample are connected (), then the two samples belong to the same class, and vice versa. In addition, we construct an extra adjacency matrix based on the unlabeled samples in order to globally capture the data distribution. This matrix is usually obtained by a Gaussian kernel function (semi-supervised CoSpace) but can also be learned from the data (LeMA, as formulated in Eq. (2)).
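
For contrast with the learned graph, a fixed Gaussian-kernel graph of the kind mentioned above can be sketched as follows. The k-nearest-neighbour truncation, the 2σ² scaling, and the max-symmetrization are common conventions assumed here, not details taken from the text.

```python
import numpy as np

def gaussian_knn_graph(X, k, sigma):
    """Fixed graph: Gaussian weights on each sample's k nearest neighbours."""
    sq = np.sum(X ** 2, axis=0)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * (X.T @ X), 0.0)
    np.fill_diagonal(d2, np.inf)               # a sample is not its own neighbour
    n = d2.shape[0]
    nearest = np.argsort(d2, axis=1)[:, :k]    # indices of the k closest samples
    rows = np.repeat(np.arange(n), k)
    cols = nearest.ravel()
    W = np.zeros((n, n))
    W[rows, cols] = np.exp(-d2[rows, cols] / (2.0 * sigma ** 2))
    return np.maximum(W, W.T)                  # symmetrize

X = np.array([[0.0, 1.0, 3.0, 10.0, 11.5]])   # five 1-D samples as columns
W = gaussian_knn_graph(X, k=1, sigma=1.0)
```

Such a graph depends only on `k`, `sigma`, and distances in the input space, which is the limitation the learned graph is meant to remove.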

Algorithm 3

Solving the subproblem for

2.3. Model optimization

Considering the complexity of the non-convex problem (4), an iterative alternating optimization strategy is adopted to solve the convex subproblems of each variable , and . An implementation of LeMA is given in Algorithm 1.

Optimization with respect to : This is a typical least-squares problem with Tikhonov regularization, which can be formulated as

| (5) |

which has a closed-form solution

| (6) |

where .
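
A Tikhonov-regularized least-squares problem of this kind has the familiar ridge-type solution. The sketch below assumes the objective 0.5‖Y − PH‖² + 0.5α‖P‖² with `H` denoting the subspace features; these symbols and the 1/2 scaling are assumptions, and the code is not a transcription of Eq. (6).

```python
import numpy as np

def solve_projection(Y, H, alpha):
    """Minimize 0.5*||Y - P H||_F^2 + 0.5*alpha*||P||_F^2 over P."""
    d = H.shape[0]
    A = H @ H.T + alpha * np.eye(d)   # symmetric positive definite for alpha > 0
    # P = Y H^T A^{-1}; solve A P^T = H Y^T instead of forming the inverse.
    return np.linalg.solve(A, H @ Y.T).T

rng = np.random.default_rng(0)
H = rng.standard_normal((4, 30))      # 4-D features for 30 samples
Y = rng.standard_normal((3, 30))      # stand-in targets with 3 rows
P = solve_projection(Y, H, alpha=0.5)
gradient = (P @ H - Y) @ H.T + 0.5 * P   # should vanish at the minimizer
```

Solving the linear system rather than inverting the matrix is the usual numerically safer choice.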

Optimization with respect to : the optimization problem for can be formulated as

| (7) |

In order to solve (7) effectively with ADMM, we consider an equivalent form by introducing auxiliary variables and to replace and , respectively.

| (8) |

Algorithm 2 lists the more detailed procedures for solving the problem (8).

Algorithm 4

Solving the subproblem for

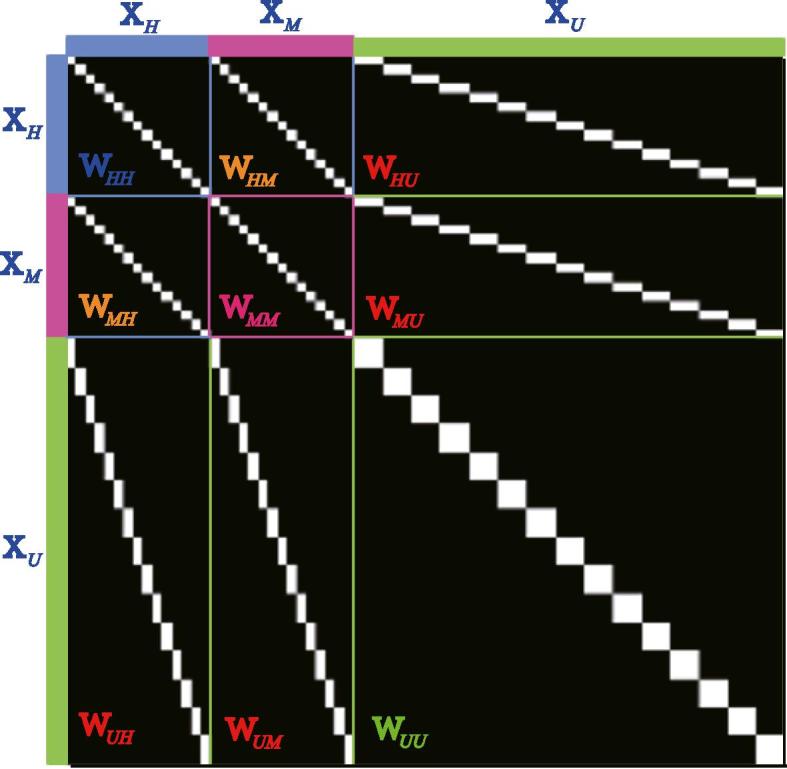

Optimization with respect to : is a joint adjacency matrix and consists mainly of nine parts as shown in Fig. 2. Among the nine parts, and can be directly inferred from label information in the form of the LDA-like graph (Gu et al., 2011):

| (9) |
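
One common LDA-like construction assigns weight 1/N_c to every pair of samples that share class c and zero otherwise. The sketch below implements that form as an assumption; it is not a transcription of Eq. (9), and the function name is hypothetical.

```python
import numpy as np

def lda_like_graph(labels):
    """W[i, j] = 1 / N_c if samples i and j share class c, else 0 (assumed form)."""
    labels = np.asarray(labels)
    same_class = labels[:, None] == labels[None, :]
    class_size = same_class.sum(axis=1)        # N_c for the class of each sample
    return same_class / class_size[:, None]

# Three labeled samples: two of class 0 and one of class 1.
W_labeled = lda_like_graph([0, 0, 1])
```

With this normalization every row sums to one, so each class contributes equally regardless of its size.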

Fig. 2.

An example for the joint adjacency matrix .

Given the symmetry of , (i.e., , and ), we only need to update three out of the nine parts, namely , and . The optimization problems of and can be formulated by

| (10) |

which can be solved by ADMM. More details can be found in Algorithm 3, where and represent respectively the subspace features of and stands for the proximal operator for (Heide et al., 2015). We technically add the constraint in order to share the same unit level with LDA-like graph.

For , the objective function can be written as

| (11) |

which can be effectively solved using Algorithm 4.

Finally, we repeat these optimization procedures until a stopping criterion is satisfied.

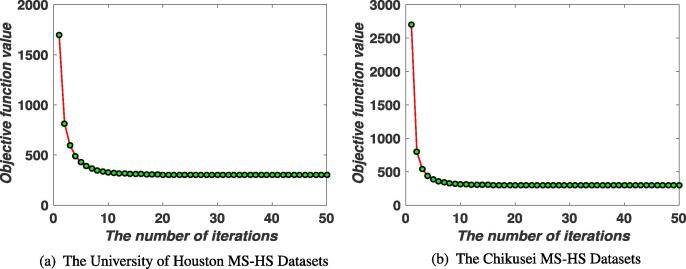

2.4. Convergence analysis

The alternating strategy used in Algorithm 1 is a block coordinate descent (BCD) scheme, which has been theoretically shown to converge to a stationary point as long as each subproblem in Eq. (4) is exactly minimized (Bertsekas, 1999). As observed, these subproblems with respect to the variables and are strongly convex, and hence each independent task can ideally find a unique minimum when the Lagrangian parameter is updated within a finite number of iterations (Boyd et al., 2011). Besides, the ADMM used in each subproblem is essentially a generalization of the inexact Augmented Lagrange Multiplier (ALM) method (Chen et al., 2018), whose convergence has been well studied when the number of blocks is less than three (Lin et al., 2010) (e.g. Algorithm 2). Although there is still no general and rigorous theoretical proof for the multi-block case, the convergence analysis for some common cases, such as our Algorithm 3 and Algorithm 4, has been well conducted in Hong et al., 2017, Liu et al., 2013, Zhong et al., 2016, Zhou et al., 2017. We also record the objective function value at each iteration to draw the convergence curves of LeMA on the two HS-MS datasets (see Fig. 3).

Fig. 3.

Convergence analysis of LeMA, performed experimentally on the two MS-HS datasets.

3. Experiments

In this section, we quantitatively and qualitatively evaluate the performance of the proposed method on two simulated HS-MS datasets (University of Houston and Chikusei) and a real multispectral-lidar and hyperspectral dataset provided by the 2018 IEEE GRSS data fusion contest (DFC2018). The evaluation takes the form of classification with two commonly used, high-performance classifiers, namely linear support vector machines (LSVM) and canonical correlation forest (CCF) (Tom and Frank, 2015). Three indices, overall accuracy (OA), average accuracy (AA), and the kappa coefficient (κ), are calculated to quantitatively assess the classification performance. Moreover, we compare the proposed LeMA with several state-of-the-art algorithms, i.e. GLP (Zhu et al., 2003), SMA, S-SMA (Wang and Mahadevan, 2009), CoSpace, and semi-supervised CoSpace (S-CoSpace). The original MS data is used as a baseline. SMA constructs an LDA-like joint graph using label information. Besides label information, S-SMA also uses unlabeled samples to generate the joint graph by computing the similarity based on Euclidean distance. The same strategy of graph construction is adopted for CoSpace and S-CoSpace.
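
For reference, the three indices can be computed from a confusion matrix as in the following sketch. It uses the standard definitions and assumes integer class labels starting at 0 and at least one true sample per class; the function name is hypothetical.

```python
import numpy as np

def classification_scores(y_true, y_pred, n_classes):
    """Return overall accuracy, average accuracy, and Cohen's kappa."""
    C = np.zeros((n_classes, n_classes))
    for t, p in zip(y_true, y_pred):
        C[t, p] += 1                     # rows: true class, columns: predicted class
    n = C.sum()
    oa = np.trace(C) / n                 # fraction of correctly labeled samples
    aa = np.mean(np.diag(C) / C.sum(axis=1))   # mean of per-class accuracies
    expected = np.sum(C.sum(axis=0) * C.sum(axis=1)) / n ** 2  # chance agreement
    kappa = (oa - expected) / (1.0 - expected)
    return oa, aa, kappa

oa, aa, kappa = classification_scores([0, 0, 1, 1], [0, 1, 1, 1], n_classes=2)
```

AA weights every class equally, so it is more sensitive than OA to poorly recognized small classes.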

3.1. The simulated MS-HS datasets over the University of Houston

3.1.1. Data description

The HS data in the simulated Houston MS-HS datasets was acquired by the ITRES-CASI-1500 sensor with the size of at a ground sampling distance (GSD) of 2.5 m over the University of Houston campus and its neighboring urban areas. This data was provided for the 2013 IEEE GRSS data fusion contest, with 144 bands covering the wavelength range from 364 nm to 1046 nm. Spectral simulation is performed to generate the MS image by degrading the HS image in the spectral domain using the MS spectral response functions (SRFs) of Sentinel-2 as filters (for more details refer to Yokoya et al., 2017). The MS data we used is generated with dimensions of .
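
The spectral degradation step can be sketched as a weighted average of HS bands per MS band. The normalization of each response to unit sum and the toy response values below are assumptions; they are not the actual Sentinel-2 SRFs.

```python
import numpy as np

def simulate_ms(hs_cube, srf):
    """Degrade an HS cube (rows, cols, B_hs) to MS bands using SRF weights (B_ms, B_hs)."""
    weights = srf / srf.sum(axis=1, keepdims=True)   # each MS band is a weighted average
    return hs_cube @ weights.T                        # shape (rows, cols, B_ms)

hs_cube = np.full((2, 3, 4), 5.0)                     # flat spectrum, 4 HS bands
srf = np.array([[1.0, 1.0, 0.0, 0.0],
                [0.0, 0.5, 1.0, 0.5]])                # made-up responses for 2 MS bands
ms_cube = simulate_ms(hs_cube, srf)
```

With normalized weights a spectrally flat pixel keeps its value, which is a convenient sanity check for the filters.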

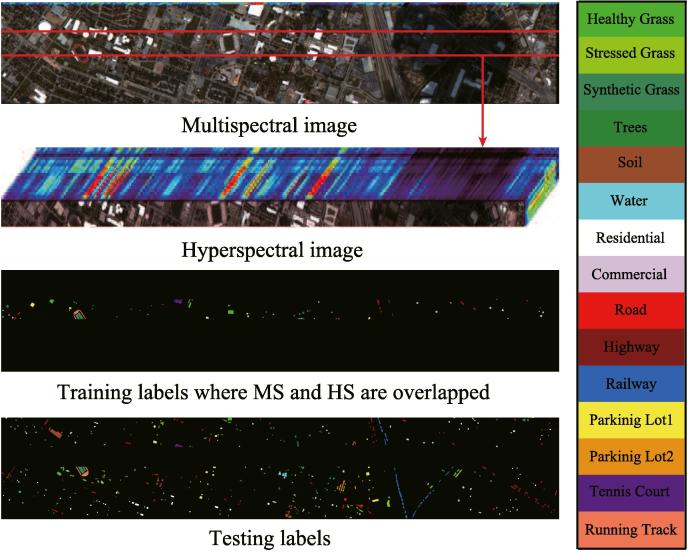

3.1.2. Experimental setup

To meet our problem setting, an HS image that partially overlaps the MS image and the whole MS image are used in our experiments, and the corresponding training and test samples are re-assigned accordingly, as shown in Fig. 4. In detail, since labels are available for the whole scene, we seek out a region in which all classes are present. The labels in this region are selected as the training set and the rest are used as the test set, as shown in Fig. 4 and quantified in Table 1.

Fig. 4.

The multispectral image and its corresponding hyperspectral image that partially covers the same area, as well as training and testing labels, for University of Houston dataset.

Table 1.

The number of training and testing samples for the two used MS-HS datasets.

| Class No. | Houston MS-HS dataset | | | Chikusei MS-HS dataset | | |
|---|---|---|---|---|---|---|
| | Class Name | Training | Testing | Class Name | Training | Testing |
| 1 | Healthy Grass | 537 | 699 | Water | 301 | 858 |
| 2 | Stressed Grass | 61 | 1154 | Bare Soil (School) | 992 | 1867 |
| 3 | Synthetic Grass | 340 | 357 | Bare Soil (Farmland) | 455 | 4397 |
| 4 | Tree | 209 | 1035 | Natural Plants | 150 | 4272 |
| 5 | Soil | 74 | 1168 | Weeds in Farmland | 928 | 1108 |
| 6 | Water | 22 | 303 | Forest | 486 | 11904 |
| 7 | Residential | 52 | 1203 | Grass | 989 | 5526 |
| 8 | Commercial | 320 | 924 | Rice Field (Grown) | 813 | 8816 |
| 9 | Road | 76 | 1149 | Rice Field (First Stage) | 667 | 1268 |
| 10 | Highway | 279 | 948 | Row Crops | 377 | 5961 |
| 11 | Railway | 33 | 1185 | Plastic House | 165 | 475 |
| 12 | Parking Lot1 | 329 | 904 | Manmade (Non-dark) | 170 | 568 |
| 13 | Parking Lot2 | 20 | 449 | Manmade (Dark) | 1291 | 6373 |
| 14 | Tennis Court | 266 | 162 | Manmade (Blue) | 111 | 431 |
| 15 | Running Track | 279 | 381 | Manmade (Red) | 35 | 187 |
| 16 | / | / | / | Manmade Grass | 21 | 1019 |
| 17 | / | / | / | Asphalt | 384 | 417 |
| Total | | 2897 | 12021 | Total | 8335 | 55447 |

The parameters of the different methods are determined by 10-fold cross-validation on the training data. More specifically, we tune the parameters of the different algorithms to maximize their performance, e.g. dimension (d), penalty parameters (), etc. The dimension (d) is a common parameter for all compared algorithms, and it is selected from the range 10 to 50 at an interval of 10. For the number of nearest neighbors (k) and the standard deviation of the Gaussian kernel function () used in manually computing the adjacency matrix () of GLP, SMA, and S-SMA, we select them in the range of and , respectively. Similarly, for CoSpace, S-CoSpace, and LeMA, we set the two regularization parameters () ranging from .
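
The tuning procedure amounts to a grid search scored by 10-fold cross-validation, which can be sketched as below. The callback `fit_score`, the toy scoring function, and the `alpha` candidates are hypothetical placeholders; only the grid for d (10 to 50 in steps of 10) comes from the text.

```python
import itertools
import numpy as np

def kfold_indices(n_samples, n_folds=10, seed=0):
    """Shuffle sample indices and split them into n_folds disjoint folds."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n_samples), n_folds)

def grid_search_cv(fit_score, n_samples, grid, n_folds=10):
    """Return the parameter combination with the best mean validation score."""
    folds = kfold_indices(n_samples, n_folds)
    best_params, best_score = None, -np.inf
    for values in itertools.product(*grid.values()):
        params = dict(zip(grid.keys(), values))
        scores = []
        for i, val_idx in enumerate(folds):
            train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
            scores.append(fit_score(train_idx, val_idx, **params))
        mean_score = float(np.mean(scores))
        if mean_score > best_score:
            best_params, best_score = params, mean_score
    return best_params, best_score

def toy_score(train_idx, val_idx, d, alpha):
    """Stand-in for 'train on train_idx, return accuracy on val_idx'."""
    return -abs(d - 30) - abs(alpha - 0.1)

grid = {"d": [10, 20, 30, 40, 50], "alpha": [0.01, 0.1, 1.0]}
best_params, best_score = grid_search_cv(toy_score, n_samples=100, grid=grid)
```

In practice `fit_score` would train the chosen method and classifier on the training folds and return, for example, the OA on the held-out fold.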

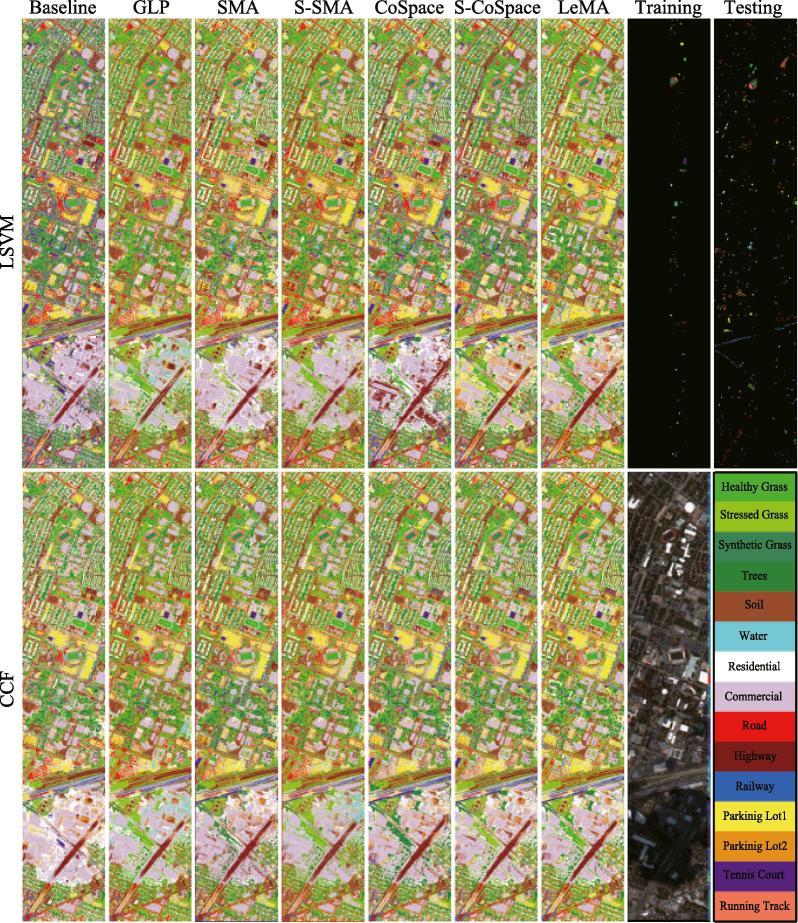

3.1.3. Results and analysis

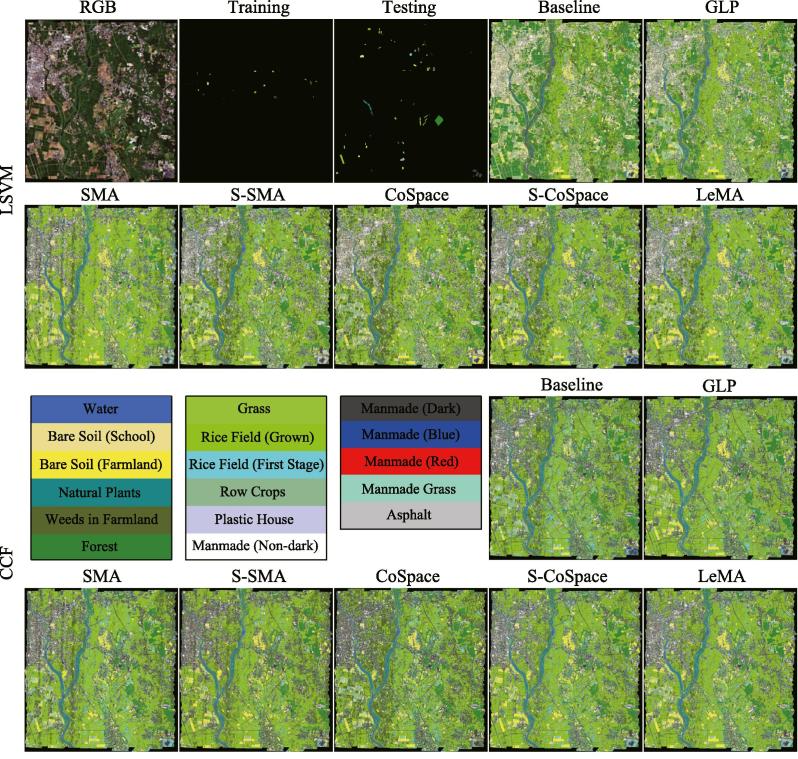

Fig. 5 shows the classification maps of compared algorithms using LSVM and CCF classifiers, while Table 2 lists the specific quantitative assessment results with optimal parameters obtained by 10-fold cross-validation.

Fig. 5.

Classification maps of the different algorithms obtained using two kinds of classifiers on the University of Houston dataset.

Table 2.

Quantitative performance comparison with the different algorithms on the University of Houston data. The best one is shown in bold.

| Methods | Baseline (%) | | GLP (%) | | SMA (%) | | S-SMA (%) | | CoSpace (%) | | S-CoSpace (%) | | LeMA (%) | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Classifier | LSVM | CCF | LSVM | CCF | LSVM | CCF | LSVM | CCF | LSVM | CCF | LSVM | CCF | LSVM | CCF |
| OA | 62.12 | 68.21 | 64.71 | 70.01 | 68.01 | 69.59 | 69.29 | 70.10 | 69.38 | 72.17 | 70.41 | 73.75 | 73.42 | 76.35 |
| AA | 65.97 | 70.47 | 68.18 | 72.18 | 70.50 | 71.02 | 72.00 | 72.88 | 71.69 | 73.56 | 73.12 | 75.61 | 74.76 | 77.18 |
| κ | 0.5889 | 0.6543 | 0.6164 | 0.6728 | 0.6520 | 0.6695 | 0.6659 | 0.6754 | 0.6672 | 0.6975 | 0.6784 | 0.7146 | 0.7110 | 0.7428 |
| Class1 | 76.39 | 67.95 | 77.83 | 77.97 | 75.25 | 68.53 | 74.25 | 73.53 | 75.54 | 69.96 | 91.85 | 87.98 | 89.56 | 85.84 |
| Class2 | 80.59 | 78.08 | 93.85 | 98.01 | 97.57 | 77.90 | 97.57 | 93.67 | 73.74 | 77.99 | 90.12 | 91.59 | 93.67 | 93.85 |
| Class3 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| Class4 | 85.51 | 92.27 | 89.66 | 96.62 | 94.78 | 98.74 | 95.85 | 98.55 | 98.74 | 98.26 | 92.75 | 97.29 | 97.49 | 99.61 |
| Class5 | 99.06 | 99.40 | 99.49 | 99.66 | 98.97 | 99.14 | 99.32 | 99.40 | 99.40 | 99.40 | 99.40 | 99.66 | 99.49 | 99.57 |
| Class6 | 86.14 | 86.14 | 96.37 | 99.01 | 86.47 | 70.96 | 99.67 | 99.67 | 85.48 | 85.15 | 99.67 | 96.70 | 86.47 | 86.47 |
| Class7 | 50.62 | 63.76 | 48.63 | 64.01 | 72.32 | 77.14 | 72.15 | 69.66 | 73.98 | 80.05 | 75.06 | 80.96 | 83.21 | 88.03 |
| Class8 | 56.49 | 56.06 | 56.60 | 59.85 | 62.01 | 62.23 | 64.61 | 63.85 | 63.53 | 62.01 | 55.84 | 60.39 | 62.77 | 62.01 |
| Class9 | 56.22 | 70.58 | 69.63 | 69.02 | 49.96 | 61.27 | 50.57 | 45.00 | 59.79 | 64.93 | 65.80 | 71.54 | 64.49 | 61.88 |
| Class10 | 45.36 | 45.25 | 45.46 | 49.89 | 58.12 | 52.32 | 58.33 | 63.61 | 64.14 | 57.70 | 58.97 | 51.79 | 60.97 | 53.59 |
| Class11 | 27.43 | 43.88 | 22.45 | 38.65 | 28.86 | 36.46 | 36.46 | 34.77 | 36.54 | 47.26 | 35.78 | 38.65 | 41.27 | 49.96 |
| Class12 | 31.64 | 56.08 | 31.75 | 37.83 | 35.84 | 62.50 | 34.18 | 55.20 | 46.79 | 62.72 | 34.29 | 58.52 | 45.02 | 76.88 |
| Class13 | 0.00 | 0.67 | 0.00 | 1.11 | 0.00 | 0.00 | 0.00 | 0.45 | 0.00 | 0.45 | 0.00 | 0.89 | 0.00 | 1.78 |
| Class14 | 97.53 | 98.77 | 94.44 | 92.59 | 100.00 | 100.00 | 99.38 | 98.15 | 100.00 | 99.38 | 99.38 | 100.00 | 99.38 | 100.00 |
| Class15 | 96.59 | 98.16 | 96.59 | 98.43 | 97.38 | 98.16 | 97.64 | 97.64 | 97.64 | 98.16 | 97.90 | 98.16 | 97.64 | 98.16 |

Parameter row (partially recoverable): d = 10, d = 30.

Overall, the methods based on manifold alignment outperform the baseline and GLP with both classifiers. This means that the limited amount of HS data can guide the corresponding MS data towards more discriminative feature representations. More specifically, SMA yields a relatively poor performance compared with S-SMA, since it only considers the correspondences of MS-HS labeled data. This indicates that reasonably embedding unlabeled samples into the manifold alignment framework can effectively help capture the real data distribution and thereby obtain more accurate decision boundaries. Unfortunately, these approaches only attempt to align different data in a common subspace and hardly take the connections between the common subspace and label information into account, which leads to a lack of discriminative ability. In this regard, our proposed joint learning framework “CoSpace” and its semi-supervised version “S-CoSpace” achieve the desired results on the given MS-HS datasets.

By fully considering the connectivity of the common subspace, label information, and unlabeled information encoded by the learned graph structure, LeMA outperforms all other methods in terms of OA, AA, and κ, as can be observed in Table 2. This suggests that LeMA learns a more discriminative feature representation and finds a better decision boundary.

As observed from Fig. 4 and Table 1, the training samples are relatively few and the distribution across classes is extremely unbalanced. While training the classifier, more attention is paid to classes with many samples, and small classes may contribute little or even nothing. For this reason, we propose to exploit the large amount of unlabeled data, which leads to semi-supervised learning. Using this strategy, the semi-supervised methods, i.e. GLP, S-SMA, and S-CoSpace, perform better than the baseline and their supervised counterparts (SMA and CoSpace). Moreover, we can see from Table 2 that the classification performance improves markedly in some classes (e.g. Stressed Grass, Water) after accounting for unlabeled samples, particularly between SMA and S-SMA as well as between CoSpace and S-CoSpace. However, these semi-supervised methods carry out label propagation on a given graph manually computed by a Gaussian kernel function, which limits the adaptiveness and discriminability of the algorithms. LeMA can adaptively learn a data-driven graph structure on which the labels tend to spread more smoothly, which can result in more effective material identification for challenging classes (few training samples), such as Tree, Residential, Railway, and Parking Lot1. In addition, we can also observe an easily overlooked phenomenon: LeMA’s ability to identify certain classes remains limited, such as Parking Lot2 (only ) and Railway (). Parking Lot2 is mostly classified as Commercial and Parking Lot1, while Railway is largely identified as Road and Commercial. This might be explained by the limited number of training samples as well as the fairly similar spectral properties of several classes.

3.2. The simulated MS-HS datasets over Chikusei

3.2.1. Data description

Similarly to the Houston data, the MS data with dimensions of at a GSD of 2.5 m was simulated from the HS data acquired by Headwall’s Hyperspec-VNIR-C sensor over the Chikusei area, Ibaraki, Japan. The HS data consists of 128 bands in the spectral range from 363 nm to 1018 nm with a spectral resolution of 10 nm. The dataset has been made available to the scientific community (Yokoya and Iwasaki, 2016).

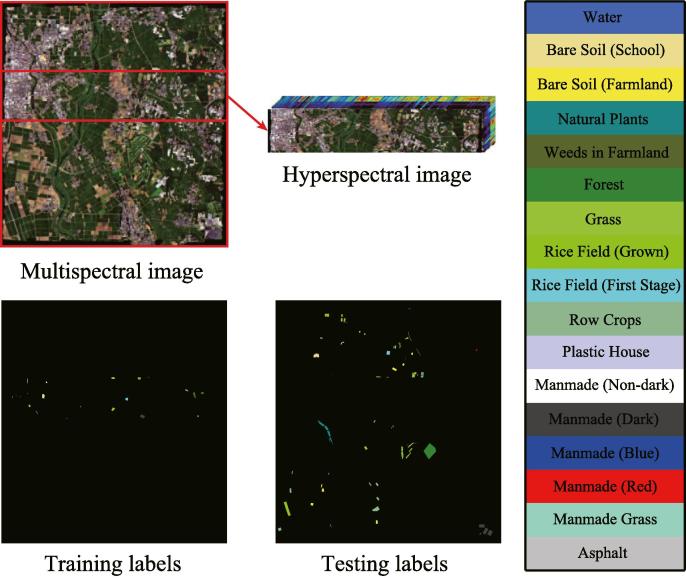

3.2.2. Experimental setup

Fig. 6 shows the corresponding MS and partial HS images as well as the selected training and test labels. Again, the overlapped region between MS and HS, which should include all the classes listed in Table 1, is chosen based on the given ground truth (Yokoya and Iwasaki, 2016). Additionally, the parameter configuration for all algorithms is again determined by 10-fold cross-validation on the training set, which generalizes better across different datasets. For details on how the cross-validation is run, please refer to Section 3.1.2.

Fig. 6.

The multispectral image and its corresponding hyperspectral image that partially covers the same area, as well as training and testing labels, for Chikusei Dataset.

3.2.3. Results and analysis

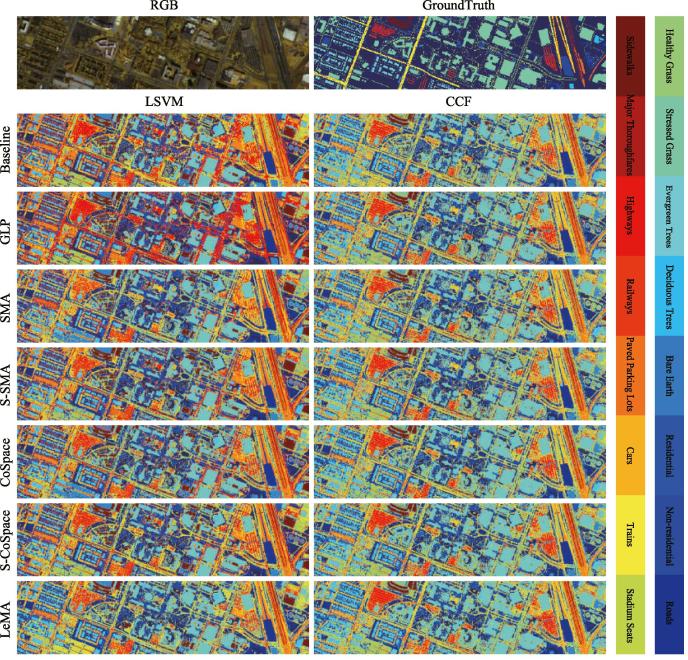

We assess the classification performance of the different algorithms for the Chikusei MS-HS data both quantitatively and visually, as shown in Fig. 7 and Table 3.

Fig. 7.

Classification maps of the different algorithms obtained using two kinds of classifiers on the Chikusei dataset.

Table 3.

Quantitative performance comparison with the different algorithms on the Chikusei data. The best one is shown in bold.

| Methods | Baseline (%) | Baseline (%) | GLP (%) | GLP (%) | SMA (%) | SMA (%) | S-SMA (%) | S-SMA (%) | CoSpace (%) | CoSpace (%) | S-CoSpace (%) | S-CoSpace (%) | LeMA (%) | LeMA (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Classifier | LSVM | CCF | LSVM | CCF | LSVM | CCF | LSVM | CCF | LSVM | CCF | LSVM | CCF | LSVM | CCF |
| OA | 60.20 | 71.11 | 62.30 | 72.26 | 67.90 | 71.53 | 69.68 | 73.27 | 71.12 | 75.69 | 72.60 | 77.11 | 75.11 | 81.71 |
| AA | 69.42 | 70.40 | 69.80 | 70.71 | 70.79 | 66.47 | 72.27 | 70.01 | 73.96 | 71.46 | 71.64 | 71.33 | 75.29 | 75.73 |
| κ | 0.5523 | 0.6761 | 0.5784 | 0.6894 | 0.6391 | 0.6802 | 0.6602 | 0.6818 | 0.6746 | 0.7260 | 0.6911 | 0.7420 | 0.7194 | 0.7933 |
| Class1 | 78.21 | 80.54 | 78.09 | 80.42 | 98.72 | 82.52 | 99.53 | 97.90 | 92.54 | 79.25 | 98.83 | 98.37 | 98.25 | 98.83 |
| Class2 | 94.43 | 82.70 | 94.11 | 93.84 | 93.20 | 92.50 | 93.20 | 93.09 | 93.47 | 94.91 | 87.04 | 93.63 | 93.20 | 93.79 |
| Class3 | 23.54 | 50.06 | 37.75 | 76.87 | 62.57 | 55.31 | 68.41 | 76.55 | 80.40 | 77.71 | 80.65 | 77.23 | 89.29 | 89.90 |
| Class4 | 92.13 | 92.56 | 92.23 | 95.72 | 90.57 | 91.53 | 92.51 | 88.76 | 90.59 | 96.23 | 94.64 | 92.49 | 95.11 | 96.96 |
| Class5 | 97.65 | 94.68 | 96.84 | 88.45 | 28.43 | 16.06 | 24.01 | 32.85 | 83.94 | 66.52 | 51.81 | 43.32 | 60.74 | 67.78 |
| Class6 | 62.01 | 81.48 | 57.47 | 69.67 | 62.52 | 78.91 | 68.27 | 79.67 | 63.61 | 79.02 | 72.34 | 88.48 | 76.34 | 87.27 |
| Class7 | 99.67 | 99.93 | 99.66 | 100.00 | 96.87 | 97.79 | 95.40 | 99.37 | 97.74 | 99.75 | 98.41 | 99.87 | 97.63 | 99.80 |
| Class8 | 57.11 | 93.40 | 69.06 | 98.93 | 95.59 | 93.49 | 96.88 | 96.53 | 95.05 | 92.72 | 99.48 | 98.45 | 99.27 | 99.18 |
| Class9 | 100.00 | 100.00 | 100.00 | 99.92 | 99.53 | 99.13 | 99.45 | 99.21 | 98.66 | 99.76 | 99.21 | 98.34 | 99.76 | 100.00 |
| Class10 | 24.81 | 19.56 | 26.64 | 19.06 | 21.39 | 15.48 | 20.94 | 13.09 | 22.35 | 18.00 | 22.75 | 14.83 | 26.47 | 26.46 |
| Class11 | 0.00 | 2.11 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.21 | 5.47 | 0.63 | 5.68 |
| Class12 | 90.32 | 88.91 | 90.32 | 89.61 | 90.14 | 85.92 | 90.14 | 89.44 | 90.32 | 80.46 | 89.96 | 89.44 | 88.38 | 90.14 |
| Class13 | 33.11 | 33.09 | 33.11 | 36.50 | 32.61 | 56.25 | 31.32 | 30.88 | 33.11 | 67.90 | 33.11 | 54.93 | 33.11 | 68.73 |
| Class14 | 94.20 | 85.38 | 79.12 | 59.40 | 72.85 | 59.40 | 94.20 | 86.31 | 59.40 | 52.44 | 14.39 | 49.19 | 45.01 | 53.60 |
| Class15 | 100.00 | 100.00 | 100.00 | 100.00 | 93.58 | 100.00 | 100.00 | 100.00 | 93.58 | 97.86 | 100.00 | 100.00 | 100.00 | 100.00 |
| Class16 | 74.88 | 88.62 | 74.19 | 93.52 | 99.71 | 99.51 | 99.80 | 98.82 | 97.84 | 100.00 | 97.35 | 97.25 | 98.04 | 95.78 |
| Class17 | 58.03 | 3.84 | 58.03 | 0.24 | 65.23 | 7.91 | 62.11 | 7.67 | 64.75 | 0.00 | 77.70 | 11.27 | 78.66 | 13.43 |

Parameter row: only the entries d, d, 10, and 20 survive; their assignment to the methods is not recoverable.

As with the University of Houston MS-HS data, the different algorithms follow a largely consistent trend on the Chikusei MS-HS data. On the whole, the original MS data (baseline) fails to identify some specific materials such as Plastic House, Manmade (Dark), Rice Field (Grown), Bare Soil (Farmland), and Forest, due to its poor spectral information and the limited number of training samples. GLP uses the unlabeled samples to augment the training samples in a semi-supervised way, yet it is still limited by the low-discriminative spectral signatures. By aligning the MS and HS data, the alignment-based approaches (SMA, S-SMA, CoSpace, S-CoSpace, and LeMA) are able to find a common subspace in which the learnt features are expected to absorb the different properties of the two modalities, resulting in better performance. Compared to the supervised methods (SMA and CoSpace), their semi-supervised versions (S-SMA and S-CoSpace) obtain higher overall accuracies with both classifiers, as detailed in Table 3. As expected, LeMA outperforms all other methods, reaching an OA of 75.11% with LSVM and 81.71% with CCF against 72.60% and 77.11% for S-CoSpace, thanks to the combination of common subspace learning from MS-HS data, data-driven graph learning, and the semi-supervised learning strategy. Even so, LeMA still fails to recognize some challenging classes, such as Weeds in Farmland, Row Crops, Plastic House, and Asphalt. The reasons could be twofold. On the one hand, the performance of LeMA is limited, to some extent, by the unbalanced data sets. On the other hand, LeMA's transfer ability degrades sharply when there is a large spectral variability between training and test samples.
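To make the role of the graph in GLP more concrete, the following is a minimal sketch of label propagation on a fixed Gaussian-kernel graph, assuming GLP follows the Gaussian-field formulation of Zhu et al. (2003). It is an illustration, not the implementation used in the experiments: the function names, the bandwidth `sigma`, the iteration count, and the toy points are hypothetical. LeMA differs in that it learns the graph from the data instead of fixing it with a kernel.

```python
import math


def gaussian_affinity(points, sigma):
    """Dense affinity matrix W[i][j] = exp(-||x_i - x_j||^2 / (2 sigma^2)), zero diagonal."""
    n = len(points)
    weights = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            sq_dist = sum((a - b) ** 2 for a, b in zip(points[i], points[j]))
            weights[i][j] = weights[j][i] = math.exp(-sq_dist / (2.0 * sigma ** 2))
    return weights


def propagate_labels(points, known_labels, n_classes, sigma=1.0, n_iter=200):
    """Iteratively average neighbour scores while clamping the labeled samples.

    known_labels maps a sample index to its class id. Returns one predicted
    class id per sample.
    """
    n = len(points)
    weights = gaussian_affinity(points, sigma)
    scores = [[0.0] * n_classes for _ in range(n)]
    for idx, cls in known_labels.items():
        scores[idx][cls] = 1.0

    for _ in range(n_iter):
        updated = []
        for i in range(n):
            if i in known_labels:
                updated.append(scores[i])  # labeled samples keep their one-hot score
                continue
            degree = sum(weights[i])
            updated.append([
                sum(weights[i][j] * scores[j][c] for j in range(n)) / degree
                for c in range(n_classes)
            ])
        scores = updated

    return [max(range(n_classes), key=lambda c: scores[i][c]) for i in range(n)]


# Toy 1-D example (hypothetical): two clusters with one labeled sample each.
points = [(0.0,), (0.2,), (0.4,), (3.0,), (3.2,), (3.4,)]
predicted = propagate_labels(points, known_labels={0: 0, 5: 1}, n_classes=2, sigma=0.5)
```

In this toy case the unlabeled samples inherit the label of the cluster they sit in. When the input features themselves are poorly discriminative, neighbouring samples from different classes become strongly connected, which is the limitation noted above for GLP on MS data.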

3.3. The real multispectral-lidar and hyperspectral datasets in DFC2018

Although we follow strict simulation procedures, the two MS-HS datasets used above (Houston and Chikusei) essentially originate from a similar data source (homogeneous), which means that their spectral features are strongly correlated. This makes it easier to transfer information between the modalities, but could limit the generalization ability in practice. We therefore also use a real bi-modal dataset, consisting of multispectral-lidar and hyperspectral data (heterogeneous), provided by the IEEE GRSS data fusion contest 2018 (DFC2018).

3.3.1. Data description

Multi-source optical remote sensing data, such as multispectral-lidar data, hyperspectral data, and very high-resolution RGB data, are provided in the contest. More specifically, the multispectral-lidar imagery consists of pixels with 7 bands (3 intensity bands and 4 DSMs-related bands (Saux et al., 2018)) collected at 1550 nm, 1064 nm, and 532 nm at a 0.5 m GSD, while the hyperspectral data comprises 48 bands covering a spectral range from 380 nm to 1050 nm at 1 m GSD, and its size is . In our case, the LeMA model is trained on partial multispectral-lidar and hyperspectral correspondences and tested using multispectral-lidar data only, in order to meet the requirement of our cross-modality learning task. The first row of Fig. 8 shows the RGB image of this scene and the labeled ground truth image.

Fig. 8.

Classification maps of the different algorithms obtained using two kinds of classifiers on the real dataset of DFC2018 (Multispectral-Lidar and Hyperspectral data).

3.3.2. Experimental setup

Our aim is, once again, to investigate whether a limited amount of hyperspectral data can improve the performance of another modality, e.g., multispectral data (homogeneous) or multispectral-lidar data (heterogeneous). Therefore, we randomly assign of total labeled samples as the training set and the rest as the test set in the experiment. Moreover, 16 main classes are selected out of 20 (see Fig. 8) by removing several small classes with too few samples, namely Artificial Turf, Water, Crosswalks, and Unpaved Parking Lots. As before, the parameters of the proposed LeMA and the compared algorithms are configured automatically by 10-fold cross-validation on the training set, as detailed in Section 3.1.2.
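The class selection step can be sketched as follows. The threshold `min_samples` and the function name `drop_small_classes` are hypothetical, since the text does not state the exact criterion used to decide that a class has too few samples; the label counts in the toy example are invented.

```python
from collections import Counter


def drop_small_classes(labels, min_samples):
    """Keep only samples whose class has at least min_samples labeled samples.

    Returns the indices of the retained samples and the sorted list of
    retained class names.
    """
    counts = Counter(labels)
    kept_classes = {cls for cls, count in counts.items() if count >= min_samples}
    kept_indices = [i for i, cls in enumerate(labels) if cls in kept_classes]
    return kept_indices, sorted(kept_classes)


# Toy label list (hypothetical counts): one class is too small to keep.
labels = ["Roads"] * 50 + ["Healthy Grass"] * 40 + ["Water"] * 3
kept_indices, kept_classes = drop_small_classes(labels, min_samples=10)
```

Filtering before the random split ensures that every retained class can contribute samples to both the training and the test set.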

3.3.3. Results and analysis

Because the training and test sets are randomly regenerated from the total samples in each run, we report the results of the different algorithms averaged over 10 runs, as listed in Table 4, to obtain a relatively stable and meaningful performance comparison. Correspondingly, Fig. 8 visually highlights the differences between the classification maps of the different methods.
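The averaging protocol can be sketched as below. The training fraction is a placeholder, because its value is not given in the text above, and `evaluate` is a hypothetical callback standing in for training and testing any of the compared methods on one split. The toy labels and the majority-class scorer exist only to make the example runnable.

```python
import random
import statistics
from collections import Counter


def repeated_evaluation(n_samples, train_fraction, evaluate, n_runs=10, base_seed=0):
    """Average a score over n_runs independent random train/test splits.

    evaluate(train_idx, test_idx) must return a single score for one split.
    Returns the mean and the sample standard deviation of the scores.
    """
    n_train = max(1, round(train_fraction * n_samples))
    scores = []
    for run in range(n_runs):
        indices = list(range(n_samples))
        random.Random(base_seed + run).shuffle(indices)  # a new split per run
        scores.append(evaluate(indices[:n_train], indices[n_train:]))
    return statistics.mean(scores), statistics.stdev(scores)


# Toy stand-in (hypothetical): predict the majority training class everywhere.
labels = [0] * 70 + [1] * 30


def majority_class_accuracy(train_idx, test_idx):
    majority = Counter(labels[i] for i in train_idx).most_common(1)[0][0]
    return sum(labels[i] == majority for i in test_idx) / len(test_idx)


mean_acc, std_acc = repeated_evaluation(
    len(labels), train_fraction=0.2, evaluate=majority_class_accuracy
)
```

Reporting the spread alongside the mean indicates how sensitive a method is to the particular random split.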

Table 4.

Quantitative performance comparison with the different algorithms on the DFC2018 data. The best one is shown in bold.

| Methods | Baseline (%) | Baseline (%) | GLP (%) | GLP (%) | SMA (%) | SMA (%) | S-SMA (%) | S-SMA (%) | CoSpace (%) | CoSpace (%) | S-CoSpace (%) | S-CoSpace (%) | LeMA (%) | LeMA (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Classifier | LSVM | CCF | LSVM | CCF | LSVM | CCF | LSVM | CCF | LSVM | CCF | LSVM | CCF | LSVM | CCF |
| OA | 51.35 | 72.84 | 52.28 | 73.15 | 52.73 | 70.37 | 54.69 | 72.13 | 55.56 | 74.04 | 58.65 | 76.59 | 61.69 | 79.98 |
| AA | 59.46 | 78.64 | 60.57 | 81.64 | 58.06 | 77.78 | 65.34 | 78.72 | 66.16 | 80.46 | 67.72 | 83.67 | 65.54 | 88.82 |
| κ | 0.4194 | 0.6534 | 0.4289 | 0.6587 | 0.4366 | 0.6256 | 0.4598 | 0.6441 | 0.4670 | 0.6682 | 0.4987 | 0.6990 | 0.5284 | 0.7414 |
| Class1 | 91.70 | 84.62 | 96.15 | 93.12 | 84.01 | 85.43 | 94.13 | 90.89 | 95.14 | 89.07 | 94.74 | 95.14 | 92.31 | 100.00 |
| Class2 | 33.90 | 80.17 | 35.62 | 80.74 | 73.00 | 82.40 | 69.57 | 80.17 | 61.32 | 80.37 | 69.73 | 81.52 | 78.09 | 87.90 |
| Class3 | 94.92 | 96.16 | 96.02 | 96.57 | 95.06 | 95.06 | 96.30 | 96.30 | 93.83 | 97.26 | 94.79 | 96.30 | 96.57 | 99.45 |
| Class4 | 83.00 | 92.50 | 85.50 | 97.50 | 85.50 | 90.00 | 84.50 | 94.00 | 83.00 | 91.00 | 85.50 | 98.00 | 79.00 | 100.00 |
| Class5 | 43.71 | 90.42 | 30.54 | 87.43 | 53.29 | 87.43 | 52.10 | 85.03 | 61.08 | 92.22 | 45.51 | 92.22 | 30.54 | 100.00 |
| Class6 | 80.44 | 90.60 | 81.32 | 91.82 | 78.79 | 87.77 | 82.80 | 87.98 | 83.94 | 90.35 | 85.24 | 91.27 | 89.71 | 96.50 |
| Class7 | 59.26 | 82.01 | 61.11 | 81.52 | 57.62 | 78.21 | 58.66 | 82.45 | 59.89 | 82.37 | 63.95 | 85.14 | 69.56 | 87.47 |
| Class8 | 14.07 | 31.98 | 10.75 | 36.00 | 21.71 | 28.00 | 20.83 | 35.16 | 26.64 | 38.71 | 11.77 | 39.51 | 31.43 | 49.96 |
| Class9 | 48.54 | 54.14 | 50.77 | 58.40 | 44.87 | 56.96 | 52.60 | 53.49 | 47.94 | 63.30 | 53.69 | 68.55 | 40.47 | 62.26 |
| Class10 | 10.16 | 42.07 | 8.00 | 31.70 | 6.77 | 37.82 | 5.55 | 29.21 | 11.02 | 36.67 | 24.21 | 38.40 | 12.93 | 38.04 |
| Class11 | 23.54 | 72.03 | 25.96 | 79.07 | 79.07 | 74.45 | 45.88 | 75.45 | 34.21 | 76.26 | 54.12 | 81.49 | 62.58 | 100.00 |
| Class12 | 93.85 | 85.85 | 92.92 | 94.46 | 92.00 | 87.08 | 85.85 | 90.15 | 85.54 | 86.15 | 74.15 | 95.38 | 66.46 | 100.00 |
| Class13 | 60.50 | 74.96 | 57.31 | 87.56 | 59.33 | 73.45 | 60.17 | 77.98 | 63.03 | 79.33 | 64.71 | 87.06 | 70.59 | 99.83 |
| Class14 | 39.93 | 87.15 | 55.21 | 90.63 | 17.71 | 86.11 | 47.22 | 85.76 | 66.32 | 89.58 | 75.69 | 90.63 | 55.21 | 99.65 |
| Class15 | 95.39 | 96.77 | 97.70 | 100.00 | 93.55 | 98.16 | 99.54 | 97.70 | 99.54 | 98.62 | 99.54 | 100.00 | 95.85 | 100.00 |
| Class16 | 78.39 | 96.77 | 84.19 | 99.68 | 77.74 | 96.13 | 89.68 | 97.74 | 86.13 | 96.13 | 86.13 | 98.06 | 77.42 | 100.00 |

Parameter row: only the entries d, d, 7, and 30 survive; their assignment to the methods is not recoverable.

Generally speaking, embedding hyperspectral information can effectively improve the classification performance of the multispectral-lidar data, which implies that the models based on common subspace learning (SMA, S-SMA, CoSpace, S-CoSpace, and LeMA) can, to some extent, transfer knowledge from one modality to another. We also observe from Table 4 that the semi-supervised methods, which take the unlabeled samples into account (GLP, S-SMA, and S-CoSpace), consistently achieve higher OA than their purely supervised counterparts (Baseline, SMA, and CoSpace). As expected, LeMA, which integrates rich spectral information and unlabeled samples, achieves the best overall performance, which demonstrates that the learned graph structure is better suited to capturing the data distribution and, in turn, to finding a potentially optimal decision boundary.

It should be noted, however, that compared to their performance on the simulated MS-HS datasets from similar sources (homogeneous), the knowledge transfer ability of these algorithms on the real multispectral-lidar and hyperspectral datasets from different sources (heterogeneous) remains limited, since all listed methods, including LeMA, are modeled linearly. Although a single linear transformation yields a limited performance improvement, it cannot fit the gap between heterogeneous modalities well.

4. Conclusions

In real-world problems, a large amount of low-quality data (e.g. MS data) can often be easily collected. In contrast, high-quality data (e.g. HS data) are usually expensive and difficult to obtain. This motivates us to investigate whether a limited amount of high-quality data can contribute to relevant tasks with a large amount of low-quality data. For this purpose, we propose a novel semi-supervised learning framework called LeMA, which effectively connects the common subspace and label information, and automatically embeds the unlabeled information into the proposed framework by adaptively learning a Laplacian matrix from the data. Extensive experiments are conducted with LeMA on two homogeneous simulated MS-HS datasets and a heterogeneous real multispectral-lidar and hyperspectral dataset, in comparison with other state-of-the-art algorithms, demonstrating the superiority and effectiveness of LeMA in terms of knowledge transfer ability. We have to admit, however, that despite the significant performance improvement of LeMA, its representation ability is still limited by its linear modeling, especially when facing highly nonlinear heterogeneous data. To address this issue, we will extend our model to a nonlinear version and simultaneously consider spatial information (e.g., morphological profiles) to further strengthen the feature representation ability.

Acknowledgements

The authors would like to thank the Hyperspectral Image Analysis group and the NSF Funded Center for Airborne Laser Mapping (NCALM) at the University of Houston for providing the CASI University of Houston dataset. The authors would like to express their appreciation to Prof. D. Cai and Dr. C. Wang for providing MATLAB codes for LPP and manifold alignment algorithms.

This work was supported by funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation program (grant agreement No [ERC-2016-StG-714087]) and from Helmholtz Association under the framework of the Young Investigators Group “SiPEO” (VH-NG-1018, www.sipeo.bgu.tum.de). The work of N. Yokoya was supported by Japan Society for the Promotion of Science (JSPS) KAKENHI 15K20955 and Alexander von Humboldt Fellowship for postdoctoral researchers.

The connectivity in manifold alignment is not strictly equivalent to the similarity of the two samples.

In contrast to multi-modal learning (bi-modality for example), cross-modal learning trains on single modality and tests on bi-modality, or vice versa (train on bi-modality and test on single modality).

References

- Banerjee B., Bovolo F., Bhattacharya A., Bruzzone L., Chaudhuri S., Buddhiraju K.M. A novel graph-matching-based approach for domain adaptation in classification of remote sensing image pair. IEEE Trans. Geosci. Remote Sens. 2015;53(7):4045–4062.

- Bertsekas D.P. Athena Scientific Belmont; 1999. Nonlinear Programming.

- Boyd S., Parikh N., Chu E., Peleato B., Eckstein J. Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends® Mach. Learn. 2011;3(1):1–122.

- Bruzzone L., Marconcini M. Domain adaptation problems: a dasvm classification technique and a circular validation strategy. IEEE Trans. Pattern Anal. Mach. Intell. 2010;32(5):770–787. doi: 10.1109/TPAMI.2009.57.

- Chen, L., Li, X., Sun, D., Toh, K., 2018. On the equivalence of inexact proximal alm and admm for a class of convex composite programming. arXiv preprint arXiv:1803.10803.

- Gu Q., Li Z., Han J. Proceedings of the 22th International Joint Conference on Artificial Intelligence (IJCAI) 2011. Joint feature selection and subspace learning; pp. 1294–1299.

- Heide F., Heidrich W., Wetzstein G. IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2015. Fast and flexible convolutional sparse coding; pp. 5135–5143.

- Hong D., Yokoya N., Zhu X. Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), 2016 8th Workshop on. IEEE; 2016. The k-lle algorithm for nonlinear dimensionality reduction of large-scale hyperspectral data; pp. 1–5.

- Hong D., Yokoya N., Chanussot J., Zhu X. Proceedings of IEEE International Conference on Image Processing (ICIP) 2017. Learning low-coherence dictionary to address spectral variability for hyperspectral unmixing; pp. 1–5.

- Hong D., Yokoya N., Zhu X. Learning a robust local manifold representation for hyperspectral dimensionality reduction. IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens. 2017;10(6):2960–2975.

- Hong D., Yokoya N., Xu J., Zhu X. European Conference on Computer Vision (ECCV) Springer; 2018. Joint and progressive learning from high-dimensional data for multi-label classification; pp. 478–493.

- Huang X., Lu Q., Zhang L. A multi-index learning approach for classification of high-resolution remotely sensed images over urban areas. ISPRS J. Photogrammetry Remote Sens. 2014;90:36–48.

- Ji S., Ye J. Proceedings of the 21th International Joint Conference on Artificial Intelligence (IJCAI) 2009. Linear dimensionality reduction for multi-label classification; pp. 1077–1082.

- Jiang J., Zhai X. Proceedings of ACL. 2007. Instance weighting for domain adaptation in nlp; pp. 264–271.

- Kang J., Körner M., Wang Y., Taubenböck H., Zhu X. Building instance classification using street view images. ISPRS J. Photogrammetry Remote Sens. 2018

- Khosla A., Zhou T., Malisiewicz T., Efros A., Torralba A. European Conference on Computer Vision (ECCV) Springer; 2012. Undoing the damage of dataset bias; pp. 158–171.

- Liao D., Qian Y., Zhou J., Tang Y. A manifold alignment approach for hyperspectral image visualization with natural color. IEEE Trans. Geosci. Remote Sens. 2016;54(6):3151–3162.

- Li J., Zhang H., Zhang L. Column-generation kernel nonlocal joint collaborative representation for hyperspectral image classification. ISPRS J. Photogrammetry Remote Sens. 2014;94:25–36.

- Lin, Z., Chen, M., Ma, Y., 2010. The augmented lagrange multiplier method for exact recovery of corrupted low-rank matrices. arXiv preprint arXiv:1009.5055.

- Liu G., Lin Z., Yan S., Sun J., Yu Y., Ma Y. Robust recovery of subspace structures by low-rank representation. IEEE Trans. Pattern Anal. Mach. Intell. 2013;35(1):171–184. doi: 10.1109/TPAMI.2012.88.

- Matasci G., Volpi M., Kanevski M., Bruzzone L., Tuia D. Semisupervised transfer component analysis for domain adaptation in remote sensing image classification. IEEE Trans. Geosci. Remote Sens. 2015;53(7):3550–3564.

- Persello C.C., Bruzzone L. Active learning for domain adaptation in the supervised classification of remote sensing images. IEEE Trans. Geosci. Remote Sens. 2012;50(11):4468–4483.

- Samat A., Gamba P., Abuduwaili J., Liu S., Miao Z. Geodesic flow kernel support vector machine for hyperspectral image classification by unsupervised subspace feature transfer. Remote Sens. 2016;8(3):234.

- Samat A., Gamba P., Liu S., Du P., Abuduwaili J. Jointly informative and manifold structure representative sampling based active learning for remote sensing image classification. IEEE Trans. Geosci. Remote Sens. 2016;54(11):6803–6817.

- Samat A., Persello C., Gamba P., Liu S., Abuduwaili J., Li E. Supervised and semi-supervised multi-view canonical correlation analysis ensemble for heterogeneous domain adaptation in remote sensing image classification. Remote Sens. 2017;9(4):337.

- Saux B.L., Yokoya N., Hansch R., Prasad S. 2018 IEEE GRSS data fusion contest: multimodal land use classification [technical committees] IEEE Geosci. Remote Sens. Mag. 2018;6(1):52–54.

- Sugiyama M., Nakajima S., Kashima H., Buenau P., Kawanabe M. Advances in Neural Information Processing Systems (NIPS) 2008. Direct importance estimation with model selection and its application to covariate shift adaptation; pp. 1433–1440.

- Tarabalka Y., Benediktsson J., Chanussot J. Spectral-spatial classification of hyperspectral imagery based on partitional clustering techniques. IEEE Trans. Geosci. Remote Sens. 2009;47(8):2973–2987.

- Tom, R., Frank, W., 2015. Canonical correlation forests. arXiv preprint arXiv:1507.05444.

- Tuia D., Volpi M., Trolliet M., Camps-Valls G. Semisupervised manifold alignment of multimodal remote sensing images. IEEE Trans. Geosci. Remote Sens. 2014;52(12):7708–7720.

- Tuia D., Persello C., Bruzzone L. Domain adaptation for the classification of remote sensing data: an overview of recent advances. IEEE Geosci. Remote Sens. Mag. 2016;4(2):41–57.

- Tuia D., Marcos D., Camps-Valls G. Multi-temporal and multi-source remote sensing image classification by nonlinear relative normalization. ISPRS J. Photogrammetry Remote Sens. 2016;120:1–12.

- Van der Meer F.D., Van der Werff H.M.A., Van Ruitenbeek F.J.A. Potential of esa’s sentinel-2 for geological applications. Remote Sens. Environ. 2014;148:124–133.

- Wang C., Mahadevan S. AAAI Fall Symposium on Manifold Learning and its Applications (AAAI) 2009. A general framework for manifold alignment.

- Wang C., Mahadevan S. Proceedings of the 22th International Joint Conference on Artificial Intelligence (IJCAI) 2011. Heterogeneous domain adaptation using manifold alignment; pp. 1541–1546.

- Wang C., Krafft P., Mahadevan S. CSC Press; 2011. Chapter of Manifold Learning: Theory and Applications-Manifold alignment.

- Woodcock C., Macomber S.A., Pax-Lenney M., Cohen W.B. Monitoring large areas for forest change using landsat: generalization across space, time and landsat sensors. Remote Sens. Environ. 2001;78(1–2):194–203.

- Xia J., Chanussot J., Du P., He X. Semi-supervised probabilistic principal component analysis for hyperspectral remote sensing image classification. IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens. 2014;7(6):2224–2236.

- Yang C., Everitt J.H., Du Q., Luo B., Chanussot J. Using high-resolution airborne and satellite imagery to assess crop growth and yield variability for precision agriculture. Proc. IEEE. 2013;101(3):582–592.

- Yokoya, N., Iwasaki, A., 2016. Airborne hyperspectral data over chikusei. Tech. Rep. SAL-2016-05-27.

- Yokoya N., Grohnfeldt C., Chanussot J. Hyperspectral and multispectral data fusion: a comparative review. IEEE Geosci. Remote Sens. Mag. 2017;5(2):29–56.

- Zhang L., Zhang L., Tao D., Huang X. On combining multiple features for hyperspectral remote sensing image classification. IEEE Trans. Geosci. Remote Sens. 2012;50(3):879–893.

- Zhong Y., Wang X., Zhao L., Feng R., Zhang L., Xu Y. Blind spectral unmixing based on sparse component analysis for hyperspectral remote sensing imagery. ISPRS J. Photogrammetry Remote Sens. 2016;119:49–63.

- Zhou P., Zhang C., Lin Z. Bilevel model based discriminative dictionary learning for recognition. IEEE Trans. Image Process. 2017;26(3):1173–1187. doi: 10.1109/TIP.2016.2623487.

- Zhu X., Ghahramani Z., Lafferty J.D. Proceedings of the 20th International Conference on Machine Learning (ICML) 2003. Semi-supervised learning using gaussian fields and harmonic functions; pp. 912–919.