Abstract

Background

Virtual reality (VR) is a technology that allows the user to explore and manipulate computer-generated real or artificial three-dimensional multimedia sensory environments in real time to gain practical knowledge that can be used in clinical practice.

Objective

The aim of this systematic review was to evaluate the effectiveness of VR for educating health professionals and improving their knowledge, cognitive skills, attitudes, and satisfaction.

Methods

We performed a systematic review of the effectiveness of VR in pre- and postregistration health professions education following the gold standard Cochrane methodology. We searched 7 databases from the year 1990 to August 2017. No language restrictions were applied. We included randomized controlled trials and cluster-randomized trials. Working in pairs, we independently selected studies, extracted data, and assessed risk of bias, and then compared our results. We contacted authors of the studies for additional information if necessary. All pooled analyses were based on random-effects models. We used the Grading of Recommendations, Assessment, Development and Evaluations (GRADE) approach to rate the quality of the body of evidence.

Results

A total of 31 studies (2407 participants) were included. Meta-analysis of 8 studies found that VR slightly improves postintervention knowledge scores when compared with traditional learning (standardized mean difference [SMD]=0.44; 95% CI 0.18-0.69; I2=49%; 603 participants; moderate certainty evidence) or other types of digital education such as online or offline digital education (SMD=0.43; 95% CI 0.07-0.79; I2=78%; 608 participants [8 studies]; low certainty evidence). Another meta-analysis of 4 studies found that VR improves health professionals’ cognitive skills when compared with traditional learning (SMD=1.12; 95% CI 0.81-1.43; I2=0%; 235 participants; large effect size; moderate certainty evidence). Two studies compared the effect of VR with other forms of digital education on skills, favoring the VR group (SMD=0.5; 95% CI 0.32-0.69; I2=0%; 467 participants; moderate effect size; low certainty evidence). The findings for attitudes and satisfaction were mixed and inconclusive. None of the studies reported any patient-related outcomes, behavior change, or unintended or adverse effects of VR. Overall, the certainty of evidence according to the GRADE criteria ranged from low to moderate. We downgraded our certainty of evidence primarily because of the risk of bias and/or inconsistency.

Conclusions

We found evidence suggesting that VR improves postintervention knowledge and skills outcomes of health professionals when compared with traditional education or other types of digital education such as online or offline digital education. The findings on other outcomes are limited. Future research should evaluate the effectiveness of immersive and interactive forms of VR and evaluate other outcomes such as attitude, satisfaction, cost-effectiveness, and clinical practice or behavior change.

Keywords: virtual reality, health professions education, randomized controlled trials, systematic review, meta-analysis

Introduction

Adequately trained health professionals are essential to ensure access to health services and to achieve universal health coverage [1]. In 2013, the World Health Organization (WHO) estimated a shortage of approximately 17.4 million health professionals worldwide [1]. The shortage and disproportionate distribution of health workers worldwide can be aggravated by the inadequacy of training programs (in terms of content, organization, and delivery) and experience needed to provide uniform health care services to all [2]. It has, therefore, become essential to generate strategies focused on scalable, efficient, and high-quality health professions education [3]. Increasingly, digital technology, with its pervasive use and relentless advancement, is seen as a promising source of effective and efficient health professions education and training systems [4].

Digital education (also known as eLearning) is the act of teaching and learning by means of digital technologies. It is an overarching term for an evolving multitude of educational approaches, concepts, methods, and technologies [5]. Digital education can include, but is not limited to, online and offline computer-based digital education, massive open online courses, virtual reality (VR), virtual patients, mobile learning, serious gaming and gamification, and psychomotor skills trainers [5]. A strong evidence base is needed to support effective use of these different digital modalities for health professions education. To this end, as part of an evidence synthesis series for digital health education, we focused on one of the digital education modalities, VR [6].

VR is a technology that allows the user to explore and manipulate computer-generated real or artificial three-dimensional (3D) multimedia sensory environments in real time. It allows for a first-person active learning experience through different levels of immersion; that is, a perception of the digital world as real and the ability to interact with objects and/or perform a series of actions in this digital world [7-9]. VR can be displayed with a variety of tools, including computer or mobile device screens, VR rooms, or head-mounted displays. VR rooms are projector-based immersive 3D visualization systems that simulate real or virtual environments in a closed space and can involve multiple users at the same time [10]. Head-mounted displays are placed over the user’s head and provide an immersive 3D environmental experience for learning [7]. VR can also facilitate diverse forms of health professions education. For example, it is often used for designing 3D anatomical structure models, which can be toggled and zoomed into [11]. VR also enables the creation of virtual worlds or 3D environments with virtual representations of users, called avatars. Avatars in VR for health professions education can represent patients or health professionals. By enabling simulation, VR is highly conducive to training focused on clinical and surgical procedures.

We found several reviews focusing primarily on the development of technical skills as part of training focused on surgical and clinical procedures, most of which called for more research on the topic [12-15]. However, VR also offers a range of other educational opportunities, such as the development of cognitive and nontechnical competencies [13-18]. Our review addresses this gap in the existing literature by investigating the effectiveness of VR for cognitive and nontechnical health professions education.

Methods

Systematic Review

We adhered to the published protocol [6] and followed the Cochrane guidelines [19]. The review is reported according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses guidelines [20]. For a detailed description of the methodology, please refer to the study by Car et al [5].

Study Selection

We included randomized and cluster-randomized controlled trials that compared any VR intervention with any control intervention for the education of pre- or postregistration health professionals. We included health professionals with qualifications listed in the Health Field of Education and Training (091) of the International Standard Classification of Education. VR interventions could be delivered as the only mode of education or blended with traditional learning (ie, blended learning). We included studies on VR for cognitive and nontechnical health professions education, covering all VR delivery devices and levels of immersion. We included only studies in which participants used VR without any additional physical objects or devices, such as probes or handles for psychomotor or technical skill development. We included studies that compared VR or blended learning with traditional learning, other types of digital education, or another form of VR intervention.

We differentiated the following types of VR: 3D models, virtual patients or virtual health professionals (VP or VHP) within VR, and surgical simulation. Although we included studies of virtual patients within VR, studies of virtual patient scenarios outside VR were excluded; these are covered by a separate review of virtual patients alone (simulation) [10]. We excluded studies of students and/or practitioners of traditional, alternative, and complementary medicine. We also excluded studies with a crossover design because of the likelihood of a carryover effect.

We extracted data on the following primary outcomes:

Learners’ knowledge postintervention: Knowledge is defined as learners’ factual or conceptual understanding measured using change between pre- and posttest scores.

Learners’ skills postintervention: Skills are defined as learners’ ability to demonstrate a procedure or technique in an educational setting.

Learners’ attitudes postintervention toward new competences, clinical practice, or patients (eg, recognition of moral and ethical responsibilities toward patients): Attitude is defined as the tendency to respond positively or negatively toward the intervention.

Learners’ satisfaction postintervention with the learning intervention (eg, retention rates, dropout rates, and survey satisfaction scores): This can be defined as the level of approval when comparing the perceived performance of digital education with one’s expectations.

Change in learners’ clinical practice or behavior (eg, reduced antibiotic prescriptions and improved clinical diagnosis): This can be defined as any change in clinical practice after the intervention that improves the quality of care and clinical outcomes.

We also extracted data on the following secondary outcomes:

Cost and cost-effectiveness of the intervention

Patient-related outcomes (eg, patient mortality, patient morbidity, and medication errors)

Data Sources, Collection, Analysis, and Risk of Bias Assessment

We developed a comprehensive search strategy for MEDLINE (Ovid), Embase (Elsevier), Cochrane Central Register of Controlled Trials (CENTRAL; Wiley), PsycINFO (Ovid), ERIC (Ovid), CINAHL (EBSCO), Web of Science Core Collection, and clinical trial registries (ClinicalTrials.gov and WHO ICTRP). Databases were searched from January 1990 to August 2017. We chose 1990 as the starting year because before then, computers and digital technologies were used only for very basic tasks. There were no language or publication restrictions (see Multimedia Appendix 1).

The search results from the different bibliographic databases were combined in a single EndNote library, and duplicate records were removed. Four authors (BMK, NS, JV, and CKN) independently screened the search results and assessed full-text studies for inclusion. Any disagreements were resolved through discussion between the authors. Study authors were contacted for unclear or missing information.

Five reviewers (BMK, NS, JV, CKN, and UD) independently extracted data using a structured data extraction form. Disagreements between review authors were resolved by discussion. We extracted data on the participants, interventions, comparators, and outcomes. If studies had multiple arms, we compared the most interactive intervention arm with the least interactive control arm.

Two reviewers (BMK and NS) independently assessed the risk of bias of randomized controlled trials using the Cochrane risk of bias tool [19,21], which covers the following domains: random sequence generation, allocation concealment, blinding of outcome assessors, completeness of outcome data, and selective outcome reporting. We also assessed 2 additional sources of bias, baseline imbalance and inappropriate administration of an intervention, as recommended by the Cochrane Handbook for Systematic Reviews of Interventions [21]. Studies were judged to be at high risk of bias if 1 or more key domains were at high risk of bias, and at unclear risk of bias if at least 2 domains were at unclear risk of bias.
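The study-level judgment rule above can be expressed as a small decision function. This is a minimal sketch under two assumptions that the text does not state: every listed domain (including the 2 additional ones) is treated as a key domain, and a study with no high-risk domain and at most 1 unclear domain is classed as low risk. The domain identifiers are hypothetical labels, not names taken from the Cochrane tool.

```python
from __future__ import annotations

from enum import Enum


class Risk(Enum):
    LOW = "low"
    UNCLEAR = "unclear"
    HIGH = "high"


# Hypothetical identifiers for the domains listed in the text. All are treated
# as "key" domains here, which is an assumption (the text does not say which are key).
DOMAINS = (
    "random_sequence_generation",
    "allocation_concealment",
    "blinding_of_outcome_assessors",
    "incomplete_outcome_data",
    "selective_reporting",
    "baseline_imbalance",
    "inappropriate_intervention_administration",
)


def overall_risk(judgments: dict[str, Risk]) -> Risk:
    """Summarize per-domain judgments into one study-level judgment."""
    missing = set(DOMAINS) - set(judgments)
    if missing:
        raise ValueError(f"missing judgments for: {sorted(missing)}")
    # High risk in at least 1 domain makes the whole study high risk.
    if any(judgments[d] is Risk.HIGH for d in DOMAINS):
        return Risk.HIGH
    # Unclear risk in at least 2 domains makes the study unclear risk.
    if sum(judgments[d] is Risk.UNCLEAR for d in DOMAINS) >= 2:
        return Risk.UNCLEAR
    # Assumption: anything else (at most 1 unclear domain) counts as low risk.
    return Risk.LOW


if __name__ == "__main__":
    study = {d: Risk.LOW for d in DOMAINS}
    study["allocation_concealment"] = Risk.UNCLEAR
    study["random_sequence_generation"] = Risk.UNCLEAR
    print(overall_risk(study).value)  # unclear
```

Checking the high-risk condition first matters: a study with both a high-risk domain and several unclear domains is reported as high risk, which matches the ordering of the rule as written.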

Data Synthesis and Analysis

Studies were grouped by outcome and comparison. Comparators included traditional education, other forms of digital education, and other types of VR. For consistency, we analyzed postintervention outcome data, as this was the form of findings most commonly reported in the included studies. For continuous outcomes, we summarized the standardized mean differences (SMDs) and associated 95% CIs across studies. Because we could not identify a clinically meaningful interpretation of SMDs specific to digital education interventions, we followed other evidence syntheses of educational research and interpreted SMDs using the Cohen rule of thumb: <0.2, no effect; 0.2 to 0.5, small effect size; 0.5 to 0.8, medium effect size; and >0.8, large effect size [22,23]. For dichotomous outcomes, we summarized relative risks and associated 95% CIs across studies. Where sufficient data were available, summary SMDs and associated 95% CIs were estimated using random-effects meta-analysis [21]. The I2 statistic was used to evaluate heterogeneity, with I2<25%, 25% to 75%, and >75% representing a low, moderate, and high degree of inconsistency, respectively. The meta-analysis was performed using Review Manager 5.3 (Cochrane Library Software, Oxford, UK).
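To make the pooling and interpretation steps concrete, the following Python sketch computes a bias-corrected SMD (Hedges' g) for each study, pools studies with the DerSimonian-Laird random-effects method, derives I2, and applies the Cohen and I2 thresholds stated above. It is an illustrative reimplementation, not Review Manager's code; Review Manager may differ in details such as the exact variance formula for g. The function names are hypothetical, and the handling of values exactly at 0.5 or 0.8 is our own choice because the stated ranges share their endpoints.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


def hedges_g(m1: float, sd1: float, n1: int, m2: float, sd2: float, n2: int) -> tuple[float, float]:
    """Return (g, variance of g) for group 1 (intervention) vs group 2 (control)."""
    # Pooled within-group standard deviation.
    sd_pooled = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))
    d = (m1 - m2) / sd_pooled
    # Small-sample correction factor J turns Cohen's d into Hedges' g.
    j = 1 - 3 / (4 * (n1 + n2) - 9)
    var_d = (n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2))
    return j * d, j**2 * var_d


@dataclass
class Pooled:
    estimate: float
    se: float
    ci_low: float
    ci_high: float
    tau2: float
    q: float
    i2: float  # percent


def dersimonian_laird(effects: list[float], variances: list[float]) -> Pooled:
    """Random-effects pooling of study effects with known within-study variances."""
    w = [1 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * y for wi, y in zip(w, effects)) / sum_w
    # Cochran's Q around the fixed-effect (inverse variance) estimate.
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, effects))
    df = len(effects) - 1
    c = sum_w - sum(wi**2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    # Random-effects weights add the between-study variance tau^2.
    w_star = [1 / (v + tau2) for v in variances]
    estimate = sum(wi * y for wi, y in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1 / sum(w_star))
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return Pooled(estimate, se, estimate - 1.96 * se, estimate + 1.96 * se, tau2, q, i2)


def effect_size_label(smd: float) -> str:
    """Cohen rule of thumb as stated in the text; boundary handling is our choice."""
    size = abs(smd)
    if size < 0.2:
        return "no effect"
    if size < 0.5:
        return "small"
    if size <= 0.8:
        return "medium"
    return "large"


def heterogeneity_label(i2: float) -> str:
    """I2 bands from the text: <25% low, 25% to 75% moderate, >75% high."""
    if i2 < 25:
        return "low"
    if i2 <= 75:
        return "moderate"
    return "high"
```

For example, pooling 2 illustrative (invented) effects of 0.2 and 0.8, each with variance 0.04, gives a pooled SMD of 0.5 with I2 of about 78%. Applied to the pooled estimates reported in the Results, the labels reproduce the wording used there: 0.44 is small, 0.60 is medium, and 1.12 is large.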

We prepared Summary of Findings tables presenting the results and a judgment on the quality of the evidence using the Grading of Recommendations, Assessment, Development and Evaluations methodology. Findings that we were unable to pool, because of lack of data or high heterogeneity, are presented as a narrative synthesis.

Results

Results of the Search

The searches identified 30,532 unique references; of these, 31 studies (33 reports; 2407 participants) fulfilled the inclusion criteria [11,24-53] (see Figure 1).

Figure 1.

Study flow diagram. RCT: randomized controlled trial.

Characteristics of Included Studies

All included studies were conducted in high-income countries, and 21 studies included only preregistration health professionals. A range of VR educational interventions were evaluated, including 3D models, VP or VHP within virtual worlds, and VR surgical simulations. Control group interventions ranged from traditional learning (eg, lectures and textbooks) to other digital education interventions (online and offline) and other forms of VR (eg, with limited functions, noninteractivity, or nontutored support; see Multimedia Appendix 2). Although they met the inclusion criteria, some studies did not provide comparable outcome data. Of the 24 studies assessing knowledge, 1 did not provide any comparable data to estimate the effect of the intervention [44]. Likewise, 2 of 12 studies assessing skills [29,49], 4 of 8 studies assessing attitude [38,40,46,50], and 8 of 12 studies assessing satisfaction [24,29,33,37,48,49,52,53] did not provide comparable data.

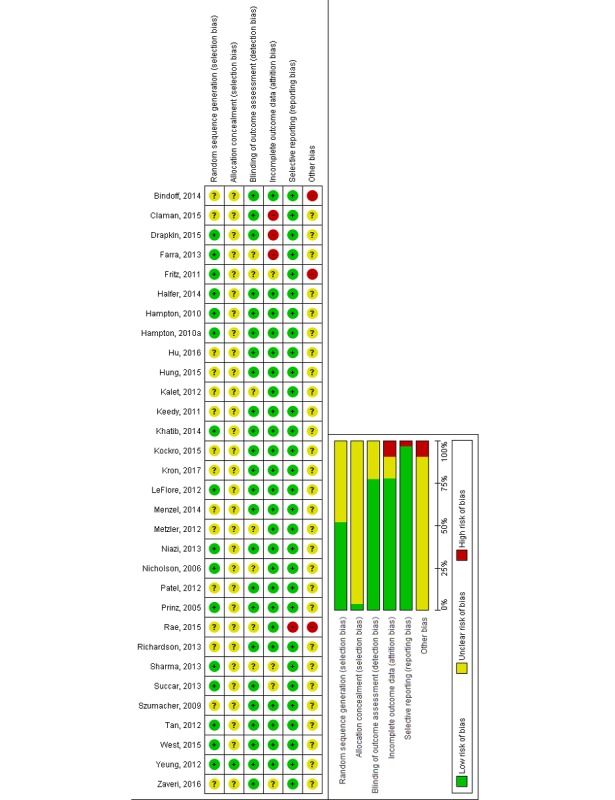

Risk of Bias

Overall, studies were judged to be at unclear or high risk of bias (see Figure 2). Most studies lacked information on randomization, allocation concealment, and participants’ baseline characteristics. Studies were mostly at low risk of bias for blinding of outcome assessment, as they provided detailed information on blinding of outcome measures and/or used predetermined assessment tools (multiple choice questions, surveys, etc). In comparisons with traditional education, blinding of participants was impossible, but we judged these studies to be at low risk of detection bias because outcomes were measured with automated or formalized instruments. However, most of these instruments lacked information on validation. Most studies were judged to be at low risk of attrition and selection bias. Overall, 6 studies were judged to be at high risk of bias because of reported significant baseline differences in participant characteristics or incomplete outcome data.

Figure 2.

Risk of bias graph and summary.

Primary Outcomes

Knowledge Outcome

A total of 24 studies (1757 participants) [11,24,26-28,30-32,34-37,39,41-44,46-48,50-53] assessed knowledge as the primary outcome. Of them, 6 studies focused on postregistration health professionals [30,42,46,47,50,53] and all others focused on preregistration health professionals.

The effectiveness of VR interventions was compared with traditional learning (via two-dimensional [2D] images, textbooks, and lectures) in 9 studies (659 participants) [24,30,32,36,39,43,47,48,52] (Table 1). Overall, studies suggested a slight improvement in knowledge with VR compared with traditional learning (SMD=0.44; 95% CI 0.18-0.69; I2=49%; 603 participants [8 studies]; moderate certainty evidence; see Figure 3).

Table 1.

Summary of findings table: virtual reality compared with traditional learning.

| Outcomes^a | Illustrative comparative risks (95% CI) | Participants (n) | Studies (n) | Quality of evidence (GRADE^b) | Comments |
| --- | --- | --- | --- | --- | --- |
| Postintervention knowledge scores: measured via MCQs^c or quiz. Follow-up duration: immediate postintervention only | The mean knowledge score in the intervention group was 0.44 SDs higher (0.18 to 0.69 higher) than the mean score in the traditional learning group | 603 | 8 | Moderate^d | 1 study [36] reported mean change scores within the group, and hence, its data were excluded from the pooled analysis |
| Postintervention skill scores: measured via survey and OSCE^e. Follow-up duration: immediate postintervention only | The mean skill score in the intervention group was 1.12 SDs higher (0.81 to 1.43 higher) than the mean score in the traditional learning group | 235 | 4 | Moderate^d | 3 studies were excluded from the analysis: 1 study reported incomplete outcome data [29], 1 study assessed mixed outcomes [36], and 1 study reported self-reported outcome data [24] |
| Postintervention attitude scores: measured via survey. Follow-up duration: immediate postintervention only | The mean attitude score in the intervention group was 0.19 SDs higher (0.35 lower to 0.73 higher) than the mean score in the traditional learning group | 83 | 2 | Moderate^d | N/A^f |
| Postintervention satisfaction scores: measured via survey. Follow-up duration: immediate postintervention only | Not estimable | 100 | 1 | Low^(d,g) | 5 studies [24,29,33,48,52] reported incomplete outcome data or lacked comparable data and were therefore excluded from the analysis |

^a Patient or population: health professionals; settings: universities and hospitals; intervention: virtual reality; comparison: traditional learning (face-to-face lecture, textbooks, etc).

^b GRADE (Grading of Recommendations, Assessment, Development and Evaluations) Working Group grades of evidence. High quality: further research is very unlikely to change our confidence in the estimate of effect; moderate quality: further research is likely to have an important impact on our confidence in the estimate of effect and may change the estimate; low quality: further research is very likely to have an important impact on our confidence in the estimate of effect and is likely to change the estimate; and very low quality: we are very uncertain about the estimate.

^c MCQs: multiple choice questions.

^d Downgraded by 1 level for study limitations (−1): the risk of bias was unclear or high in most included studies.

^e OSCE: objective structured clinical examination.

^f N/A: not applicable.

^g Downgraded by 1 level because results were obtained from a single small study (−1).

Figure 3.

Forest plot for the knowledge outcome (postintervention). df: degrees of freedom; IV: inverse variance; random: random-effects model; VR: virtual reality.

A total of 10 studies (812 participants) compared VR with other forms of digital education (comprising 2D images on a screen, simple videos, or Web-based teaching) [11,27,28,35,37,41,44,46,50,53] (see Table 2). The overall pooled estimate of 8 studies that compared different types of VR (such as computer 3D models and virtual worlds) with different controls (ie, computer-based 2D learning, online modules, or video-based learning) showed higher postintervention knowledge scores in the intervention groups than in the control groups (SMD=0.43; 95% CI 0.07-0.79; I2=78%; 608 participants; low certainty evidence; see Figure 3). Additionally, 4 studies compared 3D models with different levels of interactivity (243 participants) [26,34,42,51]. Models with higher interactivity were associated with greater improvements in knowledge than those with less interactivity: the overall pooled estimate of the 4 studies showed higher postintervention knowledge scores in the more interactive intervention groups than in the less interactive controls (SMD=0.60; 95% CI 0.05-1.14; I2=66%; moderate effect size; low certainty evidence; see Figure 3). A total of 3 studies could not be included in the meta-analysis: 1 study lacked data [44], whereas the other 2 studies reported mean change scores, favoring the VR group [36] or the other digital education intervention [53].

Table 2.

Summary of findings table: virtual reality compared with other digital education interventions.

| Outcomes^a | Illustrative comparative risks (95% CI) | Participants (n) | Studies (n) | Quality of evidence (GRADE^b) | Comments |
| --- | --- | --- | --- | --- | --- |
| Postintervention knowledge scores: measured via MCQs^c and questionnaires. Follow-up duration: immediate postintervention to 6 months | The mean knowledge score in the intervention group was 0.43 SDs higher (0.07 to 0.79 higher) than the mean score in the other digital education intervention groups | 608 | 8 | Low^(d,e) | 1 study (32 participants) presented mean change scores and favored the VR group over the control group [53], and 1 study (172 participants) compared VR with computer-based video (2D) and presented incomplete outcome data [44] |
| Postintervention skill scores: measured via scenario-based skills assessment. Follow-up duration: immediate postintervention only | The mean skill score in the intervention group was 0.5 SDs higher (0.32 to 0.69 higher) than the mean score in the other digital education intervention groups | 467 | 2 | Moderate^d | N/A^f |
| Postintervention attitude scores: measured via survey and questionnaire. Follow-up duration: immediate postintervention only | Not estimable | 21 | 1 | Low^(d,g) | 4 studies [38,40,46,50] reported incomplete outcome data or lacked comparable data and were therefore excluded from the analysis |
| Postintervention satisfaction scores: measured via MCQs, survey, and questionnaire. Follow-up duration: immediate postintervention only | The mean satisfaction score in the intervention group was 0.2 SDs higher (0.71 lower to 1.11 higher) than the mean score in the other digital education intervention groups | 218 | 2 | Low^(d,e) | 2 studies [37,53] reported incomplete outcome data or lacked comparable data and were therefore excluded from the analysis |

^a Patient or population: health professionals; settings: universities and hospitals; intervention: virtual reality; comparison: other digital education interventions (such as online learning, computer-based video, etc).

^b GRADE (Grading of Recommendations, Assessment, Development and Evaluations) Working Group grades of evidence. High quality: further research is very unlikely to change our confidence in the estimate of effect; moderate quality: further research is likely to have an important impact on our confidence in the estimate of effect and may change the estimate; low quality: further research is very likely to have an important impact on our confidence in the estimate of effect and is likely to change the estimate; and very low quality: we are very uncertain about the estimate.

^c MCQs: multiple choice questions.

^d Downgraded by 1 level for study limitations (−1): the risk of bias was unclear or high in most included studies.

^e Downgraded by 1 level for inconsistency (−1): the heterogeneity between studies is high, with large variations in effect and lack of overlap among confidence intervals.

^f N/A: not applicable.

^g Downgraded by 1 level because results were obtained from a single small study (−1).
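The certainty ratings in Tables 1 and 2 follow from the GRADE convention that evidence from randomized trials starts at high certainty and is rated down one level for each serious concern, as the footnotes record. The sketch below is a deliberate simplification under that assumption: it ignores rating down by 2 levels for very serious concerns and ignores rating up, and the reason strings are illustrative rather than official GRADE terms.

```python
LEVELS = ("very low", "low", "moderate", "high")


def grade_certainty(downgrade_reasons: list, start: str = "high") -> str:
    """Rate down one level per serious concern, never below 'very low'."""
    index = LEVELS.index(start) - len(downgrade_reasons)
    return LEVELS[max(index, 0)]


# Footnote d only: risk of bias.
print(grade_certainty(["risk of bias"]))  # moderate
# Footnotes d and e: risk of bias plus inconsistency.
print(grade_certainty(["risk of bias", "inconsistency"]))  # low
# Footnotes d and g: risk of bias plus a single small study.
print(grade_certainty(["risk of bias", "single small study"]))  # low
```

Under this simplification, each footnote letter attached to a rating corresponds to one step down from high, which is why ratings carrying 2 footnote letters are low and those carrying only d are moderate.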

Skill Outcome

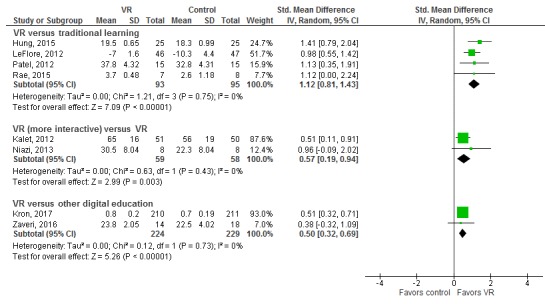

A total of 12 studies (1011 participants) assessed skills as an outcome [24,29,33,34,36,38,39,42,43,45,49,53]. Of these, 7 studies compared VR-based interventions with traditional learning (comprising paper- or textbook-based education and didactic lectures; 354 participants) [24,29,33,36,39,43,45], and the overall pooled estimate of 4 studies showed a large improvement in postintervention cognitive skill scores in the intervention groups compared with the controls (SMD=1.12; 95% CI 0.81-1.43; I2=0%; 235 participants; large effect size; moderate certainty evidence; see Figure 4 and Table 1). Additionally, 3 studies compared the effectiveness of different types of VR on cognitive skills acquisition (190 participants) [34,42,49]. We were able to pool the findings from 2 of these studies, which favored more interactive VR (SMD=0.57; 95% CI 0.19-0.94; I2=0%; moderate effect size; low certainty evidence). Two studies compared VR with other forms of digital education on skills, favoring the VR group (SMD=0.5; 95% CI 0.32-0.69; I2=0%; 467 participants; moderate effect size; low certainty evidence; see Table 2). A total of 4 studies could not be included in the meta-analysis: 2 studies reported incomplete outcome data [29,49], 1 study assessed mixed outcomes [36], and 1 study reported self-reported outcome data [24].

Figure 4.

Forest plot for the skills outcome (postintervention). df: degrees of freedom; IV: inverse variance; random: random-effects model; VR: virtual reality.

Attitude Outcome

A total of 8 studies (762 participants) [25,30,31,38,40,43,46,50] assessed attitude as an outcome. Of these, 2 studies comparing VR-based interventions with traditional learning (small group teaching and didactic lectures; 83 participants) [30,43] reported no difference between the groups in postintervention attitude scores (SMD=0.19; 95% CI −0.35 to 0.73; I2=0%; moderate certainty evidence; see Table 1). One study compared blended learning with traditional learning (43 participants) [30] and reported a higher postintervention attitude score toward the intervention (SMD=1.11; 95% CI 0.46-1.75; large effect size). Additionally, 5 studies (636 participants) [25,38,40,46,50] compared VR with other digital education interventions; most of these studies had incomplete outcome data.

Satisfaction Outcome

A total of 12 studies (991 participants) [24,26,29,32,33,35,37,44,48,49,52,53] assessed satisfaction, mostly in the intervention group only. Only 4 studies compared satisfaction between the intervention and control groups, and these largely reported no difference between the groups.

Secondary Outcomes

Halfer et al (30 participants) [29] assessed the use of VR versus traditional paper floor plans of the hospital to prepare nurses for wayfinding in a new hospital building. A cost analysis showed that the virtual hospital-based approach increased development costs but provided greater value during implementation by reducing the staff time needed to practice wayfinding skills. The paper reports that the paper floor plan approach had a development cost of US $40,000 and an implementation cost of US $530,000, for a total of US $570,000. In comparison, the virtual world would cost US $220,000 for development and US $201,000 for implementation, for a total of US $421,000.
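The totals reported by Halfer et al can be checked directly from the component costs. This sketch only reproduces that arithmetic from the figures quoted above; it applies no discounting or other adjustments, since none are described.

```python
# Development and implementation costs (US $) as reported for Halfer et al [29].
costs = {
    "paper floor plans": {"development": 40_000, "implementation": 530_000},
    "virtual hospital": {"development": 220_000, "implementation": 201_000},
}

# Total cost per approach is simply development plus implementation.
totals = {approach: sum(parts.values()) for approach, parts in costs.items()}

extra_development = (
    costs["virtual hospital"]["development"] - costs["paper floor plans"]["development"]
)
implementation_saving = (
    costs["paper floor plans"]["implementation"] - costs["virtual hospital"]["implementation"]
)
net_saving = totals["paper floor plans"] - totals["virtual hospital"]

print(totals)  # {'paper floor plans': 570000, 'virtual hospital': 421000}
print(extra_development, implementation_saving, net_saving)  # 180000 329000 149000
```

The virtual approach costs US $180,000 more to develop but saves US $329,000 in implementation, a net difference of US $149,000, which is consistent with the reported totals.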

Zaveri et al (32 participants) [53] assessed the effectiveness of a VR module (Second Life, Linden Lab) in teaching preparation and management of sedation procedures, compared with online learning. Development of the module occurred over 2 years of interactions with a software consultant and utilized a US $40,000 grant to create VR scenarios. Cost of the control group (online training) was not presented, and hence, no formal comparison was made.

No information on patient-related outcomes, behavior change, or unintended or adverse effects of VR on the patient or the learner was reported in any of the studies.

Discussion

Principal Findings

This systematic review assessed the effectiveness of VR interventions for health professions education. We found evidence showing a small improvement in knowledge and a moderate-to-large improvement in skills in learners taking part in VR interventions compared with traditional or other forms of digital learning. Compared with less interactive interventions, more interactive VR interventions seem to moderately improve participants’ knowledge and skills. The findings for attitude and satisfaction outcomes are inconclusive because of incomplete outcome data. None of the included studies reported any patient-related outcomes, behavior change, or unintended or adverse effects of VR on the patients or the learners. Only 2 studies assessed the cost of setup and maintenance of the VR as a secondary outcome, without making any formal comparisons.

Overall, the risk of bias for most studies was judged to be unclear (because of a lack of information), with some instances of potentially high risk of attrition, reporting, and other bias identified. The quality of the evidence ranges from low to moderate for knowledge, skills, attitude, and satisfaction outcomes because of the unclear and high risks of bias and inconsistency, that is, heterogeneity in study results as well as in types of participants, interventions, and outcome measurement instruments [54].

The fact that no included studies were published before 2005 suggests that VR is an emerging educational strategy attracting increasing interest. The included studies were mainly conducted among doctors, nurses, and medical students. The small number of studies on pharmacists, dentists, and other allied health professionals indicates that more research is needed on the use of VR among these groups of health professionals. Additionally, most of the interventions studied were not part of a regular curriculum, and none of the studies mentioned the use of learning theories to design the VR-based intervention or to develop clinical competencies. Because these are important aspects of curriculum design, the findings of the included studies may apply only to their own settings and may not be generalizable to other geographic or socioeconomic backgrounds. Furthermore, most studies evaluated participants’ knowledge, and the knowledge and skills assessed may not translate directly into clinical competencies.

Although the included studies encompassed a range of participants and interventions, the lack of a consistent methodological approach and the small number of studies conducted in any one health care discipline make it difficult to draw meaningful conclusions. There is also a distinct lack of data from low- and middle-income countries, which reduces applicability to the contexts most in need of innovative educational strategies. In addition, only 2 studies assessed the cost of setup and maintenance of the VR-based intervention, and none of the included studies assessed cost-effectiveness, so no conclusions regarding cost or cost-effectiveness can be drawn at this point. There was also a lack of information on patient-related outcomes, behavior change, and unintended or adverse effects of VR on patients and learners, which needs to be addressed.

The majority of the included studies assessed the effectiveness of nonimmersive VR, and further research is needed on the effects of VR with different levels of immersion and interactivity on the outcomes of interest. In our review, most of the studies assessing attitude and satisfaction outcomes reported incomplete or noncomparable outcome data, so primary studies focusing on these outcomes are needed. Finally, the methods for reporting outcome data need to be standardized: most of the included studies reported postintervention mean scores rather than change scores, which limits the accuracy of the findings for the reported outcomes.

Strengths and Limitations

Our review provides the most up-to-date evidence on the effectiveness of different types of VR in health professions education. We conducted a comprehensive search across multiple databases, including gray literature sources, and followed gold standard Cochrane methodology throughout. Our review also has several limitations. The included studies largely reported only postintervention data, so we could not calculate pre- to postintervention change or ascertain whether the intervention groups were matched at baseline on key characteristics and outcome measure scores. We were also unable to perform the prespecified subgroup analysis because the primary studies provided limited data.
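To illustrate why postintervention-only data are a limitation when baseline comparability cannot be checked, the following minimal Python sketch computes a standardized mean difference using Hedges' g, one common SMD estimator, once from postintervention means and once from change scores. All numbers (group means, SDs, sample size) are hypothetical and invented for illustration; they are not taken from any included study, and the change-score SD is simply assumed.

```python
import math


def hedges_g(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Standardized mean difference (Hedges' g) of group A versus group B."""
    # Pooled within-group standard deviation
    pooled_sd = math.sqrt(
        ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / (n_a + n_b - 2)
    )
    cohens_d = (mean_a - mean_b) / pooled_sd
    # Small-sample correction J = 1 - 3 / (4 * df - 1), with df = n_a + n_b - 2
    correction = 1 - 3 / (4 * (n_a + n_b - 2) - 1)
    return correction * cohens_d


# Hypothetical trial (invented numbers, not from any included study):
# the VR group starts 5 points ahead at baseline, and both groups gain 15 points.
N = 30
vr_pre, vr_post = 60.0, 75.0
control_pre, control_post = 55.0, 70.0
POST_SD = 10.0    # assumed SD of postintervention scores in both groups
CHANGE_SD = 8.0   # assumed SD of pre-to-post change scores in both groups

# SMD from postintervention means only (the form most included studies reported)
post_g = hedges_g(vr_post, POST_SD, N, control_post, POST_SD, N)

# SMD from change scores (requires baseline data)
change_g = hedges_g(vr_post - vr_pre, CHANGE_SD, N,
                    control_post - control_pre, CHANGE_SD, N)

print(f"Postintervention SMD: {post_g:.2f}")  # prints 0.49
print(f"Change-score SMD:     {change_g:.2f}")  # prints 0.00
```

In this constructed example, the entire 5-point postintervention gap is inherited from baseline, so the postintervention-only SMD suggests a moderate effect that the change scores do not support. Without baseline data, a reader cannot distinguish these two situations.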

Conclusions

As an emerging and versatile technology, VR has the potential to transform health professions education. Our findings show that, compared with traditional education or other types of digital education such as online or offline digital education, VR may improve postintervention knowledge and skills. VR with higher interactivity was more effective than less interactive VR for postintervention knowledge and skill outcomes. Further research should evaluate the effectiveness of more immersive and interactive forms of VR in a variety of settings and assess outcomes such as attitudes, satisfaction, adverse effects of VR, cost-effectiveness, and changes in clinical practice or behavior.

Acknowledgments

This review was conducted in collaboration with the Health Workforce Department at WHO. The authors would like to thank Mr Carl Gornitzki, Ms GunBrit Knutssön, and Mr Klas Moberg from the University Library, Karolinska Institutet, Sweden, for developing the search strategy, and the peer reviewers for their comments. The authors also thank Associate Professor Josip Car for his valuable input throughout the drafting and revision of the review, Dr Parvati Dev for her input as a content expert, Dr Gloria Law for her input on the classification of the measurement instruments used to assess outcomes in the included studies, and Dr Ram Bajpai for his statistical advice. The authors gratefully acknowledge funding from the Lee Kong Chian School of Medicine, Nanyang Technological University Singapore, Singapore (eLearning for health professionals education grant).

Abbreviations

- 2D: two-dimensional
- 3D: three-dimensional
- GRADE: Grading of Recommendations, Assessment, Development and Evaluations
- MCQ: multiple choice question
- OSCE: objective structured clinical examination
- SMD: standardized mean difference
- VHP: virtual health professional
- VP: virtual patient
- VR: virtual reality
- WHO: World Health Organization

Footnotes

Authors' Contributions: LTC and NZ conceived the idea for the review. BMK, NS, and LTC wrote the review. LTC and PP provided methodological guidance, drafted some of the methodology-related sections, and critically revised the review. JV, PPG, IM, UD, AAK, CKN, and NZ provided comments on and edited the review.

Conflicts of Interest: None declared.

References

- 1. World Health Organization. 2016. Mar 02, [2019-01-14]. High-Level Commission on Health Employment and Economic Growth. https://www.who.int/hrh/com-heeg/en/

- 2. Frenk J, Chen L, Bhutta ZA, Cohen J, Crisp N, Evans T, Fineberg H, Garcia P, Ke Y, Kelley P, Kistnasamy B, Meleis A, Naylor D, Pablos-Mendez A, Reddy S, Scrimshaw S, Sepulveda J, Serwadda D, Zurayk H. Health professionals for a new century: transforming education to strengthen health systems in an interdependent world. Lancet. 2010 Dec 04;376(9756):1923–58. doi: 10.1016/S0140-6736(10)61854-5.

- 3. Transformative scale up of health professional education: an effort to increase the numbers of health professionals and to strengthen their impact on population health. Geneva: World Health Organization; 2011. [2019-01-14]. http://apps.who.int/iris/handle/10665/70573

- 4. Crisp N, Gawanas B, Sharp I; Task Force for Scaling Up Education and Training for Health Workers. Training the health workforce: scaling up, saving lives. Lancet. 2008 Feb 23;371(9613):689–91. doi: 10.1016/S0140-6736(08)60309-8.

- 5. Car J, Carlstedt-Duke J, Tudor Car L, Posadzki P, Whiting P, Zary N, Atun R, Majeed A, Campbell J. Digital education for health professions: methods for overarching evidence syntheses. J Med Internet Res. 2019:2018. doi: 10.2196/preprints.12913. (forthcoming)

- 6. Saxena N, Kyaw B, Vseteckova J, Dev P, Paul P, Lim KT, Kononowicz AA, Masiello I, Tudor Car L, Nikolaou CK, Zary N, Car J. Virtual reality environments for health professional education. Cochrane Database Syst Rev. 2016 Feb 09;(2):CD012090. doi: 10.1002/14651858.CD012090. https://www.cochranelibrary.com/cdsr/doi/10.1002/14651858.CD012090/full

- 7. Mantovani F, Castelnuovo G, Gaggioli A, Riva G. Virtual reality training for health-care professionals. Cyberpsychol Behav. 2003 Aug;6(4):389–95. doi: 10.1089/109493103322278772.

- 8. McGaghie WC, Issenberg SB, Petrusa ER, Scalese RJ. A critical review of simulation-based medical education research: 2003-2009. Med Educ. 2010 Jan;44(1):50–63. doi: 10.1111/j.1365-2923.2009.03547.x.

- 9. Rasmussen K, Belisario JM, Wark PA, Molina JA, Loong SL, Cotic Z, Papachristou N, Riboli-Sasco E, Tudor Car L, Musulanov EM, Kunz H, Zhang Y, George PP, Heng BH, Wheeler EL, Al Shorbaji N, Svab I, Atun R, Majeed A, Car J. Offline eLearning for undergraduates in health professions: a systematic review of the impact on knowledge, skills, attitudes and satisfaction. J Glob Health. 2014 Jun;4(1):010405. doi: 10.7189/jogh.04.010405. http://europepmc.org/abstract/MED/24976964

- 10. Andreatta PB, Maslowski E, Petty S, Shim W, Marsh M, Hall T, Stern S, Frankel J. Virtual reality triage training provides a viable solution for disaster-preparedness. Acad Emerg Med. 2010 Aug;17(8):870–6. doi: 10.1111/j.1553-2712.2010.00728.x.

- 11. Nicholson DT, Chalk C, Funnell WR, Daniel SJ. Can virtual reality improve anatomy education? A randomised controlled study of a computer-generated three-dimensional anatomical ear model. Med Educ. 2006 Nov;40(11):1081–7. doi: 10.1111/j.1365-2929.2006.02611.x.

- 12. Gurusamy KS, Aggarwal R, Palanivelu L, Davidson BR. Virtual reality training for surgical trainees in laparoscopic surgery. Cochrane Database Syst Rev. 2009 Jan 21;(1):CD006575. doi: 10.1002/14651858.CD006575.pub2.

- 13. Nagendran M, Gurusamy KS, Aggarwal R, Loizidou M, Davidson BR. Virtual reality training for surgical trainees in laparoscopic surgery. Cochrane Database Syst Rev. 2013 Aug 27;(8):CD006575. doi: 10.1002/14651858.CD006575.pub3.

- 14. Piromchai P, Avery A, Laopaiboon M, Kennedy G, O'Leary S. Virtual reality training for improving the skills needed for performing surgery of the ear, nose or throat. Cochrane Database Syst Rev. 2015 Sep 09;(9):CD010198. doi: 10.1002/14651858.CD010198.pub2.

- 15. Walsh CM, Sherlock ME, Ling SC, Carnahan H. Virtual reality simulation training for health professions trainees in gastrointestinal endoscopy. Cochrane Database Syst Rev. 2012 Jun 13;(6):CD008237. doi: 10.1002/14651858.CD008237.pub2.

- 16. Fritz PZ, Gray T, Flanagan B. Review of mannequin-based high-fidelity simulation in emergency medicine. Emerg Med Australas. 2008 Feb;20(1):1–9. doi: 10.1111/j.1742-6723.2007.01022.x.

- 17. Issenberg SB, McGaghie WC, Petrusa ER, Lee Gordon D, Scalese RJ. Features and uses of high-fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teach. 2005 Jan;27(1):10–28. doi: 10.1080/01421590500046924.

- 18. Ziv A, Wolpe PR, Small SD, Glick S. Simulation-based medical education: an ethical imperative. Acad Med. 2003 Aug;78(8):783–8. doi: 10.1097/00001888-200308000-00006.

- 19. Cochrane Library, The Cochrane Collaboration. 2011. [2019-01-15]. Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]. http://crtha.iums.ac.ir/files/crtha/files/cochrane.pdf

- 20. Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gøtzsche PC, Ioannidis JP, Clarke M, Devereaux PJ, Kleijnen J, Moher D. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. PLoS Med. 2009 Jul 21;6(7):e1000100. doi: 10.1371/journal.pmed.1000100. http://dx.plos.org/10.1371/journal.pmed.1000100

- 21. Higgins JPT, Altman DG, Gotzsche PC, Juni P, Moher D, Oxman AD, Savovic J, Schulz KF, Weeks L, Sterne JAC. The Cochrane Collaboration's tool for assessing risk of bias in randomised trials. BMJ. 2011 Oct 18;343:d5928. doi: 10.1136/bmj.d5928.

- 22. Cook DA, Hatala R, Brydges R, Zendejas B, Szostek JH, Wang AT, Erwin PJ, Hamstra SJ. Technology-enhanced simulation for health professions education: a systematic review and meta-analysis. JAMA. 2011 Sep 07;306(9):978–88. doi: 10.1001/jama.2011.1234.

- 23. RevMan 5.3. Copenhagen: The Nordic Cochrane Centre: The Cochrane Collaboration; 2014. Jun 13, [2019-01-15]. https://community.cochrane.org/sites/default/files/uploads/inline-files/RevMan_5.3_User_Guide.pdf

- 24. Bindoff I, Ling T, Bereznicki L, Westbury J, Chalmers L, Peterson G, Ollington R. A computer simulation of community pharmacy practice for educational use. Am J Pharm Educ. 2014 Nov 15;78(9):168. doi: 10.5688/ajpe789168. http://europepmc.org/abstract/MED/26056406

- 25. Claman FL. The impact of multiuser virtual environments on student engagement. Nurse Educ Pract. 2015 Jan;15(1):13–6. doi: 10.1016/j.nepr.2014.11.006.

- 26. Drapkin ZA, Lindgren KA, Lopez MJ, Stabio ME. Development and assessment of a new 3D neuroanatomy teaching tool for MRI training. Anat Sci Educ. 2015;8(6):502–9. doi: 10.1002/ase.1509.

- 27. Farra S, Miller E, Timm N, Schafer J. Improved training for disasters using 3-D virtual reality simulation. West J Nurs Res. 2013 May;35(5):655–71. doi: 10.1177/0193945912471735.

- 28. Fritz D, Hu A, Wilson T, Ladak H, Haase P, Fung K. Long-term retention of a 3-dimensional educational computer model of the larynx: a follow-up study. Arch Otolaryngol Head Neck Surg. 2011 Jun;137(6):598–603. doi: 10.1001/archoto.2011.76.

- 29. Halfer D, Rosenheck M. Virtual education: is it effective for preparing nurses for a hospital move? J Nurs Adm. 2014 Oct;44(10):535–40. doi: 10.1097/NNA.0000000000000112.

- 30. Hampton BS, Sung VW. Improving medical student knowledge of female pelvic floor dysfunction and anatomy: a randomized trial. Am J Obstet Gynecol. 2010 Jun;202(6):601.e1–8. doi: 10.1016/j.ajog.2009.08.038.

- 31. Hampton BS, Sung VW. A randomized trial to estimate the effect of an interactive computer trainer on resident knowledge of female pelvic floor dysfunction and anatomy. Female Pelvic Med Reconstr Surg. 2010 Jul;16(4):224–8. doi: 10.1097/SPV.0b013e3181ed3fb1.

- 32. Hu A, Shewokis PA, Ting K, Fung K. Motivation in computer-assisted instruction. Laryngoscope. 2016 Aug;126 Suppl 6:S5–S13. doi: 10.1002/lary.26040.

- 33. Hung P, Choi KS, Chiang VC. Using interactive computer simulation for teaching the proper use of personal protective equipment. Comput Inform Nurs. 2015 Feb;33(2):49–57. doi: 10.1097/CIN.0000000000000125.

- 34. Kalet AL, Song HS, Sarpel U, Schwartz R, Brenner J, Ark TK, Plass J. Just enough, but not too much interactivity leads to better clinical skills performance after a computer assisted learning module. Med Teach. 2012 Aug;34(10):833–9. doi: 10.3109/0142159X.2012.706727. http://europepmc.org/abstract/MED/22917265

- 35. Keedy AW, Durack JC, Sandhu P, Chen EM, O'Sullivan PS, Breiman RS. Comparison of traditional methods with 3D computer models in the instruction of hepatobiliary anatomy. Anat Sci Educ. 2011 Mar;4(2):84–91. doi: 10.1002/ase.212.

- 36. Khatib M, Hald N, Brenton H, Barakat MF, Sarker SK, Standfield N, Ziprin P, Kneebone R, Bello F. Validation of open inguinal hernia repair simulation model: a randomized controlled educational trial. Am J Surg. 2014 Aug;208(2):295–301. doi: 10.1016/j.amjsurg.2013.12.007.

- 37. Kockro RA, Amaxopoulou C, Killeen T, Wagner W, Reisch R, Schwandt E, Gutenberg A, Giese A, Stofft E, Stadie AT. Stereoscopic neuroanatomy lectures using a three-dimensional virtual reality environment. Ann Anat. 2015 Sep;201:91–8. doi: 10.1016/j.aanat.2015.05.006.

- 38. Kron FW, Fetters MD, Scerbo MW, White CB, Lypson ML, Padilla MA, Gliva-McConvey GA, Belfore LA, West T, Wallace AM, Guetterman TC, Schleicher LS, Kennedy RA, Mangrulkar RS, Cleary JF, Marsella SC, Becker DM. Using a computer simulation for teaching communication skills: a blinded multisite mixed methods randomized controlled trial. Patient Educ Couns. 2017 Apr;100(4):748–59. doi: 10.1016/j.pec.2016.10.024.

- 39. LeFlore JL, Anderson M, Zielke MA, Nelson KA, Thomas PE, Hardee G, John LD. Can a virtual patient trainer teach student nurses how to save lives--teaching nursing students about pediatric respiratory diseases. Simul Healthc. 2012 Feb;7(1):10–7. doi: 10.1097/SIH.0b013e31823652de.

- 40. Menzel N, Willson LH, Doolen J. Effectiveness of a poverty simulation in Second Life®: changing nursing student attitudes toward poor people. Int J Nurs Educ Scholarsh. 2014 Mar 11;11(1):39–45. doi: 10.1515/ijnes-2013-0076.

- 41. Metzler R, Stein D, Tetzlaff R, Bruckner T, Meinzer HP, Büchler MW, Kadmon M, Müller-Stich BP, Fischer L. Teaching on three-dimensional presentation does not improve the understanding of according CT images: a randomized controlled study. Teach Learn Med. 2012 Apr;24(2):140–8. doi: 10.1080/10401334.2012.664963.

- 42. Niazi AU, Tait G, Carvalho JC, Chan VW. The use of an online three-dimensional model improves performance in ultrasound scanning of the spine: a randomized trial. Can J Anaesth. 2013 May;60(5):458–64. doi: 10.1007/s12630-013-9903-0.

- 43. Patel V, Aggarwal R, Osinibi E, Taylor D, Arora S, Darzi A. Operating room introduction for the novice. Am J Surg. 2012 Feb;203(2):266–75. doi: 10.1016/j.amjsurg.2011.03.003.

- 44. Prinz A, Bolz M, Findl O. Advantage of three dimensional animated teaching over traditional surgical videos for teaching ophthalmic surgery: a randomised study. Br J Ophthalmol. 2005 Nov;89(11):1495–9. doi: 10.1136/bjo.2005.075077. http://bjo.bmj.com/cgi/pmidlookup?view=long&pmid=16234460

- 45. Rae AO, Khatib M, Sarker SK, Bello F. The effect of a computer based open surgery simulation of an inguinal hernia repair on the results of cognitive task analysis performance of surgical trainees: an educational trial. Br J Surg. 2015;102(S1):40.

- 46. Richardson A, Bracegirdle L, McLachlan SI, Chapman SR. Use of a three-dimensional virtual environment to teach drug-receptor interactions. Am J Pharm Educ. 2013 Feb 12;77(1):11. doi: 10.5688/ajpe77111. http://europepmc.org/abstract/MED/23459131

- 47. Sharma V, Chamos C, Valencia O, Meineri M, Fletcher SN. The impact of internet and simulation-based training on transoesophageal echocardiography learning in anaesthetic trainees: a prospective randomised study. Anaesthesia. 2013 Jun;68(6):621–7. doi: 10.1111/anae.12261.

- 48. Succar T, Zebington G, Billson F, Byth K, Barrie S, McCluskey P, Grigg J. The impact of the Virtual Ophthalmology Clinic on medical students' learning: a randomised controlled trial. Eye (Lond). 2013 Oct;27(10):1151–7. doi: 10.1038/eye.2013.143. http://europepmc.org/abstract/MED/23867718

- 49. Szumacher E, Harnett N, Warner S, Kelly V, Danjoux C, Barker R, Woo M, Mah K, Ackerman I, Dubrowski A, Rose S, Crook J. Effectiveness of educational intervention on the congruence of prostate and rectal contouring as compared with a gold standard in three-dimensional radiotherapy for prostate. Int J Radiat Oncol Biol Phys. 2010 Feb 01;76(2):379–85. doi: 10.1016/j.ijrobp.2009.02.008.

- 50. Tan S, Hu A, Wilson T, Ladak H, Haase P, Fung K. Role of a computer-generated three-dimensional laryngeal model in anatomy teaching for advanced learners. J Laryngol Otol. 2012 Apr;126(4):395–401. doi: 10.1017/S0022215111002830.

- 51. West N, Konge L, Cayé-Thomasen P, Sørensen MS, Andersen SA. Peak and ceiling effects in final-product analysis of mastoidectomy performance. J Laryngol Otol. 2015 Nov;129(11):1091–6. doi: 10.1017/S0022215115002364.

- 52. Yeung JC, Fung K, Wilson TD. Prospective evaluation of a web-based three-dimensional cranial nerve simulation. J Otolaryngol Head Neck Surg. 2012 Dec;41(6):426–36. doi: 10.2310/7070.2012.00049.

- 53. Zaveri PP, Davis AB, O'Connell KJ, Willner E, Aronson Schinasi DA, Ottolini M. Virtual reality for pediatric sedation: a randomized controlled trial using simulation. Cureus. 2016 Feb 9;8(2):e486. doi: 10.7759/cureus.486. http://europepmc.org/abstract/MED/27014520

- 54. Schünemann HJ, Oxman AD, Higgins JPT, Vist GE, Glasziou P, Guyatt GH. Cochrane Handbook for Systematic Reviews of Interventions. London, United Kingdom: Wiley; 2011. [2019-01-15]. Presenting results and 'summary of findings' tables. https://handbook-5-1.cochrane.org/chapter_11/11_presenting_results_and_summary_of_findings_tables.htm

Supplementary Materials

MEDLINE (Ovid) search strategy.

Characteristics of included studies.