Abstract

Introduction

Simulation is increasingly used throughout medicine. Within ultrasound, simulators are more established for learning transvaginal and interventional procedures. The use of modern high-fidelity transabdominal simulators is increasing, particularly in centres with large trainee numbers. There is currently no published literature on the value of these simulators for gaining competence in abdominal ultrasound. The aim of this study was to investigate the impact of a new ultrasound curriculum that incorporates transabdominal simulators into the first year of training at a UK radiology academy.

Methods

The simulator group included 13 trainees. The preceding cohort of 15 trainees was the control group. After 10 months, a clinical assessment was performed to assess whether the new curriculum resulted in improved ultrasound skills. Questionnaires were designed to explore the acceptability of simulation training and whether it had any impact on confidence levels.

Results

Trainees who had received simulator-enriched training scored higher in an objective clinical ultrasound assessment, and the difference was statistically significant (p = 0.0463). End-of-training confidence scores for obtaining diagnostic images and demonstrating pathology were also higher in the simulation group. All trainees in the simulation group stated that transabdominal simulator training was useful in early training.

Conclusions

This initial study shows that, when embedded in a curriculum, transabdominal ultrasound simulators are an acceptable training method that can result in improved ultrasound skills and higher confidence levels. Using simulators early in training could allow trainees to master the basics and improve their confidence, enabling them to get more educational value from clinical ultrasound experience while reducing the impact of training on service provision.

Keywords: Ultrasound, transabdominal, simulation, education

Introduction

Ultrasound has traditionally been taught using apprenticeship-style methods.1 This approach has successfully trained generations of ultrasound practitioners but has several potential disadvantages, which are particularly relevant in the current clinical climate. The increasing demand for imaging, and the resulting workload within radiology, leaves less time available for real-time practical ultrasound experience.1–3 Growing interest in point-of-care ultrasound has also increased the demand for training across specialties, and gaining the experience required to achieve the relevant competencies can be difficult.1–4

Apprenticeship-style teaching is also unpredictable: pressures on service provision make it difficult to control the case mix, the variety of pathology and the complexity of cases. Delivering a comprehensive ultrasound curriculum using this method alone is therefore potentially challenging and inefficient.1

Simulation is well established in aviation, the nuclear power industry and the military for learning procedural skills in a safe, protected environment. In medicine, concern for patient safety has driven the increasing use of simulation, which is now well established for learning surgical skills, endoscopy and the management of acute medical emergencies. Within ultrasound, simulation is most widely used for transvaginal and interventional scanning, and there is some literature exploring its use in these areas. This literature has shown that simulation training is acceptable and can lead to increased trainee confidence.4–6 There is some evidence that simulation also increases trainee competence7–10 and, while most of these studies assess trainees using simulators, some have also demonstrated that these skills are transferable to clinical practice.10,11 One study evaluating transvaginal ultrasound simulation found that patients reported less discomfort and higher confidence in the operator's ability when scanned by trainees who had undergone simulation training compared with controls.12

Abdominal ultrasound simulators have been used for some time in various forms, including phantom models, live volunteers and virtual reality simulators. Over the last decade, higher-fidelity transabdominal ultrasound simulators have been developed and are increasingly used for ultrasound training. Several papers assess a variety of transabdominal ultrasound simulators, but none of these assess the more modern high-fidelity simulators beyond the setting of Focussed Assessment with Sonography for Trauma (FAST).13–15 The benefits of simulation for transvaginal and interventional ultrasound are evident, given the intimate and invasive nature of these procedures.
The drivers for simulation in transabdominal ultrasound are less obvious but these include protected time and space for initial training in a non-pressured environment, which may increase confidence and allow more efficient use of busy clinical ultrasound lists. If this training is used early, there may be the potential to reduce the time taken for trainees to achieve basic competence.

The national shortage of radiologists in the UK has driven the creation of several radiology academies, with the aim of increasing the number of consultant radiologists in the future.3,16 Academies provide a dedicated space for learning and reporting that runs in parallel with the hospital environment. This means that more trainees can be accommodated at any given time without crowding the clinical environment, allowing training to be optimised for all. Non-academy training schemes typically have up to 8 trainees in each cohort, whereas academies can accommodate up to 15. There are currently three radiology academies in the UK, with plans to create two more in the near future. This study took place at the Peninsula Radiology Academy, Plymouth, England, which opened in 2005. While academy-based training has many benefits, the large number of trainees can increase pressure on limited clinical ultrasound training opportunities. Incorporating simulator training into these environments might optimise the use of clinical training lists.

In 2015, the Peninsula Radiology Academy acquired a Medaphor transabdominal ultrasound simulator (ScanTrainer, Medaphor, Cardiff, Wales). This prompted a re-evaluation of ultrasound training for junior trainees within the academy, and the transabdominal simulator was integrated into a redesigned curriculum. This combined the previous ultrasound training methods (supervised ultrasound lists, a weekly ultrasound meeting and consultant-led case-based teaching sessions) with new timetabled simulator sessions (6 h unsupervised per month until completion of all core general abdominal modules) and associated small group tutorials. The current study was designed to assess the outcome of this change in practice and was undertaken within the constraints of a busy clinical and educational environment, with no additional funding.

Materials and methods

As this was an educational study on National Health Service (NHS) staff members, formal NHS Research Ethics Committee approval was not required. Institutional research approval was granted (Reference ID – 16/P/025).

The new ultrasound curriculum was introduced at the beginning of the 2015–2016 academic year, and this cohort of first-year radiology specialty trainees (ST1s) was enrolled as the simulation intervention group. The preceding cohort of trainees (2014–2015) was used as the control group, accepting that this study design would not allow randomisation. All ST1 radiology trainees commencing training in 2014 or 2015 were included. Exclusion criteria were significant transabdominal ultrasound experience prior to radiology training (as declared in a pre-training questionnaire) or termination of training for any reason during the study period. Based on these criteria, all trainees in both cohorts were eligible for the study. A description of the study design was given to all participants, after which informed written consent was obtained. All participants agreed to take part, giving 15 trainees in the control group and 13 in the intervention group.

Questionnaires and an assessment were designed to enable comparisons between the control and simulator groups. For the simulator group, two questionnaires were designed: one completed at the start of training and the other after ten months of training. Because of the timing of the study, the control group completed all questions in a single questionnaire after ten months of training; it was accepted that this would require retrospective recollection of initial confidence scores. Most questions were scored on a Likert-type scale from 1 to 10, and some required free-text answers. The main focus of the questionnaires was to establish pre- and post-training confidence levels at obtaining diagnostic images and identifying pathology in each organ. The questionnaires also assessed trainee satisfaction with simulation training and preparedness for on-call ultrasound.

A clinical assessment tool was designed to assess competence at performing a systematic transabdominal ultrasound scan (Appendix). The Objective Structured Assessment of Ultrasound Skills (OSAUS) is a pre-existing generic ultrasound rating scale, which has been validated for use in assessing ultrasound skills. However, this grades trainees on their use of a ‘systematic approach to the examination and presentation of relevant structures according to guidelines.’17 This is a general assessment score and does not look at individual organs or structures separately. This study aimed to explore the impact of simulation training in more detail and there was no pre-existing ultrasound assessment tool to fit this purpose. The study assessment tool was therefore designed to reflect standard practice and refined in focus groups with experienced ultrasound practitioners. To obtain a detailed assessment, each individual task was assigned a score ranging from 1 to 4 (Table 1). Overall competence was also assessed using the same grading scale.

Table 1.

Grading scale for the clinical assessment.

| Grade | Description of each grade |
|---|---|
| 1 | Trainee fails to attempt assessed skill |
| 2 | Trainee attempts the skill but with limitations |
| 3 | Trainee demonstrates basic competence at the assessed skill |
| 4 | Trainee demonstrates familiarity with and competence at skill with awareness of diagnostic limitations |
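The Results report total assessment scores out of 136, but the text does not state exactly which items make up that total. One reading consistent with the Appendix is that the 34 graded items (30 organ-specific tasks plus 4 overall competence items) are each scored 1–4 on the scale above, giving a maximum of 34 × 4 = 136 and a minimum of 34, with the achieved/not-achieved general skills excluded. The Python sketch below encodes that assumption; the item counts are taken from the Appendix, while the function names and data layout are hypothetical.

```python
# Sketch (assumption): total clinical assessment score = sum of all items
# graded 1-4 in the Appendix tool. Item counts per domain are counted from
# the Appendix; the binary "General skills" checklist is assumed unscored.
GRADED_ITEMS = {
    "Pancreas": 2,
    "Liver": 7,
    "Gall bladder": 4,
    "Right kidney": 4,
    "Left kidney": 4,
    "Spleen": 3,
    "Bladder": 3,
    "Aorta and retroperitoneum": 3,
    "Overall competence": 4,
}
MIN_GRADE, MAX_GRADE = 1, 4  # Table 1 grading scale


def max_total() -> int:
    """Highest possible total: every graded item at Level 4."""
    return sum(GRADED_ITEMS.values()) * MAX_GRADE


def total_score(grades: dict[str, list[int]]) -> int:
    """Sum one trainee's grades, checking item counts and the 1-4 range."""
    total = 0
    for domain, n_items in GRADED_ITEMS.items():
        item_grades = grades[domain]
        if len(item_grades) != n_items:
            raise ValueError(f"{domain}: expected {n_items} grades, got {len(item_grades)}")
        if any(not MIN_GRADE <= g <= MAX_GRADE for g in item_grades):
            raise ValueError(f"{domain}: grades must be between 1 and 4")
        total += sum(item_grades)
    return total


# Usage with a hypothetical trainee graded Level 3 on every item.
example = {domain: [3] * n for domain, n in GRADED_ITEMS.items()}
print(max_total(), total_score(example))  # 136 102
```

Validating item counts per domain guards against a missing or duplicated row when grades are transcribed from paper forms.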

After ten months of training and completing the curriculum, trainees were assessed with the assessment tool while scanning a consented healthy volunteer on a Toshiba Aplio XG ultrasound machine. The assessors (SJF and CMG) were two consultant radiologists, both with a major subspecialty interest in ultrasound, with 19 and 11 years' experience, respectively, of abdominal ultrasound at consultant level.

The results were analysed descriptively, and statistical analysis of the overall results was provided by the Plymouth University Statistics Department using Minitab software. The threshold for statistical significance was set at p = 0.05 throughout.

Assessment

Medians, ranges and interquartile ranges (IQRs) were calculated to compare assessment scores for each task and aggregated scores for each organ. Because simulation training was given in addition to, rather than instead of, clinical ultrasound training, the hypothesis was directional (that the simulator group would score higher), and a one-sided Mann–Whitney test was therefore selected to compare total assessment scores of the simulator group against the control group.
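The analysis described above (medians, ranges and IQRs, then a one-sided Mann–Whitney test of whether simulator-group totals tend to be higher than control totals) can be sketched in standard-library Python. This is an illustration, not the Minitab procedure the study used: it assumes the (n+1)p quartile convention (the default of Python's `statistics.quantiles`) and the large-sample normal approximation with tie and continuity corrections, so IQRs and p-values may differ slightly from Minitab output. The score lists in the usage example are invented placeholders, not study data.

```python
import math
import statistics


def describe(scores):
    """Median, (min, max) range and IQR; quartiles use the (n+1)p convention."""
    q1, _, q3 = statistics.quantiles(scores, n=4)  # method="exclusive" by default
    return {"median": statistics.median(scores),
            "range": (min(scores), max(scores)),
            "iqr": q3 - q1}


def midranks(values):
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mann_whitney_greater(x, y):
    """One-sided Mann-Whitney test, H1: values in x tend to exceed those in y.

    Returns (U for x, approximate one-sided p-value) using the normal
    approximation with tie-corrected variance and a continuity correction.
    """
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    n = n1 + n2
    u1 = sum(midranks(pooled)[:n1]) - n1 * (n1 + 1) / 2
    tie_sizes = [pooled.count(v) for v in set(pooled)]
    tie_term = sum(t ** 3 - t for t in tie_sizes) / (n * (n - 1))
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - tie_term))
    if sigma == 0:
        raise ValueError("all values are identical; the test is undefined")
    z = (u1 - n1 * n2 / 2 - 0.5) / sigma
    return u1, 0.5 * math.erfc(z / math.sqrt(2))  # P(Z >= z)


# Usage with invented totals (out of 136); NOT the study's data.
simulator = [118, 104, 125, 97, 113, 130, 109]
control = [89, 101, 76, 95, 120, 84, 92, 88]
print(describe(simulator), describe(control))
print(mann_whitney_greater(simulator, control))
```

With SciPy installed, `scipy.stats.mannwhitneyu(x, y, alternative="greater")` offers the same one-sided comparison, although for small samples without ties its default may compute an exact rather than an asymptotic p-value.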

Questionnaires

The pre- and post-training confidence scores were reviewed, and the difference between baseline and final self-assessed confidence scores was calculated for each participant. A one-sided Mann–Whitney test was again used to compare the distributions of these differences between the two groups. A descriptive analysis of the questionnaire data was also performed using medians, ranges and IQRs. Free-text comments were reviewed and collated.
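The change-score step can be made concrete with a short sketch. The paper does not say how the domain-level differences were combined, but the reported group medians (for example 69 for obtaining diagnostic images) exceed what a single 1–10 item allows, which suggests the per-domain changes were summed for each participant. The Python below assumes exactly that; the function names, domain names and scores in the example are hypothetical.

```python
import statistics


def confidence_change(baseline, final):
    """Summed change in self-rated confidence for one trainee.

    baseline, final: dicts mapping domain -> score on the 1-10 scale.
    Assumption: overall change = sum over domains of (final - baseline).
    """
    if baseline.keys() != final.keys():
        raise ValueError("baseline and final must rate the same domains")
    for score in (*baseline.values(), *final.values()):
        if not 1 <= score <= 10:
            raise ValueError(f"score {score} is outside the 1-10 scale")
    return sum(final[d] - baseline[d] for d in baseline)


def group_changes(responses):
    """Change scores for a group, given (baseline, final) pairs per trainee."""
    return [confidence_change(b, f) for b, f in responses]


# Usage with two hypothetical trainees rating three domains.
group = [
    ({"liver": 2, "spleen": 3, "aorta": 1}, {"liver": 7, "spleen": 8, "aorta": 6}),
    ({"liver": 4, "spleen": 5, "aorta": 3}, {"liver": 6, "spleen": 4, "aorta": 5}),
]
changes = group_changes(group)
print(changes, statistics.median(changes))  # [15, 3] 9.0
```

A negative total, such as the control group's lower range limit of −38 for identifying pathology, means that a trainee's summed end-of-training ratings were lower than their summed baseline ratings. These per-participant totals are the values that the one-sided Mann–Whitney test compares between groups.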

Results

Clinical assessment

Total clinical assessment scores (maximum 136) were higher in the simulator group (median 113, range 83–130, IQR 17) than in the control group (median 89, range 73–126, IQR 31.5), and the difference was statistically significant (p = 0.0463).

Assessment scores were aggregated into categories evaluating image optimisation and examination of each organ. Median scores in the simulator group were higher across all of these domains with the exception of the bladder, for which the median score was the same in both groups (Table 2).

Table 2.

Aggregated assessment scores of control and simulator groups

| Domain | Control median | Control range | Control IQR | Simulator median | Simulator range | Simulator IQR |
|---|---|---|---|---|---|---|
| Image optimisation | 2 | 1–4 | 1.25 | 3 | 1–4 | 2 |
| Pancreas | 3 | 2–4 | 2 | 3.5 | 1–4 | 1 |
| Liver | 3 | 1–4 | 2 | 4 | 1–4 | 1 |
| Gall bladder | 3 | 1–4 | 2 | 4 | 1–4 | 1 |
| Right kidney | 3 | 2–4 | 1 | 4 | 1–4 | 1 |
| Left kidney | 3 | 2–4 | 1 | 4 | 1–4 | 1 |
| Spleen | 3 | 1–4 | 1 | 4 | 1–4 | 1 |
| Bladder | 4 | 2–4 | 1 | 4 | 1–4 | 1 |
| Aorta and retroperitoneum | 2 | 1–4 | 2 | 4 | 1–4 | 2 |

IQR: interquartile range.

Questionnaire results

The results for each question asked were as follows:

Q. How confident are you at identifying and obtaining diagnostic quality images of the following structures?

Scored 1–10 (1 = not at all confident, 10 = completely confident).

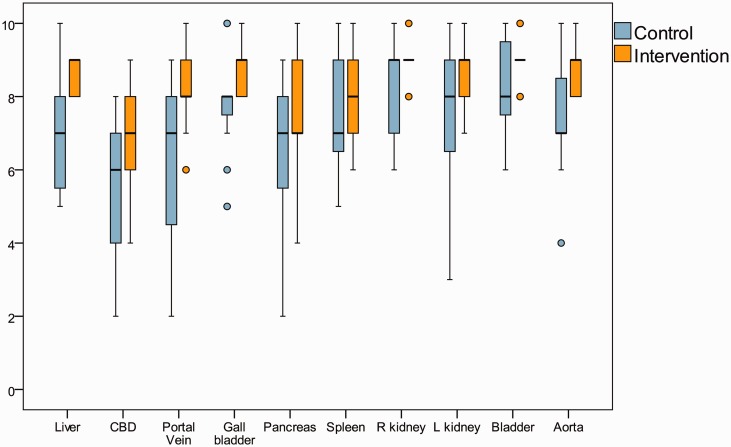

End of training confidence scores are displayed in Figure 1. Median end confidence scores were higher in the simulator group overall and for 8 out of 10 domains. Statistically significant results included confidence at obtaining diagnostic images of the liver (p = 0.02), common duct (p = 0.038), portal vein (p = 0.007), gall bladder (p = 0.002), and aorta (p = 0.029). Median end confidence scores for obtaining diagnostic quality images of the pancreas and right kidney were the same in both groups.

Figure 1.

End of training confidence scores for obtaining diagnostic ultrasound images. (Circles represent outliers.)

The overall increase in confidence scores for obtaining diagnostic images over the study period was greater in the simulator group (median 69, range 22–82, IQR 30.5) than in the control group (median 55, range 28–85, IQR 28), but this difference did not reach statistical significance (p = 0.365). Of note, both the initial and end confidence scores in the simulator group were higher than those in the retrospectively questioned control group.

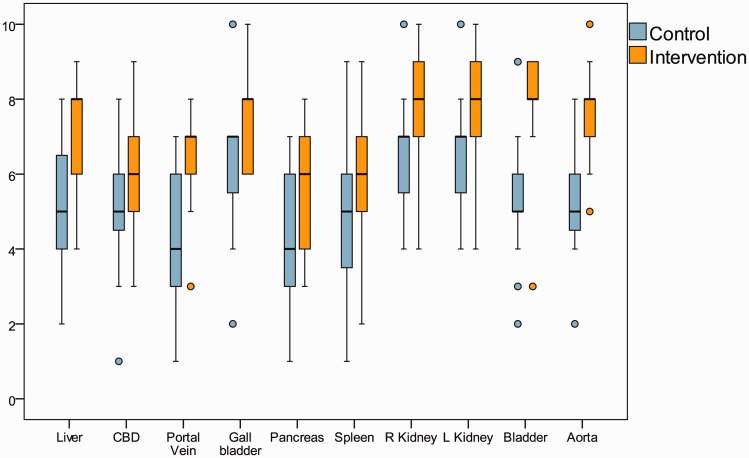

Q. How confident are you at identifying the presence of pathology in the following structures?

Scored 1–10 (1 = not at all confident, 10 = completely confident).

End of training confidence scores are displayed in Figure 2. The simulator group had higher end confidence scores for identifying the presence of pathology in every domain. Statistically significant differences were found for the liver (p = 0.01), portal vein (p = 0.04), pancreas (p = 0.046), spleen (p = 0.043), right kidney (p = 0.01), left kidney (p = 0.017), bladder (p = 0.001) and aorta (p < 0.001).

Figure 2.

End of training confidence scores for identifying the presence of pathology on ultrasound. (Circles represent outliers.)

The simulator group also demonstrated a greater increase in confidence over the study period (simulator group median 55, range 14–77, IQR 18.5; control group median 42, range −38 to 57, IQR 20), and this difference was statistically significant (p = 0.0073).

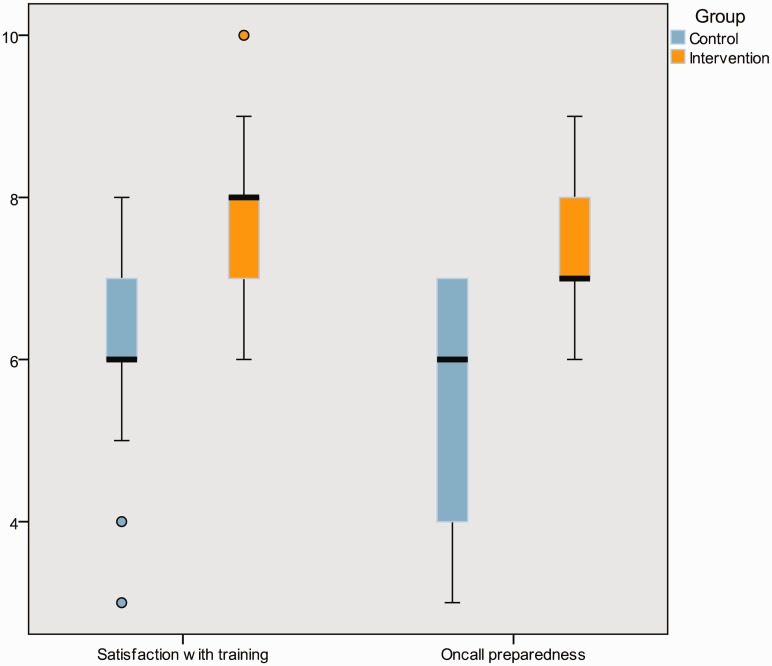

Q. How prepared do you feel to perform an abdominal ultrasound in the on-call setting?

Scored 1–10 (1 = not at all prepared, 10 = completely prepared).

The simulator group felt more prepared for on-call abdominal ultrasound than the control group (simulator group median 7, range 6–9, IQR 1; control group median 6, range 3–7, IQR 3), which was statistically significant (p = 0.001) (see Figure 3).

Figure 3.

Satisfaction with abdominal ultrasound training and perceived preparedness for performing on-call emergency transabdominal ultrasound. (Circles represent outliers.)

Q. How satisfied are you with your abdominal ultrasound training so far?

Scored 1–10 (1 = not at all satisfied, 10 = completely satisfied).

The simulator group were more satisfied with their abdominal ultrasound training than the control group (simulator group median 8, range 6–10, IQR 1; control group median 6, range 3–8, IQR 1), which was statistically significant (p = 0.0189).

Q. How useful have you found simulator training for learning abdominal ultrasound?

Scored 1–10 (1 = not at all satisfied, 10 = completely satisfied).

The simulator group reported a wide range of scores for the usefulness of simulator training (median 7, range 2–10, IQR 3). The perceived usefulness of other training methods is summarised in Table 3.

Table 3.

Perceived usefulness of training methods scored 1–10 using end of training questionnaire

| Training method | Control median | Control range | Control IQR | Simulator median | Simulator range | Simulator IQR |
|---|---|---|---|---|---|---|
| Simulator training | NA | NA | NA | 7 | 2–10 | 3 |
| Teaching US lists | 9 | 8–10 | 1 | 10 | 8–10 | 1 |
| Routine IP/OP lists | 8 | 6–10 | 2 | 8 | 4–10 | 3 |
| Non-practical small group tutorials | 8 | 4–10 | 1 | 8 | 6–10 | 2 |

IQR: interquartile range; IP: inpatient; NA: not applicable; OP: outpatient; US: ultrasound.

Q. What are your thoughts on the use of transabdominal ultrasound simulation to aid learning? (Free text box)

All trainees in the simulator group stated that simulation was useful early in training, despite the wide range of scores within the same group for the overall perceived usefulness of simulation training. However, many felt that the benefits of using the simulator did not extend beyond the first few months of training.

Discussion

This study has evaluated the additional value that abdominal simulator training can provide in early radiology training when added to an existing traditional ultrasound curriculum. Although it does not examine simulation in isolation, this design reflects real-world practice, and the intention was to assess the potential impact of simulation in this context.

The results show that trainees who underwent simulator-enhanced ultrasound training scored higher in an objective clinical ultrasound assessment. Median scores were higher across all domains apart from the bladder, where both groups scored equally highly. At the end of the training period, trainees who had undergone simulator-enhanced training were more confident in their abdominal ultrasound practice than those who had not, both at obtaining diagnostic images and at demonstrating pathology. The overall increase in confidence from the start of training to the end of the ST1 curriculum was also greater in the simulation cohort. For obtaining diagnostic images, this greater increase did not reach statistical significance, which may be explained by the higher initial confidence scores in the simulator group.

A literature search did not identify any previously published studies directly comparable with this one, that is, studies assessing systematic transabdominal ultrasound skills after training on modern high-fidelity simulators. Some studies have assessed simulation training for FAST. Two of these compared virtual reality simulation with controls who practised on healthy volunteers: one found no difference in skill levels between groups,15 while in the other the simulator group achieved higher-quality images than the controls.14 This preliminary study provides evidence supporting the additional value of abdominal ultrasound simulators in improving competence in early radiology training, and highlights the need for further research in this area.

In addition to an improvement in practical proficiency and confidence in obtaining diagnostic images, the simulator cohort also showed a statistically significant increase in confidence at demonstrating pathology by the end of their first year of training. This may be attributable to faster acquisition of technical skills and therefore earlier appreciation of pathology. The additional small group tutorials given to the simulator group, which introduced pathology in a structured manner, may also have contributed to this increase in confidence. It could also be argued that the simulator group simply received more training (simulator sessions and tutorials in addition to clinical training) and that the differences seen were due to the amount rather than the nature of the training. Whilst this cannot be refuted, there is often limited clinical capacity for additional real-time ultrasound training. These results, combined with the trainee perception that simulation is most useful in the first few months of training, suggest that simulation can effectively supplement clinical scanning time when used early in training. The higher satisfaction reported by the simulator group is consistent with previous studies demonstrating that simulator training is acceptable.4–6 It is not surprising that trainees preferred real-life scanning to simulation overall; simulation should not replace traditional methods but can be used as an adjunct to maximise the potential of clinical training opportunities.

Locally, trainees are expected to be competent at performing unsupervised emergency ultrasound in the on-call setting by the end of year one. Trainees who had undergone simulator training felt more prepared for on-call ultrasound scanning than those who had received traditional training. This is a subjective measure, but this greater perceived preparedness is consistent with the objectively measured higher level of competence in the same group.

This investigation has a number of limitations reflecting the nature of the study design, which was intended to evaluate the additional impact of simulation within the training practice of a busy teaching hospital and Academy rather than to investigate simulator training in isolation. The study sample size was limited by the number of radiology trainees in each year group, although the numbers were higher than in many previous studies assessing the use of simulation in ultrasound.

Groups were allocated by year cohort rather than randomised, so it is theoretically possible that the second cohort would have scored equally highly regardless of the training method. This was considered during study design, but after discussion with training leads it was decided that it would be unethical to withhold the potential educational benefits of the additional simulator-based training from half of the year group. A baseline assessment was not performed to assess this potential bias, as it was felt this would be of limited value in trainees with similar prior medical training and no previous ultrasound experience. In addition, the extra time and cost involved could not be justified for the purposes of this initial study.

The trainees were assessed while scanning a healthy volunteer, which evaluated their ability to perform a systematic transabdominal ultrasound examination and obtain diagnostic images. The ability to detect pathology in real patients was not assessed, as the unpredictable range of patients on clinical ultrasound lists was felt to be too unstandardised to allow a robust clinical assessment for the purposes of this study. The trainees were assessed by two consultant radiologists who specialise in ultrasound. Using two assessors may have reduced the consistency of assessment scores. Inter-assessor reliability was not assessed; however, the assessment was designed in the style of an Objective Structured Clinical Examination (OSCE) station, with a clear grading scale to reduce subjectivity. Educational literature has shown that OSCEs are a reliable assessment method and that their reliability is improved by using rating scales.18 Several papers have explored the use of OSCE-style assessment in non-radiology doctors and shown it to be a reliable and valid method of assessing ultrasound skills.19–21 The clinical assessment was designed with this in mind and was felt to be as objective as possible within the study constraints. Assessing the trainees on multiple occasions using different volunteers would have increased the reliability of the assessment scores, but this would have required additional assessor time that was not available within the study period. Because the clinical assessment was conducted towards the end of the first year of radiology training in two consecutive cohorts, the assessors were not blinded to whether each trainee had received simulation training. This is a further potential source of significant bias, although we believe it was reduced by the use of a structured OSCE. Both randomisation and blinding would be desirable in future research, as would an assessment of inter-assessor reliability.

The current study shows promise for improving general abdominal ultrasound skills using high-fidelity simulators as part of a structured ultrasound curriculum. It provides evidence that would justify future randomised studies to quantify more accurately the impact of simulation in isolation from other training methods.

Conclusion

The traditional methods of ultrasound training are inherently unstandardised, particularly within a department with large numbers of trainees and practitioners. Progression through a simulator-enriched curriculum makes early training more standardised. This may enable radiology trainees to gain more educational value from clinical sessions and achieve on-call competence earlier, while optimising patient throughput and service provision. Simulator-enhanced training potentially improves early engagement with ultrasound, which is important for maintaining enthusiasm for the modality within radiology.

This study suggests that, when embedded within a multifaceted training programme, simulation can improve clinical ultrasound skills and confidence levels in early training. The results provide a stimulus for future research to quantify the unique contribution of simulation to improving abdominal ultrasound practice.

Acknowledgements

The authors are grateful to the Plymouth University statistics department for their help with statistical analysis during the study.

Appendix

Ultrasound simulator clinical assessment tool

Please assess trainees according to the following criteria:

Level 1 – Trainee fails to attempt assessed skill

Level 2 – Trainee attempts the skill but with limitations

Level 3 – Trainee demonstrates basic competence at the assessed skill

Level 4 – Trainee demonstrates familiarity with and competence at skill with awareness of diagnostic limitations

General skills

| Domain | Skill | Not achieved | Achieved |
|---|---|---|---|
| Communication skills | Introduces self to patient | | |
| Use of equipment | Uses appropriate probe | | |
| | Selects appropriate preset | | |
| Hygiene | Hand washing | | |
| | Cleans probe | | |
| Ergonomics | Positions self in relation to the patient to reduce risk of operator strain | | |

Ultrasound technique

| Organ | Skill | Level 1 | Level 2 | Level 3 | Level 4 |
|---|---|---|---|---|---|
| Pancreas | Attempt at visualising the entire pancreas | | | | |
| | Appropriate use of depth, gain, focal zone | | | | |
| Liver | Whole volume scanned | | | | |
| | Images acquired in different planes to maximise visualisation | | | | |
| | Adapts positioning/uses breathhold if appropriate | | | | |
| | Portal vein identified with colour Doppler | | | | |
| | Portal vein velocity measured | | | | |
| | CBD identified and measured | | | | |
| | Appropriate use of depth, gain, focal zone | | | | |
| Gall bladder | Whole volume scanned | | | | |
| | Images acquired in two planes | | | | |
| | GB scanned with patient in two positions | | | | |
| | Appropriate use of depth, gain, focal zone | | | | |
| Right kidney | Whole volume scanned | | | | |
| | Images acquired in two planes | | | | |
| | Adapts positioning/uses breathhold if appropriate | | | | |
| | Appropriate use of depth, gain, focal zone | | | | |
| Left kidney | Whole volume scanned | | | | |
| | Images acquired in two planes | | | | |
| | Adapts positioning/uses breathhold if appropriate | | | | |
| | Appropriate use of depth, gain, focal zone | | | | |
| Spleen | Whole volume scanned | | | | |
| | Adapts positioning/uses breathhold if appropriate | | | | |
| | Appropriate use of depth, gain, focal zone | | | | |
| Bladder | Whole volume scanned | | | | |
| | Images acquired in two planes | | | | |
| | Appropriate use of depth, gain, focal zone | | | | |
| Aorta and retroperitoneum | Images acquired in two planes of para-aortic region | | | | |
| | Attempts/demonstrates awareness of Isikoff's view | | | | |
| | Appropriate use of depth, gain, focal zone | | | | |

Overall competence

| Domain | Skill | Level 1 | Level 2 | Level 3 | Level 4 |
|---|---|---|---|---|---|
| Communication skills | Clear explanation and communication throughout examination | | | | |
| Use of equipment | Efficient navigation of ultrasound machine, demonstrating familiarity with the controls and appropriate use, whilst scanning | | | | |
| General competence | Demonstrates ability to adapt examination to situation, responding to cues from patient | | | | |
| | Shows familiarity with options for patient positioning/scan techniques to maximise quality of examination | | | | |

Declaration of Conflicting Interests

The author(s) declared the following potential conflicts of interest with respect to the research, authorship, and/or publication of this article: At the time of purchasing the Medaphor transabdominal ultrasound simulator, the authors agreed to conduct an educational study on the impact of the simulator. Medaphor were not involved with the study design, implementation, write up or submission for publication. No financial input was received.

Funding

The author(s) received no financial support for the research, authorship, and/or publication of this article.

Ethics approval:

As this was an educational study on National Health Service (NHS) staff members, formal NHS Research Ethics Committee approval was not required.

Guarantor:

SJF

Contributors:

KEO and SCH researched the literature and designed the study, the clinical assessments and the questionnaires under guidance from SJF and PS. SJF and CG completed the clinical assessments. RC and MYA assisted with the assessments and data collection. KEO and SCH performed the data analysis and wrote the manuscript, with editing by SJF and PS. All authors reviewed and approved the final version of the manuscript.

References

1. Sidhu HS, Olubaniyi BO, Bhatnagar G, et al. Role of simulation-based education in ultrasound practice training. J Ultrasound Med 2012; 31: 785–791.

2. Temple J. Time for training: A review of the impact of the European Working Time Directive on the quality of training. London: Medical Education England (MEE), 2010.

3. Royal College of Radiologists. How the next Government can improve diagnosis and outcomes for patients: Four proposals from the Royal College of Radiologists. London: Royal College of Radiologists, 2015. https://www.rcr.ac.uk/sites/default/files/RCR(15)2_CR_govtbrief.pdf (accessed 20 December 2016).

4. Lewiss R, Hoffmann B, Beaulieu Y, et al. Point-of-care ultrasound education: The increasing role of simulation and multimedia resources. J Ultrasound Med 2014; 33: 27–32.

5. Burden C, Preshaw J, White P, et al. Validation of virtual reality simulation for obstetric ultrasonography: A prospective cross-sectional study. Simul Healthc 2012; 7: 269–273.

6. Stather DR, MacEachern P, Chee A, et al. Wet laboratory versus computer simulation for learning endobronchial ultrasound: A randomized trial. Can Respir J 2012; 19: 325–330.

7. Edrich T, Seethala RR, Olenchock BA, et al. Providing initial transthoracic echocardiography training for anaesthesiologists: Simulator training is not inferior to live training. J Cardiothorac Vasc Anaesth 2014; 28: 49–53.

8. Stather DR, MacEachern P, Rimmer K, et al. Assessment and learning curve evaluation of endobronchial ultrasound skills following simulation and clinical training. Respirology 2011; 16: 698–704.

9. Burden C, Preshaw J, White P, et al. Usability of virtual-reality simulation training in obstetric ultrasonography: A prospective cohort study. Ultrasound Obstet Gynaecol 2013; 42: 213–217.

10. Stather DR, MacEachern P, Chee A, et al. Evaluation of clinical endobronchial ultrasound skills following clinical versus simulation training. Respirology 2012; 17: 291–299.

11. Konge L, Clementsen PF, Ringsted C, et al. Simulator training for endobronchial ultrasound: A randomised controlled trial. Eur Respir J 2015; 46: 1140–1149.

12. Tolsgaard MG, Ringsted C, Rosthoj S, et al. The effects of simulation-based transvaginal ultrasound training on quality and efficacy of care: A multi-centre single blind randomized trial. Ann Surg 2017; 265: 630–637.

13. Ostergaard ML, Ewertsen C, Konge L, et al. Simulation-based abdominal ultrasound training: A systematic review. J Ultrasound Med 2016; 37: 251–261.

14. Chung GKWK, Gyllenhammer RG, Baker EL, et al. Effects of simulation-based practice on focussed assessment with sonography for trauma (FAST) window identification, acquisition, and diagnosis. Mil Med 2013; 178: 87–97.

15. Damewood S, Jeanmonod D, Cadigan B. Comparison of a multimedia simulator to a human model for teaching FAST exam image interpretation and image acquisition. Acad Emerg Med 2011; 18: 413–419.

16. Dubbins P. Radiology academies. Clin Radiol 2011; 66: 385–388.

17. Tolsgaard MG, Todsen T, Sorensen JL, et al. International multispecialty consensus on how to evaluate ultrasound competence: A Delphi consensus survey. PLOS One 2013; 8: e57687.

18. Swanwick T. Understanding medical education: Evidence, theory, and practice. Chichester, UK: Wiley-Blackwell Publishing, 2010.

19. Breitkreutz R, et al. Thorax, trachea, and lung ultrasonography in emergency and critical care medicine: Assessment of an objective structured training concept. Emerg Med Int 2013; Article ID 312758.

20. Kissin E, et al. Musculoskeletal ultrasound objective structured clinical examination: An assessment of the test. Arthritis Care Res 2014; 66: 2–6.

21. Schmidt JN, Kendall J, Smalley C. Competency assessment in senior emergency medicine residents for core ultrasound skills. Western J Emerg Med 2015; 16: 923–926.