Supplemental Digital Content is available in the text.

Abstract

Purpose

Many medical education studies focus on the effectiveness of educational interventions. However, these studies often lack clear, thorough descriptions of interventions that would make the interventions replicable. This systematic review aimed to identify gaps and limitations in the descriptions of educational interventions, using a comprehensive checklist.

Method

Based on the literature, the authors developed a checklist of 17 criteria for thorough descriptions of educational interventions in medical education. They searched the Ovid MEDLINE, Embase, and ERIC databases for eligible English-language studies published January 2014–March 2016 that evaluated the effects of educational interventions during classroom teaching in postgraduate medical education. Subsequently, they used this checklist to systematically review the included studies. Descriptions were scored 0 (no information), 1 (unclear/partial information), or 2 (detailed description) for each of the 16 scorable criteria (possible range 0–32).

Results

Among the 105 included studies, the criteria most frequently reported in detail were learning needs (78.1%), content/subject (77.1%), and educational strategies (79.0%). The criteria least frequently reported in detail were incentives (9.5%), environment (5.7%), and planned and unplanned changes (12.4%). No article described all criteria. The mean score was 15.9 (SD 4.1), with a range from 8 (5 studies) to 25 (1 study). The majority (76.2%) of articles scored 11–20.

Conclusions

Descriptions were frequently missing key information and lacked uniformity. The results suggest a need for a common standard. The authors encourage others to validate, complement, and use their checklist, which could lead to more complete, comparable, and replicable descriptions of educational interventions.

The core business of medical education is to educate trainees so that they become competent professionals. To optimize this process, many educational interventions are developed and studied for their effectiveness. Such studies can be considered complex because their outcomes may be influenced by many variables, such as teacher quality, the setting, and trainees’ previous experiences and levels of commitment. Moreover, the number of interacting components within intervention studies is generally high.1–3 It is of great importance, therefore, that all relevant components be described explicitly4 so that educational interventions can be replicated, study outcomes can be understood and interpreted, and reliable conclusions can be drawn regarding the effectiveness of (aspects of) interventions. Furthermore, clear descriptions of educational interventions help medical educators translate successful interventions to local settings.4–6

Studies published on educational interventions in the field of medical education often lack thorough descriptions, however.7–11 The main shortcomings that have been identified include incomplete information and a lack of uniformity in the descriptions of both the research methods and the educational interventions themselves.9,12,13 In a review focusing on how studies of educational interventions for evidence-based practice (EBP) describe these interventions, Phillips et al13 demonstrated that there were many inconsistencies in the descriptions. Because educational interventions affect medical education programs, which in turn affect health care, improving completeness and uniformity in the descriptions of educational interventions is important.14 To improve such descriptions, it would be helpful to know what problems currently exist. In this review, we therefore systematically analyzed published descriptions of educational interventions to identify potential gaps and limitations.

To identify the limitations of published intervention descriptions, we first needed to identify criteria for thorough descriptions. While several checklists had already been designed to report on educational interventions,5,9,10,14,15 none seemed fully appropriate for describing educational interventions in medical education because their scope was either content specific15 or too broad and also included the description of the study methodology.5,9,10,14 Therefore, based on the educational intervention literature and the aforementioned existing checklists, we established a new checklist of key items that authors should cover to describe interventions in medical education. Using this checklist, we performed a systematic review of medical education intervention studies, with a focus on interventions in postgraduate medical education.

Method

Development of the checklist

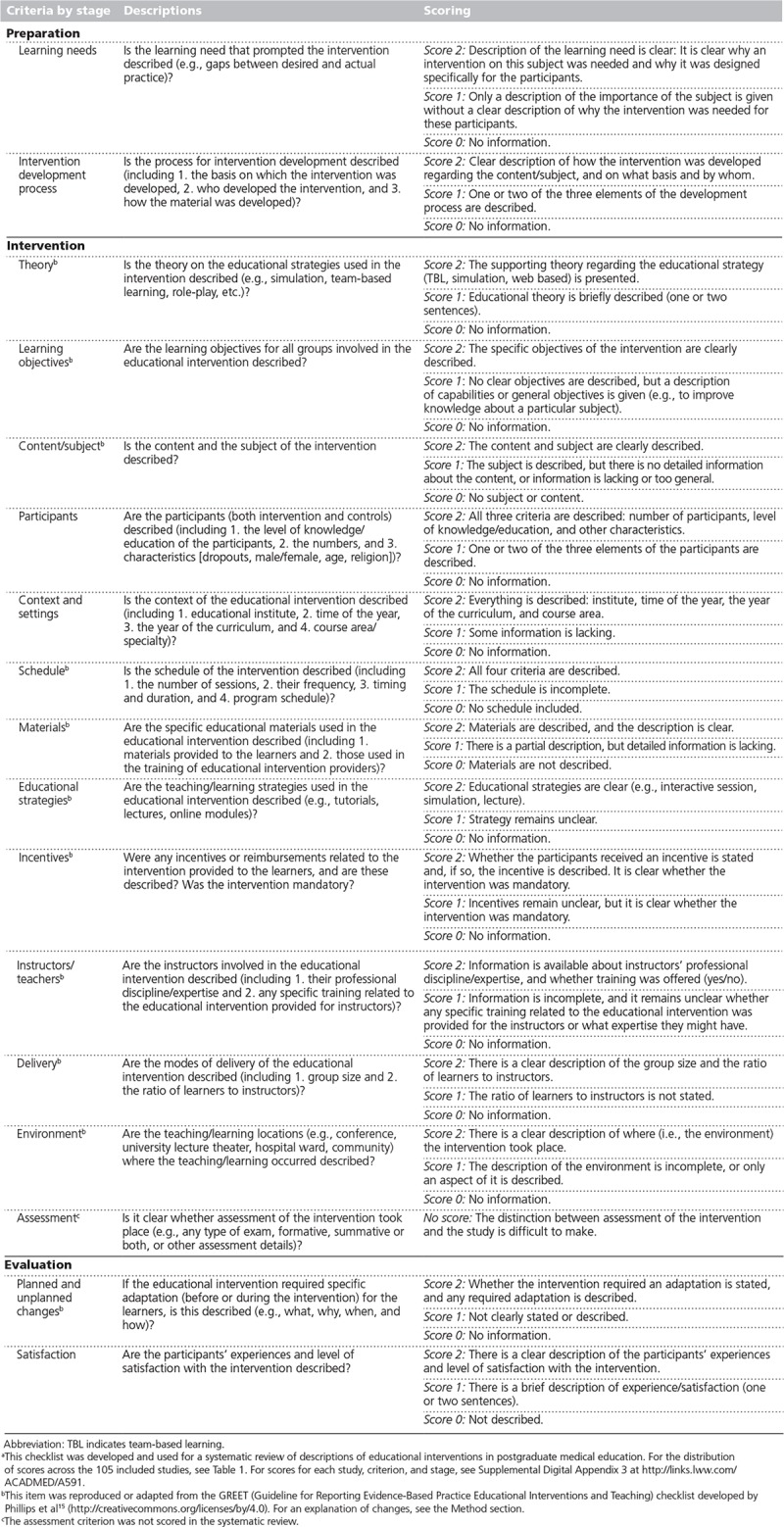

We began by developing a list of criteria to assess the descriptions of educational interventions in medical education in January 2015. This checklist, and hence this review, covered the description of the intervention only and did not deal with study design. Our list was based on the Guideline for Reporting Evidence-Based Practice Educational Interventions and Teaching (GREET) checklist developed by Phillips et al.15 We reformulated the GREET item that was specific to EBP (content/subject) to be applicable to educational interventions in general. We merged two items (planned changes, unplanned changes) and supplemented four items (theory, delivery, schedule, instructors). We incorporated four items (learning objectives, educational strategies, materials, environment) directly into our list. We did not include attendance because the scope of this item was covered by two others (delivery [from GREET] and participants [a new item, described below]).

On the basis of the literature, we identified additional items that were not covered by GREET. According to Morrison et al,14 Reznich and Anderson,5 and Windish et al,12 a first step when developing an intervention should be the assessment of learning needs of future participants. In addition, as discussed by Olson6 and by Windish et al,12 knowing the rationale behind the design of an educational intervention provides insight into the main decisions, and participants and context can influence the design. Therefore, we added the following criteria to our checklist: learning needs,5,6,12,14 intervention development process,6,9 context and settings,5,6,9 and participants.10 We also added items on evaluating the effect of and satisfaction with the intervention: assessment and satisfaction.2,5,6,9,10

The final list of 17 items was completed in November 2015 and discussed during a workshop that month at a Dutch medical education conference.16 The workshop participants agreed on the importance of all criteria. Subsequently, in January 2016, a pilot survey was conducted, using a subset of 15 educational intervention papers included in our systematic review (described below). Two of the authors (J.M. and N.B.) individually scored each intervention description on all items and discussed their scores. All criteria except assessment proved possible to score. The studies included in the pilot showed that it was often unclear whether the assessment performed was for the study, for the intervention (or part of the intervention), or for both. Assessment was therefore not scored in the current review, although we included this item in the checklist given its overall importance. Furthermore, scoring was difficult on the materials and planned/unplanned changes criteria because it was not always clear which materials were used or whether the intervention was changed during the study. J.M. and N.B. ultimately reached agreement on how to score all criteria. Based on the discussions, a comprehensive description of each item and its scoring was developed.

Our final checklist distinguishes three stages—preparation, intervention, and evaluation—and includes 17 items (Table 1). Each item, except assessment, is scored 0, 1, or 2, depending on the completeness of the description: A score of 2 corresponds to a detailed description, a score of 1 corresponds to an unclear or partial description, and a score of 0 is given when information is lacking entirely. Some criteria contain multiple elements; for example, a complete or detailed description of the context and settings item for a score of 2 includes the educational institute, time of the year, year of the curriculum, and course area/specialty. If one of these elements is missing from the description, the article scores a 1 on this criterion. The scoring guidelines for each item are provided in the last column of Table 1.
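The scoring rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' instrument: the item labels are paraphrased from the text of this review, their order is arbitrary, and `score_context_and_settings` and `total_score` are hypothetical helper names. Only the context and settings rule is spelled out in the text; the rules for the other items are given in Table 1 and are not reproduced here.

```python
# Hypothetical sketch of the 0/1/2 scoring scheme; labels paraphrased from the text.
SCORED_ITEMS = (
    # Preparation
    "learning needs",
    "intervention development process",
    # Intervention
    "theory",
    "learning objectives",
    "content/subject",
    "participants",
    "context and settings",
    "educational strategies",
    "schedule",
    "materials",
    "incentives",
    "instructors",
    "delivery",
    "environment",
    # Evaluation
    "planned/unplanned changes",
    "satisfaction",
)
UNSCORED_ITEMS = ("assessment",)  # on the checklist, but not scored in this review

CONTEXT_ELEMENTS = frozenset({
    "educational institute",
    "time of the year",
    "year of the curriculum",
    "course area/specialty",
})


def score_context_and_settings(reported_elements):
    """Return 2 if all four elements are reported, 1 if only some, 0 if none."""
    found = CONTEXT_ELEMENTS & set(reported_elements)
    if found == CONTEXT_ELEMENTS:
        return 2
    return 1 if found else 0


def total_score(item_scores):
    """Sum the 0/1/2 scores over the 16 scored items (possible range 0 to 32)."""
    total = 0
    for item in SCORED_ITEMS:
        score = item_scores[item]  # raises KeyError if a scored item was skipped
        if score not in (0, 1, 2):
            raise ValueError(f"{item!r}: score must be 0, 1, or 2, got {score!r}")
        total += score
    return total


# Illustrative article: every item partially described.
example = {item: 1 for item in SCORED_ITEMS}
example["context and settings"] = score_context_and_settings(
    ["educational institute", "course area/specialty"]
)
print(total_score(example))
```

Keeping assessment in a separate tuple mirrors the decision to retain the item on the checklist while leaving it out of the total.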

Table 1.

Checklist for Thorough Descriptions of Educational Interventions in Medical Education, With Criteria and Scoring

Search methods

The MEDLINE (Ovid), Embase (Ovid), and ERIC (Ovid) databases were searched on March 22, 2016, for eligible English-language studies published since January 1, 2014. (The detailed search strategy used for each database is available in Supplemental Digital Appendix 1 at http://links.lww.com/ACADMED/A590.) To narrow the search, the scope was set to studies evaluating the effects of educational interventions during formal teaching (classroom teaching) in postgraduate medical education.

Inclusion and exclusion criteria

We included studies of educational interventions concerning postgraduate trainees (i.e., residents, interns) irrespective of specialty and year of education when residents accounted for at least 50% of the participants and the intervention and/or control session took place during classroom teaching.

Studies concerning physical examination or technical/clinical skills interventions (e.g., palpation instruction) were excluded, as were studies concerning interventions “on the job” and in bedside teaching, because these settings are less controlled than classroom settings and were therefore expected to be more difficult to describe. In addition, articles were excluded if the full text was unavailable in English or if they did not include an evaluation or study the effects of the intervention (i.e., reviews, abstracts, editorials, protocols, innovation reports, conference proceedings). Qualitative studies were excluded because they are generally exploratory and serve a different purpose than quantitative studies, which generally focus on effectiveness.

Study selection

N.B. and J.M. reviewed the titles and abstracts of records identified through the database searches against the inclusion and exclusion criteria. Their independent analysis of a subset (the first 200) of the publications yielded a 97% agreement rate. On the basis of this high level of agreement, the remaining publications were reviewed and selected by either J.M. or N.B. If the title and abstract met the inclusion criteria, or if they did not provide sufficient clarification to determine whether the study met the criteria, the full text was reviewed for eligibility.
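As a rough illustration of how an agreement rate of this kind is computed, the sketch below compares two reviewers' include/exclude decisions record by record. The decision lists are made up so that 194 of 200 decisions match; the review reports only the resulting rate, and `percent_agreement` is a hypothetical helper name. Raw percent agreement does not correct for agreement expected by chance.

```python
def percent_agreement(decisions_a, decisions_b):
    """Percentage of records on which two reviewers made the same decision."""
    if len(decisions_a) != len(decisions_b) or not decisions_a:
        raise ValueError("both reviewers must rate the same non-empty set of records")
    matches = sum(a == b for a, b in zip(decisions_a, decisions_b))
    return 100.0 * matches / len(decisions_a)


# Made-up decisions for 200 records: True = retain for full-text review.
reviewer_1 = [True] * 20 + [False] * 180
reviewer_2 = [True] * 17 + [False] * 3 + [True] * 3 + [False] * 177

print(percent_agreement(reviewer_1, reviewer_2))
```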

The final set of included articles was scored using the 16 scored items on our checklist (Table 1), and data were collected on general study characteristics (journal, study design, country of origin, specialty of study participants). After the pilot survey (described above), the remaining articles were divided between J.M. and N.B. for scoring. Articles that referred to supplementary material (in an appendix, online, or in a prior publication) were scored on the basis of all information available, including the supplementary material when it could be obtained. J.M. and N.B. discussed scores frequently to maintain uniformity in scoring practice. Narrative details backing the scores were collected and are available from the corresponding author upon request. As noted above, the included studies were not assessed on the methodology used because the aim of this systematic review was to analyze descriptions of the interventions rather than methodology.

Analysis

All supporting quotations and other narrative data, associated scores, and general study characteristics were documented using Microsoft Excel 2011 (Microsoft Corp., Redmond, Washington). Descriptive statistics of study scores and characteristics were calculated using SPSS version 19.17 The analysis was performed from April 2016 to February 2017.

Results

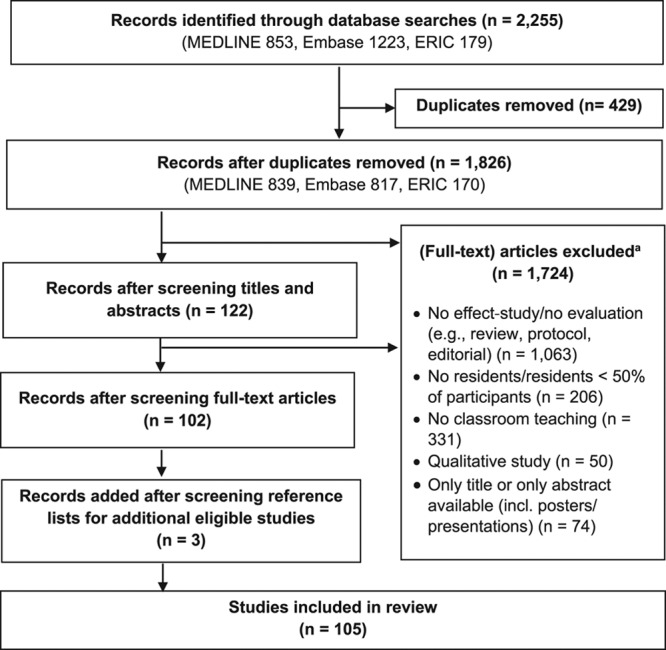

The electronic database searches identified 1,826 records (Figure 1). The initial screening of titles and abstracts yielded 122 articles. After review of the full texts, 102 articles were included. Screening the reference lists of these 102 articles for additional eligible studies yielded 3 articles, resulting in a total of 105 articles selected for inclusion in the review.18–122

Figure 1.

Flow diagram, from database searches to final included studies, for a systematic review of educational intervention descriptions in postgraduate medical education in studies published January 2014–March 2016. Inclusion criteria consisted of (1) educational intervention, (2) residents at least 50% of participants, and (3) classroom teaching (e.g., didactic lecture, small-group discussion). The authors excluded articles that did not have the full text available (in English) for review, as well as editorials, reviews, abstracts, conference proceedings, and qualitative studies. (a) Some studies were excluded on the basis of multiple exclusion criteria. This figure lists each excluded study only once, at the most relevant applicable exclusion criterion.

Throughout the screening process, articles were excluded for the following reasons, in order of incidence:

No effect study or no evaluation of the intervention (n = 1,063; 58.2%)

No classroom teaching (n = 331; 18.1%)

No resident participants or < 50% resident participants (n = 206; 11.3%)

Only title and abstract available (e.g., congress or poster abstracts) (n = 74; 4.1%)

Qualitative study (n = 50; 2.7%)

Details on individual articles and the reasons for exclusion are available from the corresponding author upon request.
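The exclusion counts can be checked against the 1,826 records identified by the database searches. The short sketch below uses only figures reported in this section (the variable names are illustrative). It recomputes each percentage and confirms that 102 articles remain after exclusions, before the 3 articles found through reference-list screening are added.

```python
TOTAL_RECORDS = 1826           # records identified by the database searches
FROM_REFERENCE_LISTS = 3       # additional articles found in reference lists

# Exclusion counts as reported in the text.
EXCLUDED = {
    "no effect study or no evaluation of the intervention": 1063,
    "no classroom teaching": 331,
    "no or < 50% resident participants": 206,
    "only title and abstract available": 74,
    "qualitative study": 50,
}

excluded_total = sum(EXCLUDED.values())
included_from_search = TOTAL_RECORDS - excluded_total
included_total = included_from_search + FROM_REFERENCE_LISTS

# Share of all identified records excluded for each reason, to one decimal.
percentages = {
    reason: round(100 * count / TOTAL_RECORDS, 1)
    for reason, count in EXCLUDED.items()
}

for reason, count in EXCLUDED.items():
    print(f"{reason}: n = {count} ({percentages[reason]}%)")
print(f"included from search: {included_from_search}; total included: {included_total}")
```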

Characteristics of included studies

The 105 included articles were published in 74 journals, the most prevalent of which were the Journal of Graduate Medical Education (8.6%; n = 9) and Academic Psychiatry (6.7%; n = 7). The studies were conducted in 17 countries, most often in the United States (61.0%; n = 64). The interventions covered 17 specialties; the most frequent were internal medicine (14.3%, n = 15), family medicine (12.4%, n = 13), emergency medicine (10.5%, n = 11), and anesthesiology (10.5%, n = 11). Some studies did not report the specialty of the participants clearly (13.3%, n = 14), and some studies included trainees from multiple specialties (5.7%, n = 6). Almost half of the studies evaluated the intervention by using a pre- and posttest design for one group (48.6%; n = 51). The study characteristics are summarized in Supplemental Digital Appendix 2 at http://links.lww.com/ACADMED/A590.

Assessment of educational intervention descriptions: Scores on the checklist

An overview of the scores for each item, stage, and article is provided in Supplemental Digital Appendix 3 at http://links.lww.com/ACADMED/A591. The distribution of scores for each criterion for the included articles is presented in Table 2. Good practice examples for each item are provided in Supplemental Digital Appendix 4 at http://links.lww.com/ACADMED/A590.

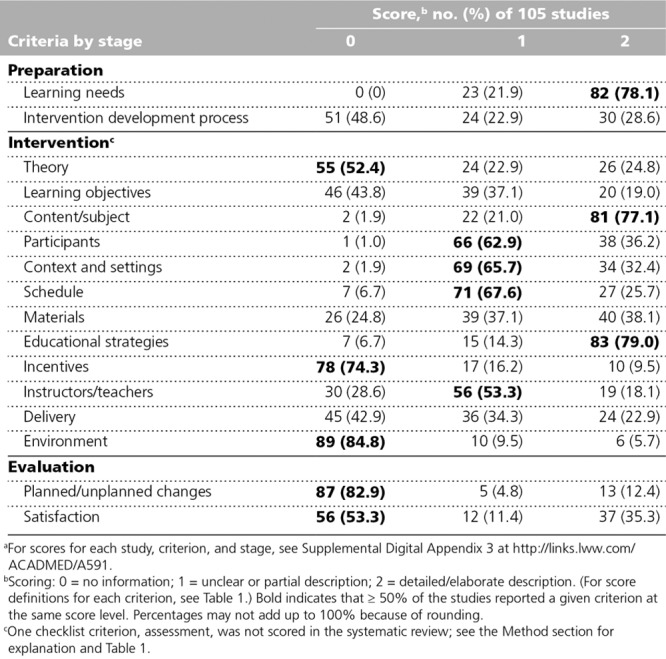

Table 2.

Distribution of Scores on Checklist for Thorough Descriptions of Educational Interventions in Medical Education, for Studies Included in a Systematic Review of Educational Intervention Descriptions in Postgraduate Medical Education

Each description of an educational intervention could score between 0 and 32 points based on the criteria checklist (Table 1). None of the articles described all of the criteria in detail. The lowest score of the articles we analyzed was 8 (5 studies29,58,61,68,101), and the highest score was 25 (1 study50). The mean score for the 105 included articles was 15.9 (SD 4.1). The range of scores was as follows: 12 articles (11.4%) scored 8–10, 80 articles (76.2%) scored 11–20, and 13 articles (12.4%) scored 21–25.
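To put these figures in perspective, the following sketch recomputes the share of articles in each reported score band and expresses the mean score as a percentage of the 32-point maximum. It uses only the counts given above; `band_for` is a hypothetical helper, and the bands are simply those reported in the text, not cut-offs defined by the checklist.

```python
MAX_SCORE = 16 * 2   # 16 scored items, each worth up to 2 points
N_ARTICLES = 105
MEAN_SCORE = 15.9    # as reported

# Reported number of articles per score band (low, high inclusive).
BAND_COUNTS = {(8, 10): 12, (11, 20): 80, (21, 25): 13}


def band_for(total):
    """Return the reported (low, high) band containing a total score, or None."""
    for low, high in BAND_COUNTS:
        if low <= total <= high:
            return (low, high)
    return None


band_share = {
    band: round(100 * count / N_ARTICLES, 1)
    for band, count in BAND_COUNTS.items()
}
mean_as_share_of_max = round(100 * MEAN_SCORE / MAX_SCORE, 1)

print(band_share)
print(f"mean score is {mean_as_share_of_max}% of the maximum of {MAX_SCORE}")
```

On these numbers, the average description earned roughly half of the available points.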

Twenty-four articles referred to additional material (in an appendix, online, or in a prior publication). We could not find the additional material for 5 articles (mean score: 13.8 [SD 3.1]).18,19,44,48,83 For the remaining 19 articles,22,23,25,35,39,41,50,51,74,78,81,91–93,98,104,110,116,119 scoring included the supplementary material. The mean score of these 19 articles was 18.3 (SD 3.7; range 13–25), significantly higher than the mean score of 15.5 (SD 4.0) for the 81 articles without additional information (P = .007; 95% CI on the observed difference = 0.8–4.8).
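The reported comparison can be approximately reproduced from the summary statistics alone. The sketch below assumes a pooled-variance (Student's) independent t test; the text names an independent t test but does not state the variant, so this is an assumption. The critical value of about 1.984 for 98 degrees of freedom is taken from standard t tables, and because the inputs are rounded, the results are approximate.

```python
import math


def pooled_t_from_summary(mean1, sd1, n1, mean2, sd2, n2):
    """Student's t test (pooled variance) computed from summary statistics.

    Returns (mean difference, standard error, t statistic, degrees of freedom).
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    diff = mean1 - mean2
    return diff, se, diff / se, df


# Reported values: 19 articles scored with supplementary material
# versus 81 articles without additional information.
diff, se, t_stat, df = pooled_t_from_summary(18.3, 3.7, 19, 15.5, 4.0, 81)

# Assumption: two-sided 95% critical value of t for 98 degrees of freedom,
# approximately 1.984 according to standard tables.
T_CRIT_95 = 1.984
ci_low = diff - T_CRIT_95 * se
ci_high = diff + T_CRIT_95 * se

print(f"difference = {diff:.1f}, t({df}) = {t_stat:.2f}")
print(f"95% CI = {ci_low:.1f} to {ci_high:.1f}")
```

Under these assumptions the interval comes out at about 0.8 to 4.8, in line with the reported confidence interval.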

Preparation (2 criteria).

Learning needs were reported in all articles, with 78.1% (n = 82) describing them in detail (score 2). However, 21.9% (n = 23) failed to describe why the intervention was designed specifically for the participants (score 1).

The intervention development process was described in detail by 28.6% (n = 30) of the studies (score 2), as in the following example from Chee et al33:

We designed a teaching skills curriculum for the HMS [Harvard Medical School] Residency Training Program in Ophthalmology following Kern’s 6-step approach to curriculum development. These steps include problem identification, needs assessment of targeted learners, establishment of goals and objectives, design and implementation of educational strategies, and program evaluation.

Intervention (12 criteria).

The educational strategy applied during the intervention was reported in detail by 79.0% (n = 83) of the studies (score 2). However, 52.4% (n = 55) failed to mention the educational theory behind the strategy (score 0). An illustrative example of a study that did support the applied strategy with theoretical substantiation is Daly et al36:

Prior studies have found that narrow-band imaging (NBI) can be taught to physicians by in-person training and web-based program.

Learning objectives were reported in detail by 19.0% (n = 20) of the studies (score 2), but 43.8% (n = 46) did not report any objectives (of the intervention) at all (score 0). The content/subject of the intervention was described clearly in 77.1% (n = 81; score 2). The number of participants was reported in all studies, except 1.60 However, details such as the participants’ level of knowledge or demographic information were missing in 62.9% (n = 66) of the studies (score 1).

Some information on the context and setting (i.e., the institution, time of the year, year of the curriculum, and course area/specialty) was reported by almost all studies (98.1%, n = 103). However, 65.7% (n = 69) did not describe all of these elements (score 1). A complete description of the schedule (i.e., number of sessions, frequency, timing and duration, and program schedule) was given in 25.7% (n = 27) of the studies (score 2); however, 67.6% (n = 71) did not describe all of these elements (score 1), and 6.7% (n = 7) reported no information on the schedule at all (score 0).

With regard to course materials, 24.8% (n = 26) of the studies did not describe any materials (score 0), and detailed information was missing from another 37.1% (n = 39; score 1). The remaining 38.1% (n = 40) described their materials in more detail (score 2), such as in this example from Haspel et al54:

PowerPoint lecture. Participants were given access to the activities at the workshop electronically (initially Google Docs and later Google Forms). We have also made all teaching materials and an instructor handbook available online at no cost and are planning train-the-trainer sessions to facilitate broader dissemination for local implementation.

Whether the participants received any incentives was not described in 74.3% (n = 78) of the studies (score 0). Whether the intervention was mandatory was mentioned in 16.2% (n = 17; score 1). Only 9.5% (n = 10) of the studies reported whether participants received some other kind of incentive (score 2), as in this example from Woodworth et al117:

The only reward that subjects received for study participation was a DVD containing the educational video and interactive simulation, which was given to them at the conclusion of the study.

Information about the instructors/teachers was reported in detail in 18.1% (n = 19) of the studies (score 2), but 28.6% (n = 30) did not report any information about the instructors at all (score 0). In more than half of the studies (53.3%, n = 56), information was missing on the instructors’ backgrounds or whether they received any specific training for the intervention (score 1).

Information on delivery (i.e., group size and the ratio of learners to instructors) was described in detail in 22.9% (n = 24) of the studies (score 2), but not at all in 42.9% (n = 45; score 0). The environment was not described in 84.8% (n = 89) of the studies (score 0). Only 5.7% (n = 6) of the studies reported where the intervention took place (score 2), as in this example from Sawatsky et al97:

Lectures are given by the same faculty member twice at two separate locations, a university-based hospital and a VA [Veterans Affairs] hospital. Both locations are set up with tables in rows, with several chairs at each table.

Evaluation (2 criteria).

Planned/unplanned changes, or whether the intervention required some kind of adaptation before or during the intervention, were not reported by 82.9% (n = 87) of the studies (score 0). Furthermore, more than half of the studies (53.3%, n = 56) did not describe the participants’ level of satisfaction with, or their experience of, the intervention (score 0).

Discussion

Thorough and uniform descriptions of educational interventions are helpful for medical educators when comparing interventions and translating good practices into their own local programs. We therefore developed a checklist for thorough descriptions of educational interventions in medical education. Using this list, we performed a systematic evaluation of the 105 studies included in our review of educational interventions in postgraduate medical education. Our results indicate that descriptions of educational interventions can be improved.

The only criterion covered by all articles included in our review was the learning needs that prompted the educational intervention, with 78.1% of the studies providing detailed descriptions. Other criteria that were frequently described in detail were the content/subject (77.1%) and educational strategies (79.0%) of the intervention. This finding is in line with Phillips and colleagues’13 review of the literature describing educational interventions for EBP. Participants, context and settings, schedule, and instructors/teachers were frequently reported, but many studies failed to provide a complete description for these criteria. On the other hand, our evaluation also indicated that other criteria on our checklist were generally not reported; more than 50% of studies did not describe theory, incentives, environment, or planned and unplanned changes. With respect to the incentives and planned and unplanned changes criteria, it could be that some interventions had no incentives or changes. Such absence is itself information that would be of interest to readers. Using a checklist such as ours would aid in reporting all relevant information for a thorough description. Our results show that there is no uniformity in descriptions of educational interventions during classroom teaching for residents. Although not specific to educational interventions, lack of uniformity in descriptions of medical education research has been reported in the literature.5,7,9,12

One reason why certain criteria on our list are not covered by many studies might be that authors feel they must provide a limited description of their intervention when their article is restricted by a word limit. As a solution, authors could describe the educational intervention in detail in a separate (design) paper,123 or they could submit additional material.6 In our review, articles whose additional material could be scored had higher scores than articles without additional material (independent t test, mean difference = 2.8; P = .007; 95% CI = 0.8–4.8). However, this additional information should be easy to find, which was not always the case. On the basis of our review, we recommend describing the educational intervention in a table or figure. This would ensure that medical educators and researchers can find all of the needed information easily and help them more swiftly adapt the intervention for their local sites.

Other reasons why certain criteria are not covered may be that authors are either unaware of all of the criteria (because of the lack of a standard) or disagree regarding their importance. Because the criteria we included in our checklist are based on the literature,5,6,9,10,15 and because they were considered to be important during discussions at the Dutch medical education conference,16 it seems more likely that authors are unaware of them. We hope that this review will enhance the awareness of the importance of a description that reports on all relevant criteria.

Our results showed that more than half of the studies failed to describe the theory supporting the educational strategies. In addition, the learning objectives were reported in detail by fewer than one-fifth of the included studies. Without addressing these key criteria, it is unclear how one can measure whether the intervention was successful and why study results were obtained.

As we noted above, we decided not to score one criterion, assessment, because our pilot survey showed that it is often not possible to determine whether an included assessment was developed to study the effectiveness of an intervention, was an integral part of the intervention, or both. However, to assess the effectiveness of an intervention, it is of paramount importance to know whether the intervention includes a formative or summative assessment.9 We therefore recommend including a clear description of the assessment and its purpose in educational intervention studies.

We did not evaluate the quality of the interventions performed; we only evaluated the descriptions of the interventions. The quality of the description of an intervention does not necessarily correlate with the quality of the intervention itself. For instance, many studies lacked a description of the intervention development process; however, the interventions were likely well thought out and set up systematically. Furthermore, a complete description of an intervention does not necessarily mean the intervention was successful.

A limitation of this systematic review is that the sample of studies was limited to reports on educational interventions for residents and classroom teaching. The type of intervention may have some influence on the level and pattern of description. Classroom teaching is a relatively regulated type of intervention that is probably more easily described than many other types of educational interventions. However, there are educational interventions that are even more regulated (e.g., e-learning) or that would require special criteria (e.g., skill training). Future research could include other settings to validate our criteria list. In addition, research could explore the level of agreement on the importance of the selected criteria so that a common standard can be developed. Our checklist provides a departure point for such a standard that, to the best of our knowledge, previously did not exist.

In conclusion, this systematic review confirms that there is room for improvement in descriptions of educational interventions during classroom teaching in postgraduate medical education. We found that key information on interventions was frequently missing and that there was a lack of uniformity of descriptions across studies. This suggests a need for a common standard for describing educational interventions. This review informs authors and readers on the essential criteria for describing educational interventions in medical education. We encourage other researchers to validate, complement, and use our criteria list, as this should lead to more complete, comparable, and replicable descriptions of interventions.

Acknowledgments:

The authors are sincerely grateful to Suzanne van Rhijn for her assistance with the literature search.

Footnotes

Funding/Support: Stichting BeroepsOpleiding Huisartsen (SBOH) and ZonMw (project number 12010095425). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Other disclosures: None reported.

Ethical approval: Reported as not applicable.

Previous presentations: The final list was discussed during a workshop at a Dutch medical education conference.16

The authors have informed the journal that they agree that both J.G. Meinema and N. Buwalda completed the intellectual and other work typical of the first author.

Supplemental digital content for this article is available at http://links.lww.com/ACADMED/A590 and http://links.lww.com/ACADMED/A591.

References

- 1.Sullivan GM. Getting off the “gold standard”: Randomized controlled trials and education research. J Grad Med Educ. 2011;3:285–289. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Craig P, Dieppe P, Macintyre S, Michie S, Nazareth I, Petticrew M. Developing and evaluating complex interventions: The new Medical Research Council guidance. Int J Nurs Stud. 2013;50:587–592. [DOI] [PubMed] [Google Scholar]

- 3.Moore GF, Audrey S, Barker M, et al. Process evaluation of complex interventions: Medical Research Council guidance. BMJ. 2015;350:h1258. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Abraham C, Michie S. A taxonomy of behavior change techniques used in interventions. Health Psychol. 2008;27:379–387. [DOI] [PubMed] [Google Scholar]

- 5.Reznich CB, Anderson WA. A suggested outline for writing curriculum development journal articles: The IDCRD format. Teach Learn Med. 2001;13:4–8.

- 6.Olson CA. Reflections on using theory in research on continuing education in the health professions. J Contin Educ Health Prof. 2013;33:151–152.

- 7.Cook DA, Beckman TJ, Bordage G. Quality of reporting of experimental studies in medical education: A systematic review. Med Educ. 2007;41:737–745.

- 8.Cook DA, Bordage G, Schmidt HG. Description, justification and clarification: A framework for classifying the purposes of research in medical education. Med Educ. 2008;42:128–133.

- 9.Patricio M, Juliao M, Fareleira F, Young M, Norman G, Vaz Carneiro A. A comprehensive checklist for reporting the use of OSCEs. Med Teach. 2009;31:112–124.

- 10.Cohen ER, McGaghie WC, Wayne DB, Lineberry M, Yudkowsky R, Barsuk JH. Recommendations for Reporting Mastery Education Research in Medicine (ReMERM). Acad Med. 2015;90:1509–1514.

- 11.Issenberg SB, McGaghie WC, Petrusa ER, Lee Gordon D, Scalese RJ. Features and uses of high-fidelity medical simulations that lead to effective learning: A BEME systematic review. Med Teach. 2005;27:10–28.

- 12.Windish DM, Reed DA, Boonyasai RT, Chakraborti C, Bass EB. Methodological rigor of quality improvement curricula for physician trainees: A systematic review and recommendations for change. Acad Med. 2009;84:1677–1692.

- 13.Phillips AC, Lewis LK, McEvoy MP, et al. A systematic review of how studies describe educational interventions for evidence-based practice: Stage 1 of the development of a reporting guideline. BMC Med Educ. 2014;14:152.

- 14.Morrison JM, Sullivan F, Murray E, Jolly B. Evidence-based education: Development of an instrument to critically appraise reports of educational interventions. Med Educ. 1999;33:890–893.

- 15.Phillips AC, Lewis LK, McEvoy MP, et al. Development and validation of the guideline for reporting evidence-based practice educational interventions and teaching (GREET). BMC Med Educ. 2016;16:237.

- 16.Congres NVMO Nederlandse Vereniging voor Medisch Onderwijs [Netherlands Association for Medical Education]; November 2015; Egmond aan Zee, The Netherlands.

- 17.SPSS Statistics for Windows [computer program]. Version 19.0. Armonk, NY: IBM Corp; 2010.

- 18.Acosta A, Azzalin A, Emmons CJ, Shuster JJ, Jay M, Lo MC. Improving residents’ clinical approach to obesity: Impact of a multidisciplinary didactic curriculum. Postgrad Med J. 2014;90:630–637.

- 19.Arora KS. A trial of a reproductive ethics and law curriculum for obstetrics and gynaecology residents. J Med Ethics. 2014;40(12):854–856.

- 20.Azim A, Singh P, Bhatia P, et al. Impact of an educational intervention on errors in death certification: An observational study from the intensive care unit of a tertiary care teaching hospital. J Anaesthesiol Clin Pharmacol. 2014;30:78–81.

- 21.Bank I, Snell L, Bhanji F. Pediatric crisis resource management training improves emergency medicine trainees’ perceived ability to manage emergencies and ability to identify teamwork errors. Pediatr Emerg Care. 2014;30:879–883.

- 22.Barbas AS, Haney JC, Henry BV, Heflin MT, Lagoo SA. Development and implementation of a formalized geriatric surgery curriculum for general surgery residents. Gerontol Geriatr Educ. 2014;35:380–394.

- 23.Becker TK, Skiba JF, Sozener CB. An educational measure to significantly increase critical knowledge regarding interfacility patient transfers. Prehosp Disaster Med. 2015;30:244–248.

- 24.Berkenbosch L, Muijtjens AM, Zimmermann LJ, Heyligers IC, Scherpbier AJ, Busari JO. A pilot study of a practice management training module for medical residents. BMC Med Educ. 2014;14:107.

- 25.Blackwood J, Duff JP, Nettel-Aguirre A, Djogovic D, Joynt C. Does teaching crisis resource management skills improve resuscitation performance in pediatric residents? Pediatr Crit Care Med. 2014;15:e168–e174.

- 26.Brandler TC, Laser J, Williamson AK, Louie J, Esposito MJ. Team-based learning in a pathology residency training program. Am J Clin Pathol. 2014;142:23–28.

- 27.Bray JH, Kowalchuk A, Waters V, Allen E, Laufman L, Shilling EH. Baylor pediatric SBIRT medical residency training program: Model description and evaluation. Subst Abus. 2014;35:442–449.

- 28.Burden AR, Pukenas EW, Deal ER, et al. Using simulation education with deliberate practice to teach leadership and resource management skills to senior resident code leaders. J Grad Med Educ. 2014;6:463–469.

- 29.Carek PJ, Dickerson LM, Stanek M, et al. Education in quality improvement for practice in primary care during residency training and subsequent activities in practice. J Grad Med Educ. 2014;6:50–54.

- 30.Carrié C, Biais M, Lafitte S, Grenier N, Revel P, Janvier G. Goal-directed ultrasound in emergency medicine: Evaluation of a specific training program using an ultrasonic stethoscope. Eur J Emerg Med. 2015;22:419–425.

- 31.Cartier RA, 3rd, Skinner C, Laselle B. Perceived effectiveness of teaching methods for point of care ultrasound. J Emerg Med. 2014;47:86–91.

- 32.Chau D, Bensalem-Owen M, Fahy BG. The impact of an interdisciplinary electroencephalogram educational initiative for critical care trainees. J Crit Care. 2014;29:1107–1110.

- 33.Chee YE, Newman LR, Loewenstein JI, Kloek CE. Improving the teaching skills of residents in a surgical training program: Results of the pilot year of a curricular initiative in an ophthalmology residency program. J Surg Educ. 2015;72:890–897.

- 34.Christianson MS, Washington CI, Stewart KI, Shen W. Effectiveness of a 2-year menopause medicine curriculum for obstetrics and gynecology residents. Menopause. 2016;23:275–279.

- 35.Corbelli J, Bonnema R, Rubio D, Comer D, McNeil M. An effective multimodal curriculum to teach internal medicine residents evidence-based breast health. J Grad Med Educ. 2014;6:721–725.

- 36.Daly C, Vennalaganti P, Soudagar S, Hornung B, Sharma P, Gupta N. Randomized controlled trial of self-directed versus in-classroom teaching of narrow-band imaging for diagnosis of Barrett’s esophagus-associated neoplasia. Gastrointest Endosc. 2016;83:101–106.

- 37.Dielissen P, Verdonk P, Waard MW, Bottema B, Lagro-Janssen T. The effect of gender medicine education in GP training: A prospective cohort study. Perspect Med Educ. 2014;3:343–356.

- 38.Dolan BM, Yialamas MA, McMahon GT. A randomized educational intervention trial to determine the effect of online education on the quality of resident-delivered care. J Grad Med Educ. 2015;7:376–381.

- 39.Dreyer J, Hannay J, Lane R. Teaching the management of surgical emergencies through a short course to surgical residents in East/Central Africa delivers excellent educational outcomes. World J Surg. 2014;38:830–838.

- 40.Duello K, Louh I, Greig H, Dawson N. Residents’ knowledge of quality improvement: The impact of using a group project curriculum. Postgrad Med J. 2015;91:431–435.

- 41.Feinstein RE. Violence prevention education program for psychiatric outpatient departments. Acad Psychiatry. 2014;38:639–646.

- 42.Ferrero NA, Bortsov AV, Arora H, et al. Simulator training enhances resident performance in transesophageal echocardiography. Anesthesiology. 2014;120:149–159.

- 43.Ferrell NJ, Melton B, Banu S, Coverdale J, Valdez MR. The development and evaluation of a trauma curriculum for psychiatry residents. Acad Psychiatry. 2014;38:611–614.

- 44.Fok MC, Wong RY. Impact of a competency based curriculum on quality improvement among internal medicine residents. BMC Med Educ. 2014;14:252.

- 45.Freundlich RE, Newman JW, Tremper KK, et al. The impact of a dedicated research education month for anesthesiology residents. Anesthesiol Res Pract. 2015;2015:623959.

- 46.Galiatsatos P, Rios R, Daniel Hale W, Colburn JL, Christmas C. The lay health educator program: Evaluating the impact of this community health initiative on the medical education of resident physicians. J Relig Health. 2015;54:1148–1156.

- 47.Garcia-Rodriguez JA, Donnon T. Using comprehensive video-module instruction as an alternative approach for teaching IUD insertion. Fam Med. 2016;48:15–20.

- 48.Gorgas DL, Greenberger S, Bahner DP, Way DP. Teaching emotional intelligence: A control group study of a brief educational intervention for emergency medicine residents. West J Emerg Med. 2015;16:899–906.

- 49.Graham KL, Green S, Kurlan R, Pelosi JS. A patient-led educational program on Tourette syndrome: Impact and implications for patient-centered medical education. Teach Learn Med. 2014;26:34–39.

- 50.Green JA, Gonzaga AM, Cohen ED, Spagnoletti CL. Addressing health literacy through clear health communication: A training program for internal medicine residents. Patient Educ Couns. 2014;95(1):76–82.

- 51.Grillo EU, Koenig MA, Gunter CD, Kim S. Teaching CSD graduate students to think critically, apply evidence, and write professionally. Commun Disord Q. 2015;36(4):241–251.

- 52.Gurrera RJ, Dismukes R, Edwards M, et al. Preparing residents in training to become health-care leaders: A pilot project. Acad Psychiatry. 2014;38:701–705.

- 53.Ha D, Faulx M, Isada C, et al. Transitioning from a noon conference to an academic half-day curriculum model: Effect on medical knowledge acquisition and learning satisfaction. J Grad Med Educ. 2014;6(1):93–99.

- 54.Haspel RL, Ali AM, Huang GC. Using a team-based learning approach at national meetings to teach residents genomic pathology. J Grad Med Educ. 2016;8:80–84.

- 55.Hettinger A, Spurgeon J, El-Mallakh R, Fitzgerald B. Using audience response system technology and PRITE questions to improve psychiatric residents’ medical knowledge. Acad Psychiatry. 2014;38:205–208.

- 56.Hogan TM, Hansoti B, Chan SB. Assessing knowledge base on geriatric competencies for emergency medicine residents. West J Emerg Med. 2014;15:409–413.

- 57.Hope AA, Hsieh SJ, Howes JM, et al. Let’s talk critical. Development and evaluation of a communication skills training program for critical care fellows. Ann Am Thorac Soc. 2015;12:505–511.

- 58.Horton WB, Weeks AQ, Rhinewalt JM, Ballard RD, Asher FH. Analysis of a guideline-derived resident educational program on inpatient glycemic control. South Med J. 2015;108:596–598.

- 59.Hubert V, Duwat A, Deransy R, Mahjoub Y, Dupont H. Effect of simulation training on compliance with difficult airway management algorithms, technical ability, and skills retention for emergency cricothyrotomy. Anesthesiology. 2014;120:999–1008.

- 60.Ibrahim H, Nair SC. Focus on international research strategy and teaching: The FIRST programme. Perspect Med Educ. 2014;3:129–135.

- 61.Isoardi J, Spencer L, Sinnott M, Eley R. Impact of formal teaching on medical documentation by interns in an emergency department in a Queensland teaching hospital. Emerg Med Australas. 2015;27:6–10.

- 62.Jayaraman V, Feeney JM, Brautigam RT, Burns KJ, Jacobs LM. The use of simulation procedural training to improve self-efficacy, knowledge, and skill to perform cricothyroidotomy. Am Surg. 2014;80:377–381.

- 63.Johnson JM, Stern TA. Teaching residents about emotional intelligence and its impact on leadership. Acad Psychiatry. 2014;38:510–513.

- 64.Kalaiselvan G, Dongre AR, Murugan V. Evaluation of medical interns’ learning of exposure to revised national tuberculosis control programme guidelines. Indian J Tuberc. 2014;61:288–293.

- 65.Kan C, Harrison S, Robinson B, Barnes A, Chisolm MS, Conlan L. How we developed a trainee-led book group as a supplementary education tool for psychiatric training in the 21st century. Med Teach. 2015;37:803–806.

- 66.Kashani K, Carrera P, De Moraes AG, Sood A, Onigkeit JA, Ramar K. Stress and burnout among critical care fellows: Preliminary evaluation of an educational intervention. Med Educ Online. 2015;20:27840.

- 67.Kelly DM, London DA, Siperstein A, Fung JJ, Walsh MR. A structured educational curriculum including online training positively impacts American Board of Surgery In-Training Examination scores. J Surg Educ. 2015;72:811–817.

- 68.Kim JS, Lee BI, Choi H, et al. Brief education on microvasculature and pit pattern for trainees significantly improves estimation of the invasion depth of colorectal tumors. Gastroenterol Res Pract. 2014;2014:245396.

- 69.Kiwanuka JK, Ttendo SS, Eromo E, et al. Synchronous distance anesthesia education by Internet videoconference between Uganda and the United States. J Clin Anesth. 2015;27:499–503.

- 70.Ledford CJ, Childress MA, Ledford CC, Mundy HD. Refining the practice of prescribing: Teaching physician learners how to talk to patients about a new prescription. J Grad Med Educ. 2014;6:726–732.

- 71.Lehmann R, Hanebeck B, Oberle S, et al. Virtual patients in continuing medical education and residency training: A pilot project for acceptance analysis in the framework of a residency revision course in pediatrics. GMS Z Med Ausbild. 2015;32(5):Doc51.

- 72.Loughland C, Kelly B, Ditton-Phare P, et al; ComPsych Investigators. Improving clinician competency in communication about schizophrenia: A pilot educational program for psychiatry trainees. Acad Psychiatry. 2015;39:160–164.

- 73.Madden K, Sprague S, Petrisor BA, et al. Orthopaedic trainees retain knowledge after a partner abuse course: An education study. Clin Orthop Relat Res. 2015;473:2415–2422.

- 74.Maddry JK, Varney SM, Sessions D, et al. A comparison of simulation-based education versus lecture-based instruction for toxicology training in emergency medicine residents. J Med Toxicol. 2014;10:364–368.

- 75.Maguire S, Hanley K, Quinn K, Sheeran J, Stewart P. Teaching multimorbidity management to GP trainees: A pilot workshop. Educ Prim Care. 2015;26:410–415.

- 76.Malone GP, Vale Arismendez S, Schneegans Warzinski S, et al. South Texas residency screening, brief intervention, and referral to treatment (SBIRT) training: 12-month outcomes. Subst Abus. 2015;36:272–280.

- 77.Matinpour M, Sedighi I, Monajemi A, Jafari F, Momtaz HE, Ali Seif Rabiei M. Clinical reasoning and improvement in the quality of medical education. Shiraz E Med J. 2014;15(4):1–4.

- 78.McCallister JW, Gustin JL, Wells-Di Gregorio S, Way DP, Mastronarde JG. Communication skills training curriculum for pulmonary and critical care fellows. Ann Am Thorac Soc. 2015;12:520–525.

- 79.McDonald PL, Straker HO, Schlumpf KS, Plack MM. Learning partnership: Students and faculty learning together to facilitate reflection and higher order thinking in a blended course. J Asynchronous Learn Netw. 2014;18(4):73–93.

- 80.Mehdi Z, Ross A, Reedy G, et al. Simulation training for geriatric medicine. Clin Teach. 2014;11(5):387–392.

- 81.Mitchell JD, Mahmood F, Wong V, et al. Teaching concepts of transesophageal echocardiography via web-based modules. J Cardiothorac Vasc Anesth. 2015;29:402–409.

- 82.Nasr Esfahani M, Behzadipour M, Jalali Nadoushan A, Shariat SV. A pilot randomized controlled trial on the effectiveness of inclusion of a distant learning component into empathy training. Med J Islam Repub Iran. 2014;28:65.

- 83.Ngamruengphong S, Horsley-Silva JL, Hines SL, Pungpapong S, Patel TC, Keaveny AP. Educational intervention in primary care residents’ knowledge and performance of hepatitis B vaccination in patients with diabetes mellitus. South Med J. 2015;108:510–515.

- 84.Nightingale B, Gopalan P, Azzam P, Douaihy A, Conti T. Teaching brief motivational interventions for diabetes to family medicine residents. Fam Med. 2016;48:187–193.

- 85.Ogilvie E, Vlachou A, Edsell M, et al. Simulation-based teaching versus point-of-care teaching for identification of basic transoesophageal echocardiography views: A prospective randomised study. Anaesthesia. 2015;70:330–335.

- 86.O’Sullivan O, Iohom G, O’Donnell BD, Shorten GD. The effect of simulation-based training on initial performance of ultrasound-guided axillary brachial plexus blockade in a clinical setting—A pilot study. BMC Anesthesiol. 2014;14:110.

- 87.Park CS, Stojiljkovic L, Milicic B, Lin BF, Dror IE. Training induces cognitive bias: The case of a simulation-based emergency airway curriculum. Simul Healthc. 2014;9:85–93.

- 88.Prorok JC, Stolee P, Cooke M, McAiney CA, Lee L. Evaluation of a dementia education program for family medicine residents. Can Geriatr J. 2015;18:57–64.

- 89.Ramanathan R, Duane TM, Kaplan BJ, Farquhar D, Kasirajan V, Ferrada P. Using a root cause analysis curriculum for practice-based learning and improvement in general surgery residency. J Surg Educ. 2015;72:e286–e293.

- 90.Ramaswamy R, Williams A, Clark EM, Kelley AS. Communication skills curriculum for foreign medical graduates in an internal medicine residency program. J Am Geriatr Soc. 2014;62:2153–2158.

- 91.Ramsingh D, Alexander B, Le K, Williams W, Canales C, Cannesson M. Comparison of the didactic lecture with the simulation/model approach for the teaching of a novel perioperative ultrasound curriculum to anesthesiology residents. J Clin Anesth. 2014;26:443–454.

- 92.Reed S, Kassis K, Nagel R, Verbeck N, Mahan JD, Shell R. Breaking bad news is a teachable skill in pediatric residents: A feasibility study of an educational intervention. Patient Educ Couns. 2015;98:748–752.

- 93.Richards SE, Shiffermiller JF, Wells AD, et al. A clinical process change and educational intervention to reduce the use of unnecessary preoperative tests. J Grad Med Educ. 2014;6:733–737.

- 94.Rodriguez Vega B, Melero-Llorente J, Bayon Perez C, et al. Impact of mindfulness training on attentional control and anger regulation processes for psychotherapists in training. Psychother Res. 2014;24:202–213.

- 95.Rzouq F, Vennalaganti P, Pakseresht K, et al. In-class didactic versus self-directed teaching of the probe-based confocal laser endomicroscopy (pCLE) criteria for Barrett’s esophagus. Endoscopy. 2016;48:123–127.

- 96.Salib S, Glowacki EM, Chilek LA, Mackert M. Developing a communication curriculum and workshop for an internal medicine residency program. South Med J. 2015;108:320–324.

- 97.Sawatsky AP, Berlacher K, Granieri R. Using an ACTIVE teaching format versus a standard lecture format for increasing resident interaction and knowledge achievement during noon conference: A prospective, controlled study. BMC Med Educ. 2014;14:129.

- 98.Sayeed Y, Marrocco A, Sully K, Werntz C, 3rd, Allen A, Minardi J. Feasibility and implementation of musculoskeletal ultrasound training in occupational medicine residency education. J Occup Environ Med. 2015;57:1347–1352.

- 99.Schram P, Harris SK, Van Hook S, et al. Implementing adolescent screening, brief intervention, and referral to treatment (SBIRT) education in a pediatric residency curriculum. Subst Abus. 2015;36:332–338.

- 100.Semler MW, Keriwala RD, Clune JK, et al. A randomized trial comparing didactics, demonstration, and simulation for teaching teamwork to medical residents. Ann Am Thorac Soc. 2015;12:512–519.

- 101.Shahriari M. Case based teaching at the bed side versus in classroom for undergraduates and residents of pediatrics. J Adv Med Educ Prof. 2014;2:135–136.

- 102.Shariff U, Kullar N, Haray PN, Dorudi S, Balasubramanian SP. Multimedia educational tools for cognitive surgical skill acquisition in open and laparoscopic colorectal surgery: A randomized controlled trial. Colorectal Dis. 2015;17:441–450.

- 103.Shayne M, Culakova E, Milano MT, Dhakal S, Constine LS. The integration of cancer survivorship training in the curriculum of hematology/oncology fellows and radiation oncology residents. J Cancer Surviv. 2014;8:167–172.

- 104.Sherer R, Wan Y, Dong H, et al. Positive impact of integrating histology and physiology teaching at a medical school in China. Adv Physiol Educ. 2014;38:330–338.

- 105.Shershneva M, Kim JH, Kear C, et al. Motivational interviewing workshop in a virtual world: Learning as avatars. Fam Med. 2014;46:251–258.

- 106.Slort W, Blankenstein AH, Schweitzer BP, Deliens L, van der Horst HE. Effectiveness of the “availability, current issues and anticipation” (ACA) training programme for general practice trainees on communication with palliative care patients: A controlled trial. Patient Educ Couns. 2014;95:83–90.

- 107.Smith BW, Slack MB. The effect of cognitive debiasing training among family medicine residents. Diagnosis (Berl). 2015;2:117–121.

- 108.Smith CC, McCormick I, Huang GC. The clinician–educator track: Training internal medicine residents as clinician–educators. Acad Med. 2014;89:888–891.

- 109.Smith ME, Navaratnam A, Jablenska L, Dimitriadis PA, Sharma R. A randomized controlled trial of simulation-based training for ear, nose, and throat emergencies. Laryngoscope. 2015;125:1816–1821.

- 110.Suzuki Y, Kato TA, Sato R, et al. Effectiveness of brief suicide management training programme for medical residents in Japan: A cluster randomized controlled trial. Epidemiol Psychiatr Sci. 2014;23:167–176.

- 111.Swensson J, McMahan L, Rase B, Tahir B. Curricula for teaching MRI safety, and MRI and CT contrast safety to residents: How effective are live lectures and online modules? J Am Coll Radiol. 2015;12:1093–1096.

- 112.Trickey AW, Crosby ME, Singh M, Dort JM. An evidence-based medicine curriculum improves general surgery residents’ standardized test scores in research and statistics. J Grad Med Educ. 2014;6:664–668.

- 113.VanderWielen BA, Harris R, Galgon RE, VanderWielen LM, Schroeder KM. Teaching sonoanatomy to anesthesia faculty and residents: Utility of hands-on gel phantom and instructional video training models. J Clin Anesth. 2015;27:188–194.

- 114.van Onna M, Gorter S, Maiburg B, Waagenaar G, van Tubergen A. Education improves referral of patients suspected of having spondyloarthritis by general practitioners: A study with unannounced standardised patients in daily practice. RMD Open. 2015;1:e000152.

- 115.Wasser TD. How do we keep our residents safe? An educational intervention. Acad Psychiatry. 2015;39:94–98.

- 116.Weiland A, Blankenstein AH, Van Saase JL, et al. Training medical specialists to communicate better with patients with medically unexplained physical symptoms (MUPS). A randomized, controlled trial. PLoS One. 2015;10:e0138342.

- 117.Woodworth GE, Chen EM, Horn JL, Aziz MF. Efficacy of computer-based video and simulation in ultrasound-guided regional anesthesia training. J Clin Anesth. 2014;26:212–221.

- 118.Yang YY, Yang LY, Hsu HC, et al. A model of four hierarchical levels to train Chinese residents’ teaching skills for “practice-based learning and improvement” competency. Postgrad Med. 2015;127:744–751.

- 119.Young OM, Parviainen K. Training obstetrics and gynecology residents to be effective communicators in the era of the 80-hour workweek: A pilot study. BMC Res Notes. 2014;7:455.

- 120.Young TP, Bailey CJ, Guptill M, Thorp AW, Thomas TL. The flipped classroom: A modality for mixed asynchronous and synchronous learning in a residency program. West J Emerg Med. 2014;15:938–944.

- 121.Zeinalizadeh M, Meybodi KT, Maleki F, et al. Short-term didactic lecture course and neurosurgical knowledge of emergency medicine residents. Arch Neurosci. 2015;2(4):e27261.

- 122.Ziganshin BA, Yausheva LM, Pichugin A, Ziganshin A, Sozinov A, Sadigh M. Training young Russian physicians in Uganda—A unique program for introducing global health education in Russia. Ann Glob Health. 2014;80(3):182–183.

- 123.Cook DA, Bordage G, Schmidt HG. Description, justification and clarification: A framework for classifying the purposes of research in medical education. Med Educ. 2008;42:128–133.