Abstract

In the information age, the ability to read and construct data visualizations is becoming as important as the ability to read and write text. However, while standard definitions and theoretical frameworks to teach and assess textual, mathematical, and visual literacy exist, current data visualization literacy (DVL) definitions and frameworks are not comprehensive enough to guide the design of DVL teaching and assessment. This paper introduces a data visualization literacy framework (DVL-FW) that was specifically developed to define, teach, and assess DVL. The holistic DVL-FW promotes both the reading and construction of data visualizations, a pairing analogous to reading and writing in textual literacy and to understanding and applying in mathematical literacy. Specifically, the DVL-FW defines a hierarchical typology of core concepts and details the process steps that are required to extract insights from data. Advancing the state of the art, the DVL-FW interlinks theoretical and procedural knowledge and showcases how both can be combined to design curricula and assessment measures for DVL. Earlier versions of the DVL-FW have been used to teach DVL to more than 8,500 residential and online students, and results from this effort have helped revise and validate the DVL-FW presented here.

Keywords: data visualization, information visualization, literacy, assessment, learning sciences

The invention of the printing press created a mandate for universal textual literacy; the need to manipulate many large numbers created a need for mathematical literacy; and the ubiquity and importance of photography, film, and digital drawing tools posed a need for visual literacy. Analogously, the increasing availability of large datasets, the importance of understanding them, and the utility of data visualizations to inform data-driven decision making pose a need for universal data visualization literacy (DVL). Like other literacies, DVL aims to promote better communication and collaboration, empower users to understand their world, build individual self-efficacy, and improve decision making in businesses and governments.

Pursuit of Universal Literacy

In what follows, we review definitions and assessments of textual, mathematical, and visual literacy and discuss an emerging consensus around the definition and assessment of DVL.

Textual literacy, according to the Organisation for Economic Co-operation and Development’s (OECD’s) Program for International Student Assessment (PISA), is the process of “understanding, using, reflecting on and engaging with written texts, in order to achieve one’s goals, to develop one’s knowledge and potential, and to participate in society” (1). Major tests for textual literacy are issued by PISA (2) and are regularly administered in over 70 countries to measure how effectively they are preparing students to read and write text. Another major international test, Progress in International Reading Literacy Study (PIRLS), has measured reading aptitude for fourth graders every 5 years since 2001. For advanced students, the Graduate Record Examination Subject Tests are widely used to assess verbal reasoning and analytical writing skills for people applying to graduate schools (3, 4). A review of major international education surveys with varying degrees of global coverage and diverse intended age groups can be found in ref. 5.

Mathematical literacy (also referred to as “numeracy”) has been defined as an “understanding of the real number line, time, measurement, and estimation” as well as an “understanding of ratio concepts, notably fractions, proportions, percentages, and probabilities” (6). PISA defines it as “an individual’s capacity to formulate, employ, and interpret mathematics in a variety of contexts,” including “reasoning mathematically and using mathematical concepts, procedures, facts and tools to describe, explain and predict phenomena.” PISA administers standardized tests for math, problem solving, and financial literacy (7). The PISA 2015 Draft Mathematics Framework (8) explains the theoretical underpinnings of the assessment, the formal definition of mathematical literacy, the mathematical processes that students undertake when using mathematical literacy, and the fundamental mathematical capabilities that underlie those processes.

Visual literacy was initially defined as a person’s ability to “discriminate and interpret the visible actions, objects, and symbols natural or man-made, that he encounters in his environment” (9). In 1978, it was defined “as a group of skills which enable an individual to understand and use visuals for intentionally communicating with others” (10). More recently, the Association of College and Research Libraries defined standards, performance indicators, and learning outcomes for visual literacy (11, 12). In the academic setting, Avgerinou (13) developed and validated a visual literacy index by running focus groups of visual literacy experts, and Taylor (14) reviewed visual, media, and information literacy, arguing for the design of a visual language and coining the term “visual information literacy.”

DVL, also called “visualization literacy,” has been defined as “the ability to confidently use a given data visualization to translate questions specified in the data domain into visual queries in the visual domain, as well as interpreting visual patterns in the visual domain as properties in the data domain” (15); “the ability and skill to read and interpret visually represented data in and to extract information from data visualizations” (16); and “the ability to make meaning from and interpret patterns, trends, and correlations in visual representations of data” (17). Other works have sought to advance the assessment of DVL. Boy et al. (15) applied item response theory (IRT) to assess visualization literacy for bar charts and scatterplot graphs using 12 static visualization types as prompts with six tasks (minimum, maximum, variation, intersection, average, comparison). Börner et al. (17) used 20 graph, map, and network visualizations from newspapers, textbooks, and magazines to assess the basic DVL of 273 science museum visitors. Results show that participants had significant limitations in naming and interpreting visualizations and particular difficulties when reading network layouts (18). Maltese et al. (19) showed significant differences between novices and experts when reading and interpreting visualizations commonly found in textbooks and school curricula. Lee et al. (16) developed a visualization literacy assessment test (VLAT) that consists of 12 data visualizations (e.g., line chart, histogram, scatterplot graphs) and 53 multiple-choice test items that cover eight data visualization tasks (e.g., retrieve value, characterize distribution, make comparisons). The VLAT test demonstrated high reliability when administered to 191 individuals.

Investigating how users with no specific training create visualizations from scratch, Huron et al. (20) identified simplicity, expressivity, and dynamicity as the main challenges. In subsequent work, Huron et al. (21) identified 11 different subtasks and a variety of paths that subjects used to navigate to a final visualization; they then grouped these tasks into four categories: load data, build constructs, combine constructs, and correct. Building on this work, Alper et al. (22) developed and tested an interactive visualization creation tool for elementary school children, focusing on the abstraction from individual pictographs to abstract visuals. They observed that touch interactivity, verbal activity, and class dynamics were significant factors in how students used the application. Chevalier et al. (23) observed that, while visualizations are omnipresent in grades K–4, students are rarely taught how to read and create them and argued that visualization creation should be added to the concept of visualization literacy.

Interestingly, not one of the existing approaches explicitly covers the crucial question of how to assess the construction of data visualizations. Furthermore, there is no agreed-upon standardized terminology, typology, or classification system for core DVL concepts. In fact, most experts do not agree on names for some of the most widely used visualizations (e.g., the radar graph is also called a “polygon graph” or “star chart”). Plus, there is little agreement on how to classify visualizations (e.g., what is a chart, graph, or diagram?) or on the general process steps of how to render data into actionable insights. Finally, there is little agreement about how to teach visualization design most effectively.

Given the rather low level of DVL (17) and the high demand for it in the workforce (24), there is an urgent need for basic and applied research that defines and measures DVL and develops effective interventions that measurably increase DVL. In what follows, we present a data visualization literacy framework (DVL-FW) that provides the theoretical underpinnings required to develop teaching exercises and assessments for data visualization construction and interpretation.

DVL-FW

Analogous to the PISA mathematics and literacy frameworks (1, 8), we present here a DVL-FW that covers a typology of core concepts and terminology together with a process model for constructing and interpreting data visualizations. The initial DVL-FW was developed via an extensive review of more than 600 publications documenting 50+ years of work by statisticians, cartographers, cognitive scientists, visualization experts, and others. These publications were selected using a combination of expert surveys and cited reference searches for key publications, such as refs. 25–32. An extended review of prior work and an earlier version of the DVL-FW were presented in Börner’s Atlas of Knowledge: Anyone Can Map (33). The DVL-FW has been applied and systematically revised over more than 10 years of developing exercises and assessments for residential and online courses at Indiana University. More than 8,500 students applied the DVL-FW to solve 100+ real-world client projects; student performance and feedback were used to expand the coverage, internal consistency, utility, and usability of the framework.

In what follows, we present the revised DVL-FW that connects DVL core concepts and process steps. Plus, we showcase how the framework can be used to design exercises and associated assessments. While DVL requires textual, mathematical, and visual literacy skills, the DVL-FW focuses on core DVL concepts and procedural knowledge.

DVL-FW Typology.

Visualizations have been classified by insight needs, types of data to be visualized, data transformations used, visual mapping transformations, or interaction techniques, among others. Over the past five decades, many studies have proposed diverse visualization taxonomies and frameworks (Table 1). Among the most notable are Bertin’s Semiology of Graphics: Diagrams, Networks, Maps (25), Harris’ Information Graphics: A Comprehensive Illustrated Reference (28), Shneiderman’s “The eyes have it: A task by data-type taxonomy for information visualizations” (32), MacEachren’s How Maps Work: Representation, Visualization, and Design (29), Few’s Show Me the Numbers: Designing Tables and Graphs to Enlighten (27), Wilkinson’s The Grammar of Graphics (Statistics and Computing) (31), Cairo’s The Functional Art (26), Interactive Data Visualization: Foundations, Techniques, and Applications by Ward et al. (34), and Munzner’s Visualization Analysis and Design (30). Several of these frameworks have guided subsequent tool development. For example, The Grammar of Graphics (Statistics and Computing) (31) informed the statistical software SPSS (35) and Wickham’s ggplot2 (36).

Table 1.

Typology of the DVL-FW

| Insight needs | Data scales | Analyses | Visualizations | Graphic symbols | Graphic variables | Interactions |
|---|---|---|---|---|---|---|
| Categorize/cluster | Nominal | Statistical | Table | Geometric symbols | Spatial | Zoom |
| Order, rank, sort | Ordinal | Temporal | Chart | Point | Position | Search and locate |
| Distributions (also outliers) | Interval | Geospatial | Graph | Line | Retinal | Filter |
| Comparisons | Ratio | Topical | Map | Area | Form | Details on demand |
| Trends (process and time) | | Relational | Tree | Surface | Color | History |
| Geospatial | | | Network | Volume | Optics | Extract |
| Compositions (also of text) | | | | Linguistic symbols | Motion | Link and brush |
| Correlations/relationships | | | | Text | | Projection |
| | | | | Numerals | | Distortion |
| | | | | Punctuation marks | | |
| | | | | Pictorial symbols | | |
| | | | | Images | | |
| | | | | Icons | | |
| | | | | Statistical glyphs | | |

Table 1 shows the seven core types of the revised DVL-FW theory. The members for each type were derived from an extensive literature review and refined using feedback gained from constructing and interpreting data visualizations for the 100+ client projects in the Information Visualization massive open online course (IVMOOC) discussed in the next section. Subsequently, we will detail each of the seven types.

Insight needs.

Different stakeholders have different insight needs (also called “basic task types”) that must be understood in detail to design effective visualizations for communication and/or exploration. The DVL-FW builds on and extends prior definitions of insight needs and task types. For example, Bertin (25) identifies four task types: selection, order, association (or similarity), and quantity. The graph selection matrix by Few (27) distinguishes ranking, distribution, nominal comparison and deviation, time series, geospatial, part to whole, and correlation. Yau (37) distinguishes patterns over time, proportions, relationships, differences, and spatial relations. Ward et al. (34) propose tasks, such as identify, locate, distinguish, categorize, cluster, rank, compare, associate, and correlate. Munzner (30) identifies three actions (analyze, search, query), listing a variety of tasks, such as discover, annotate, identify, and compare. Fisher and Meyer (38) describe tasks, such as finding and reading values, characterizing distributions, and identifying trends. Table 1, column 1 shows a superset of core types covered by the DVL-FW proposed here. Any alignment of previously proposed needs and tasks will be imperfect, as detailed definitions of terms do not always exist. An extended discussion of additional prior works and their tabular alignment can be found in ref. 33.

Data scales.

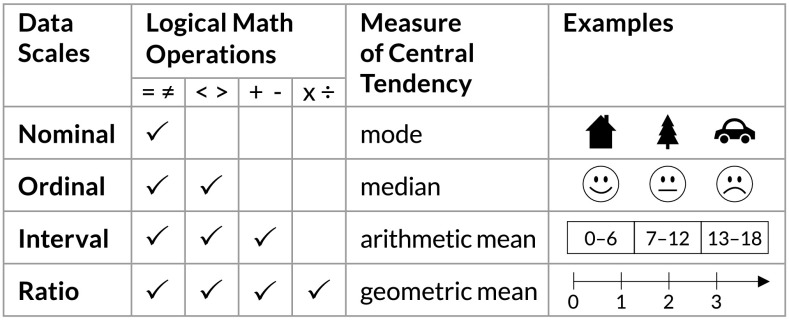

Data variables may have different scales (e.g., qualitative or quantitative), influencing which analyses and visual encodings can be used. Building on the work of Stevens (39), the DVL-FW distinguishes nominal, ordinal, interval, and ratio data based on the type of logical mathematical operations that are permissible (Fig. 1). The approach subsumes Bertin’s (25) three data-scale types: qualitative, ordered, and quantitative—which roughly correspond to nominal, ordinal, and quantitative (also called “numerical”; includes interval and ratio). Bertin’s terminology was later adopted by MacEachren (29) and many other cartographers and information visualization researchers (27, 30, 38). Atlas of Knowledge: Anyone Can Map (33) has a more detailed discussion of different approaches and their interrelations.

Fig. 1.

Logical mathematical operations permissible, measure of central tendency, and examples for different data scale types.

Nominal data (e.g., job type) have no ranking but support equality checks. Ordinal data assume some intrinsic ranking but not at measurable intervals (e.g., chapters in a book). Interval- and ratio-scale data assume that the distance between any two adjacent values is equal. For interval data, the zero point is arbitrary (e.g., Celsius or Fahrenheit temperature scales), while for ratio data, there exists a unique and nonarbitrary zero point (e.g., length or weight). Logical mathematical operations permissible for the different data-scale types are given in Fig. 1.
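The scale distinctions above can be made concrete in a short Python sketch. It assumes the conventional pairing of scales with measures of central tendency (mode for nominal, median for ordinal, mean for interval and ratio data) rather than reproducing Fig. 1 exactly; the `Scale` enum and `central_tendency` function are hypothetical names introduced for illustration.

```python
from enum import Enum
from statistics import mean, median_low, mode


class Scale(Enum):
    """Stevens' four data-scale types."""
    NOMINAL = "nominal"    # equality checks only (=, !=)
    ORDINAL = "ordinal"    # adds order comparisons (<, >)
    INTERVAL = "interval"  # adds differences (+, -); zero point is arbitrary
    RATIO = "ratio"        # adds ratios (*, /); zero point is nonarbitrary


def central_tendency(values, scale):
    """Return a measure of central tendency that the scale permits."""
    if scale is Scale.NOMINAL:
        return mode(values)        # most frequent category
    if scale is Scale.ORDINAL:
        return median_low(values)  # an observed rank, never an average of two ranks
    return mean(values)            # interval and ratio data support averaging


def celsius_to_kelvin(c):
    return c + 273.15


print(central_tendency(["clerk", "nurse", "clerk"], Scale.NOMINAL))  # clerk
print(central_tendency([1, 4, 2, 3], Scale.ORDINAL))                 # 2
# 20 degrees C is not "twice as warm" as 10 degrees C: ratios need a true zero.
print(round(celsius_to_kelvin(20) / celsius_to_kelvin(10), 3))      # 1.035
```

The Celsius example shows why ratio statements are restricted to ratio-scale data: the same two temperatures differ by a factor of 2 on the interval Celsius scale but only by about 3.5% on the ratio Kelvin scale.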

Note that quantitative data can be converted into qualitative data (e.g., one may use thresholds to convert interval data into ordinal data). Ordinal rankings can be converted to yes/no categorical decisions (e.g., to make funding decisions). The reverse is possible as well [e.g., multidimensional scaling (40) converts ordinal into ratio data]; other examples are in ref. 33.
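A minimal sketch of the two conversions mentioned above, assuming hypothetical temperature thresholds and project identifiers: thresholds turn interval data into ordinal categories, and a top-k cut turns an ordinal ranking into yes/no decisions.

```python
from bisect import bisect_right


def to_ordinal(value, thresholds, labels):
    """Bin a quantitative value into ordered categories.

    thresholds must be sorted ascending; labels needs len(thresholds) + 1
    entries. A value equal to a threshold falls into the higher bin.
    """
    if len(labels) != len(thresholds) + 1:
        raise ValueError("need exactly one more label than thresholds")
    return labels[bisect_right(thresholds, value)]


def fund(ranked_ids, slots):
    """Turn an ordinal ranking into binary yes/no decisions (top `slots` funded)."""
    return {pid: rank < slots for rank, pid in enumerate(ranked_ids)}


# Hypothetical cut points for daily temperature in degrees Celsius.
THRESHOLDS = [10, 25]
LABELS = ["cold", "mild", "hot"]

print([to_ordinal(t, THRESHOLDS, LABELS) for t in [3.5, 10, 18.2, 25, 31]])
# ['cold', 'mild', 'mild', 'hot', 'hot']
print(fund(["p3", "p1", "p2"], slots=2))
# {'p3': True, 'p1': True, 'p2': False}
```

Both conversions discard information: once binned, 18.2 and 10 are indistinguishable, which is why the reverse direction needs dedicated techniques such as multidimensional scaling.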

Prior work has used data-scale types as independent variables in user studies (41) with regard to selecting appropriate visualization types (27, 38), tasks (32), and how different types relate to datasets (30, 34). Results provide valuable guidance for the design of visualizations, exercises, and assessments.

Analyses.

Most datasets need to be analyzed before they can be visualized. While focusing on visualization construction and interpretation, the DVL-FW does cover different types of analyses that are commonly used to preprocess, analyze, or model data before they are visualized (Table 1, column 3). Five general types are distinguished: statistical analysis (e.g., to order, rank, or sort); temporal analysis answering “when” questions (e.g., to discover trends); geospatial analysis answering “where” questions (e.g., to identify distributions over space); topical analysis answering “what” questions (e.g., to examine the composition of text); and relational analysis answering “with whom” questions (e.g., to examine relationships; also called network analysis). Algorithms for the different types of analyses come from statistics, geography, linguistics, network science, and other areas of research. The tools used in the IVMOOC (see below) support more than 100 different temporal, geospatial, topical, and network analyses (42).
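To illustrate two of the five analysis types, the following sketch runs a temporal analysis ("when": papers per year) and a relational analysis ("with whom": a weighted co-author network and node degrees) over a small set of hypothetical publication records. It uses only the Python standard library and is not one of the IVMOOC tools.

```python
from collections import Counter
from itertools import combinations

# Hypothetical publication records: (year, list of authors).
papers = [
    (2019, ["Ana", "Ben"]),
    (2020, ["Ana", "Cy"]),
    (2020, ["Ben", "Cy", "Ana"]),
    (2021, ["Dee"]),
]

# Temporal analysis ("when"): number of papers per year, in time order.
per_year = sorted(Counter(year for year, _ in papers).items())

# Relational analysis ("with whom"): weighted co-author edges.
edges = Counter()
for _, authors in papers:
    for a, b in combinations(sorted(set(authors)), 2):
        edges[(a, b)] += 1

# Node degree = number of distinct co-authors (single authors such as
# "Dee" have no edges and therefore do not appear here).
degree = Counter()
for a, b in edges:
    degree[a] += 1
    degree[b] += 1

print(per_year)  # [(2019, 1), (2020, 2), (2021, 1)]
print(dict(edges))
print(dict(degree))
```

The outputs are ready for visualization: `per_year` could feed a line chart (trends), and `edges` could feed a network layout.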

Visualizations.

Any comprehensive and effective DVL-FW must contend with the many existing proposals for visualization naming and classification (33). For example, Harris (28) details hundreds of visualizations and distinguishes tables, charts (e.g., pie charts), graphs (e.g., scatterplots), maps, and diagrams (e.g., block diagrams, networks, Voronoi diagrams). Bertin (25) distinguishes diagrams, maps, and networks. Based on an extensive literature and tool review and with the goal of providing a universal set of visualization types, the DVL-FW identifies six general types: table, chart, graph, map, tree, and network visualizations (Table 1, column 4) (definitions and examples are in ref. 33).

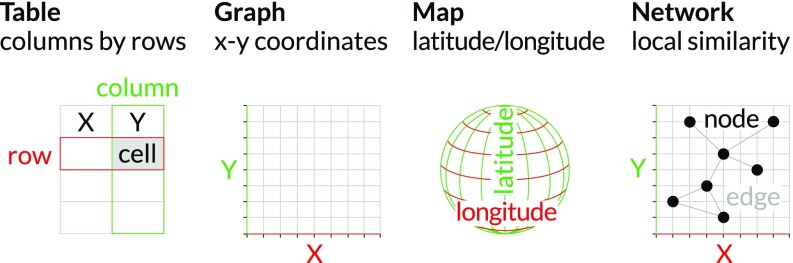

In addition, the DVL-FW distinguishes between the reference system (or base map) and data overlays. Fig. 2 exemplifies typical reference systems for four visualization types. All four support the placement of data records (data records can be connected via linkages); color coding of table cells, graph areas, geospatial areas (e.g., in choropleth maps), or subnetworks; and the design of animations (e.g., changes in the number of data records over time). Some visualizations use a grid reference system (e.g., tables), while others use a continuous reference system (e.g., scatterplot graph or geospatial map). Some visualizations use lookup tables to position data [e.g., lookup tables for US zip codes to latitude/longitude values or journals to the position of scientific disciplines in science maps (43)]. One visualization can be transformed into another. For instance, changing the quantitative axes of a graph into categorical axes results in a table. Similarly, interpolation applied to discrete area geospatial (or topic) maps results in continuous, smooth surface elevation maps.
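The graph-to-table transformation described above can be sketched by binning each quantitative axis into categories and counting the data records that fall into each cell. The function name `graph_to_table` and the cut points are hypothetical choices for illustration.

```python
from bisect import bisect_right
from collections import Counter


def graph_to_table(points, x_edges, y_edges):
    """Turn (x, y) positions on quantitative axes into counts per table cell.

    x_edges / y_edges are ascending cut points; n cut points yield n + 1
    categorical columns/rows, so each point lands in exactly one cell.
    Row 0 holds the lowest y category.
    """
    counts = Counter(
        (bisect_right(y_edges, y), bisect_right(x_edges, x)) for x, y in points
    )
    n_rows, n_cols = len(y_edges) + 1, len(x_edges) + 1
    return [[counts[(r, c)] for c in range(n_cols)] for r in range(n_rows)]


# Five data records positioned in a continuous (graph) reference system.
points = [(0.5, 0.2), (1.5, 0.4), (1.7, 2.2), (2.5, 2.9), (2.6, 1.1)]
for row in graph_to_table(points, x_edges=[1, 2], y_edges=[1, 2]):
    print(row)
# [1, 1, 0]
# [0, 0, 1]
# [0, 1, 1]
```

The resulting grid could then be rendered as a table with color-coded cells, one of the overlay options that table and graph reference systems share.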

Fig. 2.

Typical reference systems used in a 2D table, graph, map, and network visualization. Horizontal dimension is colored red, and vertical is in green to highlight commonalities and differences. Force-directed layout algorithms can be used to assign x–y values to each node in a network based on node similarity.
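The force-directed placement mentioned in the caption can be sketched as a simplified Fruchterman–Reingold layout, one common force-directed algorithm (not necessarily the one used for Fig. 2). Here edges stand in for similarity links; all names and parameter values (`iterations`, `seed`, the initial temperature and cooling rate) are assumptions for illustration.

```python
import math
import random


def force_directed_layout(nodes, edges, iterations=200, seed=42):
    """Assign (x, y) positions so linked nodes sit close and others spread out.

    Simplified Fruchterman-Reingold sketch: every pair of nodes repels,
    every edge attracts, and a shrinking 'temperature' caps each move.
    """
    rng = random.Random(seed)
    pos = {n: [rng.uniform(-1, 1), rng.uniform(-1, 1)] for n in nodes}
    k = 1.0 / math.sqrt(len(nodes))  # ideal distance between linked nodes
    temperature = 0.1
    for _ in range(iterations):
        disp = {n: [0.0, 0.0] for n in nodes}
        # Repulsion between every pair of nodes: force k^2 / d.
        for i, a in enumerate(nodes):
            for b in nodes[i + 1:]:
                dx, dy = pos[a][0] - pos[b][0], pos[a][1] - pos[b][1]
                dist = max(math.hypot(dx, dy), 1e-9)
                force = k * k / dist
                disp[a][0] += dx / dist * force
                disp[a][1] += dy / dist * force
                disp[b][0] -= dx / dist * force
                disp[b][1] -= dy / dist * force
        # Attraction along edges: force d^2 / k, pulling endpoints together.
        for a, b in edges:
            dx, dy = pos[a][0] - pos[b][0], pos[a][1] - pos[b][1]
            dist = max(math.hypot(dx, dy), 1e-9)
            force = dist * dist / k
            disp[a][0] -= dx / dist * force
            disp[a][1] -= dy / dist * force
            disp[b][0] += dx / dist * force
            disp[b][1] += dy / dist * force
        # Move each node along its net displacement, capped by the temperature.
        for n in nodes:
            dx, dy = disp[n]
            length = max(math.hypot(dx, dy), 1e-9)
            step = min(length, temperature)
            pos[n][0] += dx / length * step
            pos[n][1] += dy / length * step
        temperature *= 0.98  # cool down so the layout settles
    return {n: tuple(p) for n, p in pos.items()}


nodes = ["a", "b", "c", "d", "e"]
edges = [("a", "b"), ("b", "c"), ("a", "c"), ("d", "e")]
for node, (x, y) in force_directed_layout(nodes, edges).items():
    print(f"{node}: x={x:.2f}, y={y:.2f}")
```

The pairwise repulsion loop is quadratic in the number of nodes, which is fine for a teaching example; production layouts typically approximate it for large networks.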

Prior research on DVL shows that people have difficulty reading most visualization types, especially networks (17, 18). Controlled laboratory studies examining the recall accuracy of relational data using map and network visualizations have found that map visualizations are easier to read and increase memorability (44).

In psychology and cognitive science, research has aimed to identify the cognitive processes required for reading visualizations, as well as confounding variables. Pinker (45) reviewed general cognitive and perceptual mechanisms to develop a theory of graph comprehension in which “graph schemas” provide template-like information on how to create or read certain visualizations. His “graph difficulty principle” helps explain why some graph-type visualizations are easier or harder to comprehend. Kosslyn et al. (46) demonstrated that the time required to read a visual image increases systematically with the distance between the initial focus point and the target, independent of the “amount of material” between both points. Shah (47) showed that line graphs facilitate the extraction of information about x–y relationships and that bar graphs ease the comparison of graphical elements in close proximity to each other. However, when subjects had to perform computations while reading a visualization, comprehension became more difficult, showing that the interpretation of graphs is “serial and incremental, rather than automatic and holistic” (47). Building on Shah’s (47) study of the iterative nature of graph comprehension, Trickett and Trafton (48) emphasize the importance of spatial processes (e.g., the temporary storage and retrieval of an object’s location in memory, allowing for mental transformations such as creating and transforming a mental image) for graph comprehension. Results from these studies can guide the design of visualization exercises and assessments.

When teaching DVL, a decision must be made about which visualizations should be taught and what subset of core types and process steps should be prioritized. The practical value of a visualization (e.g., determined by the frequency with which different populations, such as journalists, doctors, or high school students, encounter it in work contexts, scholarly papers, news reports, or social media) can guide this decision-making process. Simply put, the more often a visualization is used and the more actionable insights it yields, the more important it becomes to empower individuals to properly construct and interpret it.

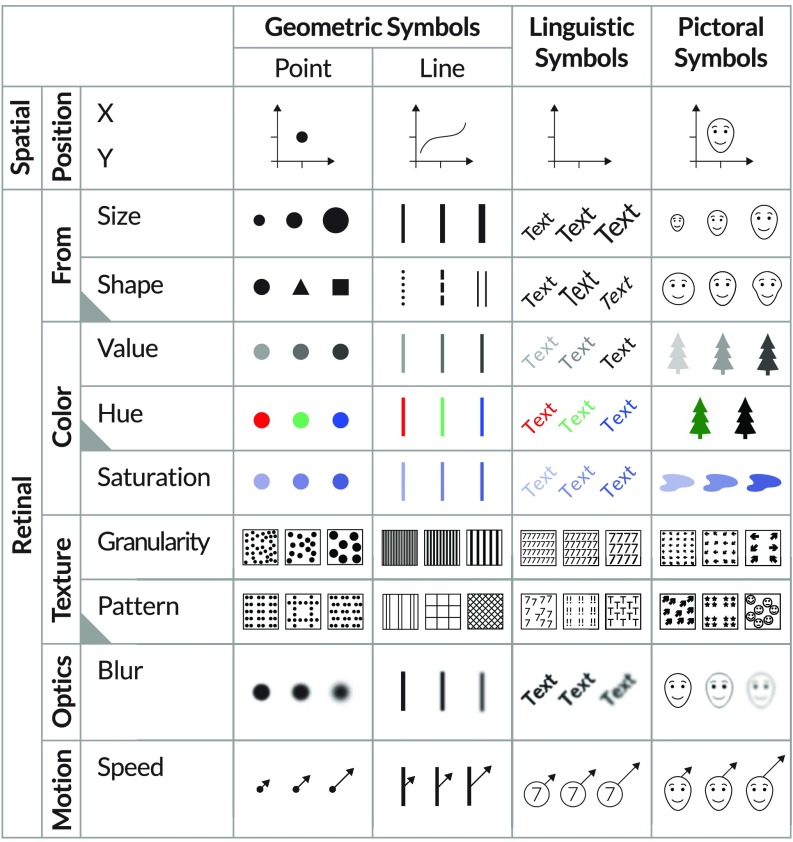

Graphic symbols.

Graphic symbols are essential to data visualization, as they give data records a visual representation. Any comprehensive framework must acknowledge and build on previous efforts to classify and name these symbols. Bertin (25), for instance, calls graphic symbols “visual marks” and proposes three geometric elements: point, line, and area. MacEachren (29) adopts Bertin’s framework to explain how geospatial maps work. Harris (28) distinguishes two classes of symbols, geometric and pictorial, and provides numerous examples. Horn (49) distinguishes three general classes of graphic symbols: shapes, words, and images. Other approaches are discussed in Atlas of Knowledge: Anyone Can Map (33).

The DVL-FW distinguishes three general classes consisting of 11 graphic symbols: geometric (point, line, area, surface, volume), linguistic (text, numerals, and punctuation marks), and pictorial (images, icons, and statistical glyphs). Fig. 3 shows a subset of graphic symbols and graphic variables listed in columns 5 and 6 in Table 1. A four-page table of 11 graphic symbol types vs. 24 graphic variable types can be found in Börner (33). Different graphic symbol types can be combined (e.g., a geometric symbol used to represent a node in a network might have an associated linguistic symbol label).

Fig. 3.

Four graphic symbols and 11 graphic variables from the full set of 11 graphic symbols by 24 graphic variables in ref. 33. Qualitative nominal variables (shape, color hue, and pattern) are marked in gray.

User studies that aim to assess the effectiveness of different graphic symbol types are typically combined with studies on graphic variable types and are discussed subsequently.

Graphic variables.

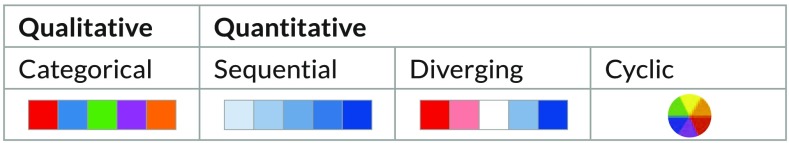

Data records commonly have attribute values that can be represented by so-called graphic variables (e.g., color or size) of graphic symbols. Bertin (25) calls graphic variables “visual variables” and identifies “retinal variables,” such as shape, orientation, color, texture, value, and size. MacEachren’s (29) instantiations (which he calls “implantations”) of different graphic variables for different symbol types include position and Bertin’s retinal variables. Munzner (30) distinguishes “magnitude channels” (e.g., position [1-3D], size [1-3D], color luminance/saturation, and curvature) from “identity channels” (e.g., color hue, shape, and motion). Wilkinson (31) proposes position, form, color, texture, optics, and transparency. The DVL-FW details and exemplifies a superset of these proposed graphic variables organized into spatial (x, y, z position) and retinal variables. Retinal variables are further divided into form (size, shape, rotation, curvature, angle, closure), color (value, hue, saturation), texture (spacing, granularity, pattern, orientation, gradient), optics (blur, transparency, shading, stereoscopic depth), and motion (speed, velocity, and rhythm) and grouped into quantitative and qualitative variables (33). A subset of these 24 variables is given in Fig. 3.

Like qualitative data variables, qualitative graphic variables (e.g., shape or color hue) have no intrinsic ordering. In contrast, quantitative graphic variables (e.g., size or color intensity) can have different ordering directions, such as sequential, diverging, or cyclic (30, 50); examples are given in Fig. 4. Quantitative graphic variables, such as motion, can be binned to encode qualitative data variables [e.g., binary yes/no motion can be used to encode binary data values as proposed by Munzner (30)].
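The ordering directions above can be illustrated with a small Python sketch of sequential, diverging, and qualitative color mappings. The endpoint colors and palette are illustrative RGB choices, not values taken from ColorBrewer, and interpolation is done naively in RGB space (perceptually uniform color spaces are usually preferred in practice).

```python
def lerp_rgb(c0, c1, t):
    """Linearly interpolate between two RGB triples (0-255) for t in [0, 1]."""
    return tuple(round(a + (b - a) * t) for a, b in zip(c0, c1))


def clamp01(t):
    return min(max(t, 0.0), 1.0)


def sequential(value, vmin, vmax, low=(255, 255, 255), high=(0, 0, 139)):
    """Light-to-dark ramp: larger values get darker colors (one direction)."""
    return lerp_rgb(low, high, clamp01((value - vmin) / (vmax - vmin)))


def diverging(value, vmin, vmid, vmax,
              neg=(178, 24, 43), mid=(247, 247, 247), pos=(33, 102, 172)):
    """Two ramps meeting at a neutral midpoint (e.g., zero change)."""
    if value < vmid:
        return lerp_rgb(neg, mid, clamp01((value - vmin) / (vmid - vmin)))
    return lerp_rgb(mid, pos, clamp01((value - vmid) / (vmax - vmid)))


def qualitative(categories, palette):
    """Distinct hues for unordered categories; no hue implies an order."""
    if len(categories) > len(palette):
        raise ValueError("not enough distinguishable hues for these categories")
    return dict(zip(categories, palette))


print(sequential(0, 0, 100))     # (255, 255, 255): lightest
print(sequential(100, 0, 100))   # (0, 0, 139): darkest
print(diverging(0, -5, 0, 5))    # (247, 247, 247): neutral midpoint
print(qualitative(["maps", "networks"], [(27, 158, 119), (217, 95, 2)]))
```

Cyclic schemes (e.g., for hour of day or wind direction) would additionally wrap the ramp so that the highest value returns to the starting color.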

Fig. 4.

Exemplary color schemes for qualitative and quantitative data variables using colors from ColorBrewer (50).

Laboratory studies by Cleveland and McGill (51) and crowdsourced studies using Amazon Mechanical Turk by Heer and Bostock (52) quantify how accurately humans can perceive different graphic variables. Both studies show that position encoding has the highest accuracy, followed by length (“size” in the DVL-FW), angle and rotation, and then area. When area encodings were examined more closely, rectangular and circular area encodings yielded the lowest accuracy, which helps explain why visualizations such as bubble charts and tree maps are harder to read.
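Accuracy in these studies is commonly scored with the log absolute error, log2(|judged percent − true percent| + 1/8), where the 1/8 term keeps perfect judgments finite. The sketch below applies that measure to hypothetical judgments; the numbers are invented for illustration and are not data from either study.

```python
import math
from statistics import mean


def log_abs_error(judged_percent, true_percent):
    """log2(|judged - true| + 1/8); lower values mean more accurate judgments."""
    return math.log2(abs(judged_percent - true_percent) + 1 / 8)


# Hypothetical judgments of three true proportions (in percent); not study data.
true_values = [20, 45, 70]
judged = {
    "position": [21, 44, 70],
    "area": [28, 35, 60],
}

scores = {
    encoding: mean(log_abs_error(j, t) for j, t in zip(guesses, true_values))
    for encoding, guesses in judged.items()
}
print(scores)  # the position encoding gets the lower (better) mean error
```

A perfect judgment scores log2(1/8) = −3, so the scale is bounded below while large errors grow only logarithmically.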

Kim and Heer (41) conducted a Mechanical Turk study with 1,920 subjects using US daily weather measurements to determine what combination of qualitative and quantitative data-scale types plus visual encodings leads to the best performance on key literacy tasks (e.g., read value, find maximum, compare values, and compare averages). Results from these studies help guide the design and interpretation of visualizations.

Different graphic variable types can be combined (e.g., a node in a network may be coded by size and color as shown in a proportional symbol map). Szafir et al. (53) investigated what graphic symbol and graphic variable types (position, size, orientation, color, and luminance) together support what visual insight needs (e.g., the identification of outliers, trends, or clustering as shown in Fig. 5). These findings make it possible to order graphic variables by effectiveness and guide the selection and combination of variables when constructing data visualizations.
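An effectiveness ordering can drive the selection and combination of graphic variables. The sketch below greedily assigns each data variable, in priority order, the best graphic variable not yet in use. The quantitative ranking loosely follows the position > length > angle > area result cited above; the nominal ranking and the function name `assign_encodings` are assumptions for this example, and real recommenders weigh many more factors.

```python
# Illustrative effectiveness rankings, from most to least accurate.
RANKINGS = {
    "quantitative": ["x position", "y position", "length", "angle", "area", "color value"],
    "nominal": ["x position", "y position", "color hue", "shape"],
}


def assign_encodings(data_vars):
    """Greedily map each data variable to the best still-unused graphic variable.

    data_vars: list of (name, kind) pairs in priority order; kind is a key
    of RANKINGS (i.e., the data scale type of the variable).
    """
    used = set()
    mapping = {}
    for name, kind in data_vars:
        for channel in RANKINGS[kind]:
            if channel not in used:
                mapping[name] = channel
                used.add(channel)
                break
        else:  # loop finished without a free channel
            raise ValueError(f"no unused graphic variable left for {name!r}")
    return mapping


print(assign_encodings([
    ("gdp", "quantitative"),
    ("life_expectancy", "quantitative"),
    ("population", "quantitative"),
    ("continent", "nominal"),
]))
# {'gdp': 'x position', 'life_expectancy': 'y position',
#  'population': 'length', 'continent': 'color hue'}
```

Because the greedy pass takes the best channel first, the order of `data_vars` encodes which variables the designer considers most important.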

Fig. 5.

Geometric symbol (circle) encoding using different graphic variable types in support of outlier, trend, and cluster identification as inspired by Szafir et al. (53).

Interactions.

The DVL-FW recognizes that, while some visualizations are static (e.g., printed on paper), many can be manipulated dynamically using diverse types of interaction. Shneiderman (32) identifies overview, zoom, filter, details on demand, relate (viewing relationships among items), history (keeping a log of actions to support undo, replay, and progressive refinement), and extract (access subcollections and query parameters). Keim (54) distinguishes zoom, filter, and link and brush as well as projection and distortion techniques as a means to provide focus and context. The typology proposed by Brehmer and Munzner (55) covers two main abstract visualization tasks. The first is “why,” which includes consume (present, discover, enjoy, produce), search (lookup, browse, locate, explore), and query (identify, compare, summarize). The second is “how,” which consists of encode, manipulate (select, navigate, arrange, change, filter, aggregate), and introduce (annotate, import, derive, record). Heer and Shneiderman (56) focus on the flexible and iterative use of visualizations by naming 12 actions ordered into three high-level categories: data and view specification (visualize, filter, sort, derive), view manipulation (select, manage, coordinate, organize), and process and provenance (record, annotate, share, guide). As before, the DVL-FW covers core interaction types, including zoom, search and locate, filter, details on demand, history, extract, link and brush, projection, and distortion (Table 1, column 7).
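Several of the interaction types listed above can be modeled as operations on a view state. The sketch below covers filter, details on demand, history (with undo), and extract; the class `InteractiveView` and its methods are hypothetical and not taken from any of the cited systems.

```python
class InteractiveView:
    """Hypothetical view model supporting filter, details on demand, history, extract."""

    def __init__(self, records):
        self.records = records  # list of dicts, each with a unique "id"
        self.filters = []       # (label, predicate) pairs currently applied
        self.history = []       # action log supporting undo and replay

    def visible(self):
        """Records that pass every active filter."""
        return [r for r in self.records if all(p(r) for _, p in self.filters)]

    def filter(self, label, predicate):
        self.filters.append((label, predicate))
        self.history.append(("filter", label))

    def undo(self):
        """Revert the most recent filter, if any, and log the undo."""
        if self.filters:
            label, _ = self.filters.pop()
            self.history.append(("undo", label))

    def details(self, record_id):
        """Details on demand: the full record behind one mark (e.g., on hover)."""
        return next(r for r in self.records if r["id"] == record_id)

    def extract(self):
        """Extract: the current subcollection plus the query that produced it."""
        return [dict(r) for r in self.visible()], [label for label, _ in self.filters]


view = InteractiveView([
    {"id": 1, "year": 2018, "topic": "maps"},
    {"id": 2, "year": 2020, "topic": "networks"},
    {"id": 3, "year": 2021, "topic": "maps"},
])
view.filter("year >= 2020", lambda r: r["year"] >= 2020)
view.filter("topic == maps", lambda r: r["topic"] == "maps")
print([r["id"] for r in view.visible()])  # [3]
view.undo()
print([r["id"] for r in view.visible()])  # [2, 3]
print(view.details(2))
print(view.history)
```

Keeping filters as a stack makes undo trivial; replay or progressive refinement would re-apply the logged actions in order.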

DVL-FW Process Model.

Human sense making in general, and particularly sense making of data and data visualizations, has been studied extensively. Pirolli and Card (57) used cognitive task analysis to develop a notational model of sense making for intelligence analysts. It consists of a foraging loop (seeking information, searching and filtering it, and reading and extracting information) and a sense-making loop (iterative development of a mental model that best fits the evidence). Klein et al. (58) proposed a data/frame theory of how domain practitioners make decisions in complex real-world contexts. Lee et al. (59) observed novice users examining unfamiliar visualizations and identified five major cognitive activities: encountering visualization, constructing a frame, exploring visualization, questioning the frame, and floundering on visualization. Pioneering work by Mackinlay (60) aimed to automate the design of effective graph-type visualizations. Huron et al. (21) published a flow diagram showing common paths when constructing graphs; it features four main tasks (load data, build constructs, combine constructs, and correct) and several mental and physical subtasks. Grammel et al. (61) conducted exploratory laboratory studies to identify three activities central to the interactive visualization construction process: data variable selection, visual template selection, and visual mapping specification (i.e., assigning graphic symbol types and variables to data variables). “Voyager: Exploratory analysis via faceted browsing of visualization recommendations” by Wongsuphasawat et al. (62) presents a recommendation engine that suggests diverse visualization options to help users pick different data variable subsets, data transformations (e.g., aggregation and binning), and visual data encodings for graph-type visualizations using the Vega-Lite visualization specification language (63). The system also ranks results by perceptual effectiveness score and prunes visually similar results.

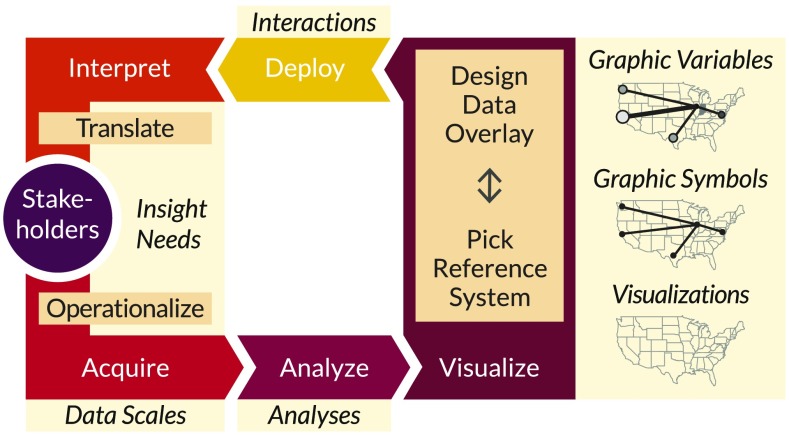

Building on this prior work and extending Börner (33), we identify key process steps involved in data visualization construction and interpretation (Fig. 6) and interlink process steps with the DVL-FW typology (Table 1). Subsequently, we discuss the important role of stakeholders and detail five process steps and their interrelation with the typology.

Fig. 6.

Process of data visualization construction and interpretation with major steps in white letters. Types identified in Table 1 are given in italics, and an exemplary US reference system and sample data overlays are given on the right.

Stakeholders.

The data visualization process (also called “workflow”) starts with the identification of stakeholders and their insight needs (Table 1, column 1). Just as a verbal math problem needs to be reformulated into a numerical math problem, the verbal or textual description of a real-world problem presented by a stakeholder must be operationalized (i.e., reformulated into a data visualization problem so that appropriate datasets, analysis and visualization workflows, and deployment options can be identified). Math assessment frameworks allocate up to one-half of the overall problem-solving effort to the translation of verbal into numerical problems; analogously, major effort is required to translate real-world problems into well-defined insight needs.

Acquire.

Given well-defined insight needs, relevant datasets and other resources can be acquired. Data quality and coverage strongly impact the quality of results, so great care must be taken to acquire the best available datasets, with data scales that support subsequent analysis and visualization.

Analyze.

Typically, data need to be preprocessed before they can be visualized. This step can include data cleaning (e.g., identifying and correcting errors, deduplicating records, and handling missing data, anomalies, and unusual distributions); data transformations (e.g., aggregation, geocoding, and network extraction); and statistical, temporal, geospatial, topical, or relational network analyses (Table 1, column 3).
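A few of the cleaning and transformation operations listed above can be sketched in plain Python. The function below is a hypothetical illustration, not part of the DVL-FW or of any tool named in this paper; it assumes records arrive as dictionaries and that dropping incomplete records is acceptable, which is only one of several ways to handle missing data (imputation is another).

```python
from collections import defaultdict


def clean_and_aggregate(rows, key, value):
    """Deduplicate records, drop records with missing data, normalize the
    grouping key, and aggregate (sum) `value` per key."""
    seen = set()
    totals = defaultdict(float)
    for row in rows:
        fingerprint = tuple(sorted(row.items()))
        if fingerprint in seen:
            continue  # exact duplicate record: keep only the first copy
        seen.add(fingerprint)
        group, amount = row.get(key), row.get(value)
        if group is None or amount is None:
            continue  # missing data: dropped here; imputation is an alternative
        totals[group.strip().title()] += float(amount)  # " indiana" -> "Indiana"
    return dict(totals)


# Hypothetical grant records with a duplicate, a spelling variant, and a gap.
rows = [
    {"id": 1, "state": "Indiana", "grants": 3},
    {"id": 1, "state": "Indiana", "grants": 3},
    {"id": 2, "state": " indiana", "grants": 2},
    {"id": 3, "state": "Ohio", "grants": None},
    {"id": 4, "state": "Ohio", "grants": 4},
]
print(clean_and_aggregate(rows, key="state", value="grants"))
# {'Indiana': 5.0, 'Ohio': 4.0}
```

Each decision in the function (what counts as a duplicate, how spelling variants are merged, what happens to gaps) changes the aggregated totals, which is why this step deserves explicit attention before any visualization is drawn.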

Visualize.

This step can be split into two main activities: picking a reference system (or base map) and designing the data overlay. The first activity is associated with selecting a visualization type, and the second with mapping data records and variables to graphic symbols and graphic variables (e.g., position and retinal variables). For example, Fig. 6 shows a US map reference system with graphic symbols (circles and lines) that are first positioned and then coded by both size and color.
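The two activities can be sketched separately in code. In the minimal example below, `to_canvas` stands in for the reference system and `circle_overlay` for the data overlay, which positions each circle and then codes it by size and color hue. The linear longitude/latitude mapping, the bounding box, the hex colors, and the records are all illustrative assumptions; a production base map would use a proper cartographic projection.

```python
import math

# Assumed bounding box (min_lon, min_lat, max_lon, max_lat), roughly the
# contiguous United States; a real base map would use a proper projection.
US_BBOX = (-125.0, 24.0, -66.0, 50.0)


def to_canvas(lon, lat, width=960.0, height=500.0, bbox=US_BBOX):
    """Reference system: linearly map lon/lat onto canvas pixels."""
    min_lon, min_lat, max_lon, max_lat = bbox
    x = (lon - min_lon) / (max_lon - min_lon) * width
    y = (max_lat - lat) / (max_lat - min_lat) * height  # screen y grows downward
    return x, y


def circle_overlay(records, max_value, max_radius=20.0, hues=None):
    """Data overlay: one circle per record, positioned first, then coded
    by size (area proportional to value) and by color hue (category)."""
    hues = hues or {"A": "#1b9e77", "B": "#d95f02"}  # illustrative colors
    symbols = []
    for r in records:
        x, y = to_canvas(r["lon"], r["lat"])
        radius = max_radius * math.sqrt(r["value"] / max_value)
        symbols.append({"symbol": "circle", "x": x, "y": y,
                        "r": radius, "fill": hues[r["category"]]})
    return symbols


# Hypothetical records (approximate city coordinates).
records = [
    {"lon": -86.5, "lat": 39.2, "value": 100, "category": "A"},
    {"lon": -87.6, "lat": 41.9, "value": 25, "category": "B"},
]
for s in circle_overlay(records, max_value=100):
    print(s)
```

Scaling the radius by the square root of the value keeps circle area, rather than radius, proportional to the data, a common convention for size-coded symbols.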

Deploy.

Different deployments (e.g., a printout on paper or in 3D; an interactive display on a handheld device, a large tiled wall, or a virtual reality headset) support different types of interactions via different user interfaces and interaction metaphors (e.g., zooming might be achieved by walking toward a large-format map, pinching on a touch panel, or moving one’s body in a virtual reality setup). Different interface controls make diverse interactions possible: buttons, menus, and tabs support selection; sliders and zoom controls let users filter by time, region, or topic; hovering and double clicking help users retrieve details on demand; and multiple coordinated windows are connected via linking and brushing.
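The interactions named above can be modeled independently of any particular display technology. The sketch below is a hypothetical, toolkit-free model (the class and method names are illustrative assumptions): a time filter stands in for a slider, a lookup for details on demand, and a shared selection for linking and brushing across coordinated views.

```python
from dataclasses import dataclass, field


@dataclass
class View:
    """One window in a multiple-coordinated-view display."""
    name: str
    highlighted: set = field(default_factory=set)


class CoordinatedViews:
    """Hypothetical model of three interactions named in the text."""

    def __init__(self, records, views):
        self.records = {r["id"]: r for r in records}
        self.views = views

    def filter_by_time(self, start, end):
        """Slider: keep only records whose year lies in [start, end]."""
        return [r for r in self.records.values() if start <= r["year"] <= end]

    def details_on_demand(self, record_id):
        """Hover or double click: return the full record."""
        return self.records[record_id]

    def brush(self, predicate):
        """Link and brush: a selection made in one view is highlighted in all views."""
        selected = {rid for rid, r in self.records.items() if predicate(r)}
        for view in self.views:
            view.highlighted = set(selected)
        return selected


records = [
    {"id": "a", "year": 2013, "region": "Midwest"},
    {"id": "b", "year": 2016, "region": "West"},
    {"id": "c", "year": 2018, "region": "Midwest"},
]
app = CoordinatedViews(records, [View("map"), View("timeline")])
print(app.filter_by_time(2015, 2018))
print(app.brush(lambda r: r["region"] == "Midwest"))
```

Keeping the interaction logic separate from rendering is one way to reuse the same analysis across a printout, a touch panel, or a tiled wall, where only the input metaphor differs.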

Interpret.

Finally, the visualization is read and interpreted by its author(s) and/or stakeholder(s). This process includes translating the visualization results into insights and stories that make a difference in the real-world application.

Frequently, the entire process is cyclical (e.g., a first look at the data prompts a discussion of adding more or alternative datasets, running different analysis and visualization workflows, or using different data mappings or even deployment options). In some cases, a better understanding of existing data leads to novel questions, new datasets, and better algorithms. Sometimes, a first analysis of the data results in the acquisition of new or different data, a first visualization leads to choosing a different analysis algorithm, or a first deployment reveals that a different visualization is easier to read or interact with.

Exercises and Assessments.

Given the core DVL-FW typology in Table 1 and the associated process in Fig. 6, it becomes possible to design effective interventions that measurably improve DVL. This section presents selected exercises that facilitate learning and DVL assessment. Additional theoretical lectures and hands-on exercises can be accessed online via the IVMOOC (see below). Textual literacy (e.g., proper spelling of titles, axis labels, etc.), math literacy (e.g., measurement, estimation, percentages, correlations, and probabilities), and visual literacy (e.g., composition of a visualization, color theory) have standardized tests and are not covered here.

DVL-FW typology.

Factual knowledge of the core types in Table 1 can be taught and assessed with short-answer, multiple-choice, fill-in-the-blank, or matching tasks that ask students to pick the correct set of DVL-FW types. For example, different types can be trained and assessed as follows.

Insight needs.

Given a verbal description of a real-world problem or a recording of a stakeholder explaining a need, identify and operationalize insight need(s) in short-answer responses.

Data scales and analysis.

Given a data table, identify the scale of data in each column and suggest analyses that could be run to meet a given set of insight needs. Then, check for missing or erroneous data, disambiguate text, geocode addresses, and sample or aggregate data as needed to run certain visualization workflows. Finally, decide which data variables are control, independent, or dependent variables.
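The first part of this exercise lends itself to automatic grading. The sketch below assumes the four scales of measurement of Stevens (39); the lists of summary statistics follow a common textbook reading of Stevens and are illustrative, not a DVL-FW specification. The function names, column names, and student answers are hypothetical.

```python
# Scales of measurement after Stevens (39), ordered from weakest to strongest.
SCALES = ["nominal", "ordinal", "interval", "ratio"]

# Summary statistics commonly listed as becoming permissible at each scale;
# each scale also inherits everything permissible at weaker scales.
NEW_AT_SCALE = {
    "nominal": ["frequency counts", "mode"],
    "ordinal": ["median", "percentiles"],
    "interval": ["mean", "standard deviation"],
    "ratio": ["coefficient of variation"],
}


def permissible(scale):
    """Return all summary statistics permissible for a column of this scale."""
    stats = []
    for s in SCALES[: SCALES.index(scale) + 1]:
        stats.extend(NEW_AT_SCALE[s])
    return stats


def grade_scales(answer_key, responses):
    """Compare a student's scale labels with the key and suggest analyses."""
    return {
        column: {
            "correct": responses.get(column) == scale,
            "suggested": permissible(scale),
        }
        for column, scale in answer_key.items()
    }


# Hypothetical data table columns and one student's answers.
key = {"country": "nominal", "rank": "ordinal",
       "temperature_c": "interval", "funding_usd": "ratio"}
answers = {"country": "nominal", "rank": "ordinal",
           "temperature_c": "ratio", "funding_usd": "ratio"}
report = grade_scales(key, answers)
print({c: r["correct"] for c, r in report.items()})
# {'country': True, 'rank': True, 'temperature_c': False, 'funding_usd': True}
```

Temperature in degrees Celsius is a classic interval-scale trap: it has no true zero, so ratios such as "twice as warm" are not meaningful, and a student who labels it ratio would be nudged toward inappropriate analyses.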

Visualizations.

Given a visualization, correctly name and classify it by type (graph, map, etc.). For a greater challenge, have students take analysis results and visualize them to satisfy a set of insight needs. While there might not always be a single best visualization solution, the DVL-FW provides guidance for visualization construction and assessment.

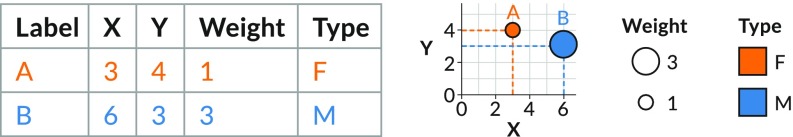

Graphic symbols and variables.

Knowledge of graphic symbols and graphic variables can be evaluated using matching problems that require students either to deconstruct a visualization’s graphic symbols and variables into a data table (an example is in Fig. 7) or to construct a visualization from a dataset by matching data types and scales to the graphic symbol types used in a visualization. These exercises can vary in complexity depending on the number of data variables and visualization types.

Fig. 7.

Data table with two data records (Left) and a scatterplot graph of the data showing the correct spatial position of both records and encoding weight by size and type by color hue (Right).
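Construction and deconstruction are inverses of each other, which makes such matching exercises easy to check automatically. The sketch below assumes linear position scales, circle area proportional to weight, and a fixed type-to-hue lookup; the axis domains, the area constant, the hue names, and the two records are hypothetical stand-ins, not the values shown in Fig. 7.

```python
# Hypothetical axis domains and canvas size for a scatterplot.
X_DOMAIN, Y_DOMAIN, SIZE_PX = (0.0, 10.0), (0.0, 10.0), 400.0
AREA_PER_UNIT = 50.0                   # circle area (px^2) per unit of weight
HUES = {"A": "orange", "B": "purple"}  # type -> color hue


def linear(value, domain, size):
    """Map a data value onto pixels (y measured upward from the x axis)."""
    lo, hi = domain
    return (value - lo) / (hi - lo) * size


def inverse_linear(px, domain, size):
    """Map pixels back onto a data value."""
    lo, hi = domain
    return lo + px / size * (hi - lo)


def encode(record):
    """Construction: data record -> graphic symbol with graphic variables."""
    return {"x_px": linear(record["x"], X_DOMAIN, SIZE_PX),
            "y_px": linear(record["y"], Y_DOMAIN, SIZE_PX),
            "area": AREA_PER_UNIT * record["weight"],
            "hue": HUES[record["type"]]}


def decode(symbol):
    """Deconstruction: graphic symbol -> data record."""
    type_for_hue = {hue: t for t, hue in HUES.items()}
    return {"x": inverse_linear(symbol["x_px"], X_DOMAIN, SIZE_PX),
            "y": inverse_linear(symbol["y_px"], Y_DOMAIN, SIZE_PX),
            "weight": symbol["area"] / AREA_PER_UNIT,
            "type": type_for_hue[symbol["hue"]]}


# Two hypothetical data records, in the spirit of Fig. 7.
table = [
    {"x": 2.0, "y": 3.0, "weight": 4.0, "type": "A"},
    {"x": 7.5, "y": 6.0, "weight": 1.0, "type": "B"},
]
symbols = [encode(r) for r in table]
recovered = [decode(s) for s in symbols]
print(symbols)
print(recovered)
```

A student's hand-written deconstruction can be compared with `decode(...)` output in the same way, with a small tolerance for positions read off the axes.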

DVL-FW process model.

General steps in the design of data visualizations (Fig. 6) may be evaluated using fill-in-the-blank and matching exercises that assess overall knowledge of the iterative process.

Construction.

Hands-on homework assignments can assess students’ ability to create and evaluate visualizations. Students are provided with insight needs, data, analysis and visualization algorithms, and rubrics that scaffold visualization construction, interpretation, and assessment. The rubrics cover the completeness and accuracy of the visualization, whether the results meet the insight needs, and whether reported insights are supported by the data. In peer review, students use these rubrics to evaluate a peer’s submission; this also provides a means of training and evaluating a reviewer’s ability to use the DVL-FW to assess a visualization accurately.
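Rubric-based peer review produces numbers that can be summarized per submission and per reviewer. The sketch below is one possible approach under stated assumptions: the three criteria paraphrase the rubric described above, while the 0 to 3 scale, the use of the median across peers, and the mean absolute deviation from an instructor's reference review are illustrative choices, not the IVMOOC's documented procedure.

```python
from statistics import mean, median

# Rubric criteria paraphrased from the text; the 0-3 scale is an assumption.
CRITERIA = ("complete_and_accurate", "meets_insight_needs", "insights_supported")


def aggregate_peer_scores(reviews):
    """Median score per criterion across peer reviews of one submission."""
    return {c: median(r[c] for r in reviews) for c in CRITERIA}


def reviewer_accuracy(review, reference):
    """Mean absolute deviation from an instructor's reference review;
    lower values indicate a more accurate reviewer."""
    return mean(abs(review[c] - reference[c]) for c in CRITERIA)


# Hypothetical reviews of one submission by three peers.
reviews = [
    {"complete_and_accurate": 3, "meets_insight_needs": 2, "insights_supported": 2},
    {"complete_and_accurate": 2, "meets_insight_needs": 2, "insights_supported": 1},
    {"complete_and_accurate": 3, "meets_insight_needs": 3, "insights_supported": 2},
]
instructor = {"complete_and_accurate": 3, "meets_insight_needs": 2, "insights_supported": 2}

print(aggregate_peer_scores(reviews))
for i, r in enumerate(reviews):
    print(i, reviewer_accuracy(r, instructor))
```

The median is less sensitive than the mean to a single unusually harsh or lenient reviewer, which matters when only a handful of peers rate each submission.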

Interpretation.

DVL assessments systematically evaluate students’ ability to interpret visualizations that address different insight needs. By using standardized visualizations with known interaction types, we can both quantify students’ ability to interpret visualizations across tasks and DVL-FW types and identify different kinds of misinterpretations. Administered before and after instruction (pre- and posttests), the assessments can determine changes in students’ interpretation ability.
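Pre/post comparisons are often reported both as a raw change and as a normalized gain. The sketch below uses Hake-style normalized gain, g = (post - pre) / (max - pre), as one common choice; the paper does not specify this measure, and the scores, the maximum score of 20, and the per-student averaging convention are assumptions for illustration.

```python
from statistics import mean


def normalized_gain(pre, post, max_score):
    """Hake-style normalized gain: the fraction of the possible improvement
    that was actually realized, (post - pre) / (max_score - pre)."""
    if pre >= max_score:
        return None  # no room to improve; gain is undefined
    return (post - pre) / (max_score - pre)


def summarize(pre_scores, post_scores, max_score):
    """Summarize paired pre/post scores; gains are computed per student and
    then averaged, which is one of several conventions."""
    pairs = list(zip(pre_scores, post_scores))
    gains = [g for g in (normalized_gain(a, b, max_score) for a, b in pairs)
             if g is not None]
    return {
        "mean_pre": mean(pre_scores),
        "mean_post": mean(post_scores),
        "mean_change": mean(b - a for a, b in pairs),
        "mean_normalized_gain": mean(gains) if gains else None,
    }


# Hypothetical scores (out of 20) for three students.
print(summarize([8, 12, 20], [14, 16, 20], max_score=20))
```

Normalized gain makes students with different starting points comparable: moving from 8 to 14 and from 12 to 16 both close half of the remaining gap, even though the raw changes differ.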

Going forward, a close collaboration between researchers and educators is desirable to design exercises and assessments for use in different learning environments that leverage active learning, social learning, scaffolding, and horizontal transfer as suggested by Chevalier et al. (23).

DVL-FW Usage in IVMOOC

Over the last 15 years, earlier versions of the DVL-FW typology, process model, exercises, and assessments presented here have been implemented in the Information Visualization course at Indiana University, providing first evidence that the framework can be used to teach and assess DVL. Data on student engagement, performance, and feedback guided the continuous improvement of the DVL-FW. Since 2013, the DVL-FW has been taught in an online course called IVMOOC (https://ivmooc.cns.iu.edu/). More than 8,500 students registered and—as is typical for MOOCs—about 10% of those students completed the course. IVMOOC students’ online activity is captured in extensive detail, providing unique opportunities to validate and further improve the DVL-FW.

Learning Objectives.

In the IVMOOC, the main learning objective is mastery of the typology, process, and exercises defined in the DVL-FW. A special focus is on the construction of data visualizations (i.e., given an insight need and dataset, pick a valid data analysis and visualization workflow that renders data into insights; improve workflow preparation and parameter selection for statistical, temporal, geospatial, topical, and network analyses and visualizations; interpret results and add title, legend, and descriptive text; and document the workflow so that it can be replicated by others).

Customization of exercises is possible and desirable, as there are at least four critical factors that affect the comprehension of graphs: purpose for usage, task characteristics, discipline characteristics, and reader characteristics (64). Interventions can be tailored to fit different types of learners (e.g., theory or hands-on first) and different levels of expertise. Instructors might like to introduce students to the real-world stakeholder needs and datasets that are particularly relevant for a subject area (e.g., social networks in sociology; food webs in biology).

Instructional Strategies and Tools.

The IVMOOC uses a combination of lectures and quizzes, hands-on exercises and homework, real-world client projects, and examinations to increase and assess students’ DVL. The DVL-FW is introduced in the first week and used to structure the initial 7 weeks of the course, which include theory and hands-on lectures and exercises. Furthermore, the DVL-FW dictates the menu system of the Sci2 Tool (65) that provides easy access to 180+ analysis and visualization algorithms and organizes the 50 visualizations featured in the IVMOOC flashcard app. In the last 7 weeks of the course, students collaborate on real-world client projects that ask students to implement the full DVL-FW process—from stakeholder interviews to identify insight needs, data acquisition, analysis, visualization, and deployment to interpretation. Sample client projects are documented in the textbook Visual Insights: A Practical Guide to Making Sense of Data (42).

Assessments.

IVMOOC quizzes, homework, examinations, and peer reviews assess students’ knowledge and application of the DVL-FW typology (Table 1), process steps (Fig. 6), and interrelations between types and steps using classical test theory. Examination and quiz responses are analyzed using IRT to evaluate question difficulty and student misconceptions. Peer evaluation uses rubrics that scaffold the visualization evaluation process using the DVL-FW. Together, the assessments allow instructors to check the degree to which the students are meeting the learning objectives.
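Classical test theory and item response theory (IRT) answer different questions about the same response data. The sketch below computes two common classical-test-theory item statistics (difficulty as proportion correct, and discrimination as a point-biserial correlation with the rest score) and evaluates the one-parameter (Rasch) IRT response function. It is an illustration, not the IVMOOC's analysis pipeline; the response matrix is hypothetical, and estimating IRT ability and difficulty parameters from real data requires a dedicated fitting routine not shown here.

```python
import math
from statistics import mean, pstdev


def item_difficulty(responses, item):
    """CTT difficulty (p-value): share of students answering the item
    correctly; higher values mean an easier item."""
    return mean(r[item] for r in responses)


def item_discrimination(responses, item):
    """CTT discrimination: point-biserial correlation between the item score
    and the rest score (total score excluding this item)."""
    scores = [r[item] for r in responses]
    rest = [sum(r) - r[item] for r in responses]
    ms, mr = mean(scores), mean(rest)
    cov = mean((s - ms) * (t - mr) for s, t in zip(scores, rest))
    ss, sr = pstdev(scores), pstdev(rest)
    return cov / (ss * sr) if ss and sr else 0.0


def rasch_p(theta, b):
    """1PL (Rasch) IRT model: probability that a student with ability theta
    answers an item of difficulty b correctly."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


# Hypothetical 0/1 scores: rows are students, columns are quiz items.
responses = [
    [1, 1, 0],
    [1, 0, 0],
    [1, 1, 1],
    [0, 0, 0],
]
for i in range(3):
    print(i, item_difficulty(responses, i), round(item_discrimination(responses, i), 2))
print(rasch_p(theta=1.0, b=1.0))  # 0.5 when ability equals difficulty
```

Unlike the classical p-value, which depends on the particular group of students tested, the IRT difficulty parameter b sits on the same scale as student ability, which is what allows misconceptions to be tied to specific, calibrated items.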

Discussion and Outlook

In this paper, we have presented a typology, process model, and exercises for defining, teaching, and assessing DVL. The DVL-FW combines and extends pioneering works by leading experts to arrive at a comprehensive set of core types and major process steps required for the systematic construction and interpretation of data visualizations. As a key contribution, this paper interlinks the typology and process steps and presents a set of DVL-FW exercises and assessments that anyone interested can use to measurably improve DVL. Early versions of the DVL-FW were implemented and tested in the IVMOOC over the last 6 years and have informed the DVL-FW typology, process model, exercises, and assessments presented here.

Controlled User Studies.

Going forward, there is a need to run controlled user studies to understand difficulty levels for the diverse DVL-FW types and process steps and their combinations and to provide additional guidance for the construction of effective visualizations based on scientific evidence. Seminal studies by Cleveland and McGill (66) and Heer and Bostock (52) have examined the effectiveness of different visual encodings. A similar study design can be used to examine the effectiveness of a larger range of graphic symbol types, variable types, and their combinations (53). Work by Wainer (67) and Boy et al. (15) used IRT to compute DVL scores for the interpretation of different graph visualizations. IRT was also used in the IVMOOC to assess student DVL when constructing visualizations, but more work is needed to optimally use the DVL-FW for teaching visualization construction.

User Studies in the Wild.

In addition to laboratory experiments, there is a need to understand how general audiences can construct and interpret data visualizations in real-world settings using so-called “research in the wild” (68). Building on prior work assessing the DVL of science museum visitors (17), we are developing a museum experience that lets visitors first generate and then visualize their very own data using a so-called “Make-a-Vis” (MaV) setup. MaV is aligned with the DVL-FW and supports the mapping of data to visual variables via the drag and drop of column headers to axis and legend areas in a data visualization. The active learning setup aims to empower learners to become producers and creators across the lifespan—in line with recommendations found in How People Learn II: Learners, Contexts, and Cultures (69).
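The drag-and-drop interaction can be sketched as a small state model in which each drop either updates the mapping or returns feedback explaining why a column does not fit a zone. The zone names and the compatibility rules below are illustrative assumptions, not the actual rules implemented in MaV; the visitor data columns are likewise hypothetical.

```python
# Hypothetical drop zones and the data scale types each accepts; these
# compatibility rules are illustrative assumptions, not MaV's actual rules.
ACCEPTS = {
    "x-axis": {"nominal", "ordinal", "interval", "ratio"},
    "y-axis": {"nominal", "ordinal", "interval", "ratio"},
    "size legend": {"interval", "ratio"},
    "color legend": {"nominal", "ordinal"},
}


class DropZoneMapping:
    """Tracks which column header has been dropped on which zone."""

    def __init__(self, column_scales):
        self.column_scales = column_scales  # column header -> data scale type
        self.mapping = {}  # zone -> column header

    def drop(self, column, zone):
        """Handle a drag-and-drop; return feedback text for the visitor."""
        scale = self.column_scales[column]
        if scale not in ACCEPTS[zone]:
            return f"'{column}' is {scale} data and cannot be shown by {zone}."
        self.mapping[zone] = column  # replaces any earlier column on this zone
        return f"'{column}' is now mapped to {zone}."


# Hypothetical visitor-generated data columns.
vis = DropZoneMapping({"name": "nominal", "height_cm": "ratio", "age_group": "ordinal"})
print(vis.drop("height_cm", "y-axis"))
print(vis.drop("name", "size legend"))
print(vis.drop("age_group", "color legend"))
print(vis.mapping)
```

Returning an explanation instead of silently ignoring an incompatible drop turns each mistake into a small lesson about data scales and graphic variables, in keeping with the active learning goal of the setup.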

Planned User Studies.

Going forward, we are interested in further improving DVL instruction using concreteness fading, scaffolding, horizontal transfer, and reciprocity.

Concreteness fading.

Alper et al. (22) and Chevalier et al. (23) examined visualization literacy teaching methods for elementary school children and developed their proof-of-concept tool C’est la Vis. The tool uses concreteness fading, an approach where concrete, countable entities (pictograms) are gradually transformed into abstract visual representations of quantitative data. We plan to use concreteness fading to ease the construction of different visualization types.
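The idea of concreteness fading can be illustrated in plain text. The hypothetical `fade` function below is not C’est la Vis; it renders the same counts at three assumed stages: one token per counted entity, uniform unit blocks stacked into a bar, and a continuous bar scaled to the largest count. The intermediate stage and the glyphs are illustrative choices.

```python
def fade(counts, stage, width=20):
    """Render the same counts at three levels of abstraction.

    concrete -- one pictogram-like token per counted entity
    bridge   -- one uniform unit block per entity, stacked into a bar
    abstract -- a continuous bar whose length is scaled to the maximum count
    """
    largest = max(counts.values())
    lines = []
    for label, n in counts.items():
        if stage == "concrete":
            marks = " ".join(["(o)"] * n)
        elif stage == "bridge":
            marks = "#" * n
        elif stage == "abstract":
            marks = "=" * round(n / largest * width) + f" {n}"
        else:
            raise ValueError(f"unknown stage: {stage}")
        lines.append(f"{label:>6} | {marks}")
    return lines


counts = {"cats": 3, "dogs": 5}  # hypothetical class survey
for stage in ("concrete", "bridge", "abstract"):
    print(stage)
    print("\n".join(fade(counts, stage)))
```

In the final stage, bar length no longer equals the number of entities; it encodes the count relative to the maximum, which is exactly the abstraction step that learners are meant to reach gradually.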

Scaffolding.

Studies are needed to determine which sequence for introducing the DVL-FW typology and process steps best supports effective scaffolding. In terms of factual scaffolding (Table 1), only a subset of visualization, graphic symbol, and graphic variable types and their members might initially be familiar to a student. As students learn more types and their members, their DVL increases. In terms of procedural scaffolding (Fig. 6), students might be presented with a sequence of successively harder tasks: (i) examine a graph and answer yes/no insight questions by modifying usage of graphic variable types; (ii) read a simple case study that defines an insight need and dataset, and then select the best visualization, graphic symbols, and variable types to meet the predefined need; and (iii) listen to a client explaining a real-world problem, identify insight need(s), pick the most relevant dataset(s), construct an appropriate visualization, and verbally communicate key insights to the client.

Horizontal transfer.

The DVL-FW aims to ease the transfer of knowledge across visualization-type reference systems (Fig. 2). Knowledge on how to construct graphs with diverse data overlays should make it easier to read and construct other visualization types. For example, Friel et al. (64) propose a sequence for the introduction of graph-type visualizations to students of different ages. Additional user studies are needed to determine how prior knowledge impacts the reading and construction of visualizations so that the typology and process steps can be taught most effectively.

Reciprocity.

Recent work shows that visualization construction (i.e., starting with a reference system and then adding graphic symbols and additional graphic variables) leads to better understanding and interpretation of the visualization than deconstructing a complete visualization (70). Additional user studies are needed to determine the strength of transfer between constructing and reading visualizations of different types and what construction workflows are most effective for increasing DVL.

Outlook.

DVL is of increasing importance for making sound decisions in one’s personal and professional life. Existing literacy tests (reviewed in Pursuit of Universal Literacy) include statistical graphs as part of mathematical and financial literacy tests. In the United States, K–12 national standards for math and science cover statistical graphs (71, 72) and geospatial maps (72). However, most exercises ask students to read (not construct) data visualizations, and topical or network analyses and visualizations are rarely covered. Adding DVL exercises and assessments to existing tests or establishing separate DVL tests will make it possible to assess how effectively different classes, schools, corporations, countries, etc. are preparing students to read and construct data visualizations; which interventions and exercises work for which age groups and industries or research areas; and how to further improve the DVL typology, processes, exercises, and assessments via close collaboration among academic and industry experts, learning scientists, instructional developers, teachers, and learners.

Acknowledgments

We thank Anna Keune and the anonymous reviewers for their extensive expert comments on an earlier version of this paper. We appreciate the support of figure design by Tracey Theriault and Leonard E. Cross and copyediting by Todd Theriault. This work was partially supported by a Humboldt Research Award, NIH Awards U01CA198934 and OT2OD026671, and NSF Awards 1713567, 1735095, and 1839167. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the NSF.

Footnotes

The authors declare no conflict of interest.

This paper results from the Arthur M. Sackler Colloquium of the National Academy of Sciences, “Creativity and Collaboration: Revisiting Cybernetic Serendipity,” held March 13–14, 2018, at the National Academy of Sciences in Washington, DC. The complete program and video recordings of most presentations are available on the NAS website at www.nasonline.org/Cybernetic_Serendipity.

This article is a PNAS Direct Submission. B.S. is a guest editor invited by the Editorial Board.

References

- 1. OECD. PISA 2015 Draft Reading Literacy Framework. OECD Publishing; Paris: 2013.

- 2. OECD 2018 PISA 2018 released field trial new reading items (ETS Core A, Paris). Available at https://www.oecd.org/pisa/test/PISA_2018_FT_Released_New_Reading_Items.pdf. Accessed December 20, 2018.

- 3. Educational Testing Service 2018 Graduate record examination (ETS, Ewing, NJ). Available at https://www.ets.org/gre/revised_general/about/?WT.ac=grehome_greabout_a_180410. Accessed December 20, 2018.

- 4. Educational Testing Service 2018 Graduate record examination subject test (ETS, Ewing, NJ). Available at https://www.ets.org/gre/subject/about/?WT.ac=grehome_gresubject_180410. Accessed December 20, 2018.

- 5. Schleicher A. 2013 Beyond PISA 2015: A longer-term strategy of PISA. Available at https://www.oecd.org/pisa/pisaproducts/Longer-term-strategy-of-PISA.pdf. Accessed December 20, 2018.

- 6. Reyna VF, Nelson WL, Han PK, Dieckmann NF. How numeracy influences risk comprehension and medical decision making. Psychol Bull. 2009;135:943–973. doi: 10.1037/a0017327.

- 7. OECD 2018 Programme for international student assessment. Available at www.oecd.org/pisa/. Accessed December 20, 2018.

- 8. OECD. PISA 2015 Draft Mathematics Framework. OECD Publishing; Paris: 2013.

- 9. Fransecky RB, Debes JL. Visual Literacy: A Way to Learn—A Way to Teach. Association for Educational Communications and Technology; Washington, DC: 1972.

- 10. Ausburn LJ, Ausburn FB. Visual literacy: Background, theory and practice. Program Learn Educ Technol. 1978;15:291–297.

- 11. Association of College and Research Libraries 2018 ACRL visual literacy competency standards for higher education (American Library Association). Available at www.ala.org/acrl/standards/visualliteracy. Accessed December 20, 2018.

- 12. Hattwig D, Burgess J, Bussert K, Medaille A. Visual literacy standards in higher education: New opportunities for libraries and student learning. Portal. 2013;13:61–89.

- 13. Avgerinou MD. Towards a visual literacy index. J Vis Lit. 2007;27:29–46.

- 14. Taylor C. 2003 New kinds of literacy, and the world of visual information. Proceedings of the Explanatory & Instructional Graphics and Visual Information Literacy Workshop. Available at www.conradiator.com/resources/pdf/literacies4eigvil_ct2003.pdf. Accessed January 6, 2019.

- 15. Boy J, Rensink RA, Bertini E, Fekete J-D. A principled way of assessing visualization literacy. IEEE Trans Vis Comput Graph. 2014;20:1963–1972. doi: 10.1109/TVCG.2014.2346984.

- 16. Lee S, Kim S-H, Kwon BC. VLAT: Development of a visualization literacy assessment test. IEEE Trans Vis Comput Graph. 2017;23:551–560. doi: 10.1109/TVCG.2016.2598920.

- 17. Börner K, Maltese A, Balliet RN, Heimlich J. Investigating aspects of data visualization literacy using 20 information visualizations and 273 science museum visitors. Inf Vis. 2016;15:198–213.

- 18. Zoss A. 2018. Network visualization literacy: Task, context, and layout. PhD thesis (Indiana University, Bloomington, IN).

- 19. Maltese AV, Harsh JA, Svetina D. Data visualization literacy: Investigating data interpretation along the novice—expert continuum. J Coll Sci Teach. 2015;45:84–90.

- 20. Huron S, Carpendale S, Thudt A, Tang A, Mauerer M. Proceedings of the 2014 Conference on Designing Interactive Systems. ACM; New York: 2014. Constructive visualization; pp. 433–442.

- 21. Huron S, Jansen Y, Carpendale S. Constructing visual representations: Investigating the use of tangible tokens. IEEE Trans Vis Comput Graph. 2014;20:2102–2111. doi: 10.1109/TVCG.2014.2346292.

- 22. Alper B, Riche NH, Chevalier F, Boy J, Sezgin M. Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems. ACM; New York: 2017. Visualization literacy at elementary school; pp. 5485–5497.

- 23. Chevalier F, et al. Observations and reflections on visualization literacy in elementary school. IEEE Comput Graph Appl. 2018;38:21–29. doi: 10.1109/MCG.2018.032421650.

- 24. Bortz D. 2017 The top data skills you need to get hired. Available at https://www.monster.com/career-advice/article/top-data-skills-0617. Accessed December 20, 2018.

- 25. Bertin J. Semiology of Graphics: Diagrams, Networks, Maps. Esri Press; Seattle: 1983.

- 26. Cairo A. The Functional Art. New Riders; San Francisco: 2012.

- 27. Few S. Show Me the Numbers: Designing Tables and Graphs to Enlighten. Analytics; Oakland, CA: 2012.

- 28. Harris RL. Information Graphics: A Comprehensive Illustrated Reference. Oxford Univ Press; New York: 1999.

- 29. MacEachren AM. How Maps Work: Representation, Visualization, and Design. Guilford; New York: 2004.

- 30. Munzner T. Visualization Analysis and Design. CRC; Boca Raton, FL: 2014.

- 31. Wilkinson L. The Grammar of Graphics (Statistics and Computing). Springer Science + Business Media; New York: 2005.

- 32. Shneiderman B. Proceedings of the IEEE Symposium on Visual Languages. IEEE Computer Society; Washington, DC: 1996. The eyes have it: A task by data type taxonomy for information visualizations; pp. 336–343.

- 33. Börner K. Atlas of Knowledge: Anyone Can Map. MIT Press; Cambridge, MA: 2015.

- 34. Ward MO, Grinstein G, Keim D. Interactive Data Visualization: Foundations, Techniques, and Applications. A. K. Peters Ltd.; Natick, MA: 2015.

- 35. StataCorp 2018 Stata (15). Available at https://www.stata.com. Accessed December 20, 2018.

- 36. Wickham H. A layered grammar of graphics. J Comput Graph Stat. 2010;19:3–28.

- 37. Yau N. Visualize This: The FlowingData Guide to Design, Visualization, and Statistics. Wiley; Indianapolis: 2011.

- 38. Fisher D, Meyer M. Making Data Visual: A Practical Guide to Using Visualization for Insight. O’Reilly Media; Sebastopol, CA: 2018.

- 39. Stevens SS. On the theory of scales of measurement. Science. 1946;103:677–680. doi: 10.1126/science.103.2684.677.

- 40. Kruskal JB, Shepard RN. A nonmetric variety of linear factor analysis. Psychometrika. 1974;39:123–157.

- 41. Kim Y, Heer J. Assessing effects of task and data distribution on the effectiveness of visual encodings. Comput Graph Forum. 2018;37:157–167.

- 42. Börner K, Polley DE. Visual Insights: A Practical Guide to Making Sense of Data. MIT Press; Cambridge, MA: 2014.

- 43. Börner K, et al. Design and update of a classification system: The UCSD map of science. PLoS One. 2012;7:e39464. doi: 10.1371/journal.pone.0039464.

- 44. Saket B, Scheidegger C, Kobourov S, Börner K. Map-Based Visualizations Increase Recall Accuracy of Data. EUROGRAPHICS; Zurich: 2015. pp. 441–450.

- 45. Pinker S. A theory of graph comprehension. In: Freedle R, editor. Artificial Intelligence and the Future of Testing. L. Erlbaum Associates; Hillsdale, NJ: 1990. pp. 73–126.

- 46. Kosslyn SM, Ball TM, Reiser BJ. Visual images preserve metric spatial information: Evidence from studies of image scanning. J Exp Psychol Hum Percept Perform. 1978;4:47–60. doi: 10.1037//0096-1523.4.1.47.

- 47. Shah P. A model of the cognitive and perceptual processes in graphical display comprehension. In: Anderson M, editor. Reasoning with Diagrammatic Representations. AAAI Press; Menlo Park, CA: 1997. pp. 94–101.

- 48. Trickett SB, Trafton JG. Proceedings of the International Conference on Theory and Application of Diagrams. Springer; Berlin: 2006. Toward a comprehensive model of graph comprehension: Making the case for spatial cognition; pp. 286–300.

- 49. Horn RE. Visual Language: Global Communication for the 21st Century. MacroVU Inc.; Bainbridge Island, WA: 1998.

- 50. ColorBrewer Team 2018 ColorBrewer 2.0 (Cynthia Brewer). Available at colorbrewer2.org. Accessed December 20, 2018.

- 51. Cleveland WS, McGill R. Graphical perception: Theory, experimentation, and application to the development of graphical methods. J Am Stat Assoc. 1984;79:531–554.

- 52. Heer J, Bostock M. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. ACM; New York: 2010. Crowdsourcing graphical perception: Using Mechanical Turk to assess visualization design; pp. 203–212.

- 53. Szafir DA, Haroz S, Gleicher M, Franconeri S. Four types of ensemble coding in data visualizations. J Vis. 2016;16:11. doi: 10.1167/16.5.11.

- 54. Keim DA. Visual exploration of large data sets. Commun ACM. 2001;44:38–44.

- 55. Brehmer M, Munzner T. A multi-level typology of abstract visualization tasks. IEEE Trans Vis Comput Graph. 2013;19:2376–2385. doi: 10.1109/TVCG.2013.124.

- 56. Heer J, Shneiderman B. Interactive dynamics for visual analysis. Commun ACM. 2012;55:45–54.

- 57. Pirolli P, Card S. 2005 The sensemaking process and leverage points for analyst technology as identified through cognitive task analysis. Proceedings of the International Conference on Intelligence Analysis. Available at https://www.e-education.psu.edu/geog885/sites/www.e-education.psu.edu.geog885/files/geog885q/file/Lesson_02/Sense_Making_206_Camera_Ready_Paper.pdf. Accessed January 6, 2019.

- 58. Klein G, Moon B, Hoffman RR. Making sense of sensemaking 2: A macrocognitive model. IEEE Intell Syst. 2006;21:88–92.

- 59. Lee S, et al. How do people make sense of unfamiliar visualizations? A grounded model of novice’s information visualization sensemaking. IEEE Trans Vis Comput Graph. 2016;22:499–508. doi: 10.1109/TVCG.2015.2467195.

- 60. Mackinlay J. Automating the design of graphical presentations of relational information. ACM Trans Graph. 1986;5:110–141.

- 61. Grammel L, Tory M, Storey M-A. How information visualization novices construct visualizations. IEEE Trans Vis Comput Graph. 2010;16:943–952. doi: 10.1109/TVCG.2010.164.

- 62. Wongsuphasawat K, et al. Voyager: Exploratory analysis via faceted browsing of visualization recommendations. IEEE Trans Vis Comput Graph. 2016;22:649–658. doi: 10.1109/TVCG.2015.2467191.

- 63. Satyanarayan A, Moritz D, Wongsuphasawat K, Heer J. Vega-lite: A grammar of interactive graphics. IEEE Trans Vis Comput Graph. 2017;23:341–350. doi: 10.1109/TVCG.2016.2599030.

- 64. Friel SN, Curcio FR, Bright GW. Making sense of graphs: Critical factors influencing comprehension and instructional implications. J Res Math Educ. 2001;32:124–158.

- 65. Sci2 Team 2009 Science of Science (Sci2) Tool (1.3; Indiana University and SciTech Strategies). Available at https://sci2.cns.iu.edu/user/index.php. Accessed December 20, 2018.

- 66. Cleveland WS, McGill R. The many faces of a scatterplot. J Am Stat Assoc. 1984;79:807–822.

- 67. Wainer H. A test of graphicacy in children. Appl Psychol Meas. 1980;4:331–340.

- 68. Rogers Y, Marshall P. Research in the wild. Synth Lect Hum Cent Inf. 2017;10:i–97.

- 69. National Academies of Sciences, Engineering, and Medicine. How People Learn II: Learners, Contexts, and Cultures. Natl Acad Press; Washington, DC: 2018.

- 70. Wojton MA, Palmquist S, Yocco V, Heimlich JE. 2014 Making meaning through data representation: Construction and deconstruction, Evaluation Reports 41. Available at www.informalscience.org/meaning-making-through-data-representation-construction-and-deconstruction. Accessed December 20, 2018.

- 71. National Governors Association Center for Best Practices and Council of Chief State School Officers. Common Core State Standards for Mathematics. NGA and CCSSO; Washington, DC: 2010.

- 72. NGSS Lead States. Next Generation Science Standards: For States, by States. Natl Acad Press; Washington, DC: 2013.