Abstract

Purpose:

Adherence to antiretroviral therapy is essential to HIV management and to sustaining viral suppression. Despite simplified regimens, adherence remains difficult for some persons living with HIV (PLWH). There is evidence to support the use of mHealth apps for effective self-management in PLWH; however, a medication adherence app with real-time monitoring has not yet been developed for, and rigorously evaluated by, PLWH. We developed an mHealth app (WiseApp) to help PLWH self-manage their health. The purpose of this study was to evaluate the usability of the WiseApp.

Methods:

We conducted a three-step usability evaluation using 1) a traditional think-aloud protocol with end-users, 2) a heuristic evaluation with experts in informatics, and 3) a cognitive walkthrough with end-users. During the cognitive walkthrough, we tested two devices (fitness tracker and medication tracking bottle) that were linked to the WiseApp.

Results:

The think-aloud protocol informed iterative updates to the app, specifically to make it easier to see different sections of the app. The heuristic evaluation confirmed the necessity of these design changes. The cognitive walkthrough informed additional updates and confirmed that overall, the app and the linked devices were usable for the end-users.

Conclusion:

The results of the multi-step usability evaluation with both experts and end-users informed iterative refinements to the WiseApp and the finalization of an mHealth app to help PLWH better self-manage their health.

Keywords: mobile health applications, usability, human computer interaction, HIV/AIDS

1.0. Background

1.1. The Importance of Medication Adherence in HIV

Although HIV is now considered a chronic condition, sustained adherence to antiretroviral therapy is essential to disease management and maintaining viral suppression (1). Despite the development of simplified, single-tablet regimens, adherence remains difficult for some persons living with HIV (PLWH) (2, 3). Specifically, underserved populations, namely those with lower socioeconomic status and educational level, concomitant drug or alcohol use, and more complex medication regimens, have an increased risk of nonadherence to HIV medications (4). Importantly, individuals with these same characteristics are disproportionately affected by HIV; nonadherence in these groups is therefore a significant problem that requires effective interventions. There is evidence to support the use of mobile health (mHealth) applications (apps) by persons living with chronic illnesses to self-manage their health (5). Because HIV is now largely considered a chronic illness in the US, mHealth technology may have wide-reaching implications across chronic illness populations (e.g., diabetes, cardiovascular disease) (6). A recent study testing a symptom self-management mHealth app demonstrated that the intervention group receiving self-care strategies had a significant improvement in symptom burden and medication adherence compared with the control group (p=0.017) (7). However, medication adherence was a secondary measure in that study and was limited to a self-report measure (8).

There is a paucity of self-management apps for PLWH. PLWH identified the following functional components of an ideal mHealth app to support symptom and health self-management: communication, reminders, medication logs, lab reports, pharmacy information, nutrition and fitness, resources, settings, and search. A systematic search for commercially available apps for health self-management among PLWH, however, identified 15 apps, none of which had all of these functionalities (9, 10). Specifically, none of these apps mentioned the capability to link to external devices, such as an electronic pill bottle, or to provide nutrition and fitness information.

Given these data, our research team used participatory research methods to design a self-management app that contains real-time medication monitoring for PLWH in addition to the functional components previously identified (11, 12). We developed an mHealth app (“WiseApp”) to help PLWH self-manage their health and monitor their medication adherence. The development of the app was guided by Fogg’s functional triad for computing technology model, which describes the modes through which technology can affect behavior change (13). Within this model, important components of the app include medication reminders (tools), testimonial videos (social actors), and to-do lists (medium). In addition, real-time medication monitoring is important for medication reminder systems, and it is both feasible and acceptable to PLWH (14).

1.2. Significance of Usability Testing of mHealth Applications

Usability is the extent to which a user can use an IT system (e.g., a website, a computerized provider order entry system, a mobile app) to achieve their goals, and how satisfied they are with the process. IT systems that do not adequately consider the needs of the intended end-users are often difficult to learn, misused, or underutilized (15). mHealth technology has encountered similar challenges (16). Many inpatient IT systems, such as Computerized Provider Order Entry (CPOE) and Electronic Health Records (EHRs), were developed for clinicians with insufficient attention to the intended end-users, and as a result some of these systems were associated with fatal errors. Therefore, usability has been widely recognized as a critical component in the development of health IT systems (17). More specifically, mHealth apps require a thorough understanding of the context of their proposed use, gained by incorporating input from end-users, to understand and improve the deficiencies of the technology and reduce the risk of failure (18, 19). Even if an mHealth app is aesthetically appealing, users will become frustrated and unwilling to use it if it is too difficult to use (20). Therefore, the usability of mHealth apps is of critical importance (21).

1.3. WiseApp

The WiseApp is derived from formative work to design a self-management app for PLWH (14), with the goal of being more widely applicable across chronic illness populations who require medications and additional self-management strategies. The design process for the self-management app is described in detail elsewhere; in short, the work was guided by the Information Systems Research (ISR) framework and incorporated end-user feedback throughout (22). The resulting WiseApp comprises the following functional components: 1) testimonial videos of PLWH, 2) push-notification reminders, 3) medication trackers, 4) health surveys, and 5) a “To-Do” list outlining the user’s tasks for the day, such as medications to take and a daily step goal. A key component of the app is a medication tracker linked to an electronic pill bottle (“CleverCap”, Figure 1), along with the capability to link to a fitness tracker to monitor physical activity. The app can then send tailored reminders based on feedback from the linked devices, such as a medication reminder if the pill bottle has not been opened, or a reminder to walk more steps. In addition to the home screen (Figure 2), the WiseApp has 3 additional domains: a screen to review medication adherence, a chat room, and a “Me” screen where users can set their preferences and settings (Supplementary file).

Figure 1:

CleverCap

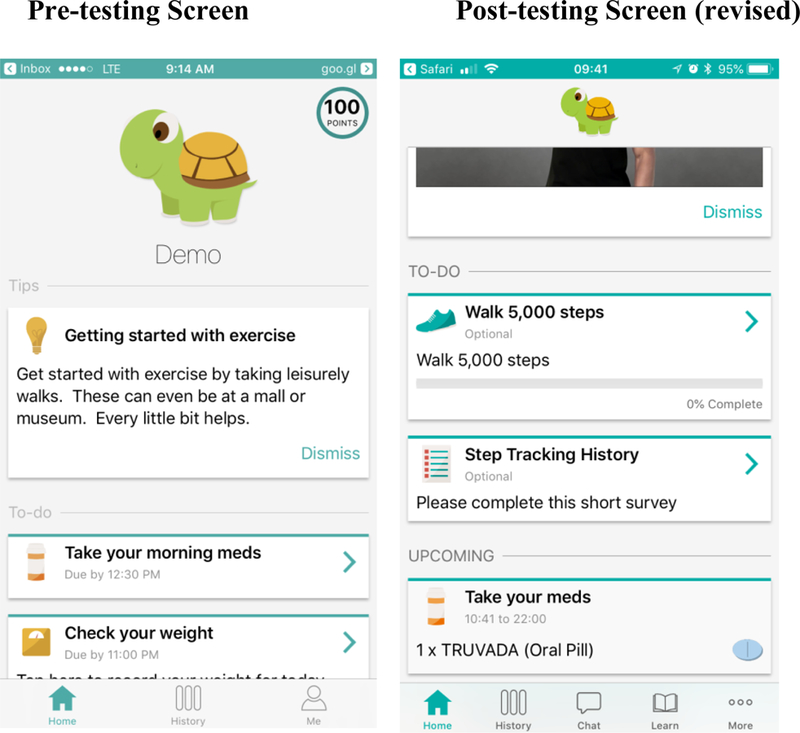

Figure 2:

Pre- and post-usability testing home screens

The purpose of this study was to evaluate the usability of the WiseApp for PLWH to self-manage their health.

2.0. Methods

We conducted a three-step usability evaluation using 1) a traditional think-aloud protocol with end-users, 2) a heuristic evaluation with experts in informatics, and 3) a cognitive walkthrough with end-users.

2.1. Usability Testing

2.1.1. Sample.

End-users:

Our sample comprised targeted end-users and informatics experts. We recruited end-users from clinics and community-based organizations in the Washington Heights neighborhood in northern New York City. End-users were eligible if they were HIV-positive, over the age of 18 years, owned a smartphone, and could read and communicate in English; we enrolled equal numbers of Android and iPhone users. Of 24 potential participants screened, 4 were ineligible due to incompatible technology or not being comfortable using a smartphone. The rationale for 20 participants is based on past usability research indicating that 95% of usability problems can be identified with 20 users (23). Because we were not testing the effectiveness of the app, adherence criteria were not required for eligibility. We also administered a modified version of the Mini Mental State Examination (MMSE) for cognitive screening (24), assessing orientation (asking for the date/season and location by state or city), registration (naming 3 items and asking the participant to repeat them), attention and calculation (counting by serial 7’s at least to 21, and spelling a word backwards), and recall (asking again for the 3 items at the end of the screen). End-users were eligible if they answered all items correctly.
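The sample-size rationale reflects the diminishing return of each additional participant. One common way to express this, shown here only as an illustration and not necessarily the method behind reference 23, is the cumulative problem-discovery model P(n) = 1 - (1 - p)^n, where p is the assumed probability that a single participant encounters a given problem. The value p = 0.31 below is an illustrative assumption, not an estimate from this study, and the function names are hypothetical.

```python
def proportion_found(p: float, n: int) -> float:
    """Expected share of usability problems found by n participants.

    Assumes every problem is detected independently by each participant
    with the same probability p (a simplifying assumption).
    """
    if not 0.0 < p < 1.0:
        raise ValueError("p must be strictly between 0 and 1")
    return 1.0 - (1.0 - p) ** n


def participants_needed(p: float, target: float) -> int:
    """Smallest n whose expected discovery proportion reaches the target."""
    if not 0.0 < target < 1.0:
        raise ValueError("target must be strictly between 0 and 1")
    n = 1
    while proportion_found(p, n) < target:
        n += 1
    return n


if __name__ == "__main__":
    p = 0.31  # illustrative assumption, not estimated from this study
    for n in (5, 10, 20):
        print(f"n={n:2d}: {proportion_found(p, n):.3f}")
    print("participants for 95%:", participants_needed(p, 0.95))
```

Because the model treats every problem as equally likely to be detected, rarer problems (smaller p) would require substantially more participants than this sketch suggests.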

The mean age of end-user participants (n=20) was 51.3 years (range 27–68). Seventy percent of end-users reported their race as African American/Black, and 45% reported their ethnicity as Hispanic/Latino. Forty-five percent of end-users had not completed high school. Forty-five percent reported an annual income of less than $20,000, although 25% reported that they did not know their income. Ten end-users owned an iPhone, and the other 10 an Android phone. All end-users reported using their smartphones at least daily, and 18 of 20 reported using them multiple times a day. In addition, all end-users had been using their smartphones for at least 2 years.

Experts:

Experts were eligible if they were experts in the field of biomedical informatics, specifically human computer interaction (HCI) and usability testing. The study team invited experts to participate by email.

The mean age of the heuristic evaluators (n=4) was 54.3 years (range 41–67), and all reported English as their primary language. All 4 experts held a PhD and had an average of 19.5 years of experience in the field of biomedical informatics (range 15–30). One expert had experience researching HIV, and 3 were nurses with extensive training in medication monitoring and the promotion of health activities, including physical activity monitoring. Thus, they were dually qualified to contribute to this usability evaluation.

2.1.2. Procedures:

End-users and experts were given a list of 26 tasks (Table 1) related to the functionality of the app, including managing the medication profile, reviewing medication adherence history, and identifying their step goal for the day. Completion of each task was recorded using Morae software™ (TechSmith Corporation, Okemos, MI), which records audio and on-screen movements simultaneously. The smartphone screen was mirrored to the study laptop and recorded with Morae; this allowed the study team both to observe participants’ movements in real time on the laptop and to record the session for later viewing and analysis. Research staff, consisting of two nurse scientists (postdoctoral trainees), one nurse practitioner (predoctoral trainee), and one coordinator with an MPH degree, also took field notes during each session. Both experts and end-users were asked to go step-by-step through the 26 tasks and to evaluate the app using a think-aloud protocol. Research staff prompted participants to describe their thought process when attempting to complete a task but were encouraged not to provide any specific guidance. Occasionally, research staff provided prompts when participants were unable to complete a task; all instances of help were recorded. The sessions were analyzed directly from the recordings and were not transcribed.

Table 1.

Proportion of end-users and experts able to execute the 26 tasks

| Task Name | % of end-users able to execute the task easily (n=20) | % of experts able to execute the task easily (n=4) | Exemplar |
|---|---|---|---|
| Complete a mission | 15% (17 of 20 end-users did not complete) | 0% (none of the 4 experts completed this task) | “I thought it was going to be the app’s mission statement” (End-user 18) |
| Enter “My Medication” section (in the “Me” screen) | 20% | 25% | “The profile should be first. Also ‘Me’ should be ‘My profile’” (End-user 17) |
| Mark your medication as taken | 25% (1 of 20 end-users did not complete) | 50% | “the location for marking medication should be in the same page as ‘My Medications’…I don’t like go back and forth” (End-user 12) |
| Report a question to support center | 30% | 25% | “I guess eventually I would’ve found it” (End-user 19) |
| Confirm your fitness tracker step goals on the home page | 35% (1 user did not do) | 75% (1 expert did not do) | “to me walking is not fitness” (End-user 13) |
| Add a medication | 45% | 25% | “confusing at first…once I got it, it was simple” (End-user 13) |
| Log out of the app | 45% | 25% | “log out button should be on the top or easy location to see” (End-user 9) |
| Review your “Medication History” | 50% | 75% | “it tells me right here, it gives me from today to 30 days ago, the red one, I did not take it, the yellow one is a reminder I will be taking it, the green one is I took it” (End-user 33) |
| Add a member to your “Care Circle” | 65% | 75% | “all in one stop shop…it’s helpful to have everything in one app, medications, caregivers” (End-user 13) |
| Enter the “My account” section | 65% | 100% | “maybe things should be a little brighter. This is really bland” (End-user 5) |
| Enter secret key (to log into app) | 75% | 50% | “I forgot to capitalize!” (End-user 18) |
| Enter date of birth (to log into app) | 75% | 75% | “I thought I was supposed to enter my own DOB” (End-user 14; end-users were prompted to provide a standard DOB rather than their actual DOB) |
| Log out (2nd attempt) | 75% | 100% | “they should know after the 1st time” (End-user 4) |
| Retrieve email | 75% | 75% | “It would be easier with my own email” (End-user 2) |
| Set up “My Routine” | 75% | 100% | “On the home page it shouldn’t be so blatant…nobody follows a schedule for bedtime” (End-user 5) |
| Set up a mission (home screen) | 80% | 100% | “the word mission is not appropriate to texting or cooking dinner…adding options would be better, like short-term goals and long-term goals” (End-user 10) |
| Create a pin code | 80% | 100% | “if I didn’t want someone to know my situation…it would be helpful” (End-user 4) |
| Change your alias | 80% | 100% | “the location to change avatar makes more sense because I would be familiar with my account when I have something to change” (End-user 11) |
| Enter “Security” section | 85% (1 user did not do) | 75% | “‘ME’ button is appropriate to find it” (End-user 16) |
| Enter the “Support Center” | 85% | 75% | “to get support on something, I’m usually already annoyed and don’t want to wait for a response. So I might want to call” (End-user 5) |
| Take a survey (home screen) | 85% | 100% | “for me it was so easy” (End-user 3) |
| Enter the chat section | 85% | 100% | “I didn’t even see the chat, if y’all was to have this a little darker” (End-user 4) |
| Enter date of birth (to log back in) | 90% | 100% | “easy second time around!” (End-user 7) |
| Chat with others | 95% (1 user did not do) | 100% | “I don’t like to talk on the phone, I like to text so…sometimes I might want to get someone’s take on what they went through” (End-user 5) |
| View testimonial video (home screen) | 95% | 100% | “these are good…but make sure to get balance between man’s talks and woman’s; keeping short is better, 2 min is good” (End-user 12) |
| Click the “log back in” button | 95% | 100% | “the second (time) is easier to use” (End-user 2) |

In addition, following the usability testing, both end-users and experts completed a survey through Qualtrics that collected demographic information and the Health Information Technology Usability Evaluation Scale (Health-ITUES; scored 0–5; a higher score indicates higher perceived usability) (25). All survey data were stored for later analysis.
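The group-level Health-ITUES summaries (mean and SD) reported in the results can be sketched as follows. This assumes, for illustration only, that each participant’s overall score is the mean of their item ratings; the participant IDs and ratings are hypothetical, and the real instrument uses more items than shown.

```python
from statistics import mean, stdev


def overall_score(item_ratings: list[int]) -> float:
    """Overall usability score for one participant: the mean item rating."""
    if not item_ratings:
        raise ValueError("at least one item rating is required")
    return mean(item_ratings)


def summarize(group: dict[str, list[int]]) -> tuple[float, float]:
    """Mean and sample SD of overall scores across participants in a group."""
    scores = [overall_score(items) for items in group.values()]
    return mean(scores), stdev(scores)


# Hypothetical ratings for three participants (not study data).
end_users = {
    "P01": [5, 5, 4, 5],
    "P02": [4, 4, 4, 4],
    "P03": [5, 4, 5, 4],
}
m, sd = summarize(end_users)
print(f"mean={m:.2f}, SD={sd:.2f}")
```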

2.1.3. Data Analysis:

The study team analyzed all sessions using the audio and video recordings of app use. Each task was coded dichotomously (i.e., 1=easy, 2=not easy), and the proportion of participants who completed each task easily was calculated. Any task that required assistance from the research staff was coded as “not easy”. Of the 24 sessions conducted for the think-aloud and heuristic testing (end-users n=20; heuristic experts n=4), 60% were double-coded by two members of the research team; disagreements were resolved through discussion and, if necessary, by reviewing the Morae video recording. In addition, qualitative comments related to perceived usefulness were extracted from the sessions during coding.
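The dichotomous coding rule can be expressed as a small sketch. It assumes, beyond what the text states, that an attempt the participant did not complete is also coded “not easy”; the class and function names and the example attempts are hypothetical.

```python
from dataclasses import dataclass

EASY, NOT_EASY = 1, 2  # dichotomous codes used in the analysis


@dataclass
class TaskAttempt:
    participant: str
    task: str
    completed: bool
    assisted: bool  # True if research staff provided any help


def code_attempt(attempt: TaskAttempt) -> int:
    """Code an attempt as easy only if completed without staff assistance."""
    if attempt.completed and not attempt.assisted:
        return EASY
    return NOT_EASY


def proportion_easy(attempts: list[TaskAttempt], task: str) -> float:
    """Share of participants who completed the given task easily."""
    codes = [code_attempt(a) for a in attempts if a.task == task]
    if not codes:
        raise ValueError(f"no attempts recorded for task {task!r}")
    return codes.count(EASY) / len(codes)


# Hypothetical attempts for one task.
attempts = [
    TaskAttempt("E01", "Add a medication", completed=True, assisted=False),
    TaskAttempt("E02", "Add a medication", completed=True, assisted=True),
    TaskAttempt("E03", "Add a medication", completed=False, assisted=False),
    TaskAttempt("E04", "Add a medication", completed=True, assisted=False),
]
print(proportion_easy(attempts, "Add a medication"))  # 0.5
```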

2.1.4. Results

The ease of executing each of the 26 tasks for end-users and experts is reported in Table 1; the exemplars were derived from the audio recordings. Nineteen of the 26 tasks were easy to complete for at least 50% of the end-users. Excluding “Complete a mission”, which many participants did not attempt, entering the “My Medication” section was the least easy task to complete. Only 12.5% of all participants (3 of 24) completed “Complete a mission” easily. In addition, both the experts and end-users found the following tasks difficult: 1) adding new medications and 2) marking medications as taken. The mean overall Health-ITUES score for the WiseApp was 4.39 (SD=0.55) for end-users and 3.63 (SD=0.80) for experts, suggesting that the end-users perceived the app to be usable.
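The 19-of-26 figure and the 12.5% figure for “Complete a mission” can be checked directly against Table 1. The sketch below transcribes the end-user column (task labels shortened) and applies the 50% threshold; it is a descriptive check, not part of the original analysis.

```python
# End-user "easy" percentages for the 26 tasks, transcribed from Table 1.
END_USER_EASY_PCT = {
    "Complete a mission": 15,
    "Enter My Medication section": 20,
    "Mark your medication as taken": 25,
    "Report a question to support center": 30,
    "Confirm fitness tracker step goals": 35,
    "Add a medication": 45,
    "Log out of the app": 45,
    "Review your Medication History": 50,
    "Add a member to your Care Circle": 65,
    "Enter the My account section": 65,
    "Enter secret key": 75,
    "Enter date of birth (log in)": 75,
    "Log out (2nd attempt)": 75,
    "Retrieve email": 75,
    "Set up My Routine": 75,
    "Set up a mission": 80,
    "Create a pin code": 80,
    "Change your alias": 80,
    "Enter Security section": 85,
    "Enter the Support Center": 85,
    "Take a survey": 85,
    "Enter the chat section": 85,
    "Enter date of birth (log back in)": 90,
    "Chat with others": 95,
    "View testimonial video": 95,
    "Click the log back in button": 95,
}

easy_for_half = [t for t, pct in END_USER_EASY_PCT.items() if pct >= 50]
share = len(easy_for_half) / len(END_USER_EASY_PCT)
print(f"{len(easy_for_half)} of {len(END_USER_EASY_PCT)} tasks ({share:.0%})")

# "Complete a mission": 3 of 20 end-users (15%) and 0 of 4 experts completed it easily.
mission_combined = (3 + 0) / (20 + 4)
print(f"Complete a mission, all participants: {mission_combined:.1%}")
```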

These results were summarized and discussed with the app developers, and updates were made to reduce the difficulty of using these components of the app. For example, the color of the dock domains and the text on the home screen were darkened to improve visibility (Figure 2). In addition, participants felt that the “Missions” were irrelevant or redundant with the “tasks”, so this functionality was removed (Figure 3). Finally, the “Me” screen was renamed “More” for clarity.

Figure 3:

WiseApp “Missions”

2.2. Cognitive Walkthrough

Building on our findings from the traditional think-aloud protocol with end-users and the heuristic evaluation with experts, we then refined the task list and conducted a cognitive walkthrough (CW) to provide more depth and granularity to our evaluation approach.

2.2.1. Sample:

We recruited 10 PLWH who had not participated in the first part of this study. Because 20 PLWH had already participated in the think-aloud protocol and we were testing the same app, we chose 10 participants, a number sufficient to identify at least 80% of usability problems (23). We screened 16 potential participants, of whom 6 were ineligible due to incompatible technology (not having a smartphone) or not being comfortable using a smartphone.

All of the end-users owned a smartphone; 5 reported using an iPhone and 5 an Android phone. The average age of the participants was 54.5 years (range 39–63); 70% reported their race as African American/Black, and 40% reported their ethnicity as Hispanic. Fifty percent reported an annual income of less than $20,000, although one end-user reported not knowing their income, and 30% of participants had not completed high school. All participants could read and communicate in English and met the same modified MMSE criteria as in the initial usability testing. All participants reported using their smartphones at least daily, and 8 of 10 had been using their smartphones for at least 2 years.

2.2.2. Procedures:

The CW evaluates the ease with which users can perform a task with little formal instruction or informal coaching (26). Compared with other usability testing methods, the CW is more structured, with the goal of understanding a technology’s learnability, and it can be especially useful when developing technology intended for individuals with low health literacy (27). The CW session comprises: 1) a detailed design of the user interface, 2) a task scenario, 3) explicit assumptions about end-users, 4) the context of use, and 5) a sequence of actions that would allow the user to successfully complete the task (26). Participants in the CW are then asked to complete a set of tasks and describe their thought process while doing so. During task completion, the evaluator assesses the potential end-user’s ability to identify, achieve, and interpret the correct action required for each step of the task. If all tasks are completed following the sequence of actions described in component 5, no usability concerns are identified at this stage.
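The three judgments the evaluator makes at each step (identify, achieve, interpret) can be recorded per action, flagging any step where one of them fails. This is a hypothetical data structure for illustration, not the study’s coding instrument; the action labels loosely follow the medication tasks in Table 2.

```python
from dataclasses import dataclass, field


@dataclass
class StepObservation:
    """Evaluator's judgment for one action in the expected sequence."""
    action: str
    identified: bool   # did the user recognize the correct action?
    achieved: bool     # could the user perform it?
    interpreted: bool  # did the user understand the system's response?

    def is_problem(self) -> bool:
        return not (self.identified and self.achieved and self.interpreted)


@dataclass
class WalkthroughTask:
    name: str
    steps: list[StepObservation] = field(default_factory=list)

    def problem_steps(self) -> list[str]:
        """Actions where any of the three judgments failed."""
        return [s.action for s in self.steps if s.is_problem()]


# Hypothetical observations for one participant.
task = WalkthroughTask(
    "Add a medication",
    [
        StepObservation("Open the 'More' screen", True, True, True),
        StepObservation("Open 'My Medications'", False, True, True),
        StepObservation("Tap the + button", True, True, True),
    ],
)
print(task.problem_steps())  # ["Open 'My Medications'"]
```

An empty list for every task corresponds to the case described above in which the expected sequence of actions is followed and no usability concerns are flagged.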

The CW focused on the major tasks identified during the initial think-aloud and heuristic usability testing as either challenging or integral to app functionality, specifically: 1) medication management, 2) routine set-up, which informs the medication reminder system, and 3) navigating to the different sections of the app. In addition, during this phase of the study, we tested the end-users’ ability to use external devices that connected with the app, specifically an electronic pill bottle and a fitness tracker. Both devices can be linked to the app to provide reminders about taking medications and meeting the daily step goal. The study team developed a predetermined task list iteratively after coding and analyzing the initial usability testing. The list consisted of high-level tasks, that is, end goals that required completing multiple sub-tasks (Table 2). End-users were asked to complete 10 high-level tasks, which translated into 31 sub-tasks.

Table 2:

Number of steps to complete each sub-task

| High-level Task | Sub-task | Mean (SD) | Range |
|---|---|---|---|
| Log In | Find the “Enter secret key” button | 1.4 (0.7) | 1–3 |
| | Enter secret key | 1.6 (0.7) | 1–3 |
| | Enter DOB (Time 1) | 2.3 (0.95) | 1–4 |
| Set up “My Routine” | Find “my routine” section | 4 (1.83) | 2–7 |
| | Modify “My routine” | 4.4 (7.32) | 1–24 |
| | Save “My Routine” | 1 (0) | n/a |
| Set up “My Medications” | Find “My Medication” section | 2.7 (4.35) | 1–15 |
| | Enter + button to add medication | 1.6 (1.07) | 1–4 |
| | Enter Medication Name | 1.7 (1.25) | 1–4 |
| | Enter Dose of Medication | 1 (0) | n/a |
| | Enter Frequency of Medication | 1 (0) | n/a |
| | Enter time for medication to be due | 3.5 (4.5) | 1–16 |
| | Save Medication | 1.1 (0.32) | 1–2 |
| Confirm Medication was added to “To-do” list | Find “To Do List” section | 8.3 (7.23) | 3–22 |
| | Confirm Medication was added to list | 1.1 (0.33) | 1–2 |
| Manage Medication Tracking Bottle | Open medication tracking bottle | 1.7 (1.34) | 1–5 |
| | Close medication tracking bottle | 1 (0) | n/a |
| Connect Fitness Tracker | Find “Connected device” section | 2.7 (1.42) | 1–6 |
| | Select “Fitbit” to link | 1.2 (0.42) | 1–2 |
| | Select “all” to set up “Fitbit” | 1 (0) | n/a |
| | Select “allow” for Fitbit setup | 1.1 (0.32) | 1–2 |
| Log out | Log Out (First attempt) | 4.3 (1.64) | 3–7 |
| | Select Log back in | 1.3 (0.48) | 1–2 |
| Log back in | Select “Log Back In” | 1.1 (0.32) | 1–2 |
| | Enter email to log in | 1.2 (0.63) | 1–3 |
| | Enter Gmail section | 1.6 (0.84) | 1–3 |
| | Enter DOB (Time 2) | 1.3 (0.95) | 1–4 |
| | Find “Review Medication History” section | 5.75 (6.27) | 1–18 |
| | Review Medication history | 2.7 (2.26) | 1–6 |
| Confirm Fitness Tracker recorded steps | Find “Confirm steps” on home screen | 4.5 (4.74) | 1–16 |
| Log out (Final) | Log Out (Time 2) | 4 (3.46) | 1–12 |

At the start of the study visit, participants were given basic information about the capabilities of the app, as well as the list of high-level tasks. They were then asked to complete each task and to think aloud while attempting it. The research staff informed participants that they would not provide guidance unless a task could not be completed without assistance, at which point they would ask open-ended questions to help guide the participant. As in the initial usability testing, end-users rated the app’s usability using the Health-ITUES. All activities were recorded using Morae software, and the research staff took notes during each session.

2.2.3. Data Analysis:

The analysis was completed using the Morae recordings, field notes, and Health-ITUES scores. Each sub-task was coded for 1) the number of actions the participant took to achieve it and 2) whether the research staff provided any assistance. The mean number of actions for each sub-task was calculated, along with the number of users who required assistance. Of the 10 sessions conducted for the cognitive walkthrough (end-users n=10), 20% were double-coded for inter-rater reliability, and any disagreements were resolved by discussion among the team members and re-review of the Morae video recordings. The mean overall Health-ITUES score for the WiseApp was also calculated.
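The per-sub-task summaries in Table 2 (mean, SD, and range of action counts) can be reproduced with a short sketch. The step counts below are one hypothetical distribution of 10 participants that is consistent with the “Save Medication” row (1.1 (0.32), range 1–2); they are illustrative, not the raw study data, and the function name is hypothetical.

```python
from statistics import mean, stdev


def summarize_steps(step_counts: list[int]) -> tuple[float, float, int, int]:
    """Return mean, sample SD, minimum, and maximum of per-participant step counts."""
    if len(step_counts) < 2:
        raise ValueError("need at least two participants for a sample SD")
    return mean(step_counts), stdev(step_counts), min(step_counts), max(step_counts)


# One distribution consistent with the "Save Medication" row of Table 2
# (illustrative, not the raw data): nine participants took 1 step, one took 2.
save_medication = [1] * 9 + [2]
m, sd, lo, hi = summarize_steps(save_medication)
print(f"{m:.1f} ({sd:.2f}), range {lo}-{hi}")
```

Using the sample (n - 1) standard deviation reproduces the reported 0.32; a population standard deviation would give 0.30 for the same counts.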

2.2.4. Results

The number of steps to execute each sub-task for end-users is reported in Table 2.

Nineteen of the 31 sub-tasks were easy to complete, requiring fewer than 2 steps on average. The sub-tasks that took more steps on average were frequently related to finding a specific section within the app. For example, the “To-Do” list was frequently cited as being difficult to find on the home screen (mean=8.3, range 3–22 steps): “for me, it would be easier if it was under my meds that I’d entered…maybe it could be in bolder, bigger and bolder, say if you wear reading glasses” (end-user 31). Confirming that a medication had been taken, which is shown on the home screen, also took more steps on average, suggesting that this section should be made more visible: “what do I go to…open it again?...press more? It should be bolder, easier to see” (end-user 27). These findings informed additional iterative updates to the app as well as the development of an onboarding procedure for future end-users participating in the ongoing randomized controlled trial (https://clinicaltrials.gov/ct2/show/NCT03205982). The mean overall Health-ITUES score for the WiseApp was 4.21 (SD=0.70), similar to that of the end-users in the usability testing phase, further suggesting that the end-users perceived the app to be usable.
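The count of sub-tasks averaging fewer than 2 steps can be verified from Table 2. The sketch below transcribes the Mean column in table order; the grouping comments are for readability only.

```python
# Mean steps per sub-task, transcribed from Table 2 in table order.
MEAN_STEPS = [
    1.4, 1.6, 2.3,                      # Log In
    4.0, 4.4, 1.0,                      # Set up "My Routine"
    2.7, 1.6, 1.7, 1.0, 1.0, 3.5, 1.1,  # Set up "My Medications"
    8.3, 1.1,                           # Confirm medication added to "To-do" list
    1.7, 1.0,                           # Manage medication tracking bottle
    2.7, 1.2, 1.0, 1.1,                 # Connect fitness tracker
    4.3, 1.3,                           # Log out
    1.1, 1.2, 1.6, 1.3, 5.75, 2.7,      # Log back in
    4.5,                                # Confirm fitness tracker recorded steps
    4.0,                                # Log out (final)
]

easy = [m for m in MEAN_STEPS if m < 2]
print(f"{len(easy)} of {len(MEAN_STEPS)} sub-tasks averaged fewer than 2 steps")
```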

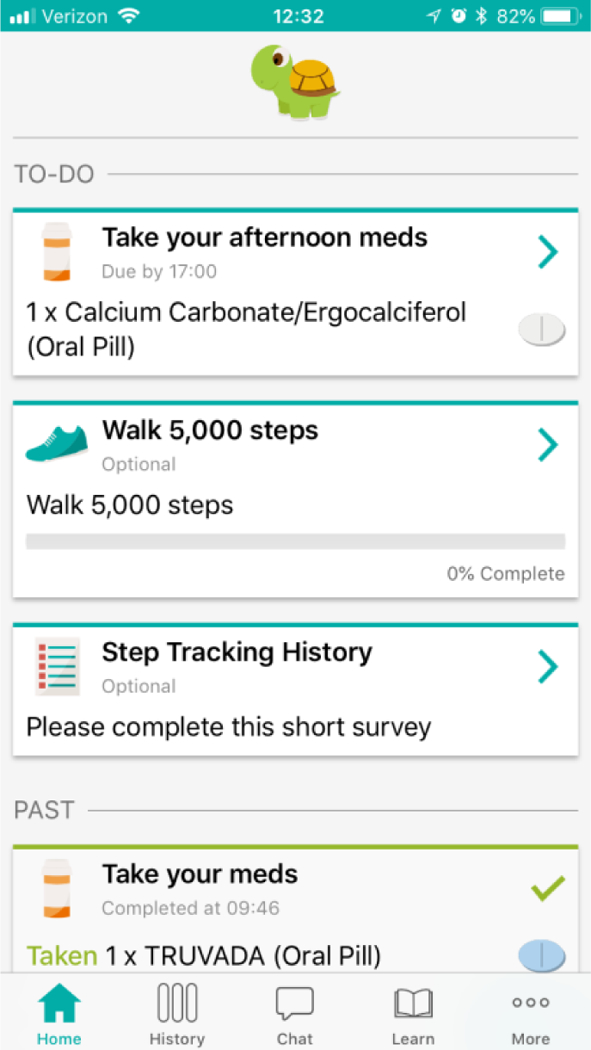

The medication tracking bottle was easy to use, requiring 1.7 steps on average to open and 1 step to close, and many end-users reported that it could be helpful: “this is pretty easy because it says push…that right there was very very complicated” (end-user 25). However, others had some concerns about the tracking device: “I don’t need some pill bottle with the flashing lights…it’s basically programmed in my head, I just do it” (end-user 27). End-users sometimes required guidance to confirm that the medication they had taken appeared on the home screen (mean steps to complete task: 5.75; range: 1–18): “oh I got it! sorry, didn’t see the ‘past’ banner” (end-user 34; Figure 4).

Figure 4:

Highlighting the “Past Banner”

End-users had more difficulty confirming the number of steps recorded by the fitness tracker than with other tasks; however, some participants were positive toward this capability once they saw how to link the tracker and track their steps: “I love it because it’s like one of these that’s going to remind me that I have to take my medication!” (end-user 33). Other participants, however, found connecting a device to the app confusing: “I think that was the most confusing one out of all of them…the pill box was great, but to get to this… (connected device)…see I wouldn’t even know what to do with that. After I connect to the device, what do I do? I think it would be easier if it explained to me what it was doing...more details. I don’t really care how many steps I take” (end-user 32). Therefore, some end-users may need further information about the purpose and capabilities of using a fitness tracker.

Taken together, the ease-of-completion results from the think-aloud protocol and the more granular task analysis from the CW provide a comprehensive picture of the usability of the app for PLWH. End-users in both groups perceived the app to be usable, as measured by their Health-ITUES scores (4.39 and 4.21, respectively).

3.0. Discussion

This study reports on a multi-step usability evaluation of a self-management app to improve medication adherence among PLWH, including a think-aloud protocol, a heuristic evaluation, and a cognitive walkthrough. Findings demonstrate that the WiseApp is usable from the end-user perspective. The initial think-aloud protocol demonstrated qualitatively that the app was perceived to be useful and quantitatively that the majority of tasks (19 of 26, 73%) were easy to complete for at least half of the end-users. Both the end-users and heuristic evaluators, however, recommended changes to the user interface, which were completed before the cognitive walkthrough. For example, both groups described the need for color and contrast changes to the text within the app, and renaming the profile dock from “Me” to “More” for clarity was recommended to the app developers. Although the experts’ Health-ITUES scores were lower than the end-users’, their negative feedback was primarily related to design issues, such as the log out button being difficult to find. Although end-users also noted this issue, it did not affect usability as much for this group of participants.

Following the think-aloud protocol with end-users and the heuristic evaluation with experts, the CW allowed the research team to conduct a more granular assessment of the usability issues that were most pronounced in the first component of the evaluation. Like the end-users in the think-aloud protocol, the end-users in the CW rated the app as usable on the Health-ITUES. The task analysis supports this assessment: the majority of sub-tasks required fewer than 2 steps on average, and ease of completion improved for tasks assessed more than once (e.g., “log out” and “enter DOB”).

Importance of Multi-Step Usability Methods:

Studies using CW methodology in healthcare are limited. For example, a prior study used a walkthrough to test a home-based telemedicine intervention for patients with diabetes, but this methodology has had limited application to date for mobile technology (28). Extending the examination of usability issues through a CW provided richer information to better understand and address the usability issues with our app.

Our application of this usability evaluation approach was also unique in the context of mHealth technology, since most mobile apps are not connected to external devices. This evaluation therefore allowed us to assess the usability of both the app and the linkage of the devices to the app. We demonstrated that most end-users were able to operate the app with these linked devices, although some may require guidance, especially when first using the app. These features may provide feasible interventions to help improve health by increasing medication adherence and physical activity.

Implications for Medication Adherence in Underserved Patients with Chronic Illnesses

Our study population is also unique in mHealth technology research, particularly in the use of cognitive walkthrough methodology, which has not traditionally been applied in healthcare settings. CW has been used to test usability for healthcare providers, but to our knowledge only one study has used it with patients as the end-users (28). Our participants were patients within a healthcare system, and most had limited formal education and low income. Of the 24 out of 30 study participants who reported their income, 58% reported an annual income of less than $20,000. Forty percent had less than a high school diploma, and 13% had graduated from college. The app was thus tested among persons of some of the lowest socioeconomic status in the US, a group representative of urban, ethnic-minority PLWH (29), and we were able to refine the app successfully to meet end-users' needs. Given these findings, further use of these evaluation methods in low socioeconomic status (SES) populations is well supported.

Importantly, many of the participants were able to overcome usability issues when carrying out tasks that were assessed at two time points. For example, “Log out” and “Enter DOB” were easier to complete the second time, suggesting that the app is learnable. Qualitative evidence further supports this; for example, one participant said, “I did it the first time to log in, basically the same thing…” (end-user 27). Learnability is an important construct of usability (30–32), defined as the capability of the software product to enable the user to learn its application (33). Although the number of steps needed to complete some tasks varied widely, qualitative review of the interviews revealed that this variability was often due to a small number of end-users who ultimately required assistance in navigating to that task.

This usability evaluation overcame previously reported technological challenges in using Morae software on mobile devices, specifically those related to viewing by the study team and subsequent data analysis (34). To do this, we used an emulator that gave the research team a real-time view of participants' movements on the smartphone screen. In addition, the recordings were easily accessible for later data analysis.

The CW evaluation provided a greater understanding of the usability violations in our app and informed changes to it. Importantly, some usability issues could not be addressed, and we used this information to guide the onboarding process for our current RCT. When participants enroll in the trial, we give them additional information about the tasks identified as having the most severe usability issues that could not be resolved during the software development process. This highlights a potential need for a user guide or “info-buttons” within mHealth apps intended for underserved populations.

4.0. Conclusion

The results of this usability evaluation informed iterative updates to the app at each step of testing and support the learnability of our app. This study demonstrated that the app and its capability to link to external devices for medication and fitness tracking are usable and generally accepted by a group of PLWH. Future evaluations of mobile applications may benefit from this multi-step usability evaluation approach, in which a CW provides more granular information on the usability issues of health information systems. This approach yielded useful information and should be considered for future use across socioeconomic groups. Finally, this study provides important insights into medication adherence mHealth apps, both for PLWH and in related fields.

Highlights:

A three-step usability evaluation using 1) a traditional think-aloud protocol with end-users, 2) a heuristic evaluation with experts in informatics, and 3) a cognitive walkthrough with end-users informed iterative updates to the mHealth app for PLWH

Design issues were identified, including location and boldness of text to highlight areas of interest within the mHealth app

The mHealth app was determined to be usable overall, and these results informed updates to the app, which is currently being tested in an RCT to improve symptom self-management and medication adherence

Acknowledgement:

This work was funded by the Agency for Healthcare Research and Quality (R01HS025071). MB is funded by the Reducing Health Disparities through Informatics (RHeaDI) training grant funded by the National Institute of Nursing Research (T32 NR007969) and a Doctoral Degree Scholarship in Cancer Nursing, DSCN-18-068-01, from the American Cancer Society. The findings and conclusions in this document are those of the authors, who are responsible for its content, and do not necessarily represent the views of AHRQ. No statement in this report should be construed as an official position of AHRQ or of the U.S. Department of Health and Human Services.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Conflict of interest statement

The authors declare that they have no conflicts of interest in the research.

References:

1. Deeks SG, Lewin SR, Havlir DV. The end of AIDS: HIV infection as a chronic disease. Lancet (London, England) 2013;382(9903):1525–33.

2. Liu H, Golin CE, Miller LG, Hays RD, Beck CK, Sanandaji S, et al. A comparison study of multiple measures of adherence to HIV protease inhibitors. Annals of internal medicine 2001;134(10):968–77.

3. Murri R. Patient-reported nonadherence to HAART is related to protease inhibitor levels. Journal of acquired immune deficiency syndromes (1999) 2000;24(2):123.

4. Golin CE, Liu H, Hays RD, Miller LG, Beck CK, Ickovics J, et al. A Prospective Study of Predictors of Adherence to Combination Antiretroviral Medication. Journal of General Internal Medicine 2002;17(10):756–65.

5. Kumar S, Nilsen WJ, Abernethy A, Atienza A, Patrick K, Pavel M, et al. Mobile health technology evaluation: the mHealth evidence workshop. American journal of preventive medicine 2013;45(2):228–36.

6. Justice AC. HIV and Aging: Time for a New Paradigm. Current HIV/AIDS Reports 2010;7(2):69–76.

7. Schnall R, Cho H, Mangone A, Pichon A, Jia H. Mobile Health Technology for Improving Symptom Management in Low Income Persons Living with HIV. AIDS and behavior 2018.

8. Mannheimer SB. The CASE adherence index: A novel method for measuring adherence to antiretroviral therapy. AIDS care 2006;18(7):853–61.

9. Schnall R, Mosley JP, Iribarren SJ, Bakken S, Carballo-Dieguez A, Brown W 3rd. Comparison of a User-Centered Design, Self-Management App to Existing mHealth Apps for Persons Living With HIV. JMIR mHealth and uHealth 2015;3(3):e91.

10. Schnall R, Rojas M, Bakken S, Brown W, Carballo-Dieguez A, Carry M, et al. A user-centered model for designing consumer mobile health (mHealth) applications (apps). J Biomed Inform 2016;60:243–51.

11. Schnall R, Bakken S, Rojas M, Travers J, Carballo-Dieguez A. mHealth Technology as a Persuasive Tool for Treatment, Care and Management of Persons Living with HIV. AIDS and behavior 2015;19 Suppl 2:81–9.

12. Schnall R, Rojas M, Travers J, Brown W 3rd, Bakken S. Use of Design Science for Informing the Development of a Mobile App for Persons Living with HIV. AMIA Annual Symposium Proceedings 2014;2014:1037–45.

13. Fogg BJ. Persuasive technology: using computers to change what we think and do. Ubiquity 2002;2002(December):2.

14. Haberer JE, Kahane J, Kigozi I, Emenyonu N, Hunt P, Martin J, et al. Real-time adherence monitoring for HIV antiretroviral therapy. AIDS and behavior 2010;14(6):1340–6.

15. Maguire M. Methods to support human-centred design. International Journal of Human-Computer Studies 2001;55(4):587–634.

16. Nilsen W, Kumar S, Shar A, Varoquiers C, Wiley T, Riley WT, et al. Advancing the Science of mHealth. Journal of Health Communication 2012;17(sup1):5–10.

17. Shackel B. Usability—context, framework, definition, design and evaluation. In: Shackel B, Richardson SJ, editors. Human factors for informatics usability. Cambridge University Press; 1991. p. 21–37.

18. Dumas JS, Redish JC. A Practical Guide to Usability Testing. Intellect Books; 1999. 416 p.

19. Ben-Zeev D, Kaiser SM, Brenner CJ, Begale M, Duffecy J, Mohr DC. Development and Usability Testing of FOCUS: A Smartphone System for Self-Management of Schizophrenia. Psychiatric rehabilitation journal 2013;36(4):289–96.

20. Hamine S, Gerth-Guyette E, Faulx D, Green BB, Ginsburg AS. Impact of mHealth chronic disease management on treatment adherence and patient outcomes: a systematic review. Journal of medical Internet research 2015;17(2):e52.

21. Brown W 3rd, Yen PY, Rojas M, Schnall R. Assessment of the Health IT Usability Evaluation Model (Health-ITUEM) for evaluating mobile health (mHealth) technology. J Biomed Inform 2013;46(6):1080–7.

22. Hevner AR. A three cycle view of design science research. Scand J Inform Syst 2007;2.

23. Faulkner L. Beyond the five-user assumption: Benefits of increased sample sizes in usability testing. Behavior Research Methods, Instruments, & Computers 2003;35(3):379–83.

24. Folstein MF, Folstein SE, McHugh PR. “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. Journal of psychiatric research 1975;12(3):189–98.

25. Schnall R, Cho H, Liu J. Health Information Technology Usability Evaluation Scale (Health-ITUES) for Usability Assessment of Mobile Health Technology: Validation Study. JMIR mHealth and uHealth 2018;6(1):e4.

26. Polson PG, Lewis C, Rieman J, Wharton C. Cognitive walkthroughs: a method for theory-based evaluation of user interfaces. International Journal of Man-Machine Studies 1992;36(5):741–73.

27. Jaspers MW. A comparison of usability methods for testing interactive health technologies: methodological aspects and empirical evidence. Int J Med Inform 2009;78(5):340–53.

28. Kaufman DR, Patel VL, Hilliman C, Morin PC, Pevzner J, Weinstock RS, et al. Usability in the real world: assessing medical information technologies in patients’ homes. J Biomed Inform 2003;36(1–2):45–60.

29. Vaughan AS, Rosenberg E, Shouse RL, Sullivan PS. Connecting Race and Place: A County-Level Analysis of White, Black, and Hispanic HIV Prevalence, Poverty, and Level of Urbanization. American Journal of Public Health 2014;104(7):e77–e84.

30. Nielsen J. Usability engineering. Elsevier; 1994.

31. Yen PY, Wantland D, Bakken S. Development of a Customizable Health IT Usability Evaluation Scale. AMIA Annual Symposium proceedings AMIA Symposium 2010;2010:917–21.

32. Bevan N. International standards for HCI and usability. International Journal of Human-Computer Studies 2001;55(4):533–52.

33. ISO/IEC 9126. Software engineering: product quality. 2001.

34. Sheehan B, Lee Y, Rodriguez M, Tiase V, Schnall R. A comparison of usability factors of four mobile devices for accessing healthcare information by adolescents. Applied clinical informatics 2012;3(4):356–66.