Abstract

In the past two decades, functional Magnetic Resonance Imaging (fMRI) has been used to relate neuronal network activity to cognitive processing and behavior. Recently this approach has been augmented by algorithms that allow us to infer causal links between component populations of neuronal networks. Multiple inference procedures have been proposed to approach this research question but so far, each method has limitations when it comes to establishing whole-brain connectivity patterns. In this paper, we discuss eight ways to infer causality in fMRI research: Bayesian Nets, Dynamical Causal Modelling, Granger Causality, Likelihood Ratios, Linear Non-Gaussian Acyclic Models, Patel’s Tau, Structural Equation Modelling, and Transfer Entropy. We finish with formulating some recommendations for the future directions in this area.

Keywords: Causal inference, Effective connectivity, Functional Magnetic Resonance Imaging, Dynamic Causal Modeling, Granger Causality, Structural Equation Modeling, Bayesian Nets, Directed Acyclic Graphs, Pairwise inference, Large-scale brain networks

INTRODUCTION

What is causality?

Although inferring causal relations is a fundamental aspect of scientific research, the notion of causation itself is notoriously difficult to define. The basic idea is straightforward: When process A is the cause of process B, A is necessarily in the past from B, and without A, B would not occur. But in practice, and in dynamic systems such as the brain in particular, the picture is far less clear. First, for any event a large number of (potential) causes can be identified. The efficacy of certain neuronal process in producing behavior is dependent on the state of many other (neuronal) processes, but also on the availability of glucose and oxygen in the brain, and so forth. In a neuroscientific context, we are generally not interested in most of these causes, but only in a cause that stands out in such a way that it is deemed to provide a substantial part of the explanation, for instance causes that vary with the experimental conditions. However, the contrast between relevant and irrelevant causes (in terms of explanatory power) is arbitrary and strongly dependent on experimental setup, contextual factors, and so forth. For instance, respiratory movement is typically considered a confound in fMRI experiments, unless the research question concerns the influence of respiration speed on the dynamics of the neuronal networks.

In dynamic systems, causal processes are unlikely to be part of a unidirectional chain of events, but rather a causal web, with often mutual influences between process A and B (Mannino & Bressler, 2015). As a result, it is hard to maintain the temporal ordering of cause and effect and, indeed, a clear separation between them (Schurger & Uithol, 2015).

Furthermore, causation can never be observed directly, just correlation (Hume, 1772). When a correlation is highly stable, we are inclined to infer a causal link. Additional information is then needed to assess the direction of the assumed causal link, as correlation indicates for association and not for causation (Altman & Krzywiński, 2015). For example, the motor cortex is always active when a movement is made, so we assume a causal link between the two phenomena. The anatomical and physiological properties of the motor cortex, and the timing of the two phenomena provide clues about the direction of causality (i.e., cortical activity causes the movement, and not the other way around). However, only intervention studies, such as delivering Transcranial Magnetic Stimulation (Kim, Pesiridou, & O’Reardon, 2009), pulses over the motor cortex or lesion studies, can confirm the causal link between the activity in the motor cortex and behavior.

Causal studies in fMRI are based on three types of correlations: correlating neuronal activity to (1) mental and behavioral phenomena, (2) to physiological states (such as neurotransmitters, hormones, etc.), and (3) to neuronal activity in other parts of the brain. In this review, we will focus on the last field of research: establishing causal connections between activity in two or more brain areas.

A Note on the Limitations of fMRI Data

fMRI studies currently use a variety of algorithms to infer causal links (Fornito, Zalesky, & Breakspear, 2013; S. Smith et al., 2011). All these methods have different assumptions, advantages and disadvantages (see, e.g., Stephan & Roebroeck, 2012; Valdes-Sosa, Roebroeck, Daunizeau, & Friston, 2011), and approach the problem from different angles. An important reason for this variety of approaches is the complex nature of fMRI data, which imposes severe restrictions on the possibility of finding causal relations using fMRI.

-

•

Temporal resolution and hemodynamics. First, and best known, the temporal resolution of the image acquisition in MR imaging is generally restricted to a sampling rate <1[Hz]. Recently, multiband fMRI protocols have gained in popularity (Feinberg & Setsompop, 2013), which increases the upper limit for the scanning frequency to up to 10[Hz], albeit at the cost of a severely decreased signal-to-noise ratio. However, no imaging protocol (including multiband imaging) can overcome the limitation of the recorded signal itself: the lagged change in blood oxygenation, which peaks 3 to 6[s] after neuronal firing in the adult human brain (Arichi et al., 2012). The hemodynamic response thus acts as a low-pass filter, which results in high correlations between activity in consecutive frames (J. D. Ramsey et al., 2010). Since the hemodynamic lags (understood as the peaks of the hemodynamic response) are region- and subject-specific (Devonshire et al., 2012) and vary over time (Glomb, Ponce-Alvarez, Gilson, Ritter, & Deco, 2017), it is difficult to infer causality between two time series with potentially different hemodynamic lags (Bielczyk, Llera, Buitelaar, Glennon, & Beckmann, 2017). Computational work by Seth, Chorley, and Barnett (2013) suggests that upsampling the signal to low repetition times (TRs) (<0.1[s]) might potentially overcome this issue. Furthermore, hemodynamics typically fluctuates in time. These slow fluctuations, similarly to other low frequency artifacts such as heartbeat or body movements, should be removed from the datasets through high-pass filtering before the inference procedure (J. D. Ramsey, Sanchez-Romero, & Glymour, 2014).

-

•

Signal-to-noise ratio. Second, fMRI data is characterized by a relatively low signal-to-noise ratio. In gray matter, the recorded hemodynamic response changes by 1 to 2% at field strengths of 1.5–2.0[T] (Boxerman et al., 1995; Ogawa et al., 1993), and by 5 to 6% at field strengths of 4.0[T]. Moreover, typical fMRI protocols generate relatively short time series. For example, the Human Connectome Project resting state datasets (Essen et al., 2013) do not contain more than a few hundred to maximally few thousand samples. The two most popular ways of improving on the signal-to-noise ratio in fMRI datasets are averaging signals over multiple voxels (K. J. Friston, Ashburner, Kiebel, Nichols, & Penny, 2007) and spatial smoothing (Triantafyllou, Hoge, & Wald, 2006).

-

•

Caveats associated with region definition. Third, in order to propose a causal model, one first needs to define the nodes of the network. A single voxel does not represent a biologically meaningful part of the brain (Stanley et al., 2013). Therefore, before attempting to establish causal connection in the network, one needs to integrate the BOLD time series over regions of interest (ROIs): groups of voxels that are assumed to share a common signal with a neuroscientific meaning. Choosing the optimal ROIs for a study is a complex problem (Fornito et al., 2013; Kelly et al., 2012; Marrelec & Fransson, 2011; Poldrack, 2007; Thirion, Varoquaux, Dohmatob, & Poline, 2014). In task-based fMRI, ROIs are often chosen on the basis of activation patterns revealed by the standard General Linear Model analysis (K. J. Friston et al., 2007).

On the other hand, in research on resting-state brain activity, the analysis is usually exploratory and the connectivity in larger, meso- and macroscale networks is typically considered. In that case, a few strategies for ROI definition are possible. First, one can define ROIs on the basis of brain anatomy. However, a consequence of this strategy could be that BOLD activity related to the cognitive process of interest will be mixed with other, unrelated activity within the ROIs. This is particularly likely to happen given that brain structure is not exactly replicable across individuals, so that a specific area cannot be defined reliably based on location alone. As indicated in the computational study by S. Smith et al. (2011), and also in a recent study by Bielczyk et al. (2017), such signal mixing is detrimental to causal inference and causes all the existing methods for causal inference in fMRI to underperform. What these studies demonstrate is that parcellating into ROIs based on anatomy rather than common activity, can induce additional scale-free background noise in the networks. Since this noise has high power in low frequencies, the modeled BOLD response cannot effectively filter it out. As a consequence, the signatures of different connectivity patterns are getting lost.

As an alternative to anatomical parcellation, choosing ROIs can be performed in a functional, data-driven fashion. There are multiple techniques developed to reach this goal, and to list some recent examples: Instantaneous Correlations Parcellation implemented through a hierarchical Independent Component Analysis (ICP; van Oort et al., 2017), probabilistic parcellation based on Chinese restaurant process (Janssen, Jylänki, Kessels, & van Gerven, 2015), graph clustering based on intervoxel correlations (van den Heuvel, Mandl, & Pol, 2008), large-scale network identification through comparison between correlations among ROIs versus a model of the correlations generated by the noise (LSNI; Bellec et al., 2006), multi-level bootstrap analysis (Bellec, Rosa-Neto, Lyttelton, Benali, & Evans, 2010), clustering of voxels revealing common causal patterns in terms of Granger Causality (DSouza, Abidin, Leistritz, & Wismüller, 2017), spatially constrained hierarchical clustering (Blumensath et al., 2013) and algorithms providing a trade-off between machine learning techniques and knowledge coming from neuroanatomy (Glasser et al., 2016). Another possibility to reduce the effect of mixing signals is to perform Principal Component Analysis (PCA; Jolliffe, 2002; Shlens, 2014), separate the BOLD time series within each anatomical region into a sum of orthogonal signals (eigenvariates) and choose only the signal with the highest contribution to the BOLD signal (the first eigenvariate; K. J. Friston, Harrison, & Penny, 2003), instead of averaging activity over full anatomical regions. Finally, one can build ROIs on the basis of patterns of activation only (task localizers; Fedorenko, Hsieh, Nieto-Castañón, Whitfield-Gabrieli, & Kanwisher, 2010; Heinzle, Wenzel, & Haynes, 2012). However, this approach cannot be applied to resting-state research. In this work, we assume that the definition of ROIs has been performed by the researcher prior to the causal inference, and we do not discuss it any further.

Criteria for Evaluating Methods for Causal Inference in Functional Magnetic Resonance Imaging

Given the aforementioned characteristics of fMRI data (low temporal resolution, slow hemodynamics, low signal-to-noise ratio) and the fact that causal webs in the brain are likely dense and dynamic, is it in principle possible to investigate causality in the brain by using fMRI? Multiple distinct families of models have been developed in order to approach this problem over the past two decades. One can look at the methods from different angles and classify them into different categories.

One important distinction proposed by K. Friston, Moran, and Seth (2013), includes division of methods with respect to the depth of the neuroimaging measurements at which a method is defined. Most methods (such as the original formulation of Structural Equation Modeling for fMRI (Mclntosh & Gonzalez-Lima, 1994) see section Structural Equation Modeling) operate on the experimental observables, that is, the measured BOLD responses. These methods are referred to as directed functional connectivity measures. On the contrary, other methods (e.g., Dynamic Causal Modeling) consider the underlying neuronal processes. These methods are referred to as effective connectivity measures. Mind that while some methods such as Dynamic Causal Modeling are hardwired to assess effective connectivity (as they are built upon a generative model), other methods can be used both as a method to assess directed functional connectivity or effective connectivity. For example, in Granger Causality research, a blind deconvolution is often used in order to deconvolve the observed BOLD responses into an underlying neuronal time series (David et al., 2008; Goodyear et al., 2016; Hutcheson et al., 2015; Ryali et al., 2016; Ryali, Supekar, Chen, & Menon, 2011; Sathian, Deshpande, & Stilla, 2013; Wheelock et al., 2014), which allows for assessing effective connectivity. On the contrary, when Granger Causality is used without deconvolution (Y. C. Chen et al., 2017; Regner et al., 2016; Zhao et al., 2016), it is a directed functional connectivity method. Of course, both scenarios have pros and cons, as blind deconvolution can be a very noisy operation (Bush et al., 2015), and for more details, please see K. Friston, Moran, and Seth (2013).

Another important distinction was proposed by Valdes-Sosa et al. (2011). According to this point of view, methods can be divided on the basis of the approach toward temporal sequence of the samples: some of the methods are based on the temporal sequence of the signals (e.g., Transfer Entropy (Schreiber, 2000), see section Transfer Entropy, or Granger Causality, (Granger, 1969), see section Granger Causality), or rely on the dynamics expressed by state-space equations (so-called state-space models, e.g., Dynamic Causal Modeling), while other methods do not draw information from the sequence in time, and solely focus on the statistical properties of the time series (so-called structural models, e.g., Bayesian Nets (Frey & Jojic, 2005, see section Bayesian Nets).

In this work, we would like to propose another classification of methods for causal inference in fMRI. First, we identify nine characteristics of models used to study causality. Then, we compare and contrast the popular approaches to the causal research in fMRI according to these criteria. Our list of features of causality is as follows:

-

1.

Sign of connections: Can the method distinguish between excitatory and inhibitory causal relations? In this context, we do not mean synaptic effects, but rather an overall driving or attenuating impact of the activity in one brain region on the activity in another region. Certain methods only detect the existence of causal influence from the BOLD responses, whereas others can distinguish between these distinct forms of influence.

-

2.

Strength of connections: Can the method distinguish between weak and strong connections, apart from indicating the directionality of connections at a certain confidence level?

-

3.

Confidence intervals: How are the confidence intervals for the connections determined?

-

4.

Bidirectionality: Can the method pick up bidirectional connections X ⇌ Y or only indicate the strongest of the two connections X → Y and Y → X? Some methods do not allow for bidirectional relations, since they cannot deal with cycles in the network.

-

5.

Immediacy: Does the method specifically identify direct influences X → Y, or does it pool across direct and indirect influences Zi: X → Zi → Y? We assume that Zi represent nodes in the network, and the activity in these nodes is measured (otherwise Zi become a latent confounder). While some methods aim to make this distinction, others highlight any influence X → Y, whenever it is direct or not.

-

6.

Resilience to confounds: Does the method correct for possible spurious causal effects from a common source (Z → X, Z → Y, so we infer X → Y and/or Y → X), or other confounders? In general, confounding variables are an issue to all the methods for causal inference, especially when a given study is noninterventional (Rohrer, 2017); however, different methods can suffer from these issues to a different extent.

-

7.

Type of inference: Does the method probe causality through classical hypothesis testing or through model comparison? Hypothesis-based methods will test a null hypothesis H0 that there is no causal link between two variables, against a hypothesis H1 that there is causal link between the two. In contrast, model comparison based methods do not have an explicit null hypothesis. Instead, evidence for a predefined set of models is computed. In particular cases, when the investigated network contains only a few nodes and the estimation procedure is computationally cheap, a search through all the connectivity patterns by means of model comparison is possible. In all the other cases, prior knowledge is necessary to select a subset of possible models for model comparison.

-

8.

Computational cost: What is the computational complexity of the inference procedure? In the case of model comparison, the computational cost refers to the cost of finding the likelihood of a single model, as the range of possible models depends on the research question. This can lead to practical limitations based on computing power.

-

9.

Size of the network: What sizes of network does the method allow for? Some methods are restricted in the number of nodes that it allows, for computational or interpretational reasons.

In certain applications, an additional criterion of empirical accuracy in realistic simulation could be of help to evaluate the method. Testing the method on synthetic, ground truth datasets available for the research problem at hand can give a good picture on whether or not the method gives reliable results when applied to experimental datasets. In fMRI research, multiple methods for causal inference were directly compared with each other in a seminal simulation study by Smith et al. In this study, the authors employed a Dynamic Causal Modeling generative model (DCM; K. J. Friston et al., 2003), introduced in section Dynamic Causal Modeling in order to create synthetic datasets with a known ground truth. Surprisingly, most of the methods struggled to perform above chance level, even though the test networks were sparse and the noise levels introduced to the model were low compared with what one would expect in real recordings. In this manuscript, we will refer to this study throughout the text. However, we will not list empirical accuracy as a separate criterion, for two reasons. First, some of the methods reviewed here, for example, Structural Equation Modeling (SEM; Mclntosh & Gonzalez-Lima, 1994), were not tested on the synthetic benchmark datasets. Second, the most popular method in the field, DCM (K. J. Friston et al., 2003), builds on the same generative model that is used for comparing methods to each other in Smith’s study. Therefore, it is hard to perform a fair comparison between DCM and other methods in the field by using this generative model.

In the following chapters, the references to this “causality list” will be marked in the text with subscripted indices that refer to 1–9 above.

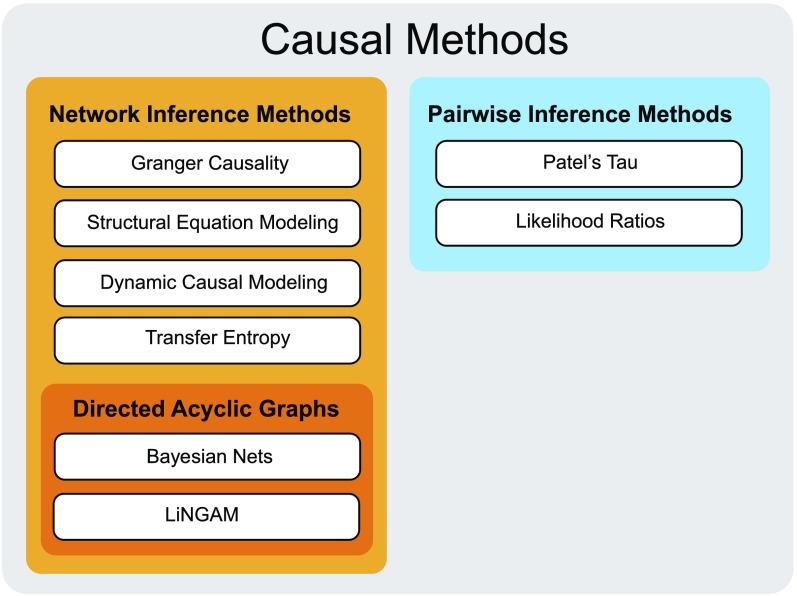

With respect to assumptions made on the connectivity structure, the approaches discussed here can be divided into three main groups (Figure 1). The first group comprises multivariate methods that search for directed graphs without imposing any particular structure onto the graph: GC (Seth, Barrett, & Barnett, 2015), Transfer Entropy (TE; Marrelec et al., 2006), SEM (Mclntosh & Gonzalez-Lima, 1994) and DCM (K. J. Friston et al., 2003). These methods will be referred to as network-wise models throughout the manuscript. The second group of methods is also multivariate, but requires an additional assumption of acyclicity. Models in this group assume that information travels through the brain by feed-forward projections only. As a result, the network can always be represented by a Directed Acyclic Graph (DAG; Thulasiraman & Swamy, 1992). Methods in this group include Linear Non-Gaussian Acyclic Models (LiNGAM; Shimizu, Hoyer, Hyvärinen, & Kerminen, 2006) and Bayesian Nets (BNs; Mumford & Ramsey, 2014), and will be referred to as hierarchical network-wise models throughout the manuscript. The last group of methods, referred to as pairwise methods, use a two-stage procedure: first, a map of nondirectional functional connections is rendered; and second, the directionality in each connection is assessed. Since these methods focus on pairwise connections rather than complete network architectures, they by definition do not impose network assumptions like acyclicity. Patel’s tau (PT; Patel, Bowman, & Rilling, 2006) and Pairwise Likelihood Ratios (PW-LR; Hyvärinen & Smith, 2013) are members of this group. In this review, we do not include studying a coupling between brain region and the rest of the brain with relation to a particular cognitive task, The Psycho-Physiological Interactions (PPIs; K. J. Friston et al., 1997), as we are only focused on the methods for assessing causal links within brain networks, and we do not include brain-behavior causal interactions.

Figure 1. .

Causal research in fMRI. The discussed methods can be divided into two families: Network Inference Methods, which are based on a one-step multivariate procedure, and Pairwise Inference Methods, which are based on a two-step pairwise inference procedures. As pairwise methods by definition establish causal connections on a connection-by-connection basis, they do not require any assumptions on the structure of the network, but also do not reveal the structure of the network.

NETWORK-WISE METHODS

The first group of models that we discuss in this review involves multivariate methods: methods that simultaneously assess all causal links in the network—specifically, GC (Granger, 1969), TE (Schreiber, 2000), SEM (Wright, 1920) and DCM (K. J. Friston et al., 2003). These methods do not pose any constraints on the connectivity structure. GC, TE, and SEM infer causal structures through classical hypothesis testing. As there are no limits to the size of the analyzed network, these methods allow for (relatively) hypothesis-free discovery. DCM on the other hand, compares a number of predefined causal structures in networks of only a few nodes. As such, it requires a specific hypothesis based on prior knowledge.

Granger Causality

Clive Granger introduced Granger Causality (GC) in the field of economics (Granger, 1969). GC has found its way into many other disciplines, including fMRI research (Bressler & Seth, 2011; Roebroeck, Seth, & Valdes-Sosa, 2011; Seth et al., 2015; Solo, 2016). GC is based on prediction (Diebold, 2001): the signal in a certain region is dependent on its past values. Therefore, a time series Y(t) at time point t can be partly predicted by its past values Y(t − i). A signal in an upstream region is followed by the same signal in a downstream region with a certain temporal lag. Therefore, if prediction of Y(t) improves when past values of another signal X(t − i) are taken into account, X is said to Granger-cause Y. Time series X(t) and Y (t) can be multivariate, therefore they will be further referred to as (t), (t).

Y(t) is represented as an autoregressive process: it is predicted by a linear combination of its past states and a Gaussian noise (there is also an equivalent of GC in the frequency domain, spectral GC [Geweke, 1982, 1984], but this method will not be covered in this review). This model is compared with model including the past values of X(t):

| (1) |

| (2) |

where σ(t) denotes noise (or rather, the portion of the signal not explained by the model). Theoretically, this autoregressive (AR) model can take any order N (which can be optimized using, e.g., Bayesian Information Criterion; Schwarz, 1978), but in fMRI research it is usually set to N = 1 (Seth et al., 2015), that is, a lag that is equal to the TR.

By fitting the parameters of the AR model, which include the influence magnitudes Byi, Bxi, the sign1 as well as the strength2 of the causal direction can be readily assessed with GC. The significance of the results is evaluated by comparing variance of the noise obtained from models characterized by Equation 1 and Equation 2. This can be achieved either by means of F tests or by permutation testing3. Like all the methods in this chapter, GC does not impose any constraints on the network architecture and therefore can yield bidirectional connections4. As a multivariate method, GC fits the whole connectivity structure at once. Therefore, ideally, it indicates the direct causal connections only5, whereas the indirect connections should be captured only through higher order paths in the graph revealed in the GC analysis. However, this is not enforced directly by the method. Furthermore, in the original formulation of the problem by Granger, GC between X and Y works based on the assumption that the input of all the other variables in the environment potentially influencing X and Y has been removed (Granger, 1969). In theory, this would provide resilience to confounds6. However, in reality this assumption is most often not valid in fMRI (Grosse-Wentrup, 2014). In a result, direct and indirect causality between X and Y are in fact pooled. In terms of the inference type, one can look at GC in two ways. On the one hand, GC is a model comparison technique, since the inference procedure is, in principle, based on a comparison between two models expressed by Equations 1 and 2. On the other hand, the difference between GC and other model comparison techniques lies in the fact that GC does not optimize any cost function, but uses F tests or permutation testing instead, and it can therefore also be interpreted as a method for classic hypothesis testing7. Since the temporal resolution of fMRI is so low, typically first order AR models with a time lag equal to 1 TR are used for the inference in fMRI. Therefore, there is no need to optimize either the temporal lag or the model order, and as such the computational cost of GC estimation procedure in fMRI is low8. One constraint though, is that the AR model imposes a mathematical restriction on the size of the network: the number of regions divided by the number of shifts can never exceed the number of time points (degrees of freedom).

GC is used in fMRI research in two forms: as mentioned in section Criteria for Evaluating Methods for Causal Inference in Functional Magnetic Resonance Imaging, GC can be either applied to the observed BOLD responses (Y. C. Chen et al., 2017; Regner et al., 2016; Zhao et al., 2016), or to the BOLD responses deconvolved into neuronal time series (David et al., 2008; Goodyear et al., 2016; Hutcheson et al., 2015; Ryali et al., 2016, 2011; Sathian et al., 2013; Wheelock et al., 2014). The purpose of deconvolution is to model fMRI data more faithfully. However, estimating the hemodynamic response from the data—a necessity to perform this deconvolution—adds uncertainty to the results.

The applicability of GC to fMRI data has been heavily debated (Stokes & Purdon, 2017). Firstly, the application of GC requires certain additional assumptions such as signal stationarity (stationarity means that the joint probability distribution in the signal does not change over time. This also implies that mean, variance and other moments of the distribution of the samples in the signal do not change over time), which does not always hold in fMRI data. Theoretical work by Seth et al. (2013), and work by Roebroeck, Formisano, and Goebel (2005), suggest that despite the limitations related to slow hemodynamics, GC is still informative about the directionality of causal links in the brain (Seth et al., 2015). In the study by S. Smith et al. (2011), several versions of GC implementation were tested. However, all versions of GC were characterized by a low sensitivity to false positives and low overall accuracy in the directionality estimation. The face validity of GC analysis was empirically validated using joint fMRI and magnetoencephalography recordings (Mill, Bagic, Bostan, Schneider, & Cole, 2017), with the causal links inferred with GC matching the ground truth confirmed by MEG. On the other hand, experimental findings report that GC predominantly identifies major arteries and veins as causal hubs (Webb, Ferguson, Nielsen, & Anderson, 2013). This result can be associated with a regular pulsating behavior with different phases in the arteries across the brain. This is a well-known effect and is even explicitly targeted with physiological noise estimates such as RETROICOR (Glover, Li, & Ress, 2000).

Another point of concern is the time lag in fMRI data, which restricts the possible scope of AR models that can be fit in the GC procedure. Successful implementations of GC in EEG/MEG research typically involve lags of less than 100 ms (Hesse, Möller, Arnold, & Schack, 2003). In contrast, for fMRI the minimal lag is one full TR, which is typically between 0.7[s] and 3.0[s] (although new acceleration protocols allow for further reduction of TR). What is more, the hemodynamic response function (HRF) may well vary across regions (David et al., 2008; Handwerker, Ollinger, & D’Esposito, 2004), revealing spurious causal connections: when the HRF in one region is faster than in another, the temporal precedence of the peak will easily be mistaken for causation. The estimated directionality can in the worst case, even be reversed, when the region with the slower HRF in fact causes the region with the faster HRF (Bielczyk, et al., 2017). Furthermore, the BOLD signal might be noninvertible into the neuronal time series (Seth et al., 2015), which can affect GC analysis regardless of whether it is performed on the BOLD time series or the deconvolved signal.

Transfer Entropy

Transfer Entropy (TE; Schreiber, 2000) is another data-driven technique, equivalent to Granger Causality under Gaussian assumptions (Barnett, Barrett, & Seth, 2009), and asymptotically equivalent to GC for general Markovian (nonlinear, non-Gaussian) systems (Barnett & Bossomaier, 2012). In other words, TE is a nonparametric form of GC (or, GC is a parametric form of TE). It was originally defined for pairwise analysis and later extended to multivariate analysis (J. Lizier, Prokopenko, & Zomaya, 2008; Montalto, Faes, & Marinazzo, 2014). TE is based on the concept of Shannon entropy (Shannon, 1948). Shannon entropy H(x) quantifies the information contained in a signal of unknown spectral properties as the amount of uncertainty, or unpredictability. For example, a binary signal that only gets values of 0 with a probability p, and values of 1 with a probability 1 − p, is most unpredictable when p = 0.5. This is because there is always exactly a 50% chance of correctly predicting the next sample. Therefore, being informed about the next sample in a binary signal of p = 0.5 reduces the amount of uncertainty to a higher extent than being informed about the next sample in a binary signal of, say, p = 0.75. This can be interpreted as a larger amount of information contained in the first signal as compared with the latter. The formula which quantifies the information content according to this rule reads as follows:

| (3) |

where xi denotes the possible values in the signal (for the binarized signal, there are only two possible values: 0 and 1).

TE builds up on the concept of Shannon entropy by extension to conditional Shannon entropy: it describes the amount of uncertainty reduced in future values of Y by knowing the past values of X along with the past values of Y:

| (4) |

where τ denotes the time lag.

In theory, TE requires no assumptions about the properties of the data, not even signal stationarity. However, in most real-world applications, stationarity is required to almost the same extent as in GC. Certain solutions for TE in nonstationary processes are also available (Wollstadt, Martinez-Zarzuela, Vicente, Diaz-Pernas, & Wibral, 2014). TE does need an a priori definition of the causal process, and it may work for both linear and nonlinear interactions between the nodes.

TE can distinguish the signum of connections1, as the drop in the Shannon entropy can be both positive and negative. Furthermore, the absolute value of the drop in the Shannon entropy can provide a measure of the connection strength2. TE can also distinguish bidirectional connections, as in this case, both TEX → Y and TEY → X will be nonzero4. In TE, significance testing by means of permutation testing is advised (Vicente, Wibral, Lindner, & Pipa, 2011)3. Immediacy and resilience to confounds in TE is the same as in GC: multivariate TE represents direct interactions, and becomes resilient to confounds only when defined for an isolated system. The inference in TE is performed through classical hypothesis testing7 and is highly cost-efficient8. As in GC, the maximum number of regions in the network divided by the number of shifts can never exceed the number of time points (degrees of freedom)9.

TE is a straightforward and computationally cheap method (Vicente et al., 2011). However, TE did not perform well when applied to synthetic fMRI benchmark datasets (S. Smith et al., 2011). One reason for this could be the time lag embedded in the inference procedure, which poses an obstacle to TE in fMRI research for the same reasons as to GC: it requires at least one full TR. TE is nevertheless gaining interest in the field of fMRI (Chai, Walther, Beck, & Fei-Fei, 2009; J. T. Lizier, Heinzle, Horstmann, Haynes, & Prokopenko, 2011; Montalto et al., 2014; Ostwald & Bagshaw, 2011; Sharaev, Ushakov, & Velichkovsky, 2016).

Structural Equation Modeling

Structural Equation Modeling (SEM; Mclntosh & Gonzalez-Lima, 1994) is a simplified version of GC and can be considered a predecessor to DCM (K. J. Friston et al., 2003). This method was originally applied to a few disciplines: economics, psychology and genetics (Wright, 1920), and was only recently adapted for fMRI research (Mclntosh & Gonzalez-Lima, 1994). SEM is used to study effective connectivity in cognitive paradigms, for example, on motor coordination (Kiyama, Kunimi, Iidaka, & Nakai, 2014; Zhuang, LaConte, Peltier, Zhang, & Hu, 2005), as well as in search for biomarkers of psychiatric disorders (Carballedo et al., 2011; R. Schlösser et al., 2003). It was also used for investigating heritability of large-scale resting-state connectivity patterns (Carballedo et al., 2011).

The idea behind SEM is to express every ROI time series in a network by a linear combination of all the time series (with the addition of noise), which implies no time lag in the communication. These signals are combined in a mixing matrix B:

| (5) |

where denotes the noise, and the assumption is that each univariate component Xi(t) is a mixture of the remaining components Xj(t), j ≠ i. This is a simple multivariate regression equation. The most common strategy for fitting this model is a search for the regression coefficients that correspond to the maximum likelihood (ML) solution: a set of model parameters B that give the highest probability of the observed data (Anderson & Gerbing, 1988; Mclntosh & Gonzalez-Lima, 1994). Assuming that variables Xi are normally distributed, the ML function can be computed and optimized. This function is dependent on the observed covariance between variables, as well as a concept of a so-called implied covariance; for the details, see Bollen (1989), and for a practical example of SEM inference, see Ferron and Hess (2007). Furthermore, under the assumption of normality of the noise, there is a closed-form solution to this problem which gives the ML solution for parameters B, known as Ordinary Least Squares (OLS) approximation (Bentler, 1985; Hayashi, 2000).

In SEM applications to fMRI datasets, it is a common practice to establish the presence of connections with use of anatomical information derived, for example, from Diffusion Tensor Imaging (Protzner & McIntosh, 2006). In that case, SEM inference focuses on estimating the strength of causal effects and not on identifying the causal structure.

SEM does not constrain the weight of connections, therefore it can retrieve both excitatory and inhibitory connections1 as well as bidirectional connections4. The connection coefficients Bij can take any values of rational numbers and as such they can reflect the strength of the connections2. Since OLS gives a point estimate for β, it does not provide a measure of confidence that would determine whether the obtained β is significantly different from zero. This issue can be overcome in multiple ways. First, one can perform parametric tests, for example, a t test. Second, one can obtain confidence intervals through nonparametric permutation testing (generate a null distribution of B values by the repeated shuffling of node labels across subjects and creating surrogate subjects). Third, one can perform causal inference through model comparison: various models are fitted one by one, and the variance of the residual noise resulting from different model fits is compared, using either an F test, or a goodness of fit (Zhuang et al., 2005). Highly optimized software packages such as LiSREL (Joreskög & Thillo, 1972) allow for an exploratory analysis with SEM by comparing millions of models against each other (James et al., 2009). Last, one can fit the B matrix with new methods including regularization that enforces sparsity of the solution (Jacobucci, Grimm, & McArdle, 2016), and therefore eliminates weak and noise-induced connections from the connectivity matrix3. As with GC, SEM was designed to reflect direct connections5: if regions Xi and Xj are connected only through a polysynaptic causal web, Bij should come out as zero, and the polysynaptic connection should be retrievable from the path analysis. Again, similar to GC, SEM is resilient to confounds only under the assumption that the model represents an isolated system, and all the relevant variables present in the environment are taken into account6. Moreover, in order to obtain the ML solution for B parameters, one needs to make a range of assumptions on the properties of the noise in the network. Typically, a Gaussian white noise is assumed, although background noise in the brain is most probably scale-free (He, 2014). Inference can be performed either through the classical hypothesis testing (as the computationally cheap version) or through model comparison (as the computationally heavier version)7,8.

In summary, SEM is a straightforward approach: it simplifies the causal inference by reducing the complex network with a low-pass filter at the output to a very simple linear system, but this simplicity comes at the cost of a number of assumptions. In the first decade of fMRI research, SEM was often a method of choice (R. G. M. Schlösser et al., 2008; Zhuang, Peltier, He, LaConte, & Hu, 2008) however recently, using DCM has become more popular in the field. One recently published approach in this domain, by Schwab et al. (2018), extends linear models by introducing time-varying connectivity coefficients, which allows for tracking the dynamics of causal interactions over time. In this approach, linear regression is applied to each node in the network separately (in order to find causal influence of all the remaining nodes in the network on that node). The whole graph is then composed from node-specific DAGs node by node, and that compound graph can be cyclic.

Dynamic Causal Modeling

All the aforementioned network-wise methods were developed in other disciplines, and only later applied to fMRI data. Yet, using prior knowledge about the properties of fMRI datasets can prove useful when searching for causal interactions. Dynamic Causal Modeling (DCM; K. J. Friston et al., 2003) is a model comparison tool that uses state space equations reflecting the structure of fMRI datasets. This technique was also implemented for other neural recording methods: EEG and MEG (Kiebel, Garrido, Moran, & Friston, 2008). DCM is well received within the neuroimaging community (the original article by K. J. Friston et al. gained over 3,300 citations at the time of publishing this manuscript).

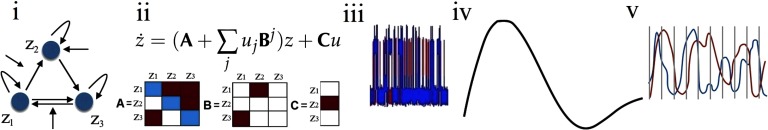

In this work, we describe the original work by (K. Friston, Moran, & Seth, 2013) because, despite multiple recent developments (Daunizeau, Stephan, & Friston, 2012; Frässle, Lomakina, Razi, Friston, Buhmann, & Stephan, 2017; Frässle, Lomakina-Rumyantseva, Razi, Buhmann, & Friston, 2016; K. J. Friston, Kahan, Biswal, & Razi, 2011; Havlicek et al., 2015; Kiebel, Kloppel, Weiskopf, & Friston, 2007; Li et al., 2011; Marreiros, Kiebel, & Friston, 2008; Prando, Zorzi, Bertoldo, & Chiuso, 2017; Razi & Friston, 2016; Seghier & Friston, 2013; Stephan et al., 2008; Stephan, Weiskopf, Drysdale, Robinson, & Friston, 2007), it remains the most popular version of DCM in the fMRI community. The idea of DCM is as follows. First, one needs to build a generative model (Figure 2). This model has two levels of description: the neuronal level (Figure 2, iii), and the hemodynamic level (Figure 2, v). Both of these levels contain parameters that are not directly recorded in the experiment and need to be inferred from the data. This model reflects scientific evidence on how the BOLD response is generated from neuronal activity.

Figure 2. .

The full pipeline for the DCM forward model. The model involves three node network stimulated during the cognitive experiment (i). The parameter set describing the dynamics in this network includes a fixed connectivity matrix (A), modulatory connections (B), and inputs to the nodes (C) (ii). In the equation describing the fast neuronal dynamics, z denotes the dynamics in the nodes, and u is an experiment-related input. Red: excitatory connections. Blue: inhibitory connections. The dynamics in this network can be described with use of ordinary differential equations. The outcome is the fast neuronal dynamics (iii). The neuronal time series is then convolved with the hemodynamic response function (HRF) (iv) in order to obtain the BOLD response (v), which may be then subsampled (vertical bars). This is the original, bilinear implementation of DCM (K. J. Friston et al., 2003). Now, more complex versions of DCM with additional features are available, such as spectral DCM (K. J. Friston et al., 2011), stochastic DCM (Daunizeau et al., 2012), nonlinear DCM (Stephan et al., 2008), two-state DCM (Marreiros et al., 2008), large DCMs (Frässle et al., 2018; Frässle, Lomakina-Rumyantseva, et al., 2016; Seghier & Friston, 2013) and so on.

At the neuronal level of the DCM generative model, simple interactions between brain areas are posited, either bilinear (K. J. Friston et al., 2003) or nonlinear (Stephan et al., 2008). In the simplest, bilinear version of the model, the bilinear state equation reads:

| (6) |

where z denotes the dynamics in the nodes of the network, u denotes the experimental inputs, A denotes the connectivity matrix characterizing causal interactions between the nodes of the network, B denotes the modulatory influence of experimental inputs on the connections within the network, and C denotes the experimental inputs to the nodes of the network (Figure 2). The hemodynamic level is more complex and follows the biologically informed Balloon-Windkessel model (Buxton, Wong, & Frank, 1998); for details please see K. J. Friston et al. (2003). The Balloon–Windkessel model (Buxton et al., 1998) describes the BOLD signal observed in fMRI experiments as a function of neuronal activity but also region-specific and subject-specific physiological features such as the time constant of signal decay, the rate of flow-dependent elimination, and the hemodynamic transit time or resting oxygen fraction. This is a weakly nonlinear model with free parameters estimated for each brain region. These parameters determine the shape of the hemodynamic response (Figure 2, iv), which typically peaks at 4–6[s] after the neuronal activity takes place, to match the lagged oxygen consumption in the neuronal tissue mentioned in section A Note on the Limitation of fMRI Data. The Balloon–Windkessel model is being iteratively updated based on new experimental findings, for instance to mimic adaptive decreases to sustained inputs during stimulation or the poststimulus undershoot (Havlicek et al., 2015).

In this paper, the deterministic, bilinear single-state per region DCM will be described (K. J. Friston et al., 2003). The DCM procedure starts with defining hypotheses based on observed activations, which involves defining which regions are included in the network (usually on the basis of activations found through the General Linear Model (K. J. Friston et al., 2007) and then defining a model space based on the research hypotheses. In the latter model selection phase, a range of literature-informed connectivity patterns and inputs in the networks (referred to as “models”) are posited (Figure 2, i). The definition of a model space is the key to the DCM analysis. The models should be considered carefully in the light of the existing literature. The model space represents the formulation of a prior over models, therefore, it should always be constructed prior to the DCM analysis. Subsequently, for every model one needs to set priors on the parameters of interest: connectivity strengths and input weights in the model (Figure 2, ii) and the hemodynamic parameters. The priors for hemodynamic parameters are experimentally informed Gaussian distributions (K. J. Friston et al., 2003). The priors for connectivity strengths are Gaussian probability distributions centered at zero (which is often referred to as conservative shrinkage priors). The user usually does not need to specify the priors, as they are already implemented in the DCM algorithms.

Next, an iterative procedure is used to find the model evidence by maximizing a cost function, a so-called negative free energy (K. J. Friston & Stephan, 2007). Negative free energy is a particular cost function which gives a trade-off between model accuracy and complexity (which accounts for correlations between parameters, and for moving away from the prior distributions). During the iterative procedure, the prior probability distributions gradually shift their mean and standard deviation, and converge toward the final posterior distributions. Negative free energy is a more sophisticated approximation of the model evidence when compared to methods such as Akaike’s Information Criterion (AIC; Akaike, 1998) or Bayesian Information Criterion (BIC; Schwarz, 1978); AIC and BIC simply count the number of free parameters (thereby assuming that all parameters are independent), while negative free energy also takes the covariance of the parameters into account (W. D. Penny, 2012).

In DCM, causality is modeled as a set of upregulating or downregulating connections between nodes. During the inference procedure, conservative shrinkage priors can shift towards both positive and negative values, which can be interpreted as effective excitation or effective inhibition. The exceptions aren self-connections, which are always only negative (this self inhibition is mathematically motivated: the system characterizing the fast dynamics of the neuronal network must be stable, and this requires the diagonal terms of the adjacency matrix A to be negative), Figure 2, ii, connections denoted in blue1. During the inference procedure, the neural and hemodynamic parameters of all models postulated for model comparison are optimized2. The posterior probability distributions determine significance of all the parameters3. The models can contain both uni- and bidirectional connections (Buijink et al., 2015; Vaudano et al., 2013)4. The estimated model evidence can then be compared7. As such, the original DCM (K. J. Friston et al., 2003) is a hypothesis-testing tool working only through model comparison. However, now a linear version of DCM dedicated to exploratory research in large networks is also available (Frässle, Lomakina-Rumyantseva, et al., 2016). Testing the immediacy5 and resilience to confounds6 in DCM is possible through creating separate models and comparing their evidence. For instance, one can compare the evidence for X → Y with evidence for X → Z → Y in order to test whether or not the connection X → Y is direct or rather mediated by another region Z. Note that this strategy requires an explicit specification of the alternative models and it cannot take hidden causes into consideration (in this work, we refer to the original DCM implementation [K. J. Friston et al., 2003], but there are also implementations of DCM involving estimation of time-varying hidden states, such as Daunizeau, Friston, & Kiebel, 2009). However, including extra regions in order to increase resilience to confounds is not necessarily a good idea. Considering the potentially large number of fitted parameters per region (the minimum number of nodes per region is two hemodynamic parameters and one input/output to connect to the rest of network), this may result in a combinatorial explosion. Also, models with different nodes are not comparable in DCM for fMRI (K. J. Friston et al., 2003). DCM is, in general, computationally costly. The original DCM (K. J. Friston et al., 2003) is restricted to small networks of a few nodes9 (as mentioned previously, today, large DCMs dedicated to exploratory research in large networks are also available; Frässle, Lomakina-Rumyantseva, et al., 2016; Seghier & Friston, 2013).

The proper application of DCM needs a substantial amount of expertise (Daunizeau, David, & Stephan, 2011; Stephan et al., 2010). Even though ROIs can be defined in a data-driven fashion (through a preliminary classical General Linear Model analysis; K. J. Friston et al., 1995), the model space definition requires prior knowledge of the research problem (Kahan & Foltynie, 2013). In principle, the model space should reflect prior knowledge about possible causal connections between the nodes in the network. If a paradigm developed for the fMRI study is novel, there might be no reference study that can be used to build the model space. In that case, using family-wise DCM modeling can be helpful (W. D. Penny et al., 2010). Family-wise models group large families of models defined on the same set of nodes, in order to test a particular hypothesis. For instance, one can explore a three node network with nodes X, Y, Z and compare the joint evidence behind all the possible models that contain connection X → Y with the joint evidence behind all the possible models that contain connection Y → X (Figure 2, i). Another solution that allows for constraining a large model space is Bayesian model averaging (Hoeting, Madigan, Raftery, & Volinsky, 1999; Stephan et al., 2010) which explores the entire model space and returns average value for each model parameter, weighted by the posterior probability for each model. Finally, one can perform a Bayesian model reduction (J. Friston et al., 2016), in which the considered models are reduced versions of a full (or “parent”) model. This is possible when the priors can be reduced, for example, when a prior distribution of a parameter in a parent model is set to a mean and variance of zero.

There are a few points that need particular attention when interpreting the results of the DCM analysis. First, in case the data quality is poor, evidence for one model over another will not be conclusive. In the worst case, it could give a preference to the simplest model (i.e., the model with the fewest free parameters). In that case, simpler models will be preferred over more complex ones regardless of the low quality of fit. It is important, therefore, to include a “null model” in a DCM analysis, with all parameters of interest fixed at zero. This null model can then act as a baseline against which other models can be compared (W. D. Penny, 2012).

Second, the winning model might contain parameters with a high probability of being equal to zero. To illustrate this, let us consider causal inference in a single subject (also referred to as first level analysis). Let us assume that we chose a correct set of priors (i.e., model space). The Variational Bayes (VB; Bishop, 2006) procedure then returns a posterior probability distribution for every estimated connectivity strength. This distribution gives a measure of probability for the associated causal link to be larger than zero. Some parameters may turn out to have high probability of being equal to zero in the light of this posterior distribution. This may be due to the fact that the winning model is correct, but some of the underlying causal links are weak and therefore hard to confirm by the VB procedure. Also, DCM requires data of high quality; when the signal-to-noise ratio is insufficient, it is possible that the winning model would explain a small portion of the variance in the data. In that case, getting insignificant parameters in the winning model is likely. Therefore, it is advisable to check the amount of variance explained by the winning model at the end of the DCM analysis.

The most popular implementation of the DCM estimation procedure is based on VB (Bishop, 2006) which is a deterministic algorithm. Recently, also Markov-Chain Monte Carlo (MCMC; Bishop, 2006; Sengupta, Friston, & Penny, 2015) was implemented for DCM. When applied to a unimodal free energy landscape, these two algorithms will both identify the global maximum. MCMC will be slower than VB as it is stochastic and therefore computationally costly. However, free energy landscape for multiple-node networks is most often multimodal and complex. In such case, VB—as a local optimization algorithm—might settle on a local maximum. MCMC on the other hand, is guaranteed to converge to the true posterior densities— and thus the global maximum (given an infinite number of samples).

DCM was tailored for fMRI and, unlike other methods, it explicitly models the hemodynamic response in the brain. The technique tends to return highly reproducible results, and is therefore statistically reliable (Bernal-Casas et al., 2013; Rowe, Hughes, Barker, & Owen, 2010; Schuyler, Ollinger, Oakes, Johnstone, & Davidson, 2010; Tak et al., 2018). Recent longitudinal study on spectral DCM in resting state revealed systematic and reliable patterns of hemispheric asymmetry (Almgren et al., 2018). DCM also yielded high test-retest reliability in an fMRI motor task study (Frässle et al., 2015) in a face perception study (Frässle, Paulus, Krach, & Jansen, 2016), in a facial emotion perception study (Schuyler et al., 2010), and in a finger-tapping task in a group of subjects suffering from Parkinson’s disease (Rowe et al., 2010). It has also been demonstrated most reliable when directly compared with GC and SEM (W. Penny, Stephan, Mechelli, & Friston, 2004). Furthermore, the DCM procedure can provide complimentary information to GC (K. Friston, Moran, & Seth, 2013): GC models dependency among observed BOLD responses, whereas DCM models coupling among the hidden states generating observations. GC seems to be equally effective as DCM in certain circumstances, such as when the HRF is deconvolved from the data (David et al., 2008; Ryali et al., 2016, 2011; Wang, Katwal, Rogers, Gore, & Deshpande, 2016). Importantly, the face validity of DCM was examined on experimental datasets coming from interventional study with use of rat model of epilepsy (David et al., 2008; Papadopoulou et al., 2015).

DCM is not always a method of choice in causal studies in fMRI. Proper use of DCM requires knowledge of the biology and of the inference procedure. DCM also has limitations in terms of the size of the possible models. Modeling a large network may run into problems with identifiability; there will be many possible combinations of parameter settings that could give rise to the same or similar model evidence. In other words, strong covariance between parameters will preclude confident estimates of the strength of each connection. One possible remedy for this, in the context of large-scale networks, is to impose appropriate prior constrains on the connections, for example, using priors based on functional connectivity as priors (Razi et al., 2017). Large networks may also give rise to comparisons of large number of different models with varying combinations of connections. To reduce the possibility of overfitting at the level of model comparison—that is, finding a model which is appropriate for one subject or group of subjects’ data, but not for others—it can be useful to group the models into a small number of families (W. D. Penny et al., 2010) based on pre-defined hypotheses. More information on the limitations of DCM can be found in work by Daunizeau et al. (2011). A critical note on limitations of DCM in terms of network size can also be found in Lohmann, Erfurth, Muller, and Turner (2012), and see also a response to this article, Breakspear (2013); K. Friston, Daunizeau, and Stephan (2013).

However, to extend the scope of application of the DCM analysis to larger networks, recently two approaches were developed. First, a new, large-scale DCM framework for resting-state fMRI has been proposed (Razi et al., 2017). This framework uses the new, spectral DCM (K. J. Friston et al., 2011) designed for resting-state fMRI and is able to handle dozens of nodes in the network. Spectral DCM is then combined with functional connectivity priors in order to estimate the effective connectivity in the large-scale resting-state networks. Second, a new approach by Frässle et al. (2018) imposes sparsity constraints on the variational Bayesian framework for task fMRI, which enables for causal inference on the whole-brain network level.

DCM was further developed into multiple procedures including more sophisticated generative models than the original model discussed here. The field of DCM research in fMRI is still growing (K. J. Friston et al., 2017). The DCM generative model is continuously being updated in terms of the structure of the forward model (Havlicek et al., 2015), the estimation procedure (Sengupta et al., 2015), and the scope of the possible applications (K. J. Friston et al., 2017).

HIERARCHICAL NETWORK-WISE MODELS

The second group of methods involves hierarchical network-wise models: Linear Non-Gaussian Acyclic Models (LiNGAM, Shimizu et al., 2006) and Bayesian Nets (BNs; Frey & Jojic, 2005). Similarly, as network-wise methods reviewed in the previous chapter, these methods are also multivariate but with one additional constraint: the network can only include feed forward projections (and therefore, no closed cycles). Consequently, the resulting models have a hierarchical structure with feed forward distribution of information through the network.

LiNGAM

Linear Non-Gaussian Acyclic Models (LiNGAM; Shimizu et al., 2006) is an example of a data driven approach working under the assumption of acyclicity (Thulasiraman & Swamy, 1992). The model is simple: every time course within an ROI Xi(t) is considered to be a linear combination of all other signals with no time lag:

| (7) |

in which B denotes a matrix containing the connectivity weights, and denotes multivariate noise. The model is in principle the same as in SEM (section Structural Equation Modeling), but the difference lies in the inference procedure: whereas in SEM, inference is based on minimizing the variance of the residual noise under the assumption of independence and Gaussianity, LiNGAM finds connections based on the dependence between residual noise components and regressors .

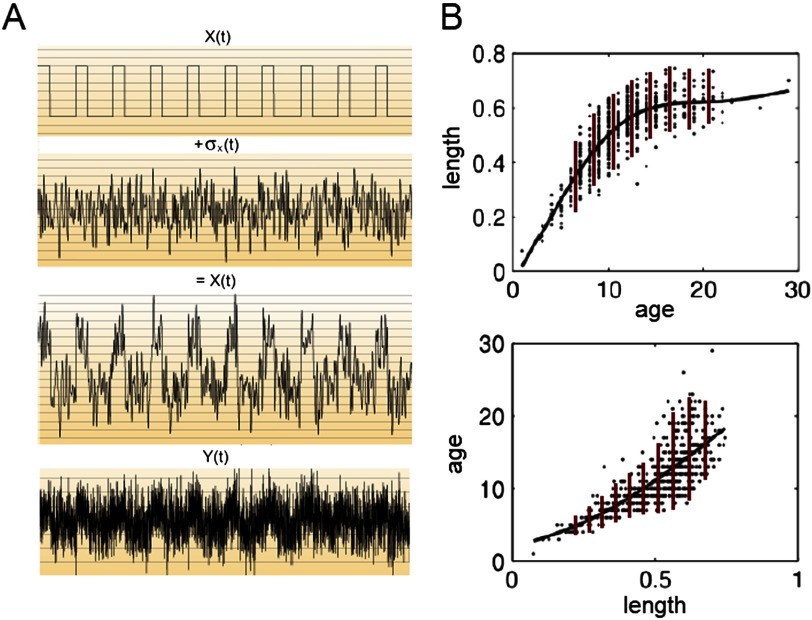

The rationale of this method is as follows (Figure 3). Let us assume that the network is noisy, and every time series within the network is associated with a background noise uncorrelated with the signal in that node. An example of such a mixture of signal with noise is given in Figure 3A. Then, let us assume that , which is a mixture of signal X(t) and noise σX(t), causes Y (t). Then, as it cannot distinguish between the signal and the noise, Y becomes a function of both these components. Y(t) is also associated with noise σY(t); however, as there is no causal link Y → X, X(t) is not dependent on the noise component σY(t). Therefore, if Y depends on the σX(t) component, but X does not depend on the σY(t) component, one can infer projection X → Y.

Figure 3. .

The Linear Non-Gaussian Acyclic Model (LiNGAM). A: The noisy time series consists of signal X(t) and noise σX(t). Y (t) thus becomes a function of both the signal and the noise in . B: Causal inference through the analysis of the noise residuals (figure reprinted from http://videolectures.net/bbci2014_grosse_wentrup_causal_inference/). The causal link from age to length in a population of fish can be inferred from the properties of the residual noise in the system. If fish length is expressed in a function of fish age (upper panel), the residual noise in the dependent variable (length) is uncorrelated with the independent variable (age): the noise variance is constant over a large range of fish age (red bars). On the contrary, once the variables are flipped and fish age becomes a function of fish length (lower panel), the noise variance becomes dependent on the independent variable (length): it is small for small values of fish length and large for the large values of fish length (red bars).

This effect is further explained on an example of a simple causal relationship between two variables is demonstrated in Figure 3B: age versus length in a fish. If fish length is expressed in a function of fish age (upper panel), the residual noise in the dependent variable (length) is uncorrelated with the independent variable (age). Therefore, the noise variance is constant over a large range of fish age. On the contrary, once the variables are flipped and fish age becomes a function of fish length (lower panel), the noise variance becomes dependent on the independent variable (length): it is small for small values of fish length and large for the large values of fish length. Therefore, the first causal model (fish age influencing fish length) is correct.

In applications to causal research in fMRI, the LiNGAM inference procedure is often accompanied by an Independent Component Analysis (ICA; Hyvärinen & Oja, 2000) as follows. The connectivity matrix B in Equation 7 describes how signals in the network mix together. By convention, not B but a transformation of B into

| (8) |

is used as a mixing matrix in the LiNGAM inference procedure. By using this mixing matrix A, one can look at Equation 7 in a different way:

| (9) |

Now, the BOLD time course in the network can be represented as a mixture of independent sources of noise . This is the well-known cocktail party problem and it was originally described in acoustics (Bronkhorst, 2000): in a crowded room, a human ear registers a linear combination of the noises coming from multiple sources. In order to decode the components of this cacophony, the brain needs to perform a blind source separation (Comon & Jutten, 2010): to decompose the incoming sound into a linear mixture of independent sources of sounds. In the LiNGAM procedure, ICA (Hyvärinen & Oja, 2000) is used to approach this issue. ICA assumes that the noise components are independent and have a non-Gaussian distribution, and finds these components as well as the mixing matrix A through dimensionality reduction with Principal Component Analysis (Jolliffe, 2002; Shlens, 2014). From this mixing matrix, one can in turn estimate the desired adjacency matrix B with use of Equation 8.

Since the entries Bij of the connectivity matrix B can take any value, LiNGAM can in principle retrieve both excitatory and inhibitory connectivity1 of any strength2. The author of LiNGAM recommends (Shimizu, 2014) performing significance testing through either bootstrapping (Hyvärinen, Zhang, Shimizu, & Hoyer, 2010; Komatsu, Shimizu, & Shimodaira, 2010; Thamvitayakul, Shimizu, Ueno, Washio, & Tashiro, 2012) or permutation testing (Hyvärinen & Smith, 2013)3. However, LiNGAM makes the assumption of acyclicity, therefore only unidirectional connections can be picked up4. Moreover, the connectivity matrix revealed with the use of LiNGAM is meant to pick up on direct connections5. The original formulation of LiNGAM assumes no latent confounds (Shimizu et al., 2006), but the model can be extended to a framework that can capture the causal links even in the presence of (unknown) hidden confounds (Z. Chen & Chan, 2013; Hoyer, Shimizu, Kerminen, & Palviainen, 2008)6. LiNGAM-ICA’s causal inference consists of ICA and a simple machine learning algorithm, and, as such, it is a fully data-driven strategy that does not involve model comparison7. Confidence intervals for the connections B can be found through permutation testing. ICA itself can be computationally costly and its computational stability cannot be guaranteed (the procedure that searches for independent sources of noise can get stuck in a local minimum). Therefore, the computational cost in LiNGAM can vary depending on the dataset8. This also sets a limit on the potential size of the causal network. When the number of connections approaches the number of time points (degrees of freedom), the fitting procedure will become increasingly unstable as it will be overfitting the data9.

When tested on synthetic fMRI benchmark datasets (S. Smith et al., 2011), LiNGAM-ICA performs relatively well, but is more sensitive to confounders than several other methods discussed in this paper, such as Patel’s tau or GC. However, as LiNGAM performs particularly well for datasets containing a large number of samples, the authors suggested that a group analysis could resolve the sensitivity problem in LiNGAM. The concept was then picked up and developed by at least two groups. Firstly, Ramsey et al. (J. D. Ramsey, Hanson, & Glymour, 2011) proposed LiNG Orientation, Fixed Structure technique (LOFS). The method is inspired by LiNGAM and uses the fact that, within one graph equivalence class, the correct causal model should return conditional probability distributions that are maximally non-Gaussian. LOFS was tested on the synthetic benchmark datasets, where it achieved performance very close to 100%. Second, Xu et al. published a pooling-LiNGAM technique (Xu et al., 2014), which is a classic LiNGAM-ICA applied to the surrogate datasets. Validation on synthetic datasets revealed that both False Positive (FP) and False Negative (FN) rates decrease exponentially along with the length of the (surrogate) time series; however, combining time series of as long as 5,000 samples is necessary for this method to give both FP and FN as a reasonable level of 5%.

Despite the promising results obtained in the synthetic datasets, LiNGAM is still rarely applied to causal research in fMRI to date.

Bayesian Nets

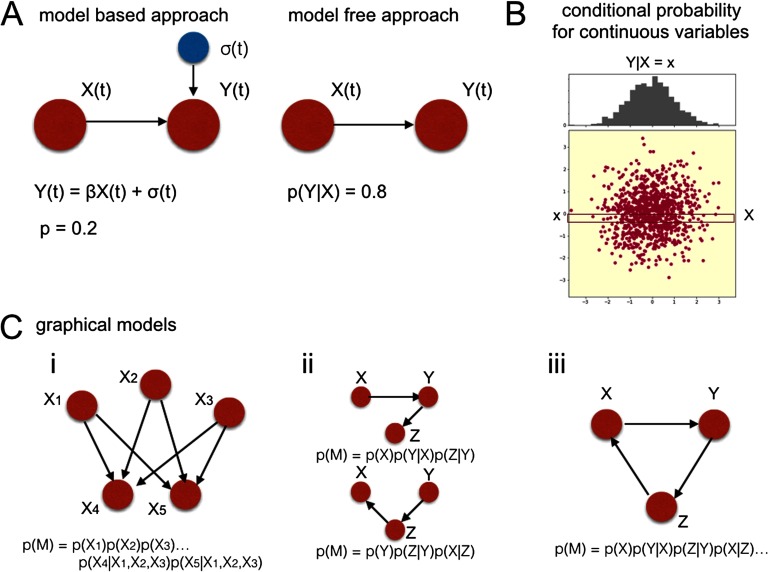

The use of the LiNGAM inference procedures assumes a linear mixing of signals underlying a causal interaction. Model-free methods do not make this assumption: the bare fact that one is likely to observe Y given the presence of X can indicate that the causal link X → Y exists (Figure 4). Let as assume the simplest example: causal inference for two binary signals X(t), Y (t). In a binary signal, only two values are possible: 1 and 0; 1 can be interpreted as an “event” while 0 - as “no event.” Then, if in signal Y(t), events occur in 80% of the cases when events in signal X(t) occur (Figure 4A), but the opposite is not true, the causal link X → Y is likely. Computing the odds of events given the events in the other signal, is sufficient to establish causality. In a model-based approach on the other hand, a model is fitted to the data, in order to establish the precise form of the influence of the independent variable X on the dependent variable Y.

Figure 4. .

Bayesian nets. A: Model-based versus model-free approach. β: a regressor coefficient fitted in the modeling procedure. σ(t): additive noise. Both model-based and model-free approach contain a measure of confidence. In a model-based approach, a model is fitted to the data, and p-values associated with this fit are a measure of confidence that the causal link exists (i.e., is a true positive, left panel). In a model-free approach, this confidence is quantified directly by expressing causal relationships in terms of conditional probabilities (right panel). B: Conditional probability for continuous variables. Since BOLD fMRI is a continuous variables, the joint probability distribution for variables X and Y is a two-dimensional distribution. Therefore, conditional probability of P(Y|X = x) becomes a distribution. C: (i) An exemplary Bayesian Net. X1, X2, X3: parents, X4, X5: children. (ii) Competitive Bayesian Nets: one can define competitive models (causal structures) in the network and compare their joint probability derived from the data. (iii) Cyclic belief propagation: if there was a cycle in the network, the expression for the joint probability would convert into an infinite series of conditional probabilities.

Note that both model-based and model-free approaches contain a measure of uncertainty, but this uncertainty is computed differently. In model-based approaches, p values associated with the fitted model are a measure of confidence that the modeled causal link is a true positive (Figure 4A, left panel). In contrast, in model-free approaches this confidence is quantified directly by quantifying causal relationships in terms of conditional probabilities (Figure 4A, right panel). In practice, since the BOLD response—unlike the aforementioned example of binary signals—takes continuous values, estimating conditional probabilities is based on the basis of the joint distribution of the variables X and Y (Figure 4B). Conditional probability P(Y|X) becomes a distribution of Y when X takes a given value. BNs (Frey & Jojic, 2005) are based on such a model-free approach (Figure 4C).

The causal inference in BNs is based on the concept of conditional independency (a.k.a. Causal Markov Condition; (Hausman & Woodward, 1999). For example, suppose there are two events that could independently cause the grass to get wet: either a sprinkler, or rain. When one only observes the grass being wet, the direct cause for this event is unknown. However, once rain is observed, it becomes less likely that the sprinkler was used. Therefore, one can say that the variables X1 (sprinkler) and X2 (rain) are conditionally dependent given variable X3 (wet grass), because X1, X2 become dependent on each other after information about X3 is provided. In BNs, the assumption of conditional dependency in the network is used to compute the joint probability of a given model, that is, the model evidence (once variables Xi are conditionally dependent on Xj, the joint distribution P(Xi, Xj) factorizes into a product of probabilities P(Xj)P(Xi|Xj).

Implementing a probabilistic BN requires defining a model: choosing a graph of “parents” who send information to their “children.” For instance, in Figure 4C, i, node X1 is a parent of nodes X4 and X5, and node X4 is a child of nodes X1, X2 and X3. The joint probability of the model can then be computed as the product of all marginal probabilities of the parents and conditional probabilities of the children given the parents. Marginal probability P(Xj) is the total probability that the variable of interest Xj occurs while disregarding the values of all the other variables in the system. For instance, in Figure 4C, (i), P(X1) means a marginal probability of X1 happening in this experiment. Conditional probability P(Xi|Xj) is the probability of a given variable (Xi) occurring given that another variable has occurred (Xj). For instance, in Figure 4C, i, P(X5|X1, X3) means a conditional probability of X5 given its parents X1 and X3.

Then, once the whole graph is factorized into the chain of marginal and conditional probabilities, the joint probability of the model can be computed as the product of all marginal and conditional probabilities. For instance, in Figure 4C, i, the joint probability of the model M yields

| (10) |

Finally, there are at least three possible approaches to causal inference with BNs:

-

1.

Model comparison: choosing the scope of possible models (by defining their structure a priori), and comparing their joint probability. Mind that in this case, the algorithm will simply return the winning graphical model, without estimation of the coefficients representing connection weights

-

2.

Assuming one model structure a priori, and only inferring the weights. This is common practice, related to, for example, Naive Bayes (Bishop, 2006) in which the structure is assumed, and the connectivity weights are estimated from conditional probabilities. In this case, the algorithm will assume that the proposed graphical model is correct, and infer the connection weights only

-

3.

Inferring the structure of the model from the data in an iterative way, by using a variety of approximate inference techniques that attempt to maximize posterior probability of the model by minimizing a cost function called free energy (Frey & Jojic, 2005), similar to DCM): expectation maximization (EM; Bishop, 2006; Dempster, Laird, & Rubin, 1977), variational procedures (Jordan, Ghahramani, Jaakkola, & Saul, 1998), Gibbs sampling (Neal, 1993) or the sum-product algorithm (Kschischang, Frey, & Loeliger, 2001), which gives a broader selection of procedures than in the DCM.

BNs can detect both excitatory and inhibitory connections X → Y, depending on whether the conditional probability p(Y|X) is higher or lower than the marginal probability p(X)1. Like LiNGAM, BNs cannot pick up on bidirectional connections in general. The assumption of acyclicity comes from the cyclic belief propagation (Figure 4C, iii): the joint probability of a cyclic graph would be expressed by an infinite chain of conditional probabilities, which usually does not converge into a closed form. This restricts the scope of possible models to DAGs (Thulasiraman & Swamy, 1992). However, there are also implementations of BNs that cope with cyclic propagation of information throughout the network, for example, Cyclic Causal Discovery algorithm (CCD; Richardson & Spirtes, 2001). This algorithm is not often used in practice. However, as it works in the large sample limit, CCD requires assumption on the graph structure and retrieves a complex output4. In BNs, the value of conditional probability P(Y|X) can be a measure of a connection strength2. We can consider conditional probabilities significantly higher than chance as an indication for significant connections3. In principle, BNs are not resilient to latent confounds. However, some classes of algorithms were designed specifically to tackle this problem, such as Stimulus-based Causal Inference (SBCI; Grosse-Wentrup, Janzing, Siegel, & Schölkopf, 2016), Fast Causal Inference (FCI; P. Spirtes, Glymour, & Scheines, 1993; Zhang, 2008) and Greedy Fast Causal Inference (GFCI; Ogarrio, Spirtes, & Ramsey, 2016)6. BNs can either work through model comparison or as an exploratory technique7. In the first case, it involves model specification that, like in DCM, requires a priori knowledge about the experimental paradigm. In the latter case, the likelihood is intractable and can only be approximated8 (Diggle, 1984). In principle, networks of any size can be modeled with BNs, either through a model comparison or through exploratory techniques. Exploratory techniques typically minimize a cost function during the iterative search for the best model. Since together with the growing network size, the landscape of the cost function becomes multidimensional and complex, and the algorithm is more likely to fall into a local minimum, exploratory techniques may become unreliable for large networks9.

What can also become an issue while using BNs in practice is that multiple BN algorithms return an equivalence class of a graph: the set of all graphs indistinguishable from the true causal structure on the basis of their sole probabilistic independency (Spirtes, 2010). These structures cannot be further distinguished without further assumptions or experimental interventions. For finite data, taking even one wrong assumption upon the directionality of causal link in the graph can be propagated through the network, and cause an avalanche of incorrect orientations (Spirtes, 2010). One approach designed to overcome this issue is the Constraint-Based Causal Inference (Claassen & Heskes, 2012). In this approach, Bayesian Inference is employed to estimate the reliability of a set of constraints. This estimation can further be used to decide whether this prior information should be used to determine the causal structure in the graph.

BNs cope well with noisy datasets, which makes them an attractive option for causal research in fMRI (Mumford & Ramsey, 2014). S. Smith et al. (2011) tested multiple implementations of BNs, including FCI, CCD, as well as other algorithms: Greedy Equivalence Search (GES; Chickering, 2002; Meek, 1995), “Peter and Clark” algorithm (PC; Meek, 1995) and a conservative version of “Peter and Clark” (J. Ramsey, Zhang, & Spirtes, 2006). All these implementation performed similarly well with respect to estimating the existence of connections, but not to the directionality of the connections.

BNs are not widely used in fMRI research up to date, the main reason being the assumption of acyclicity. One exception is Fast Greedy Equivalence Search (FGES; J. D. Ramsey, 2015; J. D. Ramsey, Glymour, Sanchez-Romero, & Glymour, 2017; J. D. Ramsey et al., 2014), a variant of GES optimized to large graphs. The algorithm assumes that the network is acyclic with no hidden confounders, and returns an equivalence class for the graph. In a recent work by Dubois et al. (2017), FGES was applied with use of a new, computational-experimental approach to causal inference from fMRI datasets. In the initial step, causal inference is performed from large observational resting-state fMRI datasets with use of FGES in order to get the aforementioned class of candidate causal structures. Further steps involve causal inference in a single patient informed by the results of the initial analysis, and interventional study with use of an electrical stimulation in order to determine which of the equivalent structures revealed by FGES can be associated with a particular subject.

PAIRWISE INFERENCE