Abstract

The provision of feedback to clinicians and organizations on the quality of care they provide is thought to influence clinician and organizational behavior, leading to care improvements. Clinical dashboards use data visualization techniques to provide feedback to individuals on their performance compared to quality metrics. In this paper we outline a theoretical approach to the design of a clinical dashboard, Feedback Intervention Theory (FIT). Priorities for feedback were identified using focus groups with home care nurses (n=61). Individual variation in graph literacy and numeracy among nurses and their ability to comprehend visualized data displays were evaluated using an online survey. The results from the focus groups and survey were used to inform a prototype dashboard, which was evaluated for usability with a separate sample of home care nurses. FIT provided a theoretical base for the dashboard design to ensure feedback that should positively impact clinician behavior.

Introduction

The quality and safety of health care is the focus of a number of quality improvement strategies in health care organizations1. A component of such quality improvement initiatives is the provision of feedback on performance against a variety of quality standards, at individual clinician, health care team and organizational level. For example, the Centers for Medicare & Medicaid Services (CMS) use data collected from hospitals and post-acute care settings to both publicly report and provide feedback to organizations on the quality of care they provide2-4. The reporting of these measures is intended to incentivize quality improvement initiatives, thereby raising the quality of care provided by organizations overall. Organizations may in turn provide internal feedback on performance at both team and individual level to their staff, with the assumption that performance will improve5. In this paper we discuss a theoretical approach to the design of feedback interventions, Feedback Intervention Theory (FIT)6, and describe how this theory was applied in developing clinical dashboards for home healthcare nurses.

Feedback interventions are ‘the act of providing knowledge of the results of a behavior or performance to an individual’7. Feedback can be provided in a number of formats (including verbally or in written form), from a range of sources (e.g. a supervisor or colleague, a professional organization, an employer), with a variety of content (such as explicit goals and action plans) and with varying frequency1. Several systematic reviews have explored the impact of feedback interventions on outcomes such as behavior change, improvements in performance and patient outcomes1 6 8. A consistent finding across reviews is the wide variation observed in the effect of feedback on performance1 6. Overall conclusions are that feedback at individual level has a small to moderate positive effect on practice6 7, yet a significant number of studies indicate a negative effect1 6. The reviews also highlight the challenges of identifying which components of a feedback intervention are likely to affect behavior, owing to the variation in content, delivery mode and frequency1 6. Characteristics of feedback interventions that correlate with improved performance include sufficient timeliness of the feedback (minimal delay between when the data is collected and the feedback is provided)7 9 10, the need for the feedback to be actionable9 10, and feedback that includes measurable targets and an action plan1.

Dashboards as a Feedback Intervention

Clinical dashboards use health information technology (HIT) and data visualization to provide feedback to individuals on their performance compared to quality metrics11. Clinical dashboards can provide feedback in ‘real time’ when clinicians are engaged in care activities, rather than providing a retrospective summary of performance11,12. A recent review of the evidence on use of clinical dashboards found 11 studies which reported empirical evaluations of the impact of the dashboards on clinical performance and/or quality outcomes11. The dashboards were used across different care contexts, targeted at a range of users (anesthetists, radiologists, pharmacists), provided feedback on a variety of different types of care standard (e.g. appropriate/inappropriate antibiotic prescription, adherence to the ventilator bundle in ICU) and incorporated different design characteristics (including variation in how the dashboard was presented to the user, and the visual displays used). As with the reviews exploring other forms of feedback, the impact of dashboards on performance and outcomes was varied, with an overall positive impact on care processes and patient outcomes11. However, some studies found that dashboards have no overall impact on clinician behavior or outcomes. It is therefore unclear what aspects of dashboard design (e.g. different types of visualization graph, use of ‘color coding’ of outcomes, ways in which clinicians access the information) will affect clinicians’ behavior and quality outcomes.

One of the key recommendations from the most recent Cochrane review on the impact of feedback on quality improvements addressed the explicit use of theory to inform intervention development1. A theoretical approach to feedback intervention design can both ensure that the interventions are likely to have an effect on the desired behavior and identify how and why the intervention does or does not work. In this paper we describe how FIT was applied to explore how clinical dashboards may be an effective approach to provide feedback, and how using FIT can ensure that future dashboards are designed to enhance performance. First, we outline the key components of the FIT model, before analyzing how the model can be used to explain existing evidence about dashboard use and effectiveness. We then outline how FIT was used as the framework for the design of the dashboard described in the paper, providing a brief overview of three interrelated phases (focus groups, experimental online survey and usability evaluation) that were used to both design and evaluate the dashboard. The usability evaluation was guided by a formal evaluation framework (TURF), which ensured that all aspects of usability for the dashboard were considered. We then briefly discuss the implications of our approach for dashboard design.

Feedback Intervention Theory

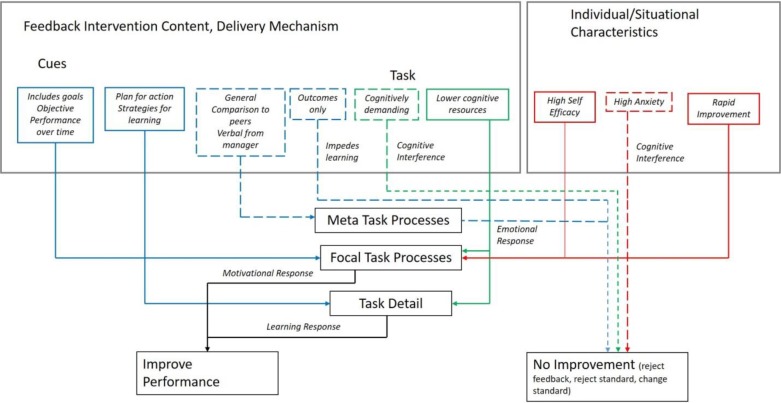

FIT is a hybrid theory, first proposed by Kluger and DeNisi6, which integrates a number of behavioral theories (e.g. control theory, goal setting theory, social-cognitive theory) in an attempt to explain the effects of feedback interventions on performance. The key components of the theory can be seen in Figure 1 (solid lines represent mechanisms that lead to improvement, dotted lines mechanisms that lead to no improvement).

Figure 1.

Characteristics of Feedback Interventions and Impact on Performance

Feedback normally provides information about how the individual is performing on a particular area of practice compared to a goal or standard. Feedback may vary according to the content, presentation format and frequency with which it is provided. The feedback intervention itself, contextual/situational variables and the characteristics of the individual receiving the feedback will all affect how individuals react to the feedback, which may lead to one of four different coping strategies: improving performance to try to achieve the standard, changing the standard (so that it is more achievable), rejecting the feedback or rejecting the goal or standard altogether6.

FIT presents three levels of processes that govern how individuals react to a feedback intervention: meta-task processes, which are at the top of the hierarchy, are focused towards the self and often reflect an emotional response to the feedback; focal task processes, which trigger a response that motivates individuals to improve performance; and task detail processes, which invoke the learning needed to complete the task. To be successful, interventions need to trigger focal task processes (which stimulate motivation to improve performance); less successful feedback draws attention away from focal task and task detail processes to the higher level or meta-task processes (so individuals are likely to focus on their emotional response to the feedback, rather than how to change performance).

Successful feedback interventions therefore need to stimulate motivation from the individual, or trigger a learning process that includes strategies for improving performance; Kluger and DeNisi suggest that pure outcome feedback without such strategies may impede learning, and therefore have a negative effect on performance6.

Factors that determine the effect of the feedback intervention on performance

Three classes of variables are proposed as determinants of the effect a feedback intervention has on performance: the cues of the intervention message; the nature of the task or behavior to be performed; and situational/personality variables. Cues determine which standards or goals receive the most attention, and through this affect behavior. For example, feedback that provides specific information about the task and goals to be achieved, potential actions to improve performance, monitoring of performance over time, and feedback that is perceived to be objective (such as that provided by a computer) are all hypothesized to focus attention on the task and performance goals, thereby leading to improved performance. In contrast, feedback that is more generalized in nature, compares an individual’s performance to their peers, or is provided verbally by their manager, is hypothesized to direct attention away from the task to the self (for example, feedback that may affect self-esteem may be rejected).

In terms of the nature of the task or behavior to be performed, FIT suggests feedback will be more or less effective depending on how susceptible the task is to shifts in attention. If a task is cognitively demanding or complex, then feedback may be less effective. Overall, the fewer cognitive resources required for effective task completion, the more likely feedback will improve performance.

Finally, situational and personality characteristics are hypothesized to affect individual reaction to feedback. Kluger and DeNisi suggest that individuals who have high self-efficacy are more likely to be motivated to change their behavior and less likely to quit, meaning feedback may be more effective for these individuals. They also suggest that individuals who are anxious are more likely to experience cognitive interference, meaning their attention will be taken away from the task, and feedback is likely to be less effective. One of the situational variables hypothesized to affect performance is rate of change: if the original feedback is very negative (there is a large gap between individual performance and the goal/standard) and the rate of improvement in performance is rapid, then an individual is more likely to continue trying to change their behavior. In contrast, if there is minimal improvement in performance, then an individual is more likely to either reject the feedback or abandon the goal or standard altogether.

Feedback Intervention Theory and Dashboard Design

FIT can be used to both provide an explanation for why some clinical dashboard interventions appear to positively affect performance and outcomes, and others appear to have no impact. It can also be used to inform dashboard design to be more effective in providing feedback across a number of quality metrics to improve clinical performance and patient outcomes.

How clinical dashboard design can lead to improved outcomes

A number of features of clinical dashboard design would theoretically lead to improved performance on a quality standard or care goal. Dashboards tend to provide visualized information about a specific area of performance (task specific information); examples from the recent review include timely signing of radiology reports13, timely antibiotic administration14, compliance with the ventilator bundle in ICU settings 15 and appropriate prescribing of antibiotics16 17. They also tend to use color-coding (commonly a traffic light system of red, yellow, green) to indicate if a particular goal or standard is being achieved and provide information over time about whether or not the goal has been met 13 15 16 18-21. In addition, by providing feedback on a very specific element of clinician behavior, such as prescribing14 16 17, or elements of care associated with the ventilator bundle15 21, they provide information on the actions that should be taken for the individual to achieve that goal or performance standard. They also use technology to deliver information in real time which can enable focus on task performance through removing responses associated with the self. Further, through techniques such as graphical displays to summarize data, dashboards are thought to improve data comprehension and reduce cognitive load associated with the actual task22-24.
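The traffic light convention described above can be illustrated with a minimal sketch. It assumes a single metric where higher values are better; the `Metric` class, the `traffic_light` function and the `warn_margin` tolerance are hypothetical names introduced for illustration and are not taken from any of the reviewed dashboards.

```python
from dataclasses import dataclass


@dataclass
class Metric:
    """One performance measure compared against a goal (all fields illustrative)."""
    name: str
    value: float        # observed performance, e.g. percent compliance
    target: float       # goal or standard to be achieved
    warn_margin: float  # hypothetical tolerance below target shown as yellow


def traffic_light(metric: Metric) -> str:
    """Map a metric to a red/yellow/green status relative to its target."""
    if metric.value >= metric.target:
        return "green"   # goal met
    if metric.value >= metric.target - metric.warn_margin:
        return "yellow"  # close to the goal but not yet met
    return "red"         # well short of the goal


if __name__ == "__main__":
    example = Metric("ventilator bundle compliance", value=82.0,
                     target=90.0, warn_margin=10.0)
    print(example.name, "->", traffic_light(example))
```

In FIT terms, a status computed this way is task-specific and objective, which is the kind of cue hypothesized to keep attention on the task rather than the self.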

However, a key weakness in almost all studies on feedback intervention effectiveness is failure to consider individual differences in response to feedback; so hypotheses regarding the effect of feedback on a clinician’s self-efficacy and anxiety levels remain untested. Also lacking are specific analyses looking at the rate of improvement on a particular area of performance. However dashboards have a potential benefit in this area, in that their ability to provide feedback in ‘real time’ means that any adjustments in a clinician’s behavior related to meeting a performance goal (such as improving the timeliness of prescriptions, or adherence to an element of the ventilator bundle) will be seen immediately in dashboard information.

Given the potential for dashboards to provide feedback that is effective in improving individual performance, it is puzzling that in the recent review two studies that developed dashboards for improving appropriate antibiotic prescribing in primary care had no impact on outcomes16 17. A potential explanation can be found in how dashboards were made available to individual users. In both studies clinicians had to actively seek the dashboards out, as opposed to other studies where the dashboard was constantly available in the form of a screen saver14 21. Using FIT to explain this finding, a screen saver approach focuses the individual’s attention on the task, and the information contained in the dashboard is designed to keep that attention focused, thereby leading to changes in behavior and subsequent performance. In contrast, if individuals must actively seek out the dashboard, individual differences (such as motivation to improve performance) will affect task attention. Only motivated individuals will seek out the information, and in fact both studies reported improved performance in situations where the clinician actually accessed the dashboard.

FIT applied to the development of clinical dashboards for nurses at a home healthcare agency

In this section of the paper we give an overview of a study which used FIT to inform dashboard design. The clinical dashboards were designed for use in a large home care agency in the North East region of the United States. The study had three phases: focus groups with nurses to explore care issues and needs for feedback25, an experimental survey assessing graph literacy and numeracy26 27, and dashboard prototype design with usability and feasibility testing. In 2010 an estimated 12 million individuals received care from home health agencies28, a number that is likely to rise with the increase of the age of the US population and a growing emphasis on moving care from the acute care sector into community settings. This first phase of the study developed and tested the dashboards for usability and feasibility in practice, with the focus on improving care processes and outcomes for home health patients with congestive heart failure (CHF). Patients with CHF have the highest overall rates of re-hospitalization across all health care settings; home health patients with CHF have 42% greater odds of hospital readmission (adjusted odds ratio 1.42, 95% CI 1.37-1.48)29. Nurses in a home care setting have a number of evidence-based clinical guidelines available to guide their care30 31, with the majority of care interventions identified as actionable by nurses and effective in helping manage CHF patients in their homes to reduce hospitalizations. A summary of the approach taken to ensure that the dashboard incorporated elements of FIT is provided in Table 1. Individual differences across nurses were evaluated in terms of numeracy and graph literacy (as discussed below).

Table 1.

FIT and Dashboard Design

| FIT Process | Dashboard Design elements | Sources of data to inform design |
|---|---|---|
| Meta-task: aim is to direct attention to the task and not at the self | | |
| Focal task: aim is to improve performance on task by increasing motivation to improve performance | | |
| Task details: aim is to improve performance on task through triggering learning to improve specific task | | |

Focus groups with home care nurses were conducted to explore which of the elements of the CHF evidence based practice guidelines were: a priority for care; issues for which nurses wanted real time point of care feedback; and under their control (actionable). Specific goals or standards for care, based on published evidence based guidelines30-32 were used as the basis for identifying priorities for real time feedback for nurses. A total of 6 focus groups (n=61 participants) were conducted (the full methods and results are published elsewhere).25 The analysis identified specific themes related to the utility of feedback over time, for specific patient signs and symptoms (such as weight, vital signs) and educational goals, with the ability to see the feedback in real time as nurses are caring for patients.

An online experimental survey was designed to explore whether the ability to understand data in different types of visualization is affected by a nurse’s numeracy or graph literacy. Numeracy (the ability to understand numerical information) and graph literacy (the ability to understand information presented graphically) may influence individual ability to perceive and understand the data presented in dashboard visualizations. Previous studies have demonstrated that individuals with low graph literacy understand numerical information better if it is presented as numbers, whereas those with higher graph literacy had better understanding of the same information when it was presented in graphical format33 34. A recent small study exploring nurses’ numeracy and graph literacy found considerable variation across both numeracy and graph literacy, with only one third of the sample scoring high for both, and with low correlation between the two sets of skills35.

The experimental survey evaluated graph literacy and numeracy using the graph literacy scale33 and the expanded numeracy scale36. It employed simulated quality of care indicators on heart failure patients (e.g. percentage of CHF patients hospitalized within 30 days of admission), which were presented to nurses in one of four different presentation conditions (bar graph, line graph, spider graph or table/text). Nurses were randomized to one of four experimental groups. The full methods and results are published elsewhere26 27. Briefly, 195 nurses from two home care agencies completed the survey. Overall comprehension was highest for information presented in a bar graph. Individuals with both low numeracy and low graph literacy had the lowest overall comprehension across all display formats; however, high graph literacy was associated with higher overall comprehension.
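As an illustration of randomizing participants to the four presentation conditions named above, the following is a minimal sketch. The source does not state which randomization method was used; the shuffled, balanced allocation here is an assumption, and `assign_conditions` and its `seed` parameter are hypothetical names.

```python
import random

# The four presentation conditions named in the survey description.
CONDITIONS = ["bar graph", "line graph", "spider graph", "table/text"]


def assign_conditions(participant_ids, seed=None):
    """Randomly allocate participants to conditions with near-equal group sizes.

    Assumption: participants are shuffled and then dealt out in turn, so group
    sizes differ by at most one. This is one common approach, not necessarily
    the procedure used in the study.
    """
    rng = random.Random(seed)  # a seeded generator makes the allocation reproducible
    ids = list(participant_ids)
    rng.shuffle(ids)
    return {pid: CONDITIONS[i % len(CONDITIONS)] for i, pid in enumerate(ids)}


if __name__ == "__main__":
    allocation = assign_conditions(range(195), seed=2018)
    for condition in CONDITIONS:
        size = sum(1 for c in allocation.values() if c == condition)
        print(condition, size)
```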

Dashboard Development and Usability Evaluation

The results from the focus group study and the survey informed dashboard prototypes based on FIT (see Table 1). The dashboard display targeted information related to a patient’s weight and vital signs, the highest priority for the nurses. The survey results were applied to ensure dashboard visualizations maximized comprehension, with the flexibility for nurses to view the data as either a bar graph or a line graph.

The prototype dashboards were developed incorporating the features identified in Table 1. The design process included contextual inquiry (observation and interviews with home care nurses during patient visits n=4) to ensure the dashboard fitted with workflow, rapid feedback on paper prototypes of the dashboard (n = 10) and a usability evaluation of the final dashboard prototype (n=22).

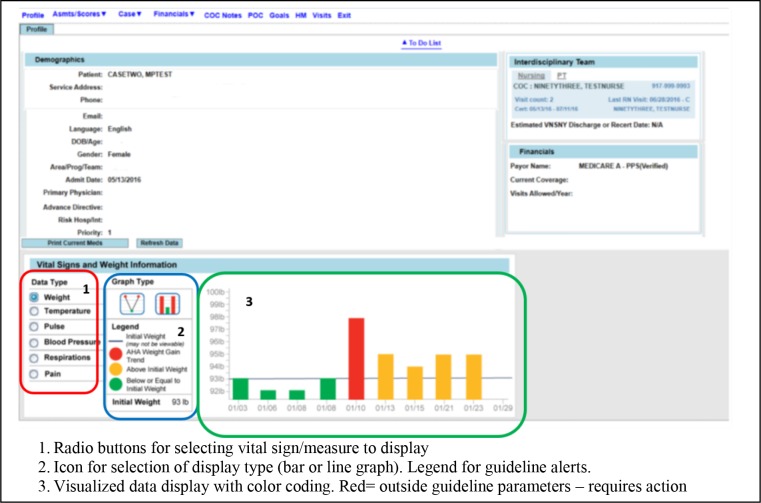

Functionality of the final prototype included flexible visualization of the data (users could choose to view the data as a line graph or bar graph), display of different vital signs, customizable placement of the visualization at frequently used locations within the EHR (front of patient note and documentation of vital signs screens) and decision support (indicators of measures outside recommended guidelines with guidance on action) (see Figure 2).

Figure 2.

Example Screen Shot of Dashboard Prototype
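The decision support function described above (indicators of measures outside recommended guidelines) can be sketched as a simple range check. The limits below are hypothetical placeholders chosen only to make the example run; they are not the guideline values used in the prototype and are not clinical guidance. `LIMITS` and `flag_measure` are illustrative names.

```python
from typing import Optional

# Hypothetical placeholder ranges as (lower limit, upper limit); None means no limit.
# These numbers are NOT taken from the paper or from any clinical guideline.
LIMITS = {
    "systolic_bp": (90.0, 140.0),
    "heart_rate": (60.0, 100.0),
    "weight_gain_kg": (None, 2.0),
}


def flag_measure(name: str, value: float) -> Optional[str]:
    """Return 'below' or 'above' when a value falls outside its range, else None."""
    low, high = LIMITS[name]
    if low is not None and value < low:
        return "below"
    if high is not None and value > high:
        return "above"
    return None


if __name__ == "__main__":
    readings = {"systolic_bp": 150.0, "heart_rate": 72.0, "weight_gain_kg": 2.5}
    for measure, reading in readings.items():
        print(measure, reading, flag_measure(measure, reading))
```

A dashboard could pair each flag with guidance on action, which is the feature FIT associates with triggering task-detail learning.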

Dashboard Evaluation: The feasibility and usability of the dashboards were evaluated using the TURF (Tasks, Users, Representations and Functions) framework37 with a sample of home care nurses. TURF has four components which guided our usability evaluation: function (how useful is the dashboard?), users (how satisfying do the users find the dashboard to use?), and representations and tasks (how usable is the dashboard in practice?).

Participants were provided with a series of tasks to complete using the dashboard. Usefulness was evaluated through analysis of nurse interactions with the dashboard, which were recorded using Morae® software, including time to complete tasks and on-screen movements (mouse and keyboard). Satisfaction was evaluated using two established measures of system usability, the System Usability Scale (SUS)38 and the short form Questionnaire for User Interaction Satisfaction (QUIS)39. We evaluated usability in two ways: by conducting a heuristic evaluation with experts (n=3), and by conducting a task analysis in which we compared users’ time on task with that of system experts.

Results of Usability Evaluation

The full results of the usability evaluation are in press40. The majority of nurses were able to use the dashboard immediately without any prior training or overview of the system (91%), and were able to use the icons and radio buttons to switch between data types and views (96%). There was some disagreement between nurses on the requirement for training; overall the dashboard layout and use of color coding were positively received and nurses liked the ability to see trends over time. The mean SUS score for the dashboard was 73.2 (SD=18.8) (SUS range is 0-100, with a higher rating indicating greater usability). QUIS items are rated from 1 (where the word has a negative meaning) to 9 (where the word has a positive meaning). Mean QUIS scores ranged from 6.1 (SD=1.0) for overall user reactions to 7.8 (SD=1.1) for terminology and dashboard information. The expert heuristic evaluation identified 5 minor usability issues and 12 cosmetic problems with the design of the dashboard, such as the need for a contrasting color for the icon indicating which graph is selected. On average the nurses took 5.7 minutes (SD=2.4) to complete the dashboard tasks. This was more time than expert users took (mean=1.4 minutes, SD=0.6), but reflects that the nurses had no training on the system.
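For readers unfamiliar with how the 0-100 SUS range arises, the standard SUS scoring rule can be sketched as follows. This shows the conventional scoring of the ten-item scale (odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5); it is not the study's analysis code, and `sus_score` is an illustrative name.

```python
def sus_score(responses):
    """Compute a System Usability Scale score from ten responses on a 1-5 scale.

    Odd-numbered items are positively worded and contribute (response - 1);
    even-numbered items are negatively worded and contribute (5 - response).
    The summed contributions (0-40) are scaled to 0-100 by multiplying by 2.5.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if not 1 <= response <= 5:
            raise ValueError("each response must be between 1 and 5")
        if item_number % 2 == 1:
            total += response - 1
        else:
            total += 5 - response
    return total * 2.5


if __name__ == "__main__":
    # A respondent who answers 3 (neutral) on every item scores 50.0.
    print(sus_score([3] * 10))
```

A sample mean such as the 73.2 reported above is the average of these per-respondent scores.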

Conclusion

To ensure that feedback interventions such as clinical dashboards will lead to the desired changes in clinicians’ performance against quality standards, it is important to consider if and how the intervention may actually impact on behavior. FIT offers a valuable approach to the design of interventions such as clinical dashboards, which can be empirically tested. In this paper we outline an approach to dashboard design which has explicitly used FIT as the theoretical basis for the functionality of the dashboard, to provide feedback to home care nurses at the point of care. The results of the usability evaluation will inform further development prior to deployment and evaluation of the dashboard in a clinical setting.

One limitation of our approach involves participant self-efficacy or anxiety, which were not explicitly addressed in the usability evaluation. These components of the FIT model reflect individual user response to the feedback. Due to our theory-based design, including availability of the dashboard (without seeking it out) and custom user preferences, we hypothesize that use will modify self-efficacy (motivation) and anxiety to positively influence the care process. Future studies can measure participants’ anxiety and self-efficacy with and without dashboard feedback and compare effects on care process and patient outcomes.

Our approach illustrates the value of accounting for individual user differences and design features that ensure fit with clinician workflow. The FIT model suggests these features should positively impact clinician reactions to feedback information. Once the dashboard is deployed in practice further research will be needed to evaluate its impact on clinician behavior and patient outcomes, to identify if it leads to improvements in the quality of care received by patients.

Acknowledgements

This project was supported by grant number R21HS023855 from the Agency for Healthcare Research and Quality. The content is solely the responsibility of the authors and does not necessarily represent the official views of the Agency for Healthcare Research and Quality.

We would also like to thank the following members of the research team who contributed to the data collection and analysis of the studies highlighted here: Ms Nicole Onorato, Ms Karyn Jonas, Dr Robert J Rosati, Ms Yolanda Barrón.

References

- 1. Ivers N, Jamtvedt G, Flottorp S, et al. Audit and feedback: effects on professional practice and patient outcomes. Cochrane Database of Systematic Reviews. 2012;(Issue 6). Art. No.: CD000259. doi: 10.1002/14651858.CD000259.pub3.

- 2. Centers for Medicare & Medicaid Services. Outcome-based quality improvement (OBQI) manual. Washington, DC: Centers for Medicare & Medicaid Services; 2010.

- 3. Centers for Medicare & Medicaid Services. Process-based quality improvement (PBQI) manual. Washington, DC: Centers for Medicare & Medicaid Services; 2010.

- 4. Centers for Medicare & Medicaid Services. Outcome-based quality monitoring (OBQM) manual. Agency patient-related characteristics reports and potentially avoidable event reports. Washington, DC: Centers for Medicare & Medicaid Services; 2010.

- 5. Giesbers A, Schouteen R, Poutsma E, et al. Feedback provision, nurses’ well-being and quality improvement: towards a conceptual framework. Journal of Nursing Management. 2015;23:682–91. doi: 10.1111/jonm.12196.

- 6. Kluger A, DeNisi A. The effects of feedback interventions on performance: A historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychological Bulletin. 1996;119(2):254–84.

- 7. Benn J, Arnold G, Wei I, et al. Using quality indicators in anaesthesia: feeding back data to improve care. British Journal of Anaesthesia. 2012;109(1):80–91. doi: 10.1093/bja/aes173.

- 8. Hysong S. Meta-analysis: audit and feedback features impact effectiveness on care quality. Medical Care. 2009;47(3):356–63. doi: 10.1097/MLR.0b013e3181893f6b.

- 9. Chen-Yuan E, Vinci L, Quinn M, et al. Using feedback to change primary care physician behavior. Journal of Ambulatory Care Management. 2015;38(2):118–24. doi: 10.1097/JAC.0000000000000055.

- 10. Sinuff T, Muscedere J, Rozmovits L, et al. A qualitative study of the variable effects of audit and feedback in the ICU. BMJ Quality and Safety. 2015;24:393–99. doi: 10.1136/bmjqs-2015-003978.

- 11. Dowding D, Randell R, Gardner P, et al. Dashboards for improving patient care: Review of the literature. International Journal of Medical Informatics. 2015;84:87–100. doi: 10.1016/j.ijmedinf.2014.10.001.

- 12. Bennett J, Glasziou P. Computerized reminders and feedback in medication management: a systematic review of randomised controlled trials. Medical Journal of Australia. 2003;178:217–22. doi: 10.5694/j.1326-5377.2003.tb05166.x.

- 13. Morgan MB, Branstetter BI, Lionetti DM, et al. The radiology digital dashboard: effects on report turnaround time. Journal of Digital Imaging. 2008;21(1):50–58. doi: 10.1007/s10278-007-9008-9.

- 14. Pablate J. The effect of electronic feedback on anesthesia providers’ timely preoperative antibiotic administration. University of North Florida; 2009. 90 p.

- 15. Starmer J, Giuse D. A real-time ventilator management dashboard: toward hardwiring compliance with evidence-based guidelines. AMIA Annual Symposium Proceedings. 2008:702–06.

- 16. Batley NJ, Osman HO, Kazzi AA, et al. Implementation of an emergency department computer system: design features that users value. Journal of Emergency Medicine. 2011;41(6):693–700. doi: 10.1016/j.jemermed.2010.05.014.

- 17. Linder JA, Schnipper JL, Tsurikova R, et al. Electronic health record feedback to improve antibiotic prescribing for acute respiratory infections. American Journal of Managed Care. 2010;16(12 Suppl HIT):e311–9.

- 18. Koopman RJ, Kochendorfer KM, Moore JL, et al. A diabetes dashboard and physician efficiency and accuracy in accessing data needed for high-quality diabetes care. Annals of Family Medicine. 2011;9:398–405. doi: 10.1370/afm.1286.

- 19. McMenamin J, Nicholson R, Leech K. Patient Dashboard: the use of a colour-coded computerised clinical reminder in Whanganui regional general practices. Journal of Primary Health Care. 2011;3(4):307–10.

- 20. Waitman LR, Phillips IE, McCoy AB, et al. Adopting real-time surveillance dashboards as a component of an enterprisewide medication safety strategy. Joint Commission Journal on Quality & Patient Safety. 2011;37:326–32. doi: 10.1016/s1553-7250(11)37041-9.

- 21. Zaydfudim V, Dossett LA, Starmer JM, et al. Implementation of a real-time compliance dashboard to help reduce SICU ventilator-associated pneumonia with the ventilator bundle. Archives of Surgery. 2009:656–62.

- 22. Van Der Meulen M, Logie RH, Freer Y, et al. When a graph is poorer than 100 words: A comparison of computerised natural language generation, human generated descriptions and graphical displays in neonatal intensive care. Applied Cognitive Psychology. 2010;24(1):77–89.

- 23. Hutchinson J, Alba JW, Eisenstein EM. Heuristics and biases in data-based decision making: Effects of experience, training, and graphical data displays. Journal of Marketing Research. 2010;47(4):627–42.

- 24. Harvey N. Learning judgment and decision making from feedback. In: Dhami M, Schlottmann A, Waldmann M, eds. Judgement and Decision Making as a Skill: Learning, Development and Evolution. Cambridge, UK: Cambridge University Press; 2013:199–226.

- 25. Dowding DW, Russell D, Onorato N, et al. Technology solutions to support care continuity in home care: A focus group study. Journal for Healthcare Quality. 2017. Epub ahead of print. doi: 10.1097/JHQ.0000000000000104.

- 26. Dowding DW, Russell D, Jonas K, et al. Does Level of Numeracy and Graph Literacy Impact Comprehension of Quality Targets? Findings from a Survey of Home Care Nurses. AMIA Annual Symposium Proceedings. 2017;2017:635–640.

- 27. Dowding D, Merrill J, Onorato N, et al. The impact of home care nurses’ numeracy and graph literacy on comprehension of visual display information: implications for dashboard design. Journal of the American Medical Informatics Association. 2018;25(2):175–182. doi: 10.1093/jamia/ocx042.

- 28. National Association for Home Care & Hospice. Basic statistics about home care. Washington, DC: National Association for Home Care & Hospice; 2010.

- 29. Fortinsky R, Madigan E, Sheehan T, et al. Risk factors for hospitalization in a national sample of medicare home health care patients. Journal of Applied Gerontology. 2014;33(4):474–93. doi: 10.1177/0733464812454007.

- 30. Schipper J, Coviello J, Chyun D. Fluid Overload: Identifying and Managing Heart Failure Patients At Risk for Hospital Readmission. In: Boltz M, Capezuti E, Fulmer T, eds. Evidence-Based Geriatric Nursing Protocols for Best Practice. 4th ed. New York, NY: Springer Publishing Company; 2011.

- 31. National Institute for Health and Clinical Excellence. Chronic heart failure. Management of chronic heart failure in adults in primary and secondary care. Manchester, UK: NICE; 2010.

- 32. Yancy C, Jessup M, Bozkurt B, et al. 2013 ACCF/AHA Guideline for the Management of Heart Failure. Journal of the American College of Cardiology. 2013;62(16):e147–239. doi: 10.1016/j.jacc.2013.05.019.

- 33. Galesic M, Garcia-Retamero R. Graph Literacy: A Cross-Cultural Comparison. Medical Decision Making. 2011;31:444–57. doi: 10.1177/0272989X10373805.

- 34. Gaissmaier W, Wegwarth O, Skopec D, et al. Numbers can be worth a thousand pictures: Individual differences in understanding graphical and numerical representations of health-related information. Health Psychology. 2012;31(3):286–96. doi: 10.1037/a0024850.

- 35. Dunn Lopez K, Wilkie D, Yao Y, et al. Nurses’ Numeracy and Graphical Literacy: Informing Studies of Clinical Decision Support Interfaces. Journal of Nursing Care Quality. 2016;31(2):124–30. doi: 10.1097/NCQ.0000000000000149.

- 36. Lipkus I, Samsa G, Rimer B. General performance on a numeracy scale among highly educated samples. Medical Decision Making. 2001;21:37–44. doi: 10.1177/0272989X0102100105.

- 37. Zhang J, Walji M. TURF: Toward a unified framework of EHR usability. Journal of Biomedical Informatics. 2011;44:1056–67. doi: 10.1016/j.jbi.2011.08.005.

- 38. Bangor A, Kortum P, Miller J. An empirical evaluation of the system usability scale. International Journal of Human-Computer Interaction. 2008;24(6):574–94.

- 39. Chin J, Diehl V, Norman K. Development of an instrument measuring user satisfaction of the human-computer interface. CHI ’88 Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; Washington DC. ACM; 1988:213.

- 40. Dowding D, Merrill J, Barrón Y, et al. Usability evaluation of a dashboard for home care nurses. CIN: Computers, Informatics, Nursing. 2018. In press.