Abstract

The Consolidated Clinical Document Architecture (C-CDA) is the primary standard for clinical document exchange in the United States. While document exchange is prevalent today, prior research has documented challenges to high quality, effective interoperability using this standard. Many electronic health records (EHRs) have recently been certified to a new version of the C-CDA standard as part of federal programs for EHR adoption. This renewed certification generated example documents from 52 health information technologies that have been made publicly available. This research applies automated tooling and manual inspection to evaluate conformance and data quality of these testing artifacts. It catalogs interoperability progress as well as remaining barriers to effective data exchange. Its findings underscore the importance of programs that evaluate data quality beyond schematron conformance to enable the high quality and safe exchange of clinical data.

Introduction

Interoperability of medical data is essential to improve care quality and efficiency. It has been identified as necessary for clinical innovation and critical to open electronic health records (EHRs)1, 2. Value-based care models rely on information sharing to function properly, and the US federal government has focused recent attention on advancing interoperability3, 4.

Over the past decade in the United States, significant interoperability progress has been made. Today, a majority of hospitals and physicians can electronically share data and 87% of patients report access to their medical data5. In addition, the volume of data exchange has accelerated as EHR and health information exchange (HIE) adoption has grown. Despite this, industry surveys highlight interoperability as a major challenge. They identify data quality concerns due to incompleteness, inadequate codification and poor usability6, 7. Reducing the complexity and cost of interoperability has been recognized as vital to promote wider adoption and effective use8, 9. Industry-wide standards represent a significant opportunity to decrease the burden of exchange while improving data quality3.

There are multiple standards available for clinical data exchange. As part of the Medicare and Medicaid EHR Incentive Program, commonly known as Meaningful Use, the federal government requires providers to use certified technology to send and receive patient summaries using clinical document standards10. Currently, the primary document standard for exchange is the Consolidated Clinical Document Architecture (C-CDA) maintained by Health Level 7 (HL7). This document standard can be used for care transitions, EHR migrations, data export, research repositories, quality measurement and patient download11-13. Over 500 million C-CDA documents are exchanged annually in the United States, and large technology companies, like Apple, increasingly use this format to aggregate patient data14-16. Newer standards, such as Fast Healthcare Interoperability Resources (FHIR), also hold great potential but have had limited adoption to date.

The C-CDA is a library of documents that support multiple scenarios for data exchange. These documents use extensible markup language (XML) to encode data in a format that permits both human-readable display (narrative parts of C-CDA) and machine-readable parsing (structured sections). The C-CDA standard is complicated, running over 1,000 pages in length and covering a wide range of clinical domains, different terminologies and use cases. Prior research has demonstrated substantial variability in how clinical content is encoded in C-CDA documents partly due to this complexity17-19. Such variability impedes effective data exchange, broader user adoption and improved patient outcomes. In response, the most recent stage of federal regulations strengthened the testing of C-CDA 2.1 documents and added a requirement that testing artifacts of certified systems be made publicly available20. The domains of clinical data in C-CDA documents and their respective relevance for interoperability and clinical use are shown in Table 1.

Table 1.

Relevance of Clinical Domains in C-CDA documents Tested as Part of Certification

| Domain | C-CDA 2.1 Location | Relevance for Interoperability and Clinical Use |
|---|---|---|
| Patient Name | Document header | Patient identification and matching |
| Sex (administrative and birth sex) | Document header and structured section (social history) | Patient matching and gender-related care |
| Date of Birth | Document header | Patient matching and age calculation. Common eligibility criteria for quality measures |
| Race and Ethnicity | Document header | Race-related medical decisions. Common stratification criteria for quality measures |
| Preferred Language | Document header | Patient communication |
| Care Team Members | Document header | Care team coordination |
| Drug Allergies | Structured section (allergies) | Drug-allergy checking. Common exclusion criteria for quality measures |
| Health Concerns | Structured section (health concerns) | Patient risk factors |
| Immunizations | Structured section (immunizations) | Preventative care. Common quality measure criteria |
| Implanted Devices | Structured section (equipment) | Prior medical history |
| Medications | Structured section (medications) | Prior and current therapies. Used in medication reconciliation. Common quality measure criteria |
| Laboratory Tests & Values | Structured section (results) | Objective assessments of organ systems. Common quality measure criteria |
| Patient Goals | Structured section (goals) | Desired care outcomes |
| Plan of Treatment | Structured section (plan of treatment) | Planned care activities |
| Problems | Structured sections (problems and encounter diagnoses) | Prior and current diagnostic history. Common eligibility criteria for quality measures |
| Procedures | Structured section (procedures) | Prior and current medical interventions. Common quality measure criteria |
| Smoking Status | Structured section (social history) | Prior and current social history. Common quality measure criteria |
| Vital Signs | Structured section (vital signs) | Objective assessments for patient health and dosing. Common quality measure criteria |

Objectives

The Veterans Health Administration (VA) exchanges clinical documents with over 145 partners, providing data on up to nine million veterans21. This constitutes one of the largest HIEs in size and breadth in the United States. The VA has been active in national initiatives to advance interoperability, such as eHealth Exchange, and research to promote effective use of C-CDA documents18,22. Given the importance of data quality in driving user adoption, the VA has implemented an active data quality surveillance program to monitor and score C-CDA documents using automated tools.

Given that EHRs will begin transitioning in 2018 to the C-CDA 2.1 standard, the VA seeks to understand progress in the adoption of standards and current issues that affect interoperability. This research examines testing artifacts from recent certification through automated tooling and manual review. These clinical documents were tested as part of the Office of the National Coordinator for Health Information Technology (ONC) Health IT Certification Program with implementation specifications and certification criteria adopted by the Secretary of Health and Human Services. Specifically, this research focuses on demonstrating the value of clinical rules beyond schematron conformance to identify computability and usability issues.

Methods

854 C-CDA documents were retrieved from a public repository maintained by ONC as of January 22, 2018 (https://github.com/siteadmin/2015-C-CDA-Certification-Samples). These documents are testing artifacts from fictional patient records scripted by ONC and created by health information technologies as part of certification. The repository contains duplicates from multiple testing scenarios and some older standards of data exchange. To focus on documents relevant to the C-CDA 2.1 standard, the pool of documents was filtered to exclude documents with either invalid XML (n = 1) or declared conformance to prior C-CDA templates (n = 105). To reduce duplication bias among the remaining samples, documents for the same patient from the same technology of the same document type were excluded except the most recent (n = 347). The most recent document was selected since it was likely to have addressed any defects identified during certification.
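The filtering and de-duplication steps described above can be sketched in a few lines. This is a minimal illustration, not the authors' actual procedure: the `DocRecord` fields, the sample paths and the vendor names are all hypothetical, and it assumes that validity, declared template version and creation time have already been extracted from each document.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class DocRecord:
    # Hypothetical metadata fields; the paper does not describe its data model.
    path: str
    technology: str
    patient: str
    doc_type: str
    created: datetime
    valid_xml: bool = True
    is_ccda_21: bool = True


def select_documents(records):
    """Apply the exclusion steps, then keep the newest document per group."""
    latest = {}
    for rec in records:
        # Exclude invalid XML and documents declaring older C-CDA templates.
        if not rec.valid_xml or not rec.is_ccda_21:
            continue
        # One document per (technology, patient, document type): the most recent.
        key = (rec.technology, rec.patient, rec.doc_type)
        if key not in latest or rec.created > latest[key].created:
            latest[key] = rec
    return sorted(latest.values(), key=lambda r: r.path)


# Illustrative records only; none of these paths exist in the ONC repository.
sample = [
    DocRecord("a/ccd_v1.xml", "VendorA", "Alice Newman", "CCD", datetime(2017, 3, 1)),
    DocRecord("a/ccd_v2.xml", "VendorA", "Alice Newman", "CCD", datetime(2017, 9, 1)),
    DocRecord("a/ref.xml", "VendorA", "Alice Newman", "Ref", datetime(2017, 5, 1)),
    DocRecord("b/old.xml", "VendorB", "Jeremy Bates", "CCD", datetime(2016, 1, 1), is_ccda_21=False),
    DocRecord("b/bad.xml", "VendorB", "Jeremy Bates", "CCD", datetime(2017, 1, 1), valid_xml=False),
]
selected = select_documents(sample)
print([rec.path for rec in selected])
```

Keying on technology, patient and document type mirrors the stated rule that only the most recent document in each such group was retained.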

The remaining 401 documents represented 52 distinct certified technologies across 46 different companies. According to document timestamps, a majority (n = 275) of these documents were generated in 2017. A majority of the clinical documents were continuity of care documents (CCDs, n = 284) with the remainder being referral notes (n = 80), discharge summaries (n = 14) or other document types (n = 23). The mean number of documents per technology was 7.7 (range of 1-67). Nearly half (n = 164) of these documents were from four fictional patients outlined in certification testing. A summary of vendors’ samples, available clinical document types and respective test cases is shown in Table 2. A repository of the test scenarios and clinical documents examined in this research has been made publicly available (https://www.github.com/jddamore/ccda-samples).

Table 2.

C-CDA Document Samples Examined in this Research. Document types: CCD = continuity of care document, Ref = referral note, DS = discharge summary. Test cases: A = Alice Newman test scenario. B = Jeremy Bates test scenario. C = John Wright test scenario. D = Rebecca Larson test scenario.

| Technology | Document Count | Document Types | Test Cases |
|---|---|---|---|
| 360 Oncology | 2 | CCD | A, B |
| Advanced Technologies Group | 5 | CCD | A, B |
| Afoundria | 7 | CCD, Ref | A, B |
| Agastha | 10 | CCD | A, B |
| Allscripts Follow My Health | 7 | CCD, Ref, DS | A, B, C, D |
| Allscripts Professional | 2 | CCD, Ref | A, B |
| Allscripts Sunrise | 4 | CCD | A, B, C, D |
| Allscripts TouchWorks | 9 | CCD, Ref | A, B |
| Amrita | 67 | CCD, Ref, DS, other | C, D |
| Atos Pulse | 4 | CCD | A, B, C, D |
| Bizmatics PrognoCIS | 1 | CCD | A |
| CareEvolution | 10 | CCD | A, B |
| Carefluence | 4 | Ref | A, B, C, D |
| ChartLogic | 1 | CCD | A |
| CompuLink | 1 | CCD | B |
| EchoMan | 3 | CCD | None |
| Edaris Forerun | 4 | CCD, Ref | A, B |
| EHealthPartners | 2 | CCD | A, B |
| EMR Direct | 1 | CCD | B |
| Equicare | 1 | CCD | A |
| eRAD | 3 | CCD | A, B |
| Freedom Medical | 2 | CCD | A, B |
| Get Real Health | 6 | CCD, Ref | A, B, C, D |
| Health Companion | 1 | CCD | A |
| HealthGrid | 6 | CCD, Ref, DS | A, B, C, D |
| Henry Schein | 6 | CCD, other | A, B |
| Intellichart | 4 | CCD | A, B, C, D |
| ioPracticeWare | 5 | other | A, B |
| iPatientCare | 13 | CCD, Ref, DS, other | A, B, C, D |
| Key Chart | 2 | other | None |
| McKesson Paragon | 10 | CCD, Ref, DS | C, D |
| MDIntellisys IntelleChart | 7 | CCD, other | A, B |
| MDLogic | 2 | CCD | A, B |
| MDOffice | 3 | other | None |
| MedConnect | 11 | CCD, Ref | A, B |
| Medflow RCP | 2 | other | A, B |
| Medfusion | 2 | CCD | A, B |
| MedHost Enterprise | 53 | CCD | A, B, C, D |
| Medical Office Technologies | 4 | CCD, Ref | A, B |
| Meditech Magic | 6 | CCD, Ref, DS | C, D |
| ModuleMD Wise | 4 | Ref | A, B, C, D |
| Navigating Cancer | 3 | CCD | A, B |
| Netsmart myEvolv | 33 | Ref | A, B, C |
| NextGen | 13 | CCD, Ref, other | A, B |
| NextGen MediTouch | 7 | CCD, Ref | A, B |
| NextTech | 15 | CCD, Ref | A, B, C, D |
| OpenVista CareVue | 10 | CCD, Ref, DS | A, B, C, D |
| Practice Fusion | 4 | CCD, Ref | A, B |
| SocialCare | 1 | CCD | A |
| Sophrona Solutions | 2 | CCD | A, B |
| SuccessEHS | 9 | CCD, Ref, other | A, B |
| YourCareUniverse | 7 | CCD, Ref, DS | A, B, C, D |

Each of the documents was assessed using three tooling methods to evaluate potential interoperability concerns. The first was to submit the documents to the HL7 schematron using an open-source tool for schematron evaluation (https://github.com/ewadkins/cda-schematron-server). Schematron validation uses conformance statements made in the HL7 C-CDA 2.1 Implementation Guide to create testable rules for the XML. This level of testing focuses primarily on the XML structure of documents rather than the semantic meaning of the data. For example, a patient diagnosis could have an onset date in the future, which is not clinically reasonable but would validate using schematron conformance. Results from schematron testing were collected and five records from each schematron error were examined to validate whether the rule was triggered appropriately. In addition, all C-CDA documents were submitted to the Standards Implementation and Testing Environment, maintained by the ONC, for conformance evaluation. This tool does not use the HL7 schematron but provides error reporting in a similar format23. There are 2,123 potential schematron tests for errors using the C-CDA 2.1 schematron and all documents were successfully processed using the open-source and federal tooling.
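The distinction between structural and semantic testing can be illustrated with the future-onset example mentioned above. The sketch below is an assumption-laden illustration rather than any tool used in this research: the XML fragment is a simplified stand-in for a problem observation, the function name `onset_in_future` is hypothetical, and only date values of at least eight digits (YYYYMMDD) are handled.

```python
import xml.etree.ElementTree as ET
from datetime import date, datetime

# HL7 v3 namespace used by CDA documents.
NS = {"hl7": "urn:hl7-org:v3"}

# Simplified, illustrative fragment; a real problem observation carries
# template identifiers, codes and more that are omitted here.
SNIPPET = """<observation xmlns="urn:hl7-org:v3" classCode="OBS" moodCode="EVN">
  <effectiveTime><low value="20991231"/></effectiveTime>
</observation>"""


def onset_in_future(observation, today):
    """Return True when the onset date (effectiveTime/low) is after `today`."""
    low = observation.find("hl7:effectiveTime/hl7:low", NS)
    if low is None:
        return False
    value = low.get("value", "")
    if len(value) < 8 or not value[:8].isdigit():
        # Partial or missing dates are not judged by this sketch.
        return False
    onset = datetime.strptime(value[:8], "%Y%m%d").date()
    return onset > today


obs = ET.fromstring(SNIPPET)
# The reference date is fixed so the example is reproducible.
flag = onset_in_future(obs, date(2018, 1, 22))
print(flag)
```

The fragment is well-formed and structurally plausible, yet the check flags it, which is the kind of issue a purely structural rule set would not be expected to catch.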

Next, each of the documents was parsed using open-source Model-Driven Health Tools (MDHT). This tooling parsed, extracted, and formatted the structured clinical document data into tables, one for each section of the C-CDA. Results were analyzed in Microsoft Excel (Redmond, Washington) by data quality specialists for relevance and compliance to the C-CDA 2.1 standard and best practices in information exchange. Observations and themes of this processing were recorded and categorized. In addition, content of the human-readable portions was examined using HL7 and VA stylesheets for issues that may affect readability and clinical utility. All documents were successfully processed by MDHT.

The final method was to submit each of the documents to a proprietary tool to evaluate C-CDA data quality, Diameter Health Analyze v3.10 (Farmington, CT). The current release of this tool has 323 rules that check semantic aspects of C-CDA data quality beyond what is included in schematron testing. For example, content checks are made for patient safety, dates are checked for reasonableness and terminologies for appropriate coding. Similar to the schematron validation, five records from each alert were examined to validate whether the rule was triggered appropriately. The authors identified a subset of rules as critical to patient safety and effective data exchange for comparative analysis across technologies. All documents were successfully processed by the software.

Results

Schematron Testing

Of the 401 documents, 55 had no schematron errors according to the HL7 schematron tooling. The remaining documents had a total of 1,695 schematron errors (average 4.9 errors per document) with a range of 1-42 errors per document. When using the ONC conformance tooling, 311 documents had no reported errors23. The remaining documents had a total of 374 errors (average 4.2 errors per document) with a range of 1-51 errors per document. The authors noted that the ONC tooling, which is more likely to be used for the certification testing, produced fewer errors relative to the HL7 schematron. Additional schematron analysis focused on the HL7 tooling since its errors draw directly from standards guidance.

The 1,695 schematron errors were produced by 76 unique schematron rules. Since the schematron contains 2,132 potential errors, only a small fraction (3.6%) of all rules were triggered. Based on individual auditing of the rules, the authors found a positive predictive value of 83% for a rule firing on an actual XML conformance issue. False positives were examined and were generally caused by the schematron not being up to date with errata approved for C-CDA 2.1. Review of the individual schematron errors and corresponding XML showed that 77% of the schematron errors related to inappropriate methods to encode “no information.” A further 10% of errors were due to template-related issues in the C-CDA documents. The remaining 13% fell into categories regarding incorrect XML formatting and terminology use.
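To make the "no information" problem concrete, the sketch below shows how a consuming system might distinguish a few ways that an empty section can appear. It is an illustration under stated assumptions, not a statement of which encodings the standard permits: the `nullFlavor` and `negationInd` attributes are part of HL7 v3, but the function `classify_section`, its labels and the simplified XML fragments are hypothetical.

```python
import xml.etree.ElementTree as ET

NS = {"hl7": "urn:hl7-org:v3"}


def classify_section(section):
    """Label how a section signals the presence or absence of data."""
    if section.get("nullFlavor"):
        return "section nullFlavor"
    entries = section.findall("hl7:entry", NS)
    if not entries:
        return "no entries"
    # An entry counts as negated if any element inside it sets negationInd="true".
    negated = [
        entry for entry in entries
        if any(el.get("negationInd") == "true" for el in entry.iter())
    ]
    if len(negated) == len(entries):
        return "negated entries only"
    return "has data"


# Simplified, illustrative fragments (template IDs and codes omitted).
EMPTY = '<section xmlns="urn:hl7-org:v3"><title>Allergies</title></section>'
NULL_FLAVOR = '<section xmlns="urn:hl7-org:v3" nullFlavor="NI"><title>Allergies</title></section>'
NEGATED = ('<section xmlns="urn:hl7-org:v3"><entry><act><entryRelationship>'
           '<observation negationInd="true"/></entryRelationship></act></entry></section>')
POPULATED = ('<section xmlns="urn:hl7-org:v3"><entry><act><entryRelationship>'
             '<observation/></entryRelationship></act></entry></section>')

labels = [classify_section(ET.fromstring(x)) for x in (EMPTY, NULL_FLAVOR, NEGATED, POPULATED)]
print(labels)
```

A receiver that handles only one of these shapes risks either importing placeholder entries as real data or discarding genuine entries.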

Model Driven Health Tools and Visual Inspection

Parsing of machine-readable content from the 401 documents resulted in 10,286 clinical data elements for inspection. These included 589 allergy entries; 471 encounter entries; 408 equipment and implant entries; 505 goal observations; 623 immunization entries; 1,155 medication entries; 716 plan of treatment entries; 1,497 problem or diagnosis entries; 1,196 result entries; 783 social history entries; 621 status assessment entries and 1,722 vital sign entries. Results tabulated in a spreadsheet were examined to investigate and catalog heterogeneity and errors.

Variations across clinical domains were categorized into four generalizable themes. The first was how null and “no information” were encoded across different domains. This variation reiterated errors from schematron testing and findings from prior research17, 18. In some instances, a single negated entry was included in documents to represent no data, in others a code was used, and in still others no entry was provided. Parsing the machine-readable content in such instances introduces complexity to ensure that empty data are excluded while no real patient data are dropped. The second theme was variability in the location of information within a particular clinical data element. For example, among the 471 encounters in the clinical documents, 202 included the encounter time as a <low> child element of <effectiveTime>, 143 included time only in the <effectiveTime> value attribute, 107 included no time information, while 19 used a combination of the <effectiveTime> and its child <high> element. This variability strains consuming systems that have to parse and reconcile multiple locations for similar data. A third theme was variability in where information was included across clinical domains. An illustrative example of this was the inclusion of a flu immunization as a procedure rather than an immunization. This may result from how clinical activities are recorded and subsequently assembled in C-CDA documents. The final theme was an inconsistency in the use of terminology. This category spans many clinical domains. One example is medication coding, which used brand names (e.g., Tylenol) in some technologies and generic names (e.g., acetaminophen) in others. Both concepts are represented in the same medication terminology and acceptable in the C-CDA standard. Another example is heterogeneity in how status can be invoked. For example, with a refused immunization, one product used an “aborted” status, another a “cancelled” status, while others used a “completed” status with clinical entry negation. While other observations were made outside of the four categorical themes above, these capture the vast majority of data concerns from manual review of parsed clinical data.
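The encounter time example shows why consuming systems need fallback logic. The following sketch, with a hypothetical function name and an assumed precedence order (`<low>`, then the `value` attribute, then `<high>`), illustrates one way a parser might reconcile the locations observed; the precedence is a design assumption, not something prescribed by this research or the standard.

```python
import xml.etree.ElementTree as ET

NS = {"hl7": "urn:hl7-org:v3"}


def encounter_time(encounter):
    """Return (timestamp, source) from the first populated location, else (None, 'none')."""
    eff = encounter.find("hl7:effectiveTime", NS)
    if eff is None:
        return None, "none"
    low = eff.find("hl7:low", NS)
    if low is not None and low.get("value"):
        return low.get("value"), "low"
    if eff.get("value"):
        return eff.get("value"), "value"
    high = eff.find("hl7:high", NS)
    if high is not None and high.get("value"):
        return high.get("value"), "high"
    return None, "none"


# Simplified, illustrative encounter fragments.
WITH_LOW = '<encounter xmlns="urn:hl7-org:v3"><effectiveTime><low value="20170622"/></effectiveTime></encounter>'
WITH_VALUE = '<encounter xmlns="urn:hl7-org:v3"><effectiveTime value="20170622"/></encounter>'
WITHOUT_TIME = '<encounter xmlns="urn:hl7-org:v3"/>'

results = [encounter_time(ET.fromstring(x)) for x in (WITH_LOW, WITH_VALUE, WITHOUT_TIME)]
print(results)
```

Returning the source alongside the timestamp lets downstream analysis count how often each location is used, similar in spirit to the tallies reported above.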

In addition, each of the documents for a single test case was examined using a combination of HL7 and VA stylesheets. Variability in the information rendered in the human-readable display was noted across all of the clinical domains. For example, with medications, variability in the use of capitalization, brand names, TALLman lettering, and coordinated dose amounts was observed in rendering drug information. Additionally, ancillary information such as the medication sig, status, dose, route, patient instructions, dispense, and fill quantities was rendered in some systems but not others. A full contrast of this medication example has been made publicly available online (https://github.com/jddamore/ccda-samples/blob/master/z-infographic/medications.jpg).

Proprietary Tooling Evaluation: Best Practice Clinical Validation

For the 401 documents, all triggered multiple alerts using the Diameter Health software. The total number of alerts was 21,304 (average 53.1 per document) with a range from 14 to 224 per document. Of the potential 323 rules active in the software, 227 (70.3%) fired on at least one document. Based on individual alert auditing, the authors found a positive predictive value of 93% for the rule firing to an actual data quality issue in the C-CDA document.

Generated alerts were categorized by two dimensions. The first was whether the alert was related to completeness (data richness of C-CDA document) or syntax (clinical meaning or terminology of C-CDA content). Of all alerts generated by the tooling, 57% were related to data completeness and 43% were related to syntax. The second dimension was which clinical domain the rule fell into from Table 1. Of all alerts, 19% were in document header, 17% in medications, 16% in vital signs, 12% in problems, and 11% in results with the remaining 25% spread across other clinical domains included in C-CDA documents.

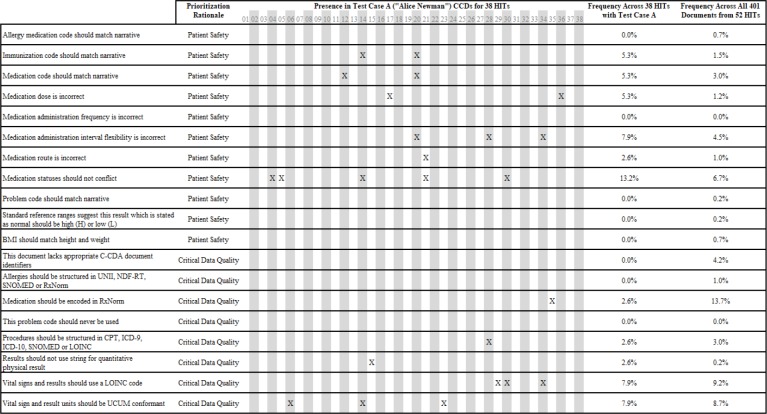

Patient Safety and Critical Data Analysis

The authors identified 19 categories for specific review to focus on how data in C-CDA documents may affect patient safety and critical data quality. These domains were examined using the automated tooling from the above analyses. The first basis for comparative analysis was to use all technologies that had a common test patient available in the same document format. The test case A scenario for “Alice Newman” in a CCD provided for the largest comparison across health information technologies. This allowed for 38 of the 52 technologies included in study to be compared in two ways. First, the 19 categories were examined to determine whether the issue appeared in the test scenario across 38 technologies. Next, those same rules were applied to all 401 available documents from the 52 technologies to determine overall prevalence of the potential concern. These results are shown in Table 3.

Table 3:

Patient Safety & Critical Data Analysis Comparison across Health Information Technologies (HITs)

The four most frequent problems identified as part of this analysis were that medications should be encoded in RxNorm (13.7% of all documents), vital signs and results should use LOINC (9.2% of all documents), vital signs and results should use the Unified Code for Units of Measure (UCUM) for physical values (8.7% of all documents), and conflicting status information was included for medications (6.7% of all documents). Identified errors were distributed without clear pattern across technologies and several vendors had no critical issues.
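A terminology rule of the kind described above can be approximated by checking the code system declared on each medication code. This sketch is not the proprietary rule set used in the study: the function name and the XML fragment are hypothetical, the non-RxNorm code system `1.2.3.4.5` is a made-up local identifier, and the codes shown are for illustration only. The OID `2.16.840.1.113883.6.88` is the identifier commonly used for RxNorm.

```python
import xml.etree.ElementTree as ET

NS = {"hl7": "urn:hl7-org:v3"}
RXNORM_OID = "2.16.840.1.113883.6.88"

# Simplified, illustrative medications section with three entries.
DOC = """<section xmlns="urn:hl7-org:v3">
  <entry><substanceAdministration><consumable><manufacturedProduct><manufacturedMaterial>
    <code code="161" codeSystem="2.16.840.1.113883.6.88" displayName="acetaminophen"/>
  </manufacturedMaterial></manufacturedProduct></consumable></substanceAdministration></entry>
  <entry><substanceAdministration><consumable><manufacturedProduct><manufacturedMaterial>
    <code code="LOCAL-123" codeSystem="1.2.3.4.5" displayName="Tylenol"/>
  </manufacturedMaterial></manufacturedProduct></consumable></substanceAdministration></entry>
  <entry><substanceAdministration><consumable><manufacturedProduct><manufacturedMaterial>
    <code nullFlavor="UNK"/>
  </manufacturedMaterial></manufacturedProduct></consumable></substanceAdministration></entry>
</section>"""


def medications_not_in_rxnorm(root):
    """List medication codes whose declared code system is not RxNorm."""
    flagged = []
    for code in root.iterfind(".//hl7:manufacturedMaterial/hl7:code", NS):
        if code.get("codeSystem") != RXNORM_OID:
            # Prefer a readable label, falling back to the raw code.
            flagged.append(code.get("displayName") or code.get("code") or "uncoded")
    return flagged


flagged = medications_not_in_rxnorm(ET.fromstring(DOC))
print(flagged)
```

The same pattern could be pointed at result and vital sign codes with the LOINC code system, although this sketch only checks the primary code and ignores any translations.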

Illustrative Example of Identified Issues

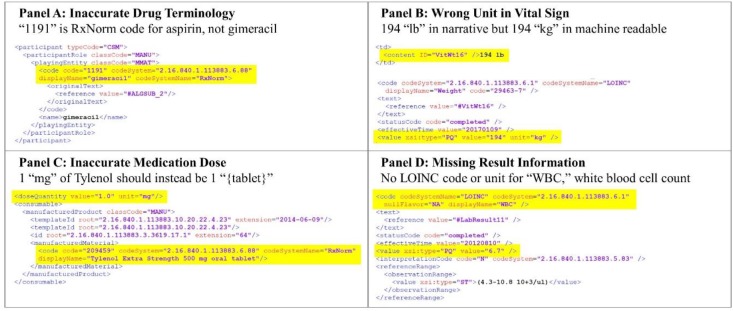

Using the combination of automated tooling and manual inspection, illustrative XML issues affecting interoperability were captured throughout the research. Four examples that had clinical relevance for data exchange follow. The first example (Figure 1 Panel A) uses a code for a drug allergy which does not match its description, which could adversely impact drug-allergy checks. The second (Figure 1 Panel B) inaccurately records the units for patient weight, which could impact drug dosing. The third (Figure 1 Panel C) inaccurately records a medication dose, which could impact medication administration and reconciliation. The last (Figure 1 Panel D) omits a code and unit for a common laboratory result, which could adversely impact data usability in care transitions or research.

Figure 1:

Illustrative Examples of Interoperability Issues

Discussion

While progress has been made over the past several years with respect to interoperability, significant work remains. Three perspectives emerged from observations made in the course of this research: 1) interoperability progress relative to past standards; 2) the value of automated tooling to evaluate content beyond schematron conformance; and 3) implications for policymakers and standards developers.

Interoperability Progress

When comparing this research to prior studies of C-CDA documents, there are notable improvements in the quality of C-CDA documents. First, the breadth of information included in C-CDA documents has increased as part of the federal program for health information technology certification20. Several of the clinical domains shown in Table 1, such as implanted devices, were not regularly included in previous C-CDA versions. Other domains, such as plan of treatment, were previously unstructured. Next, schematron errors and key patient safety and data quality issues appear less frequent than in previous research17. This represents increasing maturity among health information technologies in achieving technical conformance to the standard. Finally, the industry knowledge base around C-CDA documents has improved over the past several years. HL7 has generated examples based on common clinical scenarios, hosted vendor events to address implementation issues, and finalized publication of a C-CDA 2.1 companion guide24.

Overall, the authors believe these represent modest improvements in the interoperability of clinical documents. Other issues affecting data usability and patient safety identified in this research remain interoperability challenges for future policy, technology development, and data surveillance programs to address.

Need for Automated Tooling and Surveillance

In contrast to prior research, the availability of open-source and commercial tooling has improved in the past several years. These tools provide an increasing range of automation in the evaluation of clinical documents, particularly in assessing issues beyond the HL7 schematron. The automated tools used in this work identified 13 times more issues than schematron validation alone and did so with a strong positive predictive value.

Prior research has identified the need to develop rubrics beyond the schematron17, 18, 21. The specific issues detailed in Table 3 and Figure 1 represent critical impediments to interoperability not detectable through schematron validation. While error frequency may be decreasing, tools and data quality surveillance programs will be essential to quantify progress. In addition, automated methods can categorize and score sources of error and heterogeneity in C-CDA documents23. These issues can occur at multiple points in the translation of clinical care to an interoperable document, such as data collection, data entry, data storage, information retrieval, and C-CDA assembly. The VA has developed a multi-faceted approach to data quality surveillance similar to the tools utilized in this research (https://github.com/jddamore/ccda-samples/blob/master/z-infographic/VATools.jpg). While exchange has grown rapidly in the past several years, programs by HIEs and providers will be critical to resolving outstanding interoperability concerns.

Implications for Policymakers and Standards Developers

This research also provides insight for policymakers and standards developers. The investments made over the past decade in health information technology have yielded meaningful improvements in the technical conformance of C-CDA documents, the breadth of data included, and the availability of vendor and best-practice examples. Notably, this research would not have been possible without federal regulations requiring the disclosure of C-CDA samples produced as part of certification. We support public transparency of C-CDA documents and other interoperability standards produced by health information technologies.

In addition, automated tooling provides new ways to evaluate the completeness, semantic reconciliation, and clinical meaning of C-CDA documents. Policymakers and standards developers should find ways to promote the use of such tools in application development and ongoing information exchange. This should be done in a manner that promotes interoperability without undue burden to the industry. Continuous surveillance using automated tooling provides a means to create a positive feedback loop for information exchange participants. Benchmarking performance today can set quantitative metrics for the industry and goals for future improvement.

Finally, lessons learned from this and other research should be applied to C-CDA and other standards development, such as FHIR. This research demonstrates continued variability and errors in the implementation of complex medical data standards. Standards development needs to actively solicit and incorporate findings from both research and real-world implementations to enhance the usability of this information. Medical care, patient access, population health, and secondary data use all benefit from the continuing maturity and strengthening of clinical interoperability standards.

Limitations

Unlike prior research, this study uses testing artifacts produced as part of health information technology certification to evaluate the interoperability of clinical documents17, 18. This provides an advantage since common test scenarios enable comparisons across systems. The use of testing data, however, is also a limitation. The implementation of health information technologies routinely varies from testing environments and information collected as part of this research may not represent real-world use. More research is merited on production systems exchanging clinical documents, and larger sample sizes may reveal issues not observed in this research.

In addition, while the 52 technologies in this study constitute a large survey of available products, they do not represent all commercial technologies. Our analysis was limited to the C-CDA documents released to the public at the time of research. We expect the public repository of available samples from various technologies will grow in the future. Finally, other technologies not evaluated in this research exist for the parsing and evaluation of clinical documents. This research was not intended as a comprehensive evaluation of C-CDA scoring and analysis technologies, instead focusing on the tooling selected by the VA in the development and validation of its data quality surveillance program.

Conclusion

Health information technologies using the most recent C-CDA standard for clinical document exchange have made progress in achieving interoperability, specifically along XML schematron conformance. Issues, however, related to critical data access and patient safety remain. Automated tooling for the detection of data quality issues holds great potential to help identify and resolve barriers to clinical document exchange. Data quality surveillance programs using such tools can be valuable to improve standards implementation and adoption. Data quality and interoperability research should be considered in future policy and standards development.

Acknowledgements

We would like to thank and acknowledge several individuals who provided assistance and feedback in this work. Judith Clark and Liliya Sadovaya of Diameter Health conducted data submission and collection work for the assessment of the SITE tooling for C-CDA 2.1 validation. Eric Hwang, Marie Swall and Eric LaChance from the VA provided feedback on the course of the research as well as specific guidance in regards to terminology issues identified. In addition, we would like to acknowledge John Wintermute from the VA and Jim Setser from Four Points Technology for their participation in product selection and implementation of tooling to improve clinical document data quality.

Disclosure

John D’Amore and Chun Li receive salaries from and have equity interest in Diameter Health, Inc. Diameter Health supplied the proprietary technology used in this evaluation as part of a federal award with the VA. Russell Leftwich receives a salary from InterSystems Inc. InterSystems supplies proprietary technology to the VA and is a leading vendor with health information exchange globally.

References

- 1.Mandl KD, Kohane IS. No small change for the health information economy. N Engl J Med. 2009 Mar 26;360(13):1278–81. doi: 10.1056/NEJMp0900411. [DOI] [PubMed] [Google Scholar]

- 2. Sittig DF, Wright A. What makes an EHR “open” or interoperable? J Am Med Inform Assoc. 2015 Sep;22(5):1099–101. doi: 10.1093/jamia/ocv060.

- 3. JASON task force final report [Internet]. 2014 Oct 15. Available from: https://www.healthit.gov/hitac/sites/faca/files/Joint_HIT_JTF%20Final%20Report%20v2_2014-10-15.pdf

- 4. Draft trusted exchange framework released by Centers for Medicare and Medicaid Services [Internet]. 2018 Jan 5. Available from: https://www.hhs.gov/about/news/2018/01/05/draft-trusted-exchange-framework-and-common-agreement-released-hhs.html

- 5. Washington V, DeSalvo K, Mostashari F, Blumenthal D. The HITECH era and the path forward. N Engl J Med. 2017 Sep 7;377(10):904–6. doi: 10.1056/NEJMp1703370.

- 6. HL7 CDA R2 Implementation Guide: Clinical Summary Relevant and Pertinent Data, Release 1. Health Level 7 [Internet]. 2017 Apr 1. Available from: http://www.hl7.org/implement/standards/product_brief.cfm?product_id=453

- 7. Castellucci M. Interoperability is biggest barrier to value-based payment adoption, hospital execs say. Modern Healthcare [Internet]. 2018 Feb 14. Available from: http://www.modernhealthcare.com/article/20180214/NEWS/180219962

- 8. Downing NL, Adler-Milstein J, Palma JP, Lane S, Eisenberg M, Sharp C, et al. Health information exchange policies of 11 diverse health systems and the associated impact on volume of exchange. J Am Med Inform Assoc. 2017 Jan;24(1):113–22. doi: 10.1093/jamia/ocw063.

- 9. Adler-Milstein J. Moving past the EHR interoperability blame game. NEJM Catalyst [Internet]. 2017 Jul 18. Available from: https://catalyst.nejm.org/ehr-interoperability-blame-game/

- 10. Blumenthal D, Tavenner M. The “meaningful use” regulation for electronic health records. N Engl J Med. 2010 Aug 5;363(6):501–4. doi: 10.1056/NEJMp1006114.

- 11. D’Amore JD, Sittig DF, Ness RB. How the continuity of care document can advance medical research and public health. Am J Public Health. 2012 May;102(5):e1–4. doi: 10.2105/AJPH.2011.300640.

- 12. Klann JG, Mendis M, Phillips LC, Goodson AP, Rocha BH, Goldberg HS, et al. Taking advantage of continuity of care documents to populate a research repository. J Am Med Inform Assoc. 2015 Mar;22(2):370–9. doi: 10.1136/amiajnl-2014-003040.

- 13. Dolin RH, Rogers B, Jaffe C. Health level seven interoperability strategy: big data, incrementally structured. Methods Inf Med. 2015;54(1):75–82. doi: 10.3414/ME14-01-0030.

- 14. Leventhal R. Epic’s Faulkner on new share everywhere solution: “putting interoperability control in hands of patient.” Modern Healthcare [Internet]. 2017 Sep 14. Available from: https://www.healthcare-informatics.com/article/interoperability/epic-s-faulkner-new-share-everywhere-solution-putting-interoperability

- 15. Tahir D. Providers are sharing more data than ever. So why is everyone so unhappy? Modern Healthcare [Internet]. 2015 Apr 22. Available from: http://www.modernhealthcare.com/article/20150422/NEWS/150429965

- 16. Apple, Inc. Apple announces effortless solution bringing health records to iPhone [Internet]. 2018. Available from: https://www.apple.com/newsroom/2018/01/apple-announces-effortless-solution-bringing-health-records-to-iPhone/

- 17. D’Amore JD, Mandel JC, Kreda DA, Swain A, Koromia GA, Sundareswaran S, et al. Are meaningful use stage 2 certified EHRs ready for interoperability? Findings from the SMART C-CDA Collaborative. J Am Med Inform Assoc. 2014 Dec;21(6):1060–8. doi: 10.1136/amiajnl-2014-002883.

- 18. Botts N, Bouhaddou O, Bennett J, Pan E, Byrne C, Mercincavage L, et al. Data quality and interoperability challenges for eHealth exchange participants: observations from the Department of Veterans Affairs’ Virtual Lifetime Electronic Record Health pilot phase. AMIA Annu Symp Proc. 2014;2014:307–14.

- 19. Boufehja A, Poiseau E, et al. Model-based analysis of HL7 CDA R2 conformance and requirements coverage. EJBI. 2015;11(2):en41–en50.

- 20. Office of the National Coordinator for Health IT, Department of Health and Human Services. 2015 edition health information technology certification criteria. Federal Register [Internet]. 2015. Available from: https://www.federalregister.gov/articles/2015/10/16/2015-25597/2015-edition-health-information-technology-certification-criteria-2015-edition-base-electronic

- 21. Lyle J, Bouhaddou O, Botts N, Swall M, Pan E, Cullen T, et al. Veterans Health Administration experience with data quality surveillance of continuity of care documents: interoperability challenges for eHealth exchange participants. AMIA Annu Symp Proc. 2015;2015:870–9.

- 22. Byrne CM, Mercincavage LM, Bouhaddou O, Bennett JR, Pan EC, Botts NE, et al. The Department of Veterans Affairs’ (VA) implementation of the Virtual Lifetime Electronic Record (VLER): findings and lessons learned from health information exchange at 12 sites. Int J Med Inform. 2014 Aug;83(8):537–47. doi: 10.1016/j.ijmedinf.2014.04.005.

- 23. Office of the National Coordinator for Health IT. Standards implementation and testing environment [Internet]. 2018. Available from: https://ttpedge.sitenv.org/ttp/#/validators/ccdar2 and https://sitenv.org/home

- 24. HL7 CDA R2 IG. C-CDA templates for clinical notes R1 companion guide, Release 1. Health Level 7 [Internet]. 2017 Mar 1. Available from: http://www.hl7.org/implement/standards/product_brief.cfm?product_id=447