Abstract

Content importing technology enables duplication of large amounts of clinical text in electronic health record (EHR) progress notes. It can be difficult to find key sections such as Assessment and Plan in the resulting note. To quantify the extent of text length and duplication, we analyzed average ophthalmology note length and calculated novelty of each major note section (Subjective, Objective, Assessment, Plan, Other). We performed a retrospective chart review of consecutive note pairs and found that the average encounter note was 1182 ± 374 words long and less than a quarter of words changed between visits. The Plan note section had the highest percentage of change, and both the Assessment and Plan sections comprised a small fraction of the full note. Analysis of progress notes by section and unique content helps describe physician documentation activity and inform best practices and EHR design recommendations.

Introduction

Physicians perform documentation via electronic health record systems (EHRs) that most find time consuming and inefficient.1-4 They often rely upon content importing technology (CIT – e.g. copy-forward, copy-paste, templates) to increase documentation efficiency5 and to import large blocks of text (e.g. lab results, medication lists) into the note.6 Ninety percent of physicians report using copy-paste7 and a significant portion of progress notes is now generated using content importing technology. One 2016 study reported that only 18% of inpatient progress notes were manually entered as opposed to copied or imported.8

The resulting progress notes are often lengthy and redundant, which may compromise legibility and increase the time required to review the note.9 Eye-tracking studies have shown that physicians spend little time reviewing certain sections with large amounts of text, such as medication lists.10 These large volumes of imported text do not necessarily add proportional clinical value to the note but may instead obscure key information. For example, physicians jump to preferred sections like the Assessment and Plan,10,11 but these sections may require considerable on-screen scrolling to find.12

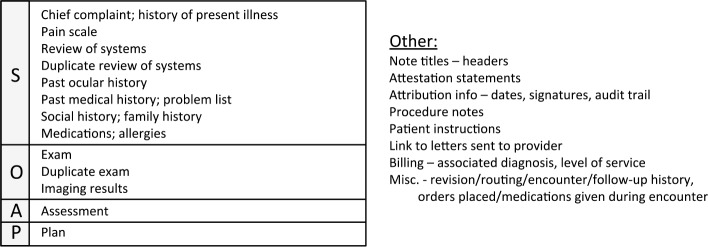

The aim of this study was to quantify the extent of ophthalmology progress note duplication and length. Previous studies described percentage of new vs. copied text in entire inpatient progress notes.8 Another study analyzed redundancy in the specialties of pediatrics, internal medicine, psychiatry, and surgery.13 This study, however, used a macro written in Microsoft Word (Redmond, WA) which allowed researchers to quickly separate full notes into individual sections: Subjective (S), Objective (O), Assessment (A), Plan (P), and Other. The Word macro allowed each section to be quickly compared to the corresponding section in the preceding note. This enabled study of average note breakdown and redundancy by section at a higher level of granularity than was done previously. We evaluated forty pairs of sequential ophthalmology progress notes for overall length as well as similarity between note pairs. Mean percentage of new words per individual section of the progress note was calculated.

Ophthalmology is a unique field for this study of note length/similarity because notes are generated in a high-volume clinical setting where providers may rely more heavily upon CIT. Also, the ophthalmology-specific modules in the EHR affect note creation and review practices.

Methods

This study was approved by the Institutional Review Board at Oregon Health & Science University (OHSU). Patient informed consent was waived because all reviewed progress notes were previously collected for clinical, non-research purposes.

Patient Chart Selection

Eight ophthalmologists from 4 different subspecialties (cornea, neuro-ophthalmology, retina, and comprehensive) were selected for our study, two per subspecialty. “Common” patient notes were determined by identifying each provider’s top 3 ICD-10 encounter diagnosis codes for a one-year period from November 1, 2016 to November 1, 2017. To be included in the patient sample population, each patient must have been seen by the provider at least twice during the study period. The earlier of the patient’s two progress notes also had to have an ICD-10 encounter diagnosis code that matched one of the provider’s top 3 for the year. Perioperative notes were excluded from the study as they often use specific templates not representative of the average ophthalmology note. Five patients were randomly selected per provider (n=40 note pairs) and the first two consecutive follow-up notes during the study year were compared for each patient.

Analysis of Note Pairs

Sequential pairs of full encounter notes were copied from the Epic EHR Chart Review, Ophthalmology Encounter tab and pasted into Microsoft Word (Redmond, WA). Consecutive notes were compared for similarity using the software text computational tool Workshare Compare (San Francisco, CA), which highlighted all new/unique words in the later note. (Identical sentences were not considered novel if only moved from one part of the note to another.) Researcher AH then divided all words in the later note into one of the five sections – Subjective (S), Objective (O), Assessment (A), Plan (P) or Other. The Other category contained items not generally included in the traditional SOAP progress note such as attribution information or patient instructions.
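The comparison step can be illustrated with a short Python sketch. This is not Workshare Compare's algorithm, which the study does not describe. It is a minimal stand-in that uses the standard-library `difflib.SequenceMatcher` for a word-level comparison and treats any sentence that appears verbatim in the earlier note as not novel, even if it has moved. The function names and the naive sentence splitter are assumptions made for illustration.

```python
import re
from difflib import SequenceMatcher


def split_sentences(text: str) -> list[str]:
    """Naive splitter (assumption): break on whitespace after '.', '!' or '?'.
    Abbreviations such as 'e.g.' would need smarter handling in real notes."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def flag_new_words(earlier: str, later: str) -> list[tuple[str, bool]]:
    """Return (word, is_new) for every word of the later note."""
    earlier_sentences = set(split_sentences(earlier))
    later_words: list[str] = []
    in_copied_sentence: list[bool] = []
    for sentence in split_sentences(later):
        words = sentence.split()
        later_words.extend(words)
        # A sentence found verbatim in the earlier note is never novel,
        # even if it moved to a different part of the note.
        in_copied_sentence.extend([sentence in earlier_sentences] * len(words))

    # Word-level alignment of the two notes; unmatched later words are new.
    matcher = SequenceMatcher(a=earlier.split(), b=later_words, autojunk=False)
    is_new = [True] * len(later_words)
    for block in matcher.get_matching_blocks():
        for j in range(block.b, block.b + block.size):
            is_new[j] = False

    return [
        (word, new and not copied)
        for word, new, copied in zip(later_words, is_new, in_copied_sentence)
    ]


def percent_new(flags: list[tuple[str, bool]]) -> float:
    """Percentage of words in the later note that were flagged as new."""
    if not flags:
        return 0.0
    return 100 * sum(1 for _, new in flags if new) / len(flags)


# Hypothetical note pair, not taken from the study data.
earlier_note = "Vision stable. No new complaints. Continue drops."
later_note = "Continue drops. Vision worse in right eye. No new complaints."
flags = flag_new_words(earlier_note, later_note)
print([w for w, new in flags if new])  # ['worse', 'in', 'right', 'eye.']
print(percent_new(flags))              # 40.0
```

In this example the sentence "Continue drops." has moved to the top of the later note but is not counted as new, which mirrors the rule stated in the Methods for relocated identical sentences.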

Each section was then broken down and coded into subsections given in Table 1. Coding was performed in Microsoft Word using a macro that changed selected text to a font color assigned to each subsection. A separate Word macro was written to calculate number of highlighted and non-highlighted words of each font color using the wdStatisticWords Word Count function. The macro automatically exported subsection new and total word counts into Microsoft Excel (Redmond, WA) and the mean percentage of new words vs. total words in the later note was then analyzed for each section and subsection.
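The study performed this tally with Word macros. The sketch below reproduces only the bookkeeping in Python, assuming each word of the later note has already been labelled with its section and with whether it was flagged as new. The tuple layout and the function name `tally_sections` are hypothetical.

```python
from collections import Counter

SECTIONS = ("S", "O", "A", "P", "Other")


def tally_sections(coded_words):
    """coded_words: iterable of (word, section, is_new) tuples.
    Returns new count, total count and % new for each section."""
    total = Counter()
    new = Counter()
    for _word, section, is_new in coded_words:
        total[section] += 1
        if is_new:
            new[section] += 1
    return {
        s: {
            "new": new[s],
            "total": total[s],
            # Sections absent from a note have no defined percentage.
            "pct_new": 100 * new[s] / total[s] if total[s] else None,
        }
        for s in SECTIONS
    }


# Hypothetical coded words from one later note.
coded = [
    ("glaucoma", "A", False),
    ("worsening", "A", True),
    ("increase", "P", True),
    ("drops", "P", True),
]
print(tally_sections(coded)["A"])  # {'new': 1, 'total': 2, 'pct_new': 50.0}
```

Returning `None` rather than 0 for an absent section keeps missing sections out of any later averaging, which matters because not every note contained every subsection.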

Table 1.

Note sections and subsections. Main sections of progress notes - Subjective (S), Objective (O), Assessment (A), Plan (P) and Other - and their subsections.


Statistical Methods

To check reproducibility of subsection coding, a representative, de-identified note was coded by AH and was given to another ophthalmologist BH along with a section/subsection content key. BH then independently coded 4 other notes using the key for reference. Agreement between AH and BH’s coding of progress notes per section (S,O,A,P or Other) was assessed using Spearman’s correlation coefficient and the Bland-Altman Plot.14 A one-way, between-groups ANOVA test was used to perform multiple comparisons between group means and assess for statistical difference between groups. If a difference was found, post hoc Tukey testing was performed to identify the statistically different pairs. Possible outliers were identified by examining all values which had a magnitude of standardized residuals outside the interval [-1.96, 1.96]. All data manipulations were performed in Microsoft Excel with XLSTAT statistical analysis add-in software (New York, NY).
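The Bland-Altman part of this agreement check can be sketched in a few lines of Python. The study used XLSTAT; the code below is only an illustration of the standard calculation (mean difference plus or minus 1.96 standard deviations of the differences), and the function name and sample numbers are assumptions.

```python
from statistics import mean, stdev


def bland_altman(counts_a, counts_b):
    """Compare paired word counts from two coders.
    Returns (mean difference, lower limit, upper limit, points outside limits)."""
    if len(counts_a) != len(counts_b):
        raise ValueError("both coders must supply the same number of counts")
    diffs = [a - b for a, b in zip(counts_a, counts_b)]
    bias = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation of the differences
    lower = bias - 1.96 * sd
    upper = bias + 1.96 * sd
    outside = sum(1 for d in diffs if d < lower or d > upper)
    return bias, lower, upper, outside


# Hypothetical per-section word counts from two coders.
coder_1 = [10, 20, 30, 40]
coder_2 = [12, 18, 33, 39]
bias, lower, upper, outside = bland_altman(coder_1, coder_2)
print(bias, outside)  # -0.5 0
```

A mean difference near zero with few points outside the limits indicates that the two coders assigned similar word counts to each section.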

Results

Inter-observer Agreement Between Note Section Coders

Inter-observer agreement was analyzed for word count per section (S, O, A, P and Other) for the 4 pairs of notes coded by both AH and BH. There was good agreement with mean difference of -0.675 words and only 2 of 40 data points lying outside the Bland-Altman Plot limits of agreement. Excellent correlation was found (Spearman’s r=0.983, p<0.0001).

Distribution of Word Counts (Total and New) Among Note Sections

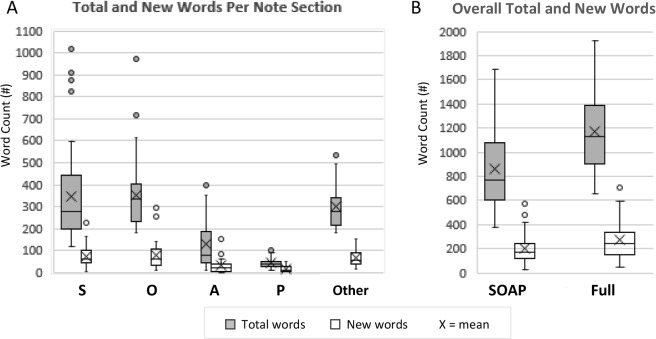

For the 40 note pairs, the full Epic encounter notes ranged from 518-2128 words (1055-12342 characters no spaces) and had a mean ± SD of 1182 ± 374 words (6631 ± 2124 characters). For reference, a 1886-word encounter note filled 6.5 US letter-size pages when printed.

The full note had the following average breakdown: S 345 ± 228 words (29 ± 13%), O 351 ± 161 words (30 ± 9%), A 129 ± 106 words (10 ± 6%), P 42 ± 23 words (4 ± 2%), and Other 301 ± 102 words (27 ± 10%).

Thus, only 73 ± 10% (867 ± 339 words) of the note consisted of the traditional SOAP sections. The distribution of total and new word counts per section and overall note are illustrated by box plot in Figure 1A and 1B respectively.

Figure 1.

A) Box plot of mean total and new words per note section: Subjective-S, Objective-O, Assessment-A, Plan-P and Other. B) Box plot of mean total and new words for the overall note: SOAP words only and full encounter note.

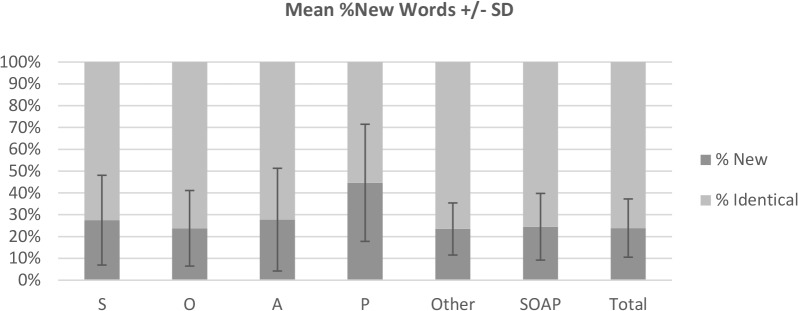

Percentage of New Words per Note Section

On average, only 24 ± 15% (205/867) words were new between serial notes when comparing only serial SOAP sections, and 24 ± 13% (271/1168) of words were new when comparing the serial full notes (SOAP plus Other section). (Percentages were mean percent differences. All fractions have mean word counts both in the numerator and denominator.) By section, mean % new words per note were S: 27 ± 21%, O: 24 ± 17%, A: 28 ± 24%, P: 45 ± 27%, and Other: 23 ± 12% (Figure 2).
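The parenthetical above distinguishes two ways of summarizing the same data: averaging each note's own percentage, versus dividing the mean new-word count by the mean total-word count. The two generally differ, which is why 205/867 (about 23.6%) does not have to equal the reported mean of 24%. The Python sketch below shows the difference with made-up numbers; the function names are hypothetical.

```python
from statistics import mean


def mean_of_percentages(pairs):
    """Average of each note's own % new words. pairs: list of (new, total)."""
    return mean([100 * new / total for new, total in pairs])


def ratio_of_means(pairs):
    """Mean new-word count divided by mean total-word count, as a percentage."""
    return 100 * mean([new for new, _ in pairs]) / mean([total for _, total in pairs])


# Two hypothetical notes: a short, mostly unchanged one and a long, heavily revised one.
notes = [(10, 100), (90, 200)]
print(mean_of_percentages(notes))  # 27.5
print(ratio_of_means(notes))
```

The mean of percentages weights every note equally, while the ratio of means gives longer notes more influence, so the second figure here comes out higher (one third, about 33.3%).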

Figure 2.

Mean % new words ± SD. Percentage of new words for individual note sections as well as for combined SOAP sections and overall note.

A between-groups ANOVA was performed to see if % new words differed between S, O, A, P, or Other sections. It yielded a significant variation among conditions [F(4,195) = 7.128, P<0.001]. A post hoc Tukey test showed that Plan had a statistically higher percentage of new words than the other 4 sections (p<0.001). The other note sections (S, O, A, and Other) did not significantly differ from each other.
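For readers unfamiliar with the notation, the F statistic and its degrees of freedom can be computed by hand. The sketch below is a plain-Python illustration of a one-way ANOVA, not the XLSTAT procedure used in the study; the function name and the sample data are assumptions. With 5 sections and 40 notes per section, the same formula gives the degrees of freedom reported above: 5 − 1 = 4 and 200 − 5 = 195.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of groups of values."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual values around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical % new words for three small groups.
groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_stat, df_b, df_w = one_way_anova(groups)
print(round(f_stat, 3), df_b, df_w)  # 13.0 2 6
```

Converting F to a p value requires the F distribution, which this sketch leaves out; a statistics package would normally handle that step along with the post hoc Tukey test.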

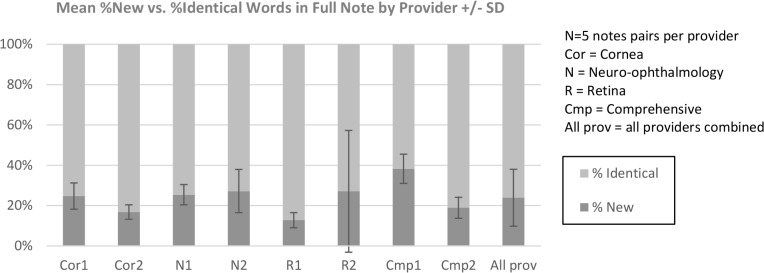

Percentage of New Words and Total Note Word by Provider

Figure 3 shows the mean % new words by provider as well as for all providers combined. Of note, there was a single outlier among the note pairs for one retina provider (R2): the later note was 81% new (standardized residual = 4.376), reflected in the large error bar in Figure 3. Upon further examination, it was determined that the first note was a progress note for a “procedure only” office visit where no Medications, History, slit lamp exam, etc. were documented. Therefore, the percentage of new words was high in the later note, which did include these sections.

Figure 3.

Mean % new words ± SD in the full note. Analysis was done for each individual provider and for all providers combined.

A one-way between-subjects ANOVA was conducted to assess the effect of provider on % new words in the full note. There was no significant difference at the p<0.05 level [F(7,32)=2.045, p=0.08]. Thus, we could not reject the null hypothesis that mean % new words was the same across providers. Because the p value was close to 0.05, some variability between provider means remains possible and might reach significance with a larger sample size.

Another one-way between-subjects ANOVA was conducted to assess the effect of provider on total number of words in the full note. The analysis was significant at [F(7,32) = 8.252, P<0.0001]. Thus, mean total note length differed based on provider.

Duplication of Words Within Each Progress Note

Six of eight providers had a standard note template that imported a portion (70 ± 22%) of the full objective exam into the progress note. Thus, objective exam information appeared twice in the final Epic Encounter note: once automatically imported by Epic and once imported by the provider. This increased the average Exam section length from 184 ± 36 words for providers without exam duplication to 337 ± 72 words (84% increase in length) for those with the exam duplication. Two of eight providers also sometimes added a duplicate Review of Systems (ROS), which added an average of 32 ± 8 words to the overall note when present. On average, among all provider notes, 108/867 (12 ± 8%) of the words in the SOAP sections were duplicates within a single note (from combined repeated Exam and ROS).

Further Analysis by Note Subsections

Of the Subjective subsections, four rarely changed at each visit. % new words per subsection were as follows: Past Ocular History: 15 ± 31% (n=20 notes), Past Medical History: 8 ± 20% (n=30 notes), Social/Family History: 8 ± 23% (n=22 notes) and Medications/Allergies: 13 ± 22% (n=40 notes). Among all 40 pairs of notes, these 4 subsections combined were on average 12 ± 20% new in each encounter and comprised 28 ± 16% (247/867) of all words in the SOAP sections.

The highest mean percentages of new words per total SOAP subsection words were found in Chief Complaint (48/68 words, 65 ± 19%) and Plan (18/42 words, 45 ± 27%). On average, the physical exam was 19 ± 17% (37/193) new when not including any duplicate exam words.

Discussion

This study has the following key findings: 1) The average ophthalmology progress note is long. 2) The majority of each note subsection stays the same between visits. 3) Key sections with the most change make up a small percentage of overall note length. This supported the conclusion from other studies that EHR content importing technology has made it more difficult for physicians to find the most important sections of the note.9 EHR design exacerbates many of the challenges associated with electronic progress notes. This study’s detailed analysis of note length and redundancy by section can inform proposals for better EHR design.

1. The average ophthalmology note is long.

A common complaint about modern EHR progress notes is that they are long, redundant, and difficult to read.15,16 Our results agree with studies showing large volumes of text per progress note – the average full ophthalmology note was 6631 ± 2124 characters, comparable to the 5006-7053 characters per note found in Wang’s analysis of 23,630 inpatient progress notes.6,8

When paper records were used, the effort required to handwrite encouraged conciseness. EHR content importing technology, on the other hand, enables rapid reproduction of blocks of text with minimal effort.6 “Note bloat” occurs when providers indiscriminately fill progress notes with copied text. Our study confirmed the high prevalence of copied and potentially unnecessary text in EHR notes.

In this study, the majority of ophthalmology providers used note templates that imported historical data (e.g., past medical history and social/family history) into every progress note despite the fact that these subsections rarely change. Furthermore, this historical information is readily available for viewing in designated EHR tabs making its duplication unnecessary. The majority of providers also used note templates that duplicated the eye exam from Epic Kaleidoscope into the progress note.

2. The majority of the progress note stays the same between visits.

On average, the full Encounter note is only 24 ± 13% new with the rest identical to prior notes. Total note length varied by provider, but the percentage of new words did not differ significantly among providers (Figure 3). Of the 5 note sections, only Plan had a significantly higher percent change between visits with 45 ± 27% new words. When the majority of each progress note is the same as its predecessor, it lowers the yield of reviewing prior notes and makes finding new information more difficult.9 The practice of importing large sections of text such as Past Medical History, which rarely change, only exacerbates this problem.

3. Important sections of the note make up a small percentage of overall note length.

A study by Brown tracking eye movements found that physicians spend 67% of total reading time in the Assessment/Impression and Plan sections. The rest of the note is quickly skimmed or ignored.10 Physicians have demonstrated a preference for the Assessment and Plan note sections, but in our sample these highest-yield sections made up a minority of the full note, 10 ± 6% and 4 ± 2% respectively. Critical or high-yield information is therefore difficult to find when it is obscured by a large volume of text.

EHR design features result in increased note length

EHRs automatically generate content that lengthens progress notes.

A previous study comparing ophthalmology electronic and paper notes found that electronic notes were at least twice as long as a paper progress note that described the same disease (≥ 2 pages in the EHR compared to 1 page on paper). Sanders et al. found that most of this extra note length was due to computer-generated text that added sections to the progress note (e.g. Orders, Results, Level of Service).17 We found similar results in our study, where the Epic EHR automatically added content such as Pharmacy information or Patient Instructions to the encounter note. As a result, only 73 ± 10% of the Epic Encounter note consisted of the traditional SOAP sections. On average, Epic Encounter notes were 301 ± 102 words longer than notes only containing SOAP content. This extra Encounter note length was a direct result of Epic EHR design. The Epic EHR allows two ways of reviewing prior visit notes: 1) the Note tab, which displays only clinical progress note text, and 2) the Encounter tab, in which progress notes are integrated and displayed amidst other encounter details, including attribution, billing, prescribing information, etc. The structured eye exam elements recorded in the ophthalmology module are displayed by default only in the longer Encounter notes; therefore, providers must scroll through lengthy documents to review clinical information.

EHR design may inadvertently encourage more use of CIT and result in longer notes

In informal conversations with other ophthalmologists, several cited the difficulty of finding desired clinical data in the Encounter note as the reason for importing the eye exam into the progress note – to increase ease of review. In other words, overly long EHR-generated Encounter notes motivated physicians to import the eye exam in their Progress Notes, which then further increases Encounter note length since eye exam data is now included twice in the Encounter note.

Other providers cited EHR screen fragmentation as the reason for their documentation practices that contributed to “note bloat.” They often imported historical data into progress notes to avoid having to click through multiple locations in the chart to find them. For example, past medical history is found on a different screen than the eye exam, which is in turn on a different screen than imaging results. Previous studies have found that providers often import past data into the current progress note just to have it available while drafting the Assessment and Plan.18 Thus, fragmentation of important data display may prompt physicians to import all of it to one central location.

The length and redundancy of electronic progress notes have important implications for patient care. With the EHR becoming the primary mode of communication between doctors,19 overlooking key information buried in a long document can have serious consequences. In one case report, an admission note documented that a patient would receive heparin for venous thromboembolism prophylaxis. The patient was transferred to a different care team and this statement was copied and pasted in four consecutive notes without the order for heparin ever being placed. As a result, the patient never received the medication and developed a pulmonary embolism requiring re-hospitalization two days after discharge.20

In addition to the risk of overlooking important data, copied text may be outdated or internally inconsistent and errors may propagate throughout subsequent notes. In one case report, it was found that the same sentence regarding a patient’s discharge referral to a gastroenterologist was repeated at multiple hospitalizations over 7 years.21

Redundant information makes it difficult to differentiate current versus historical data. It also becomes difficult to identify when and by whom the documentation was first written.19 In this way, the trustworthiness of the medical record comes into question.13

Furthermore, reading longer progress notes takes more time. One study found a linear association between character count and time spent reading.10 Doctors face increasing pressure to see more patients in less time due to health care costs and limited accessibility.22,23 The extra time and greater cognitive load required by doctors to review unnecessarily long progress notes only exacerbates this problem.13,24

Recommendations

Institutional policies

Institutions should encourage brief, succinct progress notes and limit the use of templates that import large amounts of EHR data such as medication lists or past medical history. A recent study showed that implementing a new best practice progress note template along with resident education decreased note length while improving note quality.22 Some institutions have also implemented an APSO rather than SOAP note format so that the key sections of Assessment and Plan are more quickly located at the beginning, rather than the end, of a note.12,23

EHR redesign

Large blocks of background information (e.g. medication lists, past medical history) are often imported into progress notes using templates. Such text is associated with a patient encounter but should be kept separate from the main body of the progress note text. For example, imported text could be appended to the end of the note in a separate window or print group rather than integrated throughout the main body of the note. Similarly, EHRs should limit the amount of computer-generated text inserted into the progress note. Any information not directly related to patient care (e.g. billing information, attribution data, pharmacy address) should be kept separate from clinician-generated text or displayed only on demand rather than by default.

Given the large amount of identical text between notes, EHRs should also make manually entered or novel text easily identifiable. Epic has a function to grey out copied text. However, it does not hide the large amount of information imported via templates, and this function only works while reviewing progress notes in the Notes tab, not when viewing full encounter notes in the Encounter tab (which is the default note review mode for ophthalmology notes). Highlighting text that is new compared to the prior note would help clinicians quickly identify changes in a patient’s condition or treatment plan. A previous study found that visualization cues such as highlighted text did indeed save time as physicians navigated notes.13 Our study did a direct word-to-word comparison between pairs of notes to identify new text. Other researchers have used statistical language modeling and semantic similarity metrics in an effort to more intelligently identify new information beyond just a change in words.13 This work can inform EHR redesign that offers better visualization of novel data.

EHRs should also reduce screen fragmentation or allow physicians more control over how and where important information is displayed. A physician-customizable “dashboard” that displays the most important information on one screen would decrease physicians’ perceived need to use the progress note for this function. This may reduce the practice of importing large blocks of text into the note.

Future directions

Further study should be undertaken at our institution to explore ophthalmologists’ reasons for reproducing eye exam and historical information in every progress note. Preliminary discussions have revealed that large-volume content importing behavior has been driven by 1) the need to work around screen fragmentation and 2) the inability to easily find desired information. If further motivations behind content importing behavior can be characterized, they can be used to suggest changes to future EHR design.

Previous studies have found a correlation between note length and time required to read progress notes.10 To evaluate the impact of long, redundant notes, a study should be done to quantify amount of time spent reading ophthalmology notes of various lengths. Providers can also be timed as they try to identify important content in progress notes with and without highlighting of novel words. The impact of different data displays can then be compared.

This study found that ophthalmology progress notes are long, mostly identical to prior notes and that important note sections make up a small portion of overall length. It identified EHR design features that contribute to “note bloat” both directly (by inserting computer-generated text) and indirectly (e.g. screen fragmentation promoting physician use of content importing technology). A novel use of Word macros allowed us to quickly analyze and compare note sections for more granular analysis of documentation behavior. The section with the highest percentage of new documentation was Plan, and this objectively demonstrated the importance of this note section. However, this key section represented only 4 ± 2% of the total word count.

The results of this study help identify major problems with electronic progress notes and provide direction for future EHR design. Namely, EHRs should make important information obvious, possibly by highlighting new information in the most important note sections.

Limitations

This study examined % new words per note section in consecutive progress notes and has several limitations. It was a single-site, single-specialty analysis of progress notes, which may limit its generalizability. The practice of importing historical as well as exam information may be a function of intradepartmental culture as well as shared custom templates. Furthermore, length of note sections and % new words may be related to the type of EHR used as well as what types of CIT are available. Thus, the findings may be specific only to the Epic EHR and, specifically, its ophthalmology-specific Kaleidoscope module. Finally, the relatively low number of progress note pairs prevented statistical analysis of the 21 subsections coded per progress note. Future coding of a larger number of notes using the described method will allow further analysis by subsection.

Conclusions

Inefficiency associated with EHR documentation has resulted in widespread use of content importing technology that contributes to overly long progress notes filled with redundant information.15,16 Analysis of similarity has helped characterize the problem of redundant information in subsequent progress notes. The increased granularity of our study (by note section: S, O, A, P, and Other) allows deeper exploration into provider content importing behavior and can inform future efforts to decrease “note bloat” and improve data display.

References

- 1. Chan P, Thyparampil PJ, Chiang MF. Accuracy and speed of electronic health record versus paper-based ophthalmic documentation strategies. Am J Ophthalmol. 2013 Jul;156(1):165–172 e2. doi: 10.1016/j.ajo.2013.02.010.

- 2. Bae J, Encinosa WE. National estimates of the impact of electronic health records on the workload of primary care physicians. BMC Health Serv Res. 2016;10(16):172. doi: 10.1186/s12913-016-1422-6.

- 3. Arndt BG, Beasley JW, Watkinson MD, Temte JL, Tuan W-J, Sinsky CA, et al. Tethered to the EHR: Primary Care Physician Workload Assessment Using EHR Event Log Data and Time-Motion Observations. Ann Fam Med. 2017 Sep;15(5):419–26. doi: 10.1370/afm.2121.

- 4. Chiang MF, Read-Brown S, Tu DC, Choi D, Sanders DS, Hwang TS, et al. Evaluation of electronic health record implementation in ophthalmology at an academic medical center (an American Ophthalmological Society thesis). Trans Am Ophthalmol Soc. 2013 Sep;111:70–92.

- 5. Weis JM, Levy PC. Copy, paste, and cloned notes in electronic health records: prevalence, benefits, risks, and best practice recommendations. Chest. 2014 Mar 1;145(3):632–8. doi: 10.1378/chest.13-0886.

- 6. Lowry SZ, Ramaiah M, Prettyman SS, Simmons D, Brick D, Deutsch E, et al. Examining the Copy and Paste Function in the Use of Electronic Health Records. NIST Interagency Internal Rep NISTIR - 8166 [Internet]. 2017 Jan 19 [cited 2018 Mar 8]. Available from: https://www.nist.gov/publications/examining-copy-and-paste-function-use-electronic-health-records.

- 7. O’Donnell HC, Kaushal R, Barrón Y, Callahan MA, Adelman RD, Siegler EL. Physicians’ attitudes towards copy and pasting in electronic note writing. J Gen Intern Med. 2009 Jan;24(1):63–8. doi: 10.1007/s11606-008-0843-2.

- 8. Wang MD, Khanna R, Najafi N. Characterizing the Source of Text in Electronic Health Record Progress Notes. JAMA Intern Med. 2017 Aug 1;177(8):1212–3. doi: 10.1001/jamainternmed.2017.1548.

- 9. Payne TH, tenBroek AE, Fletcher GS, Labuguen MC. Transition from paper to electronic inpatient physician notes. J Am Med Inform Assoc JAMIA. 2010 Feb;17(1):108–11. doi: 10.1197/jamia.M3173.

- 10. Brown PJ, Marquard JL, Amster B, Romoser M, Friderici J, Goff S, et al. What do physicians read (and ignore) in electronic progress notes? Appl Clin Inform. 2014;5(2):430–44. doi: 10.4338/ACI-2014-01-RA-0003.

- 11. Zheng K, Padman R, Johnson MP, Diamond HS. An Interface-driven Analysis of User Interactions with an Electronic Health Records System. J Am Med Inform Assoc JAMIA. 2009;16(2):228–37. doi: 10.1197/jamia.M2852.

- 12. Lin C-T, McKenzie M, Pell J, Caplan L. Health Care Provider Satisfaction With a New Electronic Progress Note Format: SOAP vs APSO Format. JAMA Intern Med. 2013 Jan 28;173(2):160–2. doi: 10.1001/2013.jamainternmed.474.

- 13. Zhang R, Pakhomov SVS, Arsoniadis EG, Lee JT, Wang Y, Melton GB. Detecting clinically relevant new information in clinical notes across specialties and settings. BMC Med Inform Decis Mak. 2017 Jul 5;17(2):68. doi: 10.1186/s12911-017-0464-y.

- 14. Sedgwick P. Limits of agreement (Bland-Altman method). BMJ. 2013 Mar 15;346:f1630. doi: 10.1136/bmj.f1630.

- 15. Bowman S. Impact of Electronic Health Record Systems on Information Integrity: Quality and Safety Implications. Perspect Health Inf Manag. 2013 Oct 1;10(Fall).

- 16. Siegler EL, Adelman R. Copy and paste: a remediable hazard of electronic health records. Am J Med. 2009 Jun;122(6):495–6. doi: 10.1016/j.amjmed.2009.02.010.

- 17. Sanders D, Lattin D, Tu D, Read-Brown S, Wilson D, Hwang T, et al. Electronic health record (EHR) systems in ophthalmology: Impact on clinical documentation. Invest Ophthalmol Vis Sci. 2013 Jun 16;54(15):4424–4424. doi: 10.1016/j.ophtha.2013.02.017.

- 18. Mamykina L, Vawdrey DK, Stetson PD, Zheng K, Hripcsak G. Clinical documentation: composition or synthesis? J Am Med Inform Assoc JAMIA. 2012 Dec;19(6):1025–31. doi: 10.1136/amiajnl-2012-000901.

- 19. The Joint Commission Division of Health Care Improvement. Preventing copy-and-paste errors in EHRs. Quick Safety. 2015 Feb;(10):1–2.

- 20. Copy and Paste | AHRQ Patient Safety Network [Internet] [cited 2018 Jul 3]. Available from: https://psnet.ahrq.gov/webmm/case/157.

- 21. Markel A. Copy and Paste of Electronic Health Records: A Modern Medical Illness. Am J Med. 2010 May 1;123(5):e9. doi: 10.1016/j.amjmed.2009.10.012.

- 22. Kahn D, Stewart E, Duncan M, Lee E, Simon W, Lee C, et al. 2018. A Prescription for Note Bloat: An Effective Progress Note Template. J Hosp Med.

- 23. Sieja A, Pell J, Markley K, Johnston C, Peskind R, Lin C-T. Successful Implementation of APSO Notes Across a Major Health System. AJMC. 2017 Mar;5(1):29–34.

- 24. Farri O, Pieckiewicz DS, Rahman AS, Adam TJ, Pakhomov SV, Melton GB. A Qualitative Analysis of EHR Clinical Document Synthesis by Clinicians. AMIA Annu Symp Proc. 2012 Nov 3;2012:1211–20.