Highlights

- Repeating spike patterns exist and are informative. Can a single cell do the readout?
- We show how a leaky integrate-and-fire (LIF) neuron can do this readout optimally.
- The optimal membrane time constant is short, possibly much shorter than the pattern.
- Spike-timing-dependent plasticity (STDP) can turn a neuron into an optimal detector.
- These results may explain how humans can learn repeating visual or auditory sequences.

Abbreviations: LTD, Long-Term Depression; LTP, Long-Term Potentiation; LIF, leaky integrate-and-fire; SNR, signal-to-noise ratio; STDP, spike-timing-dependent plasticity

Key words: spike-timing-dependent plasticity (STDP), leaky integrate-and-fire neuron, coincidence detection, multi-neuron spike sequence, spatiotemporal spike pattern, unsupervised learning

Abstract

Repeating spatiotemporal spike patterns exist and carry information. How this information is extracted by downstream neurons is unclear. Here we theoretically investigate to what extent a single cell could detect a given spike pattern and what the optimal parameters to do so are, in particular the membrane time constant $\tau$. Using a leaky integrate-and-fire (LIF) neuron with homogeneous Poisson input, we computed this optimum analytically. We found that a relatively small $\tau$ (at most a few tens of ms) is usually optimal, even when the pattern is much longer. This is somewhat counter-intuitive, as the resulting detector ignores most of the pattern due to its fast memory decay. Next, we asked whether spike-timing-dependent plasticity (STDP) could enable a neuron to reach the theoretical optimum. We simulated a LIF equipped with additive STDP, and repeatedly exposed it to a given input spike pattern. As in previous studies, the LIF progressively became selective to the repeating pattern with no supervision, even when the pattern was embedded in Poisson activity. Here we show that, using certain STDP parameters, the resulting pattern detector is optimal. These mechanisms may explain how humans learn repeating sensory sequences. Long sequences could be recognized thanks to coincidence detectors working at a much shorter timescale. This is consistent with the fact that recognition is still possible if a sound sequence is compressed, played backward, or scrambled using 10-ms bins. Coincidence detection is a simple yet powerful mechanism, which could be the main function of neurons in the brain.

Introduction

Electrophysiologists report the existence of repeating spike sequences involving multiple cells, also called “spatiotemporal spike patterns”, with precision in the millisecond range, both in vitro and in vivo, lasting from a few tens of ms to several seconds (Tiesinga et al., 2008). In sensory systems, different stimuli evoke different spike patterns (also called “packets”) (Luczak et al., 2015). In other words, the spike patterns contain information about the stimulus. How this information is extracted by downstream neurons is unclear. Can it be done by neurons only one synapse away from the recorded neurons? Or are multiple integration steps needed? Can it be done by simple coincidence detector neurons, or should other temporal features, such as spike ranks, be taken into account? Here we asked how far we can go with the simplest scenario: the readout is done by simple coincidence detector neurons only one synapse away from the neurons involved in the repeating pattern. We demonstrate that this approach can lead to very robust pattern detectors, provided that the membrane time constants are relatively short, possibly much shorter than the pattern duration.

In addition, it is known that mere repeated exposure to meaningless sensory sequences facilitates their later recognition, in the visual (Gold et al., 2014) and auditory modalities (Agus et al., 2010, Andrillon et al., 2015, Viswanathan et al., 2016) (see also contributions in this special issue), even when the subjects were unaware of these repetitions. Thus, an unsupervised learning mechanism must be at work. It could be the so-called spike-timing-dependent plasticity (STDP). Indeed, some theoretical studies by us and others have shown that neurons equipped with STDP can become selective to arbitrary repeating spike patterns, even without supervision (Masquelier et al., 2008, Masquelier et al., 2009, Gilson et al., 2011, Humble et al., 2012, Hunzinger et al., 2012, Klampfl and Maass, 2013, Kasabov et al., 2013, Nessler et al., 2013, Krunglevicius, 2015, Yger et al., 2015, Sun et al., 2016). Using numerical simulations, we show here that the resulting detectors can be close to the theoretical optimum.

Formal description of the problem

We address the problem of detecting a spatiotemporal spike pattern with a single LIF neuron. Intuitively, one should connect the LIF to the neurons that are particularly active during the pattern, or during a subsection of it. That way, the LIF will tend to be more activated by the pattern than by other input. More formally, we denote by $L$ the pattern duration and by $N$ the number of neurons it involves. We call Strategy #$n$ the strategy that consists of connecting the LIF to the $M$ neurons that emit at least $n$ spike(s) during a certain time window of duration $\Delta t \le L$ within the pattern. Strategy #1 is illustrated in Fig. 1.
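Strategy #$n$ can be sketched in Python as follows. This is a minimal illustration that assumes spike times in seconds and stores the frozen pattern as one list of spike times per afferent. The helper names (`poisson_train`, `make_pattern`, `select_afferents`) are hypothetical and are not taken from the authors' code.

```python
import random

def poisson_train(rate_hz, duration_s, rng):
    """Spike times of a homogeneous Poisson process on [0, duration_s)."""
    times, t = [], rng.expovariate(rate_hz)
    while t < duration_s:
        times.append(t)
        t += rng.expovariate(rate_hz)   # exponential inter-spike intervals
    return times

def make_pattern(n_afferents, rate_hz, duration_s, seed=0):
    """One frozen realization of the Poisson process: the repeating pattern."""
    rng = random.Random(seed)
    return [poisson_train(rate_hz, duration_s, rng) for _ in range(n_afferents)]

def select_afferents(pattern, t_start, window_s, n):
    """Strategy #n: afferents with at least n spikes in [t_start, t_start + window_s)."""
    t_end = t_start + window_s
    return [i for i, spikes in enumerate(pattern)
            if sum(t_start <= t < t_end for t in spikes) >= n]

pattern = make_pattern(10_000, 3.2, 0.1)
selected = select_afferents(pattern, 0.0, 0.023, 1)
print(len(selected))  # random; its expected value is N(1 - exp(-f*dt)), about 710
```

The window start `t_start` is left as a free argument because the strategy only requires some window of duration $\Delta t \le L$ within the pattern.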

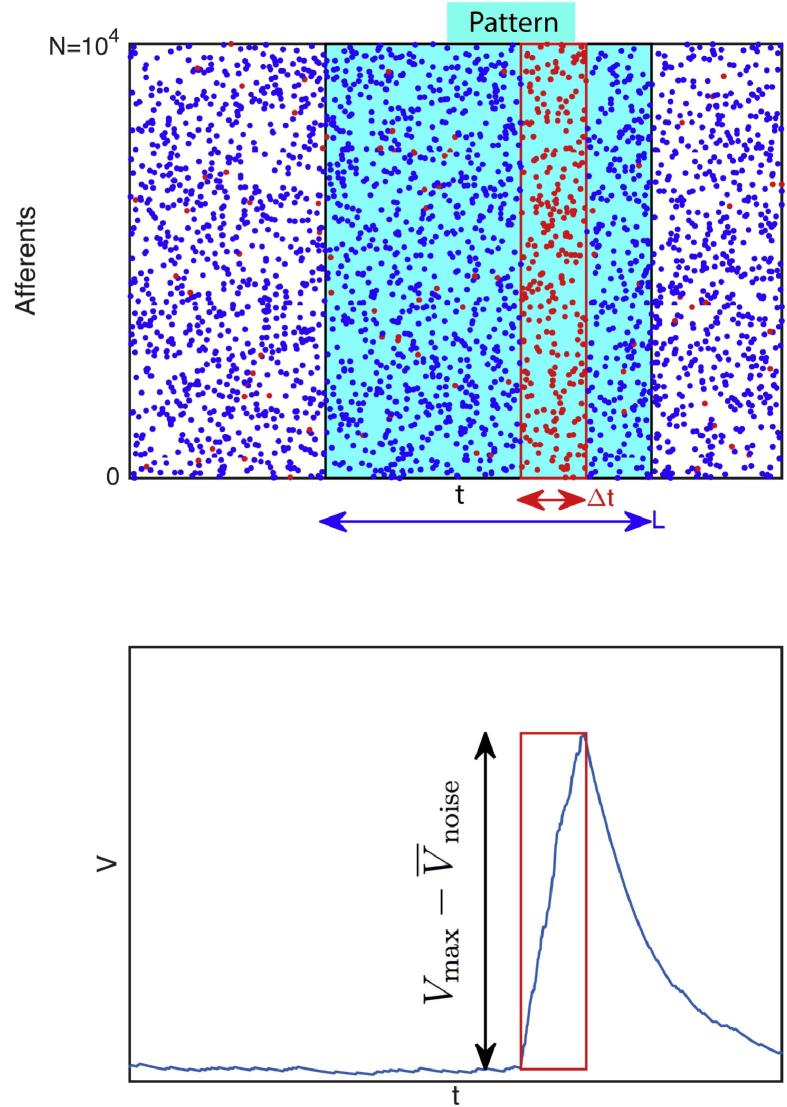

Fig. 1.

Detecting a spike pattern with a LIF neuron. (Top) Raster plot of neurons firing according to a homogeneous Poisson process. A pattern of duration $L$ can be repeated (frozen noise). Here we illustrate Strategy #1, which consists of connecting the LIF to all neurons that fire at least once during a certain time window of the pattern, with duration $\Delta t$. These neurons emit red spikes. Of course, they also fire outside of the window. (Bottom) Typically the LIF’s potential will be particularly high when integrating the spikes of the window, much higher than with random Poisson inputs. We want to optimize this difference or, more precisely, the signal-to-noise ratio (SNR, see text).

We hypothesize that all afferent neurons fire according to a homogeneous Poisson process with rate $f$, both inside and outside the pattern. That is, the pattern corresponds to one realization of the Poisson process, which can be repeated (this is sometimes referred to as “frozen noise”). To model jitter, at each repetition a random time lag is added to each spike, drawn from a uniform distribution over $[-T, T]$. A normal distribution is more often used, but it would not allow analytical treatment (see next section).
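The jitter model can be sketched as follows. The sketch assumes, as stated above, lags drawn uniformly from $[-T, T]$; `jitter_pattern` is a hypothetical helper. Each afferent's spike times are re-sorted, because a large jitter may swap neighboring spikes.

```python
import random

def jitter_pattern(pattern, max_jitter_s, rng):
    """Copy of `pattern` in which every spike is shifted independently by a lag
    drawn uniformly from [-T, T], with T = max_jitter_s."""
    return [sorted(t + rng.uniform(-max_jitter_s, max_jitter_s) for t in spikes)
            for spikes in pattern]

rng = random.Random(0)
frozen = [[0.010, 0.050], [0.020]]              # spike times (s) of two afferents
repeat_1 = jitter_pattern(frozen, 0.0032, rng)  # a fresh jitter at each repetition
repeat_2 = jitter_pattern(frozen, 0.0032, rng)
```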

We also assume that synapses are instantaneous (i.e. excitatory postsynaptic currents are made of Diracs), which facilitates the analytic calculations.

For now we ignore the LIF threshold, and we want to optimize its signal-to-noise ratio (SNR), defined as:

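$$\mathrm{SNR} = \frac{V_{\max} - V_{\text{noise}}}{\sigma_{\text{noise}}} \tag{1}$$

where $V_{\max}$ is the maximal potential reached during the pattern presentation, $V_{\text{noise}}$ is the mean value of the potential with Poisson input (noise period), and $\sigma_{\text{noise}}$ is its standard deviation (see Fig. 1).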
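Eq. (1) can be read directly off sampled potentials. In this minimal sketch, the function name is hypothetical, the noise statistics use the population standard deviation, and $V_{\max}$ is taken from a single trace. The simulations described below instead estimate $V_{\max}$ over many presentations.

```python
import statistics

def empirical_snr(v_during_pattern, v_noise):
    """Eq. (1) from sampled potentials: (V_max - V_noise) / sigma_noise."""
    v_noise_mean = statistics.fmean(v_noise)
    sigma_noise = statistics.pstdev(v_noise)
    return (max(v_during_pattern) - v_noise_mean) / sigma_noise

print(empirical_snr([2.0, 7.0, 5.0], [1.0, 3.0, 1.0, 3.0]))  # 5.0
```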

A theoretical optimum

Deriving the SNR analytically

We now want to calculate the SNR analytically. In this section, we assume unitary synaptic weights. Since the LIF has instantaneous synapses and the input spikes are generated with a Poisson process, we have $V_{\text{noise}} = \tau f M$ and $\sigma_{\text{noise}} = \sqrt{\tau f M / 2}$, where $\tau$ is the membrane time constant (Burkitt, 2006). We assume that $fM\tau \gg 1$ (large number of synaptic inputs), so that the distribution of $V$ is approximately Gaussian (Burkitt, 2006). Otherwise it would be positively skewed, and a high SNR as defined by Eq. (1) would not guarantee a low false alarm rate.
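The two moments quoted from Burkitt (2006) can be checked with a small event-driven Monte Carlo sketch. This is our own construction, with illustrative values $fM = 1$ kHz and $\tau = 20$ ms. Between input spikes, $V$ decays exactly, and each spike adds 1.

```python
import math
import random

def shot_noise_samples(rate_hz, tau_s, duration_s, sample_every_s, seed=0):
    """Sample V(t) of a threshold-free LIF with unit-weight instantaneous synapses
    driven by Poisson input of total rate rate_hz (= fM). Between events V decays
    exactly by exp(-elapsed / tau); each input spike adds 1."""
    rng = random.Random(seed)
    v, t = 0.0, 0.0
    next_spike = rng.expovariate(rate_hz)
    next_sample = 5 * tau_s                  # discard the initial transient
    samples = []
    while next_sample < duration_s:
        if next_spike < next_sample:
            v = v * math.exp(-(next_spike - t) / tau_s) + 1.0
            t = next_spike
            next_spike += rng.expovariate(rate_hz)
        else:
            v = v * math.exp(-(next_sample - t) / tau_s)
            t = next_sample
            samples.append(v)
            next_sample += sample_every_s
    return samples

rate, tau = 1000.0, 0.02                     # illustrative fM and tau
s = shot_noise_samples(rate, tau, 20.0, 0.01, seed=1)
mean = sum(s) / len(s)
sd = math.sqrt(sum((x - mean) ** 2 for x in s) / len(s))
print(mean, tau * rate)                      # empirical vs. theoretical mean
print(sd, math.sqrt(tau * rate / 2))         # empirical vs. theoretical s.d.
```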

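The number of selected afferents $M$ depends on the strategy $n$. The probability that an afferent fires $k$ times in the window is given by the Poisson probability mass function $P(k) = \lambda^k e^{-\lambda}/k!$, with $\lambda = f\Delta t$. The probability that an afferent fires at least $n$ times is thus $1 - \sum_{k=0}^{n-1} P(k)$. Finally, on average:

$$M = N\left(1 - \sum_{k=0}^{n-1} \frac{(f\Delta t)^k\, e^{-f\Delta t}}{k!}\right) \tag{2}$$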
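Eq. (2) translates directly into code. This sketch uses hypothetical function names and evaluates the formula for the Table 1 values with an illustrative $N = 10^4$.

```python
import math

def poisson_pmf(k, lam):
    """Poisson probability of exactly k events when lam are expected."""
    return lam ** k * math.exp(-lam) / math.factorial(k)

def expected_selected(N, f, dt, n):
    """Eq. (2): mean number M of afferents with at least n spikes in a window dt."""
    lam = f * dt
    return N * (1.0 - sum(poisson_pmf(k, lam) for k in range(n)))

print(expected_selected(10_000, 3.2, 0.023, 1))  # about 709.6
```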

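We now need to estimate $V_{\max}$. Intuitively, during the window, the effective input spike rate, which we call $r$, is typically higher than $fM$, because we deliberately chose the most active afferents. For example, Strategy #$n$ ensures that this rate is at least $n/\Delta t$ per selected afferent (e.g. 100 Hz for $n = 1$ and $\Delta t = 10$ ms), even if $f$ is only a few Hz. More formally, Strategy #$n$ discards the afferents that emit fewer than $n$ spikes. This means that, on average, the number of discarded spikes is $N \sum_{k=1}^{n-1} k\, P(k)$, where $P(k)$ is the Poisson probability of $k$ spikes in the window. Thus, on average:

$$r = fN - \frac{N}{\Delta t} \sum_{k=1}^{n-1} k\, \frac{(f\Delta t)^k\, e^{-f\Delta t}}{k!} \tag{3}$$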
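Eq. (3) can be coded the same way, again as a sketch with hypothetical names. For Strategy #1 no spike is discarded, so the formula reduces to $r = fN$.

```python
import math

def poisson_pmf(k, lam):
    """Poisson probability of exactly k events when lam are expected."""
    return lam ** k * math.exp(-lam) / math.factorial(k)

def effective_rate(N, f, dt, n):
    """Eq. (3): mean total input rate r during the window under Strategy #n:
    all N*f*dt window spikes, minus those of discarded afferents, divided by dt."""
    lam = f * dt
    discarded = N * sum(k * poisson_pmf(k, lam) for k in range(1, n))
    return (N * lam - discarded) / dt

print(effective_rate(10_000, 3.2, 0.023, 1))  # r = fN = 32000 Hz, up to rounding
```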

We denote by $V_\infty = \tau r$ the mean potential of the steady regime that would be reached if $\Delta t$ were infinite. We now want to compute the transient response. The LIF with instantaneous synapses and unitary synaptic weights obeys the following differential equation:

$$\tau \frac{dV}{dt} = -V + \tau \sum_j \delta(t - t_j) \tag{4}$$

where $t_j$ are the presynaptic spike times. We first make the approximation of continuity, and replace the sum of Diracs by an equivalent firing rate $r(t)$:

$$\tau \frac{dV}{dt} = -V + \tau\, r(t) \tag{5}$$

should be computed on a time bin which is much smaller than , but yet contains many spikes, to avoid discretization effects. In other words, this approximation of continuity is only valid for a large number of spikes in the integration window, that is if , which for Strategy #1 leads to .

Note that $r(t) = fM$ during the noise period, and $r(t) = r$ during the window (in the absence of jitter).

At this point it is convenient to introduce the reduced variable $v = (V - V_{\text{noise}})/(V_\infty - V_{\text{noise}})$, which obeys the following differential equation:

$$\tau \frac{dv}{dt} = -v + i(t) \tag{6}$$

where $i(t) = (r(t) - fM)/(r - fM)$ is the dimensionless input current, such that $i = 0$ during the noise period (when the input spike rate is $fM$), and $i = 1$ during the window (when the input spike rate is $r$).

Without jitter, $i(t)$ would be a simple step function, equal to 1 during the window and 0 elsewhere. Adding jitter, however, turns $i(t)$ into a trapezoidal function, which can be calculated (see Fig. 2). Once $i(t)$ is known, one can compute $v(t)$ by integrating Eq. (6).
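The trapezoid is the unit box of duration $\Delta t$ convolved with the uniform jitter density of width $2T$. The sketch below assumes jitter over $[-T, T]$ and places the window at $[0, \Delta t)$; the function name is hypothetical, and the example uses milliseconds.

```python
def jittered_input(t, dt, T):
    """Dimensionless input i(t) for a window [0, dt) whose spikes are jittered
    uniformly over [-T, T] (continuity approximation): the unit box convolved
    with a uniform density of width 2T, i.e. a trapezoid of area dt."""
    if T == 0:
        return 1.0 if 0.0 <= t < dt else 0.0
    overlap = min(dt, t + T) - max(0.0, t - T)   # length of [0, dt) within [t - T, t + T]
    return max(0.0, overlap) / (2.0 * T)

h = 0.001                                        # ms, step for the area check
area = h * sum(jittered_input(-10.0 + k * h, 23.0, 3.2) for k in range(50_000))
print(area)  # close to 23.0: jitter neither adds nor removes spikes
```

When $2T > \Delta t$, the same expression yields a plateau of height $\Delta t/(2T)$ instead of 1.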

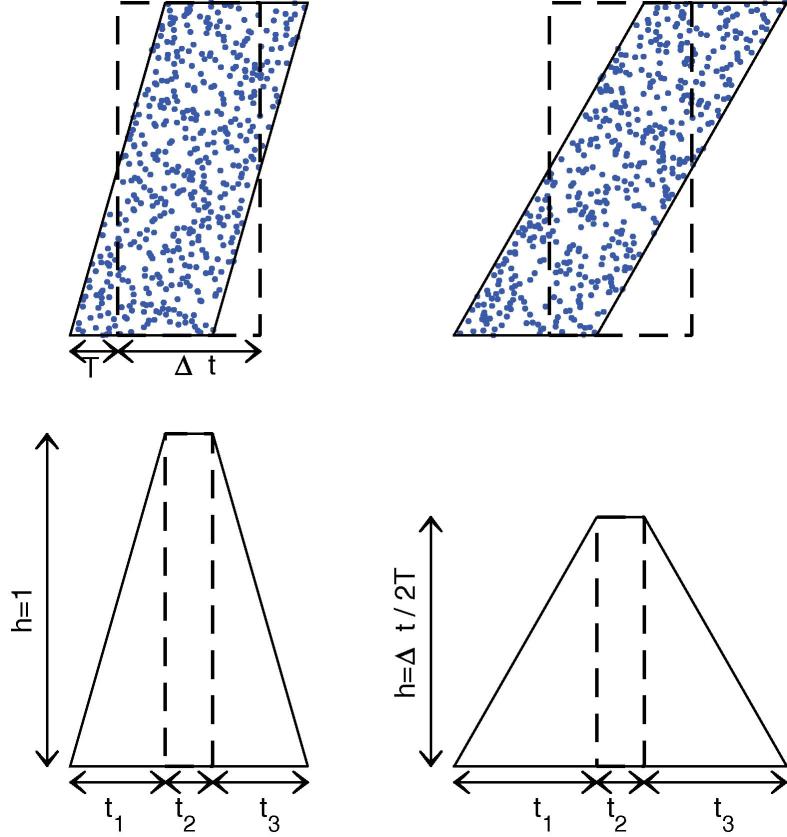

Fig. 2.

Jittering the spike pattern. (Top) Raster plots for the $M$ selected afferents. The x-axis is time, and the y-axis is spike number (arbitrary, so we order spikes by increasing added jitter, which is a random variable uniformly distributed over $[-T, T]$). Dashed (resp. solid) lines correspond to the boundaries of the raster plot before (resp. after) adding jitter. The left (resp. right) panel illustrates the case $2T < \Delta t$ (resp. $2T > \Delta t$). (Bottom) We plotted the corresponding spike time histograms or, equivalently, under the approximation of continuity, $i(t)$. One can easily compute the breakpoints and the height of the trapezoid. One can check that the trapezoidal area is $\Delta t$ whatever $T$ (jittering neither adds nor removes spikes).

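The response of the LIF to an arbitrary current $i(t)$ is (Tuckwell, 1988):

$$v(t) = v(t_0)\, e^{-(t - t_0)/\tau} + \frac{1}{\tau} \int_{t_0}^{t} i(s)\, e^{-(t - s)/\tau}\, ds \tag{7}$$

On each linear piece, $i(t) = at + b$. Given that a primitive of $(at + b)\, e^{t/\tau}$ is $\tau\,(at + b - a\tau)\, e^{t/\tau}$, the integral can be computed exactly:

$$v(t) = at + b - a\tau + \left[v(t_0) - (a t_0 + b - a\tau)\right] e^{-(t - t_0)/\tau} \tag{8}$$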
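Eq. (8) can be checked numerically against a brute-force integration of Eq. (6) on a single linear piece. In this sketch the piece parameters ($a$, $b$, $\tau$, $v(t_0)$) are arbitrary illustrative values, and the function names are hypothetical.

```python
import math

def exact_linear_step(v0, t0, t, a, b, tau):
    """Eq. (8): v(t) for tau dv/dt = -v + (a s + b) on [t0, t], from v(t0) = v0."""
    c = a * t0 + b - a * tau
    return a * t + b - a * tau + (v0 - c) * math.exp(-(t - t0) / tau)

def euler_linear(v0, t0, t, a, b, tau, h=1e-5):
    """Reference solution: forward Euler on the same equation (first-order accurate)."""
    v, s = v0, t0
    for _ in range(round((t - t0) / h)):
        v += h * (-v + a * s + b) / tau
        s += h
    return v

exact = exact_linear_step(0.5, 1.0, 4.0, 0.1, 0.2, 2.0)
approx = euler_linear(0.5, 1.0, 4.0, 0.1, 0.2, 2.0)
print(exact, approx)  # the two values agree closely
```

With $a = 0$, $b = 1$ and $v(t_0) = 0$, Eq. (8) reduces to the step response $1 - e^{-(t - t_0)/\tau}$.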

Note that a jitter distribution other than uniform (e.g. normal) would not lead to a piecewise linear function for $i(t)$, and thus would typically not permit exact integration as done here.

As illustrated in Fig. 3, one can use Eq. (8) to compute $v$ successively at the breakpoints of the trapezoid:

| (9) |

| (10) |

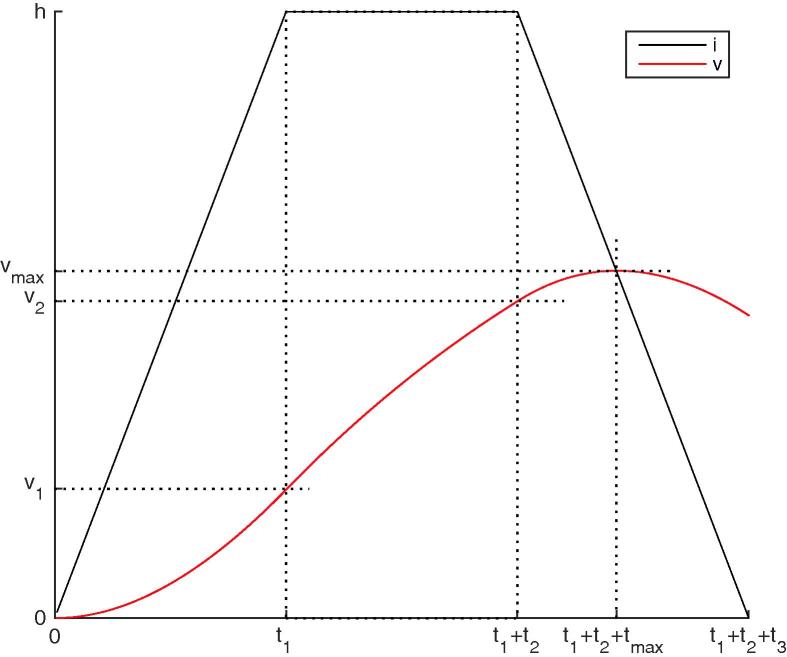

Fig. 3.

$i(t)$ is piecewise linear. $v(t)$, which low-pass filters $i(t)$, can be computed exactly on each piece. One can thus compute $v$ successively at each breakpoint, and then its maximum $v_{\max}$, reached at $t_{\max}$.

One can now compute $v(t)$ on the descending edge of the trapezoid:

| (11) |

and differentiate it:

| (12) |

This derivative is 0, indicating that v is maximal, for

| (13) |

One can check that $i(t_{\max}) = v(t_{\max})$, which means that the maximum lies on the trapezoid edge. This is logical: since $\tau\, dv/dt = i - v$, $v$ increases before the crossing (where $i > v$) and decreases after it (where $i < v$). Plugging $t_{\max}$ into Eq. (11), and making all variables explicit, we have:

| (14) |

One can check that, in the absence of jitter ($T \to 0$), this reduces to $v_{\max} = 1 - e^{-\Delta t/\tau}$, which is the classical response of a LIF to a step current.

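From the definition of $v$, $V_{\max} = V_{\text{noise}} + v_{\max}(V_\infty - V_{\text{noise}})$. We now have everything we need to compute the signal-to-noise ratio:

$$\mathrm{SNR} = \frac{v_{\max}\,(V_\infty - V_{\text{noise}})}{\sigma_{\text{noise}}} = \frac{v_{\max}\, \tau\, (r - fM)}{\sqrt{\tau f M / 2}} \tag{15}$$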
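As a numerical cross-check of Eqs. (9)–(15), the following Python sketch applies Eq. (8) piece by piece to the trapezoid and locates the maximum where $dv/dt = 0$, rather than transcribing the closed form of Eq. (14). It is our own construction, not the authors' Matlab code. It assumes jitter over $[-T, T]$, and the names `v_max` and `snr` are hypothetical.

```python
import math

def _linear_piece(v0, t0, t1, a, b, tau):
    """Eq. (8) on one piece i(s) = a s + b, s in [t0, t1]; returns v(t1), max of v."""
    c = a * tau
    K = v0 - (a * t0 + b - c)
    def v(t):
        return a * t + b - c + K * math.exp(-(t - t0) / tau)
    end = v(t1)
    best = max(v0, end)
    # interior extremum where dv/dt = a - (K / tau) exp(-(t - t0) / tau) = 0
    if a != 0 and K != 0 and 0 < c / K <= 1:
        t_star = t0 - tau * math.log(c / K)
        if t0 < t_star < t1:
            best = max(best, v(t_star))
    return end, best

def v_max(dt, tau, T):
    """Peak of the reduced potential v when i(t) is the jittered trapezoid."""
    w = min(2 * T, dt)                     # duration of each sloped edge
    h = 1.0 if T == 0 else w / (2 * T)     # plateau height (dt / 2T if 2T > dt)
    p0, p1, p2, p3 = -T, -T + w, dt + T - w, dt + T
    pieces = []
    if p1 > p0:                            # rising edge
        a = h / (p1 - p0)
        pieces.append((p0, p1, a, -a * p0))
    if p2 > p1:                            # plateau
        pieces.append((p1, p2, 0.0, h))
    if p3 > p2:                            # falling edge
        a = -h / (p3 - p2)
        pieces.append((p2, p3, a, h - a * p2))
    v, best = 0.0, 0.0
    for t0, t1, a, b in pieces:
        v, piece_max = _linear_piece(v, t0, t1, a, b, tau)
        best = max(best, piece_max)
    return best

def snr(N, f, dt, tau, T, n):
    """Eq. (15), with M from Eq. (2) and r from Eq. (3). Units: s and Hz."""
    lam = f * dt
    pmf = [lam ** k * math.exp(-lam) / math.factorial(k) for k in range(n)]
    M = N * (1.0 - sum(pmf))
    r = (N * lam - N * sum(k * p for k, p in enumerate(pmf))) / dt
    fM = f * M
    return v_max(dt, tau, T) * tau * (r - fM) / math.sqrt(tau * fM / 2.0)

print(snr(10_000, 3.2, 0.023, 0.018, 0.0032, 1))  # Table 1 values, N = 1e4: roughly 81
```

With the Table 1 parameters and $N = 10^4$ this gives an SNR close to the value of about 80 quoted below. Note that the SNR scales as $\sqrt{N}$, so the optimal $n$, $\tau$ and $\Delta t$ do not depend on $N$.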

Numerical validation

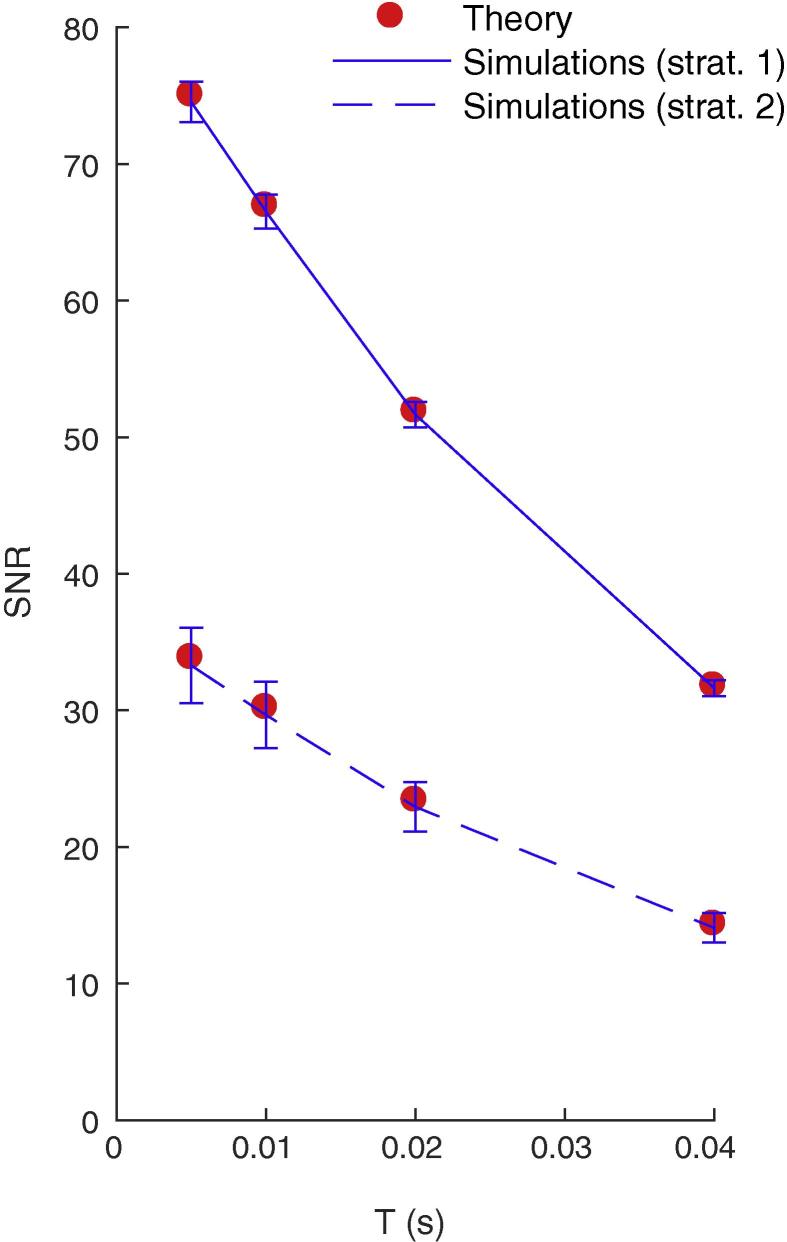

We verified the exact Eq. (15) through numerical simulations. We used a clock-based approach, and integrated Eq. (4) using the forward Euler method with a 0.1-ms time bin. We generated 100 random Poisson patterns of duration $L$, involving $N$ neurons with rate $f$. We chose $\Delta t = L$, i.e. the LIF was connected to all the afferents that emitted at least $n$ spikes during the whole pattern, $n$ being the strategy number (the constraint on $fM\tau$ limits the admissible values here). In order to estimate $V_{\max}$, each pattern was presented 1000 times, every 400 ms. Between pattern presentations, the afferents fired according to a Poisson process, still with rate $f$, which allowed us to estimate $V_{\text{noise}}$ and $\sigma_{\text{noise}}$. We could thus compute the SNR from Eq. (1) (and its standard deviation across the 100 patterns). As can be seen in Fig. 4, it matches the theoretical values very well for a broad range of jitters, and for strategies 1 and 2.
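The following is a minimal sketch of one plausible clock-based forward Euler scheme for Eq. (4) with 0.1-ms bins. The exact scheme used is an assumption. The Dirac inputs falling in each bin are added as unit jumps, and only the leak is integrated with Euler. Function names are hypothetical.

```python
def lif_euler(spike_counts, tau_s, dt_s=1e-4):
    """Clock-based forward Euler for Eq. (4) with unit weights.
    spike_counts[k] is the number of input spikes in bin k; each one adds 1 to V
    (the integral of its Dirac), while the leak -V/tau is integrated with Euler."""
    v, trace = 0.0, []
    for count in spike_counts:
        v += -v / tau_s * dt_s + count
        trace.append(v)
    return trace

def bin_spikes(spike_times_s, dt_s, n_bins):
    """Histogram of input spike times (all afferents pooled) on the simulation clock."""
    counts = [0] * n_bins
    for t in spike_times_s:
        k = int(t / dt_s)
        if 0 <= k < n_bins:
            counts[k] += 1
    return counts

# A single input spike followed by 100 empty bins (tau = 10 ms)
decay = lif_euler([1] + [0] * 100, 0.01)
```

With a regular input of one spike per bin (10 kHz) and $\tau = 10$ ms, the trace settles at $\tau \times$ rate $= 100$, as expected for $V_{\text{noise}} = \tau f M$.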

Fig. 4.

Numerical validation of the theoretical SNR values, for strategies 1 and 2, and for different maximal jitter T. Error bars show s.d.

Optimizing the SNR

We now want to optimize the SNR given by Eq. (15). We consider $f$ and $T$ to be external variables, and we are free to choose the strategy number $n$, $\Delta t$ and $\tau$. We also add a lower bound on $fM\tau$ (large number of synaptic inputs). We assume that $L$ is sufficiently large that an upper bound for $\Delta t$ is not needed. We used the Matlab R2015b Optimization Toolbox (MathWorks Inc., Natick, MA, USA) to compute the optimum numerically.
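The optimum can be approximated without the Matlab toolbox by a brute-force grid search. This is a different and cruder technique than the one used here. The sketch below re-implements Eq. (15) by applying Eq. (8) piece by piece to the trapezoid. It assumes $N = 10^4$ and a constraint value $fM\tau \ge 10$; that value is our assumption, since the text only requires a large $fM\tau$. It then scans $n \in \{1, 2, 3\}$ and $\tau$, $\Delta t$ from 1 to 80 ms. All names are hypothetical.

```python
import math

def v_max(dt, tau, T):
    """Peak of the reduced potential v for the jittered trapezoid i(t),
    obtained by applying Eq. (8) on each linear piece (jitter over [-T, T])."""
    w = min(2 * T, dt)                       # duration of each sloped edge
    h = 1.0 if T == 0 else w / (2 * T)       # trapezoid height
    p0, p1, p2, p3 = -T, -T + w, dt + T - w, dt + T
    pieces = []                              # (t0, t1, a, b) with i(s) = a*s + b
    if p1 > p0:
        pieces.append((p0, p1, h / w, -h / w * p0))
    if p2 > p1:
        pieces.append((p1, p2, 0.0, h))
    if p3 > p2:
        pieces.append((p2, p3, -h / w, h + h / w * p2))
    v = best = 0.0
    for t0, t1, a, b in pieces:
        K = v - (a * t0 + b - a * tau)
        times = [t1]
        if a != 0 and K != 0 and 0 < a * tau / K <= 1:   # dv/dt = 0 on this piece?
            t_star = t0 - tau * math.log(a * tau / K)
            if t0 < t_star < t1:
                times.append(t_star)
        values = [a * t + b - a * tau + K * math.exp(-(t - t0) / tau) for t in times]
        best = max(best, *values)
        v = values[0]                        # v(t1) starts the next piece
    return best

def snr(N, f, dt, tau, T, n, min_inputs=10.0):
    """Eq. (15) in seconds and Hz; None if f*M*tau < min_inputs (assumed bound)."""
    lam = f * dt
    pmf = [lam ** k * math.exp(-lam) / math.factorial(k) for k in range(n)]
    fM = f * N * (1.0 - sum(pmf))                                 # f times Eq. (2)
    if fM * tau < min_inputs:
        return None
    r = N * (lam - sum(k * q for k, q in enumerate(pmf))) / dt    # Eq. (3)
    return v_max(dt, tau, T) * tau * (r - fM) / math.sqrt(tau * fM / 2.0)

def grid_optimum(f, T, N=10_000, strategies=(1, 2, 3)):
    """Brute-force search over n, and over tau and dt on a 1-ms grid (1 to 80 ms)."""
    best = (-math.inf, None)
    for n in strategies:
        for tau_ms in range(1, 81):
            for dt_ms in range(1, 81):
                s = snr(N, f, dt_ms / 1000, tau_ms / 1000, T, n)
                if s is not None and s > best[0]:
                    best = (s, (n, tau_ms, dt_ms))
    return best

print(grid_optimum(3.2, 0.0032))  # (SNR, (n, tau in ms, dt in ms)); compare with Table 1
```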

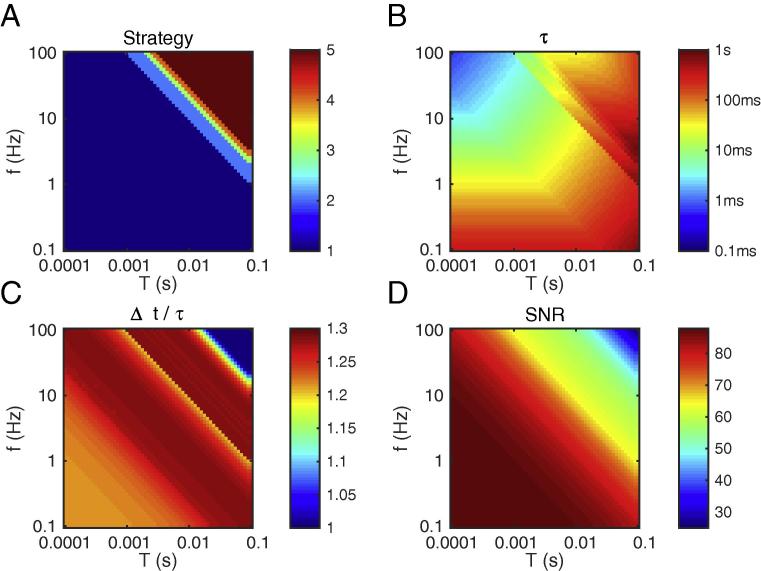

Fig. 5 illustrates the results. One can make the following observations:

- Strategy #1 is usually the best for $f$ and $T$ in the biological ranges (see below), while higher numbers are optimal for very large $f$ and $T$ (see panel A). This means that emitting a single spike is already a significant event, which should not be ignored. We will come back to this point in the discussion.
- Unsurprisingly, optimal $\tau$ and $\Delta t$ typically have the same order of magnitude ($\Delta t$ being slightly larger, see panel C). Unless $T$ is high (>10 ms) or $f$ is low (<1 Hz), these timescales should be relatively small (at most a few tens of ms). This means that even a long pattern (hundreds of ms or above) is optimally detected by a coincidence detector working at a shorter timescale. This could explain the apparent paradox between typical ecological stimulus durations (hundreds of ms or above) and neuronal integration timescales (at most a few tens of ms).
- The constraint on $fM\tau$ imposes a larger $\tau$ when both $f$ and $T$ are small (panel B, lower left). In the other cases, it is naturally satisfied.
- Unsurprisingly, the optimal SNR decreases with $T$. More surprisingly, it also decreases with $f$. In other words, sparse activity is preferable. We will come back to this point in the discussion.

Fig. 5.

Optimal parameters, as a function of $f$ and $T$. (A) Optimal strategy. For clarity we only computed a limited range of strategies, but it is clear that higher numbers would be optimal for large $f$ and $T$. (B) Optimal $\tau$ (note the logarithmic colormap). (C) Optimal $\Delta t$, divided by $\tau$. (D) Resulting SNR.

What is the biological range for $f$ and $T$? It is worth mentioning that $f$ is probably largely overestimated in the electrophysiological literature, because the recording technique ignores cells that do not fire. Furthermore, experimentalists tend to select the most responsive cells, and search for stimuli that elicit strong responses. Mean firing rates, averaged across time and cells, could be smaller than 1 Hz (Shoham et al., 2006). But obviously some cells strongly respond to some stimuli. The frozen Poisson noise model captures this variability. For example, with $N = 10^4$, $f = 3.2$ Hz and $L = 100$ ms (values used in the next section), leading to $fL = 0.32$ expected spikes per cell, on average the pattern will elicit 0 spikes in 7261 cells, 1 spike (10 Hz) in 2324 cells, 2 spikes (20 Hz) in 372 cells, 3 spikes (30 Hz) in 40 cells, and 4 spikes (40 Hz) in 3 cells.
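The cell counts above are the Poisson expectations $N\,P(k)$ with $fL = 0.32$. A short sketch reproduces them; the function name is hypothetical.

```python
import math

def cells_per_spike_count(N, f, L, k_max):
    """Expected number of afferents emitting exactly k spikes in a pattern of
    duration L, for k = 0..k_max (frozen Poisson noise with rate f)."""
    lam = f * L
    return [N * lam ** k * math.exp(-lam) / math.factorial(k) for k in range(k_max + 1)]

counts = cells_per_spike_count(10_000, 3.2, 0.1, 4)
print([round(c) for c in counts])  # [7261, 2324, 372, 40, 3]
```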

T corresponds to the spike time precision. Millisecond precision in cortex has been reported (Kayser et al., 2010, Panzeri and Diamond, 2010, Havenith et al., 2011). We are aware that other studies found poorer precision, but this could be due to uncontrolled variables or the use of inappropriate reference times (Masquelier, 2013).

We now focus, as an example, on the point in the middle of the $(f, T)$ plane, whose parameters are gathered in Table 1. The resulting SNR is very high (about 80). In other words, it is possible to choose a threshold for the LIF that will be reached when the pattern is presented, but hardly ever during the noise periods.

Table 1.

Numerical parameters. The first two rows are external parameters; the remaining rows are the optimized parameters.

| Parameter | Value |
|---|---|
| $T$ | 3.2 ms |
| $f$ | 3.2 Hz |
| Optimal $\tau$ | 18 ms |
| Optimal $\Delta t$ | 23 ms |
| Optimal $n$ | 1 |

In the next section, we investigate, through numerical simulations, whether STDP can find this optimum. More specifically, since STDP does not adjust $\tau$, we set it to the optimal value in Table 1 and investigated whether STDP could lead to the optimal $n$ and $\Delta t$.

Simulations show that STDP can be close-to-optimal

Set-up

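The set-up we used was similar to that of our previous studies (Masquelier et al., 2008, Gilson et al., 2011). We simulated a LIF neuron connected to all of the afferents through plastic synaptic weights $w_i$, where afferent $i$ emits spikes at times $t_i^j$. Its potential obeys the following differential equation:

$$\tau \frac{dV}{dt} = -V + \tau \sum_i w_i \sum_j \delta(t - t_i^j) \tag{16}$$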

Initial synaptic weights were all equal. These weights then evolved in $[0, 1]$ with additive, all-to-all STDP as in Song et al. (2000). However, we only modeled the Long-Term Potentiation (LTP) part of STDP, ignoring its Long-Term Depression (LTD) term. Here LTD was modeled by a simple homeostatic term $w_{\text{out}}$, which is added to each synaptic weight at each postsynaptic spike (Kempter et al., 1999). Note that using a spike-timing-dependent LTD could also lead to the detection of a repeating pattern, as demonstrated in our earlier studies (Masquelier et al., 2008, Masquelier et al., 2009), but less robustly, because it is more difficult to depress the synapses corresponding to afferents that do not spike in the repeating pattern.

As in Song et al. (2000), at each synapse $i$, we introduce the trace of presynaptic spikes $A^i_{\text{pre}}$, which obeys the following differential equation:

$$\tau_{\text{pre}} \frac{dA^i_{\text{pre}}}{dt} = -A^i_{\text{pre}} \tag{17}$$

Furthermore:

- At each presynaptic spike: $A^i_{\text{pre}} \leftarrow A^i_{\text{pre}} + \Delta A_{\text{pre}}$.
- At each postsynaptic spike: $w_i \leftarrow w_i + A^i_{\text{pre}} + w_{\text{out}}$ for all $i$, then the weights are clipped in $[0, 1]$.

We used and , while and the LIF threshold were systematically varied (see below). The refractory period was ignored for simplicity.

We used a clock-based approach, and integrated Eqs. (16), (17) using the forward Euler method with a 0.1-ms time bin. The Matlab code for these simulations has been made available in ModelDB (Hines et al., 2004) at https://senselab.med.yale.edu/modeldb/.
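One clock step of this set-up might look as follows. This is a sketch, not the ModelDB code. It uses our own names for the trace (`trace`), its time constant (`tau_pre`), its increment (`dA_pre`), the homeostatic term (`w_out`) and the threshold (`theta`). The order of operations within a bin (decay, leak, presynaptic updates, then threshold check) and the reset to 0 after a postsynaptic spike are our assumptions.

```python
def stdp_lif_step(v, w, trace, spiking, tau, tau_pre, dA_pre, w_out, theta, dt=1e-4):
    """Advance the LIF of Eq. (16) and the presynaptic traces of Eq. (17) by one
    forward-Euler step. `w` and `trace` are lists updated in place; `spiking`
    lists the afferents that fired in this bin. Returns (new v, fired?)."""
    for i in range(len(trace)):
        trace[i] -= trace[i] * dt / tau_pre        # Eq. (17): trace decay
    v -= v * dt / tau                              # Eq. (16): leak
    for i in spiking:
        v += w[i]                                  # instantaneous synapse
        trace[i] += dA_pre                         # presynaptic spike bumps the trace
    fired = v >= theta
    if fired:
        for i in range(len(w)):                    # LTP via the trace + homeostatic w_out
            w[i] = min(1.0, max(0.0, w[i] + trace[i] + w_out))
        v = 0.0                                    # assumed reset; no refractory period
    return v, fired
```

Calling this function once per 0.1-ms bin, with `spiking` taken from the binned input, gives a clock-based simulation in the spirit described above.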

We now describe how the input spikes were generated. Between pattern presentations, the input spikes were generated randomly with a homogeneous Poisson process with rate $f$ (see Table 1). The spike pattern, with duration $L$, was generated only once using the same Poisson process (frozen noise). The pattern presentations occurred every 400 ms. In previous studies, we demonstrated that irregular intervals did not matter (Masquelier et al., 2008, Gilson et al., 2011), so here regular intervals were used for simplicity. At each pattern presentation, all the spike times were shifted independently by random jitters uniformly distributed over $[-T, T]$ (see Table 1).
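The input protocol can be sketched as follows. The sketch assumes that each 400-ms cycle consists of Poisson background followed by one jittered copy of the frozen pattern, and that jitter is uniform over $[-T, T]$. All names are hypothetical.

```python
import random

def poisson_train(rate_hz, start_s, duration_s, rng):
    """Poisson spike times on [start_s, start_s + duration_s)."""
    times, t = [], start_s + rng.expovariate(rate_hz)
    while t < start_s + duration_s:
        times.append(t)
        t += rng.expovariate(rate_hz)
    return times

def build_input(n_afferents, f, L, period, n_repeats, T, seed=0):
    """Poisson background, with a jittered copy of one frozen pattern (duration L)
    ending each period-long cycle. Returns per-afferent spike trains and onsets."""
    rng = random.Random(seed)
    pattern = [poisson_train(f, 0.0, L, rng) for _ in range(n_afferents)]
    trains = [[] for _ in range(n_afferents)]
    onsets = []
    for rep in range(n_repeats):
        start = rep * period
        onset = start + (period - L)               # pattern occupies the end of the cycle
        onsets.append(onset)
        for i in range(n_afferents):
            trains[i].extend(poisson_train(f, start, period - L, rng))
            trains[i].extend(onset + s + rng.uniform(-T, T) for s in pattern[i])
    return [sorted(tr) for tr in trains], onsets

trains, onsets = build_input(100, 3.2, 0.1, 0.4, 5, 0.0032)  # illustrative sizes
```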

Results: two optimal modes

The theory developed in the previous sections ignored the LIF threshold (a difference between unconstrained potentials was maximized). In the simulations, however, a threshold is needed to produce postsynaptic spikes, which are necessary for STDP. Since we did not know which threshold values could lead to the optimal $\Delta t$, we performed an exhaustive search over threshold values $\theta$, using a geometric progression with a 1.1 ratio. Note that, from Eq. (16), the threshold $\theta$ can be interpreted as the number of synchronous presynaptic spikes needed to reach the threshold from the resting potential if these spikes arrive through maximally reinforced synapses ($w_i = 1$).

We also used a geometric progression with a 1.1 ratio to search for the homeostatic LTD term $w_{\text{out}}$. This parameter tunes the strength of the LTD relative to the LTP, and thus influences the number of reinforced synapses after convergence. For each point, 100 simulations were performed with different random patterns, and we computed the proportion $p$ of “optimal” ones (see below for the definition).
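The parameter scans can be sketched with a geometric progression of ratio 1.1. The range for $\theta$ below is hypothetical. If the LTD term is stored as a negative number, one would scan its magnitude instead (an assumption). `proportion_optimal` simply computes $p$ from per-simulation outcomes.

```python
def geometric_grid(start, stop, ratio=1.1):
    """Values start, start*ratio, start*ratio**2, ... up to stop (inclusive)."""
    values, x = [], start
    while x <= stop * (1 + 1e-12):
        values.append(x)
        x *= ratio
    return values

def proportion_optimal(outcomes):
    """p: fraction of simulations (one per random pattern) judged optimal."""
    return sum(outcomes) / len(outcomes)

thresholds = geometric_grid(10.0, 100.0)   # hypothetical theta range
print(len(thresholds))                     # 25 values, from 10 up to about 98.5
```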

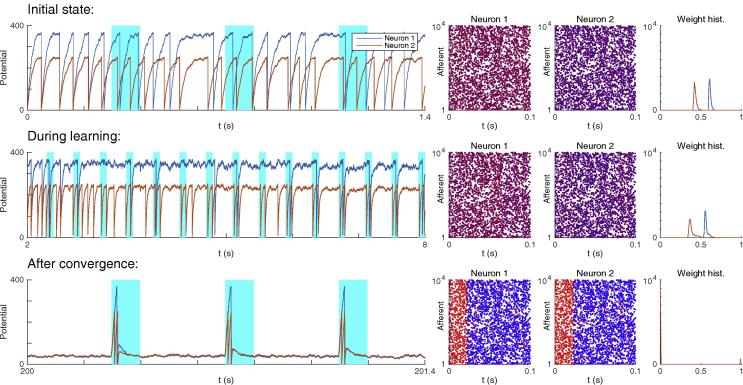

The initial weights were chosen so as to give an initial firing rate of about 20 Hz (see Fig. 6 top). After 500 pattern presentations, the synaptic weights converged by saturation. That is, synapses were either completely depressed ($w_i = 0$) or maximally reinforced ($w_i = 1$), as usual with additive STDP (Song et al., 2000, van Rossum et al., 2000, Gütig et al., 2003). A simulation was considered optimal if the reinforced synapses corresponded to a set of afferents that fired at least once (Strategy #1) in a subsection of the pattern whose duration matched the optimal window $\Delta t$ given in Table 1 (with a 10% margin). In practice this subsection typically corresponded to the beginning of the pattern, because STDP tracks back through the pattern (Masquelier et al., 2008, Gilson et al., 2011), but this is irrelevant here.

Fig. 6.

Unsupervised STDP-based pattern learning. Neurons #1 and #2 illustrate modes #1 and #2, respectively. (Top) Initial state. On the left, we plotted the potential of each neuron as a function of time. Cyan rectangles indicate pattern presentations. Next, we represented the weights corresponding to the rightmost time point in two different ways. First, we plotted the spike pattern, coloring the spikes as a function of the corresponding synaptic weight for each neuron: blue for low weight, purple for intermediate weight, and red for high weight. Initial weights were uniform (0.68 for Neuron #1 and 0.47 for Neuron #2). We also plotted the weight histogram for each neuron. (Middle) During learning. Selectivity has emerged, yet the weights still have intermediate values, leading to suboptimal SNR. (Bottom) After convergence. For both neurons, STDP has concentrated the weights on the afferents that fire at least once in a window of duration $\Delta t$, located at the beginning of the pattern. This results in 1 and 2 postsynaptic spikes for Neurons #1 and #2, respectively, each time the pattern is presented. Elsewhere, both potentials remain low, resulting in optimal SNR.

We found two optimal modes (see Fig. 6). The first one, with a high threshold and strong LTD () led to 1 postsynaptic spike at each pattern presentation (as in our previous studies (Masquelier et al., 2008, Masquelier et al., 2009, Gilson et al., 2011)). For this mode, . The second mode, with a lower threshold and weaker LTD led to 2 postsynaptic spikes at each pattern presentation, and (the lower threshold increases the probability of false alarms during the noise period, but this problem could be solved by requiring two consecutive spikes for pattern detection). Fig. 6 illustrates an optimal simulation for both modes. We conclude that for most patterns, STDP can turn the LIF neuron into an optimal, or close-to-optimal pattern detector.

Detection is optimal only after convergence (i.e. weight binarization), which takes time (about 500 pattern presentations). This is because the learning rate we used is weak: the maximal weight increase caused by one pair of pre- and postsynaptic spikes is only 1% of the maximal weight, as in other theoretical studies and in accordance with experimental measurements (Song et al., 2000, Masquelier et al., 2008, Masquelier et al., 2009, Yger et al., 2015). Using a higher rate, it is possible to converge faster, at the expense of robustness. For example, with a faster learning rate, $p$ decreases to 44% and 80% for modes #1 and #2, respectively. In any case, it is worth mentioning that (suboptimal) selectivity emerges well before convergence (see Fig. 6, middle).

Critically, for successful learning the pattern presentation rate must be high in the early phase of learning, before selectivity emerges. For example presenting the pattern every 800 ms instead of 400 ms leads to and 43% for modes #1 and #2 respectively. Once selectivity has emerged, this rate has much less impact, since the neuron tends to fire (and thus changes its weights) only at pattern presentations, whatever the intervals between them.

Discussion

One of the main results of this study is that even a long pattern (hundreds of ms or above) is optimally detected by a coincidence detector working at a shorter timescale (tens of ms), which thus ignores most of the pattern. One could have thought that using a membrane time constant long enough to integrate all the spikes from the pattern would be the best strategy. Instead, it is better to use a subpattern as the signature for the whole pattern (see Fig. 5).

We also demonstrated that STDP can find the optimal signature in an unsupervised manner, by mere pattern repetitions. Note that the problem that STDP solves here is similar to the one addressed by the Tempotron (Gütig and Sompolinsky, 2006), which finds the best spike coincidence to separate two (classes of) patterns, by emitting or not a postsynaptic spike. Recently, the framework has been extended to fire more than one spike per pattern (Gütig, 2016), like here (e.g. Neuron #2 in Fig. 6). Yet these mechanisms require supervision.

In this work we only considered single-cell readout. But of course in the brain, it is likely that a population of cells is involved, and these cells could learn different subpatterns (lateral inhibition could encourage them to do so (Masquelier et al., 2009)). If each cell is selective to a subpart of the repeating pattern, how can one make a full pattern detector? One solution is to use one downstream neuron with appropriate delay lines (Carr and Konishi, 1988). Specifically, the conduction delays should compensate for the differences in latency, so that the downstream neuron receives the input spikes simultaneously if and only if the subpatterns are presented in the correct order. Another solution would be to convert the spatiotemporal firing pattern into a spatial one, using neuronal chains with delays as suggested by Tank and Hopfield (1987). Such a spatial pattern (a set of simultaneously active neurons) can then be learned by one downstream neuron equipped with STDP and fully connected to the neuronal chains, as demonstrated in Larson et al. (2010).

It is also conceivable that the whole pattern is detected based on the mere number of subpattern detectors’ spikes, ignoring their times. Two studies in the human auditory system are consistent with this idea: after learning meaningless white noise sounds, recognition is still possible if the sounds are compressed or played backward (Agus et al., 2010), or chopped into 10-ms bins that are then played in random order (Viswanathan et al., 2016).

Our theoretical study suggests that synchrony is an important part of the neural code (Stanley et al., 2012), that it is computationally efficient (Gütig and Sompolinsky, 2006, Brette, 2012), and that coincidence detection is the main function of neurons (Abeles, 1982, König et al., 1996). In line with this proposal, neurons in vivo appear to be mainly fluctuation-driven, not mean-driven (Brette, 2012, Brette, 2015). It remains unclear whether other aspects of spike timing, such as ranks (Thorpe and Gautrais, 1998), also matter.

Our results show that, somewhat surprisingly, lower firing rates lead to a better signal-to-noise ratio. This could explain why average firing rates are so low in the brain, possibly smaller than 1 Hz (Shoham et al., 2006). It seems that neurons only fire when they need to signal an important event, and that every spike matters (Wolfe et al., 2010).

Acknowledgments

This research received funding from the European Research Council under the European Union’s 7th Framework Program (FP/2007–2013)/ERC Grant Agreement no. 323711 (M4 project). We thank Saeed Reza Kheradpisheh and Matthieu Gilson for the many insightful discussions we had about this work, and the two anonymous reviewers for their valuable comments. We also thank Tom Morse and ModelDB for distributing our code.

This article is part of a Special Issue entitled: Sensory Sequence Processing in the Brain.

References

- Abeles M. Role of the cortical neuron: integrator or coincidence detector? Isr J Med Sci. 1982;18:83–92. [PubMed] [Google Scholar]

- Agus T.R., Thorpe S.J., Pressnitzer D. Rapid formation of robust auditory memories: insights from noise. Neuron. 2010;66:610–618. doi: 10.1016/j.neuron.2010.04.014. [DOI] [PubMed] [Google Scholar]

- Andrillon T., Kouider S., Agus T., Pressnitzer D. Perceptual learning of acoustic noise generates memory-evoked potentials. Curr Biol. 2015;25:2823–2829. doi: 10.1016/j.cub.2015.09.027. [DOI] [PubMed] [Google Scholar]

- Brette R. Computing with neural synchrony. PLoS Comput Biol. 2012;8:e1002561. doi: 10.1371/journal.pcbi.1002561. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brette R. Philosophy of the spike: rate-based vs. spike-based theories of the brain. Front Syst Neurosci. 2015;9:1–14. doi: 10.3389/fnsys.2015.00151. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Burkitt A.N. A review of the integrate-and-fire neuron model: I. Homogeneous synaptic input. Biol Cybern. 2006;95:1–19. doi: 10.1007/s00422-006-0068-6. [DOI] [PubMed] [Google Scholar]

- Carr C.E., Konishi M. Axonal delay lines for time measurement in the owl’s brainstem. Proc Natl Acad Sci USA. 1988;85:8311–8315. doi: 10.1073/pnas.85.21.8311. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gilson M., Masquelier T., Hugues E. STDP allows fast rate-modulated coding with Poisson-like spike trains. PLoS Comput Biol. 2011;7:e1002231. doi: 10.1371/journal.pcbi.1002231. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gold J.M., Aizenman A., Bond S.M., Sekuler R. Memory and incidental learning for visual frozen noise sequences. Vision Res. 2014;99:19–36. doi: 10.1016/j.visres.2013.09.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gütig R. Spiking neurons can discover predictive features by aggregate-label learning. Science (New York NY) 2016;351:aab4113. doi: 10.1126/science.aab4113. [DOI] [PubMed] [Google Scholar]

- Gütig R., Aharonov R., Rotter S., Sompolinsky H. Learning input correlations through nonlinear temporally asymmetric Hebbian plasticity. J Neurosci. 2003;23:3697–3714. doi: 10.1523/JNEUROSCI.23-09-03697.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gütig R., Sompolinsky H. The tempotron: a neuron that learns spike timing-based decisions. Nat Neurosci. 2006;9:420–428. doi: 10.1038/nn1643. [DOI] [PubMed] [Google Scholar]

- Havenith M.N., Yu S., Biederlack J., Chen N.H., Singer W., Nikolic D. Synchrony makes neurons fire in sequence, and stimulus properties determine who is ahead. J Neurosci. 2011;31:8570–8584. doi: 10.1523/JNEUROSCI.2817-10.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hines M.L., Morse T., Migliore M., Carnevale N.T., Shepherd G.M. ModelDB: a database to support computational neuroscience. J Comput Neurosci. 2004;17:7–11. doi: 10.1023/B:JCNS.0000023869.22017.2e. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Humble J., Denham S., Wennekers T. Spatio-temporal pattern recognizers using spiking neurons and spike-timing-dependent plasticity. Front Comput Neurosci. 2012;6:84. doi: 10.3389/fncom.2012.00084. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hunzinger J.F., Chan V.H., Froemke R.C. Learning complex temporal patterns with resource-dependent spike timing-dependent plasticity. J Neurophysiol. 2012;108:551–566. doi: 10.1152/jn.01150.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kasabov N., Dhoble K., Nuntalid N., Indiveri G. Dynamic evolving spiking neural networks for on-line spatio- and spectro-temporal pattern recognition. Neural Networks. 2013;41:188–201. doi: 10.1016/j.neunet.2012.11.014. [DOI] [PubMed] [Google Scholar]

- Kayser C., Logothetis N.K., Panzeri S. Millisecond encoding precision of auditory cortex neurons. Proc Natl Acad Sci USA. 2010;107:16976–16981. doi: 10.1073/pnas.1012656107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kempter R., Gerstner W., van Hemmen J.L. Hebbian learning and spiking neurons. Phys Rev E. 1999;59:4498–4514.

- Klampfl S., Maass W. Emergence of dynamic memory traces in cortical microcircuit models through STDP. J Neurosci. 2013;33:11515–11529. doi: 10.1523/JNEUROSCI.5044-12.2013.

- König P., Engel A.K., Singer W. Integrator or coincidence detector? The role of the cortical neuron revisited. Trends Neurosci. 1996;19:130–137. doi: 10.1016/s0166-2236(96)80019-1.

- Krunglevicius D. Competitive STDP learning of overlapping spatial patterns. Neural Comput. 2015;27:1673–1685. doi: 10.1162/NECO_a_00753.

- Larson E., Perrone B.P., Sen K., Billimoria C.P. A robust and biologically plausible spike pattern recognition network. J Neurosci. 2010;30:15566–15572. doi: 10.1523/JNEUROSCI.3672-10.2010.

- Luczak A., McNaughton B.L., Harris K.D. Packet-based communication in the cortex. Nat Rev Neurosci. 2015;16:745–755. doi: 10.1038/nrn4026.

- Masquelier T. Neural variability, or lack thereof. Front Comput Neurosci. 2013;7:1–7. doi: 10.3389/fncom.2013.00007.

- Masquelier T., Guyonneau R., Thorpe S.J. Spike timing dependent plasticity finds the start of repeating patterns in continuous spike trains. PLoS ONE. 2008;3:e1377. doi: 10.1371/journal.pone.0001377.

- Masquelier T., Guyonneau R., Thorpe S.J. Competitive STDP-based spike pattern learning. Neural Comput. 2009;21:1259–1276. doi: 10.1162/neco.2008.06-08-804.

- Nessler B., Pfeiffer M., Buesing L., Maass W. Bayesian computation emerges in generic cortical microcircuits through spike-timing-dependent plasticity. PLoS Comput Biol. 2013;9:e1003037. doi: 10.1371/journal.pcbi.1003037.

- Panzeri S., Diamond M.E. Information carried by population spike times in the whisker sensory cortex can be decoded without knowledge of stimulus time. Front Synaptic Neurosci. 2010;2:1–14. doi: 10.3389/fnsyn.2010.00017.

- van Rossum M.C., Bi G.Q., Turrigiano G.G. Stable Hebbian learning from spike timing-dependent plasticity. J Neurosci. 2000;20:8812–8821. doi: 10.1523/JNEUROSCI.20-23-08812.2000.

- Shoham S., O’Connor D.H., Segev R. How silent is the brain: Is there a “dark matter” problem in neuroscience? J Comp Physiol A Neuroethol Sensory Neural Behav Physiol. 2006;192:777–784. doi: 10.1007/s00359-006-0117-6.

- Song S., Miller K.D., Abbott L.F. Competitive Hebbian learning through spike-timing-dependent synaptic plasticity. Nat Neurosci. 2000;3:919–926. doi: 10.1038/78829.

- Stanley G.B., Jin J., Wang Y., Desbordes G., Wang Q., Black M.J., Alonso J.M. Visual orientation and directional selectivity through thalamic synchrony. J Neurosci. 2012;32:9073–9088. doi: 10.1523/JNEUROSCI.4968-11.2012.

- Sun H., Sourina O., Huang G.B. Learning polychronous neuronal groups using joint weight-delay spike-timing-dependent plasticity. Neural Comput. 2016;28:2181–2212. doi: 10.1162/NECO_a_00879.

- Tank D.W., Hopfield J.J. Neural computation by concentrating information in time. Proc Natl Acad Sci USA. 1987;84:1896–1900. doi: 10.1073/pnas.84.7.1896.

- Thorpe S.J., Gautrais J. Rank order coding. In: Bower J.M., editor. Computational Neuroscience: Trends in Research. Plenum Press; New York: 1998. pp. 113–118.

- Tiesinga P., Fellous J.M., Sejnowski T.J. Regulation of spike timing in visual cortical circuits. Nat Rev Neurosci. 2008;9:97–107. doi: 10.1038/nrn2315.

- Tuckwell H.C. Introduction to Theoretical Neurobiology, Volume 1. Cambridge University Press; Cambridge: 1988. doi: 10.2277/052101932X.

- Viswanathan J., Rémy F., Bacon-Macé N., Thorpe S. Long term memory for noise: evidence of robust encoding of very short temporal acoustic patterns. Front Neurosci. 2016;10:490. doi: 10.3389/fnins.2016.00490.

- Wolfe J., Houweling A.R., Brecht M. Sparse and powerful cortical spikes. Curr Opin Neurobiol. 2010;20:306–312. doi: 10.1016/j.conb.2010.03.006.

- Yger P., Stimberg M., Brette R. Fast learning with weak synaptic plasticity. J Neurosci. 2015;35:13351–13362. doi: 10.1523/JNEUROSCI.0607-15.2015.