Abstract

This article reviews recent research on the extinction of instrumental (or operant) conditioning from the perspective that it is an example of a general retroactive interference process. Previous discussions of interference have focused primarily on findings from Pavlovian conditioning. The present review shows that extinction in instrumental learning has much in common with other examples of retroactive interference in instrumental learning (e.g., omission learning, punishment, second-outcome learning, discrimination reversal learning, and differential reinforcement of alternative behavior). In each, the original learning can be largely retained after conflicting information is learned, and behavior is cued or controlled by the current context. The review also suggests that a variety of stimuli can play the role of context, including room and apparatus cues, temporal cues, drug state, deprivation state, stress state, and recent reinforcers, discrete cues, or behaviors. In instrumental learning situations, the context can control behavior through its direct association with the reinforcer or punisher, through its hierarchical relation with response-outcome associations, or through its direct association (inhibitory or excitatory) with the response. In simple instrumental extinction and habit learning, the latter mechanism may play an especially important role.

Keywords: Extinction, Instrumental learning, Context, Interference

One can think of extinction, the decline in learned responding that occurs when a Pavlovian conditioned stimulus (CS) or an instrumental (operant) behavior occurs without a reinforcer, as an example of a general retroactive interference process that operates whenever new learning conflicts with old (e.g., Bouton, 1991; Miller, Kasprow, & Schachtman, 1986; Spear, 1981). For example, Bouton (1993) reviewed the literature on a number of “interference paradigms” in Pavlovian conditioning (i.e., paradigms in which a Pavlovian CS is associated with different outcomes in different phases of an experiment, as in extinction, counterconditioning, discrimination reversal, latent inhibition, and others). He noted that they had a great deal in common. Across the different paradigms, the evidence suggested that learning from the first phase was largely retained through the second, and that performance at any point was determined by which of the two available associations (or memories) was retrieved by background contextual cues. Consistent with this view, context seemed to play a fundamental role in each of the interference paradigms. For example, ABA renewal effects, in which Phase-1 performance returns when the subject is returned to the original context (A) after Phase 2 has been conducted in another one (B), had been demonstrated in all of them. And interestingly, where data were available, the passage of time after Phase 2 also led to spontaneous recovery effects. Bouton suggested a general conceptualization of interference as well as a general conceptualization of context, in which a variety of exteroceptive and interoceptive stimuli could play its fundamental role. For example, spontaneous recovery was understood as another renewal effect in which the role of “context” was played by cues correlated with the passage of time.
The effects of extinction (and other retroactive interference treatments) were thus relatively specific to the temporal context as well as the physical context in which they were learned. Finally, a review of the mechanisms by which contextual cues operated suggested that contexts did not merely function as simple CSs that were associated with the unconditioned stimulus (US), but instead often worked in a “hierarchical” way by retrieving or setting the occasion for the CS’s current association with the US (cf. Holland, 1992).

Most theoretical work on interference in animal learning has focused on research with Pavlovian conditioning procedures (see also Miller & Escobar, 2002; Polack, Jozefowiez, & Miller, 2017). In recent years, however, the field’s focus on extinction has expanded to include extinction in instrumental learning. The increased attention to operant learning is motivated partly by an interest in the general processes of behavior change, and partly by the fact that operant learning provides the method for studying voluntary behavior in the laboratory. Many real-world behavior problems, such as drug taking, smoking, overeating, or gambling, are problems with voluntary behavior; voluntary contact with the reinforcer is the defining feature of instrumental or operant learning. The purpose of the present article is thus to discuss extinction in instrumental learning from the perspective that it is a representative example of retroactive interference. In a rough parallel with the points made by Bouton (1993), the article asks whether instrumental extinction shares features with other forms of interference in instrumental learning, reviews new research on the extent to which the role of “context” can be played by different types of stimuli, and considers the fundamental behavioral mechanisms of contextual control. One especially important mechanism in instrumental learning may be that the context excites or inhibits the instrumental response directly.

Contextual control of instrumental extinction and other types of retroactive interference

Perhaps the key phenomenon that demonstrates the role of context in extinction is the renewal effect. Although renewal has been widely studied in Pavlovian conditioning, renewal actually had one of its earliest demonstrations in instrumental learning (Welker & McAuley, 1978). In that experiment, lever pressing in rats was renewed in its original context after extinction was conducted in a novel one. However, introduction of the novel context produced a massive disruption in lever press performance, and the contexts of acquisition and extinction were not counterbalanced, leaving some questions about interpretation. These issues were addressed by Nakajima, Tanaka, Urushihara, and Imada (2000), who demonstrated ABA renewal with less disruptive non-novel (and counterbalanced) contexts. The authors demonstrated renewal both in a free-operant method in which lever press responding was reinforced throughout experimental sessions and in a discriminated-operant method in which responding was reinforced when a 30-s light stimulus was on but not when it was off. ABA renewal has since been widely documented using drug self-administration procedures in which animals perform an operant response to earn drug reinforcers, including a mixture of heroin and cocaine (Crombag & Shaham, 2002), cocaine (e.g., Hamlin, Clemens, & McNally, 2007), heroin (e.g., Bossert, Liu, & & Shaham, 2004), and alcohol (e.g., Chaudhri, Sahuque, Cone, & Janak, 2008; Hamlin, Newby, & McNally, 2007). Reinstatement, in which extinguished responding recovers when the reinforcer is presented again after extinction, has also been widely studied (Shaham, Shalev, Lu, de Wit, & Stewart, 2003; see also Bouton, Winterbauer, & Vurbic, 2012). There is much overlap in what we know about extinction and relapse in Pavlovian learning and in instrumental drug self-administration (Bouton et al., 2012).

Although ABA renewal appears to be easy to produce in instrumental learning, the status of ABC and AAB renewal, two other forms of the renewal effect, was originally in doubt because of early failures to produce the AAB effect (Bossert et al., 2004; Crombag & Shaham, 2002; Nakajima et al., 2000). In the ABC and AAB designs, extinguished responding recovers in a new context that has never been associated with conditioning, suggesting that mere removal from the context of extinction is sufficient to renew the response. In ABC renewal, testing occurs in Context C after conditioning and extinction have been conducted in A and B, respectively; in AAB renewal, testing occurs in Context B after conditioning and extinction have both occurred in Context A. Recent work in my laboratory suggests that all three forms of renewal do indeed occur after extinction of instrumental responding reinforced with food pellets (Bouton, Vurbic, Todd, & Winterbauer, 2011; Todd, 2013; Todd, Winterbauer, & Bouton, 2012; Trask, Shipman, Green, & Bouton, 2017). Nakajima (2014) has also reported all three forms in an active shuttle-avoidance paradigm. And ABA, ABC, and AAB renewal have also been shown in studies with children diagnosed with autism spectrum disorder (Cohenour, Volkert, & Allen, 2018; Kelley, Jimenez-Gomez, Podlesnik, & Morgan, 2018; Kelley, Liddon, Ribeiro, Grief, & Podlesnik, 2015; Liddon, Kelley, Rey, Liggett, & Ribeiro, 2018). Although AAB and ABC renewal effects are often weaker than ABA renewal (e.g., Bouton et al., 2011; but see Todd, 2013; Todd et al., 2012), the overall picture suggests that the different forms of renewal do occur in several operant conditioning methods. The presence of ABC and AAB renewal suggests that extinction can be more context-dependent than conditioning is, much as it is in studies of Pavlovian conditioning.
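The ABA, ABC, and AAB labels simply record which phases share a context, with letters assigned in the order in which contexts first appear. As a minimal sketch of that naming convention (the function name `renewal_design` is hypothetical, and contexts are assumed to be represented by arbitrary text labels), the labeling rule can be written as:

```python
from typing import Dict


def renewal_design(acquisition: str, extinction: str, test: str) -> str:
    """Return the conventional label (e.g., "ABA") for the contexts used in
    acquisition, extinction, and testing.

    Letters are assigned in order of first appearance, so phases run in the
    same context share a letter.
    """
    letters: Dict[str, str] = {}
    label = ""
    for context in (acquisition, extinction, test):
        if context not in letters:
            letters[context] = "ABC"[len(letters)]
        label += letters[context]
    return label


# Hypothetical context labels; any descriptive strings would do.
print(renewal_design("chamber 1", "chamber 2", "chamber 1"))  # ABA
print(renewal_design("chamber 1", "chamber 2", "chamber 3"))  # ABC
print(renewal_design("chamber 1", "chamber 1", "chamber 2"))  # AAB
print(renewal_design("Time 1", "Time 2", "Time 3"))           # ABC
```

Passing the same label for all three phases returns "AAA", a common no-change comparison condition; treating successive points in time as contexts yields the ABC reading of spontaneous recovery.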

Although instrumental renewal has mostly been studied after extinction, it clearly does occur with other forms of instrumental retroactive interference. Nakajima, Urushihara, and Masaki (2002) reported ABA renewal after the operant response was suppressed by an omission (or negative punishment) contingency in which reinforcers were presented only if the subject had not lever pressed in the last 30 s. ABA renewal was also found after the reinforcer had been presented independently of the response during the response elimination phase (see also Rescorla, 2001). Bouton and Schepers (2014) reported that the resurgence effect—another relapse effect discussed in detail below that can be interpreted as a form of renewal—also survived omission procedures in which reinforcement only occurred if the rat refrained from making the target response for 45, 90, or 135 s. The results clearly suggest that renewal can occur even when reinforcement contingencies actively suppress or inhibit the response.
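The omission contingency can be made concrete by computing when reinforcers would be delivered for a given record of responses. The sketch below assumes a resetting schedule: a clock starts at session onset, every response restarts it, and a reinforcer is delivered whenever a full interval (30 s here) passes without a response, after which the clock restarts. That reset rule and the function name `dro_reinforcer_times` are illustrative assumptions, not details taken from the studies cited above.

```python
from typing import List, Sequence


def dro_reinforcer_times(
    response_times: Sequence[float],
    session_length: float,
    omission_interval: float = 30.0,
) -> List[float]:
    """Return the times (s) at which a resetting omission schedule would
    deliver reinforcers, given the times (s) of the subject's responses.

    Hypothetical helper. Assumptions: the clock starts at session onset,
    each response restarts it, and each reinforcer delivery restarts it.
    """
    if omission_interval <= 0:
        raise ValueError("omission_interval must be positive")
    responses = sorted(response_times)
    deliveries: List[float] = []
    interval_start = 0.0
    i = 0
    while interval_start + omission_interval <= session_length:
        due = interval_start + omission_interval
        # Skip responses that happened before the current interval began.
        while i < len(responses) and responses[i] < interval_start:
            i += 1
        if i < len(responses) and responses[i] < due:
            # A response inside the interval cancels the pending reinforcer
            # and restarts the clock from that response.
            interval_start = responses[i]
            i += 1
        else:
            # No response for a full interval: deliver a reinforcer.
            deliveries.append(due)
            interval_start = due
    return deliveries


print(dro_reinforcer_times([10, 50], session_length=120))  # [40.0, 80.0, 110.0]
```

Under this rule, a subject that responds at least once every 30 s never receives a reinforcer, which is why the contingency actively suppresses the response rather than merely withholding reinforcement.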

Recent research has also documented renewal after positive punishment, in which behavior is eliminated by pairing it with an aversive event like footshock (see Marchant, Campbell, Pelloux, Bossert, & Shaham, 2018, for a review). Punishment is arguably a better model than extinction of the cessation of drug taking in humans, because drug taking in the natural world is always reinforced (and never truly extinguished); humans may quit because they understand the negative consequences of their behavior. In the first published experiment on renewal after punishment, Marchant, Khuc, Pickens, Bonci, and Shaham (2013) reported an ABA effect: Rats that lever pressed for alcohol in Context A and were punished in Context B renewed their performance when returned to Context A. However, the study did not adequately rule out a role for simple fear conditioning of Context B, which the punishing shocks could have produced; contextual fear could have suppressed performance in B through a Pavlovian fear conditioning mechanism rather than an instrumental punishment mechanism. The issue was addressed by Bouton and Schepers (2015), who also found ABA renewal after punishment, but no effect in a yoked control group that received the same shocks as the punished group in a noncontingent manner (see also Pelloux et al., 2018). Renewal appeared complete after punishment in that the punished group renewed to a level that was not distinguishable from that of the control group. Bouton and Schepers also documented ABC renewal in a similar experiment. In addition, they found ABA renewal after punishment in an experiment using the design sketched in the lower section of Table 1. The experiment involved the training and punishment of two responses. R1 (e.g., lever pressing) was first reinforced in Context A during sessions that were intermixed with sessions in which R2 (e.g., chain pulling) was reinforced in Context B.
In the punishment phase, the responses were switched to the opposite context (R1 to B and R2 to A) and both were reinforced and punished there. (Punishment procedures typically involve continued reinforcement of the punished response.) Notice that Contexts A and B were equally associated with the reinforcer and the punisher in this design. Despite this, there was a clear renewal of responding when R1 was returned to Context A and R2 was returned to Context B. The results therefore could not have been due to differences in the contexts’ associations with reinforcement and punishment. Moreover, the same pattern was observed whether R1 and R2 were tested alone or with both manipulanda available at the same time. The results thus suggest not only that the effects of punishment are specific to their context, but also that the context can affect choice.

Table 1.

Designs of experiments that demonstrated ABA renewal after extinction (Todd, 2013) and punishment (Bouton & Schepers, 2015) when each context’s association with reinforcement and nonreinforcement or punishment was controlled.

| Procedure | Acquisition | Retroactive interference treatment | Test |
|---|---|---|---|
| Extinction | A: R1-food | A: R2-- | A: **R1**, R2 |
| | B: R2-food | B: R1-- | B: R1, **R2** |
| Punishment | A: R1-food | A: R2-food + shock | A: **R1**, R2 |
| | B: R2-food | B: R1-food + shock | B: R1, **R2** |

Note. A and B refer to contexts; -- refers to extinction. Both experimental designs were within-subject designs; thus, every subject received the treatments shown in each phase in an intermixed fashion. Bold indicates the response with the higher level during the test.

The punishment results just described provided a parallel with a prior experiment run in my laboratory that used the analogous design in extinction (Todd, 2013). The design and results of the extinction experiment are sketched in the upper part of Table 1. The results of the two experiments suggest that both extinction and punishment depend on the animal learning to inhibit a specific behavior in a specific context. The parallel is also supported by the fact that punished responding recovers if time elapses after punishment (spontaneous recovery; e.g., Estes, 1944) or if the original Phase-1 reinforcer is presented again in a response-independent manner after punishment (reinstatement; Panlilio, Thorndike, & Schindler, 2003). Of course, punishment entails exposure to a salient aversive stimulus and extinction does not, so there will inevitably be some differences between the two paradigms (e.g., Panlilio, Thorndike, & Schindler, 2005; see also Jean-Richard-Dit-Bressel, Killcross, & McNally, 2018).

Rescorla has reported other experiments, not involving punishment, in which an instrumental response in rats is associated with a second outcome in a second phase (see Rescorla, 2001, for one review). In Rescorla’s case, the two outcomes are reinforcers with similarly positive values, such as a food pellet and a drop of liquid sucrose. After first associating the response with pellet and then with sucrose (or the reverse), Rescorla has shown that when one reinforcer is devalued by pairing it with illness, and the response is then tested in extinction, devaluation weakens the response equally well regardless of whether the first or second reinforcer has been devalued (e.g., Rescorla, 1995, 1996a). The apparently equivalent effects of devaluing the first or second reinforcer associated with the response suggest that the first response-reinforcer association has been preserved through the interference treatment. And when time is allowed to elapse after a response has been associated with one and then the other reinforcer, responding spontaneously increases in strength (Rescorla, 1996b, 1997), as if an inhibitory process learned during Phase 2 weakens over time (a change in temporal context).

Another well-known retroactive interference effect studied in instrumental learning is discrimination reversal learning. Here, animals learn to make an instrumental response in the presence of one stimulus (X) but not in the presence of another (Y). In the reversal phase, responding is reinforced in Y but not in X. Most of the research on instrumental discrimination reversal has been done with paradigms that probably involve considerable Pavlovian control of the behavior. However, there is good evidence that discrimination reversal learning follows principles that are compatible with extinction. In a method developed by Spear (e.g., Spear, 1971), rats first learn to passively avoid the black compartment of a black-white box (B+/W−) because they are shocked when they move into it. They are then shocked in the white section instead (B−/W+) during a reversal phase. During testing, the rat is put in the white compartment, and the latency to escape it (to black) is measured. Long latencies suggest retrieval of B+/W−, whereas short latencies suggest retrieval of B−/W+. When the original and reversal phases are run in different contexts, a return to the first context causes a renewal of the Phase-1 performance (e.g., Spear, 1971; Spear, Smith, Bryan, Gordon, Timmons, & Chiszar, 1980). Thomas and his colleagues have run analogous experiments in pigeons (e.g., Thomas, McKelvie, & Mah, 1985; Thomas, McKelvie, Ranney, & Moye, 1981; Thomas, Moye, & Kimose, 1984). Here, the birds are reinforced for pecking one colored keylight and not another; in the second phase the contingencies are reversed. When the phases are conducted in different contexts, there is a renewal of first-phase performance on return to the first-phase context after Phase 2 (Thomas et al., 1981, 1984, 1985).
And in both the rat passive avoidance paradigm and pigeon reversal paradigm, spontaneous recovery effects can also be observed over time (e.g., Gordon & Spear, 1973; Spear et al., 1980; Thomas et al., 1980). As in extinction, learning from the first phase survives, and can be revealed by manipulations of context and time.

Two recent reports suggest that renewal can occur after two additional instrumental retroactive interference treatments. First, in a clinical study, Saini, Sullivan, Baxter, DeRosa, and Roane (2018) reduced “destructive” behavior in four children diagnosed with autism spectrum disorder using a differential reinforcement of alternative behavior (DRA) procedure. In that procedure, a therapist extinguished the destructive behavior in the clinic while reinforcing an alternative response. The destructive behaviors declined, but they were renewed when the children were returned home (to their caregivers rather than the therapists). Renewal may thus occur after a DRA procedure. Second, Kincaid and Lattal (2018) studied several relapse effects known to occur after extinction (renewal, reinstatement, and resurgence) after pigeons had stopped key pecking on a progressive ratio schedule. In that schedule, the response requirement for successive reinforcers was increased by a constant amount (e.g., fixed-ratio 10 to 20 to 30…). The increasing response requirement eventually caused the subjects to stop responding. If noncontingent reinforcers were then presented after the stoppage, the behavior returned (it was reinstated); if a second key was reinforced (after responding on the first key had stopped) and was then extinguished, the originally suppressed behavior resurged; and if a response key was reinforced while it was illuminated red (or green), and then reinforced on a progressive ratio schedule while it was green (or red), key pecking recovered after stoppage if the key was returned to the first color. Although the latter effect was labeled “renewal,” it is not clear that the label is appropriate, because key color was the stimulus that directly evoked responding, and not a context controlling responding to a separate stimulus.
Nevertheless, Kincaid and Lattal’s results suggest that “ratio strain” observed in progressive ratio schedules can be considered another interference effect controlled by factors that influence extinction.
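To make the progressive ratio schedule concrete, the sketch below generates the arithmetic progression in the example above (10, 20, 30, …) and counts how many reinforcers a fixed number of responses would earn. The start value, step size, and function names are illustrative assumptions, not parameters reported by Kincaid and Lattal (2018).

```python
from itertools import count, islice
from typing import Iterator


def progressive_ratio(start: int = 10, step: int = 10) -> Iterator[int]:
    """Yield the response requirement for each successive reinforcer:
    start, start + step, start + 2 * step, ...
    """
    for k in count():
        yield start + step * k


def reinforcers_earned(total_responses: int, start: int = 10, step: int = 10) -> int:
    """Count the reinforcers that a given number of responses would earn.

    Hypothetical helper; assumes start > 0, step >= 0, and that every
    response counts toward the current requirement.
    """
    earned = 0
    requirement = start
    remaining = total_responses
    while remaining >= requirement:
        remaining -= requirement  # responses spent completing this ratio
        earned += 1
        requirement += step       # the next ratio is larger by a constant
    return earned


print(list(islice(progressive_ratio(), 5)))  # [10, 20, 30, 40, 50]
print(reinforcers_earned(100))               # 4  (10 + 20 + 30 + 40 = 100)
print(reinforcers_earned(149))               # 4  (the fifth needs 50 more)
```

Because each requirement grows by a constant, the total number of responses needed for n reinforcers grows roughly with the square of n, so the cost per reinforcer rises steadily until responding stops.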

In general, the findings from a number of retroactive interference paradigms in instrumental learning support the idea that the results with extinction generalize to other forms of retroactive interference. In every paradigm studied, behavior change in Phase 2 does not necessarily destroy the original learning, and can instead involve an adjustment to behavior that appears relatively context-specific. Studies of instrumental extinction may thus be tapping into a fairly general instrumental retroactive interference process.

There are many kinds of contexts

As noted earlier, theoretical approaches to interference have emphasized the possible role of many kinds of contextual stimuli (e.g., Bouton, 1993). For example, in addition to the standard exteroceptive room and apparatus stimuli, interoceptive cues provided by drug states are known to control extinction performance (e.g., Bouton, Kenney, & Rosengard, 1990; Cunningham, 1979; Lattal, 2007); when extinction is conducted in the presence of a drug state, the extinguished response is renewed when tested outside that state. And as noted above, Bouton (1993) also emphasized that background temporal cues can play the role of context; thus, when conditioning occurs at Time 1, extinction at Time 2, and testing at Time 3, spontaneous recovery can be seen as an ABC renewal effect. This approach to spontaneous recovery is supported by the fact that retrieval cues for extinction reduce both spontaneous recovery and renewal (e.g., Brooks & Bouton, 1993, 1994) and by the fact that the temporal intervals between successive presentations of Pavlovian CSs can demonstrably exert contextual control over responding to the CS (e.g., Bouton & Garcia-Gutierrez, 2006; Bouton & Hendrix, 2011). More recent work on instrumental extinction supports extending the “context concept” even further.

Deprivation state as a context.

Schepers and Bouton (2017) recently reported that interoceptive states produced by hunger and satiety can control instrumental extinction. In one experiment, rats were trained to lever press for sucrose or sweet-fatty pellets while they had ad lib access to food, and were thus in a state of satiety. Once responding had stabilized, the response was extinguished over several sessions conducted while the rats were hungry (food-deprived for the previous 23 hr). When responding was then tested separately in both the satiated and hungry states, renewed responding was observed in the satiated state, suggesting an ABA renewal effect in which satiety played the role of Context A. Schepers and Bouton (2017) noted that renewed responding in the satiety context went against traditional notions about how behavior is motivated by hunger and satiety; it may also partly explain why dieters who learn to inhibit their eating while starving themselves on a diet might overeat again when they are satiated. Interestingly, an AAB effect (conditioning-extinction-testing while hungry-hungry-satiated) was not observed, perhaps because it was less able to override hunger’s possible prior association with feeding acquired in the rat’s previous experience. That the ABA effect was mediated by an interoceptive satiety/hunger cue, rather than by the presence or absence of food in the home cage before the experimental sessions, was further suggested by an experiment showing that the presence or absence of food in the home cage was, by itself, ineffective at signaling whether lever pressing would be reinforced. Deprivation level was also still an effective cue when the rats had no food in the home cage before either reinforced or nonreinforced sessions (they discriminated between 2-hr and 23-hr deprivation conditions).
The findings add to many results reported by Davidson and colleagues which suggest that hunger and satiety states can provide discriminative cues for Pavlovian conditioned responding (e.g., Davidson, 1993; Davidson, Kanoski, Tracy, Walls, Clegg, & Benoit, 2005; Kanoski, Walls, & Davidson, 2007; Sample, Jones, Hargrave, Jarrard, & Davidson, 2016). Motivational states may function as contexts.

Stress as a context.

Schepers and Bouton (2018) also asked whether stress created by a recent stressor can provide a kind of context. The question was worth asking because stress states (or negative affective states) are widely thought to precipitate relapse in smokers, drug users, and overeaters (e.g., Baker, Piper, McCarthy, Majeskie, & Fiore, 2004; Sinha, Shaham, & Heilig, 2011; Torres & Nowson, 2007). Perhaps consistent with this idea, there is an extensive laboratory literature suggesting that exposure to shock after extinction of cocaine or heroin self-administration can reinstate the extinguished response (e.g., Mantsch, Baker, Funk, Lê, & Shaham, 2016), although instrumental food seeking is notoriously difficult to “reinstate” with stress (e.g., Ahmed & Koob, 1997; Buczek, Le, Wang, Stewart, & Shaham, 1999). Many drugs of abuse create stressful effects (e.g., Sinha, 2008), whereas food reinforcers may be less stressful. The hypothesis was that if drug taking occurs in the context of stress and is inhibited in the absence of stress, a return to the stress state may cause an ABA renewal effect (see also Mantsch & Goeders, 1998, 1999). Schepers and Bouton tested this with food-motivated instrumental responding. In an initial experiment, rats were trained to lever press for sucrose pellets in sessions that immediately followed exposure to a stressor. The stressors varied from day to day to prevent habituation of the stress response (Hammack, Cheung, Rhodes, Schutz, Falls, Braas, & May, 2009). The response was then extinguished during sessions that were not preceded by stress. In final tests, responding was tested in sessions that followed exposure to a stressor and in sessions that did not. Stress was sufficient to cause recovery of the response if and only if stressors had preceded lever press training. If the same sequence of stressors was presented before extinction sessions instead of acquisition sessions, stressor exposure did not cause a renewal of responding.
And equally important, responding recovered if testing followed exposure to a stressor that had not itself preceded sessions of acquisition training. The results encourage the view that the stress context that controlled renewal was a general, presumably interoceptive, stress cue that was common to the different stressors. In total, the results suggest that stress can initiate relapse after instrumental extinction by providing a context that enables an ABA renewal effect.

The reinforcer context.

Another source of contextual control may be more discrete events that occur, perhaps repeatedly, in time. For example, a long tradition in learning theory has held that after-effects of recent reinforcers can provide a stimulus that exerts discriminative control over instrumental performance. This allows recent reinforcers to influence behavior as a kind of context. The most straightforward example of such an effect is reinstatement, in which an extinguished instrumental response recovers if the organism is given a few presentations of the reinforcer after extinction (e.g., Baker, 1990; Franks & Lattal, 1976; Reid, 1958; Rescorla & Skucy, 1969). Although reinforcer presentations can have a number of effects that might account for reinstatement, the possibility that the reinforcer acts as a context is suggested by a number of results. For example, response-independent presentations of the reinforcer during extinction can abolish the ability of free reinforcers to reinstate the extinguished response (e.g., Baker, 1990; Rescorla & Skucy, 1969; Winterbauer & Bouton, 2011); making the reinforcers a feature of extinction (as well as conditioning) reduces their power to cue conditioning. In addition, Ostlund and Balleine (2007) established that specific reinforcers can set the occasion for specific responses that follow them, and thus cause a specific reinstatement effect when they are presented after extinction. Thus, at least part of the reinstatement effect may be controlled by the reinforcer acting as a context and enabling another ABA renewal effect. (For a second behavioral mechanism that can yield reinstatement, see Baker, Steinwald, & Bouton, 1991.) A variety of other experiments also suggest that recent reinforcers can play the role of context (e.g., Bouton, Rosengard, Achenbach, Peck, & Brooks, 1993).

Recent research on resurgence, another response-recovery paradigm that I have mentioned occasionally above, also suggests the importance of recent reinforcers as a context. In this paradigm, one instrumental behavior (R1) is first reinforced and then extinguished in a second phase while a new, alternative behavior (R2) is reinforced. Although R1 is suppressed by this DRA treatment, it can recover (resurge) when reinforcers for R2 are then omitted (e.g., Leitenberg, Rawson, & Bath, 1970). Resurgence is, of course, more evidence that DRA does not produce permanent behavior change (see also Saini et al., 2018). Recall that DRA treatments are widely used in the treatment of problem behaviors in individuals with autism or developmental disabilities; the implication of resurgence is that the response may reappear when reinforcement of the alternative behavior is merely discontinued. My students and I have argued that resurgence may occur because discontinuing the reinforcers for R2 changes the context, and thus allows extinguished R1 to renew. When we have compared procedures that make reinforcers contingent or not contingent on R2 in the treatment phase, we find the same amount of resurgence when the reinforcers are then discontinued (e.g., Winterbauer & Bouton, 2010). It therefore seems that the removal of the Phase-2 reinforcers is the key event causing resurgence. Thus, on the context view, the reinforcers delivered in Phase 2 provide the context for R1 extinction, and their removal in the resurgence test causes an ABC renewal of R1.

A variety of evidence is consistent with this account of resurgence (see Trask et al., 2015, for one review). Its most basic implication is that procedures that make the contextual conditions consistent across treatment and testing should reduce or defeat the resurgence effect. A number of experiments have tested this by modifying the treatment procedure to make it more similar to the conditions of testing. In this way, extinction of R1 is learned under conditions that more closely match the testing conditions. As one example, “leaner” reinforcement schedules for R2, which deliver fewer reinforcers per minute than “richer” ones, reduce the amount of final resurgence observed (e.g., Bouton & Trask, 2016; Smith, Smith, Shahan, Madden, & Twohig, 2017; Sweeney & Shahan, 2013). Theoretically, the animal learns to inhibit R1 in the absence of reinforcers, the condition that prevails during testing. Similarly, “thinning” the reinforcement schedules for R2 over sessions, so that the schedules start rich and become lean, also reduces resurgence (e.g., Schepers & Bouton, 2015; Sweeney & Shahan, 2013; Winterbauer & Bouton, 2012). “Reverse thinning” (going from lean to rich) also reduces resurgence (e.g., Schepers & Bouton, 2015; see also Bouton & Schepers, 2014), although perhaps not to the level of a standard thinning procedure. And alternating between sessions in which R2 is reinforced and sessions in which it is extinguished can reduce resurgence in a final extinction test, compared with groups that have R2 consistently reinforced, even at the same overall average rate (Schepers & Bouton, 2015). In a recent extension of this result, Trask, Keim, and Bouton (2018) found that the resurgence that followed a 20-session treatment phase was abolished by the alternating treatment. Interestingly, the size of the resurgence effects that occurred during the repeated extinction sessions also diminished over training (see also Schepers & Bouton, 2015).
All of the results are consistent with the idea that letting the client learn to suppress a problematic R1 under conditions that resemble the relapse testing conditions (i.e., the discontinuation of reinforcement of the alternative behavior) will reduce resurgence after a DRA treatment.

A second kind of test of the context account of resurgence has been to do the reverse, i.e., make the conditions at testing more similar to those during treatment. Bouton and Trask (2016) first reinforced R1 with one type of outcome (O1, a sucrose or grain-based pellet) and then extinguished R1 while R2 was reinforced with the other outcome (O2). When R2 was then extinguished, substantial resurgence was observed. However, a group that received O2 pellets response-independently during the test showed no resurgence at all (see also Lieving & Lattal, 2003), whereas a group that received O1 pellets at the same rate resurged to the same level as controls. Thus, presentation of the reinforcer that was earned while R1 was being inhibited, but not another reinforcer, suppressed the resurgence effect. In a related experiment, Trask et al. (2018) first reinforced R1 with O1 and then introduced two types of alternating sessions, in which (a) R1 was extinguished while R2 was reinforced with O2 and (b) R2 was reinforced with O3. R1 was not extinguished in the latter sessions (the lever manipulandum was removed). Here, presenting the O2 reinforcer during testing again abolished resurgence, whereas presenting the O3 reinforcer, which was equally familiar but had not been associated with R1's extinction, did not. Combined with the experiments described in the preceding paragraph, these results suggest that resurgence depends on a contextual change between response elimination and extinction testing. They are not anticipated by other explanations of resurgence that do not emphasize the discriminative, or contextual, effects of reinforcers (Shahan & Sweeney, 2011; Shahan & Craig, 2017).

Other discrete cues as contexts.

One might expect that discrete cues other than reinforcers can also play the role of context, and there is evidence that they can. For example, in experiments on Pavlovian appetitive conditioning, a neutral brief stimulus presented during extinction sessions can reduce spontaneous recovery (Brooks & Bouton, 1993), renewal (Brooks & Bouton, 1994), or reinstatement of responding to the CS (Brooks & Fava, 2017) if it is presented again just before the test. Tests of the properties of effective retrieval cues suggested that they were not simple conditioned inhibitors (Brooks, 2000; Brooks & Bouton, 1993, 1994). The authors argued that the cues were instead encoded as a feature of the extinction context, and thus served to reintroduce that context at the time of the test. Comparable results have been reported in instrumental extinction, where such retrieval cues have likewise reduced renewal (Nieto, Uengoer, & Bernal-Gamboa, 2017; Willcocks & McNally, 2014) as well as spontaneous recovery and reinstatement (Bernal-Gamboa, Gámez, & Nieto, 2017).

Interestingly, Willcocks and McNally (2014) found that the cue was ineffective at slowing the rapid reacquisition that occurred when the response was again paired with the reinforcer after extinction. Working in my laboratory, Trask (2019) also found that a brief, neutral audiovisual cue presented during treatment sessions in the resurgence paradigm had little effect when it was presented during the resurgence test (Experiment 1b). However, if that cue was paired with R2's reinforcer in the treatment phase, it attenuated resurgence when it was presented during the test. Importantly, the cue had to be associated with the reinforcer during sessions in which R1 was being extinguished; a cue merely associated with a reinforcer did not work. This may explain why a stimulus associated with the reinforcer during both R1 acquisition and extinction can fail to weaken resurgence significantly (Craig, Browning, & Shahan, 2017). Trask suggested that when a neutral cue is associated with a reinforcer, it may attract more attention (e.g., Mackintosh, 1975) and therefore better serve as a contextual cue associated with R1's extinction. Such an attentional boost may be necessary in paradigms like resurgence and rapid reacquisition (Willcocks & McNally, 2014), in which the rat is otherwise earning attention-grabbing reinforcers during the treatment or test phase. The overarching point, though, is that discrete cues can serve as contextual cues, which further supports the value of taking a broad view of "context."

The response context.

A final kind of "cue" that may play the role of context is provided by prior behaviors. One can reach this conclusion from recent research on behavior chains, which have attracted the attention of behavioral pharmacologists (e.g., LeBlanc, Ostlund, & Maidment, 2012; Olmstead, Parkinson, Miles, Everitt, & Dickinson, 2000; Zapata, Minney, & Shippenberg, 2010). Behavior chains capture the idea that many behaviors, like drug taking or smoking, are embedded in a sequence of other behaviors. Thus, users must minimally "seek" (or procure or purchase) a drug before they can "take" (or consume) it. In a discriminated heterogeneous chain, the first and second responses are each occasioned by their own auditory or visual stimulus (see Thrailkill & Bouton, 2016a, 2017, for reviews). Thus, presentation of S1 sets the occasion for R1 (e.g., chain pulling), which eventually earns presentation of S2, which in turn occasions R2 (e.g., lever pressing) and the delivery of a primary reinforcer (a food pellet). The fact that procurement (R1) and consumption (R2) each occur in the presence of a separate stimulus is meant to capture a feature of real-world drug-taking or smoking chains, although it is worth noting that with our current methods the rats complete the entire chain within a few seconds.

Several facts about the discriminated chain have been identified. First, after training the S1-R1-S2-R2+ chain, presenting S1 leads the rat to perform R1, and presenting S2 leads it to perform R2. Thus, the explicit discriminative stimuli are indeed involved in the control of each behavior. More interestingly, if R1 is extinguished separately from the chain (by presenting S1 and allowing the rat to make R1 without a consequence), R1 is weakened, but so is R2, as revealed in tests of S2-R2 (Thrailkill & Bouton, 2015a). Conversely, if R2 is extinguished separately from the chain (by presenting S2 and allowing the rat to make R2 without a consequence), both R2 and R1 are weakened (Thrailkill & Bouton, 2016b). These effects are specific to responses associated in a chain: if the rat learns two separate chains, extinction of R1 affects only the associated R2, and extinction of R2 affects only the associated R1. Thus, an important thing learned in the chain is an association between R1 and R2. In a sense, R1 is part of the "context" for R2.

The latter idea is further supported by the discovery of a new kind of renewal effect. The idea behind it is illustrated in Table 2. Thrailkill, Trott, Zerr, and Bouton (2016) first trained the S1-R1-S2-R2+ chain and then extinguished R2 on its own (by S2-R2-). This resulted in the reliable suppression of R2. As illustrated in the table, Thrailkill et al. then tested S2-R2 after a return to the chain (i.e., S2-R2 was presented after S1-R1), after S1 only (the manipulandum that supported R1 was removed), or on its own. R2 was renewed when it was returned to the chain and now preceded again by S1-R1-S2. R1 was the crucial element, however. When S2-R2 was preceded by S1 without R1, there was no renewal of R2. An additional two-chain experiment showed that R2 was only renewed by the specific response that it had been trained with in a chain. And in newer experiments that are still in progress, we have found that elimination of R1 through extinction (to a point where it does not weaken R2 alone) can abolish the chain renewal effect. Once again, the renewal effect depends on the rat’s prior performance of R1—a contextual cue for R2.

Table 2.

The response context. Design of an experiment demonstrating renewal of a response by return to the context of a preceding response (Thrailkill, Trott, Zerr, & Bouton, 2016).

| Group | Acquisition | Extinction | Test |
|---|---|---|---|
| Renew after R1 | S1-R1-S2-R2+ | S2-R2-- | S1-R1-S2-**R2** |
| Renew after S1 | S1-R1-S2-R2+ | S2-R2-- | S1-S2-R2 |
| Control | S1-R1-S2-R2+ | S2-R2-- | S2-R2 |

Note. S1 and S2 are different auditory and visual stimuli; R1 and R2 are different responses (lever pressing and chain pulling). + = reinforcement; -- = nonreinforcement. Bold at right indicates the only group that showed renewal of the tested R2 response.

Other experiments on heterogeneous discriminated chains have provided another tentative insight into contextual control. Thrailkill et al. (2016) trained the usual S1-R1-S2-R2+ chain in physical Context A, one of our usual sets of Skinner boxes, and then tested S1-R1 and S2-R2 separately in Context A and in Context B, a familiar but different physical context in which the chain had not been trained. The context switch weakened R1, just as it does with other discriminated operants (Bouton, Todd, & León, 2014). But the switch from A to B had no effect on R2, as if the physical context were irrelevant to controlling R2. Remarkably, though, when Thrailkill et al. tested the whole chain (S1-R1-S2-R2-) in Contexts A and B, the context switch weakened both R1 and R2. The new effect on R2 apparently occurred because the switch had weakened the R1 response that preceded it. The pattern is entirely sensible from the point of view that R1, and not Context A, is the context for R2. R1 may thus have blocked or overshadowed Context A's control of R2 during training of the chain.

Mechanisms of contextual control

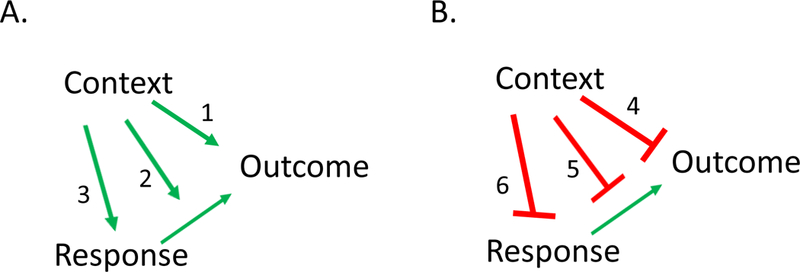

Research on the contextual control of instrumental behavior and extinction has also been directed at the behavioral mechanisms underlying contextual control (see Trask, Thrailkill, & Bouton, 2017, for a review). Several possibilities have been considered, and they are sketched in Figure 1 as they might occur in a simple free-operant situation (see also Bouton & Todd, 2014). As a guide to interpretation of the figure, in a simple ABA renewal experiment, Context A might excite responding through any of the associative links sketched in Panel A, and Context B might inhibit responding through any of the links sketched in Panel B. The role of some form of inhibition in Context B is suggested by effects like ABC and AAB renewal, which indicate that mere removal from the extinction context is sufficient to cause responding to return (e.g., Bouton et al., 2011; Nakajima, 2014; Todd, 2013).

Figure 1.

Possible mechanisms by which a context might control a free operant response. A. Excitatory contextual control: An excitatory association between the context and reinforcing outcome (1), positive occasion setting by the context (2), and direct (habitual) evocation of the response by context (3). B. Inhibitory contextual control (as in an extinction context): An inhibitory association between the context and outcome (4), negative occasion setting by the context (5), and direct inhibition of the response by context (6).

Direct context-reinforcer associations.

In the basic ABA renewal design, a simple possibility is that Context A excites instrumental responding because it is directly associated with the reinforcer (Link 1 in Figure 1); Context B might analogously inhibit instrumental responding because of its direct inhibitory association with the reinforcer (Link 4 in Figure 1). Such Pavlovian context-reinforcer associations might excite or inhibit the instrumental response through mechanisms that have been discussed in the literature on Pavlovian-instrumental transfer (e.g., Holmes, Marchand, & Coutureau, 2010). We know that direct excitatory associations between a context and a reinforcer can indeed excite an instrumental response (Baker et al., 1991; Pearce & Hall, 1979). However, contextual control of instrumental behavior often involves more than this. Todd (2013) observed ABA, ABC, and AAB renewal in experiments that controlled for the contexts’ direct associations with the reinforcer. One example of Todd’s experiments is the design for ABA renewal that was illustrated in Table 1 and discussed above. Recall that R1 and R2 were renewed in contexts that had been treated equivalently with the other response. Such results, along with results of the analogous punishment experiment shown in the lower half of Table 1 (Bouton & Schepers, 2015), strongly suggest that other mechanisms besides the context’s direct associations with significant outcomes can play a role in contextual control.

Occasion setting by context.

A second possibility is that the contexts "set the occasion" for an R-O relationship rather than merely enter into an association with O. The occasion-setting concept is illustrated in Links 2 and 5 of Figure 1. It is notable that research in the 1980s and 1990s suggested a role for occasion setting by context in the control of Pavlovian extinction, where the idea is that contexts enable or retrieve CS-US relations, e.g., Context➜(S-O) (see Trask et al., 2017). We know that contexts can operate in an occasion-setting way in instrumental learning under some conditions. Trask and Bouton (2014) reported the experiment illustrated in Table 3, which was inspired by an analogous earlier experiment by Colwill and Rescorla (1990) that used discriminative stimuli instead of contexts. Here, two responses (R1 and R2) were associated with different pellet outcomes (O1 and O2) in two different contexts. In Context A, R1 produced O1 and R2 produced O2. In Context B, the reverse was true: R1 produced O2 and R2 produced O1. The possibility that Context A set the occasion for R1-O1 and R2-O2, and Context B set the occasion for R1-O2 and R2-O1, was confirmed by the results of a reinforcer devaluation treatment. As shown in Table 3, O2 was paired with illness from an injection of lithium chloride (LiCl) so that the animals came to reject it completely. When R1 and R2 were subsequently tested in Contexts A and B, the rats suppressed R2 relative to R1 in A, and R1 relative to R2 in B; in each context, they suppressed the response that had earned the devalued O2 there. This pattern could only have come about if the animals had learned the different R-O relationships in the two contexts. Thus, contexts can indeed set the occasion for different R-O associations, and thereby control responding "hierarchically," in the manner sketched in Links 2 and 5.

Table 3.

Design of an experiment demonstrating occasion setting of instrumental responding by the context (Trask & Bouton, 2014).

| Acquisition | Reinforcer Devaluation | Test |
|---|---|---|
| A: R1-O1, R2-O2 | A: O2-Illness | A: R1, R2 |
| B: R1-O2, R2-O1 | B: O2-Illness | B: R1, R2 |

Note. A and B refer to different contexts; R1 and R2 refer to different responses; O1 and O2 are different food pellets (grain and sucrose). Illness was created by lithium chloride injection. This is a within-subject design; thus, every subject received the treatments shown in each phase in an intermixed fashion.

On the other hand, the type of occasion setting just discussed might not develop naturally when the rat solves a simpler context discrimination, as in simple ABA renewal. One testable characteristic of Pavlovian occasion setting is that the ability of one occasion setter to influence a particular target CS can "transfer" and influence another target CS trained in another similar occasion-setting relationship (e.g., Holland & Coldwell, 1993; Morell & Holland, 1997). Todd (2013) asked whether something analogous occurs in instrumental learning: does the ability of a context to inhibit one response (e.g., lever pressing) transfer to, and inhibit, a separate but similarly trained response (e.g., chain pulling)? It did not; extinction of a response in one context did not suppress performance of another response when it was tested there after a similar prior treatment (see also Bouton, Trask, & Carranza-Jasso, 2016). Thus, as implied (but not quite proven) by the results of the designs sketched in Table 1, inhibition of R1 and R2 in a given context can occur quite independently, with little evidence of transfer between them. Such results suggest that the animal might learn to inhibit a specific response in a specific context during simple extinction or punishment.

Direct associations between the context and the response.

The third possibility sketched in Figure 1 is that the context might evoke or inhibit an instrumental response through a direct excitatory or inhibitory association with the response (Links 3 and 6). Bouton et al. (2016) reported a number of experiments in which rats were reinforced for performing R1 in the presence of two discriminative stimuli and R2 in the presence of two others (S1R1+, S2R1+, S3R2+, S4R2+). A key and highly replicable finding was that when R1 was extinguished in S1, it was inhibited when tested in either S1 or S2, whereas R2 was not inhibited when tested in S3, again suggesting a high degree of specificity of inhibition to the particular response. In another experiment, a single response (lever pressing) was associated with one outcome (e.g., sucrose pellet) in S1 and a different outcome (e.g., grain pellet) in S2. When the response was extinguished in S1, it was inhibited in both S1 and S2, even though the R-O relationship it entered into in S2 involved a different outcome. Such a result may further suggest that extinction can cause direct inhibition of the response (Link 6) rather than inhibition of a specific R-O relationship (Link 5). Rescorla (e.g., 1993, 1997a) had previously suggested such a possibility (see also Colwill, 1991), but had not necessarily separated Link 5 from Link 6. However, the newer set of results (Todd, 2013; Bouton et al., 2016; see also Todd et al., 2014) begins to suggest that the animal might indeed simply learn to inhibit the response when the response is extinguished in a specific context. "Stop making the response in this context" is all the animal really needs to learn.

The idea that animals might learn direct context-response associations receives additional support from other studies of contextual control. A number of experiments have established that a context switch after instrumental training can weaken the instrumental response, whether it is lever pressing, chain pulling, or nose poking (e.g., Bouton et al., 2014; Trask & Bouton, 2018), and whether the response is occasioned by a discriminative stimulus (Bouton et al., 2014) or occurs freely, without such a stimulus (e.g., Bouton et al., 2011; Thrailkill & Bouton, 2015b). This context specificity of instrumental responding could potentially be accommodated by any of the links in Panel A of Figure 1. However, after excluding a role for Link 1, Thrailkill and Bouton (2015b) attempted to distinguish between Links 2 and 3. In one experiment, lever pressing was extensively trained so that the response became "habitual" (e.g., Adams, 1982). That is, when the reinforcer was devalued by pairing it with LiCl, the rats continued to perform the response as if they were not processing the value of O or the relationship between the behavior and O. The response was thus a habit rather than a goal-directed action (e.g., Corbit, 2018; Dickinson, 1985). Importantly, it was weakened when the context was changed. In contrast, when the response received less training, it had the status of a goal-directed action rather than a habit: rats that had an aversion conditioned to the reinforcer suppressed their responding relative to control rats that did not. Importantly, the size of that reinforcer devaluation effect (the difference between responding in rats that had the taste aversion vs. those that did not) was not weakened by a change of context, suggesting that the animal's knowledge of the R-O relation was equally strong in Contexts A and B. The results thus suggested that the context did not control the R-O relation (i.e., through the occasion-setting Link 2), but instead controlled the direct evocation of R (as in Link 3). We take this to be the complement of the direct inhibitory context-R association that the extinction results described above suggest the animal might learn (Link 6).

Conclusion.

It appears that the context can control instrumental behavior via several mechanisms. We find it especially interesting, however, that in simple extinction situations the context seems to inhibit R directly, rather than work via the negative occasion-setting mechanism that has proved to be important in Pavlovian learning. Even there, the context may enter into several types of associations, although direct inhibition of the response seems to dominate.

Summary and conclusions

The research reviewed here suggests that studies of extinction in instrumental learning can inform our understanding of a fairly general retroactive interference process. Within the domain of instrumental learning, extinction has parallels with other paradigms that cause behavioral suppression, such as omission learning, punishment, second-outcome learning, discrimination reversal learning, and DRA. In all cases, learning from Phase 1 can be retained through Phase 2, and behavior may be controlled or selected by the current context. In this way, the instrumental paradigms have much in common with the so-called Pavlovian interference paradigms, such as extinction, counterconditioning, reversal learning, and latent inhibition (e.g., Bouton, 1993). The potential generality across the instrumental and Pavlovian paradigms further encourages the idea that the various kinds of contexts discussed here (physical apparatus or room, time, deprivation state, stress state, reinforcers, other discrete events, and prior behaviors) might play a broad role in influencing interference.

The mechanisms of contextual control that were reviewed in the third section of this article (context-reinforcer associations, contextual occasion setting, and context-response associations) may also be generally relevant, especially in the instrumental paradigms. However, it is worth noting that the role played by direct context-response associations may be relatively unique to instrumental learning situations. For example, in instrumental learning, extinction of a response in one discriminative stimulus (S) affects the response in other Ss (Bouton et al., 2016; Todd et al., 2014), whereas in Pavlovian conditioning an analogous effect might occur only under special conditions (Vurbic & Bouton, 2011). In addition, although the instrumental response is weakened by a context change after conditioning (e.g., Bouton et al., 2011, 2014; Thrailkill & Bouton, 2015b; Trask & Bouton, 2018), Pavlovian responses seem to be less affected by context change; that is, responding to a CS often transfers almost perfectly across contexts (see Rosas, Todd, & Bouton, 2013, for a review). If the control of the response by the context in instrumental learning is due to the context's direct association with the response (Thrailkill & Bouton, 2015b), this tentative difference between instrumental and Pavlovian responding further suggests that direct context-response learning may be a mechanism of contextual control that is relatively unique to instrumental learning, and thus to instrumental interference.

Acknowledgments

Preparation of this article was supported by Grant RO1 DA 033123 from NIH. I thank Catalina Rey, Scott Schepers, Mike Steinfeld, Eric Thrailkill, Travis Todd, and Sydney Trask for comments.

Footnotes

For Psychopharmacology special issue on Extinction.

The author declares no conflicts of interest.

References

- Adams CD (1982). Variations in the sensitivity of instrumental responding to reinforcer devaluation. Quarterly Journal of Experimental Psychology, 34B, 77–98. [Google Scholar]

- Ahmed SH, & Koob GF (1997). Cocaine-but not food-seeking behavior is reinstated by stress after extinction. Psychopharmacology, 132, 289–295. [DOI] [PubMed] [Google Scholar]

- Baker AG (1990). Contextual conditioning during free-operant extinction: Unsignaled, signaled, and backward-signaled noncontingent food. Animal Learning & Behavior, 18, 59–70. [Google Scholar]

- Baker AG, Steinwald H, & Bouton ME (1991). Contextual conditioning and reinstatement of extinguished instrumental responding. The Quarterly Journal of Experimental Psychology, 43B, 199–218. [Google Scholar]

- Baker TB, Piper ME, McCarthy DE,Majeskie MR, & Fiore MC (2004). Addiction motivation reformulated: An affective processing model of negative reinforcement. Psychological Review, 111, 33–51. [DOI] [PubMed] [Google Scholar]

- Bernal-Gamboa R, Gámez AM, & Nieto J (2017). Reducing spontaneous recovery and reinstatement of operant performance through extinction-cues. Behavioural Processes, 135, 1–7. [DOI] [PubMed] [Google Scholar]

- Bossert JM, Liu SY, Lu L, & Shaham Y (2004). A role of ventral tegmental area glutamate in contextual cue-induced relapse to heroin seeking. The Journal of Neuroscience, 24, 10726–10730. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bouton ME (1991). Context and retrieval in extinction and in other examples of interference in simple associative learning. In Dachowski L & Flaherty CF (Eds.), Current topics in animal learning: Brain, emotion, and cognition (pp. 25–53). Hillsdale, NJ: Lawrence Erlbaum. [Google Scholar]

- Bouton ME (1993). Context, time, and memory retrieval in the interference paradigms of Pavlovian learning. Psychological Bulletin, 114, 80–99. [DOI] [PubMed] [Google Scholar]

- Bouton ME, & García-Gutiérrez A (2006). Intertrial interval as a contextual stimulus. Behavioural Processes, 71, 307–317. [DOI] [PubMed] [Google Scholar]

- Bouton ME, & Hendrix MC (2011). Intertrial interval as a contextual stimulus: Further analysis of a novel asymmetry in temporal discrimination learning. Journal of Experimental Psychology: Animal Behavior Processes, 37, 79–93. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bouton ME, Kenney FA, & Rosengard C (1990). State-dependent fear extinction with two benzodiazepine tranquilizers. Behavioral Neuroscience, 104, 44–55. [DOI] [PubMed] [Google Scholar]

- Bouton ME, Rosengard C, Achenbach GG, Peck CA, & Brooks DC (1993). Effects of contextual conditioning and unconditional stimulus presentation on performance in appetitive conditioning. The Quarterly Journal of Experimental Psychology, 41B, 63–95. [PubMed] [Google Scholar]

- Bouton ME, & Schepers ST (2014). Resurgence of instrumental behavior after an abstinence contingency. Learning & Behavior, 42, 131–143. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bouton ME, & Schepers ST (2015). Renewal after the punishment of free operant behavior. Journal of Experimental Psychology: Animal Learning and Cognition 41, 81–90. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bouton ME, Todd TP, & León SP (2014). Contextual control of discriminated operant behavior. Journal of Experimental Psychology: Animal Learning and Cognition, 40, 92–105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bouton ME, Winterbauer NE, & Vurbic D (2012). Context and extinction: Mechanisms of relapse in drug self-administration. In Haselgrove M & Hogarth L (Eds.), Clinical applications of learning theory (pp. 103–133). East Sussex, UK: Psychology Press. [Google Scholar]

- Bouton ME, & Todd TP (2014). A fundamental role for context in instrumental learning and extinction. Behavioural Processes, 104, 13–19. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bouton ME, Todd TP, Vurbic D, & Winterbauer NE (2011). Renewal after the extinction of free operant behavior. Learning & Behavior, 39, 57–67. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bouton ME, & Trask S (2016). Role of the discriminative properties of the reinforcer in resurgence. Learning & Behavior, 44, 137–150. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bouton ME, Trask S, & Carranza-Jasso R (2016). Learning to inhibit the response during instrumental (operant) extinction. Journal of Experimental Psychology: Animal Learning and Cognition, 42, 246–258. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brooks DC (2000). Recent and remote extinction cues reduce spontaneous recovery. The Quarterly Journal of Experimental Psychology Section B: Comparative and Physiological Psychology, 53, 25–58. [DOI] [PubMed] [Google Scholar]

- Brooks DC, & Bouton ME (1993). A retrieval cue for extinction attenuates spontaneous recovery. Journal of Experimental Psychology: Animal Behavior Processes, 19, 77–89. [DOI] [PubMed] [Google Scholar]

- Brooks DC, & Bouton ME (1994). A retrieval cue attenuates response recovery (renewal) caused by a return to the conditioning context. Journal of Experimental Psychology: Animal Behavior Processes, 20, 366–379. [Google Scholar]

- Brooks DC, & Fava DA (2017). An extinction cue reduces appetitive Pavlovian reinstatement in rats. Learning and Motivation, 58, 59–65. [Google Scholar]

- Buczek Y, Le AD, Wang A, Stewart J, Shaham Y (1999). Stress reinstates nicotine seeking but not sucrose solution seeking in rats. Psychopharmacology, 144, 183–188. [DOI] [PubMed] [Google Scholar]

- Chaudri N, Sahuque LL, Cone JJ, & Janak PH (2008). Reinstated ethanol-seeking in rats is modulated by environmental context and requires the nucleus accumbens core. European Journal of Neuroscience, 28, 2288–2298. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cohenour JM, Volkert VM, & Allen KD (2018). An experimental demonstration of AAB renewal in children with autism spectrum disorder. Journal of the Experimental Analysis of Behavior, in press. [DOI] [PubMed]

- Colwill RM (1991). Negative discriminative stimuli provide information about the identity of omitted response-contingent outcomes. Animal Learning & Behavior, 19, 326–336. [Google Scholar]

- Colwill RM, & Rescorla RA (1990). Evidence for the hierarchical structure of instrumental learning. Animal Learning & Behavior, 18, 71–82.

- Corbit LH (2018). Understanding the balance between goal-directed and habitual behavioral control. Current Opinion in Behavioral Sciences, 20, 161–168.

- Craig AR, Browning KO, & Shahan TA (2017). Stimuli previously associated with reinforcement mitigate resurgence. Journal of the Experimental Analysis of Behavior, 108, 139–150.

- Crombag HS, & Shaham Y (2002). Renewal of drug seeking by contextual cues after prolonged extinction in rats. Behavioral Neuroscience, 116, 169–173.

- Cunningham CL (1979). Alcohol as a cue for extinction: State dependency produced by conditioned inhibition. Animal Learning & Behavior, 7, 45–52.

- Davidson TL (1993). The nature and function of interoceptive signals to feed: toward integration of physiological and learning perspectives. Psychological Review, 100, 640–657.

- Davidson TL, Kanoski SE, Tracy AL, Walls EK, Clegg D, & Benoit SC (2005). The interoceptive cue properties of ghrelin generalize to cues produced by food deprivation. Peptides, 26, 1602–1610.

- Dickinson A (1985). Actions and habits: the development of behavioural autonomy. Philosophical Transactions of the Royal Society of London B: Biological Sciences, 308, 67–78.

- Estes WK (1944). An experimental study of punishment. Psychological Monographs: General and Applied, 57, i–40.

- Franks GJ, & Lattal KA (1976). Antecedent reinforcement schedule training and operant response reinstatement in rats. Animal Learning & Behavior, 4, 374–378.

- Gordon WC, & Spear NE (1973). Effect of reactivation of a previously acquired memory on the interaction between memories in the rat. Journal of Experimental Psychology, 99, 349–355.

- Hamlin AS, Clemens KJ, & McNally GP (2008). Renewal of extinguished cocaine-seeking. Neuroscience, 151, 659–670.

- Hamlin AS, Newby J, & McNally GP (2007). The neural correlates and role of D1 dopamine receptors in renewal of extinguished alcohol-seeking. Neuroscience, 146, 525–536.

- Hammack SE, Cheung J, Rhodes KM, Schutz KC, Falls WA, Braas KM, & May V (2009). Chronic stress increases pituitary adenylate cyclase-activating peptide (PACAP) and brain-derived neurotrophic factor (BDNF) mRNA expression in the bed nucleus of the stria terminalis (BNST): Roles for PACAP in anxiety-like behavior. Psychoneuroendocrinology, 34, 833–843.

- Holland PC (1992). Occasion setting in Pavlovian conditioning. In Medin DL (Ed.), The psychology of learning and motivation (Vol. 28, pp. 69–125). San Diego, CA: Academic Press.

- Holland PC, & Coldwell SE (1993). Transfer of inhibitory stimulus control in operant feature-negative discriminations. Learning and Motivation, 24, 345–375.

- Jean-Richard-Dit-Bressel P, Killcross S, & McNally GP (2018). Behavioral and neurobiological mechanisms of punishment: Implications for psychiatric disorders. Neuropsychopharmacology, 43, 1639–1650.

- Kanoski SE, Walls EK, & Davidson TL (2007). Interoceptive “satiety” signals produced by leptin and CCK. Peptides, 28, 988–1002.

- Kelley ME, Jimenez-Gomez C, Podlesnik CA, & Morgan A (2018). Evaluation of renewal mitigation of negatively reinforced socially significant operant behavior. Learning and Motivation, 63, 133–141.

- Kelley ME, Liddon CJ, Ribeiro A, Greif AE, & Podlesnik CA (2015). Basic and translational evaluation of renewal of operant responding. Journal of Applied Behavior Analysis, 48, 390–401.

- Kincaid SL, & Lattal KA (2018). Beyond the breakpoint: Reinstatement, renewal, and resurgence of ratio-strained behavior. Journal of the Experimental Analysis of Behavior, 109, 475–491.

- Lattal KM (2007). Effects of ethanol on the encoding, consolidation, and expression of extinction following contextual fear conditioning. Behavioral Neuroscience, 121, 1280–1292.

- LeBlanc KH, Ostlund SB, & Maidment NT (2012). Pavlovian-to-instrumental transfer in cocaine seeking rats. Behavioral Neuroscience, 126, 681–689.

- Leitenberg H, Rawson RA, & Bath K (1970). Reinforcement of competing behavior during extinction. Science, 169, 301–303.

- Liddon CJ, Kelley ME, Rey CN, Liggett AP, & Ribeiro A (2018). A translational analysis of ABA and ABC renewal of operant behavior. Journal of Applied Behavior Analysis, in press.

- Lieving GA, & Lattal KA (2003). Recency, repeatability, and reinforcer retrenchment: An experimental analysis of resurgence. Journal of the Experimental Analysis of Behavior, 80, 217–233.

- Mackintosh NJ (1975). A theory of attention: Variations in the associability of stimuli with reinforcement. Psychological Review, 82, 276–298.

- Mantsch JR, & Goeders NE (1998). Generalization of a restraint-induced discriminative stimulus to cocaine in rats. Psychopharmacology, 468, 423–426.

- Mantsch JR, & Goeders NE (1999). Ketoconazole blocks the stress-induced reinstatement of cocaine-seeking behavior in rats: the relationship to the discriminative stimulus effects of cocaine. Psychopharmacology, 142, 399–407.

- Mantsch JR, Baker DA, Funk D, Lê AD, & Shaham Y (2016). Stress-induced reinstatement of drug seeking: 20 years of progress. Neuropsychopharmacology, 41, 335–356.

- Marchant NJ, Campbell EJ, Pelloux Y, Bossert JM, & Shaham Y (2018). Context-induced relapse after extinction versus punishment: Similarities and differences. Psychopharmacology, in press.

- Marchant NJ, Khuc TN, Pickens CL, Bonci A, & Shaham Y (2013). Context-induced relapse to alcohol seeking after punishment in a rat model. Biological Psychiatry, 73, 256–262.

- Miller RR, & Escobar M (2002). Associative interference between cues and between outcomes presented together and presented apart: An integration. Behavioural Processes, 57, 163–185.

- Miller RR, Kasprow WJ, & Schachtman TR (1986). Retrieval variability: Sources and consequences. American Journal of Psychology, 99, 145–218.

- Morell JR, & Holland PC (1993). Summation and transfer of negative occasion setting. Animal Learning & Behavior, 21, 145–153.

- Nakajima S (2014). Renewal of signaled shuttle box avoidance in rats. Learning and Motivation, 46, 27–43.

- Nakajima S, Tanaka S, Urushihara K, & Imada H (2000). Renewal of extinguished lever-press responses upon return to the training context. Learning and Motivation, 31, 416–431.

- Nakajima S, Urushihara K, & Masaki T (2002). Renewal of operant performance formerly eliminated by omission or noncontingency training upon return to the acquisition context. Learning and Motivation, 33, 510–525.

- Nieto J, Uengoer M, & Bernal-Gamboa R (2017). A reminder of extinction reduces relapse in an animal model of voluntary behavior. Learning & Memory, 24, 76–80.

- Olmstead MC, Lafond MV, Everitt BJ, & Dickinson A (2001). Cocaine seeking by rats is a goal-directed action. Behavioral Neuroscience, 115, 394–402.

- Ostlund SB, & Balleine BW (2007). Selective reinstatement of instrumental performance depends on the discriminative stimulus properties of the mediating outcome. Learning & Behavior, 35, 43–52.

- Panlilio LV, Thorndike EB, & Schindler CW (2003). Reinstatement of punishment-suppressed opioid self-administration in rats: An alternative model of relapse to drug abuse. Psychopharmacology, 168, 229–235.

- Panlilio LV, Thorndike EB, & Schindler CW (2005). Lorazepam reinstates punishment-suppressed remifentanil self-administration in rats. Psychopharmacology, 179, 374–382.

- Pearce JM, & Hall G (1979). The influence of context-reinforcer associations on instrumental performance. Animal Learning & Behavior, 7, 504–508.

- Pelloux Y, Hoots JK, Cifani C, Adhikary S, Martin J, Minier-Toribio A, Bossert JM, & Shaham Y (2018). Context-induced relapse to cocaine seeking after punishment-imposed abstinence is associated with activation of cortical and subcortical brain regions. Addiction Biology, 23, 699–712.

- Polack CW, Jozefowiez J, & Miller RR (2017). Stepping back from ‘persistence and relapse’ to see the forest: Associative interference. Behavioural Processes, 141, 128–136.

- Reid RL (1958). The role of the reinforcer as a stimulus. British Journal of Psychology, 49, 202–209.

- Rescorla RA (1993). Inhibitory associations between S and R in extinction. Animal Learning & Behavior, 21, 327–336.

- Rescorla RA (1995). Full preservation of a response-outcome association through training with a second outcome. The Quarterly Journal of Experimental Psychology Section B: Comparative and Physiological Psychology, 48, 252–261.

- Rescorla RA (1996a). Response-outcome associations remain functional through interference treatments. Animal Learning & Behavior, 24, 450–458.

- Rescorla RA (1996b). Spontaneous recovery after training with multiple outcomes. Animal Learning & Behavior, 24, 11–18.

- Rescorla RA (1997a). Response inhibition in extinction. The Quarterly Journal of Experimental Psychology Section B: Comparative and Physiological Psychology, 50, 238–252.

- Rescorla RA (1997b). Spontaneous recovery of instrumental discriminative responding. Animal Learning & Behavior, 25, 485–497.

- Rescorla RA (2001). Experimental extinction. In Mowrer RR & Klein SB (Eds.), Handbook of contemporary learning theories (pp. 119–154). Mahwah, NJ: Erlbaum.

- Rescorla RA, & Skucy JC (1969). Effect of response-independent reinforcers during extinction. Journal of Comparative and Physiological Psychology, 67(3), 381–389.

- Saini V, Sullivan WE, Baxter EL, DeRosa NM, & Roane HS (2018). Renewal during functional communication training. Journal of Applied Behavior Analysis, in press.

- Sample CH, Jones S, Hargrave SL, Jarrard LE, & Davidson TL (2016). Western diet and the weakening of the interoceptive stimulus control of appetitive behavior. Behavioural Brain Research, 312, 219–230.

- Schepers ST, & Bouton ME (2015). Effects of reinforcer distribution during response elimination on resurgence of an instrumental behavior. Journal of Experimental Psychology: Animal Learning and Cognition, 41, 179–192.

- Schepers ST, & Bouton ME (2017). Hunger as a context: Food-seeking that is inhibited during hunger can renew in the context of satiety. Psychological Science, 28, 1640–1648.

- Schepers ST, & Bouton ME (2018). Stress as a context: Stress causes relapse of inhibited food seeking if it has been associated with prior food seeking. Appetite, submitted for publication.

- Shaham Y, Shalev U, Lu L, de Wit H, & Stewart J (2003). The reinstatement model of drug relapse: history, methodology and major findings. Psychopharmacology, 168, 3–20.

- Shahan TA, & Craig AR (2017). Resurgence as choice. Behavioural Processes, 141, 100–127.

- Shahan TA, & Sweeney MM (2011). A model of resurgence based on behavioral momentum theory. Journal of the Experimental Analysis of Behavior, 95, 91–108.

- Sinha R (2008). Chronic stress, drug use, and vulnerability to addiction. Annals of the New York Academy of Sciences, 1141, 105–130.

- Sinha R, Shaham Y, & Heilig M (2011). Translational and reverse translational research on the role of stress in drug craving and relapse. Psychopharmacology, 218, 69–82.

- Smith BM, Smith GS, Shahan TA, Madden GJ, & Twohig MP (2017). Effects of differential rates of alternative reinforcement on resurgence of human behavior. Journal of the Experimental Analysis of Behavior, 107, 191–202.

- Spear NE (1971). Forgetting as retrieval failure. In Honig WK & James PHR (Eds.), Animal memory (pp. 45–109). San Diego, CA: Academic Press.

- Spear NE (1981). Extending the domain of memory retrieval. In Spear NE & Miller RR (Eds.), Information processing in animals: Memory mechanisms (pp. 341–378). Hillsdale, NJ: Erlbaum.

- Spear NE, Smith GJ, Bryan R, Gordon W, Timmons R, & Chiszar D (1980). Contextual influences on the interaction between conflicting memories in the rat. Animal Learning & Behavior, 8, 273–281.

- Sweeney MM, & Shahan TA (2013). Effects of high, low, and thinning rates of alternative reinforcement on response elimination and resurgence. Journal of the Experimental Analysis of Behavior, 100, 102–116.

- Thomas DR, McKelvie AR, & Mah WL (1985). Context as a conditional cue in operant discrimination reversal learning. Journal of Experimental Psychology: Animal Behavior Processes, 11, 317–330.

- Thomas DR, McKelvie AR, Ranney M, & Moye TB (1981). Interference in pigeons’ long-term memory viewed as a retrieval problem. Animal Learning & Behavior, 9, 581–586.

- Thomas DR, Moye TB, & Kimose E (1984). The recency effect in pigeons’ long-term memory. Animal Learning & Behavior, 12, 21–28.

- Thrailkill EA, & Bouton ME (2015a). Extinction of chained instrumental behaviors: Effects of procurement extinction on consumption responding. Journal of Experimental Psychology: Animal Learning and Cognition, 41, 232–246.

- Thrailkill EA, & Bouton ME (2015b). Contextual control of instrumental actions and habits. Journal of Experimental Psychology: Animal Learning and Cognition, 41, 69–80.

- Thrailkill EA, & Bouton ME (2016a). Extinction and the associative structure of heterogeneous instrumental chains. Neurobiology of Learning and Memory, 133, 61–68.

- Thrailkill EA, & Bouton ME (2016b). Extinction of chained instrumental behaviors: Effects of consumption extinction on procurement responding. Learning & Behavior, 44, 85–96.

- Thrailkill EA, & Bouton ME (2017). Factors that influence the persistence and relapse of discriminated behavior chains. Behavioural Processes, 141, 3–10.

- Thrailkill EA, Trott JM, Zerr CL, & Bouton ME (2016). Contextual control of chained instrumental behaviors. Journal of Experimental Psychology: Animal Learning and Cognition, 42, 401–414.

- Todd TP (2013). Mechanisms of renewal after the extinction of instrumental behavior. Journal of Experimental Psychology: Animal Behavior Processes, 39, 193–207.

- Todd TP, Vurbic D, & Bouton ME (2014). Mechanisms of renewal after the extinction of discriminated operant behavior. Journal of Experimental Psychology: Animal Learning and Cognition, 40, 355–368.

- Todd TP, Winterbauer NE, & Bouton ME (2012). Effects of the amount of acquisition and contextual generalization on the renewal of instrumental behavior after extinction. Learning & Behavior, 40, 145–157.

- Torres SJ, & Nowson CA (2007). Relationship between stress, eating behavior, and obesity. Nutrition, 23, 887–894.