Abstract

The Speech Intelligibility Index includes a series of frequency importance functions for calculating the estimated intelligibility of speech under various conditions. Until recently, techniques to derive frequency importance required averaging data over a group of listeners, thus hindering the ability to observe individual differences due to factors such as hearing loss. In the current study, the “random combination strategy” [Bosen and Chatterjee (2016). J. Acoust. Soc. Am. 140, 3718–3727] was used to derive frequency importance functions for individual hearing-impaired listeners, and normal-hearing participants for comparison. Functions were measured by filtering sentences to contain only random subsets of frequency bands on each trial, and regressing speech recognition against the presence or absence of bands across trials. Results show that the contribution of each band to speech recognition was inversely proportional to audiometric threshold in that frequency region, likely due to reduced audibility, even though stimuli were shaped to compensate for each individual's hearing loss. The results presented in this paper demonstrate that this method is sensitive to factors that alter the shape of frequency importance functions within individuals with hearing loss, which could be used to characterize the impact of audibility or other factors related to suprathreshold deficits or hearing aid processing strategies.

I. INTRODUCTION

The Speech Intelligibility Index (SII; ANSI, 1997) provides a means to estimate the intelligibility of speech under certain conditions, such as reduced audibility due to a listener's hearing loss. The SII is calculated by multiplying the proportion of speech available in each frequency region of the spectrum with the relative importance of that frequency region to overall speech reception, and then summing together the values across frequency bands. The importance of each frequency region is described by frequency importance functions, with different functions provided for different types of speech materials.
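The band-wise sum described above can be sketched in a few lines. This is a minimal illustration only: the function name, the five-band layout, and all numeric values are hypothetical and are not taken from the ANSI standard.

```python
def speech_intelligibility_index(audibility, importance):
    """Sum of (proportion of speech available) x (band importance) over bands.

    audibility: proportion of speech available in each band, each in [0, 1]
    importance: relative importance of each band (assumed here to sum to 1)
    """
    if len(audibility) != len(importance):
        raise ValueError("audibility and importance must cover the same bands")
    return sum(a * i for a, i in zip(audibility, importance))


# Hypothetical five-band example: importance peaks in the middle band,
# and audibility falls off toward the high-frequency bands.
example_importance = [0.1, 0.2, 0.4, 0.2, 0.1]
example_audibility = [1.0, 1.0, 0.5, 0.25, 0.0]
example_sii = speech_intelligibility_index(example_audibility, example_importance)
```

In this toy case the two lowest bands contribute their full importance, while the inaudible highest band contributes nothing regardless of its importance.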

The standard calculation of the SII involves accounting for audibility of each speech frequency region (either as determined through the speech-to-noise ratio or the listener's audiometric thresholds) and can be modified with other considerations for listeners with hearing loss, such as high presentation levels (ANSI, 1997). However, the frequency importance functions used in the calculations are based on data obtained from listeners with normal hearing (Bell et al., 1992; Duggirala et al., 1988; Studebaker and Sherbecoe, 1991; Studebaker et al., 1993), which may be problematic for computations involving hearing loss. It is well known that sensorineural hearing loss introduces several supra-threshold processing deficits (deficits which remain after audibility has been accounted for), so a calculation based on audibility alone may not provide accurate estimates. This consideration was recognized several decades ago by Fletcher (1952), who proposed that a “proficiency factor” could be used to account for effects of hearing loss beyond reduced audibility. Since then, the SII has been shown to provide imperfect estimations for listeners with hearing loss, particularly when the hearing loss is relatively severe (e.g., Dugal et al., 1980; Dubno et al., 1989; Ching et al., 1998), and several modifications to the SII have been proposed (e.g., Ludvigsen, 1987; Pavlovic et al., 1986). Many of these adaptations have considered the supra-threshold deficits associated with sensorineural hearing loss, such as reduced spectral and temporal resolution, deficits which introduce distortions into the auditory processing of speech (Moore, 1985; Mehraei et al., 2014; Davies-Venn et al., 2015).

As an alternative, it may be more beneficial to base frequency importance functions on data from listeners with hearing loss, rather than modifying frequency importance functions from normal hearing listeners in an attempt to account for differences in speech perception between the two groups of listeners. To obtain the importance functions used in SII calculations, several techniques have been developed, including the standard technique that was utilized for the values in the SII (e.g., Studebaker and Sherbecoe, 1991), the correlational method (Doherty and Turner, 1996), the “hole” method (Kasturi et al., 2002), a method by Whitmal et al. (2015), and the compound method (Apoux and Healy, 2012; Healy et al., 2013). Unfortunately, all of these techniques require averaging data across large numbers of listener participants. In addition, many require the use of background noise, and this may confound the particular importance of a speech band with the detrimental influence of background noise on that region, as it appears that speech bands across the spectrum may be differentially impacted by noise (Yoho et al., 2018a). This possible confound is a particular concern for listeners with sensorineural hearing loss who have been shown to suffer from a more negative and more complex impact of noise in speech perception tasks (Festen and Plomp, 1990; Bacon et al., 1998). Therefore, despite the fact that the SII calculations are used clinically for listeners with hearing loss, it has until now been unrealistic to measure frequency importance directly for these listeners. Given the large degree of variability and heterogeneity amongst listeners with hearing loss, averaging frequency importance data over a large group of listeners would be impractical and potentially misleading.

Recently, techniques have been developed to measure frequency importance functions for individual listeners (Bosen and Chatterjee, 2016; Shen and Kern, 2018). These techniques vary the combination of frequency bands that contain speech energy on a trial-by-trial basis, and regress speech recognition accuracy against the presence or absence of each band across trials. This approach estimates the contribution each band makes to speech recognition, averaged over their co-occurrence with the other frequency bands. This approach allows for reasonably precise estimates of frequency importance functions in a single experimental session. As a result, these methods provide a means to estimate frequency importance on the individual-listener level, and can be adjusted to provide different levels of frequency resolution as needed.

The purpose of the current study was to determine the shape of frequency importance functions for individual listeners with sensorineural hearing impairment, and to determine if these functions deviate from functions observed in listeners with normal hearing. Individual frequency importance functions were estimated using an adapted version of the method described in Bosen and Chatterjee (2016), hereafter referred to as the “random combination strategy.” To approximate the spectral shape and level of audibility (amount of speech information available to the listener based on that listener's audiometric thresholds) that would be provided by an individual's hearing aid, a standard hearing aid prescription formula (NAL-R) was applied for each listener to amplify the speech signal. Thus, effects of reduced audibility could be diminished, and somewhat ecologically valid processing could be achieved without the confounding variables introduced by differing processing schemes amongst participants' individual hearing aids. A group of normal-hearing participants was included as a reference to observe the shape of frequency importance functions with healthy, intact auditory processing for the conditions tested.

II. METHOD

A. Participants

Participants with normal hearing (NH) and with hearing impairment (HI) were recruited for the current study. None had previous exposure to the sentence materials employed here. Ten participants with NH were recruited from undergraduate courses at Utah State University. These participants ranged in age from 18 to 24 years, four were male, and all had pure tone thresholds at or below 20 dB hearing level (HL) at octave frequencies between 0.25 and 8 kHz (ANSI, 2004). Fourteen participants with HI were recruited from the hearing clinic at Utah State University, but only ten were included in the final analysis because four participants were unable to achieve sufficient levels of performance (details below). All were experienced and current bilateral hearing aid users (average of eight years of hearing aid use; range 1 to 12 years). The ten participants who were included in the analysis ranged in age from 20 to 73 years, six were male, and all ten had bilateral, symmetric, sensorineural hearing loss that ranged in degree from moderate to severe (see Fig. 2). Eight of the participants had sloping losses, one had a trough or “cookie bite” loss, and one had a rising loss.

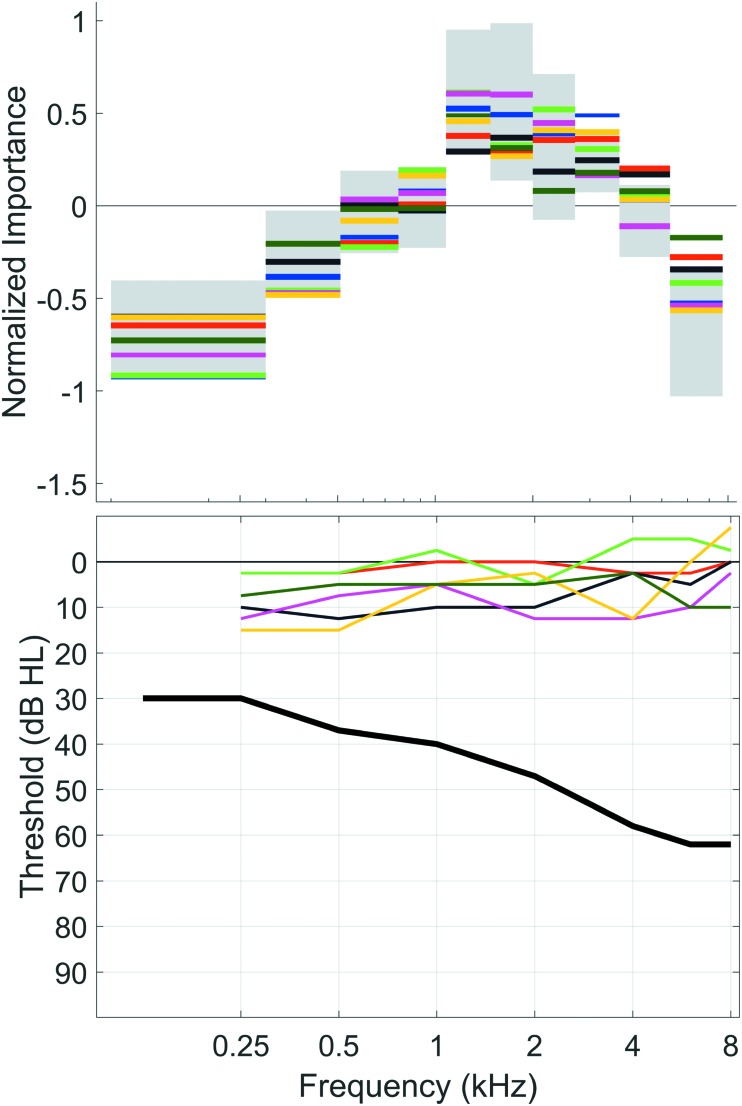

FIG. 2.

Normalized frequency importance functions for listeners with HI. Each vertical pair of panels represents an individual's frequency importance function and audiogram. Frequency importance is plotted for each individual in color on top of the 95% range from the NH group, for reference. Thresholds were plotted with blue Xs and red circles for the left and right ears, respectively, and the black line represents the mean threshold across ears.

B. Stimuli and procedure

The stimuli were filtered Institute of Electrical and Electronics Engineers (IEEE) sentences (IEEE, 1969) spoken by two male and two female talkers (22.05 kHz sampling, 32-bit resolution). Multiple talkers were used to average out any possible talker-specific variability in frequency importance functions (see Yoho et al., 2018b). To balance the granularity of the measured bands against the amount of data collected per participant, we decided to include ten bands in our importance functions. Band edge frequencies were selected based on the Critical Band procedure in the SII (ANSI, 1997). This procedure uses 21 bands, so to reduce this number to the desired ten bands, we combined adjacent bands and excluded the 21st band from the Critical Band procedure, to obtain band edge frequencies of 100, 300, 510, 770, 1080, 1480, 2000, 2700, 3700, 5300, and 7700 Hz. For each sentence, a combination of these bands was selected for inclusion, and the rest were removed. This was accomplished by passing the original sentence through a series of bandpass filters (finite impulse response filters of order 4000, implemented via the filtfilt command in MATLAB so that no phase distortion was introduced) with cutoff frequencies corresponding to adjacent edge frequencies. For 100 and 7700 Hz cutoffs, filter slopes in the transition band were approximately 200 dB/octave and 16 000 dB/octave, respectively, with a transition band about 15 Hz wide for both. Filter outputs corresponding to the bands selected for inclusion were summed to produce the stimulus that was presented to participants.
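As an illustration of how the ten band edges follow from the 21-band layout, the sketch below pairs adjacent critical bands and drops the 21st. The 22 critical-band edge frequencies are an assumption recalled from the conventional critical-band scale and should be checked against ANSI S3.5-1997; the function names are hypothetical. The filtering itself is not reproduced here.

```python
# Assumed critical-band edge frequencies in Hz (22 edges define 21 bands).
# Verify against the Critical Band procedure table in ANSI S3.5-1997.
CRITICAL_BAND_EDGES = [
    100, 200, 300, 400, 510, 630, 770, 920, 1080, 1270, 1480,
    1720, 2000, 2320, 2700, 3150, 3700, 4400, 5300, 6400, 7700, 9500,
]


def merge_adjacent_bands(edges, group=2, drop_last=1):
    """Drop the highest `drop_last` bands, then merge every `group` neighbors."""
    n_bands = len(edges) - 1 - drop_last
    if n_bands % group != 0:
        raise ValueError("remaining bands cannot be grouped evenly")
    kept_edges = edges[: n_bands + 1]
    return kept_edges[::group]


def passbands(edges, included):
    """Return (low, high) cutoffs in Hz for the band indices kept on a trial."""
    return [(edges[i], edges[i + 1]) for i in sorted(included)]


band_edges = merge_adjacent_bands(CRITICAL_BAND_EDGES)
# Example trial keeping bands 2, 5, and 9 (zero-based indices).
trial_passbands = passbands(band_edges, {2, 5, 9})
```

Each tuple in `trial_passbands` corresponds to one bandpass filter whose output would be summed into the stimulus for that trial.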

Each experimental session started by completing a hearing screening for participants with NH and a full audiologic test battery including otoscopy, tympanometry, and air and bone conduction pure tone thresholds for each participant with HI. Stimuli were presented at 65 dBA for participants with NH and 65 dBA plus frequency-specific gains as prescribed by the NAL-R hearing aid fitting formula (Byrne and Dillon, 1986) for each individual participant with HI. NAL-R was used to avoid potential effects of nonlinear amplification strategies on band importance and to ensure that the loudest components of the amplified signal would be tolerable to participants. The stimuli were spectrally shaped by a digital equalizer (ART HQ-231) for each participant with HI to control for individual differences in pure-tone thresholds. The maximum overall presentation level for any participant with HI was 87 dBA. A Presonus Studio 26 digital-to-analog converter was used, as well as a Mackie 1202VLZ4 mixer to adjust overall gain, and stimuli were presented diotically via Sennheiser HD 280 Pro headphones.

Participants listened to and repeated back a series of IEEE sentences that were filtered as described above. Participants were seated in a double-walled sound booth with the experimenter. Presentation of stimuli by the experimenter was controlled through a custom user interface designed in MATLAB. Participants were only allowed to hear each sentence once and were then asked to repeat each sentence aloud. Responses were recorded and scored by an experimenter in real time, with each of the five keywords for the response labeled as correct if the participant repeated the word back exactly, and incorrect otherwise. Participants were not provided feedback on their performance.

Each participant started with 20 IEEE sentences that included all ten bands (which produced a bandlimited stimulus containing all frequencies between 100 and 7700 Hz) to obtain a baseline speech recognition score. Next, they completed eight blocks of 40 sentences each (320 sentences total, 80 per talker), filtered to contain three of ten bands. The number of times each band was included in a sentence was balanced across bands, so this approach included each band in 24 sentences per talker, and 96 times overall. This number of presentations was selected to balance experimental session length against measurement precision (see the supplemental material from Bosen and Chatterjee, 2016). Preliminary experimentation used only two of ten bands on each trial, but this made the task too difficult for many listeners to achieve reliable performance (>20% of keywords correct). A total of four participants with HI were excluded from analysis, as they could not achieve 20% of keywords correct with three of ten bands. These four participants had pure tone averages (averaged across the two ears) of 22, 34, 56, and 58 dB HL. Choosing three out of ten bands produced 120 possible combinations, of which 80 were pseudorandomly selected for each talker. Band combinations were selected such that each band was presented an equal number of times for each talker, and across talkers the pairwise co-occurrence of bands was evenly distributed. These selection goals ensured that each band was sampled an equal number of times, and that the contribution of each band was averaged across its synergistic interactions with all other bands. Details of how band combinations were selected are described in Bosen and Chatterjee (2016). Band combinations for each sentence were randomized across listeners to avoid systematic interactions between band combinations and sentences across listeners. Total testing time was approximately 1.5 h for listeners with NH and 2 h for listeners with HI. Most listeners completed the experiment over two sessions, with an average of four days between sessions (maximum time between sessions for any individual listener was 15 days).
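The counts in this design can be checked with a short sketch. The selection scheme below is not the procedure of Bosen and Chatterjee (2016); it is a swapped-in illustration that groups the 120 combinations into cyclic-shift families (each family contains every band exactly three times) and draws eight families at random, which guarantees 24 presentations per band. It does not attempt to balance pairwise co-occurrence, and all function names are hypothetical.

```python
import random
from collections import Counter
from itertools import combinations

N_BANDS = 10
BANDS_PER_TRIAL = 3


def cyclic_orbits(n_bands=N_BANDS, k=BANDS_PER_TRIAL):
    """Group all k-band combinations into families related by cyclic band shifts."""
    seen = set()
    orbits = []
    for combo in combinations(range(n_bands), k):
        if combo in seen:
            continue
        orbit = {
            tuple(sorted((band + shift) % n_bands for band in combo))
            for shift in range(n_bands)
        }
        seen |= orbit
        orbits.append(sorted(orbit))
    return orbits


def balanced_selection(n_trials=80, seed=0):
    """Pick n_trials combinations so every band appears equally often."""
    if n_trials % N_BANDS != 0:
        raise ValueError("n_trials must be a multiple of the number of bands")
    rng = random.Random(seed)
    chosen = rng.sample(cyclic_orbits(), n_trials // N_BANDS)
    trials = [combo for orbit in chosen for combo in orbit]
    rng.shuffle(trials)  # randomize presentation order
    return trials


all_combos = list(combinations(range(N_BANDS), BANDS_PER_TRIAL))
talker_trials = balanced_selection()
band_counts = Counter(band for combo in talker_trials for band in combo)
```

With 80 trials of three bands each, there are 240 band presentations per talker, or 24 per band, and four talkers give the 96 presentations per band stated above.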

C. Analysis

Binomial logistic regression was used to estimate the importance of each frequency band. Each response word was labeled correct or incorrect, and these binary outcomes were regressed against the presence or absence of each band across both the baseline and three of ten bands sentences. This regression calculates how much each band contributed to the odds of the participant correctly identifying words. This regression produces log-odds values for each band, where positive values indicate increases in the fraction of words identified. Zero or negative values would indicate no change or a decrease in the fraction of words identified, respectively. Bands 1 (100–300 Hz) and 10 (5300–7700 Hz) often had negative importance in listeners with NH, and at least seven out of the ten bands had positive importance across listeners with HI. As described in Bosen and Chatterjee (2016), negative importance can occur as a result of the fixed number of bands in each trial, and should be interpreted with caution. Negative importance could simply reflect a band taking the place of a band that would provide more speech information, rather than a true impairment of speech recognition by that band. Note that although this method produces frequency importance functions that are qualitatively similar to functions observed in the SII, their quantitative relationship to the SII has not been characterized and these values should not be used interchangeably.
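To make the regression concrete, the sketch below fits a logistic model to band presence indicators by plain gradient ascent. It is a toy illustration under stated assumptions: the intercept term, the fitting routine, the two-band layout, and the data are all hypothetical, and a statistics package would normally be used instead.

```python
import math


def fit_band_importance(trials, outcomes, n_iter=5000, step=1.0):
    """Fit log-odds weights by gradient ascent on the mean log-likelihood.

    trials:   list of 0/1 lists, one entry per band (1 = band present)
    outcomes: list of 0/1 keyword scores (1 = keyword repeated correctly)
    Returns (intercept, list of per-band log-odds weights).
    """
    rows = [[1.0] + [float(x) for x in trial] for trial in trials]
    weights = [0.0] * len(rows[0])  # index 0 is the intercept (an assumption)
    for _ in range(n_iter):
        grad = [0.0] * len(weights)
        for row, y in zip(rows, outcomes):
            z = sum(w * x for w, x in zip(weights, row))
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability correct
            for j, x in enumerate(row):
                grad[j] += (y - p) * x
        weights = [w + step * g / len(rows) for w, g in zip(weights, grad)]
    return weights[0], weights[1:]


# Hypothetical two-band data: band A alone gives 8/10 keywords correct,
# band B alone gives 3/10, and both together give 9/10.
toy_trials = [[1, 0]] * 10 + [[0, 1]] * 10 + [[1, 1]] * 10
toy_outcomes = [1] * 8 + [0] * 2 + [1] * 3 + [0] * 7 + [1] * 9 + [0] * 1
toy_intercept, toy_importance = fit_band_importance(toy_trials, toy_outcomes)
```

In this toy data set both weights are positive, and band A receives the larger log-odds weight because adding it to band B raises accuracy more than adding band B to band A.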

Each participant's frequency importance function was normalized by subtracting the mean log-odds importance across all bands from each band. This normalization centers the data so that the mean importance of all bands was zero, which effectively centers the data at 50% (sum log-odds of 0) identification accuracy in the baseline condition. The data were normalized for two reasons. First, overall performance differed across participants with HI and across NH and HI. This normalization removes the effect of their performance in the baseline condition that had all ten bands present, which was 100% for several participants with NH. Because importance is expressed in log-odds, perfect performance would produce importance values that approach infinity as the odds ratio approached 1/0 (i.e., 100% chance of correctly identifying a word, 0% chance of incorrectly identifying it). Normalization accounts for the apparently perfect performance in the baseline condition by subtracting out the mean importance, leaving only the shape of the band importance function remaining. Second, the goal of this experiment was to compare differences in frequency importance function shape (i.e., the importance of each frequency band relative to other bands) across participants, rather than overall performance. This normalization does not affect the rank order of frequency importance within participants, which enables comparison of shape across participants.
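A minimal sketch of this mean-centering step is shown below, with hypothetical log-odds values for a ten-band function. It illustrates that the normalized values sum to zero and that the ordering of bands is unchanged.

```python
def normalize_importance(log_odds):
    """Subtract the across-band mean so the function is centered on zero."""
    mean_importance = sum(log_odds) / len(log_odds)
    return [value - mean_importance for value in log_odds]


def rank_order(values):
    """Band indices sorted from least to most important."""
    return sorted(range(len(values)), key=lambda i: values[i])


# Hypothetical raw log-odds importances for ten bands.
raw_importance = [-0.2, 0.4, 0.9, 1.3, 1.6, 1.5, 1.1, 0.8, 0.3, -0.1]
normalized_importance = normalize_importance(raw_importance)
```

Because the same constant is subtracted from every band, differences between bands within a participant are preserved exactly.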

III. RESULTS

Percent keywords correct across participants in the baseline condition ranged from 96 to 100% (mean 98%) for participants with NH and from 88 to 100% (mean 96%) for participants with HI. Percent keywords correct across participants in the experimental blocks ranged from 41 to 61% (mean 51%) for participants with NH and from 25 to 50% (mean 34%) for participants with HI.

Figure 1 shows the normalized frequency importance functions for participants with NH. As expected based on previous studies (e.g., Healy et al., 2013), importance peaks between 1 and 2 kHz, and decreases with frequency distance from this peak. Participants with NH all had similar frequency importance functions, indicating that they all had similar ability to use speech information in different frequency ranges. We assumed that differences across participants with NH were due to measurement error and calculated the 95% range of each frequency band as the mean normalized importance of each band, plus and minus two standard deviations. The largest of these 95% ranges was 0.85 (for the 1480–2000 Hz band). For comparison, the range of mean band importances was 1.31, so the size of the largest 95% range was about 65% of the overall range of mean importances. This indicates that there is some variability in measured frequency band importance in participants with NH, but that the variability is smaller than the overall trend observed across participants. Given the relatively small number of participants, these 95% ranges are only rough estimates of the range of variation that would be produced by measurement error when sampling from a population assumed to be homogeneous, rather than a comprehensive characterization of variation across participants with NH.
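The reference range described above can be sketched as follows. The use of the sample standard deviation is an assumption (the text does not say which estimator was used), and the data and function names are hypothetical.

```python
from statistics import mean, stdev


def reference_ranges(importance_by_listener):
    """Per-band (low, high) bounds: group mean plus and minus two SDs.

    importance_by_listener: one list of normalized importances per listener,
    all covering the same bands in the same order.
    """
    ranges = []
    for band_values in zip(*importance_by_listener):
        center = mean(band_values)
        spread = 2 * stdev(band_values)  # sample SD, an assumption
        ranges.append((center - spread, center + spread))
    return ranges


def bands_outside_range(importance, ranges):
    """Indices of bands where one listener falls outside the reference range."""
    return [
        i for i, (value, (low, high)) in enumerate(zip(importance, ranges))
        if value < low or value > high
    ]


# Hypothetical two-band data for three reference listeners.
toy_group = [[0.0, 1.0], [0.2, 1.0], [0.4, 1.0]]
toy_ranges = reference_ranges(toy_group)
flagged = bands_outside_range([0.9, 1.0], toy_ranges)

# Ratio reported in the text: largest 95% range relative to the range of means.
reported_ratio = 0.85 / 1.31
```

A listener whose importance for a band lies outside these bounds, as in the `flagged` example, would be described as differing from the reference group for that band.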

FIG. 1.

Normalized frequency importance functions for listeners with NH. Each frequency band is represented by a set of horizontal lines denoting the frequency range of that band, and the height of each band representing its importance (plotted on a log-odds scale and normalized by subtracting the mean band importance). Each color represents the frequency importance for an individual participant, and gray regions represent an estimated 95% range (mean +/- two standard deviations) for the group.

In comparison, Fig. 2 shows that participants with HI had frequency importance functions that substantially differed from the NH group. In all participants with HI, frequency importance fell outside of the 95% range of the NH group for multiple bands, indicating that these listeners relied on different frequency ranges for speech recognition than the participants with NH. Visual comparison of each individual's audiogram to their frequency importance function suggests that frequency regions with lower (better) audiometric thresholds tended to contribute more to speech recognition. This trend is particularly evident in participants HL1 and HL9, who had audiograms that differed from the sloping sensorineural audiograms of the rest of the participants. Participant HL1 had frequency importances below the 95% range of the NH group in the range of 1–2 kHz, which is also where this participant had the highest (worst) audiometric thresholds. Similarly, participant HL9 tended to have frequency importances lower than the 95% range of the NH group at low frequencies and higher than the NH range at high frequencies, which matches this participant's rising audiogram. Even though stimuli had been spectrally shaped to compensate for each participant's hearing loss, bands were not fully audible. Supplemental Fig. 1 shows the distribution of root-mean-square (rms) level across speech signals relative to each listener's audiogram, which demonstrates that some of the speech signal remained inaudible after amplification. A full description of this analysis is provided in the supplemental material (see footnote 1).

To quantify the relationship between audiogram and band importance within listeners, linear mixed-effects models were used to characterize the relationship between frequency importance and audiometric threshold. The dependent variable, relative importance, was the difference between importance for each frequency band for each participant with HI and the group mean importance for that band across participants with NH. Band edges did not cleanly align with audiogram frequencies, so we interpolated between measured thresholds and averaged the interpolated thresholds across the range of frequencies in each band to estimate each band's threshold. The average interpolated audiometric threshold across each frequency band for each listener was considered a fixed effect. Figure 3 shows the relationship between relative importance and interpolated threshold for all participants with HI. We tested models that assumed no correlation between interpolated threshold and relative importance, that assumed the intercept was the same across all participants, that allowed intercept to vary randomly across participants, and that allowed intercept and slope to vary as random effects across participants. The best fitting model used only random intercepts across participants. This model had an Akaike Information Criterion (AIC, Akaike, 1974) of 56.0, which was 3.4 better than a model that assumed a fixed intercept across participants, was 3.8 better than a model that allowed random intercepts and slopes across participants and was 65.2 better than a model that assumed no correlation between interpolated threshold and relative importance. 
This indicates that the effect of interpolated threshold on frequency importance had a fixed ratio (each 10 dB of hearing loss reduced frequency importance by 0.22), but because each listener had a different pure tone average (defined as the average of thresholds at 0.5, 1, and 2 kHz), the interpolated threshold at which the relative importance crossed zero differed across individuals. This analysis was repeated using the audibility of each band, as shown in Supplemental Fig. 2 (see footnote 1). Audibility and threshold were closely related (see Supplemental Fig. 3), so using audibility as a predictor variable instead of threshold produced similar results. Because relative importance has to sum to zero, our data violate the assumption that the dependent variable measures are all independent, but this violation of assumptions would not spuriously produce the observed relationship between interpolated threshold and relative importance. To summarize, listeners with HI were less able to benefit from speech information in frequency regions where they had higher audiometric thresholds because spectral shaping with the NAL-R fitting formula did not fully restore audibility.
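One way to estimate a per-band threshold from an audiogram is sketched below. The text does not specify the interpolation scheme, so the choices here are assumptions: linear interpolation in dB on a log-frequency axis, clamping outside the measured frequencies, and averaging over log-spaced points within the band. The audiogram values and function names are hypothetical.

```python
import math


def interpolate_threshold(audiogram, freq_hz):
    """Interpolate a threshold (dB HL) at freq_hz on a log-frequency axis.

    audiogram: dict mapping test frequency in Hz to threshold in dB HL.
    Frequencies outside the measured range take the nearest measured value.
    """
    freqs = sorted(audiogram)
    if freq_hz <= freqs[0]:
        return audiogram[freqs[0]]
    if freq_hz >= freqs[-1]:
        return audiogram[freqs[-1]]
    for low, high in zip(freqs, freqs[1:]):
        if low <= freq_hz <= high:
            frac = math.log2(freq_hz / low) / math.log2(high / low)
            return audiogram[low] + frac * (audiogram[high] - audiogram[low])


def band_threshold(audiogram, low_hz, high_hz, n_points=50):
    """Average interpolated threshold over log-spaced points within a band."""
    total = 0.0
    for i in range(n_points):
        freq = low_hz * (high_hz / low_hz) ** (i / (n_points - 1))
        total += interpolate_threshold(audiogram, freq)
    return total / n_points


# Hypothetical sloping audiogram (Hz -> dB HL).
toy_audiogram = {250: 20, 500: 20, 1000: 30, 2000: 50, 4000: 60, 8000: 70}
band_5_threshold = band_threshold(toy_audiogram, 1080, 1480)

# Using the reported slope, a band 20 dB worse than another would be
# expected to have relative importance lower by about 0.22 * 2.
expected_drop = 0.22 * (20 / 10)
```

Per-band thresholds estimated this way would then serve as the fixed-effect predictor in the mixed-effects models described above.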

FIG. 3.

Simple linear regression of individual frequency importance relative to NH mean frequency importance against mean interpolated audiometric threshold for each frequency band, for each listener with HI. Colors represent the same listeners as in Fig. 2.

IV. CONTROL EXPERIMENT

This control experiment examined whether observed differences between importance functions for NH and HI participants could also be attributed to alterations in the speech spectrum during spectral shaping for HI participants. For this experiment, seven new NH listeners (age 22 to 33 years, two male) heard stimuli that were spectrally shaped using the same procedure and apparatus described above to match the NAL-R prescription targets (Byrne and Dillon, 1986) for average thresholds of 70–79 year old individuals with presbycusis, as indicated in Fei et al. (2011) (see Fig. 4, bottom panel for thresholds). After spectral shaping, the stimuli were presented at an overall level of 65 dBA.

FIG. 4.

Normalized frequency importance functions for listeners with NH in the spectrally shaped control experiment. The top panel shows frequency importance for seven subjects in this condition as separate bars, and the gray region represents the 95% range of results from NH listeners without spectral shaping shown in Fig. 1. The bottom panel shows the cross-ear average threshold for the six listeners from whom audiograms were obtained (thin lines), and the typical presbycusis profile used to generate the spectral profile used to shape the stimuli (thick line).

Percent keywords correct across participants ranged from 93 to 100% (mean 97%) in the baseline condition and ranged from 36 to 65% (mean 53%) in the experimental conditions. As can be seen from the top panel of Fig. 4, the resulting frequency importance functions for the participants with NH listening to spectrally shaped stimuli reflect the same overall shape as Fig. 1. However, there was a slight shift in importance for these listeners relative to the group of NH participants from the main experiment, with the highest frequencies weighted higher and low frequencies weighted lower on average across participants.

The relationship between relative band importance and change in sensation level (the amount of gain provided minus the mean interpolated audiometric threshold within each band) was quantified using the same set of linear mixed-effects models as in the main experiment. Figure 5 shows the observed trends for both the listeners with HI in the main experiment and listeners with NH in the spectrally shaped control condition. There is a small but significant slope in a fixed slope and intercept model [0.08/10 dB sensation level, t(1,52) = 2.937, p = 0.005], but a model that assumed no slope across the NH listeners provided the best fit (AIC was 2.91 better than the fixed slope model). These results indicate that suprathreshold changes in sensation level from spectral shaping had a small impact on band importance relative to the effect of audibility in listeners with HI.

FIG. 5.

Simple linear regression of individual frequency importance relative to the NH mean frequency importance (as in Fig. 3), plotted against the relative change in sensation level due to audiometric threshold and spectral shaping. Listeners with HI are represented by large points and solid lines in the top panel, and listeners with NH and spectrally shaped stimuli are represented by small points and broken lines in the bottom panel. Colors correspond to the same listeners in Figs. 3 and 4 for the respective groups.

V. DISCUSSION

The current study examined how the contributions of various speech bands differed for individual listeners with sensorineural hearing loss from those predicted by listeners with normal hearing. Results demonstrate that the shape of frequency importance functions in listeners with sensorineural hearing loss differs from the “inverted U” shape observed in listeners with normal hearing. Specifically, listeners with HI displayed frequency importance functions which reflect their individual audiometric thresholds, with regions of maximum importance correlating with regions of better audiometric thresholds and vice versa. This occurred regardless of the degree or configuration of hearing loss. Although a hearing aid prescription formula was used to compensate for increased auditory thresholds, the speech cues from some bands were still inaudible to many listeners, and therefore audibility likely played a meaningful role in the observed results.

The results of the current experiment demonstrate that it is possible to capture individual variability in frequency importance across a group of listeners with sensorineural hearing impairment. As stated above, the resulting frequency importance functions for listeners with sensorineural hearing loss are highly individualized and related to a listener's audiometric thresholds, which determine the resulting audibility of speech bands. This underscores the idea that such functions cannot be readily averaged across a population of listeners with hearing loss, and that techniques which measure frequency importance by utilizing data on a group of listeners may not be appropriate for these populations. Past techniques for deriving frequency importance required averaging data over several listeners (e.g., Studebaker and Sherbecoe, 1991; Healy et al., 2013), but more recent techniques have overcome this limitation (Bosen and Chatterjee, 2016; Shen and Kern, 2018; Whitmal et al., 2015). The random combination strategy has allowed for examinations of frequency importance on an individual level for clinical populations such as cochlear implant users (Bosen and Chatterjee, 2016) or hearing aid users (the current study). An additional benefit of the random combination strategy is the ability to examine frequency importance for relatively discrete and narrow frequency regions, which allows for a thorough evaluation of factors such as the relationship observed here between frequency importance and audiometric thresholds.

In the current study, although the shift in frequency weighting followed a shift in a listener's audiometric thresholds, there was also a relationship between these audiometric thresholds and the resulting audibility of the speech bands for each listener. This impact of reduced audibility on frequency weighting is of real consequence and important to evaluate, as fully restoring audibility through the use of hearing aids is often highly challenging due to issues such as device limitations and acoustic feedback (Arbogast et al., 2018), physiological factors such as profoundly raised thresholds and loudness recruitment (Moore, 1996), or simply an inability of the user to utilize such cues even when they are provided (Ching et al., 2001). Therefore, the type of shift observed in the current results may also occur to varying degrees with other amplification schemes or hearing aid prescriptions, as a listener's frequency weighting is clearly impacted by the degree of audibility of each band.

One point of interest is the shape of the importance functions for participants HL1 and HL9. These two listeners had configurations of loss which differed from the traditional sloping presbycusis configuration and had more audible speech cues across the entire spectrum than other HI listeners. Despite this, the resulting importance functions for these two listeners still followed closely with the shape of their audiometric thresholds, possibly indicating that some factor other than audibility has an impact. One possible explanation is that HI listeners who have permanent hearing loss may simply be habituated to rely on the auditory cues that are consistently audible, and therefore rely most on their regions of favorable thresholds. However, all of the HI participants in the current study (including HL1 and HL9) are long-term, regular hearing aid users who are most likely accustomed to receiving at least some speech cues in their regions of increased auditory thresholds. Another possibility is the influence of alterations in spectral profile due to the use of spectral shaping based on audiometric thresholds. Amplifying the speech to compensate for individual differences in hearing threshold changed the long-term average speech spectrum, but this difference could not entirely account for changes in frequency importance in HI listeners. In our control experiment, listeners with NH show only small shifts in their frequency importance functions as a result of spectrally shaping the signal to match the average hearing aid prescription for presbycusis.

Although additional investigation is required to identify the specific mechanism or mechanisms underlying the relationship between audiometric threshold and frequency importance, listeners with HI must compensate for a broad range of changes in their auditory system (for review, see Moore, 1996). The potential impact of these changes on speech recognition is known to be quite complex. Several factors may plausibly contribute to shifts in frequency importance for HI listeners. First, hearing aid prescription formulas, including the one used here, often do not fully restore audibility in every frequency band, or may not equally restore audibility across all listeners (Humes, 2007). Therefore, even though the influence of reduced audibility was limited somewhat through the use of individualized spectral shaping, it was not entirely mitigated, and it played an important role in the current results. Second, amplification can result in rather high presentation levels for many listeners with HI in frequency bands in which the listeners' audiometric thresholds are poor. High presentation levels have been shown to broaden auditory tuning and to negatively impact the perception of speech, even for listeners with NH (French and Steinberg, 1947; Speaks et al., 1967; Studebaker et al., 1999). They have also been shown to affect the higher frequency region of the speech spectrum more than the lower frequency region (Molis and Summers, 2003; Summers and Cord, 2007). This factor may also have played a role in the current study. Last, even if audibility could be perfectly restored, there are several suprathreshold deficits associated with sensorineural hearing loss that impact the perception of speech and may influence frequency weighting.
The active cochlear mechanism, by which energy is added into the traveling wave along the basilar membrane, thus sharpening the frequency response of the cochlea, is typically lost in sensorineural hearing loss. This in turn results in broadened tuning and reduced spectral resolution (Glasberg and Moore, 1986). This broadened tuning can distort the perception of speech cues through spectral “smearing” (ter Keurs et al., 1992). For a listener with HI, frequency regions with poorer audiometric thresholds are also generally regions with broader than normal auditory tuning (Moore, 2007). Therefore, it may be expected that listeners with HI are unable to utilize speech information effectively in regions with poor audiometric thresholds, and there is evidence to support this. Ching et al. (1998) showed that listeners with HI do not receive as much speech information from regions where their loss is maximal, even when those listeners have access to speech cues in those regions. Bernstein et al. (2013) further demonstrated that incorporating individual spectrotemporal sensitivity into models of speech intelligibility produces more accurate estimates than estimates based on audibility alone.

These observed differences in frequency weighting for listeners with sensorineural hearing loss are important to consider. The possible reasons for shifted frequency weighting (long-term speech spectrum alterations, reduced audibility, high presentation levels, and broadened auditory tuning) are factors inherent to the listener that largely remain despite the use of hearing aids, or are introduced by the hearing aids themselves. Therefore, the use of the current SII (ANSI, 1997) model for predicting speech recognition for listeners with HI, and the use of the SII clinically, may not be entirely appropriate in all circumstances.
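To make the concern concrete, the following minimal sketch computes the core weighted sum of the SII (band importance multiplied by band audibility, summed across bands) under two sets of weights. It is a simplification: the full ANSI S3.5 procedure includes further steps that are omitted here, and the five-band layout, the audibility values, and both importance vectors are hypothetical numbers chosen only for illustration, not data from this study.

```python
def speech_intelligibility_index(importance, audibility):
    """Simplified SII: sum over bands of importance times audibility.

    Both arguments are sequences with one value per band; importance
    values are expected to sum to 1 and audibility values to lie in [0, 1].
    """
    if len(importance) != len(audibility):
        raise ValueError("importance and audibility must have equal length")
    return sum(i * a for i, a in zip(importance, audibility))


# Hypothetical proportion of speech that is audible in each of five bands,
# ordered from low to high frequency (sloping high-frequency loss).
audibility = [1.0, 1.0, 0.8, 0.5, 0.2]

# Hypothetical "inverted-U" weights, standing in for a normative function.
normative_importance = [0.10, 0.25, 0.30, 0.25, 0.10]

# Hypothetical individual weights shifted toward the better-threshold bands.
individual_importance = [0.30, 0.30, 0.20, 0.15, 0.05]

sii_normative = speech_intelligibility_index(normative_importance, audibility)
sii_individual = speech_intelligibility_index(individual_importance, audibility)
print(f"normative weights:  {sii_normative:.3f}")
print(f"individual weights: {sii_individual:.3f}")
```

With the same audibility profile, the two weightings yield different index values, which is why a fixed normative importance function may misestimate intelligibility for a listener whose weighting has shifted.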

Importantly, the current results indicate that the random combination strategy of determining frequency importance (Bosen and Chatterjee, 2016) is sensitive and accurate enough to identify individual-to-individual differences in frequency weighting due to listener-specific characteristics. This strategy can thus be used to identify the impact on frequency importance of listener factors, such as suprathreshold deficits in auditory processing, as well as of hearing aid parameters, such as amplitude compression or frequency transposition. Future work should focus on examining the specific influences of these possible contributions to frequency importance, as well as the consequences of shifts in frequency importance functions, which may aid in the refinement of hearing aid processing strategies.
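The general idea of the random combination strategy (presenting a random subset of bands on each trial and regressing the score on band presence or absence) can be sketched as follows. This is an illustration under stated assumptions, not the analysis used in the study: ordinary least squares stands in for whatever regression model was actually fitted, the function name `estimate_band_importance` is hypothetical, and the listener is simulated with made-up weights and noise.

```python
import numpy as np


def estimate_band_importance(presence, scores):
    """Regress per-trial scores on band presence (0/1 per band).

    Returns nonnegative band weights normalized to sum to 1.
    """
    presence = np.asarray(presence, dtype=float)
    scores = np.asarray(scores, dtype=float)
    # Intercept column, so each band coefficient reflects only the change
    # in score associated with that band being present.
    design = np.column_stack([np.ones(len(presence)), presence])
    coefs, *_ = np.linalg.lstsq(design, scores, rcond=None)
    weights = np.clip(coefs[1:], 0.0, None)  # drop intercept, floor at zero
    return weights / weights.sum()


# Simulated listener: every value below is hypothetical.
rng = np.random.default_rng(0)
true_weights = np.array([0.30, 0.30, 0.20, 0.15, 0.05])
n_trials = 2000

# Each band is independently present (1) or absent (0) on each trial.
presence = rng.integers(0, 2, size=(n_trials, true_weights.size))

# Proportion-correct score per trial: weighted sum of present bands plus noise.
scores = presence @ true_weights + rng.normal(0.0, 0.1, size=n_trials)

estimated = estimate_band_importance(presence, scores)
print(np.round(estimated, 3))
```

Because each listener contributes a separate presence matrix and score vector, the same fit can be run per individual, which is what allows importance functions to be compared across listeners rather than averaged over a group.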

VI. SUMMARY AND CONCLUSIONS

The current study utilized the random combination strategy of Bosen and Chatterjee (2016) to derive individualized frequency importance functions for listeners with sensorineural hearing impairment. Results from the ten listeners indicate that, under the specific conditions tested here, frequency importance in individuals with sensorineural hearing loss differs considerably from the “inverted-U” shape that is commonly observed in listeners with normal hearing. Furthermore, there is a strong relationship between frequency importance and audiometric thresholds, which appears to be driven by a listener-specific loss of audibility despite the use of clinically appropriate spectral shaping. Differences in frequency importance between listeners with normal and impaired hearing are important to consider for clinical applications. The methods presented here could be used to characterize listener-specific factors, including audibility (as observed here), suprathreshold deficits, and hearing aid processing strategies, that alter the shape of frequency importance functions.

ACKNOWLEDGMENTS

This paper was written with partial support from Utah State University's Office of Research and Graduate Studies, Research Catalyst Grant (awarded to S.E.Y.) and National Institutes of Health, Centers of Biomedical Research Excellence (COBRE) Grant No. NIH-NIGMS/5P20GM109023-05, awarded to A.K.B. We gratefully acknowledge our research assistants S. Long, N. Thiede, and M. Muncy for data collection and manuscript preparation assistance.

Footnotes

See supplementary material at https://doi.org/10.1121/1.5090495 E-JASMAN-145-024902 for the distribution of root-mean-square (rms) level across speech signals relative to each listener's audiogram (supplemental Fig. 1); the regression of relative band importance as a function of inaudible level proportion (supplemental Fig. 2); and a comparison of audiometric thresholds and inaudible level proportions for each band (supplemental Fig. 3).

References

- 1. Arbogast, T. L., Moore, B. C., Puria, S., Dundas, D., Brimacombe, J., Edwards, B., and Levy, S. C. (2018). “Achieved gain and subjective outcomes for a wide-bandwidth contact hearing aid fitted using CAM2,” Ear Hear. (published online 2018). 10.1097/AUD.0000000000000661

- 2. Akaike, H. (1974). “A new look at the statistical model identification,” IEEE Trans. Automat. Contr. 19, 716–723. 10.1109/TAC.1974.1100705

- 4. ANSI (1997). ANSI S3.5 (R2007), American National Standard Methods for the Calculation of the Speech Intelligibility Index (ANSI, New York).

- 5. ANSI (2004). ANSI S3.21 (R2009), American National Standard Methods for Manual Pure-Tone Threshold Audiometry (ANSI, New York).

- 6. Apoux, F., and Healy, E. W. (2012). “Use of a compound approach to derive auditory-filter-wide frequency-importance functions for vowels and consonants,” J. Acoust. Soc. Am. 132, 1078–1087. 10.1121/1.4730905

- 7. Bacon, S. P., Opie, J. M., and Montoya, D. Y. (1998). “The effects of hearing loss and noise masking on the masking release for speech in temporally complex backgrounds,” J. Speech Hear. Res. 41, 549–563. 10.1044/jslhr.4103.549

- 8. Bell, T. S., Dirks, D. D., and Trine, T. D. (1992). “Frequency-importance functions for words in high- and low-context sentences,” J. Speech Hear. Res. 35, 950–959. 10.1044/jshr.3504.950

- 9. Bernstein, J. G. W., Summers, V., Grassi, E., and Grant, K. W. (2013). “Auditory models of suprathreshold distortion and speech intelligibility in persons with impaired hearing,” J. Am. Acad. Audiol. 24, 307–328. 10.3766/jaaa.24.4.6

- 10. Bosen, A. K., and Chatterjee, M. (2016). “Band importance functions of listeners with cochlear implants using clinical maps,” J. Acoust. Soc. Am. 140, 3718–3727. 10.1121/1.4967298

- 11. Byrne, D., and Dillon, H. (1986). “The National Acoustic Laboratories' (NAL) new procedure for selecting the gain and frequency response of a hearing aid,” Ear Hear. 7, 257–265. 10.1097/00003446-198608000-00007

- 12. Ching, T. Y., Dillon, H., and Byrne, D. (1998). “Speech recognition of hearing-impaired listeners: Predictions from audibility and the limited role of high-frequency amplification,” J. Acoust. Soc. Am. 103, 1128–1140. 10.1121/1.421224

- 13. Ching, T. Y., Dillon, H., Katsch, R., and Byrne, D. (2001). “Maximizing effective audibility in hearing aid fitting,” Ear Hear. 22, 212–224. 10.1097/00003446-200106000-00005

- 14. Davies-Venn, E., Nelson, P., and Souza, P. (2015). “Comparing auditory filter bandwidths, spectral ripple modulation detection, spectral ripple discrimination, and speech recognition: Normal and impaired hearing,” J. Acoust. Soc. Am. 138, 492–503. 10.1121/1.4922700

- 15. Doherty, K. A., and Turner, C. W. (1996). “Use of a correlational method to estimate a listener's weighting function for speech,” J. Acoust. Soc. Am. 100, 3769–3773. 10.1121/1.417336

- 16. Dubno, J. R., Dirks, D. D., and Schaefer, A. B. (1989). “Stop-consonant recognition for normal-hearing listeners and listeners with high-frequency hearing loss. II: Articulation index predictions,” J. Acoust. Soc. Am. 85, 355–364. 10.1121/1.397687

- 17. Dugal, R. L., Braida, L. D., and Durlach, N. I. (1980). “Implications of previous research for the selection of frequency-gain characteristics,” in Acoustical Factors Affecting Hearing Aid Performance (Brown University Press, Providence, RI), pp. 379–403.

- 18. Duggirala, V., Studebaker, G. A., Pavlovic, C. V., and Sherbecoe, R. L. (1988). “Frequency importance functions for a feature recognition test material,” J. Acoust. Soc. Am. 83, 2372–2382. 10.1121/1.396316

- 19. Fei, J., Lei, L., Su-Ping, Z., Ke-Fang, L., Qi-You, Z., and Shi-Ming, Y. (2011). “An investigation into hearing loss among patients of 50 years or older,” J. Otol. 6, 44–49. 10.1016/S1672-2930(11)50008-X

- 20. Festen, J. M., and Plomp, R. (1990). “Effects of fluctuating noise and interfering speech on the speech-reception threshold for impaired and normal hearing,” J. Acoust. Soc. Am. 88, 1725–1736. 10.1121/1.400247

- 21. Fletcher, H. (1952). “The perception of speech sounds by deafened persons,” J. Acoust. Soc. Am. 24, 490–497. 10.1121/1.1906926

- 22. French, N. R., and Steinberg, J. C. (1947). “Factors governing the intelligibility of speech sounds,” J. Acoust. Soc. Am. 19, 90–119. 10.1121/1.1916407

- 23. Glasberg, B. R., and Moore, B. C. (1986). “Auditory filter shapes in subjects with unilateral and bilateral cochlear impairments,” J. Acoust. Soc. Am. 79, 1020–1033. 10.1121/1.393374

- 24. Healy, E. W., Yoho, S. E., and Apoux, F. (2013). “Band importance for sentences and words reexamined,” J. Acoust. Soc. Am. 133, 463–473. 10.1121/1.4770246

- 25. Humes, L. E. (2007). “The contributions of audibility and cognitive factors to the benefit provided by amplified speech to older adults,” J. Am. Acad. Audiol. 18, 590–603. 10.3766/jaaa.18.7.6

- 26. IEEE (1969). “IEEE recommended practice for speech quality measurements,” IEEE Trans. Audio Electroacoust. 17, 225–246. 10.1109/TAU.1969.1162058

- 27. Kasturi, K., Loizou, P. C., Dorman, M., and Spahr, T. (2002). “The intelligibility of speech with ‘holes’ in the spectrum,” J. Acoust. Soc. Am. 112, 1102–1111. 10.1121/1.1498855

- 28. Ludvigsen, C. (1987). “Prediction of speech intelligibility for normal-hearing and cochlearly hearing-impaired listeners,” J. Acoust. Soc. Am. 82, 1162–1171. 10.1121/1.395252

- 29. Mehraei, G., Gallun, F. J., Leek, M. R., and Bernstein, J. G. (2014). “Spectrotemporal modulation sensitivity for hearing-impaired listeners: Dependence on carrier center frequency and the relationship to speech intelligibility,” J. Acoust. Soc. Am. 136, 301–316. 10.1121/1.4881918

- 30. Molis, M. R., and Summers, V. (2003). “Effects of high presentation levels on recognition of low- and high-frequency speech,” Acoust. Res. Lett. Onl. 4, 124–128. 10.1121/1.1605151

- 31. Moore, B. C. (1985). “Frequency selectivity and temporal resolution in normal and hearing-impaired listeners,” Brit. J. Audiol. 19, 189–201. 10.3109/03005368509078973

- 32. Moore, B. C. J. (1996). “Perceptual consequences of cochlear hearing loss and their implications for the design of hearing aids,” Ear Hear. 17, 133–161. 10.1097/00003446-199604000-00007

- 33. Moore, B. C. (2007). Cochlear Hearing Loss, Physiological, Psychological, and Technical Issues, 2nd Ed. (Wiley, New York, 2007).

- 34. Pavlovic, C. V., Studebaker, G. A., and Sherbecoe, R. L. (1986). “An articulation index based procedure for predicting the speech recognition performance of hearing-impaired individuals,” J. Acoust. Soc. Am. 80, 50–57. 10.1121/1.394082

- 35. Shen, Y., and Kern, A. B. (2018). “An analysis of individual differences in recognizing monosyllabic words under the speech intelligibility index framework,” Trends Hear. 22, 1–14. 10.1177/2331216518761773

- 36. Speaks, C., Karmen, J. L., and Benitez, L. (1967). “Effect of a competing message on synthetic sentence identification,” J. Speech Hear. Res. 10, 390–395. 10.1044/jshr.1002.390

- 37. Studebaker, G. A., and Sherbecoe, R. L. (1991). “Frequency-importance and transfer functions for recorded CID W-22 word lists,” J. Speech Hear. Res. 34, 427–438. 10.1044/jshr.3402.427

- 38. Studebaker, G. A., Sherbecoe, R. L., and Gilmore, C. (1993). “Frequency-importance and transfer functions for the Auditec of St. Louis recordings of the NU-6 word test,” J. Speech Hear. Res. 36, 799–807. 10.1044/jshr.3604.799

- 39. Studebaker, G. A., Sherbecoe, R. L., McDaniel, D. M., and Gwaltney, C. A. (1999). “Monosyllabic word recognition at higher-than-normal speech and noise levels,” J. Acoust. Soc. Am. 105, 2431–2444. 10.1121/1.426848

- 40. Summers, V., and Cord, M. T. (2007). “Intelligibility of speech in noise at high presentation levels: Effects of hearing loss and frequency region,” J. Acoust. Soc. Am. 122, 1130–1137. 10.1121/1.2751251

- 41. ter Keurs, M., Festen, J. M., and Plomp, R. (1992). “Effect of spectral envelope smearing on speech reception. I,” J. Acoust. Soc. Am. 91, 2872–2880. 10.1121/1.402950

- 42. Whitmal, N. A., III, DeMaio, D., and Lin, R. (2015). “Effects of envelope bandwidth on importance functions for cochlear implant simulations,” J. Acoust. Soc. Am. 137(2), 733–744. 10.1121/1.4906260

- 43. Yoho, S. E., Apoux, F., and Healy, E. W. (2018a). “The noise susceptibility of various speech bands,” J. Acoust. Soc. Am. 143, 2527–2534. 10.1121/1.5034172

- 44. Yoho, S. E., Healy, E. W., Youngdahl, C. L., Barrett, T. S., and Apoux, F. (2018b). “Speech-material and talker effects in speech band importance,” J. Acoust. Soc. Am. 143, 1417–1426. 10.1121/1.5026787