Abstract

Objective:

Little is known about the factors that affect the implementation of performance measurement systems, particularly in low- and middle-income countries (LMICs). To address this gap, we describe the implementation of a performance measurement system for South Africa’s substance abuse treatment services known as the Service Quality Measures (SQM) initiative.

Method:

We conducted a mixed-methods evaluation of system implementation. We surveyed 81 providers about the extent of system implementation within their agencies and the factors that facilitated implementation. We also conducted 26 in-depth interviews about providers’ perceived barriers and facilitators to implementation.

Results:

The overall penetration of this system was high. Almost all providers viewed the system as feasible to implement, acceptable, appropriate for use in their context, and useful for guiding service improvements. However, the extent of implementation varied significantly across sites (p < .05). Leadership support (p < .05) was associated with increased implementation in multivariable analyses. Providers reflected that high rates of patient attrition, variability in willingness to implement the system, and limited capacity for interpreting performance feedback affected the extent of system implementation.

Conclusions:

It is feasible to implement a performance measurement system in LMICs if the system is acceptable, appropriate, and useful to providers. To ensure the utility of this system for treatment service strengthening, system implementation must be optimized. Efforts to enhance target population coverage, strengthen leadership support for performance measurement, and build capacity for performance feedback utilization may enhance the implementation of this performance measurement system.


In South Africa, there is a high prevalence of substance use disorders (SUDs; Herman et al., 2009), yet less than 5% of people ever receive treatment for their SUD (Myers et al., 2010, 2014a). Drivers of this treatment gap include the limited availability of SUD treatment in relation to need, geographic access barriers, and patient concerns about treatment quality that influence decisions about whether to seek care (Myers et al., 2010, 2016a). Given the high burden of disease associated with untreated SUDs (Bradshaw et al., 2007) and few opportunities for treatment, there is a public health imperative to ensure that available SUD services are effective and of an acceptable quality.

A better understanding of the quality of South African SUD services is needed to inform interventions to optimize treatment outcomes. In high-income countries, performance measurement systems that collect data on a standardized set of treatment indicators guide the design and evaluation of quality improvement initiatives (Ferri & Griffiths, 2015; Garnick et al., 2012). Most of these systems use process data extracted from administrative databases for this purpose, with few collecting outcome data (Garnick et al., 2012; Harris et al., 2009). South Africa, like most other low- and middle-income countries (LMICs), has lacked a system for monitoring SUD treatment quality. To address this gap, we developed a performance measurement system for South Africa’s SUD treatment system known as the Service Quality Measures (SQM) initiative. The development and initial implementation of this system are described elsewhere (Myers et al., 2015, 2016b).

We developed a system tailored to the South African treatment system because of contextual and resource differences between South Africa and high-income settings. Compared with the United States, South Africa has considerably fewer health and SUD providers and facilities per 100,000 patient population (Connell et al., 2007; Pasche et al., 2015), providers have higher caseloads and less administrative support (Magidson et al., 2017), and there are no administrative databases that can be used for SUD treatment monitoring (Myers et al., 2014b). The SQM system is comparable to performance measurement systems used within high-income settings in that it assesses the effectiveness of, efficiency of, access to, person-centeredness of, and quality of treatment (Garnick et al., 2012; Institute of Medicine, 2005). However, instead of using only one data source, the SQM system collects both patient-reported outcome data and process data extracted from administrative records. Consistent with principles of person-centered treatment (Greenhalgh, 2009), patients therefore participate in evaluating the services they receive.

For the SQM and other performance measurement systems to generate useful data, system implementation must be adequate. In high-income settings, the extent to which SUD providers implement performance measurement systems varies considerably (Herbeck et al., 2010; Kilbourne et al., 2010). This raises questions about the feasibility of implementing such a system in a less-resourced setting in which providers already have heavy administrative burdens (Magidson et al., 2017). To improve the extent of system implementation, a broad range of potential facilitators to implementation must be identified and enhanced. Yet, the few studies that have examined barriers and facilitators to the implementation of SUD performance measurement systems have focused narrowly on organizational barriers (Kilbourne et al., 2010; Wisdom et al., 2006). Although largely unexplored, system attributes may also influence the likelihood of implementation. For instance, health innovations that are compatible with existing practices and needs, require limited resources, and show observable benefits appear more likely to be implemented than innovations without these features (Rogers, 2003). To address this gap, we used mixed methods to evaluate the implementation of the SQM performance measurement system in South African SUD treatment services. This article describes (a) the extent to which the SQM system was implemented and (b) organizational and system barriers and facilitators to implementation.

Method

Late in 2014, the SQM system was implemented by 10 SUD treatment agencies (7 outpatient, 3 residential) in the Western Cape, with most outpatient agencies comprising multiple service sites. At the time of implementation, these agencies collectively accounted for 82% of the 6,968 SUD treatment slots in the province. These agencies were purposively chosen as initial implementers because they treated a minimum of five adult patients (≥18 years of age) per month. All agencies that were approached agreed to implement the system.

During the implementation process, intake workers at participating agencies completed a treatment admission form that collects sociodemographic and substance use history information on each adult patient. After 3–4 weeks of treatment, patients then completed a survey on their perceptions of the quality and outcomes of treatment. Finally, each patient’s caseworker completed a standardized discharge form (that includes information on patient engagement, retention, and response to treatment) after the patient left the program.

Between January and March 2015, we evaluated the initial implementation of the SQM system by conducting a provider survey of system implementation along with in-depth qualitative interviews (IDIs) about providers’ implementation experiences. This allowed us to quantify the extent of SQM system implementation while providing a detailed account of implementation barriers and facilitators. The South African Medical Research Council’s Ethics Committee approved the evaluation (EC 001-2/2014).

Provider survey

Population and procedures.

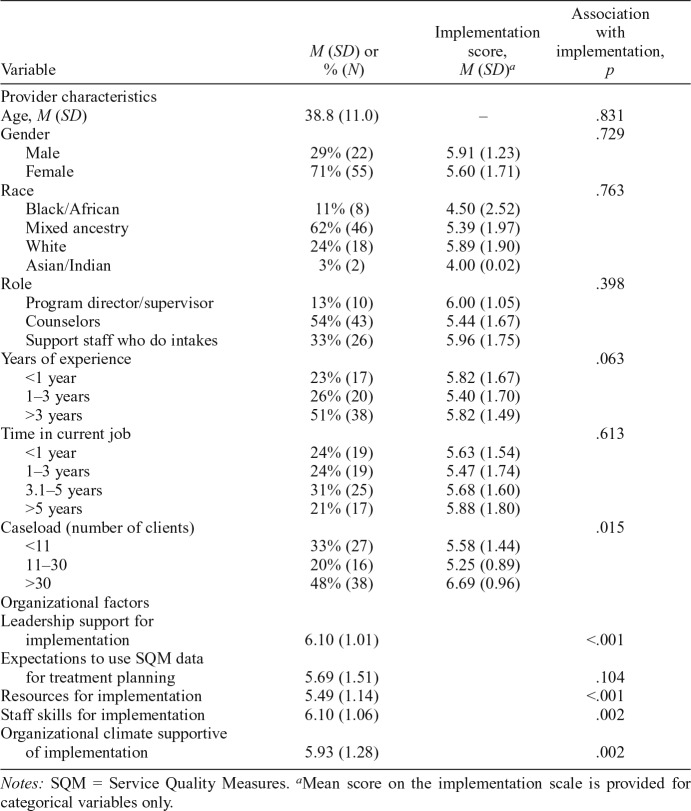

At each treatment agency in which the SQM system was implemented, the staff members responsible for implementation were asked to complete a brief questionnaire. At the 22 treatment sites represented by these 10 agencies, we purposefully approached three to eight staff members to reflect the diverse set of roles involved in implementation (see Table 1 for a description of the agencies). None of the 100 providers approached refused to participate in this study. Participants gave written informed consent before self-completing the questionnaire. Participants were provided with an instruction guide that defined the core concepts referred to in the questionnaire and described how to use the rating scales. A member of the implementation team collected the completed questionnaires 1 month after distributing them for completion.

Table 1.

Description of implementation sites and their participation in the evaluation

| Agency | No. of treatment sites | Intensity of treatment | Annual no. of patients | Average no. of staff<sup>a</sup> |
|---|---|---|---|---|
| 1 | 1 | Residential | 98 | 10 |
| 2 | 1 | Residential | 430 | 17 |
| 3 | 1 | Residential | 418 | 18 |
| 4 | 3 | Outpatient | 627 | 16 |
| 5 | 6 | Outpatient | 1,254 | 24 |
| 6 | 5 | Outpatient | 1,603 | 15 |
| 7 | 2 | Outpatient | 906 | 13 |
| 8 | 1 | Outpatient | 70 | 4 |
| 9 | 1 | Outpatient | 70 | 4 |
| 10 | 1 | Outpatient | 198 | 9 |

Notes: No. = number.

<sup>a</sup>Refers to the total number of full- and part-time personnel working at the facility, irrespective of designation. Not all are responsible for implementing the SQM system.

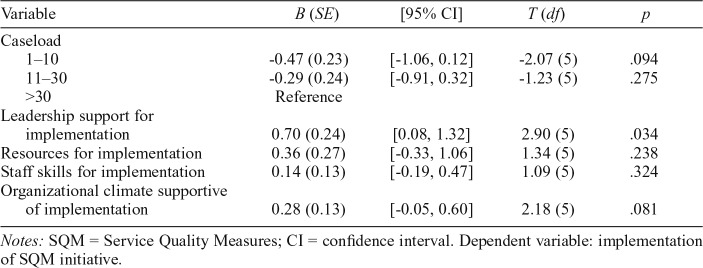

Nineteen providers did not return a completed questionnaire despite reminders. The final sample comprised 81 participants (of whom 71% were women), including program directors (n = 7), clinical supervisors (n = 3), counselors (n = 43), and administrators responsible for treatment intakes (n = 26). Fifty-one percent of the staff members had worked in substance abuse services for at least 3 years. Almost a third (30%) had more than 30 patients on their caseload (Table 2).

Table 2.

Individual-level factors and relationship with implementation of the SQM initiative (n = 81)

| Variable | M (SD) or % (N) | Implementation score, M (SD)<sup>a</sup> | Association with implementation, p |
|---|---|---|---|
| Provider characteristics | | | |
| Age, M (SD) | 38.8 (11.0) | – | .831 |
| Gender | | | .729 |
| Male | 29% (22) | 5.91 (1.23) | |
| Female | 71% (55) | 5.60 (1.71) | |
| Race | | | .763 |
| Black/African | 11% (8) | 4.50 (2.52) | |
| Mixed ancestry | 62% (46) | 5.39 (1.97) | |
| White | 24% (18) | 5.89 (1.90) | |
| Asian/Indian | 3% (2) | 4.00 (0.02) | |
| Role | | | .398 |
| Program director/supervisor | 13% (10) | 6.00 (1.05) | |
| Counselors | 54% (43) | 5.44 (1.67) | |
| Support staff who do intakes | 33% (26) | 5.96 (1.75) | |
| Years of experience | | | .063 |
| <1 year | 23% (17) | 5.82 (1.67) | |
| 1–3 years | 26% (20) | 5.40 (1.70) | |
| >3 years | 51% (38) | 5.82 (1.49) | |
| Time in current job | | | .613 |
| <1 year | 24% (19) | 5.63 (1.54) | |
| 1–3 years | 24% (19) | 5.47 (1.74) | |
| 3.1–5 years | 31% (25) | 5.68 (1.60) | |
| >5 years | 21% (17) | 5.88 (1.80) | |
| Caseload (number of clients) | | | .015 |
| <11 | 33% (27) | 5.58 (1.44) | |
| 11–30 | 20% (16) | 5.25 (0.89) | |
| >30 | 48% (38) | 6.69 (0.96) | |
| Organizational factors | | | |
| Leadership support for implementation | 6.10 (1.01) | – | <.001 |
| Expectations to use SQM data for treatment planning | 5.69 (1.51) | – | .104 |
| Resources for implementation | 5.49 (1.14) | – | <.001 |
| Staff skills for implementation | 6.10 (1.06) | – | .002 |
| Organizational climate supportive of implementation | 5.93 (1.28) | – | .002 |

Notes: SQM = Service Quality Measures.

<sup>a</sup>Mean score on the implementation scale is provided for categorical variables only.

Post-implementation questionnaire.

A work group within the SQM initiative’s national steering committee developed a brief questionnaire to assess the extent to which system implementation had occurred at treatment agencies. Guided by the implementation science literature (Aarons et al., 2011; Rogers, 2003; Stamatakis et al., 2012), the work group identified five domains potentially associated with implementation (leadership, organizational climate, resources, staff skills, system utility), and developed an indicator for each domain and measurement specifications for each indicator. The final questionnaire comprised 12 items grouped into three sections.

(A) IMPLEMENTATION: Participants used a 7-point scale to rate the extent to which the SQM system was implemented by their organization. Responses ranged from 1 (not at all) to 7 (completely). This was the primary outcome variable.

(B) PROVIDER CHARACTERISTICS: Items assessed providers’ age, gender, race, job role (program director, clinical supervisor, counselor, or support staff), years of experience (<1 year; 1–3 years; >3 years), time in current job (<1 year; 1–3 years; >3 and <5 years; >5 years), and number of patients on caseload (1–10; 11–30; >30).

(C) IMPLEMENTATION FACILITATORS: Participants used a 7-point scale to rate the extent to which their facility had sufficient resources to implement the SQM system. Similarly, 7-point scales rated the extent to which their agency’s (a) leadership and (b) organizational climate supported system implementation. Responses for each of these scales ranged from 1 (strongly disagree) to 7 (strongly agree). Participants also used 7-point scales to rate the extent to which they (a) were expected to use SQM data to guide treatment planning and (b) thought that the staff had the necessary skills and training to implement the system. Responses for these two scales ranged from 1 (not at all) to 7 (completely).

Analysis.

Data were analyzed using IBM SPSS Statistics for Windows, Version 25 (IBM Corp., Armonk, NY). First, we generated descriptive statistics for all provider, organization, and implementation variables. We used analysis of variance procedures to explore possible differences in the extent to which agencies implemented the system. Next, using the complex samples general linear model function, we conducted simple and multiple linear regression analyses to examine unadjusted and adjusted associations between provider characteristics, potential organizational facilitators of implementation, and the extent of implementation while controlling for the clustering of responses within treatment agencies. Only variables significantly associated with the extent of implementation in the simple regression analyses entered the multivariable model.
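As an illustration of the between-agency comparison described above, the following minimal sketch computes a one-way analysis of variance F statistic by hand. It is not the SPSS procedure used in the evaluation and does not adjust for clustering; the function name `one_way_anova_f` and the scores are hypothetical and chosen only to show the arithmetic.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA.

    `groups` is a list of lists, one list of scores per agency.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups and more scores than groups")

    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [sum(g) / len(g) for g in groups]

    # Variation of the agency means around the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Variation of individual scores around their own agency mean
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical 7-point implementation ratings from three agencies (not study data)
hypothetical_scores = [
    [6, 7, 6, 7],
    [4, 5, 4, 5],
    [6, 6, 7, 5],
]
f_stat, df_b, df_w = one_way_anova_f(hypothetical_scores)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

With 10 agencies, the between-groups degrees of freedom in such a comparison would be 10 − 1 = 9.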

In-depth interviews

Population and procedures.

We conducted IDIs with providers from the three residential and seven outpatient agencies implementing the SQM system. Treatment managers identified staff members from each agency to participate in the IDIs. To be eligible, participants had to have direct experience with implementing the SQM system. Thirty providers were approached for an interview, of whom 26 were interviewed (Table 1). The four providers who were not interviewed did not differ from those who were interviewed. The final sample included program directors and managers (n = 5), addiction counselors (n = 6), social workers (n = 10), psychologists (n = 2), and administrators responsible for treatment intakes (n = 3). These participants were mainly (77%) women.

All IDIs were conducted between January and April 2015. Written informed consent was obtained before the interview, which occurred in a private room at the treatment facility and lasted approximately 30 minutes. An experienced qualitative interviewer, independent from the implementation process, conducted the interviews. A semi-structured interview guide facilitated discussions around experiences with system implementation, system features that facilitated or hindered implementation, and the use of system findings for quality improvement activities. Interviews were audio-recorded and transcribed verbatim.

Analysis.

We used the Framework Method (Ritchie et al., 2003) for qualitative data analysis. This involves data familiarization, identifying a thematic framework, indexing, charting or mapping, and interpretation. Two researchers read the transcripts for emergent themes and then independently coded two transcripts before meeting to compare codes and to agree on a coding list. Next, the researchers independently coded the remaining transcripts, meeting regularly to compare notes. Coding discrepancies were resolved by discussion. Saturation of content was reached after coding half the transcripts. Inter-coder reliability checks yielded a kappa score of .81. We used NVivo 10.0, a qualitative software program, to aid data analysis.
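For readers unfamiliar with the kappa statistic, the sketch below shows how agreement between two coders can be summarized, assuming Cohen's kappa for two raters (the text above reports only "a kappa score"). The function name `cohens_kappa`, the theme labels, and the coded segments are hypothetical and are not the study data.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who labeled the same segments."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same non-empty set of segments")
    n = len(coder_a)

    # Proportion of segments on which the two coders agree
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n

    # Agreement expected by chance, from each coder's marginal label frequencies
    counts_a = Counter(coder_a)
    counts_b = Counter(coder_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)

    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)


# Hypothetical codes assigned to ten transcript segments (not study data)
coder_1 = ["utility", "utility", "utility", "leadership", "leadership",
           "leadership", "attrition", "attrition", "simplicity", "simplicity"]
coder_2 = ["utility", "utility", "utility", "leadership", "leadership",
           "attrition", "attrition", "attrition", "simplicity", "simplicity"]

example_kappa = cohens_kappa(coder_1, coder_2)
print(f"kappa = {example_kappa:.2f}")
```

Kappa corrects raw agreement for the agreement expected by chance, so it is lower than the simple proportion of matching codes (here 9 of 10 segments).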

Results

Extent of system implementation

On average, there were high rates of system implementation at participating agencies. A mean score of 5.88 (SD = 1.28) was obtained on this indicator, with scores ranging between 2 and 7. Forty-two percent of the sample had scores at the upper range of the indicator, suggesting a possible ceiling effect. The extent to which agencies implemented the system differed, F(9) = 2.97, p = .038. From a total target population of 664 adult patients in the 2014/2015 core implementation period, we obtained 489 provider-completed discharge forms (a 74% response rate) and 405 patient surveys (a 61% response rate). Response rates for these tools varied across agencies, χ²(9) = 73.95, p < .0001, for the discharge form, and χ²(9) = 67.38, p < .0001, for the patient survey. Variation in agency response rates was generally aligned with differences in agency implementation scores: the lowest and highest scoring agencies also had the lowest and highest response rates, respectively.
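The response rates reported above follow directly from the counts given in the text. A minimal sketch of the arithmetic, in which the helper name `response_rate` is an assumption introduced for illustration:

```python
def response_rate(completed: int, target: int) -> float:
    """Return completed forms as a percentage of the target population."""
    if target <= 0:
        raise ValueError("target population must be positive")
    return 100.0 * completed / target


TARGET_PATIENTS = 664  # adult patients in the 2014/2015 core implementation period

discharge_rate = response_rate(489, TARGET_PATIENTS)  # provider-completed discharge forms
survey_rate = response_rate(405, TARGET_PATIENTS)     # patient surveys

print(f"Discharge forms: {discharge_rate:.0f}%")  # prints "Discharge forms: 74%"
print(f"Patient surveys: {survey_rate:.0f}%")     # prints "Patient surveys: 61%"
```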

Factors associated with greater implementation

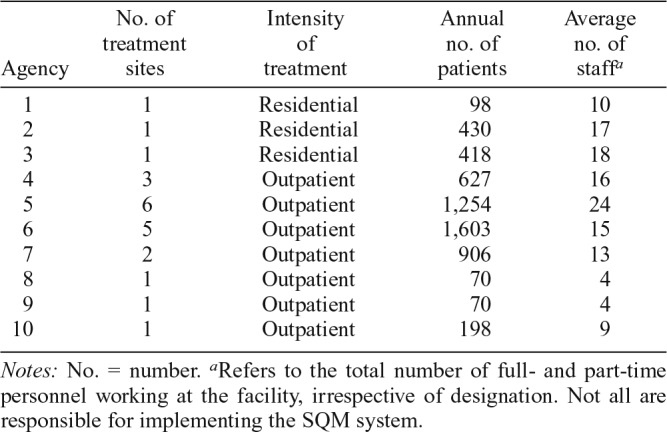

Caseload was the only provider characteristic associated with system implementation during simple regression analyses, F(2, 70) = 14.49, p = .015. Organization-level variables associated with implementation were leadership support, F(5, 70) = 70.61, p < .0001; organizational climate, F(5, 77) = 34.11, p = .002; staff skills, F(5, 77) = 37.09, p = .002; and resources for implementation, F(5, 77) = 100.41, p < .0001 (Table 2). In the multivariable model, only leadership support, F(5, 70) = 8.42, p = .034, remained significantly associated with SQM system implementation (Table 3).

Table 3.

General linear regression model of SQM system implementation

| Variable | B (SE) | 95% CI | t (df) | p |
|---|---|---|---|---|
| Caseload | | | | |
| 1–10 | -0.47 (0.23) | [-1.06, 0.12] | -2.07 (5) | .094 |
| 11–30 | -0.29 (0.24) | [-0.91, 0.32] | -1.23 (5) | .275 |
| >30 | Reference | | | |
| Leadership support for implementation | 0.70 (0.24) | [0.08, 1.32] | 2.90 (5) | .034 |
| Resources for implementation | 0.36 (0.27) | [-0.33, 1.06] | 1.34 (5) | .238 |
| Staff skills for implementation | 0.14 (0.13) | [-0.19, 0.47] | 1.09 (5) | .324 |
| Organizational climate supportive of implementation | 0.28 (0.13) | [-0.05, 0.60] | 2.18 (5) | .081 |

Notes: SQM = Service Quality Measures; CI = confidence interval. Dependent variable: implementation of SQM initiative.
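To help readers interpret Table 3, the sketch below shows how a coefficient, its standard error, and 5 degrees of freedom relate to a 95% confidence interval. It assumes the standard t-based interval (B ± t × SE), which the article does not state explicitly; the function name `t_interval` is hypothetical. Because the table reports rounded values, recomputed bounds can differ from the published ones in the second decimal place.

```python
# Two-sided 95% critical value of Student's t distribution with 5 degrees of freedom
T_CRIT_DF5 = 2.571


def t_interval(b: float, se: float, t_crit: float = T_CRIT_DF5):
    """Return (lower, upper) bounds of the interval b ± t_crit * se."""
    if se <= 0:
        raise ValueError("standard error must be positive")
    margin = t_crit * se
    return b - margin, b + margin


# Leadership support for implementation: B = 0.70, SE = 0.24 (Table 3)
low, high = t_interval(0.70, 0.24)
print(f"95% CI: [{low:.2f}, {high:.2f}]")
```

For leadership support, the recomputed interval excludes zero, consistent with the reported p = .034.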

Providers’ perceptions of system implementation

Participants identified three system attributes thought to facilitate implementation: perceived utility of the system for improving treatment quality, system compatibility with current practices, and simplicity of implementation. Providers’ belief that the SQM system benefited their facility was the strongest driver of implementation, overriding their initial concerns that the SQM system would create additional work for overburdened clinical staff:

“Well, we are always running against time we thought, oh heck, a little bit more to do. It wasn’t easily received. But then we realized how relevant the information was . . . and we knew it was going to be beneficial.” [Residential Provider 4]

Most participants thought the system was beneficial, as it provided them with new information on patients’ treatment needs and responses that they could use for service evaluation and planning.

“The participation from them [the patients] is really helpful and with that we now know where our patients are and how to take it further.” [Residential Provider 3]

“It’s one thing for us to say that we do well and it’s another thing when someone from outside evaluates our work. . . . and to see that people are happy with the services that we give them.” [Outpatient Provider 10]

Several participants wished to continue implementing the SQM system because the performance feedback they received was “useful” for informing treatment strengthening efforts. Some participants described using their agency’s performance feedback to improve services. For example, a residential agency began offering social support groups for patients after performing poorly on a social connectedness indicator. They noted how they “were able to improve service delivery.” Similarly, an outpatient agency started transporting patients to treatment after performing poorly on an access indicator. They noted how they “used the feedback to assist with improving how things are done at the centre.”

Although some participants were able to use their agency’s performance feedback to adapt services, several struggled to interpret the feedback they received and therefore were unable to use findings to shape quality improvement activities. These participants wanted more training from the SQM team in how to interpret their performance feedback. A few participants also requested quarterly rather than annual feedback so that they could rapidly assess whether their efforts to improve service quality had been successful.

Participants also thought that the SQM system’s compatibility with their agencies’ vision and values facilitated implementation. Several commented that the SQM system supported their agency’s vision of providing “quality services to substance users” and was congruent with their treatment processes. The implementation of this system therefore did not “require a big adjustment to service provision.” As one outpatient provider [15] remarked: “It kind of fitted quite easily into our program . . . it was not a huge adjustment to what we were doing.”

Furthermore, the relative simplicity of the system seemed to facilitate implementation. Most participants described the SQM system as “easy to implement” and the implementation process as “uncomplicated and understandable.” Participants noted that this limited administrative burden, with implementation “not taking up a lot of [staff] time.” A few providers thought it possible to further limit the resource burden associated with system implementation by transitioning from a paper-based to an electronic system. According to participants, the availability of implementation supports, in the form of monthly telephone calls and an implementation tool kit, enhanced the simplicity of implementation:

“Whenever we were uncertain we could ask someone, and we would get feedback immediately. So then, you were able to continue with what you were doing.” [Outpatient Provider 7]

In addition to these three system attributes, participants noted how agency leadership played an important role in system implementation. Participants indicated that leadership style influenced providers’ openness to system implementation. They provided this as a potential reason for lower than expected completion rates of the staff-completed administrative indicators. They thought that a participatory leadership style that consulted staff members and informed them about the potential benefits of performance measurement would improve providers’ motivation to implement the system:

“Explain to them that this system is important and that it will give feedback in terms of the service that they’re providing . . . how they can improve as service providers.” [Outpatient Provider 16]

“If they can see how the information obtained was usable to other treatment centers and how [they use the information] to improve their services.” [Outpatient Provider 4]

Participants also noted how high rates of patient attrition from outpatient treatment affected their ability to implement the SQM system as intended. Providers did not have the resources to track patients once they had dropped out of treatment, and this resulted in low response rates for the patient survey component of the SQM system. This issue was not raised by residential providers. As one outpatient provider [3] stated: “It’s difficult to get them to actually come in here (once they have dropped out). You can get them on the phone, you speak to them. Then they will say, ‘I’m working . . . I can’t come in.’”

Discussion

Despite global initiatives to strengthen SUD treatment systems in LMICs (United Nations Office on Drugs and Crime, 2016) and increasing interest in the use of performance measurement systems to monitor system strengthening efforts (Ferri & Griffiths, 2015), few LMICs have implemented systems to assess the quality of SUD treatment. This is partly because the system attributes and other factors required to ensure successful implementation of these systems in severely resource-constrained treatment systems are unknown. To address this gap, we evaluated the implementation of the SQM system in South Africa. Findings show (a) good penetration of the SQM system across treatment agencies, although the degree of implementation varied; (b) leadership, resources, and skills within agencies influenced the extent of system implementation; and (c) core attributes of the SQM system facilitated implementation.

We found relatively high levels of SQM system penetration across participating treatment agencies, providing the first evidence that it is feasible to implement a performance measurement system for SUD treatment services in a resource-limited setting in which the workforce is less skilled and more burdened than in high-income settings (Magidson et al., 2017; Pasche et al., 2015). As in high-income countries (Herbeck et al., 2010), agencies varied in the extent of SQM system implementation. This is worrisome, as performance data from agencies with low rates of system implementation may not adequately reflect the quality of care. To ensure that data produced by the SQM system are useful for treatment improvement purposes, every effort should be made to optimize system implementation.

Our findings suggest that enhancing leadership support and staff capacity for performance measurement may improve the extent of system implementation within SUD treatment agencies. In keeping with the implementation science literature (Fixsen et al., 2005), our qualitative findings suggest that a participatory leadership approach to introducing a performance measurement system into treatment services may increase staff members’ willingness and motivation to implement the system. When agency leaders adopt a consultative approach to system implementation, providers are more likely to feel like active partners in their agency’s efforts to provide quality care. Findings also show that providers who have limited skills for interpreting data struggle to use their performance feedback for quality improvement purposes. As providers are unlikely to implement this system if they cannot use their findings (Braa et al., 2012), this is a potentially important barrier to the continued implementation of this system. To address this possible challenge, we are planning a series of workshops to build provider capacity and a shared framework for using performance feedback to guide service improvement efforts.

Improving target population coverage so that the feedback generated from this system is representative of patients’ experiences is also key to system implementation. As in other studies (Gouse et al., 2016), we noted high rates of attrition from outpatient treatment. Because this attrition occurred mainly during the first 2 weeks of treatment, before the patient survey is administered at 3–4 weeks, it affected the completion of the patient survey component of the SQM system. As limited patient coverage probably biases the system toward patients with better experiences of treatment, we need to find ways of enhancing patient participation. One possibility is to transition the SQM system from pen-and-paper to a mobile technology platform, thereby permitting remote completion of the survey. This may assist in obtaining feedback from patients who are unable or unwilling to continue with treatment. Additional benefits of a digital system would be automated and expedited data capture, potentially enabling agencies to obtain more regular performance feedback. Although there is excellent penetration of mobile technology in South Africa and many other LMICs (Pew Research Centre, 2016) and mobile health applications appear acceptable to South African patients (Nachega et al., 2016), the extent to which patients with SUDs have access to mobile technology is unknown. Future research should consider examining the feasibility and acceptability of implementing a mobile version of this system.

Notwithstanding some challenges, providers viewed the SQM system as acceptable, appropriate, and useful for guiding treatment improvements, which seemed to facilitate system implementation. Providers described several benefits of using this system—both for patient care and for treatment agency reputation. This reflects high system acceptability, particularly as the perceived benefits of implementing this system seemed to outweigh any implementation concerns. Providers also seemed to view the SQM system as appropriate for their treatment settings, describing it as compatible with existing treatment processes and simple to implement. The importance that providers place on these system attributes is not surprising, given the resource-constrained nature of these treatment settings (Pasche et al., 2015). Finally, providers’ perceptions of the usefulness of their performance feedback increased their motivation to implement the system. They gave concrete examples of how they had used their agency’s performance feedback to make program improvements, noting that the usefulness of this feedback encouraged them to continue implementation. The participatory approach used for SQM system development, which involved extensive stakeholder consultation and tailoring of the system to the needs and constraints of the treatment community (Myers et al., 2015), probably enhanced providers’ perceptions of the acceptability, appropriateness, and usefulness of this system for treatment service strengthening. In other LMICs, this participatory approach to system development may yield similar benefits.

Several limitations should be considered. First, SQM system implementation was limited to treatment agencies in the Western Cape, and findings may not be generalizable to agencies located in other provinces. In other provinces, fluency in English may be more variable among providers, and this could affect the extent to which system implementation protocols (which are available only in English) are understood and implemented as intended. Although we are confident that SUD treatment services are similar across the provinces, the expansion of the SQM initiative to the rest of the country will require ongoing evaluation to ensure it still meets the needs of the treatment community. Second, because we did not randomly recruit treatment staff to complete the survey, there is a possibility of selection bias, particularly given the relatively small sample size. Third, the scale we used to assess system implementation was not validated. Although findings on this scale mainly aligned with the SQM response rates of treatment agencies, this measure must be validated for future implementation research. Despite these limitations, our use of mixed methods allowed us to triangulate findings, which increased our confidence in the evaluation results.

In conclusion, this study is among the first to demonstrate that it is feasible to implement a performance measurement system for SUD treatment services in an LMIC, provided that the system is acceptable to providers, appropriate for use in their treatment context, and useful for improving practice. To ensure the utility of this system for treatment service strengthening, system implementation must be optimized. Findings indicate two potential avenues for system strengthening. First, transferring the system to a mobile platform may allow for greater patient participation and population coverage, particularly in outpatient settings. Second, efforts to strengthen leadership support for performance measurement and to build capacity for performance feedback utilization may enhance the implementation of this system.

Footnotes

The Western Cape Department of Social Development (WC-DoSD), the South African Medical Research Council, and the National Research Foundation of South Africa funded this study. The contents of this article are solely the responsibility of the authors and do not necessarily represent the official views of the funders.

References

- Aarons G. A., Hurlburt M., Horwitz S. M. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health. 2011;38:4–23. doi:10.1007/s10488-010-0327-7.

- Braa J., Heywood A., Sahay S. Improving quality and use of data through data-use workshops: Zanzibar, United Republic of Tanzania. Bulletin of the World Health Organization. 2012;90:379–384. doi:10.2471/BLT.11.099580.

- Bradshaw D., Norman R., Schneider M. A clarion call for action based on refined DALY estimates for South Africa. South African Medical Journal. 2007;97:438–440.

- Connell J., Zurn P., Stilwell B., Awases M., Braichet J. M. Sub-Saharan Africa: Beyond the health worker migration crisis? Social Science & Medicine. 2007;64:1876–1891. doi:10.1016/j.socscimed.2006.12.013.

- Ferri M., Griffiths P. Good practice and quality standards. In: el-Guebaly N., Carrà G., Galanter M., editors. Textbook of addiction treatment: International perspectives (pp. 1337–1359). Italy: Springer-Verlag; 2015.

- Fixsen D. L., Naoom S. F., Blasé K. A., Friedman R. M., Wallace F. Implementation research: A synthesis of the literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network; 2005.

- Garnick D. W., Horgan C. M., Acevedo A., McCorry F., Weisner C. Performance measures for substance use disorders—what research is needed? Addiction Science & Clinical Practice. 2012;7:18. doi:10.1186/1940-0640-7-18.

- Gouse H., Magidson J. F., Burnhams W., Remmert J. E., Myers B., Joska J., Carrico A. W. Implementation of cognitive-behavioral substance abuse treatment in sub-Saharan Africa: Treatment engagement and abstinence at treatment exit. PLOS ONE. 2016;11:e0147900. doi:10.1371/journal.pone.0147900.

- Greenhalgh J. The applications of PROs in clinical practice: What are they, do they work, and why? Quality of Life Research: An International Journal of Quality of Life Aspects of Treatment, Care and Rehabilitation. 2009;18:115–123. doi:10.1007/s11136-008-9430-6.

- Harris A. H. S., Kivlahan D. R., Bowe T., Finney J. W., Humphreys K. Developing and validating process measures of health care quality: An application to alcohol use disorder treatment. Medical Care. 2009;47:1244–1250. doi:10.1097/MLR.0b013e3181b58882.

- Herbeck D. M., Gonzales R., Rawson R. A. Performance improvement in addiction treatment: Efforts in California. Journal of Psychoactive Drugs. 2010;42:261–268. doi:10.1080/02791072.2010.10400549.

- Herman A. A., Stein D. J., Seedat S., Heeringa S. G., Moomal H., Williams D. R. The South African Stress and Health (SASH) study: 12-month and lifetime prevalence of common mental disorders. South African Medical Journal. 2009;99:339–344.

- Institute of Medicine. Improving the quality of health care for mental and substance-use conditions: Quality chasm series. Washington, DC: National Academies Press; 2005.

- Kilbourne A. M., Keyser D., Pincus H. A. Challenges and opportunities in measuring the quality of mental health care. Canadian Journal of Psychiatry. 2010;55:549–557. doi:10.1177/070674371005500903.

- Magidson J. F., Lee J. S., Johnson K., Burnhams W., Koch J. R., Manderscheid R., Myers B. Openness to adopting evidence-based practice in public substance use treatment in South Africa using task shifting: Caseload size matters. Substance Abuse. Advance online publication; 2017. doi:10.1080/08897077.2017.1380743.

- Myers B., Carney T., Wechsberg W. M. “Not on the agenda”: A qualitative study of influences on health services use among poor young women who use drugs in Cape Town, South Africa. International Journal on Drug Policy. 2016a;30:52–58. doi:10.1016/j.drugpo.2015.12.019.

- Myers B., Govender R., Koch J. R., Manderscheid R., Johnson K., Parry C. D. Development and psychometric validation of a novel patient survey to assess perceived quality of substance abuse treatment in South Africa. Substance Abuse Treatment, Prevention, and Policy. 2015;10:44. doi:10.1186/s13011-015-0040-3.

- Myers B., Kline T. L., Doherty I. A., Carney T., Wechsberg W. M. Perceived need for substance use treatment among young women from disadvantaged communities in Cape Town, South Africa. BMC Psychiatry. 2014a;14:100. doi:10.1186/1471-244X-14-100.

- Myers B. J., Louw J., Pasche S. C. Inequitable access to substance abuse treatment services in Cape Town, South Africa. Substance Abuse Treatment, Prevention, and Policy. 2010;5:28. doi:10.1186/1747-597X-5-28.

- Myers B., Petersen Z., Kader R., Koch J. R., Manderscheid R., Govender R., Parry C. D. H. Identifying perceived barriers to monitoring service quality among substance abuse treatment providers in South Africa. BMC Psychiatry. 2014b;14:31. doi:10.1186/1471-244X-14-31.

- Myers B., Williams P. P., Johnson K., Govender R., Manderscheid R., Koch J. R. Providers’ perceptions of the implementation of a performance measurement system for substance abuse treatment: A process evaluation of the Service Quality Measures initiative. South African Medical Journal. 2016b;106:308–311. doi:10.7196/SAMJ.2016.v106i3.9969.

- Nachega J. B., Skinner D., Jennings L., Magidson J. F., Altice F. L., Burke J. G., Theron G. Acceptability and feasibility of mHealth and community-based directly observed antiretroviral therapy to prevent mother-to-child HIV transmission in South African pregnant women under Option B+: An exploratory study. Patient Preference and Adherence. 2016;10:683–690. doi:10.2147/PPA.S100002.

- Pasche S., Kleintjes S., Wilson D., Stein D. J., Myers B. Improving addiction care in South Africa: Development and challenges to implementing training in addictions care at the University of Cape Town. International Journal of Mental Health and Addiction. 2015;13:322–332. doi:10.1007/s11469-014-9537-7.

- Pew Research Center. Cell phones in Africa: Communication lifeline. Washington, DC: Author; 2016. Retrieved from http://www.pewglobal.org/2015/04/15/cell-phones-in-africa-communication-lifeline.

- Ritchie J., Spencer L., O’Connor W. Carrying out qualitative analysis. In: Ritchie J., Lewis J., editors. Qualitative research practice: A guide for social science students and research. London, England: Sage; 2003.

- Rogers E. M. Diffusion of innovations. 5th ed. New York, NY: Free Press; 2003.

- Stamatakis K. A., McQueen A., Filler C., Boland E., Dreisinger M., Brownson R. C., Luke D. A. Measurement properties of a novel survey to assess stages of organizational readiness for evidence based interventions in community chronic disease prevention settings. Implementation Science. 2012;7:65. doi:10.1186/1748-5908-7-65.

- United Nations Office on Drugs and Crime. International standards for the treatment of drug use disorders. 2016. Retrieved from https://www.unodc.org/documents/commissions/CND/CND_Sessions/CND_59/ECN72016_CRP4_V1601463.pdf.

- Wisdom J. P., Ford J. K., Hayes R. A., Edmundson E., Hoffman K., McCarty D. Addiction treatment agencies’ use of data: A qualitative assessment. Journal of Behavioral Health Services & Research. 2006;33:394–407. doi:10.1007/s11414-006-9039-x.