Abstract

Objectives

Patients are often provided with medicine information sheets (MIS). However, up to 60% of patients have low health literacy. The recommended readability level for health-related information is ≤grade 8. We sought to assess the readability of MIS given to patients by rheumatologists in Australia, the UK and Canada and to examine Australian patient comprehension of these documents.

Design

Cross-sectional study.

Setting

Community-based regional rheumatology practice.

Participants

Random sample of patients attending the rheumatology practice.

Outcome measures

Readability of MIS was assessed using readability formulae (Flesch Reading Ease formula, Simple Measure of Gobbledygook scale, FORCAST (named after the authors FORd, CAylor, STicht) and the Gunning Fog scale). Literal comprehension was assessed by asking patients to read various Australian MIS and immediately answer five simple multiple choice questions about the MIS.

Results

The mean (±SD) grade level for the MIS from Australia, the UK and Canada was 11.6±0.1, 11.8±0.1 and 9.7±0.1 respectively. The Flesch Reading Ease score for the Australian (50.8±0.6) and UK (48.5±1.5) MIS classified the documents as ‘fairly difficult’ to ‘difficult’. The Canadian MIS (66.1±1.0) were classified as ‘standard’. The five questions assessing comprehension were correctly answered by 9/21 patients for the adalimumab MIS, 7/11 for the methotrexate MIS, 6/28 for the non-steroidal anti-inflammatory MIS, 10/11 for the prednisone MIS and 13/24 for the abatacept MIS.

Conclusions

The readability of MIS used by rheumatologists in Australia, the UK and Canada exceeds grade 8 level. This may explain the poor patient literal comprehension of these documents. Simpler, shorter MIS with pictures and infographics may improve patient comprehension. This may lead to improved medication adherence and better health outcomes.

Keywords: health literacy, rheumatology, medication adherence, patient comprehension, readability

Strengths and limitations of this study.

Readability of medicine information sheets (MIS) from three countries (Australia, UK and Canada) was assessed.

While readability formulae only measure the number/complexity of words/sentences, Australian patient literal comprehension of MIS was also assessed.

The study population was from a regional community and may not be representative of a more urban population.

Introduction

Health literacy is defined as the ‘capacity to obtain, process and understand written and oral health information and services needed to make appropriate health decisions’.1 Low health literacy has been associated with poorer health-related knowledge, increased hospitalisations, reduced immunisations, poorer health status and higher mortality.2 Patients with poor health literacy are less likely to successfully manage chronic disease3 and have greater difficulty in following instructions for prescription medications.4 Higher health literacy has been associated with increased medication adherence.5 6

Although the importance of health literacy and patient–physician communication on health outcomes is well recognised, many patients have difficulty in understanding what their physicians tell them.7 Immediately after leaving a consultation with their specialist, patients were able to recall less than half the information just provided to them.8 9 The provision of written health information in addition to verbal information significantly increases patient knowledge and satisfaction.10 Written information may also lead to increased adherence with treatment.9 However, designing effective written health information remains challenging due to differences in patient literacy levels.

The recommended level of reading difficulty for health-related written material is inconsistent. Some agencies have recommended up to eighth grade level,11 the average reading level of an adult in the USA,12 13 whereas others have suggested levels as low as fifth grade to be more inclusive of those with limited literacy.14 No national guidelines exist in Australia, although the South Australian government has recommended up to eighth grade level.15 Despite these inconsistencies, many studies have found written health information provided to patients often exceeds these levels.16–19 While there is greater access to health-related information on the internet, this often also exceeds recommended readability levels.20 21

Literacy levels in Australia are poor, with up to 60% of the population having low literacy skills,22 23 that is, skills below the ‘minimum required for individuals to meet the complex demands of everyday life’.24 The International Adult Literacy Survey found 57% of Canadians fall into the lowest two literacy categories.25 In the UK, just under one in six adults has the literacy of an 11-year-old.26 A study of over 200 rural and urban Australian rheumatology patients found that 15% of patients had low health literacy and up to one-third of patients incorrectly followed dosing instructions for common rheumatology drugs.23 Ten per cent of patients with rheumatoid arthritis (RA) who attended an urban community-based Australian rheumatology practice had inadequate/marginal functional health literacy or a reading age at or below the US high school grade equivalent of seventh–eighth grade.27 Up to 24% of rheumatology patients at a US medical centre had a reading level of eighth grade or less.28 In 2002, one in six rheumatology patients at a Scottish hospital were illiterate and struggled to understand education materials and prescription labels.29 These findings are concerning, as rheumatologists often use medications such as methotrexate (MTX) or expensive biological therapies that can cause severe side effects, or even death,30 if taken incorrectly.

Given the importance of health literacy and its relationship to health outcomes and medication adherence, we sought to assess: (i) the readability of patient medication information sheets (MIS) given to patients by Australian rheumatologists and (ii) patient comprehension of these documents.

We also compared the readability of the Australian MIS to similar documents given to rheumatology patients in the UK and Canada.

Methods

Assessment of readability

Text from the MIS of commonly prescribed rheumatology medications available on the Australian Rheumatology Association (ARA) website31 was imported into a Microsoft Word document and readability assessed using Readability Studio (Oleander Software).18 32–35

Non-essential text including logos, headers, footers, hyperlinks and contact information was deleted prior to analysis as these may have adversely affected readability scores. Readability was assessed using four measures: the Flesch Reading Ease formula, Simple Measure of Gobbledygook (SMOG) scale, FORCAST (named after the authors FORd, CAylor, STicht) and the Gunning Fog scale. The Flesch Reading Ease formula calculates an index score of a document based on sentence length and number of syllables. It is often used for school textbooks and technical manuals. The standard score is between 0 and 100, with a high score indicating the document is easier to read,36 although negative scores and scores over 100 are possible. The SMOG formula calculates grade level and reader age based on complex word density and assigns a grade level (fourth grade to college level).33 37 It is particularly useful for secondary age readers and attempts to predict 100% comprehension, whereas most other formulae predict 50%–75% comprehension. Consequently, SMOG may produce grade level scores one to two grades higher than other formulae.33 37 The Gunning Fog formula calculates grade level and reader age based on number of sentences, their mean length and number of complex words (three or more syllables).38 The FORCAST readability formula was initially used for assessing technical documents by calculating the grade level of text based on number of monosyllabic words. It is the only one of these tests designed for text that is not running narrative, for example, multiple choice quizzes and applications. As sentence length is not considered, there may be some variability in grade level compared with other readability formulae.33

It was felt the above four formulae allowed comprehensive assessment of an MIS by focussing on various aspects: Flesch Reading Ease—sentence length and syllable number, SMOG—complex word density, Gunning Fog—sentence number/length and complex words and FORCAST—number of monosyllabic words and non-dependence on running narrative.
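
The four formulae can be sketched from their commonly published forms. This is a minimal illustration only: it does not reproduce Readability Studio, whose rules for counting words, syllables and sentences are not described here, so scores computed this way may differ from those in the tables. The function names and the sample counts are assumptions made for illustration.

```python
import math


def flesch_reading_ease(words, sentences, syllables):
    """Flesch Reading Ease: higher scores indicate easier text."""
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def gunning_fog(words, sentences, complex_words):
    """Gunning Fog grade level; complex words have three or more syllables."""
    return 0.4 * ((words / sentences) + 100 * (complex_words / words))


def smog(polysyllables, sentences):
    """SMOG grade level from the count of words with three or more syllables."""
    return 1.0430 * math.sqrt(polysyllables * (30 / sentences)) + 3.1291


def forcast(monosyllables_in_150_words):
    """FORCAST grade level from single-syllable words in a 150-word sample."""
    return 20 - monosyllables_in_150_words / 10


# Hypothetical counts for a short passage (not taken from any MIS).
words, sentences, syllables, complex_words = 100, 10, 150, 10
print(round(flesch_reading_ease(words, sentences, syllables), 1))
print(round(gunning_fog(words, sentences, complex_words), 1))
print(round(smog(polysyllables=30, sentences=30), 1))
print(forcast(monosyllables_in_150_words=100))
```

Only the Flesch score falls as text becomes harder; the other three return grade levels that rise with difficulty, which is why they can be averaged with each other but not with the Flesch score.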

The readability of 10 corresponding MIS of a sample of commonly prescribed rheumatology medications published in the UK by Arthritis Research UK39 and from Canada published by Rheuminfo40 was also assessed as above. These 10 MIS were representative of the MIS available on both these websites.

Assessment of literal comprehension

Coffs Harbour is a growing regional city of 70 000 people located half-way between the Australian capital cities of Sydney and Brisbane. Its medical specialists provide services to another 50 000 people from the surrounding area. Rheumatology services are provided by two rheumatologists (PKKW and HB) under the auspices of the Mid-North Coast Arthritis Clinic (MNCAC). The MNCAC has over 16 000 patients on its computerised database.

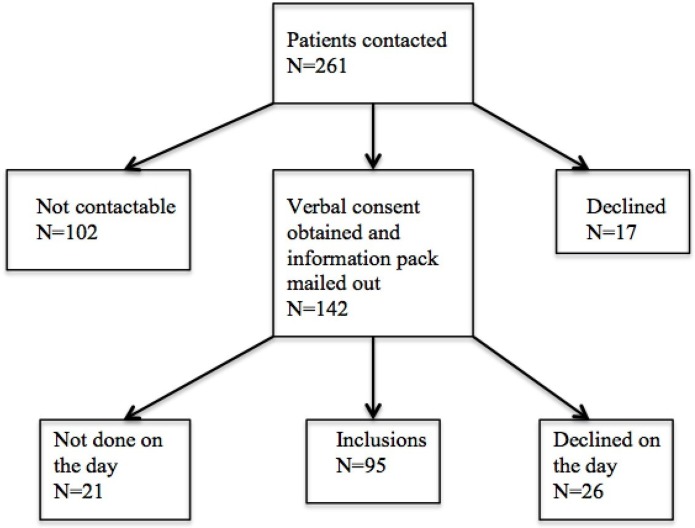

A random sample of patients referred to the MNCAC was asked to read one ARA MIS31 containing information about one of the following medications with which the patient was unfamiliar: MTX41 (online supplementary material 1), non-steroidal anti-inflammatory drugs (NSAIDs)42 (online supplementary material 2), adalimumab (ADA)43 (online supplementary material 3), abatacept (ABA)44 (online supplementary material 4) or prednisone45 (online supplementary material 5). All consecutive patients scheduled for a randomly selected consulting day were contacted via telephone by an investigator (MO or ET). Patients (n=261) were asked whether they were interested in study participation to determine what they understood after reading information from the doctor. Responses are outlined in figure 1. Those who expressed interest in study participation were mailed information about the study and a consent form to be returned in a stamped preaddressed envelope (n=142). Those who agreed to participate were assessed on the day of the planned consultation (n=95). There was no difference in gender or age between those included compared with those not contactable (data not shown). Comprehension was assessed by asking the patient to answer five multiple choice questions (see online supplementary material 6) about the content of the one ARA MIS they had just read. These questions were designed by two rheumatologists (PKKW, HB), a rheumatology nurse (DF) and an education academic with expertise in literacy (JJ). The questions were trialled on small focus groups of patients. A time limit of 15 min in a quiet well-lit room was provided. If needed, study participants could refer back to the MIS while answering the questions.

Figure 1.

Inclusions and exclusions.

Patient and public involvement

Previous work by us found that up to 15% of patients had low health literacy and up to one-third of patients incorrectly followed dosing instructions for common rheumatology drugs.23 These findings prompted us to conduct this study which examined the readability of MIS routinely used in our clinical practice. Furthermore, some of our patients had previously commented that the ARA MIS were difficult to understand. A summary of study results will be disseminated to all study participants. Patients were not involved in the recruitment to and conduct of the study. However, many study participants indicated they hoped their study involvement would lead to the development of better written material for future patients.

Statistical analyses

Descriptive summary statistics (mean ±SD and median with IQR, as appropriate) were used to summarise parameters. Student’s t-test (unpaired) was used to compare means of normally distributed parameters. The Mann-Whitney U test was used to compare medians of groups. For all statistical tests, p<0.05 was considered significant. Data analysis was undertaken using GraphPad Prism V.6 (GraphPad Software).46 The correlation (r value) between comprehension score and various parameters (age, gender, postcode, highest level of education) was calculated using STATA (Stata V.11.1, StataCorp).
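
The two group comparisons can be illustrated with the test statistics alone. The sketch below is not the GraphPad Prism analysis: it uses only the Python standard library, omits p values, and pairs the UK and Canadian mean grade levels from tables 4 and 5 purely because both sets are fully tabulated. The function names are assumptions made for illustration.

```python
import math
import statistics

# Mean grade levels copied from table 4 (UK) and table 5 (Canada).
uk = [12.4, 11.9, 11.8, 12.1, 11.8, 12.1, 11.6, 11.8, 11.3, 11.6]
canada = [9.6, 10.0, 9.9, 9.7, 10.1, 8.9, 9.7, 9.8, 10.0, 9.4]


def unpaired_t(a, b):
    """Student's t statistic (pooled variance) and degrees of freedom."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * statistics.variance(a)
                  + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    se = math.sqrt(pooled_var * (1 / na + 1 / nb))
    return (statistics.mean(a) - statistics.mean(b)) / se, na + nb - 2


def mann_whitney_u(a, b):
    """U statistics for both groups; tied pairs count as half."""
    u_a = sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in a for y in b)
    return u_a, len(a) * len(b) - u_a


t_stat, df = unpaired_t(uk, canada)
print(round(statistics.mean(uk), 1), round(statistics.mean(canada), 1))
print(round(t_stat, 1), df)
print(mann_whitney_u(uk, canada))
```

Every UK value exceeds every Canadian value, so the smaller U statistic is 0, the most extreme separation possible for two groups of 10.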

Results

Assessment of readability

The mean (±SD) grade level for the ARA MIS calculated using Readability Studio was 11.6±0.1 with a mean reading age of 16.6±0.1 years (table 1). (These were obtained by calculating the mean of the FORCAST, Gunning Fog and SMOG mean grade level and reading age. Due to the heterogeneity of these instruments, the mean of each of these measures is available in the relevant table). The mean (±SD) Flesch Reading Ease score of 50.8±0.6 indicated the ARA MIS were either ‘fairly difficult’ or ‘difficult’33 (table 1). Overall, difficult sentences (>22 words) and complex words (≥3 syllables) made up 9.0% and 18.4% of the text, respectively (table 2).
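
As a worked example of this averaging, the sketch below recomputes the mean grade level and mean reader age for one row of table 1. It assumes that reader age ranges were averaged using their midpoints; this assumption is ours, although it reproduces the tabulated values for the rows checked. The function names are hypothetical.

```python
def mean_grade_level(forcast, gunning_fog, smog):
    """Mean of the three grade level scores, to one decimal place."""
    return round((forcast + gunning_fog + smog) / 3, 1)


def mean_reader_age(*age_ranges):
    """Mean of the midpoints of reader age ranges such as (16, 17)."""
    midpoints = [(low + high) / 2 for low, high in age_ranges]
    return round(sum(midpoints) / len(midpoints), 1)


# Abatacept row of table 1.
print(mean_grade_level(11.2, 12.3, 12.4))             # 12.0
print(mean_reader_age((16, 17), (17, 18), (17, 18)))  # 17.2
```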

Table 1.

Readability scores for Australian Rheumatology Association Medicine Information Sheets

| Medication | Flesch Reading Ease* (0–100) | FORCAST grade level | FORCAST reader age (years) | Gunning Fog grade level | Gunning Fog reader age (years) | SMOG grade level | SMOG reader age (years) | Mean grade level† | Mean reader age† (years) |

| Abatacept | 49 | 11.2 | 16–17 | 12.3 | 17–18 | 12.4 | 17–18 | 12.0 | 17.2 |

| Adalimumab | 46 | 11.2 | 16–17 | 12.7 | 17–18 | 12.8 | 17–18 | 12.2 | 17.2 |

| Allopurinol | 53 | 10.8 | 15–16 | 10.5 | 15–16 | 11.5 | 16–17 | 10.9 | 15.8 |

| Apremilast | 56 | 10.6 | 15–16 | 11.3 | 16–17 | 11.7 | 16–17 | 11.2 | 16.2 |

| Azathioprine | 50 | 10.7 | 15–16 | 11.6 | 16–17 | 12.2 | 17–18 | 11.5 | 16.5 |

| Bisphosphonates intravenous | 49 | 11.1 | 16–17 | 12.1 | 17–18 | 12.2 | 17–18 | 11.8 | 17.2 |

| Bisphosphonates oral | 49 | 11.2 | 16–17 | 12.2 | 17–18 | 12.3 | 17–18 | 11.9 | 17.2 |

| Bosentan | 59 | 10.4 | 15–16 | 11.0 | 16–17 | 11.5 | 16–17 | 11.0 | 16.2 |

| Certolizumab | 46 | 11.1 | 16–17 | 12.8 | 17–18 | 12.9 | 17–18 | 12.3 | 17.2 |

| Colchicine | 53 | 11.1 | 16–17 | 11.7 | 16–17 | 11.7 | 16–17 | 11.5 | 16.5 |

| Cyclophosphamide | 53 | 10.7 | 15–16 | 10.8 | 15–16 | 11.8 | 16–17 | 11.1 | 15.8 |

| Ciclosporin | 54 | 10.7 | 15–16 | 11.8 | 16–17 | 12.0 | 17–18 | 11.5 | 16.5 |

| Denosumab | 50 | 11.0 | 16–17 | 11.9 | 16–17 | 12.1 | 17–18 | 11.7 | 16.8 |

| Etanercept | 48 | 11.1 | 16–17 | 12.7 | 17–18 | 12.8 | 17–18 | 12.2 | 17.2 |

| Febuxostat | 54 | 10.7 | 15–16 | 10.8 | 15–16 | 11.7 | 16–17 | 11.1 | 15.8 |

| Golimumab | 48 | 11.1 | 16–17 | 12.8 | 17–18 | 12.8 | 17–18 | 12.2 | 17.2 |

| Hyaluronic acid | 51 | 11.1 | 16–17 | 11.8 | 16–17 | 11.9 | 16–17 | 11.6 | 16.5 |

| Hydroxychloroquine | 49 | 10.9 | 15–16 | 11.6 | 16–17 | 11.7 | 16–17 | 11.4 | 16.2 |

| Infliximab | 49 | 11.1 | 16–17 | 12.5 | 17–18 | 12.6 | 17–18 | 12.1 | 17.2 |

| Leflunomide | 54 | 10.7 | 15–16 | 11.6 | 16–17 | 12.2 | 17–18 | 11.5 | 16.5 |

| Methotrexate | 52 | 10.9 | 15–16 | 11.4 | 16–17 | 12.3 | 17–18 | 11.5 | 16.5 |

| Mycophenolate | 50 | 11.0 | 16–17 | 11.6 | 16–17 | 12.5 | 17–18 | 11.7 | 16.8 |

| NSAIDs | 58 | 10.6 | 15–16 | 11.0 | 16–17 | 11.3 | 16–17 | 11.0 | 16.2 |

| Prednisone | 51 | 10.9 | 15–16 | 11.2 | 16–17 | 11.9 | 16–17 | 11.3 | 16.2 |

| Rituximab | 48 | 11.3 | 16–17 | 12.3 | 17–18 | 12.5 | 17–18 | 12.0 | 17.2 |

| Sulfasalazine | 50 | 10.9 | 15–16 | 11.4 | 16–17 | 11.9 | 16–17 | 11.4 | 16.2 |

| Teriparatide | 49 | 10.9 | 15–16 | 11.6 | 16–17 | 12.1 | 17–18 | 11.5 | 16.5 |

| Tocilizumab | 47 | 11.1 | 16–17 | 12.0 | 17–18 | 12.5 | 17–18 | 11.9 | 17.2 |

| Tofacitinib | 46 | 11.1 | 16–17 | 12.1 | 17–18 | 12.2 | 17–18 | 11.8 | 17.2 |

| Ustekinumab | 54 | 10.8 | 15–16 | 11.5 | 16–17 | 12.0 | 17–18 | 11.4 | 16.5 |

| Mean | 50.8 | 10.9 | | 11.8 | | 12.1 | | 11.6 | 16.6 |

| SD | 0.6 | 0.0 | | 0.1 | | 0.1 | | 0.1 | 0.1 |

*Flesch Scale Value: very easy (90–100), easy (80–89), fairly easy (70–79), standard (60–69), fairly difficult (50–59), difficult (30–49), very confusing (0–29).

†Mean of FORCAST, Gunning Fog and SMOG scores.

FORCAST (named after the authors FORd, CAylor, STicht); NSAIDs, non-steroidal anti-inflammatory drugs; SMOG, Simple Measure Of Gobbledygook.
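
The Flesch scale bands in the footnote above can be applied programmatically. In this minimal sketch, the function name is hypothetical and each band's lower bound is treated as a threshold, an assumption needed because the footnote lists integer ranges and does not say how fractional scores are handled.

```python
def flesch_category(score):
    """Map a Flesch Reading Ease score to the descriptive bands in the table footnote."""
    bands = [
        (90, "very easy"),
        (80, "easy"),
        (70, "fairly easy"),
        (60, "standard"),
        (50, "fairly difficult"),
        (30, "difficult"),
    ]
    for lower_bound, label in bands:
        if score >= lower_bound:
            return label
    return "very confusing"


# Mean scores reported for the Australian, UK and Canadian MIS.
for mean_score in (50.8, 48.5, 66.1):
    print(mean_score, flesch_category(mean_score))
```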

Table 2.

Word and sentence statistics for Australian Rheumatology Association Medicine Information Sheets

| Medication | No. of sentences | No. of difficult* sentences | Mean sentence length (no. of words) | Total no. of words | No. of complex† words |

| Abatacept | 133 | 8 (5%) | 12.1 | 1612 | 314 (19.5%) |

| Adalimumab | 125 | 11 (8.8%) | 12.6 | 1576 | 315 (20%) |

| Allopurinol | 124 | 10 (8.1%) | 12.2 | 1507 | 252 (16.7%) |

| Apremilast | 92 | 9 (9.8%) | 11.9 | 1095 | 184 (16.8%) |

| Azathioprine | 118 | 9 (7.6%) | 13 | 1539 | 273 (17.7%) |

| Bisphosphonates intravenous | 95 | 11 (11.6%) | 12.6 | 1199 | 217 (18.1%) |

| Bisphosphonates oral | 112 | 11 (9.8%) | 13 | 1456 | 277 (19%) |

| Bosentan | 107 | 11 (10.3%) | 11.4 | 1219 | 214 (17.6%) |

| Certolizumab | 125 | 12 (9.6%) | 13 | 1624 | 320 (19.7%) |

| Colchicine | 123 | 8 (6.5%) | 11.6 | 1426 | 260 (18.2%) |

| Cyclophosphamide | 118 | 12 (10.2%) | 12.4 | 1469 | 266 (18.1%) |

| Ciclosporin | 102 | 8 (7.8%) | 12.1 | 1235 | 227 (18.4%) |

| Denosumab | 110 | 10 (9.1%) | 12 | 1317 | 243 (18.5%) |

| Etanercept | 124 | 11 (8.9%) | 13.1 | 1621 | 321 (19.8%) |

| Febuxostat | 120 | 12 (10%) | 12.4 | 1484 | 255 (17.2%) |

| Golimumab | 123 | 12 (9.8%) | 12.9 | 1588 | 316 (19.9%) |

| Hyaluronic acid | 81 | 4 (4.9%) | 11.3 | 919 | 181 (19.7%) |

| Hydroxychloroquine | 87 | 9 (10.3%) | 12 | 1046 | 184 (17.6%) |

| Infliximab | 138 | 13 (9.4%) | 13.1 | 1807 | 344 (19%) |

| Leflunomide | 111 | 10 (9%) | 12.9 | 1427 | 254 (17.8%) |

| Methotrexate | 156 | 20 (12.8%) | 13.4 | 2097 | 375 (17.9%) |

| Mycophenolate | 141 | 15 (10.6%) | 12.1 | 1712 | 334 (19.5%) |

| NSAIDs | 137 | 14 (10.2%) | 12.8 | 1750 | 266 (15.2%) |

| Prednisone | 128 | 12 (9.4%) | 13 | 1668 | 292 (17.5%) |

| Rituximab | 132 | 9 (6.8%) | 12.3 | 1627 | 318 (19.5%) |

| Sulfasalazine | 124 | 9 (7.3%) | 12.1 | 1497 | 276 (18.4%) |

| Teriparatide | 114 | 13 (11.4%) | 11.5 | 1310 | 238 (18.2%) |

| Tocilizumab | 130 | 12 (9.2%) | 12.7 | 1654 | 311 (18.8%) |

| Tofacitinib | 111 | 7 (6.3%) | 12 | 1336 | 249 (18.6%) |

| Ustekinumab | 114 | 8 (7%) | 12.3 | 1406 | 259 (18.4%) |

| Mean | 118.5 | 10.7 (9.0%) | 12.4 | 1474.1 | 271.2 (18.4%) |

| SD | 3.0 | 0.5 | 0.1 | 44.6 | 9.0 |

*Difficult sentence: ≥22 words.

†Complex word: ≥3 syllables.

NSAIDs, non-steroidal anti-inflammatory drugs.

As the validity of the above readability assessment measures has been questioned due to over-reliance on sentence and word length,47 48 we proceeded to assess patient literal comprehension of the ARA MIS.

Assessment of comprehension

A total of 261 patients were contacted, with 95 study participants (figure 1). Mean (±SD) age of study participants was 60±13.2 years, with 71/95 (75%) women and 24/95 (25%) men (table 3). Nineteen of the 95 (20%) patients had a university degree (table 3). Only 9/21 (43%) and 13/24 (54.2%) patients correctly answered all five questions for adalimumab and ABA, respectively (table 3). Only 7/11 (63.6%) of patients correctly answered all five simple questions assessing literal comprehension of the MTX MIS (table 3). Questions assessing comprehension of the prednisone MIS were correctly answered by most participants (10/11; 90.9%). Of concern, only 21.4% (6/28) of patients correctly answered all questions assessing comprehension of the NSAID MIS. Responses to the five NSAID questions are shown in figure 2.

Table 3.

Assessment of patient literal comprehension (n=95 patients)

| Age (years, mean ±SD) | 60.0±13.2 |

| Sex (F/M) | 71/24 |

| Highest level of education | no. (%) |

| ≤Year 10 | 39 (41) |

| Year 10–12 | 15 (16) |

| Subdegree, eg, TAFE, apprenticeship | 22 (23) |

| University degree | 19 (20) |

| Median total score (max=5) | 4 |

| No. with all correct answers (ie, 5/5) | no. (%) |

| Adalimumab | 9/21 (43) |

| MTX | 7/11 (63.6) |

| NSAIDs | 6/28 (21.4) |

| Prednisone | 10/11 (90.9) |

| Abatacept | 13/24 (54.2) |

MTX, methotrexate; NSAID, non-steroidal anti-inflammatory drugs; TAFE, technical and further education.
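
The percentages in table 3 follow directly from the counts. The short sketch below recomputes them, together with the proportion of patients who did not answer all five questions correctly; the variable and function names are assumptions made for illustration.

```python
# (patients with all five answers correct, patients assessed) per MIS, from table 3.
all_correct = {
    "Adalimumab": (9, 21),
    "MTX": (7, 11),
    "NSAIDs": (6, 28),
    "Prednisone": (10, 11),
    "Abatacept": (13, 24),
}


def percent(numerator, denominator):
    """Percentage to one decimal place."""
    return round(100 * numerator / denominator, 1)


for drug, (correct, total) in all_correct.items():
    # Columns: % with all correct, % with at least one incorrect answer.
    print(drug, percent(correct, total), percent(total - correct, total))

participants = sum(total for _, total in all_correct.values())
print(participants)  # 95
```

For the NSAID MIS, 22 of 28 patients (78.6%) answered at least one question incorrectly.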

Figure 2.

Answers to NSAID questions.

Highest level of education achieved correlated positively (r=0.33, p=0.001), and age negatively (r=−0.3, p=0.0002), with comprehension score; both correlations were of moderate strength.

Comparison of readability scores for Australian, UK and Canadian MIS

Given our findings, we sought to determine using Readability Studio what the readability scores were for MIS used in other countries. The mean (±SD) grade level for 10 of the commonly used UK MIS was 11.8±0.1 with a reader age of 16.9±0.1 years (table 4). The mean Flesch Reading Ease score was 48.5±1.5 classified as ‘difficult’. Readability of the Canadian MIS was easier with a mean (±SD) grade level of 9.7±0.1 and mean (±SD) reader age of 14.8±0.1 years (table 5). The mean (±SD) Flesch Reading Ease score for the Canadian MIS was 66.1±1.0 classified as ‘standard’.33

Table 4.

Readability scores for Arthritis Research United Kingdom Medicine Information Sheets

| Medication | Flesch Reading Ease* (0–100) | FORCAST grade level | FORCAST reader age (years) | Gunning Fog grade level | Gunning Fog reader age (years) | SMOG grade level | SMOG reader age (years) | Mean grade level† | Mean reader age† (years) |

| Abatacept | 46 | 10.9 | 15–16 | 13.1 | 18–19 | 13.2 | 18–19 | 12.4 | 17.5 |

| Adalimumab | 47 | 11.1 | 16–17 | 12.1 | 17–18 | 12.5 | 17–18 | 11.9 | 17.2 |

| Bisphosphonates | 53 | 11.1 | 16–17 | 11.9 | 16–17 | 12.3 | 17–18 | 11.8 | 16.8 |

| Denosumab | 42 | 11.7 | 16–17 | 12 | 17–18 | 12.6 | 17–18 | 12.1 | 17.2 |

| Etanercept | 49 | 11 | 16–17 | 11.9 | 16–17 | 12.4 | 17–18 | 11.8 | 16.8 |

| Hydroxychloroquine | 41 | 11.2 | 16–17 | 12.5 | 17–18 | 12.5 | 17–18 | 12.1 | 17.2 |

| Leflunomide | 53 | 10.8 | 15–16 | 11.9 | 16–17 | 12.2 | 17–18 | 11.6 | 16.5 |

| Methotrexate | 51 | 10.8 | 15–16 | 12.1 | 17–18 | 12.4 | 17–18 | 11.8 | 16.8 |

| Prednisolone | 55 | 11.1 | 16–17 | 11.3 | 16–17 | 11.6 | 16–17 | 11.3 | 16.5 |

| Sulfasalazine | 48 | 10.8 | 15–16 | 11.9 | 16–17 | 12.2 | 17–18 | 11.6 | 16.5 |

| Mean | 48.5 | 11.1 | | 12.1 | | 12.4 | | 11.8 | 16.9 |

| SD | 1.5 | 0.1 | | 0.1 | | 0.1 | | 0.1 | 0.1 |

*Flesch Scale Value: very easy (90–100), easy (80–89), fairly easy (70–79), standard (60–69), fairly difficult (50–59), difficult (30–49), very confusing (0–29).

†Mean of FORCAST, Gunning Fog and SMOG scores.

FORCAST (named after the authors FORd, CAylor, STicht); SMOG, Simple Measure Of Gobbledygook.

Table 5.

Readability scores for Canadian Medicine Information Sheets

| Medication | Flesch Reading Ease* (0–100) | FORCAST grade level | FORCAST reader age (years) | Gunning Fog grade level | Gunning Fog reader age (years) | SMOG grade level | SMOG reader age (years) | Mean grade level† | Mean reader age† (years) |

| Abatacept | 65 | 10 | 15–16 | 8.5 | 13–14 | 10.3 | 15–16 | 9.6 | 14.8 |

| Adalimumab | 61 | 10.1 | 15–16 | 9.8 | 14–15 | 10.2 | 15–16 | 10 | 15.2 |

| Bisphosphonates | 63 | 10.2 | 15–16 | 9.5 | 14–15 | 10 | 15–16 | 9.9 | 15.2 |

| Denosumab | 66 | 9.6 | 14–15 | 9.6 | 14–15 | 10 | 15–16 | 9.7 | 14.8 |

| Etanercept | 64 | 10.1 | 15–16 | 9.9 | 14–15 | 10.3 | 15–16 | 10.1 | 15.2 |

| Hydroxychloroquine | 72 | 8.8 | 13–14 | 8.4 | 13–14 | 9.5 | 14–15 | 8.9 | 13.8 |

| Leflunomide | 67 | 9.9 | 14–15 | 9.4 | 14–15 | 9.9 | 14–15 | 9.7 | 14.5 |

| Methotrexate | 66 | 9.8 | 14–15 | 9.5 | 14–15 | 10.1 | 15–16 | 9.8 | 14.8 |

| Prednisolone | 69 | 10.2 | 15–16 | 9.8 | 14–15 | 10.1 | 15–16 | 10 | 15.2 |

| Sulfasalazine | 68 | 9.3 | 14–15 | 9.1 | 14–15 | 9.7 | 14–15 | 9.4 | 14.5 |

| Mean | 66.1 | 9.8 | | 9.4 | | 10.0 | | 9.7 | 14.8 |

| SD | 1.0 | 0.1 | | 0.2 | | 0.1 | | 0.1 | 0.1 |

*Flesch Scale Value: very easy (90–100), easy (80–89), fairly easy (70–79), standard (60–69), fairly difficult (50–59), difficult (30–49), very confusing (0–29).

†Mean of FORCAST, Gunning Fog and SMOG scores.

FORCAST (named after the authors FORd, CAylor, STicht); SMOG, Simple Measure Of Gobbledygook.

There was no significant difference in mean grade levels between the Australian and UK MIS (p=0.10). However, the mean grade level of the Canadian MIS (9.7±0.1) was less than that of the corresponding Australian MIS (11.7±0.1, p<0.0001).

The Australian MIS were the longest (mean ±SD, number of words=1474.1±44.6) (table 2) compared with the UK (mean ±SD, number of words=922.4±109.6) (table 6A) and Canadian MIS (mean ±SD, number of words=297.7 ± 19.2) (table 6B). The Australian MIS also had the highest percentage of complex words (three or more syllables, 18%), compared with the UK (16%) and Canadian (14%) MIS.

Table 6.

Word and sentence statistics for (A) UK and (B) Canadian Medicine Information Sheets

| Drug | No. of sentences | No. of difficult* sentences | Mean sentence length (no. of words) | No. of words | No. of complex† words |

| (A) UK | |||||

| Abatacept | 66 | 18 (27%) | 17.1 | 1130 | 206 (18%) |

| Adalimumab | 71 | 10 (14%) | 15.3 | 1086 | 191 (18%) |

| Bisphosphonates | 36 | 10 (28%) | 15.7 | 566 | 92 (16%) |

| Denosumab | 8 | 2 (25%) | 14.4 | 115 | 22 (19%) |

| Etanercept | 81 | 16 (20%) | 15.8 | 1282 | 214 (17%) |

| Hydroxychloroquine | 60 | 13 (22%) | 15.3 | 916 | 159 (17%) |

| Leflunomide | 63 | 12 (19%) | 16.1 | 1016 | 157 (15%) |

| Methotrexate | 75 | 13 (17%) | 16.2 | 1212 | 193 (16%) |

| Prednisolone | 60 | 15 (25%) | 17 | 1020 | 131 (13%) |

| Sulfasalazine | 53 | 12 (23%) | 16.6 | 881 | 132 (15%) |

| Mean | 57.3 | 12.1 (21%) | 15.95 | 922.4 | 149.7 (16%) |

| SD | 6.7 | 1.4 | 0.3 | 109.6 | 18.7 |

| (B) Canadian | |||||

| Abatacept | 25 | 0 | 11.1 | 278 | 38 (14%) |

| Adalimumab | 31 | 0 | 11 | 341 | 47 (14%) |

| Bisphosphonates | 30 | 0 | 10 | 301 | 41 (14%) |

| Denosumab | 24 | 0 | 10.3 | 246 | 34 (14%) |

| Etanercept | 31 | 0 | 10.9 | 339 | 48 (14%) |

| Hydroxychloroquine | 21 | 0 | 9.3 | 195 | 23 (12%) |

| Leflunomide | 34 | 0 | 10 | 339 | 46 (14%) |

| Methotrexate | 32 | 0 | 11.2 | 357 | 47 (13%) |

| Prednisolone | 36 | 0 | 10.1 | 363 | 53 (15%) |

| Sulfasalazine | 21 | 0 | 10.4 | 218 | 27 (12%) |

| Mean | 28.5 | 0 | 10.43 | 297.7 | 40.4 (14%) |

| SD | 1.7 | 0.0 | 0.2 | 19.2 | 3.1 |

*Difficult sentence: ≥22 words.

†Complex word: ≥3 syllables.

Discussion

We showed that the readability of commonly used rheumatology MIS given to patients in Australia, the UK and Canada exceeded eighth grade level, the recommended level for a low-literacy population.11 15 The Canadian MIS assessed were easier to read, although they remained slightly above eighth grade level. We found that in a population of patients attending a regional private rheumatology practice where only 20% of participants possessed a university degree, patient comprehension of the Australian MIS was poor, with up to 79% of patients failing to correctly answer all five simple questions assessing literal comprehension of commonly prescribed rheumatology medications. As expected, a higher level of education achieved was associated with better comprehension (r=0.33, p=0.001). This, along with the high readability grade levels, suggested that current ARA MIS may be too difficult for many patients to understand. While comprehension of the Canadian MIS was not assessed, doing so would provide useful information about the effectiveness of these easier-to-read materials.

The Canadian MIS were simpler, more ‘readable’ and included pictures. Many studies have shown that incorporating pictograms into patient information material improves patient comprehension.49–54 One study of 60 patients showed that pictograms improved comprehension of patient information sheets from 40% to 93%.50 Another strategy to improve MIS readability is to shorten the document. However, a shorter, simpler MIS may remove important information and be inadequate for patients with high literacy. Yet, studies have shown both low and high literacy groups recalled information best when the text was easy.55 These findings suggest that written materials designed for patients with low health literacy may also be useful for a general audience.

It is important to consider the primary purpose of providing written health-related information to a patient. Although the provision of information as part of patient education to facilitate informed patient treatment decisions is important, worry over potential medicolegal exposure from a treatment-related adverse event continues to drive complexity of written materials.56

Potential limitations of this study include the type of population studied and the measures used to assess readability. All study participants were from Coffs Harbour, a large regional community on the east coast of Australia. Although one may expect literacy levels to be lower in a rural setting, previous work from our centre showed no difference in health literacy between our patients compared with an urban rheumatology private practice in a capital city.23

There has been criticism of readability formulae such as the Flesch Reading Ease formula, SMOG scale and the Gunning Fog scale.48 57–59 Readability formulae are usually based solely on word length or syllable number. They may therefore fail to adjust for patient familiarity with vocabulary associated with their illness, thereby overestimating the difficulty of written information when read by patients familiar with their disease.57 59 By necessity, health-related written material uses text characterised by polysyllabic technical jargon, which elevates readability formulae scores.60 For example, exchanging ‘adalimumab’ for ‘Humira’ in the Australian MIS increases the Flesch Reading Ease score from 46 to 50 and reduces the Gunning Fog score from 12.7 to 12.5 (the SMOG remains unchanged at 12.8). Readability formulae fail to account for the stylistic properties of text as well as grammatical errors, which influence the readability of written text. Textual coherence (that is, the relationship and connection between sentences within a document) and the relationship between the reader and practitioner are also unaccounted for.
Finally, readability formulae do not usually consider visual and design factors which may influence MIS readability or patient comprehension.61 62 While the Flesch Reading Ease formula tends to over-estimate readability of health-related material due to its lower level of expected comprehension criteria,58 the SMOG formula is appropriate for assessing health-related written information as it has been validated against 100% comprehension.58 One approach to addressing these limitations is the use of a more holistic linguistic framework for assessing written patient information which incorporates structure, factual content and visual aspects of the material as well as the relationship between writer and reader.48 This method has been validated using RA medication leaflets in an Australian cohort of patients with RA.63 However, the education level of patients in that study exceeded that seen in our cohort, with 17/27 (63%) having completed tertiary studies compared with 19/95 (20%) in ours.

In view of the potential limitations of readability formulae, we were careful to assess patient literal comprehension of various ARA MIS. As suggested by the poor readability scores of the ARA MIS, patient literal comprehension of a selection of the ARA MIS was poor. Given the simplicity of the five questions posed to the patients, we had hoped that patients would answer all five questions correctly. However, this only occurred in 21% of patients for NSAIDs and 43%–64% of patients for the MTX, ADA and ABA MIS.

Despite the limitations of readability formulae, we believe they remain an important guide when developing written patient information or revising original drafts. This view is supported by several studies that used these formulae to simplify existing written patient information, resulting in enhanced patient comprehension.64 65 We hope the results of this study will encourage clinicians from rheumatology and all other specialities to consider the health literacy of their patients and the readability of the written information they provide, particularly given the potential of technology to improve patient education.

Conclusion

Medication information sheets currently used by many rheumatologists in Australia, the UK and Canada exceed eighth grade level, the recommended level for a low-literacy population. This may explain why patient comprehension of the information contained in these materials is limited. Comprehension may be improved by using simpler, shorter words and sentences, with greater use of pictures and infographics. This may lead to greater medication adherence, better patient understanding of their condition and fewer medication-related errors. It is hoped our findings will encourage all healthcare professionals to consider the appropriateness of written healthcare material provided to patients. The health literacy of patients should always be considered when communicating a management plan.

Supplementary Material

Acknowledgments

We thank all the patients who participated in this study.

Footnotes

Patient consent for publication: None declared.

Contributors: MO and ET were responsible for data acquisition. MO was responsible for drafting the manuscript and data analysis under the supervision of PKKW. PKKW, JJ, DF and HB conceived and designed the study. All authors contributed to interpretation of data and revision of the manuscript and approve the final manuscript.

Funding: This study was partially funded by an unrestricted research grant from Bristol Myers Squibb.

Competing interests: None declared.

Ethics approval: Approval as a low/negligible risk project was obtained from the New South Wales North Coast Human Research Ethics Committee (NCNSW HREC No LNR 150).

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: No additional data available.

References

- 1. Peerson A, Saunders M. Health literacy revisited: what do we mean and why does it matter? Health Promot Int 2009;24:285–96. doi:10.1093/heapro/dap014

- 2. Berkman ND, Sheridan SL, Donahue KE, et al. Low health literacy and health outcomes: an updated systematic review. Ann Intern Med 2011;155:97–107. doi:10.7326/0003-4819-155-2-201107190-00005

- 3. DeWalt DA, Berkman ND, Sheridan S, et al. Literacy and health outcomes. J Gen Intern Med 2004;19:1228–39. doi:10.1111/j.1525-1497.2004.40153.x

- 4. Davis TC, Wolf MS, Bass PF, et al. Literacy and misunderstanding prescription drug labels. Ann Intern Med 2006;145:887–94. doi:10.7326/0003-4819-145-12-200612190-00144

- 5. Zhang NJ, Terry A, McHorney CA. Impact of health literacy on medication adherence: a systematic review and meta-analysis. Ann Pharmacother 2014;48:741–51. doi:10.1177/1060028014526562

- 6. Wong PK. Medication adherence in patients with rheumatoid arthritis: why do patients not take what we prescribe? Rheumatol Int 2016;36:1535–42. doi:10.1007/s00296-016-3566-4

- 7. Williams MV, Davis T, Parker RM, et al. The role of health literacy in patient-physician communication. Fam Med 2002;34:383–9.

- 8. Ong LM, de Haes JC, Hoos AM, et al. Doctor-patient communication: a review of the literature. Soc Sci Med 1995;40:903–18. doi:10.1016/0277-9536(94)00155-M

- 9. Kessels RP. Patients' memory for medical information. J R Soc Med 2003;96:219–22.

- 10. Johnson A, Sandford J, Tyndall J. Written and verbal information versus verbal information only for patients being discharged from acute hospital settings to home. The Cochrane Library 2003;20:423–9.

- 11. National Institutes of Health. How to Write Easy-to-Read Health Materials: MedlinePlus. https://medlineplus.gov/etr.html (accessed 10 Sep 2018).

- 12. Kirsch IS. Adult literacy in America: A first look at the results of the National Adult Literacy Survey. Princeton, New Jersey: Educational Testing Service, 1993.

- 13. Kutner M, Greenberg E, Baer J. A First Look at the Literacy of America’s Adults in the 21st Century. Jessup, MD: National Center for Education Statistics, 2006.

- 14. Weiss BD. Communicating with patients who have limited literacy skills. Report of the National Work Group on Literacy and Health. J Fam Pract 1998;46:168–76.

- 15. South Australia Health. Assessing Readability. 2013. https://www.sahealth.sa.gov.au/wps/wcm/connect/public+content/sa+health+internet/resources/assessing+readability (accessed 22 April 2017).

- 16. Ebrahimzadeh H, Davalos R, Lee PP. Literacy levels of ophthalmic patient education materials. Surv Ophthalmol 1997;42:152–6. doi:10.1016/S0039-6257(97)00027-1

- 17. Badarudeen S, Sabharwal S. Readability of patient education materials from the American Academy of Orthopaedic Surgeons and Pediatric Orthopaedic Society of North America web sites. J Bone Joint Surg Am 2008;90:199–204. doi:10.2106/JBJS.G.00347

- 18. Colaco M, Svider PF, Agarwal N, et al. Readability assessment of online urology patient education materials. J Urol 2013;189:1048–52. doi:10.1016/j.juro.2012.08.255

- 19. Kapoor K, George P, Evans MC, et al. Health literacy: readability of ACC/AHA online patient education material. Cardiology 2017;138:36–40. doi:10.1159/000475881

- 20. Hochhauser M. Patient education and the Web: what you see on the computer screen isn’t always what you get in print. Patient Care Manag 2002;17:10.

- 21. McInnes N, Haglund BJ. Readability of online health information: implications for health literacy. Inform Health Soc Care 2011;36:173–89. doi:10.3109/17538157.2010.542529

- 22. Cheng C, Dunn M. Health literacy and the Internet: a study on the readability of Australian online health information. Aust N Z J Public Health 2015;39:309–14. doi:10.1111/1753-6405.12341

- 23. Wong PK, Christie L, Johnston J, et al. How well do patients understand written instructions?: health literacy assessment in rural and urban rheumatology outpatients. Medicine 2014;93:e129–e29. doi:10.1097/MD.0000000000000129

- 24. Australian Bureau of Statistics. Adult Literacy and Life Skills Survey, Summary Results, Australia, 2008.

- 25. First Results of the Adult Literacy and Life Skills Survey. Ottawa–Paris: OECD publishing, 2005.

- 26. Harding C, Romanou E, Williams J, et al. The 2011 Skills for Life Survey: a survey of literacy, numeracy, and ICT levels in England. London: Business, Innovation and Skills, 2011.

- 27. Buchbinder R, Hall S, Youd JM. Functional health literacy of patients with rheumatoid arthritis attending a community-based rheumatology practice. J Rheumatol 2006;33:879–86.

- 28. Swearingen CJ, McCollum L, Daltroy LH, et al. Screening for low literacy in a rheumatology setting: more than 10% of patients cannot read "cartilage," "diagnosis," "rheumatologist," or "symptom". J Clin Rheumatol 2010;16:359–64. doi:10.1097/RHU.0b013e3181fe8ab1

- 29. Gordon MM, Hampson R, Capell HA, et al. Illiteracy in rheumatoid arthritis patients as determined by the Rapid Estimate of Adult Literacy in Medicine (REALM) score. Rheumatology 2002;41:750–4. doi:10.1093/rheumatology/41.7.750

- 30. Cairns R, Brown JA, Lynch AM, et al. A decade of Australian methotrexate dosing errors. Med J Aust 2016;204:384. doi:10.5694/mja15.01242

- 31. Australian Rheumatology Association. Medication Information Sheets. https://rheumatology.org.au/patients/medication-information.asp (accessed Dec 2017).

- 32. Hansberry DR, Agarwal N, Shah R, et al. Analysis of the readability of patient education materials from surgical subspecialties. Laryngoscope 2014;124:405–12. doi:10.1002/lary.24261

- 33. Oleander Software. Readability Studio 2015 [program]. 2015. https://www.oleandersolutions.com/readabilitystudio.html.

- 34. Huang G, Fang CH, Agarwal N, et al. Assessment of online patient education materials from major ophthalmologic associations. JAMA Ophthalmol 2015;133:449–54. doi:10.1001/jamaophthalmol.2014.6104

- 35. Douglas A, Kelly-Campbell RJ. Readability of Patient-Reported Outcome Measures in Adult Audiologic Rehabilitation. Am J Audiol 2018;27:208–18. doi:10.1044/2018_AJA-17-0095

- 36. Flesch R. A new readability yardstick. J Appl Psychol 1948;32:221–33. doi:10.1037/h0057532

- 37. McLaughlin GH. SMOG grading-a new readability formula. Journal of Reading 1969;12:639–46.

- 38. Gunning R. The Technique of Clear Writing. New York, NY: McGraw-Hill Book Company, 1968.

- 39. Arthritis Research UK: Drugs. https://www.arthritisresearchuk.org/arthritis-information/drugs.aspx (accessed Dec 2017).

- 40. Rheuminfo. Medications. https://rheuminfo.com/medications/ (accessed 10 Dec 2017).

- 41. Australian Rheumatology Association. Medication Information Sheets (Methotrexate). https://rheumatology.org.au/patients/documents/Methotrexate_2018.pdf (accessed Dec 2017).

- 42. Australian Rheumatology Association. Medication Information Sheets (Non-Steroidal Anti-Inflammatory Drugs NSAIDs). https://rheumatology.org.au/patients/documents/NSAIDS_2017_final_170308_000.pdf (accessed Dec 2017).

- 43. Australian Rheumatology Association. Medication Information Sheets (Adalimumab). https://rheumatology.org.au/patients/documents/Adalimumab_2016_002.pdf (accessed Dec 2017).

- 44. Australian Rheumatology Association. Medication Information Sheets (Abatacept). https://rheumatology.org.au/patients/documents/Abatacept_2016_002.pdf (accessed Dec 2017).

- 45. Australian Rheumatology Association. Medication Information Sheets (Prednisone). https://rheumatology.org.au/patients/documents/Prednisolone_2016_005.pdf (accessed Dec 2017).

- 46. GraphPad Software, Inc. GraphPad Prism 6 [program], 2012.

- 47. Wu DT, Hanauer DA, Mei Q, et al. Applying multiple methods to assess the readability of a large corpus of medical documents. Stud Health Technol Inform 2013;192:647.

- 48. Clerehan R, Buchbinder R, Moodie J. A linguistic framework for assessing the quality of written patient information: its use in assessing methotrexate information for rheumatoid arthritis. Health Educ Res 2005;20:334–44. doi:10.1093/her/cyg123

- 49. Dowse R, Ehlers M. Medicine labels incorporating pictograms: do they influence understanding and adherence? Patient Educ Couns 2005;58:63–70. doi:10.1016/j.pec.2004.06.012

- 50. Mansoor LE, Dowse R. Effect of pictograms on readability of patient information materials. Ann Pharmacother 2003;37:1003–9. doi:10.1345/aph.1C449

- 51. Patel VL, Eisemon TO, Arocha JF. Comprehending instructions for using pharmaceutical products in rural Kenya. Instr Sci 1990;19:71–84. doi:10.1007/BF00377986

- 52. Morrow DG, Hier CM, Menard WE, et al. Icons improve older and younger adults' comprehension of medication information. J Gerontol B Psychol Sci Soc Sci 1998;53:P240–P254. doi:10.1093/geronb/53B.4.P240

- 53. Houts PS, Doak CC, Doak LG, et al. The role of pictures in improving health communication: a review of research on attention, comprehension, recall, and adherence. Patient Educ Couns 2006;61:173–90. doi:10.1016/j.pec.2005.05.004

- 54. Katz MG, Kripalani S, Weiss BD. Use of pictorial aids in medication instructions: a review of the literature. Am J Health Syst Pharm 2006;63:2391–7. doi:10.2146/ajhp060162

- 55. Meppelink CS, Smit EG, Buurman BM, et al. Should we be afraid of simple messages? The effects of text difficulty and illustrations in people with low or high health literacy. Health Commun 2015;30:1181–9. doi:10.1080/10410236.2015.1037425

- 56. Williams MV, Parker RM, Baker DW, et al. Inadequate functional health literacy among patients at two public hospitals. JAMA 1995;274:1677–82. doi:10.1001/jama.1995.03530210031026

- 57. Smith H, Gooding S, Brown R, et al. Evaluation of readability and accuracy of information leaflets in general practice for patients with asthma. BMJ 1998;317:264–5. doi:10.1136/bmj.317.7153.264

- 58. Wang LW, Miller MJ, Schmitt MR, et al. Assessing readability formula differences with written health information materials: application, results, and recommendations. Res Social Adm Pharm 2013;9:503–16. doi:10.1016/j.sapharm.2012.05.009

- 59. Bailin A, Grafstein A. The linguistic assumptions underlying readability formulae. Lang Commun 2001;21:285–301. doi:10.1016/S0271-5309(01)00005-2

- 60. Zion AB, Aiman J. Level of reading difficulty in the American College of Obstetricians and Gynecologists patient education pamphlets. Obstet Gynecol 1989;74:955–60.

- 61. Friedman DB, Hoffman-Goetz L. A systematic review of readability and comprehension instruments used for print and web-based cancer information. Health Educ Behav 2006;33:352–73. doi:10.1177/1090198105277329

- 62. Doak LG, Doak CC, Meade CD. Strategies to improve cancer education materials. Oncol Nurs Forum 1996;23:1305–12.

- 63. Hirsh D, Clerehan R, Staples M, et al. Patient assessment of medication information leaflets and validation of the Evaluative Linguistic Framework (ELF). Patient Educ Couns 2009;77:248–54. doi:10.1016/j.pec.2009.03.011

- 64. Baker GC, Newton DE, Bergstresser PR. Increased readability improves the comprehension of written information for patients with skin disease. J Am Acad Dermatol 1988;19:1135–41. doi:10.1016/S0190-9622(88)70280-7

- 65. Overland JE, Hoskins PL, McGill MJ, et al. Low literacy: a problem in diabetes education. Diabet Med 1993;10:847–50. doi:10.1111/j.1464-5491.1993.tb00178.x
