Abstract

Line-field confocal optical coherence tomography (LC-OCT) operating in two distinct spectral bands centered at 770 nm and 1250 nm is reported, using a single supercontinuum light source and two different line-scan cameras. B-scans are acquired simultaneously in the two bands at 4 frames per second. Greyscale and color fusion of the images are performed either to produce a single image combining high resolution (1.3 µm × 1.2 µm, lateral × axial, measured at the surface) in the superficial part of the image with deep penetration, or to highlight the spectroscopic properties of the sample. In vivo images of fair and dark skin are presented with a penetration depth of ∼700 µm.

1. Introduction

Optical coherence tomography (OCT) is an optical technique based on low-coherence interferometry for imaging semi-transparent samples with micrometric resolution. Since its invention in 1991 [1], OCT has grown rapidly in the field of medical imaging [2]. It is now widely used clinically in ophthalmology [3] and is beginning to be used in cardiology for intravascular imaging [4], in gastroenterology [5], and in dermatology to assist in the diagnosis of cancerous lesions [6].

Line-field confocal optical coherence tomography (LC-OCT) is a recently invented imaging technique [7] based on the principle of time-domain OCT [1], with line illumination and line detection. LC-OCT produces B-scans in real time from multiple A-scans acquired in parallel. The focus is continuously adjusted during the scan of the sample depth, which allows the use of high numerical aperture (NA) microscope objectives to image with high lateral resolution. By using a supercontinuum laser as the light source and balancing the optical dispersion in the interferometer arms, LC-OCT reaches an axial resolution equal to the best achieved in OCT at comparable central wavelengths [8,9]. In addition, line illumination and detection, combined with high-NA microscope objectives, produce an efficient confocal gate that prevents most of the light scattered within the sample without contributing to the signal from being detected by the camera, which is beneficial for the imaging penetration depth in scattering biological tissues [10]. Due to the spectral response of the silicon-based line-scan camera used in the original LC-OCT prototype, imaging was performed at a central wavelength of ~800 nm with a quasi-isotropic spatial resolution of ~1 µm [11]. In skin tissues, the imaging penetration depth was ~400 µm, which made it possible to image the region of the dermal-epidermal junction, where most tumors appear, with cellular-level spatial resolution. This imaging system is promising for skin cancer detection [7], especially for melanoma, which needs to be detected in its early stages of development to be treated efficiently. However, it can be of interest to extend the penetration depth of LC-OCT to image deeper in the dermis, for example to better evaluate margins during Mohs surgery, to investigate the structure of collagen fibers, or to study the effects of aging on the deeper layers of the skin.

In most biological tissues, scattering of light is the dominant factor that limits the imaging penetration depth [12]. Since scattering becomes weaker at longer wavelengths, imaging with longer wavelengths significantly improves the penetration depth, as long as the absorption of light by the tissue does not become limiting [13]. OCT imaging in the spectral region around 1300 nm has been shown to be optimal for penetration in highly scattering tissues such as skin [14]. However, the improved penetration achieved at longer wavelengths comes at the expense of spatial resolution. It is therefore interesting to image in distinct spectral bands simultaneously to benefit from both penetration and resolution, which has already been demonstrated in dual-band and three-band OCT systems [15–18].

We present in this paper an LC-OCT system operating simultaneously in two distinct bands centered at 770 nm and 1250 nm, referred to as the Vis/nIR band and the nIR band, respectively. Ultrahigh-resolution imaging is achieved in the Vis/nIR band, whereas imaging with extended penetration depth is achieved in the nIR band. The images acquired in both bands are compounded into a single greyscale image that combines the specific advantages of each band in terms of resolution and penetration. A color representation can also be made to enhance the contrast and highlight differences in the spectroscopic properties of the sample.

2. Experimental setup

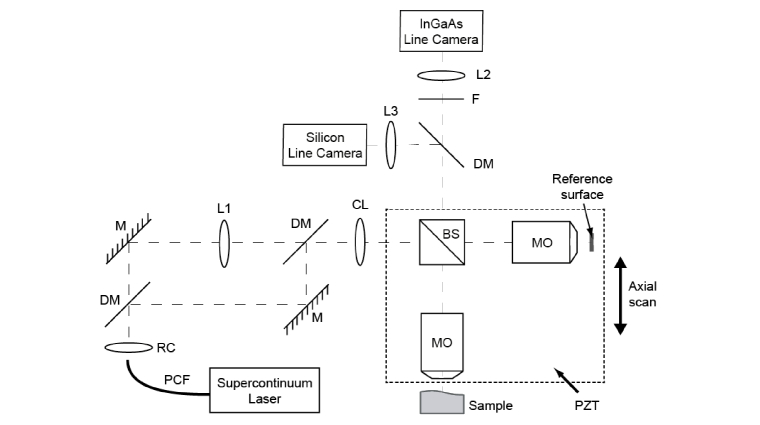

The layout of our dual-band LC-OCT prototype is depicted in Fig. 1. The setup is based on a Linnik-type interferometer with a water-immersion microscope objective (MO, Olympus UMPLanFLN 20x/0.5W) placed in each of the two interferometer arms. A supercontinuum laser source (NKT Photonics SuperK EXR4), emitting from 480 nm to 2100 nm, is used as a broadband light source. A cylindrical lens (50-mm focal length, Thorlabs LJ1695RM) is placed in front of the interferometer so as to illuminate the sample with a line of light. After spectral separation by a dichroic mirror (Thorlabs DMLP950), this line is imaged by two tube lenses of 200-mm focal length (L3, Thorlabs TTL200-B; L2, Thorlabs TTL200-S8) onto a silicon CCD line-scan camera (e2v Aviiva EM4 BA9, 2048 pixels, 12 bits, 70 kHz) and an InGaAs line-scan camera (SUI Goodrich LDH2, 1024 pixels, 12 bits, 91 kHz), respectively. The pixel size of the silicon camera is 14 µm × 28 µm (width × height). The InGaAs camera has square 25-µm pixels. The interferometer, consisting of a non-polarizing beam splitter and the two microscope objectives, is attached to a piezoelectric-driven linear stage (Physik Instrumente P625.1CD) to enable depth scanning of the sample. The non-polarizing beam splitter (Thorlabs BS015) has broadband coatings designed for antireflection and a 50/50 splitting ratio in the spectral range between 1100 nm and 1600 nm. It is therefore suited to the nIR band but not perfectly to the Vis/nIR band, where light losses are not minimized. We chose to favor the nIR band because it requires more optical power, the pixels of the InGaAs camera having a much higher full-well capacity than those of the silicon camera.

Fig. 1.

Schematic of the dual-band LC-OCT device. PCF: Photonic-crystal fiber; RC: reflective collimator; DM: long-pass (950 nm) dichroic mirror; F: long-pass filter; M: mirror; L1: adjustable focal length optical system; CL: cylindrical lens; BS: cube beam splitter; MO: microscope objective; PZT: piezo-electric linear stage; L2, L3: tube lenses.

Due to chromatic aberrations of the microscope objectives, the object focal planes in the Vis/nIR and nIR bands are separated by about 20 µm, which is greater than the depth of focus. In the absence of optical dispersion mismatch between the interferometer arms, the coherence plane (defined as the plane of zero optical path difference) is independent of the wavelength. Thanks to a careful balance of the optical dispersion in the interferometer arms of our setup (described below), the coherence plane is at the same position in both bands. Consequently, the coherence plane cannot be superimposed simultaneously on the two distinct object focal planes. The length of the reference arm is adjusted so that the coherence plane matches the object focal plane of the Vis/nIR band. The sensor of the Si camera is thus placed in the image focal plane of tube lens L3, whereas the sensor of the InGaAs camera is placed 11 mm away from the image focal plane of tube lens L2, so that both cameras are conjugated with the same plane in object space. The microscope objective and the tube lens of the nIR band are therefore not used in an infinite-conjugate configuration, which is theoretically not optimal with respect to optical aberrations. However, compared with the ideal conjugation, no degradation of image resolution was observed in practice.

Moreover, the illumination line must be focused in the coherence plane for both imaging bands. This cannot be achieved with a single illumination beam because of the chromatic aberrations of the microscope objectives. The beam of the supercontinuum laser at the output of the photonic crystal fiber is therefore divided into two beams by a spectral splitter with a cutoff wavelength of 950 nm (NKT SuperK Split). Each of the two beams is injected into a suitable single-mode optical fiber. The Vis/nIR beam is collimated using an achromatic reflective collimator (Thorlabs RC12APC-P01). The nIR beam is collimated and then made slightly convergent using an optical system with an adjustable focal length. In practice, we use a reflective collimator with a smaller output beam diameter than for the Vis/nIR band (Thorlabs RC08APC-P01), followed by a fixed-magnification beam expander with adjustable collimation (Thorlabs GBE02-C). The beam expander adjusts the divergence of the nIR beam so that the nIR illumination line is superimposed on the Vis/nIR line in the coherence plane. The two beams are recombined by a dichroic mirror (Thorlabs DMLP1000) before entering the interferometer.

In order to ensure identical optical dispersion in both arms of the interferometer, the microscope objectives are immersed in vats filled with oil and sealed with fused silica glass plates of identical thickness. In the reference arm, the external side of the glass plate is used as a plane reference surface, reflecting 3.4% and 3.3% of the light in the Vis/nIR and nIR bands, respectively. In the sample arm, the glass plate provides a plane contact surface that keeps the sample stable and flat. In addition, to avoid a shift between the focal plane and the coherence plane as the sample depth is scanned, caused by the gradual substitution of the immersion medium by the sample tissue [19], we use an immersion medium that matches the mean refractive index of skin (∼1.40) and is highly transparent in both bands (Cargille Laboratories, code S1050, n = 1.4035 at 583.3 nm). Finally, a dermoscopic oil is deposited between the glass plate and the surface of the sample to minimize the reflection of light at the interfaces.
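As a quick consistency check on the quoted reference reflectivities, the sketch below computes the normal-incidence Fresnel reflectance of a fused-silica/air interface. It assumes two things the text does not state explicitly: that the reference plate is fused silica described by the standard Malitson (1965) Sellmeier coefficients, and that the light strikes its external face at normal incidence. Under these assumptions it gives about 3.42% at 770 nm and 3.34% at 1250 nm, in line with the 3.4% and 3.3% quoted above.

```python
import math

# Malitson (1965) Sellmeier coefficients for fused silica (wavelength in µm).
SELLMEIER_B = (0.6961663, 0.4079426, 0.8974794)
SELLMEIER_C = (0.0684043, 0.1162414, 9.896161)  # resonance wavelengths, µm


def fused_silica_index(wavelength_um: float) -> float:
    """Refractive index of fused silica from the Sellmeier equation."""
    lam2 = wavelength_um ** 2
    n2 = 1.0 + sum(b * lam2 / (lam2 - c ** 2) for b, c in zip(SELLMEIER_B, SELLMEIER_C))
    return math.sqrt(n2)


def fresnel_reflectance(n1: float, n2: float) -> float:
    """Power reflectance of a flat interface between media n1 and n2 at normal incidence."""
    return ((n1 - n2) / (n1 + n2)) ** 2


for lam_um in (0.770, 1.250):  # central wavelengths of the two bands
    n_glass = fused_silica_index(lam_um)
    r = fresnel_reflectance(1.0, n_glass)  # assumed glass/air reference surface
    print(f"{lam_um * 1000:.0f} nm: n = {n_glass:.4f}, R = {100 * r:.2f} %")
```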

The interferometric signal acquired by the cameras during the scan of the sample depth is demodulated by digital extraction of the fringe envelope using a five-frame phase-shifting algorithm with a phase step of $\pi/2$ [20]. To induce a phase shift of $\pi/2$ between two adjacent frames, the interferometer must move by $\Delta z = \lambda/(8n)$ between two successive images acquired by the cameras, where $\lambda$ and $n$ denote the central wavelength of the detected spectrum and the refractive index of the immersion medium in each band, respectively. Since the waveform driving the piezoelectric stage is known, the appropriate phase shift is obtained by timing the camera triggers with a field-programmable gate array (FPGA). The triggering frequencies are 46.5 kHz for the Si camera and 26.5 kHz for the InGaAs camera. The FPGA also collects the data from the cameras and processes it with the above-mentioned algorithm before sending it to a computer for real-time visualization of the resulting cross-sectional images at 4 frames per second. The images are displayed on the computer monitor with a gamma correction of 0.25 in both bands.
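The two numerical steps described above can be sketched as follows. Moving the interferometer by $\Delta z$ changes the round-trip optical path difference by $2n\,\Delta z$, so a $\pi/2$ phase step requires $\Delta z = \lambda/(8n)$. For the envelope, the sketch uses Larkin's nonlinear five-frame formula for $\pi/2$ steps, $4A^2 = (I_2-I_4)^2-(I_1-I_3)(I_3-I_5)$. This is a well-known choice, but it is assumed here rather than confirmed to be the exact algorithm of [20]. The display function reads "gamma correction of 0.25" as raising the normalized amplitude to the power 0.25, which is also an assumption. All function names are illustrative.

```python
import numpy as np


def phase_step_displacement(wavelength_um, n_medium, phase_step=np.pi / 2):
    """Interferometer displacement that produces `phase_step` between frames.

    Moving the interferometer by dz changes the round-trip optical path
    difference by 2 * n * dz, i.e. the phase by 4 * pi * n * dz / wavelength.
    """
    return phase_step * wavelength_um / (4 * np.pi * n_medium)


def five_frame_envelope(i1, i2, i3, i4, i5):
    """Fringe amplitude from five frames with pi/2 phase steps (Larkin-type formula)."""
    m2 = (i2 - i4) ** 2 - (i1 - i3) * (i3 - i5)
    return 0.5 * np.sqrt(np.clip(m2, 0.0, None))  # clip guards against noise-induced negatives


def sliding_envelope(signal):
    """Apply the five-frame formula to every run of five consecutive frames."""
    s = np.asarray(signal, dtype=float)
    return five_frame_envelope(s[:-4], s[1:-3], s[2:-2], s[3:-1], s[4:])


def display_gamma(amplitude, gamma=0.25):
    """Normalise to [0, 1] and apply a power-law display curve (assumed meaning of 'gamma')."""
    a = np.asarray(amplitude, dtype=float)
    return (a / a.max()) ** gamma


# Illustrative values: 770 nm band, immersion index ~1.40.
dz = phase_step_displacement(0.770, 1.40)        # ~0.069 µm per frame
z = np.arange(400) * dz                           # axial positions of the frames, µm
envelope_true = np.exp(-((z - z.mean()) / 1.0) ** 2)
fringes = 1.0 + envelope_true * np.cos(4 * np.pi * 1.40 * z / 0.770)
envelope = sliding_envelope(fringes)              # aligned with frames 2 .. N-3
print(f"step = {dz * 1000:.1f} nm, peak envelope = {envelope.max():.3f}")
```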

3. System performance characterization

Spectral bands

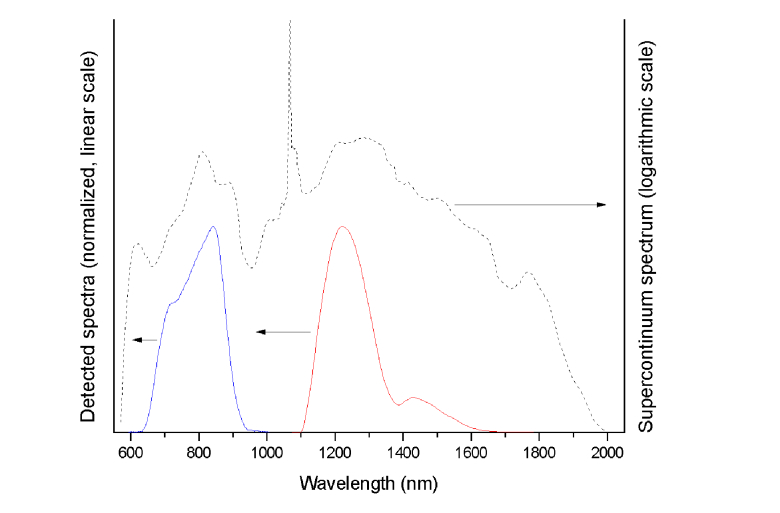

The spectral profiles of the two bands were determined by Fourier analysis of an interference pattern acquired from a glass/air interface. They are shown in Fig. 2 along with the emission spectrum of the supercontinuum laser. The measured spectra correspond to the product of the emission spectrum of the supercontinuum laser source, the spectral response of the cameras, and the spectral transmission/reflectivity of the optical elements along the optical path. For spectral separation of the two bands before detection, a longpass dichroic mirror with a cutoff wavelength of 950 nm is used. This wavelength corresponds to the end of the spectral sensitivity of the Si camera and the beginning of the spectral sensitivity of the InGaAs camera. A longpass filter (F) with a cutoff wavelength of 1100 nm is placed in the nIR detection path to remove the high peak at 1064 nm present in the supercontinuum laser spectrum; this is imperative to avoid strong side lobes in the interferogram that would generate artifacts in the images. The nIR band thus starts at 1100 nm, although the InGaAs camera can detect shorter wavelengths. Note that the longpass filter (F) could also be placed in the nIR illumination beam before the interferometer, with the advantage of reducing the light exposure of the sample.

Fig. 2.

Measured spectra of the Vis/nIR (solid blue line) and nIR bands (solid red line), derived by Fourier transform of the interference patterns shown in Fig. 3. The emission spectrum of the supercontinuum laser source (dashed black line) presents a high peak at 1064 nm.

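The central wavelength $\lambda_0$ of a measured spectrum $S(\lambda)$ is defined as its center of mass, according to

$$\lambda_0 = \frac{\int \lambda\, S(\lambda)\, d\lambda}{\int S(\lambda)\, d\lambda}. \qquad (1)$$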

According to this definition, central wavelengths of 770 nm and 1250 nm are measured in the Vis/nIR and nIR bands, respectively.

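The spectral width is then evaluated from the variance of the spectral distribution as

$$\Delta\lambda = 2\sqrt{2\ln 2}\;\sigma, \qquad (2)$$

where $\sigma^2$, the variance of the measured spectrum $S(\lambda)$ about its central wavelength $\lambda_0$, is

$$\sigma^2 = \frac{\int (\lambda - \lambda_0)^2\, S(\lambda)\, d\lambda}{\int S(\lambda)\, d\lambda}. \qquad (3)$$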

Spectral widths of 150 nm and 210 nm are measured in the Vis/nIR and nIR bands, respectively. Because of the shape of the spectra, this general definition of the spectral width is more appropriate in our case than the conventional full width at half maximum (FWHM), especially for the nIR band, whose spectrum presents a strong side lobe (see Fig. 2). Note that this definition gives the same value as the FWHM for a Gaussian-shaped spectrum.
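The center-of-mass and variance-based definitions above translate directly into code. The sketch assumes a spectrum sampled on a uniform wavelength grid, so the grid step cancels in each ratio. It uses the factor $2\sqrt{2\ln 2}$, which makes the width equal to the FWHM for a Gaussian spectrum. The synthetic Gaussian in the example is illustrative, not measured data.

```python
import numpy as np

FWHM_PER_SIGMA = 2.0 * np.sqrt(2.0 * np.log(2.0))  # ~2.355 for a Gaussian


def spectral_center_and_width(wavelength_nm, spectrum):
    """Center of mass and variance-based width of a sampled spectrum."""
    lam = np.asarray(wavelength_nm, dtype=float)
    s = np.asarray(spectrum, dtype=float)
    total = s.sum()
    lam0 = (lam * s).sum() / total                            # center of mass
    sigma = np.sqrt(((lam - lam0) ** 2 * s).sum() / total)    # standard deviation
    return lam0, FWHM_PER_SIGMA * sigma


# Synthetic Gaussian spectrum with the Vis/nIR parameters (770 nm, 150 nm FWHM).
lam = np.linspace(400.0, 1200.0, 4001)
sigma_true = 150.0 / FWHM_PER_SIGMA
spectrum = np.exp(-0.5 * ((lam - 770.0) / sigma_true) ** 2)
print(spectral_center_and_width(lam, spectrum))  # close to (770, 150)
```

For a spectrum with a side lobe, such as the nIR band, the same function still returns a well-defined width, whereas a half-maximum crossing can be ambiguous.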

Spatial resolution

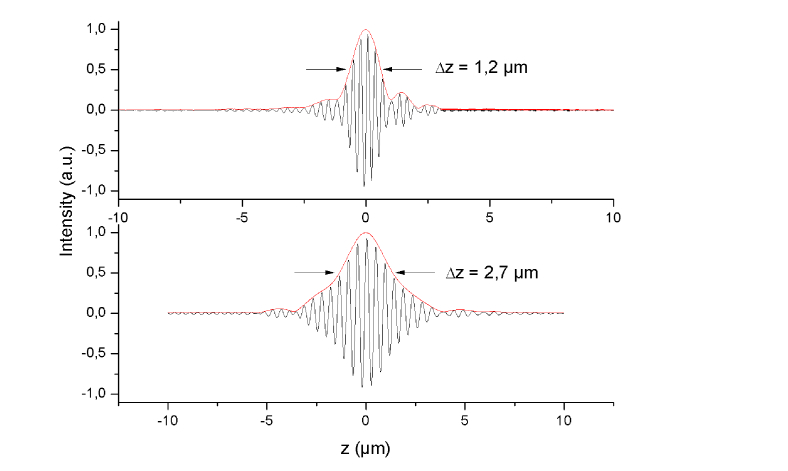

The axial resolution of our imaging system is defined as the FWHM of the envelope of the interference pattern from a glass/air interface. For this purpose, the interference pattern is flattened to remove the confocal reflection from the interface, and the envelope is extracted using the method proposed in [21] (see Fig. 3). The axial resolution was measured at 1.2 µm in the Vis/nIR band and at 2.7 µm in the nIR band. The theoretical axial resolutions, computed assuming Gaussian-shaped spectra with central wavelengths and spectral widths equal to the measured values, are 1.3 µm and 2.4 µm, respectively. The discrepancy between theoretical and experimental resolutions in both bands is attributed to the non-Gaussian shape of the experimental spectra. In particular, the measured fringe envelope in the Vis/nIR band (Fig. 3(a)) presents non-negligible side lobes, which leads to an optimistic estimate of the axial resolution when it is defined as the FWHM.

Fig. 3.

Interference patterns measured from a glass/air interface in the Vis/nIR band (a) and the nIR band (b).
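For reference, the theoretical axial resolutions discussed above follow from the standard coherence-length formula for a Gaussian spectrum, $\delta z = (2\ln 2/\pi)\,\lambda_0^2/(n\,\Delta\lambda)$. The text does not state which refractive index was used, so the sketch assumes $n \approx 1.40$, the immersion-medium index quoted earlier. With the measured centers and widths it gives about 1.25 µm and 2.35 µm. The small gap with the quoted 1.3 µm and 2.4 µm may come from rounding of the spectral parameters or from a different choice of index.

```python
import math


def gaussian_axial_resolution_um(center_nm, width_nm, n_medium=1.0):
    """Axial FWHM (µm) of the coherence envelope for a Gaussian spectrum.

    delta_z = (2 ln 2 / pi) * lambda0**2 / (n * delta_lambda)
    """
    dz_nm = (2.0 * math.log(2.0) / math.pi) * center_nm ** 2 / (n_medium * width_nm)
    return dz_nm / 1000.0


N_ASSUMED = 1.40  # assumption: refractive index of the immersion medium / skin
for band, center, width in (("Vis/nIR", 770.0, 150.0), ("nIR", 1250.0, 210.0)):
    res = gaussian_axial_resolution_um(center, width, N_ASSUMED)
    print(f"{band}: {res:.2f} µm")
```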

The lateral resolution of our imaging system is defined as the 20-80% width of the intensity profile across the image of a sharp edge. The measured values are 1.3 µm and 2.3 µm in the Vis/nIR and nIR bands, respectively. These results are consistent with the measured central wavelengths, since the ratio of the lateral resolutions is approximately equal to the ratio of the central wavelengths. However, these values are not as good as those expected for a diffraction-limited imaging system (1.0 µm and 1.6 µm, respectively), the experimental values being about 30% to 45% larger than the theoretical ones. This may be explained by optical aberrations due to the use of an immersion medium other than water and to the presence of the glass plate.
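The 20-80% edge criterion can be illustrated on a simulated edge. The sketch assumes a Gaussian line-spread function of standard deviation $\sigma$. In that case the edge profile is an error function and the 20-80% width equals $2 \times 0.8416\,\sigma \approx 1.683\,\sigma$. Real edge data would replace the simulated profile, and the value of $\sigma$ is illustrative only.

```python
import math

import numpy as np


def edge_width_20_80(x, edge_profile):
    """Distance between the 20 % and 80 % crossings of a rising edge profile."""
    p = np.asarray(edge_profile, dtype=float)
    p = (p - p.min()) / (p.max() - p.min())       # normalise to [0, 1]
    # Invert the (monotonic) profile by interpolating x as a function of p.
    x20 = np.interp(0.2, p, x)
    x80 = np.interp(0.8, p, x)
    return x80 - x20


sigma_um = 0.6                                     # illustrative Gaussian LSF width
x = np.linspace(-3.0, 3.0, 6001)                   # lateral position, µm
esf = np.array([0.5 * (1.0 + math.erf(v / (math.sqrt(2.0) * sigma_um))) for v in x])
print(f"20-80% width: {edge_width_20_80(x, esf):.3f} µm")  # ~1.683 * sigma
```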

Since the axial and lateral resolutions are decoupled, an almost isotropic spatial resolution could be reached. Note that the measured resolution corresponds to the resolution at the surface of the object. The effective resolution in depth may be degraded by several phenomena, such as optical aberrations and optical dispersion mismatch in the interferometer. Since the numerical aperture of the microscope objectives is moderate (0.5) and the immersion medium has a refractive index close to that of the object (skin tissue), we can reasonably assume that the degradation of the resolution at depths of less than a few hundred micrometers is small. Observation of the images seems to confirm this assumption.

Detection sensitivity

In LC-OCT, line illumination with spatially coherent light and line detection with line-scan cameras, combined with relatively high-NA microscope objectives, provide an efficient confocal gate that prevents most of the unwanted scattered light from being detected. LC-OCT can be considered as a slit-scanning confocal interference microscope in which the line-scan cameras act as slit detectors, the width of the slit being determined by the height of the camera pixels (28 µm and 25 µm for the Si camera and the InGaAs camera, respectively).

Defined as the smallest detectable reflectivity, the detection sensitivity was taken as the mean value of the background noise in the images. It can be expressed as an equivalent reflectivity coefficient by comparison with the signal measured from an interface of known reflectivity. Using the plane interface between the glass window and the dermoscopic oil as a calibration sample of reflectivity ∼8 × 10⁻⁵, the detection sensitivity was evaluated at 86 dB in the Vis/nIR band and 94 dB in the nIR band. The difference can mainly be explained by the characteristics of the cameras. The full-well capacities (FWC) of the detectors are 0.31 Me⁻ for the Si camera and 8.7 Me⁻ for the InGaAs camera. Taking into account only the FWC, the theoretical difference between the two sensitivities would be 10 log₁₀(8.7/0.31) ≈ 14.5 dB. The measured difference is smaller because the InGaAs camera has a higher electronic noise and is not shot-noise limited [22].
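The calibration and the full-well argument can be written out as below. The signal and noise values in the example are made up for illustration. The `amplitude_signal` flag is an assumption: if the demodulated image is proportional to the fringe amplitude, the signal scales with $\sqrt{R}$ and the noise-to-signal ratio must be squared; if it is proportional to $R$, it must not. The full-well comparison gives about 14.48 dB for 8.7 Me⁻ versus 0.31 Me⁻.

```python
import math


def sensitivity_db(signal_cal, noise_mean, r_cal, amplitude_signal=True):
    """Detection sensitivity (dB) from a calibration interface of known reflectivity.

    The smallest detectable reflectivity is the reflectivity whose signal would
    equal the mean background noise.
    """
    ratio = noise_mean / signal_cal
    r_min = r_cal * (ratio ** 2 if amplitude_signal else ratio)
    return -10.0 * math.log10(r_min)


def fwc_sensitivity_gap_db(fwc_low_e, fwc_high_e):
    """Shot-noise-limited sensitivity gain expected from a larger full-well capacity."""
    return 10.0 * math.log10(fwc_high_e / fwc_low_e)


# Hypothetical calibration numbers on the glass/oil interface (R ~ 8e-5).
print(f"{sensitivity_db(signal_cal=1000.0, noise_mean=1.0, r_cal=8e-5):.1f} dB")
print(f"FWC gap: {fwc_sensitivity_gap_db(0.31e6, 8.7e6):.2f} dB")
```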

Image acquisition and merging

The images in the two bands are acquired with two different line-scan cameras that do not have the same field of view. The Si detector is 28.7 mm wide (2048 pixels of 14 µm), whereas the InGaAs detector is 25.6 mm wide (1024 pixels of 25 µm). The images therefore have to be registered so that their fields of view coincide exactly before they can be merged. Registration was done by calibrating the device with a reference object composed of thin glass plates arranged in the form of “stairs”. The edges of the plates were used to precisely select common points, from which a linear transformation was computed. The sides of the larger image (the one obtained in the Vis/nIR band) are then cropped so that both images have the same field of view of 1.15 mm in object space.
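A least-squares affine fit is one simple way to compute such a linear transformation from matched points. The sketch assumes that the common points are given as (x, z) pixel coordinates in each image, and the function names and example transform are hypothetical. It also checks the quoted field of view. For this check it assumes a 9-mm objective focal length, the usual value for an Olympus 20x objective designed for a 180-mm tube lens, which gives a magnification of about 200/9 with the 200-mm tube lenses.

```python
import numpy as np


def fit_affine_2d(src, dst):
    """Least-squares affine map dst ~ A @ p + t from matched (x, z) points."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    design = np.hstack([src, np.ones((len(src), 1))])        # rows [x, z, 1]
    params, *_ = np.linalg.lstsq(design, dst, rcond=None)     # shape (3, 2)
    return params[:2].T, params[2]                            # A (2x2), t (2,)


def apply_affine_2d(a, t, points):
    """Map (x, z) points with the fitted transformation."""
    return np.asarray(points, dtype=float) @ a.T + t


# Hypothetical control points: nIR pixel coordinates mapped to Vis/nIR ones.
rng = np.random.default_rng(0)
nir_pts = rng.uniform(0, 1000, size=(12, 2))
a_true = np.array([[1.79, 0.01], [0.0, 0.98]])   # ~25/14 lateral pixel ratio
t_true = np.array([-115.0, 4.0])
vis_pts = apply_affine_2d(a_true, t_true, nir_pts) + rng.normal(0, 0.2, size=(12, 2))
a_fit, t_fit = fit_affine_2d(nir_pts, vis_pts)

# Field of view in object space, assuming a magnification of 200 / 9.
magnification = 200.0 / 9.0
fov_nir_mm = 1024 * 25e-3 / magnification      # ~1.15 mm
fov_vis_mm = 2048 * 14e-3 / magnification      # ~1.29 mm before cropping
print(np.round(a_fit, 3), np.round(t_fit, 1), round(fov_nir_mm, 3), round(fov_vis_mm, 3))
```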

The main specifications of our LC-OCT imaging system in the two bands are summarized in Table 1.

Table 1. Specifications in the two imaging bands.

| Spectral band | Vis/nIR | nIR |
|---|---|---|
| Central wavelength | 770 nm | 1250 nm |
| Spectral width | 150 nm | 210 nm |
| Axial resolution | 1.2 µm | 2.7 µm |
| Lateral resolution | 1.3 µm | 2.3 µm |
| Detection sensitivity | 86 dB | 94 dB |

4. Application to skin imaging

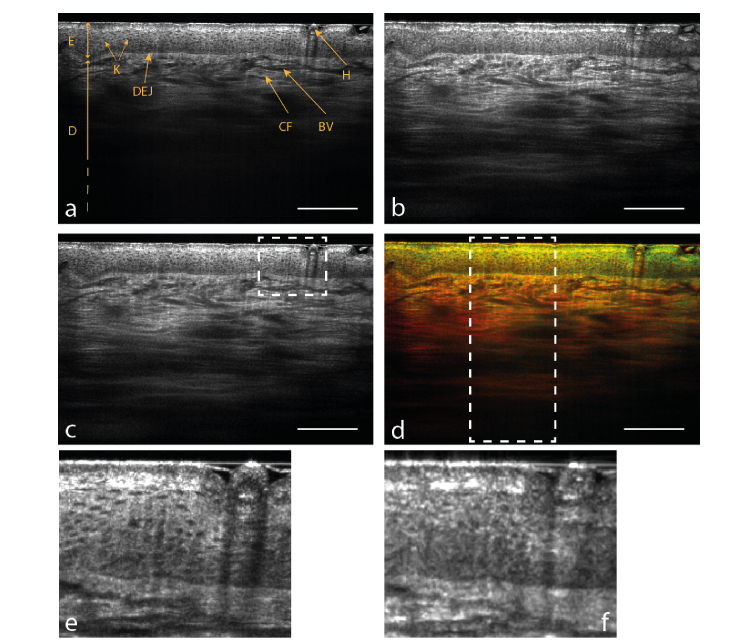

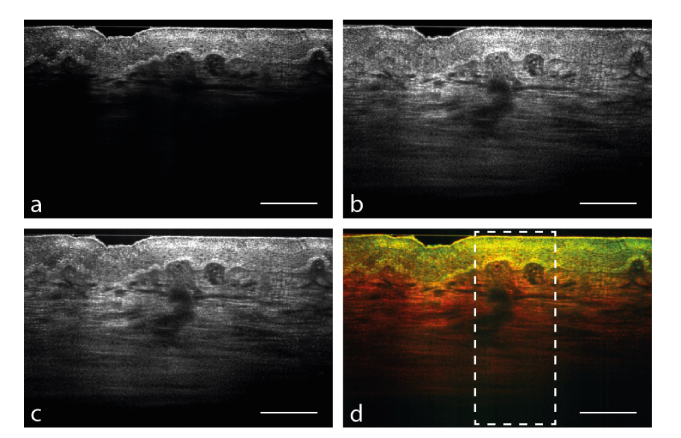

Simultaneously acquired images of the back of the hand of a 25-year-old male are displayed in Fig. 4(a,b). The two main layers of skin can be distinguished: the epidermis and the dermis. The dermal-epidermal junction (DEJ) is clearly visible. The epidermis is divided into the stratum corneum, the superficial layer mostly containing dead cells and responsible for the roughness of the skin; the stratum granulosum; the stratum spinosum, containing the keratinocytes, whose nuclei appear as black spots; and the basal layer, formed by basal cells, which separates the stratum spinosum from the dermis. The dermis is mostly composed of collagen fibers and contains the blood vessels. Compared with the Vis/nIR image, the nIR image displays a substantial increase in penetration depth, from approximately 400 µm to 700 µm, making it possible to see significantly deeper into the dermis. However, the spatial resolution is degraded in the nIR band, in particular in the stratum spinosum, where the cell nuclei become less noticeable.

Fig. 4.

Simultaneous in vivo B-scans of skin on the back of the hand in the Vis/nIR band centered at 770 nm (a) and in the nIR band centered at 1250 nm (b). (c) is the compounded image from (a) and (b). (d) is the color image where (a) is assigned to the green channel and (b) to the red channel. (e) and (f) are magnified images from (a) and (b) respectively, corresponding to the region in the dashed frame drawn in (c). Scale bar: 200 µm.

The simplest method for greyscale fusion of the images acquired in both bands is to average them. However, this method reduces the resolution in the superficial part of the compounded image and takes the Vis/nIR image into account in the deep part, where it brings no information. An “up-down” fusion method, based on a linear depth-dependent weighting law correlated with the average signal intensity as a function of depth, better preserves the valuable features of both images. This method was used to produce the compounded image shown in Fig. 4(c). It is justified by the fact that the Vis/nIR image always displays better resolution in the superficial parts, whereas the nIR image always penetrates deeper into the sample. Also, as the output is a linear combination of the images, speckle reduction through frequency compounding occurs to some extent [23,24]. Note that for in vivo imaging, simple time-averaging of successive images can effectively reduce speckle through spatial compounding, owing to micro-movements of the sample during acquisition. In Fig. 4(c), the compounded image achieves both high resolution in the epidermis and high penetration in the dermis.
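A minimal sketch of an up-down blend is given below. The text ties the linear law to the average signal intensity versus depth without giving its exact form. Here, the transition depths `z_start` and `z_stop` are therefore hypothetical parameters that one might, for instance, pick from the mean depth profiles. Above `z_start` the Vis/nIR image is used alone, below `z_stop` the nIR image alone, and the two are crossfaded linearly in between. Both images are assumed to be registered and normalized to the same display scale.

```python
import numpy as np


def up_down_fusion(vis, nir, z_start, z_stop):
    """Depth-weighted blend of two registered B-scans (axis 0 = depth, in pixels)."""
    vis = np.asarray(vis, dtype=float)
    nir = np.asarray(nir, dtype=float)
    depth = np.arange(vis.shape[0], dtype=float)
    # Weight of the Vis/nIR image: 1 above z_start, 0 below z_stop, linear in between.
    w_vis = np.clip((z_stop - depth) / (z_stop - z_start), 0.0, 1.0)[:, None]
    return w_vis * vis + (1.0 - w_vis) * nir


vis = np.ones((100, 8))
nir = np.zeros((100, 8))
fused = up_down_fusion(vis, nir, z_start=20, z_stop=60)
print(fused[[0, 40, 99], 0])  # top from Vis/nIR, midway 50/50, bottom from nIR
```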

Color images were constructed in the red-green-blue color space, with the nIR image assigned to the red channel and the Vis/nIR image to the green channel. The images are smoothed with a Gaussian filter to compensate for the large difference in resolution and to reduce high-frequency noise. Consequently, areas where backscattering in the Vis/nIR band dominates appear in green tones, while those where the nIR band prevails appear red. Where both bands backscatter equally, pixels are yellow. With this method, the brightness of a pixel simply depends on the intensities of the individual images; in terms of intensity, the color compounded image is therefore equivalent to a simple pixelwise average. For fair skin (Fig. 4(d)), the epidermis appears mostly yellow, as both bands are equally prominent. The dermis exhibits a color gradient from light orange to red, in accordance with the wavelength dependence of scattering.
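The color mapping can be sketched with NumPy alone. Each band is smoothed with a separable Gaussian filter and then written into the red (nIR) or green (Vis/nIR) channel, leaving blue empty. The filter width `sigma` is a hypothetical value, since the text does not specify it. The inputs are assumed to be registered and scaled to [0, 1].

```python
import numpy as np


def gaussian_kernel_1d(sigma):
    """Normalised 1-D Gaussian kernel truncated at 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def gaussian_smooth(img, sigma):
    """Separable Gaussian smoothing with reflected borders (same output shape)."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    out = np.pad(np.asarray(img, dtype=float), r, mode="reflect")
    out = np.apply_along_axis(np.convolve, 0, out, k, mode="valid")
    out = np.apply_along_axis(np.convolve, 1, out, k, mode="valid")
    return out


def color_fusion(vis, nir, sigma=2.0):
    """RGB composite: nIR -> red, Vis/nIR -> green, blue left at zero."""
    vis = np.asarray(vis, dtype=float)
    rgb = np.zeros(vis.shape + (3,))
    rgb[..., 0] = gaussian_smooth(nir, sigma)
    rgb[..., 1] = gaussian_smooth(vis, sigma)
    return np.clip(rgb, 0.0, 1.0)


# Equal intensities in both bands give equal red and green, i.e. a yellow tone.
equal = color_fusion(np.full((10, 10), 0.5), np.full((10, 10), 0.5))
```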

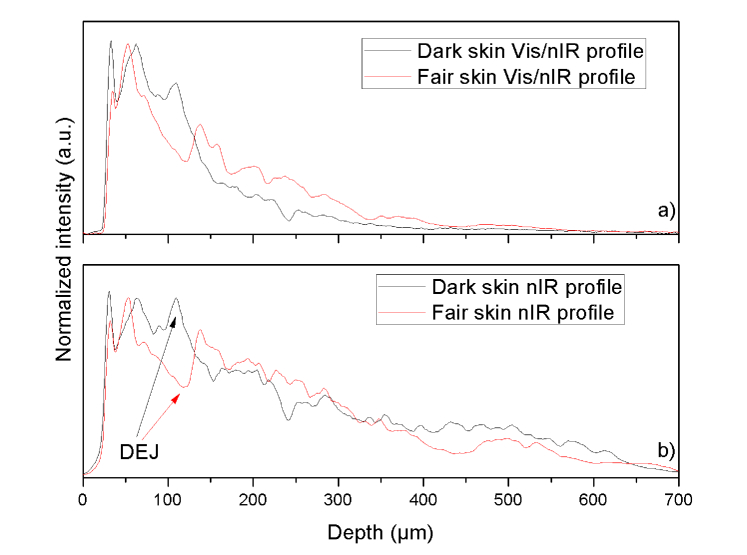

Melanin in skin is a strong absorber of UV radiation, but its absorption spectrum extends into the near infrared. Since the absorption of light by melanin decreases with wavelength, images of melanin-rich skin are expected to differ between the two bands [25]. Dark skin was imaged with our dual-band LC-OCT system to emphasize the presence of melanin by highlighting absorption differences. This could be useful for detecting pathologies with high concentrations of melanin, such as melanoma. Images of dark skin are presented in Fig. 5. Melanin, due to its higher refractive index, appears as a bright layer above the DEJ [26]. In the Vis/nIR band, the strong absorption by melanin is evident from the substantial reduction of the penetration depth compared with Fig. 4(a). The nIR band, however, does not appear to be affected as much by the presence of melanin. This effect is highlighted by the graphs in Fig. 6, which compare the intensity depth profiles in dark and fair skin for the Vis/nIR band on one side and for the nIR band on the other. These profiles are obtained by averaging the columns within the areas delimited by the dashed frames in Fig. 4(c) and Fig. 5(c), which are approximately 500 pixels wide in the Vis/nIR image and 250 pixels wide in the nIR image. In the color image, the result is a sharp segmentation between the areas above and below the melanin, in this case between the epidermis and the dermis. A similar effect can be observed in Fig. 4, due to the presence of a black hair in the top right-hand corner of the images.

Fig. 5.

Images of dark skin (phototype VI). The Vis/nIR band (a) is strongly absorbed by melanin, whereas the nIR band (b) penetrates into the dermis. The compounded image (c) displays high resolution in the epidermis and penetration in the dermis at the same time. The color image (d) clearly differentiates the epidermis from the dermis. Scale bar: 200 µm.

Fig. 6.

Intensity depth profiles, extracted from the images in Fig. 4 and Fig. 5, comparing the penetration depth in dark and fair skin for the Vis/nIR band (a) and the nIR band (b). The position of the DEJ (dermal-epidermal junction) is easily detected thanks to the peaks due to melanin in dark skin (black arrow) and the dips in fair skin (red arrow), at a depth of approximately 100 µm. The profile for dark skin in (a) drops sharply after the DEJ, effectively reducing the penetration depth compared with fair skin. In (b), although a drop is noticeable after the DEJ, the penetration depth is nonetheless similar to that in fair skin. The intensity scale is the same as the one used to display the images.
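The depth profiles of Fig. 6 amount to a column average over a chosen window. The sketch below also includes a hypothetical penetration criterion, the deepest row whose mean intensity stays above a given noise floor, which is not a definition used in the text. The window bounds and the synthetic B-scan are illustrative. Because the Vis/nIR image has about 1.8 times as many pixels across the same field of view (25 µm versus 14 µm camera pixels), a 500-column window in that image covers roughly the same width as a 250-column window in the nIR image.

```python
import numpy as np


def depth_profile(bscan, col_start, col_stop):
    """Mean intensity versus depth over columns [col_start, col_stop) (axis 0 = depth)."""
    return np.asarray(bscan, dtype=float)[:, col_start:col_stop].mean(axis=1)


def penetration_index(profile, noise_floor):
    """Deepest row whose profile exceeds the noise floor (hypothetical criterion)."""
    above = np.nonzero(np.asarray(profile) > noise_floor)[0]
    return int(above[-1]) if above.size else -1


# Synthetic B-scan with exponential attenuation along depth.
depth_px = np.arange(600)
bscan = np.exp(-depth_px / 100.0)[:, None] * np.ones((1, 1000))
profile = depth_profile(bscan, 250, 750)        # 500-column window
print(penetration_index(profile, noise_floor=np.exp(-2.995)))
```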

5. Discussion

With this dual-band LC-OCT system, greyscale fusion made it possible to obtain cross-sectional in vivo images with an unprecedented combination of resolution and penetration. Higher penetration depths could have been obtained with a spectral band centered at a longer wavelength; for example, imaging at a central wavelength of 1600 nm has been shown to improve penetration in biological tissues compared with 1300 nm [27]. However, the imaging depth accessible with our device is limited by the 800-µm travel range of the piezoelectric stage. With a spectral band centered around 1250 nm, we achieved an imaging depth of ~700-800 µm in skin tissue. Moreover, working at longer wavelengths would have further degraded the resolution and made image fusion with the Vis/nIR band more difficult.

The choice of up-down fusion for greyscale compounding is justified by the fact that the nIR band usually adds little value in the superficial parts compared with the Vis/nIR band. Likewise, since the Vis/nIR signal is attenuated more quickly, there is no reason to take it into account in the mid or deep dermis. Some samples may, however, contain elements with very different absorption properties regardless of their depth, such as the hair in the top right-hand corner of the fair skin images in Fig. 4. The Vis/nIR light is strongly absorbed by the hair, so some signal is lost in the Vis/nIR image but not in the nIR image. With the up-down fusion method, this zone without signal remains in the compounded image because the nIR image contributes almost nothing in the epidermis. Greyscale compounding, however, mainly serves a visual purpose; highlighting the differences between the two bands is the role of color fusion. A more elaborate greyscale fusion algorithm based on wavelet analysis could make better use of the nIR signal in the superficial parts without degrading the spatial resolution [23,28]. Wavelet analysis decomposes an image into approximation and detail coefficients that can be compounded independently, depending on what is to be highlighted, and then recombined using the inverse wavelet transform. To date, the wavelet fusion method has not produced noticeably better results than the simple up-down fusion method. However, with wavelets and decomposition levels adapted to the resolutions of both bands, the wavelet fusion algorithm could likely be improved.
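To make the wavelet approach concrete, the sketch below performs a one-level 2-D Haar decomposition with NumPy. It averages the approximation coefficients and keeps, for each detail coefficient, the one with the larger magnitude before inverting the transform. This is a common fusion rule, not necessarily the one tried by the authors. The Haar wavelet, the single decomposition level and the weight `w_vis` are illustrative choices, and the images must have even dimensions.

```python
import numpy as np


def haar2(x):
    """One-level orthonormal 2-D Haar transform of an even-sized array."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    ll = (a + b + c + d) / 2          # approximation
    lh = (a - b + c - d) / 2          # differences along columns
    hl = (a + b - c - d) / 2          # differences along rows
    hh = (a - b - c + d) / 2          # diagonal differences
    return ll, (lh, hl, hh)


def ihaar2(ll, details):
    """Inverse of haar2."""
    lh, hl, hh = details
    x = np.empty((2 * ll.shape[0], 2 * ll.shape[1]))
    x[0::2, 0::2] = (ll + lh + hl + hh) / 2
    x[0::2, 1::2] = (ll - lh + hl - hh) / 2
    x[1::2, 0::2] = (ll + lh - hl - hh) / 2
    x[1::2, 1::2] = (ll - lh - hl + hh) / 2
    return x


def wavelet_fusion(vis, nir, w_vis=0.5):
    """Weighted average of approximations, max-magnitude selection of details."""
    ll_v, det_v = haar2(np.asarray(vis, dtype=float))
    ll_n, det_n = haar2(np.asarray(nir, dtype=float))
    ll = w_vis * ll_v + (1.0 - w_vis) * ll_n
    details = tuple(np.where(np.abs(dv) >= np.abs(dn), dv, dn)
                    for dv, dn in zip(det_v, det_n))
    return ihaar2(ll, details)
```

A depth-dependent `w_vis` (for example the weight of the up-down blend, downsampled to the approximation grid) would be a natural extension, but is not shown here.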

Color images could provide a valuable tool to enhance image contrast and reveal spectroscopic information. However, interpreting the color images can be difficult, especially in depth. Indeed, the backscattered spectral response of the tissue strongly depends on the absorption and scattering properties of the layers through which the light passes. For instance, because of the wavelength dependence of scattering, a red shift naturally occurs as light penetrates into the sample [29]. Information from the dermis in the differential images must be interpreted accordingly to avoid misinterpretation. The difference in spatial resolution between the two bands can also lead to errors when investigating high-frequency details. To correct this effect, the images are smoothed, which limits the resolution of the resulting color image to, at best, the resolution of the nIR band.

Although working with two spectral bands simultaneously yields some advantages, it also comes with extra complexity that could limit its use. A single supercontinuum laser source suffices thanks to its broad emission spectrum, but the illumination path has to be spectrally separated to compensate for the chromatic aberrations of the microscope objectives. The beams in the illumination path then have to be recombined manually and with great precision, since a small angular error would mean visualizing different planes in the sample. Also, the images are not perfectly registered by construction, so a calibration step is required every time the positions of the cameras are changed, which can be cumbersome for the user. The high spatial resolution complicates this process, as images shifted by more than the resolution will not be efficiently compounded.

Operating in two spectral bands simultaneously also requires two different detectors, which increases the price of the device (especially because of the InGaAs detector). The use of two cameras, along with the duplication of the optical path and the optical elements needed to correct the illumination, also increases the size of the device and has so far limited it to installation on an optical bench. In this work, we used state-of-the-art components to achieve the best possible performance. Cheaper components (light source, piezoelectric stage, cameras) could be used to reduce costs, at the expense of a possible deterioration in performance; nevertheless, a better price-quality ratio would probably be achievable. Finally, the dimensions of the experimental setup could be reduced to make it more easily transportable.

6. Conclusion

We believe that LC-OCT can overcome the limited lateral resolution of conventional OCT by acquiring several A-scans in parallel. This reduces the required axial scanning speed and allows dynamic adjustment of the focus during the scan, and thus the use of high-numerical-aperture objectives. The combination of line illumination and line detection creates a confocal gate that rejects most of the unwanted scattered light, yielding images with improved penetration in real time. The choice of the system's wavelength is important and depends on whether resolution or penetration is favored. However, this compromise can be overcome by using two different wavelength bands simultaneously within the transparency window of biological tissues. Compounding the two images can produce a greyscale image with both high resolution and high penetration. Color images can also be created to reveal spectroscopic information about the examined tissue or to enhance image contrast.

In our case, the amount of spectroscopic information is limited by the use of only two bands. Despite the increased experimental complexity, it might be interesting to develop a three-band LC-OCT device to obtain full RGB images. The additional band could be centered at a longer wavelength, around 1600 nm as in [27], where water absorption has a local minimum [30]. To reduce chromatic aberration, reflective microscope objectives may be required, but they are usually not compatible with immersion, which is essential to the proper functioning of the device. Moreover, working with broad spectral bands increases the risk of optical dispersion mismatch between the sample and reference arms, which would likely deteriorate the resolution in depth. Working at shorter wavelengths, between 500 and 700 nm, as in some visible-light OCT systems [31], may be a better option: a silicon-based camera could be used instead of an expensive InGaAs camera, and good resolution could be achieved without the need for a large bandwidth. However, the short penetration depth in skin tissues would probably restrict the analysis of color images containing spectroscopic information to the epidermis.

Acknowledgments

The authors thank DAMAE Medical for technical support.

Funding

Action de Soutien à la Technologie et à la Recherche en Essonne (ASTRE 2016).

Disclosures

The authors declare that there are no conflicts of interest related to this article.

References

1. Huang D., Swanson E. A., Lin C. P., Schuman J. S., Stinson W. G., Chang W., Hee M. R., Flotte T., Gregory K., Puliafito C. A., Fujimoto J. G., “Optical coherence tomography,” Science 254(5035), 1178–1181 (1991). 10.1126/science.1957169

2. Zysk A. M., Nguyen F. T., Oldenburg A. L., Marks D. L., Boppart S. A., “Optical coherence tomography: a review of clinical development from bench to bedside,” J. Biomed. Opt. 12(5), 051403 (2007). 10.1117/1.2793736

3. Schuman J. S., Puliafito C. A., Fujimoto J. G., Duker J. S., Optical Coherence Tomography of Ocular Diseases, 3rd ed. (Slack Inc., 2013).

4. Bezerra H. G., Costa M. A., Guagliumi G., Rollins A. M., Simon D. I., “Intracoronary optical coherence tomography: A Comprehensive Review clinical and research applications,” JACC Cardiovasc. Interv. 2(11), 1035–1046 (2009). 10.1016/j.jcin.2009.06.019

5. Adler D. C., Chen Y., Huber R., Schmitt J., Connolly J., Fujimoto J. G., “Three-dimensional endomicroscopy using optical coherence tomography,” Nat. Photonics 1(12), 709–716 (2007). 10.1038/nphoton.2007.228

6. Levine A., Wang K., Markowitz O., “Optical Coherence Tomography in the Diagnosis of Skin Cancer,” Dermatol. Clin. 35(4), 465–488 (2017). 10.1016/j.det.2017.06.008

7. Dubois A., Levecq O., Azimani H., Siret D., Barut A., Suppa M., Del Marmol V., Malvehy J., Cinotti E., Perrot J. L., “Line-field confocal optical coherence tomography for in situ diagnosis of skin tumors,” J. Biomed. Opt. 23, 106007 (2018). 10.1117/1.JBO.23.10.106007

8. Drexler W., Morgner U., Kärtner F. X., Pitris C., Boppart S. A., Li X. D., Ippen E. P., Fujimoto J. G., “In vivo ultrahigh-resolution optical coherence tomography,” Opt. Lett. 24(17), 1221–1223 (1999). 10.1364/OL.24.001221

9. Humbert G., Wadsworth W., Leon-Saval S., Knight J., Birks T., St J Russell P., Lederer M., Kopf D., Wiesauer K., Breuer E., Stifter D., “Supercontinuum generation system for optical coherence tomography based on tapered photonic crystal fibre,” Opt. Express 14(4), 1596–1603 (2006). 10.1364/OE.14.001596

10. Chen Y., Huang S.-W., Aguirre A. D., Fujimoto J. G., “High-resolution line-scanning optical coherence microscopy,” Opt. Lett. 32(14), 1971–1973 (2007). 10.1364/OL.32.001971

11. Dubois A., Levecq O., Azimani H., Davis A., Ogien J., Siret D., Barut A., “Line-field confocal time-domain optical coherence tomography with dynamic focusing,” Opt. Express 26(26), 33534–33542 (2018). 10.1364/OE.26.033534

12. Schmitt J. M., Knüttel A., Yadlowsky M., Eckhaus M. A., “Optical-coherence tomography of a dense tissue: statistics of attenuation and backscattering,” Phys. Med. Biol. 39(10), 1705–1720 (1994). 10.1088/0031-9155/39/10/013

13. Tseng S. H., Bargo P., Durkin A., Kollias N., “Chromophore concentrations, absorption and scattering properties of human skin in-vivo,” Opt. Express 17(17), 14599–14617 (2009). 10.1364/OE.17.014599

14. Alex A., Povazay B., Hofer B., Popov S., Glittenberg C., Binder S., Drexler W., “Multispectral in vivo three-dimensional optical coherence tomography of human skin,” J. Biomed. Opt. 15(2), 026025 (2010). 10.1117/1.3400665

15. Spöler F., Kray S., Grychtol P., Hermes B., Bornemann J., Först M., Kurz H., “Simultaneous dual-band ultra-high resolution optical coherence tomography,” Opt. Express 15(17), 10832–10841 (2007). 10.1364/OE.15.010832

16. Sacchet D., Moreau J., Georges P., Dubois A., “Simultaneous dual-band ultra-high resolution full-field optical coherence tomography,” Opt. Express 16(24), 19434–19446 (2008). 10.1364/OE.16.019434

17. Cimalla P., Walther J., Mehner M., Cuevas M., Koch E., “Simultaneous dual-band optical coherence tomography in the spectral domain for high resolution in vivo imaging,” Opt. Express 17(22), 19486–19500 (2009). 10.1364/OE.17.019486

18. Federici A., Dubois A., “Three-band, 1.9-μm axial resolution full-field optical coherence microscopy over a 530-1700 nm wavelength range using a single camera,” Opt. Lett. 39(6), 1374–1377 (2014). 10.1364/OL.39.001374

19. Dubois A., “Focus defect and dispersion mismatch in full-field optical coherence microscopy,” Appl. Opt. 56(9), D142–D150 (2017). 10.1364/AO.56.00D142

20. Larkin K. G., “Efficient nonlinear algorithm for envelope detection in white light interferometry,” J. Opt. Soc. Am. A 13(4), 832–843 (1996). 10.1364/JOSAA.13.000832

21. Gianto G., Salzenstein F., Montgomery P., “Comparison of envelope detection techniques in coherence scanning interferometry,” Appl. Opt. 55(24), 6763–6774 (2016). 10.1364/AO.55.006763

22. Oh W. Y., Bouma B. E., Iftimia N., Yun S. H., Yelin R., Tearney G. J., “Ultrahigh-resolution full-field optical coherence microscopy using InGaAs camera,” Opt. Express 14(2), 726–735 (2006). 10.1364/OPEX.14.000726

23. Magnain C., Wang H., Sakadžić S., Fischl B., Boas D. A., “En face speckle reduction in optical coherence microscopy by frequency compounding,” Opt. Lett. 41(9), 1925–1928 (2016). 10.1364/OL.41.001925

24. Schmitt J. M., Xiang S. H., Yung K. M., “Speckle in optical coherence tomography,” J. Biomed. Opt. 4(1), 95–105 (1999). 10.1117/1.429925

25. Tran M. L., Powell B. J., Meredith P., “Chemical and Structural Disorder in Eumelanins: A Possible Explanation for Broadband Absorbance,” Biophys. J. 90(3), 743–752 (2006). 10.1529/biophysj.105.069096

26. Rajadhyaksha M., Grossman M., Esterowitz D., Webb R. H., Anderson R. R., “In vivo confocal scanning laser microscopy of human skin: melanin provides strong contrast,” J. Invest. Dermatol. 104(6), 946–952 (1995). 10.1111/1523-1747.ep12606215

27. Kodach V. M., Kalkman J., Faber D. J., van Leeuwen T. G., “Quantitative comparison of the OCT imaging depth at 1300 nm and 1600 nm,” Biomed. Opt. Express 1(1), 176–185 (2010). 10.1364/BOE.1.000176

28. Mayer M. A., Borsdorf A., Wagner M., Hornegger J., Mardin C. Y., Tornow R. P., “Wavelet denoising of multiframe optical coherence tomography data,” Biomed. Opt. Express 3(3), 572–589 (2012). 10.1364/BOE.3.000572

29. Morgner U., Drexler W., Kärtner F. X., Li X. D., Pitris C., Ippen E. P., Fujimoto J. G., “Spectroscopic optical coherence tomography,” Opt. Lett. 25(2), 111–113 (2000). 10.1364/OL.25.000111

30. Hale G. M., Querry M. R., “Optical constants of water in the 200-nm to 200-μm wavelength region,” Appl. Opt. 12(3), 555–563 (1973). 10.1364/AO.12.000555

31. Shu X., Beckmann L., Zhang H., “Visible-light optical coherence tomography: a review,” J. Biomed. Opt. 22, 1–14 (2017).