Three-dimensional (3D) imaging is increasingly important in echocardiography. However, viewing 3D images on a flat, two-dimensional (2D) screen is a barrier to comprehending the information latent in the data. There have been previous attempts to visualize the full 3D nature of the data, but none has been widely adopted. For example, 3D printing offers realistic interaction but is time-consuming, gives the observer limited means to move into or through the model, and is not yet practical for routine clinical use. Further, the heart beats, whereas 3D-printed models are static. Stereoscopic viewing on 2D screens (as in a movie theater) is possible but is expensive, may not provide an immersive experience, and lacks integrated 3D input devices (controllers).

Stereoscopic virtual reality (VR) is developing rapidly, but its development is driven by the video gaming industry, with features not directly applicable to visualization of medical imaging data. Commonly available VR environments require surface models of structures, typically created by manual segmentation, which is time-consuming, subject to user interpretation, and not feasible in real time. In contrast to displaying segmentation-based surface models, volume rendering can display 3D echocardiographic (3DE) data nearly instantaneously and is the primary method of displaying 3DE in current clinical practice. However, to our knowledge, no readily available software application can display volume renderings of standard 3DE formats (e.g., DICOM) in VR. Further, no scientific platform allows end-user customization of image processing and modeling with display in VR.
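Why volume rendering needs no segmentation can be illustrated with the core of ray casting: each screen pixel is computed by marching a ray through the voxels and blending the samples through a transfer function that maps intensity to color and opacity. The following is a minimal, textbook sketch of front-to-back compositing under simplifying assumptions (a single ray, samples already interpolated along it, and a hypothetical grayscale transfer function `grayscale_ramp` with an arbitrary 20% opacity threshold). It is not 3D Slicer's optimized implementation.

```python
from typing import Callable, Sequence, Tuple

RGBA = Tuple[float, float, float, float]


def grayscale_ramp(intensity: float) -> RGBA:
    """Hypothetical transfer function for 8-bit echo intensities.

    Voxels below 20% of full scale (blood pool, noise) are fully transparent;
    brighter voxels (tissue) get a gray color and opacity equal to brightness.
    """
    v = min(max(intensity / 255.0, 0.0), 1.0)
    opacity = 0.0 if v < 0.2 else v
    return (v, v, v, opacity)


def composite_ray(samples: Sequence[float],
                  transfer: Callable[[float], RGBA],
                  stop_alpha: float = 0.99) -> RGBA:
    """Front-to-back compositing of scalar samples ordered from the eye outward."""
    r = g = b = a = 0.0
    for s in samples:
        sr, sg, sb, sa = transfer(s)
        weight = (1.0 - a) * sa  # part of this sample not hidden by nearer samples
        r += weight * sr
        g += weight * sg
        b += weight * sb
        a += weight
        if a >= stop_alpha:  # early ray termination: farther samples are invisible
            break
    return (r, g, b, a)


# One ray passing through blood pool (dark) and then tissue (bright).
pixel = composite_ray([10, 50, 200, 255], grayscale_ramp)
print(pixel)
```

Because the transfer function, not a segmented surface, decides what is visible, adjusting the threshold changes the rendering immediately, with no manual contouring.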

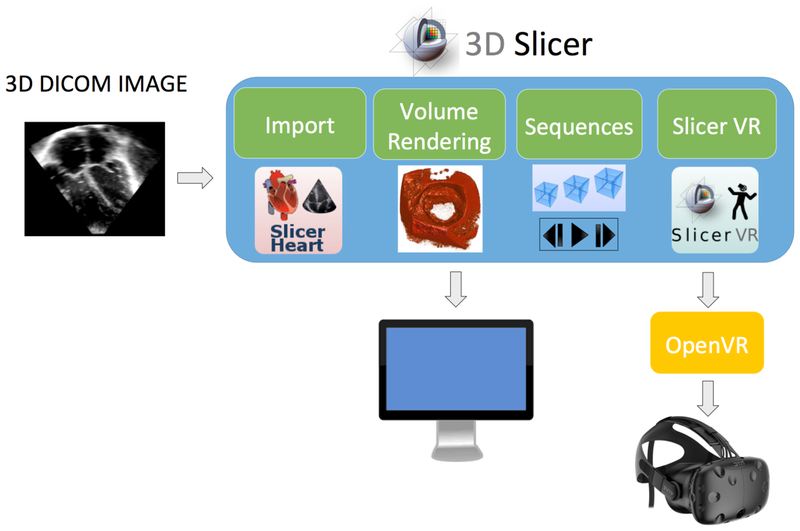

To address this need, we undertook significant software development to extend open-source software (3D Slicer) to allow native viewing of, and interaction with, volume-rendered 3DE images in stereoscopic VR (Figure 1).[1] First, we dramatically improved volume rendering efficiency to allow display of cine 3D echo images at realistic frame rates in VR on readily available computer hardware. Next, we created an importer for standard 3DE volumes (SlicerHeart) and a cine player (Sequences) to view moving 3D volumes. Finally, we created functionality (SlicerVR) to allow volume-rendered output from 3D Slicer to be viewed on OpenVR-compatible VR devices. Together, these developments allowed 3DE acquired on a standard echo machine (Epiq 7, Philips Medical, Andover, MA) to be imported into 3D Slicer running on a laptop computer and displayed in VR using a commercially available OpenVR-compatible headset, such as the HTC Vive (HTC, New Taipei City, Taiwan). We demonstrate viewing of 3DE data and provide examples of potential relevance to planning of surgery or catheter-based interventions (Figure 2, Video 1). Total time from import of DICOM data to interactive VR visualization in 3D Slicer is less than 1 minute.
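As a rough illustration of what realistic frame rates in VR demand, assume the headset refreshes at 90 Hz (the native refresh rate of the HTC Vive) and, as a simplification, that the left-eye and right-eye views are rendered one after the other within each refresh. The time available per eye view is then approximately:

$$
t_{\text{frame}} = \frac{1000\ \text{ms}}{90} \approx 11.1\ \text{ms}, \qquad t_{\text{eye}} = \frac{t_{\text{frame}}}{2} \approx 5.6\ \text{ms}
$$

This is a back-of-the-envelope estimate under those assumptions, not a measured figure. It shows why a volume renderer that is adequate for a single view on a 60 Hz desktop monitor (about 16.7 ms per frame) can fall short in stereoscopic VR.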

Figure 1: Flow Diagram of Data from Echocardiogram to Virtual Reality.

3D data are converted and imported into 3D Slicer using the SlicerHeart module. Improved capabilities and efficiency in volume rendering allow display of 3D images at realistic frame rates on readily available computer hardware. The Sequences module provides controls for cine playback of 3D data. SlicerVR provides output to standard OpenVR viewers such as the HTC Vive.
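Cine playback of a 3DE acquisition reduces to choosing which stored volume to render at each moment, independently of how often the headset redraws. A minimal sketch, assuming evenly spaced volumes and a user-set playback rate in volumes per second; `cine_frame_index` is a hypothetical helper for illustration, not the Sequences module's API.

```python
def cine_frame_index(elapsed_s: float, n_frames: int,
                     volumes_per_s: float, loop: bool = True) -> int:
    """Return the index of the 3D volume to display after elapsed_s seconds of playback.

    Hypothetical helper: assumes volumes are evenly spaced in time and that
    playback speed is expressed in volumes per second.
    """
    if n_frames <= 0 or volumes_per_s <= 0 or elapsed_s < 0:
        raise ValueError("n_frames and volumes_per_s must be positive; elapsed_s non-negative")
    index = int(elapsed_s * volumes_per_s)  # whole volumes elapsed so far
    if loop:
        return index % n_frames  # wrap around to repeat the cardiac cycle
    return min(index, n_frames - 1)  # hold the last volume when not looping


# A 20-volume loop played at 20 volumes/s, sampled at several display times.
# The headset redraws far more often than the volume changes, so consecutive
# display frames usually reuse the same volume.
print([cine_frame_index(t, 20, 20.0) for t in (0.0, 0.5, 1.125)])
```

Decoupling the volume index from the display refresh lets head motion stay smooth even when the echo volume rate is much lower than the headset's refresh rate.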

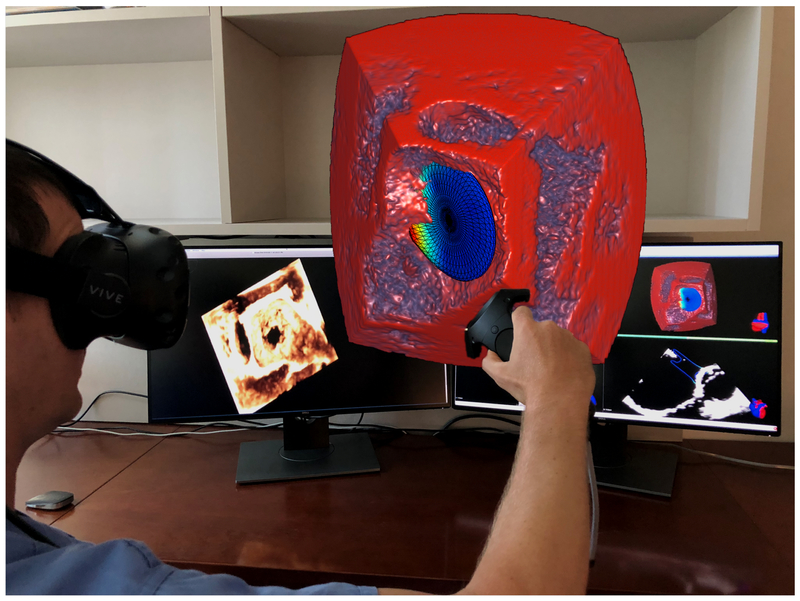

Figure 2: Depiction of Interaction with Volume Rendered 3D Echocardiographic Images in Virtual Reality.

Volume rendering of atrial septal defect with simulated device closure. See Video 1 for demonstration of interaction with this and other volumes and the potential for utilization of VR for clinical decision making.

The full benefit of stereoscopic VR visualization of medical and research images is difficult to convey in static images or even in the “single-eye VR perspective” we present in Video 1. Table 1 compares the viewing experience in VR with that on a standard 2D screen. Spatial relationships and perception of depth are, at least subjectively, markedly improved in VR over standard on-screen viewing. Interaction with the image is intuitive: one can walk “through” structures and “into” the rendered volume, which naturally crops the image and yields perspectives not typically achieved with flat-screen viewing. One can also sit at a desk and grab a volume-rendered structure with the controller, moving and rotating it in virtual space just as if one were examining a real object.
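Grab-and-move interaction of this kind is commonly implemented by recording the object's pose relative to the controller at the moment of grabbing and re-applying that fixed offset as the controller moves. A minimal pure-Python sketch with 4x4 rigid transforms; all function names are hypothetical, and this is not SlicerVR's code.

```python
import math
from typing import List

Matrix = List[List[float]]  # 4x4 homogeneous transform, row-major


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def rigid_inverse(m: Matrix) -> Matrix:
    """Invert a rigid transform [R t; 0 1] as [R^T  -R^T t; 0 1]."""
    rt = [[m[j][i] for j in range(3)] for i in range(3)]  # transpose of rotation
    t = [m[i][3] for i in range(3)]
    inv_t = [-sum(rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [rt[i] + [inv_t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def translation(x: float, y: float, z: float) -> Matrix:
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


def rotation_z(degrees: float) -> Matrix:
    c, s = math.cos(math.radians(degrees)), math.sin(math.radians(degrees))
    return [[c, -s, 0.0, 0.0], [s, c, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]


def grabbed_object_pose(controller_at_grab: Matrix, object_at_grab: Matrix,
                        controller_now: Matrix) -> Matrix:
    """Keep the object's pose fixed relative to the controller while it is held."""
    # Object pose expressed in the controller's coordinate frame at grab time.
    offset = matmul(rigid_inverse(controller_at_grab), object_at_grab)
    # Re-apply that fixed offset to the controller's current pose.
    return matmul(controller_now, offset)


# Grab a volume 1 unit along x from the controller, then rotate the hand 90 degrees.
pose = grabbed_object_pose(translation(0, 0, 0), translation(1, 0, 0), rotation_z(90))
print([round(pose[i][3], 6) for i in range(3)])
```

The held volume swings around the hand exactly as a physical object would, because only the controller's pose changes while the stored offset stays constant.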

Table 1: Comparison of Viewing 3D Images on a Traditional 2D Screen and in Virtual Reality

| | Traditional 2D screens | Virtual reality |
|---|---|---|
| Depth Perception | Requires artificial enhancement (special color maps, lighting simulation, etc.) to indicate depth indirectly | Direct, accurate 3D depth through stereoscopic display and head-motion parallax, resulting in less ambiguity |
| Field of View | Approximately 30 degrees | 100 degrees, allowing images to be viewed at much higher magnification |
| Viewpoint Setup | Indirect view manipulation using mouse and keyboard; manual cutting-plane definition | Direct, intuitive viewpoint setting by simply moving the head or body as in real life; dynamic cutting plane defined by putting the head inside the region of interest |
| 3D Object Positioning | Indirect definition of 3D position and orientation using 2D controls through a series of interactions | Intuitive, direct 3D positioning: points can be placed, and objects aligned or moved, by simply grabbing them as with physical objects |
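The 2D-screen field of view in Table 1 can be estimated with simple geometry: a flat screen of width w viewed from distance d subtends an angle of 2·atan(w / 2d). The sketch below assumes a hypothetical 53 cm wide monitor viewed from 1 m; other desks and monitors give different values, and the 100 degree headset figure is taken from the table, not computed.

```python
import math


def angular_fov_deg(width: float, distance: float) -> float:
    """Horizontal angle (degrees) subtended by a flat screen of a given width at a viewing distance."""
    if width <= 0 or distance <= 0:
        raise ValueError("width and distance must be positive")
    return math.degrees(2.0 * math.atan(width / (2.0 * distance)))


# Hypothetical desktop setup: a 53 cm wide monitor viewed from 1 m.
monitor = angular_fov_deg(0.53, 1.0)
print(f"{monitor:.1f} degrees")  # prints "29.7 degrees"
print(f"{100 / monitor:.1f}x wider angular field in a 100 degree headset")
```

Sitting closer widens the screen's angle, but only the headset fills most of the visual field regardless of where the viewer stands.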

We believe this is the first readily available integrated solution for viewing volume-rendered 3DE images in stereoscopic VR. Notably, we have made SlicerVR and the other components available in the free and open-source 3D Slicer platform, allowing both academic and commercial use without restriction. Our incorporation of 3DE import, sequential volume viewing, improved volume rendering, and VR output further extends this powerful tool for processing 3D image data.[2, 3]

Given the complexity and motion of 3D cardiac data, we believe VR-based technology, and the related field of augmented reality (AR), will have a significant clinical impact on how we view and comprehend 3D cardiac images. This may be especially relevant to understanding complex structural relationships and to conveying those relationships to surgeons and interventionalists. Further optimization of volume rendering, interaction, display resolution, and VR viewing technology will improve the viewing experience, while the cost of VR viewers continues to decrease. Of course, it remains to be objectively demonstrated that this approach results in significantly improved diagnostic understanding and speed, or in subsequent improvements in therapeutic outcomes.

We invite you to try it for yourself. Compatible hardware is readily available, and the 3D Slicer platform is available for download and documented at www.slicer.org. The newly developed capabilities are available in the SlicerVirtualReality extension through the 3D Slicer Extension Manager. Open-source code and documentation are available at: https://github.com/KitwareMedical/SlicerVirtualReality.

Supplementary Material

Video 1: See attached multimedia file or download at link below: https://drive.google.com/open?id=139ZBbC69CmuI3aOCPwJlKnKHB44QHKT1 Note: We are happy to expand or tailor the video with the Editor’s input.

Acknowledgments

Funding: This work was supported by the Department of Anesthesia and Critical Care at the Children’s Hospital of Philadelphia; National Institutes of Health grants U24CA180918, P41EB015902, P41EB015898, and 1R01EB021396–01A1; and CANARIE’s Research Software Program.

Abbreviations:

- 3D: Three-Dimensional
- DICOM: Digital Imaging and Communications in Medicine
- 3DE: Three-Dimensional Echocardiography
- TEE: Transesophageal Echocardiogram
- VR: Virtual Reality

Footnotes

Disclosures: Jean-Christophe Fillion-Robin, Sankhesh Jhaveri, and Ken Martin are employees of Kitware, Inc. Jean-Baptiste Vimort was an intern at Kitware, Inc. Steve Pieper is an employee of Isomics, Inc. No other disclosures.

References

- [1] Fedorov A, Beichel R, Kalpathy-Cramer J, Finet J, Fillion-Robin JC, Pujol S, et al. 3D Slicer as an image computing platform for the Quantitative Imaging Network. Magn Reson Imaging. 2012;30:1323–41.

- [2] Pinter C, Lasso A, Wang A, Jaffray D, Fichtinger G. SlicerRT: radiation therapy research toolkit for 3D Slicer. Med Phys. 2012;39:6332–8.

- [3] Ungi T, Lasso A, Fichtinger G. Open-source platforms for navigated image-guided interventions. Med Image Anal. 2016;33:181–6.
