Abstract

Angiography by Optical Coherence Tomography (OCT-A) is a recent non-invasive retinal imaging modality that visualizes the vascular structure at predefined depths by detecting blood movement through the retinal vasculature. OCT-A images are therefore well suited to analyzing the vascular properties of regions of interest such as the macular area, where the characteristics of the foveal vascular and avascular zones can be measured. Parameters extracted from this region can be used as prognostic factors that indicate whether a patient suffers from certain pathologies (such as diabetic retinopathy or retinal vein occlusion, among others) and the associated pathological degree. The manual extraction of these biomedical parameters is a long, tedious and subjective process that introduces significant intra- and inter-expert variability, which reduces the utility of the measurements. In addition, the absence of tools that perform these calculations automatically motivates the creation of computer-aided diagnosis frameworks that ease the clinician’s work, increase productivity and make the use of this type of vascular biomarker viable. In this work we propose a fully automatic system that identifies and precisely segments the foveal avascular zone (FAZ) in OCT-A images, a novel ophthalmological image modality. The system combines different image processing techniques to first identify the region that contains the FAZ and then extract its precise contour. The system was validated using a representative set of 213 healthy and diabetic OCT-A images. In healthy OCT-A images, the best experimental case reached a correlation of 0.93 with the manual measurements of two expert clinicians and a Jaccard’s index of 0.82. The method also provided satisfactory results in diabetic OCT-A images, with a best correlation coefficient of 0.93 with the manual labeling of an expert clinician and a Jaccard’s index of 0.83. This tool provides an accurate FAZ measurement with the desired objectivity and reproducibility, which makes it useful for the analysis of relevant vascular diseases through the study of the retinal micro-circulation.

Introduction

In recent years, constant technological advances have allowed the integration of specialized computer-aided diagnosis systems in different fields of medicine [1–3]. These systems ease the clinician’s work, facilitating and accelerating the diagnosis and monitoring of many diseases, and they add important advantages such as objectivity and determinism, which are not always present in the diagnostic processes of experts in their clinical routine. This also applies to ophthalmology, where analysis and diagnostic procedures frequently rely on different image modalities as a source of information about a wide variety of relevant diseases. Among the ophthalmological image modalities, Angiography by Optical Coherence Tomography (OCT-A) has appeared in recent years as a new non-invasive modality that visualizes, with great precision, the vasculature at different depths of the retinal eye fundus. OCT-A images are based mainly on the detection of blood movement and do not require the injection of intravenous contrast, which was unavoidable in previous capture techniques such as classic angiography. Classic angiography is a simple but invasive image modality that allows the study of the vascular characteristics of the retina by injecting an intravenous contrast into the patient. Subsequently, Optical Coherence Tomography (OCT) [4] made it possible to observe, non-invasively, a cross-sectional view of the layers of the retina. Finally, OCT-A combines the advantages of both: it offers a visualization suitable for the analysis of the retinal vasculature, as angiographies do, but non-invasively, using the capture characteristics of tomography, which is more comfortable for patients. OCT-A images are typically taken at superficial and deep views of the eye fundus, which facilitates the subsequent vascular analysis; in addition, these images can be obtained at different levels of zoom, the most used configurations being 3 and 6 millimeters wide (greater and smaller zoom, respectively). This imaging technique offers many advantages [5] over those previously used, such as the possibility of generating volumetric scans that can be captured at specific depths, offering a 3D visualization of the eye fundus with limited time and cost (image acquisition takes about 2 or 3 seconds). Given these characteristics, OCT-A images are suitable for the analysis of the retinal micro-circulation, and their use has spread to many health-care systems.

The higher or lower presence of vessels in certain areas of the eye fundus is a very useful biomedical parameter, since it is affected by many vascular pathologies, such as diabetic retinopathy or age-related macular degeneration, and the level of presence or absence of vessels is a significant prognostic factor. One of these parameters is the area of the Foveal Avascular Zone (FAZ), the region of the fovea that has no blood supply. The analysis of the FAZ region is crucial because its characteristics are directly related to many relevant clinical conditions. For example, it is related to the visual acuity of patients who suffer from diabetic retinopathy or retinal vein occlusion [6].

As a reference, people with diabetes have a 40% to 90% probability of suffering from diabetic retinopathy; in addition, people with diabetic retinopathy are 5 times more likely to progress to total blindness. Given these facts, the identification, segmentation and analysis of the FAZ region is crucial for the early diagnosis of relevant diseases such as diabetic retinopathy.

Because OCT-A is a recent technology, there are still few studies on the automatic extraction of measurements of interest from this image modality. Instead, early studies are mainly based on the clinical analysis of these images to define parameters that can be extracted manually and the characteristics they typically offer [7]. Some works study the repeatability and reproducibility of these measures in healthy patients [8, 9], indicating the satisfactory impact of this analysis. In addition, as previously indicated, visual acuity was shown to be related to the FAZ area in patients with diabetic retinopathy and with retinal vein occlusion [6], demonstrating the suitability and clinical relevance of this analysis in the diagnosis of relevant pathologies related to vision loss. However, few computational studies address the extraction of the FAZ region. Lu et al. [10] tackle the automatic FAZ extraction and its quantification with different measurements to classify the images as healthy or diabetic cases. In particular, the FAZ region is extracted by applying a region growing approach that uses the exact central point of the image as seed, a static initialization that represents a significant limitation; then, morphological operators and an active contour model are applied to obtain the final FAZ segmentation. Next, four different parameters are calculated to quantify the FAZ region and classify the image as a healthy or diabetic case. In the work of Hwang et al. [11], the proposal directly subtracts the image intensities over consecutive OCT-A images to obtain avascular zones in general, and afterwards deletes the non-representative ones using a given size as reference.

In this paper, we propose a fully automated and robust methodology to localize and measure the FAZ region in OCT-A images. The proposal was validated with a set of experiments using a representative public dataset that covers a significant age range as well as healthy and diabetic OCT-A images. Specifically, this public dataset contains 3 × 3 millimeters and 6 × 6 millimeters superficial and deep healthy OCT-A images from people between 10 and 69 years old, including all the types in each age range. Moreover, a smaller part of the dataset belongs to diabetic patients, including about 17 images for each of the 4 mentioned subgroups: 3 × 3 millimeters superficial and deep and 6 × 6 millimeters superficial and deep. Section Image dataset describes this dataset in detail. The methodology presented in this work performs the aforementioned actions automatically, without user intervention. In general, the methodology to segment the FAZ region involves the following steps: first, the image acquisition and the normalization of its values to facilitate the following stages of the process; second, an exhaustive analysis of the image to detect FAZ candidates and the subsequent removal of false positives; then, the selection of the correct FAZ from the remaining candidates; and, finally, a precise segmentation of the FAZ region. The results were compared with the manual measurements of two expert clinicians to analyze the correlation and similarity between the system and the manual performance of an expert clinician.

This paper is organized as follows: Section Materials and methods presents the OCT-A image dataset that was used in the experiments as well as the detailed characteristics of the proposed method. Section Results presents the results and comparisons with the manual segmentations. Finally, Section Discussion and Conclusions discusses the obtained results, concludes the paper and indicates possible future lines of work.

Materials and methods

Image dataset

The “Comité de Ética da Investigación de Santiago-Lugo” committee belonging to the “Rede Galega de Comités de Ética da Investigación” attached to the regional government “Secretaría Xeral Técnica da Consellería de Sanidade da Xunta de Galicia” approved this study, which was conducted in accordance with the tenets of the Helsinki Declaration. This study was carried out retrospectively on existing data that had previously been anonymized. The validation process was done using the public image dataset OCTAGON [12], which contains 144 healthy and 69 diabetic OCT-A images (all the diabetic cases presenting diabetic retinopathy (DR)), for a total of 213 cases. The images were taken using the Optical Coherence Tomography capture device DRI OCT Triton (Topcon Corp), from both left and right eyes of different patients. Additionally, the images were obtained at different levels of zoom and depths, with a resolution of 320 × 320 pixels. In particular, the following configurations were represented in the dataset:

Superficial. OCT-A images in which the foveal area can be observed from the surface.

Deep. OCT-A images visualizing the deep foveal area.

The previous configurations were also captured at the following sizes:

3 × 3 millimeters. OCT-A images centered in the fovea covering a region of 3 × 3 millimeters. Hence, a greater level of detail of the captured macular region is appreciated.

6 × 6 millimeters. OCT-A images centered in the fovea covering a region of 6 × 6 millimeters. Hence, a wider range of the macular region is visualized.

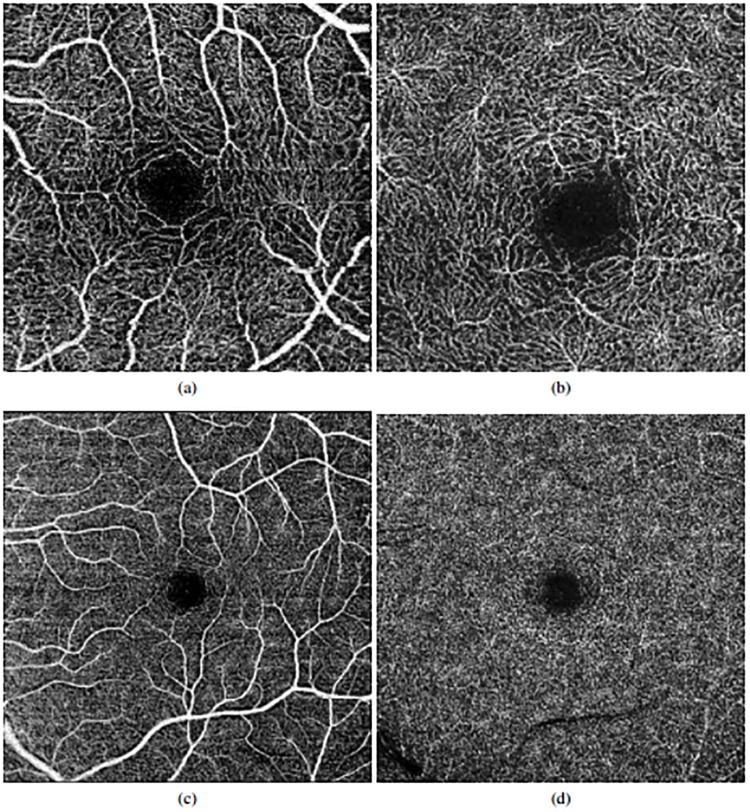

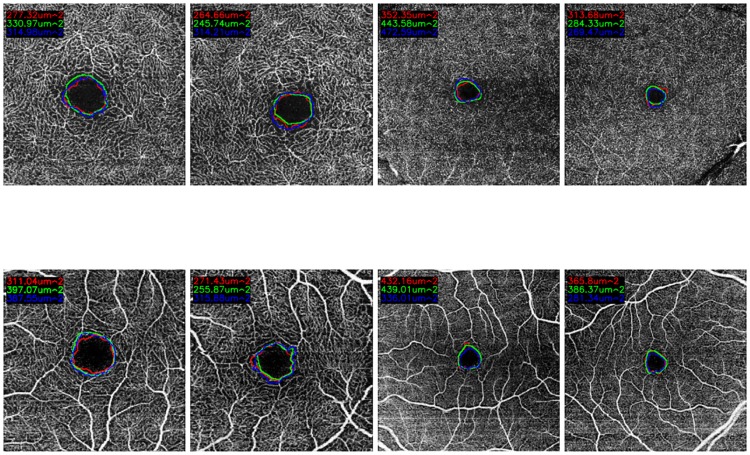

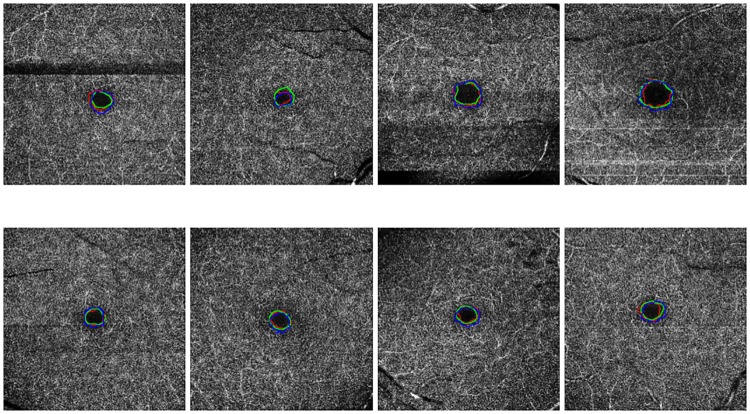

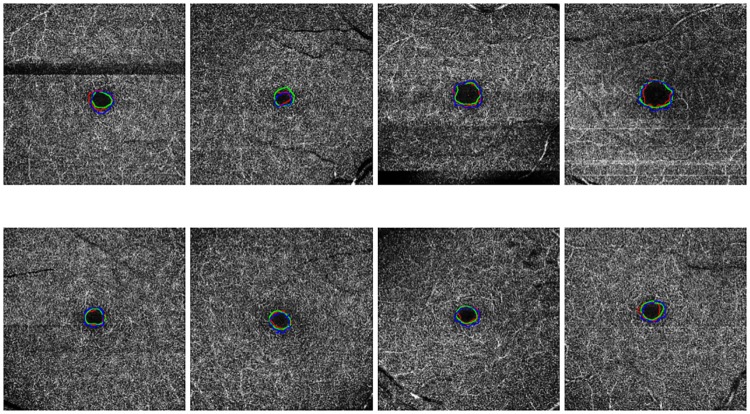

Fig 1 illustrates, with representative examples, all the 4 configurations that are represented in the used dataset. Additionally, the set of 144 healthy images presents the following clinical and population characteristics:

Fig 1. Examples of OCT-A images representing all the configurations that were used in this work.

1st row, images of 3x3 millimeters. 2nd row, images of 6x6 millimeters. (a) & (c) Superficial OCT-A images. (b) & (d), Deep OCT-A images.

Age range. The image dataset is divided into 6 age ranges: 10-19 years, 20-29 years, 30-39 years, 40-49 years, 50-59 years and 60-69 years. This way, we used a diverse set of images with a significant variability of ages.

Division by patients. For each mentioned age range, images from three different patients were captured.

Eye. For each patient, we have OCT-A images that were extracted from both left and right eyes.

Depth and size. Finally, for each eye, 4 images were captured, covering all the superficial/deep and 3/6 millimeters configurations.

Additionally, two expert clinicians manually labeled and segmented the FAZ region of each OCT-A image. This ground truth served as reference for the validation process of the method.

As said, the dataset also includes 69 diabetic OCT-A images, about 17 of each mentioned subgroup. Given that these OCT-A images are manually labeled by an expert clinician, the validation process is the same as with healthy cases, testing that the method is valid for both healthy and diabetic OCT-A images.

Proposed methodology

We based the proposed methodology on the analysis of the main image characteristics of the FAZ region as it typically appears in the OCT-A images. Generally, these characteristics are the following:

Macular centered area. Although this does not hold exactly in all cases, the FAZ region is typically centered on the macular region, especially in healthy patients.

Low intensity profile region. Given the absence of vasculature, the FAZ region generally appears as a dark area with a significant contrast with respect to the neighboring areas of the macular region.

Surrounded by blood vessels. Because this low intensity region is surrounded by blood vessels, the surrounding vasculature can be used as reference for the precise delimitation of the FAZ region.

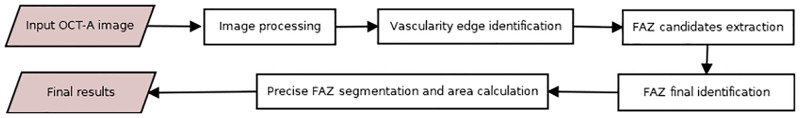

The proposed methodology relies on these properties to achieve the desired results. Fig 2 illustrates the main steps of the proposed method, which are discussed in the following subsections.

Fig 2. Main steps of the proposed methodology.

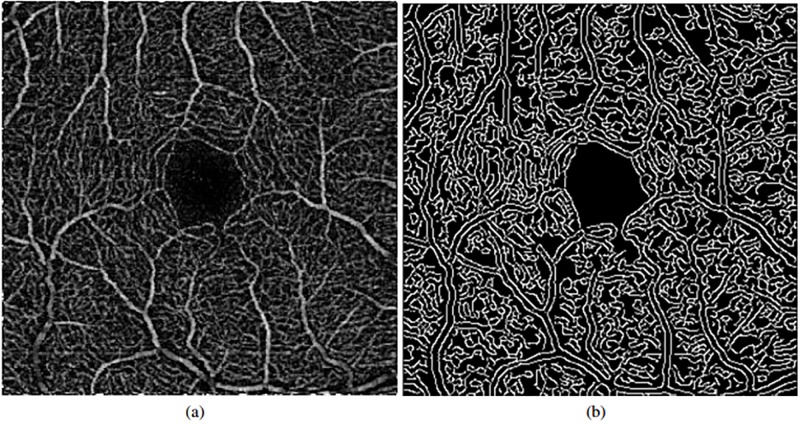

Image processing

We initially enhance the visualization of the vasculature by applying morphological operators, which facilitates its subsequent differentiation. Morphological operators are often used to highlight the geometric properties of an image. Our first purpose is to clearly differentiate avascular zones from the rest of the image, so the objective of applying the morphological operators is to strengthen this difference. Since it has been used in different works with satisfactory results [13], we apply the white top-hat operator (see Fig 3), which makes the bright areas of the image more intense. In this way, vessels present higher intensities while areas without vessels keep low intensity profiles.

Fig 3. Application of the preprocessing step.

(a) Original image. (b) Resulting image after applying the top-hat operator.
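The preprocessing step can be sketched in a few lines. This is a minimal illustration that assumes SciPy's `ndimage.white_tophat` as the operator; the function name `enhance_vessels` and the 15 × 15 structuring element are hypothetical choices, since the text does not state the size that was used.

```python
import numpy as np
from scipy import ndimage


def enhance_vessels(image: np.ndarray, size: int = 15) -> np.ndarray:
    """White top-hat: the image minus its morphological opening.

    `size` is an assumed structuring-element width, not a value from the paper.
    """
    image = image.astype(np.float64)
    # Bright structures narrower than the (size x size) window survive,
    # while the smooth background is subtracted away.
    return ndimage.white_tophat(image, size=(size, size))


# Synthetic check: a bright 3-pixel-wide "vessel" on a uniform background.
img = np.full((64, 64), 40, dtype=np.uint8)
img[:, 30:33] = 200
enhanced = enhance_vessels(img)
```

In this toy image the background is flattened to zero while the vessel keeps its contrast over the background, which is the behavior the following stages rely on.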

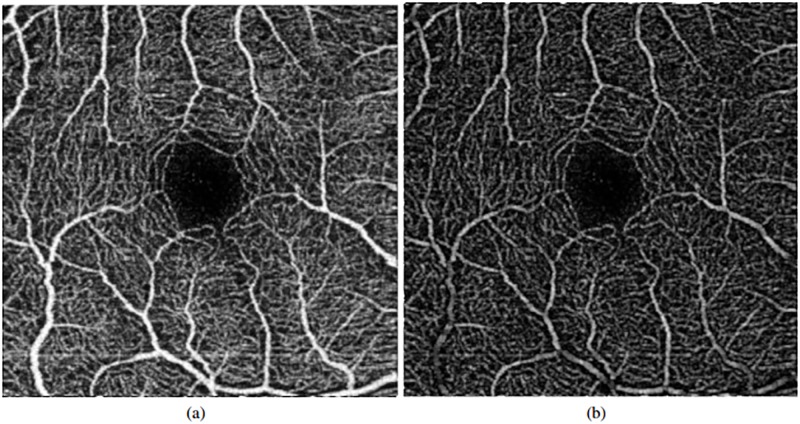

Vascular edge identification

Using the previous image, we can easily identify the vascular regions and differentiate them from the target FAZ area. Additionally, this enhanced image also facilitates the removal of possible wrong identifications in subsequent stages of the methodology. To identify the vasculature, the Canny edge detector [14] is used to extract the edges of the vessels. The parameters of the Canny edge detector are decisive for the results; in this case, they vary based on the average values of the image, which yields satisfactory results independently of the input OCT-A image. This way, we obtain solid and continuous detections of the vasculature that serve as baseline for the identification of the vascular region. Fig 4 shows a representative example of the result after the application of the Canny edge detector.

Fig 4. Vascularity edge identification using the Canny edge detector.

(a) Original OCT-A image (after the top-hat preprocessing step). (b) Results of the vascular edge identification.
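The idea of tying the edge-detection thresholds to the image average can be illustrated as follows. This sketch is a simplified stand-in for the Canny detector, not the detector itself: it performs Gaussian smoothing, Sobel gradients and hysteresis thresholding, but omits non-maximum suppression, so the edges it returns are thicker. The factors 0.5 and 1.5, the value of `sigma` and the name `vessel_edges` are assumptions made for illustration; the text only states that the parameters depend on the image average values.

```python
import numpy as np
from scipy import ndimage


def vessel_edges(image, low_factor=0.5, high_factor=1.5, sigma=1.0):
    """Edge map whose hysteresis thresholds scale with the image mean."""
    image = image.astype(np.float64)
    # Assumed rule: both thresholds are multiples of the mean intensity.
    low = low_factor * image.mean()
    high = high_factor * image.mean()

    smoothed = ndimage.gaussian_filter(image, sigma=sigma)
    magnitude = np.hypot(ndimage.sobel(smoothed, axis=0),
                         ndimage.sobel(smoothed, axis=1))

    strong = magnitude > high
    weak = magnitude > low
    # Hysteresis: keep connected weak-edge components that contain
    # at least one strong-edge pixel.
    labels, _ = ndimage.label(weak)
    keep = np.unique(labels[strong])
    return np.isin(labels, keep)


# Synthetic check: one bright vertical vessel on a dark background.
img = np.zeros((64, 64))
img[:, 30:33] = 200
edges = vessel_edges(img)
```

Because the thresholds follow the mean intensity, a globally brighter or darker acquisition shifts them accordingly, which is one plausible way to obtain results that do not depend on the input image.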

Extraction of the FAZ candidates

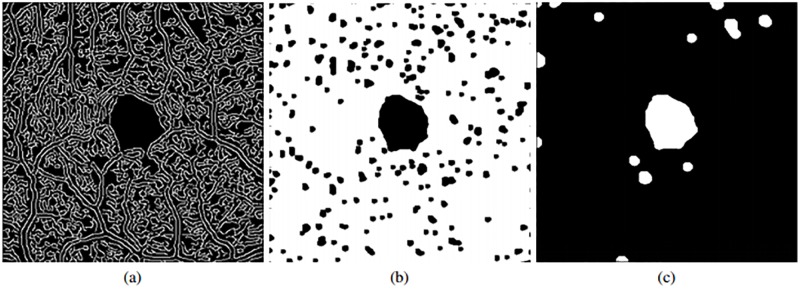

Using the previous set of vascular detections as baseline, we identify all the regions that are candidates for the FAZ location. To remove most of the false positives, we first apply a morphological closure. We chose this operator instead of a plain dilation because the target vascular area would be excessively modified if an erosion were not applied after the dilation. Thus, after the application of the morphological closure we obtain an adequate scenario in which the most suitable candidate can be easily identified as the target FAZ region, as illustrated in the example of Fig 5.

Fig 5. Morphological closure and inversion of intensities followed by a removal of small elements.

(a) Image with the vascular edge identification. (b) Result after applying a morphological closure. (c) Result after applying an inversion of intensity and an opening.

Afterwards, the image is inverted to facilitate later stages and, given that it still contains a significant number of spurious detections, a morphological opening is applied. As a result, as few candidates as possible are preserved (Fig 5(c)).
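The closure, inversion and opening sequence can be sketched with SciPy's binary morphology. The structuring-element sizes (5 and 7) and the name `faz_candidates` are assumptions for illustration only; the text does not report the sizes that were used.

```python
import numpy as np
from scipy import ndimage


def faz_candidates(edges, close_size=5, open_size=7):
    """Closure of the vessel edges, inversion, then opening."""
    close_se = np.ones((close_size, close_size), dtype=bool)
    open_se = np.ones((open_size, open_size), dtype=bool)
    # Closure (dilation followed by erosion) merges nearby vessel edges
    # into solid vascular regions without enlarging them.
    vascular = ndimage.binary_closing(edges, structure=close_se)
    # After the inversion, avascular areas become the foreground.
    avascular = ~vascular
    # Opening removes avascular blobs smaller than the structuring element.
    return ndimage.binary_opening(avascular, structure=open_se)


# Synthetic check: a dense vessel mesh with one large avascular hole.
mesh = np.zeros((65, 65), dtype=bool)
mesh[::4, :] = True
mesh[:, ::4] = True
mesh[22:42, 22:42] = False
candidates = faz_candidates(mesh)
```

In this toy mesh the small gaps between vessels are filled by the closure and only the large central hole survives as a candidate. SciPy treats pixels outside the image as background, so a thin band along the border can appear after the inversion; the opening removes it here.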

FAZ region final identification

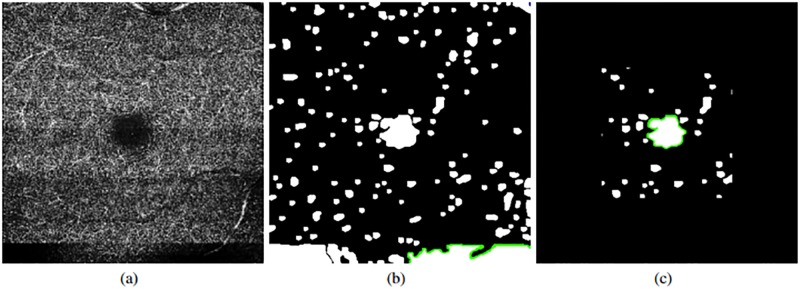

As indicated before, the main characteristics of the FAZ region are its centered location, given that OCT-A images are typically macula-centered, and its low intensity profile. Thanks to these properties, in most cases the previous stage produces images like the one presented in Fig 5(b). In those cases, the largest identified region directly represents the FAZ region. However, in other cases, such as the one presented in Fig 6, errors in the capture process or pathological conditions can introduce other significant dark regions in the OCT-A images, producing mistakes in the FAZ identification. For that reason, we analyze the morphological characteristics of the remaining candidates to perform a precise identification, avoiding those that are not clearly FAZ regions. In particular, peripheral and dispersed candidates are directly discarded and marked as background.

Fig 6. Example of error in the capture process.

(a) Original image. (b) Initial set of identified FAZ candidates. (c) Final set of FAZ candidates after FP removal.

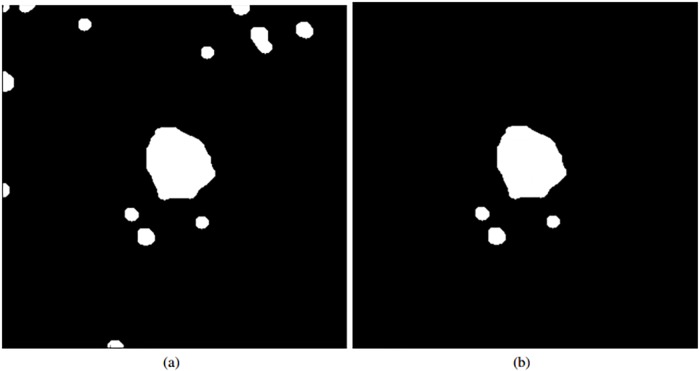

Applying these rules, as in the example of Fig 6(c), we remove many false positives (FP), especially the problematic ones that could be confused with FAZ regions and therefore introduce identification errors. Moreover, even in the absence of pathological or capture artifacts, this stage discards a significant number of FP candidates, as in the example of Fig 7.

Fig 7. Removal process of FAZ FP candidates.

(a) Initial set of identified FAZ candidates. (b) Final set of FAZ candidates.

Finally, from the remaining candidates, we decide which of them represents the final FAZ identification. At this stage, the remaining candidates normally consist of the FAZ region and other small candidates produced by spurious artifacts. For that reason, we select the largest remaining candidate as the most significant one, which is therefore taken as the identified FAZ region. There are many ways to determine which region is the largest; in our case, the criterion is the perimeter of the candidates. This preliminary extraction serves as baseline for the following precise FAZ segmentation.
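The selection stage can be sketched as a connected-component analysis. Two points in this sketch are assumptions, because the text does not give the exact rules: a candidate is treated as peripheral when it touches the image border, and the perimeter is approximated by the number of boundary pixels of the region. The name `select_faz` is hypothetical.

```python
import numpy as np
from scipy import ndimage


def select_faz(candidates: np.ndarray) -> np.ndarray:
    """Return the non-peripheral candidate with the longest perimeter."""
    labels, count = ndimage.label(candidates)
    best_label, best_perimeter = 0, -1
    for lab in range(1, count + 1):
        region = labels == lab
        # Assumed "peripheral" rule: the region touches the image border.
        touches_border = (region[0, :].any() or region[-1, :].any()
                          or region[:, 0].any() or region[:, -1].any())
        if touches_border:
            continue
        # Perimeter approximated as the number of boundary pixels,
        # i.e. region pixels removed by a single erosion.
        boundary = region & ~ndimage.binary_erosion(region)
        perimeter = int(np.count_nonzero(boundary))
        if perimeter > best_perimeter:
            best_label, best_perimeter = lab, perimeter
    if best_label == 0:
        return np.zeros(candidates.shape, dtype=bool)
    return labels == best_label


# Synthetic check: central FAZ, a peripheral dark area and a small artifact.
cands = np.zeros((64, 64), dtype=bool)
cands[20:40, 20:40] = True   # central candidate
cands[0:30, 0:10] = True     # touches the border, discarded
cands[50:54, 50:54] = True   # small spurious candidate
faz = select_faz(cands)
```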

Precise FAZ segmentation and area calculation

The previously obtained FAZ segmentation is adequate in many cases. However, the use of morphological operators and the significant complexity of the OCT-A images penalize the segmentation precision at the limits of the FAZ. For that reason, we afterwards apply region growing [15, 16], using the previous segmentation as seed, to adjust the contour of the segmentation to the surrounding vascular edges with higher precision. In this case, we implemented a new version of region growing that is based on the original idea and adds new features. This implementation adds to the original region growing the ability to delete pixels that are in the region but do not satisfy the region conditions.

Given that the preliminary segmentation could exceed the vasculature limits, we perform a preliminary erosion step to guarantee that the area used as seed for the region growing process is contained inside the real FAZ region. Then, the contour points of this seed are used by the region growing process to progressively aggregate or delete neighboring pixels by intensity similarity until the entire vascular edge contour is reached. Finally, when no further pixels are added, the growing process stops.

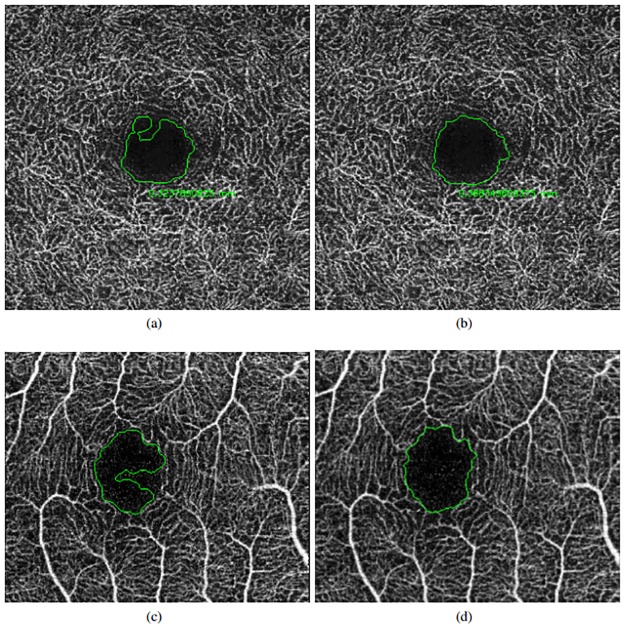

The similarity criterion calculates the average intensity of the extracted region and allows a 30% variation as the tolerance for the addition of new pixels to the segmentation. This means that a pixel is accepted in the region if its value lies in [ARV − 0.3 × ARV, ARV + 0.3 × ARV], where ARV is the average region value. Fig 8 presents a couple of imperfect preliminary FAZ extractions and their corresponding final precise segmentations. This way, we obtain better adjusted FAZ segmentations that are suitable for use in subsequent analyses and diagnostic processes.

Fig 8. Application of the precise final FAZ segmentation.

(a) & (c) Preliminary FAZ extractions. (b) & (d) Final segmentation results.
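The region growing variant with pixel deletion can be sketched as an iterative update. This is an illustrative reading of the description, not the authors' implementation: the number of erosion iterations, the 4-connected neighborhood, the iteration cap and the name `refine_faz` are all assumptions. Only the 30% tolerance around the average region value (ARV) comes from the text.

```python
import numpy as np
from scipy import ndimage


def refine_faz(image, seed, tolerance=0.3, erosions=3, max_iter=500):
    """Grow an eroded seed, keeping pixels within +/-30% of the ARV."""
    image = image.astype(np.float64)
    # Preliminary erosion so the seed lies inside the real FAZ (assumed amount).
    region = ndimage.binary_erosion(seed, iterations=erosions)
    if not region.any():
        return region
    for _ in range(max_iter):
        arv = image[region].mean()  # average region value
        similar = np.abs(image - arv) <= tolerance * arv
        # Ring of 4-connected neighbors around the current region.
        ring = ndimage.binary_dilation(region) & ~region
        # Add similar neighbors and delete region pixels that no longer
        # satisfy the similarity condition.
        updated = (region | ring) & similar
        if not updated.any() or np.array_equal(updated, region):
            break
        region = updated
    return region


# Synthetic check: a dark FAZ surrounded by bright vasculature, with a
# preliminary mask that is shifted with respect to the real FAZ.
img = np.full((64, 64), 200.0)
true_faz = np.zeros((64, 64), dtype=bool)
true_faz[20:44, 20:44] = True
img[true_faz] = 10.0
rough = np.zeros((64, 64), dtype=bool)
rough[18:40, 18:40] = True
refined = refine_faz(img, rough)
```

In this toy case the shifted preliminary mask is pulled back onto the dark region. If the eroded seed still contained many bright pixels, the ARV would be biased and the growth could stall, which is why the containment guarantee given by the erosion matters.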

Finally, using the resultant segmentation, the method also calculates the corresponding area of the identified FAZ zone, as a global and complementary numeric parameter to be used in clinical procedures. The area is calculated as follows:

$$\mathit{Area} = \frac{a \times \mathit{mm}^{2}}{\mathit{height} \times \mathit{width}} \qquad (1)$$

where a represents the count of pixels of the segmented region, mm represents the size in millimeters of the image (in our experiments 3 or 6 millimeters), and height and width indicate the dimensions in pixels of the analyzed OCT-A image.
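As a worked example of the area calculation, the sketch below assumes that the area is the pixel count multiplied by the physical area of one pixel, that is, a × mm² / (height × width). The function name `faz_area_mm2` and the pixel count of 5000 are hypothetical; the 320 × 320 resolution and the 3 and 6 millimeters sizes come from the dataset description.

```python
def faz_area_mm2(pixel_count: int, size_mm: float, height: int, width: int) -> float:
    """Area in square millimeters of a region of `pixel_count` pixels."""
    # Each pixel covers (size_mm / height) x (size_mm / width) mm^2.
    return pixel_count * (size_mm * size_mm) / (height * width)


# The same pixel count corresponds to a larger physical area in a
# 6 x 6 millimeters image, because each pixel covers four times the area.
area_3mm = faz_area_mm2(5000, 3, 320, 320)  # 0.439453125 mm^2
area_6mm = faz_area_mm2(5000, 6, 320, 320)  # 1.7578125 mm^2
```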

Results

We conducted different experiments to validate the suitability of the proposed method using the image dataset that was presented in Section Materials and methods. As indicated, this dataset includes a significant variability of conditions, with images at superficial and deep levels as well as sizes of 3 and 6 millimeters. In the experiments, we compared the results of the method with the manual labeling of two expert clinicians. The designed experiments were the following:

Experiment 1. Validation of the accuracy of the localization process.

Experiment 2. Validation of the quality of the segmentation results. We performed a couple of comparisons: firstly, a global comparison analyzing the area of the retrieved FAZ regions; secondly, a more adjusted comparison using the Jaccard’s index.

Additionally, we divided the experiments by the analysis of the included 4 configurations of the OCT-A images, given the difference of complexity of each case. This way, we obtain more precise results and conclusions of the performance of the proposal in all the existing scenarios.

Experiment 1: Validation of the FAZ localization stage

We firstly tested if the proposal correctly identifies the location of the FAZ region that corresponds to the first part of the proposed methodology. This is a crucial stage as the subsequent precise FAZ segmentation depends on a preliminary correct detection. As gold standard, we consider that a localization was successfully achieved if the centroid of the preliminary extraction is placed inside the manual segmentation of the specialist.
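The success criterion of this experiment is simple enough to state as code. The sketch below assumes binary masks of equal shape and rounds the centroid to the nearest pixel; the name `is_localized` is hypothetical.

```python
import numpy as np


def is_localized(preliminary: np.ndarray, manual: np.ndarray) -> bool:
    """True if the centroid of the preliminary mask lies in the manual mask."""
    rows, cols = np.nonzero(preliminary)
    if rows.size == 0:
        return False  # nothing was extracted, so nothing was localized
    r = int(round(float(rows.mean())))
    c = int(round(float(cols.mean())))
    return bool(manual[r, c])


# Synthetic check with one hit and one miss.
manual = np.zeros((64, 64), dtype=bool)
manual[12:30, 12:30] = True
hit = np.zeros((64, 64), dtype=bool)
hit[10:20, 10:20] = True
miss = np.zeros((64, 64), dtype=bool)
miss[40:50, 40:50] = True
```

Note that this criterion only checks the position of the extraction, not its shape, which is why the segmentation quality is evaluated separately in the second experiment.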

Table 1 summarizes the main localization results, including the success rates for both superficial and deep healthy OCT-A images. As we can see, the results using deep images were satisfactory, correctly localizing all 72 FAZ cases. Regarding the superficial images, the method also provided accurate results in most cases, with 4 cases in which the FAZ was not correctly detected (presented in Fig 9). These cases belong to 6 millimeters images, where the tonalities of the images are fairly regular and the FAZ normally presents small dimensions. Because of this small size, the final selection of the biggest candidate can return a candidate that does not belong to the real FAZ region, discarding the one that was correctly detected. Nevertheless, this situation only occurs in a very small number of particular cases.

Table 1. FAZ localization accuracy of the proposed method in healthy OCT-A images.

| Size | Superficial | Deep | Total |
|---|---|---|---|
| 3 × 3 millimeters | 36/36 (100%) | 36/36 (100%) | 72/72 (100%) |
| 6 × 6 millimeters | 32/36 (88.89%) | 36/36 (100%) | 68/72 (94.44%) |

Fig 9. Error cases on the FAZ localization process.

Table 2 summarizes the localization results and the success and failure rates for both superficial and deep OCT-A diabetic images, reaching accurate results in all the subgroups.

Table 2. FAZ localization accuracy of the proposed method in diabetic OCT-A images.

| Size | Superficial | Deep | Total |
|---|---|---|---|
| 3 × 3 millimeters | 19/19 (100%) | 16/16 (100%) | 35/35 (100%) |
| 6 × 6 millimeters | 17/17 (100%) | 17/17 (100%) | 34/34 (100%) |

Experiment 2: Validation of the FAZ segmentation

Over the correctly localized FAZ regions, we further analysed the characteristics of the precise FAZ segmentations in comparison with the manual segmentations of the specialist. We first compared the final area size of the extracted regions, since this is the final parameter used by clinicians in the diagnostic procedures, which provides a general and clear idea of the usefulness of the results for their final purpose. In particular, we used the correlation coefficient [17] to measure the similarity and relationship between the area sizes extracted by the method and by the clinician. In this way, it can be verified whether the relationship between both sets is directly proportional. More formally, the correlation is calculated as the quotient between the covariance and the product of the standard deviations of both area size sets:

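$$r = \frac{\operatorname{cov}(X, Y)}{\sigma_X \, \sigma_Y} \qquad (2)$$

Here X and Y denote the two sets of area sizes that are compared, and σ denotes the standard deviation. The result of this operation lies in the interval [−1, 1], where: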

1 ≥ r > 0. The correlation between both sets is directly proportional, being r = 1 the maximum possible correlation.

r = 0. No correlation is identified between both sets.

0 > r ≥ −1. The correlation is inversely proportional, being r = -1 the maximum inverse correlation.
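The coefficient can be computed directly from its definition as covariance divided by the product of the standard deviations. The sketch below uses NumPy; the function name `pearson_r` and the area values are made up for illustration and are not measurements from the dataset.

```python
import numpy as np


def pearson_r(x, y) -> float:
    """Pearson correlation: covariance over the product of the deviations."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    covariance = np.mean((x - x.mean()) * (y - y.mean()))
    return float(covariance / (x.std() * y.std()))


# Hypothetical FAZ areas (mm^2) from the system and from one expert.
system_areas = [0.20, 0.25, 0.31, 0.40]
expert_areas = [0.22, 0.24, 0.33, 0.41]
r = pearson_r(system_areas, expert_areas)
```

The result matches `np.corrcoef(system_areas, expert_areas)[0, 1]`, since the normalization used for the covariance and for the standard deviations cancels out in the quotient.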

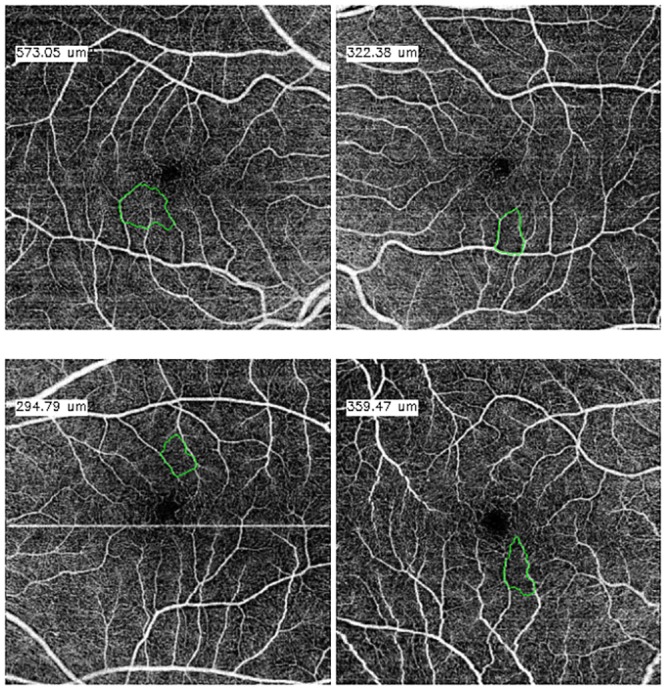

The correlation results are presented in Table 3, where we can observe that the performance of the proposed method is significantly correlated with the manual performance of the specialists, this similarity being even clearer in the case of superficial OCT-A images. The higher values in superficial images are obtained because the FAZ regions in these images present clearer surrounding vascular edges, so the manual and automatic region identifications agree more often. We also have to consider the typical variability and imperfection of the manual identifications made by the specialists, as opposed to the determinism and repeatability of the computational performance of our proposal, which adds value to the correlation rates that were obtained. In addition, it should be noted that the correlation between the specialists does not reach the highest value of the Pearson correlation coefficient. This means that there is a discrepancy between the performance of both experts. Consequently, the correlation between the automatic system and the expert results is also penalized. Fig 10 presents representative examples of superficial and deep images with the manual and automatic FAZ extractions and the area size measurements. As we can see, the similarity in the measurements explains the significant results presented in Table 3.

Table 3. Correlation coefficients that were obtained using the manual and the automatic area size measurements in healthy OCT-A images.

| Size | Superficial | Deep | Comparisons |
|---|---|---|---|
| 3 × 3 millimeters | 0.93 | 0.54 | Expert1 vs. Expert2 |
| 3 × 3 millimeters | 0.90 | 0.81 | System vs. Expert2 |
| 3 × 3 millimeters | 0.93 | 0.66 | System vs. Expert1 |
| 6 × 6 millimeters | 0.68 | 0.74 | Expert1 vs. Expert2 |
| 6 × 6 millimeters | 0.48 | 0.66 | System vs. Expert2 |
| 6 × 6 millimeters | 0.40 | 0.72 | System vs. Expert1 |

Fig 10. Comparative examples of the experts (green and red) and the automatic computational (blue) segmentations as well as the corresponding area size measurements.

On the other hand, the obtained correlation coefficients with the diabetic image subset are presented in Table 4.

Table 4. Correlation coefficients that were obtained using the manual and the automatic area size measurements in diabetic OCT-A images.

| Size | Superficial | Deep |
|---|---|---|
| 3 × 3 millimeters | 0.83 | 0.89 |
| 6 × 6 millimeters | 0.82 | 0.96 |

Despite the satisfactory results of the area size correlations, we analysed not only the final measured area sizes but also the specific matching degree of both extracted regions. In that sense, we performed an additional analysis of the manual and the computational segmented FAZ regions using the Jaccard’s index [18, 19]. We used this index given its simplicity and accurate representation of the agreement degree, frequently used in a large variability of domains and, specifically, in the evaluation of medical image segmentation issues [20–23]. The Jaccard’s index is defined by:

$$J(A, B) = \frac{|A \cap B|}{|A \cup B|} \qquad (3)$$

where A and B represent the regions of the segmentations that are compared. The Jaccard’s index tends to one with high levels of agreement: with largely similar segmentations, their intersection is practically the same as their union. On the contrary, the Jaccard’s index tends to zero for a reduced level of agreement. The Jaccard’s index takes values in the range [0, 1], and the obtained values are generally interpreted as follows:

Poor. If the Jaccard’s index is 0.4 or less, it is considered a poor result.

Good. If the Jaccard’s index is approximately 0.7, the result is considered good.

Excellent. In the case that the Jaccard’s index takes values of 0.9 or higher, the result of the segmentation is considered excellent.
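For binary segmentation masks, the Jaccard’s index reduces to a ratio of pixel counts. The sketch below assumes masks of equal shape; returning 1.0 when both masks are empty is a convention chosen here, not something stated in the text, and the name `jaccard_index` is hypothetical.

```python
import numpy as np


def jaccard_index(a, b) -> float:
    """Intersection over union of two binary masks."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    union = np.count_nonzero(a | b)
    if union == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return np.count_nonzero(a & b) / union


# Two 20 x 20 squares shifted by 5 rows: 300 shared pixels out of 500.
manual_mask = np.zeros((64, 64), dtype=bool)
manual_mask[10:30, 10:30] = True
system_mask = np.zeros((64, 64), dtype=bool)
system_mask[15:35, 10:30] = True
j = jaccard_index(manual_mask, system_mask)  # 0.6
```

The example also shows how strict the index is: a shift of only a quarter of the region side already lowers the agreement to 0.6, which helps to interpret the values reported for small FAZ regions.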

Table 5 details the average Jaccard’s indexes that were obtained for all the analyzed images using the manual and the automatic segmented regions in healthy OCT-A images. The results were divided into 4 parts using both size and depth dimensions, as mentioned, with the typical configurations that the specialists normally use: superficial & 3x3 millimeters, superficial & 6x6 millimeters, deep & 3x3 millimeters and deep & 6x6 millimeters. We divided the analysis into these 4 subgroups because each case presents specific characteristics and complexity, which gives a more adjusted analysis of the performance of the method. In addition, the results were divided into 3 further subgroups, based on the comparisons that were performed (comparison between both experts or between each expert and the automatic segmentation). In general terms, all the cases reached satisfactory results, with slight variations that are discussed in detail next.

Table 5. Jaccard indexes that were obtained for each subgroup of healthy OCT-A images.

| Size | Superficial | Deep | Comparisons |
|---|---|---|---|
| 3 × 3 millimeters | 0.83 | 0.72 | Expert1 vs. Expert2 |
| 3 × 3 millimeters | 0.82 | 0.72 | System vs. Expert2 |
| 3 × 3 millimeters | 0.81 | 0.74 | System vs. Expert1 |
| 6 × 6 millimeters | 0.72 | 0.68 | Expert1 vs. Expert2 |
| 6 × 6 millimeters | 0.77 | 0.72 | System vs. Expert2 |
| 6 × 6 millimeters | 0.72 | 0.66 | System vs. Expert1 |

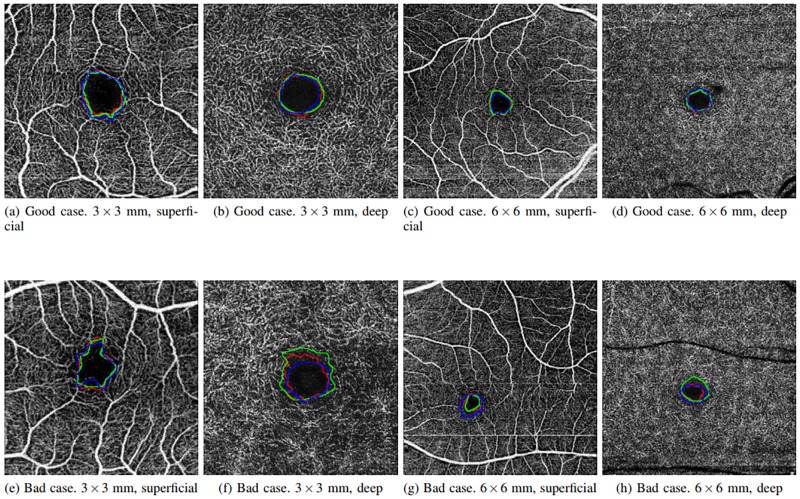

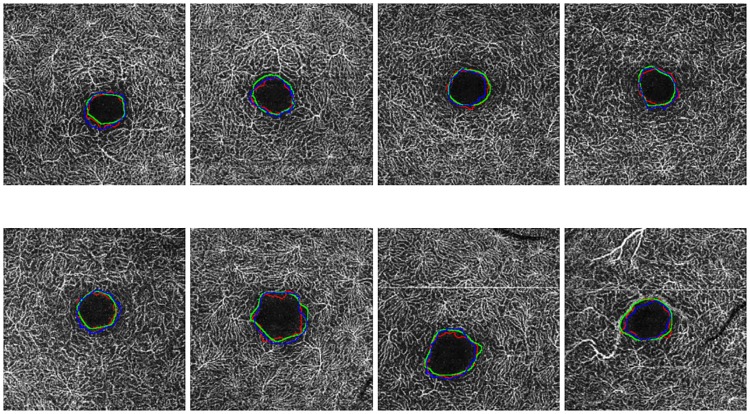

Images with a size of 6x6 millimeters typically present smaller FAZ regions, so small variations and imperfections in the segmentation of the system and/or the expert penalize the obtained agreement more strongly, producing lower Jaccard’s indexes than the 3x3 millimeters images, which show the FAZ region zoomed in and with more resolution. In addition, deep images (as stated above) present more diffuse, small and rough edges, which constitutes a more complex scenario. In these cases, the computational results, being based on the intensity characteristics, are slightly more irregular than the manual labeling, in which the expert tried to produce a smoother segmentation. As a consequence, the Jaccard’s indexes were slightly penalized, although the graphic results (examples are presented in Fig 11) are visually similar and, even in this case, the Jaccard’s indexes approximate values of 0.7, which are considered satisfactory. In addition, the Jaccard’s index between the specialists is, in all four cases, similar to the Jaccard’s index between the system and each of the experts. Therefore, we consider that the automatic segmentation is satisfactory in relation to the results of both specialists.

Fig 11. Comparative examples with good and bad results in the Jaccard’s index in the four subgroups (superficial and deep in 3 × 3 and 6 × 6 sizes).

Table 6 details the average Jaccard’s index between the expert’s annotations and the region extracted by the system in the different subgroups of diabetic OCT-A images. As in the previous case, the 3 × 3 superficial case represents the subgroup with the highest results whereas the 6 × 6 deep case provided the lowest values, for the reasons explained before.

Table 6. Jaccard’s indexes that were obtained for each subgroup of diabetic OCT-A images.

| Size | Superficial | Deep |
|---|---|---|
| 3 × 3 millimeters | 0.83 | 0.69 |
| 6 × 6 millimeters | 0.75 | 0.64 |

Normally, healthy OCT-A images present more regular and circular FAZ contours than the pathological cases. For that reason, we performed an additional analysis of the performance of the method considering the irregularity of the FAZ contour, more specifically the circularity of the analyzed FAZ region. The circularity is a metric that quantifies, in the range [0, 1], how similar the contour of the analyzed region is to a circle. In particular, in our case, we measured the circularity of each extracted FAZ region as follows:

$$\text{Circularity} = \frac{4 \pi A}{P^{2}} \tag{4}$$

where A indicates the area and P the perimeter of the FAZ region. Given that the FAZ irregularity is related to the degree of diabetic retinopathy (RD), we organized all the diabetic OCT-A images into three groups characterized by their circularity degree: low, medium and high. To do that, we used all four subgroups of OCT-A images: 3 × 3 and 6 × 6 millimeters, deep and superficial.
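The following short sketch illustrates the metric on two ideal shapes. It assumes the widely used definition of circularity, 4πA/P², which matches the stated range [0, 1] and the symbols A and P; the function name `circularity` and the example shapes are chosen here for illustration only.

```python
import math


def circularity(area, perimeter):
    """Circularity 4*pi*A / P**2: 1 for a perfect circle, lower for irregular shapes."""
    if area < 0 or perimeter <= 0:
        raise ValueError("area must be non-negative and perimeter positive")
    return 4.0 * math.pi * area / perimeter ** 2


# Ideal circle of radius 2 (any length unit): A = pi * r^2, P = 2 * pi * r
r = 2.0
circle_value = circularity(math.pi * r ** 2, 2.0 * math.pi * r)

# Square of side 3: A = 9, P = 12, which gives pi / 4
square_value = circularity(9.0, 12.0)

print(round(circle_value, 3), round(square_value, 3))
```

On a pixel grid, the value also depends on how the perimeter of the contour is estimated, so measured circularities of real FAZ regions may differ slightly between implementations.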

First of all, we calculated the FAZ circularity metric for each image. Then, we sorted all the circularity values in increasing order. With the sorted set of values, we divided them into the mentioned three groups, with the same number of elements in each one. Then, we analyzed the results with the validation metrics that we explained before to test the performance of the method in each circularity degree group. Table 7 shows the three defined circularity levels with their corresponding correlation coefficients and Jaccard’s indexes. Generally, the results were satisfactory in all the cases. In particular, the correlation coefficient is stable, with values around 0.9 in all the tested scenarios. In the case of the Jaccard’s index, the method provided satisfactory results, with values of 0.70 or higher in all the groups, being progressively slightly higher in more circular cases given the simpler scenario. We would like to highlight that all the values of circularity were in the range [0.27, 0.83], indicating a significant variation of the analyzed FAZ contours.

Table 7. Correlation coefficients and Jaccard’s indexes that were obtained for each circularity group of diabetic OCT-A images.

| Circularity level | Low | Medium | High |
|---|---|---|---|
| Correlation | 0.91 | 0.88 | 0.93 |
| Jaccard’s index | 0.70 | 0.73 | 0.77 |
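The grouping procedure described above (sort the circularity values, then split them into three groups of equal size) can be sketched as follows. The function name `split_into_three_groups` and the example values are hypothetical, and the handling of a number of images that is not a multiple of three is an assumption made here.

```python
def split_into_three_groups(circularities):
    """Sort the values increasingly and split them into low/medium/high groups."""
    ordered = sorted(circularities)
    base, extra = divmod(len(ordered), 3)
    # Assumption: leftover items go to the earlier groups, so that the
    # group sizes differ by at most one element.
    sizes = [base + (1 if i < extra else 0) for i in range(3)]
    groups = {}
    start = 0
    for name, size in zip(("low", "medium", "high"), sizes):
        groups[name] = ordered[start:start + size]
        start += size
    return groups


# Illustrative values only (not taken from the dataset), chosen inside
# the reported circularity range [0.27, 0.83].
example = [0.61, 0.27, 0.83, 0.45, 0.72, 0.38]
groups = split_into_three_groups(example)
```

With the 69 diabetic images of the dataset, an equal split of this kind would give 23 images per group.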

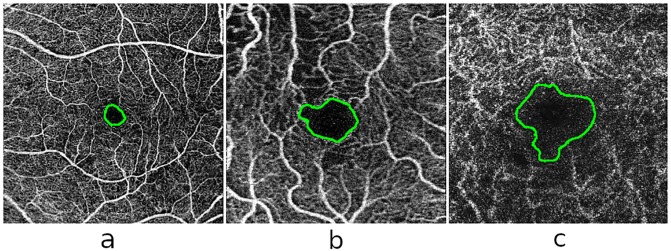

In Fig 12 we can see representative examples of diabetic OCT-A images from each defined circularity degree group.

Fig 12. Examples of representative FAZ regions from the defined levels of circularity in the diabetic OCT-A dataset.

(a) High level of circularity, (b) medium level of circularity and (c) low level of circularity.

The case that presented the best results was the one including OCT-A images with a size of 3x3 millimeters and at a superficial depth (see Fig 13), given that they present FAZ regions with better marked contours and larger sizes. The size of the FAZ region influences the Jaccard’s index, since the larger the region is, the less the index is penalized by variations in the contour.
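A simple idealized calculation illustrates this size effect. Assuming, purely for illustration, that both segmentations are concentric disks and that one contour lies a fixed number of pixels inside the other, the Jaccard’s index reduces to the ratio of the two areas. The function name and the radii below are hypothetical and do not come from the dataset.

```python
def concentric_disk_jaccard(radius_px, shift_px):
    """Jaccard's index of two concentric disks with radii R and R - shift.

    The smaller disk is the intersection and the larger one the union,
    so the index equals ((R - shift) / R) ** 2.
    """
    if radius_px <= 0 or not 0 <= shift_px <= radius_px:
        raise ValueError("need radius_px > 0 and 0 <= shift_px <= radius_px")
    return ((radius_px - shift_px) / radius_px) ** 2


# Same 1-pixel contour disagreement for two hypothetical FAZ sizes
large_faz = concentric_disk_jaccard(radius_px=20, shift_px=1)  # about 0.90
small_faz = concentric_disk_jaccard(radius_px=10, shift_px=1)  # about 0.81
```

Under these assumptions, the same one-pixel disagreement costs roughly twice as much agreement for the smaller region.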

Fig 13. Comparative examples of the experts (green and red) and the automatic computational (blue) FAZ measurements in superficial 3 × 3 millimeters images.

Images with a size of 6x6 millimeters and at a superficial depth (see Fig 14) also provided satisfactory results: they have clear and marked edges, so the segmentation of the system and the labeling of the expert are very similar. Despite that, given that the FAZ region is smaller, changes in the contour identification affect the Jaccard’s index to a greater extent.

Fig 14. Comparative examples of the experts (green and red) and the automatic computational (blue) FAZ measurements in superficial 6 × 6 millimeters images.

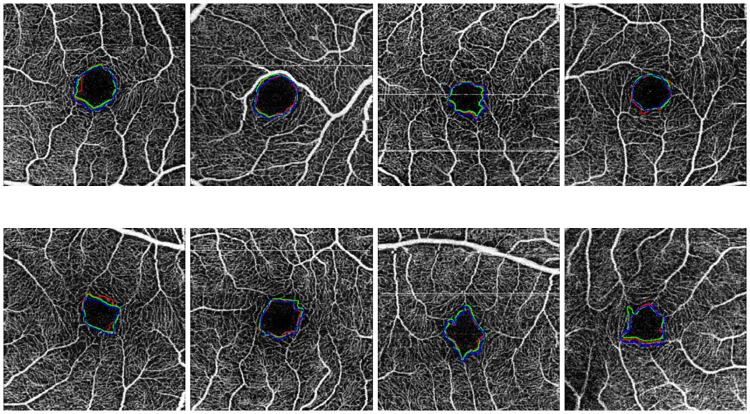

Finally, deep images (see Figs 15 and 16) are those that retrieved the worst results; nevertheless, they remain within the range of Jaccard’s index values that are considered correct. Of both sizes, and consistently with the superficial case, the 3x3 millimeters images once again presented better results.

Fig 15. Comparative examples of the experts (green and red) and the automatic computational (blue) FAZ measurements in deep 3 × 3 millimeters images.

Fig 16. Comparative examples of the experts (green and red) and the automatic computational (blue) FAZ measurements in deep 6 × 6 millimeters images.

Additionally, Fig 11 presents examples of the best and worst cases of each subgroup, demonstrating that frequently, even in the worst scenario, the method provides acceptable results in the segmentation of the FAZ region and the calculation of the corresponding area size for the subsequent clinical analysis. In addition, Table 8 summarizes the best and worst Jaccard’s indexes of each subgroup.

Table 8. Worst and best Jaccard’s indexes that were obtained for each subgroup of healthy OCT-A images.

| Size | Superficial | Deep | Comparisons |
|---|---|---|---|
| 3 × 3 millimeters best | 0.92 | 0.83 | Expert1 vs. Expert2 |
| 3 × 3 millimeters best | 0.93 | 0.88 | System vs. Expert2 |
| 3 × 3 millimeters best | 0.88 | 0.86 | System vs. Expert1 |
| 3 × 3 millimeters worst | 0.74 | 0.44 | Expert1 vs. Expert2 |
| 3 × 3 millimeters worst | 0.68 | 0.59 | System vs. Expert2 |
| 3 × 3 millimeters worst | 0.69 | 0.57 | System vs. Expert1 |
| 6 × 6 millimeters best | 0.84 | 0.84 | Expert1 vs. Expert2 |
| 6 × 6 millimeters best | 0.88 | 0.87 | System vs. Expert2 |
| 6 × 6 millimeters best | 0.88 | 0.83 | System vs. Expert1 |
| 6 × 6 millimeters worst | 0.43 | 0.40 | Expert1 vs. Expert2 |
| 6 × 6 millimeters worst | 0.43 | 0.40 | System vs. Expert2 |
| 6 × 6 millimeters worst | 0.42 | 0.41 | System vs. Expert1 |

Discussion and conclusions

Many vascular diseases affect the retinal micro-circulation, not only specific vascular diseases of the human eye but also others with a general impact on the patients, such as hypertension or diabetes. For that reason, the availability of automatic tools that quickly calculate suitable biomarkers and assist clinicians in the diagnosis and monitoring of patients is of great interest for healthcare systems.

Among the different ophthalmological image modalities, OCT-A has appeared recently, offering visualizations of the characteristics of the retinal vasculature at different depths while being non-invasive, as it omits the injection of fluorescein that classical angiographies require. Given its utility, the interest in the OCT-A image modality is increasing in clinical and research practice. The automatic extraction of the FAZ region in OCT-A images is of great interest given that it offers important advantages over the manual extraction by the specialist. In addition to replacing a tedious manual labeling process with an instant computational tool, the automatic extraction provides repeatability and determinism, which are hard to achieve with the manual extractions of the clinical experts and represent a fundamental characteristic in accurate diagnostic and monitoring processes.

In this work, we present a novel automatic methodology that identifies and precisely segments the FAZ region using OCT-A images. The proposed method applies morphological operators to enhance the vascular brightness of the OCT-A images. Subsequently, edge detection techniques are applied to eliminate unnecessary spurious details and detect the vascular regions. After this, morphological operations are applied again to eliminate areas that are not of interest in the detection of the target FAZ region and to keep a reduced number of candidates. Then, specific domain knowledge is used to preserve, from all the candidates, the most suitable one as the FAZ localization. Finally, a region growing approach is applied using this preliminary identification as seed to obtain the final, precise FAZ segmentation. Additionally, using this precise segmentation, the method calculates the corresponding FAZ area size, an important biomarker for the study of the evolution of different relevant diseases and their treatments.
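To make the last step more concrete, the sketch below shows a generic seeded region growing on a small intensity grid. It is not the implementation of the proposed method: the homogeneity criterion (absolute intensity difference to the seed within a tolerance), the 4-connectivity, the function name `region_grow` and the toy image are all assumptions made for illustration.

```python
from collections import deque


def region_grow(image, seed, tolerance):
    """Grow a 4-connected region from `seed` over the pixels whose intensity
    differs from the seed intensity by at most `tolerance`."""
    rows, cols = len(image), len(image[0])
    seed_value = image[seed[0]][seed[1]]
    region = {seed}
    frontier = deque([seed])
    while frontier:
        r, c = frontier.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and (nr, nc) not in region:
                if abs(image[nr][nc] - seed_value) <= tolerance:
                    region.add((nr, nc))
                    frontier.append((nr, nc))
    return region


# Toy image (hypothetical): a dark avascular block surrounded by bright
# vessel pixels, plus a second dark area that is not connected to the seed.
toy_image = [
    [200, 200, 200, 200, 200],
    [200,  10,  12, 200,  11],
    [200,  11,   9, 200,  10],
    [200, 200, 200, 200, 200],
]
faz_pixels = region_grow(toy_image, seed=(1, 1), tolerance=20)
area_px = len(faz_pixels)
```

The region stops at the bright vessel pixels and does not leak into the disconnected dark area. An area in physical units would follow by multiplying the pixel count by the area of one pixel, which depends on the scan size and the image resolution.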

Regarding the obtained results with the used image dataset, the FAZ localization obtained a success rate over 97%, as well as a correlation coefficient of about 0.9 in 3 × 3 superficial images (best case) and of about 0.7 in 6 × 6 deep images (worst case), using the manual performance of the clinical experts as reference. The similarity was also measured with the Jaccard’s index, obtaining an average value of 0.8 in 3x3 millimeters superficial images (best case) and of 0.7 in 6x6 millimeters deep images (worst case). In summary, the proposed method offered a satisfactory performance in all the designed scenarios.

To perform the validation process, we tested the method with the public image dataset OCTAGON [12], which contains 213 images grouped in 2 image subsets: the first one, formed by 144 healthy OCT-A images; and the second one, formed by 69 diabetic OCT-A images. The healthy dataset is divided into different age groups (10-19, 20-29, 30-39, 40-49, 50-59 and 60-69 years old) with 3 patients in each age range. Each of these patients provides OCT-A images of both eyes (left and right), with one image of each subgroup per eye (3 × 3 millimeters superficial, 3 × 3 millimeters deep, 6 × 6 millimeters superficial and 6 × 6 millimeters deep). The healthy image subset also provides the manual labeling of 2 experts, which allows us to proceed with robust validations. On the other hand, the diabetic subset contains 69 images: 19 superficial images of 3 × 3 millimeters, 17 deep images of 3 × 3 millimeters, 16 superficial images of 6 × 6 millimeters and 17 deep images of 6 × 6 millimeters. This subset also contains the manual labeling of an expert clinician. Therefore, we use a complete dataset that contains healthy and pathological images, with a large variability of OCT-A images in different age ranges (especially in the healthy case), also including manual annotations of at least one expert clinician, which allows a robust validation. The different methods in the state of the art worked only with datasets with 1 or 2 of the subgroups that we use in our proposal, as shown in Table 9, where we compare our OCT-A image coverage with different published works.
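The composition described above can be checked with a few lines of arithmetic. The variable names are chosen here for illustration; all counts come from the description of the dataset.

```python
# Healthy subset: 6 age ranges x 3 patients x 2 eyes x 4 image types
age_ranges = ["10-19", "20-29", "30-39", "40-49", "50-59", "60-69"]
patients_per_range = 3
eyes_per_patient = 2
image_types = ["superficial 3x3", "deep 3x3", "superficial 6x6", "deep 6x6"]
healthy_total = (
    len(age_ranges) * patients_per_range * eyes_per_patient * len(image_types)
)

# Diabetic subset: number of images per image type
diabetic_counts = {
    "superficial 3x3": 19,
    "deep 3x3": 17,
    "superficial 6x6": 16,
    "deep 6x6": 17,
}
diabetic_total = sum(diabetic_counts.values())

total = healthy_total + diabetic_total
print(healthy_total, diabetic_total, total)  # prints: 144 69 213
```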

Table 9. Comparison of the coverage of OCT-A image types between this proposal and the works of Lu et al. [10] and Hwang et al. [11].

Regarding the results, we tested the method with the OCTAGON dataset [12], as mentioned, using Pearson’s correlation coefficient and the Jaccard’s index. The first one is useful to prove that the manually extracted and the automatically extracted areas are related. The second one is useful to check the overlap between the manual and automated extractions. As we saw in the Section Experiment 2: validation of the FAZ segmentation, the results with both validation metrics are satisfactory, from which we conclude that the method correlates accurately with the manual labeling of the experts. Table 10 compares our approach with a similar work, showing the Jaccard’s indexes in healthy and diabetic cases for our proposal and for the method of Lu et al. [10]. Given that our image dataset fits better the real conditions that the expert clinicians face, including a significant variability in the image conditions as detailed, we implemented a more general solution than other proposals. Also, we would like to remark that our dataset contains 69 diabetic OCT-A images with advanced stages of RD, whereas the dataset of [10] contains 66 images: 16 without RD, 22 with mild to moderate RD and 28 with severe RD. Additionally, our dataset includes cases with high levels of irregularity in the FAZ contours, as mentioned, providing more variability and a better representation of what is typically present in real environments. For these reasons, our method provided slightly lower results in the healthy 3 × 3 millimeters superficial images, which in any case remain satisfactory. In fact, we obtained satisfactory results in all the subgroups that were tested, both in healthy and in diabetic OCT-A images. In this comparison, no results were reported regarding the work of Hwang et al. [11], given that their proposal is centered on clinical research and they propose the validation of the method as future work.
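As a brief illustration of the first metric, the sketch below computes Pearson’s correlation coefficient between manual and automatic FAZ areas. The function name `pearson_correlation` and the area values are hypothetical examples and are not measurements from the dataset.

```python
import math


def pearson_correlation(x, y):
    """Pearson's correlation coefficient of two equally long sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of the same length (>= 2)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical FAZ areas in square millimeters (illustrative values only)
manual_areas = [0.25, 0.30, 0.35, 0.40]
automatic_areas = [0.26, 0.29, 0.37, 0.41]
r = pearson_correlation(manual_areas, automatic_areas)
```

The coefficient only relates the sizes of the areas: two regions of equal area placed at different positions would correlate perfectly while overlapping poorly, which is why the overlap is assessed separately with the Jaccard’s index.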

Table 10. Comparison of the Jaccard’s indexes that were obtained for 3 × 3 millimeters superficial OCT-A images with our proposal and with the method of Lu et al. [10].

| Method | Proposed | Lu et al. [10] |
|---|---|---|
| Superficial 3 × 3 mm healthy case | 0.82 | 0.87 |
| Superficial 3 × 3 mm diabetic case | 0.83 | 0.82 |

To further test the robustness and suitability of the obtained results, we propose as future work the design of experiments that involve image datasets of patients with different relevant pathologies that affect the retinal vascularity. We also propose the use of the methodology to measure the FAZ region in real scenarios, monitoring pathologies to confirm the validity of the method.

All the code developed in this work is publicly available on the repository https://github.com/macarenadiaz/FAZ_Extraction.

Data Availability

All OCT-A image files are available from the OCTAGON database (http://www.varpa.org/research/ophtalmology.html). All code files are available at https://github.com/macarenadiaz/FAZ_Extraction.

Funding Statement

This work is supported by the Ministerio de Economía y Competitividad, Government of Spain through the DPI2015-69948-R research project. Also, this work has received financial support from the European Union (European Regional Development Fund - ERDF) and the Xunta de Galicia, Centro singular de investigación de Galicia accreditation 2016-2019, Ref. ED431G/01; Grupos de Referencia Competitiva, Ref. ED431C 2016-047 and Instituto de salud Carlos III, Ref. PI-00940. Also, this work has received partial financial support from the Fundación Mutua Madrileña project, Ref. 2017/365.

References

- 1. Novo J, Rouco J, Barreira N, Ortega M, Penedo M, Campilho A. Hydra: A web-based system for cardiovascular analysis, diagnosis and treatment. Computer methods and programs in biomedicine. 2017;139:61–81. doi:10.1016/j.cmpb.2016.10.019

- 2. Novo J, Hermida A, Ortega M, Barreira N, Penedo M, López J, et al. Wivern: a Web-Based System Enabling Computer-Aided Diagnosis and Interdisciplinary Expert Collaboration for Vascular Research. Journal of Medical and Biological Engineering. 2017;37:920–935. doi:10.1007/s40846-017-0256-y

- 3. Nishio M, Nishizawa M, Sugiyama O, Kojima R, Yakami M, Kuroda T, et al. Computer-aided diagnosis of lung nodule using gradient tree boosting and Bayesian optimization. PLoS One. 2018;4.

- 4. de Moura J, Novo J, Charlón P, Barreira N, Ortega M. Enhanced visualization of the retinal vasculature using depth information in OCT. Medical & biological engineering & computing. 2017;55:2209–2225. doi:10.1007/s11517-017-1660-8

- 5. de Carlo T, Romano A, Waheed N, Duker J. A review of optical coherence tomography angiography (OCTA). International Journal of Retina and Vitreous. 2015;1. doi:10.1186/s40942-015-0005-8

- 6. Balaratnasingam C, Inoue M, Ahn S, McCann J, Dhrami-Gavazi E, Yannuzzi L, et al. Visual Acuity Is Correlated with the Area of the Foveal Avascular Zone in Diabetic Retinopathy and Retinal Vein Occlusion. American Academy of Ophthalmology. 2016;123.

- 7. Mastropasqua R, Toto L, Borrelli E, Di Antonio L, Mattei P, Senatore A, et al. Optical Coherence Tomography Angiography Findings in Stargardt Disease. PLoS ONE. 2017;12. doi:10.1371/journal.pone.0170343

- 8. Mastropasqua R, Toto L, Mattei P, Di Nicola M, Zecca I, Carpineto P, et al. Reproducibility and repeatability of foveal avascular zone area measurements using swept-source optical coherence tomography angiography in healthy subjects. British Journal of Ophthalmology. 2016;100.

- 9. Carpineto P, Mastropasqua R, Marchini G, Toto L, Di Nicola M, Di Antonio L. Reproducibility and repeatability of foveal avascular zone measurements in healthy subjects by optical coherence tomography angiography. British Journal of Ophthalmology. 2016;100. doi:10.1136/bjophthalmol-2015-307330

- 10. Lu Y, Simonett J, Wang J, Zhang M, Hwang T, Hagag A, et al. Evaluation of Automatically Quantified Foveal Avascular Zone Metrics for Diagnosis of Diabetic Retinopathy Using Optical Coherence Tomography Angiography. Investigative ophthalmology. 2018;59:2212–2221.

- 11. Hwang T, Gao S, Liu L, Lauer A, Bailey S, Flaxel C, et al. Automated quantification of capillary nonperfusion using optical coherence tomography angiography in diabetic retinopathy. JAMA Ophthalmol. 2016;5658:1–7.

- 12. Díaz M, Novo J, Ortega M, Penedo M, Gómez-Ulla F. OCTAGON; 2018.

- 13. Leroy F, Mangin J, Rousseau F, Glasel H, Hertz-Pannier L, Dubois J, et al. Atlas-free surface reconstruction of the cortical grey-white interface in infants. PLoS One. 2011;11.

- 14. Ding L, Goshtasby A. On the Canny edge detector. Pattern Recognition. 2001;34:721–725. doi:10.1016/S0031-3203(00)00023-6

- 15. Zhu S, Yuille A. Region competition: unifying snakes, region growing, and Bayes/MDL for multiband image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence. 1996;18:884–900. doi:10.1109/34.537343

- 16. Chang Y, Li X. Adaptive image region-growing. IEEE Transactions on Image Processing. 1994;3:868–872. doi:10.1109/83.336259

- 17. R T. Interpretation of the Correlation Coefficient: A Basic Review. Journal of Diagnostic Medical Sonography. 1990;6:35–39. doi:10.1177/875647939000600106

- 18. Real R, Vargas J. The Probabilistic Basis of Jaccard’s Index of Similarity. Systematic Biology. 1996;45:380–385. doi:10.1093/sysbio/45.3.380

- 19. Ilea D, Whelan P. Image segmentation based on the integration of colour-texture descriptors—A review. Pattern Recognition. 2011;44. doi:10.1016/j.patcog.2011.03.005

- 20. Bouix S, Martin-Fernandez M, Ungar L, Nakamura M, Koo M, McCarley R, et al. On evaluating brain tissue classifiers without a ground truth. Neuroimage. 2007;36:1207–1224. doi:10.1016/j.neuroimage.2007.04.031

- 21. Silva S, Madeira J, Santos B, Ferreira C. Inter-observer variability assessment of a left ventricle segmentation tool applied to 4D MDCT images of the heart. IEEE Engineering in Medicine and Biology Society. 2011;2011:3411–3414.

- 22. Lassen B, Jacobs C, Kuhnigk J, van Ginneken B, van Rikxoort E. Robust semi-automatic segmentation of pulmonary subsolid nodules in chest computed tomography scans. Physics in medicine and biology. 2015;60:1307–1323. doi:10.1088/0031-9155/60/3/1307

- 23. Gonçalves L, Novo J, Campilho A. Hessian based approaches for 3D lung nodule segmentation. Expert Systems with Applications. 2016;61:1–15. doi:10.1016/j.eswa.2016.05.024
