In a recent article in PNAS, Lenc et al. (1) use frequency tagging to claim that low-frequency sounds boost “selective neural locking to the beat, thus explaining the privileged role of bass sounds in driving people to move along with the musical beat.” While this is an interesting hypothesis, there are major flaws in the authors’ methods.

We (2) previously demonstrated that frequency-tagged z scores lack the specificity required to make them a reliable, unambiguous metric of “neural locking to the beat” (see figure S4 in ref. 2). Nozaradan et al. (3) cited our study but may have overlooked our critique of their z-scoring method. We therefore reiterate the fundamental problem: Frequency tagging discards crucial information about phase. It cannot capture how frequency components interact in the time domain, which makes the method insensitive to the temporal structure of signals. Beat perception is undeniably structured in time, typically with a steady period and phase. It is impossible to know whether neural signals are “selectively locked” to the beat if the phases of the frequency components in the recorded signals are discarded.

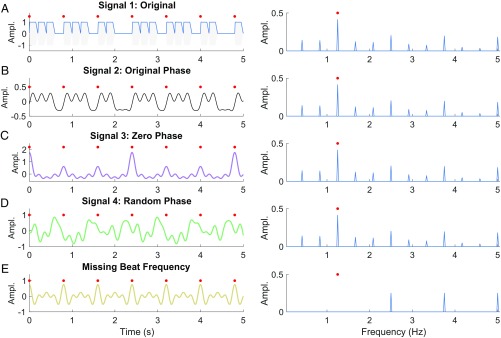

Fig. 1 illustrates the problem: Fig. 1A shows the unsyncopated stimulus used in Lenc et al. (1), together with its amplitude spectrum. Preserving the original phase of just the 12 frequency peaks considered by the method allows us to reconstruct a time domain signal that resembles the original stimulus envelope (Fig. 1B). However, setting the phase of these frequency components to zero results in a dramatically different signal, with arguably better locking to the beat (Fig. 1C). Meanwhile, rhythmic temporal structure is largely lost if phases are random (Fig. 1D). Crucially, the four time domain signals (Fig. 1 A–D) all have identical frequency spectra up to 5 Hz, and thus identical z scores.
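The phase manipulation described here can be checked numerically. The Python sketch below is a minimal illustration under stated assumptions, not the analysis behind Fig. 1: the 12-slot rhythm, the 100-Hz sampling rate, and the 2.4-s cycle (which places the 12 components at multiples of 1/2.4 Hz up to 5 Hz, with 1.25 Hz as the third) are hypothetical choices, and the z score is assumed to be computed across the 12 tagged amplitudes.

```python
import numpy as np

fs = 100                # sampling rate in Hz (assumed)
n_cycles = 10           # repetitions of the 2.4-s pattern (assumed)

# Hypothetical 12-slot rhythm on a 200-ms grid; NOT the stimulus of Lenc et al.
pattern = np.array([1, 1, 1, 0, 1, 1, 1, 0, 1, 1, 0, 0], dtype=float)
event = np.r_[np.ones(10), np.zeros(10)]   # 100 ms on, 100 ms off
envelope = np.tile(np.kron(pattern, event), n_cycles)
n = envelope.size

# Frequency resolution is fs / n = 1/24 Hz, so the 12 components at
# k / 2.4 Hz fall on FFT bins 10, 20, ..., 120 (bin 30 is 1.25 Hz).
tagged_bins = n_cycles * np.arange(1, 13)
spectrum = np.fft.rfft(envelope)
amplitudes = np.abs(spectrum[tagged_bins])
original_phases = np.angle(spectrum[tagged_bins])


def reconstruct(phases):
    """Rebuild a time domain signal from the 12 tagged components only."""
    spec = np.zeros(n // 2 + 1, dtype=complex)
    spec[tagged_bins] = amplitudes * np.exp(1j * phases)
    return np.fft.irfft(spec, n=n)


def tagged_z_scores(signal):
    """z-score the 12 tagged amplitudes against their own mean and SD (assumed)."""
    amps = np.abs(np.fft.rfft(signal))[tagged_bins]
    return (amps - amps.mean()) / amps.std()


rng = np.random.default_rng(0)
kept = reconstruct(original_phases)                       # original phases
zeroed = reconstruct(np.zeros(12))                        # phases set to zero
randomized = reconstruct(rng.uniform(0, 2 * np.pi, 12))   # random phases

z_kept = tagged_z_scores(kept)
z_zeroed = tagged_z_scores(zeroed)
z_randomized = tagged_z_scores(randomized)

# The z scores agree even though the waveforms differ.
print(np.allclose(z_kept, z_zeroed), np.allclose(z_kept, z_randomized))
print(np.allclose(kept, zeroed), np.allclose(kept, randomized))
```

Only the phases passed to `reconstruct` differ among the three signals, so any measure computed from the tagged amplitudes alone cannot tell them apart.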

Fig. 1. Why phase matters. (A, Left) Unsyncopated low-carrier frequency stimulus (gray) and envelope (blue) used in Lenc et al. (1); red circles mark beat times (see figure S1 in ref. 2). (A, Right) Amplitude spectrum of the stimulus envelope, with the beat frequency at 1.25 Hz. (B) Signal reconstruction using the original amplitudes and phases of just the 12 frequency components considered by the method. The original stimulus structure is largely preserved. (C) Same as B but with phases set to zero. Constructive interference between frequency components results in a signal that appears better locked to the beat. (D) Same as B but with phases set randomly between 0 and 2π. Frequency components no longer align to produce sharp time domain features at beat times. Note that the signals in A–D are considered identical by frequency tagging, since they share the same amplitude spectrum up to 5 Hz (Right). (E) A signal clearly aligned to beat times (Left), but with zero amplitude, and therefore a negative z score, at the beat frequency (Right).

One could argue that comparing z scores only at the beat frequency might avoid phase interaction problems. Unfortunately, this too is false. Fig. 1E illustrates a signal with zero amplitude (and a negative z score) at the beat frequency. Nevertheless, its in-phase harmonics produce a time domain signal with prominent peaks aligned to beat times. Together, these examples demonstrate that frequency-tagged z scores cannot measure how well a signal locks to a regular beat. If these were neural signals, their respective z scores would have us believe that the signals in Fig. 1 A–D all represent the isochronous 1.25-Hz beat equally well, and better than the signal in Fig. 1E. Clearly, this is not the case.
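A toy analog of this case is easy to construct. The sketch below is an assumption-laden illustration rather than the signal shown in Fig. 1E: it sums three zero-phase cosines at the second, third, and fourth harmonics of 1.25 Hz, omits the 1.25-Hz component entirely, and z-scores the amplitudes across an assumed set of 12 components at multiples of 1/2.4 Hz.

```python
import numpy as np

fs = 100                         # sampling rate in Hz (assumed)
n = 2400                         # 24 s of signal, i.e. 30 beat periods
t = np.arange(n) / fs
beat_hz = 1.25

# In-phase harmonics of the beat frequency, with no energy at 1.25 Hz itself.
harmonics = beat_hz * np.array([2, 3, 4])          # 2.5, 3.75, 5 Hz
signal = np.cos(2 * np.pi * np.outer(harmonics, t)).sum(axis=0)

# Amplitudes at the 12 assumed tagged components (k / 2.4 Hz -> bins 10k).
tagged_bins = 10 * np.arange(1, 13)
amps = np.abs(np.fft.rfft(signal))[tagged_bins] / (n / 2)
z = (amps - amps.mean()) / amps.std()

beat_amp = amps[2]               # third component is 1.25 Hz
beat_z = z[2]

# Samples that fall exactly on beat times (every 0.8 s = 80 samples).
beat_samples = np.arange(0, n, 80)
peaks_on_beat = np.allclose(signal[beat_samples], signal.max())

print(beat_amp < 1e-9, beat_z < 0, peaks_on_beat)
```

The waveform repeats every 0.8 s and reaches its maximum on every beat, yet the amplitude at the beat frequency is numerically zero and its z score is negative.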

Given the lack of specificity, it is perhaps unsurprising that Henry et al. (4) found no correlation between frequency-tagged z scores and perceived beat salience. Novembre and Iannetti (5) also pointed out that similar frequency domain signatures can be produced by signals that exhibit selective locking to the beat and by event-related potentials due to rhythmic stimulus structure. Similar potential pitfalls are discussed at length in Zhou et al. (6).

Music neuroscience is a young discipline with ongoing method development. Conclusions based on frequency tagging (1, 3, 7–9) will need to be confirmed using methods that are phase sensitive, such as intertrial phase coherence (10) or time domain methods (2).
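To make the contrast with a phase-sensitive measure concrete, the sketch below computes intertrial phase coherence in its common textbook form: the magnitude of the trial-averaged unit phase vector at one frequency. It is an illustration on simulated data and is not claimed to reproduce the procedure of ref. 10; the trial count, noise level, sampling rate, and the helper name `itpc` are all assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
fs = 100                      # sampling rate in Hz (assumed)
n_samples = 800               # 8 s per trial, so 1.25 Hz falls on an FFT bin
n_trials = 50                 # assumed
beat_hz = 1.25
t = np.arange(n_samples) / fs


def itpc(trials, freq_hz, fs):
    """Magnitude of the mean unit phase vector across trials at freq_hz."""
    spectra = np.fft.rfft(trials, axis=1)
    freqs = np.fft.rfftfreq(trials.shape[1], d=1 / fs)
    k = np.argmin(np.abs(freqs - freq_hz))
    unit_vectors = spectra[:, k] / np.abs(spectra[:, k])
    return np.abs(unit_vectors.mean())


def mean_amplitude(trials, freq_hz, fs):
    """Trial-averaged spectral amplitude at freq_hz (phase is ignored)."""
    spectra = np.fft.rfft(trials, axis=1)
    freqs = np.fft.rfftfreq(trials.shape[1], d=1 / fs)
    k = np.argmin(np.abs(freqs - freq_hz))
    return np.abs(spectra[:, k]).mean()


noise = lambda: rng.normal(0.0, 0.5, size=(n_trials, n_samples))

# Same phase on every trial versus a random phase on every trial.
locked = np.cos(2 * np.pi * beat_hz * t + 0.3) + noise()
random_phase = rng.uniform(0, 2 * np.pi, size=(n_trials, 1))
jittered = np.cos(2 * np.pi * beat_hz * t + random_phase) + noise()

itpc_locked = itpc(locked, beat_hz, fs)
itpc_jittered = itpc(jittered, beat_hz, fs)
amp_locked = mean_amplitude(locked, beat_hz, fs)
amp_jittered = mean_amplitude(jittered, beat_hz, fs)

print(itpc_locked, itpc_jittered)
print(amp_locked, amp_jittered)
```

In this simulation the two conditions have nearly the same amplitude at 1.25 Hz, so an amplitude-only measure treats them alike, while the coherence measure separates them.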

Footnotes

The authors declare no conflict of interest.

References

- 1. Lenc T, Keller PE, Varlet M, Nozaradan S. Neural tracking of the musical beat is enhanced by low-frequency sounds. Proc Natl Acad Sci USA. 2018;115:8221–8226. doi: 10.1073/pnas.1801421115.

- 2. Rajendran VG, Harper NS, Garcia-Lazaro JA, Lesica NA, Schnupp JWH. Midbrain adaptation may set the stage for the perception of musical beat. Proc Biol Sci. 2017;284:20171455. doi: 10.1098/rspb.2017.1455.

- 3. Nozaradan S, Schönwiesner M, Keller PE, Lenc T, Lehmann A. Neural bases of rhythmic entrainment in humans: Critical transformation between cortical and lower-level representations of auditory rhythm. Eur J Neurosci. 2018;47:321–332. doi: 10.1111/ejn.13826.

- 4. Henry MJ, Herrmann B, Grahn JA. What can we learn about beat perception by comparing brain signals and stimulus envelopes? PLoS One. 2017;12:e0172454. doi: 10.1371/journal.pone.0172454.

- 5. Novembre G, Iannetti GD. Tagging the musical beat: Neural entrainment or event-related potentials? Proc Natl Acad Sci USA. 2018;115:E11002–E11003. doi: 10.1073/pnas.1815311115.

- 6. Zhou H, Melloni L, Poeppel D, Ding N. Interpretations of frequency domain analyses of neural entrainment: Periodicity, fundamental frequency, and harmonics. Front Hum Neurosci. 2016;10:274. doi: 10.3389/fnhum.2016.00274.

- 7. Nozaradan S. Exploring how musical rhythm entrains brain activity with electroencephalogram frequency-tagging. Philos Trans R Soc Lond B Biol Sci. 2014;369:20130393. doi: 10.1098/rstb.2013.0393.

- 8. Nozaradan S, Peretz I, Keller PE. Individual differences in rhythmic cortical entrainment correlate with predictive behavior in sensorimotor synchronization. Sci Rep. 2016;6:20612. doi: 10.1038/srep20612.

- 9. Nozaradan S, Keller PE, Rossion B, Mouraux A. EEG frequency-tagging and input-output comparison in rhythm perception. Brain Topogr. 2018;31:153–160. doi: 10.1007/s10548-017-0605-8.

- 10. Doelling KB, Poeppel D. Cortical entrainment to music and its modulation by expertise. Proc Natl Acad Sci USA. 2015;112:E6233–E6242. doi: 10.1073/pnas.1508431112.