Abstract

Soldier-based simulators have been attracting increased attention recently, with the aim of making complex military tactics more effective, such that soldiers are able to respond rapidly and logically to battlespace situations and the commander’s decisions in the battlefield. Moreover, body area networks (BANs) can be applied to collect the training data in order to provide greater access to soldiers’ physical actions or postures as they occur in real routine training. Therefore, due to the limited physical space of training facilities, an efficient soldier-based training strategy is proposed that integrates a virtual reality (VR) simulation system with a BAN, which can capture body movements such as walking, running, shooting, and crouching in a virtual environment. The performance evaluation shows that the proposed VR simulation system is able to provide complete and substantial information throughout the training process, including detection, estimation, and monitoring capabilities.

Keywords: virtual reality, body area network, training simulator

1. Introduction

In recent years, since virtual reality (VR) training simulators allow soldiers to be trained with no risk of exposure to real situations, the development of a cost-effective virtual training environment is critical for training infantry squads [1,2,3]. This is because if soldiers do not develop and sustain tactical proficiency, they will not be able to react in a quickly evolving battlefield. To create a VR military simulator that integrates immersion, interaction, and imagination, the issue of how to use VR factors (e.g., VR engine, software and database, input/output devices, and users and tasks) is an important one. The VR simulator is able to integrate the terrain of any real place into the training model, and the virtual simulation trains soldiers to engage targets while working as a team [4,5].

With a head-mounted display (HMD), the key feature used in VR technology, soldiers are immersed in a complex task environment that cannot be replicated in any training areas. Visual telescopes are positioned in front of the eyes, and the movement of the head is tracked by micro electro mechanical system (MEMS) inertial sensors. Gearing up with HMDs and an omnidirectional treadmill (ODT), soldiers can perform locomotive motions without risk of injury [6,7,8]. Note that the above systems do not offer any posture or gesture interactions between the soldiers and the virtual environment. To address this problem, the authors of [9,10,11,12,13,14,15,16,17] proposed an action recognition method based on multiple cameras. To tackle the occlusion problem, the authors mostly used Microsoft Kinect to capture color and depth data from the human body, which requires multiple devices arranged in a specific pattern for the recognizing different human actions. Therefore, the above methods are not feasible in a real-time virtual environment for military training.

To overcome the limitations of the real-time training system, the dismounted soldier training system (DSTS) was developed [18,19,20,21]. In the DSTS, soldiers wear special training suits and HMDs, and stand on a rubber pad to create a virtual environment. However, the inertial sensors of training suits can only recognize simple human actions (e.g., standing and crouching). When soldiers would like to walk or run in the virtual environment, they have to control small joysticks and function keys on simulated rifles, which are not immersive for locomotion. Thus, in a training situation, soldiers will not be able to react quickly when suddenly encountering enemy fire. As a result, though the DSTS provides a multi-soldier training environment, it has weaknesses with respect to human action recognition. Therefore, in order to develop a training simulator that is capable of accurately capturing soldiers’ postures and movements and is less expensive and more effective for team training, body area networks (BANs) represent an appropriate bridge for connecting physical and virtual environments [22,23,24,25,26], which contain inertial sensors like accelerometers, e-compasses and gyroscopes to capture human movement.

Xsens Co. have been developing inertial BANs to track human movement for several years. Although the inertial BAN, called the MVN system, is able to capture human body motions wirelessly without using optical cameras, the 60 Hz system update rate is too slow to record fast-moving human motion. The latest Xsens MVN suit solved this problem, reaching a system update rate of 240 Hz. However, the MVN suit is relatively expensive in consumer markets. In addition, it is difficult to support more than four soldiers in a room with the MVN system, since the wireless sensor nodes may have unstable connections and high latency. On the other hand, since the Xsens MVN system is not an open-source system, the crucial algorithm of the inertial sensors does not adaptively allow the acquisition by and integration with the proposed real-time program. Moreover, the MVN system is only a motion-capture BAN and is not a fully functional training simulator with inertial BANs [26].

For the above-mentioned reasons, this paper proposes a multi-player training simulator that immerses infantry soldiers in the battlefield. The paper presents a technological solution for training infantry soldiers. Fully immersive simulation training provides an effective way of imitating real situations that are generally dangerous, avoiding putting trainees at risk. To achieve this, the paper proposes an immersive system that can immediately identify human actions. Training effectiveness in this simulator is highly remarkable in several respects:

Cost-Effective Design: We designed and implemented our own sensor nodes, such that the fundamental operations of the inertial sensors could be adaptively adjusted for acquisition and integration. Therefore, it has a competitive advantage in terms of system cost.

System Capacity: Based on the proposed simulator, a six-man squad is able to conduct military exercises that are very similar to real missions. Soldiers hold mission rehearsals in the virtual environment such that leaders can conduct tactical operations, communicate with their team members, and coordinate with the chain of command.

Error Analysis: This work provides an analysis of the quaternion error and further explores the sensing measurement errors. Based on the quaternion-driven rotation, the measurement relation with the quaternion error between the earth frame and the sensor frame can be fully described.

System Delay Time: The update rate of inertial sensors is about 160 Hz (i.e., the refresh time of inertial sensors has a time delay of about 6 ms). The simulator is capable of training six men at a 60 Hz system update rate (i.e., the refresh time of the entire system needs about 16 ms), which is acceptable for human awareness with delay (≤40 ms).

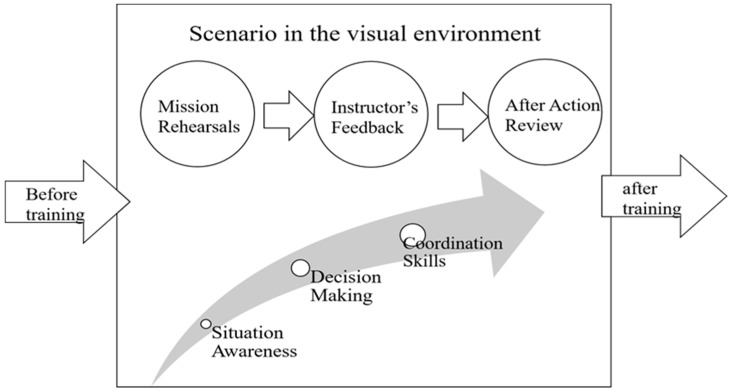

System Feedback: Instructors can provide feedback after mission rehearsals using the visual after action review (AAR) function in the simulator, which provides different views of portions of the action and a complete digital playback of the scenario, allowing a squad to review details of the action. Furthermore, instructors can analyze data from digital records and make improvements to overcome the shortcomings in the action (Figure 1). Accordingly, in the immersive virtual environment, soldiers and leaders improve themselves with respect to situational awareness, decision-making, and coordination skills.

Figure 1.

System training effectiveness process.

2. System Description

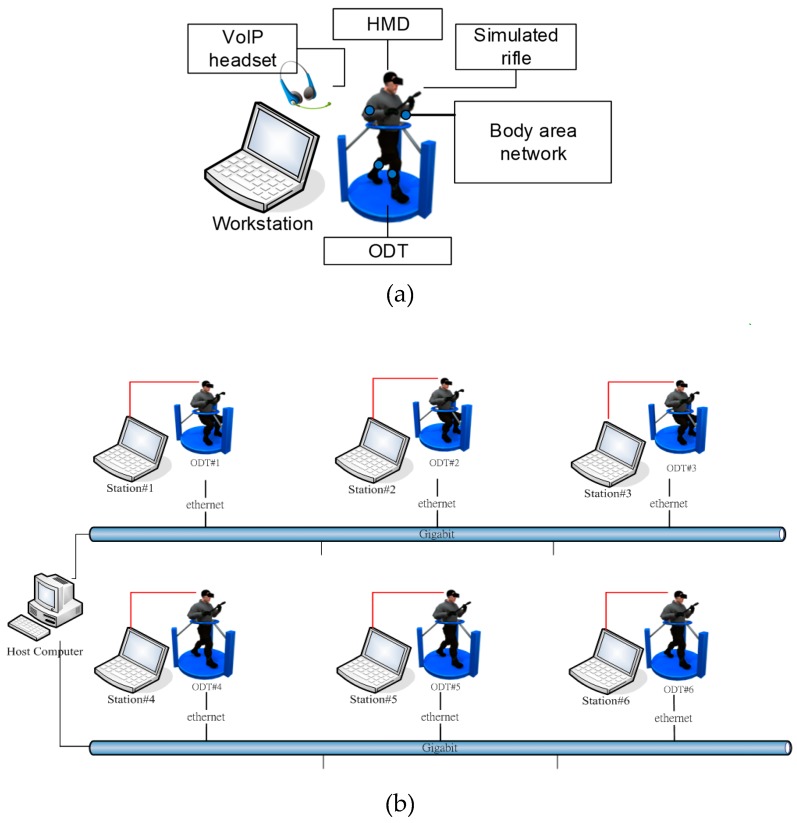

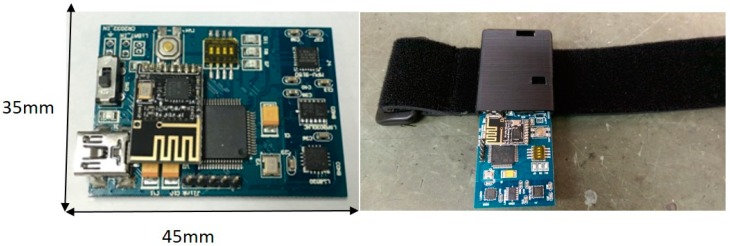

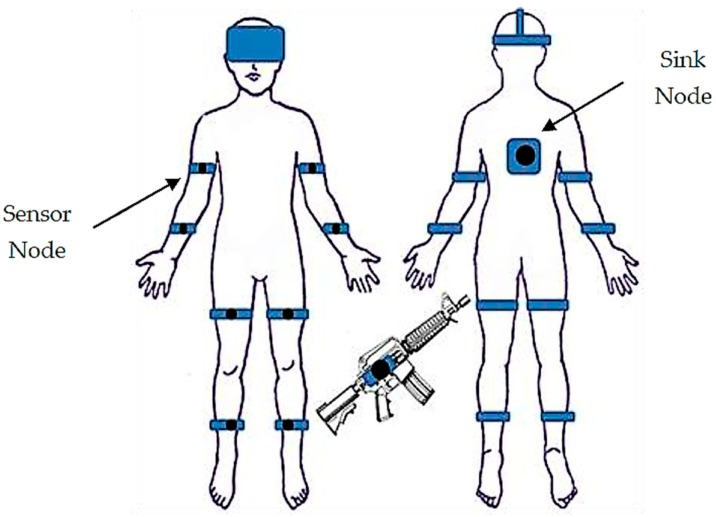

The proposed system, which is deployed in five-square-meter area (Figure 2), is a robust training solution that can support up to six soldiers. The virtual reality environment is implemented in C++ and DirectX 11. In addition to the virtual system, each individual soldier stands in the center of an ODT. The ODTs are customized hardware designed for the soldier training simulator, which is equipped with a high-performance workstation that generates the visual graphics for the HMD and provides voice communication functions based on VoIP technology. The dimensions of the ODT are 47” x47 “x78”, 187 lbs. The computing workstation on the ODT is equipped with a 3.1 GHz Intel Core i7 processor, 8 GB RAM, and an NVidia GTX 980M graphics card. The HMD is an Oculus Rift DK2, which has two displays with 960 × 1080 resolution per eye. The VoIP software is implemented based on Asterisk open-source PBX. Soldiers are not only outfitted with HMDs, but also equipped with multiple inertial sensors, which consist of wearable sensor nodes (Figure 3) deployed over the full body (Figure 4). As depicted in Figure 3, a sensor node consists of an ARM-based microcontroller and nine axial inertial measurement units (IMUs), which are equipped with ARM Cotex-M4 microprocessors and ST-MEMS chips. Note that these tiny wireless sensor nodes can work as a group to form a wireless BAN, which uses an ultra-low power radio frequency band of 2.4G.

Figure 2.

System architecture: single-soldier layout (a); multi-soldier network (b).

Figure 3.

Top view of sensor nodes (Left); the wearable sensor node (Right).

Figure 4.

Deployment of sensor nodes and the sink node.

2.1. Sensor Modeling

This subsection the sensors are modeled in order to formulate the orientation estimation problem. The measurement models for the gyroscope, accelerometer, and magnetometer are briefly discussed in the following subsections [27].

(1) Gyroscope: The measured gyroscope signal can be represented in the sensor frame s at time t using

| (1) |

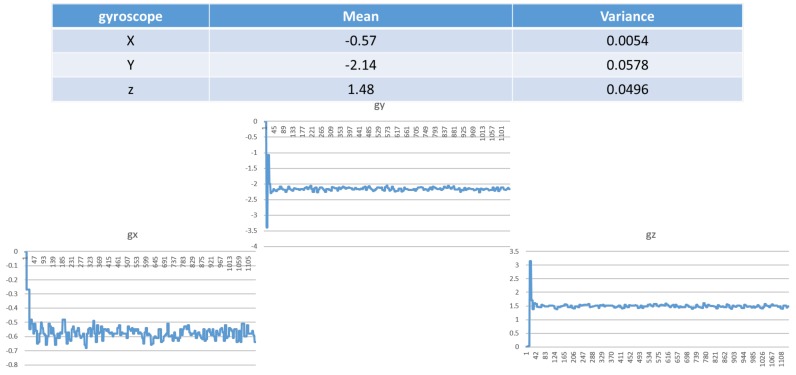

where is the true angular velocity, is the bias caused by low-frequency offset fluctuations, and the measurement error is assumed to be zero-mean white noise. As shown in Figure 5, the raw data of the measured gyroscope signals in the x, y and z directions includes true angular velocity, bias and error.

Figure 5.

Mean and variance of the gyroscope in the x, y and z directions.

(2) Accelerometer: Similar to the gyroscope signal, the measured accelerometer signal can be represented using

| (2) |

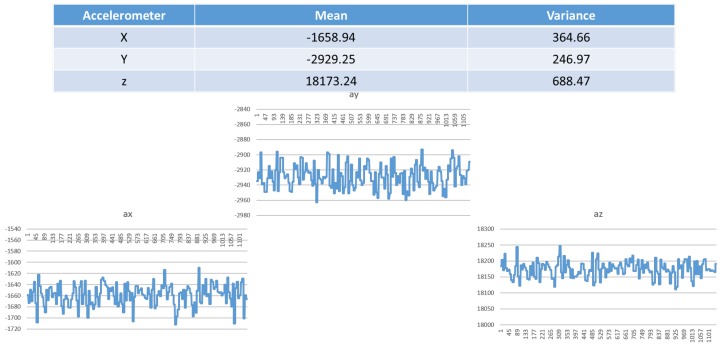

where is the linear acceleration of the body after gravity compensation, is the bias caused by low-frequency offset fluctuations, and the measurement error is assumed to be zero-mean white noise. As shown in Figure 6, the raw data of the measured accelerometer signals in the x, y and z directions includes true linear acceleration, offset and error.

Figure 6.

Mean and variance of the accelerometer in the x, y and z directions.

(3) Magnetometer: For the magnetometer, the measured signal is often modeled as the sum of the earth’s magnetic field and noise , which yields

| (3) |

As shown in Figure 7, the raw data of the measured magnetometer signals in the x, y and z directions includes the earth’s magnetic field, offset and error.

Figure 7.

Mean and variance of the magnetometer in the x, y and z directions.

2.2. Sensor Calibration

Since the accuracy of the orientation estimate depends heavily on the measurements, the null point, and the scale factor of each axis of the sensor, a calibration procedure should be performed before each practical use. To this end, the sensor calibration is described as follows:

Step 1: Given a fixed gesture, we measure the sensing data (i.e., the raw data) and calculate the measurement offsets.

Step 2: Remove the offset and normalize the modified raw data to the maximum resolution of the sensor’s analog-to-digital converter. In this work, the calibrated results (CR) of the sensors are described by Equations (4)–(6).

Table 1 shows the means of the calibrated results in the x, y and z directions of sensor nodes, which include the accelerometer, the magnetometer, and the gyroscope. Note that the inertial sensor signals (e.g., , , and ) are measured without movement, and that a small bias or offset (e.g., or or ) in the measured signals can therefore be observed. In general, the additive offset that needs to be corrected for before calculating the estimated orientation is very small. For instance, the additive offset would be positive or negative in the signal output (e.g., the average degree/sec and degree/sec) without movement.

| (4) |

| (5) |

| (6) |

Table 1.

The calibrated results of the sensor nodes.

| x | 0.95 | 0.98 | 0.96 |

| y | 0.97 | 0.99 | 0.96 |

| z | 0.99 | 0.98 | 0.94 |

After bias error compensation for each sensor, the outputs of the sensors represented in (1)–(3) can be rewritten as , , and , and they are then applied as inputs for the simulation system. In particular, the proposed algorithm can be good at handling zero-mean white noise. After the completion of modeling and calibration of the sensor measurements from the newly developed MARG, all measurements are then represented in the form of quaternions as inputs of the proposed algorithm.

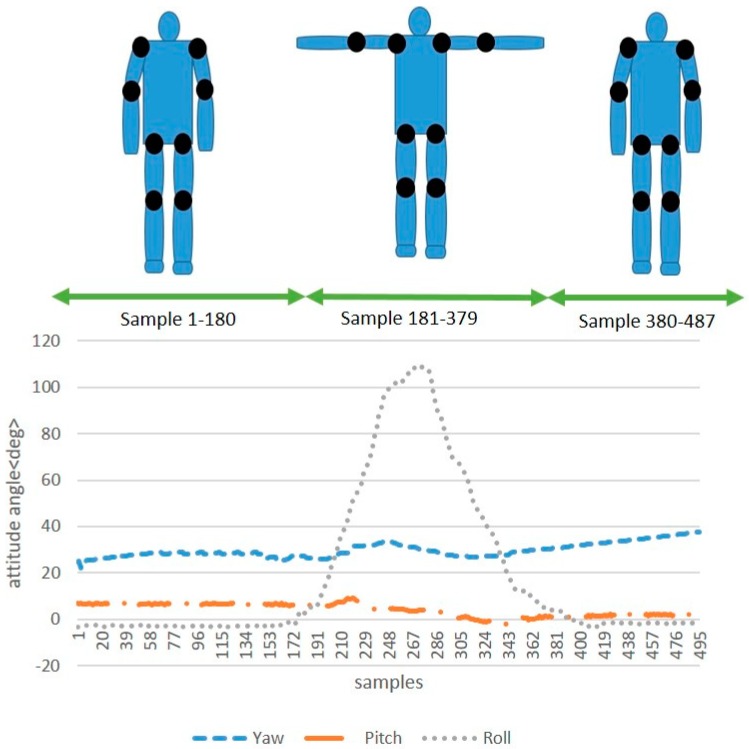

As shown in Figure 8, a typical run of the attitude angles of a sensor node placed on the upper arm when standing in a T-pose is recorded. In the first step, we stand with the arms naturally at the sides. Then, we stretch the arms horizontally with thumbs forward, and then we move back to the first step. This experiment shows that the sensor node is capable of tracking the motion curve directly.

Figure 8.

The attitude angles of a sensor node placed on the upper arm when standing in a T-pose.

2.3. Information Processing

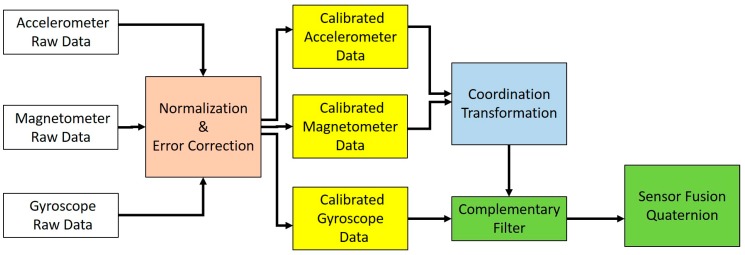

As mentioned above, human action recognition is crucial to developing an immersive training simulator. The wireless BAN is able to instantaneously track the action of the skeleton of a soldier. The microcontroller of the sensor node acquires the raw data from the accelerometer, gyroscope and magnetometer through SPI and I2C interfaces. The measurements of the accelerometer, gyroscope and magnetometer are contaminated by errors of scale factor and bias, resulting in a large estimation error during sensor fusion. Thus, a procedure for normalization and error correction is needed for the sensor nodes to ensure the consistency of the nine-axis data (Figure 9). After that, the calibrated accelerometer and magnetometer data can be used to calculate the orientation and rotation. Estimation based on the accelerometer does not include the heading angle, which is perpendicular to the direction of gravity. Thus, the magnetometer is used to measure the heading angle. the Euler angles are defined as follows:

Figure 9.

The diagram shows the process of sensor fusion.

Let Xacc, Yacc, Zacc be the accelerometer components. We have:

| (7) |

| (8) |

Let Xmag, Ymag, Zmag be the magnetometer components. We have:

| (9) |

| (10) |

| (11) |

Accordingly, the complementary filter output (using gyroscope) is

| (12) |

To mitigate the accumulated drift from directly integrating the linear angular velocity of the gyroscope, the accelerometer and magnetometer are used as aiding sensors to provide the vertical and horizontal references for the Earth. Moreover, a complementary filter [28,29] is able to combine the measurement information of an accelerometer, a gyroscope and a magnetometer, offering big advantages in terms of both long-term and short-term observation. For instance, an accelerometer does not drift over the course of a long-term observation, and a gyroscope is not susceptible to small forces during a short-term observation. The W value of the complementary filter in Equation (12) is the ratio in which inertial sensor data is fused, which can then be used to compensate for gyroscope drift by using the accelerometer and magnetometer measurements. We always set the W value to 0.9, or probably even higher than 0.9. Therefore, the complementary filter provides an accurate orientation estimation.

2.4. Communication and Node Authentication Procedures

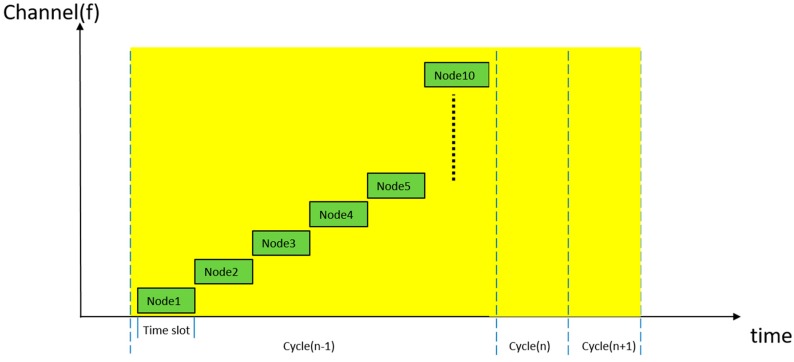

To tackle the problem of interference and to reduce the bit error rate (BER) of the wireless data transmission between the sensor nodes and the sink node, communication mechanisms can be applied to build a robust wireless communication system [30,31]. The communication technique employed for communication between the sensor nodes and the sink node is frequency hopping spread spectrum (FHSS). The standard FHSS modulation technique is able to avoid interference between different sensor nodes in the world-wide ISM frequency band, because there are six BANs in total, all of which are performing orientation updates in a small room. Moreover, the sensor nodes’ transmission of data to the sink node is based on packet communication.

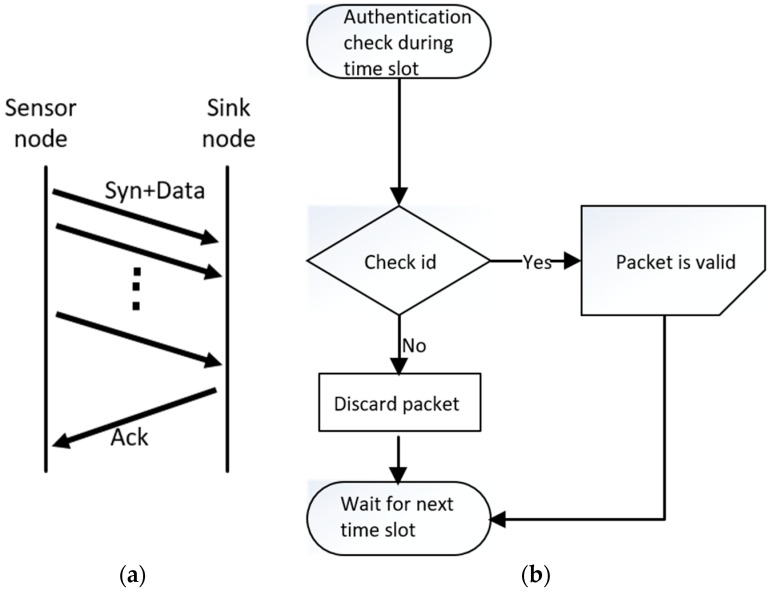

In the beginning, sensor nodes send packets, which include orientation and rotation data. When the sink node receives a packet from a sensor node, an acknowledgement packet will be transmitted immediately (Figure 10). If the acknowledgement fails to arrive, the sensor node will retransmit the packet, unless the number of retries exceeds the retransmission limit. When the sink node receives packets in operation mode, it will check the payload of the packets. As shown in Figure 11, if the payload is equal to the sensor node’s unique identification information, the sink node will accept the packet and scan the next sensor node’s channel during the next time slot. The ten wireless sensor node channels operate at a frequency range of 2400–2525 GHz.

Figure 10.

Operation mode during each time slot. (a) Step 1: automatic packet synchronization; (b) Step 2: identification check on valid packets by the sink node.

Figure 11.

The diagram shows that sensor nodes communicate with sink node in two domains.

2.5. System Intialization

The sink node is deployed on the back of the body and collects the streaming data from multiple wireless sensor nodes. Afterwards, the streaming data from the sensor nodes is transmitted to the workstation via Ethernet cables, modeling the skeleton of the human body along with the structure of the firearm (Figure 12). Table 2 describes the data structure of a packet, which is sent by a sensor node to the sink node in every ODT. Table 3 shows the integrated data that describes the unique features of each soldier in the virtual environment. The description provides the appearance characteristics, locomotion, and behaviors of each soldier [32,33].

Figure 12.

A fully equipped soldier.

Table 2.

Data structure of a packet.

| Header | Data to Show Packet Number | 8 bits |

|---|---|---|

| Payload | Bone data size | 144 bits |

| Total data length | ||

| Soldier No. | ||

| T-Pose status | ||

| Sensor nodeID | ||

| Total Bones of a skeleton | ||

| Yaw value of the bone | ||

| Pitch value of the bone | ||

| Roll value of the bone | ||

| Tail | Data to show end of packet | 8 bits |

Table 3.

Description of the features of each soldier.

| DATA | UNIT | RANGE |

|---|---|---|

| Soildier no. | N/A | 0~255 |

| Friend or Foe | N/A | 0~2 |

| exterbal Control | N/A | 0/1 |

| Team no. | N/A | 0~255 |

| Rank | N/A | 0~15 |

| Appearance | N/A | 0~255 |

| BMI | Kg/Meter2 | 18~32 |

| Health | Percentage | 0~100 |

| Weapon | N/A | 0~28 |

| Vechicle | N/A | 0~5 |

| Vechicle Seat | N/A | 0~5 |

| Position X | Meter | N/A |

| Position Y | Meter | N/A |

| Position Z | Meter | N/A |

| Heading | Degree | −180~180 |

| Movement | N/A | 0~255 |

| Behavior | N/A | 0~255 |

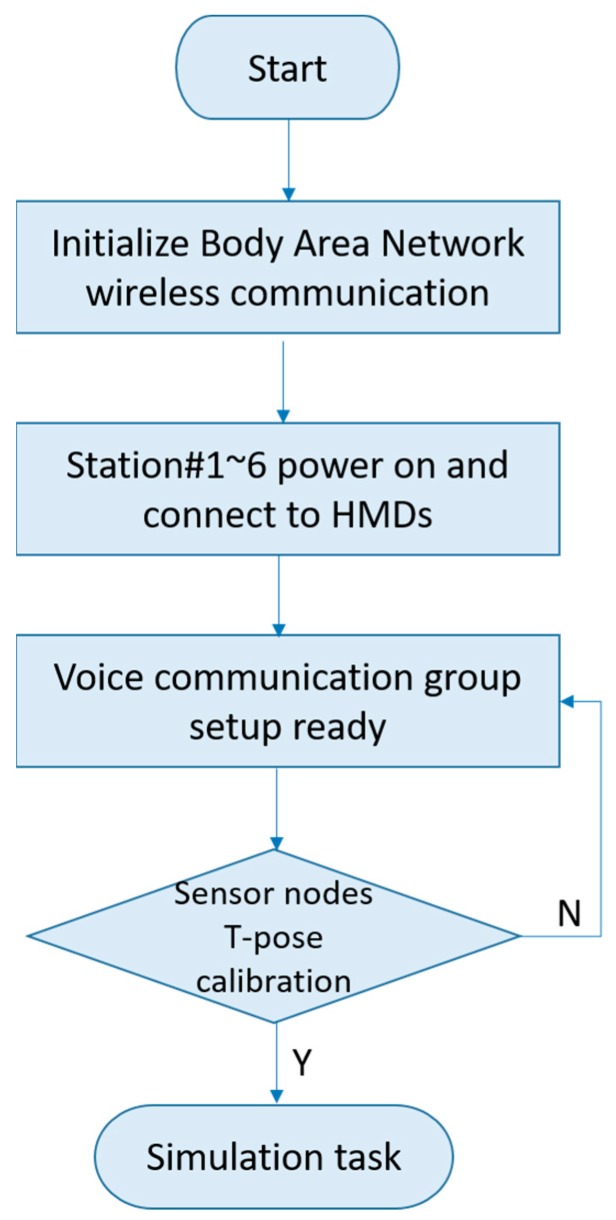

For sensor nodes, the data stream reported to the sink node is interpreted as the movement-based data structures of a skeleton. Each wearable sensor node of the skeleton has a unique identification information during the initialization phase. As a result, the sink node can distinguish which sensor nodes are online. Accordingly, when turning the power on, the HMDs on the soldiers are automatically connected to the workstations, and the voice communication group setting is immediately ready. Please note that the T-pose calibration is performed in sensor node n of a skeleton for initializing the root frame, which is given by

| (13) |

where is the reading of sensor node n in the modified T-pose and is the new body frame from the initial root frame .

Although sensor nodes are always worn on certain positions of a human body, the positions of sensor nodes may drift due to the movements occurring during the training process. Hence, the T-pose calibration procedure can be applied to estimate the orientation of the sensors. After that, the system is prepared to log in for simulation tasks. The system initialization flow diagram is shown in Figure 13.

Figure 13.

System initialization flow diagram.

3. Quaternion Representation

This section outlines a quaternion representation of the orientation of the sensor arrays. Quaternions provide a convenient mathematical notation for representing the orientation and rotation of 3D objects because quaternion representation is more numerically stable and efficient compared to rotation matrix and Euler angel representation. According to [34,35,36], a quaternion can be thought of as a vector with four components,

| (14) |

as a composite of a scalar and ordinary vector. The quaternion units , , are called the vector part of the quaternion, while is the scalar part. The quaternion can frequently be written as an ordered set of four real quantities,

| (15) |

Denote as the orientation of the earth frame ue with respect to the sensor frame us. The conjugate of the quaternion can be used to represent an orientation by swapping the relative frame, and the sign * denotes the conjugate. Therefore, the conjugate of can be denoted as

| (16) |

Moreover, the quaternion product can be used to describe compounded orientations, and their definition is based on the Hamilton rule in [37]. For example, the compounded orientation can be defined by

| (17) |

where denotes the orientation of the earth frame ue with respect to the frame uh.

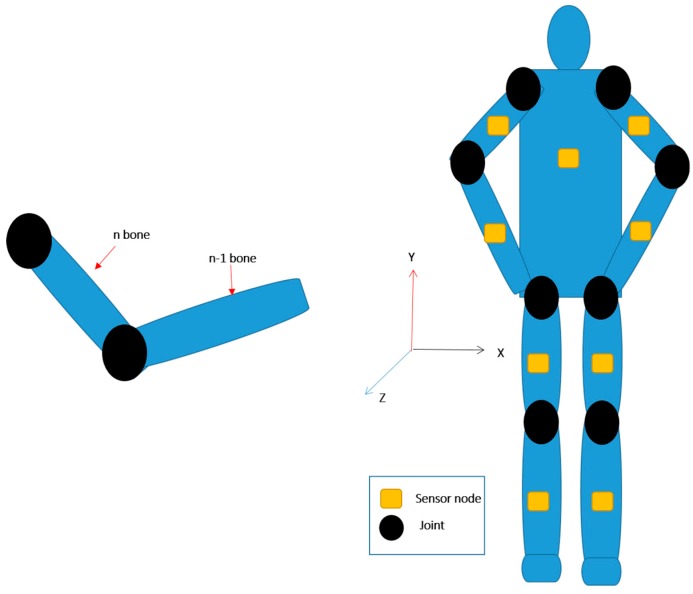

A human body model consists of a set of body segments connected by joints. For upper limbs and lower limbs, kinematic chains are modeled that branch out around the torso. The kinematic chain describes the relationship between rigid body movements and the motions of joints. A forward kinematics technique, which was introduced for the purposes of robotic control, is used to configure each pair of adjacent segments. In the system, the aim of building human kinematic chains is to determine the transformation matrix of a human body from the first to the last segments and to find the independent configuration for each joint and the relationship with the root frame.

Thus, the rotation matrix used for orientation from sensor node n-1 to sensor node n is given by

| (18) |

Figure 14 shows the simplified segment biomechanical model of the human body. The kinematics of segments on which no inertial sensors are attached (e.g., hand, feet, toes) are considered to be rigid connections between neighboring segments. The transformation matrix is defined as

| (19) |

where is the translation matrix from sensor frame to body frame. According to [37,38], therefore, the transformation matrix of a human body from the first segment to the n-th segment is

| (20) |

Figure 14.

The kinematic chain of a human body.

4. Performance Analysis

The analysis focuses on the quaternion error and further explores the sensing measurement errors. Based on the quaternion-driven rotation, the measurement relation with the quaternion error between the earth frame and sensor frame can be further described.

4.1. Rotation Matrix

According to [36], given a unit quaternion q = + i + j + k, the quaternion-driven rotation can be further described by the rotation matrix R, which yields

| (21) |

Let be an estimate of the true attitude quaternion . The small rotation from the estimated attitude, , to the true attitude is defined as . The error quaternion is small but non-zero, due to errors in the various sensors. The relationship is expressed in terms of quaternion multiplication as follows:

| (22) |

Assuming that the error quaternion, , is to represent a small rotation, it can be approximated as follows:

| (23) |

Noting that the error quaternion is a perturbation of the rotation matrix, and the vector components of are small, the perturbation of the rotation matrix R in Equation (21) can be written as:

| (24) |

Equation (22) relating and q can be written as

| (25) |

is the estimate of the rotation matrix or the equivalent of . Now, considering the sensor frame and the earth frame , we have

| (26) |

Thus, the measurement relation for the quaternion error is obtained:

| (27) |

Accordingly, given the error quaternion and the sensor frame , the perturbation of the earth frame can be described. The quantitative analysis of the error quaternion is detailed in Section 6.1.

4.2 Error Analysis

The analysis in Section 4.1 focuses on the quaternion error. Here we further explore the sensing measurement errors, which consist of the elements of the error quaternion.

Now we associate a quaternion with Euler angles, which yields

| (28) |

Denote the pitch angle measurement as , where is the true pitch angle information and . To simplify the error analysis, assume the rotation errors are neglected in roll angle and heading angle measurements. Let and . Accordingly, considering the measurement error in the pitch angle, the quaternion can be rewritten as

| (29) |

Assuming that the measurement error in the pitch angle is small, we obtain

| (30) |

| (31) |

According to Equation (29), the quaternion with measurement error in the pitch angle can be further approximated by

| (32) |

Note that, given a measurement error in the pitch angle, and the roll, pitch, and heading angle measurements, the error quaternion can be approximately derived by Equation (32). Therefore, the measurement relation with the quaternion error between the earth frame and the sensor frame can be further described using Equation (27).

5. System Operating Procedures

To evaluate the effectiveness and capability of the virtual reality simulator for team training, we designed a between-subjects study. In the experiments, the impacts of three key factors on system performance are considered: training experience, the group size of the participants, and information exchange between the group members. The experimental results are detailed as follows.

5.1. Participants

The experiment involved 6 participants. No participants had ever played the system before. Half of the participants were volunteers who had done military training in live situations, while the other half had never done live training. The age of the participants ranged from 27 to 35.

5.2. Virtual Environment

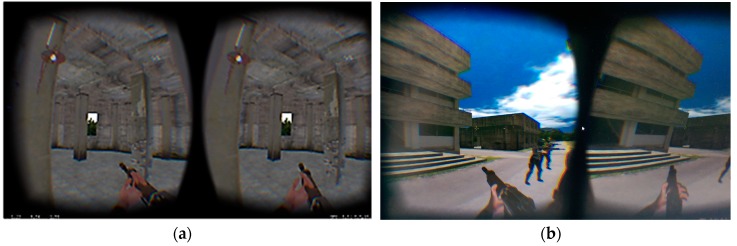

The immersive environment was designed for a rescue mission in an enemy-held building (Figure 15). In addition, three hostages were being guarded on the top floor of a three-storied building which was controlled by 15 enemies. To ensure that the virtual environment was consistent with an actual training facility, we simulated a real military training site, including the urban street, the enemy-held building, and so on. All enemies controlled automatically by the system were capable of shooting, evading attacks, and team striking. When participants were immersed in the virtual environment, they could interact with other participants not only through gesture tracking, but also through VoIP communication technology.

Figure 15.

Virtual environment on a HMD. (a) An indoor view. (b) An outdoor view.

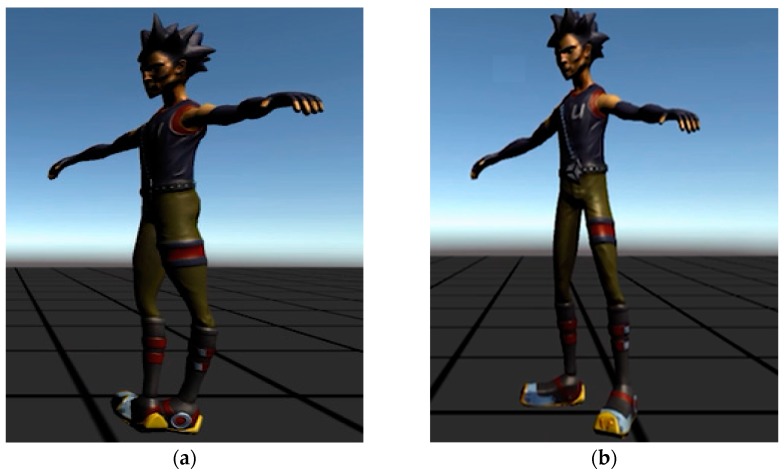

As mentioned above, sensing measurement errors greatly affect the sensor nodes, which are attached to a human body. The integration of the inertial sensors, including sensor signals and drift errors, is performed on the basis of the kinematics of the human body. Therefore, sensing errors will be accumulated in quaternion form. As shown in Figure 16, the sensing measurement errors are calibrated when the T-pose calibration is performed. In the first step, we normalize the accelerometer, gyroscope and magnetometer in all of the sensor nodes and compensate for bias errors. In the following step, a complementary filter is used to mitigate the accumulated errors based on the advantages of long-term observation and short-term observation respectively. In the final step, T-pose calibration is performed to align the orientation of the sensor nodes with respect to the body segments, after which the sensor node is able to capture body movement accurately in the virtual environment.

Figure 16.

Sensing measurement errors of the sensor nodes were calibrated when the T-pose calibration was performed. (a) All sensor nodes were calibrated well during the T-pose procedure. (b) One sensor node attached to the right thigh was not calibrated well, and a sensing error was derived in the pitch direction.

5.3. Procedure

The purpose of the experiment is to evaluate the system performance. Participants follow the same path through the virtual environment in the experiment. The time-trial mission starts when the participants begin from the storm gate. Moreover, the time taken by the participants to kill all of the enemies who guard the three hostages on the top floor will be recorded. All participants control simulated M4 rifles, and the enemies control virtual AK47 rifles. All of the weapons have unlimited ammo. Under these experimental conditions, the participants’ death rate (hit rate) is recorded for data analysis by the experimenters. Moreover, if all participants are killed before they complete the mission, the rescue task is terminated, and the time will not be recorded for the experiment.

6. Experimental Results

In order to assess the system performance, four sets of experiments were performed to explore the impact of quaternion error and the training experience on mission execution and management.

6.1. Error Analysis

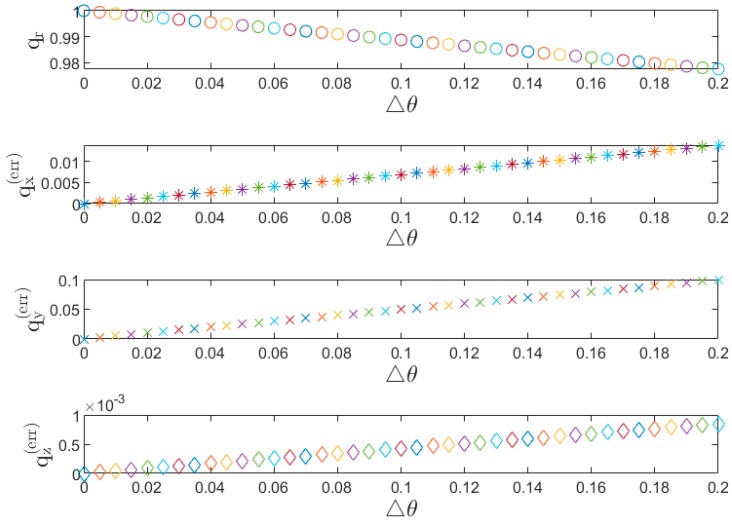

In the first set of simulations, we explored the characteristic of the error quaternion . With reference to the analysis in Section 4.2, the rotation errors are assumed to be negligible in the roll angle and heading angle measurements, and the measurement error in the pitch angle is considered to be . With angle information (e.g., the heading angle , the roll angle , the pitch angle ) and Figure 17 presents the behavior of the error quaternion when varying the measurement error of the pitch angle. Note that, given , the vector parts of (i.e., ) are approximately linear with respect to the which can provide a sensible way of describing the error behaviors of rotation X, rotation Y, and rotation Z. According to Equation (27), given the quaternion error and the sensor frame , the perturbation of the earth frame can be described. As shown in Figure 17, when the measurement error in the pitch angle is small, the small vector components of lead to a small perturbation of the earth frame In contrast, as the measurement error in the pitch angle increases, the perturbation in the Y-axis increases, which results in a larger error component in the Y-axis (e.g., with , = 0.0004).

Figure 17.

The scalar part and the vector part of the quaternion error for a small rotation of measurement error of pitch angle.

6.2. Simulated Training Performance

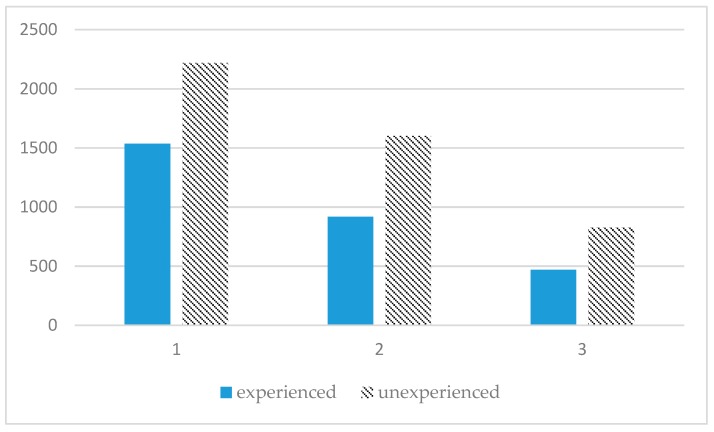

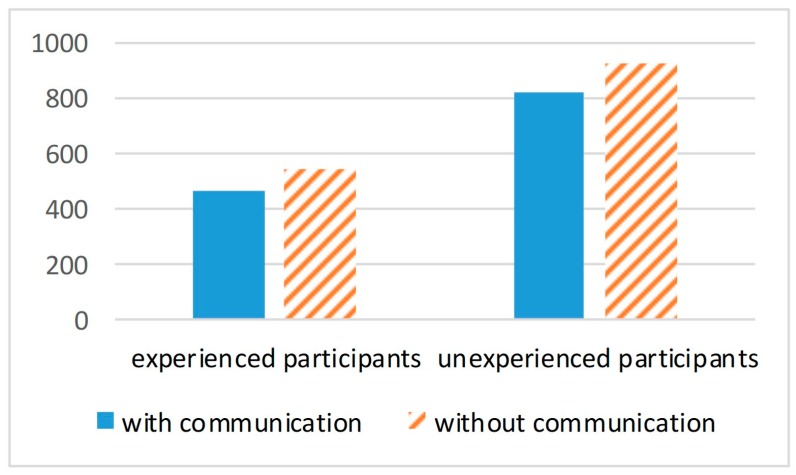

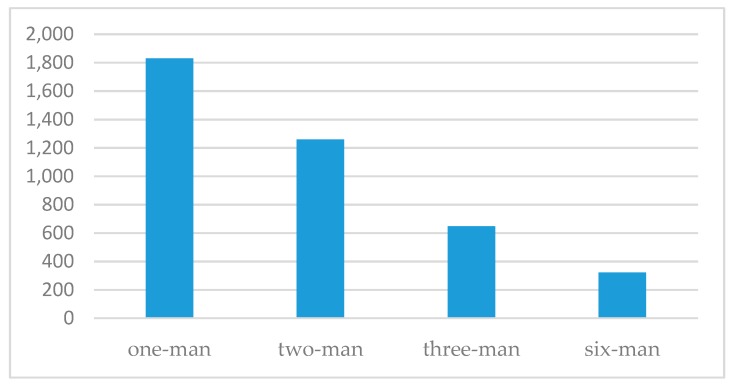

Figure 18 shows a snapshot of the proposed system. All three individual participants, who had done the same military training at the actual training site, successfully completed the rescue mission, with times of 22′16″, 25′40″, 28′51″, respectively (mean = 25′36″, standard deviation = 3′18″). However, of the three participants who had never done the same training and started the mission individually, only one participant completed the rescue mission, with a time of 36′59″, and the other two participants were killed by enemies before completing the rescue task. The three two-man teams who had been trained in the live situation completed the rescue mission with times of 11′28″, 18′19″, 16′5″, respectively (mean = 15′17″, standard deviation = 3′30″). However, the three two-man teams who had never done the same training also completed the rescue mission, with times of 25′19″, 28′33″, 26′12″, respectively (mean = 26′41″, standard deviation = 1′40″). Finally, the three-man team that had live training experience completed rescue mission with a time of 7′49″. On the other hand, the three-man team that had never done the same training completed the rescue mission with a time of 13′47″. The results of experienced and unexperienced participants’ mean times in the experiment are shown in Figure 19. We also evaluated another situation, in which two subjects in the three-man groups completed the mission without the VoIP communication function. The mean time in this experiment increased by 1′26″ (Figure 20), which implies that communication and information processing can improve the performance for rescue missions.

Figure 18.

Snapshot of the system.

Figure 19.

Means of experienced participants and unexperienced participants under various experimental conditions (horizontal axis: single-man, two-man, three-man; vertical axis unit: seconds).

Figure 20.

Mean of three-man teams with voice communication/without voice communication under the experimental conditions (horizontal axis: experienced/unexperienced participants; vertical axis unit: seconds).

Finally, we evaluated a six-man team of all participants in the rescue task, because standard deployment for a real live mission is a six-man entry team. The mission time decreased by 2′28″ with respect to the three-man experiment with experienced participants. The experimental results for mean times with different numbers of participants are shown in Figure 21. In addition, death rate (hit rate) revealed another difference between single and multiple participants. From the results, the mean of death rate (hit rate) was 1.5 shots/per mission when a single participant interacted with the system. However, the mean of death rate decreased to 0.38 shots/per mission when multiple participants interacted with the system.

Figure 21.

Mean times with different numbers of participants under the experimental conditions (horizontal axis: number of participants; vertical axis unit: seconds).

6.3. Discussion

When the participants executed the rescue mission, the activities involved in the experiment included detecting enemies, engaging enemies, moving inside the building and rescuing the hostages. The results reveal significant differences in several respects, including experience, quantity, and communication, and show that compared with the inexperienced participants, all experienced participants who had done the same training in a live situation took less time to complete the rescue mission. The wireless BANs of the participants are able to work accurately in the virtual environment for experienced participants. Tactical skills (e.g., moving through built-up areas, reconnoiter area, reacting to contact, assaulting, and clearing a room) absolutely require team work, demanding that wireless BANs interact with each other perfectly in terms of connection and accuracy. Without proper BANs, participants may feel mismatched interaction with their virtual avatars, and may feel uncomfortable or sick in the virtual environment.

The experimental results show that a larger sized group of participants took less time to complete the rescue mission than a smaller sized group of participants. Moreover, a group of multiple participants had a lower death rate compared with that of a single participant. This is due to the fact that, as the group size of participants increases, team movement is more efficient and fire power is greater in the virtual environment, which is similar to a real world mission. Furthermore, when the VoIP communication function was disabled, whether participants were experienced or not, the rescue mission time in the experiment consequently increased. As we know, in the real rescue mission, team coordination is important in the battlefield. In the system, all participants are able to interact with each other through hand signal tracking and voice communication. As a result, multiple-user training may become a key feature of the system.

7. Conclusions

In this paper, we have addressed problems arising when building an infantry training simulator for multiple players. The immersive approach we proposed is an appropriate solution that is able to train soldiers in several VR scenarios. The proposed simulator is capable of training six men at a system update rate of 60 Hz (i.e., the refresh time of the entire system takes about 16 ms), which is acceptable for human awareness with delay (≤ 40 ms). Compared with the expensive Xsens MVN system, the proposed simulator has a competitive advantage in terms of system cost. For future work, we intend to develop improved algorithms to deal with accumulated sensing errors and environment noise on wireless BANs. Consequently, the system can develop finer gestures for military squad actions and enrich the scenario simulation for different usages in military training. The system is expected to be applied in different kind of fields and situations.

Author Contributions

Y.-C.F. and C.-Y.W. conceived and designed the experiments; Y.-C.F. performed the experiments; Y.-C.F. analyzed the data; Y.-C.F. and C.-Y.W. wrote the paper.

Funding

This research was funded by the Ministry of Science and Technology of Taiwan under grant number MOST-108-2634-F-005-002, and by the “Innovation and Development Center of Sustainable Agriculture” from The Featured Areas Research Center Program within the framework of the Higher Education Sprout Project by the Ministry of Education (MOE) in Taiwan.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1.Dimakis N., Filippoupolitis A., Gelenbe E. Distributed Building Evacuation Simulator for Smart Emergency Management. Comput. J. 2010;53:1384–1400. doi: 10.1093/comjnl/bxq012. [DOI] [Google Scholar]

- 2.Knerr B.W. Immersive Simulation Training for the Dismounted Soldier. Army Research Inst Field Unit; Orlando, FL, USA: 2007. No. ARI-SR-2007-01. [Google Scholar]

- 3.Lele A. Virtual reality and its military utility. J. Ambient Intell. Hum. Comput. 2011;4:17–26. doi: 10.1007/s12652-011-0052-4. [DOI] [Google Scholar]

- 4.Zhang Z., Zhang M., Chang Y., Aziz E.-S., Esche S.K., Chassapis C. Cyber-Physical Laboratories in Engineering and Science Education. Springer; Berlin/Heidelberg, Germany: 2018. Collaborative Virtual Laboratory Environments with Hardware in the Loop; pp. 363–402. [Google Scholar]

- 5.Stevens J., Mondesire S.C., Maraj C.S., Badillo-Urquiola K.A. Workload Analysis of Virtual World Simulation for Military Training; Proceedings of the MODSIM World; Virginia Beach, VA, USA. 26–28 April 2016; pp. 1–11. [Google Scholar]

- 6.Frissen I., Campos J.L., Sreenivasa M., Ernst M.O. Enabling Unconstrained Omnidirectional Walking through Virtual Environments: An Overview of the CyberWalk Project. Springer; New York, NY, USA: 2013. pp. 113–144. Human Walking in Virtual Environments. [Google Scholar]

- 7.Turchet L. Designing presence for real locomotion in immersive virtual environments: An affordance-based experiential approach. Virtual Real. 2015;19:277–290. doi: 10.1007/s10055-015-0267-3. [DOI] [Google Scholar]

- 8.Park S.Y., Ju H.J., Lee M.S.L., Song J.W., Park C.G. Pedestrian motion classification on omnidirectional treadmill; Proceedings of the 15th International Conference on Control, Automation and Systems (ICCAS); Busan, Korea. 13–16 October 2015. [Google Scholar]

- 9.Papadopoulos G.T., Axenopoulos A., Daras P. Real-Time Skeleton-Tracking-Based Human Action Recognition Using Kinect Data; Proceedings of the MMM 2014; Dublin, Ireland. 6–10 January 2014. [Google Scholar]

- 10.Cheng Z., Qin L., Ye Y., Huang Q., Tian Q. Human daily action analysis with multi-view and color-depth data; Proceedings of the European Conference on Computer Vision; Florence, Italy. 7–13 October 2012; Berlin/Heidelberg, Germany: Springer; 2012. [Google Scholar]

- 11.Kitsikidis A., Dimitropoulos K., Douka S., Grammalidis N. Dance analysis using multiple kinect sensors; Proceedings of the 2014 International Conference on Computer Vision Theory and Applications (VISAPP); Lisbon, Portugal. 5–8 January 2014; [Google Scholar]

- 12.Kwon B., Kim D., Kim J., Lee I., Kim J., Oh H., Kim H., Lee S. Implementation of human action recognition system using multiple Kinect sensors; Proceedings of the Pacific Rim Conference on Multimedia; Gwangju, Korea. 16–18 September 2015. [Google Scholar]

- 13.Beom K., Kim J., Lee S. An enhanced multi-view human action recognition system for virtual training simulator; Proceedings of the 2016 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA); Jeju, Korea. 13–16 December 2016. [Google Scholar]

- 14.Liu T., Song Y., Gu Y., Li A. Human action recognition based on depth images from Microsoft Kinect; Proceedings of the 2013 Fourth Global Congress on Intelligent Systems; Hong Kong, China. 3–4 December 2013. [Google Scholar]

- 15.Berger K., Ruhl K., Schroeder Y., Bruemmer C., Scholz A., Magnor M.A. Marker-less motion capture using multiple color-depth sensors; Proceedings of the the Vision, Modeling, and Visualization Workshop 2011; Berlin, Germany. 4–6 October 2011. [Google Scholar]

- 16.Kaenchan S., Mongkolnam P., Watanapa B., Sathienpong S. Automatic multiple kinect cameras setting for simple walking posture analysis; Proceedings of the 2013 International Computer Science and Engineering Conference (ICSEC); Nakorn Pathom, Thailand. 4–6 September 2013. [Google Scholar]

- 17.Kim J., Lee I., Kim J., Lee S. Implementation of an Omnidirectional Human Motion Capture System Using Multiple Kinect Sensors. IEICE Trans. Fundam. 2015;98:2004–2008. doi: 10.1587/transfun.E98.A.2004. [DOI] [Google Scholar]

- 18.Taylor G.S., Barnett J.S. Evaluation of Wearable Simulation Interface for Military Training. Hum Factors. 2012;55:672–690. doi: 10.1177/0018720812466892. [DOI] [PubMed] [Google Scholar]

- 19.Barnett J.S., Taylor G.S. Usability of Wearable and Desktop Game-Based Simulations: A Heuristic Evaluation. Army Research Inst for the Behavioral and Social Sciences; Alexandria, VA, USA: 2010. [Google Scholar]

- 20.Bink M.L., Injurgio V.J., James D.R., Miller J.T., II . Training Capability Data for Dismounted Soldier Training System. Army Research Inst for the Behavioral and Social Sciences; Fort Belvoir, VA, USA: 2015. No. ARI-RN-1986. [Google Scholar]

- 21.Cavallari R., Martelli F., Rosini R., Buratti C., Verdone R. A Survey on Wireless Body Area Networks: Technologies and Design Challenges. IEEE Commun. Surv. Tutor. 2014;16:1635–1657. doi: 10.1109/SURV.2014.012214.00007. [DOI] [Google Scholar]

- 22.Alam M.M., Ben Hamida E. Surveying wearable human assistive technology for life and safety critical applications: Standards, challenges and opportunities. Sensors. 2014;14:9153–9209. doi: 10.3390/s140509153. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Bukhari S.H.R., Rehmani M.H., Siraj S. A Survey of Channel Bonding for Wireless Networks and Guidelines of Channel Bonding for Futuristic Cognitive Radio Sensor Networks. IEEE Commun. Surv. Tutor. 2016;18:924–948. doi: 10.1109/COMST.2015.2504408. [DOI] [Google Scholar]

- 24.Ambroziak S.J., Correia L.M., Katulski R.J., Mackowiak M., Oliveira C., Sadowski J., Turbic K. An Off-Body Channel Model for Body Area Networks in Indoor Environments. IEEE Trans. Antennas Propag. 2016;64:4022–4035. doi: 10.1109/TAP.2016.2586510. [DOI] [Google Scholar]

- 25.Seo S., Bang H., Lee H. Coloring-based scheduling for interactive game application with wireless body area networks. J. Supercomput. 2015;72:185–195. doi: 10.1007/s11227-015-1540-7. [DOI] [Google Scholar]

- 26.Xsens MVN System. [(accessed on 21 January 2019)]; Available online: https://www.xsens.com/products/xsens-mvn-animate/

- 27.Tian Y., Wei H.X., Tan J.D. An Adaptive-Gain Complementary Filter for Real-Time Human Motion Tracking with MARG Sensors in Free-Living Environments. IEEE Trans. Neural Syst. Rehabil. Eng. 2013;21:254–264. doi: 10.1109/TNSRE.2012.2205706. [DOI] [PubMed] [Google Scholar]

- 28.Euston M., Coote P., Mahony R., Kim J., Hamel T. A complementary filter for attitude estimation of a fixed-wing UAV; Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems; Nice, France. 22–26 September 2008. [Google Scholar]

- 29.Yoo T.S., Hong S.K., Yoon H.M., Park S. Gain-Scheduled Complementary Filter Design for a MEMS Based Attitude and Heading Reference System. Sensors. 2011;11:3816–3830. doi: 10.3390/s110403816. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Wu Y., Liu K.S., Stankovic J.A., He T., Lin S. Efficient Multichannel Communications in Wireless Sensor Networks. ACM Trans. Sens. Netw. 2016;12:1–23. doi: 10.1145/2840808. [DOI] [Google Scholar]

- 31.Fafoutis X., Marchegiani L., Papadopoulos G.Z., Piechocki R., Tryfonas T., Oikonomou G.Z. Privacy Leakage of Physical Activity Levels in Wireless Embedded Wearable Systems. IEEE Signal Process. Lett. 2017;24:136–140. doi: 10.1109/LSP.2016.2642300. [DOI] [Google Scholar]

- 32.Ozcan K., Velipasalar S. Wearable Camera- and Accelerometer-based Fall Detection on Portable Devices. IEEE Embed. Syst. Lett. 2016;8:6–9. doi: 10.1109/LES.2015.2487241. [DOI] [Google Scholar]

- 33.Ferracani A., Pezzatini D., Bianchini J., Biscini G., Del Bimbo A. Locomotion by Natural Gestures for Immersive Virtual Environments; Proceedings of the 1st International Workshop on Multimedia Alternate Realities; Amsterdam, The Netherlands. 16 October 2016. [Google Scholar]

- 34.Kuipers J.B. Quaternions and Rotation Sequences. Volume 66 Princeton University Press; Princeton, NJ, USA: 1999. [Google Scholar]

- 35.Karney C.F. Quaternions in molecular modeling. J. Mol. Graph. Model. 2007;25:595–604. doi: 10.1016/j.jmgm.2006.04.002. [DOI] [PubMed] [Google Scholar]

- 36.Gebre-Egziabher D., Elkaim G.H., Powell J.D., Parkinson B.W. A gyro-free quaternion-based attitude determination system suitable for implementation using low cost sensors; Proceedings of the IEEE Position Location and Navigation Symposium; San Diego, CA, USA. 13–16 March 2000. [Google Scholar]

- 37.Horn B.K.P., Hilden H.M., Negahdaripour S. Closed-form solution of absolute orientation using orthonormal matrices. JOSA A. 1988;5:1127–1135. doi: 10.1364/JOSAA.5.001127. [DOI] [Google Scholar]

- 38.Craig J.J. Introduction to Robotics: Mechanics and Control. Volume 3 Pearson/Prentice Hall; Upper Saddle River, NJ, USA: 2005. [Google Scholar]