Abstract

Whereas modern digital cameras use a pixelated detector array to capture images, single-pixel imaging reconstructs images by sampling a scene with a series of masks and associating the knowledge of these masks with the corresponding intensity measured with a single-pixel detector. Though not performing as well as digital cameras in conventional visible imaging, single-pixel imaging has been demonstrated to be advantageous in unconventional applications, such as multi-wavelength imaging, terahertz imaging, X-ray imaging, and three-dimensional imaging. The developments and working principles of single-pixel imaging are reviewed, a mathematical interpretation is given, and the key elements are analyzed. The research works of three-dimensional single-pixel imaging and their potential applications are further reviewed and discussed.

Keywords: single-pixel imaging, ghost imaging, compressive sensing, three-dimensional imaging, time-of-flight, depth mapping, stereo vision

1. Introduction

Image retrieval has been an important research topic since the invention of cameras. In modern times, images are usually retrieved by forming an image with a camera lens and recording the image using a detector array. With the rapid development of complementary metal-oxide-semiconductor (CMOS) and charge-coupled devices (CCDs) driven by global market demands, digital cameras and cellphones can take pictures containing millions of pixels using a chip not larger than a fingernail.

Given that the number of pixels in a camera sensor has already passed twenty million, the pursuit of further increasing the pixel number seems to be not only beyond necessity, but also a waste of data storage in conventional applications. Alternatively, it is possible to reconstruct an image with just a single-pixel detector [1,2,3,4] by measuring the total intensity of overlap between a scene and a set of masks using a single-element detector, and then combining the measurements with knowledge of the masks. As a matter of fact, if one looks back more than a hundred years, when detector arrays hadn’t been developed, one would see that scientists and inventors were already endeavoring to retrieve images using just a single-pixel detector, such as an “electric telescope” using a spiral-perforated disk conceived by Paul Nipkow in 1884 [5] and the “televisor” pioneered by John Logie Baird in 1929 [6]. This imaging technique was referred to as “raster scan” and the mathematical theory of image scanning was developed in 1934 [7]. Though no longer the first choice for visible spectrum imaging after the emergence of detector arrays, raster scan systems are commonly used in applications of non-visible spectrums [8,9,10], where detector arrays of certain wavelengths are either expensive or unavailable.

Over the past two decades, so-called “ghost imaging” has reignited research interest in single-pixel imaging architectures since its first experimental implementation [1]. Originally designed to measure the entanglement of biphotons emitted from a spontaneous parametric down-conversion (SPDC) light source with two bucket detectors (a combination of a single-pixel detector and a collecting lens) for imaging purposes [11,12], it was soon demonstrated, by thermal light experiments [13,14,15,16] and computational ones [2,4,17], that ghost imaging is not quantum-exclusive but a variant of classical light field cross-correlation [18,19].

However, ghost imaging is sometimes referred to as a quantum-inspired computational imaging technique [20], where “computational” means that the imaging data measured by a ghost imaging system needs to be processed by computational algorithms before it can actually look like a conventional image. While using a single-pixel detector in the imaging hardware may offer a better detection efficiency, a lower noise, and a higher time resolution than a pixelated detector array does, the computational algorithms introduce competitive edges from the software perspective, due to ever-increasing processing power. One such example is compressive sensing [21,22,23,24], which allows the imaging system to sparsely measure the scene with a single-pixel detector and consequently to collect, transfer, and store a smaller amount of image data. This imaging technique using compressive sensing became known as single-pixel imaging [3].

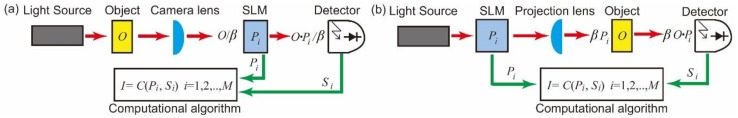

It soon became obvious to both the single-pixel imaging community and the ghost imaging community that the two imaging architectures are essentially the same in an optical sense, as shown in Figure 1, where single-pixel imaging (Figure 1a) places a spatial light modulator (SLM) on the focal plane of the camera lens to modulate the image of the scene with different masks before measuring the light intensities with a single-pixel detector, while ghost imaging (Figure 1b) uses different structured light distributions generated by the SLM to illuminate the scene and measures the reflected or transmitted light intensities. The object and the SLM are conjugated by the lens between them in both architectures.

Figure 1.

Schematics of two imaging architectures: (a) In single-pixel imaging, the object is first illuminated by the light source, then imaged by a camera lens onto the focal plane, where a spatial light modulator (SLM) is placed. The SLM modulates the image with different masks, and the reflected or transmitted light intensities are measured by a single-pixel detector. A computational algorithm uses knowledge of the masks, along with their corresponding measurements, to reconstruct an image of the object; (b) in ghost imaging, the object is illuminated by the structured light distribution generated from different masks on an SLM, and the reflected or transmitted light intensities are then measured by a single-pixel detector. A computational algorithm uses knowledge of the masks, along with their corresponding measurements, to reconstruct an image of the object.

To avoid confusion, both ghost imaging and single-pixel imaging are hereafter referred to as single-pixel imaging. Though not performing as well as detector arrays in conventional visible imaging, single-pixel imaging has been demonstrated for multi-wavelength imaging [25,26,27,28,29], terahertz imaging [30,31], X-ray imaging [32,33,34], temporal measurement [35,36,37], and three-dimensional (3D) imaging [38,39,40,41,42,43,44,45,46,47,48], all of which pose difficulties for conventional cameras. This review focuses on how single-pixel imaging can be utilized to obtain 3D information of a scene. The focal plane modulation architecture (Figure 1a) is used in the following discussion.

2. Three-Dimensional Single-Pixel Imaging

2.1. Mathematical Interpretation of Single-Pixel Imaging

Mathematically, a greyscale image is a two-dimensional (2D) array, in which the value of each element represents the reflectivity of the scene at the corresponding spatial location. If the 2D array is transformed into one dimension, then the image is I = [i1, i2, …, iN]T, and obtaining an image is all about determining N elements in I. The easiest way to achieve that is to measure the value of one element at one time and sequentially measure all N values to acquire the image, which is the raster scan imaging approach. However, this single-point scanning approach has an image formation time which is proportional to N (i.e., the number of the pixels in the image). A better way is to measure N elements simultaneously with a detector array containing N pixels, which is exactly what modern digital cameras are doing. Unfortunately, detector arrays are not always available for unconventional spectrums and applications, such as ultraviolet detection and time-correlated single-photon counting, which is when single-pixel imaging comes into play. In single-pixel imaging, the camera lens forms an image I onto the surface of an SLM placed at the focal plane of the lens, the SLM modulates the image I with a mask Pi, and the single-pixel detector measures the total intensity of the reflected or transmitted light as the inner product of Pi and I,

| si = Pi × I, | (1) |

where Pi = [pi1, pi2, …, piN] is a one-dimensional (1D) array transformed from the 2D distribution. After the single-pixel system makes M measurements, a linear equation set is formed as

| S = P × I, | (2) |

where S = [s1, s2, …, sM]T is a 1D array of M measurement values, and P = [P1, P2, …, PM]T is an M × N 2D array known as the measuring matrix. The problem of reconstructing the image of the scene becomes a problem of solving N independent unknowns (i1, i2, …, iN) using a set of M linear equations. To solve Equation (2) perfectly, there are two necessities: (i) M = N and (ii) P is orthogonal; otherwise, Equation (2) is ill-posed.

Provided that the two necessities are satisfied, the image can be reconstructed by

| I = P−1 × S. | (3) |
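Equations (1) to (3) can be illustrated with a small numerical sketch. The example below assumes a Sylvester-type Hadamard matrix as the orthogonal measuring matrix P, for which the inverse is simply the transpose divided by N; the four-element scene and the helper names (`hadamard`, `measure`, `reconstruct_orthogonal`) are hypothetical and chosen only for illustration. How the negative mask entries are realized in hardware is outside the scope of this sketch.

```python
def hadamard(n):
    """Sylvester construction of an n x n Hadamard matrix (n a power of 2)."""
    H = [[1]]
    while len(H) < n:
        H = [row + row for row in H] + [row + [-x for x in row] for row in H]
    return H


def measure(P, I):
    """Equation (2): s_i is the inner product of mask P_i and image I."""
    return [sum(p * x for p, x in zip(row, I)) for row in P]


def reconstruct_orthogonal(P, S):
    """Equation (3), using P^-1 = P^T / N, which holds for a Hadamard matrix."""
    N = len(P)
    return [sum(P[i][k] * S[i] for i in range(N)) / N for k in range(N)]


# Hypothetical 2 x 2 scene flattened into a 1D array of N = 4 reflectivities.
I = [0.0, 1.0, 0.5, 0.25]
P = hadamard(len(I))               # M = N orthogonal masks
S = measure(P, I)                  # simulated single-pixel measurements
I_rec = reconstruct_orthogonal(P, S)
print(I_rec)
```

Because M = N and P is orthogonal in this sketch, the reconstruction returns the original reflectivities up to floating-point rounding.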

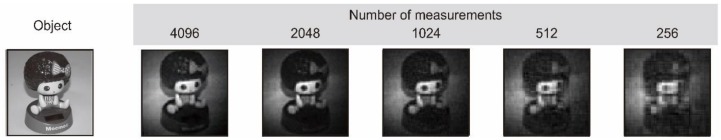

The most straightforward choice of the measuring matrix P is an identity matrix EN, which corresponds to the experimental implementation of raster scan imaging systems [3,4,49,50,51]. However, the point-by-point strategy seems inefficient in light of the fact that many natural scenes are sparse or compressible, in the sense that they can be concisely represented in a proper basis. More importantly, the measuring matrices formed from these sparse bases, such as Hadamard [27,52,53,54], Fourier [47,55,56], and wavelet [57,58,59,60], which are commonly used in single-pixel imaging, are orthogonal as well. Consequently, sub-sampling strategies are proposed to sample the scene using M smaller than N, without jeopardizing the quality of the reconstruction [27,55,57,61]. Figure 2 shows that an image of the scene can be approximately reconstructed with M << N. It is worth noting that the orthogonal sub-sampling concept, which requires prior knowledge of the specific scene, is not the same as compressive sensing, which needs only a general assumption that the scene is sparse. Orthogonal sub-sampling is similar to the idea of image compression techniques such as JPEG [62].

Figure 2.

Single-pixel imaging reconstructions (64 × 64 pixel resolution) using experimental data with different numbers of measurements.
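As a toy illustration of orthogonal sub-sampling, the sketch below measures only M = 2 of the N = 4 Hadamard masks. It assumes, as a stand-in for the scene-specific prior discussed above, that the indices of the significant masks (`rows`) are already known; the scene and all helper names are hypothetical.

```python
def hadamard(n):
    """Sylvester construction of an n x n Hadamard matrix (n a power of 2)."""
    H = [[1]]
    while len(H) < n:
        H = [row + row for row in H] + [row + [-x for x in row] for row in H]
    return H


def measure(P, I, rows):
    """Measure only the selected masks; returns {mask index: measurement}."""
    return {i: sum(p * x for p, x in zip(P[i], I)) for i in rows}


def reconstruct(P, S):
    """Sum the measured masks weighted by their measurements, scaled by 1/N."""
    N = len(P[0])
    return [sum(P[i][k] * s for i, s in S.items()) / N for k in range(N)]


I = [1.0, 1.0, 0.0, 0.0]   # hypothetical scene, sparse in the Hadamard basis
P = hadamard(4)
rows = [0, 2]              # assumed prior: only these masks carry signal
S = measure(P, I, rows)    # M = 2 measurements instead of N = 4
I_approx = reconstruct(P, S)
print(I_approx)
```

For this particular scene the two omitted masks have zero coefficients, so the sub-sampled reconstruction is exact; for a general scene the result would only be an approximation.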

If the measuring matrix P is not orthogonal, things become more interesting. In the early stages of single-pixel imaging research, the sampling masks were (pseudo) random, generated by illuminating a rotating ground glass with a laser beam [10,11], and they formed a non-orthogonal measuring matrix. Due to the classical light field cross-correlation interpretation of single-pixel imaging at that time, the reconstruction algorithm simply weighted each sampling mask by the magnitude of the corresponding measurement, and then summed these weighted masks to yield the reconstruction of the scene [2,4,10,11,63]. Even with M >> N measurements, the signal-to-noise ratio (SNR) of the yielded images was usually low because of the partial correlation nature of the measurements as well as the lack of sophistication in the reconstruction algorithms. SNR improvement methods were proposed during this stage [64,65,66,67,68,69], among which differential ghost imaging [64] is the most commonly used option.
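The weighted-sum reconstruction described above can be sketched as follows. This is a minimal illustration rather than any specific published algorithm: it assumes binary (0/1) pseudo-random masks and subtracts the mean measurement before weighting, which is a common background-removal variant and an assumption of this sketch. The scene, the seed, and the function name are hypothetical.

```python
import random


def correlation_reconstruction(masks, signals):
    """Weight each mask by its (mean-subtracted) measurement and average."""
    M = len(masks)
    N = len(masks[0])
    mean_s = sum(signals) / M
    image = [0.0] * N
    for mask, s in zip(masks, signals):
        weight = s - mean_s            # assumed background subtraction
        for k in range(N):
            image[k] += weight * mask[k]
    return [v / M for v in image]


random.seed(0)
scene = [0.0, 1.0, 0.0, 0.5]           # hypothetical reflectivities, N = 4
M = 2000                               # M >> N random measurements
masks = [[random.randint(0, 1) for _ in scene] for _ in range(M)]
signals = [sum(p * x for p, x in zip(mask, scene)) for mask in masks]
estimate = correlation_reconstruction(masks, signals)
print(estimate)
```

The estimate is proportional to the scene only on average, and residual fluctuations remain even with M much larger than N, which is consistent with the low SNR noted above.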

Fortunately, the pioneering information theory work of Candès and Tao in 2006 demonstrated that by compressively sampling a signal with (pseudo) random measurements, which are incoherent to the sparse basis of the signal, the signal can be recovered from M measurements (M << N) using two approaches: matching pursuit and basis pursuit [70]. This is a perfect match for single-pixel imaging, which uses (pseudo) random masks to sample the scene and requires a large number of measurements to yield a good reconstruction. In a nutshell, for single-pixel imaging via compressive sensing, if the image I has an n-sparse representation in an N-dimensional orthogonal basis Q, and the product of the measuring matrix P and the orthogonal basis Q (i.e., P × Q) satisfies the restricted isometry property, then the image I can be stably reconstructed from M measurements sampled by P, where M ~ n log(N/n) [71]. A detailed treatment of compressive sensing is beyond the scope of this review; those who are interested can refer to the works of Candès, Donoho, and Baraniuk [21,22,23,24].

It is worth mentioning that the time of image formation in single-pixel imaging consists of two parts, acquisition time (i.e., performing M measurements) and reconstruction time (i.e., processing the acquired data with a reconstruction algorithm). Compressive sensing reduces the required number of measurements dramatically but has a computational overhead for reconstruction, which limits its application in real-time imaging. Nevertheless, compressive sensing enables the imaging system to perform high dynamic data acquisition [71], provided that the processing of the acquired data is not an immediate requirement. In the case of orthogonal measuring matrices, the reconstruction algorithm usually has a linear iteration nature. Not only is this type of algorithm much less computationally demanding than those used in compressive sensing, but the linear iteration can also be performed in a multi-thread parallel manner along with the data acquisition, which minimizes the time of image formation. The limitation of the orthogonal measuring matrix strategy is that the required number of measurements increases in proportion to the pixel resolution of the reconstructed image, and it cannot be significantly reduced even if certain adaptive algorithms are utilized [27,57,61].

2.2. Performance of Single-Pixel Imaging

If one compares the system architecture of a conventional digital camera to that of single-pixel imaging, one would see that the only difference between them is that the pixelated detector array in a digital camera is replaced by the combination of an SLM and a single-pixel detector. Therefore, the performance of single-pixel imaging is essentially determined by the performance of this combination.

2.2.1. SLM

There are a variety of SLM technologies used in single-pixel imaging, such as a rotating ground glass [10,11], a customized diffuser with pre-designed masks [39,72], a liquid-crystal device (LCD) [2,4], a digital micromirror device (DMD) [27,28], a light-emitting diode (LED) array [48,73], and an optical phased array (OPA) [74,75].

Spatial resolution

Within the single-pixel imaging approach, the pixel resolution of the reconstructed image is determined by the spatial resolution of the masks, which is limited by the spatial resolution of the SLM used in the system. The spatial resolution of a commonly used DMD module is 1024 × 768, an order of magnitude smaller than that of a typical commercial digital camera. However, a programmable LCD or DMD offers the flexibility to perform the sampling in various ways, which improves the performance of single-pixel imaging in SNR [53], frequency aliasing suppression [76], or regional resolution [77].

Data acquisition time

The time to acquire the data of one image in single-pixel imaging is the product of the mask switch time and the number of measurements M needed for one reconstruction. The DMD, the most common choice in single-pixel imaging, has a typical modulation rate of 22 kHz. Without the help of compressive sensing, this corresponds to a 46.5 ms (1024/22 kHz) acquisition time for 32 × 32 pixel resolution single-pixel imaging, leading to a frame rate of about 21 frames per second, which is unsatisfactory given the low pixel resolution. Recent works demonstrated that by using fast-switching photonics components, such as LED arrays [73] and OPAs [74], the modulation rate can be increased beyond 1 MHz, with the potential of reaching GHz.
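The arithmetic behind these figures can be checked with a short sketch. It assumes full sampling (M = N), ignores reconstruction time, and uses a hypothetical helper name.

```python
def acquisition_time(num_measurements, modulation_rate_hz):
    """Acquisition time = number of masks x time per mask (1 / modulation rate)."""
    return num_measurements / modulation_rate_hz


N = 32 * 32                              # 1024 masks for full sampling (M = N)

t_dmd = acquisition_time(N, 22e3)        # DMD at 22 kHz
print(f"DMD: {t_dmd * 1e3:.1f} ms per frame, {1 / t_dmd:.1f} frames per second")

t_fast = acquisition_time(N, 1e6)        # LED array or OPA at 1 MHz
print(f"1 MHz modulator: {t_fast * 1e3:.3f} ms per frame")
```

At a 1 MHz modulation rate the same 32 × 32 image would be acquired in roughly 1 ms, which is why faster modulators matter more than any other single factor for the acquisition time.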

Spectrum

For ground glass and customized diffusers, the spectrum they operate in is determined by the materials from which they are made. In the case of LCDs and DMDs, their transmissive or reflective properties determine the usable wavelength bandwidth. In these two circumstances, the usable wavelength range is usually broad, which makes wide spectral imaging possible for single-pixel imaging. For LED arrays and OPAs, the spectrum depends on the light-emitting component, and is usually narrow-band.

2.2.2. Single-Pixel Detector

The single-pixel detector is the reason why single-pixel imaging has a much wider range of choices of detection subjects than a digital camera using a detector array does. For starters, single-pixel detectors are available for almost any wavelength throughout the whole electromagnetic spectrum. More importantly, because any cutting-edge sensor becomes available in the form of a single-pixel detector long before it can be manufactured into an array, single-pixel imaging systems always enjoy the privilege of using newly developed sensors much earlier than detector array-based conventional cameras do. For example, by using a single-pixel detector with single-photon sensitivity, single-pixel imaging systems will be able to image objects much farther away than conventional digital cameras can.

However, these privileges come at a price; that is, with only one detection element, the measurements needed to reconstruct an image must be performed sequentially over a period of time, while they could be performed easily in one shot using a detector array. To compensate for this disadvantage, fast-modulating SLMs, high-speed electronics, and powerful computational capabilities are needed for the single-pixel imaging technique.

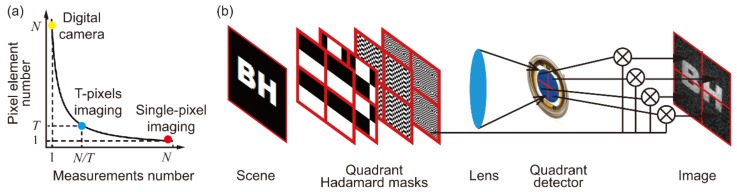

An interesting idea [78,79] worth mentioning is that one can always make a compromise between two extreme measuring manners; that is, rather than performing N measurements using either one pixel with N measurements or N pixels with a single measurement, the same number of measurements can be achieved by using T pixels with N/T measurements, as shown in Figure 3a. By adopting this idea in single-pixel imaging (Figure 3b), the acquisition time can be reduced by a factor of T, though “single-pixel imaging” might no longer be an appropriate name for the imaging system.

Figure 3.

Space–time trade-off relationship for performing N measurements: (a) Single-pixel imaging and digital cameras are at the two ends of the curve, while the idea of using T pixels and N/T measurements is a compromise between the two extremes; (b) by using a quadrant detector, the imaging system is 4 times faster in data acquisition [79].

Before going any further, Table 1 summarizes the major elements of a single-pixel imaging system, listing their possible choices and corresponding pros and cons.

Table 1.

Summary of major elements in single-pixel imaging.

| Element | Choices | Advantages (*) and Disadvantages (^) |
|---|---|---|
| System architecture | Focal plane modulation | * Active or passive imaging. ^ Limited choice on modulation. |
| | Structured light illumination | * More choices for active illumination. ^ Active imaging only. |
| Modulation method | Rotating ground glass | * High power endurance; cheap. ^ Not programmable; random modulation only. |
| | Customized diffuser | * High power endurance; can be customized. ^ Not programmable; complicated manufacturing. |
| | LCD | * Greyscale modulation; programmable. ^ Slow modulation; low power endurance. |
| | DMD | * Faster than LCD; programmable. ^ Binary modulation; not fast enough. |
| | LED array | * Much faster than DMD; programmable. ^ Binary modulation; structured illumination only. |
| | OPA | * Much faster than DMD; controllable. ^ Random modulation; complicated manufacturing. |
| Reconstruction algorithm | Orthogonal sub-sampling | * Not computationally demanding. ^ Requires a specific prior. |
| | Compressive sensing | * Needs only a general sparse assumption. ^ A computational overhead. |

2.3. From 2D to 3D

3D imaging is an intensively explored technique, which is applied to disciplines such as public security, robotics, medical sciences, and defense [80,81,82,83,84,85]. A variety of approaches have been proposed for different applications, among which time-of-flight [50,51,86] and stereo vision [87,88,89,90,91,92] are commonly used. In the following, we review how single-pixel imaging adopts these two approaches to go from 2D to 3D.

2.3.1. Time-of-Flight Approach

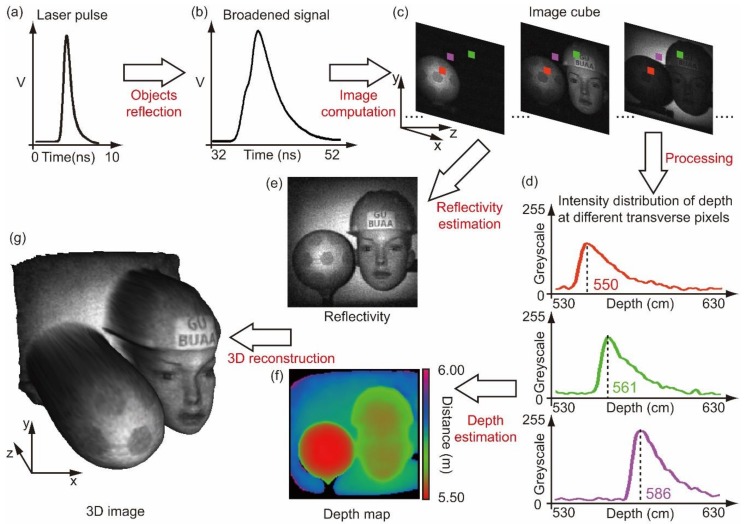

Time-of-flight measurement determines the distance d to a scene by illuminating it with pulsed light and comparing the detection time ta of the back-scattered light to the time of the illumination pulse t0 (i.e., d = Δtc/2), where Δt = (ta−t0) is the time of flight and c is the speed of light. For single-pixel imaging, if the distance information can be obtained at each spatial location of the scene, then a 3D image can be reconstructed by combining a depth map (i.e., the 2D array of distance information) with a transverse reflectivity image of the scene. However, with the flood illumination implemented in single-pixel imaging, the illuminating pulsed laser back-scattered from a scene is significantly broadened, providing only an approximate distance of the whole scene in conventional time-of-flight understanding. Methods for extraction of depth information of each spatial location from a series of broadened pulsed signals are described as follows.

In 2D single-pixel imaging, one mask only corresponds to one measured intensity. However, by using pulsed light for illumination and a time-resolving detector for detection, one mask will correspond to a series of measured intensities at different depths. Consequently, a series of images can be obtained by associating the masks with the measured intensities at different depths, forming an image cube in 3D. By further processing the data in the image cube, both reflectivity and depth information of the scene can be extracted, and therefore a 3D image is reconstructed. Many works utilized this concept [38,39,40,41,43,45,72], among which [45] demonstrated its merits most. Figure 4 illustrates the procedure of this method.

Figure 4.

Overview of the image cube method: (a) The illuminating laser pulses back-scattered from a scene are measured as (b) broadened signals; (c) an image cube, containing images at different depths, is obtained using the measured signals; (d) each transverse location has an intensity distribution along the longitudinal axis, indicating depth information; (e) reflectivity and (f) a depth map can be estimated from the image cube, and then be used to reconstruct (g), a 3D image of the scene. Experimental data used in this figure is from the work of [45].

This method is straightforward in a physical sense, because the image cube is a 3D array which is also the collection of temporal measurements at each spatial location, that is, the measured data of the raster scan imaging system with a time-resolving detector [50,51]. However, the image cube method is computationally demanding, because all images at different depths are reconstructed. Therefore, 2D image reconstruction using an orthogonal measuring matrix might be a wise choice, while utilizing compressive sensing would only further burden the data processing.
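The image cube procedure can be sketched on a toy scene. The example below is a simplification under stated assumptions: it uses a Hadamard measuring matrix, noiseless signals in which each pixel returns light in exactly one time bin, an emission time t0 = 0, and a plain peak search along the time axis in place of the more careful depth estimation used in the cited works. The scene values, bin times, and function names are hypothetical.

```python
C = 299_792_458.0  # speed of light in m/s


def hadamard(n):
    """Sylvester construction of an n x n Hadamard matrix (n a power of 2)."""
    H = [[1]]
    while len(H) < n:
        H = [row + row for row in H] + [row + [-x for x in row] for row in H]
    return H


def image_cube(P, signals):
    """signals[i][j]: intensity for mask i in time bin j. Returns cube[j][k]."""
    N, J = len(P), len(signals[0])
    return [[sum(P[i][k] * signals[i][j] for i in range(N)) / N
             for k in range(N)] for j in range(J)]


def reflectivity_and_depth(cube, bin_times):
    """Per pixel: take the peak along the time axis, then d = c * t / 2."""
    reflectivity, depth = [], []
    for k in range(len(cube[0])):
        profile = [frame[k] for frame in cube]
        j_peak = max(range(len(profile)), key=profile.__getitem__)
        reflectivity.append(profile[j_peak])
        depth.append(C * bin_times[j_peak] / 2)
    return reflectivity, depth


# Hypothetical scene: 4 pixels, each returning light in one of 3 time bins.
true_reflectivity = [1.0, 0.5, 0.25, 0.75]
true_bin = [0, 2, 1, 2]
bin_times = [10e-9, 11e-9, 12e-9]      # time of flight of each bin, in seconds

P = hadamard(4)
signals = [[sum(P[i][k] * true_reflectivity[k]
                for k in range(4) if true_bin[k] == j)
            for j in range(3)] for i in range(4)]

cube = image_cube(P, signals)           # one 2D reconstruction per time bin
reflectivity, depth = reflectivity_and_depth(cube, bin_times)
print(reflectivity, depth)
```

The number of 2D reconstructions equals the number of time bins J, which makes the computational cost noted above explicit.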

An alternative method [40] to recover the depth map is abstract in its physical sense but computationally elegant and efficient. Instead of trying to recover a depth map ID directly, the method considers a signal IQ made up of the element-wise product IQ = I · ID, where I is a 2D reflectivity image of the scene obtained by standard single-pixel imaging. More importantly, it is proved that IQ satisfies the following equation:

| SQ = P × IQ, | (4) |

where $S_Q = [\sum_{j=1}^{J} s_{1,j} t_j, \sum_{j=1}^{J} s_{2,j} t_j, \ldots, \sum_{j=1}^{J} s_{M,j} t_j]^T$ is a 1D array whose ith element is the sum, over the J time bins, of the products of the number of received photons $s_{i,j}$ and the time $t_j$ for the ith mask measurement. Therefore, by using the same treatment, a second “image” IQ can be reconstructed, and dividing it by I element-wise yields a depth map ID of the scene as well. Again, it is worth noting that this image IQ, which is the element-wise product of the reflectivity image I and the depth map ID, does not have a straightforward physical meaning. In this method, only two image reconstructions are performed, and compressive sensing could be utilized without adding too much computational burden.
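A minimal sketch of this two-reconstruction method is given below, under the same simplifying assumptions as a noiseless toy scene: a Hadamard measuring matrix stands in for whatever matrix is actually used, each pixel returns light in a single time bin, and the emission time is t0 = 0 so that distance follows from d = c·t/2. The scene, the bin times, and the helper names are hypothetical.

```python
C = 299_792_458.0  # speed of light in m/s


def hadamard(n):
    """Sylvester construction of an n x n Hadamard matrix (n a power of 2)."""
    H = [[1]]
    while len(H) < n:
        H = [row + row for row in H] + [row + [-x for x in row] for row in H]
    return H


def reconstruct(P, S):
    """Invert S = P x I for a Hadamard matrix, using P^-1 = P^T / N."""
    N = len(P)
    return [sum(P[i][k] * S[i] for i in range(N)) / N for k in range(N)]


def reflectivity_and_time(P, signals, bin_times):
    """Two reconstructions only: I from S, I_Q from S_Q, then I_D = I_Q / I."""
    S = [sum(row) for row in signals]
    S_Q = [sum(s * t for s, t in zip(row, bin_times)) for row in signals]
    I = reconstruct(P, S)
    I_Q = reconstruct(P, S_Q)
    # Pixels with (near) zero reflectivity carry no depth information.
    I_D = [q / r if abs(r) > 1e-12 else None for q, r in zip(I_Q, I)]
    return I, I_D


# Hypothetical scene: 4 pixels, each returning light in one of 3 time bins.
true_reflectivity = [1.0, 0.5, 0.25, 0.75]
true_bin = [0, 2, 1, 2]
bin_times = [10e-9, 11e-9, 12e-9]      # time of flight of each bin, in seconds

P = hadamard(4)
signals = [[sum(P[i][k] * true_reflectivity[k]
                for k in range(4) if true_bin[k] == j)
            for j in range(3)] for i in range(4)]

I, I_D = reflectivity_and_time(P, signals, bin_times)
depth = [C * t / 2 for t in I_D]        # convert time of flight to distance
print(I, depth)
```

Compared with reconstructing one image per time bin, the cost here is independent of the number of time bins, at the price of recovering only a single mean arrival time per pixel.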

The performance of the time-of-flight based 3D single-pixel imaging is related to the following aspects. The aspects affecting 2D single-pixel imaging performance are not mentioned here.

Repetition rate of the pulsed light: One pulse corresponds to one mask measurement; therefore, the higher the repetition rate is, the faster the SLM can step through the set of masks.

Pulse width of the pulsed light: A narrower pulse width means a smaller uncertainty in time-of-flight measurement and less overlapping between back-scattered signals from objects of different depths, which in turn improves the system depth resolution.

The type of the single-pixel detector: The choice of whether to use a conventional photodiode or one operated with a higher reverse bias (e.g., a single-photon counting detector) is dependent on the application. A single-photon counting detector, which can resolve single-photon arrival with a faster response time, is well suited for low-light-level imaging. However, its total detection efficiency is very low since only one photon is detected for each measuring pulse. Furthermore, the inherent dead time of the single-photon counting detector, often tens of nanoseconds, prohibits the information retrieval of a farther object if a closer one has a relatively higher detection probability. In contrast, a high-speed photodiode can record the temporal response from a single illumination pulse, which can be advantageous in applications with relatively strong illumination.

Time bin and time jitter of the electronics: These two parameters are usually closely related, and the smaller they are, the better the depth resolution will be. However, a smaller time bin also means a larger amount of data, which will burden the reconstruction of the 3D image.

A major advantage of time-of-flight-based 3D imaging over other 3D imaging techniques, such as stereo vision [87,88] or structured-light 3D imaging [93,94], is that time-of-flight measurement is an absolute measurement, meaning that the depth resolution of time-of-flight-based 3D single-pixel imaging systems is not largely affected by increases in the distance between the system and the object. Therefore, it is a good candidate for long-distance 3D measurement, such as LiDAR [83].

2.3.2. Stereo Vision Approach

Stereo vision uses two or more images obtained simultaneously from different viewpoints to reconstruct a 3D image of the scene. However, the geometry registration between several images during the reconstruction can be problematic. Contrarily, photometric stereo [89,90,91,92] captures images with a fixed viewpoint but different illuminations. The pixel correspondence in photometric stereo is easier to perform than in stereo vision, but images of different illumination have to be taken sequentially, which limits its real-time application.

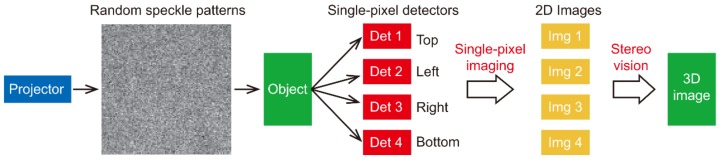

In 3D single-pixel imaging utilizing stereo vision, as shown in Figure 5, a digital projector illuminates the object with random speckle masks. Four single-pixel detectors, placed above, below, and to the left and right sides of the projector, measure the back-scattered light intensities. Four images from the different viewpoints are obtained by associating the measured data with knowledge of the random speckle masks. A 3D image of the object is reconstructed from the four images and the geometry information of their corresponding viewpoints. Single-pixel imaging fundamentally functions as conventional digital cameras do in the system; however, the architecture of single-pixel imaging enables the simplification of the 3D imaging system to only one SLM, one camera lens, and several pixels without compromising the quality of the reconstruction [42]. More importantly, with a concise system setup, both simultaneous capture of images and pixel correspondence among images can be easily addressed [92].

Figure 5.

Schematic of a stereo vision based 3D single-pixel imaging.

The performance of stereo vision-based 3D single-pixel imaging is essentially determined by two aspects: the quality of the 2D images from each viewpoint and the stereo vision geometry of the system setup. For example, both 3D reconstruction quality and speed are improved in [92] compared to [42]. The improvements are mainly achieved by implementing orthogonal measuring masks with an SLM of a higher modulation rate. A recent work replaces the SLM with an LED array [48], further lowering the system cost. The depth resolution of the system is a relative quantity, which is largely constrained by the geometry of the system setup, in particular, the ratio of the separation between the single-pixel detectors to their distance to the object. Applications such as close industrial inspection and object 3D profiling would be suitable for stereo vision-based 3D single-pixel imaging.

3. Conclusions and Discussions

In this review, we briefly go through the development of the single-pixel imaging technique, provide a mathematical interpretation of its working principles, and discuss its performance from our understanding. Two different approaches to 3D single-pixel imaging, and their pros, cons, and potential applications are discussed.

The potential of the single-pixel imaging technique lies in three aspects of its system architecture. First, the use of single-pixel detectors makes single-pixel imaging a perfect playground for testing cutting-edge sensors, such as single-photon counting detectors, in imaging techniques. Furthermore, it offers an easy platform from which to adopt other single-pixel based techniques; for example, the spatial resolution of ultrafast time-stretch imaging [95,96,97,98] could be enhanced by adopting single-pixel imaging in the time domain.

Second, the use of programmable SLMs provides extra flexibility for image formation. For example, the pixel geometry of the image can be arranged in non-Cartesian manners, and the trade-off between SNR, spatial resolution, and frame rate of the imaging system can be tuned according to the demands of the application. SLMs also set limitations on the performance of single-pixel imaging with their own spatial resolutions and modulation rates. Therefore, high-performance SLM devices are desirable for the development of the single-pixel imaging technique.

Third, the use of compressive sensing enables image reconstruction using sub-sampling without following the Nyquist theorem, resulting in a significant decrease in the amount of data during the image acquisition and transfer, rather than compressing the acquired image after it is sampled completely. Sooner or later, with the ever-growing capabilities of processors, the computational burden of compressive sensing algorithms will not be a limitation.

Despite the fact that the current performance of single-pixel imaging is not comparable, particularly in the visible spectrum, to that of conventional digital cameras based on detector arrays, it is a fascinating research field in which to test cutting-edge sensors and experiment with new imaging concepts. Unlike digital cameras, single-pixel imaging doesn’t have any components which are specially developed for it due to global market demands and the funding that follows. However, the situation might be changing with the emerging need for low-cost 3D sensing techniques for autonomous vehicles.

Acknowledgments

The authors would like to thank Miles J. Padgett, University of Glasgow, for inspiration.

Author Contributions

M.-J.S. and J.-M.Z. structured and wrote the manuscript.

Funding

This research was funded by National Natural Science Foundation of China, grant number 61675016, and Beijing Natural Science Foundation, grant number 4172039.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Pittman T.B., Shih Y.H., Strekalov D.V., Sergienko A.V. Optical imaging by means of two-photon quantum entanglement. Phys. Rev. A. 1995;52:R3429. doi: 10.1103/PhysRevA.52.R3429.

- 2. Shapiro J.H. Computational ghost imaging. Phys. Rev. A. 2008;78:061802. doi: 10.1103/PhysRevA.78.061802.

- 3. Duarte M.F., Davenport M.A., Takhar D., Laska J.N., Sun T., Kelly K.F. Single-pixel imaging via compressive sampling. IEEE Signal Process. Mag. 2008;25:83–91. doi: 10.1109/MSP.2007.914730.

- 4. Bromberg Y., Katz O., Silberberg Y. Ghost imaging with a single detector. Phys. Rev. A. 2009;79:053840. doi: 10.1103/PhysRevA.79.053840.

- 5. Nipkow P. Optical Disk. 30,150. German Patent. 1884 Jan 6.

- 6. Baird J.L. Apparatus for Transmitting Views or Images to a Distance. 1,699,270. U.S. Patent. 1929 Jan 15.

- 7. Mertz P., Gray F. A theory of scanning and its relation to the characteristics of the transmitted signal in telephotography and television. Bell Syst. Tech. J. 1934;13:464–515. doi: 10.1002/j.1538-7305.1934.tb00675.x.

- 8. Kane T.J., Byvik C.E., Kozlovsky W.J., Byer R.L. Coherent laser radar at 1.06 μm using Nd:YAG lasers. Opt. Lett. 1987;12:239–241. doi: 10.1364/OL.12.000239.

- 9. Hu B.B., Nuss M.C. Imaging with terahertz waves. Opt. Lett. 1995;20:1716–1718. doi: 10.1364/OL.20.001716.

- 10. Thibault P., Dierolf M., Menzel A., Bunk O., David C., Pfeiffer F. High-resolution scanning x-ray diffraction microscopy. Science. 2008;321:379–382. doi: 10.1126/science.1158573.

- 11. Scarcelli G., Berardi V., Shih Y.H. Can two-photon correlation of chaotic light be considered as correlation of intensity fluctuations? Phys. Rev. Lett. 2006;96:063602. doi: 10.1103/PhysRevLett.96.063602.

- 12. Shih Y.H. Quantum imaging. IEEE J. Sel. Top. Quantum Electron. 2007;13:1016. doi: 10.1109/JSTQE.2007.902724.

- 13. Bennink R.S., Bentley S.J., Boyd R.W. “Two-photon” coincidence imaging with a classical source. Phys. Rev. Lett. 2002;89:113601. doi: 10.1103/PhysRevLett.89.113601.

- 14. Gatti A., Brambilla E., Bache M., Lugiato L. Correlated imaging: Quantum and classical. Phys. Rev. A. 2004;70:013802. doi: 10.1103/PhysRevA.70.013802.

- 15. Valencia A., Scarcelli G., D’Angelo M., Shih Y. Two-photon imaging with thermal light. Phys. Rev. Lett. 2005;94:063601. doi: 10.1103/PhysRevLett.94.063601.

- 16. Zhai Y.H., Chen X.H., Zhang D., Wu L.A. Two-photon interference with true thermal light. Phys. Rev. A. 2005;72:043805. doi: 10.1103/PhysRevA.72.043805.

- 17. Katz O., Bromberg Y., Silberberg Y. Compressive ghost imaging. Appl. Phys. Lett. 2009;95:131110. doi: 10.1063/1.3238296.

- 18. Erkmen B.I., Shapiro J.H. Unified theory of ghost imaging with Gaussian-state light. Phys. Rev. A. 2008;77:043809. doi: 10.1103/PhysRevA.77.043809.

- 19. Shapiro J.H., Boyd R.W. The physics of ghost imaging. Quantum Inf. Process. 2012;11:949–993. doi: 10.1007/s11128-011-0356-5.

- 20. Altmann Y., McLaughlin S., Padgett M.J., Goyal V.K., Hero A.O., Faccio D. Quantum-inspired computational imaging. Science. 2018;361:eaat2298. doi: 10.1126/science.aat2298.

- 21. Candès E.J. Compressive sampling; Proceedings of the 2006 International Congress of Mathematicians; Madrid, Spain. 22–30 August 2006; Berlin, Germany: International Mathematical Union; 2006. pp. 1433–1452.

- 22. Donoho D.L. Compressed sensing. IEEE Trans. Inform. Theory. 2006;52:1289–1306. doi: 10.1109/TIT.2006.871582.

- 23. Candès E., Romberg J. Sparsity and incoherence in compressive sampling. Inverse Probl. 2007;23:969. doi: 10.1088/0266-5611/23/3/008.

- 24. Baraniuk R.G. Compressive sensing [lecture notes]. IEEE Signal Process. Mag. 2007;24:118–121. doi: 10.1109/MSP.2007.4286571.

- 25. Studer V., Bobin J., Chahid M., Mousavi H.S., Candès E., Dahan M. Compressive fluorescence microscopy for biological and hyperspectral imaging. Proc. Natl. Acad. Sci. USA. 2012;109:E1679–E1687. doi: 10.1073/pnas.1119511109.

- 26. Welsh S.S., Edgar M.P., Bowman R., Jonathan P., Sun B., Padgett M.J. Fast full-color computational imaging with single-pixel detectors. Opt. Express. 2013;21:23068–23074. doi: 10.1364/OE.21.023068.

- 27. Radwell N., Mitchell K.J., Gibson G.M., Edgar M.P., Bowman R., Padgett M.J. Single-pixel infrared and visible microscope. Optica. 2014;1:285–289. doi: 10.1364/OPTICA.1.000285.

- 28. Edgar M.P., Gibson G.M., Bowman R.W., Sun B., Radwell N., Mitchell K.J. Simultaneous real-time visible and infrared video with single-pixel detectors. Sci. Rep. 2015;5:10669. doi: 10.1038/srep10669.

- 29. Bian L., Suo J., Situ G., Li Z., Fan J., Chen F. Multispectral imaging using a single bucket detector. Sci. Rep. 2016;6:24752. doi: 10.1038/srep24752.

- 30. Watts C.M., Shrekenhamer D., Montoya J., Lipworth G., Hunt J., Sleasman T. Terahertz compressive imaging with metamaterial spatial light modulators. Nat. Photonics. 2014;8:605–609. doi: 10.1038/nphoton.2014.139.

- 31. Stantchev R.I., Sun B., Hornett S.M., Hobson P.A., Gibson G.M., Padgett M.J. Noninvasive, near-field terahertz imaging of hidden objects using a single-pixel detector. Sci. Adv. 2016;2:e1600190. doi: 10.1126/sciadv.1600190.

- 32. Cheng J., Han S. Incoherent coincidence imaging and its applicability in X-ray diffraction. Phys. Rev. Lett. 2004;92:093903. doi: 10.1103/PhysRevLett.92.093903.

- 33. Greenberg J., Krishnamurthy K., Brady D. Compressive single-pixel snapshot x-ray diffraction imaging. Opt. Lett. 2014;39:111–114. doi: 10.1364/OL.39.000111.

- 34. Zhang A.X., He Y.H., Wu L.A., Chen L.M., Wang B.B. Tabletop x-ray ghost imaging with ultra-low radiation. Optica. 2018;5:374–377. doi: 10.1364/OPTICA.5.000374.

- 35. Ryczkowski P., Barbier M., Friberg A.T., Dudley J.M., Genty G. Ghost imaging in the time domain. Nat. Photonics. 2016;10:167–170. doi: 10.1038/nphoton.2015.274.

- 36. Faccio D. Optical communications: Temporal ghost imaging. Nat. Photonics. 2016;10:150–152. doi: 10.1038/nphoton.2016.30.

- 37. Devaux F., Moreau P.A., Denis S., Lantz E. Computational temporal ghost imaging. Optica. 2016;3:698–701. doi: 10.1364/OPTICA.3.000698.

- 38. Howland G.A., Dixon P.B., Howell J.C. Photon-counting compressive sensing laser radar for 3D imaging. Appl. Opt. 2011;50:5917–5920. doi: 10.1364/AO.50.005917.

- 39. Zhao C., Gong W., Chen M., Li E., Wang H., Xu W. Ghost imaging LIDAR via sparsity constraints. Appl. Phys. Lett. 2012;101:141123. doi: 10.1063/1.4757874.

- 40. Howland G.A., Lum D.J., Ware M.R., Howell J.C. Photon counting compressive depth mapping. Opt. Express. 2013;21:23822. doi: 10.1364/OE.21.023822.

- 41. Zhao C., Gong W., Chen M., Li E., Wang H., Xu W. Ghost imaging lidar via sparsity constraints in real atmosphere. Opt. Photonics J. 2013;3:83. doi: 10.4236/opj.2013.32B021.

- 42. Sun B., Edgar M.P., Bowman R., Vittert L.E., Welsh S., Bowman A. 3D computational imaging with single-pixel detectors. Science. 2013;340:844–847. doi: 10.1126/science.1234454.

- 43. Yu H., Li E., Gong W., Han S. Structured image reconstruction for three-dimensional ghost imaging lidar. Opt. Express. 2015;23:14541. doi: 10.1364/OE.23.014541.

- 44. Yu W.K., Yao X.R., Liu X.F., Li L.Z., Zhai G.J. Three-dimensional single-pixel compressive reflectivity imaging based on complementary modulation. Appl. Opt. 2015;54:363–367. doi: 10.1364/AO.54.000363.

- 45. Sun M.J., Edgar M.P., Gibson G.M., Sun B., Radwell N., Lamb R. Single-pixel three-dimensional imaging with time-based depth resolution. Nat. Commun. 2016;7:12010. doi: 10.1038/ncomms12010.

- 46. Zhang Z., Zhong J. Three-dimensional single-pixel imaging with far fewer measurements than effective image pixels. Opt. Lett. 2016;41:2497–2500. doi: 10.1364/OL.41.002497.

- 47. Zhang Z.B., Liu S.J., Peng J.Z., Yao M.H., Zheng G.A., Zhong J.G. Simultaneous spatial, spectral, and 3D compressive imaging via efficient Fourier single-pixel measurements. Optica. 2018;5:315–319. doi: 10.1364/OPTICA.5.000315.

- 48. Salvador-Balaguer E., Latorre-Carmona P., Chabert C., Pla F., Lancis J., Tajahuerce E. Low-cost single-pixel 3D imaging by using an LED array. Opt. Express. 2018;26:15623–15631. doi: 10.1364/OE.26.015623.

- 49. Massa J.S., Wallace A.M., Buller G.S., Fancey S.J., Walker A.C. Laser depth measurement based on time-correlated single photon counting. Opt. Lett. 1997;22:543–545. doi: 10.1364/OL.22.000543.

- 50. McCarthy A., Collins R.J., Krichel N.J., Fernandez V., Wallace A.M., Buller G.S. Long-range time-of-flight scanning sensor based on high-speed time-correlated single-photon counting. Appl. Opt. 2009;48:6241–6251. doi: 10.1364/AO.48.006241.

- 51. McCarthy A., Krichel N.J., Gemmell N.R., Ren X., Tanner M.G., Dorenbos S.N. Kilometer-range, high resolution depth imaging via 1560 nm wavelength single-photon detection. Opt. Express. 2013;21:8904–8915. doi: 10.1364/OE.21.008904.

- 52. Lochocki B., Gambín A., Manzanera S., Irles E., Tajahuerce E., Lancis J., Artal P. Single pixel camera ophthalmoscope. Optica. 2016;3:1056–1059. doi: 10.1364/OPTICA.3.001056.

- 53. Sun M.J., Edgar M.P., Phillips D.B., Gibson G.M., Padgett M.J. Improving the signal-to-noise ratio of single-pixel imaging using digital microscanning. Opt. Express. 2016;24:10476–10485. doi: 10.1364/OE.24.010476.

- 54. Wang L., Zhao S. Fast reconstructed and high-quality ghost imaging with fast Walsh-Hadamard transform. Photonics Res. 2016;4:240–244. doi: 10.1364/PRJ.4.000240.

- 55. Zhang Z., Ma X., Zhong J. Single-pixel imaging by means of Fourier spectrum acquisition. Nat. Commun. 2015;6:6225. doi: 10.1038/ncomms7225.

- 56. Czajkowski K.M., Pastuszczak A., Kotyński R. Real-time single-pixel video imaging with Fourier domain regularization. Opt. Express. 2018;26:20009–20022. doi: 10.1364/OE.26.020009.

- 57. Aßmann M., Bayer M. Compressive adaptive computational ghost imaging. Sci. Rep. 2013;3:1545. doi: 10.1038/srep01545.

- 58. Yu W.K., Li M.F., Yao X.R., Liu X.F., Wu L.A., Zhai G.J. Adaptive compressive ghost imaging based on wavelet trees and sparse representation. Opt. Express. 2014;22:7133–7144. doi: 10.1364/OE.22.007133.

- 59. Rousset F., Ducros N., Farina A., Valentini G., D’Andrea C., Peyrin F. Adaptive basis scan by wavelet prediction for single-pixel imaging. IEEE Trans. Comput. Imaging. 2017;3:36–46. doi: 10.1109/TCI.2016.2637079.

- 60. Czajkowski K.M., Pastuszczak A., Kotyński R. Single-pixel imaging with Morlet wavelet correlated random patterns. Sci. Rep. 2018;8:466. doi: 10.1038/s41598-017-18968-6.

- 61. Sun M.J., Meng L.T., Edgar M.P., Padgett M.J., Radwell N.A. Russian Dolls ordering of the Hadamard basis for compressive single-pixel imaging. Sci. Rep. 2017;7:3464. doi: 10.1038/s41598-017-03725-6.

- 62. Aravind R., Cash G.L., Worth J.P. On implementing the JPEG still-picture compression algorithm. Adv. Intell. Rob. Syst. Conf. 1989;1199:799–808. doi: 10.1117/12.970090.

- 63. Cheng X., Liu Q., Luo K.H., Wu L.A. Lensless ghost imaging with true thermal light. Opt. Lett. 2009;34:695–697. doi: 10.1364/OL.34.000695.

- 64. Ferri F., Magatti D., Lugiato L., Gatti A. Differential ghost imaging. Phys. Rev. Lett. 2010;104:253603. doi: 10.1103/PhysRevLett.104.253603.

- 65. Agafonov I.N., Luo K.H., Wu L.A., Chekhova M.V., Liu Q., Xian R. High-visibility, high-order lensless ghost imaging with thermal light. Opt. Lett. 2010;35:1166–1168. doi: 10.1364/OL.35.001166.

- 66. Sun B., Welsh S., Edgar M.P., Shapiro J.H., Padgett M.J. Normalized ghost imaging. Opt. Express. 2012;20:16892–16901. doi: 10.1364/OE.20.016892.

- 67. Sun M.J., Li M.F., Wu L.A. Nonlocal imaging of a reflective object using positive and negative correlations. Appl. Opt. 2015;54:7494–7499. doi: 10.1364/AO.54.007494.

- 68. Song S.C., Sun M.J., Wu L.A. Improving the signal-to-noise ratio of thermal ghost imaging based on positive–negative intensity correlation. Opt. Commun. 2016;366:8–12. doi: 10.1016/j.optcom.2015.12.045.

- 69. Sun M.J., He X.D., Li M.F., Wu L.A. Thermal light subwavelength diffraction using positive and negative correlations. Chin. Opt. Lett. 2016;14:15–19. doi: 10.3788/COL201614.040301.

- 70. Candès E.J., Tao T. Near-optimal signal recovery from random projections: Universal encoding strategies? IEEE Trans. Inf. Theory. 2006;52:5406–5425. doi: 10.1109/TIT.2006.885507.

- 71. Sankaranarayanan A.C., Studer C., Baraniuk R.G. CS-MUVI: Video compressive sensing for spatial-multiplexing cameras; Proceedings of the 2012 IEEE International Conference on Computational Photography (ICCP); Seattle, WA, USA. 28–29 April 2012.

- 72. Gong W., Zhao C., Yu H., Chen M., Xu W., Han S. Three-dimensional ghost imaging lidar via sparsity constraint. Sci. Rep. 2016;6:26133. doi: 10.1038/srep26133.

- 73. Xu Z.H., Chen W., Penulas J., Padgett M.J., Sun M.J. 1000 fps computational ghost imaging using LED-based structured illumination. Opt. Express. 2018;26:2427–2434. doi: 10.1364/OE.26.002427.

- 74. Komatsu K., Ozeki Y., Nakano Y., Tanemura T. Ghost imaging using integrated optical phased array; Proceedings of the Optical Fiber Communication Conference 2017; Los Angeles, CA, USA. 19–23 March 2017.

- 75. Li L.J., Chen W., Zhao X.Y., Sun M.J. Fast Optical Phased Array Calibration Technique for Random Phase Modulation LiDAR. IEEE Photonics J. 2018. doi: 10.1109/JPHOT.2018.2889313.

- 76. Sun M.J., Zhao X.Y., Li L.J. Imaging using hyperuniform sampling with a single-pixel camera. Opt. Lett. 2018;43:4049–4052. doi: 10.1364/OL.43.004049.

- 77. Phillips D.B., Sun M.J., Taylor J.M., Edgar M.P., Barnett S.M., Gibson G.M., Padgett M.J. Adaptive foveated single-pixel imaging with dynamic super-sampling. Sci. Adv. 2017;3:e1601782. doi: 10.1126/sciadv.1601782.

- 78. Herman M., Tidman J., Hewitt D., Weston T., McMackin L., Ahmad F. A higher-speed compressive sensing camera through multi-diode design. Proc. SPIE. 2013;8717. doi: 10.1117/12.2015745.

- 79. Sun M.J., Chen W., Liu T.F., Li L.J. Image retrieval in spatial and temporal domains with a quadrant detector. IEEE Photonics J. 2017;9:3901206. doi: 10.1109/JPHOT.2017.2741966.

- 80. Dickson R.M., Norris D.J., Tzeng Y.L., Moerner W.E. Three-dimensional imaging of single molecules solvated in pores of poly (acrylamide) gels. Science. 1996;274:966–968. doi: 10.1126/science.274.5289.966.

- 81. Udupa J.K., Herman G.T. 3D Imaging in Medicine. CRC Press; Boca Raton, FL, USA: 1999.

- 82. Bosch T., Lescure M., Myllyla R., Rioux M., Amann M.C. Laser ranging: A critical review of usual techniques for distance measurement. Opt. Eng. 2001;40:10–19. doi: 10.1117/1.1330700.

- 83. Schwarz B. Lidar: Mapping the world in 3D. Nat. Photonics. 2010;4:429–430. doi: 10.1038/nphoton.2010.148.

- 84. Zhang S. Recent progresses on real-time 3D shape measurement using digital fringe projection techniques. Opt. Lasers Eng. 2010;48:149–158. doi: 10.1016/j.optlaseng.2009.03.008.

- 85. Cho M., Javidi B. Three-dimensional photon counting double-random-phase encryption. Opt. Lett. 2013;38:3198–3201. doi: 10.1364/OL.38.003198.

- 86. Velten A., Willwacher T., Gupta O., Veeraraghavan A., Bawendi M.G., Raskar R. Recovering three-dimensional shape around a corner using ultrafast time-of-flight imaging. Nat. Commun. 2012;3:745. doi: 10.1038/ncomms1747.

- 87. Keppel E. Approximating complex surfaces by triangulation of contour lines. IBM J. Res. Dev. 1975;19:2–11. doi: 10.1147/rd.191.0002.

- 88. Boyde A. Stereoscopic images in confocal (tandem scanning) microscopy. Science. 1985;230:1270–1272. doi: 10.1126/science.4071051.

- 89. Woodham R.J. Photometric method for determining surface orientation from multiple images. Opt. Eng. 1980;19:139–144. doi: 10.1117/12.7972479.

- 90. Horn B.K.P. Robot Vision. MIT Press; Cambridge, MA, USA: 1986.

- 91. Horn B.K.P., Brooks M.J. Shape from Shading. MIT Press; Cambridge, MA, USA: 1989.

- 92. Zhang Y., Edgar M.P., Sun B., Radwell N., Gibson G.M., Padgett M.J. 3D single-pixel video. J. Opt. 2016;18:035203. doi: 10.1088/2040-8978/18/3/035203.

- 93. Geng J. Structured-light 3D surface imaging: A tutorial. Adv. Opt. Photonics. 2011;3:128–160. doi: 10.1364/AOP.3.000128.

- 94. Jiang C.F., Bell T., Zhang S. High dynamic range real-time 3D shape measurement. Opt. Express. 2016;24:7337–7346. doi: 10.1364/OE.24.007337.

- 95. Goda K., Tsia K.K., Jalali B. Serial time-encoded amplified imaging for real-time observation of fast dynamic phenomena. Nature. 2009;458:1145–1149. doi: 10.1038/nature07980.

- 96. Diebold E.D., Buckley B.W., Gossett D.R., Jalali B. Digitally synthesized beat frequency multiplexing for sub-millisecond fluorescence microscopy. Nat. Photonics. 2013;7:806–810. doi: 10.1038/nphoton.2013.245.

- 97. Tajahuerce E., Durán V., Clemente P., Irles E., Soldevila F., Andrés P., Lancis J. Image transmission through dynamic scattering media by single-pixel photodetection. Opt. Express. 2014;22:16945. doi: 10.1364/OE.22.016945.

- 98. Guo Q., Chen H.W., Weng Z.L., Chen M.H., Yang S.G., Xie S.Z. Compressive sensing based high-speed time-stretch optical microscopy for two-dimensional image acquisition. Opt. Express. 2015;23:29639. doi: 10.1364/OE.23.029639.