Abstract

Single-shot ultrafast optical imaging can capture two-dimensional transient scenes in the optical spectral range at ≥100 million frames per second. This rapidly evolving field surpasses conventional pump-probe methods by offering real-time imaging capability, which is indispensable for recording non-repeatable and difficult-to-reproduce events and for understanding physical, chemical, and biological mechanisms. In this mini-review, we comprehensively survey state-of-the-art single-shot ultrafast optical imaging. Based on the illumination requirement, we categorize the field into active-detection and passive-detection domains. Depending on the specific image acquisition and reconstruction strategies, these two categories are further divided into a total of six sub-categories. Under each sub-category, we describe operating principles, present representative cutting-edge techniques with a particular emphasis on their methodology and applications, and discuss their advantages and challenges. Finally, we envision prospects of technical advancement in this field.

Keywords: (100.0118) Imaging ultrafast phenomena, (170.6920) Time-resolved imaging, (320.7160) Ultrafast technology

1. INTRODUCTION

Optical imaging of transient events in their actual time of occurrence holds compelling scientific significance and practical merit. Occurring in two-dimensional (2D) space and at femtosecond to nanosecond timescales, these transient events reflect many important fundamental mechanisms in physics, chemistry, and biology [1–3]. Conventionally, pump-probe methods have allowed capture of these dynamics through repeated measurements. However, many ultrafast phenomena are either non-repeatable or difficult to reproduce. Examples include optical rogue waves [4, 5], irreversible crystalline chemical reactions [6], light scattering in living tissue [7], shockwaves in laser-induced damage [8], and chaotic laser dynamics [9]. Under these circumstances, the pump-probe methods are inapplicable. In other cases, although reproducible, the ultrafast phenomena have significant shot-to-shot variations and low occurrence rates. Examples include dense plasma generation by high-power, low-repetition laser systems [10, 11] and laser-driven implosion in inertial confinement fusion [12]. For these cases, the pump-probe methods would lead to significant inaccuracy and low productivity.

To overcome the limitations in the pump-probe methods, many single-shot ultrafast optical imaging techniques have been developed in recent years. Here, “single-shot” describes capturing the entire dynamic process in real time (i.e., the actual duration in which an event occurs) without repeating the event. Extended from the established categorization [13, 14], we define “ultrafast” as imaging speeds at 100 million frames per second (Mfps) or above, which correspond to inter-frame time intervals of 10 ns or less. “Optical” refers to detecting photons in the extreme ultraviolet to the far-infrared spectral range. Finally, we restrict “imaging” to two-dimensional (2D, i.e., x, y). With the unique capability of recording non-repeatable and difficult-to-reproduce transient events, single-shot ultrafast optical imaging techniques have become indispensable for understanding fundamental scientific questions and for achieving high measurement accuracy and efficiency.
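The threshold in this definition is a simple reciprocal relation between imaging speed and inter-frame interval. The short sketch below makes the conversion explicit; the function names are illustrative and not part of any cited work.

```python
def frame_interval_s(frames_per_second: float) -> float:
    """Inter-frame time interval in seconds for a given imaging speed."""
    if frames_per_second <= 0:
        raise ValueError("imaging speed must be positive")
    return 1.0 / frames_per_second


def is_ultrafast(frames_per_second: float, threshold_fps: float = 100e6) -> bool:
    """True if the imaging speed meets the 100-Mfps threshold used in this review."""
    return frames_per_second >= threshold_fps


if __name__ == "__main__":
    # 100 Mfps corresponds to a 10-ns inter-frame interval.
    print(frame_interval_s(100e6))
    # A hypothetical 1-Tfps system qualifies; a 1-Mfps camera does not.
    print(is_ultrafast(1e12), is_ultrafast(1e6))
```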

The prosperity of single-shot ultrafast optical imaging is built upon advances in three major scientific areas. The first contributor is the vast popularization and continuous progress in ultrafast laser systems [15], which enable producing pulses with femtosecond-level pulse widths and up to joule-level pulse energy. The ultrashort pulse width naturally offers outstanding temporal slicing capability. The wide bandwidth (e.g., ~30 nm for a 30-fs, 800 nm, Gaussian pulse) allows implementing different pulse shaping technologies [16] to attach various optical markers to the ultrashort pulses. The high pulse energy provides a sufficient photon count in each ultrashort time interval for obtaining images with a reasonable signal-to-noise ratio. All three features pave the way for the single-shot ultrafast recording of transient events. The second contributor is the incessantly improving performance of ultrafast detectors [17–22]. New device structures and state-of-the-art fabrication have enabled novel storage schemes, faster electronic responses, and higher sensitivity. These efforts have circumvented the limitations of conventional detectors in on-board storage and read-out speeds. The final contributor is the development of new computational frameworks in imaging science. Of particular interest is the effort to apply compressed sensing (CS) [23, 24] in spatial and temporal domains to overcome the speed limit of conventional optical imaging systems. These three major contributors have largely propelled the field of single-shot ultrafast optical imaging by improving existing techniques and by enabling new imaging concepts.
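The quoted bandwidth can be checked with the transform-limited relation for a Gaussian pulse. The sketch below assumes a time-bandwidth product of 2 ln 2 / π (about 0.441) for intensity full widths at half maximum and converts the frequency bandwidth to wavelength through Δλ ≈ λ²Δν/c; the function name is illustrative.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s
# FWHM time-bandwidth product of a transform-limited Gaussian pulse (about 0.441).
TBP_GAUSSIAN = 2.0 * math.log(2.0) / math.pi


def transform_limited_bandwidth_nm(pulse_fwhm_s: float, center_wavelength_m: float) -> float:
    """Spectral FWHM (nm) of a transform-limited Gaussian pulse."""
    delta_nu = TBP_GAUSSIAN / pulse_fwhm_s                    # bandwidth in Hz
    delta_lambda = center_wavelength_m ** 2 * delta_nu / C    # bandwidth in m
    return delta_lambda * 1e9


if __name__ == "__main__":
    # 30-fs pulse centered at 800 nm, as in the example above.
    print(round(transform_limited_bandwidth_nm(30e-15, 800e-9), 1))
```

For the 30-fs, 800-nm example this gives a bandwidth of roughly 31 nm, in line with the approximate figure quoted in the text.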

In this mini-review, we provide a comprehensive survey of the cutting-edge techniques in single-shot ultrafast optical imaging and their associated applications. The limited article length precludes covering the ever-expanding technical scope in this area, so omissions are possible. We restrict the scope of this review by our above-described definition of single-shot ultrafast optical imaging. As a result, multiple-shot ultrafast optical imaging methods (including various pump-probe arrangements implemented via either temporal scanning by ultrashort probe pulses and ultrafast gated devices [25–29] or spatial scanning using zero-dimensional and one-dimensional (1D) ultrafast detectors [18, 30, 31]) are not discussed. In addition, existing single-shot optical imaging modalities at less than 100-Mfps imaging speeds, such as ultra-high-speed sensors [32], rotating mirror cameras [33], and time-stretching methods [34–36], are excluded. Finally, ultrafast imaging using non-optical sources, such as electron beams, x-rays, and terahertz radiation [37–39], falls outside the scope here. Interested readers can refer to the selected references listed herein and the extensive literature elsewhere.

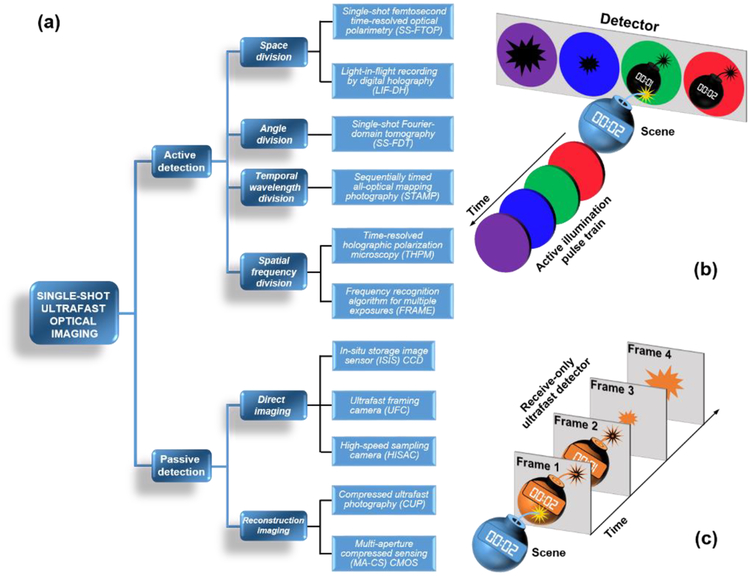

The subsequent sections are organized by the following conceptual structure [Fig. 1(a)]. According to the illumination requirement, single-shot ultrafast optical imaging can be categorized into active detection and passive detection. The active-detection domain exploits specially designed pulse trains for probing 2D transient events [Fig. 1(b)]. Depending on the optical marker carried by the pulse train, the active-detection domain can be further separated into four methods, namely space division, angle division, temporal wavelength division, and spatial frequency division. The passive-detection domain leverages receive-only ultrafast detectors to record 2D dynamic phenomena [Fig. 1(c)]. It is further divided into two image formation methods: direct imaging and reconstruction imaging. The in-depth description of each method starts with its basic principle, followed by the description of representative techniques, with their applications as well as their strengths and limitations. In the last section, a summary and an outlook are provided to conclude this mini-review.

Fig. 1.

(a) Categorization of single-shot ultrafast optical imaging in two detection domains and six methods with 11 representative techniques. (b) Conceptual illustration of active-detection-based single-shot ultrafast optical imaging. Colors represent different optical markers. (c) Conceptual illustration of passive-detection-based single-shot ultrafast optical imaging.

2. ACTIVE-DETECTION DOMAIN

In general, the active-detection domain works by using an ultrafast pulse train to probe a transient event in a single image acquisition. Each pulse in the pulse train is attached with a unique optical marker [e.g., different spatial positions, angles, wavelengths, states of polarization (SOPs), or spatial frequencies]. Leveraging the properties of these markers, novel detection mechanisms are deployed to separate the transmitted probe pulses after the transient scene in the spatial or spatial frequency domain to recover the (x, y, t) datacube. In the following, we will present four methods with six representative techniques in this domain.

A. Space division

The first method in this domain is space division. Extended from Muybridge’s famous imaging setup to capture a horse in motion [40], this method constructs a probe pulse train in which each pulse occupies a different spatial and temporal position. Synchronized with a high-speed object traversing the field of view (FOV), each probe pulse records the object’s instantaneous position at a different time. These probe pulses are projected onto different spatial areas of a detector, which thus records the (x, y, t) information. Two representative techniques are presented in this sub-section.

1. Single-shot femtosecond time-resolved optical polarimetry (SS-FTOP)

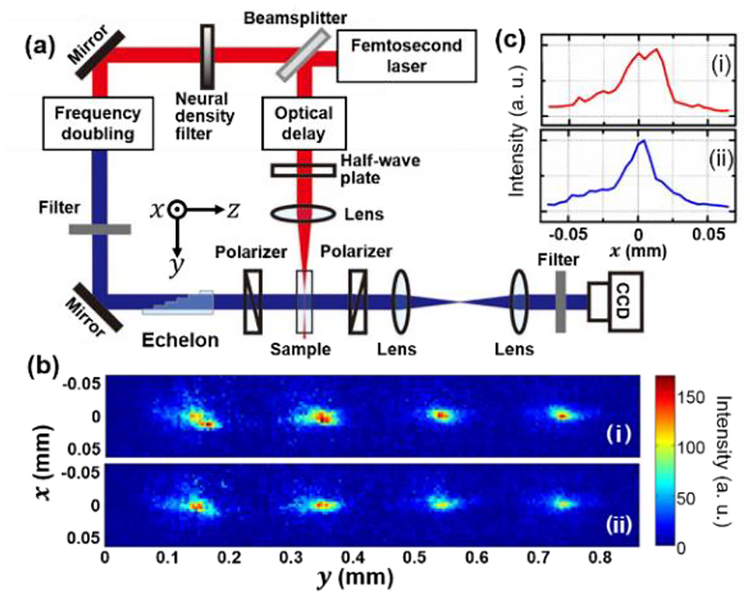

Figure 2(a) shows the schematic of the SS-FTOP system for imaging an ultrashort pulse’s propagation in an optical nonlinear medium [41]. An ultrafast Ti:sapphire laser generated 800-nm, 65-fs pulses, which were split by a beam splitter to generate pump and probe pulses. The pump pulse, reflected from the beam splitter, passed through a half-wave plate for polarization control and then was focused into a 10-mm-long Kerr medium. The transmitted pulse from the beam splitter was frequency doubled by a β-barium borate (BBO) crystal. Then, it was incident on a four-step echelon [42], which had a step width of 0.54 mm and a step height of 0.2 mm. This echelon, therefore, produced four probe pulses with an inter-pulse time delay of 0.96 ps, corresponding to an imaging speed of 1.04 trillion frames per second (Tfps). These probe pulses were incident on the Kerr medium orthogonally to the pump pulse. The selected step width and height of the echelon allowed each probe pulse to arrive coincidentally with the propagating pump pulse in the Kerr medium. A pair of polarizers, whose polarization axes were rotated 90° relative to each other, sandwiched the Kerr medium [43]. As a result, the birefringence induced by the pump pulse allowed part of the probe pulses to be transmitted to a CCD camera. The transient polarization change thus provided contrast to image the pump pulse’s propagation. Each of the four recorded frames had 41×60 pixels (Prof. L. Yan, personal communication, May 2nd, 2018). SS-FTOP’s temporal resolution, determined by the probe pulse width and the pump pulse’s lateral size, was 276 fs.

Fig. 2.

Single-shot femtosecond time-resolved optical polarimetry (SS-FTOP) based on space division followed by varied time delays for imaging a single ultrashort pulse’s propagation in a Kerr medium (adapted from [41]). (a) Schematic of experimental setup. (b) Two sequences of single ultrashort pulses’ propagation. The time interval between adjacent frames is 0.96 ps. (c) Transverse intensity profiles of the first frames of the two single-shot observations in (b).

Figure 2(b) shows two sequences of propagation dynamics of single, 45-μJ laser pulses in the Kerr medium. In spite of nearly constant pulse energy, the captured pulse profiles varied from shot to shot, attributed to self-modulation-induced complex structures within the pump pulses [44]. As a detailed comparison, the transverse (i.e., x-axis) profiles of the first frames of both measurements [Fig. 2(c)] show different intensity distributions. In particular, two peaks are observed in the first measurement, indicating the formation of double filaments during the pump pulse’s propagation [44].

SS-FTOP can fully leverage the ultrashort laser pulse width to achieve exceptional temporal resolution and imaging speeds. With the recent progress in attosecond laser science [45, 46], the imaging speed could exceed one quadrillion (i.e., 10^15) fps. However, this technique has three limitations. First, using the echelon to induce the time delay discretely, SS-FTOP has a limited sequence depth (i.e., the number of frames captured in one acquisition). In addition, the sequence depth conflicts with the imaging FOV. Finally, SS-FTOP can only image ultrafast moving objects with non-overlapping trajectories.

2. Light-in-flight (LIF) recording by holography

Invented in the 1970s [47], this technique records time-resolved holograms by using an ultrashort reference pulse sweeping through a holographic recording medium (e.g., a film [48] for conventional holography or a CCD camera [49] for digital holography). The pulse front of the obliquely incident reference pulse intersects with different spatial areas of the recorded holograms, generating time-resolved views of the transient event.

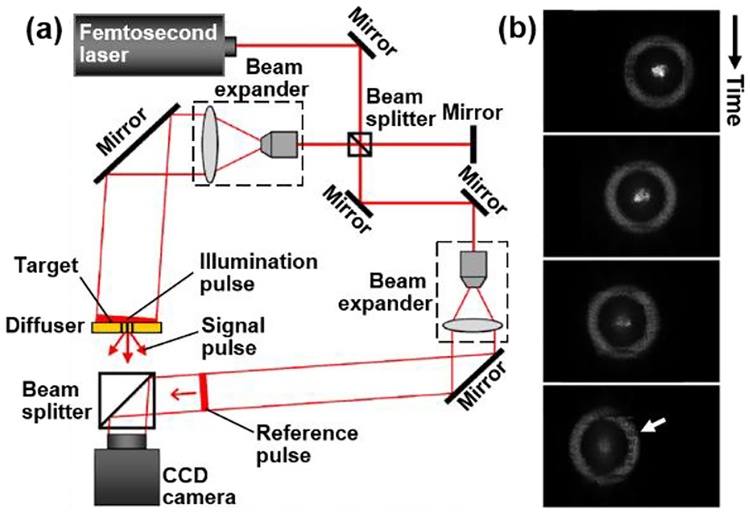

As an example, Fig. 3(a) illustrates a digital holography (DH) system for LIF recording of a femtosecond pulse’s sweeping motion on a patterned plate [50]. An ultrashort laser pulse (800-nm wavelength and 96-fs pulse width), from a mode-locked Ti:sapphire laser, was divided into an illumination pulse and a reference pulse. Both pulses were expanded and collimated by beam expanders. The illumination pulse was incident on a diffuser plate at 0.5° against the surface normal. A USAF resolution test chart was imprinted on the plate. The light scattered from the diffuser plate formed the signal pulse. The reference pulse was directed to a CCD camera with an incident angle of 0.5°. When the time of arrival of scattered photons coincided with that of the reference pulse, interference fringes were formed. Images were digitally reconstructed from the acquired hologram. The temporal resolution of LIF-DH was mainly determined by the FOV, incident angle, and coherence length [50].
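The geometric part of this dependence can be sketched as follows. A plane pulse front arriving at an angle θ from the sensor normal reaches two points separated by a width W along the sensor at times that differ by W sin θ / c. The sketch below covers only this geometric sweep and ignores the coherence-length contribution; the 6-μm pixel pitch is a hypothetical value chosen for illustration and is not taken from [50].

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def sweep_delay_s(width_m: float, incidence_deg: float) -> float:
    """Arrival-time difference of a tilted plane pulse front across a width on the sensor."""
    return width_m * math.sin(math.radians(incidence_deg)) / C


if __name__ == "__main__":
    pixel_pitch_m = 6e-6   # hypothetical pixel pitch (assumption, not from the source)
    sub_hologram_px = 512  # sub-hologram width in pixels
    delay = sweep_delay_s(sub_hologram_px * pixel_pitch_m, 0.5)
    print(f"{delay * 1e15:.1f} fs")
```

Under these assumed numbers, the sweep time across one sub-hologram comes out at several tens of femtoseconds, which illustrates why a smaller sub-hologram (a smaller FOV per frame) or a smaller incident angle shortens the time window covered by each frame.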

Fig. 3.

Light-in-flight (LIF) recording by digital holography (DH) based on obliquely sweeping the reference pulse on the imaging plane, a form of space division [50]. (a) Experimental setup for recording the hologram. (b) Representative frames of single ultrashort laser pulses’ movement on a USAF resolution target. The time interval between frames is 192 fs. The white arrow points to the features of the USAF resolution target.

Figure 3(b) shows the sequence of the femtosecond laser pulse sweeping over the patterned plate. In this example, seven sub-holograms (512×512 pixels in size), extracted from the digitally recorded hologram, were used to reconstruct frames. The temporal resolution was 88 fs, corresponding to an effective imaging speed of 11.4 Tfps. In each frame, the bright spot at the center is the zeroth-order diffraction, and the reconstructed femtosecond laser pulse is shown as the circle. The USAF resolution target can be seen in the reconstructed frames. The sweeping motion of the femtosecond laser pulse is observed by sequentially displaying the reconstructed frames.

Akin to SS-FTOP, LIF holography fully leverages the ultrashort pulse width to achieve femtosecond-level temporal resolution. The sequence depth is also inversely proportional to the imaging FOV. Different from SS-FTOP, the FOV in LIF holography is tunable in reconstruction, which could provide a much-improved sequence depth. In addition, in theory, provided a sufficiently wide scattering angle and a long-coherence reference pulse, LIF holography could be used to image ultrafast moving objects with complex trajectories and other spatially-overlapped transient phenomena. However, the shape of the reconstructed object is determined by the geometry for which the condition of the same time-of-arrival between the pump and the probe pulses is satisfied [50]. The shape is also subject to observation positions [51]. Finally, based on interferometry, this technique cannot be implemented in imaging transient scenes with incoherent light emission.

B. Angle division

In the angle-division method, the transient event is probed from different angles in two typical arrangements. The first scheme uses an angularly separated and temporally distinguished ultrashort pulse train, which can be generated by dual echelons with focusing optics [52]. The second scheme uses longer pulses to cover the entire duration of the transient event. These pulses probe the transient events simultaneously. Each probe pulse records a projected view of the transient scene from a different angle. This scheme, implementing the Radon transformation in the spatiotemporal domain, allows leveraging well-established methods in medical imaging modalities, such as x-ray computed tomography (CT), and therefore has received extensive attention in recent years. Here, we discuss a representative technique of the second scheme.

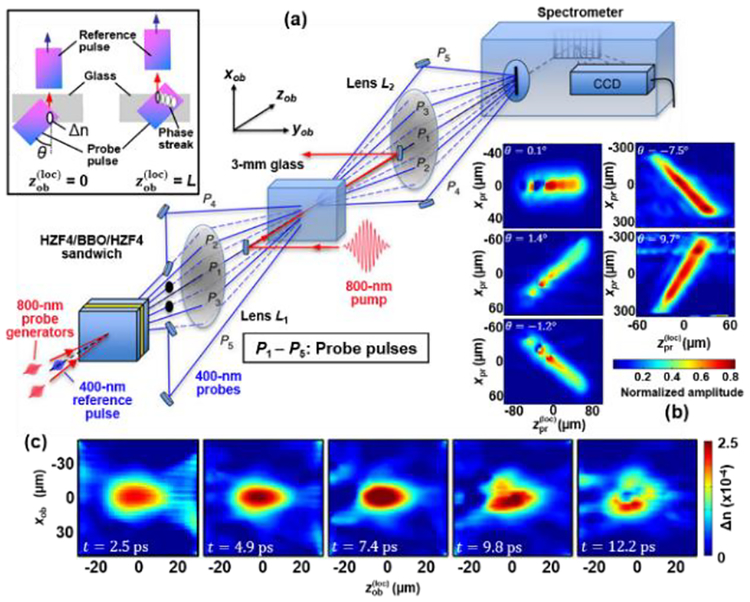

Figure 4(a) shows the system setup of single-shot frequency-domain tomography (SS-FDT) [53] for imaging transient refractive index perturbation. An 800-nm, 30-fs, 0.7-μJ pump pulse induced a transient refractive index structure (Δn) in a fused silica glass due to the nonlinear refractive index dependence and pump-generated plasma. This structure evolved at a luminal speed. In addition, a pair of pulses, directly split from the pump pulse (each with a 30-μJ pulse energy), were crossed spatiotemporally in a BBO crystal that was sandwiched by two HZF4 glasses. The first HZF4 glass generated a fan of up to eight 800 nm daughter pulses by cascaded four-wave mixing. The BBO crystal doubled the frequency as well as increased the number of pulses. The second HZF4 glass chirped these frequency-doubled daughter pulses to 600 fs. In the experiment, five angularly separated probe pulses were selected.

Fig. 4.

Single-shot frequency-domain tomography (SS-FDT) for imaging transient refractive index perturbation based on angle division followed by spectral imaging holography [53]. (a) Schematic of the experimental setup. Upper-left inset: principle of imprinting a phase streak in a probe pulse (adapted from [54]). θ, incident angle of the probe pulse; Δn, refractive index change. (b) Phase streaks induced by the evolving refractive index profile, plotted against the transverse (x_pr) and longitudinal coordinates of the probe pulses. (c) Representative snapshots of the refractive index change using a pump energy of E = 0.7 μJ, plotted against the transverse (x_ob) and longitudinal coordinates of the object.

The HZF4/BBO/HZF4 structure was directly imaged onto the target plane. The five probe pulses illuminated the target with probing angles of θ. Because all the probe pulses were generated at the same time from the same origin, they overlapped both spatially and temporally at the target. The transient scene was measured by spectral imaging interferometry [54–56]. Specifically, the transient refractive index structure imprinted a phase streak in each probe pulse [see the upper-left inset in Fig. 4(a)]. Before these probe pulses arrived, a chirped 400-nm reference pulse, directly split from the pump pulse, recorded the phase reference. The reference pulse and the transmitted probe pulses were imaged to an entrance slit of an imaging spectrometer. Inside the spectrometer, a diffraction grating and focusing optics broadened both reference pulse and probe pulses, which overlapped temporally to form a frequency-domain hologram [57] on a CCD camera. In the experiment, this hologram recorded all five projected views of the transient event.

Three steps were taken in image reconstruction. First, a 1D inverse Fourier transform was taken to convert the spatial axis into the incident angle. This step, therefore, separated the five probe pulses in the spatial frequency domain. Second, by windowing, shifting, and inverse transforming the hologram area associated with each probe pulse, five phase streaks were retrieved [Fig. 4(b)]. Finally, these streaks were fed into a tomographic image reconstruction algorithm (e.g., algebraic reconstruction technique [53, 58]) to recover the evolution of the transient scene. The reconstructed movie had a 4.35 Tfps imaging speed, an approximately 3-ps temporal resolution, and a sequence depth of 60 frames. Each frame had 128×128 pixels in size [59].
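To illustrate the final step, the sketch below applies a basic algebraic reconstruction technique (Kaczmarz-style row projections) to a toy static 2×2 object with five line-sum projections. It is a minimal illustration of the iterative principle only, not the spatiotemporal reconstruction used in [53]; the object values, the projection geometry, and the function name are all assumptions made for the example.

```python
def art_reconstruct(system_rows, measurements, n_unknowns, sweeps=200, relaxation=1.0):
    """Kaczmarz-style ART: project the estimate onto each measurement equation in turn."""
    estimate = [0.0] * n_unknowns
    for _ in range(sweeps):
        for row, measured in zip(system_rows, measurements):
            norm_sq = sum(w * w for w in row)
            if norm_sq == 0.0:
                continue  # an all-zero row carries no information
            predicted = sum(w * x for w, x in zip(row, estimate))
            step = relaxation * (measured - predicted) / norm_sq
            estimate = [x + step * w for x, w in zip(estimate, row)]
    return estimate


# Toy object: a 2x2 image flattened as [p00, p01, p10, p11] (hypothetical values).
truth = [1.0, 2.0, 3.0, 4.0]

# Each row sums the pixels crossed by one projection ray.
rows = [
    [1, 1, 0, 0],  # top row
    [0, 0, 1, 1],  # bottom row
    [1, 0, 1, 0],  # left column
    [0, 1, 0, 1],  # right column
    [1, 0, 0, 1],  # main diagonal
]
projections = [sum(w * p for w, p in zip(row, truth)) for row in rows]

recovered = art_reconstruct(rows, projections, n_unknowns=4)
print([round(value, 6) for value in recovered])
```

Here the five rays determine the four pixels uniquely, so the iteration converges to the toy object. With sparser angular sampling the system becomes underdetermined and the estimate depends on the starting guess.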

Figure 4(c) shows representative frames of nonlinear optical dynamics induced by an intense laser pulse’s propagation in a fused silica glass. The reconstructed movie has revealed that the pulse experienced self-focusing within 7.4 ps. Then, an upper spatial lobe that split off from the main pulse appeared at 9.8 ps, attributed to laser filamentation. After that, a steep-walled index ‘hole’ was generated near the center of the profile at 12.2 ps, indicating that the generated plasma had induced a negative index change that locally offset the laser-induced positive nonlinear refractive index change.

The synergy of CT and spectral imaging interferometry has endowed SS-FDT with a large sequence depth, the ability to record complex amplitude, and the capability of observing dynamic events along the probe pulses’ propagation directions. However, the sparse angular sampling results in artifacts that strongly affect the reconstructed images [60], which thus limits the spatial and temporal resolutions.

C. Temporal wavelength division

The third method in the active-detection domain is temporal wavelength division. Rooted in time-stretching imaging [34–36], the wavelength-division method attaches different wavelengths to individual pulses. After probing the transient scene, a 2D (x, y) image at a specific time point is stamped by unique spectral information. At the detection side, dispersive optical elements spectrally separate transmitted probe pulses, which allows directly obtaining the (x, y, t) datacube.

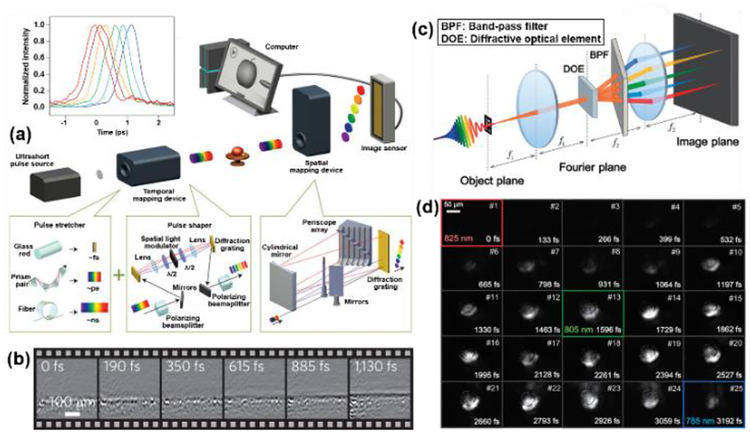

The representative technique discussed in this sub-section is sequentially timed all-optical mapping photography (STAMP) [61]. As shown in Fig. 5(a), a femtosecond pulse first passed through a temporal mapping device that comprised a pulse stretcher and a pulse shaper. Depending on specific experimental requirements, the pulse stretcher used different dispersing materials to increase the pulse width and to induce spectral chirping. Then, a classic 4f pulse shaper [62] filtered selective wavelengths, generating a pulse train containing six wavelength-encoded pulses [see an example in the upper-left inset in Fig. 5(a)] to probe the transient scene. After the scene, these transmitted pulses went through a spatial mapping unit, which used a diffraction grating and imaging optics to separate them in space. Finally, these pulses were recorded at different areas on an imaging sensor. Each frame is 450×450 pixels in size.

Fig. 5.

Sequentially timed all-optical mapping photography (STAMP) based on temporal wavelength division. (a) System schematic of STAMP [61]. Upper inset: normalized intensity profiles of the six probe pulses with an inter-frame time interval of 229 fs (corresponding to a frame rate of 4.4 Tfps) and an exposure time of 733 fs. Lower insets: schematics of the temporal mapping device and the spatial mapping device. (b) Single-shot imaging of electronic response and phonon formation at 4.4 Tfps [61]. (c) Schematic setup of spectrally filtered (SF)-STAMP [64]. f1 and f2, focal lengths of lenses. (d) Full sequence of crystalline-to-amorphous phase transition in Ge2Sb2Te5 captured by the SF-STAMP system with an inter-frame time interval of 133 fs (corresponding to an imaging speed of 7.52 Tfps) and an exposure time of 465 fs [64].

The STAMP system has been deployed in visualizing light-induced plasmas and phonon propagation in materials. A 70-fs, 40-μJ laser pulse was cylindrically focused into a ferroelectric crystal wafer at room temperature to produce coherent phonon-polariton waves. The laser-induced electronic response and phonon formation were captured at 4.4 Tfps with an exposure time of 733 fs per frame [Fig. 5(b)]. The first two frames show the irregular and complex electronic response of the excited region in the crystal. The following three frames show an upward-propagating coherent vibration wave.

Since STAMP’s debut in 2014, various developments [63–66] have reduced the system’s complexity and improved its specifications. For example, the schematic setup of the spectrally filtered (SF)-STAMP system [64] is shown in Fig. 5(c). SF-STAMP abandoned the pulse shaper in the temporal mapping device. Consequently, instead of using several temporally discrete probe pulses, SF-STAMP used a single frequency-chirped pulse to probe the transient event. At the detection side, SF-STAMP adopted a single-shot ultrafast pulse characterization setup [67, 68]. In particular, a diffractive optical element (DOE) generated spatially resolved replicas of the transmitted probe pulse. These replicas were incident on a tilted bandpass filter, which selected different transmissive wavelengths according to the incident angles [67]. Consequently, the sequence depth was equal to the number of replicas produced by the DOE. The imaging speed and the temporal resolution were limited by the transmissive wavelength range.
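The angle-dependent wavelength selection of a tilted bandpass filter can be sketched with the standard tilt relation for thin-film interference filters in air, λ(θ) = λ0 · sqrt(1 − sin²θ / n_eff²). This relation and the numbers below (an 800-nm normal-incidence center wavelength and an effective index of 2.0) are assumptions for illustration; they are not the parameters of the SF-STAMP system in [64].

```python
import math


def tilted_filter_wavelength_nm(
    center_wavelength_nm: float, incidence_deg: float, effective_index: float
) -> float:
    """Transmitted center wavelength of an interference filter tilted in air."""
    s = math.sin(math.radians(incidence_deg)) / effective_index
    return center_wavelength_nm * math.sqrt(1.0 - s * s)


if __name__ == "__main__":
    # Hypothetical filter: 800 nm at normal incidence, effective index 2.0.
    for angle_deg in (0.0, 5.0, 10.0, 15.0, 20.0):
        wavelength = tilted_filter_wavelength_nm(800.0, angle_deg, 2.0)
        print(f"{angle_deg:5.1f} deg -> {wavelength:7.2f} nm")
```

Each replica that arrives at a different angle passes a different (shorter) wavelength, and because the probe pulse is chirped, a different wavelength corresponds to a different time slice of the event.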

Figure 5(d) shows the full sequence (a total of 25 frames) of the crystalline-to-amorphous phase transition of Ge2Sb2Te5 alloy captured by SF-STAMP at 7.52 Tfps (with an exposure time of 465 fs). Each frame is 400×300 pixels in size. The gradual change in the probe laser transmission up to ~660 fs is clearly shown, demonstrating the phase transition process. In comparison with this amorphized area, the surrounding crystalline areas retained high reflectance. The sequence also shows that the phase change domain did not spatially spread to the surrounding area. This observation has verified the theoretical model that attributed the initiation of non-thermal amorphization to Ge-atom displacements from octahedral to tetrahedral sites [64, 69].

The SF-STAMP technique has achieved one of the highest imaging speeds (7.52 Tfps) in active-detection-based single-shot ultrafast optical imaging. The original STAMP system, although having a high light throughput, is restricted by a low sequence depth. SF-STAMP, although having increased the sequence depth, significantly sacrifices the light throughput. In addition, the trade-off between the pulse width and spectral bandwidth limits STAMP’s temporal resolution. Finally, both STAMP and SF-STAMP are applicable only to color-neutral objects.

D. Spatial frequency division

The last method discussed in the active-detection domain is spatial frequency division. It works by attaching different spatial carrier frequencies to probe pulses. The transmitted probe pulses are spatially superimposed at the detector. In the ensuing image reconstruction, temporal information associated with each probe pulse is separated in the spatial frequency domain, which allows recovering the (x, y, t) datacube. Two representative techniques are shown for this method.

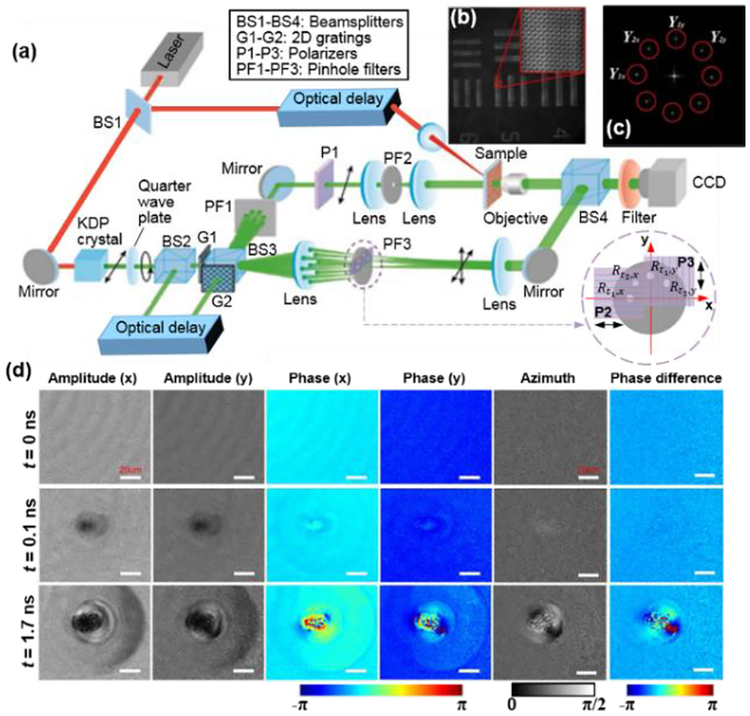

1. Time-resolved holographic polarization microscopy (THPM)

In the THPM system [Fig. 6(a)] [70], a pulsed laser generated a pump pulse and a probe pulse by using a beam splitter. The pump pulse illuminated the sample. The probe pulse was first frequency doubled by a potassium dihydrogen phosphate crystal, tuned to circular polarization by a quarter-wave plate, and then split by another beam splitter into two pulses with a time delay. Each probe pulse passed through a 2D grating and was further split into signal pulses and reference pulses. The orientation of the grating set the polarization of the diffracted light at 45° to the x-axis. Two signal pulses were generated by selecting only the zeroth diffraction order with a pinhole filter. The reference pulses passed through a four-pinhole filter, on which two linear polarizers were placed side by side [see the lower-right inset in Fig. 6(a)]. The orientations of the linear polarizers P2 and P3 were along the x-axis and the y-axis, respectively. As a result, a total of four reference pulses, each carrying a different combination of arrival times and SOPs, were generated. After the sample, the reference pulses interfered with the transmitted signal pulses with the same arrival times on a CCD camera (2048×2048 pixels). There, the interference fringes of the signal pulses with the four reference pulses had different spatial frequencies. Therefore, a frequency-multiplexed hologram was recorded [Fig. 6(b)].

Fig. 6.

Time-resolved holographic polarization microscopy (THPM) based on time delays and spatial frequency division of the reference pulses for imaging laser-induced damage of a mica lamina sample (adapted from [70]). (a) Schematic of experimental setup. Black arrows indicate the pulses’ SOPs. KDP, potassium dihydrogen phosphate. Lower-right inset: Generation of four reference pulses. (b) Recorded hologram of a USAF resolution target. The zoom-in picture shows the detailed interferometric pattern of this hologram. (c) Spatial frequency spectrum of (b). (d) Time-resolved multi-contrast imaging of ultrafast laser-induced damage in a mica lamina sample.

In image reconstruction, the acquired hologram was first Fourier transformed. Information carried by each carrier frequency was separated in the spatial frequency domain [Fig. 6(c)]. Then, by windowing, shifting, and inverse Fourier transforming the hologram area associated with each carrier frequency, four images of complex amplitudes were retrieved. Finally, the phase information in the complex amplitude was used to calculate the SOP, in terms of azimuth and phase difference [71].

THPM has been used for real-time imaging of laser-induced damage [Fig. 6(d)]. A mica lamina plate, obtained by mechanical exfoliation, was the sample. A pump laser damaged the plate with an intensity of >40 J/cm^2. THPM has captured the initial amplitude and phase change at 0.1 ns and 1.7 ns after the pump pulse, corresponding to a sequence depth of two frames and an imaging speed of 625 Mfps (i.e., an inter-frame interval of 1.6 ns). The images have revealed the generation and propagation of shock waves. In addition, the movie reflects non-uniform changes in amplitude and phase, which were due to the sample’s anisotropy. Furthermore, the SOP analysis revealed a phase change of 0.4π and an azimuth angle of ~36° at the initial stage. Complex structures in azimuth and phase difference were observed at the 1.7-ns delay, indicating anisotropic changes in transmission and refractive index in the process of laser irradiation.

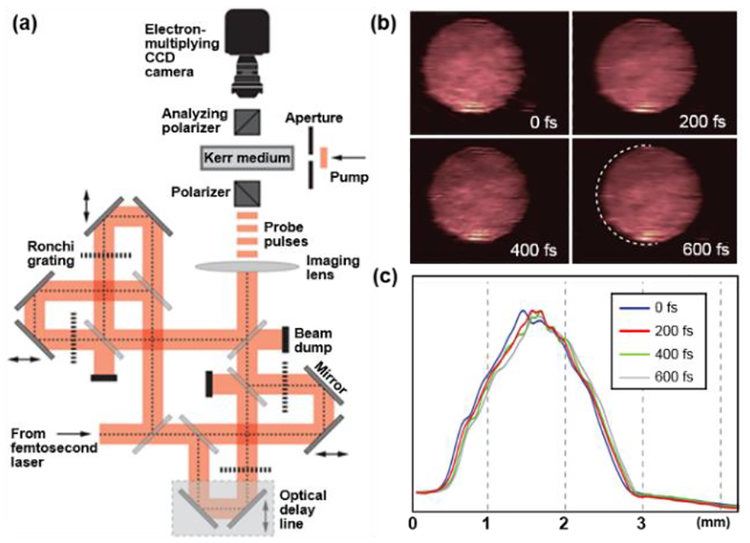

2. Frequency recognition algorithm for multiple exposures (FRAME) imaging

The second technique is the frequency recognition algorithm for multiple exposures (FRAME) imaging [72]. Instead of forming the fringes via interference, FRAME attaches various carrier frequencies to probe pulses using intensity modulation. In the setup [Fig. 7(a)], a 125-fs pulse output from an ultrafast laser was split into four sub-pulses, each having a specified time delay. The intensity profile of each sub-pulse was modulated by a Ronchi grating with an identical period but a unique orientation. These Ronchi gratings, providing sinusoidal intensity modulation to the probe pulses, were directly imaged to the measurement volume. As a result, the spatially modulated illumination patterns were superimposed onto the dynamic scene in the captured single image. In the spatial frequency domain, the carrier frequency of the sinusoidal pattern shifted the band-limited frequency content of the scene to unexploited areas. Thus, the temporal information of the scene, conveyed by sinusoidal patterns with different angles, was separated in the spatial frequency domain without any cross-talk. Following a procedure similar to that of THPM, the sequence could be recovered. The FRAME imaging system has captured the propagation of a femtosecond laser pulse through a CS2 medium at imaging speeds up to 5 Tfps with a frame size of 1002×1004 pixels. The temporal resolution, limited by the imaging speed, was 200 fs. Using a Kerr-gate setup similar to the one in Fig. 2(a), transient refractive index change was used as the contrast to indicate the propagation of the pulse in the Kerr medium [Fig. 7(b) and (c)].
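The separation of frames by carrier frequency can be illustrated with a one-dimensional toy model. In the sketch below, two band-limited "frames" are each multiplied by a sinusoidal intensity pattern and summed into one exposure; each frame is then recovered by windowing its sideband in the Fourier domain, shifting it to baseband, and inverse transforming. Because grating orientation has no meaning in 1D, two different carrier frequencies stand in for the two orientations. The frame profiles, carrier indices, window width, and function names are all assumptions for the example, and a naive DFT is used only to keep the sketch free of external libraries.

```python
import cmath
import math


def dft(samples, inverse=False):
    """Naive discrete Fourier transform (O(N^2)); adequate for a small toy signal."""
    n = len(samples)
    sign = 1.0 if inverse else -1.0
    out = []
    for k in range(n):
        acc = 0j
        for x, value in enumerate(samples):
            acc += value * cmath.exp(sign * 2j * math.pi * k * x / n)
        out.append(acc / n if inverse else acc)
    return out


def demultiplex(image, carrier, half_width):
    """Window the sideband at `carrier`, shift it to baseband, and inverse transform."""
    n = len(image)
    spectrum = dft(image)
    baseband = [0j] * n
    for offset in range(-half_width, half_width + 1):
        baseband[offset % n] = spectrum[(carrier + offset) % n]
    # Factor 2: a cosine carrier places half of the frame's spectrum in each sideband.
    return [2.0 * value.real for value in dft(baseband, inverse=True)]


N = 64
CARRIER_A, CARRIER_B = 10, 20  # hypothetical carrier frequencies (cycles per record)

# Two slowly varying, band-limited frames (hypothetical intensity profiles).
frame_a = [1.0 + 0.5 * math.cos(2 * math.pi * x / N) for x in range(N)]
frame_b = [2.0 + 0.3 * math.sin(2 * math.pi * 2 * x / N) for x in range(N)]

# Single exposure: each frame carries its own sinusoidal intensity modulation.
multiplexed = [
    frame_a[x] * (1.0 + math.cos(2 * math.pi * CARRIER_A * x / N))
    + frame_b[x] * (1.0 + math.cos(2 * math.pi * CARRIER_B * x / N))
    for x in range(N)
]

recovered_a = demultiplex(multiplexed, CARRIER_A, half_width=3)
recovered_b = demultiplex(multiplexed, CARRIER_B, half_width=3)
print(max(abs(r - t) for r, t in zip(recovered_a, frame_a)))
```

In this toy case the sidebands do not overlap with each other or with the unmodulated baseband, so both frames are recovered to within floating-point error. If the frames contained spatial frequencies wider than the spacing between carriers, the sidebands would overlap and cross-talk would appear, which is the 1D analogue of the trade-off between sequence depth and spatial resolution.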

Fig. 7.

Frequency recognition algorithm for multiple exposures (FRAME) imaging based on spatial frequency division of the probe pulses [72]. (a) System schematic. (b) Sequence of reconstructed frames of a propagating femtosecond light pulse in a Kerr medium at 5 Tfps. The white dashed arc in the 600-fs frame indicates the pulse’s position at 0 fs. (c) Vertically summed intensity profiles of (b).

Akin to the space-division method (Section 2A), the generation of the probe pulse train using the spatial frequency division method does not rely on dispersion. Thus, the preservation of the laser pulse’s entire spectrum allows simultaneously maximizing the frame rate and the temporal resolution. The carrier frequency can be attached via either interference or intensity modulation. The former offers the ability of directly measuring the complex amplitude of the transient event. The latter allows easy adaptation to other ultrashort light sources, such as sub-nanosecond flash lamps [73] and LEDs [74, 75]. By integrating imaging modalities that can sense other photon tags (e.g., polarization), it is possible to achieve high-dimensional imaging of ultrafast events. The major limitation of the frequency-division method, similar to the space-division counterpart, is the limited sequence depth. To increase the sequence depth, either the FOV or spatial resolution must be sacrificed.
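The intensity-modulation variant of frequency division can be illustrated with a minimal numerical sketch. It assumes ideal sinusoidal patterns, four synthetic band-limited frames (Gaussian blobs), and a lock-in style demodulation with a hard circular low-pass filter. The image size, carrier frequency, filter cutoff, and all function names are hypothetical choices for illustration and are not taken from [72].

```python
import numpy as np

N = 128                      # image size in pixels (hypothetical)
CYCLES = 32                  # carrier cycles across the field of view (hypothetical)
ANGLES = (0, 45, 90, 135)    # one pattern orientation per sub-pulse

def gaussian_blob(cx, cy, sigma=8.0):
    """Band-limited test frame: a smooth blob centered at (cx, cy)."""
    y, x = np.mgrid[0:N, 0:N]
    return np.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))

def carrier_phase(angle_deg):
    """Phase map of a sinusoidal pattern with the given orientation."""
    y, x = np.mgrid[0:N, 0:N]
    theta = np.deg2rad(angle_deg)
    return 2 * np.pi * CYCLES * (x * np.cos(theta) + y * np.sin(theta)) / N

def multiplex(frames, phases):
    """Sum all intensity-modulated frames into one camera exposure."""
    return sum(f * 0.5 * (1 + np.cos(p)) for f, p in zip(frames, phases))

def demodulate(image, phase, cutoff=10):
    """Shift one carrier sideband to zero frequency, then low-pass filter."""
    spectrum = np.fft.fft2(image * np.exp(-1j * phase))
    f = np.fft.fftfreq(N) * N            # frequencies in cycles per field of view
    fx, fy = np.meshgrid(f, f)
    mask = fx ** 2 + fy ** 2 <= cutoff ** 2
    # Each sideband carries 1/4 of the frame amplitude, hence the factor 4.
    return 4 * np.abs(np.fft.ifft2(spectrum * mask))

# Four frames, each tagged with its own orientation, recorded in one snapshot.
frames = [gaussian_blob(40, 40), gaussian_blob(88, 40),
          gaussian_blob(40, 88), gaussian_blob(88, 88)]
phases = [carrier_phase(a) for a in ANGLES]
snapshot = multiplex(frames, phases)
recovered = [demodulate(snapshot, p) for p in phases]
max_error = max(np.abs(r - f).max() for r, f in zip(recovered, frames))
```

In this sketch the low-pass cutoff sets the trade-off noted in the text: each added carrier needs its own region of the spatial frequency plane, so a larger sequence depth leaves less bandwidth (spatial resolution) per frame.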

3. PASSIVE-DETECTION DOMAIN

A transient event can also be passively captured in a single measurement. In this domain, the ultra-high temporal resolution is provided by receive-only ultrafast detectors without the need for active illumination. The transient event is either imaged directly or reconstructed computationally. Compared with active detection, passive detection has unique advantages in imaging self-luminescent and color-selective transient scenes or distant scenes that are light-years away. In the following, we will present two passive-detection methods implemented in five representative techniques.

A. Direct imaging

The first method in the passive-detection domain is direct imaging using novel ultrafast 2D imaging sensors. In spite of limitations in pixel counts, sequence depth, or light throughput, this method has the highest technological maturity, as manifested in the successful commercialization and wide application of various products. Here, three representative techniques are discussed.

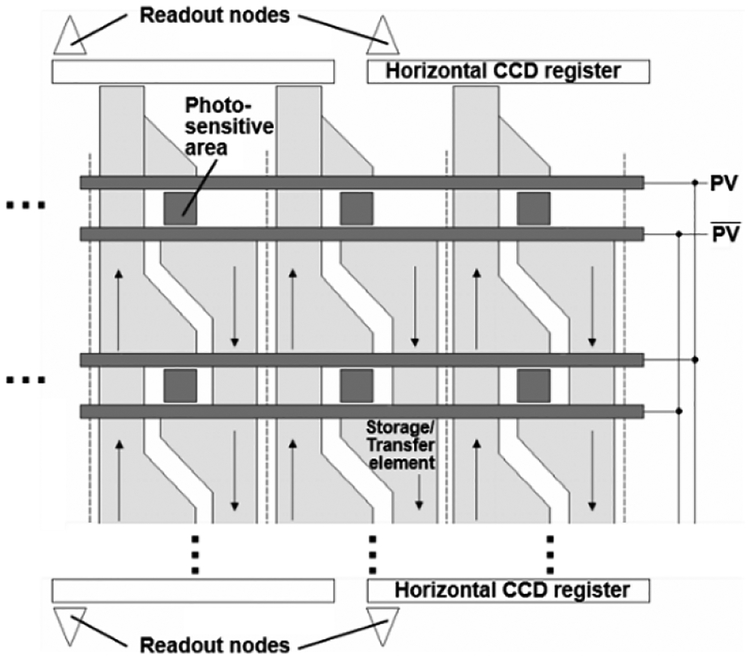

1. In-situ storage image sensor (ISIS) CCD

The ISIS CCD camera uses a novel charge transfer and storage structure to achieve ultra-high imaging speed. As an example, the ISIS CCD camera manufactured by DALSA [76] (Fig. 8) had 64×64 pixels, each with a 100-μm pitch. Each pixel contained a photosensitive area (varying from 10×10 μm² to 18×18 μm² in size, corresponding to a fill factor of 1–3%), two readout gates (PV and in Fig. 8), and 16 charge storage/transfer elements (arranged into two groups of eight elements, with opposite transfer directions). Transfer elements from adjacent pixels constituted continuous two-phase CCD registers in the vertical direction. Horizontal CCD registers with multiport readout nodes were distributed at the top and the bottom of the sensor. The two readout gates, operating out of phase in burst mode, transferred photo-generated charges alternately into the up and down groups of storage elements within each frame’s exposure time of 10 ns (corresponding to an imaging speed of 100 Mfps). During image capturing, charges generated by the odd- and even-numbered frames filled up the storage elements on both sides without being read out, because readout is the bottleneck in speed. After a full integration cycle (16 exposures in total), the sensor was reset while all time-stamped charges were read out in a conventional manner. As a result, the inter-frame time interval could be decreased to the transfer time of an image signal to the in-situ storage [77]. The ultrafast imaging ability of this ISIS-based CCD camera has been demonstrated by imaging a 4-ns pulsed LED light source [76].

Fig. 8.

Structure of DALSA’s in-situ storage image sensor (ISIS) CCD camera based on on-chip charge transfer and storage (adapted from [76]). The sensor has 64×64 pixels while six are shown here. Arrows indicate the charge transfer directions.

To the best of our knowledge, DALSA’s ISIS-based CCD camera is currently the fastest CCD camera. Based on mature fabrication technologies, this camera holds great potential for further development towards a consumer product. However, its limited number of pixels, low sequence depth, and extremely low fill factor currently place this camera far below most users’ requirements [77].

2. Ultrafast framing camera (UFC)

In general, the UFC is built upon the operation of beam splitting with ultrafast time gating [17]. In an example [78] schematically shown in Fig. 9(a), a pyramidal beam splitter with an octagonal base generated eight replicated images of the transient scene. Each image was projected onto a time-gated intensified CCD camera. The onset and width of the time gate for each intensified CCD camera were precisely controlled to capture successive temporal slices [i.e., 2D spatial (x, y) information at a given time point] of the transient event. Recent advances in ultrafast electronics have enabled inter-frame time intervals as short as 10 ps and a gate time of 200 ps [79]. The implementation of a new optical design has increased the sequence depth to 16 frames [80].

Fig. 9.

Ultrafast framing camera (UFC) based on beam splitting along with ultrafast time gating. (a) Schematic of a UFC [78]. (b) Schematic of shadowgraph imaging of cylindrical shock waves using a UFC (adapted from [84]). Inset: Configuration of the multi-layered target. (c) Sequence of captured shadowgraph frames showing the convergence and subsequent divergence of the shock waves generated by a laser excitation ring (red dashed circle in the first frame) in the target [84]. The shock front is indicated by the white arrows. Additional rings and structure instabilities are shown by the blue arrows and orange arrows, respectively.

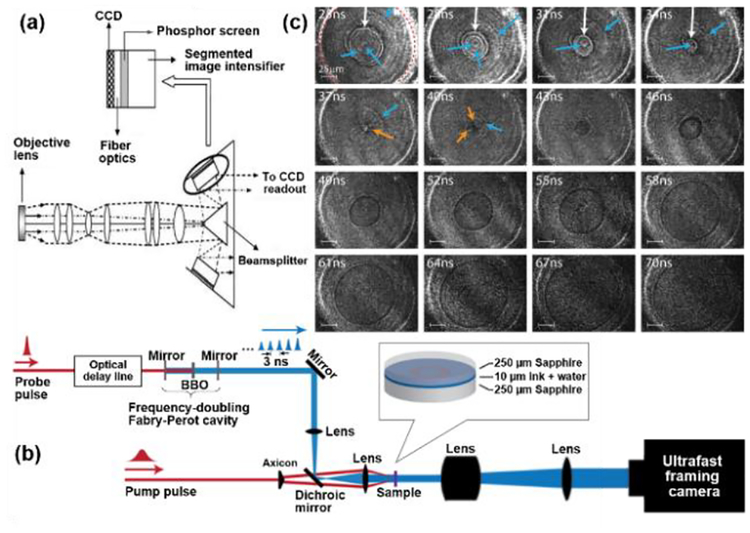

UFCs have been used in a wide range of applications, including ballistic testing, materials characterization, and fluid dynamics [81–83]. Figure 9(b) shows the setup for ultrafast shadowgraph imaging of cylindrically propagating shock waves using the UFC [84]. In the experiment, a multi-stage amplified Ti:sapphire laser generated pump and probe pulses. The pump pulse, with a 150-ps pulse width and a 1-mJ pulse energy, was converted into a ring shape (with a 150-μm inner diameter and an 8-μm width) by a 0.5° axicon and a 30-mm-focal-length lens. This ring pattern was used to excite a shock wave in the target. The probe pulse, compressed to a 130-fs pulse width, passed through a frequency-doubling Fabry-Perot cavity. The output 400-nm probe pulse train was directed through the target, a 10-μm-thick ink-water layer sandwiched between two sapphire wafers. The transient density variations of the shock wave altered the refractive index in the target, refracting the probe pulses. The transmitted probe pulses were imaged onto a UFC (Specialised Imaging, Inc.) that can capture 16 frames (1360×1024 pixels) at an imaging speed of 333 Mfps and a temporal resolution of 3 ns [80].

The single-shot ultrafast shock imaging has allowed tracking non-reproducible geometric instability [Fig. 9(c)]. The sequence revealed asymmetric structures of converging and diverging shock waves. While imperfect circles from the converging shock front were observed, the diverging wave maintained a nearly circular structure. The precise evolution was different in each shock event, which was characteristic of converging shock waves [85]. In addition, faint concentric rings [indicated by blue arrows in Fig. 9(c)] were seen in these events. Tracking these faint features has allowed detailed studies of shock behavior (e.g., substrate shocks and coupled wave interactions) [84].

3. High-speed sampling camera (HISAC)

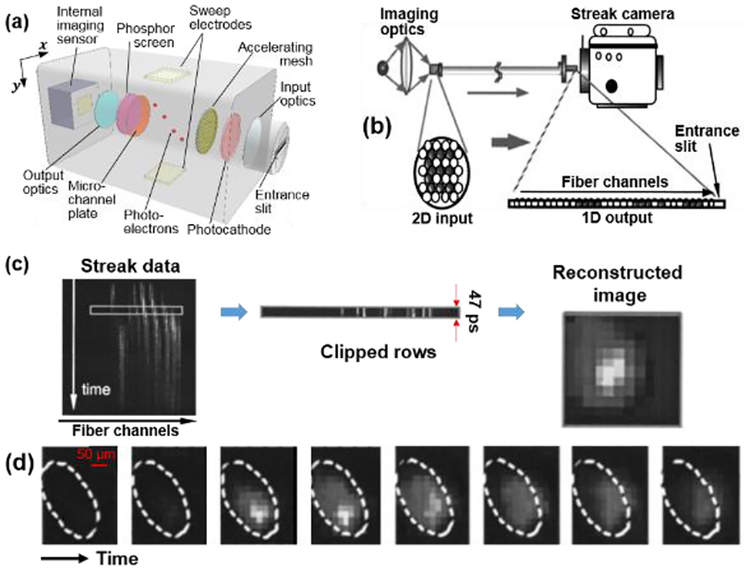

Streak cameras are ultrafast detectors with up to femtosecond temporal resolutions [22]. In the conventional operation [Fig. 10(a)], incident photons first pass through a narrow (typically 50–200 μm wide) entrance slit [along the x-axis in Fig. 10(a)]. The image of this entrance slit is formed on a photocathode, where photons are converted into photoelectrons via the photoelectric effect. These photoelectrons are accelerated by a pulling voltage and then pass between a pair of sweep electrodes. A linear voltage ramp is applied to the electrodes so that photoelectrons are deflected to different vertical positions [along the y-axis in Fig. 10(a)] according to their times of arrival. The deflected photoelectrons are amplified by a micro-channel plate and then converted back to photons by bombarding a phosphor screen. The phosphor screen is imaged onto an internal imaging sensor (e.g., a CCD or a CMOS camera), which records the time course with a 1D FOV [i.e., an (x, t) image at a specific y position]. Because the streak camera’s operation requires using one spatial axis on the internal imaging sensor to record the temporal information, the narrow entrance slit confines its FOV. Therefore, the conventional streak camera is a 1D ultrafast imaging device.

Fig. 10.

High-speed sampling camera (HISAC) based on remapping the scene from 2D to 1D in space and streak imaging. (a) Schematic of a streak camera. (b) Schematic of a high-speed sampling camera (HISAC) system. (c) Formation process of individual frames from the streak data. (d) Sequence showing shock wave breakthrough. The time interval between frames is ~336 ps. The laser focus is outlined by the white dashed circle. (b)-(d) are adapted from [87].

To overcome this limitation, various dimension-reduction imaging techniques [86–89] have been developed to allow direct imaging of a 2D transient scene by a streak camera. In general, this imaging modality maps a 2D image into a line to interface with the streak camera’s entrance slit, so that the streak camera can capture an (x, y, t) datacube in a single shot. As an example, Fig. 10(b) shows the system schematic of the high-speed sampling camera (HISAC) [87]. An optical telescope imaged the transient scene to a 2D-1D optical fiber bundle. The input end of this fiber bundle was arranged as a 2D fiber array (15×15) [90], which sampled the 2D spatial information of the relayed image. The 2D fiber array was remapped to a 1D configuration (1×225) at the output end that interfaced with a streak camera’s entrance slit. The ultrashort temporal resolution, provided by the streak camera, was 47 ps, corresponding to an imaging speed of 21.3 Gfps. A 2D frame at a specific time point was recovered by reshaping one row of the streak image to 2D according to the optical fiber mapping [Fig. 10(c)]. In total, the sequence depth was up to 240 frames.
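The 2D-to-1D remapping and the row-by-row frame formation can be sketched in a few lines. The 15×15 array size and the 240-frame depth follow the text, whereas the fiber ordering (`slit_to_pixel`, here a random permutation) and the function names are hypothetical, since the actual mapping of the bundle in [87] is not given here.

```python
import numpy as np

ROWS, COLS = 15, 15     # 2D fiber array at the input end (from the text)

def scene_to_streak(cube, slit_to_pixel):
    """Forward model: each time slice is remapped to a line on the slit."""
    n_t = cube.shape[0]
    return cube.reshape(n_t, ROWS * COLS)[:, slit_to_pixel]

def streak_to_frames(streak, slit_to_pixel):
    """Reshape every row (one time point) of the streak image to a 2D frame."""
    n_t = streak.shape[0]
    flat = np.empty((n_t, ROWS * COLS), dtype=streak.dtype)
    flat[:, slit_to_pixel] = streak      # undo the fiber remapping
    return flat.reshape(n_t, ROWS, COLS)

rng = np.random.default_rng(0)
slit_to_pixel = rng.permutation(ROWS * COLS)   # hypothetical fiber mapping
cube = rng.random((240, ROWS, COLS))           # synthetic (t, y, x) datacube
streak = scene_to_streak(cube, slit_to_pixel)  # shape (240, 225)
frames = streak_to_frames(streak, slit_to_pixel)
```

Because the mapping is a fixed permutation, frame formation is a lossless lookup rather than a reconstruction; the spatial sampling is limited only by the number of fibers.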

HISAC has been implemented in many imaging applications, including heat propagation in solids [91], electron energy transport [92], and plasma dynamics [93]. As an example, Fig. 10(d) shows the dynamic spatial dependence of ablation pressure imaged by HISAC [87]. A 100-J, 1.053-μm laser pulse was loosely focused on a 10-μm-thick hemispherical plastic target. The sequence shows the shock breakthrough process. It revealed that the shock heating decreased with the incident angle, and the breakthrough speed was faster for smaller incident angles. This non-uniformity was ascribed to the angular dependence of pressure generated by the laser absorption.

The dimension-reduction-based streak imaging has an outstanding sequence depth. In addition, its temporal resolution is not bounded by the response time in electronics, which permits hundreds of Gfps to even Tfps imaging speed. However, its biggest limitation is the low number of fibers in the bundle, which produces either a low spatial resolution or a small FOV.

B. Reconstruction imaging

Despite the recent progress, existing ultrafast detectors, restricted by their operating principles, still have limitations, such as imaging FOV, pixel count, and sequence depth. To overcome these limitations, novel computational techniques are thus brought in. Of particular interest among existing computational techniques is compressed sensing (CS). In conventional imaging, the number of measurements is required to be equal to the number of pixels (or voxels) to precisely reproduce a scene. In contrast, CS allows underdetermined reconstruction of sparse scenes. The underlying rationale is that natural scenes possess sparsity when expressed in an appropriate space. In this case, many independent bases in the chosen space convey little to no useful information. Therefore, the number of measurements can be substantially compressed without excessive loss of image fidelity [94]. Here, two representative techniques are discussed.
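As a minimal illustration of underdetermined reconstruction of a sparse signal, the sketch below recovers a 200-element vector with 5 nonzero entries from 80 random linear measurements. It uses the basic iterative shrinkage/thresholding algorithm (ISTA) with an l1 penalty, chosen for brevity; the problem sizes, the Gaussian measurement matrix, and the regularization weight are all hypothetical and do not correspond to any specific system in this review.

```python
import numpy as np

def soft_threshold(x, t):
    """Proximal operator of the l1 norm."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)

def ista(A, y, beta, n_iter=2000):
    """Minimize 0.5 * ||y - A x||_2^2 + beta * ||x||_1 by ISTA."""
    lipschitz = np.linalg.norm(A, 2) ** 2   # largest eigenvalue of A^T A
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        gradient = A.T @ (A @ x - y)
        x = soft_threshold(x - gradient / lipschitz, beta / lipschitz)
    return x

rng = np.random.default_rng(0)
n, m, k = 200, 80, 5                 # unknowns, measurements, nonzeros
x_true = np.zeros(n)
support = rng.choice(n, size=k, replace=False)
x_true[support] = rng.uniform(1.0, 2.0, size=k) * rng.choice([-1.0, 1.0], size=k)

A = rng.standard_normal((m, n)) / np.sqrt(m)   # random measurement matrix
y = A @ x_true                                 # 80 measurements of 200 unknowns
x_hat = ista(A, y, beta=0.01)
relative_error = np.linalg.norm(x_hat - x_true) / np.linalg.norm(x_true)
```

The same idea carries over to images when the sparsity is imposed in a transform domain or through a gradient-based regularizer instead of directly on the pixel values.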

1. Compressed ultrafast photography (CUP)

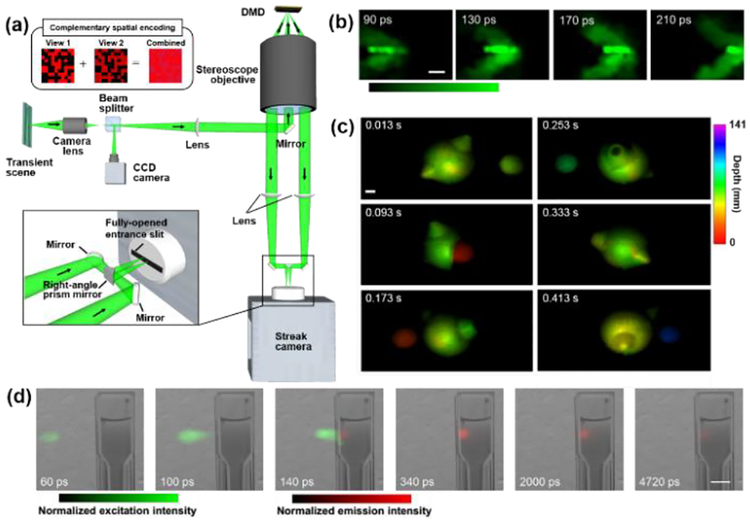

CUP synergistically combines CS with streak imaging [95]. Fig. 11(a) shows the schematic of the lossless-encoding CUP system [96, 97]. In data acquisition, the dynamic scene, denoted as I, was first imaged by a camera lens. Following the intermediate image plane, a beam splitter reflected half of the light to an external CCD camera. The other half of the light passed through the beam splitter and was imaged to a digital micromirror device (DMD) through a 4f system consisting of a tube lens and a stereoscope objective. The DMD, as a binary amplitude spatial light modulator [98], spatially encoded transient scenes with a pseudo-random binary pattern. Because each DMD pixel can be tilted to either +12° (ON state) or −12° (OFF state) from its surface normal, two spatially encoded scenes, encoded by complementary patterns [see the upper inset in Fig. 11(a)], were generated after the DMD. The light beams from both channels were collected by the same stereoscope objective and passed through tube lenses, planar mirrors, and a right-angle prism mirror to form two images at separate horizontal positions on a fully opened entrance port (5 mm×17 mm). Inside the streak camera, the spatially encoded scenes experienced temporal shearing and spatiotemporal integration and were finally recorded by an internal CCD camera in the streak camera.

Fig. 11.

Compressed ultrafast photography (CUP) for single-shot real-time ultrafast optical imaging based on spatial encoding and 2D streaking followed by compressed-sensing reconstruction. (a) Schematic of the lossless-encoding CUP system [97]. DMD, digital micromirror device. Upper inset: Illustration of complementary spatial encoding. Lower inset: Close-up of the configuration before the streak camera’s entrance port (black box). (b) CUP of a propagating photonic Mach cone [96]. (c) CUP of dynamic volumetric imaging [104]. (d) CUP of spectrally resolved pulse-laser-pumped fluorescence emission [95]. Scale bar: 10 mm.

For image reconstruction, the acquired snapshots from the external CCD camera and the streak camera, denoted as E, were used as inputs for the two-step iterative shrinkage/thresholding algorithm [99], which solved the minimization problem of $\min_{I}\left\{\frac{1}{2}\|E - OI\|_2^2 + \beta\Phi(I)\right\}$. Here O is a joint measurement operator that accounts for all operations in data acquisition, $\|\cdot\|_2$ denotes the l2 norm, Φ(I) is a regularization function that promotes sparsity in the dynamic scene, and β is the regularization parameter. The solution to this minimization problem can be stably and accurately recovered, even with a highly compressed measurement [94, 100]. For the original CUP system [95], the reconstruction produced up to 350 frames in a movie with an imaging speed of up to 100 Gfps and with an effective exposure time of ~50 ps [101]. Each frame contained 150×150 pixels. The lossless-encoding CUP (LLE-CUP) system, while maintaining the imaging speed at 100 Gfps, improved the numbers of (x, y, t) pixels in the datacube to 330×200×300 [96]. Recently, a trillion-frame-per-second CUP (T-CUP) system, employing a femtosecond streak camera [102], has achieved an imaging speed of 10 Tfps, an effective exposure time of 0.58 ps, numbers of (x, y) pixels of 450×150 per frame, and a sequence depth of 350 frames [103].
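A simplified, single-channel version of the measurement operator O can be written out to make the three acquisition steps concrete: spatial encoding by a binary mask, temporal shearing by one sensor row per frame, and spatiotemporal integration on the sensor. The sketch below is an assumption-laden toy model (one encoded view only, integer-pixel shearing, hypothetical array sizes and function names) and not the implementation used in [95–97]. The adjoint is included because iterative solvers for the minimization problem typically need both O and its transpose.

```python
import numpy as np

def cup_forward(scene, mask):
    """Encode, shear, and integrate a (n_t, n_y, n_x) scene into one snapshot."""
    n_t, n_y, n_x = scene.shape
    snapshot = np.zeros((n_y + n_t - 1, n_x))
    for t in range(n_t):
        # Frame t is masked, shifted down by t rows, and accumulated.
        snapshot[t:t + n_y, :] += mask * scene[t]
    return snapshot

def cup_adjoint(snapshot, mask, n_t):
    """Transpose of cup_forward: un-shear each frame and re-apply the mask."""
    n_y = mask.shape[0]
    return np.stack([mask * snapshot[t:t + n_y, :] for t in range(n_t)])

rng = np.random.default_rng(0)
n_t, n_y, n_x = 20, 32, 32                     # hypothetical datacube size
mask = rng.integers(0, 2, size=(n_y, n_x))     # pseudo-random binary pattern
scene = rng.random((n_t, n_y, n_x))

snapshot = cup_forward(scene, mask)            # streak-camera view
time_integrated = scene.sum(axis=0)            # unsheared external-camera view
compression_ratio = scene.size / snapshot.size

# Dot-product test confirming that cup_adjoint is the transpose of cup_forward.
probe = rng.random(snapshot.shape)
lhs = np.vdot(snapshot, probe)
rhs = np.vdot(scene, cup_adjoint(probe, mask, n_t))
```

With these toy dimensions, 20×32×32 unknowns are recorded in 51×32 sensor pixels, a compression of roughly 12.5:1 for the streak view alone.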

CUP has been used for a number of applications. First, it enabled the first capture of a scattering-induced photonic Mach cone [96]. A thin scattering plate assembly contained an air (mixed with dry ice) source tunnel that was sandwiched between two silicone-rubber (mixed with aluminum oxide powder) display panels. When an ultrashort laser pulse was launched into the source tunnel, the scattering events generated secondary sources of light that advanced superluminally relative to the light propagating in the display panels, forming a scattering-induced photonic Mach cone. CUP imaged the formation and propagation of the photonic Mach cone at 100 Gfps [Fig. 11(b)]. Second, by leveraging the ultrashort temporal resolution and the spatial encoding, CUP has enabled single-shot encrypted volumetric imaging [104]. Through the sequential imaging of the CCD camera inside the streak camera, high-speed volumetric imaging at 75 volumes per second was demonstrated by using a two-ball object rotating at 150 revolutions per minute [Fig. 11(c)]. Finally, CUP has achieved single-shot spectrally resolved fluorescence lifetime mapping [95]. As shown in Fig. 11(d), a Rhodamine 6G dye solution placed in a cuvette was excited by a single 7-ps laser pulse. The CUP system clearly captured both the excitation and the fluorescence emission processes. The movie has also allowed quantification of the fluorescence lifetime.

CUP exploits the spatial encoding and temporal shearing to tag each frame with a spatiotemporal “barcode”, which allows an (x, y, t) datacube to be compressively recorded as a snapshot with spatiotemporal mixing. This salient advantage overcomes the FOV limitation of conventional streak imaging, converting the streak camera into a 2D ultrafast optical detector. In addition, CUP’s recording paradigm allows fitting more frames onto the CCD surface area, which significantly improves the sequence depth while maintaining the inherent imaging speed of the streak camera. Compared with LIF holography, CUP does not require the presence of a reference pulse, making it especially suitable for imaging incoherent light events (e.g., fluorescence emission). However, the spatiotemporal mixing in CUP trades the spatial and temporal resolutions of a streak camera for the added spatial dimension.

2. Multiple-aperture compressed sensing (MA-CS) CMOS

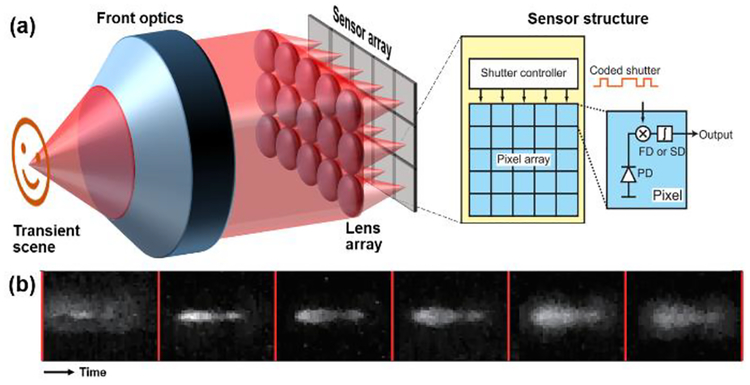

CS has also been implemented in the time domain with a CMOS sensor. Figure 12(a) shows the structure of the MA-CS CMOS sensor [105]. In image acquisition, a transient scene, I, first passed through the front optics. A 5×3 lens array (0.72 mm × 1.19 mm pitch size and 3-mm focal length), sampling the aperture plane, optically generated a total of 15 replicated images, each of which was formed onto an imaging sensor (64×108 pixels in size). A dynamic shutter, encoded by a unique binary random code sequence for each sensor, modulated the temporal integration process [106, 107]. The temporally encoded transient scene was spatiotemporally integrated on the sensor. The acquired data from all sensors, denoted by E, were fed into a compressed-sensing-based reconstruction algorithm [108, 109] that solved the inverse problem of $\min_{I}\sum_{i}\|D_i I\|_p$ subject to $AI = E$. Here, $D_i I$ is the discrete gradient of I at pixel i, $\|\cdot\|_p$ means the p norm (p = 1 or 2), and A is the observation matrix. With the prior information about the 15 temporal codes and the assumption that all 15 images are spatially identical, the spatiotemporal datacube was recovered. The reconstructed frame rate, determined by the maximum operation frequency of the shutter controller, was 200 Mfps, corresponding to a temporal resolution of 5 ns. Based on the captured 15 images, 32 frames could be recovered in the reconstructed movie.
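The temporal encoding can be made concrete with a small forward-model sketch. The 15 apertures, 32 frames, and 64×108 sub-image size are taken from the text; the random codes, the test scene, the assignment of 64 and 108 to rows and columns, and the function name are hypothetical. The sketch covers only the acquisition step (the action of the observation matrix A), not the gradient-regularized reconstruction.

```python
import numpy as np

N_APERTURES, N_FRAMES = 15, 32      # values quoted in the text
HEIGHT, WIDTH = 64, 108             # pixels per sub-image (orientation assumed)

def macs_forward(scene, codes):
    """Integrate a (N_FRAMES, H, W) scene under each aperture's shutter code.

    codes has shape (N_APERTURES, N_FRAMES) with entries 0 (shutter closed)
    or 1 (shutter open); all apertures are assumed to see the same scene.
    """
    return np.einsum("kt,tyx->kyx", codes, scene)

rng = np.random.default_rng(1)
codes = rng.integers(0, 2, size=(N_APERTURES, N_FRAMES))   # hypothetical codes

# Test scene: a single flash in one frame, dark otherwise.
scene = np.zeros((N_FRAMES, HEIGHT, WIDTH))
flash_frame = 7
scene[flash_frame] = rng.random((HEIGHT, WIDTH))

measurements = macs_forward(scene, codes)       # shape (15, 64, 108)
compression_ratio = N_FRAMES / N_APERTURES      # 32 frames from 15 images
```

For the single-flash scene, aperture k records the flash only if its code is 1 at that frame, so the pattern of bright and dark sub-images across the 15 apertures identifies when the flash occurred.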

Fig. 12.

Multiple-aperture compressed-sensing (MA-CS) CMOS sensor based on temporally encoding each of the image replicas (adapted from [105]). (a) System schematic. PD, photodiode; FD, floating diffusion; SD, storage diode. (b) Temporally resolved frames of laser-pulse-induced plasma emission. The inter-frame time interval is 5 ns.

This sensor has been implemented in time-of-flight LIDAR [110] and plasma imaging [105]. As an example, Fig. 12(b) shows the plasma emission induced by an 8-ns, 532-nm laser pulse. The MA-CS CMOS sensor captured these dynamics in a period of 30 ns.

The implementation of the MA recording and CS overcomes the imaging speed limit in the conventional CMOS sensor. In addition, built upon the mature CMOS fabrication technology, the MA-CS CMOS sensor has great potential in increasing the pixel count. Moreover, different from the UFCs, a series of temporal gates, instead of one, was applied to the sensor, which significantly increased the light throughput. Finally, compared with the ISIS CCD camera, the fill factor of the MA-CS CMOS sensor has been improved to 16.7%. However, the MA recording scheme may face technical difficulties in scalability and parallax, which poses challenges in improving the sequence depth.

4. SUMMARY AND OUTLOOK

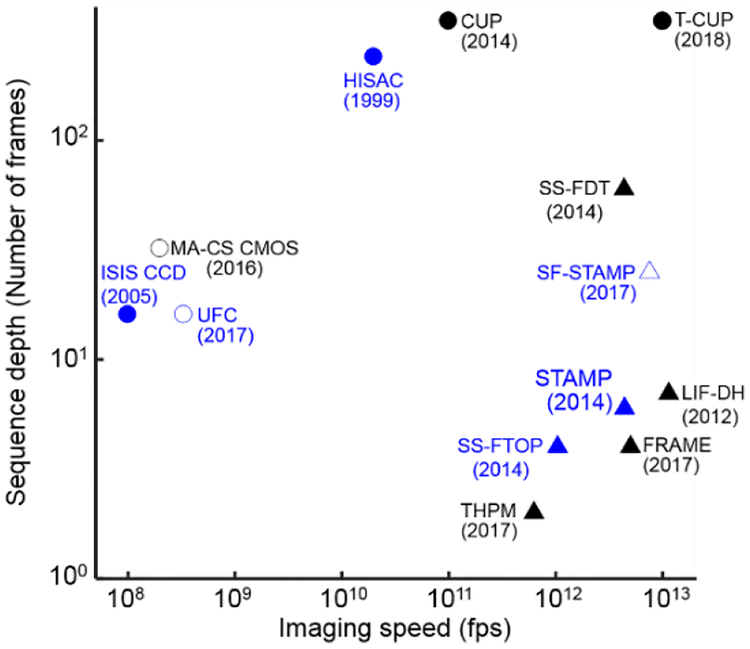

In this mini-review, based on the illumination requirement, we categorize single-shot ultrafast optical imaging into two general domains. According to specific image acquisition and reconstruction strategies, these domains are further divided into six methods. A total of 11 representative techniques have been surveyed from aspects of their underlying principles, system schematics, specifications, applications, as well as their advantages and limitations. This information is summarized in Table 1. In addition, Fig. 13 compares the sequence depths versus the imaging speeds of these techniques. In practice, researchers could use this table and figure as general guidelines to assess the fortes and bottlenecks, and select the most suitable technique for their specific studies.

Table 1.

Comparative summary of representative single-shot ultrafast optical imaging techniques

| Imaging technique | Detection domain | Image formation | Imaging speed | Temporal resolution | Number of (x, y) pixels | Sequence depth | Light throughput | Selected applications |
|---|---|---|---|---|---|---|---|---|
| SS-FTOP [41] | Active | Direct | 1.04 Tfps | 276 fs | 41×60 | 4 | High | Laser pulse characterization [41] |
| LIF-DH [50] | Active | Reconstruction | 11.4 Tfps | 88 fs | 512×512 | 7 | High | Laser pulse characterization [50] |
| SS-FDT [53] | Active | Reconstruction | 4.35 Tfps | 3 ps | 128×128 | 60 | High | Nonlinear optical physics [53] |
| STAMP [61] | Active | Direct | 4.4 Tfps | 733 fs | 450×450 | 6 | High | Laser plasma; Phonon propagation [61] |
| SF-STAMP [64] | Active | Direct | 7.52 Tfps | 465 fs | 400×300 | 25 | Low[a] | Phase transition in alloys [64] |
| THPM [70] | Active | Reconstruction | 625 Mfps | 1.6 ns | 2048×2048 | 2 | High | Laser-induced damage [70] |
| FRAME [72] | Active | Reconstruction | 5 Tfps | 200 fs | 1002×1004 | 4 | High | Laser pulse characterization [72] |
| ISIS CCD [76] | Passive | Direct | 100 Mfps | 10 ns | 64×64 | 16 | High | Laser pulse characterization [76] |
| UFC [78] | Passive | Direct | 333 Mfps | 3 ns | 1360×1024 | 16 | Low[b] | Fluid dynamics [81]; Material characterization [82]; Shock wave dynamics [84] |
| HISAC [87] | Passive | Direct | 21.3 Gfps | 47 ps | 15×15 | 240 | High | Laser ablation [87]; Electron energy transport [92]; Plasma dynamics [93] |
| CUP [95] | Passive | Reconstruction | 100 Gfps | 50 ps | 150×150 | 350 | High | Fluorescence lifetime mapping [95]; Shock wave dynamics [96]; Time-of-flight volumetric imaging [104] |
| T-CUP [103] | Passive | Reconstruction | 10 Tfps | 0.58 ps | 450×150 | 350 | High | Laser pulse characterization [103] |
| MA-CS CMOS [105] | Passive | Reconstruction | 200 Mfps | 5 ns | 64×108 | 32 | Medium[c] | Plasma dynamics [105]; Time-of-flight LIDAR [110] |

[a] The light throughput can be approximately estimated by the reciprocal of the sequence depth.

[b] The light throughput is determined by τg/τs, where τg and τs are the width of ultrafast gating and that of the transient event, respectively.

[c] 50% in the current MA-CS CMOS configuration. The specific light throughput depends on the ratio between “ON” and “OFF” pixels in temporal binary random encoding.
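The three throughput estimates in the notes beneath Table 1 amount to simple ratios, sketched below. The sequence depth of 25 (SF-STAMP) and the 3-ns gate (UFC) come from the table; the 48-ns event duration and the example shutter code are hypothetical values chosen only to exercise the formulas, and the function names are illustrative.

```python
def throughput_from_sequence_depth(sequence_depth):
    """Approximate throughput as the reciprocal of the sequence depth."""
    return 1.0 / sequence_depth

def throughput_from_gating(gate_width, event_duration):
    """Throughput as the ratio of gate width to transient-event duration."""
    return gate_width / event_duration

def throughput_from_code(code):
    """Throughput as the fraction of ON entries in a binary temporal code."""
    return sum(code) / len(code)

sf_stamp = throughput_from_sequence_depth(25)       # 25 frames -> 4%
ufc = throughput_from_gating(3e-9, 48e-9)           # 3-ns gate, assumed 48-ns event
ma_cs = throughput_from_code([1, 0] * 16)           # assumed code with half ON
```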

Fig. 13.

Comparison of representative single-shot ultrafast optical imaging techniques in imaging speeds and sequence depths. Triangles and circles represent the active- and passive-detection domains, respectively. Blue and black colors represent the direct and reconstruction imaging methods, respectively. Solid and hollow marks represent high and low (including medium) light throughputs, respectively. The numbers in the parentheses are the years in which the techniques were published. CUP, Compressed ultrafast photography; T-CUP, Trillion-frames-per-second CUP; FRAME, Frequency recognition algorithm for multiple exposures; HISAC, High-speed sampling camera; ISIS CCD, In-situ storage image sensor CCD; LIF-DH, Light-in-flight recording by digital holography; MA-CS CMOS, Multi-aperture compressed sensing CMOS; SS-FDT, Single-shot Fourier-domain tomography; SS-FTOP, Single-shot femtosecond time-resolved optical polarimetry; STAMP, Sequentially timed all-optical mapping photography; SF-STAMP, Spectral-filtering STAMP; THPM, Time-resolved holographic polarization microscopy; UFC, Ultrafast framing camera.

Single-shot ultrafast optical imaging will undoubtedly continue its fast evolution in the future. This interdisciplinary field is built upon the areas of laser science, optoelectronics, nonlinear optics, imaging theory, computational techniques, and information theory. Fast progress in these disciplines will create new imaging techniques and will improve the performance of existing techniques, both of which, in turn, will open new avenues for a wide range of laboratory and field applications [111–114]. In the following, we outline four prospects in system development.

First, the recent development of a number of emerging techniques suggests intriguing opportunities for significantly improving the specifications of existing single-shot ultrafast optical imaging modalities in the near future. For example, implementing the dual-echelon-based probing schemes [115, 116] could easily improve the sequence depth by approximately one order of magnitude for SS-FTOP (Section 2A). In addition, ptychographic ultrafast imaging [117] has been recently demonstrated in simulation. Spectral multiplexing tomography [118] has shown ultrafast imaging ability experimentally on single frame recording. Both techniques, having solved the issue of limited probing angles, could be implemented in SS-FDT (Section 2B) to reduce reconstruction artifacts and thus to improve spatial and temporal resolutions. As another example, time-stretching microscopy with GHz-level line rates has been demonstrated [119, 120]. Integrating a 2D disperser in these systems could result in new wavelength-division-based 2D ultrafast imaging (Section 2C). Finally, a high-speed image rotator [121] could increase the sequence depth of FRAME imaging (Section 2D). For the passive-detection domain, the highest speed limit of silicon sensors, in theory, has been predicted to be 90.1 Gfps [122]. Currently, a number of Gfps-level sensors are under development [77, 123, 124]. This recent progress could significantly improve the imaging FOV, pixel count, and sequence depth in ultrafast CCD and CMOS sensors (Section 3A). In addition, the advent of many femtosecond streak imaging techniques [125] could support the pursuit of the imaging speed of CUP (Section 3B) further toward hundreds of Tfps levels.

Second, computational techniques will play a more significant role in single-shot ultrafast optical imaging. Optical realization of mathematical models can transfer some unique advantages of these models into ultrafast optical imaging systems and has thus alleviated hardware limitations in, for example, the imaging speed of MA-CS CMOS (Section 3B). In addition, a number of established image reconstruction algorithms used in tomography have been grafted to the spatiotemporal domain [e.g., the algebraic reconstruction technique for SS-FDT (Section 2B)]. It is therefore believed that this trend will continue, and more algorithms used in x-ray CT, magnetic resonance imaging, and ultrasound imaging may be applied in newly developed ultrafast optical imaging instruments. Finally, it is predicted that machine learning techniques [126, 127] will be implemented in single-shot ultrafast optical imaging to improve image reconstruction speed and accuracy.

Third, high-dimensional single-shot ultrafast optical imaging [128] will gain more attention. Many transient events may not be reflected by light intensity. Therefore, the ability to measure other optical contrasts, for example, phase and polarization, will significantly enhance the ability and the application scope of ultrafast optical imaging. A few techniques [e.g., SS-FTOP (Section 2A) and THPM (Section 2D)] have already explored this path. It is envisaged that imaging modalities that sense other optical contrasts (e.g., volumography, spectroscopy, and light-field photography) will be increasingly integrated into ultrafast optical imaging.

Finally, continuous streaming will be one of the ultimate milestones in single-shot ultrafast optical imaging. Working in the stroboscopic mode, current single-shot ultrafast imaging techniques still require precise synchronization in imaging transient events, falling short in visualizing asynchronous processes. Towards this goal, innovations in large-format imaging sensors [129], high-speed interfaces [130], and ultrafast optical waveform recorders [131] can be leveraged. In addition, intelligent selection, reconstruction, and management of big data are also indispensable [132].

Acknowledgments

Funding: National Institutes of Health (NIH Director’s Pioneer Award DP1 EB016986 and NIH Director’s Transformative Research Award R01 CA186567); Natural Sciences and Engineering Research Council (NSERC) of Canada (RGPIN-2017–05959 and RGPAS-507845–2017); Fonds de Recherche du Québec–Nature et Technologies (FRQNT) (2019-NC-252960).

REFERENCES

- 1.Allen JB and Larry RF, Electrochemical methods: fundamentals and applications (John Wiley & Sons, Inc, 2001), pp. 156–176. [Google Scholar]

- 2.Gorkhover T, Schorb S, Coffee R, Adolph M, Foucar L, Rupp D, Aquila A, Bozek JD, Epp SW, Erk B, Gumprecht L, Holmegaard L, Hartmann A, Hartmann R, Hauser G, Holl P, Hömke A, Johnsson P, Kimmel N, Kühnel K-U, Messerschmidt M, Reich C, Rouzée A, Rudek B, Schmidt C, Schulz J, Soltau H, Stern S, Weidenspointner G, White B, Küpper J, Strüder L, Schlichting I, Ullrich J, Rolles D, Rudenko A, Möller T, and Bostedt C, “Femtosecond and nanometre visualization of structural dynamics in superheated nanoparticles,” Nat. Photon 10, 93–97 (2016). [Google Scholar]

- 3.Imada M, Fujimori A, and Tokura Y, “Metal-insulator transitions,” Rev. Mod. Phys 70, 1039 (1998). [Google Scholar]

- 4.Solli DR, Ropers C, Koonath P, and Jalali B, “Optical rogue waves,” Nature 450, 1054–1057 (2007). [DOI] [PubMed] [Google Scholar]

- 5.Jalali B, Solli DR, Goda K, Tsia K, and Ropers C, “Real-time measurements, rare events and photon economics,” Eur. Phys. J. Spec. Top 185, 145–157 (2010). [Google Scholar]

- 6.Poulin PR and Nelson KA, “Irreversible organic crystalline chemistry monitored in real time,” Science 313, 1756–1760 (2006). [DOI] [PubMed] [Google Scholar]

- 7.Tuchin VV, “Methods and Algorithms for the Measurement of the Optical Parameters of Tissues,” in Tissue optics: light scattering methods and instruments for medical diagnosis (SPIE press; Bellingham, 2015), pp. 303–304. [Google Scholar]

- 8.Šiaulys N, Gallais L, and Melninkaitis A, “Direct holographic imaging of ultrafast laser damage process in thin films,” Opt. Lett 39, 2164–2167 (2014). [DOI] [PubMed] [Google Scholar]

- 9. Sciamanna M and Shore KA, “Physics and applications of laser diode chaos,” Nat. Photon. 9, 151 (2015).

- 10. Kodama R, Norreys P, Mima K, Dangor A, Evans R, Fujita H, Kitagawa Y, Krushelnick K, Miyakoshi T, and Miyanaga N, “Fast heating of ultrahigh-density plasma as a step towards laser fusion ignition,” Nature 412, 798 (2001).

- 11. Li Z, Tsai H-E, Zhang X, Pai C-H, Chang Y-Y, Zgadzaj R, Wang X, Khudik V, Shvets G, and Downer MC, “Single-shot visualization of evolving plasma wakefields,” AIP Conf. Proc. 1777, 040010 (2016).

- 12. Bradley D, Bell P, Kilkenny J, Hanks R, Landen O, Jaanimagi P, McKenty P, and Verdon C, “High-speed gated x-ray imaging for ICF target experiments,” Rev. Sci. Instrum. 63, 4813–4817 (1992).

- 13. Fuller P, “An introduction to high speed photography and photonics,” Imaging Sci. J. 57, 293–302 (2009).

- 14. Camm DM, “World’s most powerful lamp for high-speed photography,” in 20th International Congress on High Speed Photography and Photonics, (SPIE, 1993), 6.

- 15. Fermann ME, Galvanauskas A, and Sucha G, Ultrafast lasers: technology and applications (CRC Press, 2002), Vol. 80.

- 16. Weiner AM, “Ultrafast optical pulse shaping: A tutorial review,” Opt. Commun. 284, 3669–3692 (2011).

- 17. Patwardhan SV and Culver JP, “Quantitative diffuse optical tomography for small animals using an ultrafast gated image intensifier,” J. Biomed. Opt. 13, 011009 (2008).

- 18. Kirmani A, Venkatraman D, Shin D, Colaço A, Wong FN, Shapiro JH, and Goyal VK, “First-photon imaging,” Science 343, 58–61 (2014).

- 19. Ishikawa H, Ultrafast all-optical signal processing devices (John Wiley & Sons, 2008).

- 20. Sato K, Saitoh E, Willoughby A, Capper P, and Kasap S, Spintronics for next generation innovative devices (John Wiley & Sons, 2015).

- 21. El-Desouki M, Jamal Deen M, Fang Q, Liu L, Tse F, and Armstrong D, “CMOS image sensors for high speed applications,” Sensors 9, 430–444 (2009).

- 22. Hamamatsu KK, “Guide to Streak Cameras” (2008), retrieved 2018/08/13, https://www.hamamatsu.com/resources/pdf/sys/SHSS0006E_STREAK.pdf.

- 23. Donoho DL, “Compressed sensing,” IEEE Trans. Inf. Theory 52, 1289–1306 (2006).

- 24. Candes EJ and Wakin MB, “An Introduction To Compressive Sampling,” IEEE Signal Process. Mag. 25, 21–30 (2008).

- 25. Feurer T, Vaughan JC, and Nelson KA, “Spatiotemporal coherent control of lattice vibrational waves,” Science 299, 374–377 (2003).

- 26. Fieramonti L, Bassi A, Foglia EA, Pistocchi A, D’Andrea C, Valentini G, Cubeddu R, De Silvestri S, Cerullo G, and Cotelli F, “Time-gated optical projection tomography allows visualization of adult zebrafish internal structures,” PLoS One 7, e50744 (2012).

- 27. Balistreri M, Gersen H, Korterik JP, Kuipers L, and Van Hulst N, “Tracking femtosecond laser pulses in space and time,” Science 294, 1080–1082 (2001).

- 28. Gariepy G, Krstajic N, Henderson R, Li C, Thomson RR, Buller GS, Heshmat B, Raskar R, Leach J, and Faccio D, “Single-photon sensitive light-in-flight imaging,” Nat. Commun. 6, 6021 (2015).

- 29. Gariepy G, Tonolini F, Henderson R, Leach J, and Faccio D, “Detection and tracking of moving objects hidden from view,” Nat. Photon. 10, 23 (2015).

- 30. Becker W, The bh TCSPC handbook (Becker & Hickl, 2014).

- 31. Velten A, Willwacher T, Gupta O, Veeraraghavan A, Bawendi MG, and Raskar R, “Recovering three-dimensional shape around a corner using ultrafast time-of-flight imaging,” Nat. Commun. 3, 745 (2012).

- 32. Etoh TG, Vo Le C, Hashishin Y, Otsuka N, Takehara K, Ohtake H, Hayashida T, and Maruyama H, “Evolution of Ultra-High-Speed CCD Imagers,” Plasma Fusion Res. 2, S1021 (2007).

- 33. Chin CT, Lancée C, Borsboom J, Mastik F, Frijlink ME, de Jong N, Versluis M, and Lohse D, “Brandaris 128: A digital 25 million frames per second camera with 128 highly sensitive frames,” Rev. Sci. Instrum. 74, 5026–5034 (2003).

- 34. Goda K, Tsia K, and Jalali B, “Serial time-encoded amplified imaging for real-time observation of fast dynamic phenomena,” Nature 458, 1145–1149 (2009).

- 35. Lei C, Guo B, Cheng Z, and Goda K, “Optical time-stretch imaging: Principles and applications,” Appl. Phys. Rev. 3, 011102 (2016).

- 36. Wu J-L, Xu Y-Q, Xu J-J, Wei X-M, Chan AC, Tang AH, Lau AK, Chung BM, Shum HC, and Lam EY, “Ultrafast laser-scanning time-stretch imaging at visible wavelengths,” Light Sci. Appl. 6, e16196 (2017).

- 37. Zewail AH, “4D ultrafast electron diffraction, crystallography, and microscopy,” Annu. Rev. Phys. Chem. 57, 65–103 (2006).

- 38. Gaffney KJ and Chapman HN, “Imaging Atomic Structure and Dynamics with Ultrafast X-ray Scattering,” Science 316, 1444–1448 (2007).

- 39. Zhang XC, “Terahertz wave imaging: horizons and hurdles,” Phys. Med. Biol. 47, 3667 (2002).

- 40. Muybridge J, “The horse in motion,” Nature 25, 605 (1882).

- 41. Wang X, Yan L, Si J, Matsuo S, Xu H, and Hou X, “High-frame-rate observation of single femtosecond laser pulse propagation in fused silica using an echelon and optical polarigraphy technique,” Appl. Opt. 53, 8395–8399 (2014).

- 42. Shin T, Wolfson JW, Teitelbaum SW, Kandyla M, and Nelson KA, “Dual echelon femtosecond single-shot spectroscopy,” Rev. Sci. Instrum. 85, 083115 (2014).

- 43. Fujimoto M, Aoshima S, Hosoda M, and Tsuchiya Y, “Femtosecond time-resolved optical polarigraphy: imaging of the propagation dynamics of intense light in a medium,” Opt. Lett. 24, 850–852 (1999).

- 44. Couairon A and Mysyrowicz A, “Femtosecond filamentation in transparent media,” Phys. Rep. 441, 47–189 (2007).

- 45. Corkum PB and Krausz F, “Attosecond science,” Nat. Phys. 3, 381 (2007).

- 46. Hassan MT, Luu TT, Moulet A, Raskazovskaya O, Zhokhov P, Garg M, Karpowicz N, Zheltikov A, Pervak V, and Krausz F, “Optical attosecond pulses and tracking the nonlinear response of bound electrons,” Nature 530, 66 (2016).

- 47. Abramson N, “Light-in-flight recording by holography,” Opt. Lett. 3, 121–123 (1978).

- 48. Kubota T, Komai K, Yamagiwa M, and Awatsuji Y, “Moving picture recording and observation of three-dimensional image of femtosecond light pulse propagation,” Opt. Express 15, 14348–14354 (2007).

- 49. Rabal H, Pomarico J, and Arizaga R, “Light-in-flight digital holography display,” Appl. Opt. 33, 4358–4360 (1994).

- 50. Kakue T, Tosa K, Yuasa J, Tahara T, Awatsuji Y, Nishio K, Ura S, and Kubota T, “Digital light-in-flight recording by holography by use of a femtosecond pulsed laser,” IEEE J. Sel. Topics Quantum Electron. 18, 479–485 (2012).

- 51. Komatsu A, Awatsuji Y, and Kubota T, “Dependence of reconstructed image characteristics on the observation condition in light-in-flight recording by holography,” J. Opt. Soc. Am. A 22, 1678–1682 (2005).

- 52. Wakeham GP and Nelson KA, “Dual-echelon single-shot femtosecond spectroscopy,” Opt. Lett. 25, 505–507 (2000).

- 53. Li Z, Zgadzaj R, Wang X, Chang Y-Y, and Downer MC, “Single-shot tomographic movies of evolving light-velocity objects,” Nat. Commun. 5, 3085 (2014).

- 54. Li Z, Zgadzaj R, Wang X, Reed S, Dong P, and Downer MC, “Frequency-domain streak camera for ultrafast imaging of evolving light-velocity objects,” Opt. Lett. 35, 4087–4089 (2010).

- 55. Matlis NH, Reed S, Bulanov SS, Chvykov V, Kalintchenko G, Matsuoka T, Rousseau P, Yanovsky V, Maksimchuk A, and Kalmykov S, “Snapshots of laser wakefields,” Nat. Phys. 2, 749 (2006).

- 56. Le Blanc S, Gaul E, Matlis N, Rundquist A, and Downer M, “Single-shot measurement of temporal phase shifts by frequency-domain holography,” Opt. Lett. 25, 764–766 (2000).

- 57. Nuss MC, Li M, Chiu TH, Weiner AM, and Partovi A, “Time-to-space mapping of femtosecond pulses,” Opt. Lett. 19, 664–666 (1994).

- 58. Gordon R, Bender R, and Herman GT, “Algebraic reconstruction techniques (ART) for three-dimensional electron microscopy and X-ray photography,” J. Theor. Biol. 29, 471–481 (1970).

- 59. Li Z, “Single-shot visualization of evolving, light-speed refractive index structures,” Ph.D. thesis (The University of Texas at Austin, 2014).

- 60. Kak AC and Slaney M, Principles of computerized tomographic imaging (IEEE Press, 1988).

- 61. Nakagawa K, Iwasaki A, Oishi Y, Horisaki R, Tsukamoto A, Nakamura A, Hirosawa K, Liao H, Ushida T, Goda K, Kannari F, and Sakuma I, “Sequentially timed all-optical mapping photography (STAMP),” Nat. Photon. 8, 695–700 (2014).

- 62. Weiner A, Ultrafast optics (John Wiley & Sons, 2011), Vol. 72.

- 63. Gao G, He K, Tian J, Zhang C, Zhang J, Wang T, Chen S, Jia H, Yuan F, Liang L, Yan X, Li S, Wang C, and Yin F, “Ultrafast all-optical solid-state framing camera with picosecond temporal resolution,” Opt. Express 25, 8721–8729 (2017).

- 64. Suzuki T, Hida R, Yamaguchi Y, Nakagawa K, Saiki T, and Kannari F, “Single-shot 25-frame burst imaging of ultrafast phase transition of Ge2Sb2Te5 with a sub-picosecond resolution,” Appl. Phys. Express 10, 092502 (2017).

- 65. Suzuki T, Isa F, Fujii L, Hirosawa K, Nakagawa K, Goda K, Sakuma I, and Kannari F, “Sequentially timed all-optical mapping photography (STAMP) utilizing spectral filtering,” Opt. Express 23, 30512–30522 (2015).

- 66. Gao G, Tian J, Wang T, He K, Zhang C, Zhang J, Chen S, Jia H, Yuan F, Liang L, Yan X, Li S, Wang C, and Yin F, “Ultrafast all-optical imaging technique using low-temperature grown GaAs/AlxGa1−xAs multiple-quantum-well semiconductor,” Phys. Lett. A 381, 3594–3598 (2017).

- 67. Gabolde P and Trebino R, “Single-frame measurement of the complete spatiotemporal intensity and phase of ultrashort laser pulses using wavelength-multiplexed digital holography,” J. Opt. Soc. Am. B 25, A25–A33 (2008).

- 68. Gabolde P and Trebino R, “Single-shot measurement of the full spatio-temporal field of ultrashort pulses with multi-spectral digital holography,” Opt. Express 14, 11460–11467 (2006).

- 69. Takeda J, Oba W, Minami Y, Saiki T, and Katayama I, “Ultrafast crystalline-to-amorphous phase transition in Ge2Sb2Te5 chalcogenide alloy thin film using single-shot imaging spectroscopy,” Appl. Phys. Lett. 104, 261903 (2014).

- 70. Yue Q-Y, Cheng Z-J, Han L, Yang Y, and Guo C-S, “One-shot time-resolved holographic polarization microscopy for imaging laser-induced ultrafast phenomena,” Opt. Express 25, 14182–14191 (2017).

- 71. Colomb T, Dürr F, Cuche E, Marquet P, Limberger HG, Salathé R-P, and Depeursinge C, “Polarization microscopy by use of digital holography: application to optical-fiber birefringence measurements,” Appl. Opt. 44, 4461–4469 (2005).

- 72. Ehn A, Bood J, Li Z, Berrocal E, Aldén M, and Kristensson E, “FRAME: femtosecond videography for atomic and molecular dynamics,” Light Sci. Appl. 6, e17045 (2017).

- 73. Birch D and Imhof R, “Coaxial nanosecond flashlamp,” Rev. Sci. Instrum. 52, 1206–1212 (1981).

- 74. O’Hagan W, McKenna M, Sherrington D, Rolinski O, and Birch D, “MHz LED source for nanosecond fluorescence sensing,” Meas. Sci. Technol. 13, 84 (2001).

- 75. Araki T and Misawa H, “Light emitting diode-based nanosecond ultraviolet light source for fluorescence lifetime measurements,” Rev. Sci. Instrum. 66, 5469–5472 (1995).

- 76. Lazovsky L, Cismas D, Allan G, and Given D, “CCD sensor and camera for 100 Mfps burst frame rate image capture,” in Defense and Security, (SPIE, 2005), 7.

- 77. Etoh TG, Son DV, Yamada T, and Charbon E, “Toward one giga frames per second—evolution of in situ storage image sensors,” Sensors 13, 4640–4658 (2013).

- 78. Tiwari V, Sutton M, and McNeill S, “Assessment of high speed imaging systems for 2D and 3D deformation measurements: methodology development and validation,” Exp. Mech. 47, 561–579 (2007).

- 79. Stanford Computer Optics, “XXRapidFrame: Multiframing ICCD camera” (2017), retrieved 2018/05/13, http://www.stanfordcomputeroptics.com/download/Brochure-XXRapidFrame.pdf.

- 80. Specialised Imaging, “SIMD - Ultra High Speed Framing Camera” (2017), retrieved 2018/05/13, http://specialised-imaging.com/products/simd-ultra-high-speed-framing-camera.

- 81. Versluis M, “High-speed imaging in fluids,” Exp. Fluids 54, 1458 (2013).

- 82. Xing H, Zhang Q, Braithwaite CH, Pan B, and Zhao J, “High-speed photography and digital optical measurement techniques for geomaterials: fundamentals and applications,” Rock Mech. Rock Eng. 50, 1611–1659 (2017).

- 83. Fujita H, Kanazawa S, Ohtani K, Komiya A, and Sato T, “Spatiotemporal analysis of propagation mechanism of positive primary streamer in water,” J. Appl. Phys. 113, 113304 (2013).

- 84. Dresselhaus-Cooper L, Gorfain JE, Key CT, Ofori-Okai BK, Ali SJ, Martynowych DJ, Gleason A, Kooi S, and Nelson KA, “Development of Single-Shot Multi-Frame Imaging of Cylindrical Shock Waves for Deeper Understanding of a Multi-Layered Target Geometry,” arXiv preprint arXiv:1707.08940 (2017).

- 85. Kilkenny JD, Glendinning SG, Haan SW, Hammel BA, Lindl JD, Munro D, Remington BA, Weber SV, Knauer JP, and Verdon CP, “A review of the ablative stabilization of the Rayleigh–Taylor instability in regimes relevant to inertial confinement fusion,” Phys. Plasmas 1, 1379–1389 (1994).