Abstract

Background:

Scientists have developed evidence-based interventions that improve the symptoms and functioning of youth with psychiatric disorders; however, these interventions are rarely used in community settings. Eliminating this research-to-practice gap is the purview of implementation science, the discipline devoted to the study of methods to promote the use of evidence-based practices in routine care.

Methods:

We review studies that have tested factors associated with implementation in child psychology and psychiatry, explore applications of social science theories to implementation, and conclude with recommendations to advance implementation science through the development and testing of novel, multilevel, causal theories.

Results:

During its brief history, implementation science in child psychology and psychiatry has documented the implementation gap in routine care, tested training approaches and found them to be insufficient for behavior change, explored the relationships between variables and implementation outcomes, and initiated randomized controlled trials to test implementation strategies. This research has identified targets related to implementation (e.g., clinician motivation, organizational culture) and demonstrated the feasibility of activating these targets through implementation strategies. However, the dominant methodological approach has been atheoretical and predictive, relying heavily on a set of variables from heuristic frameworks.

Conclusions:

Optimizing the implementation of effective treatments in community care for youth with psychiatric disorders is a defining challenge of our time. This review proposes a new direction focused on developing and testing integrated causal theories. We recommend that implementation scientists: (a) move from observational studies of implementation barriers and facilitators to trials that test causal theories; (b) identify a core set of implementation determinants; (c) conduct trials of implementation strategies with clear targets, mechanisms, and outcomes; (d) clearly define the behaviors that are core to EBPs; and (e) agree upon standard measures. This agenda will help fulfill the promise of evidence-based practice for improving youth behavioral health.

Keywords: children, adolescents, implementation science, evidence-based practice, causal theory

Introduction

Over the past three decades, there has been growing urgency within the health and behavioral health fields to address the research-to-practice gap, given estimates that it takes 17 years for 14% of research to make its way into practice (Balas & Boren, 2000). This urgency is fueled by the simple observation that research produces many interventions that work, often referred to as evidence-based practices (EBPs), and yet individuals in the community often do not receive these effective interventions. In child psychology and psychiatry, consider that over the past 50 years, treatment developers have generated approximately 500 interventions that fall broadly into 86 evidence-based treatment approaches (Chorpita et al., 2011; Weisz, Ng, & Bearman, 2014). Yet, services for youth in the community rarely incorporate these interventions; instead, youth receive a range of clinician-preferred interventions, many of them without research support, and most delivered in a low-intensity manner unlikely to improve youth well-being (Garland et al., 2010; Garland et al., 2013). The low rates at which clinicians adopt EBPs and the manner in which EBPs are implemented may help explain the poor outcomes of behavioral health services for youth and the ‘implementation cliff’ in which the positive effects of EBPs are diminished once they are moved out of the lab and into the community (Weisz, Jensen-Doss, & Hawley, 2005; Weisz et al., 2014). In recognition of how uneven implementation undermines service effectiveness and behavioral health (Weisz et al., 2013a), the Institute of Medicine and other international health organizations have prioritized closure of the research-to-practice gap, explicitly calling for a focus on improving the adoption and implementation of EBPs in community settings (Collins et al., 2011; Committee on Developing Evidence-Based Standards for Psychosocial Interventions for Mental Disorders, 2015).

In response to these calls to action, a new interdisciplinary scientific discipline has emerged: implementation science, the systematic study of methods to promote the use of research findings in real-world practice settings, with the explicit goal of improving the quality of community-based care and population health and behavioral health (Eccles & Mittman, 2006). Implementation science is on the applied end of the translational science continuum and includes research on both dissemination (i.e., targeted transfer of knowledge to professionals) and implementation (i.e., active strategies to change provider behavior and organizational functioning) (Lomas, 1993). The growth of implementation science as a field is evident in the publication of 61 implementation frameworks (Tabak, Khoong, Chambers, & Brownson, 2012), a set of established implementation outcomes (Proctor et al., 2011), a taxonomy of implementation strategies (Powell et al., 2015), and reviews that have collated over 600 determinants of practice, that is, factors conceptually or empirically associated with implementation success that might act as barriers or facilitators in the implementation process (Flottorp et al., 2013).

The field of implementation science has evolved rapidly over the course of its brief history, particularly in child psychology and psychiatry. The first wave of implementation research sought to establish standards for identifying treatment approaches as ‘evidence-based’ (APA Presidential Task Force on Evidence-Based Practice, 2006; Chambless & Hollon, 1998; Chambless & Ollendick, 2001) and began to evaluate whether the use of EBPs in community settings improved the outcomes of community care. This research uncovered a large difference in the effectiveness of youth psychotherapy delivered in community settings (mean effect size = .01) compared to research settings (mean effect size = .77), suggesting an advantage for youth treated with EBPs (Weisz, Donenberg, Han, & Weiss, 1995). Analysis of the differences between these two types of therapy indicated that research-based therapy was of higher intensity, less eclectic, and incorporated behavioral approaches and greater structure (i.e., treatment manuals) (Weisz, Donenberg, Han, & Kauneckis, 1995). Based on this work, researchers recommended that youth behavioral health could be improved by increasing clinicians’ adoption and implementation of EBPs in community settings.

The second wave of implementation research sought to increase the implementation of EBPs in the community through trials that experimented with different ways to train clinicians (Beidas, Edmunds, Marcus, & Kendall, 2012; Miller, Yahne, Moyers, Martinez, & Pirritano, 2004; Sholomskas et al., 2005). The assumption embedded within these studies was that community clinicians did not use EBPs because they lacked the knowledge and skills to do so. Researchers sought to address this deficit by training clinicians with the strategies used in randomized clinical efficacy trials. Broadly, these studies found that training improved clinicians’ knowledge and attitudes towards EBPs but was not a sufficient catalyst for practice change (Beidas et al., 2012; Beidas & Kendall, 2010; Herschell, Kolko, Baumann, & Davis, 2010; Sholomskas et al., 2005). Researchers concluded that training was necessary but not sufficient for improving the delivery of effective treatments in the community; furthermore, this research highlighted that contextual factors, such as clinician knowledge and organizational culture, which are typically considered nuisance factors in efficacy trials, were important and understudied in their own right (Weisz, Ugueto, Cheron, & Herren, 2013b).

Given the disappointing findings emerging from training studies and the recognition that context was important (McHugh & Barlow, 2010), implementation research entered a third wave. Spurred on by the publication of several heuristic implementation frameworks (Aarons, Hurlburt, & Horwitz, 2011; Damschroder et al., 2009; Greenhalgh, Robert, Macfarlane, Bate, & Kyriakidou, 2004), this wave focused primarily on identifying determinants at multiple levels (e.g., clinician, organization, system) that might be related to implementation success or failure. Third-wave studies often used mixed methods to describe these determinants or to test the relationships between them and a variety of implementation outcomes such as EBP adoption, fidelity, and sustainment (Beidas et al., 2015; Beidas et al., 2016; Locke et al., 2017; Palinkas et al., 2017; Stein, Celedonia, Kogan, Swartz, & Frank, 2013). We refer to this approach as the ‘disaggregation paradigm’ because it involves dismantling established social science theories such as the Theory of Planned Behavior (Ajzen, 1991), Social Cognitive Theory (Bandura, 1977), Learning Theory (Blackman, 1974), and Organizational Climate Theory (Ehrhart, Schneider, & Macey, 2013) into their constituent variables (e.g., attitudes, organizational culture), measuring a large number of these variables in a single study, and examining which are most strongly associated with implementation in a single multivariate model that includes all measured variables. Although this approach incorporates variables from social science theories, these theoretical moorings have typically been ignored in favor of an empirical, predictive analytic approach that seeks to maximize the variance explained or to identify the most empirically robust predictors of implementation success (Williams, 2016).
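The analytic logic of the disaggregation paradigm can be sketched as a single regression in which every measured determinant enters at once. This is a simplified illustration under stated assumptions: the notation is ours rather than taken from the cited studies, and it ignores the nesting of clinicians within organizations that most of these studies model explicitly.

$$
Y_i = \beta_0 + \sum_{k=1}^{K} \beta_k X_{ki} + \varepsilon_i
$$

Here $Y_i$ is an implementation outcome (e.g., fidelity) for clinician $i$, $X_{1i}, \dots, X_{Ki}$ are the $K$ measured determinants (e.g., knowledge, attitudes, organizational culture), and $\varepsilon_i$ is residual error. Determinants are then judged by the magnitude of their estimated coefficients $\hat{\beta}_k$ or by the model's $R^2$. Nothing in this equation specifies how the determinants influence one another or the mechanisms through which they affect $Y_i$.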
As this work has grown, so have questions about the causal associations among determinants (e.g., between knowledge and organizational culture), as well as between determinants (e.g., knowledge) and a host of implementation outcomes. These outcomes include those outlined by Proctor and colleagues in their framework, such as fidelity (how closely a clinician adheres to the EBP), penetration (how many eligible clients within a clinician’s caseload receive the EBP), and sustainment (whether the EBP becomes ‘usual care’ in a particular context), as well as stage of implementation (Chamberlain, Brown, & Saldana, 2011; Saldana, Chamberlain, Wang, & Brown, 2012).

We argue that implementation research needs to move beyond the disaggregation paradigm and into a fourth wave of research that purposefully develops and tests new, integrated causal theories designed specifically to explain implementation. We define integrated causal theories as systems of interrelated and internally consistent ideas that articulate the necessary and sufficient set of conditions to explain some phenomenon, specify the relationships among those conditions or constructs, and explain the mechanisms through which they cause the phenomenon of interest (Tabak et al., 2012).

We pursue four aims in this review. First, we describe what has been gleaned from the disaggregation paradigm about clinician and organizational determinants and their associations with EBP implementation outcomes in child psychology and psychiatry. Second, we summarize a set of social science theories that may inform implementation science in child psychology and psychiatry. Although increasing the use of theory in implementation strategies and research is paramount (Grol, Bosch, Hulscher, Eccles, & Wensing, 2007; Williams, 2016), we argue that these existing social science theories are insufficient to explain implementation. To support this assertion, we explore both the strengths and limitations of these theories as they apply to implementation and suggest that a new set of implementation-specific causal theories is needed to advance the field. Third, we offer examples of the types of integrated causal theories we believe may be useful for advancing implementation science, including an exemplar study that tests an integrated causal theory. Fourth, we conclude with recommendations to advance implementation science towards its goal of improving population behavioral health for youth.

Section 1: What have we learned from the disaggregation paradigm?

In this section, we review the work conducted in the third wave of implementation research. Specifically, we describe what is known about clinician (e.g., knowledge, attitudes, self-efficacy) and organizational (e.g., culture and climate) determinants and their associations with EBP implementation in child psychology and psychiatry. Although factors at other levels (e.g., the system level) have been hypothesized to be associated with EBP implementation (Aarons et al., 2011), we focus on the clinician and organization levels for two reasons. First, much of the variance in EBP implementation occurs at the individual and organizational levels, suggesting these levels are important targets for intervention (Beidas et al., 2015). Second, implementation science is fundamentally the study of clinician behavior change within organizational constraints (i.e., the immediate environment directly facilitates and/or constrains clinician behavior); as such, unpacking the causal associations between these two levels will allow for a richer and more nuanced understanding of implementation.

To organize our review, we focus on determinants (Flottorp et al., 2013) related to the implementation of EBPs in child psychology and psychiatry as posited by three leading implementation frameworks: the Consolidated Framework for Implementation Research (CFIR) (Damschroder et al., 2009), the Tailored Implementation for Chronic Diseases Checklist (TICD) (Flottorp et al., 2013), and the Theoretical Domains Framework (TDF) (Michie et al., 2005). We selected these frameworks because each is widely used (Tabak et al., 2012), presents a list of determinants that are conceptually or empirically associated with implementation, and is not specific to a particular implementation setting (in contrast to, e.g., frameworks developed for public-sector child service systems; Aarons et al., 2011). We extracted all clinician- and organization-level determinants listed by at least two of the three frameworks; below, we define these determinants and briefly review the empirical associations between each determinant and implementation outcomes.

Clinician-level determinants

We present clinician-level determinants in Table 1. The selection process described above resulted in the inclusion of all clinician-level CFIR and TICD determinants. One determinant from the TDF, ‘memory/attention/decision processes’, was not included because it does not appear in either the CFIR or the TICD.

Table 1.

Mapping individual-level determinants from three leading heuristic frameworks

| Determinant | Definition | Framework (construct label) |
|---|---|---|
| Knowledge | Awareness, familiarity, and exposure to facts (includes declarative and procedural knowledge) | CFIR (knowledge and beliefs about the intervention); TICD (knowledge and skills); TDF (knowledge) |
| Affiliation with organization | How individuals perceive their organization and their degree of commitment to it | CFIR (individual identification with organization); TDF (social/professional role/identity) |
| Self-efficacy | Belief in one's ability to carry out implementation | CFIR (self-efficacy); TICD (cognition – self-efficacy); TDF (self-efficacy) |
| Attitudes/Beliefs/Cognitions | Perceptions about EBP and implementation | CFIR (knowledge and beliefs about the intervention); TICD (cognitions); TDF (beliefs about consequences) |
| Stage of change | Current phase that the individual is in toward use of the intervention | CFIR (stage of change); TDF (motivation and goals – transtheoretical model and stages of change) |
| Skills | Actual ability level/competence in delivering the EBP | CFIR (knowledge and beliefs about the intervention); TICD (knowledge and skills); TDF (skills) |
| Emotions | Affective response to implementing an EBP (e.g., stress, fear, burnout) | TICD (emotions); TDF (emotions) |
| Motivation and goals/intention | The extent to which professionals intend to perform an EBP; the reason a clinician implements an EBP | TDF (motivation and goals [intention]); TICD (cognition – intention and motivation) |
| Behavioral regulation | Individual processes for self-monitoring implementation; procedures around implementation | TDF (behavioral regulation); TICD (professional behavior – capacity to plan change) |
| Nature of the behaviors | Characteristics of the behavior, including frequency, degree of habit, sequence of behaviors, and the number of people involved | TDF (nature of the behavior); TICD (professional behavior; nature of the behavior) |

Knowledge.

Knowledge can be defined as awareness, familiarity, and exposure to facts related to EBP and has been described as a ‘precursor to implementation’ (Powell et al., 2017b). To treat psychiatric disorders appropriately, clinicians need to be knowledgeable about the treatments that are appropriate for common problems in youth. Knowledge can be assessed with instruments such as the Knowledge of Evidence-Based Services Questionnaire (Stumpf, Higa-McMillan, & Chorpita, 2009), a 40-item self-report measure of clinician knowledge of EBPs in the treatment of youth psychopathology (Okamura, Nakamura, Mueller, Hayashi, & Higa-McMillan, 2016). Research has demonstrated a positive relationship between knowledge and use of EBPs (Beidas et al., 2015; Stephan et al., 2012) as well as a negative relationship between knowledge and use of non-EBPs such as psychodynamic techniques (Beidas et al., 2017). However, other studies have not found any relationship between knowledge and use of EBPs (Brookman-Frazee, Haine, Baker-Ericzén, Zoffness, & Garland, 2010; Higa-McMillan, Nakamura, Morris, Jackson, & Slavin, 2015). Still other studies have examined the relationship between knowledge and other determinants of implementation, such as attitudes, and found that these determinants are positively related (Lim, Nakamura, Higa-McMillan, Shimabukuro, & Slavin, 2012; Nakamura, Higa-McMillan, Okamura, & Shimabukuro, 2011).

Affiliation with organization.

Affiliation with organization refers to the individual’s personal degree of commitment to the organization (i.e., organizational commitment) (Damschroder et al., 2009). This determinant is hypothesized to be important to implementation in that it may be related to clinician willingness to use an EBP or to fully engage in efforts around implementation (Greenberg, 1990). Measures of organizational commitment have been published in the organizational sciences (Mathieu & Zajac, 1990) and in children’s behavioral health (Glisson et al., 2008a); however, to our knowledge, there is no empirical work linking this construct to implementation in child psychology and psychiatry.

Self-efficacy.

Self-efficacy refers to one’s belief in one’s ability to succeed in the implementation of a psychosocial treatment for youth (Bandura, 1977). Measurement of self-efficacy has been suboptimal, as most studies have used investigator-created measures (Edmunds et al., 2013). A small body of empirical research has examined the relationship between self-efficacy and implementation of youth psychosocial treatments and has identified a positive relationship in school settings (Rohrbach, Graham, & Hansen, 1993; Schiele, 2013).

Attitudes/Beliefs/Cognitions.

Attitudes, also described as beliefs and cognitions in other frameworks, refer to opinions and perceptions about EBPs and the implementation process (Aarons, 2004) and are hypothesized to be important for implementation (Aarons, Cafri, Lugo, & Sawitzky, 2012). The Evidence-Based Practice Attitude Scale, a frequently used 15-item self-report measure, assesses willingness to adopt an EBP based upon its appeal, requirements from external sources, general openness to innovation, and perceived divergence between EBP and current practices (Aarons et al., 2010). The literature suggests a positive relationship between attitudes and adoption and use of EBPs for youth with psychiatric disorders (Beidas et al., 2015; Garner, Godley, & Bair, 2011; Henggeler et al., 2008; Jensen-Doss, Hawley, Lopez, & Osterberg, 2009; Rohrbach et al., 1993; Williams et al., 2014), although some studies have not found a relationship between attitudes and use of EBPs for youth with psychiatric disorders (Beidas et al., 2015; Higa-McMillan et al., 2015).

Stage of change.

Stage of change refers to the current phase that the individual is in as s/he progresses toward routine use of an EBP. Measurement depends upon which stage of change model one is using (e.g., the transtheoretical model of change, diffusion of innovation, the five-step model) (Grol et al., 2007; Prochaska & Velicer, 1997; Rogers, 2003). To our knowledge, there is no empirical work linking stage of change to implementation in child psychology and psychiatry, making this an area for future research.

Skills.

Skills refer to the behavioral ability level that a provider demonstrates in the delivery of an EBP. Skill, also described as competence, has been described as an integral component of fidelity, a frequently measured implementation outcome (Proctor et al., 2011). Consequently, skill may be conceptualized either as a determinant of EBP implementation or as an implementation outcome. Measurement of skill is highly specific to the particular EBP being implemented and is best captured using well-established observational methods (Schoenwald, 2011). Because skill is more often measured as an outcome than as a determinant, limited research exists on the predictive association between skill and implementation. One study found a positive association between clinician skill and client outcomes in the implementation of an EBP in family court (Berkel et al., 2017).

Emotions.

Emotions refer to the affective reaction and experience of the provider in response to the process of implementing an EBP (e.g., stress, fear, burnout). Chronic and/or momentary stress, defined as an imbalance between environmental demands and capacity (Koolhaas et al., 2011), can be measured using self-report measures such as the Maslach Burnout Inventory (Maslach, Jackson, Leiter, Schaufeli, & Schwab, 1986) or using biological markers of stress such as heart rate or cortisol levels (Allen, Kennedy, Cryan, Dinan, & Clarke, 2014; Russell, Koren, Rieder, & Van Uum, 2012). This area has been understudied in child psychiatry and psychology, particularly with regard to biological markers of stress; one study found that clinicians who endorsed higher levels of financial stress were more likely to use non-evidence-based practices, such as psychodynamic techniques (Beidas et al., 2017).

Motivation and goals/intention.

Motivation refers to the extent to which professionals have the intention or desire to perform an EBP (Flottorp et al., 2013; Michie et al., 2005). Intention can be measured using established techniques from the social psychological literature (Ajzen, 1991; Burgess, Chang, Nakamura, Izmirian, & Okamura, 2017; Dawson, Mullan, & Sainsbury, 2015) and with the Evidence-Based Practice Intentions scale (Williams, 2015). This determinant has been implicated as a strong predictor of behavior in the broader literature examining health providers’ practice behaviors. Specifically, meta-analyses find a robust correlation (r = .46) between healthcare professionals’ intentions and practice behaviors (Eccles et al., 2006; Godin, Bélanger-Gravel, Eccles, & Grimshaw, 2008). Despite the long tradition of studying the predictive validity of intentions in health services, recent reviews suggest that little attention has been paid to the intentions of mental health clinicians to implement EBP (Williams, 2015). Preliminary research has identified intentions as a strong predictor of implementation behavior amongst teachers using an evidence-based practice for youth with autism spectrum disorder (Fishman, Beidas, Blanch, & Mandell, in press) and of clinicians’ self-reported EBP use in outpatient mental health clinics (Williams, 2015), suggesting that intentions are a promising future direction in implementation science.
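To convey the magnitude of the meta-analytic correlation between intentions and practice behaviors, the correlation can be squared to give the proportion of variance the two share (a simple bivariate interpretation that ignores other predictors):

$$
r^2 = (.46)^2 \approx .21
$$

That is, intentions share roughly one-fifth of the variance in healthcare professionals' practice behaviors in these meta-analyses, leaving substantial variance to be explained by other determinants.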

Behavioral regulation.

Behavioral regulation refers to an individual’s self-monitoring of one’s own implementation of EBP (Flottorp et al., 2013; Michie et al., 2005). Qualitative work has associated behavioral regulation with implementation processes for screening tools for pediatric mental health in emergency departments (MacWilliams, Curran, Racek, Cloutier, & Cappelli, 2017). To our knowledge, there is no quantitative work linking this construct to implementation for efforts related to child psychology and psychiatry and no established measures of this construct.

Nature of the behaviors.

Nature of the behaviors refers to characteristics of the implementation behaviors, including frequency (i.e., how often the behavior is performed), degree of habit (i.e., how habitual the behavior is), sequence of behaviors, and the number of people involved in the behavior (Flottorp et al., 2013; Michie et al., 2005). To our knowledge, there is no empirical work linking this determinant to implementation for efforts related to child psychology and psychiatry.

Organizational-level determinants

We present organizational-level determinants and corresponding definitions posited as important to implementation by at least two of the three leading frameworks (i.e., CFIR, TICD, TDF) in Table 2. With the exception of one variable (implementation climate), all of the variables included in Table 2 were addressed by all three frameworks; however, consistent with other reviews (Allen et al., 2017), we found considerable heterogeneity in how the frameworks defined and categorized these organizational constructs.

Table 2.

Mapping organization-level determinants from three leading heuristic frameworks

| Construct | Definition | Framework (construct label) |
|---|---|---|
| Leadership | Extent to which leaders or supervisors are capable of guiding, directing, and making necessary changes to support implementation | CFIR (leadership engagement); TDF (leadership; management commitment); TICD (capable leadership) |
| Organizational culture | Shared norms, behavioral expectations, values, and basic assumptions of a given organization | CFIR (culture); TDF (organizational climate/culture; social/group norms); TICD (communication and influence) |
| Organizational climate | Shared perceptions regarding the impact of the work environment on clinicians’ sense of personal well-being | CFIR (climate); TDF (organizational climate/culture); TICD (nonfinancial incentives/disincentives, including working conditions and relations with management) |
| Implementation climate | Shared perceptions regarding the extent to which use of one or more EBPs is expected, supported, and rewarded within the organization; extent to which organizational regulations, rules, or policies facilitate implementation | CFIR (implementation climate); TICD (organizational regulations, rules, policies) |
| Relative priority | Perception of the importance of EBP implementation within the organization and the extent to which competing tasks and time constraints crowd out implementation | CFIR (relative priority); TDF (environmental stressors); TICD (priority of necessary change) |
| Team working | Nature and quality of the webs of social networks and formal and informal communications within an organization; the extent to which professional teams or groups have the skills needed to interact in ways that facilitate implementation | CFIR (networks and communications); TDF (team working); TICD (team processes) |
| Resources | Availability and management of material and time resources; environmental stressors such as competing tasks and time constraints | CFIR (available resources); TDF (resources/material resources); TICD (availability of necessary resources) |

Leadership.

Leadership is the act of providing guidance or direction to an organization (Damschroder et al., 2009; Flottorp et al., 2013; Michie et al., 2005). Both general leadership models (e.g., full-range leadership) (Bass, Avolio, Jung, & Berson, 2003; Judge & Piccolo, 2004) and implementation-specific leadership models (i.e., implementation leadership) (Aarons, Ehrhart, & Farahnak, 2014) have been studied in implementation research in child psychology and psychiatry. The full-range leadership model includes transformational leadership behaviors, which inspire and motivate clinicians to pursue an ideal, and transactional leadership behaviors, which involve appropriate management of interactions and rewards to maintain clinicians’ motivation (Bass et al., 2003). This type of leadership is most often measured via the Multifactor Leadership Questionnaire (Avolio, Bass, & Jung, 1999; Bass, 1997). Implementation leadership involves being proactive in anticipating and addressing implementation challenges, being knowledgeable about EBP, supporting clinicians in implementing EBP, and persevering through the ups and downs of EBP implementation; it is measured with the Implementation Leadership Scale (Aarons et al., 2014). Few studies have examined how leadership relates to EBP implementation in child psychology and psychiatry, and their findings are mixed. Some studies have identified a positive relationship between transformational, transactional, and implementation leadership and clinician attitudes towards EBP, knowledge of EBP (Aarons, 2006; Aarons & Sommerfeld, 2012; Powell et al., 2017b), and use of EBP (Guerrero, Fenwick, & Kong, 2017). However, other studies have failed to substantiate these relationships or have shown negative relationships (Beidas et al., 2015; Powell et al., 2017a).

Organizational Culture.

Organizational culture refers to the assumptions, values, shared norms, and behavioral expectations that characterize and guide behavior within a workplace (Cameron & Quinn, 2011; Hartnell, Ou, & Kinicki, 2011). It is hypothesized to influence clinicians’ practice behaviors by shaping beliefs and perceptions of clinical processes (e.g., regarding which approaches are effective) and by signaling to clinicians how they should prioritize their job tasks and approach their work (Glisson, 2002). Quantitative measurement of organizational culture typically assesses the shared norms and behavioral expectations that characterize a service setting (Beidas & Kendall, 2014; Hartnell et al., 2011). For example, the Organizational Social Context measure (Glisson et al., 2008a) assesses organizational culture in three domains of proficiency (i.e., norms and expectations that clinicians prioritize improvement in client well-being and maintain competence in up-to-date treatment practices), rigidity (i.e., norms and expectations that clinicians carefully follow prescribed routines and procedures and exert minimal autonomy in substantive decisions) and resistance (i.e., norms and expectations that clinicians show minimal interest in changes to well-established procedures and remain critical of change efforts). Observational studies have shown a positive relationship between proficient cultures and clinician attitudes towards EBP (Aarons & Sommerfeld, 2012), knowledge of EBP (Powell et al., 2017b), intentions to adopt EBP (Williams, Glisson, Hemmelgarn, & Green, 2017), EBP adoption and fidelity (Beidas et al., 2015), and sustainment (Glisson et al., 2008a; Glisson et al., 2008b; Glisson, Williams, Hemmelgarn, Proctor, & Green, 2016). 
Experimental research has shown that planned improvement in proficient culture is positively associated with clinicians’ voluntary attendance at EBP workshops, odds of adopting one or more EBP protocols, and the percentage of clients treated using an EBP (Williams et al., 2017). These studies emphasize how organizational culture influences individual clinicians’ practice behaviors; additional research is needed to assess the relationship between organizational culture and program-level implementation outcomes, such as the speed and extent to which organizations move from considering the adoption of an EBP program during pre-implementation to full competence and effective service delivery during sustainment (Chamberlain et al., 2011; Saldana et al., 2012). Theory suggests that organizational culture should influence implementation outcomes at both the clinician and program levels (Hartnell et al., 2011; Schein, 2010; Williams & Glisson, 2014).

Organizational Climate.

Developments in organizational climate theory and empirical research over the last two decades have established that organizations engender multiple climates. These include a general, or molar, organizational climate, which is most often referred to simply as ‘organizational climate’ and is defined as employees’ shared perceptions of the influence of the work environment on their personal well-being (Glisson, 2002; James et al., 2008), and strategically focused climates, which refer to employees’ shared perceptions of the specific behaviors that are supported, rewarded, and expected within the organization (Ehrhart et al., 2013). Implementation climate, described in the following section of this paper, is an example of a strategically focused, proximal climate; it indicates the extent to which clinicians share perceptions that they are expected, supported, and rewarded for using EBPs in their practice (Ehrhart, Aarons, & Farahnak, 2014; Weiner, Belden, Bergmire, & Johnston, 2011).

In studies of children’s behavioral health services and implementation science, researchers have traditionally focused on general organizational climate, defined as clinicians’ shared perceptions of how the work environment influences their personal well-being (Glisson, 2002; Glisson & Williams, 2015; James et al., 2008; Schneider, Ehrhart, & Macey, 2013). Two scales frequently used to assess organizational climate in behavioral health settings are the Organizational Social Context measure (Glisson et al., 2008a) and the climate subscale of the Organizational Readiness for Change measure (Lehman, Greener, & Simpson, 2002). Some studies have shown a positive relationship between organizational climate and use of EBP, adherence to EBP, rates of EBP adoption, and the speed with which system leaders agreed to adopt an EBP (Aarons & Sommerfeld, 2012; Beidas et al., 2015; Brimhall et al., 2016; Schoenwald, Carter, Chapman, & Sheidow, 2008; Wang, Saldana, Brown, & Chamberlain, 2010). Other research, however, has failed to substantiate a relationship between organizational climate and clinicians’ adoption of EBP, adherence to EBP protocols, or speed of EBP adoption (Beidas et al., 2014; Henggeler et al., 2008; Williams, Ehrhart, Aarons, Marcus, & Beidas, under review). Given that multiple types of climate exist within an organization (i.e., general and strategic), one potential reason for these mixed findings is that organizational climate’s most important role in implementation may be to modify the effect of strategically focused climates such as implementation climate. According to this formulation, organizational climate is not directly related to EBP use; instead, it forms a necessary precondition for successful EBP implementation and therefore acts as an effect modifier.
Preliminary evidence in children’s behavioral health clinics supports this view (Williams et al., under review) and suggests that clinicians must experience a baseline level of support for their personal well-being (i.e., positive organizational climate) in order to successfully respond to an organization’s strategic implementation climate.
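The effect-modifier formulation above can be made concrete with a small simulation. The sketch below is purely illustrative: the variable names, the threshold at the mean, and the 0.5 effect size are hypothetical assumptions, not estimates from the studies cited. It generates data in which implementation climate raises EBP use only where general organizational climate is supportive, and then shows that organizational climate alone has almost no bivariate association with EBP use even though it governs whether implementation climate matters.

```python
import numpy as np


def slope(x, y):
    """OLS slope of y regressed on x (with an intercept)."""
    return np.polyfit(x, y, 1)[0]


rng = np.random.default_rng(1)
n = 5000

# Hypothetical standardized clinician-level scores (simulated, not real data).
org_climate = rng.normal(size=n)   # general (molar) organizational climate
impl_climate = rng.normal(size=n)  # strategically focused implementation climate

# Assumed "baseline level of support": organizational climate above the mean.
supportive = org_climate > 0

# Implementation climate raises EBP use only where climate is supportive.
ebp_use = 0.5 * impl_climate * supportive + rng.normal(size=n)

marginal = slope(org_climate, ebp_use)
slope_supportive = slope(impl_climate[supportive], ebp_use[supportive])
slope_unsupportive = slope(impl_climate[~supportive], ebp_use[~supportive])

print(f"organizational climate -> EBP use: {marginal:.2f}")
print(f"implementation climate slope, supportive: {slope_supportive:.2f}")
print(f"implementation climate slope, unsupportive: {slope_unsupportive:.2f}")
```

Under these assumptions the first and third slopes fall near zero and the second near 0.5, which is one way a moderating role could produce the mixed main-effect findings described above. Because clinicians are nested in organizations, a real analysis would instead fit a multilevel model with a cross-level interaction term rather than this single-level split.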

Implementation Climate.

Implementation climate refers to clinicians’ shared perceptions of the extent to which the use of a specific EBP, or of EBP in general, is expected, supported, and rewarded within their organization (Ehrhart, Aarons, & Farahnak, 2014; Jacobs, Weiner, & Bunger, 2014; Klein & Sorra, 1996). Two measures of implementation climate exist for use in behavioral health services: the Implementation Climate Scale developed by Ehrhart and colleagues (Ehrhart et al., 2014), and a tailored implementation climate measure developed by Weiner and colleagues (Jacobs, Weiner, & Bunger, 2014; Weiner et al., 2011) that can be adapted to specific EBPs. Although some research has shown a positive relationship between implementation climate and program use and adherence (Asgary-Eden & Lee, 2012), other research has failed to link implementation climate to clinicians’ EBP use (Becker-Haimes et al., 2017; Beidas et al., 2015; Beidas et al., 2017). One explanation for these mixed findings is that the effects of implementation climate may be moderated by other organizational characteristics, such as the general organizational climate discussed in the preceding section (Williams et al., under review).

Relative Priority.

The relative priority of EBP implementation within an organization refers to the shared sense that EBP implementation is a high priority relative to competing demands, and to the extent to which competing tasks and time constraints crowd out implementation (Damschroder et al., 2009). Conceptually, relative priority is closely related to organizational readiness for change, defined as a shared psychological state in which organizational members feel willing and able to implement an innovation because of a perceived need to alter the current state of affairs; readiness can be measured using the motivation for change subscale of the Organizational Readiness for Change (ORC) measure (Lehman et al., 2002; Weiner, Amick, & Lee, 2008). Some research has shown a positive relationship between organizational readiness and clinicians’ attitudes towards EBPs and treatment manuals, as well as faster rates of adoption of specific EBPs (Saldana, Chapman, Henggeler, & Rowland, 2007; Wang et al., 2010). However, other research has failed to link readiness for change to clinicians’ EBP adoption or adherence (Henggeler et al., 2008).

Team Working.

Team working refers to the ability of a clinical team to interact in ways that facilitate implementation, including the nature and quality of communications and formal and informal social networks (Michie et al., 2005). To our knowledge, no empirical work has linked this construct to implementation in child psychology and psychiatry. Measures of molar organizational climate, such as the Organizational Social Context measure (Glisson et al., 2008a), include subscales (e.g., cooperation) that assess aspects of team working (e.g., “There is a feeling of cooperation among my coworkers”); this overlap between constructs suggests the need for additional conceptual development and research.

Resources.

Resources refer to materials and time to support EBP implementation (Damschroder et al., 2009; Flottorp et al., 2013; Michie et al., 2005). Research on the importance of resources to implementation in child psychology and psychiatry has produced highly equivocal results. One study showed that the availability of resources (e.g., information technology infrastructure) was a major barrier or facilitator to EBP adoption in 50% of organizations participating in a statewide roll-out of functional family therapy (Zazzali et al., 2008). However, two other studies failed to link resources to implementation (Henggeler et al., 2008; Wang et al., 2010), and another found that increased funding was associated with less use of EBP for adolescents with substance use disorders (Henderson et al., 2007). Intervention characteristics, such as high resource demands, may act as effect modifiers that explain these equivocal relationships.

Summary and critique

Three leading heuristic frameworks in implementation science (i.e., CFIR, TICD, and TDF) show considerable overlap in the clinician and organizational determinants they suggest for consideration during implementation (Damschroder et al., 2009; Flottorp et al., 2013; Michie et al., 2005). Our review of these determinants suggests the following: (a) there is a plethora of determinants to consider (10 categories of clinician determinants and 7 categories of organizational determinants), which makes it challenging to decide which determinants to include in an implementation trial without causal theory to guide decisions; (b) certain clinician determinants (e.g., knowledge, attitudes) appear to be better studied than others (e.g., stage of change, emotion), whereas organizational determinants (e.g., culture and climate) have been studied more evenly; (c) established measures exist for some determinants but not all; (d) for determinants that have been well studied, the findings are somewhat equivocal; (e) more clarity is needed about the relationships between determinants and specific implementation outcomes; and (f) there are particularly promising directions for future research, including clinician motivation as a target for clinician-level implementation strategies and a deeper understanding of the relationship between general and implementation-specific organizational determinants.

After reviewing the studies above, it is clear that more work exploring the causal relationships between determinants and a range of implementation outcomes is needed. Although there is a well-established, well-specified, and growing set of implementation outcomes (Proctor et al., 2011), many of the studies reviewed used investigator-created measures of implementation that were specific to the EBP of interest (often referred to as ‘use of EBP’). Whenever possible, we specified the implementation outcome under study, but the wide variability in implementation outcomes limits the ability to draw conclusions across studies. Further, individual- and program-level implementation outcomes differ. For example, fidelity can be conceptualized as the behavior of an individual clinician, but it can also be aggregated to the organizational level (i.e., the average fidelity of clinicians within an organization); this distinction may be important when considering the relationship between individual and organizational determinants. To fully understand the relationship between determinants and implementation outcomes, more precision is needed about which implementation outcomes are measured and at what level. One particularly promising organizational-level implementation outcome that is not specific to a particular EBP, and could therefore be measured across implementation studies, is the Stages of Implementation Completion (SIC) measure (Chamberlain et al., 2011; Saldana et al., 2012).

Although the disaggregation paradigm has allowed us to glean insight into the implementation process, it also has several limitations. First, because the heuristic frameworks that dominate this paradigm list constructs of interest without specifying the causal relationships among them (e.g., the relationships between clinician and organizational factors) (Powell et al., 2017b) or their relationships to specific implementation outcomes (e.g., clinician fidelity to EBP, stage of implementation), causal theory testing has been limited. This overreliance on atheoretical empiricism leaves the field without an understanding of the specific causal processes that lead to increased adoption, high fidelity, and long-term sustainment of EBPs in routine practice. Second, entering many predictor variables into a single multivariate model to see “what works” can mask relationships (e.g., when a mediation or moderation effect is operating) and arbitrarily bias parameter estimates, leading to conflicting findings and difficulty in interpreting the body of scientific work. Third, this approach overlooks substantively important differences between constructs drawn from different theories by combining them into broadly defined categories (e.g., lumping many different types of motivational constructs into one category). Fourth, this approach ignores that theories are more than the sum of their parts (e.g., the way a theory conceptualizes motivation may be integral to it, but these nuances are discarded when theories are broken into their constituent parts in the disaggregation paradigm). We suggest that to move the science of implementation forward, the time has come to think beyond the disaggregation paradigm in pursuit of causal theory.
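The masking problem described above can be illustrated with simulated data. The sketch below assumes a hypothetical full-mediation chain (culture affects EBP use only through intentions); the variable names and effect sizes are invented for illustration and are not drawn from any study reviewed here. It contrasts a model with culture alone against a "kitchen-sink" model that enters culture and its mediator together.

```python
import numpy as np


def ols(y, *predictors):
    """Return OLS coefficients [intercept, b1, b2, ...] via least squares."""
    X = np.column_stack([np.ones(len(y)), *predictors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


rng = np.random.default_rng(0)
n = 5000

# Hypothetical full-mediation chain (simulated data): culture affects EBP use
# only through intentions; there is no direct culture -> EBP use path.
culture = rng.normal(size=n)
intentions = 0.6 * culture + rng.normal(size=n)
ebp_use = 0.5 * intentions + rng.normal(size=n)

# Culture alone recovers its total effect (about 0.6 * 0.5 = 0.30).
culture_alone = ols(ebp_use, culture)[1]
# Entering the mediator alongside culture pulls culture's coefficient toward 0.
culture_adjusted = ols(ebp_use, culture, intentions)[1]

print(f"culture alone: {culture_alone:.2f}")
print(f"culture adjusted for intentions: {culture_adjusted:.2f}")
```

A screening model that enters both predictors at once would suggest that culture "does not matter," whereas under the assumed chain it matters entirely through intentions; only a model that specifies the causal ordering in advance distinguishes the two readings.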

Section 2: What can be applied from established causal theories from the social sciences?

Some thought leaders have called for incorporating well-established social science theories into implementation research (Grol et al., 2007). We concur with the spirit of this recommendation; however, we believe that implementation science needs to go beyond using existing social science theories “as is” and instead adapt, develop, and test integrated theories designed specifically to explain implementation. To support this point, in this section we review the strengths and weaknesses of four well-established theories from the behavioral and organizational sciences. We chose these theories because of their salience for two important themes that emerged in our review: (a) motivation as a key construct for understanding clinician implementation, and (b) general and implementation-specific organizational functioning and capacity to support EBP implementation.

Two of the theories (i.e., Theory of Planned Behavior, Self-Determination Theory) describe motivation and its role in individual behavior change. The importance of motivation to implementation is highlighted by the disappointing results of second-wave training studies. These studies demonstrated that expert knowledge and skill are not enough to increase use of EBP, suggesting that clinician intentions may be an important avenue for future research. We purposefully selected two theories that offer different conceptualizations of motivation in order to highlight the value of considering different perspectives.

The other two theories (i.e., Organizational Culture Theory, Implementation Climate Theory) describe organizational factors and their relationship with individual behavior change. One of the important lessons learned from both second-wave training studies and third-wave disaggregation paradigm studies is that organizational context matters for implementation. Furthermore, these studies confirm that implementation is influenced both by general organizational characteristics that are common to all organizations (e.g., organizational culture) and by implementation-specific characteristics that pertain to a particular EBP or set of EBPs. To address both, we review one general organizational theory and one implementation-specific theory. The primary purposes of this section are to (a) explore how future studies might integrate and extend these and other causal theories, and (b) motivate the need for developing new integrated causal theories for implementation.

Theory of planned behavior

The Theory of Planned Behavior (TPB) has been widely tested within the social psychology literature and has been validated as highly predictive of behavior change (Armitage & Conner, 2001). Given that adult behavior change is the main focus of implementation science, TPB (Ajzen, 1988, 1991) represents a candidate causal theory for consideration. TPB identifies a parsimonious set of variables that are predictive of behavior change, all of which relate to one’s intention to perform a behavior (Albarracin, Johnson, Fishbein, & Muellerleile, 2001; Armitage & Conner, 2001; Fishbein & Ajzen, 2010; Sheeran, 2002; Sheppard, Hartwick, & Warshaw, 1988). In TPB, intention is synonymous with motivation, or the effort that one plans to exert in performing a behavior, and is a function of (a) attitudes toward the behavior, (b) subjective norms, and (c) perceived behavioral control. A behavior is most likely to be performed if intentions are high, no environmental constraints hinder the individual’s ability to enact the behavior, and the individual is capable of performing the behavior.
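One common way to write the TPB relationships described above is as a pair of additive equations, sketched below. This is a simplified rendering rather than a formulation given in this review: the weights w1 through w5 are assumed to be estimated empirically for each behavior and population, and perceived behavioral control appears in the behavior equation as a partial proxy for actual control (the environmental constraints and capability mentioned above).

```latex
\begin{aligned}
\mathrm{BI} &= w_{1}\,A_{B} + w_{2}\,\mathrm{SN} + w_{3}\,\mathrm{PBC},\\
B &= w_{4}\,\mathrm{BI} + w_{5}\,\mathrm{PBC},
\end{aligned}
```

Here B is the target behavior (e.g., delivering exposure therapy), BI is behavioral intention, A_B is the attitude toward the behavior, SN is subjective norms, and PBC is perceived behavioral control.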

This theory has only recently begun to be applied to implementation science within child psychology and psychiatry. To illustrate how TPB might be applied, we use exposure therapy, a highly underutilized evidence-based technique for child anxiety (Becker-Haimes et al., 2017). TPB identifies clinician intention to implement exposure therapy as the most robust predictor of engaging in the actual behavior (e.g., if I intend to use exposure therapy with my anxious client, then I will use exposure therapy, assuming no environmental constraints keep me from doing so). The strength of one’s intention is determined by one’s attitudes towards the behavior (i.e., perceptions of the benefits of doing exposure therapy with anxious youth), subjective norms (i.e., the belief that people whose opinion I care about, such as my colleagues, think I should use exposure therapy with my anxious client), and perceived behavioral control (i.e., how confident I feel in my ability to conduct an exposure). In one study, clinicians were randomly assigned to one of two continuing education workshops: a TPB-informed workshop or a standard continuing education workshop (Casper, 2007). Outcomes included clinician intentions and behavior in the use of an assessment tool for behavioral health. The key component of the TPB-informed workshop was an elicitation exercise to gather participants’ attitudes, social norms, and perceived control, which were then fed back into the workshop approach. Findings supported the TPB-informed workshop: clinicians who attended it demonstrated both higher intentions and higher implementation rates in the use of the assessment tool (Casper, 2007). In another study, teachers’ intentions to use EBPs for youth with autism were variable, and intentions were strongly associated with observed use of EBPs (Fishman et al., in press).

Self-determination theory

Another causal theory from the behavioral sciences literature, Self-Determination Theory (SDT), is based on the assumption that individuals have three main psychological needs: (a) the ability to act in ways that are aligned with their values (i.e., autonomy), (b) competence (i.e., feeling effective), and (c) relatedness (i.e., a need for being connected to the larger social group) (Deci & Ryan, 2002). Whereas TPB relies on a unitary theory of motivation, SDT argues that there are different types of motivation, ranging from externally regulated and controlled to internally regulated and autonomous, and that these different types of motivation differentially influence behavior (Ryan & Deci, 2000). Externally regulated motivation has a locus of causality that is either outside the person (i.e., external regulation) or alienated from the person, in that the behavior is not fully accepted as one’s own (i.e., introjected regulation). For example, a clinician might use exposure therapy with anxious clients because that is the only way to receive payment. In contrast, internally regulated motivation arises from the conscious valuing of a behavior because of its role in producing personally valued outcomes, or from the acceptance and personal importance of the behavior due to its congruence with one’s identity or values. An example is using exposure therapy because one genuinely believes it is the best possible way to help clients with anxiety. Studies of behavior in several domains support SDT by demonstrating that internally regulated motivation is associated with greater task effort, persistence, problem-solving, and performance, particularly for complicated cognitive tasks (Arnold, 2017; Deci, Koestner, & Ryan, 1999; Judson, Volpp, & Detsky, 2015). SDT has been scarcely applied in implementation science, although there have been calls for its broader application (Smith & Williams, 2017).
Two proof-of-concept studies have provided evidence that concepts from SDT, such as autonomy, relatedness, and competence, are associated with increased implementation behaviors (Lynch, Plant, & Ryan, 2005; Williams et al., 2015).

Organizational culture theory

Organizational culture theory derives from both anthropological (organizations are cultures) and sociological (organizations have cultures) perspectives, although the latter approach has dominated quantitative research in the organizational sciences and in children’s mental health services (Cameron & Quinn, 2011; Glisson & Williams, 2015; Hartnell et al., 2011). From this perspective, organizational culture is understood as a social characteristic of an organization that consists of shared assumptions, values, norms, and behavioral expectations (Glisson et al., 2008a; Hartnell et al., 2011). Organizations are believed to develop cultures in the same way that other groups develop cultures: through the actions of leaders and through shared learning processes in which group members come to adopt and share specific assumptions, norms, and behavioral expectations because of their value for group survival and success (Schein, 2010). Once a culture is established, it is transmitted to new employees through socialization processes and reinforced among group members through formal and informal sanctions, modeling, symbols, rituals, dialogue, and contingencies (Cameron & Quinn, 2011). The causal effects of culture occur through these social influence processes, which guide and constrain what clinicians pay attention to, how they perceive information, ideas, and experiences, the meanings they attach to information and experiences, their beliefs, and their willingness to exhibit certain behaviors (Ehrhart et al., 2013; Schein, 2010; Williams & Glisson, 2014). The value of organizational culture for the group is that it forms the basis for members’ shared understanding and enactment of meaningful responses to their work, to each other, and to their environment (Cooke & Rousseau, 1988). The consequences of organizational culture include homogenizing and directing employees’ attitudes, cognitions, motivation, and behavior (Klein, Dansereau, & Hall, 1994).

Returning to the example of exposure therapy for youth anxiety disorders, organizational culture theory suggests that organizational variation in the number of clinicians who use exposure therapy, the fidelity with which they use it, and the extent to which their use of it is sustained will depend in part on the cultural values, norms, and behavioral expectations that characterize clinicians’ work environments (Hartnell et al., 2011; Williams & Glisson, 2014). Optimal implementation of exposure therapy would be expected in clinics where clinicians experience shared norms and behavioral expectations that emphasize improvement in client well-being, clinician competence in up-to-date and effective treatment practices, and responsiveness to client needs (i.e., a proficient organizational culture). Conversely, suboptimal use of exposure therapy would be expected in organizational cultures that engender competing values, norms, or behavioral expectations, such as an emphasis on the optimization of billable units, timely completion of paperwork, and correct documentation.

Implementation climate theory

Implementation climate theory was originally developed to explain variation in organizational success in implementing innovations (Klein, Conn, & Sorra, 2001; Klein & Sorra, 1996). Mental health services researchers have extended and applied it to explain organizational variation in clinicians’ adoption, implementation, and sustainment of EBP (Ehrhart et al., 2014; Jacobs et al., 2014). According to implementation climate theory, clinicians within an organization seek to optimize their receipt of formal and informal rewards within the work environment by actively discerning the true, enacted priorities of an organization (versus its espoused priorities) and aligning their behavior with those priorities (Zohar & Hofmann, 2012). The theory argues that many jobs engender inherently conflicting or competing task demands, such as the demands for efficiency versus quality in service professions. To resolve these conflicting demands, employees actively scan their work environment for cues (including organizational policies, procedures, and practices, as well as formal and informal communications from supervisors and organizational leaders) that help them determine which demands should be prioritized in order to optimize their rewards within the organization (Ehrhart et al., 2013; Zohar & Polachek, 2014).

With regard to EBP implementation, once an organization has adopted a new EBP, clinicians interpret cues in the work environment regarding whether they should invest the time, effort, and energy necessary to master the new EBP or whether they should ignore it (Klein & Sorra, 1996). Based on these perceptions of implementation climate, clinicians behave in a way that optimizes their positive outcomes: either they implement the EBP because they perceive it to be truly valued, or they ignore it and focus on other behaviors because they perceive that the EBP is not a true priority for the organization (Ehrhart et al., 2014; Klein & Sorra, 1996).

Applying implementation climate theory to our exposure therapy example, suppose that a specific organization has decided to adopt exposure therapy for anxiety disorders. Given this decision by the organization’s leadership, clinicians within the organization would begin observing and interpreting the organization’s policies, procedures, and practices around exposure therapy, as well as the messages they received from supervisors and organizational leaders, to assess whether the organization was merely espousing the use of exposure therapy as a priority (e.g., for reimbursement or credentialing reasons) or was truly committed to clinicians’ competent and effective delivery of this practice. Based on these implementation climate perceptions, clinicians would align their behavior with the organization’s enacted priorities in order to optimize their receipt of desired rewards within the organization.

Limitations of existing causal theories

The causal theories described above offer a fruitful basis for better elucidating the causal processes that contribute to clinicians’ EBP implementation in child psychology and psychiatry (Grol et al., 2007). However, these theories are limited for at least two reasons. First, they do not account for the multiple levels at which implementation determinants and outcomes occur. Individual-level theories of behavior change such as TPB are not designed to account for organization-level variance in behavior; consequently, they do not provide an optimal explanation for the significant variance in implementation that occurs across organizations (Armitage & Conner, 2001; Klein et al., 1994). Conversely, organizational theories do not sufficiently account for individual-level determinants of clinicians’ EBP implementation (apart from homogenizing social forces), nor for the cross-level mechanisms through which characteristics of organizations influence clinicians’ behavior. These limitations may explain why established causal theories often explain only a modest percentage of variance in clinicians’ implementation behaviors (Eccles et al., 2012).
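The organization-level variance referred to above is conventionally quantified with an intraclass correlation. The sketch below computes ICC(1) from a one-way ANOVA, assuming equal numbers of clinicians per organization; the simulated fidelity scores and the 30% organization-level share of variance are hypothetical. The ICC estimates the proportion of variance in a clinician outcome that lies between organizations, the portion that the passage argues individual-level theories are poorly positioned to explain.

```python
import numpy as np


def icc1(scores):
    """ICC(1) from a one-way ANOVA; rows = organizations, columns = clinicians.

    Assumes every organization contributes the same number (k) of clinicians.
    """
    data = np.asarray(scores, dtype=float)
    n_orgs, k = data.shape
    grand_mean = data.mean()
    org_means = data.mean(axis=1)
    # Between-organization and within-organization mean squares.
    ms_between = k * np.sum((org_means - grand_mean) ** 2) / (n_orgs - 1)
    ms_within = np.sum((data - org_means[:, None]) ** 2) / (n_orgs * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


rng = np.random.default_rng(3)
n_orgs, k = 200, 10
# Hypothetical fidelity scores: 30% of total variance lies between organizations.
org_effect = rng.normal(scale=np.sqrt(0.3), size=(n_orgs, 1))
fidelity = org_effect + rng.normal(scale=np.sqrt(0.7), size=(n_orgs, k))

icc = icc1(fidelity)
print(f"ICC(1): {icc:.2f}")  # should land near the simulated 0.30
```

Unbalanced designs, which are typical of clinic samples, would call for a variance-components estimate from a random-intercept multilevel model instead of this balanced ANOVA formula.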

Second, existing causal theories often assume that the behavior change outcome of interest is dichotomous. This contrasts with the complex behavioral repertoires required to successfully implement the psychosocial treatments and care processes that are often the target of implementation research in child psychology and psychiatry. Psychosocial EBPs, including disorder-specific manualized treatments and more generalized interventions such as those designed to increase client engagement or to monitor treatment outcomes and use feedback, often incorporate a series of behaviors or skills. Such behaviors are not well explained by theories designed around relatively simple, binary behaviors (e.g., “did you do the behavior, yes or no?”). Furthermore, the skilled and appropriate use of EBPs requires responsiveness to dyadic interactions between the clinician and client. Such recursive processes are not easily accounted for by models designed to predict narrowly and carefully specified behaviors. Because of these limitations, we believe that well-established social science theories provide a useful starting point, but not an end point, for implementation science, and that the field needs to develop and test its own new, integrated, multilevel causal theories that explain implementation.

Section 3: Moving towards integrated causal theory in implementation science

To illustrate the type of theory integration that we believe is essential for advancing implementation science, we offer two examples in this section. First, we present an example of an integrated causal theory that could be tested to explain EBP implementation in child psychology and psychiatry. We do not suggest that all studies should use this specific causal theory; rather, we present it as an exemplar of how causal theories might be integrated across levels with an emphasis on identifying targets and mechanisms. Second, we review a recently published randomized trial that tested the cross-level causal mechanisms through which an implementation strategy increased clinicians’ use of EBP. Although neither example is perfect, we hope these ideas will spur innovation, creativity, and forward progress in the field.

Developing integrated, multilevel, causal theory: An example

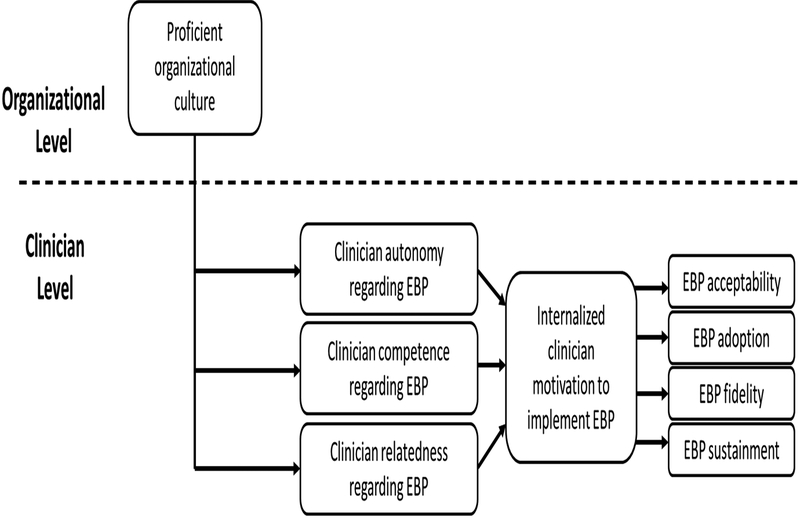

In this example, we combine organizational culture theory with self-determination theory (Cameron & Quinn, 2011; Hartnell et al., 2011; Ryan & Deci, 2017) to describe how different types of organizational culture might influence the type of motivation that clinicians experience with regard to EBP implementation and, subsequently, their behavior (see Figure 1). Broadly, the values, norms, and behavioral expectations that characterize an organization’s culture influence the extent to which clinicians’ basic psychological needs (i.e., autonomy, competence, relatedness) are met with regard to EBP implementation. This in turn determines whether clinicians experience amotivation (i.e., the lack of motivation), externally regulated motivation (i.e., engaging in a behavior solely to obtain some external reward or avoid a negative consequence), or internally regulated motivation (i.e., engaging in a behavior because of its congruence with one’s closely held values) to use EBP in their work with clients. Finally, the type of motivation that is activated shapes the level and quality of EBP implementation behaviors.

Figure 1.

Integrated Organizational Culture – Self-Determination Theory of Evidence-Based Practice Implementation

Research building on self-determination theory has shown that internalized motivation leads to greater task effort, persistence, and problem-solving in support of behavioral performance, particularly when behaviors are complex or cognitively demanding (Judson et al., 2015; Ryan & Deci, 2000, 2017). This suggests that developing internalized motivation may be critically important for mastering and implementing the complex sets of skills involved in EBPs, particularly in the face of varied and novel clinical presentations. This research has further demonstrated that internalized motivation is highest when people experience the satisfaction of three psychological needs with respect to a target behavior: autonomy (i.e., the clinician enacts the behavior for its own sake because of its congruence with personal values, rather than having it imposed from the outside or doing it to obtain some other outcome such as compensation), competence (i.e., the clinician experiences a sense of increased effectiveness in their role as they complete the behavior), and relatedness (i.e., the clinician experiences a sense of increased connection and camaraderie with others as a result of the behavior) (Ryan & Deci, 2017). We suggest that variation in organizations’ cultures may explain differences in clinicians’ experiences of these three conditions with regard to EBP implementation, and that these differences may in turn explain the different types of motivation that clinicians experience with regard to EBP and, consequently, the level and quality of their EBP adoption, implementation, and sustainment. Furthermore, given the varied clinical presentations that occur in routine practice, clinicians who have highly internalized motivation to implement EBPs would be expected to meet these clinical challenges in an optimal way, maintaining fidelity to the spirit of the EBP while also making EBP-concordant adaptations that benefit client well-being.
A supportive organizational culture could also sustain clinicians’ openness to feedback and their continued learning of an EBP when they encounter challenges.

In this formulation, organizational culture serves either as an antecedent that catalyzes clinicians’ internalized motivation to implement EBPs or as a barrier that contributes to amotivation or externalized motivation. One type of culture that might catalyze clinicians’ internalized motivation is proficient organizational culture (i.e., shared norms and behavioral expectations that clinicians prioritize improvement in client well-being, maintain competence in up-to-date treatment models, and be responsive to client needs) (Glisson et al., 2008a; Williams & Glisson, 2014, in press). By combining an emphasis on improved client well-being as the sine qua non of clinical practice with support for clinician competence in up-to-date treatment practices, proficient cultures might provide the combination of autonomy, competence, and relatedness that clinicians need to develop highly internalized motivation to use EBPs, leading to more effective EBP implementation.

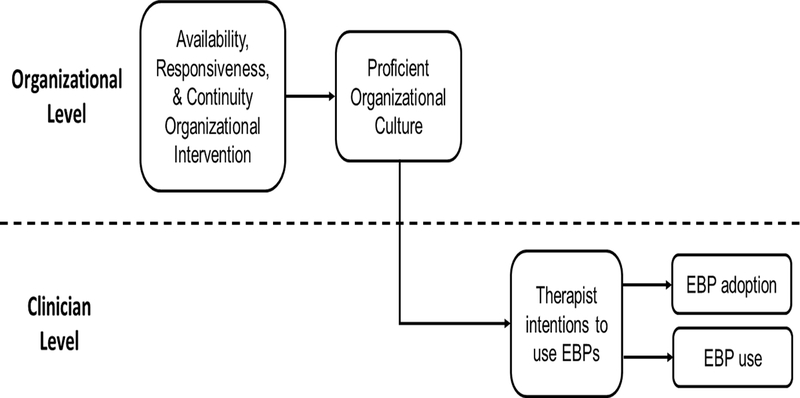

Testing integrated, multilevel, causal theory: An example

The field is beginning to move toward testing integrated causal theories. To illustrate our suggestions, we describe a recently published study with community clinicians serving youth in outpatient mental health clinics (Williams et al., 2017). In a 4-year randomized controlled trial, Williams and colleagues tested the mechanisms that linked an organizational-level implementation strategy, the Availability, Responsiveness, and Continuity (ARC) intervention (Glisson et al., 2012; Glisson, Hemmelgarn, Green, & Williams, 2013), to clinicians’ adoption and use of EBPs. Consistent with ARC’s emphasis on tailored service improvement initiatives, organizations and clinicians were not directed to implement a specific EBP; instead, they were free to select EBPs according to their own judgment. The investigators hypothesized that ARC would increase clinicians’ EBP adoption and use through a cross-level mechanism in which improvement in proficient organizational culture (organizational level) would strengthen clinicians’ intentions to use EBPs (clinician level), which in turn would increase clinicians’ EBP adoption and use (see Figure 2). Results supported this two-step serial mediation model. The ARC intervention improved proficient organizational culture midway through the intervention (d = .96), increased clinicians’ intentions to adopt EBPs by the conclusion of the intervention (d = .44), and increased clinicians’ EBP adoption (OR = 3.19) and EBP use (d = .79) 12 months after ARC was completed. Most importantly, these effects were linked in a serial mediation chain (ARC ➞ proficient culture ➞ intentions ➞ behavior) that accounted for 96% of ARC’s effect on EBP adoption and 61% of ARC’s effect on EBP use.

Figure 2.

Cross-Level Mechanisms of Change in the ARC Organizational Intervention

Note: ARC = Availability, Responsiveness, and Continuity organizational intervention; EBP = evidence-based practice. ARC explained 28% of the variance in proficient organizational culture, 39% of the organization-level variance in clinician intentions to use EBPs, 80% of the organization-level variance in EBP adoption, and 79% of the organization-level variance in EBP use. The indirect effect (through improvement in proficient culture and increased clinician intentions to use EBPs) accounted for 96% of ARC’s effect on EBP adoption and 61% of ARC’s effect on EBP use.
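The serial mediation logic described above can be made concrete with a small simulation. The Python below is a hypothetical, single-level sketch, not the analysis used by Williams and colleagues: it assumes continuous variables, ordinary least squares, and simulated data with made-up path values, whereas the actual trial involved multilevel data, organization-level randomization, and a binary adoption outcome. The helper names `ols`, `simulate`, and `serial_mediation` are illustrative. The sketch estimates the path from the strategy to culture (a1), from culture to intentions (d21), and from intentions to behavior (b2); the serial indirect effect is the product a1 × d21 × b2, and the proportion mediated is that product divided by the total effect, analogous to the percentages reported in the figure note.

```python
import random

# Hypothetical illustration only: single-level OLS serial mediation on simulated data.
# The ARC trial itself used multilevel models and organization-level randomization.


def ols(columns, y):
    """Ordinary least squares with an intercept; returns [intercept, b1, ..., bk]."""
    rows = [[1.0] + [col[i] for col in columns] for i in range(len(y))]
    k = len(rows[0])
    # Build the augmented normal equations [X'X | X'y]
    aug = [
        [sum(r[i] * r[j] for r in rows) for j in range(k)]
        + [sum(r[i] * yi for r, yi in zip(rows, y))]
        for i in range(k)
    ]
    # Gaussian elimination with partial pivoting
    for p in range(k):
        pivot = max(range(p, k), key=lambda row: abs(aug[row][p]))
        aug[p], aug[pivot] = aug[pivot], aug[p]
        for row in range(p + 1, k):
            factor = aug[row][p] / aug[p][p]
            for col in range(p, k + 1):
                aug[row][col] -= factor * aug[p][col]
    # Back substitution
    beta = [0.0] * k
    for i in range(k - 1, -1, -1):
        rest = sum(aug[i][j] * beta[j] for j in range(i + 1, k))
        beta[i] = (aug[i][k] - rest) / aug[i][i]
    return beta


def simulate(n=400, seed=1):
    """Simulated data with made-up path values (not estimates from any study)."""
    rng = random.Random(seed)
    x = [float(i % 2) for i in range(n)]                 # 1 = received the strategy
    m1 = [0.9 * xi + rng.gauss(0, 1) for xi in x]        # organizational culture
    m2 = [0.1 * xi + 0.5 * c + rng.gauss(0, 1)           # clinician intentions
          for xi, c in zip(x, m1)]
    y = [0.05 * xi + 0.1 * c + 0.6 * t + rng.gauss(0, 1)  # EBP use
         for xi, c, t in zip(x, m1, m2)]
    return x, m1, m2, y


def serial_mediation(x, m1, m2, y):
    """Decompose the total effect of x on y through the chain x -> m1 -> m2 -> y."""
    _, a1 = ols([x], m1)                      # strategy -> culture
    _, a2, d21 = ols([x, m1], m2)             # strategy, culture -> intentions
    _, direct, b1, b2 = ols([x, m1, m2], y)   # strategy, culture, intentions -> behavior
    _, total = ols([x], y)                    # total effect of the strategy
    serial = a1 * d21 * b2
    return {
        "total": total,
        "direct": direct,
        "serial_indirect": serial,
        "other_indirect": a1 * b1 + a2 * b2,  # x -> m1 -> y and x -> m2 -> y
        "prop_serial": serial / total,
    }


if __name__ == "__main__":
    effects = serial_mediation(*simulate())
    for name, value in effects.items():
        print(f"{name}: {value:.3f}")
```

With OLS fitted on the same sample, the total effect equals the direct effect plus all indirect effects exactly, which makes the decomposition a convenient check on the arithmetic; inference on the indirect effect (e.g., bootstrap confidence intervals) is omitted from this sketch.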

This type of study advances the science of implementation in three ways. First, it tests an integrated, multilevel theory designed to explain EBP implementation in child psychology and psychiatry. The theoretical model proposed in this study integrated organizational culture theory with TPB by describing how proficient organizational cultures strengthen clinicians’ intentions to use EBPs and how these intentions in turn increase clinicians’ EBP implementation. Second, the study clarifies the targets and mechanisms through which the organizational-level implementation strategy operated, thereby identifying targets for other implementation strategies. Third, it acknowledges the cross-level nature of the implementation process by examining sequential mechanisms at the organization and clinician levels. Studies of this kind are needed if implementation science is to improve population behavioral health.

Section 4: Recommendations for the field

Our review of implementation research in child psychology and psychiatry provides reason for optimism and highlights specific areas of growth for this emerging field. Calls to action to increase the implementation of EBPs in community settings have been heeded, and implementation science is now a major priority area for research funders such as the National Institutes of Health (NIH), as evidenced by a standing program announcement, an associated study section, and an annual meeting. In 2017, the NIH made 18 R01-level awards through the Dissemination and Implementation Research Program announcement, several of which were awarded through the National Institute of Mental Health and focused on child psychology and psychiatry.

This infusion of research funding has advanced the field. Investigators have confirmed the value of EBPs for improving the outcomes of community care, documented implementation gaps in community settings, shown that training approaches are necessary but not sufficient for changing care delivery, collated a wide range of determinants that may be useful for understanding implementation, and conducted observational studies. Newer-generation studies have sought to understand how these variables facilitate and hinder EBP implementation. This research has led to discoveries including the need for high clinician motivation to achieve implementation success, the power of organizational social contexts to shape implementation behavior, and the feasibility of activating these clinician and organizational targets through implementation strategies. However, there is still much to learn, particularly with regard to moving beyond the disaggregation paradigm, developing and testing integrated causal theories, and identifying the targets and mechanisms through which implementation strategies affect implementation outcomes. We conclude with several recommendations to move the field forward.

Recommendation 1: Move from observational studies of implementation barriers and facilitators to trials that include causal theory

As described, much of the research to date has focused on contextual inquiry and has used mixed methods to elucidate determinants (i.e., barriers and facilitators) associated with implementation success. Although this research has been valuable, we suggest that the next generation of studies should test causal theory. These trials will likely draw on well-established social science theories; however, we also advocate developing new integrated theories that are designed specifically to address implementation in child psychology and psychiatry and that account for the multilevel nature of implementation. Investigators should design experiments and observational studies capable of testing causal associations among putative determinants and between those determinants and established implementation outcomes that are both specific and generalizable.

Recommendation 2: Identify a core set of implementation determinants

The field has identified over 600 putative determinants of practice at multiple levels (Flottorp et al., 2013) that could be included in causal theories of implementation. This staggering number is overwhelming and creates confusion for scientists and for those hoping to implement EBPs. Researchers are encouraged to engage in contextual inquiry before beginning an implementation effort, that is, to assess the context in order to tailor implementation strategies (Flottorp et al., 2013; Powell et al., 2017a). However, there are likely common or ‘core’ determinants that are consistently important in the implementation of EBPs, such as clinician motivation and organizational culture, as well as specific determinants that depend on the intervention being implemented and the context in which implementation occurs. For example, if one is implementing a firearm safety promotion intervention in pediatric primary care, the political climate around clinicians asking parents about guns in the home (Wolk et al., 2017) may be a specific determinant that is relevant only to this implementation. To streamline the identification of important determinants, the field will need empirical and conceptual guidance on how to select the determinants that implementation strategies should target in a particular implementation effort; providing such guidance is a priority area for the field. Researchers should also consider variables that are conceptually related to implementation but not currently included in heuristic frameworks, such as psychological safety (Edmondson, 1999) and habitual processes (Potthoff et al., 2017; Presseau et al., 2014).

Recommendation 3: Conduct trials of implementation strategies with clear targets, mechanisms, and outcomes

Given the nascence of the field, it is not surprising that randomized trials conducted to date have often not incorporated clear causal theory, targets, mechanisms, and outcomes (Howarth, Devers, Moore, O’Cathain, & Dixon-Woods, 2016; Papoutsi, Boaden, Foy, Grimshaw, & Rycroft-Malone, 2016; Turner, Goulding, Denis, McDonald, & Fulop, 2016; Williams, 2016) and that implementation strategies have been mismatched with the determinants of interest (Bosch, Van Der Weijden, Wensing, & Grol, 2007). Going forward, however, trials of implementation strategies must clearly specify the causal theory underpinning the study design, with explicit attention to targets, mechanisms, and outcomes. Given NIMH’s emphasis on the experimental therapeutics approach (Insel, 2013), future studies funded by the National Institutes of Health will require investigators to specify targets and explain (a) how the implementation strategy will activate the target and (b) how the target will influence implementation outcomes. Without a clear understanding of the malleable targets that implementation strategies engage, grounded in the causal theory proposed at the outset of a study, we will not be able to prioritize the most promising and effective implementation strategies, nor will we be able to tailor implementation strategies (Powell et al., 2017a) using a precision implementation approach (Chambers, Feero, & Khoury, 2016).

Recommendation 4: Ensure that the behaviors that are core to EBPs are clearly defined

Developing a science of implementation requires precise delineation of the target outcomes or behaviors of interest. To date, the core components of many EBPs have not been clearly specified, which makes it difficult to apply social science theories that require specific outcomes. Although there has been movement toward defining a set of high-level implementation outcomes for the field (Proctor et al., 2011), this effort is often hindered because the core components of the interventions are not clearly operationalized. Implementation science may therefore need to take on a translational role that involves developing expertise in categorizing and operationalizing behavioral sequences using a consistent nosology of behavior, and applying this expertise to the wide variety of EBPs that need to be implemented. In child psychology and psychiatry, for instance, implementing psychosocial EBPs requires a nuanced understanding of their active ingredients (Kazdin, 2018; Miklowitz, Goodwin, Bauer, & Geddes, 2008) in order to determine which sequences of behaviors are core components of the intervention and which are peripheral (Damschroder et al., 2009). For example, cognitive-behavioral therapy for anxiety comprises many steps and techniques, including psychoeducation, cognitive restructuring, problem-solving, and exposure (Weersing, Rozenman, & Gonzalez, 2009), each of which is made up of additional steps. Understanding the common elements of evidence-based approaches may allow more precise specification of the target behaviors for implementation (Chorpita, Becker, Daleiden, & Hamilton, 2007), and treatment development researchers will need to provide guidance on which ingredients are needed, and in what sequence, so that implementation can be planned.
Further, because existing causal theories typically assume that the behaviors of interest are simple rather than complex, multi-step, and sequenced, new integrated causal theories will need to be sophisticated enough to predict complex behavioral repertoires as end points (Eccles et al., 2012; Papoutsi et al., 2016). This recommendation is especially important given the many different types of EBPs that improve clinical outcomes (e.g., psychotherapy protocols, client engagement strategies, feedback systems, modified care processes, and quality improvement) and because clinical and services researchers are constantly developing new innovations (e.g., personalized medicine) that need to be implemented (Garland et al., 2013; Ng & Weisz, 2016). Developing procedures to systematically operationalize these wide-ranging innovations represents a significant challenge for implementation science.
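The idea of specifying an EBP as an ordered sequence of core and peripheral behaviors can be sketched as a simple data structure. The Python below is a hypothetical illustration, not an established fidelity measure: the `Component` type, the `check_delivery` function, and especially the core/peripheral flags and intended order are assumptions chosen only to exercise the code, not claims about which CBT components are active ingredients. Given a delivered sequence of components, it reports which core components are missing and whether the delivered core components appeared out of their intended order.

```python
from dataclasses import dataclass

# Hypothetical sketch: an EBP encoded as ordered components flagged core or peripheral,
# so that a delivered sequence can be checked against the specification.
# The flags and order used below are placeholders, not clinical guidance.


@dataclass(frozen=True)
class Component:
    name: str
    core: bool   # placeholder judgment: is this component an active ingredient?
    order: int   # placeholder position in the intended sequence


def check_delivery(spec, delivered):
    """Return (missing core components, whether delivered core components were out of order)."""
    core = [c for c in spec if c.core]
    missing = [c.name for c in core if c.name not in delivered]
    # Index of the first delivery of each core component that was delivered
    first_seen = {c.name: delivered.index(c.name) for c in core if c.name in delivered}
    intended = {c.name: c.order for c in core}
    expected_order = sorted(first_seen, key=intended.get)
    actual_order = sorted(first_seen, key=first_seen.get)
    return missing, expected_order != actual_order


# Component names follow the CBT-for-anxiety example; flags and order are illustrative.
CBT_ANXIETY_SPEC = [
    Component("psychoeducation", core=True, order=1),
    Component("cognitive restructuring", core=False, order=2),
    Component("problem-solving", core=False, order=3),
    Component("exposure", core=True, order=4),
]

if __name__ == "__main__":
    print(check_delivery(CBT_ANXIETY_SPEC, ["psychoeducation", "problem-solving", "exposure"]))
    print(check_delivery(CBT_ANXIETY_SPEC, ["exposure", "psychoeducation"]))
```

A fuller specification would nest each component's own sub-steps in the same way, which is where a consistent nosology of behavior would matter most.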

Recommendation 5: Agree upon standard measures