Abstract

Research shows that neurotypical individuals struggle to interpret the emotional facial expressions of people with Autism Spectrum Disorder (ASD). The current study uses motion-capture to objectively quantify differences between the movement patterns of emotional facial expressions of individuals with and without ASD. Participants volitionally mimicked emotional expressions while wearing facial markers. Marker movement was recorded and grouped by expression valence and intensity. We used Growth Curve Analysis to test whether movement patterns were predictable by expression type and participant group. Results show significant interactions between expression type and group, and little effect of emotion valence on ASD expressions. Together, results support perceptions that expressions of individuals with ASD are different from, and more ambiguous than, those of neurotypical individuals.

Keywords: ASD, Motion Capture, Emotional Facial Expressions, Social Communication

Facial expressions are used in all human cultures as a method for sharing emotions with others (Ekman, 2004; Ekman & Friesen, 1971), and they are some of the very first social behaviors demonstrated by infants (Izard, Huebner, Risser, & Dougherty, 1980). The ability to use facial expressions to convey emotions clearly to another individual is not only crucial to transmitting one’s own intentions and basic needs, but can also communicate information that is important beyond the individual. For instance, a person expressing a fearful face can warn others that there is a threat nearby. Emotional facial expressions can even affect the way people are perceived by others: Research shows that the production of frequent smiles increases a person’s likeability, trustworthiness, and their perceived attractiveness (Lau, 1982; Otta, Lira, Delevati, Cesar, & Pires, 1994; Scharlemann, Eckel, Kacelnik, & Wilson, 2001).

Individuals with Autism Spectrum Disorder (ASD) struggle with most aspects of social communication, including their ability to use facial expressions to convey their emotions (American Psychiatric Association, 2013). In fact, idiosyncratic facial expressions are even used as a diagnostic measure for ASD in evaluative tools like the Autism Diagnostic Observation Schedule (ADOS: Lord et al., 2012), suggesting that non-canonical emotional facial expressions are fundamental to ASD. Atypical facial expressions will certainly hinder individuals with ASD from communicating their feelings clearly to others, and they may also contribute to the negative judgments neurotypical (NT) individuals make of people with ASD within seconds of exposure (Grossman, 2015; Sasson et al., 2017; Stagg, Slavny, Hand, Cardoso, & Smith, 2014).

The research on expression quality in ASD suggests that autistic emotional facial expressions simply look different from NT individuals’. This research relies on NT individuals rating the appearance of facial expressions produced by people with and without ASD. In one such study, raters deemed expressions of individuals with ASD as of lower quality (on a scale from “poor” to “good”) than expressions made by both NT individuals and by individuals with other neurodevelopmental disorders, like Down Syndrome (Langdell, 1981). In others, autistic expressions have been rated as relatively less “natural” (Faso, Sasson, & Pinkham, 2015) or more “awkward” (Grossman, Edelson, & Tager-Flusberg, 2013). NT individuals also seem to struggle to interpret emotional facial expressions made by people with ASD (Brewer et al., 2016). For instance, Love (1993) showed that NT individuals are less accurate at identifying the emotion an expression conveys when it is produced by an individual with ASD, so that they cannot discern a sad face from an angry one.

The underlying features of autistic expressions that make them appear atypical/ambiguous to NT individuals have yet to be identified. Some research has attempted to determine these features by asking human coders to assess the facial muscle movements in expressions made by people with ASD. In this research, coders use well-established facial-coding systems that have been designed so that human beings can objectively classify facial movements (Ekman & Friesen, 1971, 1977; C. Izard, 1983; Kring & Sloan, 2007). Coders watch video-recordings of facial expressions in slow motion, and then assign codes to changes in appearance (i.e., muscle activity in the eyebrows, nose, cheeks, mouth, etc.). Yoshimura et al. (2015) applied two such systems to assess facial expression quality in adults with ASD. Using the Facial Action Coding System (FACS: Ekman & Friesen, 1977) and the Facial Expression Coding System (FACES: Kring & Sloan, 2007), these researchers coded expressions of participants with and without ASD as they spontaneously and intentionally mimicked expressions. Yirmiya et al. (1989) used the Maximally Discriminative Facial Movement Coding System (MAX), developed by Izard (1983), to study the facial expressions of preschoolers during a social interaction. Their participants not only included children with ASD and NT children, but also preschoolers who had a cognitive impairment, but did not have ASD.

In both studies, participants with ASD made fewer spontaneous facial expressions overall, which provides objective support for perceptions of overall flatter emotional affect in ASD (e.g., Kasari et al., 1993; Stagg et al., 2014). In addition, Yoshimura et al. (2015) found that spontaneously imitated expressions in ASD contained muscle movements that were incongruous with the expression being mimicked. For example, participants with ASD were more likely than NT participants to raise the corners of their lips into a smile while observing an angry face. Such incongruent expressions, if they occur during a social interaction, might be off-putting to NT individuals and difficult for them to interpret. The findings from Yirmiya et al. (1989) add to this story. They found that expressions in ASD involve atypical and incongruous combinations of facial-muscle contractions, for instance, simultaneous blends of angry and joyful expressions. These blends were unique to ASD; they were not observed in any of the NT children, nor were they produced by any of the children with a non-autistic intellectual disability. The authors conclude that such unusual combinations cause the expressions of people with ASD to be ambiguous and unclear, and could contribute to negative judgments of ASD expressions by NT individuals.

While these studies provide some possible explanations for what might make autistic facial expressions atypical, it is arguable that the use of human coders is not an ideal tool for studying expressivity in ASD. First of all, facial-expression coding systems are useful for characterizing static expressions, such as photographs of faces, but they are not as easily applied to dynamic facial expressions, where the features of the face shift seamlessly over time. Because dynamic expressions are what we typically experience in real-life social interactions, it is crucial to analyze these temporal changes. This is especially true since such changes are meaningful: They can represent transitions from one facial expression to another or from a neutral face to an emotive face. Another reason that facial-expression coding systems may not be ideal for studying facial-expression difference in ASD is that they do not result in truly objective data. This is particularly true when coders attempt to map these facial-muscle movements to emotional affect (disgust, anger, etc.). In the end, results still rely on NT human coders to interpret the meaning of facial movements in ASD. Since it has already been established that NT individuals find autistic expressions to be unclear and odd, it is perhaps not surprising that NT coders categorize facial expressions of individuals with ASD as incongruous and unusual.

Facial electromyography (fEMG) is a more objective measure of facial feature movements. FEMG measures the electrical impulses of facial muscle contractions. However, the application of fEMG is limited to two muscle groups responsible for frowning (corrugator supercilii) and smiling (zygomaticus major). FEMG has been applied to the study of spontaneous and voluntary mimicry of facial expressions in ASD. Some studies have shown that the timing of muscle movements in spontaneous, but not voluntary, mimicry of static facial expressions is delayed as compared to NT individuals (McIntosh, Reichmann-Decker, Winkielman, & Wilbarger, 2006; Oberman, Winkielman, & Ramachandran, 2009). Others have shown that individuals with ASD show atypical muscle activation and undifferentiated muscle activity when they are expressing different types of emotions (e.g., angry vs. happy). For instance, one study showed an atypical combination of muscle activity in children with ASD during the spontaneous mimicry of photographs of fearful faces (Beall, Moody, McIntosh, Hepburn, & Reed, 2008). Another study showed that the facial muscle activity of children with ASD was the same whether they watched dynamic positive (happy) or negative (angry and fearful) facial expressions (Rozga, King, Vuduc, & Robins, 2013).

While fEMG is more objective than perceptual facial-expression coding, it cannot assess qualitative features of expressions, nor can it be used to define facial movements beyond smiles and frowns. FEMG is useful for determining whether and when two specific muscle groups are moving, but it does not identify the corresponding movement of facial features with relation to one another, nor does it capture the movement of all facial features during an expression. Therefore, it is difficult to use fEMG to try to determine what makes autistic facial expressions appear different.

A promising method for objective analysis of facial movements is the use of Motion Capture (MoCap) technology. MoCap allows for the quantification of movement patterns of multiple facial features across time. Since MoCap captures movements from the skin surface area over the entire face, the resulting data correspond directly to what humans see, rather than the underlying twitches of muscles recorded by fEMG. Further, MoCap provides a measurement with high temporal resolution, so that visible changes in facial movement patterns in dynamic expressions can be tracked every few milliseconds.

Using a FACS-based array of 32 markers across the entire face, we have used MoCap to show that individuals with ASD make smiles that are more asymmetrical and less fluid than those of NT individuals (Metallinou, Grossman, & Narayanan, 2013). In two other papers, we analyzed a variety of expressions, and found that individuals with ASD made facial expressions that were less complex than those of NT individuals (Guha, Yang, Grossman, & Narayanan, 2016; Guha et al., 2015), where complexity indexes the amount of repetition in facial movement patterns over time (more complex = less repetition). In these previous analyses, we analyzed marker movement in separate regions of the face; in the current paper, we use distances between markers in order to measure muscle movements and contractions that are indicative of emotional expressions (Ekman & Friesen, 1977).

In the current study, participants watched videos of dynamic facial expressions and mimicked them while MoCap was recorded. We compared facial movement patterns across groups (NT vs. ASD) for different types of stimuli (more vs. less intense emotions and positive vs. negative emotions). We predicted that facial expressions in ASD would show more overlap between different emotion types (positive vs. negative and intense vs. not intense) than NT individuals since previous work has reported blended and ambiguous spontaneous expressions in ASD (Beall et al., 2008; Rozga et al., 2013; Yirmiya, Kasari, Sigman, & Mundy, 1989). Additionally, we hypothesized that high-intensity emotions would result in more movement than low-intensity emotions for both groups. And, because we used changes in distance between positions of facial features as our measure of facial-feature movement, we also predicted that positive expressions would contain more movement than negative expressions due to increased horizontal distances caused by smiling. Increased horizontal distance (between mouth corners, lip corners, etc.) has been used to identify positive expressions (smiles and laughter) in previous work (e.g., Matsugu, Mori, Mitari, & Kaneda, 2003). In the current analysis, we focused on MoCap’s ability to capture whole-face movement, so that we could obtain an objective measure of what is perceived during dynamic expressions: i.e., the whole face moving at once, rather than focusing on the movements of individual components.

Methods

Participants

Nineteen (19) children and adolescents with ASD (2 female), and 18 NT children (1 female) participated. See Table 1 for descriptive statistics. Participant groups did not differ significantly on age, gender, non-verbal IQ as measured by the Leiter-R, and receptive vocabulary as measured by the PPVT-4 (p > 0.10 for all comparisons).

Table 1.

Ages, gender, IQ and language scores for participants in each group

| Group | Gender | Age | Leiter-R | PPVT-4 Stand. Score |
|---|---|---|---|---|
| ASD (N = 19) | 17 male | M = 12;8, Range = 8;5 – 19 | M = 105 (13.7) | M = 108 (17.6) |
| TD (N = 18) | 17 male | M = 12;11, Range = 8;8 – 17;11 | M = 110 (10.3) | M = 119 (16.7) |

The ADOS (Lord et al., 2000) was conducted by a research-reliable administrator in order to confirm ASD diagnosis for the participants in the ASD cohort. These data were collected in 2011, before the second edition of the ADOS (ADOS-2) was released.

Stimuli

Participants watched thirty-six short videos from the Mind Reading CD (Baron-Cohen & Kingsley, 2003). Each video presents an actor portraying an emotion through facial expressions. The videos are silent and last between 2 and 9 seconds, with the majority of them (22) lasting 5 seconds. Across the videos, a variety of emotions are represented, including happiness, anger, sadness, surprise, fear and disgust. Some videos include emotional transitions, like surprise transitioning into happiness. We elected to use dynamic (versus static) expressions because they have been shown to be more easily recognizable than static expressions in both NT and ASD populations (Arsalidou, Morris, & Taylor, 2011; Uono, Sato, & Toichi, 2010). Further, dynamic expressions are more ecologically valid than static expressions, since real human-to-human interaction involves the processing and production of moving facial features. The 36 expressions used in this study are a subset of those included in the Mind Reading CD. The CD contains 412 videos, which are divided into 24 types of expressions. We selected the seven categories that best corresponded to the six universal facial expressions, namely sad, angry, happy, afraid, excited (as a variant of happy), surprised, and disgusted, and eliminated videos that fell into the other 17 categories. We then showed this set of videos to 10 college students and asked them, “Could this expression be ‘X’?”, where “X” was the name of the larger category, for instance, “Could this expression be ‘afraid’?” When at least 8 students agreed that the facial expression in the video matched the target emotion label, we selected that video for the stimulus set. This resulted in 42 videos, with 4 to 7 videos in each of the categories. We ultimately eliminated the six “excited” videos, so that all remaining stimulus videos (36) represented one of the 6 universal emotion types (Ekman & Friesen, 1971).
Even though these videos had been categorized as one of the 6 basic emotional categories (both in the Mind Reading CD’s categorization and during our lab’s stimuli-selection procedure), it is important to note that the original labels for many of the videos were more complex emotions (e.g., complaining, confused, and cheeky) and that some of the expressions within each of the six categories were quite different from each other. We therefore re-categorized all expressions into two binary metrics (see analysis section) to more accurately reflect the underlying expressions and to increase power.

Procedure

Participants sat in a chair with a 30” (resolution: 2560×1600) computer screen positioned at a comfortable distance in front of them with the monitor tilted at a 15-degree angle. The 36 video stimuli were presented on this screen. These stimulus videos were split into two lists of 18, A and B. The presentation of lists was alternated so that half of the participants saw list A first and half saw list B first. To prevent order effects, the presentation order of the stimuli within these lists was reversed so that, for example, those participants who saw list A first saw the items in list A in the opposite order from those who saw list A second.

Participants were told to mirror the facial movement the actors made in the second set of 18 videos (list A for half the participants and list B for the others). Participants were instructed to try to sync their facial movements so that they were simultaneous to the actors’ movements.

Motion-Capture Recording

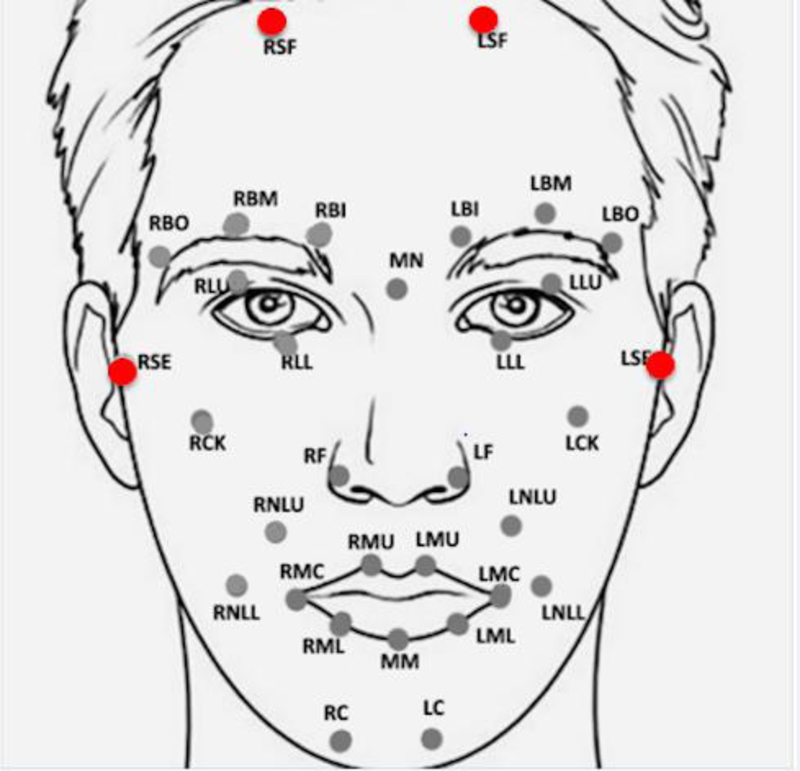

Motion-capture data were recorded at 100 frames per second (fps) using the VICON MX-T40 camera system in a room specifically set up for optimal use of the cameras. We attached 32 reflective markers (4–10 mm diameter, depending on location) to participants’ faces at defined landmarks with high movement involvement in facial expression production (Trotman et al., 1998). See Fig 1.

Fig 1.

Positions of 32 reflective markers, including 4 larger stabilizer markers (red) and 28 smaller markers (gray)

Four larger markers (10 mm in diameter, colored red in Figure 1) were positioned on the sides of the forehead and on both temples. These markers were placed in locations where facial skin does not move and were used to track head movements in all three planes. Twenty-eight (28) smaller markers (4 mm in diameter) tracked movements of facial features. Marker distribution was derived from the 92-marker template developed by The Digital Concepts Group, Inc. of House of Moves (Hauck, 2007) for the purposes of digitally animating human facial movements and expressions in the movie industry. The Hauck (2007) template was based on basic facial movement patterns identified in the Facial Action Coding System (Ekman et al., 1978).

Motion capture was recorded from the onset of each stimulus presentation to the end of the participants’ movement.

Motion-Capture Data Processing

Normalization

Data were normalized to smooth out variations in feature distances due to subject-specific facial structure. This way, our analysis could focus on variability related to facial movements, rather than facial features. Normalization procedures followed those utilized in Metallinou et al. (2013): Individuals’ mean marker coordinates were shifted to match the global mean coordinates computed across all subjects.
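The mean-shift idea described above can be illustrated with a short Python sketch. It is not the procedure from Metallinou et al. (2013), whose details are not reproduced here. It assumes that the shift is applied per marker and per axis, and the function name `normalize_subjects` and the data layout are hypothetical.

```python
from statistics import fmean


def normalize_subjects(subject_frames):
    """Shift each subject's markers so their mean position matches the global mean.

    subject_frames maps a subject id to a list of frames; each frame is a list
    of (x, y, z) tuples, one per marker. Assumption: the shift is computed
    separately for every marker and axis.
    """
    def mean_markers(frames):
        n_markers = len(frames[0])
        return [
            tuple(fmean(frame[m][axis] for frame in frames) for axis in range(3))
            for m in range(n_markers)
        ]

    subject_means = {s: mean_markers(f) for s, f in subject_frames.items()}
    n_markers = len(next(iter(subject_means.values())))

    # Global mean: average of the per-subject mean positions for each marker.
    global_mean = [
        tuple(fmean(means[m][axis] for means in subject_means.values())
              for axis in range(3))
        for m in range(n_markers)
    ]

    normalized = {}
    for s, frames in subject_frames.items():
        offsets = [
            tuple(g - own for g, own in zip(global_mean[m], subject_means[s][m]))
            for m in range(n_markers)
        ]
        normalized[s] = [
            [tuple(c + o for c, o in zip(frame[m], offsets[m]))
             for m in range(n_markers)]
            for frame in frames
        ]
    return normalized
```

Because every frame of a subject receives the same offset, frame-to-frame movement is preserved while between-subject differences in resting marker position are removed.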

Artifact detection and correction

Data visualization tools were developed to visually inspect the Motion Capture sequence and correct for artifacts. Some data contained gaps where certain marker positions were missing. Gaps occurred when markers were occluded from the cameras’ view. This happened when participants turned their heads away from the cameras, moved their hands in front of their face, et cetera. Missing marker trajectories were interpolated to fill in gaps shorter than 1 s, using a cubic Hermite spline interpolation, as described in Metallinou et al. (2013). Trials with gaps larger than 1 s were excluded from analysis, so that there were fewer than 18 stimulus recordings for some participants. After this process, we ended with 475 usable trials, or around 13 trials per participant.
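As a rough illustration of the gap-handling rule, the following Python sketch fills short gaps in a single coordinate series with a cubic Hermite segment and flags trials with long gaps as unusable. It makes several assumptions that the text does not specify: endpoint tangents are estimated by one-sided finite differences, a gap of exactly 1 s is treated as too long, and gaps touching the start or end of a recording make the trial unusable. The name `fill_gaps` is hypothetical.

```python
def fill_gaps(series, fps=100, max_gap_s=1.0):
    """Fill short runs of None in a 1-D marker coordinate series.

    Returns (filled_series, usable). usable is False when a gap is too long
    or touches the start or end of the recording (both are assumptions).
    """
    filled = list(series)
    n = len(filled)
    i = 0
    while i < n:
        if filled[i] is not None:
            i += 1
            continue
        start = i
        while i < n and filled[i] is None:
            i += 1
        end = i  # first valid index after the gap, or n if none exists
        if start == 0 or end == n or (end - start) >= max_gap_s * fps:
            return list(series), False

        left, right = start - 1, end
        p0, p1 = filled[left], filled[right]
        span = right - left
        chord = (p1 - p0) / span
        # Per-frame tangents from neighbouring samples, falling back to the chord.
        m0 = p0 - filled[left - 1] if left >= 1 else chord
        has_next = right + 1 < n and filled[right + 1] is not None
        m1 = filled[right + 1] - p1 if has_next else chord

        for k in range(start, end):
            t = (k - left) / span
            h00 = 2 * t**3 - 3 * t**2 + 1
            h10 = t**3 - 2 * t**2 + t
            h01 = -2 * t**3 + 3 * t**2
            h11 = t**3 - t**2
            filled[k] = h00 * p0 + h10 * span * m0 + h01 * p1 + h11 * span * m1
    return filled, True
```

In practice the same routine would be applied to the x, y and z series of every marker, and a trial would be dropped if any of its series came back as unusable.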

Distances

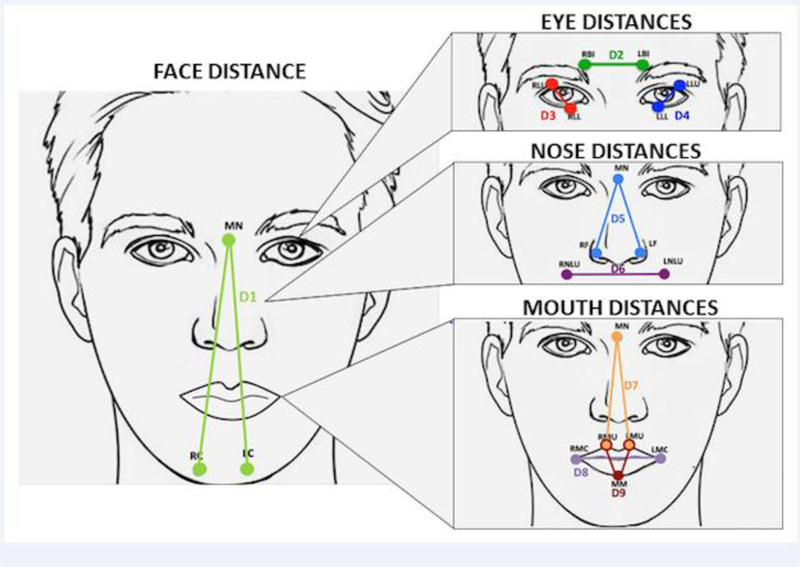

To categorize facial movement, we calculated 9 distances between markers. Distances were selected to correspond to facial muscle movements and contractions that are indicative of emotional expressions (Ekman & Friesen, 1977), including: eye opening/shutting (D3 and D4), eyebrow furrowing and widening (D2), nose wrinkling/lengthening and flaring (D5 and D6, respectively), upper lip raising (D7), mouth widening/narrowing (D8), mouth opening/closing (D9), and face lengthening, a combination of eyebrow raising/lowering and/or mouth opening/closing (D1). See Fig 2 and Table 2. These distances are computed using the Euclidean distance between markers. For example, D2 is the distance between markers RBI $(x_1, y_1, z_1)$ and LBI $(x_2, y_2, z_2)$, and is computed as $\sqrt{(x_1 - x_2)^2 + (y_1 - y_2)^2 + (z_1 - z_2)^2}$.

Fig 2.

Nine distances selected for analysis: 1 face distance (Left), 3 eye distances, 2 nose distances, and 3 mouth distances (Right)

Table 2.

Description of 9 facial distances selected for analysis

| Distance | Name | Markers at Distance Endpoints | Movement |
|---|---|---|---|
| D1 | Face Vertical | Midline Nose to (Right Chin & Left Chin) | Face lengthening/shortening |
| D2 | Inner Eyebrow Horizontal | Right Brow Inner to Left Brow Inner | Inner eyebrow widening/narrowing |
| D3 | Left Eyelid Vertical | Left Lid Lower to Left Lid Upper | Left eyelid opening/closing |
| D4 | Right Eyelid Vertical | Right Lid Lower to Right Lid Upper | Right eyelid opening/closing |
| D5 | Nose Vertical | (Right Nostril & Left Nostril) to Midline Nose | Nose lengthening/shortening (“wrinkling”) |
| D6 | Nasal-lip Horizontal | Left Labionasal to Right Labionasal | Nasal-lip widening/narrowing (“deepening”) |
| D7 | Upper Lip Vertical | (Right Mouth Upper & Left Mouth Upper) to Midline Nose | Upper lip raising/lowering |
| D8 | Mouth Horizontal | RMC to LMC | Mouth widening/narrowing |
| D9 | Mouth Vertical | (RMU & LMU) to MM | Mouth opening/closing |
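The distance computations in Table 2 can be sketched in a few lines of Python. Distances between two single markers (such as D2) follow directly from the Euclidean formula. For distances whose endpoint is a pair of markers (such as D1), the text does not say how the pair is combined; the sketch below assumes the midpoint of the pair is used. The function names are hypothetical.

```python
import math


def marker_distance(a, b):
    """Euclidean distance between two markers given as (x, y, z) tuples."""
    return math.dist(a, b)


def midpoint(a, b):
    """Point halfway between two markers."""
    return tuple((p + q) / 2 for p, q in zip(a, b))


def distance_to_pair(single, pair_a, pair_b):
    """Distance from one marker to a marker pair.

    Assumption: the pair is summarized by its midpoint; the source does not
    state how paired endpoints were combined.
    """
    return marker_distance(single, midpoint(pair_a, pair_b))


# Example with made-up coordinates (mm): D2 between the inner brow markers.
rbi = (10.0, 50.0, 0.0)
lbi = (-10.0, 50.0, 0.0)
d2 = marker_distance(rbi, lbi)
```

Applying these functions frame by frame yields one time series per distance, which is the form used in the later analysis steps.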

Stimulus Categorization

Each stimulus video shows a unique series of facial expressions and movements. In order to increase the power of our analyses by grouping stimuli, we categorized videos according to two binary metrics: Intensity of expression (High or Low) and Valence of expression (Positive or Negative).

To reliably categorize the stimuli, we presented the 36 videos to 22 adults (14 females, M age = 22) and asked them to judge each video on these two measures (Intensity and Valence). They were given a binary choice for both measures (high/low and positive/negative, respectively). Stimulus videos were categorized as Positive/Negative and High/Low when more than two-thirds of participants agreed on categorization. All videos had higher than 67% agreement (chance) for Valence, and so all videos were categorized as either Positive or Negative. For Intensity, there were eight stimulus videos that received less than 67% agreement. For instance, 11 participants labeled a video of a man acting “cheeky” as High Intensity and 11 as Low Intensity. These eight stimuli (“Medium Intensity”) were excluded from analyses where Intensity was used as a predictor variable but remained in Valence comparisons. Twenty-four (24) videos were determined to show Negative valence, 12 Positive. Sixteen (16) videos were categorized as High Intensity and 12 were categorized as Low Intensity. The number of videos was not evenly distributed across the six possible categories (e.g., High Intensity Positive, Medium Intensity Negative, etc.), but the difference in proportions was not statistically significant (χ² = 2.63, p = 0.269).
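The two-thirds agreement rule can be expressed as a small Python function. This is only a sketch of the decision rule as described; the function name `categorize` and its arguments are hypothetical. Integer arithmetic is used so that the "more than two-thirds" comparison is exact.

```python
def categorize(votes_for_a, n_raters, label_a, label_b):
    """Return the label chosen by more than two-thirds of raters, else None."""
    votes_for_b = n_raters - votes_for_a
    if 3 * votes_for_a > 2 * n_raters:
        return label_a
    if 3 * votes_for_b > 2 * n_raters:
        return label_b
    return None  # no sufficient agreement, e.g. the "Medium Intensity" videos


# The "cheeky" video: 11 of 22 raters chose High Intensity.
cheeky = categorize(11, 22, "High", "Low")
```

With 22 raters, a label needs at least 15 votes to pass this rule, so the evenly split "cheeky" video receives no Intensity label.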

Time

Motion capture recordings ran from 2 seconds (1 trial of 475) to 11 seconds (13 trials). So that we could compare across trials, we trimmed all longer trials to the median trial length of 600 samples (6 seconds at 100 fps) from trial onset.

Analysis

We averaged marker distances (Figure 2) together to calculate overall facial movement for each participant for each stimulus video. Before calculating this average, we used correlations between the 9 distances to identify redundant distances, since we did not want to skew our results by over-representing such distances. Vertical nose and mouth (D5 and D9) were highly correlated with one another (R = 0.99) and both were highly correlated with vertical face, D1 (R > 0.80). From these three distances, we included only vertical face (D1), on the assumption that mouth/nose lengthening/shortening would contribute to vertical face distances and would therefore be represented in the D1 value (along with other meaningful movements, like eyebrow raising/lowering). The remaining six distances (D2, D3, D4, D6, D7, and D8) showed minimal correlations with one another and with D1 (R < 0.19). We averaged the seven unrelated distances (D1 plus these six) together and used this average to explore movement patterns in subsequent analyses.
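The redundancy check and averaging step can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: distances are considered in a priority order (D1 first), a distance is dropped when its absolute Pearson correlation with an already kept distance exceeds a threshold (0.80 is used here, echoing the reported R > 0.80), and the kept distances are averaged without rescaling. The function names are hypothetical.

```python
import math
from statistics import fmean


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (non-constant)."""
    mx, my = fmean(x), fmean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def select_distances(series_by_name, threshold=0.80):
    """Keep distances in dict order, skipping any that correlate strongly
    with a distance that has already been kept."""
    kept = []
    for name, series in series_by_name.items():
        if all(abs(pearson(series, series_by_name[k])) <= threshold for k in kept):
            kept.append(name)
    return kept


def overall_movement(series_by_name, kept):
    """Average the kept distances at every time sample."""
    n_samples = len(series_by_name[kept[0]])
    return [fmean(series_by_name[k][t] for k in kept) for t in range(n_samples)]
```

Listing D1 before D5 and D9 in the input dictionary reproduces the choice described above, where the vertical face distance is retained and the two distances that track it closely are dropped.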

We used growth curve analysis (GCA) to compare the amount of overall movement and analyze movement patterns across time between groups (NT and ASD) and between stimulus type (positive vs. negative valence and high vs. low intensity; Mirman, 2014). GCA is a multilevel regression technique designed for analysis of data across a time course. In GCA, time is transformed into independent, polynomial vectors. The approach provides a model of the impact of differences between conditions and groups on features of condition curves of movement over time (Mirman, Dixon, & Magnuson, 2008).

In our analysis, we modeled time as linear, quadratic and cubic. We used GCA to analyze overall movement from the onset of movement to 600 frames later. In the first comparison, fixed effects included Group (ASD and NT) and Valence (positive and negative). In the second comparison, fixed effects included Group (ASD and NT) and Intensity (high and low).
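One common way to obtain independent linear, quadratic and cubic time terms is to orthogonalize the powers of a centered time index. The Python sketch below does this with Gram-Schmidt orthogonalization for a 600-sample window. It is an illustration of the general idea only; the text does not state which software or routine produced the polynomial terms, and the function name `orthogonal_time_terms` is hypothetical.

```python
import math


def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def orthogonal_time_terms(n_samples, degree=3):
    """Return orthonormal polynomial time vectors of order 1..degree.

    Each vector is orthogonal to a constant (intercept) vector and to every
    lower-order term, so the terms are uncorrelated with one another.
    """
    center = (n_samples - 1) / 2
    t = [i - center for i in range(n_samples)]
    basis = [[1.0] * n_samples]  # constant term, used only for orthogonalization
    for d in range(1, degree + 1):
        v = [x**d for x in t]
        for b in basis:
            coef = dot(v, b) / dot(b, b)
            v = [vi - coef * bi for vi, bi in zip(v, b)]
        norm = math.sqrt(dot(v, v))
        basis.append([vi / norm for vi in v])
    return basis[1:]


linear, quadratic, cubic = orthogonal_time_terms(600)
```

These three vectors would then enter the multilevel regression as predictors, alone and in interaction with Group and with Valence or Intensity, so that effects on the intercept, slope and curvature of the movement curves can be estimated separately.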

Results

Tables 3–6 show all effects. Only significant effects (p < 0.05) are discussed in the body of the text.

Table 3.

Valence and group GCA results. The estimates are for Valence, Group, and Valence-by-Group interaction terms (standard errors are in parentheses). The left section shows results for the positive emotions versus negative emotions. The middle section shows results for the ASD group relative to NT group. The right section shows interactions between these two comparisons. Marginal R²: 0.025; conditional R²: 0.572.

| Term | Valence: Estimate (SE) | Valence: t | Valence: p < | Group: Estimate (SE) | Group: t | Group: p < | Valence × Group: Estimate (SE) | Valence × Group: t | Valence × Group: p < |
|---|---|---|---|---|---|---|---|---|---|
| Fixed | 0.700 (0.253) | 2.76 | 0.01 | 1.71 (1.47) | 1.16 | n.s. | 0.020 (0.349) | 0.35 | n.s. |
| Linear | 0.312 (0.136) | 2.30 | 0.05 | −0.800 (0.533) | 1.50 | n.s. | 0.452 (0.186) | 2.43 | 0.05 |
| Quadratic | −0.241 (0.134) | −1.80 | 0.1 | −1.340 (0.810) | −1.80 | 0.1 | −1.101 (0.184) | −5.99 | 0.0001 |
| Cubic | −0.131 (0.133) | −0.98 | n.s. | −0.161 (0.261) | −0.62 | n.s. | −0.279 (0.184) | −1.52 | n.s. |

Table 6.

Intensity GCA results within group. The left half shows the results of GCA for NT participants (marginal R²: 0.065; conditional R²: 0.362), and the right half for participants with ASD (marginal R²: 0.011; conditional R²: 0.633).

| Term | NT: Estimate (SE) | NT: t | NT: p < | ASD: Estimate (SE) | ASD: t | ASD: p < |
|---|---|---|---|---|---|---|
| Fixed | 0.841 (0.189) | 4.44 | 0.0001 | 1.315 (0.408) | 3.22 | 0.01 |
| Linear | 0.214 (0.058) | 3.67 | 0.001 | −0.795 (0.166) | −4.80 | 0.0001 |
| Quadratic | 0.122 (0.058) | 2.12 | 0.05 | 0.163 (0.163) | 0.10 | n.s. |
| Cubic | 0.102 (0.058) | 1.78 | 0.1 | −0.616 (0.166) | 3.72 | 0.001 |

Valence

The model included participant-by-Valence random effects (intercept and slope) on all time terms. See Table 3 for all effects.

There was a significant effect of Valence on the intercept term, indicating more overall movement for positive emotions relative to negative emotions (Estimate = 0.70, SE = 0.253, p < 0.01).

There was a significant positive effect of Valence on the linear term, indicating a shallower slope for positive as compared to negative emotions; that is, distances in the Negative trials decrease more than in the Positive trials (Estimate = 0.31, SE = 0.14, p < 0.05).

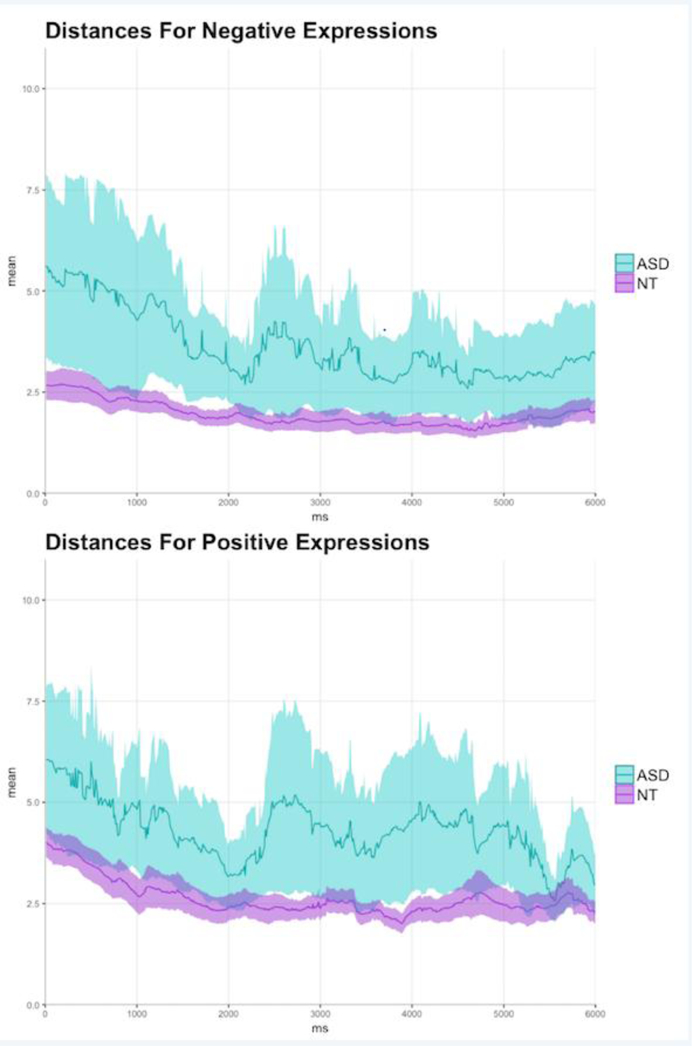

There were also significant effects of the interaction between Group and Valence on the linear (positive) and quadratic (negative) terms of the movement curves. The former interaction indicates smaller differences in rise and fall rates between Positive and Negative emotions for the ASD group (Estimate = 0.45, SE = 0.19, p = 0.015). The latter indicates a steeper rise and fall shape (an inverted-U shape) for Positive vs. Negative emotions in the ASD group as compared to the NT group (Estimate = −1.10, SE = 0.18, p < 0.0001). This latter interaction is interesting, since all other effects of Group (and Group by Valence) were not significant. See Figure 3 for movement patterns by Valence.

Fig 3.

Overall movement for negative (top) and positive (bottom) emotions for NT and ASD groups

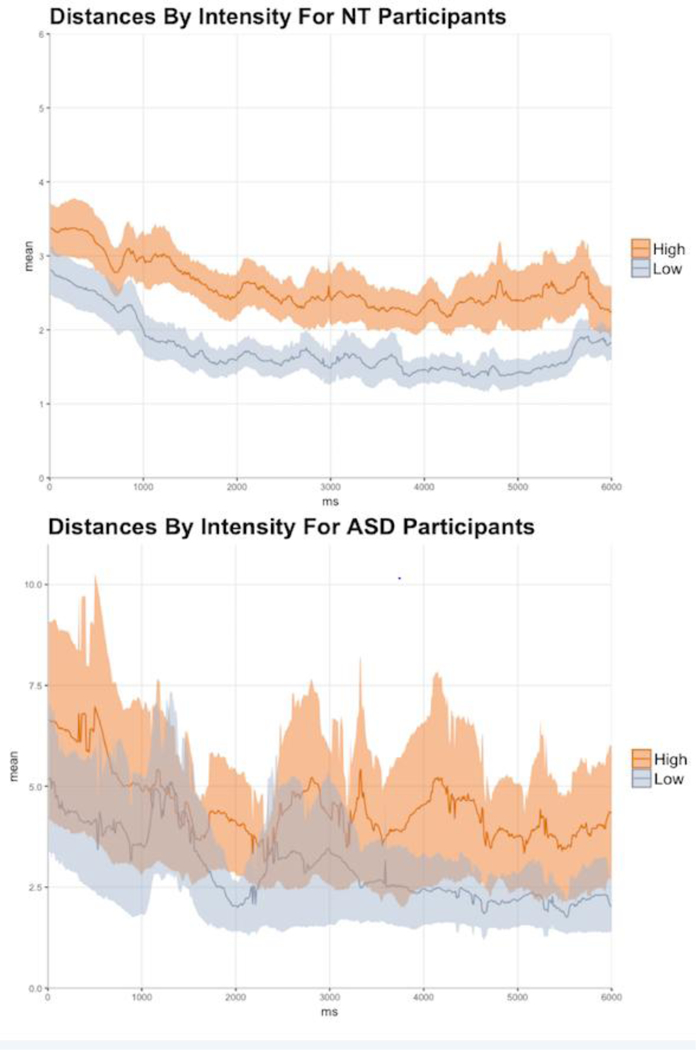

The significant interactions between Group and Valence motivated us to compare movement patterns for negative vs. positive emotions within each group. We therefore followed the across-group analysis with within-group comparisons. See Figure 4 for graphs showing movement patterns within each group.

Fig 4.

Overall movement for emotions with different valence (positive and negative) for NT group (top) and ASD group (bottom). Note: There are different scales on the y-axis (movement axis) for the two groups

In these models, time was again modeled as linear, quadratic and cubic, but we included only one fixed effect, Valence (Positive and Negative). The models again included participant-by-Valence random effects (intercept and slope) on all time terms. See Table 4.

Table 4.

Valence GCA results within group. The left half shows the results of GCA for NT participants (marginal R²: 0.063; conditional R²: 0.349), and the right half for participants with ASD (marginal R²: 0.008; conditional R²: 0.588).

| Term | NT: Estimate (SE) | NT: t | NT: p < | ASD: Estimate (SE) | ASD: t | ASD: p < |
|---|---|---|---|---|---|---|
| Fixed | 0.712 (0.228) | 3.14 | 0.01 | 0.393 (0.302) | 1.30 | n.s. |
| Linear | 0.015 (0.057) | 0.26 | n.s. | 0.235 (0.163) | 1.44 | n.s. |
| Quadratic | 0.201 (0.057) | 3.50 | 0.001 | −0.345 (0.160) | −2.16 | 0.05 |
| Cubic | −0.131 (0.133) | −0.98 | n.s. | −0.298 (0.160) | −1.85 | 0.1 |

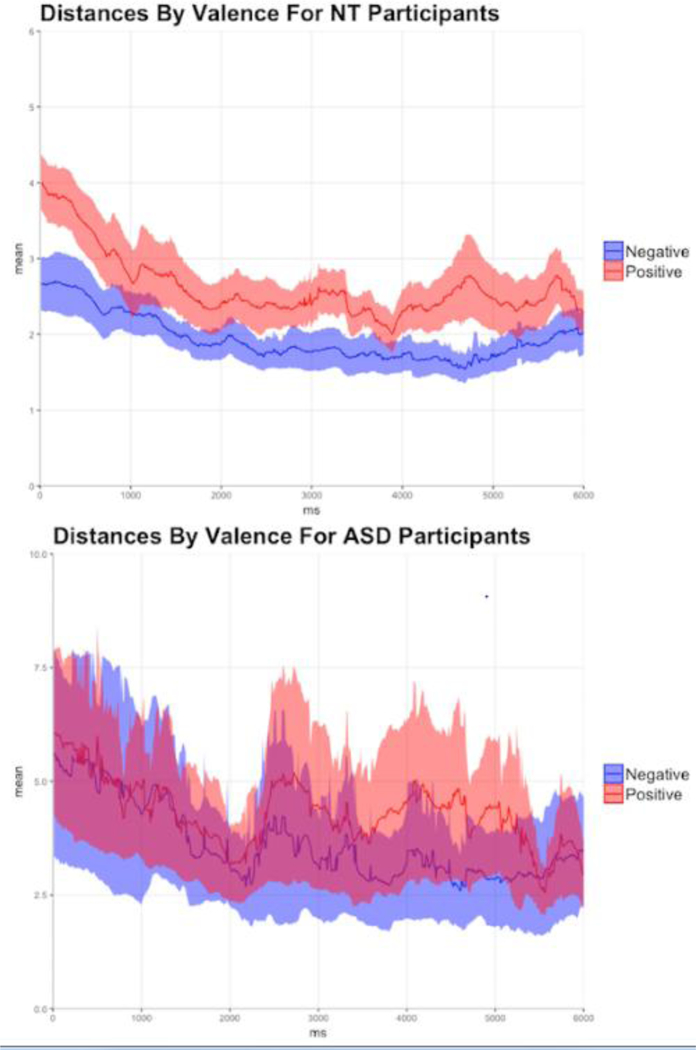

The NT group shows a significant effect of Valence on the intercept term, indicating more overall movement for positive emotions relative to negative emotions (Estimate = 0.71, SE = 0.23, p = 0.002). There is no fixed effect of Valence in the ASD group.

The two groups show opposite effects on the quadratic terms. The NT group shows a significant positive effect, suggesting that Positive emotions are significantly more U-shaped than Negative emotions; i.e., distances are larger at the start and end of the trial for Positive emotions (Estimate = 0.20, SE = 0.06, p < 0.001). Conversely, there is a significant negative effect on the quadratic term for the ASD group (Estimate = −0.35, SE = 0.163, p = 0.03), suggesting that Positive emotions show a steeper peak in the middle of the trial as compared to Negative emotions. These differences are visible in Figure 4. These results show opposite movement shapes across groups, and they are consistent with the significant negative interaction between Group and Valence in the across-group GCA.

Intensity

The model included participant-by-Intensity random effects (intercept and slope) on all time terms.

There was a significant positive effect of Intensity on the intercept term, indicating more overall movement (averaged across time) for High-Intensity expressions relative to Low-Intensity expressions in both groups (Estimate = 0.85, SE = 0.33, p = 0.01).

There was a significant negative effect of the interaction between Group and Intensity on the linear term and a significant negative effect on the cubic term. The former indicates a steeper slope difference for the ASD group for High- as compared to Low-Intensity emotions; that is, there is more of a difference between the rate of distance decrease for high- vs. low-intensity trials in the ASD group (Estimate = −1.00, SE = 0.18, p < 0.001). The latter (cubic) effect indicates steeper negative slopes at the beginning and ends of the trials for High-Intensity (vs. Low-Intensity) expressions in the ASD group as compared to the NT group (Estimate = −0.71, SE = 0.18, t = −3.99, p < 0.001).

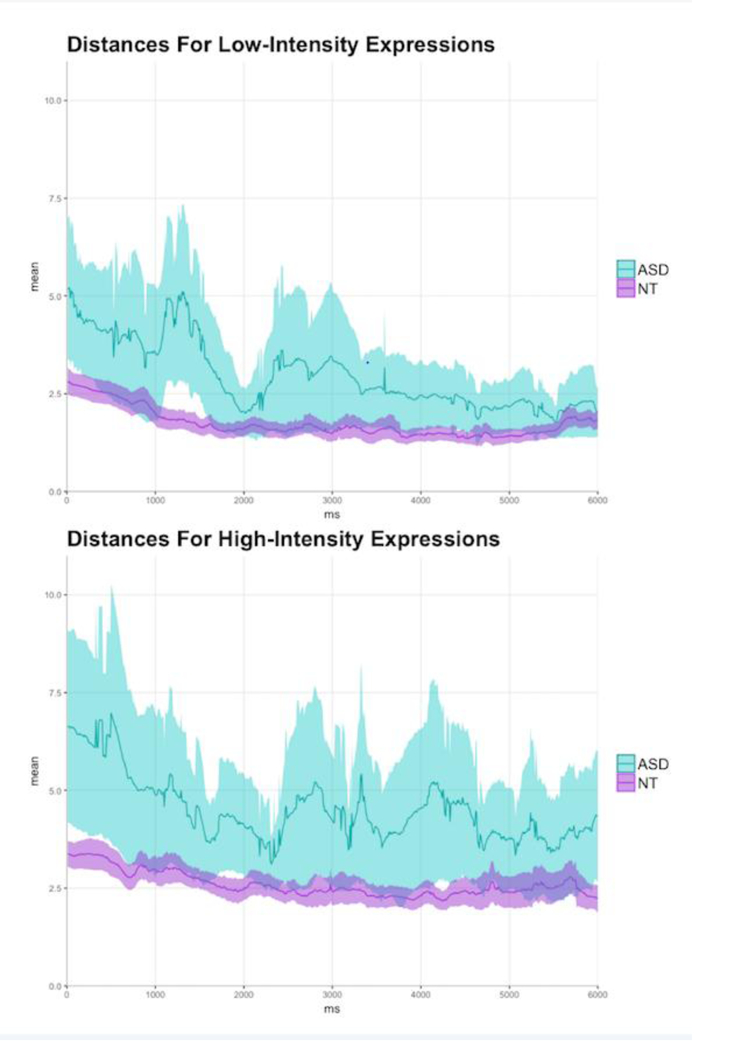

There were no other significant effects. See Table 5 for full results and Figure 5 for movement patterns by Intensity.

Table 5.

Intensity and group GCA results. The estimates are for Intensity, Group, and Intensity-by-Group interaction terms (Standard errors are in parentheses). The left section shows results for the high-intensity emotions versus low-intensity emotions. The middle section shows results for the ASD group relative to NT group. The right section shows interactions between these two comparisons. Marginal R2: 0.036; conditional R2: 0.638.

| Term | Intensity: Estimate (SE) | t | p < | Group: Estimate (SE) | t | p < | Intensity: Group: Estimate (SE) | t | p < |
|---|---|---|---|---|---|---|---|---|---|
| Fixed | 0.850 (0.333) | 2.56 | 0.05 | 1.635 (1.510) | 1.08 | n.s. | 0.463 (0.462) | 1.00 | n.s. |
| Linear | 0.209 (0.127) | 1.64 | n.s. | −0.0294 (0.762) | −0.039 | n.s. | −1.00 (0.178) | −5.64 | 0.0001 |
| Quadratic | 0.100 (0.124) | 0.810 | n.s. | 0.602 (0.527) | 1.14 | n.s. | 0.061 (0.175) | 0.35 | n.s. |
| Cubic | 0.094 (0.129) | 0.743 | n.s. | 0.587 (0.338) | 1.74 | n.s. | −0.710 (0.178) | −3.99 | 0.0001 |
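As a reading aid for Table 5, the sketch below recomputes a few t values, assuming they are the usual ratio of each estimate to its standard error (the text does not state this explicitly). The estimates, standard errors, and reported t values are copied from the table; the function name and row labels are illustrative.

```python
def wald_t(estimate, se):
    """t statistic as the ratio of an estimate to its standard error."""
    return estimate / se


# (estimate, standard error, reported t) taken from Table 5
rows = {
    "Fixed, Intensity": (0.850, 0.333, 2.56),
    "Linear, Intensity: Group": (-1.00, 0.178, -5.64),
    "Cubic, Intensity: Group": (-0.710, 0.178, -3.99),
}

for label, (est, se, reported_t) in rows.items():
    t = wald_t(est, se)
    print(f"{label}: recomputed t = {t:.2f}, reported t = {reported_t}")
```

Small discrepancies in the second decimal place are expected, since the table reports rounded estimates and standard errors.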

Fig 5.

Overall movement for low-intensity (top) and high-intensity (bottom) emotions for NT and ASD groups

Because there were significant interactions between Intensity and Group, we followed this analysis by conducting within-group comparisons. In these comparisons, we analyzed movement patterns between High- vs. Low-Intensity facial expressions for each group. In these models, time was again modeled as linear, quadratic and cubic. We included only one fixed effect, Intensity (High and Low), and the models again included random effects (intercept and slope) for participant-by-valence random effects on all time terms. See Table 6.
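For readers unfamiliar with polynomial time terms, the sketch below builds linear, quadratic and cubic terms that are mutually orthogonal, as is commonly recommended for GCA (Mirman, 2014). Whether the present models used exactly this construction is an assumption, and the function name and the 20-sample time grid are hypothetical.

```python
import math


def orthogonal_time_terms(n_points, degree=3):
    """Orthonormal polynomial time terms (orders 1..degree) via Gram-Schmidt."""
    t = [float(i) for i in range(n_points)]
    basis = [[1.0] * n_points]  # constant term, used only for orthogonalizing
    for order in range(1, degree + 1):
        v = [x ** order for x in t]
        # Remove the part of v that is explained by each lower-order term.
        for b in basis:
            proj = sum(vi * bi for vi, bi in zip(v, b)) / sum(bi * bi for bi in b)
            v = [vi - proj * bi for vi, bi in zip(v, b)]
        norm = math.sqrt(sum(vi * vi for vi in v))
        basis.append([vi / norm for vi in v])
    return basis[1:]  # drop the constant term


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


linear, quadratic, cubic = orthogonal_time_terms(n_points=20)

# The terms are uncorrelated with one another, so each coefficient can be
# interpreted separately (overall slope, U-shape, and S-shape, respectively).
print(abs(dot(linear, quadratic)) < 1e-9)
print(abs(dot(linear, cubic)) < 1e-9)
print(abs(dot(quadratic, cubic)) < 1e-9)
```

With terms built this way, the intercept reflects the average height of the curve across the trial, which matches how the fixed effects are described in these analyses.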

In both groups, there is a significant, positive fixed effect of Intensity, showing that High-Intensity expressions yielded larger overall facial movement than Low-Intensity emotions (NT: Estimate = 0.84, SE = 0.19, p < 0.0001; ASD: Estimate = 1.32, SE = 0.41, p < 0.01).

In both groups, there is a significant linear effect of Intensity as well, but the direction of these effects is different in each group. In the NT group, there is a significant positive linear effect of Intensity, indicating a more positive slope from the start to the end of the trial for High-Intensity expressions as compared to Low-Intensity expressions. As can be seen in Figure 6, both expression types start out with greater distances that decrease as the trial continues (i.e., both have a negative slope).

Fig 6.

Overall movement for emotions with different intensity (high and low) for NT group (top) and ASD group (bottom). Note: There are different scales on the y-axis (movement axis) for the two groups

Thus, a simpler way to interpret these results is to reverse the comparison: Low-Intensity emotions show a steeper negative slope than High-Intensity expressions in the NT group (Estimate = 0.21, SE = 0.06, p < 0.001). In the ASD group, the opposite is true: High-Intensity expressions show significantly steeper negative slopes than Low-Intensity expressions (Estimate = −0.80, SE = 0.17, p < 0.0001).

The NT group shows a small, but significant, positive effect on the quadratic term, suggesting that High-Intensity expressions are more U-shaped than Low-Intensity expressions (Estimate = 0.12, SE = 0.06, p = 0.03). This is likely caused by an increase in distance at the end of the trial for High-Intensity expressions in the NT group; such an increase is not visible for Low-Intensity expressions in this group (see Figure 6). In contrast, the ASD group shows a significant negative effect of Intensity on the cubic term. This result indicates steeper negative slopes at both the beginning and the end of the trials for High-Intensity versus Low-Intensity expressions (Estimate = −0.62, SE = 0.17, p < 0.001).

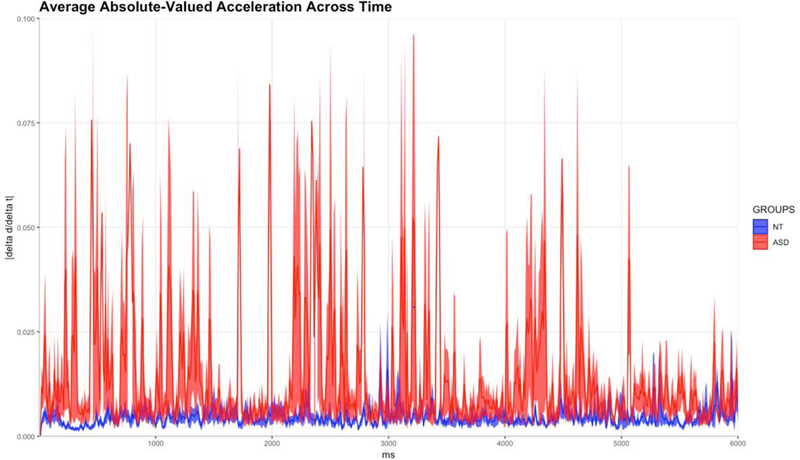

Overall, the ASD group shows much larger variance in facial distance than the NT group does (NT M = 2.1; SD = 2.0; ASD M = 3.7; SD = 7.8). Narrow spikes in acceleration across time (Figure 7) indicate that this variance may be caused by relatively fast/large changes in facial distance for this group across time.

Fig 7.

Average (across all stimuli) acceleration (absolute valued) of movement across time for participants in each group
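The absolute-valued acceleration plotted in Figure 7 can be illustrated with a second finite difference of a distance trace. This is a minimal sketch; the exact computation used for the figure is not described here, and the function name, the two example traces, and the sampling interval are hypothetical.

```python
def absolute_acceleration(distance, dt):
    """Absolute second finite difference of a distance trace sampled every dt seconds."""
    return [
        abs(distance[i + 1] - 2 * distance[i] + distance[i - 1]) / dt ** 2
        for i in range(1, len(distance) - 1)
    ]


dt = 0.1  # hypothetical sampling interval in seconds

# Two made-up traces: a steady change and one with a brief, large spurt.
smooth = [2.0, 2.1, 2.2, 2.3, 2.4, 2.5]
jerky = [2.0, 2.1, 6.0, 2.2, 2.3, 2.4]

peak_smooth = max(absolute_acceleration(smooth, dt))
peak_jerky = max(absolute_acceleration(jerky, dt))

# A brief spurt of distance produces a narrow spike in acceleration,
# whereas a steady change produces values near zero.
print(peak_jerky > peak_smooth)
```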

Discussion

Movement size

This is the first study using MoCap to use distances between facial features to objectively model facial movement across time for adolescents with and without ASD. We successfully used GCA to demonstrate that positive and high-intensity emotions result in larger distances overall than negative and low-intensity emotions, respectively. This confirmed our hypotheses regarding the effects of expression type on facial-feature movements for both groups.

We also hypothesized that the facial movements of individuals with ASD would show less differentiation for different emotion types. The fixed effects from our within-group models support this hypothesis. For individuals with ASD, Valence does not significantly predict overall facial movement, suggesting that the degree to which they move their facial features does not depend on whether they are making a happy or sad face. This result corroborates findings from previous fEMG research showing that facial-muscle activity is undifferentiated between negative and positive emotions for children with ASD (Rozga et al., 2013). Additionally, our results objectively confirm the perceptions that human coders have made in previous studies. In these studies, coders have described facial expressions in ASD as “uniform” across different emotions, and/or “blended”, where expressions simultaneously combine facial movements associated with both positive and negative expressions (Beall et al., 2008; Kasari, Sigman, Mundy, & Yirmiya, 1990; Yirmiya et al., 1989). Our analysis of whole-face distances matches such descriptions, and our future work will explore this further, by analyzing distances in particular parts of the face.

Finally, because previous reports have described atypically flat or neutral affect in ASD (Kasari et al., 1990), we predicted that individuals with ASD would show less movement than NT individuals, overall. This hypothesis was not supported: In neither the Intensity nor the Valence comparisons were there significant fixed effects of Group. If all participants with ASD had produced fewer or smaller facial movements in the current study, we would have seen significantly smaller distances (and smaller changes in distance) in the ASD group as compared to the NT group. If anything, distances tended to be larger in the ASD group than they were in the NT group, on average, albeit not significantly so.

Instead of showing smaller distances, the ASD group showed much more variation in movement than the NT group did for all trials; this is evident in the larger standard deviation from the mean for the ASD group. This could suggest that some participants with ASD produced very small facial movements, while others showed very large movements. This explanation matches inconsistencies in previous literature on emotional expressiveness in ASD. While many describe facial expressions as being more uniform than NT individuals’ (Kasari et al., 1993; Stagg et al., 2014; Yirmiya et al., 1989), some report the opposite: that individuals with ASD show relatively intense expressions (Faso et al., 2015; Grossman et al., 2013; Zane, Neumeyer, Mertens, Chugg, & Grossman, 2017). The large variance in overall facial movement for our ASD group may indicate a range of expressivity in our participants with ASD.

It is also possible that some of the large variation we see across time is due to increased variability in movement for each participant with ASD. Rapid changes from large to small distances (and vice versa) in target facial markers would result in large variance in average movement across time. A plot of acceleration across time (Figure 7) supports this explanation, showing relatively large changes in velocity for the ASD group. Although previous literature has not reported increased variability in the facial-expression movements of individuals with ASD, such intra-participant variability is believed to be characteristic of their other social-communicative behaviors, like prosody (Bone et al., 2017; Bonneh, Levanon, Dean-Pardo, Lossos, & Adini, 2011; Nadig & Shaw, 2012). Additionally, some research has suggested that the quality of other motor movements, like gait and grip, is marked by increased irregularity and variability in ASD (David et al., 2009; Hallett et al., 1993).

Importantly, the relative variability in velocity in the ASD group should not be confused with increased complexity of facial motion. As mentioned in the introduction, our previous work has shown that the facial motions of individuals with ASD are less complex than NT individuals’ (Guha et al., 2015, 2016). Our complexity measure of facial motion can be interpreted as an index of how repetitive the temporal patterns of motion are, where higher complexity indicates less repetitive motion. When our current results are interpreted alongside the complexity results from Guha et al. (2015, 2016), the findings suggest that participants with ASD make large, quick movements which repeat over time, while the NT group makes smaller, more subtle movements that are less repetitive in their patterns.

Movement Shape

Because this work represents the first study using MoCap and GCA to explore dynamic facial expressions, we were not able to make informed predictions about the way that expression-type would affect the shape of movement across time. Thus, our curve-shape results remain mainly exploratory.

These results reveal several significant interactions between group (ASD vs. NT) and stimulus category (Positive vs. Negative and High- vs. Low-Intensity) on the linear, quadratic and cubic terms. These interactions reflect different movement patterns across time across groups for different types of expressions. This is particularly striking for the interaction between Valence and Group on the quadratic term, and between Intensity and Group on the linear term. In both cases, the results of the across-group comparison suggest very different effects of expression-type on the shape of movement for each group. The within-group analysis actually reveals significant and opposite effects between groups of expression-type on movement.

The NT group shows a significantly positive effect of Intensity on the linear term, indicating that high-intensity expressions have a more positive slope than low-intensity expressions. In other words, distance starts large and stays large for high-intensity expressions, while distance starts large and ends small for low-intensity expressions in this group. This pattern makes sense, since sustaining large facial distances for several seconds seems characteristic of expressions perceived as highly intense. However, the ASD group shows the opposite effect: Distances remain more constant for low-intensity emotions, while High-Intensity emotions show large movements at the beginning of the trial that subside by the end of the trial. This is reflected by a significant negative effect of Intensity on the linear and cubic terms for the ASD group: High-Intensity expressions are defined by steeper negative slopes than low-intensity emotions, particularly at the beginning and end of the trial. As discussed in the previous section, both groups show a significant, positive fixed effect of Intensity, showing that High-Intensity expressions lead to greater overall distances in both groups. The difference in slopes, though, shows that these greater distances are not sustained well in the ASD group. This is visible in Figure 6. Together, these results might indicate an ability to produce intense expressions in ASD, but an inability to sustain intensity.

The visible shape of movement curves in the ASD group supports this explanation – that children with ASD do not maintain expressions for long periods. For all expression types, there are short moments of large distances (short, sharp “peaks” in the movement curves) visible in the shape of movement for the ASD group. Such peaks are almost entirely absent in the NT group, aside from their production of positive expressions. For example, compare low-intensity emotions across groups (Figure 5). In the ASD group, there is a brief period of increased distance that occurs between 1 and 2 seconds, and again between 2 and 3 seconds. These spurts of distance may reflect large, but very brief, facial expressions in the ASD group. In fact, these expressions are so brief that they nearly meet the criteria for micro-expressions, and may not even be produced consciously (Yan, Wu, Liang, Chen, & Fu, 2013). Or, these spasmodic bursts of muscle movements could be indicative of a movement disorder (e.g., dyskinesia), which is frequently co-morbid with ASD and has even been claimed to be symptomatic of ASD (Fournier, Hass, Naik, Lodha, & Cauraugh, 2010; Leary & Hill, 1996; Ming, Brimacombe, & Wagner, 2007). The effect of these brief bursts of facial-feature movement could be to make the resulting expressions harder to interpret, which could well contribute to the perceived ambiguity of autistic facial expressions (Yirmiya et al., 1989).

The Valence comparisons add to this picture. Similar to Intensity, expression Valence predicts the shape of movement in the NT group, reflected by a significant positive effect of Valence on the quadratic term. In the NT group, positive expressions start with a more negative slope and end with a more positive slope (i.e., are more U-shaped) than negative expressions. We have interpreted this finding as reflecting large smiles at the beginning of the trial, which subside a bit, and then return at the end of the trial. To verify our interpretation, we reexamined the stimulus videos, and found that a slight majority of the positive videos (7 of 12) show just this pattern, where the actor begins with a large smile, stops smiling (or makes a smaller smile) and then finishes with another large smile. There are two more videos where the actor changes expression from surprise to happy; in these videos, the actors start with the mouth open vertically (large vertical distances), close their mouth, and then transition into a large smile. Again, these patterns match the U-shaped pattern seen in the NT group’s Positive expressions. There is only one video where the actor makes a large smile that maintains its intensity from start to end. Most importantly, there are no trials where the actor starts off with a neutral face, makes a smile, and then returns to a neutral face. This is important, because such a movement pattern is observable in the ASD group.

Participants with ASD show the opposite pattern of Valence movements in comparison to the NT participants. In this group, positive expressions have a significant negative effect on the quadratic term, meaning that they have more of an inverted U-shape than negative expressions do. This finding is interesting for several reasons. Firstly, as described, this movement pattern is not visible in the positive-valence stimulus videos, so, at the least, the participants with ASD are not accurately mirroring the dynamic patterns of the expressions they see in this volitional mimicry task. However, when the movement patterns for positive expressions are compared across participant groups (see Figure 4, Top), it is clear that the shape of ASD movement is actually not completely different from that of NT movement. Instead, peaks are relatively exaggerated in the ASD group, suggesting extreme changes in facial configuration; this corresponds to descriptions of exaggerated prosody in ASD (Nadig, Vivanti, & Ozonoff, 2009). For negative expressions, across-group comparisons are very different. The shape of autistic negative expressions includes multiple short apexes, and is reminiscent of our discussion of Intensity above. Again, the facial movement of participants with ASD is marked by frequent, brief spurts of large distances, which are not apparent in NT expressions. As posited earlier, these short moments of large distances may be characteristic of autistic expressions, and could contribute to negative judgments of facial expression quality in ASD.

Some potential limitations of our study should be noted. First, we were unable to make specific predictions about how particular parts of the face would move in participants with ASD and how these movements might be different from NT individuals because we are the first researchers to use motion capture to analyze facial muscle movements during dynamic, voluntary facial mimicry in ASD. Thus, we compared the total movement of many facial features at once, rather than the movement of individual facial muscles. This means that we cannot determine whether the movement patterns in certain parts of the face (e.g., the mouth) are more or less similar across groups than others (e.g., the eyebrows). Now that we have analyzed global movement and established differences between groups, we plan to explore more specific movements in future analyses. It would also be interesting to use a different stimulus set that more directly corresponds to the six universal emotions (Ekman & Friesen, 1971) to determine whether the group differences we found are driven by variations in expression behaviors in only some or all of the universal expressions.

Second, as yet, we cannot verify that our findings correspond to the perceptions of autistic facial expressions by NT individuals. Our results show that Valence does not predict the amount of facial movement in individuals with ASD, nor does it seem to be strongly predictive of the shape of movement in this group. We propose that this lack of predictability helps explain the perceived expression ambiguity in ASD. Unfortunately, we cannot verify this because we do not have video recordings of all participants in our study as they made the facial expressions recorded by MoCap. Thus, we cannot determine whether differences in MoCap patterns actually correspond to perceptible differences in facial expressions. We are currently addressing this in a follow-up study that includes recordings in both modalities (MoCap and video) while participants with and without ASD produce dynamic facial expressions. This will allow us to determine whether quantifiable measures in facial movement (MoCap patterns) can predict perceptions of facial expression quality.

And finally, our study only explored facial-movement patterns as participants voluntarily mimicked emotional facial expressions, rather than during the production of natural, spontaneous expressions. This limits our conclusions about facial expressions in ASD to merely those that are volitionally mimicked. Voluntarily mimicked expressions rely on different underlying processes than spontaneous, automatic facial expressions (Matsumoto & Lee, 1993; Rinn, 1984). Previous work has suggested that spontaneous expressions are more affected in ASD than those produced during overt mimicry tasks (McIntosh et al., 2006). Thus, it is important to explore differences in facial-expression movements during the production of spontaneous expressions in ASD. Still, despite the fact that voluntarily mimicked expressions should be relatively unimpaired in ASD, our work has established that there are quantitative differences even here.

Conclusion

We have provided a first demonstration that facial MoCap can be used to objectively quantify the perceived atypicality of autistic facial expressions described in previous reports. Our results show that the amount and shape of facial-expression movement is predictable in NT participants by both the intensity of the emotion expressed and by the valence of that emotion. For ASD participants, the intensity of voluntarily mimicked expressions predicts the quantity of facial movement, but the valence of that expression does not. This suggests that participants with ASD are moving their facial features to a similar degree, regardless of whether they are copying a smiling face or a frowning face. This lack of distinction may help to explain why NT individuals find autistic facial expressions to be ambiguous. Our data also indicate that individuals with ASD do not or cannot sustain expression intensity as long as their NT peers do. Comparisons of acceleration patterns also find that facial expressions of adolescents with ASD are relatively jerky, characterized by brief moments of increased distance. These fleeting, exaggerated facial-muscle movements may be difficult to perceive and interpret, and could lead to negative judgments by NT individuals. Future research should explore whether such movements are also characteristic of spontaneous expressions in this population. Overall, our findings provide an objective explanation for perceptions of atypical, ambiguous, and undifferentiated facial expressions in ASD.

Acknowledgments

This study was funded by the NIH (NIDCD), grant number 1R01DC012774-01.

Footnotes

Compliance with Ethical Standards

Ethical Approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed consent

Informed consent or assent (for participants under the age of 12) was obtained from all individual participants who were included in the study, and informed consent was obtained from all participants’ caregivers.

Conflict of interest: Dr. Emily Zane declares that she has no conflict of interest. Dr. Zhaojun Yang declares that she has no conflict of interest. Dr. Lucia Pozzan declares that she has no conflict of interest. Dr. Tanaya Guha declares that she has no conflict of interest. Dr. Shrikanth Narayanan declares that he has no conflict of interest. Dr. Ruth Grossman declares that she has no conflict of interest.

References

- Arsalidou M, Morris D, & Taylor MJ (2011). Converging evidence for the advantage of dynamic facial expressions. Brain Topography, 24(2), 149–163. 10.1007/s10548-011-0171-4

- American Psychiatric Association (2013). Diagnostic and statistical manual of mental disorders (5th ed.). Washington DC: American Psychiatric Association.

- Baron-Cohen S, & Kingsley J (2003). Mind Reading: The Interactive Guide to Emotions. London, UK.

- Beall PM, Moody EJ, McIntosh DN, Hepburn SL, & Reed CL (2008). Rapid facial reactions to emotional facial expressions in typically developing children and children with autism spectrum disorder. Journal of Experimental Child Psychology, 101(3), 206–223. 10.1016/j.jecp.2008.04.004

- Bone D, Mertens J, Zane E, Lee S, Narayanan S, & Grossman R (2017). Acoustic-prosodic and physiological response to stressful interactions in children with autism spectrum disorder. In Proceedings of the Annual Conference of the International Speech Communication Association, INTERSPEECH (Vol. 2017–Augus). 10.21437/Interspeech.2017-179

- Bonneh YS, Levanon Y, Dean-Pardo O, Lossos L, & Adini Y (2011). Abnormal speech spectrum and increased pitch variability in young autistic children. Frontiers in Human Neuroscience, 4, 237. 10.3389/fnhum.2010.00237

- Brewer R, Biotti F, Catmur C, Press C, Happé F, Cook R, & Bird G (2016). Can Neurotypical Individuals Read Autistic Facial Expressions? Atypical Production of Emotional Facial Expressions in Autism Spectrum Disorders. Autism Research, 9(2), 262–271. 10.1002/aur.1508

- David FJ, Baranek GT, Giuliani CA, Mercer VS, Poe MD, & Thorpe DE (2009). A Pilot Study: Coordination of Precision Grip in Children and Adolescents with High Functioning Autism. Pediatric Physical Therapy, 21(2), 205–211. 10.1097/PEP.0b013e3181a3afc2

- Ekman P (2004). Emotional and Conversational Nonverbal Signals. Language, Knowledge, and Representation 10.1093/acprof

- Ekman P, & Friesen W (1971). Constants across cultures in the face and emotion. Journal of Personality and Social Psychology, 17(2), 124.

- Ekman P, & Friesen W (1977). Facial Action Coding System. Palo Alto, CA: Consulting Psychologists Press, Stanford University.

- Faso DJ, Sasson NJ, & Pinkham AE (2015). Evaluating Posed and Evoked Facial Expressions of Emotion from Adults with Autism Spectrum Disorder. Journal of Autism and Developmental Disorders, 45(1), 75–89. 10.1007/s10803-014-2194-7

- Fournier KA, Hass CJ, Naik SK, Lodha N, & Cauraugh JH (2010). Motor coordination in autism spectrum disorders: A synthesis and meta-analysis. Journal of Autism and Developmental Disorders, 40(10), 1227–1240. 10.1007/s10803-010-0981-3

- Grossman RB (2015). Judgments of social awkwardness from brief exposure to children with and without high-functioning autism. Autism: The International Journal of Research and Practice, 19(5), 580–587. 10.1177/1362361314536937

- Grossman RB, Edelson LR, & Tager-Flusberg H (2013). Emotional Facial and Vocal Expressions During Story Retelling by Children and Adolescents With High-Functioning Autism. Journal of Speech, Language, and Hearing Research, 56(June 2013), 1035–1044. 10.1044/1092-4388(2012/12-0067)

- Guha T, Yang Z, Grossman RB, & Narayanan SS (2016). A Computational Study of Expressive Facial Dynamics in Children with Autism. IEEE Transactions on Affective Computing, 1–1. 10.1109/TAFFC.2016.2578316

- Guha T, Yang Z, Ramakrishna A, Grossman RB, Hedley D, Lee S, & Narayanan SS (2015). On quantifying facial expression-related atypicality of children with Autism Spectrum Disorder. ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing - Proceedings, 2015–Augus(April), 803–807. 10.1109/ICASSP.2015.7178080

- Hallett M, Lebiedowska MK, Thomas SL, Stanhope SJ, Denckla MB, & Rumsey J (1993). Locomotion of autistic adults. Archives of Neurology, 50(12), 1304–1308. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/8257307

- Hauck DJ,J (2007). House of Moves High Resolution Facial Marker-set (92 Markers). Los Angeles, CA: Digital Concepts Group, Inc. House of Moves.

- Izard C (1983). The Maximally Discriminative Facial Movement Coding System. Newark, DE: University of Delaware, Instructional Resource Center.

- Izard CE, Huebner RR, Risser D, & Dougherty L (1980). The young infant’s ability to produce discrete emotion expressions. Developmental Psychology, 16(2), 132–140. 10.1037/0012-1649.16.2.132

- Kasari C, Sigman M, Mundy P, & Yirmiya N (1990). Affective Sharing in the Context of Joint Attention Interactions of Normal, Autistic, and Mentally Retarded Children. Journal of Autism and Developmental Disorders, 20(1), 87–100. Retrieved from http://search.proquest.com.proxy.bc.edu/docview/63081157?accountid=9673

- Kasari C, Sigman M, & Yirmiya N (1993). Focused and social attention of autistic children in interactions with familiar and unfamiliar adults: A comparison of autistic, mentally retarded, and normal children. Development and Psychopathology, 5(3), 403–414.

- Kring AM, & Sloan DM (2007). The Facial Expression Coding System (FACES): development, validation, and utility. Psychological Assessment, 19(2), 210–224. 10.1037/1040-3590.19.2.210

- Langdell T (1981). Face perception: An approach to the study of autism. University of London.

- Lau S (1982). The effect of smiling on person perception. Journal of Social Psychology 10.1080/00224545.1982.9713408

- Leary MR, & Hill DA (1996). Moving on: autism and movement disturbance. Mental Retardation, 34(1), 39–53.

- Lord C, DiLavore PC, Gotham K, Guthrie W, Luyster RJ, Risi S, & Rutter M (2012). Autism Diagnostic Observation Schedule: ADOS-2. Los Angeles, CA: Western Psychological Services.

- Lord C, Risi S, Lambrecht L, Cook EHJ, Leventhal BL, DiLavore PC, … Rutter M (2000). The Autism Diagnostic Schedule – Generic: A standard measures of social and communication deficits associated with the spectrum of autism. Journal of Autism and Developmental Disorders, 30(3), 205–223. 10.1023/A:1005592401947

- Love SR (1993). Recognition and Production of Facial Emotion by Autistic Children. Louisiana State University. Retrieved from http://digitalcommons.lsu.edu/gradschool_disstheses

- Matsugu M, Mori K, Mitari Y, & Kaneda Y (2003). Subject independent facial expression recognition with robust face detection using a convolutional neural network. Neural Networks, 16, 555–559. 10.1016/S0893-6080(03)00115-1

- Matsumoto D, & Lee M (1993). Consciousness, Volition, and the Neuropsychology of Facial Expressions of Emotion. Consciousness and Cognition, 2, 237–254. 10.1006/ccog.1993.1022

- McIntosh DN, Reichmann-Decker A, Winkielman P, & Wilbarger JL (2006). When the social mirror breaks: Deficits in automatic, but not voluntary, mimicry of emotional facial expressions in autism. Developmental Science, 9(3), 295–302. 10.1111/j.1467-7687.2006.00492.x

- Metallinou A, Grossman RB, & Narayanan S (2013). Quantifying Atypicality in Affective Facial expressions of Children with Autism Spectrum Disorders

- Ming X, Brimacombe M, & Wagner GC (2007). Prevalence of motor impairment in autism spectrum disorders. Brain and Development, 29(9), 565–570. 10.1016/j.braindev.2007.03.002

- Mirman D (2014). Growth Curve Analysis and Visualization Using R, 168.

- Mirman D, Dixon JA, & Magnuson JS (2008). Statistical and computational models of the visual world paradigm: Growth curves and individual differences. Journal of Memory and Language, 59(4), 475–494. 10.1016/j.jml.2007.11.006 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nadig A, & Shaw H (2012). Acoustic and Perceptual Measurement of Expressive Prosody in High-Functioning Autism: Increased Pitch Range and What it Means to Listeners. Journal of Autism and Developmental Disorders, 42(4). Retrieved from https://www.researchgate.net/profile/Aparna_Nadig/publication/51084950_Acoustic_and_Perceptual_Measurement_of_Expressive_Prosody_in_High-Functioning_Autism_Increased_Pitch_Range_and_What_it_Means_to_Listeners/links/571a473f08ae7f552a472dba.pdf [DOI] [PubMed] [Google Scholar]

- Nadig A, Vivanti G, & Ozonoff S (2009). Adaptation of object descriptions to a partner under increasing communicative demands: A comparison of children with and without autism. Autism Research, 2(6), 334–347. 10.1002/aur.102 [DOI] [PubMed] [Google Scholar]

- Oberman LM, Winkielman P, & Ramachandran VS (2009). Slow echo: Facial EMG evidence for the delay of spontaneous, but not voluntary, emotional mimicry in children with autism spectrum disorders. Developmental Science, 12(4), 510–520. 10.1111/j.1467-7687.2008.00796.x [DOI] [PubMed] [Google Scholar]

- Otta E, Lira BBP, Delevati NM, Cesar OP, & Pires CSG (1994). The effect of smiling and of head tilting on person perception. Journal of Psychology: Interdisciplinary and Applied 10.1080/00223980.1994.9712736 [DOI] [PubMed] [Google Scholar]

- Rinn WE (1984). The Neuropsychology of Facial Expression: A Review of the Neurological and Psychological Mechanisms for Producing Facial Expressions. Psychological Bulletin, 95(1), 52–77. 10.1037/0033-2909.95.1.52

- Rozga A, King TZ, Vuduc RW, & Robins DL (2013). Undifferentiated facial electromyography responses to dynamic, audio-visual emotion displays in individuals with autism spectrum disorders. Developmental Science, 16(4), 499–514. 10.1111/desc.12062

- Sasson NJ, Faso DJ, Nugent J, Lovell S, Kennedy DP, & Grossman RB (2017). Neurotypical Peers are Less Willing to Interact with Those with Autism based on Thin Slice Judgments. Nature Publishing Group, (December 2016), 1–10. 10.1038/srep40700

- Scharlemann JP, Eckel CC, Kacelnik A, & Wilson RK (2001). The value of a smile: Game theory with a human face. Journal of Economic Psychology, 22, 617–640. Retrieved from www.elsevier.com/locate/joep

- Stagg SD, Slavny R, Hand C, Cardoso A, & Smith P (2014). Does facial expressivity count? How typically developing children respond initially to children with autism. Autism, 18(6), 704–711. 10.1177/1362361313492392

- Trotman CA, Faraway JJ, Silvester KT, Greenlee GM, & Johnston LE (1998). Sensitivity of a method for the analysis of facial mobility. I. Vector of displacement. Cleft Palate-Craniofacial Journal, 35(2), 132–141.

- Uono S, Sato W, & Toichi M (2010). Brief report: Representational momentum for dynamic facial expressions in pervasive developmental disorder. Journal of Autism and Developmental Disorders, 40(3), 371–377. 10.1007/s10803-009-0870-9

- Yan WJ, Wu Q, Liang J, Chen YH, & Fu X (2013). How Fast are the Leaked Facial Expressions: The Duration of Micro-Expressions. Journal of Nonverbal Behavior, 37(4), 217–230. 10.1007/s10919-013-0159-8

- Yirmiya N, Kasari C, Sigman M, & Mundy P (1989). Facial expressions of affect in autistic, mentally retarded and normal children. Journal of Child Psychology and Psychiatry and Allied Disciplines, 30(5), 725–735. 10.1111/j.1469-7610.1989.tb00785.x

- Zane E, Neumeyer K, Mertens J, Chugg A, & Grossman RB (2017). I Think We’re Alone Now: Solitary Social Behaviors in Adolescents with Autism Spectrum Disorder. Journal of Abnormal Child Psychology. 10.1007/s10802-017-0351-0