Abstract

With the recent growth in intensive longitudinal designs and corresponding demand for methods to analyze such data, there has never been a more pressing need for user-friendly analytic tools that can identify and estimate optimal time lags in intensive longitudinal data. Available standard exploratory methods to identify optimal time lags within univariate and multivariate multiple subject time series are greatly under-powered at the group (i.e., population) level. We describe a hybrid exploratory-confirmatory tool, referred to herein as the Differential Time-Varying Effect Model (DTVEM), which features a convenient user-accessible function to identify optimal time lags and estimate these lags within a state-space framework. Data from an empirical ecological momentary assessment study are used to demonstrate the utility of the proposed tool in identifying the optimal time lag for studying the linkages between nervousness and heart rate in a group of undergraduate students. Using a simulation study, we illustrate the effectiveness of DTVEM in identifying optimal lag structures in multiple-subject, time series data with missingness, as well as its strengths and limitations as a hybrid exploratory-confirmatory approach compared to other existing approaches.

Since the 1990s, studies which utilize intensive longitudinal data (ILD; e.g. ecological momentary assessment, experience sampling, physiological measurements) have grown exponentially in popularity. For instance, Google Ngram Viewer, an online phrase-usage graphing tool, suggests that publications with the term “experience sampling assessment” have grown by 385% in 2008 compared to 1990 (Michel et al., 2011). Among the most popular ILD designs in the behavioral sciences are variations of the multivariate, multiple-subject, replicated time series design (Nesselroade & Ford, 1985), wherein intensive repeated measures of multiple variables within relatively short time-lengths are collected from multiple subjects (prototypically about 4–5 times daily over the course of a week; Bolger & Laurenceau, 2013; Nesselroade & Ford, 1985; Shiffman, Stone, & Hufford, 2008).

Coinciding with its increased prevalence, there has been great progress in analyzing ILD (Walls & Schafer, 2006). This includes linear and non-linear time series models (e.g., variations of vector autoregressive moving average (VARMA) models), state-space models, dynamical systems models, multilevel modeling, and various other examples (Boker & Graham, 1998; Box, Jenkins, & Reinsel, 2013; Browne & Nesselroade, 2005; Durbin & Koopman, 2012; Goldstein, Healy, & Rasbash, 1994). Importantly, the advancement of new methods has increased the variety of research questions that can be answered by ILD.

In a discrete-time modeling framework, one model variation of interest is a vector autoregressive model of order p (VAR(p) model), in which multivariate measurements of a set of endogenous (dependent) variables from up to p measurement occasions ago (i.e., t-1, t-2, …, t-p) are used to explain these variables’ current values at time t. VAR models continue to serve as an important basis for many substantive studies and new methodological innovations (Bringmann et al., 2017; Chow, Hamagani, & Nesselroade, 2007; Epskamp et al., 2018; Gates, Molenaar, Hillary, Ram, & Rovine, 2010). Values from previous occasions are referred to as lagged responses, with the number of lags defined as the number of backward shifts in measurement occasion such that a lag-0 response refers to the current response at time t, a lag-1 response refers to the response at time t-1, a lag-2 response refers to the response at time t-2, and so on. Relatedly, p in a VAR(p) model is denoted as the lag order of the VAR process. The VAR(p) model is expressed as:

| (1) |

where yi,t represents an M × 1 vector of observed variables measured on occasion t, Ap is an M × M matrix of regression coefficients containing the autoregression and cross-regression coefficients from lag p (i.e., from time t-p) on the m observed variables at time t; and εi,j is an M × 1 vector of residuals (process noises). A VAR(p) model can also be conceived as a set of difference equations or equivalently, continuous-time differential equation models in which the rate of change, change in the rate of change, and other higher-order changes are defined as unfolding over a time interval, Δt, of 1.0 (Hamilton, 1994).1 In practice, discrete-time models such as VAR(p) models have typically been fitted to data measured at discrete, mostly equally spaced intervals (aside from occasional missingness). Most often, the measurement interval in a study is assumed by design to correspond to Δt = 1 in the underlying difference or differential equation model.

The measurement intervals utilized for data collection have direct implications on the strengths of the regression coefficients linking the lagged responses to the current responses at time t – a point that has been brought up by several researchers in advocating direct use of continuous-time models over discrete-time models (Kuiper & Ryan, 2018; Voelkle & Oud, 2013). We will return to this point in the Discussion section. For now, suffice it to say that when researchers have equally spaced data, fitting discrete—as opposed to continuous—time to the data often have some practical advantages. For instance, there is a wide and well-established array of tools available from the statistical and econometric literature for diagnosing, exploring, and interpreting results from discrete-time models, particularly those involving complex lag structures (e.g., when p is high, and/or when different processes show distinct optimal lag orders). Inference and interpretations of continuous-time models have thus far been limited to those involving a lower lag order, which, in a continuous-time framework, mirrors the highest-order changes included in a differential equation of choice (e.g., rate of change is a first-order change, acceleration/deceleration is a second-order change). Tools for model exploration are still nascent, and estimation of continuous-time models is often characterized by greater numerical difficulties than estimation of their discrete-time counterparts of the same order.

When a VAR(p) model is determined to be the model of choice and researchers have a priori knowledge concerning the true lag order, p, of the process, researchers can proceed directly to model fitting. However, when the optimal lag order is unknown, the lag order is often determined using exploratory tools such as autocorrelation, partial autocorrelation, cross-correlation, and partial cross-correlation plots (Chatfield, 2013; Turchin & Taylor, 1992).2 Because these diagnostic methods originated primarily from the time series or econometric literature, current software implementation of the autocorrelation, partial autocorrelation, cross-correlation, and partial cross-correlation functions is restricted for use with single-subject time series, univariate or bivariate data, and often assuming no missingness. When multiple endogenous variables are present, selecting the optimal lag order for each process and that for all the processes as a system can quickly become a cumbersome variable selection problem: not all processes would have the same p, and not all the coefficients linked to the t-1, t-2, …, t-p lagged responses of the same variable (termed autoregression coefficients) and of different have to be freed up for reasons of parsimony.

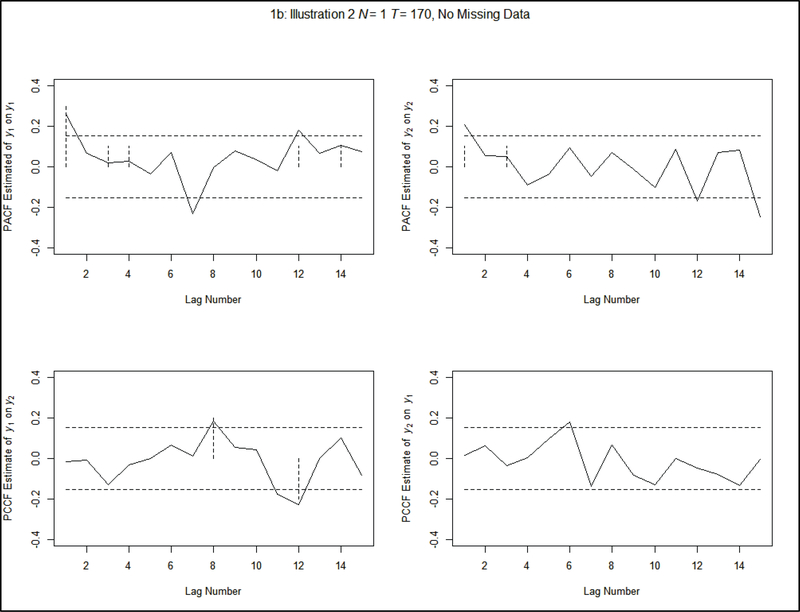

To illustrate the utility of the partial autocorrelation and partial cross-correlation functions in the scenario for which they were originally intended (Turchin & Taylor, 1992), we first present diagnostic plots of the partial autocorrelation with an autoregressive model of the order 1 (a VAR(1) model; termed Illustration 1, which is based on Simulation 1 below), 1 person, 100 time points, and complete data. In particular, the partial autocorrelation function, which summarizes the correlations of a variable with itself at various lags after the effects of lower-order lags have been partialled out, correctly identifies the autoregressive lag of 1 with no Type I errors (Figure 1a). In our second illustration (i.e. Illustration 2), we present diagnostic results from brief simulation based on a bivariate model of order 14 (a VAR(14) model) with simulated non-zero autoregression coefficients at lags 1, 3, 4, 12, and 14 for one variable; non-zero autoregression coefficients at lags 1 and 3 for the second variable; and non-zero cross-regression coefficients at lags 8 and 12 for the first variable on the second variable (see the empirical example for the motivation, and the multivariate case in our Simulation 3 below). With simulated data from a single subject over 170 time points and no missingness, diagnostic results based on the partial autocorrelation and partial cross-correlation functions are shown in Figure 1b. In particular, the partial autocorrelation function estimated three of the seven non-zero autoregressive lags as significant. However, it lacked power to detect the remaining lags whose coefficients were characterized by smaller (absolute) magnitudes. The partial cross-correlation function (see pp. 402–414; Wei, 2006), which serves to detect correlations of a variable with another variable at pre-specified lags after the effects of lower-order lags have been partialled out, correctly identified both non-zero cross-regressive lags (although two lags were incorrectly identified as significant). These results suggest that although these methods might be under-powered in low effect-size scenarios, these tools performed adequately in identifying lags with higher strength in complete single-subject time series (i.e. their intended function).

Figure 1a.

This plot depicts the partial autocorrelation function results with single-subject data, 100 time points and where the data are complete. The solid black line represents the partial autocorrelation function estimates, the dashed black lines represent the critical values (based on , where T = the number of time points, 100 in this case). In this plot the lags of 1 is correctly identified as significantly different from 0. In addition, there are no Type I errors (a.k.a. false positives). This is the scenario for which the partial autocorrelation function was designed.

Figure 1b.

These plots depict the partial autocorrelation and partial cross-correlation function results with single-subject data, 170 time points, and where the data are complete. The solid black line represents the partial autocorrelation function estimates, the dashed black lines represent the critical values (based on , where T = the number of time points, 170 in this case). This figure depicts from left to right, top to bottom: (a) the partial autocorrelation function results of y1 on itself (lags 1 and 12 are correctly identified as significant, but lags 3, 4, and 14 are not identified due to their low magnitude, and lag-7 is incorrectly identified as significant); (b) the partial autocorrelation function results of y2 on itself (lag-1 is correctly identified as significant, but lag-3 is not identified as significant, and lag-15 is incorrectly identified as significant); (c) the partial cross-correlation function results of y1 on y2 (lag-8 and 12 are correctly identified as significant, and lag 11 is incorrectly identified as significant); and (d) the cross-correlation function results of y2 on y1 (where lag-6 is incorrectly identified as significant). This is the scenario for which the partial autocorrelation function and partial cross-correlation function were designed.

Next, we focused on scenarios that were explicitly beyond the intended purpose of these exploratory functions, with data typically seen in the behavioral sciences (Turchin & Taylor, 1992). Particularly, we focused on the performance of these methods in estimating group level statistics from multiple subjects and with missing data. In a third simulation based on the same simulation model in Illustration 2, we tested the utility of these exploratory functions on situations that are typical of daily diary data in the behavioral sciences (Roche, Jacobson, & Pincus, 2016), where there were a large number of subjects and short time series. We retained complete data in this simulation to show that even without missing data (which is quite uncommon in ILD within the behavioral sciences), the partial autocorrelation and partial cross-correlation functions were not adequate for such designs. With 159 subjects, 14 time points, and complete data (i.e. Illustration 3), the results were substantially worse than the single-subject simulation (see Figure 1c). In particular, no lags were identified as significant, and there were no clearly recognizable patterns even when collapsing across the 159 different partial autocorrelation and partial cross-correlation estimates. This showed that the partial autocorrelation function and partial cross-correlation functions did not perform adequately in multiple subject data with short time series.

Figure 1c.

These plots depict the partial cross-correlation function results with multiple-subject data, 14 time points, and where there is no missing data. The grey lines in this figure depict the results of each of the individual cross-correlation functions. The solid black line represents the average of the individual cross-correlation function estimates to obtain a group-level autocorrelation function estimate. The dashed black lines represent the critical values (based on , where T = the number of time points [14]). This figure depicts from left to right, top to bottom: (a) the partial autocorrelation function results of y1 on itself; (b) the partial autocorrelation function results of y2 on itself; (c) the partial cross-correlation function results of y1 on y2; and (d) the partial cross-correlation function results of y2 on y1. In all plots, no lags are correctly identified as significant. This demonstrates that the partial autocorrelation and cross-correlation function performs quite poorly with multigroup data because the formula for the 95% confidence lines utilizes T from a single subject and does not pool information across multiple subjects. Note that if N is added to the critical value formula , most of the simulated non-zero lags would still be not significant, and many spurious lags would be identified.

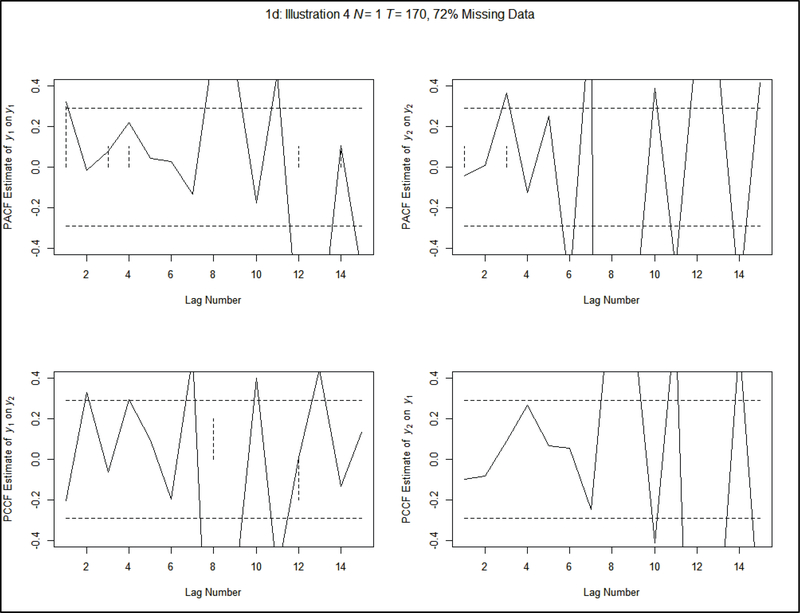

Fourthly, we demonstrated that the partial autocorrelation and partial cross-correlation functions performed poorly in single time series data when there were missing data (i.e. Illustration 4). In simulating single-subject data with 72% of the data missing (which was the level of missing data in the empirical example), the model incorrectly identified 24 lags as significant (i.e. 24 Type I errors) and failed to identify 7 of 9 non-zero lags as significant in correct direction (see Figure 1d). Further details of these demonstrations are provided later in the context of a simulation study (see Simulation 3). For now, they serve to highlight the inadequacies of current exploratory methods in correctly identifying potential lags in multiple-subject time series data of finite lengths and with missing data– namely, the kind of ILD that is typically available in psychology and other behavioral sciences.

Figure 1d.

These plots depict the cross-correlation function results with single-subject data, 170 time points, and where 72% of the data are missing. The solid black line represents the partial cross-correlation function estimates. The dashed black lines represent the critical values (based on , where T = the number of time points [48]). This figure depicts from left to right, top to bottom: (a) the partial autocorrelation function results of y1 on itself; (b) the partial autocorrelation function results of y2 on itself; (c) the cross-correlation function results of y1 on y2; and (d) the cross-correlation function results of y2 on y1. In these plots, only the correct lags of lag-1 for y1 on y1 and lag-3 for y1 on y2 are correctly identified. Of the other 7 simulated non-zero lags, 2 were identified as significant but in the incorrect direction, and 24 Type I errors are made. This demonstrates that the partial autocorrelation and partial cross-correlation function both perform quite poorly at the single subject level when there are missing data.

One alternative strategy is to simply ignore higher-order lags due to convenience or due to theory, and to simply focus on lower-order lags (most typically just lags of 1). This strategy assumes that the effects of higher-order lags are negligible compared to those of lower-order lags. In other words, there are stronger associations among measurement occasions that are more closely separated in time than those that were further apart – not unreasonable for many processes in the social and behavioral sciences. However, as we next illustrate, the impact of failing to incorporate higher-order lags when they exist may not be as innocuous as is commonly assumed. Consider a the same VAR(14) model in our first illustration, but now with 72% missing data (see Simulation 3). If one simply ignores all higher-order lags beyond lag 1 and proceeds with estimating a VAR(1) model, inaccurate point estimates would result. In particular, when ignoring higher-order lags, the 95% confidence intervals for the autoregression coefficient of lag 1 for y1 did not include the simulated population value. Moreover, the cross-regressive lag of y2 on y1 at lag 1 was identified as significant. In contrast, when the data was fitted with correctly specified lag structure, the point estimates were all extremely close to their simulated values (see results in Simulation Study 3 below for greater detail). Thus, in addition to obtaining an incomplete test of one’s theories, ignoring higher-order lags can lead to misleading inferential results.

The current paper describes a convenient, user-accessible exploratory and confirmatory tool to find the optimal lag structure in VAR(p) models (Browne & Nesselroade, 2005; Nesselroade, McArdle, Aggen, & Meyers, 2001). In particular, the current procedure allows users to first narrow the search space estimated in VAR(p) models by using variable selection methods grounded within the penalized additive modeling framework (Wood, 2006; Wood, 2003). Within the penalized additive modeling framework, each of the potential lags in the search space is translated into a large number of independent variables or manifest predictors (e.g. the Illustration 3 with 2 predictors × 2 dependent variables × 14 lags considered would result in a total of 56 manifest independent variables). Nevertheless, variable selection methods in the penalized additive framework allow one to reduce the search space by down-weighting negligible (near-zero) lag coefficients to zero (similar to Gasparrini, Armstrong, & Kenward, 2010). After determining the optimal lag structure (including information on p in a VAR(p) model, in conjunction with the auto- and cross-regression coefficients that are to be freely estimated vs. constrained to be zero), results from fitting the final selected VAR(p) model in a confirmatory framework are returned. Ultimately, the primary utility of this approach is to offer users a convenient way of exploring and modeling optimal lag structures in a VAR(p) framework.

In addition to describing the novel combination of these two methods modeling frameworks in identifying and modeling the optimal lag structure, the current paper will provide empirical illustration and simulation studies demonstrating the utility, strengths, and limitations of these technique.

Differential Time-Varying Effect Model (DTVEM)

DTVEM is a set of integrated subroutines for diagnosing the optimal lag identification in equally spaced ILD for single or multiple subjects.3 In particular, DTVEM combined some of the flexible smoothing and estimation routines for fitting generalized additive mixed models (GAMMs, available as part of the R package mgcv; Wood, 2006) and the state-space estimation routines available in the R package OpenMx (Chow, Ho, Hamaker, & Dolan, 2010a; Harvey, 2001; Neale et al., 2016) to explore, diagnose, and fit group-based VAR models of unknown lag structures. That is, we assumed that the underlying model of interest was a group-based VAR model with the same lag structure across all individuals, but this optimal lag structure was unknown and had to be detected using multivariate, replicated time series from multiple subjects.

The exploratory stage using GAMM was useful in narrowing down the search space, as lags that had substantial effects on outcome variable(s) were weighted heavily, and lags that had negligible effects on outcome variable(s) were down-weighted. In “smoothing over” the effects of successive lags, this approach provides a parsimonious but flexible way to explore relationships among multiple variables while considering possible lagged associations among them. Using GAMM to narrow down the search space prior to confirmatory model estimation can be important as large numbers of lags can be computationally inefficient, and interdependencies from concurrent and lagged associations can sometimes interfere with confirmatory estimation (e.g. Bottan & Perez Truglia, 2011; Buckner, Crosby, Wonderlich, & Schmidt, 2012; Buysse et al., 2007; Carels et al., 2004; Starr & Davila, 2012). Following this exploratory stage with a confirmatory stage within the state-space framework subsequently allows one to estimate the identified lags with greater precision than the exploratory stage. The integrated routines cycle iteratively among exploratory lag detection and confirmatory model fitting and output maximum-likelihood estimates from the “final” model in the final iteration. These automated routines were labeled using the DTVEM function shown in Appendix 1. Because they utilized subroutines developed both for the GAMM and state-space frameworks, we described each of these in turn.

Narrowing Down the Search Space with the Generalized Additive Mixed Effects Model (GAMM)

To narrow down the search space for the confirmatory stage, DTVEM offers users the choice to first explore the lag structure in the GAMM framework prior to proceeding to the confirmatory stage. The general GAMM framework, which extends the generalized linear model (McCullagh & Nelder, 1989) and generalized additive model (Hastie & Tibshirani, 1993; James, 2002), postulates that person i’s any one particular response variable, yi (where i = 1, …, n; n indexes the total number of subjects), may be distributed as any of the members from the exponential family (e.g., Normal, Poisson, Gamma, Multinomial, etc.; for further examples see Chapter 13, Cohen, Cohen, West, & Aiken, 2003). The mean of yi, μi ≡ E(yi), is linked to a semiparametric predictor, ηi, expressed as:

| (2) |

via ηi = g(μi), where g is a link function that maps the mean of yi to ηi, and g−1(ηi) is the reverse transformation that converts ηi into μi. The first and last terms constitute the usual parametric components in standard linear mixed effects models; and the second, third, and fourth terms are nonparametric components (i.e., of unknown functional forms) wherein the effects of a series of covariates on the mean of the transformed dependent response variable are of unknown functional forms. Specifically, Xi is a 1 × nβ design vector that contains person i’s fixed effects components; β is the corresponding nβ × 1 vector of fixed effects parameters; Zi is the 1 × nb random effects design vector for person i; and bi~N(0,ψb) is a vector of random effects assumed to be multivariate normally distributed with zero means and positive-definite covariance matrix, ψb. The terms f1,k(.), f2,c,o(.) and f3,s,q(.) are nonparametric functions involving the covariates in different ways.4 The covariates that appear in each smooth function may be different, and we thus use different indices and subscripts to distinguish the covariates that appear in the three sets of smooth functions.

The term f1,k (.) is the smooth function of the kth covariate, x1,k,i (k = 1, …, K). For instance, if yi represents depression for person i and x1,k,i represents the anxiety of person i, f1,k (.) then captures the unknown association between depression and anxiety across all the individuals in the sample. This is specified as s(x1,k) in the model specification portion of the “gamm” function. Because a nonparametric function is used, the resultant smooth (approximation curve) may be linear or nonlinear. The third term allows the smooth function for the oth covariate, f2,c,o(x2,o,i), c = 1, …, C; o = 1, …, O), to depend on, or interact with another (unsmoothed) covariate (Ahmad, Leelahanon, & Li, 2005; Hastie & Tibshirani, 1993). In GAMM, the unsmoothed covariate is specified in the model specification line using the “by” keyword to specify a varying-coefficient model (Ahmad et al., 2005; Chow, Zu, Shifren, & Zhang, 2011; Hastie & Tibshirani, 1993; Shiyko, Lanza, Tan, Li, & Shiffman, 2012), in which the effect of the cth covariate is assumed to vary nonparametrically but smoothly over another covariate such as time or geographical regions. Finally, the term f3,s,q (s = 1, …, S; q = 1, …, Q) is a tensor product used to approximate the unknown but possibly jointly nonlinear effects of a pair of covariates on ηi.

Typically, when researchers fit VAR-type models in a regression framework, one approach is to create p new lagged variables for the intended lag order. So for four lags, four new variables will have to be included in the data set and model (e.g., Chow, Haltigan, & Messinger, 2010b). However, this requires a priori decision on p, the maximum order in the VAR(p) process. Specifying an arbitrarily large value for p, in contrast, would lead to a large number of independent variables and correspondingly, missingness in these independent variables. Using GAMM for this exploratory stage, we estimate one lag variable which contains all possible lags; differences across lags are only distinguished by means of a covariate, time differences (i.e. Δt). Thus, in this exploratory stage, we fit lags using the varying-coefficient model in GAMM (using the third term, f2,c,o(x2,o,i), c = 1, …, C; o = 1, …, O) and thereby estimate the linear association between lagged variables and the outcome as a smooth function of non-linear time differences.

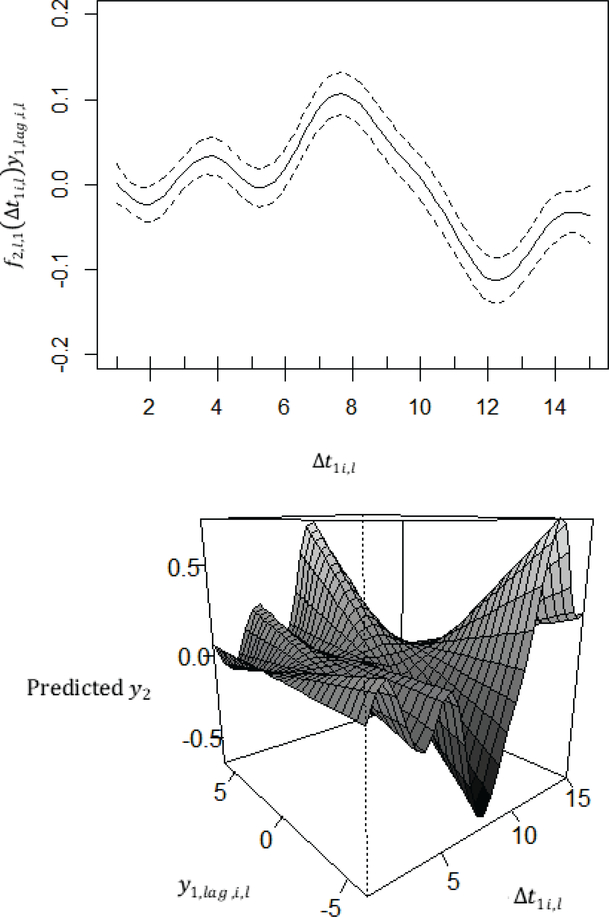

See Figure 2 for an illustration of this exploratory stage when used to detect significant lagged associations using simulated data from a model with non-zero lags of 8 and 12 (see multivariate example for greater details). These lags are evident as peaks and valleys whose 95% confidence intervals did not include zero at time differences of 8 and 12.5 These plots also highlight one property or limitation of using the exploratory stage of DTVEM alone. That is, in VAR processes – the kind of group-based processes modeled in DTVEM at the moment – the effects of earlier lags would still linger at later lags unless the effects of the earlier lags have been partialled out. This “limitation” is similar in nature to the inadequacy of diagnosing lag structures in AR processes using auto- as opposed to partial autocorrelation functions. Thus, results from the exploratory step cannot be interpreted alone, but rather lags identified in the exploratory stage are passed on to the confirmatory state-space framework.

Figure 2.

These figures present the output from the exploratory stage of DTVEM. In the first figure, the solid black line represents the predicted values, and the dashed lines represent the corresponding 95% confidence intervals. The data generating model were simulated to have non-zero lags 8 and 12. The label f2,l,1(Δt1i,l)y1,lag,i,l depicts the smoothed weight of y1,lag,i,l on yi,l at every possible value of Δt1i,l.

Social and behavioral scientists are often interested in the simultaneous estimation of outcomes within a single model to investigate lead-lag relationships among multiple dependent variables. In this case, Equation (2) can be extended to involve M dependent variables using the dummy variable indicator approach by MacCallum, Kim, Malarkey, and Kiecolt-Glaser (1997). Because the linear state-space model is parametric in nature, nonparametric (possible nonlinear) time trends identified in this exploratory stage are first removed (yielding group-detrended data) before proceeding to the confirmatory model.

In sum, estimating the associations between the predictor and the outcome across a smooth of non-linear time differences help one to detect optimal lag structures. Moreover, this smoothing procedure helps to retain only the important lags for the confirmatory stage.

Confirmatory Stage: Verification of Lag Structure via a Confirmatory VAR(p) Model

The primary utility of DTVEM is estimating confirmatory VAR(p) models (see Equation (1)). As example consider a VAR(3) with two dependent variables, which would be expressed as:

| (3) |

where y1,i,t and y2,i,t represent the vector of observed dependent variables measured on occasion t. The matrices A1, A2, and A3 are each 2 × 2 matrices in this particular example, with the diagonal entries corresponding to autoregression coefficients for the two dependent variables at lags 1, 2 and 3, respectively, whereas the off-diagonal entries represent the cross-regression coefficients at those lags.

If the user opts to use the exploratory stage, not all of these auto- and cross-regression coefficients illustrated in Equation (3) would need to be freed up and estimated all at once. Rather, subsets of these coefficients, as identified to be significant within the exploratory stage, are freed up and estimated. Alternatively, the user can opt to estimate all auto- and cross-regressions if they so desire. Our particular confirmatory approach of specifying a VAR(p) process with known lag structure as a state-space model and obtaining the associated maximum-likelihood parameter estimates by optimizing the so-called “prediction error decomposition function” is known to yield satisfactory point and standard error estimates when the correctly specified model is fitted (Chow et al., 2010a; Harvey, 2001).

Additional Exploratory and Confirmatory Iterations

Following the confirmatory stage, the user is given the choice to repeat additional exploratory stages to ensure that all potentially statistically significant lags are identified, while controlling for the effects that were significant in the confirmatory stage. If the user opts for additional exploratory stages, the varying-coefficients from the first exploratory iteration are nearly identical. However, lagged responses at particular lags found to yield statistically significant coefficients in the confirmatory stage were included as additional columns in Xi in Equation (2), and removed from the list of predictors with varying-coefficients, f2,c,o(.) in Equation (2). This additional exploratory stage essentially partials out the effects from those particular lags before other lags are evaluated again. Any newly identified lags are then iteratively re-estimated with the confirmatory stage. If this is elected by the user, this process is repeated iteratively until no new peaks or valleys are identified. At the end of the DTVEM function, DTVEM outputs the last confirmatory state-space estimates.

All the stages of the DTVEM models are fully automated in the R function illustrated in the Appendix section. In practice, a variety of spline functions or penalized spline functions may be used to obtain the smooths [i.e., all terms involving f(.)] in these equations. In DTVEM, we use the thin-plate regression splines, which are a generalization of natural cubic splines (Bookstein, 1989), meaning that smooth curves are constructed from a series of higher-order polynomials with specific constraints that these functions must be smooth (Wood, 2003). Thin-plate regression splines use an eigenvalue decomposition to pick the basis coefficients that can explain the greatest variance. Thin-plate regression splines have the advantages of (1) not requiring a researcher to choose knot locations, thereby reducing subjectivity in modeling and otherwise having optimal bases (Wood, 2006) and (2) better accommodating a high number of predictors than other spline regression methods.

In summary, DTVEM was built to allow users to easily explore lags while reducing the search space by utilizing varying-coefficient models in GAMM and next using confirmatory state-space models to estimate optimal lag structures in the VAR framework.

Empirical Example

The following will demonstrate the utility of DTVEM using an empirical problem involving the time course of anxious symptoms in daily life. Anxious moods are often thought to constitute the co-occurrence of several symptoms, including both feelings and physiological activation (Lang, McTeague, & Bradley, 2016). Recent movements have been particularly focused on the examination of these symptoms within one integrated framework (Cuthbert & Insel, 2013).

In contrast to theories about anxiety and physiological activation being concomitant with one another, there is considerable evidence that physiological measurements and self-reported anxious moods often have low associations when measured concurrently (Hodges, 2015; Mauss, Wilhelm, & Gross, 2004; Morris & Liebert, 1970). Rather than representing a single unitary construct, recent theories suggest that anxiety may be apprehended through multiple subsystems (for example, somatic arousal, anticipation of physiological arousal, avoidance of arousal are all considered integrated subsystems) that are connected to one another over different timescales (Epskamp et al., 2018; Frank, Jacobson, Hurley, & McKay, 2017). Although quite recent, such theories of a temporal relationship between physiology and subjective anxiety build on classical conceptualizations that physiological responses and the perception of emotion precede and predict one another over hours in a day (Cannon, 1927; Lange & James, 1922). Importantly, the interaction between physiology and feelings of anxiety are thought to inform the manifestation and maintenance of anxiety disorders (Frank et al., 2017). Consequentially, this relationship is crucial to the study of the nature of psychopathology within daily life.

In line with such theories, there is evidence that cognitive anxiety processes and negative emotions are associated with physiological activation later in the day and during the subsequent night (Brosschot, Van Dijk, & Thayer, 2007; Brosschot & Thayer, 2003). There also has been suggestion that this may be due to the effects of anxiety on the neuroendocrine system, which can lead to prolonged heart rate over the span of hours (Mergler & Valcciukas, 1998). Nevertheless, the optimal time in which the perception of anxiety and physiological activation predict one-another remains unknown (Epskamp et al., 2018).

To date, no researcher has examined lead-lag relationships between perceptions of anxious moods and physiological reactions over hours (Barrett, Quigley, Bliss-Moreau, & Aronson, 2004). Studying the temporal course of anxious moods over hours or even days in conjunction with lagged changes in physiological responses, such as heart rate, could hasten understanding of the phenomenology of anxious moods, and have implications regarding the intersection of multiple units of analysis of pathological systems (Cuthbert & Insel, 2013). For example, if self-reported anxiety predicts later heart rate, treatment might focus on cognitive or emotional-processing therapy to prevent later increases in heart rate (Frank et al., 2017). In contrast, if physiological activation is a “leading indicator” of the perception of self-reported anxiety, it may suggest that anxious moods may be due to noticing changes in physiological responses (Epskamp et al., 2018). In this case, treatment might focus on relaxation techniques to directly target the physiology.

The current empirical example was based on a set of ecological momentary assessment data collected every hour that subjects were awake. Most participants (N = 159) completed 68.5% of prompts. The Profile of Mood States (POMS) “nervous” item was used on a 0 – 100 slider. Heart rate was measured with an open-source application which used the camera on smartphones (Wetherell, 2013). The application used photoplethysmographic signals that were obtained by taking pictures of the color changes in the index finger when the finger was pressed against the phone’s camera. The application ran for 30 seconds, and average heart rate was measured during this time. This method of obtaining heart rate through smartphone applications has been validated (Scully et al., 2012) and had high convergence with traditional measures (r =.98 – 1.00 with heart rate) (Bolkhovsky, Scully, & Chon, 2012). For this illustrative data, the lack of a priori knowledge about the time lagged associations in the data motivated our use of DTVEM.

As participants were prompted once per hour, the data were broken down into hourly segments for the analyses. There was a total of 7,509 data points collected out of a possible 26,880 (if prompts had occurred evenly, and each person had a complete sampling of every period). Thus, based on the sampling period of interest, the data were 28% complete. Because there was a theorized bi-directional relationship between nervousness and heart rate in the literature, a bivariate model was considered.

Note that the exploratory stage of DTVEM was fitted using the following equation:

NRonNR represents the autoregressive effect of nervousness, NRonHR represents the cross-regressive effect of nervousness on heart rate, HRonNR represents the cross-regressive effects of heart rate on nervousness, and HRonHR represents the autoregressive effects of heart rate. Among the results available from the exploratory stage were nonparametric time trends at the group level, as shown in Figure 3. In regard to the time trends, nervousness showed significant time trends, that were highest at the first hours in the study and peaked again approximately 60 hours later. Interestingly, heart rate began to decrease at this time. Notably, participants arrived for a compliance check at approximately this time, and this systemic increase in nervousness may coincide with the evaluation of their compliance.

Figure 3.

These figures depict the time trends of nervousness and heart rate over time for the population.

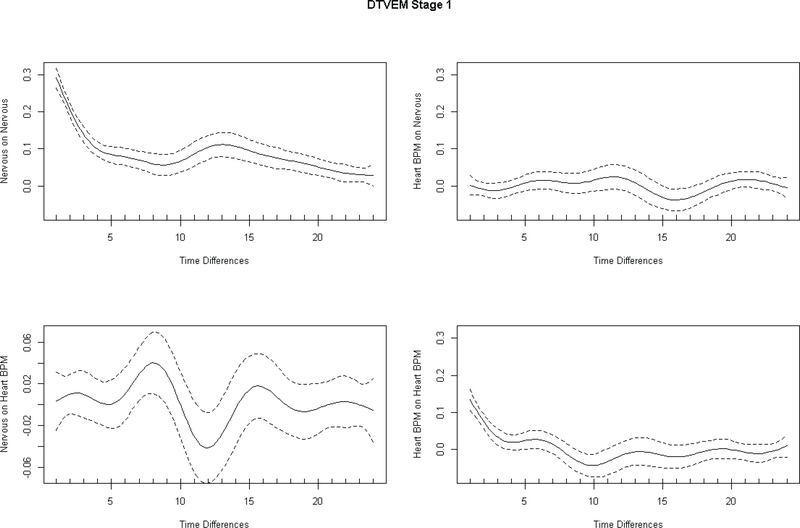

The exploratory stage showed significant AR effects of nervousness and heart rate, such that the strongest effects of nervousness on nervousness occurred one to four hours later and peaked again 14 hours later. The exploratory stage of DTVEM showed the strongest AR effects of heart rate on itself was one to three hours later. In regard to cross-regressive trends, the exploratory stage of DTVEM suggested that nervousness may positively predict heart rate 7 and 8 hours later and negatively predict heart rate 12 hours later. The exploratory stage of DTVEM suggested that heart rate may positively predict nervousness 15, 16, and 17 hours later. See Figure 4 for a summary of the exploratory stage varying-coefficients.

Figure 4.

The results depict the exploratory stage of DTVEM for nervousness and heart rate predicting themselves and each other. The solid lines depict the autoregressive and cross-regressive coefficients over all possible time differences. The dashed lines depict the confidence bands of the regression coefficients.

Following the optional exploratory stage, time trends were modeled by focusing solely on nervousness and heart rate time smooths. The residuals of this model were then passed on to the state-space confirmatory stage of DTVEM.

The final state-space confirmatory results indicated that nervousness predicted itself one hour later (α1,1 = 0.275, = 0.012, < .001), three hours later (α1,3 = 0.071, = 0.014, < .001), fours later (α1,4 = 0.075, = 0.014, < .001), 12 hours later (α1,12 = 0.077, = 0.020, < .001), and 14 hours later (α1,14 = 0.082, = 0.019, < .001). The many significant AR terms associated with nervousness may suggest that the dynamics of nervousness are complex and conform the multiple time scales. These significant AR terms may also reflect person-specific time scales and individual differences, which were not modeled. In contrast, heart rate significantly predicted itself one (α2,1 = 0.144, = 0.015, < .001) and three hours later (α2,3 = 0.037, = 0.016, = .020). The cross-regressive relationships suggested that nervousness significantly predicted heart rate 8 hours later (γ1,2,8 = 0.055, = 0.020, = .007). Interestingly, nervousness significantly negatively predicted heart rate 12 hours later (γ1,2,12 = −0.052, = 0.024, ) = .030). Next, we compared the magnitude of the effect of nervousness on heart rate 8 hours later compared to the effect of 12 hours later by removing the lagged effects alone and examining the differences in model fit by means of a likelihood ratio test. The change in model fit (−2*log likelihood) was greater when the cross-regressive coefficient of 8 hours was removed (−2 LL = 7.29 with 1 df), compared to that observed when the coefficient for 12 hours later was removed (−2LL = 4.68 with 1 df). These results indicate that the effect of nervousness on heart rate was stronger 8 hours later than 12 hours later. Notably, the model was tested for stationarity, and was stationary (based on all the values of the modulus of the roots of the determinant of the identity matrix minus the transition matrix being greater than one).

In sum, we did not find a bidirectional relationship between nervousness and heart rate, but rather, that nervousness unidirectionally drove the degree of heart rate 8 and 12 hours later. A hormonal response may explain the significantly positive relationship followed by a significantly negative relationship between nervousness and later heart rate. Specifically, nervousness predicts the release of norepinephrine, and norepinephrine has a positive association with heart rate that quickly dissipates over approximately the same number of hours (Mergler & Valcciukas, 1998). This illustrates the utility of DTVEM in providing a convenient automated framework to estimate unknown lag structures.

Simulation Study

A simulation study was performed to test the performance of DTVEM in detecting optimal lag structures in processes that unfold over diverse time scales. Our goal was to consider sample size and design conditions that mirrored empirical processes of varying complexity (e.g., involving processes unfolding over a single vs. multiple time scales, with univariate vs. multivariate data, and complete vs. missing data).

First, we chose one of the most common AR structures in the behavioral sciences, an AR model of the order 1 with two sample size configurations: (1a) T = 100, N = 1, a configuration commonly utilized in the time series literature to evaluate finite-sample performance of statistical approaches; and (1b) T = 14, N = 100, as commonly seen in many ILD studies in psychology (Armstrong et al., 2010; Beidel, Neal, & Lederer, 1991; Roche et al., 2016; Steger & Frazier, 2005). Secondly, we simulated a complex AR structure with sharp shifts using a Seasonal Autoregressive Integrated Moving Average (SARIMA, with N = 100, T = 14) (Box et al., 2013; Box & Jenkins, 1976; Brockwell & Davis, 2002; Reinsel, 2003).

Thirdly, we wanted to test a typical multivariate case (N = 159, T = 170) that mirrored the irregular measurement intervals and complex lag structure and found in the empirical example. Our goal was to evaluate the performance of the DTVEM in recovering complex group-based, multivariate, lag structure using multiple-subject data. This simulation study served to extend the results from previous simulation studies in which researchers compared inferential results obtained from fitting discrete-time models to unequally spaced assessments that were binned or blocked together at pre-specified windows (e.g., hourly, every 4 hours) with missingness inserted, where appropriate, to create a set of equally spaced data. These designs tend to be highly prevalent in ILDs (Ebner-Priemer, Eid, Kleindienst, Stabenow, & Trull, 2009), and blocking irregularly-spaced data at equally spaced time windows with missingness inserted where appropriate is a relatively common way of handling irregular spacing in ILDs (Silvia, Kwapil, Walsh, & Myin-Germeys, 2014).

Researchers (de Haan-Rietdijk, Voelkle, Keijsers, & Hamaker, 2017) have found in a previous simulation study that applying this method of data blocking to single-subject, equally-spaced time series blocked over successive windows yielded inferential results that closely approximated those obtained from continuous-time models in situations involving a lag-1 structure. However, this method of blocking was not as successful in approximating continuous-time models in other scenarios involving continuous-time models that capture higher-order changes (and hence higher-order lag structure in their discrete-time counterparts), particularly when the time intervals used to define successive lags are large relative to those used in data generation (de Haan-Rietdijk et al., 2017). In other words, de Haan-Rietdijk et al. considered scenarios where the time intervals used in data generation might be different from those used to define the lags (e.g., measurement occasions that were separated by an interval of Δt = 2, as opposed to those separated by Δt =1, were specified as one lag apart). Here we generated data using discrete-time models in which Δt was set to 1.0 both in the data generation and model-fitting process. Our goal is to determine, under situations with correctly specified Δt, the performance of the DTVEM when applied to multi-subject data with an unknown lag structure, a large amount of missing data (72%), and small signal-to-noise ratios.6

We conducted 500 Monte Carlo (MC) simulations for each of the three primary modeling variations considered. These variations include: (1a): an AR(1) model with N = 100, T = 14, (1b) an AR(1) model with n = 1, T = 100, (2) a SARIMA model with N = 100, T = 14, and (3) a multivariate case with N = 159, T = 170, and 72% missing data. The ratio between the simulated values and the total variance (i.e. signal to noise ratio) ranged from 0.10 to 0.60 (process noise equals was set to 2.25 for all models). The DTVEM was applied without any a priori knowledge about the lag structure and allowed to go through iterative cycles of the lag exploration and confirmatory VAR(p) model-fitting stage until no significant lags were identified in the additional exploratory stages of DTVEM.

Simulation 1: AR(1) Simulation

The first simulation was based on an AR process of order 1 (i.e., AR(1) process) with univariate equidistant time points and complete cases. Time series data were generated using one of two possible sample size configurations: (Simulation 1a) N = 100 persons over T = 14 time points, and (Simulation 1b) n = 1 person over T = 100 time points, using the simulation model:

In this formula, y is the outcome variable for person i at time t, εi,t represents the process noise for person i and time t, assumed to be normally distributed. The process noise was set to be 2.25, and, as such, the signal to noise ratio was 0.34.

Simulation 2: Seasonal Autoregressive Integrated Moving Average (SARIMA(1,0,0)×(1,0,0)7) Simulation

Next, we tested DTVEM using a process that unfolds over multiple time scales by pairing an AR model with a seasonal component (i.e. SARIMA, e.g., a day-to-day process embedded in a weekly cycle; hour-by-hour fluctuations with diurnal rhythms embedded) (Box & Jenkins, 1976; Brockwell & Davis, 2002; Reinsel, 2003). In this case, we included a seasonal component with a period of 7, formulated such that the current cycle was affected by the immediately preceding cycle. That is, there was an AR(1) component for this seasonal component, such that the current level of the process was affected by the level of the process 7 lags ago. Beyond this seasonal component, the process was hypothesized to also show lag-1 AR dynamics, yielding a SARIMA(1,0,0) × (1,0,0)7 model. Our specific choice of AR weights for the seasonal and non-seasonal components yielded the following combined model:

In this formula, y is the outcome variable for person i at time t, εi,t represents the process noise for person i and time t, assumed to be normally distributed. Note that the SARIMA has complex AR dynamics, as the process has a significantly positive lag at 7 and has a significantly negative lag one lag later.7 The signal to noise ratio for this model was 0.60.

Simulation 3: Multivariate Time Series Simulation with 72% Missingness

Thus far, all simulations of DTVEM have been univariate with no missingness. As such, we wanted to test the validity of DTVEM with multivariate data that more closely resembled typical-use scenarios with ILD. Inspired by the empirical example, we created a simulation condition using the same number of persons, with the same pattern of missingness, and the same lag structure to generate a more realistic scenario. Thus, the multivariate simulations were generated through two simulation equations:

In this formula, two time-series were simulated together. In these cases, y1 and y2 represented the two time series outcomes for person i at time t, and represent the process noise for person i and time t for, y1 and y2, respectively, which was assumed to be normally distributed with zero means. The process noise variances for both time series was set to 2.25 as in the other models. Note that there were five separate autoregressive lags for y1, at 1, 3, 4, 12, and 14; and there were two autoregressive lags for y2 at 1 and 3. Additionally, the outcome variable y2 was also influenced by y1 at a lag of 8 and 12. Thus, we increased the size of the lag coefficients to increase the signal to noise ratio within the data (between absolute value strengths of 0.1 to 0.3). Nevertheless, the signal to noise ratios were still quite small at 0.23 for y1 and 0.10 for y2.

Model Evaluation

All DTVEM estimates were examined through the following metrics: (1) number of auto- and cross-regressive parameters estimated, (2) mean estimated values across simulations (i.e. mean values); (3) the root mean square error (RMSE) which is ; (4) bias which is defined by ; (5) mean SE across MC runs as compared to the standard deviation of the estimates across MC runs; (6) mean relative deviance of the SE (RDSE) which is defined by ; (7) coverage which is the proportion of 95% confidence intervals whose values include the simulated θ across MC simulations; (8) power, defined as the proportion of parameter estimates for truly non-zero population parameters from the confirmatory stage across the MC runs whose 95% confidence intervals do not contain zero; (9) average Type I error rate, which is defined as the proportion of MC replications for which the 95% confidence intervals incorrectly identified any one spurious lag as statistically significant, (as averaged over all possible spurious lags considered); and (10) total Type I error rate, which is defined as the proportion of MC replications for which the 95% confidence intervals incorrectly detected one or more spurious lags. Note that we considered a maximum lag of 10 for each simulation. We compared the final confirmatory estimates from DTVEM to a state-space model with lagged structures that coincided with the data generation models. That is, to provide a benchmark against which the DTVEM estimates were compared, we obtained ML estimates under the “ideal” scenario that a priori knowledge concerning the lag structures was already available – a scenario that is often not fulfilled in practice. Estimation was performed using OpenMx (Neale et al., 2016) through state-space specification of a VAR(p) model and was subjected to the same evaluation criteria as the DTVEM.

Results

Simulation 1: AR(1) Results with No Missingness

For AR(1) with one person with 100 time points, and 100 persons with 14 time points results were grouped together because they were very similar. The exploratory stage passed an average of 3 autoregressive parameters to be estimated in the confirmatory stage (see Table 1). The summary of the findings is depicted in Table 2, and comparison results from using confirmatory state-space models with correctly specified lag structures are shown in Table 3. Power estimates from the DTVEM suggested that there was 99.8% and 100% power for detecting the truly non-zero parameters as being significantly different from zero for the two sample size configurations considered (with N = 1, T = 100 and N = 100, T = 14, respectively), compared to 100% power for both simulation conditions in the confirmatory models. Both DTVEM and the confirmatory models also showed 100% power to detect the truly non-zero AR(1) parameter and process noise variance across both simulations. For the N = 1 and T = 100 condition, we considered up to lag-10, and the average Type I error rate was 1.6% and the total Type I error rate was 11.8%. Likewise, for N = 100 and T = 14, the average Type I error rate was 1.6%, and the total Type I error rate was 8.6%. For both simulation conditions, the biases and RMSEs of the point estimates were very small and closely paralleled the biases and RMSEs from the confirmatory models. For both conditions, the standard error estimates also closely mirrored the “true” or empirical standard errors, and coverage rates for all time series parameters were close to the 95% nominal level. The results of this condition suggest that the combined DTVEM procedures led to excellent recovery of the lag structure and corresponding estimates in the absence of a priori knowledge concerning the lag structure. Unlike standard exploratory approaches such as PACF plots, DTVEM worked for both single-subject time series of moderate length as well as multiple-subject replicated (but short) time series.

Table 1.

Number of Parameters Estimated Based on Variable Selection Based on DTVEM Exploratory Stage

| Simulation | T | %M | # of Non-Zero Regressive Parameters | M Number of Regressive Parameters Estimated | SD # of Regressive Parameters Estimated | 5th Quantile of Number of Regressive Parameter Estimates | 95th Quantile of Number of Regressive Parameter Estimates |

|---|---|---|---|---|---|---|---|

| AR(1), N = 1 | 100 | 0 | 1 | 3.288 | 1.926 | 1 | 7 |

| AR(1), N = 100 | 14 | 0 | 1 | 3.948 | 1.767 | 1 | 7 |

| SARIMA | 14 | 0 | 3 | 4.092 | 0.939 | 3 | 6 |

| Multivariate | 170 | 72 | 9 | 27.484 | 4.473 | 21 | 36 |

Note. This table predicts the number of auto- and cross-regressive parameters estimated based on significant lags extracted from the exploratory stage of DTVEM. In all simulations, DTVEM extracted a larger number of parameters than non-zero parameters on average. Nevertheless, these simulations demonstrated, the exploratory stage of DTVEM reduced the number of parameters estimated in confirmatory models substantially across every simulation in every case.

Table 2.

Summary of DTVEM Confirmatory Stage Estimates of Complete Data

| Simulation | T | Term | Simulated θ | Mean | RMSE | Bias | SD of | Mean of SE | RDSE | Coverage of 95% CI | Power |

|---|---|---|---|---|---|---|---|---|---|---|---|

| AR(1), N = 1 | 100 | AR(1) | 0.500 | 0.449 | 0.051 | −0.051 | 0.092 | 0.090 | −0.031 | 0.906 | 0.998 |

| AR(1), N = 1 | 100 | ζy,i,j | 2.250 | 2.152 | 0.098 | −0.098 | 0.315 | 0.305 | −0.033 | 0.882 | 1.000 |

| AR(1), N = 100 | 14 | AR(1) | 0.500 | 0.499 | 0.001 | −0.001 | 0.024 | 0.024 | 0.015 | 0.964 | 1.000 |

| AR(1), N = 100 | 14 | ζy,i,j | 2.250 | 2.265 | 0.015 | 0.015 | 0.091 | 0.086 | −0.060 | 0.938 | 1.000 |

| SARIMA | 14 | AR(1) | 0.500 | 0.510 | 0.010 | 0.010 | 0.025 | 0.025 | −0.021 | 0.918 | 1.000 |

| SARIMA | 14 | AR(7) | 0.400 | 0.393 | 0.007 | −0.007 | 0.039 | 0.037 | −0.039 | 0.936 | 1.000 |

| SARIMA | 14 | AR(8) | −0.200 | −0.209 | 0.009 | −0.009 | 0.038 | 0.041 | 0.093 | 0.956 | 0.832 |

| SARIMA | 14 | ζy,i,j | 2.250 | 2.407 | 0.157 | 0.157 | 0.109 | 0.097 | −0.108 | 0.634 | 1.000 |

Note. This is the summary of the DTVEM confirmatory stage estimates. Simulation refers to the way in which the data were simulated. T refers to the total number of time points for each person. AR refers to whether the simulated model term of interest was autoregressive. The symbol: Δt refers to the time difference in which the model was simulated. These results depict the (1) simulated θ (the ways in which the data were simulated), (2) mean values, (3) the root mean square error (RMSE) which is (4) bias which is defined by , (5) standard deviation of the across Monte Carlo runs, (6) mean standard error (SE) across Monte Carlo runs, (7) mean relative deviance of the SE (RDSE) which is defined by , and (8) power, defined as the proportion of parameter estimates from the confirmatory stage across the MC runs whose 95% confidence intervals do not contain zero.

Table 3.

Summary of Confirmatory State-Space Estimates of Complete Data

| Simulation | T | Term | Simulated θ | Mean | RMSE | Bias | SD of | Mean of SE | RDSE | Coverage of 95% CI | Power |

|---|---|---|---|---|---|---|---|---|---|---|---|

| AR(1), N = 1 | 100 | AR(1) | 0.500 | 0.496 | 0.004 | −0.004 | 0.086 | 0.087 | 0.011 | 0.954 | 1.000 |

| AR(1), N = 1 | 100 | ζy,i,j | 2.250 | 2.242 | 0.008 | −0.008 | 0.319 | 0.317 | −0.005 | 0.926 | 1.000 |

| AR(1), N = 100 | 14 | AR(1) | 0.500 | 0.501 | 0.001 | 0.001 | 0.024 | 0.024 | 0.021 | 0.958 | 1.000 |

| AR(1), N = 100 | 14 | ζy,i,j | 2.250 | 2.271 | 0.021 | 0.021 | 0.092 | 0.087 | −0.055 | 0.936 | 1.000 |

| SARIMA | 14 | AR(1) | 0.500 | 0.514 | 0.014 | 0.014 | 0.024 | 0.025 | 0.019 | 0.902 | 1.000 |

| SARIMA | 14 | AR(7) | 0.400 | 0.399 | 0.001 | −0.001 | 0.035 | 0.035 | −0.007 | 0.948 | 1.000 |

| SARIMA | 14 | AR(8) | −0.200 | −0.210 | 0.010 | −0.010 | 0.037 | 0.040 | 0.078 | 0.956 | 1.000 |

| SARIMA | 14 | ζy,i,j | 2.250 | 2.389 | 0.139 | 0.139 | 0.098 | 0.097 | −0.011 | 0.712 | 1.000 |

Note. This is the summary of the confirmatory state-space estimates across each of the simulations with complete data. Simulation refers to the way in which the data were simulated. T refers to the total number of time points for each person. AR refers to whether the simulated model term of interest was autoregressive. The symbol: Δt refers to the time difference in which the model was simulated. These results depict the (1) simulated θ (the ways in which the data were simulated), (2) mean values, (3) the root mean square error (RMSE) which is , (4) bias which is defined by , (5) standard deviation of the across Monte Carlo runs, (6) mean standard error (SE) across Monte Carlo runs, (7) mean relative deviance of the SE (RDSE) which is defined by , (8) coverage which is the proportion of simulated values that fall within the 95% confidence intervals across Monte Carlo simulations, and (9) power, defined as the proportion of parameter estimates across the MC runs whose 95% confidence intervals do not contain zero.

Simulation 2: SARIMA(1,0,0)×(1,0,0)7) Results with 14 Time Points with No Missingness

The DTVEM estimates and SE were similar to those from previous AR(1) conditions. Based on the variable selection in the exploratory stage, an average of 4 autoregressive parameters were estimated in the confirmatory stage (see Table 1). The process noise variance estimate for ζy,i,j was characterized by slight increases in RMSE and bias compared to the AR(1) conditions, reflecting the increased difficulties in estimating this parameter with more complex lag structures and limited time series length. The power was slightly decreased for part of the estimates compared to prior models, with DTVEM showing 100% power to detect the non-zero lag values of 1 and 7, and 98.4% power in detecting the lag of 8. The high power across conditions is noteworthy given the small value of the simulated lag-8 coefficient and the limited replications of data spanning 8 lags apart. For the 7 lags considered (we considered up to lag-10, and thus lags 2–6, 9–10 were truly zero), the average Type I error rate was 1.2%, and the total Type I error rate was 8.6%. As with previous results, the estimates and standard errors were satisfactory and closely mirrored those from the correctly specified confirmatory state-space model (see Table 3).

Simulation 3: Multivariate Time Series Results with 72% Missing Data and a Complex Lag Structure

The DTVEM point and SE estimates in this model showed trends similar to previous models (i.e. satisfactory point and SE estimates that closely mirrored the correctly specified confirmatory model; see Tables 2–3). Based on the variable selection in exploratory stages, an average of 27 auto- and cross-regressive parameters were estimated in the confirmatory stage (see Table 1). This means that 55% of the potential lags were eliminated from the exploratory stage (relative to only 15% of potential lags which were simulated to be non-zero in the population). Coverage rates for all other parameters were close to the .95 nominal level. Power and Type I error rates were similar to prior models with DTVEM showing approximately 100% power in detecting both the lag-1, lag-3, lag-4, lag-12, and lag-14 autoregressive parameter of y1, power in detecting the lag-8 and lag-12 cross-regressive of y1 on y2, and the lag-1 autoregressive parameter y1. Nevertheless, the power in detecting lag-3 autoregressive parameter y2 was 61%, likely resulting from very low signal-to-noise ratio. Regarding the AR relationships with y1 as an outcome, the average Type I error rate was 2.8%, and total Type I error rate was 10.0%. For the AR relationships with y2 as an outcome, average Type I error rate was 2.1%, and total Type I error rate was 8.3%. The average Type I error rate for the cross-regressive relationship of y2 on y1 was 1.1%, and the total Type I error rate was 8.3%. For y1 on y2, the average Type I error rate was 3.1%, and the total Type I error rate was 15.4%. Overall, these results suggest a slight increase in difficulties for DTVEM to simultaneously identify the correct lag structures when multiple constructs were involved with a complex lag structure, and there was a large proportion of missing data. Nevertheless, the high power for detecting most of the truly non-zero lag coefficients, and the relatively low average and total Type I error rates of the DTVEM in such a challenging scenario provide some reassurance of the proposed approach’s utility in handling complex lag structures in other similar empirical settings.

Summary of Simulation Results

The simulations suggest that DTVEM has high exploratory power and low Type I error rates across both univariate and bivariate models with no a priori information concerning the lag structures. DTVEM’s point estimates were accurate across the simulation conditions. The SE estimates were unbiased for all conditions without missing data or with 72% of the data missing. The computational time needed for the R DVTEM program to manipulate and perform the analyses was under two minutes for each of the current simulations, except for the multivariate simulation which took approximately 2 hours to run (note that this is primarily due to an expansion of the Hessian matrix in the state-space models where the estimation process is number of subjects multiplied by the number of potential lags squared = (159*30)2 = 26,010,000).

Discussion

This paper describes a convenient user-accessible exploratory and confirmatory tool, DTVEM, which allows users to model time lags in the state-space framework. Using a series of simulation examples, we found that DTVEM had high power in the detection of optimal lag structures, low Type I error rates, accurate point estimates, and accurate SEs as long as sufficient measurement occasions were available to recover higher-order lags. The simulation results support the utility of DTVEM as an exploratory tool over the partial autocorrelation function with multi-subject data. The simulation results are particularly notable given the high dimensionality of the lags considered. For example, the multivariate simulation across 15 lags with two dependent variables is analogous to 30 independent predictors across each of the two outcomes (i.e. 60 potential regression estimates). Thus, DTVEM demonstrated high performance across a diverse number of conditions with high dimensionality, low signal to noise ratio, and high missingness.

Within the behavioral sciences, DTVEM can be used to answer a variety of research questions involving different ILDs such as daily diary studies, ecological momentary assessments, physiological, and neurological data collection. Examples of research question the DTVEM can facilitate include: (1) Does one construct predict itself or another construct at a later time? (this could be detected through auto- and cross-regressive lags); (2) When does one construct maximally predict other constructs? (this could be detected through the consideration of many distinct lags beyond lag 1); and (3) Are oscillatory or other cyclic patterns present in my data? Such patterns are typically manifested as oscillatory patterns in the lag coefficients, such as significant positive lag coefficients followed by significant negative lag coefficients, or vice versa. Weekly cycles may be revealed through the inspection of statistically significant coefficient at lag 7 with daily data, whereas a yearly cycle can be capture as significant lag-12 coefficient with monthly data). Taken together, DTVEM has a substantially broad application and has considerable utility for a number of research paradigms. For example, DTVEM would allow (1) psychopathology researchers to uncover maintenance or short-term risk factors within psychopathology (i.e. where within-person variation in one construct is largely driven by within-person variation in another construct), (2) personality and social psychology researchers to explore dynamics in behavior across several time periods, and (3) researchers to explore how and when developmental processes occur (for example, how and when does a mother’s behavior influence a child’s behavior, and vice-versa).

The DTVEM comprises relatively well-known (specifically, GAMM and state-space estimation) subroutines and as such, may not be seen as a novel tool. However, DTVEM and the results highlighted in this paper make several novel contributions to the field in a number of ways. Firstly, DTVEM can be seen as a utility function that features novel integration and application of some of the key strengths of the GAMM and state-space modeling frameworks toward the issue of lag detection. Secondly, this novel combination of GAMM and state-space subroutines results in high detection of the simulated lag structure with satisfactory point estimates, reasonable standard error estimates, and relatively high power. Additionally, this paper builds on prior findings that evaluated the feasibility of drawing inferential conclusions concerning the dynamics of a system when discrete-time models are fitted to irregularly spaced data that are binned at equally spaced time blocks (de Haan-Rietdijk et al., 2017). Our stimulation results extend those findings in three notable ways: (1) results suggest that this method of binning irregularly spaced data – which inevitably leads to large proportions of missingness -- yielded reasonable inferential results with DTVEM when the lag order used in model fitting mirrored the true lag order in data generation defined with Δt = 1; (2) they provide an assessment of the extent to which variable selection tools in the regression framework such as GAMM can be used to uncover unknown but potentially complex lag structures; and (3) they reveal the quality of the point and standard error estimates, as well as the strengths and limitations of the proposed approach when used to estimate VAR models of complex lag structures with little a priori knowledge on the lag structures of the data. Lastly, by offering this tool within a convenient user-accessible format, it allows users who are not overly familiar with either GAMM or state-space modeling frameworks to run these models in a more accessible and highly automated fashion.

One of the most important aspects of the DTVEM is its ability to easily help users visualize and explore possible changes in the dependent variables as linear as well as non-linear functions of time, time differences, and other variables implicated in the system’s dynamics. For instance, while many systems that conform to a simplex structure (Guttman & Guttman, 1965) show reduced autocorrelations between occasions that are closely spaced in time than those that are further apart – thereby manifesting a monotonic decrease in autocorrelations with an increase in time differences (or time lags) – abundant examples exist that point to the contrary. Consider the results of the empirical example, in which nervousness predicted heart rate 8 and 12 hours later. In this case, the partial cross-correlational structure of the data changes as a non-monotonic function of time differences as the cross-regression effect of nervousness on heart rate does not degrade monotonically as time elapses – as would be predicted by a simplex data structure – but rather shows abrupt jumps at particular lags. Processes that are characterized by only higher-order lags are also commonly seen in seasonal (e.g., diurnal, weekly, monthly, annually) processes that only manifest significant associations with prior levels at specific lags (e.g., a strictly weekly process may only show a significant lag at a time interval of 7 but no other lower-order lags). The auto- and cross-correlational structures of empirical data may also show further dependencies on other variables, such as time (Chow et al., 2011), spatial region (Crainiceanu, Ruppert, Carroll, Joshi, & Goodner, 2007), and other intra- and inter-individual difference characteristics (e.g., Chow & Zhang, 2013).

Other approaches have been previously used to select and model optimal lag structures. For example, Ho, Shumway, and Ombao (2006) used the Bayesian information criterion (BIC) to perform model selection in VAR(1) model. Such an information criterion-based approach requires that a researcher fits all possible candidate models and compares their BIC values before selecting the final model. Naturally, the number of candidate models considered is typically small (e.g., Ho et al. (2006) only considered up to 1 lag) to render the model comparison process computationally feasible. As an example, consider the number of models required for 2 variables with 15 time lags. This would require fitting 60 separate models (there are 60 possible lag coefficients), followed by a possibility of exploring all potentially significant lags. This could in practice require a total of 60! = 8.32 × e81 models to be fit if selection was based on the BIC. Higher-order lags might be ignored and as we have illustrated, such omission can lead to fundamentally incorrect inferential conclusion. In addition, this approach alone is only practically feasible in particularly small-use scenarios (i.e. both small number of variables and a small number of lags).

Admittedly, DTVEM is designed to be a preliminary tool. As an example, if lags of 3 and 12 were identified by means of DTVEM in a study involving hourly measurements, this then raises the question of whether the process of interest should be modeled as an AR process with non-zero coefficients at lags 3 and 12 (an AR(3,12) process) or an AR process with non-zero coefficients at lags 1 and 4 (an AR (1,4) process), with each lag spacing 4 hours apart. Ultimately, DTVEM, as it stands, is meant to serve as an accessible exploratory tool – a first step toward identifying the proper lag structures for a specific class of models. The final choice and model formulation still depend on characteristics of the constructs under study and the change characteristics expected of them.

In addition to the strengths of the DTVEM, it is important to note the approach also has limitations and other unresolved issues. Firstly, DTVEM operates only to detect the optimal lag structure in discrete-time VAR(p) models. In the future, DTVEM could be modified to allow continuous-time modeling variations (e.g., Oud, 2004) or other hybrid continuous-discrete variations (Bar-Shalom, Li, & Kirubarajan, 2001). However, just like lag selection is a central issue in fitting discrete-time VAR models, users interested in fitting continuous-time models have to select the order of their models (i.e., the highest-order changes, or derivatives, involved in the model), which usually take on the form of ordinary differential equations (ODEs) and stochastic differential equations (SDEs). Thus, continuous-time models are not immune from the issue of lag selection, although they do have some advantages in that the results from continuous-time models are not affected by the measurement time intervals used in a particular study, whereas time intervals and lags can be confounded in discrete-time models. Nevertheless, dominant continuous-time models have their own limitations and computational difficulties. For example, the exact discrete-time approach advocated by Voelkle, Oud, Davidov, and Schmidt (2012) requires one to work with the solutions of stochastic differential equations (SDEs). Not all SDEs have known analytic forms and even when they do, the solutions can take on very different forms depending on the order of SDEs, and often require the specification of complex constraints in the model-fitting process. Results from models that include high-order derivatives (e.g., beyond rates of change and changes in the rates of change) may also be difficult to interpret substantively. In contrast, there is a well-established statistical and econometric literature on how to diagnose, explore, estimate, and interpret results from discrete-time models such as SARIMA models. Finally, there are some special cases of discrete-time models that cannot be obtained in the continuous-time framework (e.g., AR(1) process with AR(1) weight of −1). We limited ourselves to lag detection in a discrete-time framework in the present article. Future studies should extend this line of work to continuous-time models.

Additionally, the current simulation studies did not consider person-specific differences in dynamics or lag structures. Given this, future extensions of the DTVEM model may be able to include random effects within the GAMM framework to model person-specific differences such as individual differences in intercept, as well as the issue of lag identification in discrete data. Although the number of parameters estimated were large within simulations (i.e. 60 lag parameters in Simulation 3), the current simulation studies were limited to bivariate VAR models with two dependent variables. As such, our conclusions are limited in generalizability to particular kinds of VAR models. Future work should add simulation by increasing the number of independent and dependent variables considered, especially given that intensive longitudinal datasets often are collected with a large number of variables. Doing this would be especially important given the increased popularity of network psychometric models, and extensions of these models to incorporate VAR-based lag structures within network analysis (Epskamp et al., 2018; Marsman et al., 2018). Moreover, as the inclusion of latent variables is currently not feasible within the GAMM framework, we have restricted our attention to a VAR(p), namely, a manifest variable framework. An alternative framework focuses on evaluations of lag structures in models involving latent variables (Browne & Nesselroade, 2005; Molenaar, 1985; Molenaar & Nesselroade, 2001; Zhang & Browne, 2010). Given that the optimal lag structures for the same data set may differ depending on whether lag relations are assumed among manifest variables or as exerted through latent factors, one important extension to the present work is to extend the proposed methods to facilitate the diagnosis of lag structures in models involving latent variables. Possible ways to accommodate latent variables may include the incorporation of a third stage in DTVEM to estimate latent variable scores that are then used within the exploratory GAMM stage, followed by the specification of state-space models in the confirmatory stage that can accommodate latent variables.

In summary, current exploratory methods to identify optimal times in which processes predict one another are inadequate with multi-subject data and data with substantial missingness. Based on the inadequacies of present exploratory methods, we set out to design an exploratory model that would address these concerns. The principal utility of this tool is to provide a user-accessible manner to uncover and estimate optimal lag orders in a state-space framework. Based on the performance in the simulation studies and the utility demonstrated in the empirical example, DTVEM appears to fill these needs.

Table 4.

Summary of DTVEM Confirmatory Stage Estimates of Missing Data

| Simulation | T | %M | Term | Simulated θ | Mean | RMSE | Bias | SD of | Mean of SE | RDSE | Coverage of 95% CI | Power |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Multivariate | 170 | 72 | AR(1) | 0.300 | 0.300 | 0.013 | 0.000 | 0.013 | 0.011 | −0.157 | 0.924 | 1.000 |

| Multivariate | 170 | 72 | AR(3) | 0.100 | 0.102 | 0.015 | −0.012 | 0.014 | 0.013 | −0.076 | 0.911 | 0.948 |

| Multivariate | 170 | 72 | AR(4) | 0.100 | 0.102 | 0.019 | −0.012 | 0.019 | 0.013 | −0.291 | 0.911 | 0.988 |

| Multivariate | 170 | 72 | AR(12) | 0.100 | 0.100 | 0.015 | 0.000 | 0.015 | 0.014 | −0.074 | 0.935 | 0.986 |

| Multivariate | 170 | 72 | AR(14) | 0.100 | 0.099 | 0.015 | 0.001 | 0.015 | 0.014 | −0.101 | 0.936 | 1.000 |

| Multivariate | 170 | 72 | AR(1) | 0.100 | 0.101 | 0.013 | −0.001 | 0.013 | 0.012 | −0.048 | 0.944 | 1.000 |

| Multivariate | 170 | 72 | AR(3) | 0.100 | 0.098 | 0.013 | 0.002 | 0.013 | 0.013 | 0.019 | 0.950 | 0.606 |

| Multivariate | 170 | 72 | CR(8) | 0.200 | 0.202 | 0.017 | −0.002 | 0.017 | 0.015 | −0.110 | 0.930 | 1.000 |

| Multivariate | 170 | 72 | CR(12) | −0.200 | −0.201 | 0.018 | 0.001 | 0.018 | 0.015 | −0.142 | 0.950 | 1.000 |

| Multivariate | 170 | 72 | 2.250 | 2.243 | 0.033 | 0.007 | 0.032 | 0.031 | −0.032 | 0.938 | 1.000 | |

| Multivariate | 170 | 72 | 2.250 | 2.247 | 0.037 | 0.003 | 0.038 | 0.035 | −0.080 | 0.960 | 1.000 |