Abstract

Burn care has remained an active research area over the past few decades, and important advances are still needed to enable more effective patient stabilization and to reduce mortality. Burn wound assessment, an important task in surgical management, depends largely on accurate estimates of burn area and burn depth. Automated quantification of these parameters can reduce the errors in estimates conventionally made by clinicians. Automated burn area calculation is an image segmentation task. In this paper, a new segmentation method for burn wound images is proposed. The method uses a tensor decomposition of colour images, from which effective texture features can be extracted for classification. Experimental results showed that the proposed method outperforms other methods in terms of both segmentation accuracy and computational speed.

Introduction

Burns are among the most life-threatening traumatic injuries1. Severe burns constitute a major public health crisis with a considerable health-economic impact, as they can cause substantial morbidity and mortality through infection, sepsis and organ failure; reported mortality rates range between 1.4% and 18% (maximum 34%)2.

The World Health Organization guidelines for burn treatment state that there must be at least one bed in a burn unit for every 500,000 inhabitants3. A burn unit covers a wide geographic area, and a burn patient is usually first diagnosed by clinicians who are not burn specialists. In Sweden, for example, there are only two burn centres in the whole country: one located in Linköping and the other in Uppsala.

Burns are categorized into several types by depth: 1st degree, superficial partial-thickness, deep partial-thickness, and full-thickness burns4. When calculating the percentage of total body surface area (%TBSA) burnt, only superficial partial-thickness burns and deeper are included in the area calculation, while 1st degree burns (with intact epidermis) are excluded. To provide the right clinical treatment to a burn patient, the %TBSA of the burn must be calculated, as it dictates the early fluid resuscitation. The actual %TBSA is also useful for later surgical planning and for estimating mortality using the revised Baux score, which has proven to be a reliable predictor of both mortality and morbidity even though the %TBSA used is estimated through clinical means5. Given the limited burn treatment facilities over large geographic areas, especially in middle- and low-income countries, and the importance of burn area calculation, the need for automated methods for accurate and objective assessment of burn parameters has been increasingly recognized in burn research.

This paper proposes a new method for burn-wound image segmentation using a tensor decomposition that can extract effective luminance-colour texture features for classifying burn and non-burn areas. The tensor decomposition is a generalization of PCA. Both PCA and ICA are still actively applied to image segmentation6,7. The remainder of this paper is organized as follows. Section 2 reviews works related to the proposed method. Section 3 describes the materials and models of the proposed method. Section 4 presents the experimental results and discussion. Finally, Section 5 summarizes the research findings and suggests issues for future research.

Related Works

Segmentation is one of the major research areas in image processing and computer vision. The goal of image segmentation is to extract the region of interest from an image that also contains a background and other objects of no interest. Many techniques have been developed for segmentation, such as edge detection, histogram thresholding, region growing, active contours (the snake algorithm), clustering, and machine-learning based methods, as reviewed in8,9. These techniques extract characteristics that describe the image, such as luminance, brightness, colour, texture, and shape9,10. Combining these properties, where applicable, is expected to provide better segmentation results than using only one or a few of them.

Deng and Manjunath11 proposed the JSEG method, which separates the segmentation process into two stages: colour quantization and spatial segmentation. In the first stage, colours in the image are quantized to several representative classes that can be used to differentiate regions in the image. This quantization is performed in the colour space without considering the spatial distributions of the colours. The image pixel values are then replaced by their corresponding colour class labels, thus forming a class-map of the image. The class-map can be viewed as a special kind of texture composition. In the second stage, spatial segmentation is performed directly on this class-map without considering the corresponding pixel colour similarity. Cucchiara et al.12 developed a segmentation method for extracting skin lesions based on a recursive version of the fuzzy c-means algorithm13 (FCM) for 2D colour histograms constructed by the principal component analysis (PCA) of the CIELab colour space.

Acha et al.14 worked with the CIELuv colour space, extracting colour-texture information from a 5 × 5 pixel area around a point that the user selects with the mouse. These features are combined, and the Euclidean distance between the selected area and the other areas is calculated to separate burn from non-burn regions using Otsu’s thresholding method15. Gomez et al.16 developed an algorithm based on the CIELab colour space and independent histogram pursuit (IHP) to segment skin lesion images. The IHP consists of two steps: first, the algorithm finds a combination of spectral bands that enhances the contrast between healthy skin and lesion; second, it estimates the remaining combinations, which enhance subtle structures of the image. The classification is done by k-means cluster analysis to identify the skin lesion in an image.

Papazoglou et al.17 proposed a wound segmentation algorithm that requires manual input and combines the RGB and CIELab colour spaces as well as threshold-based and pixel-based colour-comparison segmentation methods. Cavalcanti et al.18 used independent component analysis19,20 (ICA) to locate skin lesions in an image and to separate them from the healthy skin. Given the ICA results, an initial lesion localization is obtained; the lesion boundary is then determined using the level-set method with post-processing steps. Wantanajittikul et al.21 first used the Cr values of the YCbCr colour space to separate the skin from the background, then used the u* and v* chromatic sets of the CIELuv colour space to capture the burnt region, and finally applied the FCM to separate the burn wound region from the healthy skin. Loizou et al.22 applied the snake algorithm23 for image segmentation to extract texture and geometrical features for evaluating the wound healing process.

Materials and Proposed Method

Ethics

This study was approved by the Regional Ethics Committee in Linköping, Sweden (DNr 2012/31/31), and conducted in compliance with the “Ethical principles for medical research involving human subjects” of the Helsinki Declaration. Guardians for research subjects for this study, which was undertaken in children, were provided a consent form describing this study and providing sufficient information for subjects to make an informed decision about their child’s participation in this study. The consent form was approved by the Regional Ethics Committee in Linköping for the study. Before a subject underwent any study procedure, an informed consent discussion was conducted and written informed consent was obtained from the legal guardians attending at the visit.

Data acquisition

All RGB images of burn patients were acquired at the Burn Centre of the Linköping University Hospital, Linköping, Sweden. The images were taken in the JPEG format using the camera of a Oneplus2 smart-phone: 13 Mega-pixel, 6 lenses to avoid distortion and colour aberration, OIS, Laser Focus, Dual-LED flash and f/2.0 aperture. The camera was held approximately 30–50 cm from the burn wound, and the flash was not used. The patients lay on a bed covered by a green sheet.

Colour model

The green and blue components are represented by a* and b* CIELab negative values, whereas the skin and the burn wound are represented by positive values. The purpose of a colour model is to facilitate the specification of colours in some standard, generally accepted way. In essence, a colour model is a specification of a coordinate system and a subspace within that system, where each colour is represented by a single point24. There exist several colour models for different functions: (i) RGB model, (ii) CMY and CMYK models, (iii) HSI model that decouples the intensity component from the colour-carrying information (hue and saturation)24, (iv) YCbCr, CIELab, CIELuv and CIELch, where their components represent the image luminance and chromatic scales separately.

The CIELab colour model is the most complete one specified by the International Commission on Illumination. CIELab separates the luminance and the chromatic information of an image using three coordinates: the L* coordinate describes the luminance (L* = 0 corresponds to black and L* = 100 to white), while the a* and b* coordinates represent the pure colours from green to red (a* = −127 corresponds to green, a* = +128 to red) and from blue to yellow (b* = −127 corresponds to blue, b* = +128 to yellow), respectively. Another important characteristic of this model is its uniformity: the Euclidean distance between two colours corresponds to the perceptual difference detected by the human visual system25–27. For these reasons, the CIELab colour model was chosen in this study.
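The conversion from RGB to CIELab can be illustrated with a minimal sketch. It assumes 8-bit sRGB input and the D65 reference white (the Results section mentions a standard D65 illuminant); the function names `rgb_to_lab` and `delta_e` are hypothetical and not taken from the study, whose implementation is not specified.

```python
import math

# D65 reference white (2-degree observer). Assumption: the input is sRGB.
WHITE_D65 = (0.95047, 1.0, 1.08883)


def srgb_to_linear(c):
    """Undo the sRGB gamma for a channel value in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def lab_f(t):
    """Piecewise cube-root function used by the CIELab definition."""
    delta = 6.0 / 29.0
    if t > delta ** 3:
        return t ** (1.0 / 3.0)
    return t / (3.0 * delta ** 2) + 4.0 / 29.0


def rgb_to_lab(r, g, b):
    """Convert 8-bit sRGB values to CIELab (L*, a*, b*)."""
    rl, gl, bl = (srgb_to_linear(v / 255.0) for v in (r, g, b))
    # Linear sRGB -> CIE XYZ (D65)
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl
    fx, fy, fz = (lab_f(v / w) for v, w in zip((x, y, z), WHITE_D65))
    return 116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz)


def delta_e(lab1, lab2):
    """Euclidean distance between two CIELab colours."""
    return math.dist(lab1, lab2)
```

With this sketch, a pure green pixel yields a negative a* value and a pure blue pixel a negative b* value, which is the property used to separate a green sheet from skin and wound.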

Furthermore, the L*, a* and b* images were filtered in the frequency domain using the Gaussian low-pass filter function24. The best cut-off frequency of each filter, σ0, which keeps 99% of the power spectrum of the zero-padded discrete Fourier transform of the corresponding CIELab coordinate, was estimated with the bisection method28.
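The filtering step can be sketched as follows. This is one possible reading, not the study's implementation: it assumes that "keeping 99% of the power spectrum" means that the circle of radius σ0 around the zero frequency encloses 99% of the total power of the zero-padded transform, and that the image is padded to twice its size. The function names are hypothetical.

```python
import numpy as np


def padded_spectrum(channel):
    """Centred DFT of the channel zero-padded to twice its size, plus the
    distance of every frequency sample from the zero frequency."""
    m, n = channel.shape
    p, q = 2 * m, 2 * n
    spectrum = np.fft.fftshift(np.fft.fft2(channel, s=(p, q)))
    u = np.arange(p) - p // 2
    v = np.arange(q) - q // 2
    dist = np.hypot(u[:, None], v[None, :])
    return spectrum, dist


def cutoff_by_bisection(channel, keep=0.99, tol=1e-3):
    """Smallest radius (within tol) enclosing `keep` of the total power."""
    spectrum, dist = padded_spectrum(channel)
    power = np.abs(spectrum) ** 2
    total = power.sum()
    lo, hi = 0.0, float(dist.max())
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if power[dist <= mid].sum() / total < keep:
            lo = mid   # too little power enclosed: enlarge the radius
        else:
            hi = mid   # enough power enclosed: try a smaller radius
    return hi


def gaussian_lowpass(channel, keep=0.99):
    """Filter one CIELab channel with H = exp(-D^2 / (2 * sigma0^2))."""
    sigma0 = cutoff_by_bisection(channel, keep)
    spectrum, dist = padded_spectrum(channel)
    transfer = np.exp(-dist ** 2 / (2.0 * sigma0 ** 2))
    filtered = np.fft.ifft2(np.fft.ifftshift(spectrum * transfer)).real
    m, n = channel.shape
    return filtered[:m, :n], sigma0   # crop the padding away
```

The bisection works because the enclosed power fraction grows monotonically with the radius, so the search interval can be halved at every step.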

Tensor decomposition

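A tensor is a multidimensional array. More formally, an N-way or Nth-order tensor is an element of the tensor product of N vector spaces, each of which has its own coordinate system29–31. There are two main techniques for tensor decomposition: the CANDECOMP/PARAFAC decomposition and the Tucker decomposition. The CANDECOMP/PARAFAC decomposition, or CP for short, factorizes a tensor into a sum of rank-one components. For example, a third-order tensor $\mathcal{X} \in \mathbb{R}^{I \times J \times K}$ is decomposed as:

$$\mathcal{X} = \sum_{r=1}^{R} \mathbf{a}_r \circ \mathbf{b}_r \circ \mathbf{c}_r + \mathcal{E} \tag{1}$$

or element-wise:

$$x_{ijk} = \sum_{r=1}^{R} a_{ir}\, b_{jr}\, c_{kr} + e_{ijk} \tag{2}$$

where $\mathcal{E}$ and $e_{ijk}$ represent the noise or the error, $R$ represents the number of rank-one components which decompose the tensor $\mathcal{X}$, $\mathbf{a}_r \in \mathbb{R}^{I}$, $\mathbf{b}_r \in \mathbb{R}^{J}$ and $\mathbf{c}_r \in \mathbb{R}^{K}$ for $r = 1, \ldots, R$ are the rank-one vectors which compose the factor matrices $\mathbf{A}$, $\mathbf{B}$ and $\mathbf{C}$ respectively, and the symbol “$\circ$” indicates the vector outer product.

It is often useful to assume that the columns of the factor matrices are normalized to length one, with the weights absorbed into a vector $\boldsymbol{\lambda} \in \mathbb{R}^{R}$:

$$\mathcal{X} = \sum_{r=1}^{R} \lambda_r\, \mathbf{a}_r \circ \mathbf{b}_r \circ \mathbf{c}_r + \mathcal{E} = \mathcal{D} \times_1 \mathbf{A} \times_2 \mathbf{B} \times_3 \mathbf{C} + \mathcal{E} \tag{3}$$

where $\mathcal{D} \in \mathbb{R}^{R \times R \times R}$ is the superdiagonal core tensor with entries $d_{rrr} = \lambda_r$, and the symbol “×n” indicates the n-mode product between the core tensor and the factor matrices.

On the other hand, the Tucker decomposition decomposes a tensor into a core tensor multiplied (or transformed) by a matrix along each mode. For example, the third-order tensor $\mathcal{X}$ is decomposed as:

$$\mathcal{X} = \mathcal{G} \times_1 \mathbf{A} \times_2 \mathbf{B} \times_3 \mathbf{C} + \mathcal{E} \tag{4}$$

where $\mathcal{G} \in \mathbb{R}^{P \times Q \times R}$ is the core tensor, whereas $\mathbf{A} \in \mathbb{R}^{I \times P}$, $\mathbf{B} \in \mathbb{R}^{J \times Q}$ and $\mathbf{C} \in \mathbb{R}^{K \times R}$ are the factor matrices considered as the principal components in each mode. Equation (4) can be written element-wise as:

$$x_{ijk} = \sum_{p=1}^{P} \sum_{q=1}^{Q} \sum_{r=1}^{R} g_{pqr}\, a_{ip}\, b_{jq}\, c_{kr} + e_{ijk}$$

where $i = 1, \ldots, I$, $j = 1, \ldots, J$, $k = 1, \ldots, K$, $p = 1, \ldots, P$, $q = 1, \ldots, Q$, and $r = 1, \ldots, R$.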
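To make the n-mode product and the Tucker model concrete, the following sketch computes a Tucker decomposition with the truncated higher-order SVD (HOSVD). HOSVD is used here only as a simple, well-known way to obtain a core tensor and orthonormal factor matrices; the text does not state which algorithm was used to fit the Tucker3 model, so this choice and all function names are assumptions.

```python
import numpy as np


def unfold(tensor, mode):
    """Mode-n unfolding: the mode-n fibres become the columns of a matrix."""
    return np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1)


def mode_product(tensor, matrix, mode):
    """n-mode product: multiply every mode-n fibre of the tensor by matrix."""
    moved = np.moveaxis(tensor, mode, -1)           # mode n becomes the last axis
    return np.moveaxis(moved @ matrix.T, -1, mode)  # and is moved back afterwards


def hosvd(tensor, ranks):
    """Truncated HOSVD: returns (core, factors) for the given core size."""
    factors = []
    for mode, rank in enumerate(ranks):
        u, _, _ = np.linalg.svd(unfold(tensor, mode), full_matrices=False)
        factors.append(u[:, :rank])                 # leading left singular vectors
    core = tensor
    for mode, u in enumerate(factors):
        core = mode_product(core, u.T, mode)        # project onto each factor
    return core, factors


def reconstruct(core, factors):
    """Estimated tensor: core x_1 U1 x_2 U2 x_3 U3."""
    estimate = core
    for mode, u in enumerate(factors):
        estimate = mode_product(estimate, u, mode)
    return estimate
```

Subtracting the reconstruction from the input gives the error tensor; choosing a core smaller than the input is what yields compression.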

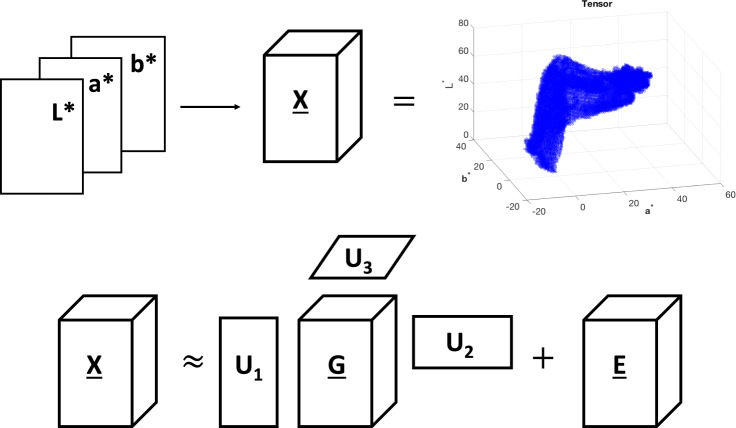

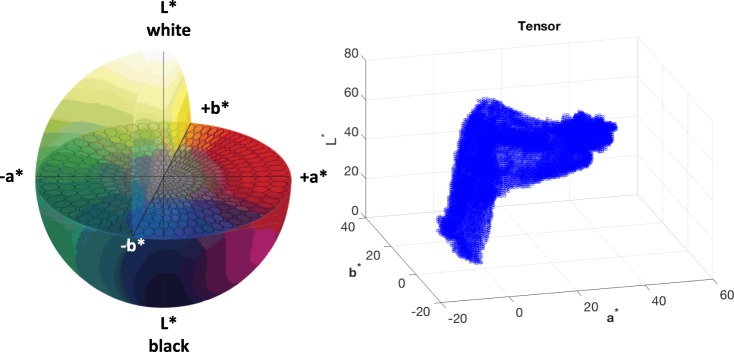

Both decompositions are a form of higher-order principal component analysis30,31. Equation (3) represents the decomposition as a multi-linear product of a core tensor and factor matrices, and it is often used in signal processing. Equation (4) builds the core tensor with a different dimension for each mode, and it is often used for data compression31. For the purpose of data compression in this study, the Tucker decomposition, known as the Tucker3 model30,31, is preferred to the CP. An RGB image can be converted into the CIELab space and expressed as a third-order tensor of size I × J × K, where I, J and K are the numbers of grey-levels in the L*, a* and b* images, respectively. Figure 1 graphically shows the Tucker decomposition of the CIELab tensor.

| 5 |

| 6 |

Figure 1.

Graphical representation of the third-order CIELab tensor and its Tucker decomposition.

Equations (5) and (6) can be element-wise written as:

| 7 |

| 8 |

where is the CIELab colour tensor, is the tensor to estimate, is the tensor error, U1, U2 and U3 are the factor matrices calculated from the a*, b* and L* mode of the tensor respectively, whereas i, j and k are the coordinates and p, q and r are the core tensor ones.

Setting as the CIELab vector module with coordinate , two tensors, and , are built in order to mix the image luminance and colour as follows:

| 9 |

| 10 |

where , , and . The tensors and in Equations (9) and (10), contain the normalized values of the addition and difference of the colour sets in proportion to the luminance, respectively. The values set to 0 indicate the background: a* and b* negative values corresponds to the green and blue components respectively; whereas defines the dark regions where δ is a parameter arbitrarily chosen, in this study .

Finally, the estimated and are re-transformed into images Yd and Ys, the chromaticity sources of the I image, where the texture features are extracted.

Tensor rank

Let $\mathcal{X}$ be an Nth-order tensor of size $I_1 \times I_2 \times \cdots \times I_N$. Then the n-rank of the tensor $\mathcal{X}$, $\mathrm{rank}_n(\mathcal{X})$, is the dimension of the vector space spanned by the mode-n fibres. Bro et al.29,32 developed a technique, called core consistency diagnostics (CORCONDIA), for estimating an optimal number R of rank-one tensors, which produces the factor matrices for the CP decomposition. Unfortunately, there is no such algorithm for the Tucker decomposition, so the core tensor dimensions have to be chosen on reasonable grounds.
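The n-rank definition can be checked numerically: the mode-n fibres are the columns of the mode-n unfolding, so the n-rank is the matrix rank of that unfolding. The sketch below is illustrative only, and the function names are hypothetical.

```python
import numpy as np


def n_rank(tensor, mode):
    """Rank of the mode-n unfolding, i.e. the dimension of the space
    spanned by the mode-n fibres."""
    unfolded = np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1)
    return int(np.linalg.matrix_rank(unfolded))


def multilinear_rank(tensor):
    """Tuple of n-ranks, one per mode."""
    return tuple(n_rank(tensor, mode) for mode in range(tensor.ndim))
```

A tensor built as the outer product of three vectors has n-rank 1 in every mode, whereas a generic dense tensor has full n-rank in every mode.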

In this study, the three-mode tensor is the CIELab colour space. Its upper-limit rank is rank, when all the luminance and chroma values define the image, whereas its lower limit is rank, when just one luminance and chroma grey-level defines the image. An intuitive choice for the rank of the CIELab tensor is the number of grey levels of each CIELab component that defines the image I:

| 11 |

As expressed in Equations (9) and (10), the background values are set to 0, so there is a further reduction: the rank does not include the background grey-levels of the a* and b* colour sets or the very dark regions of the L* set.

Feature extraction

In this study, the grey-level co-occurrence matrix (GLCM)9 was applied to the two sources of the decomposed luminance and colour components to extract the contrast, homogeneity, correlation and energy. These features were calculated with a 5 × 5 mask and offsets of 0°, 45°, 90° and 135°. The means of the luminance-colour images were also included. In total, 10 values of the 5 features were extracted, 5 for each luminance-colour source. These feature vectors were used for cluster analysis to identify burn regions.
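The feature extraction for a single 5 × 5 window can be sketched as below. Several details are assumptions, since the text does not specify them: a pixel distance of 1, a symmetric normalized GLCM, the homogeneity form 1/(1 + |i − j|), energy as the sum of squared entries, and averaging of the four directional values. The function names are hypothetical.

```python
import math
import numpy as np

# (row, column) offsets at distance 1 for 0, 45, 90 and 135 degrees.
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1)]


def glcm(patch, levels, offset):
    """Symmetric, normalized co-occurrence matrix of an integer patch."""
    dr, dc = offset
    rows, cols = patch.shape
    counts = np.zeros((levels, levels))
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[patch[r, c], patch[r2, c2]] += 1
    counts = counts + counts.T            # count each pair in both directions
    return counts / counts.sum()


def texture_features(p):
    """Contrast, homogeneity, correlation and energy of a normalized GLCM."""
    i, j = np.indices(p.shape)
    contrast = float(np.sum(p * (i - j) ** 2))
    homogeneity = float(np.sum(p / (1.0 + np.abs(i - j))))
    energy = float(np.sum(p ** 2))
    mu_i, mu_j = np.sum(i * p), np.sum(j * p)
    sd_i = math.sqrt(np.sum(p * (i - mu_i) ** 2))
    sd_j = math.sqrt(np.sum(p * (j - mu_j) ** 2))
    if sd_i * sd_j > 0:
        correlation = float(np.sum(p * (i - mu_i) * (j - mu_j)) / (sd_i * sd_j))
    else:
        correlation = float("nan")        # undefined for a constant patch
    return contrast, homogeneity, correlation, energy


def patch_feature_vector(patch, levels):
    """Mean grey level plus the four GLCM features averaged over offsets."""
    feats = np.array([texture_features(glcm(patch, levels, off)) for off in OFFSETS])
    return [float(patch.mean())] + [float(v) for v in feats.mean(axis=0)]
```

Applying `patch_feature_vector` to the same window in both luminance-colour sources would give the 10 values per pixel described above.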

Cluster analysis

The cluster analysis was carried out using the FCM algorithm. Since the number of clusters of colours and their shades at different luminance levels is unknown, a high number of clusters (20) was selected for the FCM analysis, and a cluster merging process was then performed to distinguish the burn from the healthy skin and background regions.
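For reference, a compact sketch of the standard fuzzy c-means iteration is given below. The fuzzifier m = 2, the random initialization, and the stopping rule are assumptions, as the text does not report them; in the study the number of clusters would be 20 and each row of `data` would be a 10-dimensional feature vector.

```python
import numpy as np


def fuzzy_c_means(data, n_clusters, m=2.0, n_iter=200, tol=1e-5, seed=0):
    """Standard FCM. Returns (centres, memberships); memberships has one
    row per sample and one column per cluster, and each row sums to 1."""
    rng = np.random.default_rng(seed)
    u = rng.random((data.shape[0], n_clusters))
    u /= u.sum(axis=1, keepdims=True)
    for _ in range(n_iter):
        w = u ** m
        # Cluster centres: membership-weighted means of the samples.
        centres = (w.T @ data) / w.sum(axis=0)[:, None]
        dist = np.linalg.norm(data[:, None, :] - centres[None, :, :], axis=2)
        dist = np.fmax(dist, 1e-12)                 # avoid division by zero
        inv = dist ** (-2.0 / (m - 1.0))
        new_u = inv / inv.sum(axis=1, keepdims=True)
        converged = np.abs(new_u - u).max() < tol
        u = new_u
        if converged:
            break
    return centres, u
```

A hard label per sample can be obtained with `memberships.argmax(axis=1)` before the merging step.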

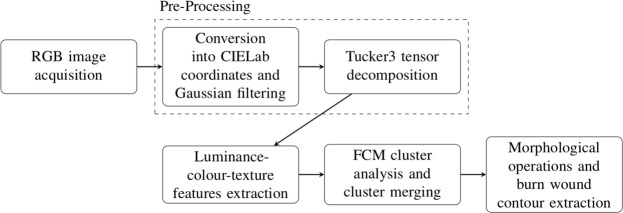

Figure 2 illustrates the steps of the proposed segmentation method, which works as follows. Given an RGB burn image as the input, the image is converted into the CIELab space and filtered with a Gaussian filter, and two image components are computed by the Tucker3 decomposition for colour-texture feature extraction. These features are the inputs of the fuzzy c-means algorithm, which divides the image into 20 clusters. The clusters are then merged to obtain three main regions of interest: burn wound, skin, and background. Once these 3 regions are obtained, the closing morphological operation is applied to obtain the burn-wound contour. The user can optionally apply hole filling after the closing operation.

Figure 2.

Flowchart of the proposed colour-texture segmentation of burn wounds using tensor decomposition.

Results and Discussion

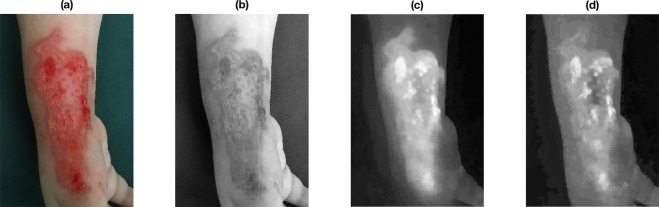

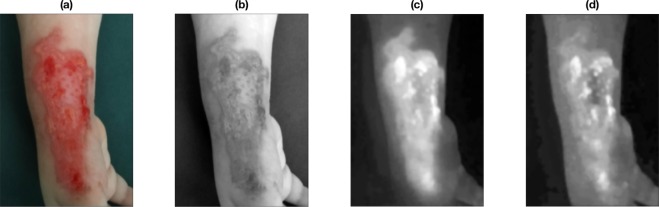

Figure 3 shows a burn image of pixels of a paediatric patient with a burn wound located on the right hand assessed 96 hours after the burn injury. The acquired RGB image was converted into the CIELab colour space with the standard D65 illuminant, and its components were filtered in the frequency domain with Gaussian filters, keeping 99% of the power spectrum of their zero-padded discrete Fourier transforms (see Fig. 4). Figures 3 and 4 show the effect of Gaussian filtering in reducing the reflection and producing a homogeneous background. This study does not consider the effect of illumination, which will be an issue for future investigation. Moreover, Fig. 5 shows the CIELab colour space and the CIELab tensor for the image in Fig. 4(a).

Figure 3.

Burn wound colour image (a) and its CIELab coordinates: L* (b), a* (c) and b* (d) respectively.

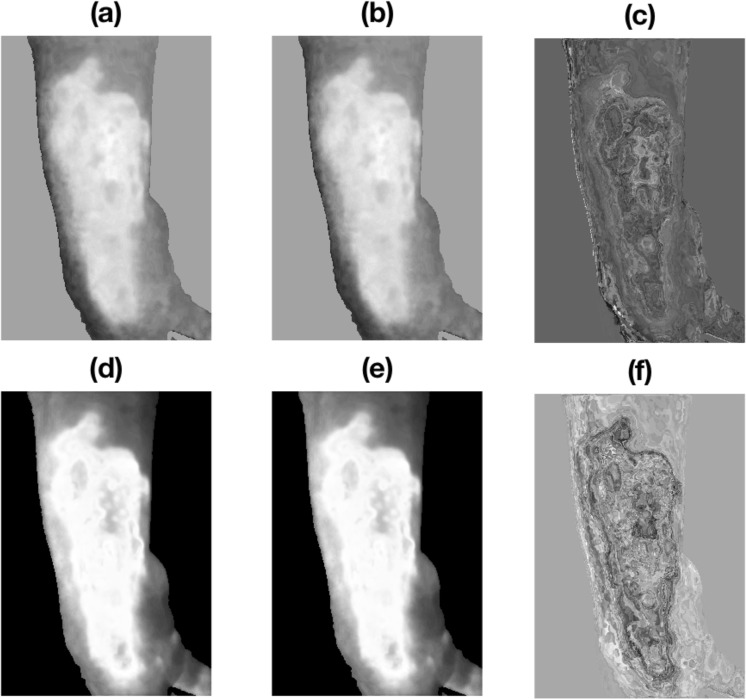

Figure 4.

Burn wound colour image (a) and its CIELab coordinates (b–d) after Gaussian filtering in the frequency domain.

Figure 5.

CIELab colour space and the CIELab tensor for the image in Fig. 4(a).

Tensors and were constructed as explained in Equations (9) and (10). The tensor estimations of and were obtained by the Tucker3 tensor decomposition technique. The tensor rank is the amount of a*, b* and L* grey-levels: . Since there is a background (the green blanket) and some dark areas (left side) in the image, the core tensors’ rank is reduced by using Equations (9) and (10) to rank. It should be pointed out that the decomposition was carried out on the number of unique combinations of luminance and chromatic values instead of on the number of pixels in the original image. In this case, the decomposition was performed on instead of pixels, resulting in a data reduction of about 98.3% without losing the image information. Finally, the and values were re-transformed into images Yd and Ys with the same size as the original (see Fig. 6).
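The idea of working on unique luminance-chroma combinations rather than on pixels can be sketched as follows. This is an illustration under stated assumptions, not the study's code: pixels are given as quantized (L*, a*, b*) tuples, and the hypothetical helper names are introduced only for this example.

```python
def unique_colour_table(pixels):
    """Return (colours, index): the unique (L*, a*, b*) triplets in order of
    first appearance, and for every pixel its position in that list."""
    table = {}
    index = []
    for pixel in pixels:
        index.append(table.setdefault(pixel, len(table)))
    return list(table), index


def data_reduction(pixels):
    """Fraction of entries saved by processing unique triplets only."""
    colours, _ = unique_colour_table(pixels)
    return 1.0 - len(colours) / len(pixels)


def retransform(values_per_colour, index):
    """Map one processed value per unique triplet back to every pixel."""
    return [values_per_colour[k] for k in index]
```

Because the index keeps track of which triplet each pixel had, results computed once per unique triplet can be mapped back to an image of the original size.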

Figure 6.

Re-transformation into images of the tensors (a) and (d), their estimations (b), (e) and the respective errors (c), (f) after Tucker3 tensor decompositions using rank.

Figure 6 illustrates that the tensor decompositions can enhance the contrast of tensors and with the estimated and after the error eliminations and , respectively. Overall, Figs 4 and 6 show how Gaussian filtering and tensor decomposition can remove errors and/or artefacts from the image. Based on these estimated tensors, one statistical (mean) and four GLCM-based texture (contrast, homogeneity, correlation, and energy) features were extracted. These re-transformed images produce a data reduction of about 25 times, from to .

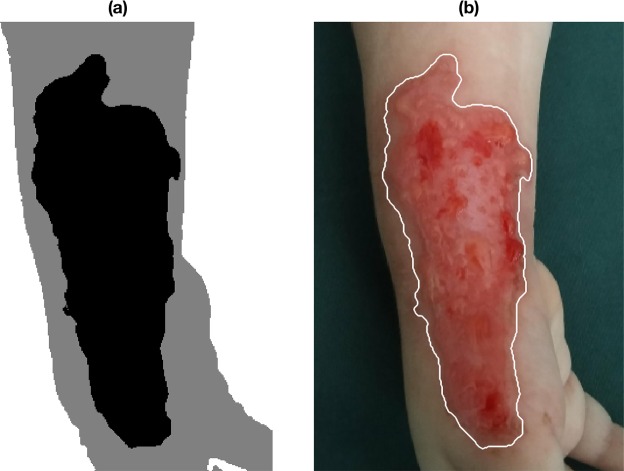

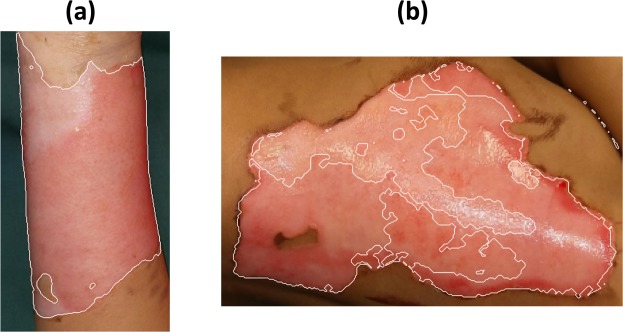

These features were used in the FCM analysis, which initially grouped the data into 20 different clusters that were then manually merged into 3 clusters: burn wound, healthy skin and background (see Fig. 7(a)). Fig. 7(b) shows the final image segmentation result with the burn contour superimposed over the original image. Figs 7(a) and 7(b) show the segmentation result after a morphological closing operation with a disk-shaped structuring element of radius 2. Moreover, the user can choose whether to fill any remaining holes with another morphological operation.
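Morphological closing (a dilation followed by an erosion) on a binary burn mask can be sketched as below. The disk is taken here as all pixels within Euclidean distance 2 of the centre, and the erosion treats pixels outside the image as foreground so that the mask is not shrunk at the border; both choices are assumptions, as toolboxes define disk elements and border handling differently.

```python
import numpy as np


def disk(radius):
    """Boolean disk-shaped structuring element."""
    y, x = np.ogrid[-radius:radius + 1, -radius:radius + 1]
    return (x * x + y * y) <= radius * radius


def dilate(mask, selem):
    """Binary dilation: a pixel is set if any neighbour under selem is set."""
    r = selem.shape[0] // 2
    padded = np.pad(mask, r, constant_values=False)
    out = np.zeros_like(mask)
    for dy, dx in zip(*np.nonzero(selem)):
        out |= padded[dy:dy + mask.shape[0], dx:dx + mask.shape[1]]
    return out


def erode(mask, selem):
    """Binary erosion: a pixel stays set only if all neighbours are set."""
    r = selem.shape[0] // 2
    padded = np.pad(mask, r, constant_values=True)
    out = np.ones_like(mask)
    for dy, dx in zip(*np.nonzero(selem)):
        out &= padded[dy:dy + mask.shape[0], dx:dx + mask.shape[1]]
    return out


def closing(mask, selem):
    """Closing fills gaps and holes smaller than the structuring element."""
    return erode(dilate(mask, selem), selem)
```

Closing never removes foreground pixels, so it smooths the wound contour and fills small holes without shrinking the detected region.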

Figure 7.

Result of merging of FCM clusters with the burn wound marked in black, the normal skin in grey and the background in white (a), and final segmentation result with the burn contour superimposed over the original image (b).

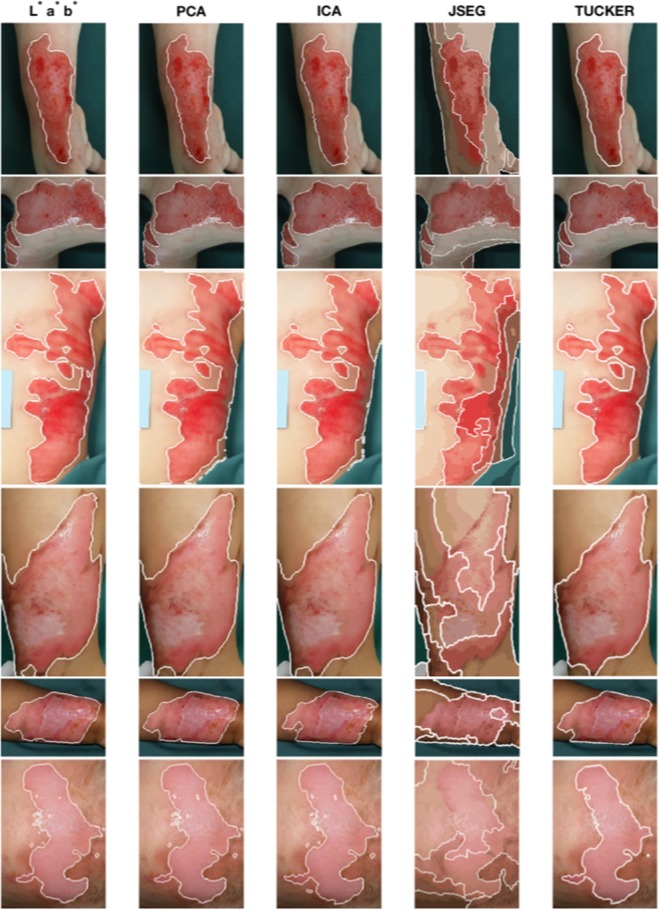

In order to compare the proposed method with others, image segmentation results were obtained using four other techniques: Gaussian pre-filtering, PCA, ICA20 and the JSEG11. Figure 8 shows the segmentation results for six images, in six rows, obtained from the proposed method and the four other methods, which are discussed as follows. In all cases, the JSEG can only distinguish the human body from the background but not the burn wound from the healthy skin; it is therefore not included in the following comparisons.

Figure 8.

Segmentation results for six different burn wound images: the 1st column shows the segmentation results using the CIELab coordinates as input of the FCM algorithm with 20 clusters; the 2nd and 3rd column show the segmentation result using the PCA and ICA sources as input of the FCM algorithm with 20 clusters respectively; the 4th column shows the segmentation results using the JSEG technique by Deng and Manjunath; in the end, the 5th column shows the segmentation results using the proposed method.

For the results shown in the first row, the original image is the one discussed previously with size . The CIELab and PCA methods present under-segmented areas along the burn wound on the left side, and they took 375 and 1527 seconds for the segmentation, respectively. The ICA result is comparable to that of the proposed method, but it required 2286 seconds for the segmentation. The proposed method successfully detects the burn wound contour after 297 seconds and using as Tucker tensor core rank: .

Results in the second row involve a image, which shows three burn wounds 96 hours after injury, located on the right side of the chest and on the right shoulder of a patient. The CIELab segmentation presents an over-segmentation along the upper side of the chest wound and required 493 seconds for the segmentation task. The PCA and ICA segmentations show over-segmented results along the right side of the bottom wound and took 1178 and 1705 seconds, respectively. The proposed method excludes the central white spot, caused by a specular reflection, from the segmentation and correctly identifies the burn wound contours in 358 seconds with Tucker tensor core rank: .

The third row shows segmentation results for a image of a burn wound after 17 hours of injury, located on the left side of the chest and lower left flank of a patient. CIELab segmentation resulted in over-segmentation as it joins a smaller burnt area separated by uninjured skin with the rest of the burn. At the same time, the CIELab segmentation also resulted in under-segmentation as it excluded a bit of the burnt areas on the left flank. The time taken for the CIELab is 239 seconds. The PCA and ICA present over-segmented results along the right side, and required 1952 and 2348 seconds for the task, respectively. The proposed method detects the burn wound contour well with a minor under-segmentation on the upper-right side of the injury in 214 seconds with Tucker tensor core rank: .

The fourth row shows segmentation results for an burn image 96 hours after injury, located next to the right ankle of a patient. Results obtained from the CIELab, PCA and ICA are similar, with over-segmentation along the left side of the image including some normal skin. These methods took 208, 800, and 3275 seconds, respectively. The proposed method detects the burn wound contour well in 118 seconds with Tucker tensor core rank: .

The fifth row shows the segmentation results for a image of a burn wound after 312 hours of injury, located on the left forearm of a patient. The CIELab and PCA results show some minor over-segmentation on the left side, requiring 223 and 1430 seconds for the segmentation, respectively. The ICA extracted the burn area well, but it required 3653 seconds for the task. The segmentation obtained from the proposed method is similar to the CIELab and PCA methods, but only took 115 seconds for the task and Tucker tensor core rank: .

The sixth row presents results for a burn image 24 hours after injury, located on the forehead of a patient. The CIELab, PCA and ICA segmentations show noisy results caused by the reflected light, and they required 308, 958 and 2824 seconds for the segmentation, respectively. Moreover, the CIELab and PCA results are under-segmented on the bottom-left side of the wound. The proposed method can eliminate such noise and detects the burn wound contour well in only 185 seconds with Tucker tensor core rank: .

Table 1 lists the computational times for the image segmentation obtained from the different methods illustrated in Fig. 8, except for the JSEG method, which produces unsatisfactory results for every image. The experimental results suggest that the proposed method provides the best results not only in terms of segmentation accuracy but also in computational speed: based on the average times in Table 1, it is approximately 12.5 times faster than the ICA, 6 times faster than the PCA, and 1.4 times faster than the CIELab.

Table 1.

Computational times (seconds) for image segmentation results obtained from different methods as shown in Fig. 8, where R1, …, R6 stand for the images shown in rows 1, …, 6 of Fig. 8, respectively.

| Method | R1 | R2 | R3 | R4 | R5 | R6 | Average |
|---|---|---|---|---|---|---|---|
| CIELab | 375 | 493 | 239 | 208 | 223 | 308 | 307.67 |
| PCA | 1527 | 1178 | 1952 | 800 | 1430 | 958 | 1307.5 |
| ICA | 2286 | 1705 | 2348 | 3275 | 3653 | 2824 | 2681.83 |
| Proposed method | 297 | 358 | 214 | 118 | 115 | 185 | 214.5 |

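Table 2 shows the quantitative measurements, which consist of the positive predictive value (PPV) and the sensitivity (SEN) of a segmented image. The PPV and SEN are defined as21

$$\mathrm{PPV} = \frac{TP}{TP + FP} \tag{12}$$

$$\mathrm{SEN} = \frac{TP}{TP + FN} \tag{13}$$

where TP, FP and FN denote the numbers of true-positive, false-positive and false-negative pixels, respectively.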
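As a small worked example of these two measures, the sketch below counts true positives (TP), false positives (FP) and false negatives (FN) between a predicted burn mask and a reference mask, both given as flat sequences of 0/1 values. The function name is hypothetical, and the sketch assumes that both masks contain at least one burn pixel.

```python
def ppv_sen(predicted, reference):
    """Positive predictive value TP / (TP + FP) and sensitivity
    TP / (TP + FN) for two binary masks of equal length."""
    tp = sum(1 for p, r in zip(predicted, reference) if p and r)
    fp = sum(1 for p, r in zip(predicted, reference) if p and not r)
    fn = sum(1 for p, r in zip(predicted, reference) if not p and r)
    return tp / (tp + fp), tp / (tp + fn)
```

A predicted mask that covers the whole reference region plus extra skin keeps SEN at 1 but lowers PPV, whereas a mask that misses part of the wound without including extra skin keeps PPV at 1 but lowers SEN.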

Table 2.

Quantitative measurements for image segmentation results obtained from different methods as shown in Fig. 8, where R1, …, R6 stand for images shown in rows 1, …, row 6 of Fig. 8, respectively.

| Image | CIELab PPV | CIELab SEN | PCA PPV | PCA SEN | ICA PPV | ICA SEN | Tucker PPV | Tucker SEN |
|---|---|---|---|---|---|---|---|---|
| R1 | 0.9366 | 0.9449 | 0.9371 | 0.9324 | 0.8848 | 0.9952 | 0.9508 | 0.9467 |
| R2 | 0.8567 | 0.9767 | 0.9334 | 0.9711 | 0.9156 | 0.9853 | 0.9444 | 0.9674 |
| R3 | 0.9462 | 0.9283 | 0.9018 | 0.9688 | 0.8568 | 0.9869 | 0.9478 | 0.9562 |
| R4 | 0.8474 | 0.9992 | 0.8639 | 0.9967 | 0.8581 | 0.9969 | 0.9047 | 0.9953 |
| R5 | 0.9666 | 0.9825 | 0.9597 | 0.9949 | 0.9936 | 0.9004 | 0.9935 | 0.9147 |
| R6 | 0.9080 | 0.9795 | 0.9149 | 0.9787 | 0.9023 | 0.9916 | 0.9518 | 0.9732 |
| Average | 0.9102 | 0.9685 | 0.9184 | 0.9737 | 0.9018 | 0.9760 | 0.9488 | 0.9589 |

For a perfect segmentation, and . In case of under-segmentation, and , whereas in case of over-segmentation, and . Based on the results shown in Table 2, cases of under-segmentation are ICA with image R5 and Tucker with R5; and over-segmentation are CIELab with R6, PCA with R2, R3, R4, R5 and R6, ICA with R1, R2, R3, R4 and R6, and Tucker with R4.

Both results shown in Fig. 8 and Table 2 suggest that the proposed method provides better segmentation results for the images in the 1st, 2nd, 3rd and 6th row of Fig. 8. The average PPV and SEN values of the segmentations obtained from the proposed method are better than the other three methods in terms of the balance between over-segmentation and under-segmentation.

Furthermore, the proposed method is also compared with the simple linear iterative clustering (SLIC) superpixel33, the efficient graph-based image segmentation34, and the SegNet35 methods that are discussed as follows.

The SLIC superpixel method performs a local clustering of CIELab values and pixel coordinates. It is fast and requires the desired number of superpixels as the input. Figure 9 shows the segmentation results for the original burn image in Fig. 3(a) obtained from the superpixel method using 5, 20, 100, 500 and 1000 as the numbers of desired superpixels. The larger the number of superpixels, the better the segmentation result, but it then becomes very difficult to decide which class each superpixel belongs to. The algorithm distinguishes the skin from the background quite well but has difficulty classifying a superpixel as skin or burn wound, resulting in either under- or over-segmentation. Like the proposed method, the SLIC superpixel technique requires a manual merging process in order to distinguish the three classes of interest. However, an advantage of the proposed method over the superpixel method is that the merging process can be carried out faster, since the number of clusters specified for the proposed method to achieve a good final segmentation result, as shown in Fig. 7(a), is much smaller than the number of superpixels required by the SLIC technique.

Figure 9.

SLIC superpixel segmentation result using 5 (a), 20 (b), 100 (c), 500 (d) and 1000 (e) as the number of desired superpixels.

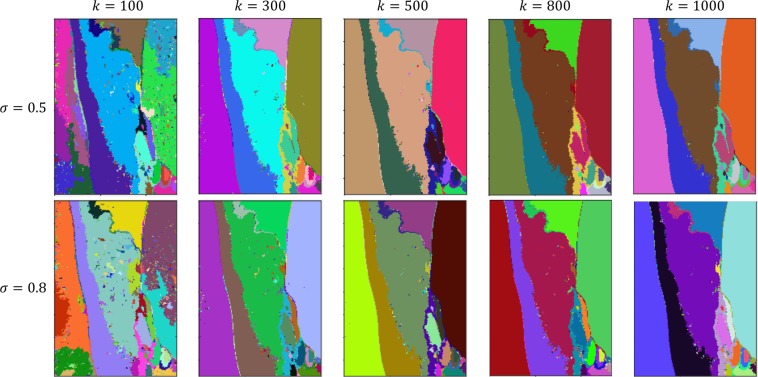

The efficient graph-based image segmentation method defines a predicate to highlight the boundary between 2 or more regions using a graph-based representation of the image of interest. Figure 10 shows the segmentation results of the original burn image as shown in Fig. 3(a) obtained from the graph-based image segmentation method, where its input parameters , and , where σ is the standard deviation of the Gaussian filter in the pre-processing and k is a scaling parameter. It is easy to observe that all the results are not satisfactory as they were largely over-segmented.

Figure 10.

Efficient graph-based image segmentation results using various values of σ and k.

The SegNet consists of an encoder network and a corresponding decoder network followed by a pixel-wise classification layer. The depth of the network is specified by a scalar D that determines the number of times an input image is downsampled or upsampled as it is processed. The encoder network downsamples the input image by a factor of $2^{D}$. The decoder network performs the opposite operation and upsamples the encoder network output by a factor of $2^{D}$. Two types of SegNet were designed: i) an encoder and decoder with D = 4, and ii) a network initialized using the VGG-16 weights with D = 5. Using these two networks with 11 images for training and 2 for testing, the accuracies achieved after 10 epochs with a learning rate of $10^{-3}$ are 26% and 25%, respectively. One reason for the poor results produced by SegNet may be that it was primarily designed for scene understanding applications (road and indoor scenes), while the data domain in this study is medical imaging of burn wounds. Another possible reason is the very small training sample (11 images) used in this study, which was not sufficient for the deep network to capture the feature map information needed for correct learning, particularly the vague boundary information between the skin and burn areas.

It should be pointed out that a good outcome of the automated merging of the fuzzy clusters obtained from the FCM depends on sufficient training data for various types of burn, as shown in Fig. 11, where the assignment of new fuzzy clusters to the trained ones is based on the minimum distance criterion. A dataset of about 220 cluster centres (8 belonging to the background, 135 to healthy skin, and 77 to burn wound) was developed and used as a reference: each new cluster centre extracted from the image under analysis is assigned to the class of the reference centre at the minimum distance. As the number of reference cluster centres is limited, the automated merging sometimes fails. Therefore, the user has the opportunity to do this process manually and then add the newly labelled cluster to the reference set.
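The minimum distance criterion amounts to a nearest-neighbour lookup among the labelled reference centres. The sketch below assumes the Euclidean distance in feature space, which the text does not state explicitly; the function name and the toy two-dimensional centres are hypothetical.

```python
import math


def assign_to_reference(new_centres, ref_centres, ref_labels):
    """Give each new cluster centre the label of its nearest reference
    centre (Euclidean distance is an assumption of this sketch)."""
    labels = []
    for centre in new_centres:
        nearest = min(
            range(len(ref_centres)),
            key=lambda k: math.dist(centre, ref_centres[k]),
        )
        labels.append(ref_labels[nearest])
    return labels


# Toy reference set with one centre per class.
reference = [(0.0, 0.0), (5.0, 5.0), (9.0, 1.0)]
classes = ["background", "skin", "burn"]
merged = assign_to_reference([(4.5, 5.2), (8.0, 0.5)], reference, classes)
```

Clusters that receive the same label are merged into one region; a cluster labelled manually by the user can be appended to the reference lists so that later images benefit from it.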

Figure 11.

Example of desirable (a), and undesirable (b) segmentation results with automatic merging of fuzzy clusters, depending on sufficient training data.
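The minimum distance assignment described above can be sketched as follows. This is an illustration under stated assumptions rather than the authors' implementation: the Euclidean distance, the three-component feature vectors, and all numerical values are hypothetical, since the text specifies only that the nearest reference centre determines the class.

```python
import math


def assign_to_reference(new_centres, ref_centres, ref_labels):
    """Label each new cluster centre with the class of its nearest reference centre.

    Returns a list of (label, distance) pairs. Euclidean distance is an
    assumption; the text states only a minimum distance criterion.
    """
    assignments = []
    for centre in new_centres:
        distances = [math.dist(centre, ref) for ref in ref_centres]
        nearest = min(range(len(distances)), key=distances.__getitem__)
        assignments.append((ref_labels[nearest], distances[nearest]))
    return assignments


# Hypothetical reference set: one centre per class, three features each.
ref_centres = [(0.90, 0.90, 0.90), (0.60, 0.40, 0.30), (0.70, 0.10, 0.10)]
ref_labels = ["background", "healthy skin", "burn wound"]

# Hypothetical centres extracted from a new image.
new_centres = [(0.65, 0.15, 0.12), (0.58, 0.42, 0.33)]

for label, distance in assign_to_reference(new_centres, ref_centres, ref_labels):
    print(f"{label} (distance {distance:.3f})")
```

Returning the distance alongside the label makes it possible to flag assignments whose nearest reference centre is still far away, which are the cases where manual labelling and extension of the reference set would be most useful.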

Regarding data sufficiency and the training process, it is at present difficult to determine how much more data would be needed to train the proposed algorithm well. In fact, sample size planning for classifiers is an area of research in its own right. Most methods of sample size planning for developing classifiers require non-singularity of the sample covariance matrix of the covariates36. In biomedicine, methods for sample size planning for classification models have been developed on the basis of learning curves, which show classification performance as a function of the training sample size and thereby help select the sample size needed to train a classifier. However, these methods either require extensive previous data from attempts to differentiate the same classes37, or suggest sizes of 75–100 samples to achieve only reasonable accuracy in the validation38. This issue will be investigated in our future research when more clinical data become available.
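To illustrate what a learning curve is, the sketch below estimates the mean test accuracy of a simple classifier as the training set grows. Everything in it is an assumption made for illustration: the data are synthetic one-dimensional Gaussian samples, the classifier is a nearest-class-mean rule, and none of the numbers relate to the burn images in this study.

```python
import random
import statistics


def make_samples(n, rng):
    """Draw n labelled samples from two overlapping synthetic Gaussian classes."""
    samples = []
    for _ in range(n):
        label = rng.randint(0, 1)
        mean = 0.0 if label == 0 else 1.5
        samples.append((rng.gauss(mean, 1.0), label))
    return samples


def nearest_mean_accuracy(train, test):
    """Accuracy of a nearest-class-mean rule; None if a class is absent from train."""
    class0 = [x for x, y in train if y == 0]
    class1 = [x for x, y in train if y == 1]
    if not class0 or not class1:
        return None
    m0, m1 = statistics.fmean(class0), statistics.fmean(class1)
    correct = sum(
        1 for x, y in test if (0 if abs(x - m0) <= abs(x - m1) else 1) == y
    )
    return correct / len(test)


def learning_curve(sizes, repeats=100, test_size=500, seed=0):
    """Mean test accuracy for each training size, averaged over random draws."""
    rng = random.Random(seed)
    test = make_samples(test_size, rng)
    curve = []
    for n in sizes:
        scores = []
        for _ in range(repeats):
            acc = nearest_mean_accuracy(make_samples(n, rng), test)
            if acc is not None:  # skip draws that miss one class entirely
                scores.append(acc)
        curve.append((n, statistics.fmean(scores)))
    return curve


for n, acc in learning_curve((5, 10, 25, 50, 100)):
    print(f"training size {n:3d}: mean accuracy {acc:.3f}")
```

In a sample size planning setting, such a curve would be estimated from real pilot data, and the training size would be chosen where the curve flattens or reaches the accuracy required for the application.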

Conclusion

The proposed method has been shown to extract burn wounds from a complex background with relatively short computational time. The tensor decomposition is independent of the camera resolution, because it works on the CIELab tensor model rather than on the number of pixels in the image. The proposed method achieves a large data reduction without any loss of information for the image source estimation, and is therefore applicable to real-time processing. The CIELab, PCA, and ICA approaches do not consistently provide good segmentation results over various burn images, showing over- and under-segmentation errors. Moreover, these techniques require longer computational times than the proposed method.

In addition, the fuzzy burn wound centres extracted by the FCM during the cluster analysis were used in this paper to distinguish partial-thickness burns from normal skin and 1st degree burns, but they could also be used to identify the depth of the burn and classify it as a superficial partial-thickness, deep partial-thickness, or full-thickness burn. 1st degree burns are excluded from the estimate of the total burn area.

The strategy for colour image segmentation with fuzzy integral and mountain clustering39, which does not require an initial estimate of the number of fuzzy clusters, is worth investigating as a way to improve the proposed approach when training data for cluster merging are limited. It would also be desirable to apply the proposed method to images captured with a polarized camera, which can eliminate the light reflection problem, and to extract features from the CIELab tensor instead of the reconstructed images. As another issue for future research, it is worth investigating the segmentation of burn areas on 3D images, which include curvature and depth, to further improve the segmentation accuracy. Furthermore, there exist many other image segmentation techniques, such as semantic segmentation35,40, superpixel segmentation33,41, spectral clustering42, fully connected conditional random fields43, and Mask R-CNN44. However, all these techniques require a huge amount of training data to achieve a high degree of accuracy. To utilize such techniques, developing a method for simulating and generating a large quantity of burn images would be an important area of research to pursue in our future work.

Acknowledgements

This research was funded by a Faculty of Science and Engineering Grant to T.D.P. The PARAFAC toolbox for tensor decomposition was used in this study. MATLAB codes for implementing the proposed method and image data are available at the first author’s website (https://liu.se/medarbetare/marci30).

Author Contributions

T.D.P. conceived the concept of tensor decomposition for image segmentation and provided technical supervision to M.D.C. M.D.C. further developed the proposed method and conducted the experiments. M.D.C. and T.D.P. wrote the paper. R.M. and F.S. provided the image data and validation of the extraction of burn areas. All authors analysed the results, reviewed and revised the manuscript, and approved the submission of the manuscript.

Competing Interests

The authors declare no competing interests.

Footnotes

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Marco D. Cirillo, Email: marco.domenico.cirillo@liu.se

Tuan D. Pham, Email: tuan.pham@liu.se

References

- 1.Injuries, W. H. O. & Department, V. P. The Injury Chart Book: A Graphical Overview Of The Global Burden Of Injuries (World Health Organization, 2002).

- 2.Brusselaers N, Monstrey S, Vogelaers D, Hoste E, Blot S. Severe burn injury in Europe: a systematic review of the incidence, etiology, morbidity, and mortality. Critical Care. 2010;14:R188. doi: 10.1186/cc9300.

- 3.Jeschke, M. G. Burn Care and Treatment: A Practical Guide. (Springer, 2013).

- 4.Hettiaratchy S, Papini R. ABC of burns: Initial management of a major burn: II—assessment and resuscitation. BMJ: British Medical Journal. 2004;329:101. doi: 10.1136/bmj.329.7457.101.

- 5.Steinvall I, Elmasry M, Fredrikson M, Sjoberg F. Standardised mortality ratio based on the sum of age and percentage total body surface area burned is an adequate quality indicator in burn care: An exploratory review. Burns. 2016;42:28–40. doi: 10.1016/j.burns.2015.10.032.

- 6.Katkar, J., Baraskar, T. & Mankar, V. R. A novel approach for medical image segmentation using PCA and K-means clustering. In International Conference on Applied and Theoretical Computing and Communication Technology (iCATccT), 430–435 (IEEE, 2015).

- 7.Soomro TA, et al. Impact of ICA-Based image enhancement technique on retinal blood vessels segmentation. IEEE Access. 2018;6:3524–3538. doi: 10.1109/ACCESS.2018.2794463.

- 8.Pham DL, Xu C, Prince JL. Current methods in medical image segmentation. Annual Review Of Biomedical Engineering. 2000;2:315–337. doi: 10.1146/annurev.bioeng.2.1.315.

- 9.Ilea DE, Whelan PF. Image segmentation based on the integration of colour–texture descriptors—a review. Pattern Recognition. 2011;44:2479–2501. doi: 10.1016/j.patcog.2011.03.005.

- 10.Hawkins, J. K. Textural properties for pattern recognition. Picture Processing and Psychopictorics 347–370 (1970).

- 11.Deng Y, Manjunath B. Unsupervised segmentation of color-texture regions in images and video. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2001;23:800–810. doi: 10.1109/34.946985.

- 12.Cucchiara R, Grana C, Seidenari S, Pellacani G. Exploiting color and topological features for region segmentation with recursive fuzzy c-means. Machine Graphics and Vision. 2002;11:169–182.

- 13.Bezdek JC, Ehrlich R, Full W. FCM: The fuzzy c-means clustering algorithm. Computers & Geosciences. 1984;10:191–203. doi: 10.1016/0098-3004(84)90020-7.

- 14.Pinero BA, Serrano C, Acha JI, Roa LM. Segmentation and classification of burn images by color and texture information. Journal of Biomedical Optics. 2005;10:034014. doi: 10.1117/1.1921227.

- 15.Kurita T, Otsu N, Abdelmalek N. Maximum likelihood thresholding based on population mixture models. Pattern Recognition. 1992;25:1231–1240. doi: 10.1016/0031-3203(92)90024-D.

- 16.Gómez DD, Butakoff C, Ersboll BK, Stoecker W. Independent histogram pursuit for segmentation of skin lesions. IEEE Transactions on Biomedical Engineering. 2008;55:157–161. doi: 10.1109/TBME.2007.910651.

- 17.Papazoglou ES, et al. Image analysis of chronic wounds for determining the surface area. Wound Repair and Regeneration. 2010;18:349–358. doi: 10.1111/j.1524-475X.2010.00594.x.

- 18.Cavalcanti, P. G., Scharcanski, J., Di Persia, L. E. & Milone, D. H. An ICA-based method for the segmentation of pigmented skin lesions in macroscopic images. In Annual International Conference of the IEEE on Engineering in Medicine and Biology Society (EMBC), 5993–5996 (IEEE, 2011).

- 19.Cardoso, J.-F. & Souloumiac, A. Blind beamforming for non-Gaussian signals. In IEE Proceedings F (Radar and Signal Processing), vol. 140, 362–370 (IET, 1993).

- 20.Hyvärinen, A., Karhunen, J. & Oja, E. Independent Component Analysis (John Wiley & Sons, 2004).

- 21.Wantanajittikul, K., Auephanwiriyakul, S., Theera-Umpon, N. & Koanantakool, T. Automatic segmentation and degree identification in burn color images. In Biomedical Engineering International Conference (BMEiCON), 169–173 (IEEE, 2012).

- 22.Loizou CP, Kasparis T, Polyviou M. Evaluation of wound healing process based on texture image analysis. Journal of Biomedical Graphics and Computing. 2013;3:1. doi: 10.5430/jbgc.v3n3p1.

- 23.Chan TF, Vese LA. Active contours without edges. IEEE Transactions On Image Processing. 2001;10:266–277. doi: 10.1109/83.902291.

- 24.Gonzalez, R. C. & Woods, R. E. Digital Image Processing, 3rd edn (Prentice-Hall, 2010).

- 25.Acha B, Serrano C, Fondón I, Gómez-Cía T. Burn depth analysis using multidimensional scaling applied to psychophysical experiment data. IEEE Transactions on Medical Imaging. 2013;32:1111–1120. doi: 10.1109/TMI.2013.2254719.

- 26.Madina, E., El Maliani, A. D., El Hassouni, M. & Alaoui, S. O. Study of magnitude and extended relative phase information for color texture retrieval in L* a* b* color space. In International Conference on Wireless Networks and Mobile Communications (WINCOM), 127–132 (IEEE, 2016).

- 27.Serrano C, Boloix-Tortosa R, Gómez-Cía T, Acha B. Features identification for automatic burn classification. Burns. 2015;41:1883–1890. doi: 10.1016/j.burns.2015.05.011.

- 28.Arfken, G. B. & Weber, H. J. Mathematical methods for physicists (1999).

- 29.Bro R. PARAFAC. Tutorial and applications. Chemometrics and Intelligent Laboratory Systems. 1997;38:149–171. doi: 10.1016/S0169-7439(97)00032-4.

- 30.Kolda TG, Bader BW. Tensor decompositions and applications. SIAM Review. 2009;51:455–500. doi: 10.1137/07070111X.

- 31.Cichocki A, et al. Tensor decompositions for signal processing applications: From two-way to multiway component analysis. IEEE Signal Processing Magazine. 2015;32:145–163. doi: 10.1109/MSP.2013.2297439.

- 32.Andersson CA, Bro R. The n-way toolbox for MATLAB. Chemometrics and Intelligent Laboratory Systems. 2000;52:1–4. doi: 10.1016/S0169-7439(00)00071-X.

- 33.Achanta R, et al. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2012;34:2274–2282. doi: 10.1109/TPAMI.2012.120.

- 34.Felzenszwalb PF, Huttenlocher DP. Efficient graph-based image segmentation. International Journal of Computer Vision. 2004;59:167–181. doi: 10.1023/B:VISI.0000022288.19776.77.

- 35.Badrinarayanan V, Kendall A, Cipolla R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2017;39:2481–2495. doi: 10.1109/TPAMI.2016.2644615.

- 36.Simon R. Development and validation of biomarker classifiers for treatment selection. Journal of Statistical Planning and Inference. 2008;138:308–320. doi: 10.1016/j.jspi.2007.06.010.

- 37.Mukherjee S, et al. Estimating dataset size requirements for classifying DNA microarray data. Journal of Computational Biology. 2003;10:119–142. doi: 10.1089/106652703321825928.

- 38.Beleites C, Neugebauer U, Bocklitz T, Krafft C, Popp J. Sample size planning for classification models. Analytica Chimica Acta. 2013;760:25–33. doi: 10.1016/j.aca.2012.11.007.

- 39.Pham T, Yan H. Color image segmentation using fuzzy integral and mountain clustering. Fuzzy Sets and Systems. 1999;107:121–130. doi: 10.1016/S0165-0114(97)00318-7.

- 40.Long, J., Shelhamer, E. & Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 3431–3440 (2015).

- 41.Fulkerson, B., Vedaldi, A. & Soatto, S. Class segmentation and object localization with superpixel neighborhoods. In IEEE 12th International Conference on Computer Vision, 670–677 (IEEE, 2009).

- 42.Ng, A. Y., Jordan, M. I. & Weiss, Y. On spectral clustering: Analysis and an algorithm. In Advances in Neural Information Processing Systems, 849–856 (2002).

- 43.Krähenbühl, P. & Koltun, V. Efficient inference in fully connected CRFs with Gaussian edge potentials. In Advances in Neural Information Processing Systems, 109–117 (2011).

- 44.He, K., Gkioxari, G., Dollár, P. & Girshick, R. Mask R-CNN. In IEEE International Conference on Computer Vision (ICCV), 2980–2988 (IEEE, 2017).