Abstract

Objectives:

To examine the outcomes of e-learning or blended learning interventions in undergraduate dental radiology curricula and analyze the nature of the knowledge levels addressed in learning interventions.

Methods:

A systematic literature review was performed using a search strategy based on MeSH keywords specific to the focus question and indexed in the MEDLINE database. The search was supplemented by hand-searching of selected journals. Data were extracted relating to outcomes of knowledge and student perceptions. The e-learning interventions were analyzed using a new framework to examine the level of knowledge undertaken: (1) remember/understand, (2) analysis, evaluation or diagnosis and (3) performance (“knows how” or “shows how”).

Results:

Of the 17 selected papers, 11 were positive about student-reported outcomes of the interventions, and 8 reported evidence that e-learning interventions enhanced learning. Of the included studies, 8 used e-learning at the level of remember/understand, 4 at the level of analysis/evaluation/diagnosis, and 5 at the level of performance (“knows how,” “shows how”).

Conclusions:

The learning objectives, e-learning interventions, outcome measures and reporting methods were diverse and not well reported. This makes comparison between studies, and an understanding of how interventions contributed to learning, impractical. Future studies need to define the “knowledge” levels and performance tasks undertaken in the planning and execution of e-learning interventions and their assessment methods. Such a framework and approach will focus our understanding of the ways in which e-learning is effective and of how it can contribute to better evidence-based e-learning experiences.

Introduction

Healthcare education faces a number of challenges relating to increasing student cohort sizes, shortages of clinical teaching staff to meet these numbers, increasingly diverse curricula for new learning domains and content, and a desire to meet the learning needs of 21st century learners. E-learning is a growing phenomenon in education that supports students learning in diverse ways and in flexible environments.1 E-learning is considered a generic term that encompasses electronically supported learning and teaching; it may or may not be online, and it can be delivered or supported by technology either in or out of the classroom. One of e-learning’s strengths is that it facilitates self-paced or instructor-led learning and can include an array of media in the form of text, images, animation, video and audio.2

E-learning can help address some of the challenges in healthcare education by allowing: (1) on-demand access (time, place, pace and scale); (2) control (standardized content, quality assurance); and (3) learning analytics. E-learning has been explored in clinical and other curricula either as an isolated standalone intervention or combined with traditional classroom or face-to-face teaching to form blended learning.3 In a survey of online learning, 69% of “chief academic leaders” believed that online learning is critical to their long-term teaching methodology and 77% perceived that the learning outcomes with online learning are the same as or superior to those of face-to-face learning.4 A large number of papers have reported on “blended learning”. However, there is a need to examine in detail the nature of the blend as “no two blended learning designs are identical”.5 Blended learning has been described as “the integration of modern learning technology with asynchronous or synchronous interaction into traditional classroom learning/pedagogy”.6

In dentistry, e-learning and blended learning have been particularly explored in radiology because the subject is rich in digital images and suited to online access and viewing. Furthermore, such images can easily be used to test learners’ recognition and diagnosis of anatomical features or disease attributes. The aim of this systematic review is to examine the outcomes of dental radiology e-learning and blended learning, to analyze the knowledge level of the learning interventions undertaken, and to consider recommendations for future use in educational research and e-learning design. From these, a more detailed understanding may be possible of the nature of online learning and of how we may determine good practices on which to propose guidelines for future teaching and learning.

Methods and materials

This systematic review was conducted and reported according to Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines.7

Search strategy

An electronic search was done by one of the authors (KRA) in the following databases: PubMed, Science Direct, Web of Science, ERIC (via ProQuest) and Scopus, using keywords specific to the research question to examine the outcomes of e-learning. The search terms used and dates of individual searches are provided in Table 1. The search strategy was determined after consultation with review team members and was designed for high recall rather than high precision in the first instance. The end search date was April 24, 2017 across all databases, and the evaluated time span was 25 years. From the studies selected, an analysis of the knowledge levels undertaken in the e-learning experiences was performed. All references were managed by software (EndNote X8, Clarivate Analytics, Philadelphia, PA).

Table 1.

Database Search strategy

| Database (Date searched) | Search Terms |

| PubMed (April 24, 2017) | #1 oral radiology OR dental radiologic science course OR dental radiology OR oral radiologic science course OR craniofacial radiology OR head and neck radiology OR maxillofacial radiology #2 blended learning OR hybrid learning OR integrated learning OR e-learning OR computed-aided learning OR computer assisted learning OR self instruction learning OR self instruction programs, computerized OR programmed learning OR Self-instruction Programs OR Program, Self-Instruction OR Programs, Self-Instruction OR Self Instruction Programs OR Self-Instruction Program OR Learning, Programmed OR web based education OR computer based learning OR computerized programmed instruction OR instruction, computer assisted OR self instruction program, computerized OR online learning OR computerized assisted instruction OR web based learning OR distance learning OR distance education OR web based training OR computer based training OR online education OR internet based learning OR learning management system OR computer aided instruction OR internet based training OR multimedia learning OR technology enhanced learning #3 #1 AND #2 #4 (#1 and #2) Filters: Publication date from 1991/01/01 to 2017/04/24 |

| Web of Science (April 24, 2017) | TOPIC: (oral radiology OR dental radiologic science course OR dental radiology OR oral radiologic science course OR craniofacial radiology OR head and neck radiology OR maxillofacial radiology) AND TOPIC: (blended learning OR hybrid learning OR integrated learning OR e-learning OR computed-aided learning OR computer assisted learning OR self instruction learning OR self instruction programs, computerized OR programmed learning OR Self-instruction Programs OR Program, Self-Instruction OR Programs, Self-Instruction OR Self Instruction Programs OR Self-Instruction Program OR Learning, Programmed OR web based education OR computer based learning OR computerized programmed instruction OR instruction, computer assisted OR self instruction program, computerized OR online learning OR computerized assisted instruction OR web based learning OR distance learning OR distance education OR web based training OR computer based training OR online education OR internet based learning OR learning management system OR computer aided instruction OR internet based training OR multimedia learning OR technology enhanced learning) Timespan: 1991–2017 |

| Science Direct (April 24, 2017) | “oral radiology” OR “dental radiologic science course” OR “dental radiology” OR “oral radiologic science course” OR “craniofacial radiology” OR “head and neck radiology” OR “maxillofacial radiology” AND “blended learning” OR “hybrid learning” OR “integrated learning” OR “e-learning” OR “computed-aided learning” OR “computer assisted learning” OR “self instruction learning” OR “self instruction programs, computerized” OR “programmed learning” OR “Self-instruction Programs” OR “Program, Self-Instruction” OR “Programs, Self-Instruction” OR “Self Instruction Programs” OR “Self-Instruction Program” OR “Learning, Programmed” OR “web based education” OR “computer based learning” OR “computerized programmed instruction” OR “instruction, computer assisted” OR “self instruction program, computerized” OR “online learning” OR “computerized assisted instruction” OR “web based learning” OR “distance learning” OR “distance education” OR “web based training” OR “computer based training” OR “online education” OR “internet based learning” OR “learning management system” OR “computer aided instruction” OR “internet based training” OR “multimedia learning” OR “technology enhanced learning” Time limit: 1991 to present |

| Scopus (April 24, 2017) | ALL (“oral radiology” OR “dental radiologic science course” OR “dental radiology” OR “oral radiologic science course” OR “craniofacial radiology” OR “head and neck radiology” OR “maxillofacial radiology” ) AND ALL ( “blended learning” OR “hybrid learning” OR “integrated learning” OR “e-learning” OR “computer-aided learning” OR “computer assisted learning” OR “self instruction learning” OR “self instruction programs, computerized” OR “programmed learning” OR “Self-instruction Programs” OR “Program, Self-Instruction” OR “Programs, Self-Instruction” OR “Self Instruction Programs” OR “Self-Instruction Program” OR “Learning, Programmed” OR “web based education” OR “computer based learning” OR “computerized programmed instruction” OR “instruction, computer assisted” OR “self instruction program, computerized” OR “online learning” OR “computerized assisted instruction” OR “web based learning” OR “distance learning” OR “distance education” OR “web based training” OR “computer based training” OR “online education” OR “internet based learning” OR “learning management system” OR “computer aided instruction” OR “internet based training” OR “multimedia learning” OR “technology enhanced learning” ) AND PUBYEAR >1990 |

| ERIC (via ProQuest) (April 24, 2017) | (oral radiology OR dental radiologic science course OR dental radiology OR oral radiologic science course OR craniofacial radiology OR head and neck radiology OR maxillofacial radiology) AND (blended learning OR hybrid learning OR integrated learning OR e-learning OR computed-aided learning OR computer assisted learning OR self instruction learning OR self instruction programs, computerized OR programmed learning OR Self-instruction Programs OR Program, Self-Instruction OR Programs, Self-Instruction OR Self Instruction Programs OR Self-Instruction Program OR Learning, Programmed OR web based education OR computer based learning OR computerized programmed instruction OR instruction, computer assisted OR self-instruction program, computerized OR online learning OR computerized assisted instruction OR web based learning OR distance learning OR distance education OR web based training OR computer based training OR online education OR internet based learning OR learning management system OR computer aided instruction OR internet based training OR multimedia learning OR technology enhanced learning) Time limit: January 01 1991 to April 24 2017 |
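The search strings in Table 1 share one structure: a group of radiology terms joined by OR, combined by AND with a group of e-learning terms. The sketch below illustrates how such a string could be assembled. The helper names `or_group` and `build_query` are hypothetical, the term lists are abbreviated from Table 1, and the output is not the exact syntax submitted to any of the databases.

```python
# Illustrative sketch only: assembles a Boolean query of the form
# (A OR B ...) AND (C OR D ...). Helper names are hypothetical.

def or_group(terms, quote=False):
    """Join terms with OR inside parentheses, optionally quoting each term."""
    parts = [f'"{t}"' if quote else t for t in terms]
    return "(" + " OR ".join(parts) + ")"


def build_query(radiology_terms, elearning_terms, quote=False):
    """Combine the two OR-groups with AND, as in step #3 of the PubMed row."""
    return or_group(radiology_terms, quote) + " AND " + or_group(elearning_terms, quote)


# Abbreviated term lists taken from Table 1 (not the full strategy).
RADIOLOGY_TERMS = ["oral radiology", "dental radiology", "maxillofacial radiology"]
ELEARNING_TERMS = ["blended learning", "e-learning", "online learning"]

query = build_query(RADIOLOGY_TERMS, ELEARNING_TERMS, quote=True)
print(query)
```

Wrapping each group in parentheses keeps the AND from binding to only the nearest term, which matters in interfaces where operator precedence is not left to right.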

Selection criteria strategy

Table 2 provides details of inclusion and exclusion selection criteria which were based on the PICOS (Population, Intervention, Comparison, Outcome and Studies) framework.8

Table 2.

Eligibility criteria based on the PICOS framework (P = population, I = intervention, C = comparison, O = outcomes, S = studies)

| | Inclusion criteria | Exclusion criteria |

| P | Studies conducted among dental students at any stage of undergraduate radiology education | Postgraduate students, instructors, faculty staff and clinicians. Not dental or medical undergraduate students. |

| I | Studies that explored the effect of either e-learning or blended learning teaching approaches. E-learning interventions that were web-based educational software, online PowerPoint slides, online documents, online tests, and online interactivity. | Not exploring the effects of e-learning or blended learning intervention. E-learning interventions that were based on video-conferencing, television or social media. Studies not involving online or computer-based activities, i.e. occurring mainly in simulation laboratories. Studies about the development of computer technologies or software, but not applied directly to dental students |

| C | Studies that involved a comparison of either e-learning or blended learning with or without traditional learning OR just the evaluation of e-learning or blended learning intervention | |

| O | Studies that involved the investigation of learning outcomes related to knowledge content, learning of clinical skills (radiologic and radiographic) performance and assessment of students’ knowledge. Studies that explored the learning outcomes related to students’ attitudes, students’ preference and students’ satisfaction in the learning activity. | Studies which did not report quantitative or qualitative learning outcomes related to these two domains |

| S | Studies such as randomized, non-randomized and cohort studies. | Studies in which e-learning or computer technologies were not used as a part of the education content delivery but only for administrative purposes; reviews, conference abstracts, book chapters were excluded. |

Data collection process

A data extraction sheet was developed and pilot tested on five randomly selected studies. One author (KRA) extracted the data from included studies and the second author (MB) checked the extracted data and clarified any issues with the first. The remaining studies were analyzed, after approval of the data collection form based on the calibration exercise.

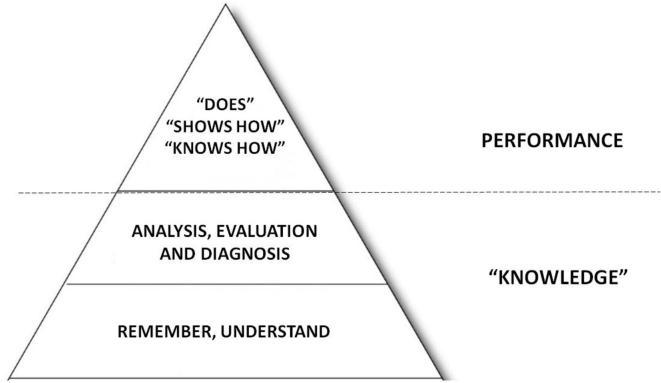

In addition, the nature of the e-learning intervention with regard to levels of learning was classified into three proposed domains based on combining particular features of Bloom’s and Miller’s taxonomies.9, 10 The proposed levels of learning designed by the authors are:

Knowledge—remembering and understanding

Knowledge—analysis, evaluation, diagnosis

Performance—“knows how”/“shows how”

“Knowledge” in this instance reflects two levels of Bloom’s taxonomy. The lower level is the remembering and understanding of facts and their meaning. The higher level is analysis, evaluation and diagnosis, which involves visual recognition of anatomical and pathological features and the application of knowledge for clinical diagnoses. These are at the higher levels of Bloom’s pyramid (Figure 1). On top of this “knowledge” base we place Miller’s classification of performance knowledge relating to knowing how, showing how or the doing of a clinical procedure (Figure 1).

Figure 1.

A proposed diagrammatic framework for classifying types of learning activity used in e-learning, combining Bloom’s and Miller’s taxonomies. “Knowledge” has lower order and higher order levels according to Bloom’s taxonomy, and performance is taken from Miller’s classification. In the papers examined, “does” was not evaluated as an outcome measure.
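For readers who want to apply the three-level framework when coding studies or planning a course, it can be written down as a small lookup structure. This is a minimal sketch, assuming the level names and descriptors given above; the `Level` class, the `FRAMEWORK` tuple and the `level_for` helper are hypothetical names introduced here and are not part of the review's method, in which studies were classified by the authors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Level:
    rank: int                # 1 = lowest level in the proposed framework
    name: str
    source_taxonomy: str     # taxonomy the level is drawn from
    descriptors: tuple       # terms used in the text for this level


# The three proposed levels, in ascending order.
FRAMEWORK = (
    Level(1, "Knowledge (lower order)", "Bloom", ("remember", "understand")),
    Level(2, "Knowledge (higher order)", "Bloom", ("analysis", "evaluation", "diagnosis")),
    Level(3, "Performance", "Miller", ("knows how", "shows how")),
)


def level_for(descriptor):
    """Return the framework level whose descriptors include the given term."""
    key = descriptor.strip().lower()
    for level in FRAMEWORK:
        if key in level.descriptors:
            return level
    raise KeyError(f"descriptor not in framework: {descriptor!r}")


print(level_for("diagnosis").name)
```

Miller's “does” level is deliberately left out of the sketch because none of the included papers evaluated it.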

Results

Study selection

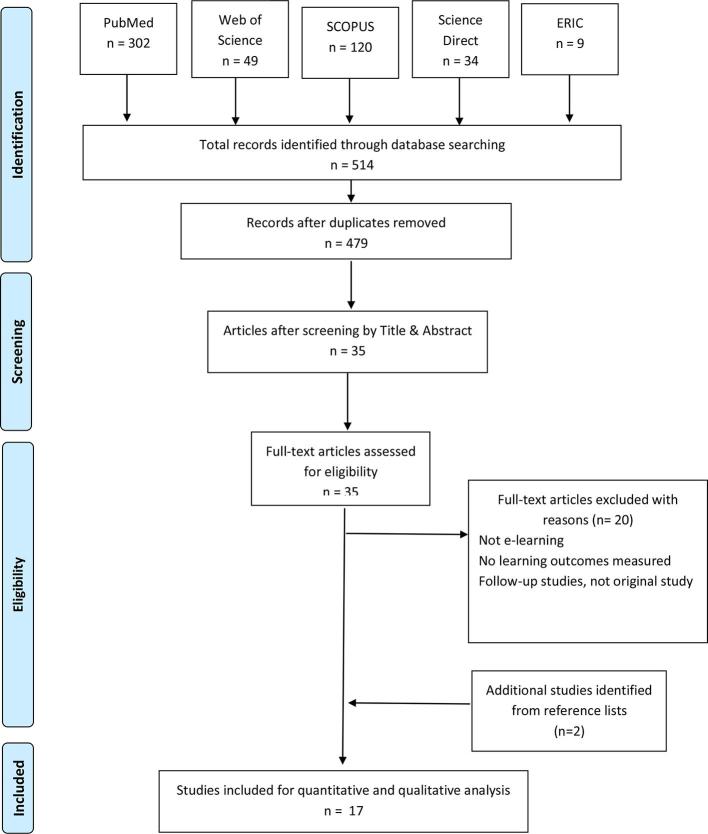

A flow chart of the process of identification, screening, eligibility and inclusion of studies is shown in Figure 2. The electronic search initially yielded 514 hits, of which 35 articles were duplicates. By applying the inclusion and exclusion criteria detailed above, the titles and abstracts of the remaining 479 articles were screened and 35 were found to be potentially eligible. The full texts of these studies were then examined in detail, and 20 studies were excluded (Table 3). In addition, 2 studies were added from hand-searching. Finally, 17 studies were included for analysis of outcomes data and classification of the level of knowledge undertaken.
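The selection counts reported above can be checked with simple arithmetic. The sketch below only restates the numbers given in the text; the variable names are illustrative.

```python
# Counts as reported in the study selection paragraph.
hits = 514                   # records returned by the electronic search
duplicates = 35              # duplicate records removed
potentially_eligible = 35    # retained after title and abstract screening
excluded_full_text = 20      # excluded after full-text assessment (Table 3)
hand_searched = 2            # added from hand-searching

screened = hits - duplicates
included = potentially_eligible - excluded_full_text + hand_searched

print(screened, included)
```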

Figure 2.

Workflow of the numbers of studies identified, screened and those meeting eligibility and included in the evaluation.

Table 3.

Excluded articles and reasons for exclusion

| First Author, Date | Reason for exclusion | First Author, Date | Reason for exclusion | First Author, Date | Reason for exclusion |

| Chang et al., 201711 | 2 | Kumar et al., 201212 | 1 | Tanimoto et al., 200613 | 4 |

| Eraso et al., 200214 | | Lee et al., 200615 | | van der Stelt et al., 200816 | 4 |

| Farkhondeh et al., 201517 | 4 | Ludlow et al., 200018 | 4 | Wenzel et al., 199719 | 3 |

| Fleming et al., 200320 | 4 | Ludwig et al., 201621 | 4 | Wenzel et al., 200222 | 3 |

| Foster et al., 201123 | 2 & 4 | Miller et al., 199824 | 1 | Wu et al., 200725 | 2 |

| Gonzalez et al., 201626 | 2 | Nilsson et al., 201127 | 1 | Wu et al., 201028 | 2 |

| Kavadella et al., 200729 | 1 | Ramesh et al., 201630 | 2 | | |

1 = Not e-learning; 2 = Outcome measures not identified or no evaluation of learning approach; 3 = Follow-up-studies, not original study; 4 = not clear study design or development of tool not applied directly to dental undergraduate students.

Intervention designs and reporting

The mode of e-learning interventions varied across the included studies. The types of interventions, study designs and learning outcome measures reported in these studies are shown in Table 4. Seven out of 17 studies focused on the use of an e-learning intervention alone, which ranged from an isolated 30 min e-learning event such as learning the bisecting angle technique31 to an online e-course over one semester on the topic of radiological anatomy.32 Ten out of 17 studies reported a blended learning design, and these designs were also diverse. They ranged from a single e-learning intervention, such as a 90 min activity on the use of CBCT software inserted in an existing traditional course on 3-dimensional imaging for a particular visualization skill,33 to “e-learning” used to supplement existing lectures over the duration of the course.34–36 In another study, the blended group had the slides and notes from the weekly lectures made available online to replace the traditional lectures.37 The different types of “e-learning” interventions used in the included studies are shown in Table 5.

Table 4.

Types of interventions, study designs and learning outcome measures reported in included studies

| Studies | Intervention design (* = e-learning, # = blended learning) | Study design | Quantitative outcomes (p value or mean) | Qualitative outcomes (p value or mean) |

| Al-Rawi, 2007 | Online interactive assessment modules on “basics” of CBCT* | Split cohorts | Assessment outcomes—no significant difference (p = 0.14) | “Attitude”—“positive” |

| Busanello, 2015 | 3 e-learning sessions of 110 digitally altered images for recognition and diagnosis of changes* | Split cohorts | Significant writing (p < 0.004) and practice (p < 0.003) test results | Preference—(mean = 90.5%) |

| Cruz, 2014 | “e-course”: digital periapical images with texts and questions to evaluate the maxillofacial anatomy* | Two cohorts | Assessment outcomes —no significant difference (p > 0.05) | “Satisfaction”—(mean = 8.47) |

| Howerton, 2002 | 27 online interactive video clips for virtual exposing, developing and mounting dental radiographs on a manikin* | Split cohorts | Performance: no difference in quality of radiograph (p = 0.30) | “Preference” (p < 0.0001) |

| Howerton, 2004 | 27 online interactive video clips blended with 3 Power point lectures for exposing dental radiographs# | Split cohorts | Performance—no significant difference in posttest (p = 0.98) | “Preference”—(p < 0.0001) |

| Kavadella, 2012 | e-class course blended with weekly lectures for knowledge of radiological lesions# | Split cohorts | Significant post test results for knowledge (p < 0.005) | “Attitude”—“positive” (mean = 91%) |

| Meckfessel, 2011 | Online “Medical schoolbook” blended with 20 lectures on the positioning of X-ray apparatus and to obtain radiographs virtually” and knowledge of “physical basics”# | Two cohorts | Significant examination results for Knowledge grade (p < 0.001) | “Attitude”—“positive” (mean = 70%) |

| Mileman, 2003 | Computer-assisted learning for the detection of proximal caries on digitized bitewings* | Split cohorts | Significant higher sensitivity for caries detection (p = 0.005) | |

| Nilsson, 2007 | 2 self-directed sessions using software for a virtual “tube shift technique”. Cohort 1. Tutor led session with “10 cases of computerized materials” on the impact of tube positioning. Cohort 2* | Two cohorts | Cohort 1 had significantly better pre and post “proficiency and radiography test” for interpreting spatial information (p < 0.01) | |

| Nkenke, 2012 | 8 online e-learning modules blended with 8 lectures for “radiological science course” # | Split cohorts | No significant examination results for Knowledge grade (p = 0.449) | “Attitude”—“positive” (p = 0.020) |

| Nkenke, 2012 | 8 lectures followed by e-mail with MCQs and feedback on “radiological science course” # | Split cohorts | “spent more time with learning content” (p < 0.0005) | “Attitude”—“positive” (p = 0.022) |

| Silveira, 2008 | Lecture first, blended with 30 min online virtual procedures for bisecting angle technique then tested tube positioning on simulated patient and then radiograph exposure on a manikin# | Split cohorts | Significant “simulation” test grade (p < 0.01) | “more confident and better prepared” for real patient |

| Silveira, 2009 | Interactive e-learning using virtual objects, animations and quizzes to identify 28 cephalometric landmarks* | Split cohorts | Significant knowledge grade of correct landmark identification (p < 0.05) by delayed post-test | Preference—(mean = 82.5%) |

| Tan, 2009 | Lectures first, blended with 8 e-learning modules for the “radiological science course” # | Split cohorts | Significant knowledge assessment scores in examination (p < 0.01) | Perception—(p < 0.05) |

| Tsao, 2016 | Lectures first, blended with 5 e-learning modules for the diagnosis of interproximal caries# | Split cohorts | No significant difference assessment scores in diagnostic accuracy (p = 0.45) | Perception—(mean = 62.5%) |

| Vuchkova, 2011 | Use of conventional textbook on oral pathosis, blended with a seminar with 3D software on depth relationships of pathosis on panoramic radiographs # | Only one cohort | Use of 3D software did not improve outcome (p > 0.05) | “Preference”—(mean = 88%) |

| Vuchkova, 2012 | Phase 1: use of online textbook followed by online “digital tool” (first cohort) and vice versa (second cohort). Phase 2: use of conventional textbook followed by “digital tool” for the knowledge of radiographic anatomy# | Two cohorts in Phase 1, one cohort in Phase 2 | No significant difference in test scores for knowledge (p > 0.05) | “Preference” (mean = 94%) |

A two-cohort study design means that students from different semesters or years were compared. A split-cohort study design means that students from the same semester or year were divided into two groups.

Table 5.

Types of e-learning interventions used in included studies

| E-learning interventions used in included studies | Studies |

| Web-based software/platform | Cruz 2014, Meckfessel 2011, Mileman 2003, Nilsson 2007, Nkenke 2012, Vuchkova 2011 and Vuchkova 2012 |

| Interactive modules with MCQ (self-assessment tests)/quizzes/matching questions | Busanello 2015, Tsao 2016 and Silveira 2008 |

| Interactive animations with videos | Al-Rawi 2007, Howerton 2002, 2004 and Silveira 2009 |

| Narrated PP lectures | Tan 2009 |

| Online word documents/notes | Kavadella 2012 |

| Emails containing MCQs and an additional email including the correct answers | Nkenke 2012 |

The research designs of the learning interventions were diverse. Ten out of 17 studies were randomized, five studies were not randomized, and 2 studies did not have a comparison group. One study used a cross-over design to evaluate an online visual technology: an interactive digital image that allowed scrolling through superimposed images of clinical photographs to reveal the deep, labeled radiological anatomical structures.38 In another study, Nkenke39 supplemented 8 lectures by e-mailing 3 MCQs for each lecture to the intervention group; after students replied with their answers, explanations were returned to them.

There were also some shortcomings in the reporting of the studies. Tsao40 used a 5-module online program for the diagnosis of interproximal caries, which was available 2 months before access to clinics but was not compulsory. Three clinical cases were used to assess the learning gained from the modules. However, no difference was observed between the students who used the modules and those who did not. Neither the cohort design nor the participation rate for the 5 modules was clear. Of the 17 studies, 3 studies31, 41, 42 specifically mentioned that e-learning was used in class, while the remainder did not report whether it was used in class or out of class by remote access. With regard to completion of the online materials, 4 studies33, 35, 36, 39 stated that 100% of students had access to the e-learning material, while only two studies clearly reported that 100% of students actually completed the e-learning activity.35, 39 Different experimental designs were also used to evaluate knowledge outcomes. Four studies33, 37, 42, 43 did pre- and post-tests on the knowledge outcomes using an online test. However, none of these studies stated whether the questions were different or similar between the two tests, which may have resulted in a familiarity effect bias. Furthermore, examples of questions were not given, so the level of knowledge assessed could not be determined.

Learning objectives and levels of knowledge

The learning objectives of the study interventions were diverse and at different levels of knowledge. From an analysis of the learning outcomes it was possible to infer the level of knowledge or skill intended to be achieved by the intervention. Out of the 17 included studies, 8 examined the level of “knowledge”, with learning objectives ranging from identification of radiological landmarks,44 knowledge of basic radiological science,36, 38, 39 knowledge of radiological lesions,37 and learning of dento-alveolar anatomy in intraoral periapical radiographs,32 to craniofacial anatomy in CBCT.45 Four studies examined analysis or diagnosis skills in their intervention, with learning objectives ranging from diagnosis of proximal caries40, 46 and oral pathosis33 to diagnosis of changes in manipulated dental radiographs.41

There were five “performance”-related learning objectives. At the “knows how” level, two studies examined the correct virtual X-ray tube positioning with regards to obtaining proper intraoral images34 and understanding anatomical relationship changes when performing tube shift.42 At the “shows how” level, two studies related to performing long-cone paralleling technique to understand exposing, developing and mounting dental radiographs on a manikin.43, 47 While in another study, the learning objective was to perform bisecting angle simulation technique on peers but with no exposure.31

There was no apparent trend in the outcomes of knowledge or students’ attitudes with regard to the levels of knowledge examined in the interventions. For the assessment outcome measures, it was not possible to evaluate the knowledge level that was examined, as this was not consistently presented in the papers. It was therefore also not possible to determine whether the assessment was aligned to the learning outcomes.

Outcome analysis

Outcome measures—knowledge and attitudes

Quantitative outcomes were measured in all 17 studies. A total of 8 out of the 17 papers reported positive outcomes in the assessment tests, relating to knowledge, analysis, diagnosis, “knows how” and “shows how”. Qualitative outcomes were measured in 15 out of 17 studies. A total of 11 of these 15 papers demonstrated positive student-reported outcomes relating to students’ attitudes.

Student’s “knowledge”—remember/understand and analysis/evaluation/diagnosis

Here we consider the task of “performing a diagnosis” to be a cognitive task, whereas in our taxonomy “knows how” and “shows how” relate to a performance skill. Six studies examined knowledge alone as a learning outcome, and two of these reported a significant benefit using either e-learning or blended learning. Of these, Silveira44 observed a difference only in the delayed and not in the immediate post-test assessment of the e-learning group. Tan36 identified a significant difference in knowledge assessment of “basics and radiological anatomy” in the blended learning group. In the remaining four studies, no significant differences were observed between e-learning/blended learning and conventional teaching groups in knowledge outcomes.32, 35, 38, 39

Two researchers37, 45 examined learning for both knowledge and analysis or diagnosis and of these, only Kavadella37 observed a significant benefit of blended learning in knowledge outcomes. Interestingly, in both studies it appeared that analysis or diagnosis was not evaluated in the assessment outcome, meaning the assessment was not aligned to the learning objectives.

Four papers33, 40,41,46 examined analysis and/or diagnosis in the learning outcomes and from these, two studies reported significant benefits of e-learning. Busanello41 found significant benefits of e-learning for recognizing radiographic changes using digitally modified images when compared to the control groups having three 50 min lectures. In this study, there may be expected to be a testing effect of experiencing the images online before the assessment outcome measure. Mileman46 also found a significantly higher sensitivity for caries detection after using a computer-assisted learning program.

Student’s performance—“knows how” and “shows how”

Five studies31, 34, 42, 43, 47 related to “knows how” and “shows how” for dental radiography, which was assessed either online or in the moment by examiners. Two of these five studies examined “knows how” in the assessment outcome, both with significant effect: Meckfessel34 examined virtual positioning of X-ray tubes, and Nilsson42 examined spatial awareness skills in a virtual tube-shift radiographic technique. In another two split-cohort designs, researchers observed students’ performance of full mouth radiographs on manikins and found no significant difference between the e-learning and traditional lecture groups.43, 47 In the last “shows how” study,31 the students used an interactive e-learning tool (30 min) to learn and understand the virtual performance of the bisecting angle technique for periapical radiographs, compared to a control group using “phantoms”. In the performance test on manikins, the e-learning students were “significantly better” than the control group; however, the p-value was not given. No studies examined “does” as an assessment outcome on the actual performance of radiography on patients.
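The counts in the two subsections above can be tallied by level as a consistency check. This is one reading of the text rather than a table from the review: “benefit” here means that the paper reported a significant advantage for the e-learning or blended group, and the grouping labels are illustrative.

```python
# Counts transcribed from the Results text above; labels are illustrative.
OUTCOMES_BY_LEVEL = {
    "knowledge alone": {"studies": 6, "benefit": 2},
    "knowledge plus analysis/diagnosis": {"studies": 2, "benefit": 1},
    "analysis/diagnosis": {"studies": 4, "benefit": 2},
    "performance (knows how/shows how)": {"studies": 5, "benefit": 3},
}

total_studies = sum(row["studies"] for row in OUTCOMES_BY_LEVEL.values())
total_benefit = sum(row["benefit"] for row in OUTCOMES_BY_LEVEL.values())

for level, row in OUTCOMES_BY_LEVEL.items():
    print(f"{level}: {row['benefit']} of {row['studies']}")
print(f"total: {total_benefit} of {total_studies}")
```

Under this reading the totals agree with the 8 of 17 papers reported as showing positive knowledge outcomes.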

Students’ attitude

Qualitative outcomes regarding students’ attitudes toward e-learning and blended learning were measured in 15 out of 17 studies. Of these 15 studies, 13 had positive responses, with 70–95% of students approving of the intervention. Two of the 15 studies reported no significant difference between the conventional and intervention groups.

Discussion

This paper performed a systematic review to examine the nature of e-learning, whether alone or blended, for dental radiology, with a particular novel focus on analysis of the levels of “knowledge” and performance of the e-learning activity undertaken. The findings of the current study on student knowledge and student attitudes are similar to those of previous healthcare e-learning reviews.48, 49 The studies were heterogeneous in nature, and there was positive reporting on student attitudes to and satisfaction with e-learning48, 49 and support for the benefit of e-learning for knowledge outcomes.49

The main focus of this paper was to analyze the e-learning activities undertaken; from this, a new framework for evaluating “knowledge” and performance levels in these studies has evolved (Figure 1). Such a framework does not appear to have been used before, and it is recommended to help researchers and curriculum planners when designing e-learning activities. Using this approach, a focused evaluation of the outcomes mapped to the “knowledge” and performance levels will allow a more refined understanding of the nature and benefit of e-learning. In particular, the differentiation of knowledge into lower and higher levels (Figure 1) will allow course designers and researchers to consider the level of learning being undertaken and the best way to teach each level, as they are quite different. This in turn will affect teaching and assessment approaches. The detailed examination of these two levels of knowledge will allow more meaningful insights for researchers so that they may be able to answer how, when, why and where e-learning is better.50

A number of limitations were found in the majority of the studies examined. There was often a lack of reported detail on the type and nature of the learning content, the e-learning interventions and the assessment outcomes undertaken. This has been reported previously.51 In addition, no studies aligned the learning objectives and e-learning content with the method of assessment. This means we do not clearly know whether what was planned was taught and whether this was specifically and appropriately assessed. Furthermore, only a few of the e-learning interventions reported how many students completed the learning activity.39, 43, 47 Thus, poor compliance with e-learning, in which students did not participate, may account for the studies that observed no difference between the test groups. It is therefore possible that the benefits of e-learning are under-reported. These anomalies in study reporting make comparison of outcomes between the studies included in the present review difficult or meaningless. The reporting of details in e-learning studies therefore needs to be more meticulous, to allow understanding not only of what was undertaken but also of the content and presentation of the e-learning experience and how it was assessed. In particular, the assessment instrument used should be presented with examples so that the level of knowledge or performance evaluated can be understood. The proposed approach is first to define the learning objectives for the course and choose the content to be taught to support them. Then, using the proposed framework, the content is mapped to the “knowledge” and performance levels. After this, the most suitable mode of delivery for the content is chosen, i.e. e-learning or face-to-face instruction, and finally the “knowledge” and performance levels are mapped to the appropriate assessment method.

In a previous literature review on undergraduate oral radiology education, it was concluded that e-learning was more properly used as a support to traditional education and not as a substitute for it.49 However, it could be argued that this depends on which knowledge level or skill set is the goal of the learning; for example, visual diagnosis skills may be better learnt using online learning, testing and automated feedback. Conversely, when considering the performance level of radiography, one should question whether e-learning alone is a suitable tool for learning performance-level psychomotor activities.

In an evaluation of e-learning in undergraduate medical radiology education, the nature and characteristics of e-learning were evaluated according to the levels of Kirkpatrick’s learning model.52 Level 1 demonstrated good support for learner satisfaction, and level 2, for learning outcomes, was generally positive although not as strong as level 1. However, the higher levels (3 and 4), student practice performance and enhanced patient health outcomes, were not supported by the evidence found by the authors. This outcome is also supported by a systematic review specifically examining the effect of e-learning on clinician behavior and patient outcomes.51 However, the limited evidence for levels 3 and 4 is not surprising, as online or e-learning activities are currently not designed to replace the learning of certain skill sets such as psychomotor skills or communication skills. One may therefore question why this teaching methodology should be evaluated for an outcome to which it is not suited. We may therefore be able to deduce that e-learning can be considered suitable for “knowledge”, including procedural performance knowledge, but not for actual patient practice.

In a meta-analysis of health professions education, it was unsurprisingly shown that internet-based learning had large positive effect sizes when compared with no intervention.53 However, when the authors compared e-learning with “traditional” methods, the outcome effects were heterogeneous, with some studies favoring e-learning and some favoring traditional methods. The overall differences between e-learning and traditional formats were small, and the authors reported that computer-assisted instruction is neither inherently superior nor inferior to traditional methods. This was also observed in the current study. The review authors raised the question of what it is about the design of the studies that favored e-learning over traditional methods and vice versa; they also proposed that future research should compare different internet-based interventions and clarify “how” and “when” to use e-learning effectively. The current study supports these observations.

The current study observed diverse approaches to e-learning and blended learning, as has been reported in a systematic review of blended learning in which none of the interventions were alike.54 This makes comparisons between studies difficult or even impossible, and reinforces the need for more focused approaches to study design and reporting. The diverse and uncontrolled nature of blended learning research studies introduces too many confounding factors to allow meaningful comparison between them.

E-learning is here to stay. As our understanding of what it can achieve and how to integrate it into “traditional” teaching improves, so too will how and where it is implemented and the outcomes achieved. We are aware of the benefits of e-learning for learner access to content with regard to time, location and scalability. In addition, the use of “e-learning” in assessment formats, both for and of learning, allows automated marking and feedback for learners to support learning effectively and efficiently. Furthermore, the analytics possible through learning management systems will provide insights and information on individuals and groups of learners, allowing feedback to teachers, course planners and students. These analytics can also be used to create more customized learning experiences that may even be adapted to individuals’ needs. In particular, this may allow e-learning planners to consider the multiple intelligences of students (e.g. logical, intrapersonal and interpersonal) and how these can be addressed to meet the preferences and needs of learners.55

Conclusion

This paper supports previous literature on the benefit of e-learning and blended learning based on knowledge and attitude outcomes. However, from the current literature it is not possible to clearly identify how, when and in what way it is beneficial. This paper highlights the need for more focused and detailed research and reporting of e-learning to further our understanding of its benefits. A framework is proposed to help curriculum designers and researchers examine the particular levels of knowledge required and use these to set learning objectives and to select the mode of content delivery and the assessment method, so that effective e-learning content can be determined and delivered. It is proposed that such an approach will help to create more meaningful e-learning experiences and help generate an evidence base to inform better e-learning practices across all clinical disciplines.

REFERENCES

- 1. Li TY, Gao X, Wong K, Tse CS, Chan YY. Learning clinical procedures through internet digital objects: experience of undergraduate students across clinical faculties. JMIR Med Educ 2015; 1: 1–10. doi: 10.2196/mededu.3866

- 2. Donnelly P. E-learning. What is it? How to succeed at e-learning. 12th ed: The British Institute of Radiology; 2012. 5–27.

- 3. Norberg A, Dziuban CD, Moskal PD. A time‐based blended learning model. On the Horizon 2011; 19: 207–16.

- 4. Allen IE, Seaman J. Changing course: ten years of tracking online education in the United States. Babson Park, MA: The British Institute of Radiology; 2013.

- 5. Garrison DR, Kanuka H. Blended learning: Uncovering its transformative potential in higher education. The Internet and Higher Education 2004; 7: 95–105.

- 6. Bonk CJ, Graham CR. The handbook of blended learning: global perspectives, local designs. 1st ed. San Francisco: The British Institute of Radiology; 2006. 585.

- 7. Moher D, Liberati A, Tetzlaff J, Altman DG, PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med 2009; 151: 264. doi: 10.7326/0003-4819-151-4-200908180-00135

- 8. Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gøtzsche PC, Ioannidis JP, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. PLoS Med 2009; 6: e1000100. doi: 10.1371/journal.pmed.1000100

- 9. Bloom BS, Hastings JT, Madaus GF. Handbook on formative and summative evaluation of student learning. New York: The British Institute of Radiology; 1971.

- 10. Miller GE. The assessment of clinical skills/competence/performance. Acad Med 1990; 65(9 Suppl): S63–7.

- 11. Chang HJ, Symkhampha K, Huh KH, WJ Y, Heo MS, Lee SS, et al. The development of a learning management system for dental radiology education: a technical report. Imaging Sci Dent 2017; 47: 51–5.

- 12. Kumar V, Gadbury-Amyot CC. A case-based and team-based learning model in oral and maxillofacial radiology. J Dent Educ 2012; 76: 330–7.

- 13. Tanimoto H, Gröndahl HG, Gröndahl K, Arai Y. Further development of a versatile computer-assisted learning program for dental education with an exemplifying application on how to logically arrange and mount periapical and bitewing radiographs. Oral Radiology 2006; 22: 75–9.

- 14. Eraso F. The effectiveness of a web-based oral radiology education. J Dent Res 2002; 81: A347–A347.

- 15. Lee BD, Koh SH, Park HU. Comprehension of oral and maxillofacial radiology by using a E-Learning system. Int J Comput Assist Radiol Surg 2006; 1: 541.

- 16. van der Stelt PF. Better imaging: the advantages of digital radiography. J Am Dent Assoc 2008; 139: 7–13.

- 17. Farkhondeh A, Geist JR. Evaluation of web-based interactive instruction in intraoral and panoramic radiographic anatomy. J Mich Dent Assoc 2015; 97: 34–8.

- 18. Ludlow JB, Platin E. A comparison of Web page and slide/tape for instruction in periapical and panoramic radiographic anatomy. J Dent Educ 2000; 64: 269–75.

- 19. Wenzel A, Gotfredsen E. Students' attitudes towards and use of computer-assisted learning in oral radiology over a 10-year period. Dentomaxillofac Radiol 1997; 26: 132–6. doi: 10.1038/sj.dmfr.4600212

- 20. Fleming DE, Mauriello SM, McKaig RG, Ludlow JB. A comparison of slide/audiotape and web-based instructional formats for teaching normal intraoral radiographic anatomy. J Dent Hyg 2003; 77: 27–35.

- 21. Ludwig B, Bister D, Schott TC, Lisson JA, Hourfar J. Assessment of two e-learning methods teaching undergraduate students cephalometry in orthodontics. Eur J Dent Educ 2016; 20: 20–5.

- 22. Wenzel A. Two decades of computerized information technologies in dental radiography. J Dent Res 2002; 81: 590–3. doi: 10.1177/154405910208100902

- 23. Foster L, Knox K, Rung A, Mattheos N. Dental students’ attitudes toward the design of a computer-based treatment planning tool. J Dent Educ 2011; 75: 1434–42.

- 24. Miller CS, Rolph C, Lin B, Rayens MK, Rubeck RF. Evaluation of a computer-assisted test engine in oral and maxillofacial radiography. J Dent Educ 1998; 62: 381–5.

- 25. Wu M, Koenig L, Zhang X, Lynch J, Wirtz T. Web-based training tool for interpreting dental radiographic images. AMIA Annu Symp Proc 2007; 1159: 1159.

- 26. Gonzalez SM, Gadbury-Amyot CC. Using twitter for teaching and learning in an oral and maxillofacial radiology course. J Dent Educ 2016; 80: 149–55.

- 27. Nilsson TA, Hedman LR, Ahlqvist JB. Dental student skill retention eight months after simulator-supported training in oral radiology. J Dent Educ 2011; 75: 679–84.

- 28. Wu M, Zhang X, Koenig L, Lynch J, Wirtz T, Mao E, et al. Web-based training method for interpretation of dental images. J Digit Imaging 2010; 23: 493–500. doi: 10.1007/s10278-009-9223-7

- 29. Kavadella A. Comparative evaluation of the educational effectiveness of two didactic methods conventional and blended learning in teaching dental radiology to undergraduate students. Eur J Dent Educ 2007; 11: 112–24.

- 30. Ramesh A, Ganguly R. Interactive learning in oral and maxillofacial radiology. Imaging Sci Dent 2016; 46: 211–6. doi: 10.5624/isd.2016.46.3.211

- 31. Silveira H, Liedke G, Dalla-Bona R, Silveira H. Development of a graphic application and evaluation of teaching and learning of the bisecting-angle technique for periapical radiographs. Educação, Formação Tecnologias 2008; 1: 59–65.

- 32. Cruz AD, Costa JJ, Almeida SM. Distance learning in dental radiology: Immediate impact of the implementation. Brazilian Dental Science 2014; 17: 90. doi: 10.14295/bds.2014.v17i4.930

- 33. Vuchkova J, Maybury TS, Farah CS. Testing the educational potential of 3D visualization software in oral radiographic interpretation. J Dent Educ 2011; 75: 1417–25.

- 34. Meckfessel S, Stühmer C, Bormann KH, Kupka T, Behrends M, Matthies H, et al. Introduction of e-learning in dental radiology reveals significantly improved results in final examination. J Craniomaxillofac Surg 2011; 39: 40–8. doi: 10.1016/j.jcms.2010.03.008

- 35. Nkenke E, Vairaktaris E, Bauersachs A, Eitner S, Budach A, Knipfer C, et al. Acceptance of technology-enhanced learning for a theoretical radiological science course: a randomized controlled trial. BMC Med Educ 2012; 12: 18. doi: 10.1186/1472-6920-12-18

- 36. Tan PL, Hay DB, Whaites E. Implementing e-learning in a radiological science course in dental education: a short-term longitudinal study. J Dent Educ 2009; 73: 1202–12.

- 37. Kavadella A, Tsiklakis K, Vougiouklakis G, Lionarakis A. Evaluation of a blended learning course for teaching oral radiology to undergraduate dental students. Eur J Dent Educ 2012; 16: e88–95.

- 38. Vuchkova J, Maybury T, Farah CS. Digital interactive learning of oral radiographic anatomy. Eur J Dent Educ 2012; 16: e79–e87. doi: 10.1111/j.1600-0579.2011.00679.x

- 39. Nkenke E, Vairaktaris E, Bauersachs A, Eitner S, Budach A, Knipfer C, et al. Spaced education activates students in a theoretical radiological science course: a pilot study. BMC Med Educ 2012; 12: 32. doi: 10.1186/1472-6920-12-32

- 40. Tsao A, Park SE. The role of online learning in radiographic diagnosis in dental education. J Educ Train Stud 2016; 4: 189–93.

- 41. Busanello FH, da Silveira PF, Liedke GS, Arús NA, Vizzotto MB, Silveira HED, et al. Evaluation of a digital learning object (DLO) to support the learning process in radiographic dental diagnosis. Eur J Dent Educ 2015; 19: 222–8.

- 42. Nilsson TA, Hedman LR, Ahlqvist JB. A randomized trial of simulation-based versus conventional training of dental student skill at interpreting spatial information in radiographs. Simul Healthc 2007; 2: 164–9. doi: 10.1097/SIH.0b013e31811ec254

- 43. Howerton WB, Enrique PR, Ludlow JB, Tyndall DA. Interactive computer-assisted instruction vs. lecture format in dental education. J Dent Hyg 2004; 78: 10.

- 44. Silveira HL, Gomes MJ, Silveira HE, Dalla-Bona RR. Evaluation of the radiographic cephalometry learning process by a learning virtual object. Am J Orthod Dentofacial Orthop 2009; 136: 134–8. doi: 10.1016/j.ajodo.2009.03.001

- 45. Al-Rawi WT, Jacobs R, Hassan BA, Sanderink G, Scarfe WC. Evaluation of web-based instruction for anatomical interpretation in maxillofacial cone beam computed tomography. Dentomaxillofac Radiol 2007; 36: 459–64. doi: 10.1259/dmfr/25560514

- 46. Mileman PA, van den Hout WB, Sanderink GC. Randomized controlled trial of a computer-assisted learning program to improve caries detection from bitewing radiographs. Dentomaxillofac Radiol 2003; 32: 116–23. doi: 10.1259/dmfr/58225203

- 47. Howerton WB, Platin E, Ludlow J, Tyndall DA. The influence of computer-assisted instruction on acquiring early skills in intraoral radiography. J Dent Educ 2002; 66: 1154–8.

- 48. George PP, Papachristou N, Belisario JM, Wang W, Wark PA, Cotic Z, et al. Online eLearning for undergraduates in health professions: a systematic review of the impact on knowledge, skills, attitudes and satisfaction. J Glob Health 2014; 4: 010406. doi: 10.7189/jogh.04.010406

- 49. Santos GN, Leite AF, Figueiredo PT, Pimentel NM, Flores-Mir C, de Melo NS, et al. Effectiveness of E-Learning in oral radiology education: a systematic review. J Dent Educ 2016; 80: 1126–39.

- 50. Cook DA. The failure of e-learning research to inform educational practice, and what we can do about it. Med Teach 2009; 31: 158–62. doi: 10.1080/01421590802691393

- 51. Sinclair PM, Kable A, Levett-Jones T, Booth D. The effectiveness of Internet-based e-learning on clinician behaviour and patient outcomes: a systematic review. Int J Nurs Stud 2016; 57: 70–81.

- 52. Zafar S, Safdar S, Zafar AN. Evaluation of use of e-Learning in undergraduate radiology education: a review. Eur J Radiol 2014; 83: 2277–87. doi: 10.1016/j.ejrad.2014.08.017

- 53. Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Internet-based learning in the health professions: a meta-analysis. JAMA 2008; 300: 1181–96. doi: 10.1001/jama.300.10.1181

- 54. McCutcheon K, Lohan M, Traynor M, Martin D. A systematic review evaluating the impact of online or blended learning vs. face-to-face learning of clinical skills in undergraduate nurse education. J Adv Nurs 2015; 71: 255–70. doi: 10.1111/jan.12509

- 55. Pappas C. Multiple intelligences in e-learning: the theory and its impact. 2015. Available from: https://elearningindustry.com/multiple-intelligences-in-elearning-the-theory-and-its-impact.

- 56. Andrews KG, Demps EL. Distance education in the U.S. and Canadian undergraduate dental curriculum. J Dent Educ 2003; 67: 427–38.