Abstract

The evaluation, comparison, and public reporting of health care provider performance are essential to improving the quality of health care. Hospitals, as one type of provider, are often classified into quality tiers (e.g., top or suboptimal) based on their performance data for various purposes. However, potential misclassification might lead to detrimental effects for both consumers and payers. Although such risk has been highlighted by applied health services researchers, a systematic investigation of statistical approaches has been lacking. We assess and compare the expected accuracy of several commonly used classification methods: unadjusted hospital-level averages, shrinkage estimators under a random-effects model accommodating between-hospital variation, and two methods based on posterior probabilities. Assuming that performance data follow a classic one-way random-effects model with unequal sample sizes per hospital, we derive accuracy formulae for these classification approaches and gain insight into how misclassification might be affected by various factors such as the reliability of the data, the hospital-level sample size distribution, and the cutoff values between quality tiers. The case of binary performance data is also explored using Monte Carlo simulation. We apply the methods to real data and discuss the practical implications.

Keywords: Bayesian, hospital compare data, hospital profiling, mixture distribution, National Hospital Ambulatory Medical Care Survey, sensitivity, specificity

1. Introduction

The assessment and reporting of hospital quality is increasingly important in quality improvement efforts as the US health care system undergoes major reform. Because quality of care is an abstract and multidimensional construct that cannot be measured directly, measurable indicators are used to characterize three dimensions of quality of care: structure, process, and outcome [1]. Structural measures are ‘hardware’-type characteristics, such as the presence of residency programs in hospitals. Outcome measures refer to responses that characterize patient health status following the care received, such as the 30-day hospital mortality rate after surgery. Process measures are meant to reflect the extent to which a provider complies with evidence-based guidelines. For example, we use hospitals’ compliance rates for providing influenza vaccination to patients diagnosed with pneumonia to illustrate the proposed methods (Section 5). In contrast to outcome measures, profiling hospitals on their process measures does not require risk adjustment [2] when restricted to the sample of patients eligible for the given procedure. This enables us to build the analytical framework on a simple two-stage Gaussian model (Section 2.1); extensions of our methods can be applied to outcome measures (Section 6).

Hospital profiling involves comparing a hospital’s performance data with a normative or community standard [3]. A major profiling interest is to classify hospitals into quality tiers (e.g., top or suboptimal) on the basis of their performance data [4]. This has several important policy implications. First, such information could be used by patients, employers, and insurance plans to make better choices of hospitals from which to buy services, thus improving hospital performance indirectly through the market. Second, a hospital’s quality tier has been used as the basis for pay-for-performance initiatives, which in theory improve the quality of providers through direct economic incentives [5]. These initiatives use retrospectively collected information on performance measures to provide financial rewards to hospitals that show high quality based on these data. For example, the Centers for Medicare and Medicaid Services (CMS) initiated in 2005 the Premier Hospital Quality Incentive Demonstration project, which paid bonuses totaling $8.69m to 115 top-performing institutions (those in the upper decile) that voluntarily participated in the project [6]. In such projects, hospital-specific compliance rates for some standard treatments were used as performance indicators. For example, a high tier can be defined as hospitals having a compliance rate higher than an absolute threshold (e.g., 0.9) or a relative threshold (e.g., the 90%-tile of the sample).

There are two main analytical approaches to quantifying hospital quality from performance data collected over patient samples. One popular approach is to use the sample average of the chosen quality indicator across patients (or procedures) at the hospital level. We refer to it as a direct estimator because it is based only on the hospital-specific sample data and does not involve data from other hospitals. We borrow this term from the small area estimation literature in surveys [7], treating a hospital as a ‘small area’ (cluster or unit). On the other hand, motivated by the multilevel structure of hospital performance data, an alternative approach uses a shrinkage estimator obtained under a random-effects (hierarchical or multilevel) model, which assumes that hospital-specific ‘random effects’ are drawn from a common distribution. Such models are particularly appealing because they account for both within-hospital sampling variation and between-hospital variation. The literature has well documented the benefits of using shrinkage estimators over direct estimators; see Section 2.1 for more details.

Given predetermined thresholds (cutoff points), hospital classification can be conducted using either direct or shrinkage estimators. Related to the use of shrinkage estimators, another approach with a more Bayesian flavor uses posterior probabilities for classification [8]. However, all of these approaches carry a risk of misclassification, because hospital quality is an unknown quantity under statistical models and the uncertainty associated with its estimate can lead to misclassification. Such risk is often ignored in practice, yet it has important implications for consumers’ decisions and for targeting quality improvement efforts [9]. For example, misclassifying hospitals with suboptimal care into the high-quality tier might lead to detrimental effects for patients who seek the best care or result in economic losses for pay-for-performance programs. Although this issue has been identified in applied health services research [10], there is little statistical literature systematically studying the classification accuracy of profiling methods.

Recently, Adams et al. [11] pointed out the connection between the reliability of provider performance data and the risk of misclassification, showing that classification based on less reliable data is more error-prone. However, several methodological questions remain unanswered. One is whether using advanced methods (e.g., shrinkage estimators or posterior probabilities) rather than direct estimators leads to improved accuracy. Given the increasing popularity of hierarchical Bayesian models for provider profiling [12], the answer to this question is critical to both practitioners and methodologists. In addition, the impact of several key factors, including the reliability of the data, the distribution of hospital-level sample sizes, and the cutoff point between quality tiers, has not been well characterized. Elucidating these patterns might also have important practical implications. For example, patient volume often varies across hospitals, and in practice, hospitals with small sample sizes are often excluded to obtain reliable profiling estimates. Yet the decision rule for the sample size cutoff is often ad hoc (e.g., fewer than 30) and does not depend on the actual data, so a more rigorous, data-driven procedure is preferable. Several of these issues were raised in the discussion of [11] ([13, 14]).

These concerns call for more methodological studies of misclassification in hospital profiling. Monte Carlo simulation assessments have been conducted in limited cases [15, 16], yet systematic studies identifying general patterns are lacking. We assess and compare the classification accuracy of conventional and advanced approaches both analytically and numerically. The remainder of the paper is organized as follows. In Section 2, we introduce a one-way unbalanced random-effects model for hospital performance data and present the classification methods. In Section 3, we derive the formulae of accuracy measures for these methods under the model and investigate some of their properties. In Section 4, we provide numerical illustrations using both real examples and simulations, and include some recommendations for practitioners. In Section 5, we briefly study the case of binary performance data using simulation-based strategies. Finally, in Section 6, we discuss limitations of our approach and propose a basis for future work.

2. Statistical background

2.1. One-way normal random-effects model for hospital performance data

For hospital i = 1, …, M, we consider a two-level normal random-effects model:

$$ y_{ij} \mid \mu_i \sim N(\mu_i, \sigma^2), \qquad \mu_i \sim N(\mu, \tau^2), \qquad (1) $$

where yij denotes the performance measurement of patient j at hospital i, j = 1, …, ni, ni is the sample size for hospital i, μi is the unknown quality of hospital i, σ2 is the within-hospital variation, and τ2 is the between-hospital variation. When the ni’s differ, this model is also often referred to as an unbalanced one-way analysis of variance model. An example of a continuous performance measure yij might be door-to-balloon time for heart attack patients who require percutaneous coronary intervention, or patient waiting time in hospital emergency departments (EDs) (Section 4).

Despite its simplicity, model (1) can be viewed as a basic characterization of hospital performance and is frequently used as a statistical framework for research on profiling [17]. Without covariates, it is particularly suitable for process measures. Its extensions and generalizations include generalized mixed-effects models for categorical outcomes, risk-adjustment models including patient-level confounders for outcome measures [2], and multivariate generalized mixed-effects models for multiple performance measures [18]. A survey of profiling models can be found in [12] and the references therein.

We focus on the expected accuracy of classification methods under model (1). For better illustration, we use an alternative form of model (1):

$$ \bar{y}_i \mid \mu_i \sim N(\mu_i, \sigma^2/n_i), \qquad \mu_i \sim N(\mu, \tau^2), \qquad (2) $$

where $\bar{y}_i = n_i^{-1}\sum_{j=1}^{n_i} y_{ij}$ is the sample mean for hospital i. Without loss of generality, we assume that a hospital with larger μi has better quality under model (2). We view the collection of sample sizes {ni} as fixed quantities for a particular dataset. For ease of derivation, we assume that μ, τ2, and σ2 are known in model (2), although in practice these parameters are unknown and must be estimated from model (1) with actual data. We further assume that their estimators, under either classic or Bayesian estimation strategies, are consistent under some regularity conditions. We also consider a Bayesian version of model (1) in which μ, τ2, and σ2 are treated as random variables; see Section 3 for more details.

Under model (2), the direct estimator of μi is $\bar{y}_i$, and the shrinkage estimator is $\hat{\mu}_i^{SHR} = B_i\bar{y}_i + (1 - B_i)\mu$, where Bi = τ2/(τ2 + σ2/ni) is the shrinkage factor [19], which is also the reliability measure (Section 2.3). Theoretical arguments [20] and empirical studies [21] have demonstrated the improved average point predictive ability of shrinkage estimators over direct estimators. In addition, shrinking the direct estimator toward the overall average means that the shrinkage prediction adjusts for regression to the mean [22,23]. The weighting scheme of the shrinkage estimator also increases precision for units with small ni (i.e., ‘stabilizing’). However, to our knowledge, less research has examined the advantages, if any, of using shrinkage estimators (and posterior probabilities) for classifying clusters/units. We investigate this topic in the context of hospital classification.
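The two estimators can be compared with a short simulation. The Python sketch below (standard library only) treats μ, τ², and σ² as known, as in model (2), and estimates the average squared error of the direct estimator and of the shrinkage estimator Bi ȳi + (1 − Bi)μ over simulated datasets. The hospital sample sizes, the seed, and the helper names `estimators` and `compare_mse` are hypothetical illustrations, not the authors' code or data.

```python
import random


def estimators(ybar, n, mu, tau2, sigma2):
    """Direct and shrinkage estimates of mu_i under model (2), parameters known."""
    direct = list(ybar)
    shrink = []
    for yb, ni in zip(ybar, n):
        b = tau2 / (tau2 + sigma2 / ni)  # shrinkage factor B_i (= reliability)
        shrink.append(b * yb + (1.0 - b) * mu)
    return direct, shrink


def compare_mse(n, mu, tau2, sigma2, reps=200, seed=0):
    """Average squared error of both estimators over simulated datasets."""
    rng = random.Random(seed)
    sse_dir = sse_shr = 0.0
    for _ in range(reps):
        # True quality mu_i ~ N(mu, tau2); hospital mean ybar_i | mu_i ~ N(mu_i, sigma2/n_i)
        mus = [rng.gauss(mu, tau2 ** 0.5) for _ in n]
        ybar = [rng.gauss(m, (sigma2 / ni) ** 0.5) for m, ni in zip(mus, n)]
        d, s = estimators(ybar, n, mu, tau2, sigma2)
        sse_dir += sum((x - m) ** 2 for x, m in zip(d, mus))
        sse_shr += sum((x - m) ** 2 for x, m in zip(s, mus))
    total = reps * len(n)
    return sse_dir / total, sse_shr / total


# Hypothetical hospital sample sizes; parameter values as used in Section 4.
sizes = [1, 5, 15, 50, 150] * 20
mse_dir, mse_shr = compare_mse(sizes, mu=3.48, tau2=0.29, sigma2=2.31)
print(f"MSE direct = {mse_dir:.3f}, MSE shrinkage = {mse_shr:.3f}")
```

Under model (2) the expected squared errors are σ²/ni for the direct estimator and Biσ²/ni for the shrinkage estimator, so the two simulated averages should roughly track the hospital averages of these quantities.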

2.2. Classification methods

For simplicity, we consider only two tiers (‘high’ and ‘suboptimal’), although the methods can be readily extended to more than two tiers. In a straightforward manner, we compare the point estimate $\hat{\mu}_i$ of the quality against some threshold to decide which tier hospital i belongs to: it is included in the high tier if $\hat{\mu}_i$ is greater than the threshold and in the other tier otherwise. The cutoff value can be either absolute or relative. We focus on using a relative threshold for classification. That is, for a given c ∊ (0, 1) (e.g., c = 0.9), the goal is to select 100(1 − c)% of the M hospitals into the top tier. The primary reason for using a relative threshold is that all methods then identify an identical proportion of top-tier hospitals, so that the methods can be compared on common ground. Practically, this also aligns with ‘pay-for-performance’ initiatives, which award hospitals whose performance estimates place them in the top 100(1 − c)% of the sample. We include a brief study of classification using an external threshold in the Appendix.

The following are two classification methods corresponding to using the direct and shrinkage estimators:

Direct method (DIR): Hospital i is included in the high tier if $\bar{y}_i > \{\bar{y}_i\}_{100c}$, where $\{\bar{y}_i\}_{100c}$ denotes the 100c%-tile of the collection $\{\bar{y}_i\}$.

Shrinkage method (SHR): Hospital i is included in the high tier if $\hat{\mu}_i^{SHR} > \{\hat{\mu}_i^{SHR}\}_{100c}$ [13].
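A minimal sketch of DIR and SHR with a relative threshold follows. It assumes known μ, τ², and σ² and implements "above the 100c%-tile" as "among the round(M(1 − c)) largest scores", which is only one of several percentile conventions; the function names are hypothetical. The toy numbers show how a hospital whose high sample mean comes from a single patient can be selected by DIR but not by SHR.

```python
def top_tier(scores, c):
    """Indices of the round(M*(1-c)) hospitals with the largest scores.

    One convention for 'score above the empirical 100c%-tile'; other software
    handles ties and percentile definitions differently.
    """
    m = len(scores)
    k = int(round(m * (1.0 - c)))
    ranked = sorted(range(m), key=lambda i: scores[i], reverse=True)
    return set(ranked[:k])


def classify_dir(ybar, c):
    """DIR: rank hospitals by their sample means."""
    return top_tier(ybar, c)


def classify_shr(ybar, n, mu, tau2, sigma2, c):
    """SHR: rank hospitals by B_i * ybar_i + (1 - B_i) * mu."""
    shrunk = []
    for yb, ni in zip(ybar, n):
        b = tau2 / (tau2 + sigma2 / ni)
        shrunk.append(b * yb + (1.0 - b) * mu)
    return top_tier(shrunk, c)


# Toy example (hypothetical numbers): hospital 0 has the highest mean but n = 1.
ybar = [6.0, 4.2, 3.5, 3.0]
n = [1, 200, 200, 200]
print(classify_dir(ybar, c=0.75))                       # {0}
print(classify_shr(ybar, n, 3.48, 0.29, 2.31, c=0.75))  # {1}
```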

As both DIR and SHR are based solely on point estimates of the μi’s, several proposals incorporate the uncertainty of the point estimates. From a Bayesian perspective, Christiansen and Morris [8] proposed basing the decision on the posterior probability that μi exceeds some threshold, that is, Pr(μi > Cprob|Y) > Pprob, where Cprob is a threshold on the performance scale and Pprob is the cutoff for the posterior probability. Austin and Brunner [24] justified this approach from the perspective of Bayesian decision theory. However, there are two subtly different methods, depending on whether Cprob or Pprob is given:

Posterior probability method I (PROB1): Pprob is given, and this requires that every selected top-tier hospital has at least a Pprob (posterior) chance of exceeding the threshold Cprob. For hospital i, the probability that μi exceeds the 100(1 − Pprob)%-tile of its posterior distribution is Pprob. If we choose Cprob as the 100c%-tile of the collection of these percentiles across all hospitals, then exactly 100(1 − c)% of the M hospitals are selected into the top tier [18]. Typical choices of Pprob are greater than 0.5 (e.g., 0.9).

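Posterior probability method II (PROB2): Cprob is given, and Pprob = {Pr(μi > Cprob|Y)}100c. Under model (2), a natural choice is $C_{prob} = \mu + \tau\Phi^{-1}(c)$, the 100c%-tile of the population distribution N(μ, τ2) of the μi’s, where Φ is the standard normal cumulative distribution function [25]. Practical choices of Cprob can also be based on expert opinion [8].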
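With μ, τ², and σ² treated as known, the posterior of μi under model (2) is normal with mean Biȳi + (1 − Bi)μ and variance Biσ²/ni (standard normal-normal conjugacy), so both posterior probability methods can be sketched without MCMC. The code below is an illustration under that assumption, with hypothetical function names and the same simple top-k rule for percentiles. PROB1 ranks hospitals by the posterior 100(1 − Pprob)%-tile, and PROB2 ranks them by Pr(μi > Cprob | Y), defaulting here to Cprob = μ + τΦ−1(c), the 100c%-tile of N(μ, τ²).

```python
from statistics import NormalDist

STD = NormalDist()


def posterior(ybar_i, n_i, mu, tau2, sigma2):
    """Posterior of mu_i given ybar_i under model (2) with known mu, tau2, sigma2."""
    b = tau2 / (tau2 + sigma2 / n_i)
    return NormalDist(b * ybar_i + (1.0 - b) * mu, (b * sigma2 / n_i) ** 0.5)


def top_k(scores, c):
    """Indices of the round(M*(1-c)) largest scores."""
    k = int(round(len(scores) * (1.0 - c)))
    return set(sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k])


def classify_prob1(ybar, n, mu, tau2, sigma2, c, p_prob=0.9):
    """PROB1: rank by the posterior 100(1 - p_prob)%-tile h_i (C_prob = {h_i}_100c)."""
    h = [posterior(y, m, mu, tau2, sigma2).inv_cdf(1.0 - p_prob) for y, m in zip(ybar, n)]
    return top_k(h, c)


def classify_prob2(ybar, n, mu, tau2, sigma2, c, c_prob=None):
    """PROB2: rank by Pr(mu_i > c_prob | Y)."""
    if c_prob is None:
        c_prob = mu + tau2 ** 0.5 * STD.inv_cdf(c)
    # Rank on the z-scale: Phi is increasing, so the order equals that of the
    # posterior probabilities while avoiding ties when they round to 0 or 1.
    z = []
    for y, m in zip(ybar, n):
        post = posterior(y, m, mu, tau2, sigma2)
        z.append((post.mean - c_prob) / post.stdev)
    return top_k(z, c)


ybar, n = [6.0, 4.2, 3.5, 3.0], [1, 200, 200, 200]  # hypothetical toy data
print(classify_prob1(ybar, n, 3.48, 0.29, 2.31, c=0.75))
print(classify_prob2(ybar, n, 3.48, 0.29, 2.31, c=0.75))
```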

For all methods, we use sensitivity (the probability of assigning a true top-tier hospital into the top tier) and specificity (the probability of assigning a true suboptimal-tier hospital into the suboptimal tier) as the accuracy measures. This corresponds to a null hypothesis stating that the hospital of interest belongs to the suboptimal tier. These two measures suffice for our purpose because the typical measures of misclassification, the false positive rate and the false negative rate, are one minus specificity and one minus sensitivity, respectively.

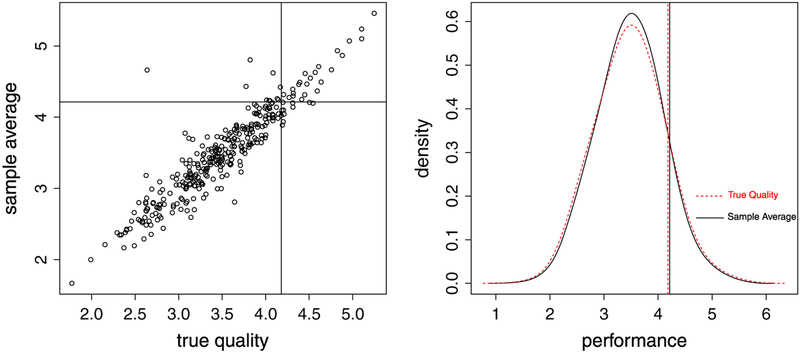

Misclassification can be readily illustrated by simulation. For example, we set μ = 3.48, τ2 = 0.29, and σ2 = 2.31 (all estimates from the real data in Section 4) and use the sample sizes {ni} of the data to generate {μi} and $\{\bar{y}_i\}$ from model (2). Suppose our aim is to identify the top 10% of the 329 hospitals in the data. In one simulation, the true top-tier hospitals are those for which $\mu_i > \{\mu_i\}_{90}$, whereas the top performers identified by DIR are those for which $\bar{y}_i > \{\bar{y}_i\}_{90}$. The sensitivity is therefore the proportion of true top-tier hospitals that also satisfy $\bar{y}_i > \{\bar{y}_i\}_{90}$. Figure 1 illustrates the misclassification using the simulated data. The left panel is a scatter plot of {μi} versus $\{\bar{y}_i\}$, divided into four regions by {μi}90 and $\{\bar{y}_i\}_{90}$. The sensitivity is the number of points in the top right region divided by the number of points in the right region. The right panel shows the smoothed marginal density plots of {μi} and $\{\bar{y}_i\}$ and their respective 90%-tiles, which are deliberately overlaid to show the difference between the two distributions and between their cutoff values.

Figure 1.

Left panel: scatter plot of {μi} versus $\{\bar{y}_i\}$; the sample space is divided into four regions by {μi}90 and $\{\bar{y}_i\}_{90}$. Right panel: smoothed density plots of {μi} and $\{\bar{y}_i\}$ and their respective 90%-tiles. Dashed line: true quality {μi}; solid line: sample average $\{\bar{y}_i\}$.
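The single-simulation illustration can be reproduced in spirit with the following Python sketch. Because the NHAMCS sample sizes are not reproduced here, the sizes are drawn at random as a stand-in (an assumption), so the numbers will not match Figure 1. Sensitivity is computed as the share of true top-tier hospitals (the largest 10% of the μi) that DIR also places in its top 10%; `top_set` and `dir_sensitivity` are hypothetical names.

```python
import random


def top_set(values, c):
    """Indices of the round(M*(1-c)) largest values (a simple top-k convention)."""
    k = int(round(len(values) * (1.0 - c)))
    return set(sorted(range(len(values)), key=lambda i: values[i], reverse=True)[:k])


def dir_sensitivity(n, mu, tau2, sigma2, c=0.9, reps=1, seed=0):
    """Monte Carlo sensitivity of DIR for identifying the top 100(1-c)% of hospitals."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(reps):
        mus = [rng.gauss(mu, tau2 ** 0.5) for _ in n]                          # true quality
        ybar = [rng.gauss(m, (sigma2 / ni) ** 0.5) for m, ni in zip(mus, n)]  # model (2)
        truth, chosen = top_set(mus, c), top_set(ybar, c)
        total += len(truth & chosen) / len(truth)  # true top-tier hospitals also chosen
    return total / reps


rng = random.Random(1)
n = [rng.randint(1, 200) for _ in range(329)]  # stand-in sizes, not the NHAMCS data
print(dir_sensitivity(n, 3.48, 0.29, 2.31))            # one simulated dataset
print(dir_sensitivity(n, 3.48, 0.29, 2.31, reps=200))  # average over datasets
```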

2.3. Past literature on reliability and misclassification

In the context of physician cost profiling, Adams et al. [11] suggested using the measure of reliability, the between-provider variance divided by the sum of between-provider and within-provider variance, to gauge the risk of misclassification. Performance data with higher reliability are expected to have better classification accuracy.

A technical argument is sketched here. For hospital i, model (2) implies that

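$$ \bar{y}_i \sim N(\mu, \tau^2 + \sigma^2/n_i), \qquad (3) $$

$$ (\bar{y}_i, \mu_i) \sim BVN(\mu, \mu, \tau^2 + \sigma^2/n_i, \tau^2, \rho_i), \qquad (4) $$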

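where BVN(μ, μ, τ2 + σ2/ni, τ2, ρi) denotes a bivariate normal distribution with identical means μ, marginal variances τ2 + σ2/ni and τ2, and correlation coefficient $\rho_i = \tau/\sqrt{\tau^2 + \sigma^2/n_i} = B_i^{1/2}$, the square root of the reliability measure. When all hospitals share the same sample size n (so that ρi is common), the 100c%-tiles of {μi} and $\{\bar{y}_i\}$ are approximately $\mu + \tau\Phi^{-1}(c)$ and $\mu + (\tau^2 + \sigma^2/n)^{1/2}\Phi^{-1}(c)$ for large M, and therefore both cutoffs correspond to the same standardized value Φ−1(c).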

By using DIR, the expected sensitivity of identifying the top 100(1 − c)% of hospitals is $\Phi_2\{-\Phi^{-1}(c), -\Phi^{-1}(c); \rho_i\}/(1-c)$, and the specificity is $\Phi_2\{\Phi^{-1}(c), \Phi^{-1}(c); \rho_i\}/c$. In both formulae, Φ() denotes the cumulative distribution function of a standard univariate normal, and Φ2() denotes the cumulative distribution function of a standard bivariate normal (Z1, Z2) with correlation coefficient ρi. Given a fixed c, Φ2 is a monotonically increasing function of ρi [26]; hence both sensitivity and specificity increase with higher reliability.
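For the equal-sample-size case, these two expressions can be evaluated directly. The sketch below computes Φ2 with Simpson's rule on the one-dimensional integral Φ2(a, b; ρ) = ∫ from −∞ to b of φ(y) Φ{(a − ρy)/(1 − ρ²)^{1/2}} dy, a stand-in for a dedicated routine such as those in the R package mvtnorm; the helper names and the truncation of the integral at −8 are assumptions of this sketch.

```python
from statistics import NormalDist

STD = NormalDist()


def phi2(a, b, rho, steps=800):
    """P(Z1 <= a, Z2 <= b) for a standard bivariate normal with |rho| < 1.

    Simpson's rule on int_{-inf}^{b} phi(y) * Phi((a - rho*y)/sqrt(1-rho^2)) dy,
    with the lower limit truncated at -8 (negligible mass below it).
    """
    lo = -8.0
    if b <= lo:
        return 0.0
    s = (1.0 - rho * rho) ** 0.5
    h = (b - lo) / steps  # steps must be even for Simpson's rule
    total = 0.0
    for k in range(steps + 1):
        y = lo + k * h
        w = 1 if k in (0, steps) else (4 if k % 2 else 2)
        total += w * STD.pdf(y) * STD.cdf((a - rho * y) / s)
    return total * h / 3.0


def equal_n_accuracy(n, tau2, sigma2, c=0.9):
    """DIR sensitivity and specificity when every hospital has sample size n."""
    rho = (tau2 / (tau2 + sigma2 / n)) ** 0.5  # square root of reliability
    q = STD.inv_cdf(c)
    sen = phi2(-q, -q, rho) / (1.0 - c)
    spe = phi2(q, q, rho) / c
    return sen, spe


for n in (1, 10, 100):
    print(n, equal_n_accuracy(n, tau2=0.29, sigma2=2.31))
```

As ρ approaches 0 the sensitivity approaches 1 − c (classification no better than chance), and both measures rise as n, and hence ρ, grows.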

However, the aforementioned reasoning implicitly assumes an equal sample size per hospital, that is, ni = n for i = 1, …, M. Less is known about the accuracy of the considered methods when sample sizes differ across hospitals, as is routinely the case in practice. Section 3 addresses these questions.

3. Accuracy of classification approaches

3.1. Linear classifiers

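Under model (2), the sensitivity from DIR is

$$ SEN_{DIR} = \frac{\Pr(\bar{y}_i > \{\bar{y}_i\}_{100c},\ \mu_i > \{\mu_i\}_{100c})}{\Pr(\mu_i > \{\mu_i\}_{100c})}. $$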

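The denominator is 1 − c. For the numerator, we treat $\{\bar{y}_i\}$ as independent realizations of a univariate random variable, denoted by $\bar{y}^*$. The key is to obtain its marginal distribution (the smoothed marginal density of $\{\bar{y}_i\}$ in the right panel of Figure 1) and its joint distribution with μi, that is, the distribution of $(\bar{y}^*, \mu^*)$. Because $\bar{y}_i \sim N(\mu, \tau^2 + \sigma^2/n_i)$ by Eq. (3), and each realization comes from hospital i with probability 1/M, $\bar{y}^*$ follows a mixture of normals, $\bar{y}^* \sim M^{-1}\sum_{i=1}^{M} N(\mu, \tau^2 + \sigma^2/n_i)$. Similarly, $(\bar{y}^*, \mu^*) \sim M^{-1}\sum_{i=1}^{M} BVN(\mu, \mu, \tau^2 + \sigma^2/n_i, \tau^2, \rho_i)$, a mixture of bivariate normals.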

The aforementioned reasoning can be generalized to a class of linear classifiers $\hat{\mu}_i^{LIN} = a_i\bar{y}_i + b_i$, where ai and bi are functions of μ, τ2, σ2, and ni. This is motivated by the fact that both DIR and SHR are linear combinations of $\bar{y}_i$ and μ. Later, all four classification methods are shown to be special cases of this linear classifier. Viewing $\{\hat{\mu}_i^{LIN}\}$ as independent realizations of a univariate random variable $\hat{\mu}^{LIN*}$, under model (2) we have

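$$ \hat{\mu}^{LIN*} \sim \frac{1}{M}\sum_{i=1}^{M} N\{a_i\mu + b_i,\ a_i^2(\tau^2 + \sigma^2/n_i)\}, \qquad (5) $$

$$ (\hat{\mu}^{LIN*}, \mu^*) \sim \frac{1}{M}\sum_{i=1}^{M} BVN\{a_i\mu + b_i,\ \mu,\ a_i^2(\tau^2 + \sigma^2/n_i),\ \tau^2,\ \rho_i\}, \qquad (6) $$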

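both of which are mixtures of normals. Because ai > 0 for all four methods considered below, the correlation within each component of Eq. (6) is ρi.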

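The sensitivity of the linear classifier is $SEN_{LIN} = \Pr\{\hat{\mu}^{LIN*} > c_{LIN} \mid \mu^* > \mu + \tau\Phi^{-1}(c)\}$, where $\mu + \tau\Phi^{-1}(c)$ is the 100c%-tile of the marginal distribution N(μ, τ2) of μ*. The denominator is 1 − c. Let $c_{LIN} = \{\hat{\mu}_i^{LIN}\}_{100c}$; then, by Eq. (5), cLIN has to satisfy $M^{-1}\sum_{i=1}^{M}\Phi\{(c_{LIN} - a_i\mu - b_i)/(a_i\sqrt{\tau^2 + \sigma^2/n_i})\} = c$. The numerator is an average of bivariate normal cumulative distribution functions. After some simplification (Appendix), we obtain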

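$$ SEN_{LIN} = \frac{1}{M(1-c)}\sum_{i=1}^{M} \Phi_2\left\{\frac{a_i\mu + b_i - c_{LIN}}{a_i\sqrt{\tau^2 + \sigma^2/n_i}},\ -\Phi^{-1}(c);\ \rho_i\right\}, \qquad (7) $$

$$ SPE_{LIN} = \frac{1}{Mc}\sum_{i=1}^{M} \Phi_2\left\{\frac{c_{LIN} - a_i\mu - b_i}{a_i\sqrt{\tau^2 + \sigma^2/n_i}},\ \Phi^{-1}(c);\ \rho_i\right\}, \qquad (8) $$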

where SEN and SPE denote sensitivity and specificity, respectively. For simplicity, we omit the conditioning statements in equations hereafter.
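Eqs. (7) and (8) translate into a short computation: solve the cutoff equation for cLIN by bisection on the mixture distribution function of Eq. (5), then average the bivariate normal terms. The following Python sketch assumes ai > 0 for every hospital and uses a Simpson's-rule Φ2 as a stand-in for mvtnorm; `linear_accuracy` is a hypothetical name, and the sample sizes in the example are made up.

```python
from statistics import NormalDist

STD = NormalDist()


def phi2(a, b, rho, steps=800):
    """P(Z1 <= a, Z2 <= b), standard bivariate normal (|rho| < 1), Simpson's rule."""
    lo = -8.0
    if b <= lo:
        return 0.0
    s = (1.0 - rho * rho) ** 0.5
    h = (b - lo) / steps
    tot = 0.0
    for k in range(steps + 1):
        y = lo + k * h
        w = 1 if k in (0, steps) else (4 if k % 2 else 2)
        tot += w * STD.pdf(y) * STD.cdf((a - rho * y) / s)
    return tot * h / 3.0


def linear_accuracy(a, b, n, mu, tau2, sigma2, c=0.9):
    """Eqs. (7)-(8) for the rule a_i*ybar_i + b_i > c_LIN (requires all a_i > 0)."""
    m = len(n)
    means = [ai * mu + bi for ai, bi in zip(a, b)]
    sds = [ai * (tau2 + sigma2 / ni) ** 0.5 for ai, ni in zip(a, n)]
    rhos = [(tau2 / (tau2 + sigma2 / ni)) ** 0.5 for ni in n]

    def mix_cdf(x):  # distribution function of the normal mixture in Eq. (5)
        return sum(STD.cdf((x - mi) / si) for mi, si in zip(means, sds)) / m

    lo = min(mi - 10 * si for mi, si in zip(means, sds))
    hi = max(mi + 10 * si for mi, si in zip(means, sds))
    for _ in range(200):  # bisection for the cutoff equation mix_cdf(c_LIN) = c
        mid = 0.5 * (lo + hi)
        if mix_cdf(mid) < c:
            lo = mid
        else:
            hi = mid
    c_lin = 0.5 * (lo + hi)
    q = STD.inv_cdf(c)
    z = [(c_lin - mi) / si for mi, si in zip(means, sds)]  # standardized cutoffs
    sen = sum(phi2(-zi, -q, r) for zi, r in zip(z, rhos)) / (m * (1.0 - c))
    spe = sum(phi2(zi, q, r) for zi, r in zip(z, rhos)) / (m * c)
    return c_lin, sen, spe


sizes = [2, 5, 10, 20, 40, 80, 160]  # hypothetical
k = len(sizes)
c_dir, sen, spe = linear_accuracy([1.0] * k, [0.0] * k, sizes, 3.48, 0.29, 2.31)
print(round(c_dir, 3), round(sen, 3), round(spe, 3))
```

Setting ai = 1 and bi = 0 gives DIR. A useful numerical check is that, because the cutoff equation fixes the expected number of selected hospitals at M(1 − c), the outputs satisfy SPE = 1 − (1 − c)(1 − SEN)/c.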

For DIR, ai = 1 and bi = 0. For SHR, ai = Bi and bi = (1 − Bi)μ. For PROB1 and PROB2, note that model (2) (after further conditioning on μ, τ2, and σ2) implies that

$$ \mu_i \mid Y \sim N\{B_i\bar{y}_i + (1 - B_i)\mu,\ B_i\sigma^2/n_i\}. \qquad (9) $$

For PROB1, in which Pprob is given, ai = Bi and $b_i = (1 - B_i)\mu - \Phi^{-1}(P_{prob})\,\sigma\sqrt{B_i/n_i}$. In addition, let $h_i = a_i\bar{y}_i + b_i$, which is the 100(1 − Pprob)%-tile of the posterior distribution of μi. From Eq. (9), we obtain that Cprob is {hi}100c (Section 2.2). However, for PROB2, in which Cprob is prespecified, Eq. (9) implies that

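$$ \Pr(\mu_i > C_{prob} \mid Y) = \Phi\left\{\frac{B_i\bar{y}_i + (1 - B_i)\mu - C_{prob}}{\sigma\sqrt{B_i/n_i}}\right\}. \qquad (10) $$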

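Therefore, $a_i = \sqrt{n_iB_i}/\sigma$ and $b_i = \{(1 - B_i)\mu - C_{prob}\}/(\sigma\sqrt{B_i/n_i})$. Let $s_i = a_i\bar{y}_i + b_i$; then Φ−1(Pprob) is {si}100c based on Eq. (10). Plugging these ai’s and bi’s into Eqs. (7) and (8), we obtain the sensitivity and specificity functions for all methods (Table I).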
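The algebra leading to the PROB1 and PROB2 coefficients can be checked numerically. The sketch below (hypothetical helper `coefficients`, illustrative parameter values) computes (ai, bi) for each method and compares ai ȳi + bi with the posterior 10%-tile and with Φ−1 of the posterior exceedance probability, both computed directly from the normal posterior N(Biȳi + (1 − Bi)μ, Biσ²/ni).

```python
from statistics import NormalDist

STD = NormalDist()


def coefficients(method, n_i, mu, tau2, sigma2, p_prob=0.9, c_prob=None):
    """(a_i, b_i) such that each rule reads 'a_i * ybar_i + b_i > cutoff'."""
    shrink = tau2 / (tau2 + sigma2 / n_i)          # shrinkage factor B_i
    post_sd = sigma2 ** 0.5 * (shrink / n_i) ** 0.5  # posterior sd from the normal posterior
    if method == "DIR":
        return 1.0, 0.0
    if method == "SHR":
        return shrink, (1.0 - shrink) * mu
    if method == "PROB1":
        return shrink, (1.0 - shrink) * mu - STD.inv_cdf(p_prob) * post_sd
    if method == "PROB2":
        if c_prob is None:
            raise ValueError("PROB2 needs c_prob")
        return shrink / post_sd, ((1.0 - shrink) * mu - c_prob) / post_sd
    raise ValueError(f"unknown method {method!r}")


# Compare against the posterior of mu_i computed directly.
mu, tau2, sigma2, ybar, n_i = 3.48, 0.29, 2.31, 4.1, 12
shrink = tau2 / (tau2 + sigma2 / n_i)
post = NormalDist(shrink * ybar + (1 - shrink) * mu, (shrink * sigma2 / n_i) ** 0.5)
a1, b1 = coefficients("PROB1", n_i, mu, tau2, sigma2, p_prob=0.9)
print(a1 * ybar + b1, post.inv_cdf(0.1))  # h_i equals the posterior 10%-tile
a2, b2 = coefficients("PROB2", n_i, mu, tau2, sigma2, c_prob=3.9)
print(a2 * ybar + b2, STD.inv_cdf(1 - post.cdf(3.9)))  # s_i = Phi^{-1}(Pr(mu_i > 3.9 | Y))
```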

Table I.

Sensitivity and specificity functions for classifying top 100(1 − c)% of hospitals.

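| Method | Accuracy measures |
|---|---|
| DIR | Cutoff equation: $M^{-1}\sum_{i=1}^{M}\Phi(z_i) = c$, where $z_i = (c_{DIR} - \mu)/\sqrt{\tau^2 + \sigma^2/n_i}$ |
| | Sensitivity: $\{M(1-c)\}^{-1}\sum_{i=1}^{M}\Phi_2\{-z_i, -\Phi^{-1}(c); \rho_i\}$ |
| | Specificity: $(Mc)^{-1}\sum_{i=1}^{M}\Phi_2\{z_i, \Phi^{-1}(c); \rho_i\}$ |
| SHR | Cutoff equation: $M^{-1}\sum_{i=1}^{M}\Phi(z_i) = c$, where $z_i = (c_{SHR} - \mu)/(\tau\sqrt{B_i})$ |
| | Sensitivity: $\{M(1-c)\}^{-1}\sum_{i=1}^{M}\Phi_2\{-z_i, -\Phi^{-1}(c); \rho_i\}$ |
| | Specificity: $(Mc)^{-1}\sum_{i=1}^{M}\Phi_2\{z_i, \Phi^{-1}(c); \rho_i\}$ |
| PROB1 | Cutoff equation: $M^{-1}\sum_{i=1}^{M}\Phi(z_i) = c$, where $z_i = \{c_{PROB1} - \mu + \Phi^{-1}(P_{prob})\,\sigma\sqrt{B_i/n_i}\}/(\tau\sqrt{B_i})$ |
| | Sensitivity: $\{M(1-c)\}^{-1}\sum_{i=1}^{M}\Phi_2\{-z_i, -\Phi^{-1}(c); \rho_i\}$ |
| | Specificity: $(Mc)^{-1}\sum_{i=1}^{M}\Phi_2\{z_i, \Phi^{-1}(c); \rho_i\}$ |
| PROB2 | Cutoff equation: $M^{-1}\sum_{i=1}^{M}\Phi(z_i) = c$, where $z_i = \{C_{prob} - \mu + c_{PROB2}\,\sigma\sqrt{B_i/n_i}\}/(\tau\sqrt{B_i})$ |
| | Sensitivity: $\{M(1-c)\}^{-1}\sum_{i=1}^{M}\Phi_2\{-z_i, -\Phi^{-1}(c); \rho_i\}$ |
| | Specificity: $(Mc)^{-1}\sum_{i=1}^{M}\Phi_2\{z_i, \Phi^{-1}(c); \rho_i\}$ |

Note: $c_{DIR}$, $c_{SHR}$, $c_{PROB1}$, and $c_{PROB2}$ are the cutoff points, with $c_{PROB1} = C_{prob}$ and $c_{PROB2} = \Phi^{-1}(P_{prob})$; $z_i$ is the method-specific standardized cutoff for hospital i, and $\rho_i = \sqrt{B_i}$.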

DIR, direct method; SHR, shrinkage method.

With actual data, μ, τ2, and σ2 are unknown and require estimation. We can plug the corresponding estimates into these formulae to estimate the accuracy. However, such plug-in estimates do not reflect the uncertainty due to parameter estimation. Because there is generally no closed-form solution for cLIN, (asymptotic) variance formulae for the accuracy functions are intractable. This problem is more easily approached if we adopt a Bayesian version of model (1) in which μ, τ2, and σ2 are treated as random variables. For each posterior draw of the parameters under the Bayesian model, we calculate the accuracy measures using the formulae, which yields the corresponding posterior distributions f(SENLIN|Y) and f(SPELIN|Y), and then use credible intervals to summarize the uncertainty.

3.2. Optimal approach

When all ni’s are equal, the sensitivities/specificities from all methods are identical. For PROB1, setting Pprob = 0.5 makes it identical to SHR. In addition, as all ni → ∞ or K = τ/σ → ∞, both sensitivity and specificity → 1 for all methods. When the ni’s differ, there is generally no closed-form solution for cLIN, so it is difficult to compare these methods algebraically. Section 4.3 provides numerical comparisons.

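To identify the optimal method, that is, the one yielding the highest sensitivity/specificity, we note that Eqs. (7) and (8), including their special cases in Table I, can be framed as a constrained optimization problem. Take sensitivity as an example. Any linear classifier selects hospital i when $\bar{y}_i$ exceeds the hospital-specific threshold $t_i = (c_{LIN} - b_i)/a_i$, so the goal is to maximize the objective function $\sum_{i=1}^{M}\Phi_2\{(\mu - t_i)/\sqrt{\tau^2 + \sigma^2/n_i}, -\Phi^{-1}(c); \rho_i\}$ over {ti}, subject to $M^{-1}\sum_{i=1}^{M}\Phi\{(t_i - \mu)/\sqrt{\tau^2 + \sigma^2/n_i}\} = c$. This optimization problem can be solved with a Lagrange multiplier λ. After some algebra (Appendix), the solution satisfies $\Pr\{\mu_i > \mu + \tau\Phi^{-1}(c) \mid \bar{y}_i = t_i^*\} = \lambda^*$ for every hospital, that is, $t_i^* = \{\mu + \tau\Phi^{-1}(c) - (1 - B_i)\mu + \sigma\sqrt{B_i/n_i}\,\Phi^{-1}(\lambda^*)\}/B_i$, where λ* is the solution for λ. From Table I, we observe that the cutoff values from DIR and SHR are not these solutions: DIR uses the common threshold ti = cDIR, and the SHR thresholds ti = {cSHR − (1 − Bi)μ}/Bi have this form only if λ* = 0.5 and cSHR = μ + τΦ−1(c), which does not hold in general. For PROB2, setting Cprob = μ + τΦ−1(c) and taking the cutoff Φ−1(Pprob) as Φ−1(λ*) yields exactly these solutions. In addition, the second derivative test [27] shows that these solutions (critical points) are strict local maxima (Appendix). These arguments suggest that PROB2 with Cprob = μ + τΦ−1(c) is the optimal approach among the linear classifiers.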
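The optimality argument can be checked numerically by plugging the (ai, bi) of each method into Eq. (7). In the Python sketch below (hypothetical names, made-up sample sizes, Simpson's-rule Φ2 in place of mvtnorm), PROB2 uses Cprob = μ + τΦ−1(c) and PROB1 uses Pprob = 0.9; PROB2 should attain the largest sensitivity up to numerical error, and all four methods should coincide when the ni are equal.

```python
from statistics import NormalDist

STD = NormalDist()


def phi2(a, b, rho, steps=800):
    """P(Z1 <= a, Z2 <= b), standard bivariate normal (|rho| < 1), Simpson's rule."""
    lo = -8.0
    if b <= lo:
        return 0.0
    s = (1.0 - rho * rho) ** 0.5
    h = (b - lo) / steps
    tot = 0.0
    for k in range(steps + 1):
        y = lo + k * h
        w = 1 if k in (0, steps) else (4 if k % 2 else 2)
        tot += w * STD.pdf(y) * STD.cdf((a - rho * y) / s)
    return tot * h / 3.0


def coefficients(method, n, mu, tau2, sigma2, p_prob, c_prob):
    """(a_i, b_i) of the linear classifier a_i*ybar_i + b_i for one hospital."""
    shrink = tau2 / (tau2 + sigma2 / n)     # B_i
    post_sd = (shrink * sigma2 / n) ** 0.5  # posterior sd of mu_i
    if method == "DIR":
        return 1.0, 0.0
    if method == "SHR":
        return shrink, (1.0 - shrink) * mu
    if method == "PROB1":
        return shrink, (1.0 - shrink) * mu - STD.inv_cdf(p_prob) * post_sd
    if method == "PROB2":
        return shrink / post_sd, ((1.0 - shrink) * mu - c_prob) / post_sd
    raise ValueError(f"unknown method {method!r}")


def sensitivity(method, sizes, mu, tau2, sigma2, c=0.9, p_prob=0.9, c_prob=None):
    """Eq. (7) for one method; specificity then equals 1 - (1 - c)(1 - SEN)/c."""
    if c_prob is None:
        c_prob = mu + tau2 ** 0.5 * STD.inv_cdf(c)  # choice from Section 3.2
    ab = [coefficients(method, n, mu, tau2, sigma2, p_prob, c_prob) for n in sizes]
    means = [a * mu + b for a, b in ab]
    sds = [a * (tau2 + sigma2 / n) ** 0.5 for (a, _), n in zip(ab, sizes)]
    lo = min(m - 10 * s for m, s in zip(means, sds))
    hi = max(m + 10 * s for m, s in zip(means, sds))
    for _ in range(200):  # bisection for the cutoff equation
        mid = 0.5 * (lo + hi)
        if sum(STD.cdf((mid - m) / s) for m, s in zip(means, sds)) / len(sizes) < c:
            lo = mid
        else:
            hi = mid
    cut = 0.5 * (lo + hi)
    q = STD.inv_cdf(c)
    total = sum(
        phi2((m - cut) / s, -q, (tau2 / (tau2 + sigma2 / n)) ** 0.5)
        for m, s, n in zip(means, sds, sizes)
    )
    return total / (len(sizes) * (1.0 - c))


sizes = [1, 3, 8, 15, 30, 60, 100, 150] * 3  # hypothetical
for meth in ("DIR", "SHR", "PROB1", "PROB2"):
    print(meth, round(sensitivity(meth, sizes, 3.48, 0.29, 2.31), 4))
```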

3.3. Reliability

We derive the reliability measure for direct and shrinkage estimators as a generalization of [11] to hospitals with unequal sample sizes. We follow the definition that reliability is the squared correlation between a measurement and the true value. For the linear function $\hat{\mu}_i^{LIN} = a_i\bar{y}_i + b_i$, its correlation with μi is $\mathrm{corr}(\hat{\mu}^{LIN*}, \mu^*)$, conditional on μ, τ2, σ2, and {ni}. Under models (5) and (6),

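$$ RE_{LIN} = \mathrm{corr}^2(\hat{\mu}^{LIN*}, \mu^*) = \frac{\tau^2\left(\sum_{i=1}^{M} a_i\right)^2}{M\left\{\sum_{i=1}^{M} a_i^2(\tau^2 + \sigma^2/n_i) + \sum_{i=1}^{M}(a_i\mu + b_i - \bar{m})^2\right\}}, \quad \bar{m} = \frac{1}{M}\sum_{i=1}^{M}(a_i\mu + b_i). \qquad (11) $$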

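For the direct estimator, set ai = 1 and bi = 0; then $RE_{DIR} = \tau^2/\{\tau^2 + \sigma^2 M^{-1}\sum_{i=1}^{M} n_i^{-1}\}$. For the shrinkage estimator, set ai = Bi and bi = (1 − Bi)μ; then $RE_{SHR} = M^{-1}\sum_{i=1}^{M} B_i$.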

By the Cauchy–Schwarz inequality, $\{M^{-1}\sum_{i=1}^{M}(\tau^2 + \sigma^2/n_i)\}\{M^{-1}\sum_{i=1}^{M}(\tau^2 + \sigma^2/n_i)^{-1}\} \geq 1$, with equality if and only if ni = n for each i. Therefore, RESHR ⩾ REDIR, showing that shrinkage estimators generally have a larger correlation with the true quality values than direct estimators, consistent with the literature showing that the former produce smaller prediction error.
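Eq. (11) and its two special cases can be coded in a few lines. This sketch (hypothetical function names and sample sizes) computes the squared correlation for any linear classifier and checks that the DIR and SHR cases reduce to τ²/(τ² + σ²M−1Σ ni−1) and M−1Σ Bi, respectively, and coincide when all ni are equal.

```python
def reliability(a, b, n, mu, tau2, sigma2):
    """Eq. (11): squared correlation between a_i*ybar_i + b_i and mu_i across hospitals."""
    m = len(n)
    centers = [ai * mu + bi for ai, bi in zip(a, b)]  # component means a_i*mu + b_i
    cbar = sum(centers) / m
    var_lin = sum(ai * ai * (tau2 + sigma2 / ni) for ai, ni in zip(a, n))
    var_lin += sum((ci - cbar) ** 2 for ci in centers)  # spread of component means
    return tau2 * sum(a) ** 2 / (m * var_lin)


def re_dir(n, mu, tau2, sigma2):
    """Reliability of the direct estimator (a_i = 1, b_i = 0)."""
    return reliability([1.0] * len(n), [0.0] * len(n), n, mu, tau2, sigma2)


def re_shr(n, mu, tau2, sigma2):
    """Reliability of the shrinkage estimator (a_i = B_i, b_i = (1 - B_i) mu)."""
    bs = [tau2 / (tau2 + sigma2 / ni) for ni in n]
    return reliability(bs, [(1 - bi) * mu for bi in bs], n, mu, tau2, sigma2)


sizes = [1, 4, 10, 25, 60, 150]  # hypothetical
print(re_dir(sizes, 3.48, 0.29, 2.31), re_shr(sizes, 3.48, 0.29, 2.31))
```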

4. Numerical illustration

4.1. Data background and setup

Our data come from the ED component of the 2008 National Hospital Ambulatory Medical Care Survey (NHAMCS) (http://www.cdc.gov/nchs/ahcd.htm). This annual survey is conducted by the Centers for Disease Control and Prevention’s National Center for Health Statistics. The NHAMCS collects data on a nationally representative sample of visits to EDs and outpatient departments of non-Federal, short-stay general, or children’s general hospitals and uses a multistage probability sample design [28]. The data include multiple visit-related and patient-related characteristics. For example, visit-level characteristics include the type of provider seen; the type and number of medications prescribed; and the times at which the patient arrived, was seen by a provider, and received a final disposition (either discharged or admitted to the hospital).

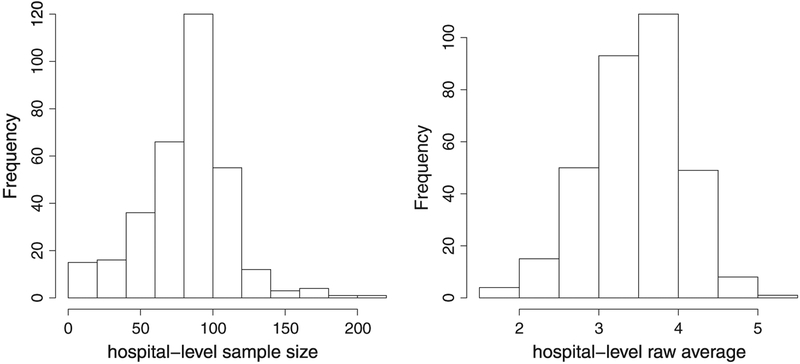

We focus on the continuous time interval between a patient’s arrival and the time when they are seen by a doctor (provider). Waiting time to see a provider in the ED has been proposed as a quality metric by the National Quality Forum and is expected to be reported without any adjustment [29]. Our analytic sample consists of 27,151 ED visit-level wait times recorded from 329 hospitals, obtained from the public-use file of NHAMCS. The left panel of Figure 2 shows the distribution of sample sizes {ni} (the number of visits from each ED). We apply a log transformation to the wait times before the analysis to make the normality assumption for yij in model (1) more plausible. We also exclude missing cases and compute a weighted average $\bar{y}_i$ at the ED level that incorporates the survey design. The right panel of Figure 2 shows the distribution of $\{\bar{y}_i\}$. Although we estimate the classification accuracy for this dataset using the developed methods (Section 4.2), we focus on numerically illustrating general patterns of the accuracy functions (Sections 4.3–4.5), using parameter values elicited from this dataset.

Figure 2.

Left panel: the distribution of emergency department-level sample sizes {ni}. Right panel: the distribution of direct estimates $\{\bar{y}_i\}$.

We consider a Bayesian version of model (1) [30], assuming vague priors for the parameters: p(μ) ~ N(0, 1000), p(τ) ~ Unif(10−3, 100), and p(σ) ~ Unif(10−3, 100), where Unif denotes the uniform distribution. We diagnose the convergence of the Gibbs sampler using the statistic developed in [31] and conclude that the chain converges after 1000 iterations (the statistic is below 1.1). The posterior median estimates are $\hat{\mu} = 3.48$, $\hat{\tau}^2 = 0.29$, and $\hat{\sigma}^2 = 2.31$, based on 1000 posterior samples after discarding the first 1000 iterations.
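Convergence checks of this kind are commonly based on the Gelman–Rubin potential scale reduction factor; assuming that is the statistic referred to in [31], a minimal version for a scalar parameter and equal-length chains is sketched below in Python. It compares between-chain and within-chain variances; values near 1 (e.g., below the 1.1 threshold used here) suggest that the chains have mixed. This is an illustrative implementation with simulated chains, not the software used for the analysis.

```python
import random
from statistics import mean, variance


def gelman_rubin(chains):
    """Potential scale reduction factor R-hat for equal-length chains of a scalar."""
    m = len(chains)
    n = len(chains[0])
    means = [mean(ch) for ch in chains]
    grand = mean(means)
    b = n / (m - 1) * sum((x - grand) ** 2 for x in means)  # between-chain variance
    w = mean(variance(ch) for ch in chains)                 # within-chain variance
    var_plus = (n - 1) / n * w + b / n                      # pooled variance estimate
    return (var_plus / w) ** 0.5


rng = random.Random(7)
good = [[rng.gauss(0, 1) for _ in range(1000)] for _ in range(4)]         # same target
bad = [[rng.gauss(m0, 1) for _ in range(1000)] for m0 in (0, 0, 0, 3)]   # one stuck chain
print(gelman_rubin(good), gelman_rubin(bad))
```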

4.2. Estimating the accuracy

We use the R (www.r-project.org) package ‘nor1mix’ to obtain the cutoff points for normal mixtures and the package ‘mvtnorm’ to obtain the cumulative distribution functions of bivariate normals (sample code attached in the Appendix). Although hospitals with shorter wait times are considered to have better quality, it is unnecessary to reverse the signs in the formulae of Table I because of the symmetry of the normal distribution. That is, the accuracy of identifying the top and the bottom 100(1 − c)% of hospitals is identical, and the formulae can be applied directly. For identifying the top 10% of hospitals, Table II shows the estimates obtained by plugging $\hat{\mu}$, $\hat{\tau}^2$, and $\hat{\sigma}^2$ into the formulae, as well as Bayesian estimates from 1000 posterior draws. The plug-in estimates are close to the posterior medians. Using PROB2 yields the highest accuracy (tied with SHR to three decimals), and about half of one additional hospital would be correctly identified compared with using DIR (329 × 0.1 × (0.778 − 0.765) ≈ 0.43). In addition, the Bayesian method provides credible intervals that quantify the uncertainty of the estimates.

Table II.

Bayesian estimates of expected accuracy for identifying top 10% of hospitals.

| | Sensitivity | | | Specificity | | |
|---|---|---|---|---|---|---|
| Method | Plug-in | Posterior median | 95% CI | Plug-in | Posterior median | 95% CI |
| DIR | 0.760 | 0.765 | (0.546, 0.874) | 0.974 | 0.974 | (0.950, 0.986) |
| SHR | 0.773 | 0.778 | (0.573, 0.878) | 0.975 | 0.975 | (0.953, 0.986) |
| PROB1 | 0.765 | 0.771 | (0.566, 0.871) | 0.974 | 0.975 | (0.952, 0.986) |
| PROB2 | 0.773 | 0.778 | (0.574, 0.878) | 0.975 | 0.975 | (0.953, 0.986) |

Note: CI, credible interval. Plug-in: estimates obtained by plugging the posterior median parameter estimates into the formulae of Table I. In the PROB1 method, Pprob = 0.9. In the PROB2 method, $C_{prob} = \mu + \tau\Phi^{-1}(0.9)$.

DIR, direct method; SHR, shrinkage method.

4.3. Comparison among methods

For practitioners without a sophisticated statistical background, DIR versus SHR might be of interest because it compares the naïve method with an advanced approach. This directly answers the question raised in [13] of how well shrinkage estimators perform for classification. For methodologists, SHR versus PROB1 and PROB2 might be of interest because all three have shrinkage properties, yet they have not been compared in the past literature. In the latter two approaches, however, the classification thresholds can vary. Therefore, we consider multiple values of Cprob and Pprob in the assessment.

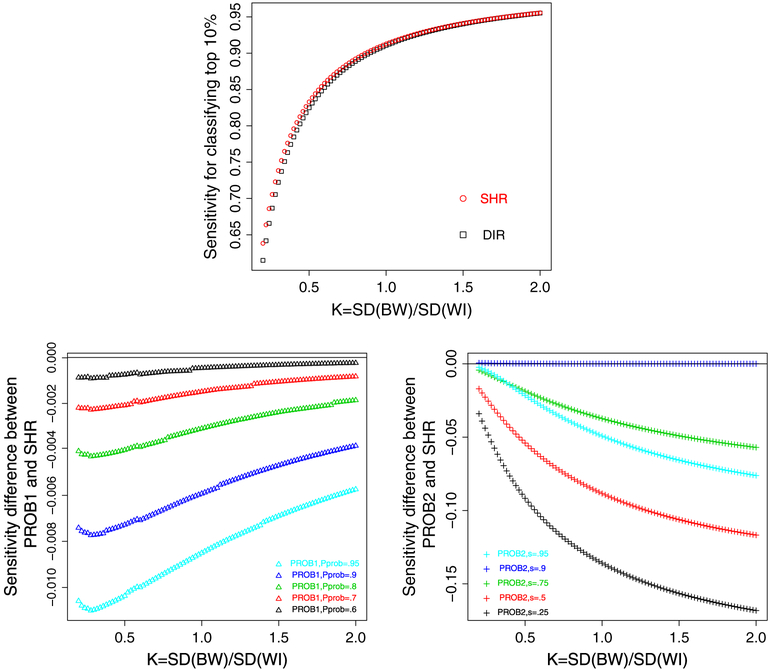

Let K = τ/σ denote the ratio of the between-hospital to the within-hospital standard deviation, a measure of reliability. We fix μ = 3.48 and τ2 = 0.29 but vary σ2 so that K increases from 0.2 to 2 in increments of 0.02. In each scenario, we calculate the sensitivity and specificity of identifying the top 10% of hospitals using each method. For PROB1, we set Pprob = {0.6, 0.7, 0.8, 0.9, 0.95}. For PROB2, we choose Cprob = μ + τΦ−1(s), where s = {0.25, 0.5, 0.75, 0.9, 0.95}. Note that the option with s = 0.9 corresponds to the optimal method according to our theoretical argument (Section 3.2).

Figure 3 shows the comparative patterns. The top panel plots the sensitivity from DIR and SHR. As K increases, both sensitivities increase and approach 1. The sensitivity from SHR is consistently higher than that from DIR, and the advantage of SHR is more prominent for smaller K. Because we assess PROB1 using multiple values of Pprob, the bottom left panel plots the difference in sensitivity between PROB1 and SHR, with the horizontal line at 0 as the benchmark. The sensitivity from PROB1 is consistently lower than that from SHR regardless of the Pprob chosen. The bottom right panel plots the difference in sensitivity between PROB2 and SHR. When s = 0.9, which corresponds to the optimal classification, the sensitivity from PROB2 is slightly higher than that from SHR for smaller K and indistinguishable as K increases, consistent with the theoretical argument. For s ≠ 0.9, the sensitivity from PROB2 is noticeably lower, corresponding to the curves below the benchmark line. The comparative pattern for specificity is similar (results not shown). These results suggest that PROB2 (with Cprob = μ + τΦ−1(c)) and SHR are preferable to DIR and PROB1.

Figure 3.

Top panel: sensitivity from the direct method (DIR) and the shrinkage method (SHR) (square: DIR; circle: SHR). Bottom left panel: the difference in sensitivity between PROB1 and SHR (triangle: PROB1; Pprob takes 0.6, 0.7, 0.8, 0.9, and 0.95 for the five curves from top to bottom). Bottom right panel: the difference in sensitivity between PROB2 and SHR (plus: PROB2; s = 0.9 for the curve above 0, and s takes 0.25, 0.5, 0.75, and 0.95 for the four curves below). The classification goal is to identify the top 10% of hospitals.

4.4. The impact of hospital-level sample size on accuracy

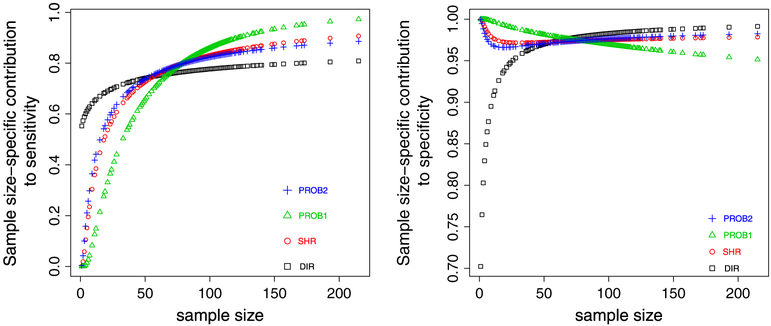

Equations (7) and (8) show that each accuracy function is an average of contributions from individual hospitals. For sensitivity, the hospital-level contribution is $S(n_i) = (1-c)^{-1}\Phi_2\{(a_i\mu + b_i - c_{LIN})/(a_i\sqrt{\tau^2 + \sigma^2/n_i}), -\Phi^{-1}(c); \rho_i\}$. Plotting S(ni) as a function of ni while fixing the other parameters reveals the impact of the distribution of hospital-level sample sizes on accuracy. Figure 4 plots S(ni) against ni in the example data (Pprob = 0.9 in PROB1 and Cprob = μ + τΦ−1(0.9) in PROB2). For each method, the accuracy can be viewed as the area under the curve, weighted by the frequency of each distinct sample size, because some hospitals have identical sample sizes. These plots show that ni affects S(ni) in a complicated way.

Figure 4.

Left panel: hospital size-specific contribution to overall sensitivity. Right panel: hospital size-specific contribution to overall specificity. square: direct method (DIR), circle: shrinkage method (SHR), triangle: PROB1 with Pprob = 0.9, plus: PROB2 with Cprob = μ + τΦ−1(0.9). The classification goal is to identify top 10% of hospitals.

To gain better insight, Table III shows S(ni) evaluated at a few selected sample sizes. Compared with the other methods, all of which have the shrinkage property, DIR yields a smaller S(ni) for a large hospital (e.g., ni > 50) yet a larger S(ni) for a small hospital (e.g., ni < 15). An intuitive explanation follows. In general, a classifier becomes more accurate when the underlying estimator has a smaller bias or variance. For a small hospital whose true quality is in the top tier, the shrinkage estimate is pulled toward the population average, whereas the direct estimate is unbiased. Although the shrinkage estimate may have a smaller variance, the effect of bias dominates, and DIR is therefore the better classifier for this hospital: methods other than DIR are less likely to classify it as a top performer, so this small hospital contributes less to their sensitivity. For a large hospital whose true quality is in the top tier, the shrinkage effect is small, and the smaller variance of the shrinkage estimate makes it a more reliable classifier than the direct estimate. Therefore, the shrinkage-based methods tend to yield a larger contribution from this large hospital to sensitivity.

Table III.

Individual hospital-level contribution to the overall sensitivity for identifying top 10% of hospitals.

| Sample size ni | DIR | SHR | PROB1 | PROB2 |
|---|---|---|---|---|
| 1 | 0.553 | 0.001 | 5.63 × 10−6 | 0.004 |
| 15 | 0.670 | 0.447 | 0.214 | 0.498 |
| 50 | 0.741 | 0.727 | 0.658 | 0.738 |
| 100 | 0.777 | 0.831 | 0.860 | 0.823 |
| 150 | 0.795 | 0.876 | 0.934 | 0.860 |

DIR, direct method; SHR, shrinkage method.
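The bias-variance argument above can be illustrated numerically. The R sketch below illustrates the intuition only and is not the computation behind Table III. It uses the example-data parameters and a hypothetical top-tier hospital whose true mean sits at the 95th percentile of μi, and reports the shrinkage weight B = τ2/(τ2 + σ2/ni), the conditional bias of the shrinkage estimate, and the conditional standard deviations of the direct and shrinkage estimates.

```r
# Illustration of the bias-variance intuition for a top-tier hospital under
# model (2); parameters from the example data, mu_i is a hypothetical value.
mu <- 3.48; tau2 <- 0.29; sigma2 <- 2.31
n  <- c(1, 15, 50, 100, 150)            # sample sizes shown in Table III
mu_i <- mu + sqrt(tau2) * qnorm(0.95)   # hypothetical true quality (top tier)

B <- tau2 / (tau2 + sigma2 / n)         # weight on ybar_i in the shrinkage estimate

# Conditional on mu_i: ybar_i ~ N(mu_i, sigma2 / n) and SHR = mu + B * (ybar_i - mu).
bias_shr <- (B - 1) * (mu_i - mu)       # DIR is conditionally unbiased
sd_dir   <- sqrt(sigma2 / n)
sd_shr   <- B * sd_dir

print(round(data.frame(n, B, bias_shr, sd_dir, sd_shr), 3))
```

For ni = 1 the weight B is about 0.11, so the shrinkage estimate of this hospital is pulled most of the way back toward μ; for ni = 150 the weight is about 0.95 and the bias nearly vanishes while the variance advantage remains.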

4.5. The impact of cutoff on accuracy

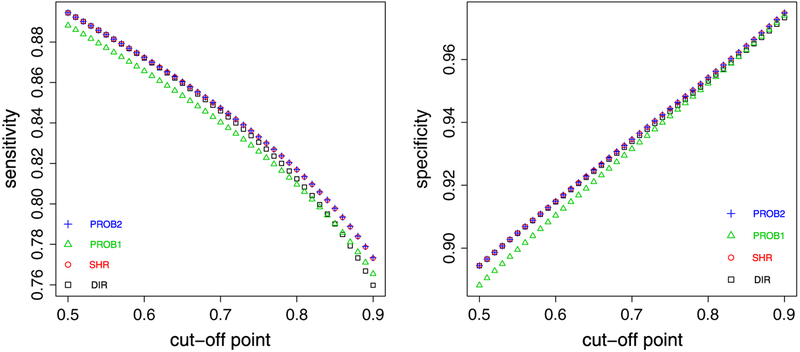

In the previous illustrations, c is fixed at 0.9. We now assess the impact of changing c on the accuracy of these methods, motivated by the fact that c determines the distribution of quality tiers. For example, if identifying top performers is associated with a monetary award whose total amount is fixed, program evaluators might vary c to compare awarding mechanisms, such as rewarding fewer hospitals and paying each more, or rewarding more hospitals and paying each less. Figure 5 shows the sensitivity and specificity, fixing μ = 3.48, τ2 = 0.29, and σ2 = 2.31 and varying c from 0.5 to 0.9 in increments of 0.01. The sensitivity from all methods decreases as c increases, because it is more difficult to distinguish among hospitals in the tail of the distribution. PROB1 (Pprob = 0.9) generally has the lowest sensitivity. PROB2 (Cprob = μ + τΦ−1(c)) and SHR are nearly indistinguishable, and both perform better than the other two methods. As c increases, the advantages of SHR and PROB2 over DIR become more prominent, corresponding to the tail of the distribution. The pattern of the specificity function overall mirrors that of the sensitivity.

Figure 5.

Left panel: sensitivity versus cutoff c. Right panel: specificity versus cutoff c. square: direct method (DIR), circle: shrinkage method (SHR), triangle: PROB1 with Pprob = 0.9, plus: PROB2 with Cprob = μ + τΦ−1(c). The classification goal is to identify top 100(1 − c)% of hospitals.
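The effect of c can be seen most simply under a balanced design (all ni equal), a hypothetical simplification of the example data. With equal ni, all four methods rank hospitals by increasing functions of the hospital mean and therefore classify identically, and the sensitivity reduces to one bivariate normal orthant probability with correlation ρ = {τ2/(τ2 + σ2/n)}1/2, divided by 1 − c. The helper bvn_upper below is our own one-dimensional integration, not a library function.

```r
# Sketch: sensitivity vs. cutoff c under a balanced design (all n_i = n).
bvn_upper <- function(a, b, rho) {
  # Pr(Z1 > a, Z2 > b) for a standard bivariate normal with correlation rho,
  # integrating over Z2 and using Z1 | Z2 = z ~ N(rho * z, 1 - rho^2).
  f <- function(z) dnorm(z) * pnorm((rho * z - a) / sqrt(1 - rho^2))
  integrate(f, lower = b, upper = Inf)$value
}

sens_balanced <- function(c_tier, n, tau2, sigma2) {
  rho <- sqrt(tau2 / (tau2 + sigma2 / n))  # corr(ybar_i, mu_i)
  z <- qnorm(c_tier)                        # both cutoffs: the 100c% percentile
  bvn_upper(z, z, rho) / (1 - c_tier)
}

cs <- seq(0.5, 0.9, by = 0.1)
sens_n20 <- sapply(cs, sens_balanced, n = 20, tau2 = 0.29, sigma2 = 2.31)
sens_n50 <- sapply(cs, sens_balanced, n = 50, tau2 = 0.29, sigma2 = 2.31)
print(round(rbind(c = cs, n20 = sens_n20, n50 = sens_n50), 3))
```

At c = 0.5 the orthant probability has the closed form 1/4 + arcsin(ρ)/(2π), which provides a convenient check on the numerical integration; the larger n (larger ρ, hence larger K) raises the sensitivity at every c.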

4.6. Simulation validation

We conduct a brief simulation study to assess the validity of the developed formulae. We fix μ = 3.48 and τ2 = 0.29 but change σ2 so that K = 0.2, 0.6, 1.0. For each of the three scenarios, we generate random samples of {μi} and the corresponding hospital-level data under model (2) for 100 simulations, using the sample sizes {ni} from the example data. For each simulation, we implement two strategies to estimate the sensitivity of classifying the top 10% of hospitals. The first plugs the posterior medians of the parameters (based on 1000 draws) into the formulae, as proposed in Section 3.1; we denote the results as the ‘estimated’ sensitivity. The second calculates the proportion of hospitals identified in the top tier among the true top performers, which is possible because the true tier status of each hospital is known from the data-generating process. This Monte Carlo procedure has been used in the literature [15, 16] where closed-form formulae were not available; we denote the results as the ‘empirical’ sensitivity. We average both the ‘estimated’ and the ‘empirical’ sensitivity over the 100 simulations and compare them with the sensitivity calculated from the formulae by plugging in the true data-generating parameters, which we refer to as the ‘true’ sensitivity. Table IV shows the results; the closeness among the three sensitivity estimates supports the validity of the formulae. The validation results for specificity are similar (not shown).

Table IV.

Simulation validation: sensitivity estimates for identifying top 10% of hospitals.

| τ/σ | Method | True | Estimated | Empirical |
|---|---|---|---|---|
| 0.2 | DIR | 0.615 | 0.613 | 0.612 |
| 0.2 | SHR | 0.638 | 0.635 | 0.643 |
| 0.2 | PROB1 | 0.631 | 0.628 | 0.636 |
| 0.2 | PROB2 | 0.639 | 0.635 | 0.641 |
| 0.6 | DIR | 0.853 | 0.849 | 0.849 |
| 0.6 | SHR | 0.859 | 0.856 | 0.850 |
| 0.6 | PROB1 | 0.852 | 0.850 | 0.845 |
| 0.6 | PROB2 | 0.859 | 0.856 | 0.855 |
| 1.0 | DIR | 0.911 | 0.904 | 0.901 |
| 1.0 | SHR | 0.913 | 0.907 | 0.900 |
| 1.0 | PROB1 | 0.907 | 0.901 | 0.897 |
| 1.0 | PROB2 | 0.913 | 0.907 | 0.903 |

Note: The data generating parameter μ = 3.48 and τ2 = 0.29. ‘True’ is based on the formulae using the population generating parameters. ‘Estimated’ is based on the formulae using posterior medians of parameters. ‘Empirical’ is the relative frequency of correctly identified top performers from the Monte Carlo procedure. Both ‘estimated’ and ‘empirical’ are averages over 100 simulations.

DIR, direct method; SHR, shrinkage method.
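The ‘empirical’ strategy can be sketched in a few lines of R. This version is simplified and its assumptions are ours: the parameters are treated as known (no posterior estimation), SHR uses the true μ, τ2, and σ2, the sample sizes are a hypothetical draw rather than the example data, and the 100c% percentile of each estimator across hospitals stands in for the mixture quantile. Because both methods are applied to the same simulated data, the comparison is paired.

```r
# Sketch of the 'empirical' Monte Carlo sensitivity under model (2).
set.seed(2012)
mu <- 3.48; tau2 <- 0.29; K <- 0.6
sigma2 <- tau2 / K^2                          # chosen so that tau / sigma = K
c_tier <- 0.9
n <- sample(1:150, 300, replace = TRUE)       # hypothetical {n_i}
thr_true <- mu + sqrt(tau2) * qnorm(c_tier)   # {mu_i}_{100c} under model (2)
B <- tau2 / (tau2 + sigma2 / n)               # shrinkage weights with true parameters

n_sim <- 100
sens <- matrix(NA_real_, n_sim, 2, dimnames = list(NULL, c("DIR", "SHR")))
for (r in seq_len(n_sim)) {
  mu_i <- rnorm(length(n), mu, sqrt(tau2))          # true hospital quality
  ybar <- rnorm(length(n), mu_i, sqrt(sigma2 / n))  # observed hospital means
  est  <- cbind(DIR = ybar, SHR = mu + B * (ybar - mu))
  true_top <- mu_i > thr_true
  for (m in colnames(est)) {
    # top tier = estimate above its 100c% percentile across hospitals
    flag <- est[, m] > quantile(est[, m], c_tier)
    sens[r, m] <- sum(flag & true_top) / sum(true_top)
  }
}
colMeans(sens)   # average empirical sensitivity over the simulations
```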

4.7. Practical implications

We discuss some practical implications of the methodological development. First, the developed formulae provide an accessible tool for practitioners to quantify the accuracy of different classification methods. Rather than merely identifying hospitals in quality tiers, we recommend that practitioners also report the estimated accuracy associated with the classification, analogous to reporting the variance of a point estimate in statistical analysis. Such information can inform decision making, because a classification with suboptimal accuracy is less preferable. In addition, on the basis of our evaluation results, the preferred classification methods are SHR and PROB2 (with Cprob = μ + τΦ−1(c)).

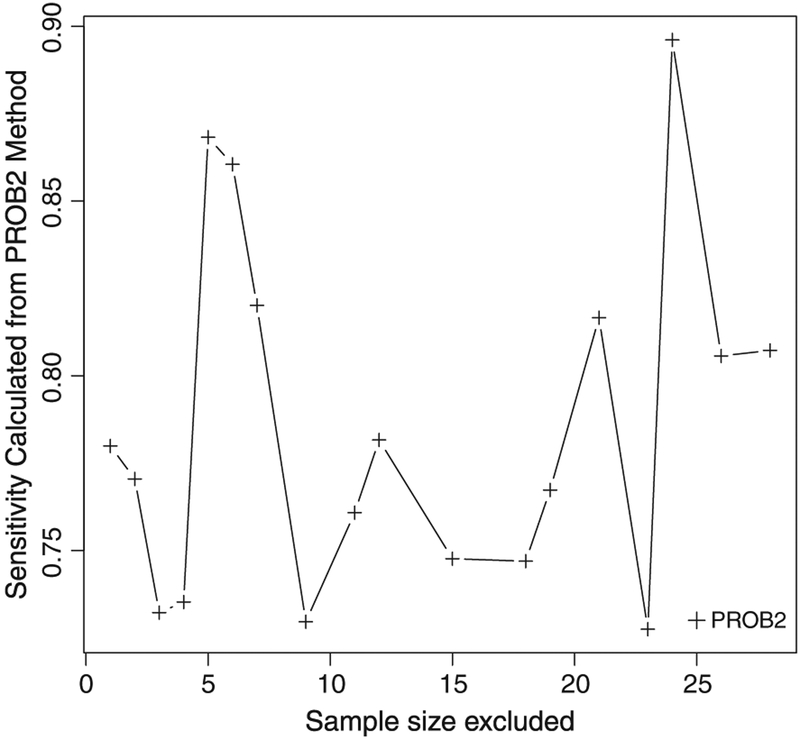

The estimates of accuracy can also be used to refine classification. One conventional practice in hospital profiling is to exclude hospitals with small samples to obtain more reliable hospital-level performance estimates. However, there is no formal rule on how small a hospital must be to warrant exclusion. If the primary goal is to tier hospitals, we can remove small hospitals sequentially in order of increasing sample size, estimate the classification accuracy for the hospitals that remain, and identify the appropriate cutoff on the basis of the desired level of accuracy. For example, Figure 6 shows the sensitivity estimates from PROB2 in classifying the top 10% of hospitals when small hospitals (those with ni from 1 up to 29) are excluded sequentially from the example data. The sensitivity generally increases as small hospitals are excluded, with some fluctuation because the estimates of μ, τ2, and σ2 change as different hospitals are excluded. Suppose we would like to achieve a sensitivity of at least 75%; then hospitals with fewer than 10 patients might need to be excluded. Compared with ad hoc rules (e.g., excluding hospitals with ni < 20 regardless of the data structure), this data-based procedure helps avoid excluding too many (or too few) small hospitals.

Figure 6.

The sensitivity for classifying the top 10% of hospitals when small hospitals are sequentially excluded. Plus: PROB2 with Cprob = μ + τΦ−1(0.9).
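The sequential-exclusion procedure can be sketched in base R for DIR. The function below averages hospital-level bivariate normal contributions, with the cutoff set at the 100c% percentile of the normal mixture of the hospital means, mirroring the structure of the Appendix R code. The helper bvn_upper and the log-normal sample sizes are our own assumptions. Unlike the procedure in the text, μ, τ2, and σ2 are held fixed rather than re-estimated after each exclusion, and DIR is used instead of PROB2 to keep the sketch short.

```r
# Sketch: DIR sensitivity as small hospitals are excluded (fixed parameters).
bvn_upper <- function(a, b, rho) {
  # Pr(Z1 > a, Z2 > b), standard bivariate normal with correlation rho
  f <- function(z) dnorm(z) * pnorm((rho * z - a) / sqrt(1 - rho^2))
  integrate(f, lower = b, upper = Inf)$value
}

sens_dir <- function(n, mu, tau2, sigma2, c_tier) {
  sd_i <- sqrt(tau2 + sigma2 / n)   # marginal SD of ybar_i
  rho  <- sqrt(tau2) / sd_i         # corr(ybar_i, mu_i)
  # 100c% percentile of the equally weighted normal mixture of ybar_i
  q <- uniroot(function(x) mean(pnorm((x - mu) / sd_i)) - c_tier,
               lower = mu - 10 * max(sd_i), upper = mu + 10 * max(sd_i),
               tol = 1e-10)$root
  contrib <- mapply(bvn_upper, a = (q - mu) / sd_i, rho = rho,
                    MoreArgs = list(b = qnorm(c_tier)))
  mean(contrib) / (1 - c_tier)      # average of hospital-level contributions
}

set.seed(1)
n_all <- pmax(1, round(rlnorm(300, meanlog = log(30), sdlog = 1)))  # hypothetical
cutoffs <- 1:30                     # exclude hospitals with n_i < cutoff
sens_by_cut <- sapply(cutoffs, function(m)
  sens_dir(n_all[n_all >= m], mu = 3.48, tau2 = 0.29, sigma2 = 2.31, c_tier = 0.9))
target <- 0.75
first_ok <- cutoffs[which(sens_by_cut >= target)[1]]  # NA if never reached
print(round(sens_by_cut, 3))
print(first_ok)
```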

The developed formulae might also be useful for designing profiling studies. For example, to estimate the sample size required to achieve a desired classification accuracy, we can solve for the ni's from the accuracy functions in Table I when either sensitivity or specificity is predetermined. A simple solution is to assume an equal sample size per hospital, but this might be impractical because hospital volume often varies; for example, it is unlikely that many patients can be recruited at some rural hospitals within a limited study period. Instead, we can stratify the distribution {ni} (e.g., using historical data) and solve for the required ni's in each stratum. For instance, we might divide hospitals into three groups (large, medium, and small) based on their volume and assume that the average sample size in the small-volume hospitals is nS and that in the medium-volume and large-volume hospitals is nM = K1nS and nL = K2nS, respectively. On the basis of empirical estimates of K1 and K2, we could then solve for nS, nM, and nL from the formulae.
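Under the simple equal-sample-size design, the required common ni can be found by a one-dimensional root search, because the sensitivity increases with n through ρ. The sketch below assumes known τ2 and σ2 (the example-data values), a target sensitivity of 0.8 chosen only for illustration, and our own helper bvn_upper for the bivariate normal orthant probability. A stratified design would require repeating the search on the averaged formula rather than on this balanced special case.

```r
# Sketch: common sample size n (balanced design) reaching a target sensitivity.
bvn_upper <- function(a, b, rho) {
  # Pr(Z1 > a, Z2 > b), standard bivariate normal with correlation rho
  f <- function(z) dnorm(z) * pnorm((rho * z - a) / sqrt(1 - rho^2))
  integrate(f, lower = b, upper = Inf)$value
}

sens_balanced <- function(n, c_tier, tau2, sigma2) {
  rho <- sqrt(tau2 / (tau2 + sigma2 / n))  # corr(ybar_i, mu_i)
  z <- qnorm(c_tier)
  bvn_upper(z, z, rho) / (1 - c_tier)
}

required_n <- function(target, c_tier, tau2, sigma2, n_max = 1e4) {
  # Sensitivity increases with n, so a root search on [1, n_max] suffices.
  uniroot(function(n) sens_balanced(n, c_tier, tau2, sigma2) - target,
          lower = 1, upper = n_max, tol = 1e-6)$root
}

n_req <- required_n(target = 0.8, c_tier = 0.9, tau2 = 0.29, sigma2 = 2.31)
ceiling(n_req)   # round up to a whole number of patients per hospital
```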

5. Binary outcome

We next consider the extension to a binary performance measure. The example dataset is a subset of the Hospital Compare database (www.hospitalcompare.hhs.gov), which is collected by CMS and includes process performance measures from more than 4000 US hospitals for care delivered to patients eligible for Medicare. We use one measure designed to assess the compliance rate of ‘Influenza vaccination’ for patients diagnosed with pneumonia from October 2005 to September 2006. For each hospital, the data contain the number of patients who were admitted and were eligible for the vaccination (denominator) and the number of eligible patients who received it (numerator). The guidelines for defining patient eligibility and the sample selection criteria are given in the CMS specifications. The dataset includes 3304 hospitals with nonmissing values for both numerator and denominator.

Because of the binary nature of the outcome, we consider a logistic-normal model [32]:

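$$y_i \mid p_i \sim \mathrm{Binomial}(n_i, p_i), \qquad \operatorname{logit}(p_i) \sim N(\mu_B, \tau_B^2) \tag{12}$$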

where yi is the number of patients receiving the vaccination at hospital i (numerator), ni is the number of eligible patients at hospital i (denominator), pi is the hospital-level compliance rate, and μB and τB² are the mean and variance of the random effects on the logit scale of pi. A larger pi indicates higher compliance with the vaccination procedure at hospital i, which may imply better quality.

Similar to the case of continuous measures (Section 2), we consider four classification methods:

DIR: Hospital i is included in the high tier if yi/ni > {yi/ni}100c.

SHR: Hospital i is classified into the high tier if p̂i > {p̂i}100c, where p̂i is the shrinkage estimator for pi under model (12).

PROB1: Hospital i is included in the high tier if , where Pprob is given.

PROB2: Hospital i is included in the high tier if , where Cprob is given.

However, unlike for model (2), closed-form formulae for accuracy measures under model (12) are intractable. We therefore use a simulation-based strategy to assess and compare the methods. We first fit model (12) to the dataset using a Bayesian scheme with vague priors, μB ~ N(0, 1000) and τB ~ Unif(10−3, 100), and obtain posterior medians of μB and τB² based on 1000 draws. In the simulation, μB is fixed at 1.1, and τB² is increased from 0.1 to 4.0 in increments of 0.1 to reflect increasing between-hospital variation. For each combination of μB and τB², we use the actual sample sizes {ni} to generate data {yi} under model (12) for 100 replicates. We apply the four methods to each replicate to identify the top 10% of hospitals (PROB1: Pprob = 0.9; PROB2: logit(Cprob) = μB + τBΦ−1(0.9)) and calculate the average ‘empirical’ sensitivity and specificity over the 100 simulations.
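The simulation-based strategy can be outlined in R. The sketch draws logit(pi) ~ N(μB, τB²) and yi ~ Binomial(ni, pi) as in model (12), fixes μB = 1.1, and reports the average empirical sensitivity of DIR for a few values of τB². The denominators are hypothetical, and the true top tier is defined by the 100c% percentile of pi, obtained by mapping μB + τBΦ−1(c) back through the inverse logit. SHR, PROB1, and PROB2 would additionally require fitting model (12) to every replicate, which is omitted here.

```r
# Sketch of the simulation-based strategy for binary data, DIR only.
set.seed(2012)
n <- sample(10:300, 500, replace = TRUE)   # hypothetical denominators {n_i}

sim_sens_dir <- function(tau2B, n, muB, c_tier = 0.9, n_rep = 100) {
  # {p_i}_{100c}: logit is monotone, so the percentile maps through plogis()
  thr_true <- plogis(muB + sqrt(tau2B) * qnorm(c_tier))
  sens <- numeric(n_rep)
  for (r in seq_len(n_rep)) {
    p <- plogis(rnorm(length(n), muB, sqrt(tau2B)))  # true compliance rates
    y <- rbinom(length(n), size = n, prob = p)       # numerators
    phat <- y / n                                    # DIR estimates
    flag <- phat > quantile(phat, c_tier)            # classified top tier
    true_top <- p > thr_true                         # truly top tier
    sens[r] <- sum(flag & true_top) / sum(true_top)
  }
  mean(sens)
}

tau2_grid <- c(0.1, 0.5, 1, 2, 4)
sens_dir <- sapply(tau2_grid, sim_sens_dir, n = n, muB = 1.1)
print(round(rbind(tau2B = tau2_grid, DIR = sens_dir), 3))
```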

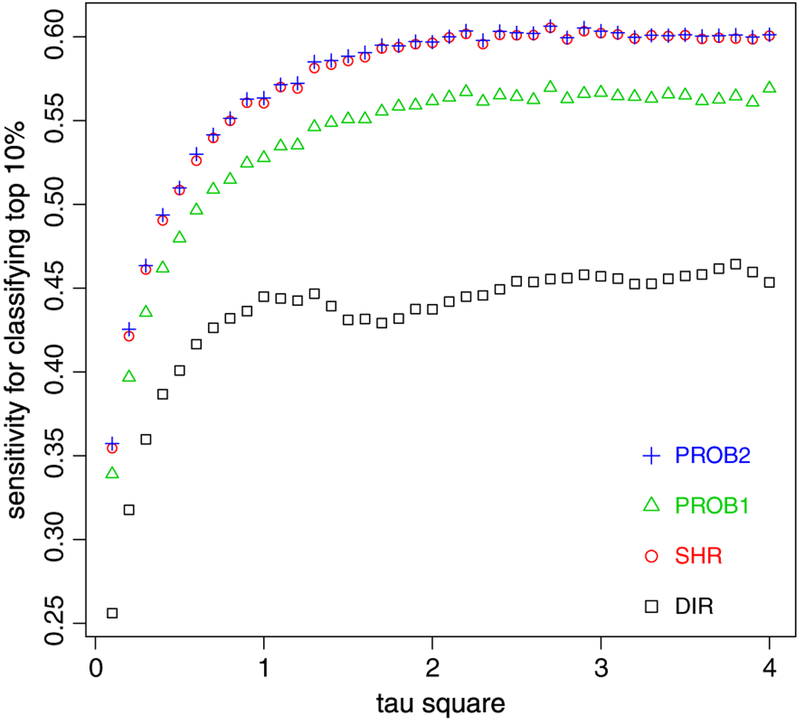

Figure 7 shows the sensitivity estimates as a function of τB². As τB² increases, the sensitivity of all methods generally increases. DIR has the lowest sensitivity. SHR and PROB2 both have some advantages over the other two methods and are nearly indistinguishable over the range of τB² tested. Results for the specificity function show similar patterns (not shown). Overall, the comparative pattern is similar to that for continuous measures under normal random-effects models (Section 4).

Figure 7.

Sensitivity estimates for binary performance data. square: direct method (DIR), circle: shrinkage method (SHR), triangle: PROB1, plus: PROB2. The classification goal is to identify top 10% of hospitals.

In addition, we might use a double simulation strategy to quantify the uncertainty in the estimated accuracy measures. First, we obtain posterior draws of μB and τB² from their joint posterior distribution given the observed data. Then, for each draw, we treat the parameter values as the truth, generate random samples of {yi}, and obtain the average accuracy estimates across simulations as described earlier. Ignoring Monte Carlo error, this yields a posterior sample of the accuracy function, from which a 95% credible interval can be constructed. However, this strategy might be computationally intensive.

An alternative strategy is to apply the arcsine square root transformation to the proportion data by considering the model [33]

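$$\arcsin\sqrt{y_i/n_i} \mid \theta_i \;\dot{\sim}\; N\!\left(\theta_i, \frac{1}{4n_i}\right), \qquad \theta_i \sim N(\mu_\theta, \tau_\theta^2) \tag{13}$$

where θi = arcsin(√pi). On the transformed scale θi, model (13) reduces to model (2) with σ2 set to 1/4. Because θi is a monotonic transformation of pi, the accuracy of classifying hospitals on pi can be readily calculated on θi using the formulae in Table I.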
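The variance-stabilizing property behind σ2 = 1/4 can be checked directly. The R sketch below computes the exact variance of arcsin(√(yi/ni)) under a binomial distribution by summing over all possible outcomes and divides it by the approximation 1/(4ni); the compliance rate p = 0.7 and the sample sizes are hypothetical.

```r
# Exact variance of the arcsine square-root transform of a binomial proportion,
# relative to the approximation 1 / (4 n) implied by sigma^2 = 1/4.
asin_var_exact <- function(n, p) {
  k <- 0:n
  w <- dbinom(k, size = n, prob = p)   # binomial probabilities of y = k
  theta_hat <- asin(sqrt(k / n))       # transformed proportions
  m <- sum(w * theta_hat)
  sum(w * (theta_hat - m)^2)
}

p  <- 0.7                              # hypothetical compliance rate
ns <- c(20, 50, 100, 200)
ratio <- sapply(ns, function(n) asin_var_exact(n, p) * 4 * n)  # exact / (1/(4n))
print(round(rbind(n = ns, ratio = ratio), 3))
```

Ratios close to 1 indicate that treating 1/(4ni) as the within-hospital variance is reasonable for moderate ni; the approximation is known to deteriorate for small ni or rates near 0 or 1.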

However, the simulation-based strategy might be more viable for models with more complicated features, such as risk adjustment or non-normal random effects (Section 6), for which closed-form formulae are lacking. Using software familiar to practitioners (e.g., R and WinBUGS), this approach can be largely automated given well-specified inputs such as hospital-level sample sizes, the population mean of the underlying quality, and the between- and within-hospital variation. For model (12), the simulation code is available upon request.

6. Discussion

Profiling hospitals using performance data is an important activity in both research and practice. The purpose of this study is to assess and compare the accuracy of several commonly used approaches to classifying hospitals into quality tiers. We derive the expected accuracy of these methods under the classic unbalanced one-way random-effects model (2), the basic form of profiling models. Our study shows that the optimal approach is to use posterior probabilities for classification (PROB2) and set Cprob = {μi}100c. Our numerical evaluations show that classification using SHR might have comparable performance. Therefore, we suggest that practitioners use these two methods rather than the alternatives. However, for data with high reliability (larger between- than within-provider variance), there is little difference among these methods. We advocate that practitioners calculate and report the expected accuracy of the classification using the developed formulae or simulation strategies.

Actual hospital performance data often have complicated structures and therefore require more sophisticated statistical models. Our study is based on the one-way random-effects model, a largely simplified analytical framework. Our research is therefore a building block with several limitations that deserve future research. For example, our simulation results for binary performance data also suggest that PROB2 has the highest classification accuracy, which raises the question of how general this pattern is. If we view competing classification approaches as alternative decisions, then the goal of identifying the top 100(1 − c)% of hospitals with the largest μi's has the utility function U(d, μi) = I(μi > {μi}100c) and the expected utility E[U(d, μi) | data] = Pr(μi > {μi}100c | data). Arguments from [16, 24], which stem from Bayesian decision theory [34], suggest that the optimal decision should maximize the total expected utility over the selected hospitals. Therefore, choosing the top 100(1−c)% of hospitals with the largest posterior probability of being greater than {μi}100c would minimize the expected misclassification. This argument might hold regardless of model assumptions on the distribution of {μi} and of data features (e.g., unbalanced data). Verification of this conjecture, however, requires further research.

Also note from Figure 7 that the ‘wiggle’ of the curves suggests that the accuracy functions over τB² for binary performance measures might have more complicated forms than those for the continuous case, which are smoother (Figure 3). One future research topic, of a more technical flavor, is to obtain approximation formulae for accuracy measures under model (12). This problem might be related to the well-known problem of constructing approximate confidence intervals for binomial proportions [35]. Approximation formulae could serve as off-the-shelf tools for exploratory analysis and might guard against possible programming errors in simulation studies.

In addition, we will consider models with risk adjustment that are suitable for outcome performance measures, controlling for patient-level confounders. An example is

| (14) |

where the risk is adjusted through covariates xij. The random effect β0i, unexplained by risk adjustment, quantifies the quality of hospital i. For an estimator of β0i, , the sensitivity of using to classify the top 100(1−c)% of hospitals is

When the normality assumption of the random-effects models (1), (12), and (14) is violated, as often happens with actual data, the classification problem might be considerably harder. We foresee two future research directions. One is to conduct simulation-based comparative studies that assess the performance of the classification approaches on data generated from models with non-normal random effects, while still fitting normal random-effects models for estimation, because software for non-normal random effects is not readily available. Similar studies can be found in [36–38], which examined the behavior of estimates of fixed effects and variance components under misspecified linear or generalized linear mixed-effects models. Another is to estimate the classification accuracy using a semiparametric Bayesian approach, such as a Dirichlet process prior to model non-normal random effects [39, 40].

The methods developed for a single outcome can be extended to the case in which hospital programs collect and report multiple related performance indicators. A commonly used strategy is to summarize these measures into a unidimensional composite score and to classify the programs using that score. The composite score can be constructed as a simple average or estimated via Bayesian hierarchical latent variable models [18, 41–43]. Because a latent variable can in general also be treated as a random effect [44], the formulae developed under model (2) might be extended to this composite-scoring approach.

A related technique in profiling is to rank hospitals, and there exists a large body of related literature. For example, [45] pointed out that, under a two-stage model as in Eq. (2), ranking based on the posterior distribution of ranks is preferable to ranking based on the posterior means. Lockwood et al. [46] performed a simulation-based investigation of the performance of optimal ranking procedures and related percentile methods, showing that their performance varies considerably within a typical range of reliability. Lin et al. [47] presented a theoretical study of optimal ranking procedures under various loss functions. For the loss function targeting identification of the top 100(1−c)% of units using ranks, they showed that the optimal ranking is asymptotically equivalent to the ‘exceeding probability’ procedure, which is better than ranking using observed means or posterior means. This is consistent with our finding that PROB2 achieves better accuracy than DIR or SHR for classification under model (1). Therefore, additional research might elucidate the connection between ranking and classification.

Finally, although our research is motivated by hospital classification, the methods might also be applied and extended to other settings involving program classification, most notably performance indicators in education [48–50].

Acknowledgement

The findings and conclusions in this study are those of the authors and do not necessarily represent the views of the Centers for Disease Control and Prevention. The authors acknowledge Jennifer Madans for her valuable input.

Appendix

Classification using an external threshold (Section 2.2)

We develop the functions for accuracy measures if an absolute threshold c1 is used. The sensitivity from using the linear classifier is

and the specificity is

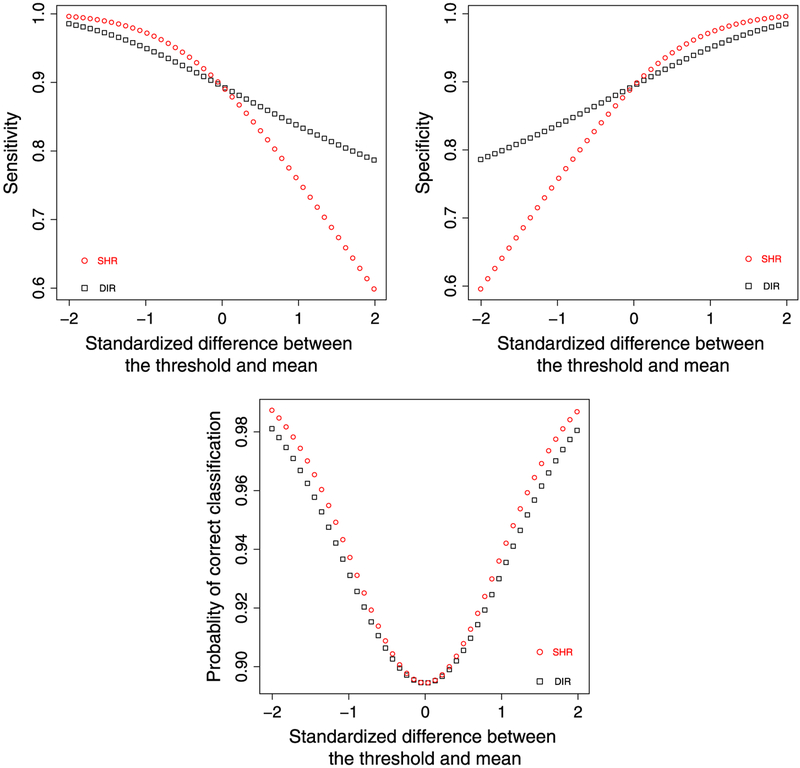

In the following, we examine the behavior of the classifiers based on the direct and shrinkage estimators. For brevity, we do not consider the classifiers using posterior probabilities because they require an additional cutoff on the probability scale. We consider sensitivity, specificity, and the probability of correct classification (PCC) as a summary measure of sensitivity and specificity [51]. After some algebra, we derive the corresponding formulae, which are listed in Table A1.

We focus on the comparison between the two classifiers. Without loss of generality, suppose c1 > μ; then

$$\mathrm{SEN}_{\mathrm{DIR}} > \mathrm{SEN}_{\mathrm{SHR}}. \tag{A1}$$

An intuitive explanation for Eq. (A1) is that, compared with the true quality μi, the direct estimator tends to be overdispersed, whereas the shrinkage estimator is shrunk toward the center. If the goal is to identify hospitals in the right tail (top performers), SHR is more likely to miss them because of the shrinkage and therefore has a lower sensitivity than DIR. It can also be shown that SPECDIR < SPECSHR. When c1 < μ, the inequalities are reversed. When c1 = μ, SENDIR = SENSHR and SPECDIR = SPECSHR.

Table A1.

Sensitivity, specificity, and probability of correct classification for classifying hospitals using the external threshold c1.

| Method | Accuracy measures |
|---|---|
| DIR | Sensitivity: |
| | Specificity: |
| | PCC: |
| SHR | Sensitivity: |
| | Specificity: |
| | PCC: |

DIR, direct method; SHR, shrinkage method; PCC, probability of correct classification.

Despite the trade-off between the sensitivity and specificity of the two methods, one might ask which method yields the higher PCC. Note that PCC for both methods is an average of hospital-specific terms Li(xi), where xi takes one form for DIR and another for SHR. We seek the maximum of Li as a function of xi. After some algebra, we obtain the first derivative of Li; setting it to 0 gives a stationary point, and checking the second derivative shows that Li achieves its local maximum there, at the value of xi that corresponds to SHR. Summing over i and averaging gives PCCSHR > PCCDIR. More generally, SHR yields the highest PCC among all linear classifiers when an external threshold is used.

We use the example data in Section 4 to illustrate the comparative pattern. We set c1 = sτ, where s varies from −2 to 2 in increments of 0.01, and calculate the sensitivity, specificity, and PCC of DIR and SHR for each c1. Figure A1 plots the results, showing the trade-off in sensitivity and specificity between the two methods and the superiority of SHR over DIR in PCC across all c1's.
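The direction of the inequalities above can be checked numerically. The following R sketch rests on our own derivation under model (2), not on the (unreproduced) expressions of Table A1: (μi, ȳi) are bivariate normal with correlation ρi = τ/(τ2 + σ2/ni)1/2, DIR flags ȳi > c1, and SHR flags μ + ρi²(ȳi − μ) > c1, where ρi² equals the shrinkage weight τ2/(τ2 + σ2/ni). The hospital sample sizes and the threshold c1 = μ + τ are hypothetical, and bvn_upper and bvn_lower are helper functions defined here.

```r
# Sketch: DIR vs. SHR with an external threshold c1 under model (2).
bvn_upper <- function(a, b, rho) {   # Pr(Z1 > a, Z2 > b)
  f <- function(z) dnorm(z) * pnorm((rho * z - a) / sqrt(1 - rho^2))
  integrate(f, lower = b, upper = Inf)$value
}
bvn_lower <- function(a, b, rho) {   # Pr(Z1 <= a, Z2 <= b)
  f <- function(z) dnorm(z) * pnorm((a - rho * z) / sqrt(1 - rho^2))
  integrate(f, lower = -Inf, upper = b)$value
}

acc_one <- function(n_i, c1, mu, tau2, sigma2) {
  a_i <- sqrt(tau2 + sigma2 / n_i)   # SD of ybar_i
  rho <- sqrt(tau2) / a_i            # corr(ybar_i, mu_i)
  z_true <- (c1 - mu) / sqrt(tau2)   # event mu_i > c1
  z_est  <- c(DIR = (c1 - mu) / a_i,            # ybar_i > c1
              SHR = (c1 - mu) / (rho^2 * a_i))  # mu + rho^2 (ybar_i - mu) > c1
  sapply(z_est, function(z) {
    tp <- bvn_upper(z_true, z, rho)  # truly above c1 and classified above c1
    tn <- bvn_lower(z_true, z, rho)  # truly below c1 and classified below c1
    c(sens = tp / (1 - pnorm(z_true)), spec = tn / pnorm(z_true), pcc = tp + tn)
  })
}

mu <- 3.48; tau2 <- 0.29; sigma2 <- 2.31
n  <- c(5, 20, 80)                   # hypothetical hospital sample sizes
c1 <- mu + sqrt(tau2)                # external threshold above the mean
acc <- Reduce(`+`, lapply(n, acc_one, c1 = c1, mu = mu, tau2 = tau2,
                          sigma2 = sigma2)) / length(n)
print(round(acc, 4))
```

With c1 > μ, the output should show higher sensitivity for DIR, higher specificity for SHR, and higher PCC for SHR, in line with Eq. (A1) and the PCC argument.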

Figure A1.

Top left panel: sensitivity from direct method (DIR) and shrinkage method (SHR) (square: DIR, circle: SHR). Top right panel: specificity from DIR and SHR. Bottom panel: probability of correct classification from DIR and SHR. X-axis: .

Derivations of Eqs. (7) and (8) (Section 3.1)

The sensitivity of using the linear classifier is

where μi > {μi}100c means that hospital i belongs to the top tier, and the event that the linear classifier exceeds its 100c% percentile means that hospital i is classified into the top tier by the linear classifier. The denominator Pr(μi > {μi}100c) is 1 − c because we use a relative threshold to identify the top 100(1−c)% of the hospitals, where {μi}100c = μ + τΦ−1(c) under model (2).

To work out the numerator, first let cLIN denote the 100c%-tile of the linear classifier. On the basis of Eq. (5), the linear classifier has a marginal distribution that is a mixture of normals, from which cLIN is obtained. On the basis of Eq. (6), the linear classifier and μi jointly follow a mixture of bivariate normal distributions, from which the numerator is obtained.

Similarly, for specificity

The denominator is c, because 100c% of the M hospitals are in the suboptimal tier. For the numerator, the cutoff point cLIN remains the same as that for the sensitivity, and the joint probability can still be calculated from the bivariate normal mixture, with the signs reversed relative to the numerator of the sensitivity. Also note that in general The equality only holds in some limiting cases (e.g., both cLIN → ∞ and c → 1 or ρi → 1). This leads to Equations (7) and (8).

Identifying the optimal linear classifier (Section 3.2)

The optimal classifier is expected to maximize sensitivity or specificity. For sensitivity, the goal is to maximize objective function , subject to , where . This optimization problem can be solved by the Lagrange multiplier. Let , then , and where Φ2 abbreviates and ϕ() denotes the probability density function of the univariate standard normal. Also

| (A2) |

Therefore, , and .

Set the derivatives to 0, that is, and , Because ϕ(xi) > 0 for xi ∊ R, then , suggesting that the solution to xi, where λ* is the solution to λ.

For the Hessian matrix, , the off-diagonal element is zero because does not involve xj for j ≠ i. The i-th diagonal element . Plugging in , the first term drops, and after simplification for the second term, we obtain . For any nonzero vector A = (a1,a2, …, an)t, the quadratic term Similar derivations apply for specificity, and we omit the details.

Sample R code for calculating expected classification accuracy

rm(list = ls())
# library(mvtnorm) is used to calculate the cumulative distribution function of bivariate normals;
# library(nor1mix) is used to calculate the quantiles of normal mixtures.
library(mvtnorm)
library(nor1mix)
# Functions sens.formula.vec and spec.formula.vec calculate bivariate normal probabilities in vector form.
# The parameter q is the cutoff point c.
# The parameter x is a vector of length 2: x[1] is the cutoff point for Z1i in Table I; x[2] is the correlation coefficient rho_i.
sens.formula.vec <- function(q, x) as.numeric(pmvnorm(lower = c(x[1], qnorm(q)), upper = Inf, corr = matrix(c(1, x[2], x[2], 1), 2, 2))) / (1 - q)
spec.formula.vec <- function(q, x) as.numeric(pmvnorm(lower = -Inf, upper = c(x[1], qnorm(q)), corr = matrix(c(1, x[2], x[2], 1), 2, 2))) / q
# Assign parameter values: true.mean is mu; sigma.square is sigma^2; tau.square is tau^2.
# The values below are those estimated from the example data in Section 4;
# they can be changed to match the data at hand.
true.mean <- 3.48
sigma.square <- 2.31
tau.square <- 0.29
# K is tau/sigma, the ratio of between- to within-hospital variability (K is about 0.4 here).
K <- sqrt(tau.square) / sqrt(sigma.square)
# sample.size is {n_i}, the vector of sample sizes for each hospital.
# The example dataset in Section 4 is attached to the manuscript;
# users can input the sample sizes from their own data.
sample.size <- scan(file = "ed08_sample_size.dat", na.strings = ".")
# N is the number of hospitals (329 in the example data).
N <- length(sample.size)
# q is the cutoff c; q = 0.9 classifies the top 10% of hospitals. Users can change it.
q <- 0.9
# prob.class is the probability threshold Pprob for the PROB1 method. Users can change it.
prob.class <- 0.9
# cut.prob is the cutoff value Cprob for the PROB2 method, set here to the 100q-th
# (i.e., 90th) percentile of mu_i, mu + tau * qnorm(q). Users can change it.
cut.prob <- true.mean + qnorm(q) * sqrt(tau.square)
# true.rho.vec is the vector of correlation coefficients {rho_i}.
true.rho.vec <- sqrt(K^2 / (K^2 + 1 / sample.size))
# post.sd is the posterior SD of mu_i, sqrt(rho_i^2 * sigma^2 / n_i), used by PROB1 and PROB2.
post.sd <- sqrt(true.rho.vec^2 * sigma.square / sample.size)
# Labels "dir", "shr", "prob1", and "prob2" refer to the DIR, SHR, PROB1, and PROB2 methods.
# marginal.var.dir is the marginal variance of the DIR classifier, i.e., the component variances of the normal mixture in Eq. (6).
marginal.var.dir <- tau.square + sigma.square / sample.size
marginal.var.shr <- tau.square^2 / marginal.var.dir
marginal.var.prob1 <- marginal.var.prob2 <- marginal.var.shr
# dir.mixture is the normal mixture for the DIR method stated in Eq. (6);
# quantile.dir.mixture is the corresponding cutoff value stated in Table I.
dir.mixture <- norMix(rep(true.mean, N), sig2 = marginal.var.dir)
quantile.dir.mixture <- qnorMix(p = q, obj = dir.mixture)
shr.mixture <- norMix(rep(true.mean, N), sig2 = marginal.var.shr)
quantile.shr.mixture <- qnorMix(p = q, obj = shr.mixture)
prob1.mixture <- norMix(true.mean - qnorm(prob.class) * post.sd, sig2 = marginal.var.prob1)
quantile.prob1.mixture <- qnorMix(p = q, obj = prob1.mixture)
prob2.mixture <- norMix((true.mean - cut.prob) / post.sd, sig2 = sample.size * K^2)
quantile.prob2.mixture <- qnorMix(p = q, obj = prob2.mixture)
# cut.off.* is the cutoff for Z1i in the bivariate normal cdf for each method, stated in Table I.
cut.off.dir <- (quantile.dir.mixture - true.mean) / sqrt(marginal.var.dir)
cut.off.shr <- (quantile.shr.mixture - true.mean) / sqrt(marginal.var.shr)
cut.off.prob1 <- (quantile.prob1.mixture - true.mean + qnorm(prob.class) * post.sd) / sqrt(marginal.var.prob1)
cut.off.prob2 <- (quantile.prob2.mixture * post.sd - true.mean + cut.prob) / sqrt(marginal.var.prob2)
# Each *.mat combines the cutoffs and true.rho.vec for row-wise (per-hospital) computation.
dir.mat <- cbind(cut.off.dir, true.rho.vec)
shr.mat <- cbind(cut.off.shr, true.rho.vec)
prob1.mat <- cbind(cut.off.prob1, true.rho.vec)
prob2.mat <- cbind(cut.off.prob2, true.rho.vec)
# Hospital-level contributions to sensitivity for each method.
sens.mixture.dir.vec <- apply(dir.mat, 1, sens.formula.vec, q = q)
sens.mixture.shr.vec <- apply(shr.mat, 1, sens.formula.vec, q = q)
sens.mixture.prob1.vec <- apply(prob1.mat, 1, sens.formula.vec, q = q)
sens.mixture.prob2.vec <- apply(prob2.mat, 1, sens.formula.vec, q = q)
# Hospital-level contributions to specificity for each method.
spec.mixture.dir.vec <- apply(dir.mat, 1, spec.formula.vec, q = q)
spec.mixture.shr.vec <- apply(shr.mat, 1, spec.formula.vec, q = q)
spec.mixture.prob1.vec <- apply(prob1.mat, 1, spec.formula.vec, q = q)
spec.mixture.prob2.vec <- apply(prob2.mat, 1, spec.formula.vec, q = q)
# Average over all hospitals for the overall sensitivity of each method.
mean(sens.mixture.dir.vec)
mean(sens.mixture.shr.vec)
mean(sens.mixture.prob1.vec)
mean(sens.mixture.prob2.vec)
# Average over all hospitals for the overall specificity of each method.
mean(spec.mixture.dir.vec)
mean(spec.mixture.shr.vec)
mean(spec.mixture.prob1.vec)
mean(spec.mixture.prob2.vec)
# These estimates should correspond to those under 'DIRECT' in Table II.

References

- 1. Donabedian A. Evaluating the quality of medical care. Milbank Memorial Fund Quarterly 1966; 44:166–203.

- 2. Iezzoni LI (ed.). Risk Adjustment for Measuring Health Care Outcomes. Health Administration Press: Chicago, IL, 2003.

- 3. Gatsonis CA. Profiling providers of medical care. In Encyclopedia of Biostatistics, Vol. 3. Wiley: New York, 1998; 3536.

- 4. Institute of Medicine. Performance Measurement: Accelerating Improvement. The National Academies Press: Washington DC, 2006.

- 5. Rosenthal MB, Frank RG, Li Z, Epstein AM. Early experience with pay-for-performance: from concept to practice. Journal of the American Medical Association 2005; 294:1788–1793.

- 6. Premier Inc. Centers for Medicare and Medicaid Services (CMS)/Premier Hospital Quality Incentive Demonstration project: findings from year 2, 2007. Accessed from http://www.premierinc.com/quality-safety/tools-services/p4p/hqi/resources/hqi-whitepaper-year2.pdf.

- 7. Rao JNK. Small Area Estimation. Wiley: New Jersey, 2003.

- 8. Christiansen CL, Morris CN. Improving the statistical approach to health care provider profiling. Annals of Internal Medicine 1997; 127:764–768.

- 9. McGlynn EA. Introduction and overview of the conceptual framework for a national quality measurement and reporting system. Medical Care 2003; 41:1–7.

- 10. Thomas JW, Hofer TP. Accuracy of risk-adjusted mortality rates as a measure of hospital quality of care. Medical Care 1999; 37:83–92.

- 11. Adams JL, Mehrotra A, Thomas JW, McGlynn EA. Physician cost profiling: reliability and risk of misclassification. New England Journal of Medicine 2010; 362:1014–1021.

- 12. Normand SLT, Shahian DM. Statistical and clinical aspects of hospital outcomes profiling. Statistical Science 2007; 22:206–226.

- 13. Miller M, Richardson JM, Bloniaz K. More on physician cost profiling. New England Journal of Medicine 2010; 363:2075–2076.

- 14. Adams JL, Mehrotra A, Thomas JW, McGlynn EA. The authors reply to “More on physician cost profiling” by Miller et al. (2010). New England Journal of Medicine 2010; 363:2076.

- 15. Normand SLT, Wolf RE, Ayanian JZ, McNeil BJ. Assessing the accuracy of hospital clinical performance measures. Medical Decision Making 2007; 27:9–20.

- 16. Austin PC. Bayes rules for optimally using Bayesian hierarchical regression models in provider profiling to identify high-mortality hospitals. BMC Medical Research Methodology 2008; 8:30–40.

- 17. Jones HE, Spiegelhalter DJ. The identification of “unusual” health-care providers from a hierarchical model. The American Statistician 2011; 65:154–163.

- 18. Teixeira-Pinto A, Normand SLT. Statistical methodology for classifying units on the basis of multiple-related measures. Statistics in Medicine 2008; 27:1329–1350.

- 19. McCulloch CE, Searle SR, Neuhaus JM. Generalized, Linear, and Mixed Models (2nd edn). Wiley: New Jersey, 2008.

- 20. James W, Stein C. Estimation with quadratic loss. In Proceedings of the Fourth Berkeley Symposium on Mathematical Statistics and Probability, Vol. 1. University of California Press: Berkeley, 1961; 361–379.

- 21. Efron B, Morris CN. Data analysis using Stein’s estimator and its generalizations. Journal of the American Statistical Association 1975; 70:311–319.

- 22. Burgess JF, Christiansen CL, Michalak SE, Morris CN. Medical profiling: improving standards and risk adjustment using hierarchical models. Journal of Health Economics 2000; 19:291–309.

- 23. Jones HE, Spiegelhalter DJ. Accounting for regression-to-the-mean in tests for recent changes in institutional performance: analysis and power. Statistics in Medicine 2009; 28:1645–1667.

- 24. Austin PC, Brunner LJ. Optimal Bayesian probability levels for hospital report cards. Health Services and Outcomes Research Methodology 2008; 8:80–97.

- 25. Zheng H, Zhang W, Ayanian JZ, Zaborski LB, Zaslavsky AM. Profiling hospitals by survival of patients with colorectal cancer. Health Services Research 2011; 46:729–746.

- 26. Slepian D. The one-sided barrier problem for Gaussian noise. Bell System Technical Journal 1962; 41:463–501.

- 27. Neudecker H, Magnus JR. Matrix Differential Calculus with Applications in Statistics and Econometrics. Wiley: New York, 1988.

- 28. McCaig LF, McLemore T. Plan and operation of the National Hospital Ambulatory Medical Care Survey. Series 1: programs and collection procedures. Vital and Health Statistics 1994; 34:1–78.

- 29. Pines JM, Decker SL, Hu T. Exogenous predictors of national performance measures for emergency department crowding. Annals of Emergency Medicine 2012; 60:293–298.

- 30. Gelman A, Carlin JB, Stern HS, Rubin DB. Bayesian Data Analysis. Chapman and Hall: London, 2004.

- 31. Gelman A, Rubin DB. Inference from iterative simulation using multiple sequences (with discussion). Statistical Science 1992; 7:457–511.

- 32. Thomas N, Longford NT, Rolph JE. Empirical Bayes method for estimating hospital-specific mortality rates. Statistics in Medicine 1994; 13:889–903.

- 33. Carter GM, Rolph JE. Empirical Bayes methods applied to estimating fire alarm probabilities. Journal of the American Statistical Association 1974; 69:880–885.

- 34. Berger JO. Statistical Decision Theory and Bayesian Analysis. Springer-Verlag: New York, 1980.

- 35. Brown LD, Cai TT, DasGupta A. Interval estimation for a binomial proportion (with discussion). Statistical Science 2001; 16:101–133.

- 36. Fellingham GW, Raghunathan TE. Sensitivity of point and interval estimates to distributional assumptions in longitudinal data analysis of small samples. Communications in Statistics - Simulation and Computation 1995; 24:617–630.

- 37. Austin PC. Bias in penalized quasi-likelihood estimation in random effects logistic regression models when the random effects are not normally distributed. Communications in Statistics - Simulation and Computation 2005; 34:549–565.

- 38. Litiere S, Alonso A, Molenberghs G. The impact of a misspecified random-effects distribution on the estimation and the performance of inferential procedures in generalized linear mixed models. Statistics in Medicine 2008; 27:3125–3144.

- 39. Paddock SM, Ridgeway G, Lin R, Louis TA. Flexible distributions for triple-goal estimates in two-stage hierarchical models. Computational Statistics and Data Analysis 2006; 50:3242–3262.

- 40. Ohlssen DI, Sharples LD, Spiegelhalter DJ. Flexible random-effects models using Bayesian semi-parametric models: applications to institutional comparisons. Statistics in Medicine 2007; 26:2088–2112.

- 41. Normand SLT, Wolf RE, McNeil BJ. Discriminating quality of hospital care in the US. Medical Decision Making 2008; 28:308–322.

- 42. Shwartz M, Ren J, Peköz EA, Wang X, Cohen AB, Restuccia JD. Estimating a composite measure of hospital quality from the Hospital Compare database: differences when using a Bayesian hierarchical latent variable model versus denominator-based weights. Medical Care 2008; 46:778–785.

- 43. He Y, Wolf RE, Normand SLT. Assessing geographical variations in hospital processes of care using multilevel item response models. Health Services and Outcomes Research Methodology 2010; 10:111–133.

- 44. Skrondal A, Rabe-Hesketh S. Generalized Latent Variable Modeling. Chapman and Hall/CRC: Boca Raton, FL, 2004.

- 45. Laird NM, Louis TA. Empirical Bayes ranking methods. Journal of Educational Statistics 1989; 14:29–46.

- 46. Lockwood JR, Louis TA, McCaffrey DF. Uncertainty in rank estimation: implications for value-added modeling accountability systems. Journal of Educational and Behavioral Statistics 2002; 27:255–270.

- 47. Lin R, Louis TA, Paddock SM, Ridgeway G. Loss function based ranking in two-stage, hierarchical models. Bayesian Analysis 2006; 1:915–946.

- 48. Aitkin M, Longford NT. Statistical modelling issues in school effectiveness studies. Journal of the Royal Statistical Society, Series A 1986; 149:1–43.

- 49. Goldstein H, Spiegelhalter DJ. League tables and their limitations: statistical issues in comparisons of institutional performance (with discussion). Journal of the Royal Statistical Society, Series A (Statistics in Society) 1996; 159:385–443.

- 50. Draper D, Gittoes M. Statistical analysis of performance indicators in UK higher education. Journal of the Royal Statistical Society, Series A (Statistics in Society) 2004; 167:449–474.

- 51. Zhou XH, Obuchowski NA, McClish DK. Statistical Methods in Diagnostic Medicine. Wiley: New York, 2002.