Abstract

The extent to which the major subdivisions of prefrontal cortex (PFC) can be functionally partitioned is unclear. In approaching the question, it is often assumed that the organization is task dependent. Here we use fMRI to show that PFC can respond in a task-independent way, and we leverage these responses to uncover a stimulus-driven functional organization. The results were generated by mapping the relative locations of responses to faces, bodies, scenes, disparity, color, and eccentricity in passively fixating macaques. The results control for individual differences in functional architecture and provide the first account of a systematic visual stimulus-driven functional organization across PFC. Responses were concentrated in two foci: one in dorsolateral PFC (DLPFC), in the ventral prearcuate region, and one in ventrolateral PFC (VLPFC), extending into orbital PFC. Face patches were in the VLPFC focus and were characterized by a striking lack of response to non-face stimuli rather than by an especially strong response to faces. Color-biased regions were near but distinct from face patches. One scene-biased region was consistently localized with different contrasts and overlapped the disparity-biased region to define the DLPFC focus. All visually responsive regions showed a peripheral visual-field bias. These results uncover an organizational scheme that presumably constrains the flow of information about different visual modalities into PFC.

Graphical Abstract

Introduction

The prefrontal cortex (PFC) of the macaque monkey is important for executive functions, including decision making, attention, and memory. Studies of anatomical connectivity, cytoarchitecture, and lesions show that PFC can be partitioned into several large domains (Averbeck and Seo, 2008; Petrides et al., 2012). These include lateral PFC, which is associated with generating goals based in part on visual content or recent events (Passingham and Wise, 2012), and orbital PFC, which is implicated in good-based decisions (Kaskan et al., 2016; Padoa-Schioppa and Conen, 2017; Rudebeck et al., 2017). The open question that we take up is the extent to which these large domains are themselves organized into functionally defined subregions. There is reason to believe that they are: functional architecture is a canonical property of cerebral cortex (Van Essen and Glasser, 2018), and array recordings show that one portion of lateral PFC, albeit a small one (the prearcuate region), can be subdivided based either on common neurophysiological noise (Kiani et al., 2015) or on response timing during a delayed eye-movement task (Markowitz et al., 2015). Addressing the functional organization of PFC is important for at least two reasons. First, a functional parcellation of PFC, if it exists, could shed light on how PFC implements executive functions (O’Reilly, 2010), in the same way that knowledge of the organization of inferior temporal cortex (IT) has opened opportunities to understand how IT brings about object vision (Conway, 2018; Janssens et al., 2014; Kravitz et al., 2012). Second, a functional parcellation would constrain models of how PFC evolved and how PFC is wired up during development and subsequent experience.

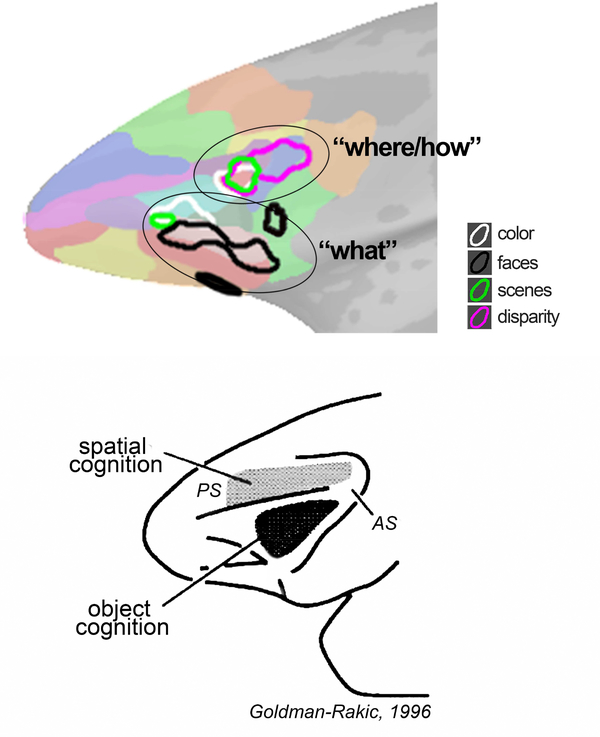

The idea that PFC houses functional compartments is an old one. Mishkin argued that the dorsolateral convexity computed spatial functions, while lateral orbital cortex maintained “central sets” (Mishkin, 1964). Goldman-Rakic developed this idea (Goldman-Rakic, 1996; Wilson et al., 1993), extending to lateral PFC the segregation of ventral versus dorsal visual streams (Ungerleider and Mishkin, 1982); her framework implicated dorsolateral PFC in spatial working memory and ventrolateral PFC in object working memory. Array recordings by Markowitz et al. (2015) and Kiani et al. (2015) support still other formulations of the organization of lateral PFC, but ones that nonetheless posit that lateral PFC contains functional partitions. Other microelectrode evidence, however, shows that stimulus and task-related information is distributed throughout lateral PFC, suggesting that dorsolateral and ventrolateral PFC form a single functional entity with a dynamic organization that reflects task demands (Buschman et al., 2011; Rainer et al., 1998; Rao et al., 1997; Stokes, 2015). For example, Buschman et al. observed that neurons across much of lateral PFC show color selectivity when color is a relevant dimension. Yet lateral PFC neurons do not always encode task-relevant stimulus features: neurons recorded in animals performing challenging color discriminations at different cued locations encode the spatial dimension, not stimulus color (Lara and Wallis, 2014). Discrepancies among studies in the reported stimulus selectivity of single cells (for example, to color) are thought to reflect differences in the tasks (such as the difficulty of the color discriminations). But as Lara and Wallis (2014) point out, another possibility is that different stimulus-specific signals are handled by separate, restricted regions within lateral PFC, which might not be evident given the sampling limitations of microelectrode recording.
A related question concerns the extent to which the various fine-grained anatomical parcellation schemes of lateral PFC (e.g., Petrides et al., 2012) are manifest as a functional architecture.

Many studies have shown that PFC neurons can be selective for sensory stimuli across sensory domains (Bodner et al., 1996; Qi and Constantinidis, 2013; Romanski, 2012; Romo et al., 1999). Within the visual domain, single-unit recording studies show that lateral PFC neurons respond to shape, color, and faces (Mishkin and Manning, 1978; Ninokura et al., 2004; Rushworth et al., 1997; Sakagami et al., 2001; Scalaidhe et al., 1999), and are sensitive to 3D and 2D information (Peng et al., 2008; Theys et al., 2012). These observations raise the possibility that visually driven responses, rather than task-dependent responses, could be exploited to uncover a functional organization (Riley et al., 2017). But the extent to which there is a task-independent, stimulus-driven organization across lateral PFC remains unclear, for several reasons. First, as described above, microelectrode studies of PFC suffer from sampling limitations that preclude an assessment of the topographic organization across PFC. Riley et al. (2017) addressed this challenge by pooling electrode recording data obtained in several animals, but sampling limitations remain a concern. Second, it is difficult to compare data acquired in different animals, either within a study (as in Riley et al.) or across studies, because of individual differences in anatomy and, likely, individual differences in functional organization, if it exists. The problems associated with pooling data across subjects are now widely acknowledged in fMRI studies: registering data to a common anatomical template and then generating group-average maps can obscure meaningful structure, especially if the individual variability in the absolute location of a functional domain with respect to anatomical landmarks is on the order of the size of the functional domains (Lafer-Sousa et al., 2016).
A topographical organization might be evident only in the conserved relative locations of functional domains, rather than in their absolute locations. Third, a satisfactory test of a visually driven functional organization requires that data be collected in animals that have no prior history of performing tasks and are not engaged in an overt cognitive task. Because it is widely assumed that PFC is involved in cognitive behavior, most studies of PFC have (reasonably) tested PFC function while subjects perform cognitive tasks.

One possible approach to address the question would be: (1) to use fMRI, which avoids the sampling limitations of traditional microelectrode recording and makes it relatively straightforward to measure responses to a large battery of stimuli in the same subject, controlling for individual differences in functional architecture; and (2) to identify functional landmarks across animals. The clusters of face-selective cells in prefrontal cortex (Scalaidhe et al., 1997), which are evident with fMRI (Tsao et al., 2008), could serve this purpose. But the number and location of frontal face patches vary across animals (Janssens et al., 2014), underscoring the importance of controlling for individual differences. Moreover, it is not known to what extent frontal face patches are selective for faces compared with other stimuli (such as color), whether there are other landmarks (functional or anatomical) that determine the location of face patches, and the extent to which other visually driven parts of PFC are sensitive to face stimuli. The results presented here address these issues and show for the first time a visual stimulus-driven architecture across PFC. The data facilitate a direct comparison of the stimulus-driven functional organization of prefrontal cortex with the organization of temporal cortex (Lafer-Sousa and Conway, 2013), and show how information about different visual modalities flows into PFC.

Materials and Methods

Experimental procedures for alert fMRI in macaques are given in Lafer-Sousa and Conway (2013). All animal care and experimental procedures met the National Institutes of Health guidelines and were approved by the Animal Care and Use Committees of Harvard Medical School, Wellesley College, and the National Eye Institute. Further details concerning the surgical procedures, monkey training, image acquisition, and statistical analyses can be found in our previous work (Lafer-Sousa and Conway, 2013; Verhoef et al., 2015), and are described below.

fMRI scanning

Four male rhesus monkeys (Macaca mulatta; M1, M2, M3, M4; 6–10 kg) were scanned at the Massachusetts General Hospital Martinos Imaging Center on a 3-T Tim Trio scanner (Siemens, New York, NY) using an AC88 insert, a custom-made four-channel surface coil, and standard echo planar imaging (repetition time 2 s; 96 × 96 × 50 matrix; 1 mm³ voxels; echo time 13 ms). Prior to scanning, a contrast agent, monocrystalline iron oxide nanoparticle (MION, Feraheme; 8–10 mg/kg, diluted in saline, AMAG Pharmaceuticals), was injected into the femoral/saphenous vein (6–11 mg/kg) of the animals. Using MION improves the contrast-to-noise ratio (Lafer-Sousa and Conway, 2013) and enhances the spatial selectivity of the magnetic resonance (MR) signal changes (Zhao et al., 2006), compared with blood oxygenation level-dependent (BOLD) measurements. Decreases in MION signals correspond to increases in BOLD response; therefore, time-course traces in all figures have been vertically flipped to facilitate comparison with conventional BOLD results. To reduce fMRI signal dropout caused by the buildup of iron, an iron chelator was administered to all four subjects (Gagin et al. 2014, Society for Neuroscience Abstract). In two monkeys (M1 and M2), we administered 21 treatments of 500 mg of deferoxamine mesylate (Desferal, Novartis; IM injection) over 3 months and 104 treatments of 50 mg/kg of deferiprone (Ferriprox, ApoPharma; PO) over 7 months. Both chelators were given after the collection of the color-grating, static scene/face, and disparity datasets and before the collection of the dynamic dataset. For M3 and M4, 50 mg/kg deferiprone was administered daily, 4–5 days/week, starting after the first scanning session in which MION was used.

Surgery and initial training protocol

The monkeys were pair housed and kept on a standard 12:12 light-dark cycle. Prior to MR scanning, each monkey was implanted with a plastic headpost attached to the skull by custom-built plastic T-shaped bolts and ceramic screws, all covered by dental acrylic. All surgical procedures were performed under sterile conditions. In a first stage, animals were anesthetized with ketamine (15 mg/kg i.m.) and xylazine (2 mg/kg i.m.) and given atropine (0.05 mg/kg i.m.) to reduce salivary fluid production. During the operation, deep anesthesia was maintained with 1–2% isoflurane in oxygen administered through a tracheal tube. As preventive treatment, prior to surgery, all animals were given buprenorphine (0.005 mg/kg i.m.) and flunixin (1.0 mg/kg i.m.) as analgesics and a prophylactic dose of antibiotic (Baytril, 5 mg/kg i.m.). Antibiotics were administered again 1.5 hours into surgery, and buprenorphine/flunixin were given for 48 hours post-operatively. During the recovery period, the animals were closely monitored to prevent pain and infection. After 2 to 3 months of recovery, all animals were adapted to physical restraint and trained to sit in a small plastic chair in a sphinx position for several hours while directly facing a color CRT monitor (60 Hz refresh rate, Barco Display Systems). Intensive training in passive fixation followed until the animals were comfortable with the task and could remain engaged for several hours. During the task, all animals were required to maintain fixation within a 1 × 1° virtual window centered on a white fixation point in the middle of the screen. Eye position was monitored at 120 Hz using both the pupil and the corneal reflection (Iscan). The monkeys were rewarded for fixating with fruit juice diluted in water, and their behavioral performance was monitored.
Monkeys M1 and M2 had previously been trained on a psychophysical color detection task (Gagin et al., 2014; Stoughton et al., 2012), whereas M3 and M4 had been trained only on neutral fixation tasks before data collection. We found no obvious systematic differences in PFC responses between these two groups of animals.

Stimulus sets

To localize regions biased for color, we contrasted responses to equiluminant gratings composed of hues evenly spaced in DKL color space with responses to achromatic luminance-contrast gratings (Figure 1A, B). This space is defined using photometric equiluminance (Zaidi and Halevy, 1993) and is used routinely in behavioral experiments in humans and monkeys, and in neurophysiological experiments in monkeys (Hass et al., 2015). Behavioral measures of equiluminance can vary from one individual to another, and different tests of equiluminance can produce different results in the same individual (for discussion, see Conway, 2009). For the present purposes, the use of photometric equiluminance assumes that monkeys and humans have similar color-detection and color-discrimination mechanisms, an assumption supported by direct tests comparing color behavior in macaques and humans (Gagin et al., 2014; Stoughton et al., 2012).
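To make "hues evenly spaced in DKL color space" concrete, the following is a minimal sketch assuming the conventional DKL orientation: azimuth 0° along the L-M axis, 90° along the S axis, and zero luminance elevation for equiluminant stimuli, with unit radius in arbitrary normalized units. The function names, the 0° starting azimuth, and the unit radius are illustrative assumptions. The sketch does not model the CIELUV saturation matching or the monitor calibration used for the actual stimuli, and it does not specify which of the two intermediate axes (45° and 135°) corresponds to "Intermediate 1" or "Intermediate 2".

```python
import numpy as np

# Cardinal axes of the isoluminant plane (assumed conventional DKL azimuths).
CARDINAL_AXES_DEG = {"L-M": 0.0, "S": 90.0}


def isoluminant_hues(n_hues=12, start_deg=0.0, radius=1.0):
    """Return azimuths (deg) and DKL coordinates of n hues evenly spaced
    around the isoluminant plane.

    Columns of the coordinate array are (luminance, L-M, S) in arbitrary
    normalized units; luminance is zero because every hue is equiluminant.
    """
    azimuth = start_deg + np.arange(n_hues) * 360.0 / n_hues
    theta = np.deg2rad(azimuth)
    dkl = np.column_stack([
        np.zeros(n_hues),          # luminance axis held at zero
        radius * np.cos(theta),    # L-M cardinal axis
        radius * np.sin(theta),    # S cardinal axis
    ])
    return azimuth, dkl


def axis_endpoints(axis_deg, radius=1.0):
    """Two opposite isoluminant colors that define one heterochromatic grating."""
    theta = np.deg2rad([axis_deg, axis_deg + 180.0])
    return np.column_stack([np.zeros(2), radius * np.cos(theta), radius * np.sin(theta)])


azimuth, dkl = isoluminant_hues()                        # 12 hues, 30 deg apart
red_green = axis_endpoints(CARDINAL_AXES_DEG["L-M"])     # endpoints of the L-M grating
intermediate = [axis_endpoints(a) for a in (45.0, 135.0)]
```

Converting these coordinates to monitor RGB would additionally require cone fundamentals and the measured spectra of the display primaries.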

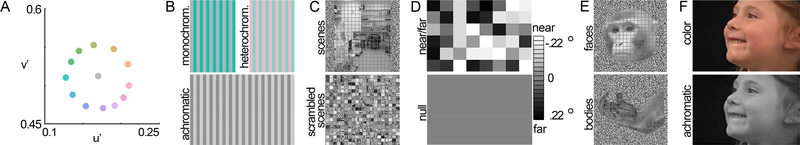

Figure 1. Stimuli used to assess the visual stimulus-driven functional organization of prefrontal cortex in macaque monkeys.

A. Chromaticities of the 12 colors used in the monochromatic gratings. B. Color stimuli were configured as low spatial frequency gratings, either monochromatic (color-gray) or heterochromatic (defined by the cardinal and intermediate directions of color space). Voxels were defined as color biased if they showed stronger responses to colored gratings than to achromatic luminance-contrast gratings. Note that the “gray” phase of the color-gray gratings appears reddish in the figure because of color induction; the gray itself was defined as the neutral adapting state of the null conditions. C. Scene stimuli consisted of photographs of the lab environment. Responses to scene stimuli were contrasted with responses to scrambled versions of the scene images, or with responses to faces. D. Schematic of the disparity stimulus, which consisted of random-dot anaglyph stereogram checkerboards (see scale bar). Responses were contrasted against null-disparity random-dot patterns. E. Face stimuli consisted of photographs of monkey faces. Responses were contrasted with responses to bodies. F. Dynamic stimuli consisted of short video clips showing faces, scenes, objects and bodies, presented either in color or grayscale.

Equiluminant color gratings were either color-gray gratings (Figure 1B top left, in animals M1 and M2) or heterochromatic gratings (Figure 1B top right, M3 and M4). To localize the scene-biased regions, we contrasted responses to images of intact scenes with responses to scrambled images of the same scenes (Figure 1C). To localize binocular disparity-biased regions (M1 and M2), we contrasted responses to random-dot stereograms with and without depth cues (Figure 1D). To localize face-biased regions, we contrasted responses to images of faces and bodies (Figure 1E). To assess scene, face, and body responses, we collected responses to static stimuli (achromatic stills, all subjects) and to dynamic movie clips (color and achromatic 3-second video clips, monkeys M1 and M2; Figure 1F) in separate scanning sessions. We also collected responses to checkerboards restricted to a central disc and to annuli of varied sizes, to test for eccentricity representations of the visual field. The eccentricity stimuli are described in Lafer-Sousa and Conway (2013).

The stimuli covered the entire screen and were presented in a block-design paradigm. Responses to static and dynamic visual stimuli were recorded using color gratings, color and grayscale images and videos of visual scenes (Lafer-Sousa et al., 2016), faces and bodies (used to define the face-selective regions), and disparity-defined random-dot stereograms. All visual stimuli were displayed on a screen (41° × 31°) placed 49 cm in front of the animal using a JVC-DLA projector (1024 × 768 pixels), and animals were rewarded for maintaining fixation within a ~1° square window surrounding a white point at the center of the stimulus. Full details of the color grating stimuli and color stimulus calibration are provided in our previous work (Lafer-Sousa and Conway, 2013; Lafer-Sousa et al., 2012). Color stimuli were generated in cone-opponent color space (Derrington et al., 1984; MacLeod and Boynton, 1979) and set to have equal saturation in CIELUV space; stimuli were calibrated using spectral readings taken with a PR655 spectroradiometer (Photo Research Inc., Chatsworth, CA). Color stimuli were either monochromatic or heterochromatic gratings. Monochromatic color stimuli (used in animals M1 and M2) were presented as equiluminant, low spatial frequency color-gray gratings (Figure 1B), contrasted with achromatic gratings of 10% luminance contrast. Heterochromatic color stimuli (used in monkeys M3 and M4) consisted of equiluminant, low spatial frequency color-color gratings, contrasted with achromatic gratings of 5% luminance contrast. The monochromatic gratings were defined by twelve hues (Figure 1A). For the heterochromatic gratings, the colors of each grating were defined by one axis through DKL color space. Four different gratings were used: L-M (red-green), Intermediate 1 (orange-cyan), S (yellow-lavender), and Intermediate 2 (lime-magenta).
Each stimulus block comprised a single grating of 0.29 cycles/degree, drifting slowly at 0.75 cycles/s and reversing direction every 2 s, for 32 seconds. Stimulus blocks were interleaved with 32-second blocks of neutral full-field gray that maintained a constant mean luminance (55 cd/m²).
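The drift of each grating block can be written as a triangle-wave phase: the grating moves at 0.75 cycles/s and its direction flips every 2 s. The sketch below assumes a sinusoidal profile varying along the horizontal axis (the text does not state the grating's orientation or waveform) and converts pixels to degrees using an average scale of 1024 px / 41°, which ignores the nonlinearity of a flat screen. All function names are hypothetical.

```python
import numpy as np


def screen_geometry(width_deg=41.0, distance_cm=49.0, width_px=1024):
    """Physical screen width (cm) implied by its visual angle and viewing
    distance, plus the average number of pixels per degree."""
    width_cm = 2.0 * distance_cm * np.tan(np.deg2rad(width_deg / 2.0))
    return width_cm, width_px / width_deg


def drift_phase(t, temporal_freq=0.75, reversal_period=2.0):
    """Phase (radians) of a grating that drifts at temporal_freq cycles/s and
    reverses direction every reversal_period seconds (a triangle wave)."""
    t = np.asarray(t, dtype=float)
    k = np.floor(t / reversal_period)            # index of the current segment
    within = t - k * reversal_period             # time elapsed in this segment
    odd = (k % 2) == 1
    # Forward and backward segments cancel in pairs, so displacement runs
    # 0 -> reversal_period on even segments and back to 0 on odd ones.
    displacement = np.where(odd, reversal_period - within, within)
    return 2.0 * np.pi * temporal_freq * displacement


def grating(x_deg, t, spatial_freq=0.29, temporal_freq=0.75, reversal_period=2.0):
    """Signed modulation in [-1, 1] at horizontal positions x_deg and time t;
    -1 and +1 map onto the two colors (or luminances) of the grating."""
    x_deg = np.asarray(x_deg, dtype=float)
    return np.sin(2.0 * np.pi * spatial_freq * x_deg
                  - drift_phase(t, temporal_freq, reversal_period))


width_cm, ppd = screen_geometry()
x = (np.arange(1024) - 511.5) / ppd              # pixel centers in degrees
frame = grating(x, t=1.0)                        # one row of the grating at t = 1 s
```

At 0.29 cycles/degree one cycle spans about 3.4°, so a drift of 0.75 cycles/s corresponds to roughly 2.6°/s.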

Details of the static scene, face, and body stimuli are provided elsewhere (Lafer-Sousa and Conway, 2013). For the static stimulus set, all images were grayscale and surrounded by an achromatic white-noise background; the image portion of the stimulus occupied a 6° square centered on the fixation point. The images were matched in average luminance to the neutral gray (constant average luminance ~25 cd/m²) throughout the stimulus sequence. The scene set comprised several familiar scenes (Figure 1C, top). The face set included monkey and human faces, familiar and unfamiliar faces, and forward-facing and ¾-view faces (Figure 1E, top). The body set included several images of headless bodies and of body parts (Figure 1E, bottom). Blocks containing the intact scenes were followed without delay by blocks containing scrambled versions of the same images (Figure 1C, bottom). All blocks were 32 seconds long.

For scene, face, and body stimuli, we ran additional sessions using a dynamic stimulus set consisting of 3-second video clips presented in 30-second blocks; the clips showed images similar to those in the static set (same stimulus categories), but in natural motion (e.g., human faces moving, smiling, talking) (Lafer-Sousa et al., 2016). The dynamic stimuli were presented in color and in grayscale (Figure 1F). The videos were centered on the fixation point and occupied the central 20° of the visual field.

The disparity stimuli consisted of anaglyph random-dot disparity checkerboards (dot density: 15%; dot size: 0.08° × 0.08°) presented on a black background and viewed through red-blue stereo goggles; details of the stimuli are given elsewhere (Hubel et al., 2015; Verhoef et al., 2015). Disparity checks were 3.5° × 3.5°, and disparity varied from −0.22° (crossed, near) to +0.22° (uncrossed, far). We used two different disparity stimuli to localize disparity-biased regions. In the ‘near/far’ condition, drifting random-dot stereograms with mixed near and far disparity (average disparity = 0°; range: −0.22° to 0.22°; Figure 1D, top) or with no disparity (null disparity, uniform 0°; Figure 1D, bottom) were shown. In the ‘near+far’ condition, near and far disparities were presented as independent stimuli. The near stimulus consisted of a checkerboard presented in front of the fixation plane (average disparity = −0.11°; range: −0.22° to 0°). The far stimulus consisted of a checkerboard presented behind the fixation plane (average disparity = 0.11°; range: 0° to 0.22°). Finally, the null-disparity stimulus contained no disparity variation (disparity = 0°). We did not confirm that the animals tested could see the disparity shown in the random-dot stereograms, but they very likely could, given prior work documenting exceptional stereopsis in macaque monkeys. Interocular distance does not appear to correlate with stereo ability among human or monkey observers (Bruce Cumming, personal communication). The disparities used are well within the fusion capacity of macaques, as shown in other behavioral studies (Bough, 1970; Prince et al., 2002).
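A random-dot stereogram checkerboard of this kind can be sketched by drawing one random dot field for the left eye and building the right-eye image by shifting the dots horizontally, in opposite directions in alternate checks. This is a simplified illustration, not the stimulus code used here: it assumes an average scale of 1024 px / 41° (so 3.5° ≈ 87 px and 0.22° ≈ 5 px), uses single-pixel dots rather than 0.08° dots, omits the drift, wraps at the image edges, and does not refill the small strips at check borders with fresh random dots. The red-left/blue-right filter assignment and all function names are hypothetical.

```python
import numpy as np


def rds_checkerboard(size_px=348, check_px=87, disparity_px=5, density=0.15,
                     null=False, seed=0):
    """Left- and right-eye binary dot images for a random-dot stereogram
    checkerboard.

    Alternate checks carry +disparity_px and -disparity_px (mixed near/far,
    mean disparity 0); with null=True every check has zero disparity.
    In this sketch a positive shift gives crossed (near) disparity.
    """
    rng = np.random.default_rng(seed)
    left = (rng.random((size_px, size_px)) < density).astype(np.uint8)
    rows, cols = np.indices(left.shape)
    parity = ((rows // check_px) + (cols // check_px)) % 2
    if null:
        shift = np.zeros_like(parity)
    else:
        shift = np.where(parity == 0, disparity_px, -disparity_px)
    # Right-eye pixel (r, c) shows the left-eye dot at column c + shift, so a
    # dot seen at column x by the left eye is seen at x - shift by the right eye.
    right = left[rows, (cols + shift) % size_px]
    return left, right


def to_anaglyph(left, right):
    """Red channel carries the left-eye image, blue the right-eye image
    (assumed filter assignment); unlit pixels stay black."""
    rgb = np.zeros(left.shape + (3,), dtype=np.uint8)
    rgb[..., 0] = left * 255
    rgb[..., 2] = right * 255
    return rgb


ppd = 1024 / 41                       # average pixels per degree (assumed)
check_px = round(3.5 * ppd)           # 3.5 deg checks -> 87 px
disparity_px = round(0.22 * ppd)      # 0.22 deg -> 5 px
left, right = rds_checkerboard(check_px=check_px, disparity_px=disparity_px)
image = to_anaglyph(left, right)
```

Setting `null=True` produces identical left- and right-eye images, the zero-disparity control; the ‘near+far’ stimuli would instead use a single sign of shift in the disparity-bearing checks.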

Topographic studies in macaque PFC (Janssens et al., 2014; Schall et al., 1995; Suzuki and Azuma, 1983) suggest that visually responsive neurons in areas 8A, 46 (caudal), 45, and 12 are arranged according to a complex eccentricity map, with contralateral receptive fields but a pronounced ipsilateral representation during tasks that require choosing among stimuli in both hemifields (Kadohisa et al., 2015; Lennert and Martinez-Trujillo, 2013). To further characterize the eccentricity map in PFC and relate it quantitatively to functionally defined regions of interest, we conducted an eccentricity mapping experiment in two animals (Lafer-Sousa and Conway, 2013). A set of four zero-disparity checkerboard stimuli centered on the fixation spot was used to map eccentricity bias across visual regions: a central disc of 1.5° radius (0–1.5°) and annuli extending from 1.5–3.5°, 3.5–7°, and 7–20°. As in Lafer-Sousa and Conway (2013), the checkerboards were composed of checks defined by the cardinal axes of DKL color space (L-M, S, or achromatic).
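The four eccentricity stimuli can be described as radial masks around the fixation point. This sketch assumes the 1024 × 768 px display with an average scale of 1024 px / 41° and places fixation at the screen center; the checkerboard pattern itself is not modeled, and the names are hypothetical. With a 41° × 31° screen, the half-height is about 15.5°, so the outermost annulus (7–20°) is clipped at the top and bottom of the display in this geometry.

```python
import numpy as np

# (inner, outer) radii in degrees: central disc plus three annuli
ECC_BINS_DEG = [(0.0, 1.5), (1.5, 3.5), (3.5, 7.0), (7.0, 20.0)]


def eccentricity_masks(w_px=1024, h_px=768, ppd=1024 / 41, bins=ECC_BINS_DEG):
    """Boolean masks, one per eccentricity bin, centered on the screen middle
    (the fixation point). Returns an array of shape (len(bins), h_px, w_px)."""
    x = (np.arange(w_px) - (w_px - 1) / 2) / ppd
    y = (np.arange(h_px) - (h_px - 1) / 2) / ppd
    xx, yy = np.meshgrid(x, y)                 # shape (h_px, w_px)
    r = np.hypot(xx, yy)                       # eccentricity of each pixel (deg)
    return np.stack([(r >= lo) & (r < hi) for lo, hi in bins])


masks = eccentricity_masks()
coverage = masks.mean(axis=(1, 2))             # fraction of the screen per stimulus
```

Because the bins are half-open intervals, every pixel belongs to at most one stimulus, and pixels beyond 20° (the screen corners) belong to none.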

fMRI data processing

Prior to the experiments, high-resolution anatomical scans (0.35 × 0.35 × 0.35 mm³ voxels) were acquired in all four animals under light sedation. Significance maps generated from the functional data were rendered on the inflated surfaces obtained from each animal’s anatomical volume. All data analyses were performed using FREESURFER and FS-FAST software (http://surfer.nmr.mgh.harvard.edu/), together with the custom “jip” toolkit provided by Joseph Mandeville (http://www.nitrc.org/projects/jip/), and custom scripts written in MATLAB (MathWorks). The surfaces of the high-resolution structural volumes were reconstructed and inflated using FREESURFER; functional data were motion corrected with the AFNI motion correction algorithm (Cox and Hyde, 1997), spatially smoothed with a Gaussian kernel (full-width at half maximum = 2 mm), and registered to each animal’s own anatomical volume using jip.

Our fMRI images were processed using standard alert-monkey fMRI processing techniques: images were first normalized to correct for signal intensity changes and temporal drift, and t-tests uncorrected for multiple comparisons were performed to construct statistical activation maps based on a general linear model. The activation maps were projected onto high-resolution anatomical volumes and surfaces, and time courses were calculated by first detrending the fMRI response. The temporal drift associated with the fMRI signal was modeled by a second-order polynomial:

$$S(t) = x(t) - \left(a t^{2} + b t + c\right)$$

where $x(t)$ is the raw fMRI signal and $S(t)$ is the detrended signal. The coefficients $a$, $b$, and $c$ were calculated using the MATLAB function polyfit. The percentage deviation of the fMRI signal, $s'(t)$, reported as the y-axis values in the time-course traces, was calculated as

$$s'(t) = \frac{s(t) - \bar{s}}{\bar{s}} \times 100,$$

where $s(t) = \mathrm{smoothed}\left(S(t) + c\right)$, $t = 1, 2, \ldots, N$; $\bar{s}$ is the mean of $s(t)$; and $N$ is the number of repetition times (TRs) in the experiment. The constant $c$ was added to $S(t)$ to avoid division by zero. Smoothing was performed with a moving average of 3 TRs. To evaluate whether patchiness of the fMRI response might be due to signal dropout, we calculated temporal signal-to-noise ratio (tSNR) maps. For each voxel, the tSNR was defined as the mean across all time points divided by the standard deviation across all time points.
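The time-course normalization and the tSNR definition translate directly into NumPy. This is a minimal sketch of the steps described in the text, not the authors' MATLAB code. Assumptions: the moving average is centered and averages only the samples that exist at the edges; the tSNR uses the population standard deviation (ddof = 0); and the optional `mion` flag, a hypothetical name, negates the result to give BOLD-like polarity, as was done for the figures.

```python
import numpy as np


def percent_signal_change(x, smooth_trs=3, mion=False):
    """Percentage deviation s'(t) of one voxel's time course x(t).

    Quadratic detrending with polyfit, adding back the constant term c,
    a moving average over smooth_trs TRs, and normalization by the mean
    of the smoothed signal.
    """
    x = np.asarray(x, dtype=float)
    t = np.arange(1, x.size + 1)
    a, b, c = np.polyfit(t, x, 2)                 # x(t) ~ a t^2 + b t + c
    S = x - (a * t**2 + b * t + c)                # detrended signal S(t)
    kernel = np.ones(smooth_trs)
    # Centered moving average; at the edges, average only the samples that exist.
    s = (np.convolve(S + c, kernel, mode="same")
         / np.convolve(np.ones_like(S), kernel, mode="same"))
    s_bar = s.mean()
    psc = 100.0 * (s - s_bar) / s_bar
    return -psc if mion else psc                  # MION: signal decreases = activation


def temporal_snr(data):
    """tSNR per voxel: mean over time divided by SD over time (last axis)."""
    data = np.asarray(data, dtype=float)
    return data.mean(axis=-1) / data.std(axis=-1)
```

Because $c$ is added back before normalization, $\bar{s}$ stays close to the baseline signal level rather than near zero, which is why the division is safe.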

Catalogue of data collected

Experiments involving colored gratings, static images, dynamic movie clips, and disparity were each run in separate sessions. For colored gratings: 16 TRs per block; 6 color and 2 achromatic blocks per run; 49 runs over 2 sessions (M1), 21 runs over 1 session (M2), 40 runs over 2 sessions (M3), and 34 runs over 2 sessions (M4). For static images (to assess face, body, and scene regions): 16 TRs per block; 3 face blocks, 1 scene block, and 1 body block per run; 18 runs over 1 session (M1), 18 runs over 1 session (M2), 17 runs over 1 session (M3), and 29 runs over 1 session (M4). For movie clips (to assess face, scene, and color regions): 15 TRs per block; 2 face blocks and 2 scene blocks per run; 6 color blocks and 6 achromatic blocks per run (see Lafer-Sousa et al., 2016 for other details); 23 runs over 2 sessions (M1). For disparity experiments in which a given image contained both near and far elements (“near/far”): 16 TRs per block; 4 disparity blocks and 2 null blocks per run; 23 runs over 1 session (M1) and 11 runs over 1 session (M2). For disparity experiments in which a given image contained either near or far elements (“near + far”): 16 TRs per block; 1 disparity block and 1 null block per run; 26 runs over 1 session (M1). Eccentricity was mapped in two animals: 16 TRs per block; 2 blocks of each eccentricity stimulus per run; 24 runs over 1 session (M1) and 20 runs over 1 session (M2).

ROI definition

ROIs were defined by comparing the magnitude of the responses to pairs of stimuli (e.g., responses to colored gratings versus responses to achromatic gratings), using a range of thresholds (p = 10⁻², 10⁻³, 10⁻⁵, 10⁻⁶, and 10⁻⁸; see Figure 8). In each analysis, ROIs were defined as sets of contiguous voxels with suprathreshold significance values; ROIs were defined in each hemisphere individually. The significance threshold was set to p = 10⁻³ to create the plots shown in Figures 5, 7, 9, and 12–14. Color-biased, face-biased, and scene-biased ROIs were defined using alternate runs, and responses within the ROIs were quantified using the left-out data. To define the disparity-biased ROIs, data from one of the disparity experiments (‘near/far’ or ‘near+far’) were used to define the ROI, and data from the other disparity experiment were used to quantify the responses. We tested for reproducibility by swapping the data used to define the ROIs and the data used to quantify the responses (see Figure 7), and by comparing the responses within ROIs to independent data sets (see Figure 13).
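The logic of defining an ROI on one half of the data and quantifying it on the other can be sketched as follows. This is a deliberately simplified stand-in: it uses a voxel-wise paired t-test across runs (scipy.stats.ttest_rel) instead of the general linear model used in the analysis, and face-connected clusters in a 3-D volume (scipy.ndimage.label) instead of contiguity on the cortical surface. The function name, the even/odd run split, and the input layout are assumptions.

```python
import numpy as np
from scipy import ndimage, stats


def split_half_roi(resp_a, resp_b, p_thresh=1e-3):
    """Define A > B ROIs on even-indexed runs and quantify them on odd runs.

    resp_a, resp_b: per-run response estimates, shape (n_runs, X, Y, Z).
    Returns the cluster label volume and, for each cluster, its size and
    the mean held-out responses to A and B.
    """
    resp_a = np.asarray(resp_a, dtype=float)
    resp_b = np.asarray(resp_b, dtype=float)
    define, held_out = slice(0, None, 2), slice(1, None, 2)

    # Paired t-test across the defining runs, voxel by voxel.
    t, p = stats.ttest_rel(resp_a[define], resp_b[define], axis=0)
    mask = (p < p_thresh) & (t > 0)            # keep only A > B voxels

    # Contiguous suprathreshold voxels (face-connected in 3-D) form one ROI each.
    labels, n_clusters = ndimage.label(mask)
    rois = []
    for k in range(1, n_clusters + 1):
        roi = labels == k
        rois.append({
            "n_voxels": int(roi.sum()),
            "mean_a": float(resp_a[held_out][:, roi].mean()),
            "mean_b": float(resp_b[held_out][:, roi].mean()),
        })
    return labels, rois
```

Exchanging the `define` and `held_out` slices gives the swapped analysis used as a reproducibility check. Because the held-out runs play no part in selecting voxels, the quantified A versus B difference is not inflated by the selection step.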

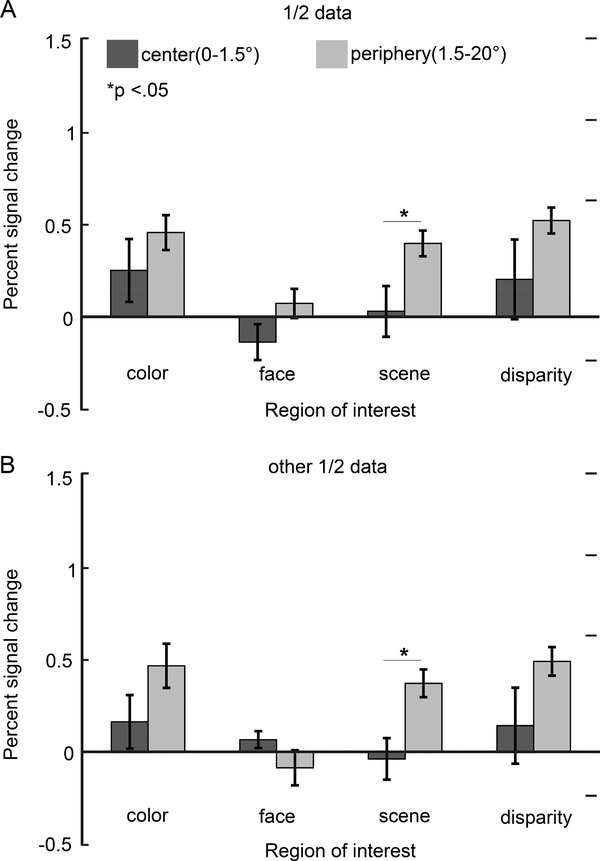

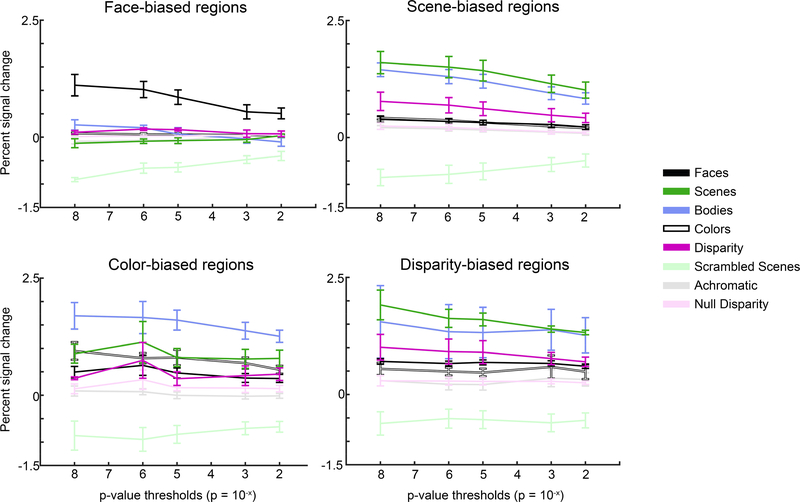

Figure 8. Quantification of the mean responses to the eight visual stimuli used, in the four regions of interest (ROI).

ROIs were defined with half the data; quantification was done with the left-out data. Mean responses were computed using ROIs defined with a range of significance thresholds, from restrictive (p = 10⁻⁸) to permissive (p = 10⁻²). Error bars show the SEMs.

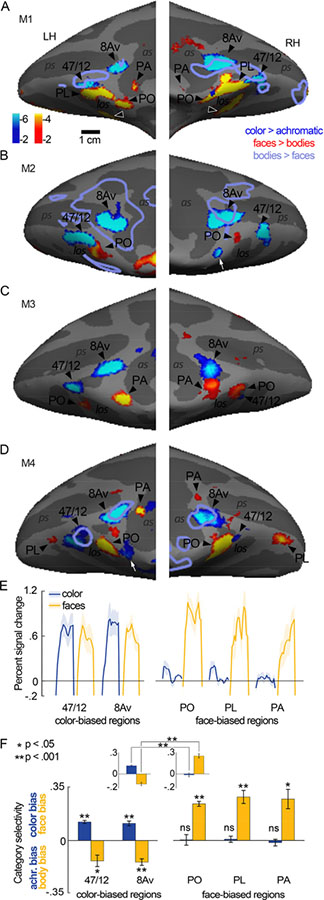

Figure 5. Relationship between color-biased and face-biased regions of frontal cortex of macaque monkey.

A–D. Inflated prefrontal cortex surfaces of four animals (M1–M4). Significance maps showing color > luminance (gratings) in blue-cyan and faces > bodies (images) in yellow-red are displayed. E. Time courses of the average percent signal change observed for color and face stimuli in the color-biased regions (left panel; in or near anatomical parcels 47/12 and 8Av) and in the prefrontal face-biased regions (right panel); the face-biased regions are labeled following published conventions (PO, PL, PA). Time courses were generated using data that were independent of the data used to define the regions of interest. Shading shows SEM. F. Quantification of color and face selectivity, using data that were independent of the data used to define the regions of interest. Color regions show a bias for color and bodies, whereas face regions show a bias for faces and no color bias. Each bar represents the mean activity across all hemispheres in which a region was present: 47/12r, 8 hemispheres; 8Av, 8 hemispheres; PA, 6 hemispheres; PL, 6 hemispheres; PO, 7 hemispheres. Error bars show the SEM. The inset shows results averaged across color ROIs (left panel) and face ROIs (right panel). Color responses were greater in color ROIs than in face ROIs (p = 10⁻⁴); face-biased responses were greater in face ROIs.

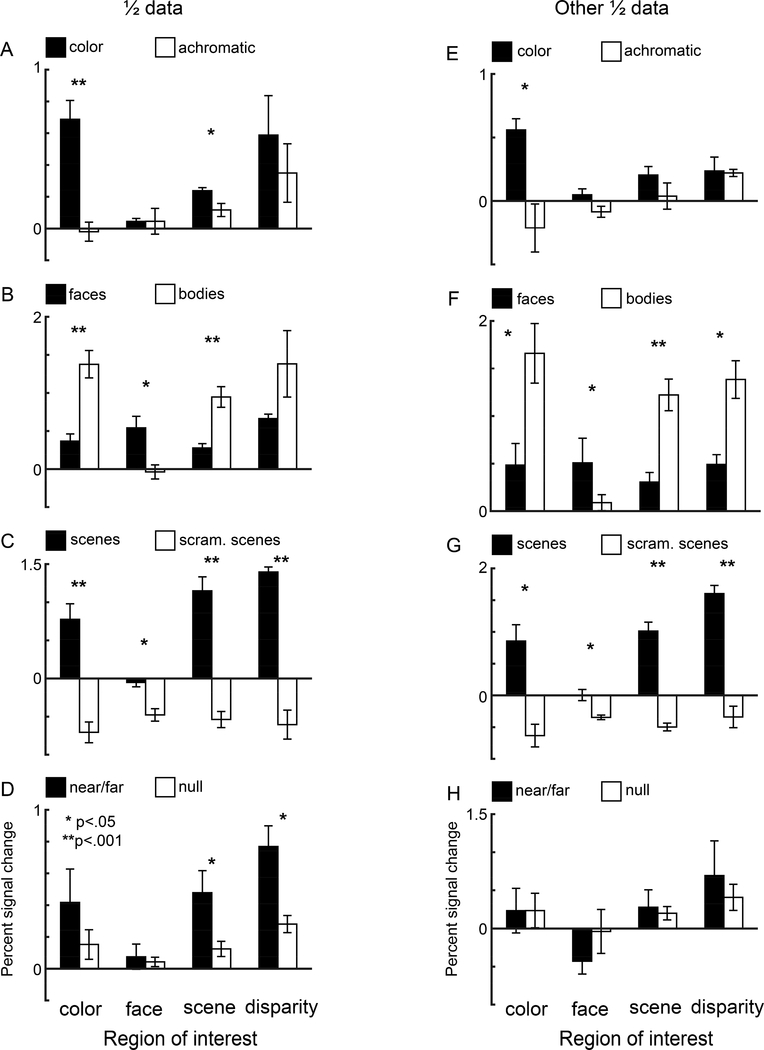

Figure 7. Quantification of the responses within the four regions of interest of macaque prefrontal cortex.

Each plot shows pairs of bars quantifying responses within ROIs defined by, from left to right, color bias, face bias, scene bias, and disparity bias. Quantification was made using data that were independent of the data used to define the ROIs. All ROIs were defined at a threshold of p = 10⁻³. A. Percent signal change (PSC) to color gratings (black) and luminance gratings (open). B. PSC to images of faces (black) and bodies (open). C. PSC to images of scenes (black) and scrambled scenes (open). D. PSC to near/far disparity (black) and null-disparity images (open). Error bars show the SEM. Asterisks denote levels of significance: *, p < 0.05; **, p < 0.001. E–H. As for panels A–D, but with the data used to define the ROIs and the left-out data used to quantify the responses swapped.

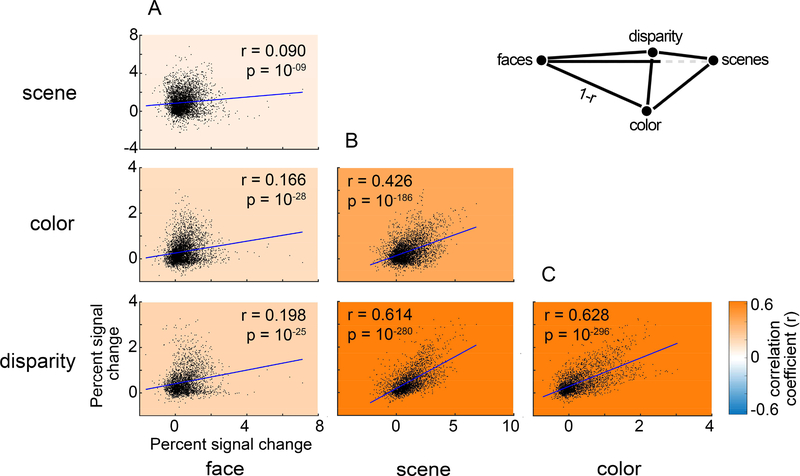

Figure 9. Relationship among the responses of visually responsive voxels to scenes, faces, color, and disparity.

Data points in each panel show the responses of single voxels within an ROI comprising all visually responsive voxels. Colored underlay shows the correlation coefficient (Pearson’s r; see color-scale bar). A. Scatter plots comparing the PSC to face stimuli with the PSC to scenes (top), colors (middle), and disparity (bottom). B. Scatter plots comparing the PSC to scene stimuli with the PSC to colors (top) and disparity (bottom). C. Scatter plot comparing the PSC to colors with the PSC to disparity stimuli. Blue lines show least-squares linear fits.
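The voxel-wise comparisons in Figure 9 reduce to Pearson correlations and ordinary least-squares lines computed across voxels. A minimal sketch, assuming each condition's PSC values are stored as a 1-D array with voxels in the same order for every condition; the function names and the dictionary layout are hypothetical.

```python
import numpy as np


def voxel_correlations(psc_by_stimulus):
    """Pearson r between every pair of stimulus conditions across voxels.

    psc_by_stimulus maps a condition name to a 1-D array of PSC values,
    one per voxel, with voxels in the same order for every condition.
    """
    names = list(psc_by_stimulus)
    data = np.vstack([np.asarray(psc_by_stimulus[n], dtype=float) for n in names])
    return names, np.corrcoef(data)            # rows are variables (conditions)


def least_squares_line(x, y):
    """Slope and intercept of the ordinary least-squares fit y = slope * x + intercept."""
    slope, intercept = np.polyfit(np.asarray(x, dtype=float),
                                  np.asarray(y, dtype=float), 1)
    return slope, intercept
```

Each off-diagonal entry of the returned matrix corresponds to one scatter plot in the figure, and the fitted line would be drawn over the same voxel points.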

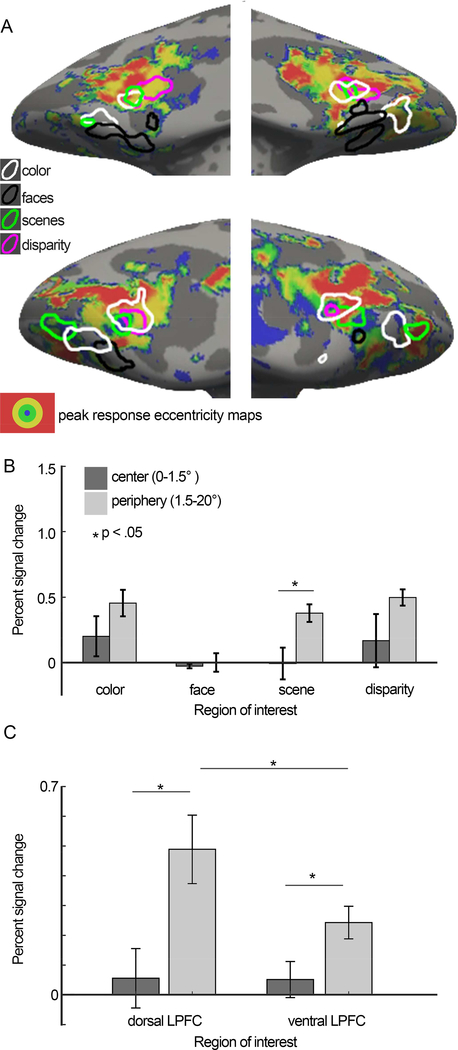

Figure 12. Relationship between functional regions of interest and responses to visual field eccentricity mapped with checkerboards restricted to discs and annuli centered on the fixation point.

A. Inflated PFC surfaces of M1 (top) and M2 (bottom), showing the eccentricity map together with the overlaid contours for color-biased regions (white), face-biased regions (black), disparity-biased regions (magenta), and scene-biased regions (green). For each voxel, the eccentricity to which the voxel maximally responded was determined from the responses to flickering checkerboards restricted to four eccentricity ranges: a 1.5° radius disc presented at the fovea (shown in blue); an annulus of 1.5–3.5° (green); an annulus of 3.5–7° (yellow); and an annulus of 7–20° (red). Voxels whose peak PSC response was below the response to neutral gray plus the standard deviation of the gray responses were left transparent. B. Quantification of the percent signal change (PSC) to central and peripheral stimuli within color-biased, face-biased, scene-biased, and disparity-biased ROIs. C. Quantification of the percent signal change (PSC) to central and peripheral stimuli within visually driven ROIs located more dorsally and more ventrally in lateral PFC. Error bars show SEM.

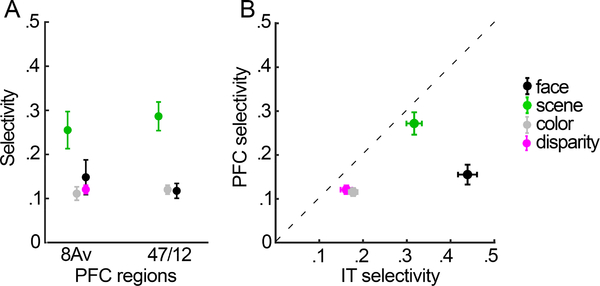

Figure 14. Selectivity of functional regions of interest in prefrontal cortex and inferior temporal cortex (IT).

A. Each point shows the mean selectivity index obtained for the given ROI, averaged over all hemispheres in PFC. B. Scatterplot showing relationship between mean selectivity of ROIs in PFC (y-axis) and mean selectivity of the corresponding functionally defined ROIs in IT (x-axis). Error bars are SEM.

Figure 13. Reproducibility of the eccentricity results.

As for Figure 12, but where only half of the eccentricity data were used in each set of bar plots.

Color-biased ROIs were defined by comparing responses to the achromatic contrast gratings with responses to the chromatic gratings. Scene-biased ROIs were defined by contrasting achromatic images of intact scenes with achromatic images of scrambled scenes. Face-biased ROIs were defined by comparing responses to achromatic images of faces with responses to achromatic images of bodies. Disparity-biased (near/far) ROIs were defined by contrasting the responses to stimuli with mixed near and far disparity with responses to stimuli without disparity. ROIs defined by eccentricity were generated by averaging responses to L-M, S, and achromatic checkerboards; the central ROI was defined by the responses to the central disc (radius 0–1.5°), and the peripheral ROI was defined by the average response to the three annuli, spanning a radius of 1.5–20°.
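To make the central and peripheral definitions concrete, the following minimal Python sketch computes both responses for one voxel or ROI. It assumes that percent signal changes are already available for each checkerboard type and eccentricity range; the dictionary layout, the bin labels, and the function name `central_peripheral` are hypothetical, not taken from the study's code.

```python
import statistics

CHECKERBOARDS = ("L-M", "S", "achromatic")  # checkerboard types named in the text
CENTRAL = "0-1.5"                           # central disc, radius in degrees
ANNULI = ("1.5-3.5", "3.5-7", "7-20")       # peripheral annuli, degrees


def central_peripheral(psc):
    """Return (central, peripheral) responses for one voxel or ROI.

    psc maps (checkerboard, eccentricity_bin) -> percent signal change
    (hypothetical layout). Responses are first averaged over the three
    checkerboard types; the peripheral response is then the mean of the
    three annuli.
    """
    def mean_over_boards(bin_label):
        return statistics.fmean(psc[(board, bin_label)] for board in CHECKERBOARDS)

    central = mean_over_boards(CENTRAL)
    peripheral = statistics.fmean(mean_over_boards(b) for b in ANNULI)
    return central, peripheral


# Toy values (invented): identical across checkerboards, rising with eccentricity.
toy_ecc = {(board, b): v
           for board in CHECKERBOARDS
           for b, v in zip((CENTRAL,) + ANNULI, (0.2, 0.3, 0.4, 0.5))}
print(central_peripheral(toy_ecc))  # approximately (0.2, 0.4)
```

Averaging over checkerboard types before pooling the annuli means each annulus contributes equally to the peripheral response, whatever the number of checkerboard types.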

For the paired correlation analysis (see Figure 9), we defined a single ROI containing all visually responsive voxels. This ROI comprised the separate ROIs for color-biased, face-biased, scene-biased, and disparity-biased regions, each defined at a threshold of p = 10⁻³. Note that disparity was measured in only two of the four animals; the visually responsive ROI contained 2387 voxels for comparisons excluding disparity (combining data from M1-M4), and 1732 voxels for comparisons involving disparity (combining data from M1 and M2).
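The visually responsive ROI is, in effect, a set union of thresholded masks. The sketch below illustrates this under stated assumptions: voxels are identified by hypothetical integer indices, per-voxel p-values for each contrast are assumed to come from an earlier statistical analysis that is not shown, and the toy p-values are invented for illustration.

```python
P_THRESHOLD = 1e-3  # threshold stated in the text (p = 10^-3)


def threshold_roi(p_values, threshold=P_THRESHOLD):
    """Voxel indices whose p-value for one contrast falls below threshold."""
    return {voxel for voxel, p in p_values.items() if p < threshold}


def visually_responsive_roi(contrast_p_values, threshold=P_THRESHOLD):
    """Union of the per-contrast ROIs (e.g. color, face, scene, disparity)."""
    roi = set()
    for p_values in contrast_p_values.values():
        roi |= threshold_roi(p_values, threshold)
    return roi


# Invented p-values for five voxels (indices 0-4) under four contrasts.
contrasts = {
    "color":     {0: 1e-4, 1: 0.2,  2: 0.5,  3: 0.9, 4: 0.3},
    "face":      {0: 0.4,  1: 1e-5, 2: 0.6,  3: 0.8, 4: 0.7},
    "scene":     {0: 2e-4, 1: 0.3,  2: 0.01, 3: 0.9, 4: 0.6},
    "disparity": {0: 0.5,  1: 0.4,  2: 0.7,  3: 0.9, 4: 5e-4},
}
print(sorted(visually_responsive_roi(contrasts)))  # [0, 1, 4]
```

Leaving out the disparity contrast, as for the comparisons that pool M1-M4, simply omits that mask from the union.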

Time course analysis

Percent signal changes and selectivity indices were calculated by taking the time course for each voxel in an ROI, then detrending, smoothing, and normalizing by the response to the entire run. We performed detrending, normalization, and smoothing before averaging the responses of all voxels in an ROI, to allow for per-voxel analyses (see Figure 9); in our prior work (Verhoef et al., 2015), we performed detrending, normalization, and smoothing after averaging the responses of all voxels. Both methods yielded the same conclusions when quantifying responses on an ROI basis. Subtle differences introduced by the new analysis method account for slight differences in selectivity measures (compare Figure 12 of Verhoef et al. (2015) with Figure 14). To avoid possible confounds introduced by the hemodynamic delay, the response to each 16-TR stimulus block was calculated as the average of the last eight repetition times of that block. Thus, for a stimulus presented in the kth block of a run, we denote the response to that block as Rk, the mean of the response during the 9th to 16th samples of the block. The percentage signal change due to the stimulus block was then defined by

All selectivity indices were computed following the formula:

in which R1 and R2 are the responses to two different stimulus conditions for a given ROI. If any of the responses that make up R1 or R2 were negative, a constant was added to these responses so that the minimum value was zero or positive (Simmons et al., 2007). Mean selectivity indices were determined by averaging the selectivity indices of the functionally defined regions across all hemispheres in which the tested region was present. The responses associated with the ROIs were used to compute standard errors of the mean and to perform nonparametric statistical tests. Error bars in Figures 5F, 7, 8, and 11–14 are standard errors (N is the number of hemispheres).
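The block-response and selectivity steps can be sketched in Python as follows. This is an illustration under assumptions, not the authors' pipeline: linear detrending and a three-point moving average stand in for the unspecified detrending and smoothing methods, and the selectivity index is assumed to take the common contrast form (R1 − R2)/(R1 + R2), because the equation itself is not reproduced in this text. The percent-signal-change equation is also missing here, so the sketch stops at Rk.

```python
import statistics

BLOCK_LEN = 16  # TRs per stimulus block, as stated in the text
LAST_N = 8      # Rk = mean of the last eight TRs (samples 9-16) of the block


def linear_detrend(ts):
    """Remove a least-squares linear trend but keep the series mean,
    so the run can still be normalized by its mean afterwards (assumed method)."""
    n = len(ts)
    x_mean = (n - 1) / 2
    y_mean = statistics.fmean(ts)
    sxx = sum((i - x_mean) ** 2 for i in range(n))
    slope = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(ts)) / sxx
    return [y - slope * (i - x_mean) for i, y in enumerate(ts)]


def moving_average(ts, width=3):
    """Centered moving average; the window is truncated at the run edges (assumed smoother)."""
    half = width // 2
    return [statistics.fmean(ts[max(0, i - half): i + half + 1]) for i in range(len(ts))]


def preprocess(ts):
    """Per-voxel detrending, smoothing, then normalization by the run mean."""
    smoothed = moving_average(linear_detrend(ts))
    run_mean = statistics.fmean(smoothed)
    return [v / run_mean for v in smoothed]


def block_response(ts, k):
    """Rk for the k-th block (0-based here): mean of samples 9-16 of that block."""
    start = k * BLOCK_LEN
    return statistics.fmean(ts[start + BLOCK_LEN - LAST_N: start + BLOCK_LEN])


def selectivity_index(responses1, responses2):
    """Assumed form SI = (R1 - R2) / (R1 + R2).

    If any response feeding R1 or R2 is negative, the same constant is added
    to all of them so that the minimum becomes zero (cf. Simmons et al., 2007).
    """
    lowest = min(min(responses1), min(responses2))
    offset = -lowest if lowest < 0 else 0.0
    r1 = statistics.fmean([r + offset for r in responses1])
    r2 = statistics.fmean([r + offset for r in responses2])
    if r1 + r2 == 0:
        return 0.0  # index undefined; treated as "no selectivity" (assumption)
    return (r1 - r2) / (r1 + r2)


run = [100.0] * 16 + [100.0] * 8 + [102.0] * 8  # toy run: two 16-TR blocks
print(block_response(run, 0), block_response(run, 1))  # 100.0 102.0
print(selectivity_index([1.0, 3.0], [-1.0, 1.0]))       # 0.5
```

Detrending subtracts only the slope term, so the run mean is preserved and the later normalization by the run mean stays well defined. Because the offset in `selectivity_index` is shared by both conditions, R1 and R2 are both non-negative and the index is bounded between −1 and 1.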

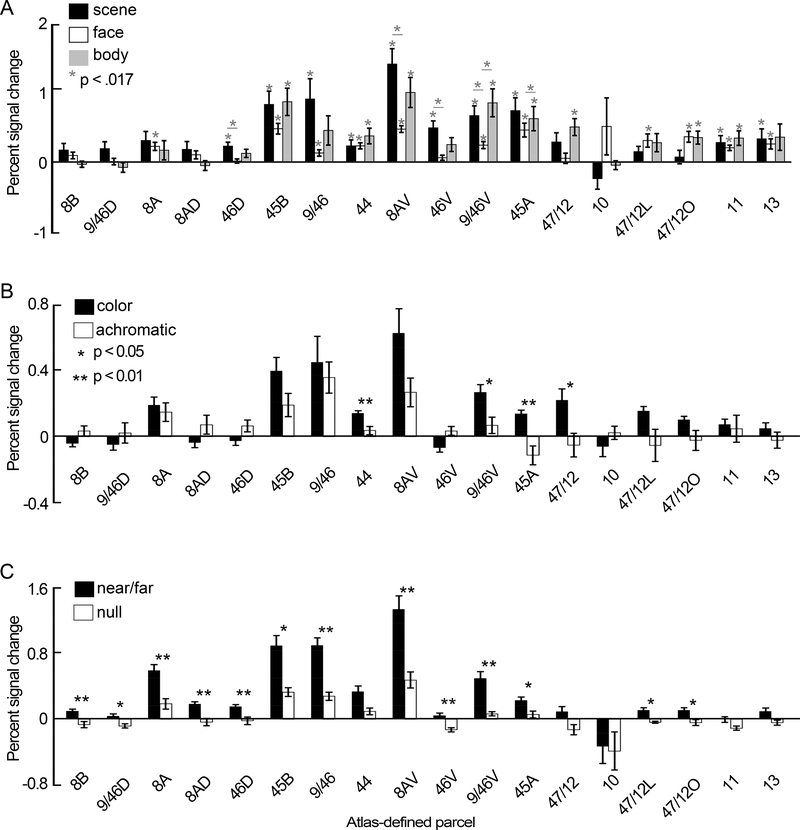

Figure 11. Quantification of functional responses within anatomically defined parcels of macaque prefrontal cortex.

A. Bar plots showing percent signal change (PSC) to images of scenes (black), faces (open), and bodies (gray), calculated for 18 anatomical parcels across lateral and orbital prefrontal cortex. Gray asterisks show levels of significance at the Bonferroni-corrected alpha (p < 0.017) for the difference from zero for each bar and for the difference between bars within a region. Error bars show SEM. B. PSC responses to color gratings (black) and luminance gratings (open). Asterisks show levels of significance: *, p < .05; **, p < .001. C. PSC responses to images of near/far disparity (black) and null-disparity stimuli (open). Other conventions as for panel B.

Responses in anatomical regions

To determine the extent to which functionally defined regions co-register with anatomically defined parcels, we registered the CIVM Rhesus Macaque Atlas marking anatomical regions (Calabrese et al., 2015) to each monkey’s high-resolution anatomical scan. Quantification within anatomically defined ROIs was done in the same way as for functionally defined ROIs.

Eccentricity maps

To generate a surface map of preferred eccentricity (Figure 12), we performed the time course analysis on a per-voxel basis, which yielded each voxel’s preferred eccentricity among the four eccentricity ranges (0–1.5°, 1.5–3.5°, 3.5–7°, and 7–20°). If a voxel’s response to its preferred eccentricity was not greater than the voxel’s gray response plus the standard deviation of the gray response, the voxel was considered visually unresponsive and was left transparent on the surface map.
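A per-voxel version of the preferred-eccentricity rule is sketched below. The function name is hypothetical, the gray criterion is interpreted as the mean gray response plus its sample standard deviation (an assumption, since the text does not say which standard deviation was used), and the example values are invented.

```python
import statistics

ECCENTRICITY_BINS = ("0-1.5", "1.5-3.5", "3.5-7", "7-20")  # degrees, from the text


def preferred_eccentricity(psc_by_bin, gray_responses):
    """Return the label of the voxel's preferred eccentricity bin, or None
    if the voxel counts as visually unresponsive.

    psc_by_bin: responses to the four bins, in ECCENTRICITY_BINS order.
    gray_responses: the voxel's responses to neutral gray blocks; the
    criterion is mean(gray) + SD(gray), using the sample SD (assumption).
    """
    peak = max(range(len(psc_by_bin)), key=lambda i: psc_by_bin[i])
    criterion = statistics.fmean(gray_responses) + statistics.stdev(gray_responses)
    if psc_by_bin[peak] <= criterion:
        return None  # left transparent on the surface map
    return ECCENTRICITY_BINS[peak]


print(preferred_eccentricity([0.1, 0.2, 0.3, 0.8], [0.0, 0.2, 0.1]))    # 7-20
print(preferred_eccentricity([0.1, 0.15, 0.12, 0.05], [0.0, 0.2, 0.1]))  # None
```

Returning `None` rather than a bin keeps unresponsive voxels distinguishable from voxels that prefer the fovea.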

Results

Responses to color, faces, bodies, and scenes were obtained in four animals (M1-M4); responses to disparity and eccentricity were obtained in two of these animals (M1, M2). We first present data from individual animals, showing the pattern of responses in PFC to color, scenes, disparity, faces, and bodies (Figures 2–6), and then quantify the responses in subsequent figures. The fMRI provided coverage of the entire macaque brain; here we focus on PFC. The bulk of the visually driven activity was found in lateral PFC, extending into orbital PFC. We found little or no visually driven activity in medial or dorsal PFC.

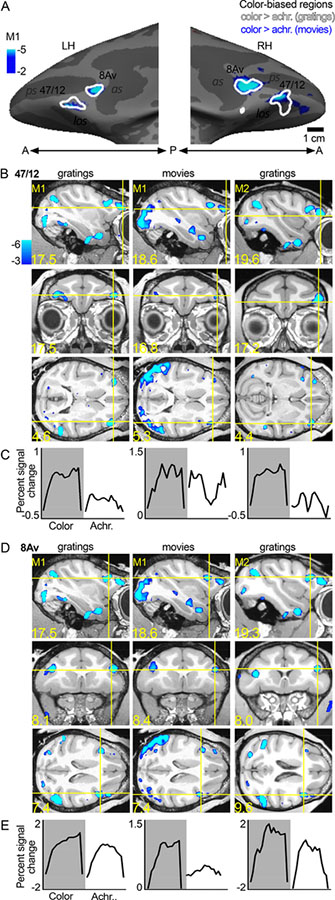

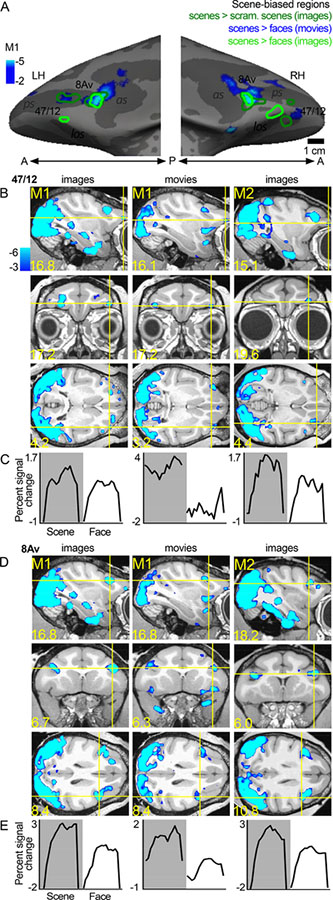

Figure 2. Color-biased regions in prefrontal cortex of macaque monkey.

A. Color-biased activity in animal M1 shown on the computationally inflated cortical surface, lateral views (LH, left hemisphere; RH, right hemisphere; P, posterior; A, anterior). The blue-cyan significance map shows the color > luminance activity for the dynamic movie stimuli (color movies > achromatic movies). The white contours show color > luminance responses measured with colored gratings. Color-biased activations were found only in lateral PFC extending into orbital PFC, in or near anatomically defined parcels 8Av and 47/12. B. Sagittal (top), coronal (middle), and horizontal (bottom) slices showing the responses to color, using gratings and movie clips; slices are centered on 47/12, in two monkeys (M1 and M2; surface maps for M1 shown in panel A). Blue-cyan significance maps for each condition are superimposed on high-resolution anatomical scans of each animal’s own anatomy. Slices are labeled in Talairach coordinates (mm). C. Time course of the average percent signal change in the color-biased region of interest shown in panel B (time course and ROI defined using independent datasets). Stimulus blocks were 16 TRs (2 s/TR; 32 s/block) for grating stimuli and 15 TRs (2 s/TR; 30 s/block) for movie stimuli. As in B, the left column shows the time course for M1 as measured with grating stimuli, the middle column shows responses to movie stimuli in M1, and the right column shows responses to grating stimuli in M2. D, E. Same conventions as in B and C, for the more posterior color-biased region, in or near area 8Av. as, arcuate sulcus; ps, principal sulcus; los, lateral orbital sulcus.

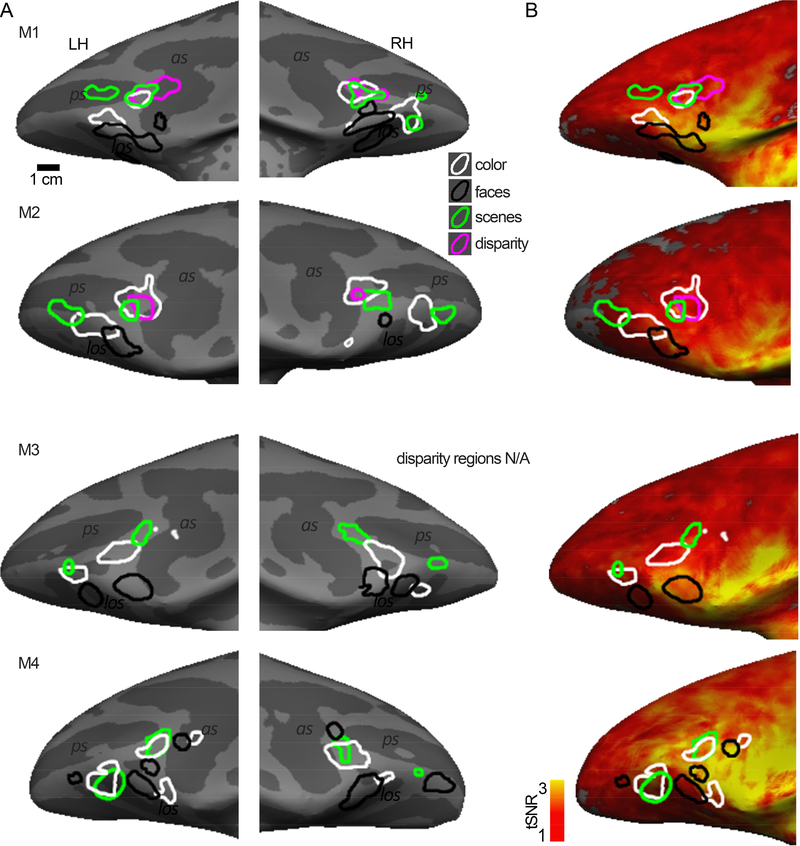

Figure 6. Spatial relationship between regions of interest, and tSNR maps.

A. Inflated bilateral surfaces, zoomed in on PFC, for animals M1-M4. Color-biased regions (white contours); face-biased regions (black); scene-biased regions (green); disparity-biased regions (magenta). B. Functional ROIs on normalized temporal signal to noise ratio (tSNR) maps; tSNR maps were obtained from the first run in each subject’s face and scene localizer scan and are representative of the tSNR for a given animal. The location of the ROIs is not predicted by regions of peak signal intensity, showing that the patchy functional organization cannot be accounted for by variation in tSNR.

Color-biased regions

Color-biased activation in PFC was not uniform. Instead, we discovered two main hotspots (Figure 2): an anterior-lateral region located in or near the anatomical parcel 47/12, that extended from ventrolateral PFC into orbital frontal cortex (Figure 2B); and a more dorsal-posterior region of lateral PFC, in the ventral prearcuate region, in or near anatomical parcel 8Av (Figure 2D). The location of the color-biased regions elicited by contrasting dynamic color videos with the same videos in black and white (plotted in blue in Figure 2A) aligned with the location of the color-biased regions identified using separate experiments in the same animals using colored gratings (contours in Figure 2A). The reproducibility of the results is also evident in the volume (compare the data shown in the leftmost column, which plots responses to gratings, with the data in the middle column, which plots responses to movie clips for M1; Figure 2B and 2D). The pattern of results was similar across monkeys: the third column shows the color-preferring voxels defined by the color-vs-achromatic gratings contrast in M2. We defined ROIs for the two color-biased regions in each hemisphere using half of the data obtained in the color gratings > achromatic gratings contrast, and quantified responses in the left-out (independent) data. Figure 2C and Figure 2E show the average time courses for each independently defined ROI, averaged over two hemispheres for each monkey. For both regions in both datasets and monkeys, there was a higher response to color stimuli than achromatic stimuli. The two color-biased regions were identified in all four animals tested, and in both hemispheres of each animal (see Figure 5).

Scene-biased regions

In addition to the two color-biased regions, we found two scene-biased regions, also located in or near anatomically defined parcels 47/12 and 8Av (Figure 3); the activations (blue-cyan patterns in Figure 3) were generated by comparing responses to scenes with responses to faces, following a convention established by others (Epstein and Kanwisher, 1998; Levy et al., 2001). Computing the scene activation in this way necessarily forces scene-biased regions to be spatially distinct from face-biased regions. To determine the extent to which the location of the scene activations depends on how scene bias is computed, we also defined scene ROIs by comparing responses to intact scenes versus scrambled scenes (compare blue underlay with dark green contours, Figure 3A), and to scenes versus faces using static images (light green contours, Figure 3A). The region in or near anatomical parcel 8Av was consistently localized with all contrasts, whereas the region in or near 47/12 shifted location depending on the contrast. Scene-biased activation was found in 8/8 hemispheres tested (see Figure 6). As with the color-biased regions, the scene bias found in the functional ROIs was confirmed by quantification of responses in independent datasets (time courses shown in Figure 3C and 3E; see Figures 7–9).

Figure 3. Scene-biased regions in prefrontal cortex of macaque monkey.

A. Scene-biased activity in animal M1 shown on the computationally inflated cortical surface. The blue-cyan significance map shows the scene > face activity using responses to movie clips. The dark green contours show scene > scrambled scene activity measured with responses to static images. The light green contour shows scene > face activity measured with static images. Scene-biased activity was consistently found across contrasts in or near 8Av. B. Slices showing the scene-biased activation measured with images and with movies, in M1 and M2. C. Time course of the average percent signal change in the 47/12 scene-biased region, as measured in independent datasets. D, E. Same conventions as in B and C, for the posterior scene-biased region, in or near area 8Av. Other conventions as for Figure 2.

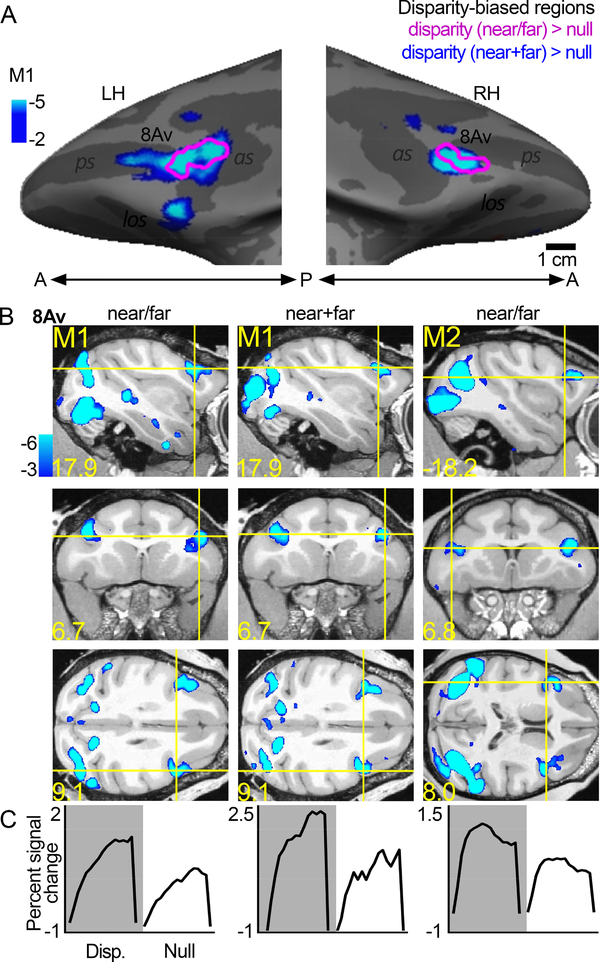

Disparity-biased regions

Figure 4 shows the location of disparity-biased activity on the inflated surface (Figure 4A) and in slices (Figure 4B). In the two monkeys tested, we localized disparity activity by contrasting responses to non-zero disparity checkerboards, which appear to pop out of or recede from the plane of the monitor, with responses to those with null disparity, which appear flat. The responses within these functional ROIs, quantified with independent data, were larger for disparity than for the null-disparity condition (Figure 4C). The location and response of the disparity-biased voxels were consistent across stimulus types: results were similar when the disparity stimulus was a checkerboard containing both near and far checks (near/far; Figure 4A, magenta contours; Figure 4B, first column) and when the disparity stimulus was two checkerboards containing either near or far checks (near+far; Figure 4A, blue-cyan; Figure 4B, second column). The location of the disparity ROI was comparable across the two animals (M1: first column; M2: third column), and it was found in 4/4 hemispheres.

Figure 4. Disparity-biased region in prefrontal cortex of macaque monkey.

A. Disparity-biased activity in monkey M1 shown on the computationally inflated cortical surface. The blue-cyan significance map shows disparity > null disparity responses measured with disparity stimuli that, in any given block, contained only near or only far disparity checks. The magenta contours show regions of disparity > null activity measured in other experiments, using disparity checkerboards containing both near and far depths presented simultaneously (near/far). With both stimulus sets, a disparity-biased region was identified in both hemispheres, in or near anatomical parcel 8Av. B. Slices showing disparity activity in the two conditions and two animals (M1 and M2). C. Time course of the average percent signal change observed in the disparity-biased region of interest; the ROI was defined with data independent of the data used to quantify the time course. Other conventions as for Figure 2.

Responses to faces and bodies

Consistent with micro-electrode recording results (Romanski, 2012; Scalaidhe et al., 1999; Scalaidhe et al., 1997) and other imaging results (Janssens et al., 2014; Tsao et al., 2008), we found several face-biased regions within lateral and orbital frontal cortex (Figure 5). The location and number of face-biased regions showed considerable variability across animals and hemispheres. Tsao and colleagues (2008) labeled three frontal face patches: PA (prefrontal arcuate); PL (prefrontal lateral); and PO (prefrontal orbital). In none of the hemispheres did we find exactly three clear face patches, which might seem to contradict prior results but does not. In the Tsao et al. study, frontal face patches were not found in all animals and, when identified, were not in consistent locations relative to the anatomy: PA was found in only 2 of 4 animals (3/8 hemispheres), on the crest of the arcuate sulcus in one animal and within the arcuate sulcus in another; PL was found in 5/8 hemispheres, biased towards the RH, but not in a fixed location relative to the other face-biased regions (sometimes anterior to PO, sometimes posterior to it); PO was found in all hemispheres, situated on the lateral margin of the lateral orbital sulcus in some animals and within the sulcus in others. Janssens et al. (2014) describe similar variability, raising doubts about the extent to which frontal face patches are discrete entities. The variability in the location of PL documented in prior reports, coupled with the low penetrance of the PA region, makes the naming convention somewhat arbitrary. Nonetheless, we have labeled the regions in Figure 5 with our best guess as to how they relate to the face patches identified in prior work. We identified a face-biased region within lateral-orbital PFC, on the crest of the lateral orbital sulcus or within this sulcus, in all hemispheres; we label this region PO.
In two animals (M1 and M4), we identified a region in lateral PFC near the infra-principal dimple, which we have labeled PL. In three animals, a face-biased region was found anterior to the arcuate sulcus (M3), within it (M4 LH, M1 LH), or posterior to it (M1 RH, M4 RH); we have labeled this region PA. Finally, in one animal (M1), we found an additional face-biased region within orbital PFC, medial to the region labeled PO (open arrow, Figure 5A). We found no evidence for a hemispheric bias in any of the regions (t-tests of activity in each set of regions, tested separately: PO, p = 0.4; PA, p = 0.7; PL, N/A; all regions combined, LH versus RH, p = 0.75). Despite the interanimal variability, the pattern between the hemispheres in each animal was strikingly consistent, and in all animals the face-biased activity was found ventral to area 8Av and did not overlap color-biased responses.

Figure 5 also shows that besides the two color-biased regions found consistently in all animals, 2/8 hemispheres showed evidence for an additional color-biased region on the lateral surface, ventral to 8Av (arrows M2 RH, M4 LH, Figure 5).

Relationships between visually driven regions of PFC

The variability in the location of the functional activation patterns to faces, described above, underscores the importance of determining (and quantifying) the relative locations of different functional activation patterns within the same animals. Are the face-biased regions simply peaks in the visual activation of PFC? What is their relationship to other functionally defined regions? What responses do face-biased regions show to other visual stimuli? And how selective are face-biased regions compared with other functionally selective regions? Answering these questions, as we do here, enables an evaluation of the extent to which frontal face patches are distinct entities.

As in IT, color-biased regions had a systematic relationship to face-biased regions. Color-biased cortex was consistently located near but not overlapping face-biased cortex, at two main locations in each hemisphere in all four animals (Figure 5A-D). The pattern of color-biased regions was more consistent across animals than the pattern of face patches: there were two regions in each hemisphere, one in lateral PFC anterior to the arcuate sulcus (labeled 8Av in Figure 5), and one on the lateral crest of the lateral orbital sulcus in OFC that extended well into this sulcus in 5/8 hemispheres (labeled 47/12 in Figure 5). The color-biased voxels in or near 47/12 were dorsolateral and anterior to the face-biased regions labeled PL or PO. Figure 5F quantifies color selectivity and face selectivity within the two sets of regions of interest: color-biased ROIs had no face selectivity but instead showed a strong body bias (mean selectivity −0.14, p < 0.0001), and face-biased ROIs had no color selectivity (mean selectivity −0.013, p = 0.6). Face selectivity differed between face ROIs and color ROIs (p < 10⁻⁷), as did color selectivity (p = 0.0004) (inset, Figure 5F). Note that although the selectivity index shows a dissociation of color and face activations, there was nonetheless substantial activation to faces outside face-biased regions, within the color-biased ROIs (Figure 5E). The activation to faces was of lower magnitude than the activation to images of bodies, as quantified below (see Figures 7, 8), which explains the apparent paradox: color-biased regions showed strong responses to faces but low face selectivity in a face-versus-body contrast.

Scene-biased regions and disparity-biased regions also showed little overlap with face-biased regions (Figure 6; quantified in Figure 7). In contrast to the clear separation of face-biased regions from the other functionally defined regions, scene-biased regions and disparity-biased regions overlapped with each other and with the more dorsal color-biased regions. The overlap between color-biased, scene-biased, and disparity-biased regions varied among the animals, as did the spatial relationship between these regions and the face patches (Figure 6A). The scene and color regions overlapped in M1 and M4 but were spatially separated in M2 and M3. The functionally defined regions near 8Av showed more overlap than those near 47/12: six of eight hemispheres showed substantial overlap of the color-biased and scene-biased regions in or near 8Av, while only three of eight hemispheres showed substantial overlap in or near 47/12. Despite the overarching similarities in the architecture of color-biased, scene-biased, and disparity-biased voxels (all clustered into two clumps in or near 47/12 and 8Av, and all were separated from the face patches), the specific pattern in a given animal was different. Figure 6B shows that the patchiness of the functional patterns cannot be attributed to variability in signal strength: contours showing the regions of interest are overlaid on the tSNR maps for the four animals, and there is no obvious relationship between the ROIs and the variation in tSNR.

Quantification in independent datasets

Responses to color, scene, disparity, and face stimuli were quantified in each of the functionally defined regions, using data that were independent of the data used to make ROIs (Figure 7A-D). As expected, color-biased regions showed a significant color response (Figure 7A, leftmost pair of bars). Consistent with the overlap of the ROIs shown on the surfaces, we also found a color bias in the scene-biased regions and a trend towards a color bias in the disparity-biased region. Face-biased regions showed weak responses to both color and achromatic gratings. All regions showed a difference between the responses to faces and bodies, but only the face ROI showed a bias for faces; all the other functionally defined regions showed a body bias (Figure 7B). The percent signal change elicited by faces was large in all ROIs and, in some cases (e.g., the disparity ROI), as large as in the face ROI (Figure 7B). These results show that face responses are broadly distributed across visually responsive PFC. The strong face selectivity of the face ROI (see Figure 5F), which distinguishes the frontal face patches from other parts of PFC, is attributable to an especially low response to bodies. In fact, the face ROI showed a negligible response to every stimulus we used other than faces (all the bars in Figures 7A-D for the face ROI are low, except for faces), showing that face patches are defined by a striking lack of response to non-face stimuli, not an especially strong response to faces. Again consistent with the overlap shown on the surfaces, the color, scene, and disparity ROIs all showed a higher percent signal change to intact scenes than to scrambled scenes (Figure 7C). Disparity-biased regions differentiated near/far disparity stimuli from null-disparity stimuli; a similar trend was found for scene-biased and color-biased regions, but not for face-biased regions, where the responses to all the disparity stimuli were negligible (Figure 7D).

By defining the ROIs with one half of the data and quantifying the responses with the left-out data, the analysis in Figure 7 avoids double dipping and is statistically sound. To further assess the reproducibility of the results, we swapped the data used for ROI definition and quantification (Figure 7E-H). The patterns of results are consistent in the two sets of analyses. Moreover, the relative responses to the different stimuli did not depend on the specific thresholds used to define the ROIs (Figure 8). For each ROI, the relative response to the eight stimuli (faces, disparity, bodies, colored gratings, scenes, scrambled scenes, null disparity, and achromatic gratings) was the same for ROIs defined by a restrictive threshold (p = 10⁻⁸) as for ROIs defined by a permissive threshold (p = 10⁻²). In addition, the stimuli to which each ROI was most sensitive varied according to the functional criterion used to define the ROI. For example, face-biased regions showed the strongest response to faces, and scene-biased regions showed the strongest response to scenes. Among the ROIs, color-biased regions showed the strongest differential response to colored gratings versus achromatic gratings, although the strongest percent signal change measured in the color-biased regions was to bodies, not colored gratings. Likewise, disparity-biased regions showed the strongest differential response to disparity versus null disparity, although the strongest percent signal change measured in the disparity-biased regions was to scenes, not disparity. These results show that the stimulus that elicited the peak PSC in an ROI is not necessarily the same as the stimulus used to define the ROI. We refer to the ROIs as “X-biased regions” rather than “X patches” to underscore this point: “X” is the tool used to define the ROI and does not necessarily capture a complete picture of the computations performed by the ROI.
Across ROIs, the stimuli that elicited the largest percent signal change were faces, scenes, and bodies. The analysis in Figure 8 uncovered a similar response profile defined by the relative response to the eight stimuli for the scene-biased and disparity-biased regions, consistent with the substantial overlap of these regions (Figure 6); the response profiles of the color-biased regions and the face-biased regions were unique.
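The split-half logic used for Figure 7 (define the ROI in one half of the data, quantify in the other, then swap) can be illustrated with the sketch below. The simple response-difference criterion in the hypothetical `define_roi` function stands in for the statistical threshold actually used, and the toy responses are made up; the point is only that the data used to select voxels are never reused to quantify them.

```python
import statistics


def define_roi(half, cond_a, cond_b, min_diff):
    """Hypothetical stand-in for the statistical threshold: keep voxels whose
    response to cond_a exceeds the response to cond_b by more than min_diff."""
    return {v for v, resp in half.items() if resp[cond_a] - resp[cond_b] > min_diff}


def quantify(half, roi, condition):
    """Mean response to `condition` over ROI voxels, using the given half."""
    if not roi:
        return None  # no voxels survived the defining criterion
    return statistics.fmean(half[v][condition] for v in roi)


def cross_validated(half1, half2, cond_a, cond_b, min_diff=0.5):
    """Define the ROI in one half, quantify in the other, then swap the halves."""
    results = []
    for define_half, test_half in ((half1, half2), (half2, half1)):
        roi = define_roi(define_half, cond_a, cond_b, min_diff)
        results.append({c: quantify(test_half, roi, c) for c in (cond_a, cond_b)})
    return results


# Invented per-voxel responses (voxel -> condition -> PSC) for two halves of the runs.
half1 = {0: {"face": 2.0, "body": 0.5}, 1: {"face": 0.6, "body": 0.5}, 2: {"face": 1.8, "body": 0.2}}
half2 = {0: {"face": 1.6, "body": 0.4}, 1: {"face": 0.9, "body": 0.8}, 2: {"face": 1.0, "body": 0.9}}
print(cross_validated(half1, half2, "face", "body"))
```

With these toy values, the first split selects voxels 0 and 2 and the swapped split selects only voxel 0, so the two quantifications differ in magnitude even though both show a face bias.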

Paired correlation analysis

The results presented above provide evidence for a stimulus-driven functional organization of PFC. We probed this organizational structure further with a paired correlation analysis, in which we correlated the percent signal changes to each pair of stimuli (scenes, color, disparity, faces) across all visually responsive voxels in PFC (Figure 9). All correlations (Pearson’s r) were significant at p < 0.0001, but the amount of variance explained by the correlation varied for different pairs of stimuli. Responses to faces correlated poorly with responses to scenes (r = 0.090), colors (r = 0.166), and disparity (r = 0.198), consistent with the hypothesis that face ROIs are functionally distinct from regions defined using non-face criteria. Correlations among non-face stimuli, meanwhile, were relatively strong: responses to disparity were strongly correlated with responses to scenes (r = 0.614) and color (r = 0.628), and responses to color were moderately correlated with responses to scenes (r = 0.426). The weakest correlation was between faces and scenes, which suggests that PFC would be clearly partitioned by contrasting responses to faces with responses to scenes. By contrast, disparity and scenes were among the most strongly correlated pairs, which suggests that PFC would not be clearly partitioned by contrasting responses to disparity with responses to scenes. The pattern of these correlations can be captured by the tetrahedron (inset, Figure 9), in which the length of each side corresponds to the dissimilarity (1 − r) between the two stimuli it connects (the dissimilarity between faces and scenes is exaggerated by the dashed line so that the five other relationships can be shown accurately in two dimensions).
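The paired correlation analysis amounts to computing Pearson’s r across voxels for each pair of stimuli and converting it to a dissimilarity, 1 − r, as used for the tetrahedron. The Python sketch below assumes per-voxel PSC lists aligned by voxel; the helper names and toy values are hypothetical.

```python
import itertools
import math
import statistics


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences of voxel PSCs."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def pairwise_dissimilarity(psc):
    """psc maps stimulus -> per-voxel PSC list (same voxel order for every
    stimulus). Returns {(a, b): (r, 1 - r)} for every unordered stimulus pair."""
    out = {}
    for a, b in itertools.combinations(sorted(psc), 2):
        r = pearson_r(psc[a], psc[b])
        out[(a, b)] = (r, 1.0 - r)
    return out


# Invented PSCs for four voxels.
toy_psc = {"faces": [1.0, 2.0, 3.0, 4.0],
           "scenes": [4.0, 3.0, 2.0, 1.0],
           "color": [2.0, 4.0, 6.0, 8.0]}
for pair, (r, d) in pairwise_dissimilarity(toy_psc).items():
    print(pair, round(r, 3), round(d, 3))
```

With four stimuli there are six pairs, one per edge of the tetrahedron. Squaring r gives the fraction of variance explained: r = 0.090 corresponds to less than 1% of the variance, whereas r = 0.628 corresponds to about 39%.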

Taken together, the results in Figures 7–9 show that PFC responds in a task-independent way to faces, colors, disparity, scenes, and bodies; the responses to each stimulus are not uniformly distributed across PFC; the parts of PFC engaged in processing disparity and scenes overlap and have a common response profile; disparity/scene-biased regions are distinct from face-biased regions; and color-biased regions have a response profile distinct from both face patches and scene/disparity-biased regions.

Relationship of functional ROIs to anatomical parcels

Next, we considered the relationship of the functional regions to parcels defined using anatomical atlases. Most regions of interest were located within lateral PFC, within anatomical parcels labeled 47/12, 9/46, 8Av, 45B, and 47/12L; some of the activations extended into OFC, in anatomical parcels labeled 47/12O, 11, and 13 (Figure 10).

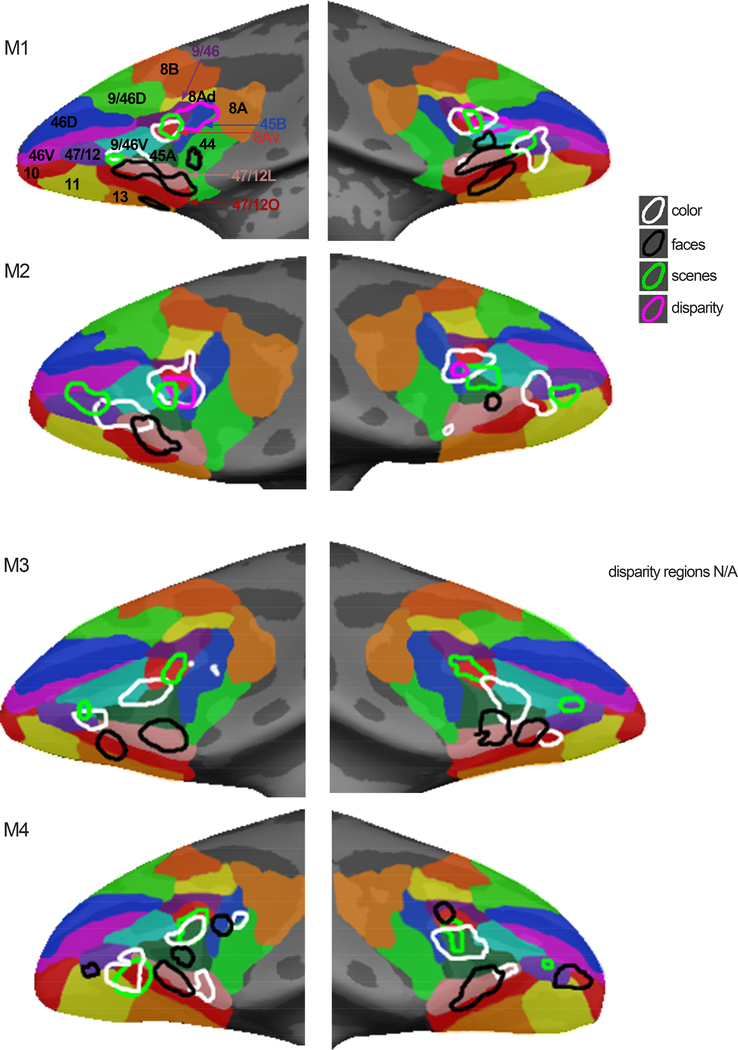

Figure 10. Spatial relationship between regions of interest and anatomical parcels.

Functional regions of interest shown on top of an anatomical atlas projected on each animal’s own anatomy. All anatomical regions were defined by aligning an atlas of anatomical labels (Petrides et al., 2012) to each monkey’s high-resolution anatomical MRI. Disparity responses were not measured in M3 and M4.

To test the extent to which anatomical parcels correspond to functionally defined regions in PFC, we determined the percent signal changes to faces, bodies, scenes, color gratings, achromatic gratings, disparity, and null-disparity stimuli across the 18 anatomical parcels identified by Petrides et al. (2012). As suggested by the surface maps in Figure 10, which show that the functional regions were never consistently restricted to a single anatomical region and that anatomical regions were only partially encompassed by any given functional ROI, the quantification shows little evidence that the anatomical parcels correspond precisely to any functionally defined region (Figure 11). Nonetheless, responses to the stimuli we tested were not uniform across PFC but were focused in areas 8A, 45B, 9/46, 44, 8Av, 46v, 9/46v, 45A, and 47/12. Regions 8Av, 46v, and 9/46v showed a stronger response to scenes than to faces (Bonferroni corrected, p < 0.017). The only parcels to show a trend toward higher responses to faces than to scenes (where the magnitude of the face response was greater than the upper error bar of the scene response) were in ventral/orbital PFC, including 10, 47/12L, and 47/12O (p = 0.1, 0.14, and 0.21, respectively; combined, p = 0.17). Consistent with the results in Figure 8, anatomical parcels showing higher responses to scenes also showed higher responses to bodies (no region showed a significantly different response to scenes versus bodies). A significant color bias was evident in 44, 9/46v, 45A, and 47/12 (p < .05 for 47/12), and there was a trend towards a color bias in 8Av (p = .057). Almost all regions showed a significantly greater response to near/far than to null disparity, with the greatest percent signal change in area 8Av, followed by 9/46, 45B, 8A, and 9/46v. One area, 9/46v, showed a significant color bias, scene bias, and disparity bias.

How do the functional patterns relate to the frontal eye field (FEF)? The FEF is defined empirically using microstimulation (Bruce et al., 1985; Robinson and Fuchs, 1969) and is typically found within the arcuate sulcus, in a region where we did not find consistent visually driven activation. The lack of response in putative FEF may not be surprising: the animals were fixating, and the FEF is most active when animals are planning saccades (Bruce and Goldberg, 1985). Instead, the consistent visually driven activation found near 8Av likely corresponds to the ventral prearcuate (VPA) region, which is thought to enable monkeys to locate objects using salient visual features (Bichot et al., 2015). The results are consistent with the idea that mechanisms for feature-based attention are localized to the VPA, and that color (more than achromatic gratings), scenes (more than faces), and disparity (more than null-disparity moving dots) capture covert attention in the absence of overt cognitive tasks or prior training (Figure 11, bars for regions 8Av, 45A, and 45B).

Eccentricity biases in PFC

Prior reports have measured the extent to which PFC has a visual-field representation (Janssens et al., 2014), but it is not known how this representation relates to functional domains. Inferior temporal cortex shows a coarse eccentricity map that predicts the location of the face-, color-, and scene-biased regions: face patches show a bias for foveal stimuli, color-biased regions show balanced responses to central and peripheral stimuli, and place-biased regions show a bias for peripheral stimuli (Lafer-Sousa and Conway, 2013). To test whether this pattern is also manifest in PFC, we quantified responses to eccentricity-mapping stimuli in the various functionally defined ROIs. All regions except the face regions showed a trend toward higher responses to stimuli in the periphery (1.5°–20°) than at the center of gaze (0°–1.5°); this trend reached significance for the scene ROIs (Figure 12). The face regions showed little response to any of the eccentricity annuli, regardless of eccentricity. No functionally defined ROI showed a bias for central stimuli. Responses to the eccentricity stimuli were strongest in the more dorsal regions of lateral PFC (Figure 12C). The pattern of results was reproducible (Figure 13).
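The central-versus-peripheral comparison can be summarized per ROI with a normalized bias index. The Python sketch below is a hypothetical illustration, not the analysis used for Figure 12: it assumes responses are stored per annulus as (inner, outer) eccentricity bounds in degrees, uses the 1.5° and 20° boundaries stated above, and computes (periphery − center) / (|periphery| + |center|). The function name and example values are invented for illustration.

```python
# Minimal sketch of a peripheral-vs-central eccentricity bias index per ROI.
# ASSUMPTION: the index formula, the (inner_deg, outer_deg) dictionary keys,
# and the example values are hypothetical, not the paper's analysis.
from statistics import mean

CENTER_MAX_DEG = 1.5       # central annulus spans 0-1.5 deg
PERIPHERY_MAX_DEG = 20.0   # peripheral annuli span 1.5-20 deg


def eccentricity_bias(responses_by_annulus):
    """Return (periphery - center) / (|periphery| + |center|).

    `responses_by_annulus` maps (inner_deg, outer_deg) to a response, e.g.
    percent signal change. Positive values indicate a peripheral bias,
    negative values a central bias, and 0.0 no bias (or no response at all).
    """
    center = [r for (inner, outer), r in responses_by_annulus.items()
              if outer <= CENTER_MAX_DEG]
    periphery = [r for (inner, outer), r in responses_by_annulus.items()
                 if inner >= CENTER_MAX_DEG and outer <= PERIPHERY_MAX_DEG]
    c, p = mean(center), mean(periphery)
    denom = abs(p) + abs(c)
    return 0.0 if denom == 0 else (p - c) / denom


# Hypothetical ROIs: a scene-like ROI driven more by peripheral annuli,
# and a face-like ROI that barely responds to any annulus.
scene_like = {(0.0, 1.5): 0.25, (1.5, 5.0): 0.5, (5.0, 20.0): 1.0}
face_like = {(0.0, 1.5): 0.0, (1.5, 5.0): 0.0, (5.0, 20.0): 0.0}
print(eccentricity_bias(scene_like))  # 0.5
print(eccentricity_bias(face_like))   # 0.0
```

A normalized index keeps ROIs with very different overall response magnitudes comparable, but it becomes unstable when both responses are near zero, which is why the sketch returns 0.0 in that case. For weakly responsive ROIs such as the face regions, the raw responses are more informative than the index.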

Selectivity and relationship to IT

The data presented here were collected as part of a broader project aimed at mapping the relative locations of functional regions in the fronto-temporal network to uncover the organizational principles governing object vision. Figure 14 directly compares the category selectivity of each IT and PFC region; the IT and PFC data were collected using the same stimuli, in the same experimental sessions. As described previously (Verhoef et al., 2015), selectivity in inferior temporal cortex tends to increase from posterior to anterior regions, consistent with a hierarchy of processing along the posterior-anterior axis of IT. We found no evidence for a hierarchy among the functionally biased regions of PFC: the more posterior regions (near 8Av) showed the same selectivity as the more anterior regions (near 47/12) (Figure 14A). In PFC, the face patches showed a more distinctive response profile than any of the other visually driven ROIs (Figure 9), prompting us to analyze them separately from the other functionally defined ROIs. Selectivity in the disparity-biased, color-biased, and scene-biased regions of PFC was almost perfectly predicted by selectivity in IT (data points situated just below the unity diagonal), consistent with the idea that these PFC regions acquire their selectivity from IT. Frontal face-biased regions, by contrast, showed considerably lower selectivity for faces than their IT counterparts (p = 6.3 × 10⁻¹⁰; Figure 14B). Finally, selectivity in IT was stronger for stimuli defined by category (mean selectivity for faces and scenes) than for stimuli defined by visual feature (mean selectivity for color and disparity) (p = 3.5 × 10⁻¹¹); we found a corresponding difference in PFC, where selectivity was stronger for faces and scenes than for color and disparity (p = 5 × 10⁻⁵).
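The comparison against the unity diagonal can be made concrete with a short sketch. It assumes a hypothetical contrast-style selectivity index, (R_pref - R_nonpref) / (|R_pref| + |R_nonpref|), pairs each PFC ROI with the IT ROI defined by the same stimulus, and tests the mean PFC - IT difference with a paired sign-flip permutation test. None of these choices is taken from the paper: this section does not state how selectivity was defined or which test produced the reported p-values, and the sign-flip test is a generic stand-in. All names and values are hypothetical.

```python
# Minimal sketch: PFC vs IT selectivity relative to the unity diagonal.
# ASSUMPTIONS: the selectivity index, the ROI pairing, and the sign-flip
# test are hypothetical stand-ins, not the paper's documented analysis.
import random
from statistics import mean


def selectivity_index(r_pref, r_nonpref):
    """Contrast index in [-1, 1]; 1 means response only to the preferred class."""
    denom = abs(r_pref) + abs(r_nonpref)
    return 0.0 if denom == 0 else (r_pref - r_nonpref) / denom


def unity_offsets(pairs):
    """PFC - IT selectivity for each (it, pfc) pair; negative = below y = x."""
    return [pfc - it for it, pfc in pairs]


def sign_flip_pvalue(diffs, n_iter=10000, seed=0):
    """Two-sided paired permutation test of mean(diffs) against zero.

    Under the null hypothesis each paired difference is equally likely to be
    positive or negative, so signs are flipped at random to build the null.
    """
    rng = random.Random(seed)
    observed = abs(mean(diffs))
    hits = 0
    for _ in range(n_iter):
        flipped = [d if rng.random() < 0.5 else -d for d in diffs]
        if abs(mean(flipped)) >= observed:
            hits += 1
    return (hits + 1) / (n_iter + 1)  # add-one correction avoids p = 0


# Hypothetical (IT, PFC) selectivity pairs for eight ROIs.
pairs = [(0.6, 0.55), (0.5, 0.48), (0.7, 0.66), (0.4, 0.37),
         (0.55, 0.5), (0.65, 0.6), (0.45, 0.44), (0.5, 0.46)]
diffs = unity_offsets(pairs)
print(round(mean(diffs), 3))           # -0.036
print(sign_flip_pvalue(diffs) < 0.05)  # True
```

A sign-flip test makes no normality assumption about the paired differences. With n pairs, though, the smallest attainable two-sided p-value is about 2/2^n, so a handful of ROI pairs cannot yield very small p-values; more paired observations would be needed for that.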

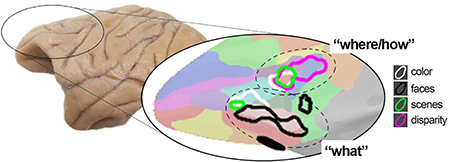

Discussion

This paper reports a systematic visual stimulus-driven functional organization of lateral prefrontal cortex in alert macaque monkeys. With respect to anatomical landmarks, the precise location of the functional activation to each stimulus (faces, scenes, bodies, colors, disparity) varied across the animals, but the relative location of the activation patterns was conserved within each animal. Visually driven responses were focused in two locations: a more dorsal-posterior location in DLPFC, in the ventral prearcuate region (in or near anatomical parcel 8Av); and a ventral location in VLPFC that extended into orbital PFC (near anatomical parcel 47/12). Face patches were in the ventral location and were characterized by a striking lack of response to non-face stimuli. Color-biased regions were also found in the ventral location but did not overlap face patches. One scene-biased region could be consistently localized with different scene contrasts and overlapped the disparity-biased region and another color-biased region to define the DLPFC focus. Taken together, the results are consistent with the domain-specificity hypothesis (Romanski, 2007) and suggest that visual information enters PFC through two networks: a dorsal stream that leverages multiple visual signals, including disparity, color, and scene information, to flexibly enable a range of cognitive behaviors such as visual search and object-in-place memory; and a more ventral stream implicated in decoding object and social information. This formulation is consistent with a wide range of electrophysiological and lesion studies (Bichot et al., 2015; Kaskan et al., 2016; Kiani et al., 2015; Lara and Wallis, 2014; Markowitz et al., 2015; Padoa-Schioppa and Conen, 2017; Riley et al., 2017; Rudebeck et al., 2017; Wilson et al., 2007).
The functional organization is reminiscent of a proposal by Goldman-Rakic (1996), in which DLPFC is preoccupied with spatial cognition (extending to PFC the “where” stream of parietal cortex, with which DLPFC is strongly connected) and VLPFC is engaged in object cognition (extending to PFC the “what” stream of temporal cortex, with which VLPFC is strongly connected). In her formulation, however, the “where” network in PFC resided above the principal sulcus, not in the prearcuate region as found here. Others have recast this set of parallel inputs to PFC as computing “how” and “what” (O’Reilly, 2010) (Figure 15).

Figure 15. Visual stimulus-driven functional organization of PFC (top) compared to an early proposal of Goldman-Rakic (bottom).

The present experiments sought to address what governs the flow of visual information into PFC. We hypothesized that PFC shows a visually driven functional organization because PFC receives a strong projection from IT (Barbas, 1988; Borra et al., 2009; Romanski et al., 1999; Ungerleider et al., 1989; Webster et al., 1994) and IT itself has a visually driven functional organization (Lafer-Sousa and Conway, 2013; Verhoef et al., 2015). Single-unit, fMRI, and lesion studies have shown evidence for patchy responses in PFC to color (Bichot et al., 1996; Mishkin and Manning, 1978; Ninokura et al., 2004; Rushworth et al., 1997), scenes (de Borst et al., 2012; Gutchess et al., 2005), faces (Scalaidhe et al., 1997; Tsao et al., 2008), and disparity (Theys et al., 2012). These responses were obtained in different animals across studies. Because there is considerable individual variability in the location of functional responses relative to anatomical landmarks (Janssens et al., 2014), it is impossible to assess the spatial relationships among response patterns across studies, precluding a direct evaluation of the visually driven functional organization across PFC from the published literature. Moreover, the responses in prior studies were often elicited while the animals performed cognitive tasks, confounding the interpretation of the response patterns (are responses caused by task engagement or by visual stimulus presentation?). Here we overcame these limitations by measuring responses in alert monkeys that were passively fixating but not otherwise engaged in a cognitive task, and by measuring responses to a battery of visual stimuli in the same animals, controlling for individual differences in the localization of any given functional domain.

Neurons across lateral PFC often show task-dependent responses (Asaad et al., 1998; Bichot et al., 1996; Borra et al., 2011; Freedman and Assad, 2016; Gallese et al., 1996; Miller et al., 2002; Passingham et al., 2000; Rizzolatti et al., 1996; Sakagami et al., 2001). The stimulus-driven responses we describe do not conflict with these observations; rather, they show that passive viewing is sufficient to drive PFC responses. Nonetheless, the task dependence shown in prior studies raises a concern: could the stimulus-driven responses observed here be attributed to familiarity with the stimuli? We doubt it. Two of the animals were entirely naïve, having only ever been trained to fixate. The other two animals had previously participated only in low-level color-detection tasks (Gagin et al., 2014; Stoughton et al., 2012). We found no differences in the pattern of color responses between the animals that had participated in the detection tasks (monkeys M1 and M2) and the animals that were naïve (monkeys M3 and M4).

We interpret the present findings in the context of evidence showing that retinal images are sufficient to explain “core object recognition” operations in IT (Baldassi et al., 2013; DiCarlo et al., 2012). This evidence suggests that the pattern of retinal signals can drive activity in brain regions involved in cognition. We hypothesize that the role of the visually driven responses in PFC is to recruit operations within PFC that direct attention to likely relevant stimuli, influencing visual-cortex computations and guiding executive function through top-down regulation (Crowe et al., 2013). Within this framework, we predict that the stimuli eliciting the highest responses in lateral PFC are those defined by semantic category (i.e., content) rather than by visual features (color or disparity). The results presented here are consistent with this prediction. Across functional ROIs, the stimuli that elicited the highest responses were faces, bodies, and scenes. For example, color-biased regions (defined by a greater response to color than to achromatic gratings) responded most strongly to images of bodies. This observation raises an intriguing possibility: PFC responses could serve as an assay of behavioral relevance (one could measure PFC responses to an exhaustive stimulus set and assign behavioral relevance based on those responses).

Although the stimuli varied in their behavioral relevance, this variability does not explain the parcellation of PFC evident in the data. Rather, the systematic organization suggests a functional division of labor within PFC and uncovers a possible bottleneck in the flow of visual information into PFC. Presumably through rich interconnections across PFC (Yeterian et al., 2012), this visual information is made available to most parts of lateral PFC, which would explain why many studies have found neurons reflecting task demands distributed throughout much of PFC (Buschman et al., 2011; Rainer et al., 1998; Rao et al., 1997; Stokes, 2015). The results make a surprising prediction: lesions of a visually driven zone would reduce, across PFC, the task sensitivity of neurons to the visual information associated with the lesioned zone, whereas lesions outside a visually driven zone would have little impact on task sensitivity.

The number and distribution of frontal face patches varied among animals, but the patches were restricted to VLPFC (in anatomical parcels 47/12L and 47/12O), extending into orbital PFC (including parcels 11 and 13). It has been assumed that face patches are distinguished from the rest of PFC by a relatively strong response to faces. But there is an alternative organizational scheme: face responses could be relatively strong throughout PFC, with face patches defined by a relatively weak response to non-face stimuli. By comparing the responses to a large battery of stimuli in the same subjects and assessing the absolute response to each stimulus, the results here show for the first time that the face patches fit this alternative account: face patches were characterized by a distinctive lack of response to non-face stimuli, rather than an especially strong response to faces. This observation may reconcile an apparent paradox. On the one hand, work on multisensory regions of lateral PFC suggests that the frontal face patches may be specialized for social communication (Romanski, 2012), which would explain why face patches respond selectively, and perhaps exclusively, to faces. On the other hand, the broadly distributed response to faces across visually responsive PFC predicts the lack of precise connectivity between the frontal face patches and IT face patches (Grimaldi et al., 2016), and suggests that sensitivity to faces can contribute to many computations within PFC. It is perhaps premature, however, to draw strong conclusions relating functional organization to anatomical connections between IT and PFC: anatomical tracers have not yet been injected into the IT face patch AF, which, by virtue of its location in the anterior portion of IT, is the most likely to project to PFC (Romanski et al., 1999; Webster et al., 1994).
Future work using fMRI to guide anatomical tracer injections is needed to determine the extent to which patchy connectivity is related to the patchy stimulus-driven functional organization of IT and PFC.