Abstract

Background

An information technology solution to provide a real-time alert to the nursing staff is necessary to assist in identifying patients who may have sepsis and avoid the devastating effects of its late recognition. The objective of this study is to evaluate the perception and adoption of sepsis clinical decision support.

Methods

A cross-sectional survey was conducted over a three-week period in 2015 in a major tertiary care facility. A sepsis alert had been launched in five pilot units (surgery, medical-ICU, step-down, general medicine, and oncology), and nurses from these units were surveyed. Frequencies and summary statistics were computed, and Chi-square and nonparametric Kendall tests were used to determine the significance of associations and correlations, respectively, across six evaluation domains.

Results

A total of 151 nurses responded (53% response rate). Survey questions addressed six domains: usability, accuracy, impact on workload, improved performance, provider preference, and physician response. Agreement regarding physician response differed significantly between units (p=0.0136). Across levels of exposure to the alert, differences were significant for improved performance (p=0.0068) and borderline for physician response (p=0.0503). The strongest correlations were between usability and the domains of accuracy (τ=0.64), performance (τ=0.66), and provider preference (τ=0.62), and between provider performance and provider preference (τ=0.67).

Discussion

Performance and preference of providers were evaluated to identify strengths and weaknesses of the sepsis alert. Effective presentation of the alert, including how and what is displayed, may offer better cognitive support in identifying and treating septic patients.

Keywords: Sepsis, alert, usability testing, clinical decision support tool

INTRODUCTION

Sepsis, a deadly combination of infection and inflammation, is a considerable burden on healthcare services, with far-reaching economic costs. The disease develops in approximately one of every twenty-three hospital admissions1 and, with increasing incidence and high case-fatality, accounts for nearly half of all hospital deaths.2 Early recognition and treatment are paramount to reducing mortality. However, unlike those of trauma, stroke, or acute myocardial infarction, the initial signs of sepsis are subtle and can easily be missed. When treatment is delayed, sepsis can rapidly progress to multiple organ dysfunction syndrome, shock, and death. Interventions that can reduce sepsis mortality exist, but their effectiveness depends on early administration; timely recognition is therefore critical.3 Information systems are thus needed to identify and triage patients at risk of developing sepsis.

Clinical decision support (CDS) is a key functionality of health information technology that delivers evidence-based clinical knowledge at the point of care.4–6 When applied effectively, CDS increases quality of care, enhances outcomes, helps to avoid errors, improves efficiency, reduces costs, and boosts provider and patient satisfaction.7 However, acceptance of many types of CDS is low. Real-time CDS is overridden or ignored by clinicians 91% of the time because they are behind schedule, find the alert misleading, or their patients do not meet certain criteria (such as age or health condition).8 Other studies found automated, real-time alerts to be modestly effective in improving performance of key tasks by increasing awareness of the need for intervention.9

At the bedside, clinicians are increasingly overwhelmed by information and must largely rely on pattern recognition and professional experience to comprehend complex clinical data and treat patients in a timely manner. To combat the late recognition of sepsis, our health system implemented a commercial solution that provides real-time alerts to nursing staff to assist in identifying potentially septic patients. The objective of this survey was to identify and explore nurses’ perceptions and the clinical impact of an automated CDS sepsis alert prior to a systemwide expansion.

METHODS

Environment

Christiana Care Health System is a not-for-profit teaching hospital with 1,080 hospital beds and 53,621 annual hospital admissions. As part of an ongoing commitment to sepsis quality improvement initiatives, Christiana Care launched a commercially available sepsis alert in five clinical pilot units. A planned evaluation of its performance and impact on providers was completed prior to its system-wide implementation. The pilot unit providers consisted of nurses from surgery, medical intensive care unit (MICU) step-down, general medicine, and oncology. A step-down unit provides an intermediate level of care for patients whose requirements fall between those of the general ward and the intensive care unit (ICU).10

The sepsis alert continuously monitors for abnormalities in key clinical indicators of sepsis, including vital signs, white blood cell count, lactate, bilirubin, and creatinine. These data are extracted from the Electronic Health Record (EHR) and analyzed by the sepsis tool for abnormalities in each parameter. When a potentially septic patient is identified, users are notified through an active, standardized alert structure within the EHR platform. The alert is configured to notify the correct clinicians as early as possible using pagers, asynchronous alerts, and an Emergency Department tracking board. Once the alert is triggered, the message is sent to the patient’s care team, including the physician of record and the current nurse, the eCare team, and a group of MICU nurses who provide oversight of the clinical response.
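The monitor-and-notify loop described above can be illustrated with a simple rule check. This is a minimal sketch only, not the commercial tool’s logic, which is not described here: the parameter names, the numeric thresholds, and the "at least two abnormal parameters" firing rule are hypothetical assumptions chosen for readability, and the recipient list simply mirrors the groups named in the text.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds for illustration only; the vendor's actual
# parameters and cut-offs are not described in this paper.
# Each entry maps a parameter to (low, high) bounds; None means "no bound".
THRESHOLDS = {
    "heart_rate": (None, 90.0),   # beats/min
    "resp_rate": (None, 20.0),    # breaths/min
    "temperature": (36.0, 38.3),  # degrees C
    "wbc": (4.0, 12.0),           # x10^9 cells/L
    "lactate": (None, 2.0),       # mmol/L
    "bilirubin": (None, 2.0),     # mg/dL
    "creatinine": (None, 2.0),    # mg/dL
}

# Recipient groups named in the text; the routing itself is simplified.
RECIPIENTS = ["physician of record", "current nurse", "eCare team", "MICU nurse group"]

MIN_ABNORMAL = 2  # hypothetical firing rule: at least two abnormal parameters


@dataclass
class Alert:
    patient_id: str
    abnormal: list[str]
    recipients: list[str]


def abnormal_parameters(values: dict[str, float]) -> list[str]:
    """Return the monitored parameters whose values fall outside their bounds."""
    flagged = []
    for name, value in values.items():
        if name not in THRESHOLDS:
            continue  # ignore data the rule does not monitor
        low, high = THRESHOLDS[name]
        if (low is not None and value < low) or (high is not None and value > high):
            flagged.append(name)
    return flagged


def evaluate(patient_id: str, values: dict[str, float]) -> Optional[Alert]:
    """Fire one alert, addressed to every recipient group, if enough parameters are abnormal."""
    flagged = abnormal_parameters(values)
    if len(flagged) >= MIN_ABNORMAL:
        return Alert(patient_id, flagged, list(RECIPIENTS))
    return None


latest = {"heart_rate": 112, "resp_rate": 24, "temperature": 37.1, "lactate": 1.4}
alert = evaluate("patient-001", latest)
print(alert.abnormal if alert else "no alert")  # ['heart_rate', 'resp_rate']
```

Keeping thresholds in a single table makes it easy to see how unit-specific cut-offs, which several nurses requested, could be swapped in without changing the evaluation code.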

Survey Design

We designed and conducted a cross-sectional survey over a three-week period in 2015 of the nurses in the five pilot units receiving the sepsis alert; 151 of 284 nurses responded. Questions addressed six domains: usability, accuracy, impact on workload, improved performance, provider preference, and physician response. The survey aimed to capture a broad overview of a complex process to help identify strengths as well as opportunities for improvement. Question formats included Likert scales, drop-down menus, ‘yes’/‘no’ options with ‘unsure’/‘undecided’ choices, and open-ended feedback. Study data were collected and managed using Research Electronic Data Capture (REDCap) tools hosted internally at Christiana Care.11 REDCap is a secure, web-based application that allows direct entry of data elements into an electronic database, minimizing data transcription errors.

Analysis

Frequencies and summary statistics of the survey data were computed. We tested the association of the specified domains with primary outcomes whose distributions could potentially be affected by these criteria. We also looked for correlations across domains to detect agreement among the survey questions. Because the survey data are ordinal, Chi-square tests and nonparametric Kendall tests were used to determine the significance of associations and correlations, respectively. A p-value <0.05 was considered statistically significant. In a secondary analysis, the domains were analyzed by participant characteristics, including unit type, years of clinical experience, and exposure to the sepsis alert.
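As a sketch of the Chi-square association test mentioned above, the snippet below computes Pearson’s chi-square statistic for a contingency table (for example, unit by agree/do-not-agree) and its p-value from the chi-square upper tail. It is written in plain Python to stay self-contained, and the function names are hypothetical; in practice a statistics package such as SciPy’s `scipy.stats.chi2_contingency` would normally be used, noting that SciPy applies a Yates continuity correction to 2×2 tables by default while this sketch does not. The counts in the example are invented for illustration and are not study data.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) for a chi-square variable with df degrees of freedom.

    Uses the power series of the regularized lower incomplete gamma function
    P(a, z) with a = df / 2 and z = x / 2, and returns 1 - P(a, z).
    Adequate for the moderate statistics typical of small survey tables.
    """
    if x <= 0:
        return 1.0
    a, z = df / 2.0, x / 2.0
    term = 1.0 / a
    total = term
    n = 1
    while term > total * 1e-15:
        term *= z / (a + n)
        total += term
        n += 1
    lower = total * math.exp(a * math.log(z) - z - math.lgamma(a))
    return max(0.0, 1.0 - lower)


def chi_square_independence(table: list[list[int]]) -> tuple[float, int, float]:
    """Pearson chi-square test of independence, without continuity correction.

    Assumes every row and column total is positive.
    Returns (statistic, degrees of freedom, p-value).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df, chi2_sf(stat, df)


# Toy 2x2 table (hypothetical counts, not study data):
# rows = two units, columns = agree / do not agree.
stat, df, p = chi_square_independence([[10, 20], [20, 10]])
print(f"chi2={stat:.3f}, df={df}, p={p:.4f}")
```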

RESULTS

A total of 151 nurses from five pilot units responded to the survey, a 53% (151/284) response rate. Each group was well represented, with the highest participation in the oncology unit (Table 1). Respondents’ experience ranged from <1 year to ≥21 years. Responses were stratified by clinical setting, frequency of patients triggering the alert, and years of experience, both as a nurse and as a nurse on the current unit. To understand the current state of CDS at Christiana Care, participants were asked about the tools currently available to them. Ninety-seven percent responded that they currently have tools available to aid in decision-making, and 97% that they have tools available to identify a patient’s physiological deterioration in a timely manner. Additionally, 92% responded that they had received the appropriate amount of training on the sepsis alert. Almost all respondents (96%) had received a sepsis alert for a patient they were treating; of those, 65% had a patient trigger the alert more than once (i.e., the same patient receiving multiple advisories).

Table 1.

Participant demographics and distribution of responses to domain questions.

| Participant demographics | Category | Participants, n (% of total) |
|---|---|---|
| Pilot units | Surgery | 16 (10.6%) |
| | MICU step-down | 33 (21.9%) |
| | General floor | 50 (33.1%) |
| | Oncology | 52 (34.4%) |
| Years of experience on current unit | <1 year | 15 (9.9%) |
| | 1–2 years | 31 (20.5%) |
| | 3–5 years | 25 (16.6%) |
| | 6–10 years | 29 (19.2%) |
| | 11–15 years | 24 (15.9%) |
| | 16–20 years | 11 (7.3%) |
| | ≥21 years | 16 (10.6%) |
| Years of experience as a nurse | <1 year | 7 (4.6%) |
| | 1–2 years | 23 (15.2%) |
| | 3–5 years | 23 (15.2%) |
| | 6–10 years | 34 (22.5%) |
| | 11–15 years | 17 (11.3%) |
| | 16–20 years | 15 (9.9%) |
| | ≥21 years | 32 (21.2%) |
| Exposure to the alert system, among the 137 (95.8%) who have received an alert | 1–4 alerts | 69 (50.4%) |
| | 5–9 alerts | 40 (29.2%) |
| | ≥10 alerts | 28 (20.4%) |

| Domain and item, n (%) | Strongly Agree | Agree | Undecided | Disagree | Strongly Disagree |
|---|---|---|---|---|---|
| Usability | | | | | |
| 1. The alert provides clear clinical guidance. | 47 (32.9%) | 81 (56.6%) | 5 (3.5%) | 10 (7.0%) | 0 (0.0%) |
| 2. The alert is useful in identifying deteriorating patients. | 33 (23.7%) | 78 (56.1%) | 10 (7.2%) | 16 (11.5%) | 2 (1.4%) |
| Accuracy | | | | | |
| 1. All patients who trigger the sepsis alert should be started on the sepsis pathway. | 19 (13.4%) | 30 (21.1%) | 12 (8.5%) | 67 (47.2%) | 14 (9.9%) |
| 2. The alert is accurate in identifying deteriorating patients. | 17 (12.2%) | 60 (43.2%) | 17 (12.2%) | 40 (28.8%) | 5 (3.6%) |
| Improved Performance | | | | | |
| 1. The alert improves my ability to formulate an effective management plan. | 27 (19.4%) | 67 (48.2%) | 14 (10.0%) | 27 (19.4%) | 4 (2.9%) |
| Provider Preference | | | | | |
| 1. The alert gives me greater confidence in providing clinical care to my patients. | 15 (10.8%) | 62 (44.6%) | 23 (16.5%) | 34 (24.5%) | 5 (3.6%) |
| Physician Response | | | | | |
| 1. Physicians give me clinical direction based on the sepsis alert. | 20 (14.1%) | 95 (66.9%) | 11 (7.7%) | 16 (11.3%) | 0 (0.0%) |
| 2. Physicians are receptive when I contact them regarding a sepsis alert. | 19 (13.3%) | 88 (61.5%) | 9 (6.3%) | 27 (18.9%) | 0 (0.0%) |

| Domain and item, n (%) | Yes | Unsure | No |
|---|---|---|---|
| Impact on Workload | | | |
| 1. The alert has impacted/changed the plan of care for a patient I was treating. | 77 (55.8%) | 21 (15.2%) | 40 (29.0%) |
| Provider Preference | | | |
| 1. I receive feedback regarding patients that trigger the sepsis alert. | 51 (36.7%) | 36 (25.9%) | 52 (37.4%) |

| Domain, n (%) | Greatly Increase | Slightly Increase | Same | Slightly Decrease | Greatly Decrease |
|---|---|---|---|---|---|
| Impact on Workload | 12 (8.6%) | 81 (58.3%) | 39 (28.1%) | 4 (2.9%) | 3 (2.2%) |
| Improved Performance | 11 (7.9%) | 46 (33.1%) | 76 (54.7%) | 5 (3.6%) | 1 (0.7%) |

All six domains, including usability and physician response, were assessed (Table 1). Nurses’ opinions were quantified as the percentage of respondents to each item who ‘strongly agreed’, ‘agreed’, were ‘undecided’, ‘disagreed’, or ‘strongly disagreed’ that the parameter is a quality of the system.
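As a worked example of how the percentages in Table 1 are obtained, the snippet below divides each response count by the number of nurses who answered that item (which varies by item; 143 answered the first usability question) and collapses ‘strongly agree’ and ‘agree’ into a single agreement share. The counts are copied from Table 1; the helper names and the collapsing step are illustrative assumptions, not necessarily how Figure 1 was computed.

```python
# Counts copied from Table 1, usability item 1, in the order:
# strongly agree, agree, undecided, disagree, strongly disagree
usability_q1 = [47, 81, 5, 10, 0]


def percentages(counts: list[int]) -> list[float]:
    """Percentage of item respondents choosing each level, rounded to 0.1."""
    n = sum(counts)
    return [round(100 * c / n, 1) for c in counts]


def percent_agree(counts: list[int]) -> float:
    """Share choosing 'strongly agree' or 'agree' (the first two levels)."""
    return round(100 * (counts[0] + counts[1]) / sum(counts), 1)


print(sum(usability_q1))            # 143
print(percentages(usability_q1))    # [32.9, 56.6, 3.5, 7.0, 0.0]
print(percent_agree(usability_q1))  # 89.5
```

Because each item has its own denominator, percentages should be compared across items rather than raw counts.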

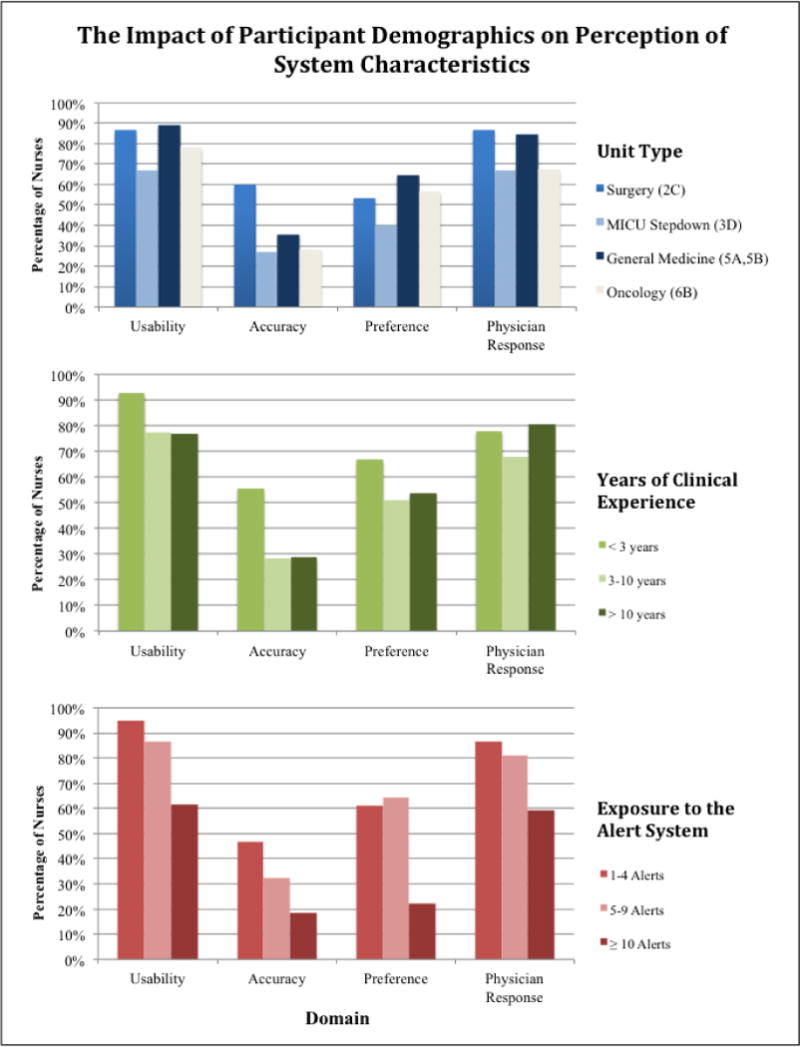

Survey Domains by Unit

The majority of nurses from all units agreed that the alert is usable (Figure 1). However, the level of agreement regarding accuracy differed significantly between units (p=0.0486). For example, 29% of MICU step-down nurses and 15% of oncology nurses felt the alert was not accurate in identifying deteriorating patients. Only 26% of MICU step-down, 34% of general medicine, and 31% of oncology nurses agreed that all patients who trigger the sepsis alert should be started on the sepsis pathway. Nurses from surgery and general medicine felt that physicians were receptive to the alerts (93% and 95%, respectively), but fewer MICU step-down and oncology nurses agreed (68% and 70%, respectively). The level of agreement regarding physician response also differed significantly between units (p=0.0136).

Figure 1.

Interaction of domains and unit type, clinical experience, and exposure to the alert.

Survey Domains by Clinical Experience

The majority of nurses disagreed that the alert was accurate in identifying deteriorating patients and that all patients who triggered the sepsis alert should be started on the sepsis pathway (deterioration specific to sepsis) (Figure 1). There were no significant differences based on clinical experience, although less experienced nurses tended to rate the alert as more accurate than nurses with moderate or extensive experience (59% vs 26% and 31%, respectively).

Survey Domains by Exposure to the Sepsis Alert

The number of patients who triggered a sepsis alert served as a surrogate for level of exposure to the alert (Figure 1). The most frequently alerted nurses found the system significantly less usable (69%) than the least exposed (95%) and moderately exposed (86%) nurses (p=0.0124). Nurses with less exposure to the alert preferred it more (62%) than nurses who were alerted most frequently (22%) (p=0.0055). Accuracy ratings were low and declined with exposure: 47% for minimally exposed nurses, 32% for moderately exposed nurses, and 19% for the most exposed (p=0.0425). The majority of nurses at all levels of alert exposure reported an increase in workload (68% of all respondents); nurses with the most exposure reported an increase more frequently, but this difference was not statistically significant (p=0.529). Differences across exposure levels were also significant for improved performance (p=0.0068) and borderline for physician response (p=0.0503).

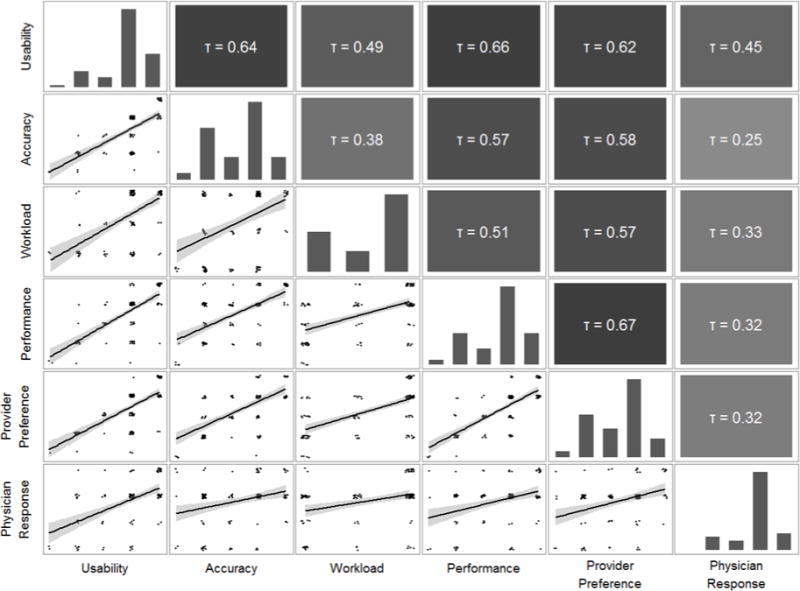

Associations between Domains

We determined associations between domains (Figure 2). Strong correlations were found between questions related to usability and the domains of accuracy (perceived accuracy) (τ=0.64), performance (the ability to formulate an effective management plan) (τ=0.66), and provider preference (greater confidence in providing care) (τ=0.62). The strongest correlation was between provider performance (the ability to formulate an effective management plan) and provider preference (greater confidence in providing care) (τ=0.67).

Figure 2.

Associations between domains using Kendall Tau-b correlation coefficients. Tau-b ranges from −1.0 to +1.0, with 0 indicating the absence of association. Coefficients > +0.6 or < −0.6 indicate strong agreement or strong inversion, respectively.
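To make the Tau-b coefficient in Figure 2 concrete, the sketch below computes Kendall’s tau-b for two paired ordinal response vectors (for example, nurses’ Likert codes on a usability item and on an accuracy item) by counting concordant and discordant pairs and correcting for ties in each variable. It is a plain O(n²) illustration with hypothetical function names and toy data; it does not compute a p-value, and a statistics package (e.g., `scipy.stats.kendalltau`, whose default variant is tau-b) would normally be used. The ±0.6 cut-off follows the caption above.

```python
import math


def kendall_tau_b(x: list[int], y: list[int]) -> float:
    """Kendall's tau-b for paired ordinal data, with tie correction."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    concordant = discordant = 0
    untied_x = untied_y = 0  # pairs not tied on x, pairs not tied on y
    n = len(x)
    for i in range(n):
        for j in range(i + 1, n):
            dx = (x[i] > x[j]) - (x[i] < x[j])  # sign of the x difference
            dy = (y[i] > y[j]) - (y[i] < y[j])  # sign of the y difference
            if dx != 0:
                untied_x += 1
            if dy != 0:
                untied_y += 1
            if dx * dy > 0:
                concordant += 1
            elif dx * dy < 0:
                discordant += 1
    denom = math.sqrt(untied_x * untied_y)
    if denom == 0:
        raise ValueError("tau-b is undefined when either variable is constant")
    return (concordant - discordant) / denom


def describe(tau: float) -> str:
    """Label strength using the caption's +/-0.6 cut-off."""
    if tau > 0.6:
        return "strong agreement"
    if tau < -0.6:
        return "strong inversion"
    return "weaker association"


# Toy Likert codes (5 = strongly agree ... 1 = strongly disagree), not study data.
usability = [5, 4, 4, 2, 1]
accuracy = [5, 4, 3, 2, 2]
tau = kendall_tau_b(usability, accuracy)
print(round(tau, 3), describe(tau))  # 0.889 strong agreement
```

Tau-b rather than plain tau-a is the natural choice for Likert data because many responses share the same level, and the tie correction keeps the coefficient within −1 to +1.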

Alert Indications of Stability

The survey also yielded insights into the relationship between the alert and patient stability. Participants were asked, in a “check all that apply” format, what level of severity the sepsis alert can represent. Responses varied: 16% felt the alert could represent a stable patient, 98% a patient at risk of becoming unstable, and 39% an unstable patient.

Qualitative Analysis Regarding Systemwide Expansion

Participants were asked open-ended questions regarding the systemwide expansion (moving the alert from the five pilot units to all non-ICU inpatient units). The majority of participants (63%) felt the alert should be expanded, 16% did not, and 21% were unsure. Nurses with fewer years of clinical experience recommended expansion more frequently (100%) than those with greater clinical experience (55%), as did nurses less exposed to the alert (69%) compared with those more exposed (44%). A higher percentage of oncology nurses recommended expansion (80%) than nurses from general medicine (60%), MICU step-down (60%), and surgery (42%). Positive feedback conveyed that the alert increased awareness and benefited patients, other units, and new nurses. Negative feedback included that the alert is not unit-specific, fires repetitively for the same patient, increases workload, and has no positive impact on critical thinking. One of the most important comments was that the logic that triggers the alert needs improvement, reflecting a perceived lack of sensitivity and specificity in the current trigger tool.
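The comment about sensitivity and specificity can be made concrete with the standard definitions below. If each alert firing were later adjudicated against a sepsis diagnosis (an assumption; the survey did not collect such data), sensitivity, specificity, and positive predictive value (PPV) would follow from the four cells of a confusion matrix. The counts in the example are hypothetical and chosen only to show how a tool can be fairly sensitive and specific while most of the alerts nurses actually see are false positives, the pattern several respondents described.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tp: int  # alert fired, patient septic
    fp: int  # alert fired, patient not septic
    fn: int  # no alert, patient septic
    tn: int  # no alert, patient not septic

    def sensitivity(self) -> float:
        """Share of septic patients who triggered the alert."""
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        """Share of non-septic patients who did not trigger the alert."""
        return self.tn / (self.tn + self.fp)

    def ppv(self) -> float:
        """Share of fired alerts that were true sepsis cases."""
        return self.tp / (self.tp + self.fp)


# Hypothetical counts for illustration only (not study data).
counts = ConfusionCounts(tp=45, fp=255, fn=5, tn=2695)
print(f"sensitivity={counts.sensitivity():.2f}")  # sensitivity=0.90
print(f"specificity={counts.specificity():.2f}")  # specificity=0.91
print(f"PPV={counts.ppv():.2f}")                  # PPV=0.15
```

When sepsis is relatively uncommon among monitored patients, even a modest false-positive rate produces a low PPV, which is one way repeated non-actionable alerts can arise.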

DISCUSSION

Assessing clinicians’ adoption of CDS can help us understand how such systems influence clinical decision-making and tailor a sepsis alert tool to support a timely, appropriate response. The planned approach must address the human factors inherent to implementation science and information display, along with the science of predictive analytics. This survey identified strengths of the alert and opportunities for its improvement, and provided a unique perspective on end users’ perceptions across multiple domains.

By stratifying results by unit type, clinical experience, and alert exposure, we uncovered varying perspectives that indicate vulnerabilities in alert design. Units differed in their views of both accuracy and physician receptiveness. This suggests that a one-size-fits-all trigger may not be appropriate for all units, and that physicians may be less responsive when the alert is perceived as inaccurate. Collecting years of both general and unit-specific clinical experience provided context for the nurses’ viewpoints on recognizing and treating sepsis in a variety of settings. There were no statistically significant differences based on clinical experience, in general or on the current unit, but anecdotally, the system was preferred by those with less clinical experience. This suggests that CDS guidance may be more valuable for those less experienced with sepsis.

We used the number of sepsis advisories as a metric of alert exposure. In general, nurses who encountered the alert less often gave higher ratings for usability, accuracy, preference, and physician response than nurses with more exposure to it, and nurses with the most exposure gave poor ratings across all six domains. This suggests that prior experience with inaccurate alerts, and the resulting alert fatigue, may breed mistrust and dissatisfaction. It may also indicate that more frequently exposed nurses, having come to mistrust the algorithm, rely on their own clinical judgment instead.

We identified positive correlations between multiple domain questions. Nurses who agreed or strongly agreed that the alert was accurate also agreed that it was usable, improved their performance, and gave them greater confidence in treating their patients. The converse also held: those who disagreed that the alert was accurate in identifying deteriorating patients felt that it was not usable and did not improve their ability to formulate an effective management plan.

Perceptions of accuracy point to significant limitations in the algorithm used to fire the alert. Standardized in 1991, the original conceptualization of sepsis hinged on meeting at least two of four Systemic Inflammatory Response Syndrome (SIRS) criteria. These definitions, focused solely on inflammatory excess, were the basis for inclusion in sepsis trials but were challenged for their lack of specificity and clinical utility.12–14 The current definition from the Sepsis Definitions Task Force is no longer based on SIRS criteria; instead, it rests entirely on the objective presence of acute organ dysfunction as a downstream marker of the (mal)adaptive host response to infection.15 The perception that the alert is not accurate is also reflected in open-ended feedback: “The sepsis alert does not take into account conditions that are already in place or variables that are normal for certain patients”, and “I have not found the sepsis alert to be efficient in caring for patients. They are often triggered by a slight change in vitals which creates additional work that is not beneficial. I have not cared for a patient with an alert that has actually been septic.”
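For reference, the 1991 SIRS-based screen discussed above can be expressed as a two-of-four rule. The sketch uses the commonly cited SIRS thresholds: temperature above 38 °C or below 36 °C; heart rate above 90/min; respiratory rate above 20/min or PaCO2 below 32 mmHg; and white blood cell count above 12,000/mm³, below 4,000/mm³, or more than 10% immature (band) forms. It is not the logic of the alert evaluated in this survey, the class and function names are hypothetical, and the example patient is invented to show why the rule is nonspecific: tachycardia and tachypnea from hemorrhage satisfy it without any infection.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Observation:
    temp_c: float                       # body temperature, degrees C
    heart_rate: float                   # beats/min
    resp_rate: float                    # breaths/min
    wbc_per_mm3: float                  # white blood cells per mm^3
    paco2_mmhg: Optional[float] = None  # arterial PaCO2, if measured
    band_pct: Optional[float] = None    # immature (band) forms, % of WBC


def sirs_criteria_met(o: Observation) -> int:
    """Count how many of the four SIRS criteria are met."""
    temperature = o.temp_c > 38.0 or o.temp_c < 36.0
    heart = o.heart_rate > 90
    respiratory = o.resp_rate > 20 or (o.paco2_mmhg is not None and o.paco2_mmhg < 32)
    white_cells = (
        o.wbc_per_mm3 > 12_000
        or o.wbc_per_mm3 < 4_000
        or (o.band_pct is not None and o.band_pct > 10)
    )
    return sum([temperature, heart, respiratory, white_cells])


def sirs_positive(o: Observation) -> bool:
    """Two-of-four rule: SIRS is present when at least two criteria are met."""
    return sirs_criteria_met(o) >= 2


# Hypothetical non-infected patient after hemorrhage: tachycardic, tachypneic, afebrile.
bleeding = Observation(temp_c=36.8, heart_rate=118, resp_rate=24, wbc_per_mm3=9_000)
print(sirs_criteria_met(bleeding), sirs_positive(bleeding))  # 2 True
```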

Clinician alert fatigue continues to be a vexing problem, particularly when alerts are non-actionable and fade into noise.16,17 Participants indicated that the alert needs to be actionable, meaning that all patients who trigger it should be started on the sepsis pathway. Best practices suggest that, to be managed effectively, alerts should be triggered and visible when the end user needs to make an important decision (e.g., prescribing, therapeutic, or diagnostic). In that way, an actionable alert can be used immediately, which can reduce alert fatigue and support data-driven best practices. Education can also reduce confusion and variability in alert interpretation, since nurses felt the alert could represent multiple levels of patient deterioration or none at all. Participants also expressed a desire for transparency regarding the algorithm and alert triggers as a way of supporting clinical judgment and provider autonomy.

This nursing assessment is a snapshot of current perception. Like any survey, it is subject to limitations including sampling bias, reliability, and external validity. Although a multidisciplinary team carefully crafted the survey questions and structured the domains, participants completing the survey online may still have misinterpreted some questions. It is essential that both the performance and the preferences of healthcare providers be evaluated to identify the strengths and weaknesses of the sepsis alert and to guide its inclusion and adoption. An assessment of clinical care and process-of-care measures would complement this provider survey and the evaluation of patient outcomes.

There are multiple proposals at the local level to launch the sepsis alert in new clinical environments to assist with real-time identification. Evaluating nurses’ exposure to the sepsis alert and their preferences regarding expansion offers unique insight. Consistent with the domain findings, our results suggest that the tool is more appropriate for less experienced clinicians (in terms of clinical years and sepsis care) and general medicine units than for ICU or Emergency Department environments. Many patients have non-specific vital sign abnormalities and organ dysfunction metrics related to trauma, hemorrhage, or a cardiac etiology that would cause the alert to fire in a non-infected patient. Prematurely exposing nurses to this tool without amending thresholds and criteria may lead to staff frustration and non-compliance with alarm indications.18–20 Indiscriminate use of warnings can lead to high override rates and alert fatigue, in which staff ignore these and other warnings, diminishing the effectiveness of all warnings and reducing the potential benefits of other CDS tools.

Acknowledgments

Financial support: This work was supported by an Institutional Development Award (IDeA) from the National Institute of General Medical Sciences of the National Institutes of Health under grant number U54-GM104941 (PI: Binder-Macleod), and by the National Library of Medicine of the National Institutes of Health under grant number 1R01LM012300-01A1 (PI: Miller).

Footnotes

Author contributions: All authors have seen and approved the manuscript, and contributed significantly to the work.

Potential conflicts of interest: Authors declare no conflicts of interest. Authors declare that they have no commercial or proprietary interest in any drug, device, or equipment mentioned in the submitted article.

References

1. Elixhauser A, Friedman B, Stranges E. Septicemia in US Hospitals, 2009. Rockville, MD; 2011. (HCUP Statistical Brief #122).

2. Liu V, Escobar G, Greene J, et al. Hospital deaths in patients with sepsis from 2 independent cohorts. JAMA. 2014;312(1):90–92. doi: 10.1001/jama.2014.5804.

3. Ahrens T, Tuggle D. Surviving severe sepsis: early recognition and treatment. Crit Care Nurse. 2004;(Suppl):2–13.

4. Snyder-Halpern R. Assessing healthcare setting readiness for point of care computerized clinical decision support system innovations. Outcomes Manag Nurs Pract. 1999;(3):118–127.

5. Spooner S. Mathematical foundations of decision support systems. In: Berner ES, editor. Clinical Decision Support Systems: Theory and Practice. New York: Springer; 1999. pp. 35–60.

6. Sim I, Gorman P, Greenes R, et al. Clinical decision support systems for the practice of evidence-based medicine. J Am Med Inform Assoc. 2001;8(6):527–534. doi: 10.1136/jamia.2001.0080527.

7. Centers for Medicare and Medicaid Services. Clinical Decision Support: More Than Just ‘Alerts’ Tipsheet. eHealth University.

8. Sittig D, Krall M, Dykstra R, Russell A, Chin H. A survey of factors affecting clinician acceptance of clinical decision support. BMC Med Inform Decis Mak. 2006;6:6. doi: 10.1186/1472-6947-6-6.

9. Nelson J, Smith B, Jared J, Younger J. Prospective trial of real-time electronic surveillance to expedite early care of severe sepsis. Ann Emerg Med. 2011;57(5):500–504. doi: 10.1016/j.annemergmed.2010.12.008.

10. Prin M, Wunsch H. The role of stepdown beds in hospital care. Am J Respir Crit Care Med. 2014;190(11):1210–1216. doi: 10.1164/rccm.201406-1117PP.

11. Harris P, Taylor R, Thielke R, Payne J, Gonzalez N, Conde J. Research electronic data capture (REDCap): a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377–381. doi: 10.1016/j.jbi.2008.08.010.

12. Vincent JL. Dear SIRS, I’m sorry to say that I don’t like you. Crit Care Med. 1997;25(2):372–374. doi: 10.1097/00003246-199702000-00029.

13. Kaukonen K, Bailey M, Pilcher D, Cooper J, Bellomo R. Systemic inflammatory response syndrome criteria in defining severe sepsis. N Engl J Med. 2015;372(17):1629–1638. doi: 10.1056/NEJMoa1415236.

14. Levy M, Fink M, Marshall J, et al. SCCM/ESICM/ACCP/ATS/SIS International Sepsis Definitions Conference. Crit Care Med. 2003;31(4):1250–1256. doi: 10.1097/01.CCM.0000050454.01978.3B.

15. Singer M, Deutschman C, Seymour C, et al. The third international consensus definitions for sepsis and septic shock (Sepsis-3). JAMA. 2016;315(8):801–810. doi: 10.1001/jama.2016.0287.

16. Graham K, Cvach M. Monitor alarm fatigue: standardizing use of physiological monitors and decreasing nuisance alarms. Am J Crit Care. 2010;19(1):28–34. doi: 10.4037/ajcc2010651.

17. Kowalczyk L. Joint Commission warns hospitals that alarm fatigue is putting patients at risk. Boston Globe. 2013.

18. Kowalczyk L. Joint Commission to hospitals: tackle problem of alarm fatigue among staff. Boston Globe. 2013.

19. Mitka M. Joint Commission warns of alarm fatigue: multitude of alarms from monitoring devices problematic. JAMA. 2013;309(22):2315–2316. doi: 10.1001/jama.2013.6032.

20. Rayo M, Moffatt-Bruce S. Alarm system management: evidence-based guidance encouraging direct measurement of informativeness to improve alarm response. BMJ Qual Saf. 2015;24(4):282–286. doi: 10.1136/bmjqs-2014-003373.