Abstract

Use of online panel vendors in research has grown over the past decade. Panel vendors are organizations that recruit participants into a panel to take part in web-based surveys, and match panelists to a target audience for data collection. We used two panel vendors to recruit families (N = 411) with a 16- to 17-year-old teen to participate in a randomized control trial (RCT) of an online family-based program to prevent underage drinking and risky sexual behaviors. Our article addresses the following research questions: 1) How well do panel vendors provide a sample of families who meet our inclusion criteria to participate in an RCT? 2) How well do panel vendors provide a sample of families who reflect the characteristics of the general population? 3) Does the choice of vendor influence the characteristics of families that we engage in research? Despite the screening techniques used by the panel vendors to identify families who met our inclusion criteria, 23.8% were found ineligible when research staff verified their eligibility by direct telephone contact. Compared to the general U.S. population, our sample had more Whites and more families with higher education levels. Finally, across the two panel vendors, there were no significant differences in the characteristics of families, except for mean age. The online environment provides opportunities for new methods to recruit participants in research studies. However, innovative recruitment methods need careful study to ensure the quality of their samples.

INTRODUCTION

The use of online panel vendors in survey research has dramatically grown over the past decade (Baker et al., 2010). Panel vendors are organizations that recruit participants into a panel to take part in web-based surveys, and match panelists to a target audience for data collection (Berrens, Bohara, Jenkins‐Smith, Silva, & Weimer, 2003; Craig et al., 2013). These panels (e.g. GFK’s KnowledgePanel, Ipsos i-Say Panel, Qualtrics Online Sample, Toluna Healthcare Practice) are usually maintained by market research firms (Baker et al., 2010). The purpose of this paper is to describe our efforts using panel vendors to recruit families (N = 411) with a 16–17 year old teen to participate in a randomized control trial of a web-based family prevention program on underage drinking and risky sexual behaviors.

The terms, “online panel” and “Internet panel”, refer to both nonprobability-based panels and probability-based panels, or panels that have been formed by recruiting participants through random sampling methods such as random digit dialing (Baker et al., 2010; Hays, Liu, & Kapteyn, 2015; Heeren et al., 2008). Most panel vendors use nonprobability sampling for recruitment, which does not involve random selection (Baker et al., 2010). Nonprobability sampling is where the probability for each element in a population (e.g. person, household, etc.) to be included in a sample is unknown, and not every element has a certain chance of being included (Judd, Smith, & Kidder, 1991).

The most common type of nonprobability sampling used by panel vendors is purposive sampling, which aims to achieve a sample of people that is representative of a defined target population. The defined target population is most commonly the U.S. population as measured by the U.S. Census. Researchers can also implement quotas (e.g. age, gender, race/ethnicity, region), or a set of demographic characteristics, to ensure that they have achieved a sample that meets their requirements (Baker et al., 2010).

Panel vendors market their panels by a variety of methods (e.g. website banner ads, emails, direct mail) to recruit prospective panelists. They present panel involvement as an opportunity to earn money, have fun taking surveys, and voice opinions on new products and services (Baker et al., 2010). Therefore, most panels are formed by people who opt into the panel by visiting a panel company’s website and provide personal and demographic information that is then used to select participants for specific surveys (Baker et al., 2010).

Panels that have been formed using nonprobability sampling methods are referred to as online convenience panels (Sell, Goldberg, & Conron, 2015), nonprobability panels, volunteer panels, opt-in panels, and access panels (Baker et al., 2010; Erens et al., 2014).

Panel vendors offer a wide range of services to researchers. These services include selecting and inviting panelists, verifying the identities of participants, maintaining databases, compensating respondents for participation, and securely transmitting panelists’ survey data back to the researchers’ host computer or mainframe for further analyses (Craig et al., 2013). Panel vendors utilize a variety of validation technologies to verify the identities of their panelists. These technologies include verifying against third-party databases, checking email addresses via formal and known Internet service providers (ISPs), confirming mailing addresses via postal records, and conducting “reasonableness” tests. These tests are performed by data mining so that panel vendors, for example, can examine whether a panelist’s age is appropriate compared to the ages of his/her children, or whether a panelist’s reported income is within the expected range for his/her stated profession. Panel vendors can also inspect the digital fingerprints of panelists to prevent duplication by IP (Internet Protocol) address (which uniquely identifies computers and other digital devices on the Internet) and ensure that panelists are correctly reporting their geographical locations (Baker et al., 2010).
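To make the validation ideas above concrete, the following is a minimal sketch of a "reasonableness" test on parent and child ages and of duplicate detection by IP address. It is illustrative only and is not the vendors' actual procedure: the `Panelist` record, the `MIN_PARENT_CHILD_GAP` threshold, and the example data are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Panelist:
    # Hypothetical record layout; real vendor databases are not described in the text.
    panelist_id: str
    age: int
    child_ages: list[int]
    ip_address: str


# Assumed threshold: minimum plausible age gap between a parent and a child.
MIN_PARENT_CHILD_GAP = 13


def age_is_reasonable(p: Panelist) -> bool:
    """True when the panelist is plausibly old enough to parent every listed child."""
    return all(p.age - child_age >= MIN_PARENT_CHILD_GAP for child_age in p.child_ages)


def duplicate_ips(panelists: list[Panelist]) -> dict[str, list[str]]:
    """Map each IP address seen more than once to the panelist IDs that share it."""
    by_ip: dict[str, list[str]] = {}
    for p in panelists:
        by_ip.setdefault(p.ip_address, []).append(p.panelist_id)
    return {ip: ids for ip, ids in by_ip.items() if len(ids) > 1}


# Made-up example panel (documentation-range IP addresses).
panel = [
    Panelist("p1", 44, [16], "203.0.113.5"),
    Panelist("p2", 24, [17], "203.0.113.9"),
    Panelist("p3", 39, [16, 12], "203.0.113.5"),
]
flagged_age = [p.panelist_id for p in panel if not age_is_reasonable(p)]
shared_ip = duplicate_ips(panel)
```

A shared IP address or an implausible age gap is only a signal for follow-up, since household members can legitimately share a connection.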

The benefits of using online panels in studies include quick access to a large participant pool, and targeted recruitment directed by available demographic data (Erens et al., 2014; Heeren et al., 2008). Directed recruitment can include panelists who live in certain geographical locations (Miller et al., 2017), and rare and dispersed populations like sexual minorities (Datta, Hickson, Reid, & Weatherburn, 2013; Sell et al., 2015). Some studies have demonstrated that using panel vendors to recruit a sample that is reflective of the national population and meets certain criteria (for example, adults between the ages of 18 and 40) can occur rapidly (Craig et al., 2013; Erens et al., 2014; Heeren et al., 2008). Researchers may also use Internet panels because they present a cost-efficient approach to obtaining a sample. While data collection by Internet panels may prove more cost efficient than traditional methods such as telephone interviews (Bethell, Fiorillo, Lansky, Hendryx, & Knickman, 2004; Mook et al., 2016), the cost efficiencies of this approach can erode if studies require extensive tracking and follow-ups of enrolled participants to increase their rates of response (Bethell et al., 2004).

Despite their growing popularity, several issues with panel vendors must be considered (Baker et al., 2010; Berrens et al., 2003). In recent years, researchers have expressed concerns about the quality of the data obtained from nonprobability panels. First, panels vary in their quality (Baim, Galin, Frankel, Becker, & Agresti, 2009; Baker et al., 2010; Craig et al., 2013; Erens et al., 2014) depending on how panel vendors build and maintain them (Baker et al., 2010). These practices include where vendors draw their panelists from, what types of incentive programs they offer, how they confirm the identity of panelists, how they identify inattentive panelists, and how often they contact panelists to complete surveys within a given time period (Baker et al., 2010). Frequent survey participation might increase conditioning effects, whereby participation in previous surveys affects the panelists’ responses to questions on the current survey. Some panels underrepresent lower socioeconomic respondents (Craig et al., 2013; Miller et al., 2017) and racial/ethnic minorities (Erens et al., 2014; Guillory et al., 2016). Second, some panelists provide false information to qualify for a questionnaire. These individuals assume different identities or misrepresent their qualifications to obtain the incentives offered for completing surveys (Baker et al., 2010). Finally, an overlap of panelists among different panel vendors can exist. Studies that recruit participants from multiple panels run the risk of sampling the same panelist multiple times (Baker et al., 2010). In one study using an online survey, among the seven panel vendors that were tested, six had a 25% overlap in respondents (Craig et al., 2013).

Panel vendors generally are used in survey research (Baker et al., 2010), especially for cross-sectional surveys. Different challenges arise when recruiting participants for interventions requiring follow-ups over time. Some vendors want to retain the contact information for their panelists and do not release this information to researchers, leaving follow-up efforts under the control of the panel vendor. For research interventions involving more than one family member, challenges include ensuring that both members of the family meet the criteria for inclusion and are willing to participate. Additionally, to ensure completion of the intervention in a timely manner, nudging participants to engage requires more effort than a one-time participation in a survey, and researchers may want to oversee this process themselves.

Research Questions

For our research, families with one parent and one teen (16–17 years old) were needed to participate in a randomized control trial to test the efficacy of an interactive online family-based prevention program, SmartChoices4Teens. This program was designed to help families prevent underage drinking and risky sexual behaviors. Both parent and teen needed to agree to random assignment, complete surveys, and participate in the program as assigned. We recruited a sample of 411 families from two nonprobability panels (135 from vendor A and 276 from vendor B). These families were randomized into either the intervention (n = 206) or control condition (n = 205). Control families received a webpage from SmartChoices4Teens that directed them to online resources for alcohol, sexual health, and other related topics.

Before reaching our recruitment goal, we exhausted the available pool of participants from the first panel vendor, and subsequently hired a second panel vendor (see below on how the two vendors were selected). Given the concerns about panel quality, we raised questions about the reliability of information provided by panelists to qualify for the study, how reflective our sample is of the general population, and whether families would be substantially different in characteristics across vendors, biasing future efficacy outcomes. These questions are important for understanding the strengths and limitations of using panel vendors to provide a sample for our study. Therefore, we address the following questions in this paper: 1) How well do panel vendors provide a sample of families who meet our inclusion criteria to participate in an RCT? 2) How well do panel vendors provide a sample of families who reflect the characteristics of the general population? 3) Does the choice of vendor influence the characteristics of families that we are able to engage in research? Our findings inform our recommendations on using online nonprobability panels for future intervention studies that intend to use this approach to recruit families.

METHODS

Choosing Panel Vendors for the Study

The study design required direct access to families for enrollment, to encourage completion of surveys, to motivate use of the intervention, and to provide additional assistance as needed. We reviewed four possible panel vendors, and eliminated two because they would not allow us to directly contact participants. The two remaining vendors (panel vendors A and B) did not require that they host the baseline or follow-up online surveys. Rather, they agreed to direct participants to our study’s website once enrolled. As an added incentive, they offered points to panelists participating in the research that could be redeemed for gift rewards (e.g., Amazon gift cards). Combined with the incentives provided by the research project, this added value to participating. Both panels were composed only of active panelists, defined as panel members who had accessed a survey within the last six months. The vendors used key demographic information on panelists to institute quotas on up to three specific demographic features to help attain a sample that is reflective of families nationally. We chose ethnicity, education, and income as characteristics to match to the U.S. Census. They also utilized a “double-opt-in” procedure, whereby after participants passed the screener and agreed to join the study, they were sent a confirmation email with a link to verify their intention to participate. Finally, the vendors used validation technologies to verify that members were who they said they were and located where they said they were.

Sample Recruitment

Using panel vendor A, we recruited families from November 2014 to June 2015, at which point we exhausted the available families who met our criteria. To complete our data collection, we contacted panel vendor B and continued with our recruitment of families from June 2015 to December 2015. Panel vendors sent an email invitation to potential participants that directed them to a vendor-hosted website to complete a screener. The screener included the following questions: Do you have a 16- or 17-year-old teen? Is your teen male or female? Do you own a computer or tablet that meets these requirements [desktop or laptop computer running Windows 7 or higher and/or Mac OS X 10.5 or higher; iPad or Android tablet running iOS 6.0 and above, or Android 4.1.1 and above; Chrome 10 or above, Internet Explorer 9.0 or above, Firefox 10.0 or above, Safari 4.0 or above web browser with JavaScript enabled; cookies enabled]? Do you have broadband Internet services?

Originally, panelists who met the inclusion criteria were provided a detailed description of the research study and asked to provide informed consent for themselves and their teen by marking checkboxes. Respondents then completed an online enrollment form, in which they provided their names, their teens’ names, phone numbers, and email addresses. Within the first 10 families, the researchers noticed suspicious enrollment information (e.g. names for the parent and teen were the same, identical email addresses), and began a verification process. Separate calls were made to parents and to their teens after families passed the screener and their contact information was sent to the researchers. Parents were asked to confirm that they had signed up to participate in the study and to verify their phone numbers, email and mailing addresses, and that they had a teen who was 16–17 years old. Staff also called the teens to confirm their information (i.e. age, phone number, and email address). Eligible parents were provided with online informed consent documents and their teens were provided with online assent forms to confirm willingness to participate.
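The kinds of red flags described above (matching parent and teen names, identical email addresses) could be screened for automatically before the telephone calls. The sketch below is a hypothetical illustration, not the procedure the research staff used; the function name and the example values are assumptions, and in this study every family was still verified by phone.

```python
def enrollment_flags(parent_name: str, teen_name: str,
                     parent_email: str, teen_email: str) -> list[str]:
    """Return the reasons an enrollment form looks suspicious (empty list if none)."""
    flags = []
    # Compare case-insensitively and ignore stray whitespace from form entry.
    if parent_name.strip().lower() == teen_name.strip().lower():
        flags.append("parent and teen names match")
    if parent_email.strip().lower() == teen_email.strip().lower():
        flags.append("parent and teen emails match")
    return flags


# Made-up enrollment entries.
suspicious = enrollment_flags("Pat Doe", "pat doe ", "pat@example.com", "PAT@example.com")
clean = enrollment_flags("Pat Doe", "Sam Doe", "pat@example.com", "sam@example.com")
```

An empty result does not establish eligibility; it only means that neither of these two checks fired.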

After completion of the informed consent forms, both parents and teens were then emailed (separately) links to the baseline survey. Three email reminders were sent at three-day intervals or until survey completion. If this did not result in survey completion, a text message was sent before the family was dropped from further contact. Once parent and teen completed the baseline survey, we considered the family enrolled. Following the survey, families were randomized into either the intervention or control condition.

The experimental families received SmartChoices4Teens, and the parents and teens were provided with separate and unique log-in usernames and passwords by email to access the intervention online. This family-based program was created by adapting materials from two evidence-based prevention programs: Family Matters (Bauman et al., 2002; Bauman, Foshee, Ennett, Hicks, & Pemberton, 2001; Bauman, Foshee, Ennett, Pemberton, et al., 2001) and A Parent Handbook for Talking with College Students about Alcohol (Ichiyama et al., 2009; Turrisi, Abar, Mallett, & Jaccard, 2010; Turrisi, Jaccard, Taki, Dunnam, & Grimes, 2001). Adaptations tailored the materials to older teens (16–17 years of age) and to an online interactive delivery mechanism. Baseline and three follow-up surveys (at 6 months, 12 months, and 18 months) were included in the design to capture pre- and post-effects of the intervention.

This study protocol was approved by the Pacific Institute for Research and Evaluation’s Institutional Review Board (IRB00000630).

RESULTS

Panel Vendors Providing a Sample of Families

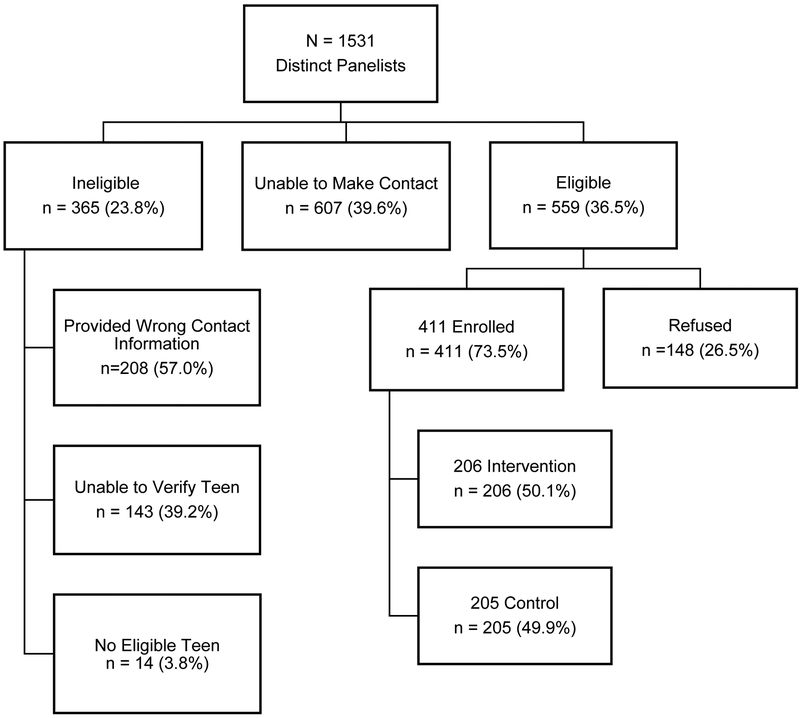

From the two vendors, a total of 1561 families responded to the panel vendors’ invitations. Between the two vendors, 30 families overlapped, which research staff discovered by comparing panelists’ email addresses and other contact information, leaving us with 1531 distinct panelists (parents). From this total, 36.5% were deemed eligible (see Figure 1) when both parent and teen were verified by research staff. However, we were unable to make direct contact (i.e. person would not answer or return our calls after multiple attempts) with a notable portion of families (39.6%). We therefore could not determine their eligibility. The final sample constituted slightly more than a quarter (26.8%) of the original pool of distinct contacts provided by the panel vendors.

Figure 1.

Participant flow chart of screened panelists during the recruitment process into SmartChoices4Teens.

Despite what appeared to be a large pool of families that met the vendors’ screener for participation, 23.8% of families were considered ineligible after our verification procedures. There are a number of reasons why families were ineligible (see Figure 1), with the most common reason being that the information they provided was inaccurate (e.g. contacts were given for restaurants, businesses, or organizations, contacts were given to persons who were unaware of signing up for the study, name on voicemail did not match the name provided on the enrollment form). There were other families that were ineligible because we could not speak to the teen to confirm that the family had an eligible teen (39.2%), even though we made contact with the panelist who reported to be the parent. A relatively small proportion of families did not meet our inclusion criteria (3.8%), which occurred when parents reported during the verification calls that they did not have a teen who was between 16–17 years old.
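The recruitment-flow figures reported above can be checked with simple arithmetic. The short sketch below uses only numbers stated in the text; the variable names are ours.

```python
responders = 1561   # families responding to the vendors' invitations
overlap = 30        # families that appeared in both vendors' lists
enrolled = 411      # final randomized sample

# Distinct panelists after removing cross-vendor overlap.
distinct = responders - overlap

# Share of the distinct pool that ended up enrolled.
enrolled_pct = round(100 * enrolled / distinct, 1)

# Reported verification outcomes as percentages of the distinct pool.
shares = {"eligible": 36.5, "unable to contact": 39.6, "ineligible": 23.8}
total_share = round(sum(shares.values()), 1)
```

The three outcome shares sum to 99.9% rather than 100% because each reported percentage is rounded to one decimal place.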

Comparison of Sample to General Population Characteristics

The majority (84.7%) of the recruited parents were mothers (biological or in the mother role) (see Table 1) with a mean age of 43.7 years. The largest racial group enrolled was White (82.0%), followed by Black (11.7%); 7.3% of parents identified as Hispanic or Latino. Most participants were married (71.8%). About 10.9% of the parents reported having been divorced; 6.3% reported being single and having never been married; and another 6.8% reported not being married but currently living with someone. The largest share of participants had graduated from college (31.1%), followed by those with some college (29.0%), and about 15.8% reported having attended graduate school. About 44.0% of the recruited parents reported being full-time employed. Full-time homemakers made up the second largest percentage (30.4%) of those who reported their employment status.

Table 1.

Parent demographics and household characteristics of sample in percentages

| Characteristic | Total (n = 411) | Panel A (n = 135) | Panel B (n = 276) |
|---|---|---|---|
| Age* | | | |
| Mean | 43.7 | 42.8 | 44.2 |
| S.D. | 6.7 | 6.3 | 6.9 |
| Sex | | | |
| Female | 84.7 | 81.5 | 86.2 |
| Male | 15.3 | 18.5 | 13.8 |
| Ethnicity | | | |
| Hispanic or Latino | 7.3 | 7.4 | 7.3 |
| Race | | | |
| American Indian/Alaskan Native | .2 | .7 | .0 |
| Asian | 1.9 | .7 | 2.5 |
| Black | 11.7 | 11.1 | 12.0 |
| White | 82.0 | 83.7 | 81.2 |
| Multiracial | 2.7 | 1.5 | 3.3 |
| Other or unknown | 1.5 | 2.2 | 1.1 |
| Marital Status | | | |
| Married | 71.8 | 72.6 | 71.4 |
| Widowed | 1.0 | .7 | 1.1 |
| Divorced | 10.9 | 11.1 | 10.9 |
| Separated | 3.2 | 3.0 | 3.3 |
| Single, never married | 6.3 | 5.9 | 6.5 |
| Not married, but living with someone | 6.8 | 6.7 | 6.9 |
| Highest Level of Education Completed | | | |
| Didn’t graduate high school | 1.0 | .0 | 1.4 |
| Graduated high school | 12.7 | 14.8 | 11.6 |
| Vocational or business school | 10.5 | 11.1 | 10.1 |
| Some college | 29.0 | 24.4 | 31.2 |
| Graduated from college | 31.1 | 33.3 | 30.1 |
| More than 4 years of college (graduate school) | 15.8 | 16.3 | 15.6 |
| Employment Status | | | |
| Full-time homemaker | 30.4 | 24.6 | 33.2 |
| Retired | 1.7 | 1.5 | 1.8 |
| Unemployed | 3.7 | 4.5 | 3.3 |
| Part-time employed | 15.4 | 15.7 | 15.3 |
| Full-time employed | 44.4 | 50.7 | 41.2 |
| Other (disabled, student, self-employed) | 4.4 | 3.0 | 5.1 |
| Other Children Under Age 18 in Household | | | |
| 0 | 41.6 | 39.1 | 43.1 |
| 1 child | 29.9 | 36.8 | 26.8 |
| 2 children | 15.1 | 12.8 | 16.3 |
| 3 children or more | 12.9 | 11.3 | 13.8 |
| Total Number of Persons Living in Household including Parent and Teen | | | |
| 2 persons | 4.4 | 5.2 | 4.0 |
| 3 persons | 20.9 | 22.2 | 20.3 |
| 4 persons | 32.6 | 37.8 | 30.1 |
| 5 persons or more | 42.2 | 34.8 | 45.6 |
| Total Household Income | | | |
| $20,000 or less | 7.3 | 6.9 | 7.5 |
| $20,001–$40,000 | 23.5 | 19.8 | 25.3 |
| $40,001–$60,000 | 23.5 | 27.5 | 21.5 |
| $60,001–$80,000 | 15.4 | 13.7 | 16.2 |
| $80,001–$100,000 | 11.4 | 9.2 | 12.5 |
| $100,001–$125,000 | 7.8 | 7.6 | 7.9 |
| $125,001–$150,000 | 4.8 | 5.3 | 4.5 |
| $150,001 or more | 6.3 | 10.0 | 4.5 |
| U.S. Region of Residence | | | |
| Northeast | 18.5 | 23.7 | 15.9 |
| Midwest | 26.5 | 25.2 | 27.2 |
| South | 36.5 | 34.1 | 37.7 |
| West | 18.5 | 17.0 | 19.2 |

*Difference in mean age between Panel A and Panel B: t(409) = −2.02, p < 0.05, d = .21, 95% CI [−2.80, −.04]

Regarding household characteristics, 41.6% reported that there were no other children in the household under the age of 18 other than the recruited teen. An additional 30% reported that there was a second child in the home that is under 18 years old. The largest percentage of the panelists (32.6%) were living in a household of 4 people, which included the recruited parent and teen. Only 4.4% reported that they lived in a household with only 2 persons. For total household income, 30.8% received $40,000 or less in the past year, and 18.9% received $100,001 or more.

Using the U.S. Census definitions of geographic regions of the U.S., the largest number of families reported living in the South, followed by the Midwest, and lastly by the Northeastern and Western regions of the country (Table 1).

Overall, the mean age for teens recruited in the study was 16.4. Slightly more than half were female. Nearly 10% of the teen participants reported identifying as Hispanic or Latino. The majority of the teens were White at 73.0%, followed by Black (11.7%), and multiracial teens (8.5%) (see Table 2, for demographics of recruited teens).

Table 2.

Teen demographic characteristics of sample in percentages

| Characteristic | Total (n = 411) | Panel A (n = 135) | Panel B (n = 276) |
|---|---|---|---|
| Age* | | | |
| Mean | 16.4 | 16.1 | 16.5 |
| S.D. | .5 | .3 | .5 |
| Sex | | | |
| Female | 55.3 | 53.3 | 56.2 |
| Male | 44.7 | 46.7 | 43.8 |
| Ethnicity | | | |
| Hispanic or Latino | 9.5 | 9.6 | 9.4 |
| Race | | | |
| American Indian/Alaskan Native | 1.0 | .7 | 1.1 |
| Asian | 1.9 | .7 | 2.5 |
| Black | 11.7 | 12.6 | 11.2 |
| White | 73.0 | 78.5 | 70.3 |
| Multiracial | 8.5 | 4.4 | 10.5 |
| Other or unknown | 3.5 | 3.0 | 4.3 |

*Difference in mean age between Panel A and Panel B: t(406) = −7.85, p < 0.001, d = .88, 95% CI [−.47, −.28]

Racial and ethnic backgrounds of parents in the study generally reflect the relative percentages of racial/ethnic groups in the general population according to the latest U.S. Census data (Humes, Jones, & Ramirez, 2011). However, those of American Indian/Alaskan Native, Asian, and Hispanic or Latino backgrounds are underrepresented. Additionally, no parents that reported being of Native Hawaiian/Other Pacific Islander racial status were recruited into this study.

The full-time employment status of participating parents (44.0%) is lower than the general population (62.7%). The unemployment rate reported in this study (3.6%) is also lower than the national rate of 5.3% in 2016 (U.S. Bureau of Labor Statistics, 2016). Regarding educational attainment, the percentage of parents who graduated from college (31.1%) is comparable to the general population of adults between 45–64 years old with a college degree (32%). However, those who graduated from high school or did not graduate from high school are underrepresented. Furthermore, 15.8% of parents reported that they completed graduate school compared to 12.1% among the general population of adults (45–64 years old) (Ryan & Bauman, 2016). Compared to the latest U.S. Census estimates from 2015, the sample recruited into the study largely reports a total household income comparable to the general population (Proctor, Semega, & Kollar, 2016).

According to the U.S. Census data from 2015, the greatest percentage of the population lives in the South at 37.7%, which is comparable to the share of families in the study who live in that region (36.5%). The regions of the Northeast and the Midwest are also well-represented. However, the Western region of the U.S., where 23.7% of the national population resides, is underrepresented in our sample (18.5%).

Examining Sample Differences between Two Panel Vendors

There were no significant differences between the samples in parent demographics and household characteristics, except for mean age. The sample from vendor B had a slightly higher parental mean age (M = 44.20, SD = 6.87) compared to vendor A’s sample (M = 42.79, SD = 6.29), t(409) = −2.02, p < 0.05, d = .21, 95% CI [−2.80, −.04]. There were also no significant differences between the samples with regard to teen demographics, except for mean age. The sample from vendor B had a slightly higher teen mean age (M = 16.49, SD = .50) compared to vendor A’s sample (M = 16.12, SD = .33), t(406) = −7.85, p < 0.001, d = .88, 95% CI [−.47, −.28].
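As a rough check, the parental age comparison can be approximately reproduced from the reported summary statistics. The sketch below assumes an equal-variance (pooled) independent-samples t-test, which is consistent with the reported 409 degrees of freedom (135 + 276 − 2) but is not stated explicitly in the text; the function name is ours, and small discrepancies from the published values are expected because the means and standard deviations are rounded.

```python
import math


def pooled_t(m1: float, sd1: float, n1: int, m2: float, sd2: float, n2: int):
    """Equal-variance two-sample t statistic, degrees of freedom, and Cohen's d
    computed from group means, standard deviations, and sample sizes."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))  # standard error of the mean difference
    t = (m1 - m2) / se
    d = abs(m1 - m2) / math.sqrt(pooled_var)        # effect size on the pooled SD
    return t, df, d


# Parent age: vendor A (M = 42.79, SD = 6.29, n = 135) vs. vendor B (M = 44.20, SD = 6.87, n = 276).
t, df, d = pooled_t(42.79, 6.29, 135, 44.20, 6.87, 276)
```

The teen comparison reports 406 degrees of freedom, which suggests that a few teen ages were missing; because the per-vendor counts of non-missing ages are not given, that test cannot be recomputed from the table in the same way.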

There was a significant difference in overall recruitment rates between the two vendors. From panel vendor A, 31.2% of families were enrolled in the study, as compared to 24.4% from panel vendor B [χ² (1, N = 1561) = 7.46, p < 0.01]. There was no significant difference between the two vendors in terms of the percentages of families that refused to participate. There were also no significant differences across the vendors regarding the proportions of families that were ineligible or that we were unable to contact.

DISCUSSION

In this study, we successfully utilized panel vendors to recruit a national sample of families with an older teen between 16–17 years old for an RCT. However, we also devoted considerable resources to ensure the quality of the sample. We contacted all panel members who volunteered to confirm that they met our eligibility criteria. While sufficient numbers of eligible participants were ultimately recruited, nearly a quarter of those screened were found ineligible for the study. Whether this eligibility problem occurred because potential participants were uninformed about study requirements (i.e., needing to have an age-eligible teen) or actively dissembled to gain access to the study incentives is not clear. Researchers should engage in efforts to verify eligibility when working with online panel vendors or risk enrolling participants who are ineligible. Ineligible participants may not complete all study requirements, contribute to loss at follow-up, or provide erroneous responses, all of which introduce biases. Similar concerns about the eligibility of participants recruited online have been reported by other researchers (Buller, Borland, Bettinghaus, Shane, & Zimmerman, 2014). This suggests that verifying eligibility is necessary across different modes of online recruitment, not just from online panels. Recruitment methods such as online advertising (e.g. Google AdWords, Facebook, or Craigslist) that do not involve direct contact with the participants are likely to be susceptible to this problem as well.

Beyond eligibility, the quality of recruitment procedures is critical to determining how well a target population is represented in prevention trials (Prinz et al., 2001). Our concern was to identify a sample that reflected the characteristics of the general population as closely as possible. The finding that this sample was biased towards Whites and families with higher educational attainment is similar to what other studies have revealed (Craig et al., 2013; Roster, Rogers, Albaum, & Klein, 2004). The racial difference cannot be explained by disparities in Internet access, but adults with only a high school education have lower access to the Internet than individuals with at least some college education (Pew Research Center, 2014). The extent to which these two demographic characteristics predict the success of our intervention will be further considered for subsequent research on the intervention. Finally, we found little evidence that the two panel vendors we utilized produced substantially different families, based upon their demographic characteristics. Even though slightly more panelists from vendor A enrolled in the study, the quality of the samples from both vendors (i.e. refusal to participate, ineligibility, unable to contact) did not differ. These results reduced our concerns about using more than one panel vendor for identifying a research sample.

From this experience, we provide the following recommendations for future intervention studies that intend to use panel vendors to recruit their samples: First, the small overlap in panelists between vendors (30 of 1561 responding families) suggests that using multiple panels can increase the pool of potential participants, but researchers should choose the panel(s) they use carefully. Panel vendors differ substantially in how they manage their panels, which can affect the composition and quality of individual panels. Second, choose panel vendors who allow direct contact with panelists so that they can be verified before enrolling them in the study. Third, although verification procedures increase the costs and time for recruitment, they are essential. Researchers cannot rely on the online panel providers to identify eligible participants, as revealed by those panelists who passed the vendors’ screeners by providing false information. Verification may be especially important when more complicated eligibility criteria exist, such as in this study with the need for an eligible teen who has his/her own email address that research staff can use to communicate directly and separately from the teen’s parent. Fourth, be aware that panel vendors change, for example, by merging with another panel vendor, which may impact recruitment procedures.

Researchers are turning to online recruitment methods (e.g. social media, Google ads, online message boards) (Guillory et al., 2016; Lane, Armin, & Gordon, 2015), presenting critical opportunities for research into new and innovative recruitment strategies. However, innovative recruitment methods need careful study to ensure the quality of their samples.

Acknowledgments

Funding

This research was supported by the National Institute on Alcohol Abuse and Alcoholism (NIAAA) grant R01AA020977.

Footnotes

Declaration of Conflicting Interests

The Authors declare that there is no conflict of interest.

References

- Baim J, Galin M, Frankel MR, Becker R, & Agresti J (2009). ‘Sample Surveys Based on Internet Panels: 8 Years of Learning Paper presented at the Worldwide Readership Symposium, Valencia, Spain. [Google Scholar]

- Baker R, Blumberg SJ, Brick JM, Couper MP, Courtright M, Dennis JM, . . . Groves RM (2010). Research synthesis: AAPOR report on online panels. Public Opinion Quarterly, 74(4), 711–781.

- Bauman KE, Ennett ST, Foshee VA, Pemberton M, King TS, & Koch GG (2002). Influence of a family program on adolescent smoking and drinking prevalence. Prevention Science, 3(1), 35–42.

- Bauman KE, Foshee VA, Ennett ST, Hicks K, & Pemberton M (2001). Family Matters: A family-directed program designed to prevent adolescent tobacco and alcohol use. Health Promotion Practice, 2(1), 81–96.

- Bauman KE, Foshee VA, Ennett ST, Pemberton M, Hicks KA, King TS, & Koch GG (2001). The influence of a family program on adolescent tobacco and alcohol use. American Journal of Public Health, 91(4), 604.

- Berrens RP, Bohara AK, Jenkins‐Smith H, Silva C, & Weimer DL (2003). The advent of Internet surveys for political research: A comparison of telephone and Internet samples. Political Analysis, 11(1), 1–22.

- Bethell C, Fiorillo J, Lansky D, Hendryx M, & Knickman J (2004). Online consumer surveys as a methodology for assessing the quality of the United States health care system. J Med Internet Res, 6(1), e2.

- Buller DB, Borland R, Bettinghaus EP, Shane JH, & Zimmerman DE (2014). Randomized trial of a smartphone mobile application compared to text messaging to support smoking cessation. Telemedicine and e-Health, 20(3), 206–214.

- Craig BM, Hays RD, Pickard AS, Cella D, Revicki DA, & Reeve BB (2013). Comparison of US panel vendors for online surveys. Journal of Medical Internet Research, 15(11), e260.

- Datta J, Hickson F, Reid D, & Weatherburn P (2013). STI testing without HIV disclosure by MSM with diagnosed HIV infection in England: cross-sectional results from an online panel survey. Sex Transm Infect, 89(7), 602–603. doi: 10.1136/sextrans-2013-051186

- Erens B, Burkill S, Couper MP, Conrad F, Clifton S, Tanton C, . . . Copas AJ (2014). Nonprobability Web surveys to measure sexual behaviors and attitudes in the general population: A comparison with a probability sample interview survey. J Med Internet Res, 16(12), e276. doi: 10.2196/jmir.3382

- Guillory J, Kim A, Murphy J, Bradfield B, Nonnemaker J, & Hsieh Y (2016). Comparing Twitter and Online Panels for Survey Recruitment of E-Cigarette Users and Smokers. Journal of Medical Internet Research, 18(11), e288. doi: 10.2196/jmir.6326

- Hays RD, Liu H, & Kapteyn A (2015). Use of Internet panels to conduct surveys. Behavior Research Methods, 47(3), 685–690.

- Heeren T, Edwards EM, Dennis JM, Rodkin S, Hingson RW, & Rosenbloom DL (2008). A comparison of results from an alcohol survey of a prerecruited Internet panel and the National Epidemiologic Survey on Alcohol and Related Conditions. Alcohol Clin Exp Res, 32(2), 222–229. doi: 10.1111/j.1530-0277.2007.00571.x

- Humes KR, Jones NA, & Ramirez RR (2011). Overview of Race and Hispanic Origin: 2010. Retrieved from https://www.census.gov/prod/cen2010/briefs/c2010br-02.pdf

- Ichiyama MA, Fairlie AM, Wood MD, Turrisi R, Francis DP, Ray AE, & Stanger LA (2009). A randomized trial of a parent-based intervention on drinking behavior among incoming college freshmen. Journal of Studies on Alcohol and Drugs, Supplement(16), 67–76.

- Judd CM, Smith ER, & Kidder LH (1991). Research methods in social relations (6th ed.). New York, NY: Holt, Rinehart, and Winston.

- Lane TS, Armin J, & Gordon JS (2015). Online Recruitment Methods for Web-Based and Mobile Health Studies: A Review of the Literature. J Med Internet Res, 17(7), e183. doi: 10.2196/jmir.4359

- Miller EA, Berman L, Atienza A, Middleton D, Iachan R, Tortora R, & Boyle J (2017). A Feasibility Study on Using an Internet-Panel Survey to Measure Perceptions of E-cigarettes in 3 Metropolitan Areas, 2015. Public Health Rep, 132(3), 336–342. doi: 10.1177/0033354917701888

- Mook P, Kanagarajah S, Maguire H, Adak GK, Dabrera G, Waldram A, . . . Oliver I (2016). Selection of population controls for a Salmonella case-control study in the UK using a market research panel and web-survey provides time and resource savings. Epidemiol Infect, 144(6), 1220–1230. doi: 10.1017/s0950268815002290

- Pew Research Center. (2014). Internet User Demographics. Retrieved from http://www.pewinternet.org/data-trend/internet-use/latest-stats/.

- Prinz RJ, Smith EP, Dumas JE, Laughlin JE, White DW, & Barrón R (2001). Recruitment and retention of participants in prevention trials involving family-based interventions. Am J Prev Med, 20(1), 31–37. doi: 10.1016/S0749-3797(00)00271-3

- Proctor BD, Semega JL, & Kollar MA (2016). Income and poverty in the United States. Retrieved from https://www.census.gov/content/dam/Census/library/publications/2016/demo/p60-256.pdf.

- Roster CA, Rogers RD, Albaum G, & Klein D (2004). A comparison of response characteristics from web and telephone surveys. International Journal of Market Research, 46, 359–374.

- Ryan CL, & Bauman K (2016). Educational Attainment in the United States: 2015. U.S. Census Bureau. Retrieved from https://www.census.gov/content/dam/Census/library/publications/2016/demo/p20-578.pdf.

- Sell R, Goldberg S, & Conron K (2015). The Utility of an online convenience panel for reaching rare and dispersed populations. PLoS One, 10(12), e0144011. doi: 10.1371/journal.pone.0144011

- Turrisi R, Abar C, Mallett KA, & Jaccard J (2010). An examination of the mediational effects of cognitive and attitudinal factors of a parent intervention to reduce college drinking. Journal of Applied Social Psychology, 40(10), 2500–2526.

- Turrisi R, Jaccard J, Taki R, Dunnam H, & Grimes J (2001). Examination of the short-term efficacy of a parent intervention to reduce college student drinking tendencies. Psychology of Addictive Behaviors, 15(4), 366.

- U.S. Bureau of Labor Statistics. (2016). Labor force characteristics by race and ethnicity, 2015. Retrieved from http://www.bls.gov/opub/reports/race-and-ethnicity/2015/pdf/home.pdf. Accessed October 15, 2016.