Abstract

Background

There is a shortage of health care professionals competent in diabetes management worldwide. Digital education is increasingly used in educating health professionals on diabetes. Digital diabetes self-management education for patients has been shown to improve patients’ knowledge and outcomes. However, the effectiveness of digital education on diabetes management for health care professionals is still unknown.

Objective

The objective of this study was to assess the effectiveness and economic impact of digital education in improving health care professionals’ knowledge, skills, attitudes, satisfaction, and competencies. We also assessed its impact on patient outcomes and health care professionals’ behavior.

Methods

We included randomized controlled trials evaluating the impact of digital diabetes management education for health care professionals pre- and postregistration. Publications from 1990 to 2017 were searched in MEDLINE, EMBASE, Cochrane Library, PsycINFO, CINAHL, ERIC, and Web of Science. Screening, data extraction, and risk of bias assessment were conducted independently by 2 authors.

Results

A total of 12 studies met the inclusion criteria. Studies were heterogeneous in terms of digital education modality, comparators, outcome measures, and intervention duration. Most studies comparing digital or blended education to traditional education reported significantly higher knowledge and skills scores in the intervention group. There was little or no between-group difference in patient outcomes or economic impact. Most studies were judged at a high or unclear risk of bias.

Conclusions

Digital education seems to be more effective than traditional education in improving diabetes management–related knowledge and skills. The paucity and low quality of the available evidence highlight an urgent need for well-designed studies focusing on important outcomes, such as health care professionals’ behavior, patient outcomes, and cost-effectiveness, as well as on the impact of digital education in diverse settings, including developing countries.

Keywords: evidence-based practice, health personnel, learning, systematic review, diabetes mellitus

Introduction

Diabetes is one of the biggest global public health concerns, affecting an estimated 425 million adults worldwide, a number expected to rise to 629 million by 2045 [1]. This is coupled with a shortage of health care professionals competent in delivering high-quality diabetes care [2,3]. Enhancing both the size and the competencies of the health care workforce is a priority, and improving health professions education is seen as one of the key strategies to this end [4]. Digital education, broadly defined as the use of digital technology in education, has been recognized as having the potential to improve health professions education by making it scalable, interactive, personalized, global, and cost-effective [5-7].

Past systematic reviews on digital education have focused mainly on diabetes self-management education for patients, showing an improvement in patients’ knowledge and outcomes [8-10]. The effectiveness of digital diabetes management education for health care professionals is still unknown [11]. To address this gap, we performed a systematic review to evaluate the effect of digital diabetes management education on health care professionals’ knowledge, skills, attitudes, competencies, and behaviors, as well as on patient outcomes.

Methods

Systematic Review Guidance

We followed the Cochrane Handbook for Systematic Reviews of Interventions for our methodology [12] and the Preferred Reporting Items for Systematic Reviews and Meta-Analyses statement for reporting [13]. For a detailed description of the methodology, please refer to the study by Car et al [14].

Data Sources and Searches

This review is part of an evidence-synthesis initiative on digital health professions education, where an extensive search strategy was developed for a series of systematic reviews on different modalities of digital health education for health care professionals (see Multimedia Appendix 1) [15]. The following databases were searched from January 1990 to August 2017:

The Cochrane Central Register of Controlled Trials (The Cochrane Library)

MEDLINE (Ovid)

EMBASE (Elsevier)

PsycINFO (Ovid)

Educational Resource Information Centre (ERIC; Ovid)

Cumulative Index to Nursing and Allied Health Literature (CINAHL; EBSCO)

Web of Science Core Collection (Clarivate Analytics)

We included studies in all languages and at all stages of publication. Our search strategy included gray literature sources such as Google Scholar, trial registries, theses, dissertations, and academic reports. The citations retrieved from different sources were combined into a single library and screened by 2 authors independently. We also screened references of included papers for potentially eligible studies. Discrepancies and disagreements were resolved through discussion until a consensus was reached.

Study Selection

We included randomized controlled trials (RCTs), cluster RCTs, and quasi-RCTs and excluded cross-over trials due to the high likelihood of a carry-over effect in this type of study [12]. Studies on pre- or postregistration health care professionals taking part in digital education interventions on diabetes management were considered eligible. We defined health care professionals in line with the Health Field of Education and Training (091) in the International Standard Classification of Education [16]. Studies on digital education on both type 1 and type 2 diabetes at all educational levels were included.

We defined digital education as any teaching and learning that occurs by means of digital technologies. We considered all digital education modalities eligible, including offline and online education, Serious Gaming and Gamification, Massive Open Online Courses, Virtual Reality Environments, Virtual Patient Simulations, Psychomotor Skills Trainers, and mobile learning. Eligible comparisons were traditional, blended, or another form of digital education intervention on diabetes management. Traditional education was defined as any teaching and learning taking place via nondigital educational material (eg, textbooks) or in-person human interaction (eg, lecture or seminar). Traditional education also included usual learning, such as usual revision and on-the-job learning without a specific intervention in postregistration health care professionals. Blended education was defined as teaching and learning that combines aspects of traditional and digital education. Eligible primary outcomes, measured using any validated or nonvalidated instrument, were knowledge, skills, competencies, attitudes, and satisfaction. Eligible attitude-related outcomes comprised all attitudes toward patients, new clinical knowledge, skills, and changes to clinical practice.

Eligible secondary outcomes included patient outcomes in studies on postregistration health care professionals (eg, patients’ blood pressure, blood glucose, and blood lipid levels), change in health care professionals’ behavior (ie, treatment intensification, defined as an intensity or dose increase of an existing treatment or the addition of a new treatment/class of medication), and economic impact of the intervention.

Data Extraction

In this study, 2 authors independently extracted data from studies using a structured and piloted data extraction form. We extracted information on study design, participants’ demographics, type, content and delivery of digital education, and information pertinent to the intervention. Study authors were contacted in case of unclear or missing information.

Risk of Bias and Quality of Evidence Assessment

The methodological quality of included RCTs was independently assessed by 2 authors using the Cochrane Risk of Bias Tool [12]. The risk of bias assessment was piloted between the reviewers, and we contacted study authors in case of any unclear or missing information. We assessed the risk of bias in included RCTs for the following domains: (1) random sequence generation; (2) allocation concealment; (3) blinding of participants to the intervention; (4) blinding of outcome assessment; (5) attrition; (6) selective reporting; and (7) other sources of bias [17]. Cluster RCTs were assessed using 5 additional domains: (1) recruitment bias; (2) baseline imbalance; (3) loss of clusters; (4) incorrect analysis; and (5) comparability with individually randomized trials [12].
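The reason cluster RCTs need the extra "incorrect analysis" domain can be shown with a short calculation. Analyzing a cluster trial as if individuals had been randomized overstates precision; a standard correction described in the Cochrane Handbook divides the sample size by the design effect, 1 + (m − 1) × ICC, where m is the mean cluster size and ICC the intracluster correlation coefficient. This is a minimal sketch: the function names are hypothetical, and the ICC of 0.05 in the example is an assumed value, not one reported by the included trials.

```python
def design_effect(mean_cluster_size, icc):
    """Variance inflation for a cluster-randomized trial: 1 + (m - 1) * ICC."""
    return 1 + (mean_cluster_size - 1) * icc


def effective_sample_size(n, mean_cluster_size, icc):
    """Number of individually randomized participants carrying equivalent information."""
    return n / design_effect(mean_cluster_size, icc)


if __name__ == "__main__":
    # Illustrative: 1847 patients spread over 20 sites (one arm in Table 1),
    # combined with a HYPOTHETICAL intracluster correlation of 0.05.
    m = 1847 / 20
    print(round(design_effect(m, 0.05), 2))
    print(round(effective_sample_size(1847, m, 0.05)))
```

Even a modest ICC shrinks the effective sample size several-fold when clusters are large, which is why an analysis that ignores clustering can report spuriously narrow CIs.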

Data Synthesis and Analysis

In line with Miller’s classification, a learning model for assessment of clinical competence [18], we classified outcomes based on the type of outcome measurement instruments used in the study. For example, multiple-choice questionnaires were classified as assessing knowledge and objective structured clinical examinations as assessing participants’ skills.

Although some studies reported change scores, we presented only postintervention data, as those were more commonly reported, to ensure consistency and comparability of findings. Continuous outcomes are presented using mean difference (for outcomes measured using the same measurement tool), standardized mean difference (SMD; for outcomes measured using diverse measurement tools), and 95% CIs. Dichotomous outcomes are presented using risk ratios (RRs) and 95% CIs. As we were unable to identify a clinically meaningful interpretation of effect size in the literature for digital education interventions, we interpreted effect sizes using Cohen’s rule of thumb, with an SMD greater than or equal to 0.2 representing a small effect, an SMD greater than or equal to 0.5 a moderate effect, and an SMD greater than or equal to 0.8 a large effect [19,20]. In studies that reported more than one measure for an outcome, we used the primary measure as defined by the primary study authors.
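The effect measures and the interpretation rule described above can be sketched in Python. This is a minimal illustration under stated assumptions: the text does not say which SMD variant was used, so the sketch assumes the common Hedges’ g formulation with its small-sample correction; the RR uses a Wald-type 95% CI built on the log scale; and the Cohen thresholds are the ones given in the paragraph. The mean difference is simply the difference of group means on a shared scale. All function names are hypothetical.

```python
import math


def hedges_g(m1, sd1, n1, m2, sd2, n2, z=1.96):
    """SMD as Hedges' g (group 1 minus group 2) with an approximate 95% CI."""
    # Pooled SD across both groups
    sp = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))
    d = (m1 - m2) / sp                       # Cohen's d
    j = 1 - 3 / (4 * (n1 + n2) - 9)          # small-sample correction factor
    g = j * d
    # Large-sample variance of d, scaled by j^2 to obtain the variance of g
    var_d = (n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2))
    se = math.sqrt(j**2 * var_d)
    return g, g - z * se, g + z * se


def risk_ratio(events1, n1, events2, n2, z=1.96):
    """Risk ratio (group 1 vs group 2) with a Wald CI computed on the log scale."""
    rr = (events1 / n1) / (events2 / n2)
    se_log = math.sqrt(1 / events1 - 1 / n1 + 1 / events2 - 1 / n2)
    return rr, math.exp(math.log(rr) - z * se_log), math.exp(math.log(rr) + z * se_log)


def cohen_label(smd):
    """Classify |SMD| using the Cohen thresholds adopted in this review."""
    size = abs(smd)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "moderate"
    if size >= 0.2:
        return "small"
    return "negligible"  # below 0.2; this label is chosen for the sketch only
```

For example, `cohen_label(0.78)` returns `"moderate"`, because 0.78 falls just below the 0.8 cut-off for a large effect.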

Heterogeneity and Subgroup Analyses

Heterogeneity was assessed qualitatively using information on participants, interventions, controls, and outcomes, and statistically using the I² statistic for outcomes allowing pooled analysis [17]. Due to substantial methodological, clinical, and statistical heterogeneity (I²>50%), we conducted a narrative synthesis by type of comparison: (1) digital education versus traditional education, (2) digital education versus blended education, and (3) one digital education type versus another. Subgroup analyses were not feasible owing to the small number of studies and limited information. We presented the study findings in a forest plot without pooled estimates, using the random-effects model and SMDs because the measurement scales differed.
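Although results were not pooled, the I² statistic used to judge heterogeneity, and the random-effects weighting behind a forest plot, can be sketched as follows. This assumes the DerSimonian–Laird estimator for the between-study variance τ², a common default that the text does not name; the function names are hypothetical.

```python
def heterogeneity(effects, ses):
    """Cochran's Q, I^2 (%) and DerSimonian-Laird tau^2 for per-study effects."""
    w = [1 / se**2 for se in ses]                        # inverse-variance weights
    pooled_fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    q = sum(wi * (yi - pooled_fixed) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    # Share of total variation attributable to between-study differences
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c)                        # between-study variance
    return q, i2, tau2


def random_effects_weights(ses, tau2):
    """Normalized random-effects weights 1 / (se^2 + tau^2)."""
    w = [1 / (se**2 + tau2) for se in ses]
    total = sum(w)
    return [wi / total for wi in w]
```

Because τ² is added to every study’s variance, random-effects weights are more even across studies than fixed-effect weights; an I² above 50%, the threshold used here, is conventionally read as substantial heterogeneity.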

Results

Included Studies

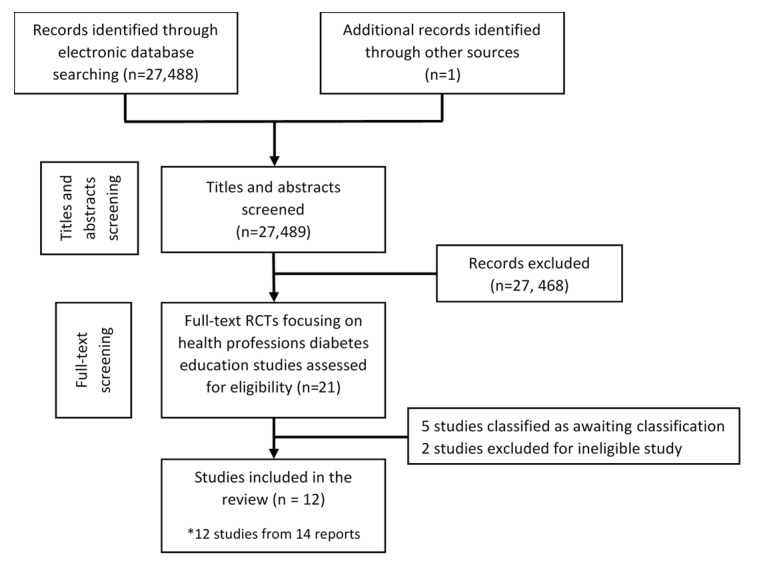

Our search strategy for a series of systematic reviews focusing on different digital health professions education modalities yielded 30,532 references. After removing 459 duplicates, we screened titles and abstracts and excluded 30,050 citations. We identified 23 potentially eligible studies, for which we retrieved and screened full texts. Of these, we included 12 studies (9 RCTs and 3 cluster RCTs), all published in English (Figure 1). One study was reported in 3 journal papers [21-23]; although presented as a cluster RCT, it randomized at the individual physician level and was therefore considered an RCT. A total of 9 studies were excluded due to ineligible study design (n=3), missing data (n=5), and ineligible participants (n=1; Figure 1).
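As a consistency check on the flow counts reported above, they reconcile if the 459 duplicates are subtracted from the 30,532 retrieved records and the 3 papers of one study are counted as separate full-text reports (12 studies, so 14 reports). The helper below is a hypothetical sketch of that arithmetic, not part of the review’s methods.

```python
def prisma_flow(records, duplicates, excluded_screening, included_studies, extra_reports):
    """Reconcile screening counts; extra_reports = additional papers of already-counted studies."""
    full_text = records - duplicates - excluded_screening
    included_reports = included_studies + extra_reports
    excluded_full_text = full_text - included_reports
    return full_text, included_reports, excluded_full_text


full_text, included_reports, excluded = prisma_flow(30_532, 459, 30_050, 12, 2)
print(full_text, included_reports, excluded)  # prints: 23 14 9
# Exclusion reasons: ineligible design, missing data, ineligible participants
assert excluded == 3 + 5 + 1
```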

Figure 1.

Preferred Reporting Items for Systematic Reviews and Meta-Analyses flowchart of included studies.

Participant Characteristics

There were 2263 health care professionals in the 12 included studies [23-34]. A third of the studies included fewer than 50 participants. The study with 3 published reports contributed 1182 patient records as a measure of clinical outcomes [21-23]. Only 1 study targeted pediatric patients with type 1 diabetes [28]; all other studies reporting patient outcomes focused on adult patients with type 2 diabetes. A total of 8 studies focused on doctors [23,24,26,27,29,32-34], and 1 study each focused on medical students [30], pharmacy students [25], nurses [31], and jointly on doctors, nurses, and dietitians [28].

Study Characteristics

A total of 10 studies were conducted in high-income countries: Australia [30], the United States [23,24,26,29,31-33], and the United Kingdom [27,28]. The remaining 2 studies were conducted in middle-income countries, Thailand [25] and Brazil [34].

A total of 6 studies compared digital education with traditional education [25,26,29,30,32,34], 3 compared 2 different methods of digital education [23,27,31], 2 compared blended education with usual education [28,33], and 1 three-arm study compared usual, blended, and digital education [24]. Only 4 studies reported the duration of the intervention, which ranged from an hour to 2 weeks [25,26,30,34].

Various modalities were used to deliver the digital education interventions. A total of 3 studies used a Web-based or online portal [23,27,28], 3 used scenario-based simulation software [24,26,32], and 1 study each used high-fidelity mannequins [31], an online game app on the computer [34], periodic email reminders on the lecture content [33], personal digital assistant–delivered learning materials [29], and a computer-based diabetes management program [25].

All studies except 3 employed clinical scenarios in the digital education intervention [21,24-27,30-32,34]; the remaining 3 studies used text-based learning [28,29,33]. Feedback was provided to participants in the intervention group in 7 studies [21,24,26-28,32,34]. A total of 2 studies comparing different forms of digital education reminded participants to log into the system [21,27], whereas 1 study employed email reminders to consolidate learned knowledge [33]. Half of the studies evaluated interactive digital education interventions [21,24,26,31,32,34].

Comparison interventions also varied: 3 studies used a Web-based system (online portal) for the control group [23,27,31]; 4 compared the digital education intervention with face-to-face education [25,29,33,34]; 1 provided hard copy materials [29]; and 1 reported revision as usual, where participants could access relevant materials available to them [30]. A total of 4 studies focusing on postregistration education did not include any control intervention [24,26,28,32].

A total of 11 studies measured primary outcomes: 6 assessed knowledge with questionnaires [25,27,29,31-33]; 5 assessed skills and competency (measured as a combination of knowledge and skill) [25,28,30,32,34]; 2 assessed learners’ attitudes [27,34]; and 4 assessed learners’ satisfaction [24,26,30,34]. A total of 5 studies measured secondary outcomes: 2 assessed the cost of the intervention [26,28]; 4 assessed patient outcomes (ie, the proportion of patients meeting glycated hemoglobin [23,24,26,28], low-density lipoprotein [23,24,26], and blood pressure control [23,26] goals); and 2 assessed treatment intensification (intensifying the treatment regimen as required) [21,24].

Participant type and content of diabetes education varied widely across the studies and included diabetes management skills for primary care physicians (PCPs) [23,24,26,27,34]; diabetes clinical care for primary care, family, and internal medicine residents [29,32]; communication skills for pediatric doctors, nurses, and dietitians managing patients with type 1 diabetes [28]; clinical endocrinology skills for medical students [30]; primary care residents’ training on hepatitis B vaccination for patients with diabetes [33]; nursing care for hypoglycemic patients [31]; and diabetes management knowledge, communication, and patient note writing skills for pharmacy students [25].

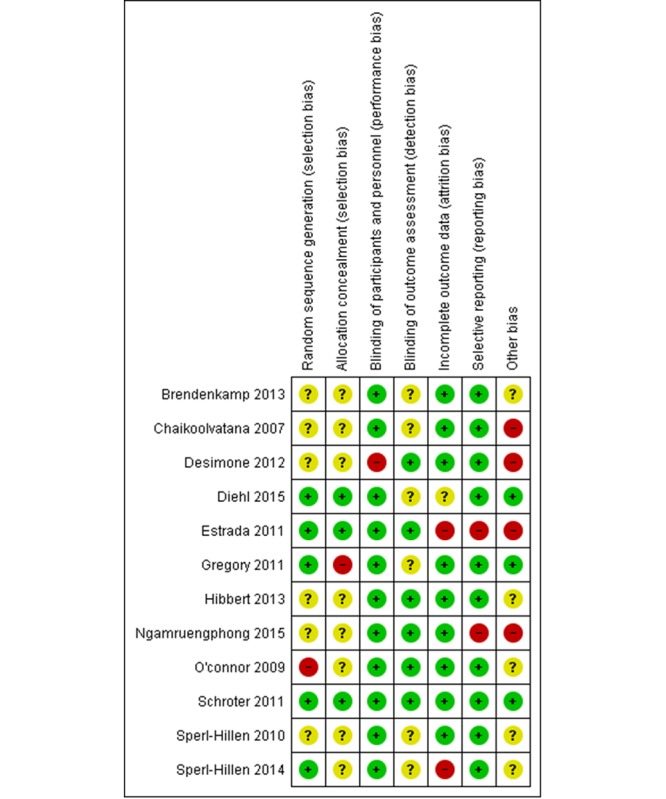

Risk of Bias in the Included Studies

Of the 12 included studies, 7 were judged at a high risk of bias, and 3 had an unclear risk of bias for at least 3 domains. Of the 3 cluster RCTs, 2 were judged at a high risk of bias due to baseline imbalance (Figure 2, Multimedia Appendix 2).

Figure 2.

Risk of bias summary: review authors' judgments about each risk of bias item for each included study. The symbol "+" indicates a low risk of bias, "?" an unclear risk of bias, and "-" a high risk of bias. Crenshaw 2010 and Billue 2012 report the same study as Estrada 2011 and are therefore not presented separately in this figure.

Digital Education Versus Traditional Education

A total of 4 RCTs [25,29,30,34] and 2 cluster RCTs [26,32] compared digital education with traditional education, including no intervention (ie, knowledge acquisition as usual or usual on-the-job training), face-to-face lectures, or hard copy printouts (Table 1). A total of 3 studies measured knowledge outcomes. Of these, 2 compared online virtual simulation with no intervention [32] and computer-based learning with face-to-face lectures [25], and reported moderate-to-large postintervention knowledge gains in the digital education group compared with the control group (Multimedia Appendix 3 and Multimedia Appendix 4). The third study compared learning materials either printed or displayed on a mobile electronic device for medical residents and found no between-group difference in postintervention knowledge scores [29].

Table 1.

Characteristics of the included studies.

| Study, design, and country | Learning modality | Type of participants | Number of sites and participants | Intervention duration | Type of outcome |
|---|---|---|---|---|---|
| Digital education versus traditional education | | | | | |
| Chaikoolvatana 2007 [25]; RCT; Thailand | I: computer-based learning (CBL); C: face-to-face lectures | Final year pharmacy students | I: 43, C: 40 | I: 2 hours; C: two 3-hour sessions (over 2 months) | (1) Knowledge; (2) skills |
| Desimone 2012 [29]; RCT; United States | I: PDA version of education materials; C: printed materials | Internal medicine residents | I: 11, C: 11 | Over 1 month | Knowledge |
| Diehl 2017 [34]; RCT; Brazil | I: online game; C: face-to-face lectures and activities | Primary care physicians | I: 94, C: 76 | 4 hours (over 3 months) | Skills |
| Hibbert 2013 [30]; RCT; Australia | I: training video; C: no intervention (usual revision) | Second year medical students | I: 12, C: 10 | Over 2 weeks | Skills |
| Sperl-Hillen 2010 [26]; cRCT; United States | I: simulation software; C: no intervention | Primary care physicians and their patients | I: 20 sites (1847 patients), C: 21 sites (1570 patients) | 5.5 days (over 6 months) | Patient outcomes; economic impact |
| Sperl-Hillen 2014 [32]; cRCT; United States | I: simulation software; C: no intervention (not assigned learning cases) | Family/internal medicine residents | I: 10 sites (177 residents), C: 9 sites (164 residents) | Over 6 months | Knowledge; skills |
| Blended learning versus traditional education | | | | | |
| Gregory 2011 [28]; cRCT; United Kingdom | I: Web-based intervention and practical workshops; C: no intervention | Pediatric doctors, nurses, psychologists, dietitians, and their patients | I: 13 sites (356 patients), C: 13 sites (333 patients) | Over 12 months | Skills; patient outcomes; economic impact |
| Ngamruengphong 2011 [33]; RCT; United States | I: standard education + 30-min didactic lecture, a pocket card, and monthly email reminders of the lecture content; C: standard residency education | Primary care residents | I: 20, C: 19 | Over 2 months | Knowledge |
| Digital education versus digital education | | | | | |
| Billue 2012 [21], Estrada 2011 [23], and Crenshaw 2010 [22]; RCT; United States | I: Web-based intervention with feedback; C: Web-based intervention without feedback | Family/general/internal medicine physicians | I: 48 physicians (479 patients), C: 47 physicians (466 patients) | Over 2 years | Patient outcomes |
| Brendenkamp 2013 [31]; RCT; United States | I: simulation (high-fidelity mannequin); C: Web-based intervention | Staff nurses | I: 47, C: 49 | Not reported | Knowledge |
| Schroter 2011 [27]; RCT; United Kingdom | I: Web-based learning + Diabetes Needs Assessment Tool (DNAT); C: Web-based learning without DNAT | Diabetes doctors and nurses | I: 499, C: 498 | Over 4 months | Knowledge |
| Blended learning versus digital education versus traditional education | | | | | |
| O'Connor 2009 [24]; RCT; United States | Group A: no intervention; Group B: simulated Web-based learning; Group C: simulated case-based physician learning + physician opinion leader feedback | Primary care physicians and their patients | Group A: 100 physicians, 691 patients; Group B: 100 physicians, 725 patients; Group C: 99 physicians, 604 patients | Not reported | Patient outcomes |

RCT: randomized controlled trial.

I: intervention group.

C: control group.

PDA: personal digital assistant.

cRCT: cluster RCT.

Skills were assessed in 4 studies [25,30,32,34], most of which reported greater effectiveness of digital education interventions (Multimedia Appendix 3 and Multimedia Appendix 4). One study comparing a training video with no intervention (usual revision) for medical students reported significant improvement in lower limb examination (RR: 2.29; 95% CI 1.05-4.99) and diabetes history taking skills (RR: 4.17; 95% CI 1.18-14.77), and no difference in thyroid disease examination [30]. Another study comparing computer-based and face-to-face learning for final year pharmacy students found a moderate improvement in subjective, objective, assessment, and plan (SOAP) note writing skills (SMD 0.78; 95% CI 0.33-1.22) in the digital education group and no difference in patient history taking skills between the groups [25]. The third study, comparing an online virtual case-based simulation with no intervention for medical residents, reported a higher proportion of patients meeting safe treatment goals in 3 of 4 hypothetical simulation cases [32] (Multimedia Appendix 4). The final study compared an online game with face-to-face learning for PCPs and assessed their competency, that is, a combination of factual knowledge and problem-solving skills on insulin therapy for diabetes; it reported a small improvement in the digital education group (SMD: 0.4; 95% CI 0.09-0.70) [34].
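Reported estimates such as those above can be cross-checked against their CIs, because a Wald-type 95% CI is symmetric around the estimate on the natural scale for SMDs and on the log scale for RRs. The sketch below assumes such intervals with z = 1.96; if a primary study used another method (eg, exact or adjusted intervals), small discrepancies are expected. The function names are illustrative only.

```python
import math
from statistics import NormalDist


def estimate_from_ci(lower, upper, ratio=False):
    """Midpoint of a symmetric CI: arithmetic for differences, geometric for ratios."""
    if ratio:
        return math.exp((math.log(lower) + math.log(upper)) / 2)
    return (lower + upper) / 2


def se_from_ci(lower, upper, ratio=False, z=1.96):
    """Standard error implied by a 95% CI (on the log scale for ratios)."""
    if ratio:
        lower, upper = math.log(lower), math.log(upper)
    return (upper - lower) / (2 * z)


def p_from_ci(lower, upper, ratio=False):
    """Two-sided p-value implied by the CI, assuming a normal (Wald) interval."""
    est = estimate_from_ci(lower, upper, ratio)
    centre = math.log(est) if ratio else est
    z = abs(centre) / se_from_ci(lower, upper, ratio)
    return 2 * (1 - NormalDist().cdf(z))


# Values reported in the skills paragraph
print(round(estimate_from_ci(1.05, 4.99, ratio=True), 2))  # prints: 2.29 (reported RR 2.29)
print(round(estimate_from_ci(0.33, 1.22), 3))              # prints: 0.775 (reported SMD 0.78)
```

The recovered midpoints match the published estimates to rounding, which supports reading these intervals as symmetric Wald-type CIs.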

Only 1 study, comparing simulated physician learning software with no intervention, measured patient clinical outcomes and cost [26]. The study reported the mean pre- to postintervention change (95% CI) in glycated hemoglobin, systolic blood pressure, diastolic blood pressure, and low-density lipoprotein levels of patients under the care of participating physicians. Improvements were observed for all measures from baseline to postintervention in both the intervention and control groups. However, between-group comparisons yielded mixed results (Multimedia Appendix 4) [26]. Cost savings of US $71 per patient were reported for the intervention group compared with the control group from the health plan perspective, but the difference was not statistically significant.

Learners’ satisfaction was assessed with self-reported surveys in 3 studies. Only 1 study, which focused on an online game, evaluated satisfaction in both the intervention and control groups, but the use of different questionnaires did not allow between-group comparisons [34]. The same study found significantly better diabetes management– and insulin-related attitudes and beliefs in the intervention group [34]. The remaining 2 studies assessed satisfaction only in the intervention group, and more than 80% of participants were satisfied with the digital intervention [26,30].

Blended Education Versus Traditional Education

One cluster RCT [28] and one RCT [33] compared blended education with traditional education and evaluated knowledge, skills, patients’ glycated hemoglobin levels, and economic impact. The blended education in the RCT comprised standard education plus an additional 30-min didactic lecture, a pocket card, and monthly email reminders on the lecture content. The study reported a large improvement in postintervention knowledge score in the blended education group compared with the control group (SMD: 1.98; 95% CI 1.21-2.74) [33].

The blended learning program of the cluster RCT included Web-based training and practical workshops for behavioral change in pediatric patients with type 1 diabetes, whereas the control group received no intervention [28]. The blended education group had a large improvement in the postintervention communication skills score (SMD: 1.58; 95% CI 0.99-2.17) and a higher proportion of tasks done or partially done in shared agenda setting (RR: 7.49; 95% CI 1.88-29.9) compared with the control group (Multimedia Appendix 3). Differences in the mean total National Health Service cost (direct costs: training; indirect costs: clinic visits) were not statistically significant, although the blended education intervention incurred an additional mean cost of £183.96 per patient. There was no statistically significant difference between the groups in patient outcomes (ie, glycated hemoglobin levels) or patients’ quality of life (Multimedia Appendix 4).

Digital Education Versus Blended Education Versus Traditional Education

One RCT compared digital education, blended education, and traditional education to improve the safety and quality of diabetes care delivered by PCPs [24]. The digital education group received online case-based simulation, and the blended education group additionally received face-to-face feedback from a physician opinion leader. Learners’ satisfaction and patient clinical outcomes (ie, mean change in glycated hemoglobin, blood pressure, and low-density lipoprotein levels, and treatment intensification) were assessed. Over 97% of PCPs who completed the education intervention rated their satisfaction with the digital and blended interventions as excellent or very good after completing the simulated cases. The mean glycated hemoglobin level improved significantly in the digital education group compared with the blended or traditional education group (Multimedia Appendix 4). There was no statistically significant difference across the intervention groups in the remaining patient outcomes (Multimedia Appendix 4).

Digital Education Versus Digital Education

A total of 3 RCTs compared 2 different digital education modalities [21,27,31]. One study compared a high-fidelity simulation mannequin with an online learning system [31]. The other 2 studies, employing the same Web-based (online) system in both groups, evaluated the addition of an interactive learning needs assessment tool [27] or feedback [21] to the intervention group.

Studies reported no significant difference in knowledge, attitudes, or patient outcomes. The study evaluating the use of feedback as part of the digital education intervention reported higher study engagement in the intervention group, as reflected by the total number of pages viewed (SMD: 1.40; 95% CI 0.95-1.85), total number of visits (SMD: 1.38; 95% CI 0.93-1.83), duration of Web access in min (SMD: 1.07; 95% CI 0.64-1.50), and the number of components viewed (SMD: 1.14; 95% CI 0.70-1.57) [21-23].

Discussion

Principal Findings

We found 12 studies evaluating the effectiveness of digital health professions education on diabetes management. Although evidence is limited, heterogeneous, and of low quality, our findings suggest that digital and blended education may improve health care professionals’ knowledge and skills compared with traditional education. However, an improvement in knowledge and skills does not seem to translate into improvements in diabetes care as reflected by little or no difference in sparsely reported patient outcomes in the included studies. Although simulated learning seems to be more effective in improving patient outcomes compared with the other strategies assessed, studies comparing different forms of digital education reported no statistically significant difference between groups.

The inconsistency between the effects on health care professionals’ and patients’ outcomes observed in our review is in line with the existing literature, in which knowledge and skill gains outweigh improvements in patient outcomes [33]. Yet patient outcomes were reported in only 4 diverse studies in this review. The lack of patient-related data is common in digital education studies, possibly owing to the difficulty of measuring patient outcomes, especially for preregistration health care professionals. Furthermore, patient outcomes are potentially affected by contextual factors unrelated to health care professionals’ competence, such as patients’ health beliefs and financial barriers [34]. Finally, the lack of difference between the groups observed in the included studies may simply reflect insufficient statistical power to evaluate patient outcomes.
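The point about statistical power can be made concrete with the usual normal-approximation sample size formula for comparing two proportions, inflated by a design effect when patients are clustered within physicians or practices. The target proportions (40% vs 50% of patients meeting a glycated hemoglobin goal), cluster size, and ICC below are hypothetical values chosen for illustration, not estimates from the included studies; the function names are likewise hypothetical.

```python
import math
from statistics import NormalDist


def n_per_arm(p1, p2, alpha=0.05, power=0.80):
    """Patients per arm to detect p1 vs p2 with a two-sided z-test (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2)


def n_per_arm_clustered(p1, p2, cluster_size, icc, alpha=0.05, power=0.80):
    """Inflate the individually randomized sample size by the design effect."""
    deff = 1 + (cluster_size - 1) * icc
    return math.ceil(n_per_arm(p1, p2, alpha, power) * deff)


print(n_per_arm(0.40, 0.50))                     # prints: 385
print(n_per_arm_clustered(0.40, 0.50, 11, 0.1))  # prints: 770
```

Under these assumptions, detecting a 10-percentage-point difference already requires several hundred patients per arm, and clustering can double that requirement, which helps explain why small trials often cannot detect differences in patient outcomes.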

Although digital education has been present in health professions education for the last 2 decades, its technological development and adoption have accelerated in recent years [35], particularly in high-income countries. Likewise, most studies in our review were published since 2010 and are from high-income countries. Widespread access to digital technology in high-income countries may diminish the effects of digital education interventions in RCTs, given that blinding is not possible and control group participants may interact with intervention group participants or have alternative electronic access to information. Studies on the use of digital education in low- and middle-income countries, where the technological setup and learning infrastructure are more limited, would provide a more comprehensive assessment [36-38]. Although there is a universal need for scalable and high-quality education to build health care professionals’ competencies in diabetes management and care, this is especially important for developing countries facing severe workforce shortages and an increasing burden of chronic disease [5,39,40].

Digital education interventions in this review, although diverse in terms of the mode of delivery, mostly employed clinical scenarios for presentation of educational content. Furthermore, the included digital education interventions were mainly asynchronous and aimed at postregistration health care professionals. Although this digital education format may indeed be optimal for busy clinicians as part of their continuing professional development, there is scope for more research on other digital education formats as well as preregistration health care professionals [38,41,42].

Limitations

There are limitations to the evidence included in this review. First, studies were too heterogeneous to be pooled. Second, many studies were at a high risk of bias, such as selection and attrition bias. Third, satisfaction with digital education interventions may be overestimated owing to heavy reliance on self-reported measures and a disproportionate focus on the intervention group alone. Satisfaction is important to the success of digital education interventions because it affects users’ intention to continue learning through digital means [33]. Therefore, alternative methods, such as the actual time spent on digital learning or in-depth qualitative analyses of perceptions of digital education, should be used to explore satisfaction with digital education interventions. Finally, studies generally did not refer to a learning theory in the intervention design. Digital education presents a new model of learning in which technological and Web-based learning expands and changes the paradigm of usual learning. Furthermore, the complexity of diabetes management may warrant a unique learning pedagogy. Grounding the development of digital education interventions in existing technological or adult learning theories may improve the quality, reporting, and ingenuity of digital education research [34].

Future Research

Digital education is rapidly transforming health professions training and is expected to gain even more prominence in the coming years. It is critical that its adoption and implementation be guided by a robust evidence base. More high-quality, standardized studies are needed from a range of settings, including developing countries, covering all aspects of diabetes management. Future research should also assess the economic impact of digital health professions education interventions on diabetes management to inform their planning, development, and adoption.

Conclusions

Digital education holds the promise of a scalable and affordable approach to health professions education, with particular relevance to developing countries tackling severe shortages of skilled health care staff. In this review, we aimed to determine the effectiveness and cost-effectiveness of digital education for health professionals on diabetes management. We identified 12 studies showing that digital education is well received and seems to improve knowledge and skills scores in health care professionals compared with traditional or usual education. Although digital education seems to be more effective than, or not inferior to, other forms of education on diabetes management, the paucity and low quality of the data prevent us from making recommendations about its adoption. Future studies should assess a range of outcomes using validated and standardized outcome measures in different settings to improve the quality and credibility of the evidence.

Acknowledgments

This review was conducted in collaboration with the World Health Organization, Health Workforce Department. We gratefully acknowledge funding from the eLearning for health professionals’ education grant of the Lee Kong Chian School of Medicine, Nanyang Technological University Singapore, Singapore. WST would like to acknowledge funding from the Singapore National Medical Research Council Research Training Fellowship and the Singapore National Healthcare Group PhD in Population Health Scheme. ZH, SYL, MT, and WO were funded by the NTU research scholarship.

Abbreviations

- PCP: primary care physician
- RCT: randomized controlled trial
- RR: risk ratio
- SMD: standardized mean difference

Supplementary Materials

MEDLINE (Ovid) search strategy.

Forest plots for knowledge and skill outcomes.

Risk of bias assessments.

Detailed characteristics of the included studies.

Footnotes

Authors' Contributions: LTC conceived the idea for the review. ZH and MS conducted study selection and data extraction and wrote and revised the review. LTC provided methodological guidance and critically revised the review. RB provided statistical guidance and comments on the review. SYL, MT, WST, and WO assisted in study selection and data extraction and provided comments on the review. All authors commented on the review and made revisions following the first draft.

Conflicts of Interest: None declared.

References

- 1. International Diabetes Federation. 2017. [2019-02-05]. IDF Diabetes Atlas Eighth Edition https://www.idf.org/e-library/epidemiology-research/diabetes-atlas/134-idf-diabetes-atlas-8th-edition.html

- 2. Byrne JL, Davies MJ, Willaing I, Holt RIG, Carey ME, Daly H, Skovlund S, Peyrot M. Deficiencies in postgraduate training for healthcare professionals who provide diabetes education and support: results from the Diabetes Attitudes, Wishes and Needs (DAWN2) study. Diabet Med. 2017 Feb 13;34(8):1074–83. doi: 10.1111/dme.13334.

- 3. Childs BP. Core competencies in diabetes care: educating health professional students. Diabetes Spectr. 2005 Apr 1;18(2):67–8. doi: 10.2337/diaspect.18.2.67.

- 4. World Health Organization. 2014. [2019-02-05]. A universal truth: no health without a workforce https://www.who.int/workforcealliance/knowledge/resources/GHWA_AUniversalTruthReport.pdf

- 5. George PP, Papachristou N, Belisario JM, Wang W, Wark PA, Cotic Z, Rasmussen K, Sluiter R, Riboli-Sasco E, Tudor CL, Musulanov EM, Molina JA, Heng BH, Zhang Y, Wheeler EL, al Shorbaji N, Majeed A, Car J. Online eLearning for undergraduates in health professions: a systematic review of the impact on knowledge, skills, attitudes and satisfaction. J Glob Health. 2014 Jun;4(1):010406. doi: 10.7189/jogh.04.010406. http://europepmc.org/abstract/MED/24976965

- 6. Rider B, Lier S, Johnson T, Hu D. Interactive web-based learning: translating health policy into improved diabetes care. Am J Prev Med. 2016;50(1):122–8. doi: 10.1016/j.amepre.2015.07.038.

- 7. Wood EH. MEDLINE: the options for health professionals. J Am Med Inform Assoc. 1994;1(5):372–80. doi: 10.1136/jamia.1994.95153425.

- 8. Årsand E, Frøisland DH, Skrøvseth SO, Chomutare T, Tatara N, Hartvigsen G, Tufano JT. Mobile health applications to assist patients with diabetes: lessons learned and design implications. J Diabetes Sci Technol. 2012 Sep 1;6(5):1197–206. doi: 10.1177/193229681200600525. http://europepmc.org/abstract/MED/23063047

- 9. Cooper H, Cooper J, Milton B. Technology-based approaches to patient education for young people living with diabetes: a systematic literature review. Pediatr Diabetes. 2009;10(7):a. doi: 10.1111/j.1399-5448.2009.00509.x.

- 10. Hou C, Carter B, Hewitt J, Francisa T, Mayor S. Do mobile phone applications improve glycemic control (HbA1c) in the self-management of diabetes? A systematic review, meta-analysis, and GRADE of 14 randomized trials. Diabetes Care. 2016 Nov;39(11):2089–95. doi: 10.2337/dc16-0346.

- 11. Jackson CL, Bolen S, Brancati FL, Batts-Turner ML, Gary TL. A systematic review of interactive computer-assisted technology in diabetes care. Interactive information technology in diabetes care. J Gen Intern Med. 2006 Mar 8;21(2):105–10. doi: 10.1111/j.1525-1497.2005.00310.x. http://onlinelibrary.wiley.com/resolve/openurl?genre=article&sid=nlm:pubmed&issn=0884-8734&date=2006&volume=21&issue=2&spage=105

- 12. Higgins JP, Green S. Iran University of Medical Sciences. 2011. Oct 18, [2019-01-30]. Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 http://crtha.iums.ac.ir/files/crtha/files/cochrane.pdf

- 13. Shamseer L, Moher D, Clarke M, Ghersi D, Liberati A, Petticrew M, Shekelle P, Stewart LA. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015: elaboration and explanation. Br Med J. 2015 Jan 2;349:g7647. doi: 10.1136/bmj.g7647. http://www.bmj.com/cgi/pmidlookup?view=long&pmid=25555855

- 14. Car J, Carlstedt-Duke J, Car LT, Posadzki P, Whiting P, Zary N, Atun R, Majeed A, Campbell J. Digital education for health professions: methods for overarching evidence syntheses. J Med Internet Res. 2019:1–36. doi: 10.2196/12913. Accepted but not published at the point of citation (forthcoming).

- 15. Posadzki P, Car L, Jarbrink K, Bajpai R, Semwal M, Kyaw B. The EQUATOR Network. 2017. [2018-11-29]. Protocol for development of the Standards for Reporting of Digital Health Education Intervention Trials (STEDI) statement http://www.equator-network.org/wp-content/uploads/2018/01/STEDI-Statement-Protocol-updated-Jan-2018.pdf

- 16. UNESCO Institute for Statistics. National Center for Educational Quality Enhancement. 2014. [2018-11-29]. ISCED Fields of Education and Training 2013 (ISCED-F 2013): manual to accompany the International Standard Classification of Education https://eqe.ge/res/docs/228085e.pdf

- 17. Higgins JPT, Altman DG, Gotzsche PC, Juni P, Moher D, Oxman AD, Savovic J, Schulz KF, Weeks L, Sterne JA. The Cochrane Collaboration's tool for assessing risk of bias in randomised trials. Br Med J. 2011 Oct 18;343:d5928. doi: 10.1136/bmj.d5928. http://europepmc.org/abstract/MED/22008217

- 18. Miller GE. The assessment of clinical skills/competence/performance. Acad Med. 1990 Sep;65(9 Suppl):S63–7. doi: 10.1097/00001888-199009000-00045.

- 19. Guyatt GH, Thorlund K, Oxman AD, Walter SD, Patrick D, Furukawa TA, Johnston BC, Karanicolas P, Akl EA, Vist G, Kunz R, Brozek J, Kupper LL, Martin SL, Meerpohl JJ, Alonso-Coello P, Christensen R, Schunemann HJ. GRADE guidelines: 13. Preparing summary of findings tables and evidence profiles-continuous outcomes. J Clin Epidemiol. 2013 Feb;66(2):173–83. doi: 10.1016/j.jclinepi.2012.08.001.

- 20. Leucht S, Kissling W, Davis JM. How to read and understand and use systematic reviews and meta-analyses. Acta Psychiatr Scand. 2009 Jun;119(6):443–50. doi: 10.1111/j.1600-0447.2009.01388.x.

- 21. Billue K, Safford M, Salanitro A, Houston T, Curry W, Kim Y, Allison JJ, Estrada CA. Medication intensification in diabetes in rural primary care: a cluster-randomised effectiveness trial. BMJ Open. 2012;2(5):e000959. doi: 10.1136/bmjopen-2012-000959. http://bmjopen.bmj.com/cgi/pmidlookup?view=long&pmid=22991217

- 22. Crenshaw K, Curry W, Salanitro A, Safford M, Houston T, Allison J. Is physician engagement with Web-based CME associated with patients' baseline hemoglobin A1c levels? The Rural Diabetes Online Care study. Acad Med. 2010 Sep;85(9):1511–7. doi: 10.1097/ACM.0b013e3181eac036.

- 23. Estrada CA, Safford MM, Salanitro AH, Houston TK, Curry W, Williams JH, Ovalle F, Kim Y, Foster P, Allison JJ. A web-based diabetes intervention for physician: a cluster-randomized effectiveness trial. Int J Qual Health Care. 2011 Dec;23(6):682–9. doi: 10.1093/intqhc/mzr053. http://europepmc.org/abstract/MED/21831967

- 24. O'Connor P, Sperl-Hillen J, Johnson P, Rush W, Asche S, Dutta P, Biltz GR. Simulated physician learning intervention to improve safety and quality of diabetes care: a randomized trial. Diabetes Care. 2009 Apr;32(4):585–90. doi: 10.2337/dc08-0944. http://europepmc.org/abstract/MED/19171723

- 25. Chaikoolvatana A, Haddawy P. Evaluation of the effectiveness of a computer-based learning (CBL) program in diabetes management. J Med Assoc Thai. 2007 Jul;90(7):1430–4.

- 26. Sperl-Hillen JM, O'Connor PJ, Rush WA, Johnson PE, Gilmer T, Biltz G, Asche SE, Ekstrom HL. Simulated physician learning program improves glucose control in adults with diabetes. Diabetes Care. 2010 Aug;33(8):1727–33. doi: 10.2337/dc10-0439. http://europepmc.org/abstract/MED/20668151

- 27. Schroter S, Jenkins RD, Playle RA, Walsh KM, Probert C, Kellner T, Arnhofer G, Owens DR. Evaluation of an online interactive Diabetes Needs Assessment Tool (DNAT) versus online self-directed learning: a randomised controlled trial. BMC Med Educ. 2011 Jun 16;11:35. doi: 10.1186/1472-6920-11-35. https://bmcmededuc.biomedcentral.com/articles/10.1186/1472-6920-11-35

- 28. Gregory J, Robling M, Bennert K, Channon S, Cohen D, Crowne E. Development and evaluation by a cluster randomised trial of a psychosocial intervention in children and teenagers experiencing diabetes: the DEPICTED study. Health Technol Assess. 2011 Aug;15(29):1–202. doi: 10.3310/hta15290.

- 29. Desimone ME, Blank GE, Virji M, Donihi A, DiNardo M, Simak DM, Buranosky R, Korytkowski MT. Effect of an educational Inpatient Diabetes Management Program on medical resident knowledge and measures of glycemic control: a randomized controlled trial. Endocr Pract. 2012 Mar;18(2):238–49. doi: 10.4158/EP11277.OR.

- 30. Hibbert EJ, Lambert T, Carter JN, Learoyd D, Twigg S, Clarke S. A randomized controlled pilot trial comparing the impact of access to clinical endocrinology video demonstrations with access to usual revision resources on medical student performance of clinical endocrinology skills. BMC Med Educ. 2013 Oct 3;13:135. doi: 10.1186/1472-6920-13-135.

- 31. Bredenkamp N. University of Northern Colorado PhD Dissertation. Denver: University of Northern Colorado; 2013. May, Simulation versus online learning: effects on knowledge acquisition, knowledge retention, and perceived effectiveness; p. E.

- 32. Sperl-Hillen J, O'Connor PJ, Ekstrom HL, Rush WA, Asche SE, Fernandes OD, Appana D, Amundson GH, Johnson PE, Curran DM. Educating resident physicians using virtual case-based simulation improves diabetes management: a randomized controlled trial. Acad Med. 2014 Dec;89(12):1664–73. doi: 10.1097/ACM.0000000000000406. http://Insights.ovid.com/pubmed?pmid=25006707

- 33. Ngamruengphong S, Horsley-Silva JL, Hines SL, Pungpapong S, Patel TC, Keaveny AP. Educational intervention in primary care residents' knowledge and performance of hepatitis B vaccination in patients with diabetes mellitus. South Med J. 2015 Sep 9;108(9):510–5. doi: 10.14423/SMJ.0000000000000334.

- 34. Diehl LA, Souza RM, Gordan PA, Esteves RZ, Coelho IC. InsuOnline, An electronic game for medical education on insulin therapy: a randomized controlled trial with primary care physicians. J Med Internet Res. 2017 Dec 9;19(3):e72. doi: 10.2196/jmir.6944. http://www.jmir.org/2017/3/e72/

- 35. Lee MC. Explaining and predicting users’ continuance intention toward e-learning: an extension of the expectation–confirmation model. Comput Educ. 2010 Feb;54(2):506–16. doi: 10.1016/j.compedu.2009.09.002.

- 36. Andrade J, Ares J, García R, Rodríguez S, Seoane M, Suárez S. Guidelines for the development of e-learning systems by means of proactive questions. Comput Educ. 2008 Dec;51(4):1510–22. doi: 10.1016/j.compedu.2008.02.002.

- 37. Walsh K. The future of e-learning in healthcare professional education: some possible directions. Commentary. Ann Ist Super Sanita. 2014;50(4):309–10. doi: 10.4415/ANN_14_04_02. http://www.iss.it/publ/anna/2014/4/504309.pdf

- 38. Scott R, Mars M, Hebert M. How global is ‘e-Health’ and ‘Knowledge Translation’? In: Ho K, Jarvis-Selinger S, Novak Lauscher H, Cordeiro J, Scott R, editors. Technology Enabled Knowledge Translation for eHealth. New York, NY: Springer New York; 2012. pp. 339–57.

- 39. Al-Shorbaji N, Atun R, Car J, Majeed A, Wheeler E. World Health Organization. 2015. [2019-01-30]. eLearning for undergraduate health professional education: a systematic review informing a radical transformation of health workforce development https://www.who.int/hrh/documents/14126-eLearningReport.pdf

- 40. Hernández-Torrano D, Ali S. First year medical students' learning style preferences and their correlation with performance in different subjects within the medical course. BMC Med Educ. 2017 Aug;17(1):131. doi: 10.1186/s12909-017-0965-5.

- 41. Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Instructional design variations in internet-based learning for health professions education: a systematic review and meta-analysis. Acad Med. 2010 May;85(5):909–22. doi: 10.1097/ACM.0b013e3181d6c319.

- 42. Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Internet-based learning in the health professions: a meta-analysis. J Am Med Assoc. 2008 Sep 10;300(10):1181–96. doi: 10.1001/jama.300.10.1181.
