Abstract

Brain activity patterns exhibited during task performance have been shown to spontaneously reemerge in the following restful awake state. Such “awake reactivation” has been observed across higher-order cortex for complex images or associations. However, it is still unclear whether the reactivation extends to primary sensory areas that encode simple stimulus features. To address this question, we trained human subjects of both sexes on a particular visual feature (Gabor orientation) and tested whether this feature would be reactivated immediately after training. We found robust reactivation in human V1 that lasted for at least 8 min after training offset. This effect was not present in higher retinotopic areas, such as V2, V3, V3A, or V4v. Further analyses suggested that the amount of awake reactivation was related to the amount of performance improvement on the visual task. These results demonstrate that awake reactivation extends beyond higher-order areas and also occurs in early sensory cortex.

SIGNIFICANCE STATEMENT How do we acquire new memories and skills? New information is known to be consolidated during offline periods of rest. Recent studies suggest that a critical process during this period of consolidation is the spontaneous reactivation of previously experienced patterns of neural activity. However, research in humans has mostly examined such reactivation processes in higher-order cortex. Here we show that awake reactivation occurs even in the primary visual cortex V1 and that this reactivation is related to the amount of behavioral learning. These results pinpoint awake reactivation as a process that likely occurs across the entire human brain and could play an integral role in memory consolidation.

Keywords: awake reactivation, fMRI, learning, perceptual decision making, V1

Introduction

Understanding how the human brain learns is one of the central goals in neuroscience. A growing body of literature demonstrates that new learning becomes consolidated during offline states (McGaugh, 2000; Diekelmann and Born, 2010; Sasaki et al., 2010). Studies in animals suggest that offline memory consolidation may be in part accomplished via spontaneous memory reactivation, that is, the reemergence of the brain activity patterns observed during task performance (Foster, 2017; Pfeiffer, 2017). This spontaneous reactivation occurs during sleep but also during restful wakefulness, in which case it is referred to as awake reactivation.

Recent studies have shown that memory reactivation can be detected in human subjects using fMRI. This line of research has found evidence for awake reactivation in the medial temporal lobe (Staresina et al., 2013; Tambini and Davachi, 2013) and in higher-order cortical areas (Deuker et al., 2013; Schlichting and Preston, 2014; Guidotti et al., 2015; Chelaru et al., 2016; de Voogd et al., 2016).

However, previous research could not determine what specific training components were reactivated. Indeed, some previous studies used associative learning between different categories (Deuker et al., 2013; Staresina et al., 2013; Tambini and Davachi, 2013; Schlichting and Preston, 2014). The use of associative learning makes it difficult to disentangle brain processes related to the coding of each stimulus from the brain processes related to the binding of different stimuli (Deuker et al., 2013). The studies that did not use associative learning used relatively complex visual stimuli consisting of a large number of individual features (Deuker et al., 2013; Staresina et al., 2013; Tambini and Davachi, 2013; Schlichting and Preston, 2014; Guidotti et al., 2015; Chelaru et al., 2016; de Voogd et al., 2016). Further, these studies observed awake reactivation in higher-order brain regions that have mixed selectivity to features (Huth et al., 2016), making it difficult to infer what specific features are reactivated.

Isolating a specific feature that is reactivated is critical for at least two reasons. First, it will demonstrate the limits of the awake reactivation phenomenon in humans. Does reactivation in the human brain occur only at the level of complete objects and can therefore be observed only in higher-level cortex? Or is reactivation a phenomenon that also occurs at the level of individual stimulus features and can be observed even in primary sensory areas? Second, providing evidence for awake reactivation of specific features will link the studies in humans even more tightly to the phenomenon of neuronal replay observed in animals where replay is typically demonstrated for relatively more specific stimuli, such as trajectory through space (Foster and Wilson, 2006; Diba and Buzsáki, 2007; Davidson et al., 2009; Carr et al., 2011) or placement on the screen (Han et al., 2008).

To find evidence for the existence of feature-specific awake reactivation, we focused on the primary visual cortex (V1), which is known to code stimulus orientation (Yacoub et al., 2008), to show changes after visual training (Sasaki et al., 2010; Rosenthal et al., 2016), and to be involved in learning and memory for visual information (Karanian and Slotnick, 2018; Rosenthal et al., 2018). We used oriented Gabor patches rather than complex stimuli for training and examined whether the trained orientation is reactivated in V1 after extensive visual training. Our results showed that, shortly after visual training, the activity patterns of V1 were more likely to be classified as the trained orientation than as the untrained orientation. However, higher retinotopic areas, such as V2, V3, V3A, or ventral V4 (V4v), did not show this effect. Further analyses suggested that a greater amount of awake reactivation in V1 was associated with greater learning for the trained stimulus. These findings demonstrate that feature-specific awake reactivation takes place after visual training in the primary visual cortex, and suggest that reactivation may occur in both association and primary sensory cortices.

Materials and Methods

Participants.

Twelve healthy subjects (19–25 years old, 7 females) with normal or corrected-to-normal vision participated in this study. Subjects were screened for a history of neurological or psychiatric disorders, as well as for any contraindications to MRI. All subjects provided demographic information and written informed consent. The study was approved by the Institutional Review Board of Georgia Institute of Technology. All experiments were performed during daytime. All 12 subjects' data were included in the analysis. The sample size was determined based on similar fMRI experiments on visual learning (Guidotti et al., 2015; Shibata et al., 2016).

Task.

Subjects completed a 2-interval-forced-choice (2IFC; see Fig. 1A) orientation detection task where they indicated which of two intervals contained a Gabor patch. The Gabor patch (contrast = 100%, spatial frequency = 1 cycle/degree, Gaussian filter σ = 2.5 degrees, random spatial phase) was presented within an annulus subtending 0.75°–5° and was masked by noise at a given signal-to-noise (S/N) ratio. For an S/N ratio of X%, (100 − X)% of the pixels in the Gabor patch were replaced with random noise. Subjects were always presented with a noisy Gabor patch during one interval and with pure noise (0% S/N ratio) during the other interval. The interval in which the Gabor patch was presented was determined randomly on every trial.
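The pixel-replacement scheme described above can be illustrated with a short Python sketch. This is not the stimulus code used in the study (the stimuli were generated in MATLAB with Psychophysics Toolbox). The function name `noisy_gabor`, the image size, the pixel density, and the use of uniformly distributed noise are assumptions made only for illustration, and the annulus mask is omitted.

```python
import numpy as np

def noisy_gabor(size_px=200, px_per_deg=20.0, orientation_deg=45.0,
                sf_cpd=1.0, sigma_deg=2.5, snr_percent=25.0, rng=None):
    """Return a Gabor image in [0, 1] in which (100 - snr_percent)% of the
    pixels are replaced with random noise (hypothetical helper)."""
    rng = np.random.default_rng() if rng is None else rng
    # Pixel coordinates in degrees of visual angle, centered on the image.
    coords = (np.arange(size_px) - size_px / 2.0 + 0.5) / px_per_deg
    x, y = np.meshgrid(coords, coords)
    theta = np.deg2rad(orientation_deg)
    phase = rng.uniform(0.0, 2.0 * np.pi)  # random spatial phase
    # Full-contrast sinusoidal carrier under a Gaussian envelope.
    carrier = np.cos(2.0 * np.pi * sf_cpd
                     * (x * np.cos(theta) + y * np.sin(theta)) + phase)
    envelope = np.exp(-(x ** 2 + y ** 2) / (2.0 * sigma_deg ** 2))
    image = (0.5 + 0.5 * carrier * envelope).ravel()
    # Replace a fixed fraction of pixels with noise (assumed uniform here).
    n_noise = int(round(image.size * (100.0 - snr_percent) / 100.0))
    if n_noise > 0:
        idx = rng.choice(image.size, size=n_noise, replace=False)
        image[idx] = rng.uniform(0.0, 1.0, size=n_noise)
    return image.reshape(size_px, size_px)
```

With `snr_percent=0.0`, every pixel is replaced, which corresponds to the pure-noise interval.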

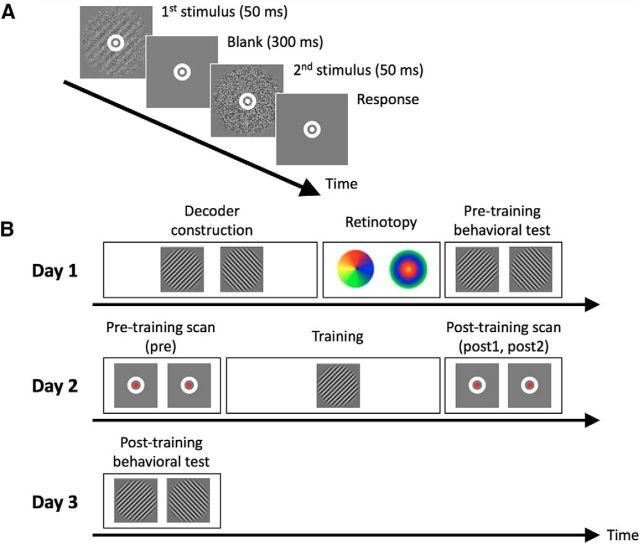

Figure 1.

Task and experimental procedure. A, Subjects performed a 2IFC orientation detection task during pretraining and post-training behavioral tests, as well as the training on day 2. During the task, subjects indicated whether a Gabor patch appeared in the first or second interval. B, The experiment consisted of 3 d. In the critical day 2, visual training (∼40 min on average) was preceded by two pretraining scans (5 min/scan; combined into a single “pre” baseline) and followed by two post-training scans (post1 and post2). During the pretraining and post-training scans, subjects detected a color change in a small fixation dot (see Materials and Methods). The main experimental question was whether the trained stimulus can be decoded in the post-training scan. Decoder construction (∼50 min) and retinotopy (∼20 min) scans were collected on day 1. Pretraining and post-training behavioral tests were conducted on days 1 and 3 to confirm that training improved task performance. (See Figure 1-1).

Each trial began with a 500 ms fixation period, followed by two stimulus intervals of 50 ms each. The two intervals were separated by a 300 ms blank period. Subjects were asked to fix their eyes on a white bull's-eye on a gray disc (0.75° radius) at the center of the screen for the entire duration of the task. Subjects indicated the interval in which the Gabor patch appeared by pressing a button on a keypad. There was no time limit for the response, and no feedback was provided.

Procedures.

The study took place over 3 d (see Fig. 1B). Day 1 consisted of decoder construction, retinotopy, and a pretraining behavioral test. Day 2 consisted of training on the visual task preceded and followed by pretraining and post-training scans. Finally, day 3 consisted of a single post-training behavioral test. Days 1 and 2 were separated by multiple days, whereas day 3 always immediately followed day 2.

The training, as well as the pretraining and post-training behavioral tests, consisted of individual blocks. Within each block, we controlled the difficulty of the task by adjusting the S/N ratio using a 2-down-1-up staircase procedure. Each block started with stimuli at 25% S/N ratio and terminated after 10 reversals of the staircase. Each block lasted ∼1–2 min, contained 30–40 trials, and presented stimuli with just one orientation (45° or 135°).

During the training, subjects completed 16 blocks in total lasting ∼40 min. All 16 blocks consisted of a single orientation (the “trained” orientation). The training was performed in the MR scanner.

The pretraining and post-training behavioral tests were performed to obtain each subject's threshold S/N ratio for each Gabor orientation. The order of presentation of the two orientations was randomized for each test and each subject. Subjects performed the pretraining and post-training behavioral tests in a mock scanner located immediately adjacent to the scanner room. Following previous experiments (Bang et al., 2018a,b; Shibata et al., 2017), we computed the threshold S/N ratio as the geometric mean of the S/N ratios for the last 6 reversals in a block.
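As a minimal sketch of the threshold computation described above, the geometric mean of the last six reversal values can be computed as follows. The function name `threshold_from_reversals` and the input format (a list of S/N ratios recorded at each reversal) are assumptions for illustration.

```python
import numpy as np

def threshold_from_reversals(reversal_snrs, n_last=6):
    """Geometric mean of the S/N ratios at the last n_last staircase reversals."""
    values = np.asarray(reversal_snrs, dtype=float)
    if values.size < n_last:
        raise ValueError("not enough reversals to compute a threshold")
    if np.any(values <= 0):
        raise ValueError("S/N ratios must be positive for a geometric mean")
    last = values[-n_last:]
    # The geometric mean is the exponential of the mean log value.
    return float(np.exp(np.mean(np.log(last))))
```

A geometric rather than arithmetic mean weights proportional changes in S/N ratio equally, which is a common choice for staircase thresholds.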

Decoder construction.

The purpose of the decoder construction stage was to obtain each subject's BOLD signal patterns corresponding to each of the two Gabor orientations (45° and 135°). These patterns were used to construct a decoder that distinguishes between the two Gabor orientations.

During the decoder construction scan, the subjects performed 10 runs of a frequency detection task (for details of the task, see below) inside the MR scanner. Each run consisted of 18 trials of 16 s each, in addition to two 6 s fixation periods at the beginning and the end of the run (each run lasted a total of 300 s). Each trial had two parts: a 12 s stimulus presentation period followed by a 4 s response period.

During the 12 s stimulus presentation period, 12 Gabor patches were presented at a rate of 1 Hz. Each Gabor patch was presented for 500 ms, followed by a 500 ms blank period before the next Gabor stimulus. All 12 Gabor patches had one specific orientation (45° or 135°) chosen randomly on each trial. In half of the 18 trials, 1 of the 12 Gabor patches had a slightly higher spatial frequency than the other patches. In the other half of the 18 trials, all 12 Gabor patches had the same spatial frequency. After the end of the 12 s stimulus presentation period, subjects indicated whether a Gabor patch with a different spatial frequency had been presented. All Gabor patches were displayed with 50% S/N (all other parameters were equivalent to the parameters used for the 2IFC task). At the beginning of the 12 s stimulus presentation period, the bull's-eye at the center of the screen changed its color from white to green to indicate that the stimulus presentation period had started. The color of the fixation point remained green throughout the 12 s stimulus presentation period and changed to white again when the response period started.

We controlled the difficulty of the frequency detection task using an adaptive staircase method. The spatial frequency difference was initially set to 0.24 cycles/degree in the first run; it decreased by 0.02 cycles/degree after a hit and increased by 0.02 cycles/degree after an error. After a correct rejection, the difference did not change. Each subsequent run started from the last spatial frequency difference experienced by the subject in the preceding run.
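The update rule of this staircase can be sketched in a few lines of Python. The function name `update_frequency_delta` is hypothetical. Because correct rejections are listed separately, the sketch assumes that an "error" covers both misses and false alarms, and it adds a lower bound of zero that the text does not specify.

```python
def update_frequency_delta(delta, target_present, said_present,
                           step=0.02, floor=0.0):
    """Return the spatial frequency difference (cycles/degree) for the next trial."""
    hit = target_present and said_present
    correct_rejection = (not target_present) and (not said_present)
    if hit:
        delta -= step          # make the task harder after a hit
    elif not correct_rejection:
        delta += step          # miss or false alarm: make the task easier
    # A correct rejection leaves the difference unchanged.
    return max(delta, floor)   # the floor is an assumption, not from the text
```

Starting from 0.24 cycles/degree, a hit would lower the difference to 0.22, whereas a miss would raise it to 0.26.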

Pretraining and post-training scans.

We recorded each subject's BOLD activity before and after the visual training. In each case, we collected two 5 min scans. The two pretraining scans were combined in the analyses because we did not expect any difference between them. Rather, they served to establish a baseline against which we could compare the decoder performance in the post-training scan. The two post-training scans (post1 and post2) were analyzed separately to gain insight into the awake reactivation time course.

To discourage subjects from consciously imagining the trained orientation, we required them to perform a fixation task during the pretraining and post-training scans. The fixation task was designed so that the parts of the visual cortex corresponding to the trained stimulus were not prevented from engaging in awake reactivation. This was achieved by making the target in the fixation task small and spatially nonoverlapping with the trained stimulus. Subjects detected a color change of the center dot of the white bull's-eye (size and location of the white bull's-eye were the same as in the 2IFC task). The center dot changed color from white ([R, G, B] = [255, 255, 255]) to faint pink ([R, G, B] = [255, 255 − x, 255 − x]) for 1.5 s and returned to white. Subjects had to press a button during this 1.5 s period. After the center dot returned to white, the next color change occurred after a random interval between 0.5 and 1 s (the average interval was 0.76 s). Each 5 min pretraining and post-training scan contained on average 133 color changes. Initially, the color change x was set to 40 and was then controlled by a 2-down-1-up staircase procedure with a step size of 2.
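The color-change staircase of the fixation task can be sketched as follows. This is an illustration only: the class name `TwoDownOneUp` and the clipping bounds are assumptions, and the sketch follows the conventional reading of a 2-down-1-up rule in which two consecutive detections make the change fainter and a single failure makes it stronger.

```python
class TwoDownOneUp:
    """Minimal 2-down-1-up staircase for the color change x (hypothetical helper)."""

    def __init__(self, start=40, step=2, minimum=0, maximum=255):
        self.level = start
        self.step = step
        self.minimum = minimum   # bounds are assumptions, not from the text
        self.maximum = maximum
        self._streak = 0         # number of consecutive detections

    def update(self, detected):
        """Update x after one color change and return the new value."""
        if detected:
            self._streak += 1
            if self._streak == 2:
                self.level = max(self.minimum, self.level - self.step)
                self._streak = 0
        else:
            self._streak = 0
            self.level = min(self.maximum, self.level + self.step)
        return self.level

    def pink_rgb(self):
        """RGB triplet of the faint pink target: [255, 255 - x, 255 - x]."""
        return (255, 255 - self.level, 255 - self.level)
```

Under this rule, x drifts downward from its easily noticeable starting value until detections and failures balance near each subject's threshold.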

To further verify that subjects did not engage in conscious rehearsal, we administered a postexperiment questionnaire. The questionnaire consisted of two questions and an open-ended prompt: (1) “Did you imagine anything during the fixation task?” (2) “Did you imagine any orientation during the fixation task?” and (3) “In your own words, describe your experience during the fixation task.” All subjects responded in the negative to the first two questions and no subject mentioned anything about the trained or untrained stimuli in the open-ended prompt, thus suggesting that subjects did not engage in conscious rehearsal of the trained stimulus.

To confirm that the subjects' performance during the fixation task remained at a similar level over time (during the pre, post1, and post2 scans), we conducted a one-way repeated-measures ANOVA on the accuracy rate with a factor of time (pre vs post1 vs post2). We found no significant main effect of time (F(2,22) = 2.045, Huynh–Feldt correction, ε = 0.724, p = 0.169), suggesting that subjects' performance was relatively constant over time. This conclusion was further supported by direct comparisons between pre and post1 (t(11) = −1.581, p = 0.142, paired t test) and pre and post2 (t(11) = −1.185, p = 0.261, paired t test).

MRI data acquisition.

Subjects were scanned in a Siemens 3T Trio MR scanner using a 12-channel head coil. For the anatomical reconstruction, high-resolution T1-weighted MR images were acquired using a multiecho MPRAGE (256 slices, voxel size = 1 × 1 × 1 mm³, TR = 2530 ms, FOV = 256 mm). Functional MR images were acquired using gradient echo EPI sequences (voxel size = 3 × 3 × 3.5 mm³, TR = 2000 ms, TE = 30 ms, flip angle = 79°). Thirty-three contiguous slices were positioned parallel to the AC-PC plane to cover the whole brain.

Retinotopy and ROI selection.

We defined the retinotopically organized areas V1, V2, V3, V3A, and ventral V4 (V4v) using standard retinotopic methods (Sereno et al., 1995; Tootell et al., 1997). Briefly, we presented a flickering checkerboard pattern at the vertical/horizontal meridians and in the upper/lower visual fields. Furthermore, we presented an annulus stimulus to delineate the retinotopic regions in each visual area corresponding to the visual fields stimulated by the Gabor patch. The stimulus was a flickering checkerboard pattern within an annulus subtending 0.75°–5° from the center of the screen, which is identical to the size of the Gabor patch used in the rest of the experiment. Only the voxels activated by the annulus stimulus were included in the main analyses.

To localize the retinotopic areas V1, V2, V3, V3A, and V4v, we performed a conventional amplitude analysis where we delineated areas V1, V2, V3, V3A, and V4v using the contrast maps for vertical/horizontal meridians and upper/lower visual fields (Sereno et al., 1995; Tootell et al., 1997). We then localized the subregions in each visual area corresponding to the part of the visual field occupied by the Gabor patch by contrasting the annulus ON and annulus OFF conditions.

In addition to the retinotopically defined areas in the visual cortex, we analyzed 12 anatomical ROIs defined using Freesurfer as part of the cortical reconstruction: superior, middle, and inferior frontal cortex; orbitofrontal cortex; precentral, postcentral, and paracentral cortex; superior and inferior parietal cortex; and superior, middle, and inferior temporal cortex.

fMRI data analysis.

We analyzed the brain data using Freesurfer software (RRID:SCR_001847; http://surfer.nmr.mgh.harvard.edu/). Because we obtained each subject's brain data on separate days (days 1 and 2), we processed the structural images from two different days with the longitudinal stream (Reuter et al., 2012), which is known to create an unbiased within-subject structural template using robust, inverse consistent registration. We registered the functional data from the two different days' scans to the individual structural template that was created via the longitudinal stream using rigid-body transformations. A gray matter mask was used for extracting BOLD signals from voxels located within the gray matter. Functional data were realigned as part of preprocessing, but no spatial or temporal smoothing was applied.

We extracted the BOLD time courses from each voxel using MATLAB (RRID:SCR_001622; The MathWorks). We shifted the BOLD signals by 6 s to account for the hemodynamic delay and removed voxels that showed spikes >10 SDs from the mean during decoder construction scans. We further removed linear trends by fitting a first-order polynomial to each voxel's time course. Then, within each run, we normalized (z scored) each voxel's BOLD time course to minimize the baseline changes across runs. To create the data samples for decoding, we averaged the BOLD signals across 6 volumes (12 s), corresponding to the duration of the stimulus presentation period in the decoder construction.
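The preprocessing steps above can be sketched in Python with NumPy. The original analysis was done in MATLAB, so this is an illustration under stated assumptions rather than the authors' code: the function names are hypothetical, the spike-based voxel exclusion is omitted, and the 6 s shift is implemented by dropping the first three volumes (TR = 2 s) so that each volume lines up with the stimulus time that evoked it.

```python
import numpy as np

def preprocess_run(bold, shift_s=6.0, tr_s=2.0):
    """Shift, detrend, and z-score one run.

    bold: array of shape (n_volumes, n_voxels).
    """
    bold = np.asarray(bold, dtype=float)
    shift = int(round(shift_s / tr_s))
    shifted = bold[shift:]                      # account for hemodynamic delay
    # Fit and remove a first-order polynomial (slope + intercept) per voxel.
    t = np.arange(shifted.shape[0], dtype=float)
    design = np.column_stack([t, np.ones_like(t)])
    coef, *_ = np.linalg.lstsq(design, shifted, rcond=None)
    detrended = shifted - design @ coef
    # z-score each voxel within the run.
    sd = detrended.std(axis=0)
    sd[sd == 0] = 1.0                           # avoid division by zero
    return (detrended - detrended.mean(axis=0)) / sd

def average_trials(data, onsets, n_volumes=6):
    """Average n_volumes consecutive volumes starting at each trial onset."""
    return np.stack([data[o:o + n_volumes].mean(axis=0) for o in onsets])
```

For a 300 s decoder construction run (150 volumes, a 6 s initial fixation period, and 16 s trials), the stimulus onsets would fall at volume indices 3, 11, 19, and so on, giving 18 samples per run.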

We selected relevant voxels using sparse logistic regression (Yamashita et al., 2008) as implemented in the sparse logistic regression toolbox. Sparse logistic regression selects relevant voxels in the ROIs automatically while estimating their weight parameters for classification. We selected the voxels within the subregions of V1, V2, V3, V3A, and V4v corresponding to the Gabor stimuli as the input voxels. Then we trained the decoder to classify the BOLD patterns as either the Gabor stimulus with 45° or 135° orientation using all 180 data samples from all 10 runs in the decoder construction scan (90 samples for each orientation).

Before applying the decoder to the pretraining and post-training scans, we checked the validity of the decoder. Because of the known difficulties in assessing decoder validity (Varoquaux et al., 2017; Varoquaux, 2018), we performed two different cross-validation procedures on the data from the decoder construction scan. These cross-validations did not result in different decoders for the data from the pretraining and post-training scans; those scans were always analyzed with the decoder created based on all 10 runs from the decoder construction scan. We performed a 5-fold cross-validation where the decoder was trained on eight runs and tested on the remaining two runs (different iterations used runs 1 and 2, 3 and 4, 5 and 6, 7 and 8, and 9 and 10 as the test runs). We observed very high decoder performance of >80% accuracy for all retinotopically defined visual areas (V1: t(11) = 42.566, p < 0.001; V2: t(11) = 55.076, p < 0.001; V3: t(11) = 48.986, p < 0.001; V3A: t(11) = 34.767, p < 0.001; V4v: t(11) = 17.153, p < 0.001; uncorrected one-sample t tests; Fig. 2-2A). In addition, we performed a 10-fold cross-validation where the decoder was trained on nine runs and tested on the remaining run and again observed high decoder performance for all retinotopically defined visual areas (V1: t(11) = 10.856, p < 0.001; V2: t(11) = 11.746, p < 0.001; V3: t(11) = 8.345, p < 0.001; V3A: t(11) = 3.851, p = 0.003; V4v: t(11) = 4.241, p = 0.001; uncorrected one-sample t tests; Fig. 2-2B). Finally, pilot data from a previous subject suggested that our decoder generalizes with little to no loss of accuracy to data collected on a different day.
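The two run-wise partitioning schemes can be made concrete with a small sketch. The function name `run_folds` is hypothetical; the point is only that whole runs, never individual samples, are held out in each iteration.

```python
def run_folds(n_runs=10, runs_per_fold=2):
    """Return (train_runs, test_runs) pairs that hold out whole runs."""
    runs = list(range(1, n_runs + 1))
    folds = []
    for start in range(0, n_runs, runs_per_fold):
        test = runs[start:start + runs_per_fold]
        train = [r for r in runs if r not in test]
        folds.append((train, test))
    return folds
```

Calling `run_folds(10, 2)` yields the five iterations with test runs 1 and 2, 3 and 4, and so on, whereas `run_folds(10, 1)` yields the ten leave-one-run-out iterations. Holding out complete runs keeps samples from the same run out of both the training and test sets.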

Finally, we applied the decoder to the pretraining and post-training scans using the same voxels as the ones selected during the decoder construction. The BOLD signals during pretraining and post-training scans were shifted by 6 s, and a linear trend was removed. The time courses of the BOLD signals were again averaged across 6 volumes because our decoder was constructed based on the average of 6 volumes at a time (see above). For each 6-volume period of the pretraining and post-training scans, we applied the decoder to calculate the likelihood that it was elicited by each Gabor orientation. Based on these likelihood scores, the decoder classified each 6-volume period as either the trained or untrained orientation.
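A minimal sketch of this classification step is given below, assuming that the trained decoder reduces to a weight vector and a bias over the selected voxels and that the likelihood is the logistic function of the weighted sum. The names `classify_periods` and `probability_trained`, and the argument `trained_is_positive` (which encodes whether the subject's trained orientation is the decoder's positive class), are hypothetical.

```python
import numpy as np

def classify_periods(samples, weights, bias, trained_is_positive=True):
    """Classify each 6-volume period as trained or untrained orientation.

    samples: array of shape (n_periods, n_voxels) of block-averaged BOLD.
    Returns the likelihood of the trained orientation and a boolean array
    that is True where the period is classified as the trained orientation.
    """
    logits = np.asarray(samples, dtype=float) @ weights + bias
    p_positive = 1.0 / (1.0 + np.exp(-logits))   # logistic likelihood
    likelihood = p_positive if trained_is_positive else 1.0 - p_positive
    return likelihood, likelihood > 0.5

def probability_trained(is_trained):
    """Proportion of periods classified as the trained orientation."""
    return float(np.mean(is_trained))
```

The proportion returned by `probability_trained` corresponds to the binary measure, and the mean of `likelihood` corresponds to the continuous measure.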

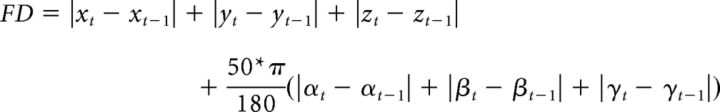

To ensure that our results were not due to motion artifacts, we excluded volumes with relatively strong motion. Motion was computed using the formula for framewise displacement (FD) from Power et al. (2012) as follows:

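$$\mathrm{FD}_t = |x_{t-1} - x_t| + |y_{t-1} - y_t| + |z_{t-1} - z_t| + |\alpha_{t-1} - \alpha_t| + |\beta_{t-1} - \beta_t| + |\gamma_{t-1} - \gamma_t|$$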

where x, y, and z (translations), and α, β, and γ (rotations) are the six head motion parameters obtained from the realignment step, and t is the time point of the current volume. This formula significantly overestimates the actual motion of each voxel (Yan et al., 2013). We used a stringent criterion and excluded volumes for which FD > 0.5. This criterion led to an exclusion of a total of just 27 volumes from all pretraining and post-training scans across all subjects (of a total of 7200 volumes; thus, a total of just 0.38% of all volumes were excluded), testifying to the low overall motion observed in the study. Performing the same analyses without any volume exclusions did not affect the results, including the significant classification in V1.
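The framewise displacement computation and the volume exclusion can be sketched as follows. The function names are hypothetical. Following Power et al. (2012), the sketch converts rotations to millimeters of displacement on a sphere of radius 50 mm; the text here does not state whether that conversion was applied, so it should be read as an assumption.

```python
import numpy as np

def framewise_displacement(motion, radius_mm=50.0, rotations_in_radians=True):
    """Framewise displacement per volume.

    motion: array of shape (n_volumes, 6) with columns x, y, z (mm) and
    alpha, beta, gamma (rotations).
    """
    params = np.array(motion, dtype=float)
    if not rotations_in_radians:
        params[:, 3:] = np.deg2rad(params[:, 3:])
    params[:, 3:] *= radius_mm                   # rotation -> arc length in mm
    # Sum of absolute volume-to-volume differences over all six parameters.
    diffs = np.abs(np.diff(params, axis=0)).sum(axis=1)
    return np.concatenate([[0.0], diffs])        # first volume has no predecessor

def keep_mask(fd, threshold=0.5):
    """True for volumes retained under the FD > threshold exclusion rule."""
    return np.asarray(fd) <= threshold
```

As a check on the reported numbers, 27 excluded volumes out of 7200 is 0.375%, which rounds to the 0.38% given above.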

Statistical analyses.

We used two-tailed parametric statistical tests, such as t tests and ANOVAs. For all repeated-measures ANOVAs, we used Mauchly's test of sphericity to test the assumption of sphericity. We used the Huynh–Feldt correction with the estimated ε when the sphericity assumption was violated. All such violations are reported where they occurred.

Relationship between awake reactivation and behavioral improvement.

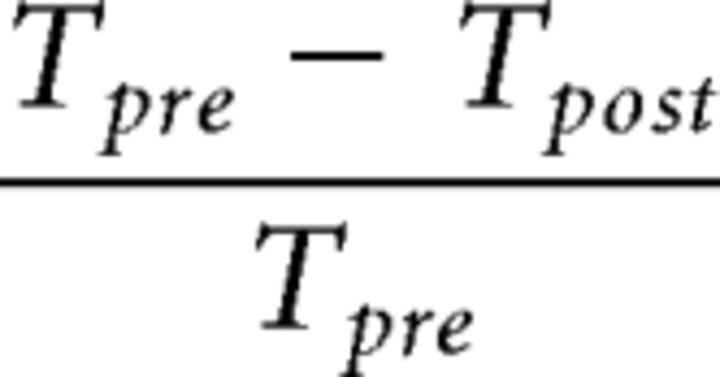

To check whether the amount of awake reactivation was associated with the amount of behavioral improvement, we first constructed an index of subjects' learning. The learning index measures the relative learning amount for the trained compared with the untrained orientation. The learning amount was defined as the change in threshold between the pretraining and post-training behavioral tests divided by the threshold in the pretraining behavioral test, as follows:

$$\text{Learning amount} = \frac{T_{\mathrm{pre}} - T_{\mathrm{post}}}{T_{\mathrm{pre}}}$$

where Tpre and Tpost refer to the threshold S/N ratios before and after training. Lower threshold values indicate better performance. We computed the learning index by subtracting the learning amount for the untrained orientation from the learning amount for the trained orientation.
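A short sketch of the learning index follows, assuming that the learning amount is the pretraining minus post-training threshold divided by the pretraining threshold, so that improvement (a lower post-training threshold) gives a positive value. The function names are hypothetical.

```python
def learning_amount(t_pre, t_post):
    """Proportional threshold reduction from pretraining to post-training."""
    return (t_pre - t_post) / t_pre

def learning_index(trained_pre, trained_post, untrained_pre, untrained_post):
    """Learning amount for the trained minus the untrained orientation."""
    return (learning_amount(trained_pre, trained_post)
            - learning_amount(untrained_pre, untrained_post))
```

For illustrative thresholds of 20% before and 15% after training, the learning amount would be 0.25. A positive learning index indicates more learning for the trained than for the untrained orientation.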

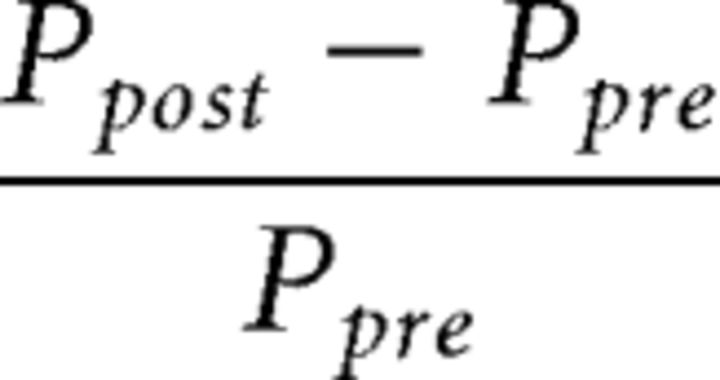

To relate the learning index to the amount of awake reactivation, we constructed an equivalent index of subject-specific awake reactivation. The reactivation index measures the increase in decodability of the trained stimulus in V1 from the pretraining to the post-training scan as follows:

|

where Ppre and Ppost refer to the probability of classifying brain activity as the trained orientation in the pretraining and post-training scans, respectively.

Apparatus.

All visual stimuli were created in MATLAB using Psychophysics Toolbox 3 (RRID:SCR_002881) (Brainard, 1997). The stimuli were presented on an LCD display (1024 × 768 resolution, 60 Hz refresh rate) inside a mock scanner and on an MRI-compatible LCD projector (1024 × 768 resolution, 60 Hz refresh rate) inside the MR scanner.

Data availability.

The data are freely available online at https://osf.io/9du8v/.

Results

We examined whether a process of feature-specific awake reactivation occurs after visual training in human V1. We trained human subjects (n = 12) to detect a specific Gabor orientation (45° or 135°, counterbalanced between subjects; Fig. 1A). The training led to better performance on the post-training compared with the pretraining behavioral test (F(1,11) = 34.351, p < 0.001), thus demonstrating that significant behavioral learning took place (Fig. 1-1). There was no significant difference in the learning amount for trained and untrained orientations (F(1,11) = 0.009, p = 0.926), suggesting that learning transfers between the trained and untrained stimuli as observed in a number of previous studies (Xiao et al., 2008; Zhang et al., 2010; McGovern et al., 2012; Wang et al., 2014).

Figure 1-1. Threshold S/N (mean ± s.e.m.) for the pre- and post-training tests for trained (white) and untrained (gray) orientations. To confirm that the subjects showed learning between pre- and post-training test stages, we performed a two-way repeated measures ANOVA with factors of orientation (trained vs. untrained orientation) and time (pre- vs. post-training) on the threshold S/N ratio. The results showed a significant main effect of time (F(1,11)=34.351, P<0.001) demonstrating that training was successful in improving performance. At the same time, there was no interaction between time and orientation (F(1,11)=0.009, P=0.926), suggesting that learning transfers between the trained and untrained stimuli as observed in a number of previous studies (Xiao et al., 2008; Zhang et al., 2010; McGovern et al., 2012; Wang et al., 2014). The dots represent individual data.

Before the training, we constructed a decoder that could distinguish between the multivoxel patterns of BOLD activity elicited by each Gabor orientation (Fig. 1B). We then applied this decoder to the brain activity scans conducted before (pre: two 5 min scans that we combined for analysis) and after (post1 and post2: two consecutive 5 min scans analyzed separately) the training.

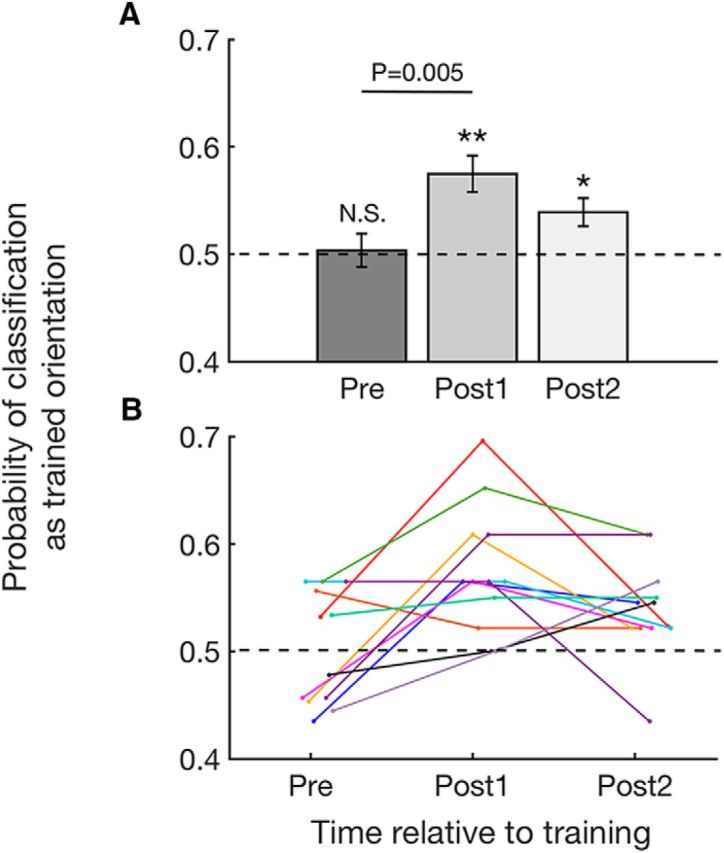

Awake reactivation in V1

If feature-specific awake reactivation indeed occurs in V1, it would manifest itself as post-training, but not pretraining, brain activity appearing more similar to the trained, compared with the untrained, orientation. To test for such feature-specific awake reactivation in V1, we analyzed the decoder's probability of classifying brain activity from the pretraining and post-training scans in V1 as the trained orientation. In this analysis, brain activity was always classified as either the trained or untrained Gabor orientation at each data point; we therefore only report the probability of “trained orientation” classifications. To examine whether the decoder's classification for the trained orientation significantly increased after the training, we applied a one-way repeated-measures ANOVA to the decoder's classifications. We found a significant main effect of time (pre vs post1 vs post2; F(2,22) = 5.721, p = 0.010). Consistent with the existence of awake reactivation, the activation pattern in V1 was more likely to be classified as the trained orientation immediately after (post1) compared with before (pre) the visual training (t(11) = 3.516, p = 0.005, uncorrected paired-sample t test; Fig. 2A,B). A significant quadratic trend was also observed in the time course of the decoder's probability (F(1,11) = 10.767, p = 0.007), indicating a peak right after training. Furthermore, the probability of classification as the trained orientation was significantly greater than chance in both post-training periods (post1: t(11) = 4.415, p = 0.001; post2: t(11) = 2.968, p = 0.013; one-sample t tests) but not before training (pre, t(11) = 0.226, p = 0.825, one-sample t test). No subject showed a significant bias for the trained or untrained stimulus in the pretraining scan (all p values >0.4, binomial tests), and there was no correlation between the classification performance in the pretraining scan and the identity of the trained stimulus (r = 0.041, p = 0.900). Thus, the significant classification results in the post-training scans cannot be attributed to differences in the pretraining scans.
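The comparison against chance can be illustrated with a minimal one-sample t statistic computed across subjects. The function name `one_sample_t` is hypothetical, and the sketch returns only the statistic and its degrees of freedom; obtaining a p value would additionally require the t distribution, which is not implemented here.

```python
import numpy as np

def one_sample_t(values, chance=0.5):
    """One-sample t statistic of per-subject values against a chance level."""
    values = np.asarray(values, dtype=float)
    n = values.size
    # Standard error of the mean uses the sample SD (ddof=1).
    sem = values.std(ddof=1) / np.sqrt(n)
    t_stat = (values.mean() - chance) / sem
    return float(t_stat), n - 1
```

With one probability of “trained orientation” classification per subject and 12 subjects, the test has 11 degrees of freedom, matching the t(11) values reported above.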

Figure 2.

Probability of classifying brain activity during the pretraining and post-training scans as trained orientation in V1. Consistent with the existence of awake reactivation, brain activity in V1 immediately after training was more likely to be classified as the trained orientation. The effect is visible both in the group (A) and individual data (B). Error bars indicate SEM. *p < 0.05, **p < 0.01. N.S., not significant. Similar results were obtained by analyzing the continuous likelihood values instead of the binary classification values (see Figure 2-1). The decoder was validated using cross-validation techniques (see Figure 2-2).

Figure 2-1. Likelihood of classification as trained orientation in V1. The likelihood of classification as trained orientation refers to a continuous measure (rather than a binary decision) of the likelihood that a particular brain signal corresponds to the trained or untrained orientation. A one-way repeated measures ANOVA applied to the decoder's likelihood of classification as the trained orientation showed a marginally significant main effect of time (pre vs. post1 vs. post2; F(2,22)=3.321, P=0.055). The likelihood of classification as the trained orientation increased immediately after (post1) compared to before (pre) the visual training (t(11)=2.545, P=0.027, uncorrected paired sample t-test). In addition, the likelihood of classification as the trained orientation was significantly higher than chance immediately after training (post1: t(11)=3.540, P=0.005, one-sample t-test) and 5 minutes after training (post2: t(11)=2.370, P=0.037, one-sample t-test) but not before training (pre: t(11)=0.455, P=0.658, one-sample t-test). Consistent with the result from the probability of classification, this result indicates that post-training spontaneous activity in V1 appears more similar to the trained orientation. Individual data are plotted on the group data (mean ± s.e.m.).

Figure 2-2. Accuracy of decoder during 5-fold and 10-fold cross-validations in V1, V2, V3, V3A and V4v. (A) The decoder was tested through 5-fold cross-validation using brain data obtained from the decoder construction scan. The accuracy of the decoder (mean ± s.e.m.) was above chance level (0.5) for all regions of interest (all P values < 0.001 before correction, one-sample t-tests; V1: t(11)=42.566, P<0.001; V2: t(11)=55.076, P<0.001; V3: t(11)=48.986, P<0.001; V3A: t(11)=34.767, P<0.001; V4v: t(11)=17.153, P<0.001). (B) The decoder was tested through 10-fold cross-validation using brain data obtained from the decoder construction scan. The accuracy of the decoder (mean ± s.e.m.) was above chance level (0.5) for all regions of interest (all P values < 0.005 before correction, one-sample t-tests; V1: t(11)=10.856, P<0.001; V2: t(11)=11.746, P<0.001; V3: t(11)=8.345, P<0.001; V3A: t(11)=3.851, P=0.003; V4v: t(11)=4.241, P=0.001).

The above analysis focused on the decoder's probability of classifying brain activity as the trained orientation based on the decoder's binary classification (either trained or untrained orientation) at each data point. However, for each data point, the decoder could also produce a continuous measure: the likelihood that the data point came from the trained or untrained orientation. Analyzing these continuous likelihood values (rather than the binary classification performance) led to the same pattern of results (Fig. 2-1). Specifically, we found a marginally significant effect of time in a one-way repeated-measures ANOVA (F(2,22) = 3.321, p = 0.055). Consistent with the existence of awake reactivation, the likelihood of classification as the trained orientation increased immediately after (post1) compared with before (pre) the visual training (t(11) = 2.545, p = 0.027, paired-sample t test). The likelihood of classification was significantly greater than chance in both post-training periods (post1: t(11) = 3.540, p = 0.005; post2: t(11) = 2.370, p = 0.037; one-sample t tests) but not before training (pre, t(11) = 0.455, p = 0.658, one-sample t test). Thus, both methods of analysis show that post-training brain activity in V1 appears more similar to the trained orientation.
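The distinction between the two measures can be illustrated with a minimal Python sketch. It assumes, hypothetically, that the decoder emits one likelihood value between 0 and 1 per data point and that the binary decision is obtained by thresholding that value at 0.5. The function names and example values are illustrative and are not taken from the study's analysis code.

```python
import math
import statistics


def classification_summary(likelihoods, threshold=0.5):
    """Summarize one scan: (proportion of 'trained' decisions, mean likelihood)."""
    # Binary decision per data point (assumed rule: likelihood above threshold).
    decisions = [1 if p > threshold else 0 for p in likelihoods]
    prob_trained = sum(decisions) / len(decisions)
    # Continuous measure: average the likelihoods without thresholding.
    mean_likelihood = statistics.fmean(likelihoods)
    return prob_trained, mean_likelihood


def one_sample_t(values, chance=0.5):
    """t statistic and degrees of freedom for a test of the mean against chance."""
    n = len(values)
    sem = statistics.stdev(values) / math.sqrt(n)
    return (statistics.fmean(values) - chance) / sem, n - 1


# Hypothetical likelihoods for six data points of one post-training scan.
scan = [0.62, 0.48, 0.71, 0.55, 0.39, 0.66]
prob_trained, mean_likelihood = classification_summary(scan)

# Hypothetical per-subject mean likelihoods (four subjects for brevity).
t_stat, df = one_sample_t([0.6, 0.5, 0.7, 0.6])
```

The sketch returns only the t statistic and its degrees of freedom; obtaining a p value would additionally require the t distribution from a statistics package.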

Finally, we confirmed that the fixation task that subjects performed in the pretraining and post-training scans did not influence the decoder classification. Due to our continuous staircase, the color change always started as easily noticeable and gradually reached each individual's threshold value where it was barely noticeable. The color change was thus much more noticeable in the first compared with the second half of each scan. Therefore, to check for the influence of a noticeable color change on the decoder classification, we compared the likelihood of classification as the trained orientation between the first and the second halves of the post1 and post2 scans. We found no significant decrease for either post1 (t(11) = −1.140, p = 0.278, paired-sample t test) or post2 (t(11) = −0.618, p = 0.549, paired-sample t test). To increase the power for these analyses, we additionally combined the post1 and post2 scans and again found no difference between the first and the second halves (t(23) = −1.293, p = 0.209, paired t test). Therefore, the observed reactivation is unlikely to be simply caused by the occurrence of a noticeable color change event.

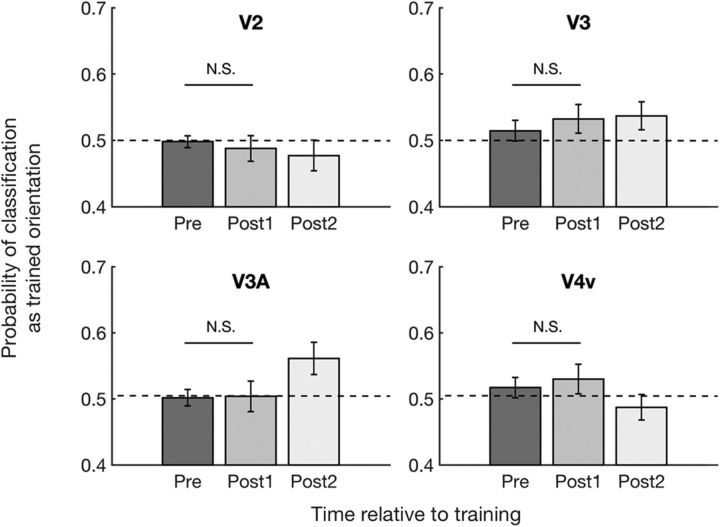

No awake reactivation in higher retinotopic areas

Having established the existence of feature-specific awake reactivation in V1, we explored whether similar effects could be observed in higher visual areas. To do so, we analyzed the decoder's probability of classifying pretraining and post-training brain activity as the trained orientation based on activity patterns in V2, V3, V3A, and V4v. To test whether the decoder's performance in higher visual areas changed after the training, we conducted a two-way repeated-measures ANOVA with factors time (pre vs post1 vs post2) and region (V2, V3, V3A, and V4v). The ANOVA revealed no significant effects of time (F(2,22) = 0.153, p = 0.859), region (F(3,33) = 1.886, p = 0.151), or interaction between the two (F(6,66) = 2.055, p = 0.071). Despite the lack of significant overall effects (Fig. 3; individual results are shown in Fig. 3-1), we analyzed the data further to ensure that none of these higher retinotopic regions showed the pattern of results in V1 where decodability of the trained stimulus increased immediately after training (post1) compared with before training (pre). Indeed, we found no such effects in V2, V3, V3A, or V4v (all p values >0.5 for pre vs post1 in each area before correction: V2: t(11) = 0.443, p = 0.666; V3: t(11) = −0.644, p = 0.533; V3A: t(11) = −0.081, p = 0.937; V4v: t(11) = −0.413, p = 0.688, paired-sample t tests).

Figure 3.

Probability of classifying brain activity during the pretraining and post-training scans as trained orientation in V2, V3, V3A, and V4v. Consistent with a lack of awake reactivation in higher retinotopic areas, post-training brain activity in V2, V3, V3A, and V4v was classified as the trained orientation at chance level (0.5) immediately after training. Error bars indicate SEM. N.S., not significant. For individual subject data, see Figure 3-1.

Figure 3-1.

Probability of classifying spontaneous brain activity as trained orientation in V2, V3, V3A, and V4v. The probability for the trained orientation did not increase immediately after training in V2, V3, V3A, and V4v. Individual data are plotted on the group data (mean ± s.e.m.) for each region of interest.

We analyzed the data from V1 separately from the other four retinotopic areas based on our a priori hypothesis. However, in a control analysis, we entered all five retinotopic regions into the same two-way repeated-measures ANOVA. Consistent with the existence of awake reactivation exclusively in V1, a significant interaction between region and time emerged (F(8,88) = 2.290, p = 0.028). In addition, we examined whether the decoder's probability of classifying brain activity as the trained orientation in V1 was significantly greater than that in V2, V3, V3A, and V4v immediately after training (post1). We found a greater decodability of the trained stimulus in V1 compared with V2, V3A, and V4v immediately after training (post1) (V1 vs V2, t(11) = 4.183, p = 0.002; V1 vs V3, t(11) = 1.306, p = 0.218; V1 vs V3A, t(11) = 2.657, p = 0.022; V1 vs V4v, t(11) = 2.415, p = 0.034; paired-sample t tests, before correction). These results support the notion that the pattern of increased decodability of the trained stimulus immediately after training is found exclusively in V1.

No awake reactivation outside the occipital lobe

In addition to the retinotopically defined ROIs, we checked for awake reactivation in the rest of cortex. We created anatomical ROIs corresponding to subregions of the temporal, parietal, and frontal cortex (see Materials and Methods). Leave-one-run-out cross-validation (10-fold cross-validation) demonstrated above chance orientation classification in three of these ROIs: superior parietal cortex (t(11) = 3.760, p = 0.003), inferior parietal cortex (t(11) = 2.351, p = 0.038), and middle temporal cortex (t(11) = 2.293, p = 0.043). Therefore, we only checked for awake reactivation (by applying the trained decoder to the pretraining and post-training scans) for these three ROIs.
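The logic of leave-one-run-out cross-validation can be sketched as follows. This is an illustration under stated assumptions, not the study's pipeline: a simple nearest-centroid classifier stands in for the actual decoder, and the activity patterns, labels, and run assignments are hypothetical toy values.

```python
def nearest_centroid_predict(train_X, train_y, x):
    """Predict the label whose mean training pattern is closest to x."""
    centroids = {}
    for label in set(train_y):
        rows = [row for row, y in zip(train_X, train_y) if y == label]
        centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]

    def squared_distance(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

    return min(centroids, key=lambda lab: squared_distance(centroids[lab], x))


def leave_one_run_out_accuracy(X, y, runs):
    """Hold out each run once, train on the remaining runs, and pool accuracy."""
    correct = 0
    for held_out in sorted(set(runs)):
        train_idx = [i for i, r in enumerate(runs) if r != held_out]
        test_idx = [i for i, r in enumerate(runs) if r == held_out]
        train_X = [X[i] for i in train_idx]
        train_y = [y[i] for i in train_idx]
        for i in test_idx:
            if nearest_centroid_predict(train_X, train_y, X[i]) == y[i]:
                correct += 1
    return correct / len(y)


# Hypothetical two-voxel patterns from three runs, two orientations per run.
X = [[1.0, 0.1], [0.1, 1.0], [0.9, 0.2], [0.2, 0.8], [1.1, 0.0], [0.0, 1.2]]
y = ["trained", "untrained"] * 3
runs = [1, 1, 2, 2, 3, 3]
accuracy = leave_one_run_out_accuracy(X, y, runs)
```

Holding out whole runs, rather than individual data points, keeps the test patterns in each fold separate from the runs used for training.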

We found no evidence of awake reactivation in any of these areas outside of the occipital cortex. Indeed, one-way repeated-measures ANOVAs with a factor of time revealed no significant effects of time in any of the three ROIs (superior parietal cortex: F(2,22) = 0.139, p = 0.871; inferior parietal cortex: F(2,22) = 1.180, p = 0.326; middle temporal cortex: F(2,22) = 0.201, p = 0.820). Despite the lack of significant overall effects in these ROIs, we checked specifically whether any of the three higher areas showed the pattern of results in V1 where decodability of the trained stimulus increased immediately after training (post1) compared with before training (pre). None of the three ROIs showed this pattern (superior parietal cortex: t(11) = 0.399, p = 0.698; inferior parietal cortex: t(11) = 0.852, p = 0.412; middle temporal cortex: t(11) = −0.650, p = 0.529; uncorrected paired-sample t tests pre vs post1). These results are consistent with the notion that awake reactivation is specific to V1.
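For readers less familiar with the test, the F statistic of a one-way repeated-measures ANOVA with a factor of time can be computed as in the sketch below. The data are hypothetical (three subjects, three time points), and the function name is an illustrative choice; with 12 subjects and 3 time points the same formula yields the degrees of freedom (2, 22) reported above.

```python
def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data: one row per subject, one column per condition (e.g., pre, post1, post2).
    Returns (F, df_condition, df_error).
    """
    n = len(data)       # number of subjects
    k = len(data[0])    # number of conditions
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]

    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    # Error term: variability left after removing condition and subject effects.
    ss_error = ss_total - ss_cond - ss_subj

    df_cond = k - 1
    df_error = (k - 1) * (n - 1)
    f_stat = (ss_cond / df_cond) / (ss_error / df_error)
    return f_stat, df_cond, df_error


# Hypothetical values for three subjects at pre, post1, and post2.
toy = [[1, 2, 4], [2, 4, 5], [3, 3, 6]]
f_stat, df_cond, df_error = rm_anova_oneway(toy)
```

Removing the between-subject sum of squares from the error term is what distinguishes this test from an ordinary between-subjects one-way ANOVA.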

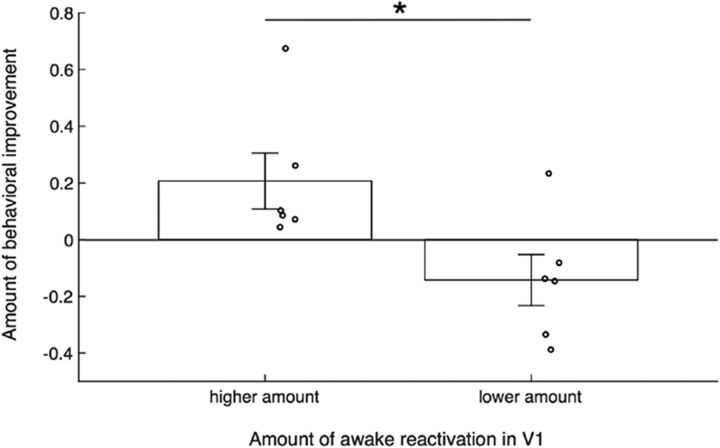

Relationship between awake reactivation and behavioral improvement

Finally, we examined whether the observed awake reactivation in V1 may have been related to the amount of behavioral learning. To address this question, we examined whether subjects who showed greater reactivation in V1 exhibited stronger behavioral improvement. We constructed a subject-specific learning index that measures the amount of learning that was specific to the trained orientation. Similarly, we constructed a subject-specific reactivation index that measures the amount of reactivation by comparing the probability of decoding the trained stimulus in the pretraining and post-training scans (for details about both indices, see Materials and Methods). We then compared the learning index across subjects with a high versus low amount of reactivation (using a median split). We found that the learning index for those who showed higher awake reactivation was greater than that for those who showed lower awake reactivation (t(10) = 2.616, p = 0.026, independent-samples t test; Fig. 4), suggesting a potential association between awake reactivation and greater learning for the trained compared with the untrained orientation.
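The median-split comparison can be sketched in Python as follows. The index values are hypothetical, the two indices are treated as given inputs (their definitions are in Materials and Methods), and the pooled-variance form of the independent-samples t test is an assumption that is consistent with the reported 10 degrees of freedom for 12 subjects.

```python
import math
import statistics


def median_split(reactivation, learning):
    """Split learning indices by whether reactivation is above the median."""
    med = statistics.median(reactivation)
    # Values equal to the median are assigned to the low group.
    high = [l for r, l in zip(reactivation, learning) if r > med]
    low = [l for r, l in zip(reactivation, learning) if r <= med]
    return high, low


def independent_t(a, b):
    """Pooled-variance (Student) t statistic and degrees of freedom."""
    na, nb = len(a), len(b)
    df = na + nb - 2
    pooled = ((na - 1) * statistics.variance(a)
              + (nb - 1) * statistics.variance(b)) / df
    se = math.sqrt(pooled * (1 / na + 1 / nb))
    return (statistics.fmean(a) - statistics.fmean(b)) / se, df


# Hypothetical reactivation and learning indices for 12 subjects.
reactivation = [0.01, 0.02, 0.03, 0.04, 0.05, 0.06,
                0.07, 0.08, 0.09, 0.10, 0.11, 0.12]
learning = [1, 2, 3, 1, 2, 3, 4, 5, 6, 4, 5, 6]

high, low = median_split(reactivation, learning)
t_stat, df = independent_t(high, low)
```

A median split discards information about how far each subject lies from the median, so a result of this kind is best read as suggestive of an association rather than as an estimate of its strength.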

Figure 4.

Strength of awake reactivation is associated with performance improvement specific to the trained orientation. The amount of behavioral improvement specific to the trained orientation was greater for subjects who showed higher levels of awake reactivation (based on a median split). Dots represent individual data. Error bars indicate SEM. *p < 0.05.

Discussion

We investigated whether a process of feature-specific awake reactivation takes place in human V1 following training with a novel visual stimulus. We found that, shortly after training, the brain activity in V1 was more likely to be classified as the trained orientation. This awake reactivation was specific to V1; higher retinotopic areas failed to show similar effects. Furthermore, the strength of awake reactivation showed a positive association with the behavioral performance improvement specific to the trained stimulus. These results provide strong evidence that awake reactivation extends beyond higher-level cortical areas to primary sensory cortices.

Awake reactivation has been studied in humans using paradigms in which the memory was explicitly cued (Bosch et al., 2014; Ekman et al., 2017) or subjects were asked to actively recall the memory (St-Laurent et al., 2014, 2015; Tompary et al., 2016). However, these studies cannot establish whether awake reactivation also occurs in the absence of explicit instructions. In the current study, we discouraged conscious rehearsal by requiring subjects to perform a challenging task at fixation during the pretraining and post-training scans and further administered a postexperiment questionnaire that confirmed that subjects did not engage in conscious rehearsal. A number of studies have shown that conscious rehearsal is not needed in order for memory enhancement to occur (Dewar et al., 2014; Brokaw et al., 2016; Craig et al., 2016; Tambini et al., 2017).

Our study demonstrates that awake reactivation in humans can be feature-specific. Indeed, by comparing Gabor stimuli that only vary in their orientation, we revealed that a basic visual feature, such as orientation, is specifically reactivated in the brain. In contrast, previous human studies could not determine what specific aspect of the trained stimulus was reactivated. Some of the prior studies used associative learning between two different categories (Deuker et al., 2013; Staresina et al., 2013; Tambini and Davachi, 2013; Schlichting and Preston, 2014). However, the reemergence of the brain processes during encoding of two different categories is insufficient to determine whether the reactivated information relates to the coding of each stimulus or the binding process (Deuker et al., 2013). Other studies did not use associative learning but used complex stimuli, such as animals, fruits, vegetables, letters, and cartoon movies (Deuker et al., 2013; Staresina et al., 2013; Tambini and Davachi, 2013; Schlichting and Preston, 2014; Guidotti et al., 2015; Chelaru et al., 2016; de Voogd et al., 2016), which contain a large number of individual features that could potentially be reactivated. By demonstrating feature-specific reactivation, the current study shows that awake reactivation in the human brain is not limited to the level of whole objects but extends down to the most basic visual features.

Related to the issue of feature-specific reactivation, we also showed that reactivation in humans is not exclusive to higher-order brain areas but exists even in the primary visual cortex V1. Prior studies demonstrated the existence of reactivation mostly in the association cortex but not in the primary sensory areas (Deuker et al., 2013; Staresina et al., 2013; Tambini and Davachi, 2013; Schlichting and Preston, 2014; Guidotti et al., 2015; Chelaru et al., 2016; de Voogd et al., 2016). It has thus remained unclear what the limits of the reactivation phenomenon are. Our study suggests that human reactivation is likely to occur across most (if not all) brain regions.

A major difference between our study and previous research on awake reactivation is that our task involved extensive training. In contrast, most previous studies used a single or very few presentations of each stimulus (Deuker et al., 2013; Staresina et al., 2013; Tambini and Davachi, 2013; Schlichting and Preston, 2014; Guidotti et al., 2015; Chelaru et al., 2016; de Voogd et al., 2016). Extensive training was also provided in a previous study of awake reactivation (Guidotti et al., 2015). Such training has been additionally shown to enhance the activity of the trained patch of V1 during sleep (Yotsumoto et al., 2009; Bang et al., 2014) and alter the connectivity between the trained area of visual cortex and the rest of the brain (Lewis et al., 2009). It is an open question whether the nature of awake reactivation is the same in cases of few stimulus presentations versus extensive training. Nevertheless, together with the study by Guidotti et al. (2015), our results demonstrate that awake reactivation occurs in a variety of visual learning paradigms. It should also be noted that, because we trained subjects on degraded visual stimuli, our decoder was trained on a separate decoder construction session rather than on the patterns of activity elicited during learning itself. Future studies should ideally use learning paradigms that allow the decoder to be trained using the brain activity elicited during learning.

In our study, the decodability of the trained orientation in V1 was highest in the first post-training scan (post1) but remained significant in the second post-training scan (post2). Therefore, awake reactivation in our study continued at least until the onset of the second post-training scan (post2), which occurred on average 7.75 min after the offset of visual training. In other words, reactivation persisted for at least ∼8 min after subjects last experienced the trained stimulus. This time course of awake reactivation in V1 agrees well with neuronal results in anesthetized rodent V1 (Han et al., 2008). In that study, 50 (125) stimulus repetitions led to ∼3 (∼14) minutes of replay activity. Compared with the results from Han et al. (2008), our visual training was substantially longer, but reactivation processes lasted for a shorter time. The shorter duration of reactivation is likely due to the fact that, unlike in anesthesia, the awake brain needs to remain responsive to its environment, which likely diminishes its capacity for reactivation.

Despite the fact that fMRI has limited spatiotemporal resolution compared with neuronal recordings, our results, together with prior human studies on awake reactivation, appear to reveal processes akin to neuronal replay in animals (Carr et al., 2011). Neuronal replay, defined as the reemergence of neuronal patterns that represent previous learning, has been studied extensively in hippocampal cells (Foster and Wilson, 2006; Diba and Buzsáki, 2007; Yao et al., 2007; Davidson et al., 2009; Carr et al., 2011) but also in areas of the neocortex, particularly the visual (Ji and Wilson, 2007; Yao et al., 2007; Han et al., 2008) and the frontal cortex (Euston et al., 2007) in animals. Similar to these previous findings, we suspect that our results reflect spontaneous firing of the same pools of V1 neurons that code for the trained stimulus. Future studies using finer spatiotemporal resolution are needed to directly examine this possibility.

An important open question is where awake reactivation originates. In our study, we found evidence for reactivation in V1 but not in higher retinotopic areas or regions outside of the occipital lobe. This pattern of results can be interpreted as suggesting that local circuits within V1 initiated the reactivation process. Indeed, top-down processes that affect V1, such as visual imagery (Albers et al., 2013), attention (Tootell et al., 1998), and working memory (Harrison and Tong, 2009), invariably have as strong an influence (or a stronger one) on the higher retinotopic areas. Moreover, it is possible to decode orientation cues consciously held in working memory, even in frontoparietal areas (Ester et al., 2015). In other words, it would be surprising if a top-down signal could be read out in V1 but not in higher areas. For this reason, we favor an explanation where awake reactivation originates from circuits that specifically code for a given feature. For example, V1 explicitly codes for orientation (Yacoub et al., 2008), whereas higher regions do not (even though orientation can still be decoded in them). Thus, our findings of awake reactivation only in V1 are consistent with the idea that reactivation could be expected to occur at the site that specifically codes for the trained feature.

The possibility of locally generated awake reactivation in V1 adds to a growing body of work demonstrating that V1 is not a simple, passive feature detector but instead is important for high-level processes (Karanian and Slotnick, 2018; Rosenthal et al., 2018). Indeed, a number of studies have suggested that changes in the nature and behavioral relevance of sensory stimulation alter V1 responses via fully or partly local mechanisms (Fahle, 2004; Shuler and Bear, 2006; Xu et al., 2012). For example, both spatiotemporal sequence learning (Gavornik and Bear, 2014) and visual recognition memory (Cooke et al., 2015) have been reported to occur in V1 via local plasticity. Therefore, our work contributes to the emerging view that V1 integrates perception, learning, and mnemonic functions.

In conclusion, we found evidence for feature-specific awake reactivation in V1 after training on a visual task in humans. Further, the amount of awake reactivation in V1 was associated with the amount of behavioral learning for the trained stimulus. These results suggest that feature-specific awake reactivation might be a critical mechanism in offline memory consolidation.

Footnotes

This work was supported by a Georgia Institute of Technology startup grant to D.R., NIH R01EY027841, NIH R01EY019466, United States - Israel Binational Science Foundation, BSF2016058 to T.W., and NIH R21EY028329, NSF BCS 1539717 to Y.S. We thank Kazuhisa Shibata for help with the computer code.

The authors declare no competing financial interests.

References

- Albers AM, Kok P, Toni I, Dijkerman HC, de Lange FP (2013) Shared representations for working memory and mental imagery in early visual cortex. Curr Biol 23:1427–1431. 10.1016/j.cub.2013.05.065

- Bang JW, Khalilzadeh O, Hämäläinen M, Watanabe T, Sasaki Y (2014) Location specific sleep spindle activity in the early visual areas and perceptual learning. Vision Res 99:162–171. 10.1016/j.visres.2013.12.014

- Bang JW, Shekhar M, Rahnev D (2018a) Sensory noise increases metacognitive efficiency. J Exp Psychol: General, in press.

- Bang JW, Shibata K, Frank SM, Walsh EG, Greenlee MW, Watanabe T, Sasaki Y (2018b) Consolidation and reconsolidation share behavioural and neurochemical mechanisms. Nat Hum Behav 2:507–513. 10.1038/s41562-018-0366-8

- Bosch SE, Jehee JF, Fernández G, Doeller CF (2014) Reinstatement of associative memories in early visual cortex is signaled by the hippocampus. J Neurosci 34:7493–7500. 10.1523/JNEUROSCI.0805-14.2014

- Brainard DH. (1997) The psychophysics toolbox. Spat Vis 10:433–436. 10.1163/156856897X00357

- Brokaw K, Tishler W, Manceor S, Hamilton K, Gaulden A, Parr E, Wamsley EJ (2016) Resting state EEG correlates of memory consolidation. Neurobiol Learn Mem 130:17–25. 10.1016/j.nlm.2016.01.008

- Carr MF, Jadhav SP, Frank LM (2011) Hippocampal replay in the awake state: a potential substrate for memory consolidation and retrieval. Nat Neurosci 14:147–153. 10.1038/nn.2732

- Chelaru MI, Hansen BJ, Tandon N, Conner CR, Szukalski S, Slater JD, Kalamangalam GP, Dragoi V (2016) Reactivation of visual-evoked activity in human cortical networks. J Neurophysiol 115:3090–3100. 10.1152/jn.00724.2015

- Cooke SF, Komorowski RW, Kaplan ES, Gavornik JP, Bear MF (2015) Visual recognition memory, manifested as long-term habituation, requires synaptic plasticity in V1. Nat Neurosci 18:262–271. 10.1038/nn.3920

- Craig M, Wolbers T, Harris MA, Hauff P, Della Sala S, Dewar M (2016) Comparable rest-related promotion of spatial memory consolidation in younger and older adults. Neurobiol Aging 48:143–152. 10.1016/j.neurobiolaging.2016.08.007

- Davidson TJ, Kloosterman F, Wilson MA (2009) Hippocampal replay of extended experience. Neuron 63:497–507. 10.1016/j.neuron.2009.07.027

- Deuker L, Olligs J, Fell J, Kranz TA, Mormann F, Montag C, Reuter M, Elger CE, Axmacher N (2013) Memory consolidation by replay of stimulus-specific neural activity. J Neurosci 33:19373–19383. 10.1523/JNEUROSCI.0414-13.2013

- de Voogd LD, Fernández G, Hermans EJ (2016) Awake reactivation of emotional memory traces through hippocampal-neocortical interactions. Neuroimage 134:563–572. 10.1016/j.neuroimage.2016.04.026

- Dewar M, Alber J, Cowan N, Della Sala S (2014) Boosting long-term memory via wakeful rest: intentional rehearsal is not necessary, consolidation is sufficient. PLoS One 9:e109542. 10.1371/journal.pone.0109542

- Diba K, Buzsáki G (2007) Forward and reverse hippocampal place-cell sequences during ripples. Nat Neurosci 10:1241–1242. 10.1038/nn1961

- Diekelmann S, Born J (2010) The memory function of sleep. Nat Rev Neurosci 11:114–126. 10.1038/nrn2762

- Ekman M, Kok P, de Lange FP (2017) Time-compressed preplay of anticipated events in human primary visual cortex. Nat Commun 8:15276. 10.1038/ncomms15276

- Ester EF, Sprague TC, Serences JT (2015) Parietal and frontal cortex encode stimulus-specific mnemonic representations during visual working memory. Neuron 87:893–905. 10.1016/j.neuron.2015.07.013

- Euston DR, Tatsuno M, McNaughton BL (2007) Fast-forward playback of recent memory sequences in prefrontal cortex during sleep. Science 318:1147–1150. 10.1126/science.1148979

- Fahle M. (2004) Perceptual learning: a case for early selection. J Vis 4:879–890. 10.1167/4.8.879

- Foster DJ. (2017) Replay comes of age. Annu Rev Neurosci 40:581–602. 10.1146/annurev-neuro-072116-031538

- Foster DJ, Wilson MA (2006) Reverse replay of behavioural sequences in hippocampal place cells during the awake state. Nature 440:680–683. 10.1038/nature04587

- Gavornik JP, Bear MF (2014) Learned spatiotemporal sequence recognition and prediction in primary visual cortex. Nat Neurosci 17:732–737. 10.1038/nn.3683

- Guidotti R, Del Gratta C, Baldassarre A, Romani GL, Corbetta M (2015) Visual learning induces changes in resting-state fMRI multivariate pattern of information. J Neurosci 35:9786–9798. 10.1523/JNEUROSCI.3920-14.2015

- Han F, Caporale N, Dan Y (2008) Reverberation of recent visual experience in spontaneous cortical waves. Neuron 60:321–327. 10.1016/j.neuron.2008.08.026

- Harrison SA, Tong F (2009) Decoding reveals the contents of visual working memory in early visual areas. Nature 458:632–635. 10.1038/nature07832

- Huth AG, de Heer WA, Griffiths TL, Theunissen FE, Gallant JL (2016) Natural speech reveals the semantic maps that tile human cerebral cortex. Nature 532:453–458. 10.1038/nature17637

- Ji D, Wilson MA (2007) Coordinated memory replay in the visual cortex and hippocampus during sleep. Nat Neurosci 10:100–107. 10.1038/nn1825

- Karanian JM, Slotnick SD (2018) Confident false memories for spatial location are mediated by V1. Cogn Neurosci. Advance online publication. Retrieved Jun. 27, 2018. doi: 10.1080/17588928.2018.1488244.

- Lewis CM, Baldassarre A, Committeri G, Romani GL, Corbetta M (2009) Learning sculpts the spontaneous activity of the resting human brain. Proc Natl Acad Sci U S A 106:17558–17563. 10.1073/pnas.0902455106

- McGaugh JL. (2000) Memory: a century of consolidation. Science 287:248–251. 10.1126/science.287.5451.248

- McGovern DP, Webb BS, Peirce JW (2012) Transfer of perceptual learning between different visual tasks. J Vis 12:4. 10.1167/12.11.4

- Pfeiffer BE. (2017) The content of hippocampal “replay”. Hippocampus. Advance online publication. Retrieved Dec. 20, 2017. doi: 10.1002/hipo.22824.

- Power JD, Barnes KA, Snyder AZ, Schlaggar BL, Petersen SE (2012) Spurious but systematic correlations in functional connectivity MRI networks arise from subject motion. Neuroimage 59:2142–2154. 10.1016/j.neuroimage.2011.10.018

- Reuter M, Schmansky NJ, Rosas HD, Fischl B (2012) Within-subject template estimation for unbiased longitudinal image analysis. Neuroimage 61:1402–1418. 10.1016/j.neuroimage.2012.02.084

- Rosenthal CR, Andrews SK, Antoniades CA, Kennard C, Soto D (2016) Learning and recognition of a non-conscious sequence of events in human primary visual cortex. Curr Biol 26:834–841. 10.1016/j.cub.2016.01.040

- Rosenthal CR, Mallik I, Caballero-Gaudes C, Sereno MI, Soto D (2018) Learning of goal-relevant and -irrelevant complex visual sequences in human V1. Neuroimage 179:215–224. 10.1016/j.neuroimage.2018.06.023

- Sasaki Y, Nanez JE, Watanabe T (2010) Advances in visual perceptual learning and plasticity. Nat Rev Neurosci 11:53–60. 10.1038/nrn2737

- Schlichting ML, Preston AR (2014) Memory reactivation during rest supports upcoming learning of related content. Proc Natl Acad Sci U S A 111:15845–15850. 10.1073/pnas.1404396111

- Sereno MI, Dale AM, Reppas JB, Kwong KK, Belliveau JW, Brady TJ, Rosen BR, Tootell RB (1995) Borders of multiple visual areas in humans revealed by functional magnetic resonance imaging. Science 268:889–893. 10.1126/science.7754376

- Shibata K, Sasaki Y, Kawato M, Watanabe T (2016) Neuroimaging evidence for 2 types of plasticity in association with visual perceptual learning. Cereb Cortex 26:3681–3689. 10.1093/cercor/bhw176

- Shibata K, Sasaki Y, Bang JW, Walsh EG, Machizawa MG, Tamaki M, Chang LH, Watanabe T (2017) Overlearning hyperstabilizes a skill by rapidly making neurochemical processing inhibitory-dominant. Nat Neurosci 20:470–475. 10.1038/nn.4490

- Shuler MG, Bear MF (2006) Reward timing in the primary visual cortex. Science 311:1606–1609. 10.1126/science.1123513

- Staresina BP, Alink A, Kriegeskorte N, Henson RN (2013) Awake reactivation predicts memory in humans. Proc Natl Acad Sci U S A 110:21159–21164. 10.1073/pnas.1311989110

- St-Laurent M, Abdi H, Bondad A, Buchsbaum BR (2014) Memory reactivation in healthy aging: evidence of stimulus-specific dedifferentiation. J Neurosci 34:4175–4186. 10.1523/JNEUROSCI.3054-13.2014

- St-Laurent M, Abdi H, Buchsbaum BR (2015) Distributed patterns of reactivation predict vividness of recollection. J Cogn Neurosci 27:2000–2018. 10.1162/jocn_a_00839

- Tambini A, Davachi L (2013) Persistence of hippocampal multivoxel patterns into postencoding rest is related to memory. Proc Natl Acad Sci U S A 110:19591–19596. 10.1073/pnas.1308499110

- Tambini A, Berners-Lee A, Davachi L (2017) Brief targeted memory reactivation during the awake state enhances memory stability and benefits the weakest memories. Sci Rep 7:15325. 10.1038/s41598-017-15608-x

- Tompary A, Duncan K, Davachi L (2016) High-resolution investigation of memory-specific reinstatement in the hippocampus and perirhinal cortex. Hippocampus 26:995–1007. 10.1002/hipo.22582

- Tootell RB, Mendola JD, Hadjikhani NK, Ledden PJ, Liu AK, Reppas JB, Sereno MI, Dale AM (1997) Functional analysis of V3A and related areas in human visual cortex. J Neurosci 17:7060–7078. 10.1523/JNEUROSCI.17-18-07060.1997

- Tootell RB, Hadjikhani N, Hall EK, Marrett S, Vanduffel W, Vaughan JT, Dale AM (1998) The retinotopy of visual spatial attention. Neuron 21:1409–1422. 10.1016/S0896-6273(00)80659-5

- Varoquaux G. (2018) Cross-validation failure: small sample sizes lead to large error bars. Neuroimage 180:68–77. 10.1016/j.neuroimage.2017.06.061

- Varoquaux G, Raamana PR, Engemann DA, Hoyos-Idrobo A, Schwartz Y, Thirion B (2017) Assessing and tuning brain decoders: cross-validation, caveats, and guidelines. Neuroimage 145:166–179. 10.1016/j.neuroimage.2016.10.038

- Wang R, Zhang JY, Klein SA, Levi DM, Yu C (2014) Vernier perceptual learning transfers to completely untrained retinal locations after double training: a “piggybacking” effect. J Vis 14:12. 10.1167/14.13.12

- Xiao LQ, Zhang JY, Wang R, Klein SA, Levi DM, Yu C (2008) Complete transfer of perceptual learning across retinal locations enabled by double training. Curr Biol 18:1922–1926. 10.1016/j.cub.2008.10.030

- Xu S, Jiang W, Poo MM, Dan Y (2012) Activity recall in a visual cortical ensemble. Nat Neurosci 15:449–455, S1–S2. 10.1038/nn.3036

- Yacoub E, Harel N, Ugurbil K (2008) High-field fMRI unveils orientation columns in humans. Proc Natl Acad Sci U S A 105:10607–10612. 10.1073/pnas.0804110105

- Yamashita O, Sato MA, Yoshioka T, Tong F, Kamitani Y (2008) Sparse estimation automatically selects voxels relevant for the decoding of fMRI activity patterns. Neuroimage 42:1414–1429. 10.1016/j.neuroimage.2008.05.050

- Yan CG, Cheung B, Kelly C, Colcombe S, Craddock RC, Di Martino A, Li Q, Zuo XN, Castellanos FX, Milham MP (2013) A comprehensive assessment of regional variation in the impact of head micromovements on functional connectomics. Neuroimage 76:183–201. 10.1016/j.neuroimage.2013.03.004

- Yao H, Shi L, Han F, Gao H, Dan Y (2007) Rapid learning in cortical coding of visual scenes. Nat Neurosci 10:772–778. 10.1038/nn1895

- Yotsumoto Y, Sasaki Y, Chan P, Vasios CE, Bonmassar G, Ito N, Náñez JE Sr, Shimojo S, Watanabe T (2009) Location-specific cortical activation changes during sleep after training for perceptual learning. Curr Biol 19:1278–1282. 10.1016/j.cub.2009.06.011

- Zhang JY, Zhang GL, Xiao LQ, Klein SA, Levi DM, Yu C (2010) Rule-based learning explains visual perceptual learning and its specificity and transfer. J Neurosci 30:12323–12328. 10.1523/JNEUROSCI.0704-10.2010

Supplementary Materials

Figure 1-1. Threshold S/N (mean ± s.e.m.) in the pre- and post-training tests for the trained (white) and untrained (gray) orientations. To confirm that subjects learned between the pre- and post-training test stages, we performed a two-way repeated measures ANOVA on the threshold S/N ratio with factors of orientation (trained vs. untrained) and time (pre- vs. post-training). There was a significant main effect of time (F(1,11)=34.351, P<0.001), demonstrating that training improved performance. There was no interaction between time and orientation (F(1,11)=0.009, P=0.926), suggesting that learning transferred between the trained and untrained stimuli, as observed in a number of previous studies (Xiao et al., 2008; Zhang et al., 2010; McGovern et al., 2012; Wang et al., 2014). Dots represent individual data.
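
For a 2 × 2 within-subject design such as the one described above, each effect in the repeated measures ANOVA has one numerator degree of freedom, and its F statistic equals the square of a one-sample t statistic computed on the corresponding per-subject contrast. The sketch below illustrates that relationship using only the Python standard library. The function names and the four-subject threshold values are hypothetical and chosen for illustration; they are not the study's data or analysis code.

```python
import math
import statistics

def one_sample_t(values, mu=0.0):
    """Return (t, df) for a one-sample t-test of mean(values) against mu."""
    n = len(values)
    sem = statistics.stdev(values) / math.sqrt(n)  # stdev uses the n - 1 denominator
    return (statistics.mean(values) - mu) / sem, n - 1

def rm_anova_2x2(pre_tr, post_tr, pre_un, post_un):
    """F statistics for a 2 x 2 within-subject design (time x orientation).

    Each argument holds one value per subject. With two levels per factor,
    every effect reduces to a per-subject contrast, and F(1, n - 1) = t ** 2.
    """
    cells = list(zip(pre_tr, post_tr, pre_un, post_un))
    contrasts = {
        # pre minus post, averaged over orientation
        "time": [(a + c) / 2 - (b + d) / 2 for a, b, c, d in cells],
        # trained minus untrained, averaged over time
        "orientation": [(a + b) / 2 - (c + d) / 2 for a, b, c, d in cells],
        # difference of the pre-post differences
        "time x orientation": [(a - b) - (c - d) for a, b, c, d in cells],
    }
    results = {}
    for name, values in contrasts.items():
        t, df = one_sample_t(values)
        results[name] = (t ** 2, df)
    return results

# Hypothetical thresholds for four subjects (illustration only, not study data).
pre_trained = [4.1, 3.8, 4.5, 4.0]
post_trained = [2.9, 3.1, 3.0, 2.7]
pre_untrained = [4.0, 3.9, 4.4, 4.2]
post_untrained = [3.0, 2.8, 3.3, 2.9]

for effect, (f_value, df) in rm_anova_2x2(
    pre_trained, post_trained, pre_untrained, post_untrained
).items():
    print(f"{effect}: F(1,{df}) = {f_value:.3f}")
```

With 12 subjects, as implied by the reported degrees of freedom, each contrast would yield F(1,11). P values are omitted here because they require the F distribution, which the standard library does not provide.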

Figure 2-1. Likelihood of classification as trained orientation in V1. The likelihood of classification as trained orientation is a continuous measure (rather than a binary decision) of the likelihood that a particular brain signal corresponds to the trained or untrained orientation. A one-way repeated measures ANOVA on the decoder's likelihood of classification as the trained orientation showed a marginally significant main effect of time (pre vs. post1 vs. post2; F(2,22)=3.321, P=0.055). The likelihood of classification as the trained orientation increased immediately after (post1) compared to before (pre) the visual training (t(11)=2.545, P=0.027, uncorrected paired-sample t-test). In addition, the likelihood of classification as the trained orientation was significantly higher than chance immediately after training (post1: t(11)=3.540, P=0.005, one-sample t-test) and 5 minutes after training (post2: t(11)=2.370, P=0.037, one-sample t-test) but not before training (pre: t(11)=0.455, P=0.658, one-sample t-test). Consistent with the result from the probability of classification, this result indicates that post-training spontaneous activity in V1 appears more similar to the trained orientation. Individual data are plotted over the group data (mean ± s.e.m.).

Figure 2-2. Accuracy of the decoder under 5-fold and 10-fold cross-validation in V1, V2, V3, V3A, and V4v. (A) The decoder was tested with 5-fold cross-validation using brain data obtained from the decoder construction scan. The accuracy of the decoder (mean ± s.e.m.) was above chance level (0.5) for all regions of interest (all P values < 0.001 before correction, one-sample t-tests; V1: t(11)=42.566, P<0.001; V2: t(11)=55.076, P<0.001; V3: t(11)=48.986, P<0.001; V3A: t(11)=34.767, P<0.001; V4v: t(11)=17.153, P<0.001). (B) The decoder was tested with 10-fold cross-validation using brain data obtained from the decoder construction scan. The accuracy of the decoder (mean ± s.e.m.) was above chance level (0.5) for all regions of interest (all P values < 0.005 before correction, one-sample t-tests; V1: t(11)=10.856, P<0.001; V2: t(11)=11.746, P<0.001; V3: t(11)=8.345, P<0.001; V3A: t(11)=3.851, P=0.003; V4v: t(11)=4.241, P=0.001).
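
The k-fold procedure referred to above can be sketched as follows: the samples are split into k folds, a classifier is trained on k − 1 folds and scored on the held-out fold, and the fold accuracies are averaged. This is a minimal illustration using only the Python standard library. The nearest-centroid classifier is a simple stand-in and is not claimed to be the decoder used in the study, and the synthetic two-class patterns, function names, and parameter values are assumptions made for the example.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Shuffle sample indices and split them into k nearly equal folds."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def centroid(rows):
    """Feature-wise mean of a list of equal-length vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]

def sq_dist(u, v):
    """Squared Euclidean distance between two vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

def cross_validated_accuracy(X, y, k):
    """Mean held-out accuracy of a nearest-centroid classifier over k folds.

    Assumes every class is present in each training split.
    """
    fold_acc = []
    for test_idx in k_fold_indices(len(X), k):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(X)) if i not in held_out]
        # Fit: one centroid per class, computed from training samples only.
        cents = {
            label: centroid([X[i] for i in train_idx if y[i] == label])
            for label in set(y)
        }
        # Score: assign each held-out sample to its nearest class centroid.
        correct = sum(
            min(cents, key=lambda lab: sq_dist(X[i], cents[lab])) == y[i]
            for i in test_idx
        )
        fold_acc.append(correct / len(test_idx))
    return sum(fold_acc) / k

# Synthetic "voxel patterns": 20 samples per class, 10 features each
# (hypothetical data, illustration only).
rng = random.Random(1)
X, y = [], []
for label, offset in ((0, -1.0), (1, 1.0)):
    for _ in range(20):
        X.append([offset + rng.gauss(0, 1) for _ in range(10)])
        y.append(label)

for k in (5, 10):
    print(f"{k}-fold accuracy: {cross_validated_accuracy(X, y, k):.3f}")
```

In a group analysis like the one in the caption, one such accuracy per subject and region of interest would then be compared against the chance level of 0.5 with a one-sample t-test.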

Figure 3-1. Probability of classifying spontaneous brain activity as trained orientation in V2, V3, V3A, and V4v. The probability for the trained orientation did not increase immediately after training in V2, V3, V3A, or V4v. Individual data are plotted over the group data (mean ± s.e.m.) for each region of interest.

Data Availability Statement

The data are freely available online at https://osf.io/9du8v/.