Abstract

Economic theory suggests that competition and information are complementary tools for promoting healthcare quality. The existing empirical literature has documented this effect only in the context of competition among existing firms. Extending this literature, we examine competition driven by the entry of new firms into the home health care industry. In particular, we use the Certificate of Need (CON) law as a proxy for the entry of firms to avoid potential endogeneity of entry. We find that home health agencies in non-CON states improved quality under public reporting significantly more than agencies in CON states. Since home health care is a labor-intensive, capital-light industry, the state CON law is a major barrier for new firms to enter. Our findings suggest that policymakers may jointly consider information disclosure and entry regulation to achieve better quality in home health care.

JEL: I1, L1, L8

Keywords: Information Disclosure, Certificate of Need, Competition, Home Health Care Quality

1. Introduction

Public reporting of provider quality has become an increasingly popular policy to promote healthcare quality, and economists are eager to find ways to make public reporting more effective and maximize the resulting quality improvement.1 Economic theory suggests that competition and information are complementary tools for promoting healthcare quality (Dranove & Satterthwaite 1992; Gravelle & Sivey 2010).2 Recent empirical work supports this prediction, showing that quality improvement after quality disclosure is more pronounced in more competitive markets for coronary artery bypass graft surgeries (Chou et al. 2014) and nursing homes (Grabowski & Town 2011; Zhao 2016).

One common feature of the existing empirical studies, as cited above, is that they all focus on the cross-sectional variation in competition among existing firms. In this paper, we extend this literature and investigate another source of competition from the entry of new firms. Specifically, we study how providers who face the threat of new entrants differ in their quality improvement after public reporting from those who do not. Providers facing competition from new entrants are likely to lose market share to the new entrants if their reported quality scores are low. We thus expect that providers in markets with high rates of entry are more likely to improve quality after public reporting, compared with those in markets with low rates of entry.

A key challenge in identifying the effect of entry on quality is that entry may itself be determined by quality (reverse causality) and by other unobservable factors related to quality. To address this endogeneity concern, we use the status of state-level Certificate of Need (CON) regulation as a proxy for entry in the state. Since CON is determined by the state government and is less endogenous to the actual quality level, it supports a cleaner identification. CON was originally designed to control the overinvestment of capital-intensive technology in healthcare markets, with the aim of reducing duplicative costs and protecting quality (Conover & Sloan 1998; Salkever 2000). The federal mandate was enacted in 1974 and repealed in 1987. As of 2018, 18 states retain CON for home health care, and all of these states have had the regulation since the 1970s. Despite its prevalence, CON creates an entry barrier for firms and has been historically controversial due to its anti-competitive nature (Grabowski et al. 2003; Ho et al. 2009; Cutler et al. 2010; Epstein 2013; Horwitz & Polsky 2015).

Assuming that CON can effectively restrict entry in states with CON regulations, our main hypothesis is that quality improvement under public reporting is more pronounced in non-CON states than in CON states.3 The chain of logic is as follows. As credible rating scores on provider quality become available online, patients can easily compare the quality of care across providers. Such a comparison allows consumers to switch to high-quality providers, which in turn increases firms’ returns on quality improvement. This effect could be limited if CON restricts the number of available providers and the extent to which consumers switch to high-quality providers.

We test our main hypothesis in the home health industry, whose unique feature allows us to justify the key assumption about CON’s entry restriction. Home health care covers a wide range of post-acute care, such as skilled nursing care, physical therapy, home health aide, and medical social work services. It is delivered in patients’ homes and is considered a close substitute and efficient alternative to post-acute care provided in hospitals and skilled nursing facilities (Kim & Norton 2015). We focus on the home health care industry to study the interactive effect of CON and information disclosure for the following two reasons.

First, home health is a labor-intensive industry that involves minimal capital investment. Thus, home health CON has served exclusively as an entry barrier to prevent new agencies from entering the market, rather than existing agencies’ acquisition of costly equipment or facilities. The entry restriction imposed by CON was salient during the entry wave in the early 2000s. In 2000, Medicare payments for home health care changed from a restrictive interim system to a prospective payment system, which substantially increased the payment margin and made the industry profitable. As a result, many agencies entered the market.4 During this wave of entry, existing agencies in CON states faced a weaker threat of entry because new agencies were rarely approved in these states (Polsky et al. 2014). This sharp difference in the entry trend between CON and non-CON states allows us to use the relatively exogenous regulation as a proxy for the level of new entry and the competitive pressure providers face in the state.

Second, Medicare is the major revenue source for home health care (National Center for Health Statistics, 2007), and it does not require a copayment or deductible for home health services. Therefore, provider competition over price is limited.5 This fact allows us to focus on providers’ incentives to improve quality in response to information disclosure with little concern about contemporaneous price changes.6

A further concern when studying the effect of CON on quality is the endogeneity of CON. In an ideal world where CON is randomly assigned to states, a simple statewide comparison could identify the effect. However, for our analysis of quality changes, statewide analysis can be biased because the decision to retain CON could be determined by each state’s quality trend or unobserved factors related to quality such as medical supply, practice patterns, and healthcare spending.7 We address this issue by adopting a border-analysis strategy. We focus on Hospital Referral Regions (HRRs) that cross state boundaries where entry regulation varies at the state border. Within an HRR, factors related to quality should be similar. Therefore, state CON status is exogenous to unobservable factors that affect quality.

Comparing the differential quality trend in CON versus non-CON states within each HRR before and after the implementation of Medicare Home Health Compare (HHC), we find that both reported and unreported outcome measures improved, and that the improvement is more pronounced in non-CON states than in CON states. We observe no patient selection or deterioration of unreported quality. Furthermore, we find that ex-ante market concentration has relatively little influence on the effects of quality reporting in home health markets, in contrast with the greater effect observed in nursing home markets (Zinn 1994). Overall, our results suggest that entry regulation plays a more important role than ex-ante market concentration in explaining the heterogeneous effects of quality reporting in the home health industry. Competition and information are complementary tools that create incentives for providers to improve quality.

2. Background on Medicare Home Health Compare

Medicare home health care represents an increasingly important sector of the U.S. healthcare system, with the highest annual growth rates. To ensure quality, starting in May 2003, CMS implemented the HHC in eight pilot states (i.e., FL, MA, MO, NM, OR, SC, WI, and WV) to disclose home health quality online. The national rollout of the HHC occurred in November 2003. The HHC reports a subset of OASIS quality metrics for care outcomes, including risk-adjusted functional improvements and the incidence of adverse events. CMS requires all Medicare-certified agencies to report scores unless the quality metric has fewer than 20 cases over the past 12 months. Table 1 lists the seven reported quality measures in the initial years of HHC.

Table 1:

Reported Quality measures in Home Health Compare

| Category | Measure |
|---|---|
| Functional Improvement Measures | Bathing: How often patients get better at bathing |
| | Ambulation: How often patients get better at walking or moving around |
| | Transferring to bed: How often patients get better at getting in and out of bed |
| | Managing oral medication: How often patients get better at taking their medicines correctly (by mouth) |
| | Less pain interfering with activity: How often patients have less pain when moving around |
| Utilization Measures | How often patients are admitted to an acute care hospital |
| | How often patients visit the Emergency Department without hospitalization |

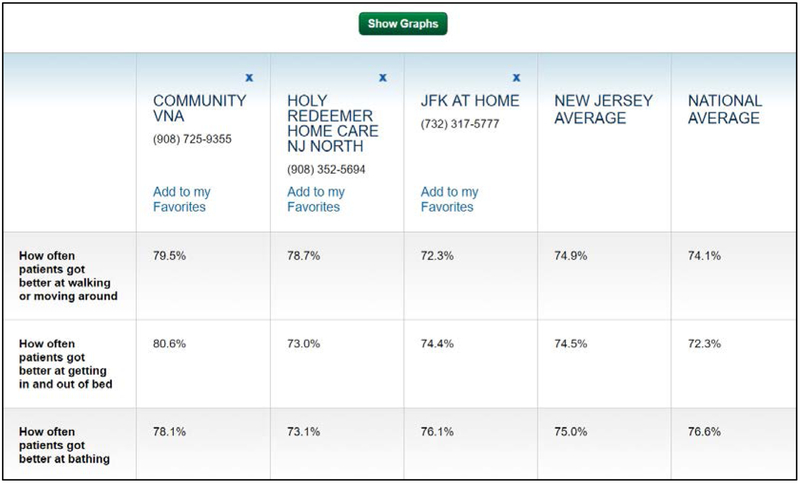

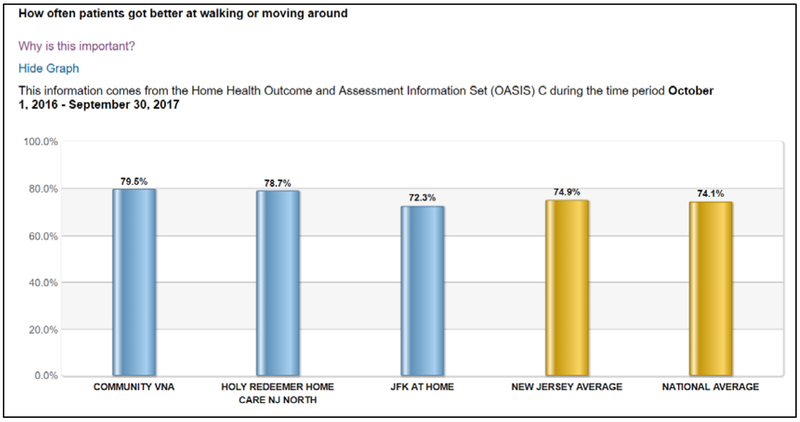

HHC is released through the Medicare.gov website. After visiting the website, consumers are asked to enter their zip code, city, or state, and they have the option to specify the names of the home health agencies they are interested in. A webpage then displays a comparison of quality among the home health agencies in the designated area for each of the HHC quality metrics. For each quality metric, the webpage shows each agency’s risk-adjusted outcome score, as well as the state and national average scores. The comparison is presented in two formats: a table with numbers and a bar graph. Appendix Figure A1 shows a sample of the HHC report. The quality measures represent the 3-month lagged performance over the prior 12 months and are updated quarterly.

To promote public awareness of the HHC program, CMS announced the initiative and posted advertisements about HHC in over 70 major newspapers nationwide. Advertisements in each state use a sample of the HHC report with actual quality information of agencies in the state; they also show the online HHC website so consumers could access the reports instantly.

Did HHC have an impact on patient choice? Jung et al. (2015) showed that more patients used agencies with higher scores after HHC. While Jung et al. (2015) revealed the demand side response to HHC, how providers respond to HHC remains an unanswered question. In this paper, we fill this gap and examine whether HHC promoted quality and how quality improvement differed by state CON status.

3. Conceptual Framework

How does competition interact with information to affect provider quality? Economic theory suggests that increasing competition or improving information alone does not necessarily lead to better quality, and it is the combined effect of both competition and information that drives up quality.

3.1. The overall impact of HHC on home health care quality

First, what is the overall impact of information disclosure on quality? The theory suggests that, when holding the market structure and the price information constant, additional information on quality should raise the equilibrium quality in a monopolistically competitive market (Dranove & Satterthwaite 1992) and a duopoly market with asymmetric production costs (Gravelle & Sivey 2010). The intuition is that quality disclosure increases consumers’ sensitivity to quality and thus firms’ returns on quality, which prompts firms to raise quality (Werner & Asch 2005; Dranove & Jin 2010).8 The empirical literature, however, provides somewhat ambiguous evidence on the supply-side response to information disclosure, showing that information disclosure may improve reported quality, but may also lead to patient selection and the reallocation of resources toward improving reported quality dimensions and away from the unreported dimensions (Shahian et al. 2001; Dranove et al. 2003; Werner et al. 2005; Lu 2012).

Based on the above framework, we propose the following testable hypothesis:

H1: Overall, HHC led to higher home health care quality along the reported dimensions. The effect on unreported quality is unclear.

3.2. The complementarity between competition and information

The theoretical literature on the interaction between competition and information is relatively thin. Katz (2013) develops a theoretical model and shows that increased competition alone may lead to worse quality when quality information is imperfectly observed, and this is the case even when prices are fixed.9 Gravelle & Sivey (2010) suggest that information disclosure about provider quality can improve the equilibrium level of quality only with a sufficient level of quality competition. Recent empirical studies have confirmed these predictions (Grabowski & Town 2011; Chou et al. 2014; Zhao 2016).

Following this literature, we explore the complementarity between competition and information where competition is driven by new entry. Assuming that CON is binding (we test and confirm the validity of this assumption in Section 4.3) and thus restricts the number of entrants in CON states during the entry wave driven by the Medicare payment policy change,10 we form the following hypothesis.

H2: Quality improvement after the implementation of HHC was more pronounced along the reported dimensions in non-CON states than in CON states. The effect on unreported quality is unclear.

4. Empirical Framework

4.1. The overall impact of the HHC on quality

Since the HHC was adopted as a pilot program in eight states in May 2003, we expect that the response to the national rollout in November 2003 is stronger in nonpilot states than in pilot states. We therefore employ the following difference-in-differences estimation to test Hypothesis 1.

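$$
Y_{ijcst} = \alpha + \beta \,(\mathit{NonPilot}_s \times \mathit{Post}_t) + X_{it}\gamma + Z_{jt}\delta + W_{ct}\theta + \mathit{Agency}_j + \mathit{Quarter}_t + \varepsilon_{ijcst} \tag{1}
$$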

For patient-admission $i$ in county $c$ and state $s$, admitted during period $t$ and receiving care from home health agency $j$, $Y_{ijcst}$ refers to the reported and unreported outcome measures. $\mathit{NonPilot}_s$ is an indicator for nonpilot states. $\mathit{Post}_t$ takes the value of 1 for months after November 2003. $X_{it}$, $Z_{jt}$, and $W_{ct}$ are, respectively, a set of patient risk factors, the agency’s quarterly discharges, and a set of county characteristics that vary over time. We also control for agency fixed effects, $\mathit{Agency}_j$, and year-month fixed effects, $\mathit{Quarter}_t$, to absorb time-invariant unobservable factors at the agency level and month-specific shocks. The coefficient of interest is $\beta$, which indicates the differential change in quality between pilot and nonpilot states.
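To make the interpretation of β concrete, the following is a minimal sketch of a two-group, two-period difference-in-differences computed from cell means. It is a simplification and not the estimation used in this paper: it omits the risk adjusters, county controls, and fixed effects in Equation (1). The function name `did_estimate` and the toy outcome values are hypothetical.

```python
from statistics import mean

def did_estimate(rows):
    """Return the 2x2 difference-in-differences from (treated, post, outcome) rows.

    treated = 1 for nonpilot states, post = 1 after the national rollout.
    """
    cells = {(g, p): [] for g in (0, 1) for p in (0, 1)}
    for treated, post, y in rows:
        cells[(treated, post)].append(y)
    m = {key: mean(values) for key, values in cells.items()}
    # (change in the treated group) minus (change in the comparison group)
    return (m[(1, 1)] - m[(1, 0)]) - (m[(0, 1)] - m[(0, 0)])

# Hypothetical toy data: (nonpilot, post, functional improvement rate)
rows = [
    (0, 0, 0.50), (0, 0, 0.52),
    (0, 1, 0.53), (0, 1, 0.55),
    (1, 0, 0.48), (1, 0, 0.50),
    (1, 1, 0.54), (1, 1, 0.56),
]
beta_hat = did_estimate(rows)
print(round(beta_hat, 4))
```

In this toy example the nonpilot group improves by 0.06 and the pilot group by 0.03, so the sketch attributes the 0.03 difference to the interaction term.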

4.2. Main analysis: entry regulation and quality improvement after HHC

Our empirical strategy to examine Hypothesis 2 relies on a border-analysis that compares quality improvement between agencies in CON and non-CON states within an HRR. To the extent that unobserved factors are similar within each HRR, the variation in policy across state boundaries should allow us to estimate the impact of CON on the extent to which information promotes quality.

To implement this strategy, we create a subsample of OASIS records for HHAs in HRRs that cross state boundaries where entry regulation varies across states within each HRR. We then estimate the following regression:

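$$
Y_{ijcsrt} = \alpha + \beta \,(\mathit{Non\_CON}_s \times \mathit{Post}_t) + X_{it}\gamma + Z_{jt}\delta + W_{ct}\theta + \mathit{Agency}_j + \mathit{Quarter}_t + \varepsilon_{ijcsrt} \tag{2}
$$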

where $i$, $c$, $s$, $r$, $j$, and $t$ refer to patient-admission, county, state, HRR, agency, and year-quarter, respectively. The dependent variable $Y_{ijcsrt}$ represents reported and unreported quality measures, including both outcome and input measures. $\mathit{Non\_CON}_s$ is an indicator for states without CON regulations. $\mathit{Post}_t$ takes the value of 1 for post-HHC periods. $X_{it}$, $Z_{jt}$, $W_{ct}$, $\mathit{Agency}_j$, and $\mathit{Quarter}_t$ are the same as in Equation (1). The coefficient of interest is $\beta$, which tests whether quality improvement after HHC is greater in non-CON states. In our preferred specifications, we allow each HRR to have a different trajectory of quality change by controlling for HRR-specific quadratic time trends. Therefore, our identification comes from within-HRR comparisons between CON and non-CON states.

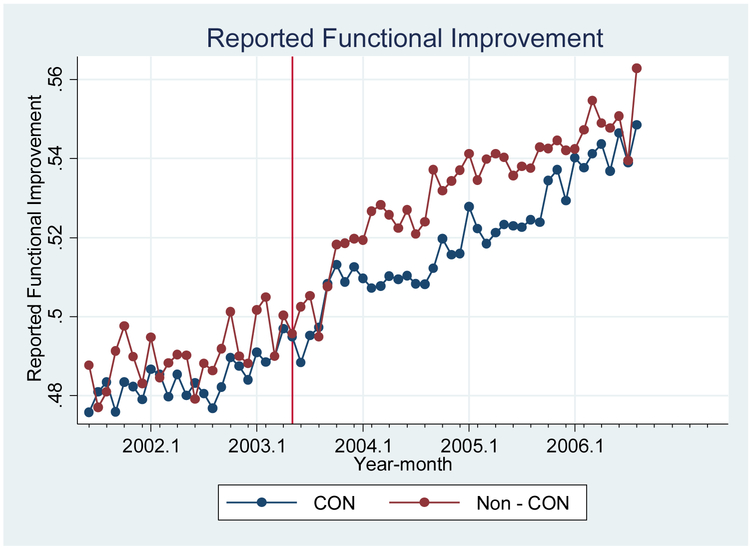

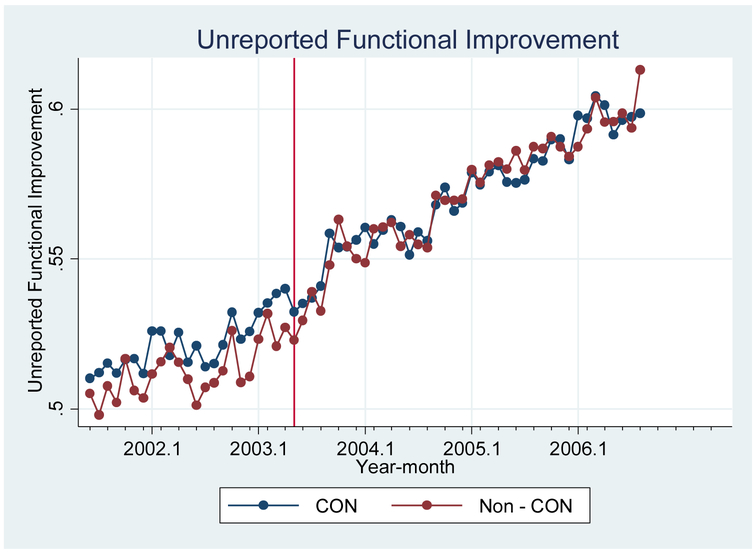

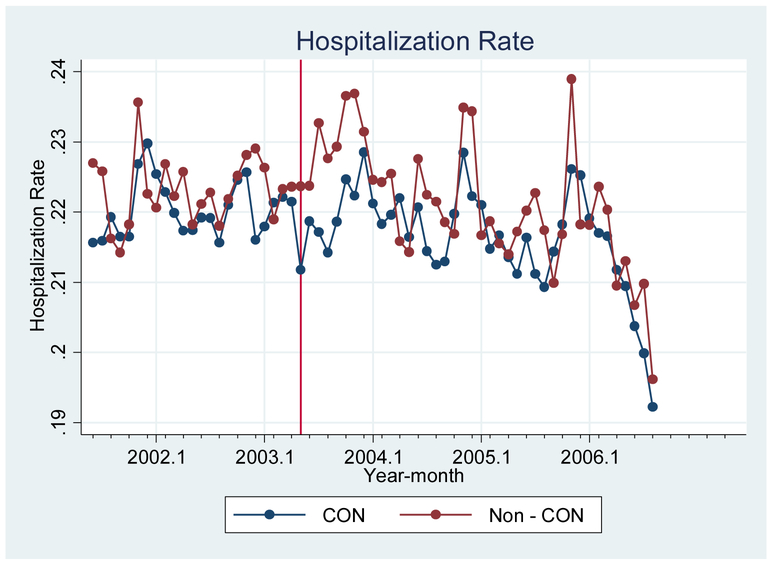

One identification concern is that the estimate of β might be driven by nonparallel pre-existing trends instead of the effect of HHC. To address this concern, we first plot the quality trends in the four outcome variables by CON status and find no obvious signs of nonparallel pre-existing trends (Figures A3–A6). Furthermore, we add an interaction term between non-CON status and a pre-trend variable Pret to the baseline regression to test for the existence of any nonparallel pre-existing trends:

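$$
Y_{ijcsrt} = \alpha + \beta_1 \,(\mathit{Non\_CON}_s \times \mathit{Post}_t) + \beta_2 \,(\mathit{Non\_CON}_s \times \mathit{Pre}_t) + X_{it}\gamma + Z_{jt}\delta + W_{ct}\theta + \mathit{Agency}_j + \mathit{Quarter}_t + \varepsilon_{ijcsrt} \tag{3}
$$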

$\mathit{Pre}_t$ in Equation (3) equals one for the three quarters preceding the release of HHC (i.e., March 2003 to November 2003). The coefficient $\beta_2$ tests whether there are any differential pre-HHC quality trends between CON and non-CON states.
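As an illustration of how the two period indicators could be coded from an admission date, here is a minimal sketch. The function name `period_indicators` is hypothetical, and the treatment of November 2003 itself as a post month is an assumption made only for this example; the paper's own data construction may differ.

```python
from datetime import date

ROLLOUT = date(2003, 11, 1)   # month of the national HHC rollout
PRE_START = date(2003, 3, 1)  # start of the pre-trend window named in the text

def period_indicators(admit):
    """Return (pre, post) dummies for an admission date.

    Assumption for this sketch: admissions in November 2003 count as post,
    so the pre window covers March 2003 through October 2003.
    """
    month = date(admit.year, admit.month, 1)
    post = int(month >= ROLLOUT)
    pre = int(PRE_START <= month < ROLLOUT)
    return pre, post

print(period_indicators(date(2002, 6, 15)))   # baseline period
print(period_indicators(date(2003, 5, 2)))    # pre-trend window
print(period_indicators(date(2004, 1, 10)))   # post period
```

With both dummies in the regression, the omitted baseline is the period before March 2003, so β2 is measured relative to that baseline.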

4.3. Justifying the key assumption – Is CON binding in CON states?

The key identification assumption of our border-analysis is that CON regulations are binding in CON states. This assumption is supported in Polsky et al. (2014), who document that new home health agencies are in practice rarely approved in CON states and, thus, potential entrants pose no threat to existing agencies in these states. In this section, we build on this observation and provide additional supporting evidence.

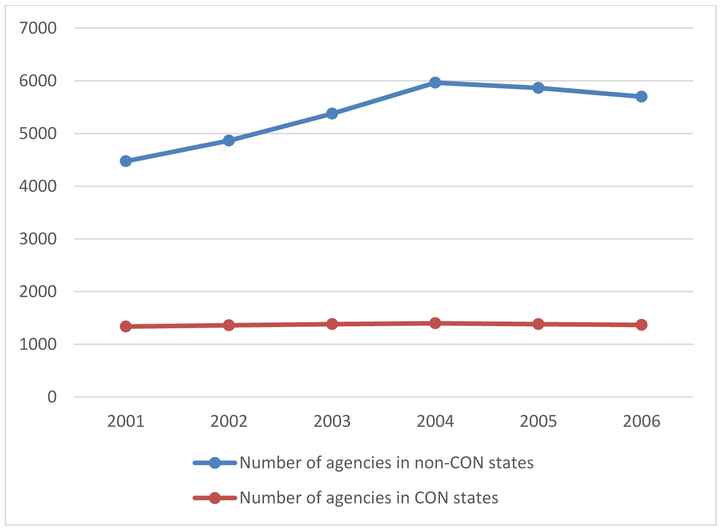

First, we plot in Figure 1 the total number of agencies in CON and non-CON states by year. The number of agencies in non-CON states increased by approximately 30% between 2001 and 2006, while that in CON states remained roughly constant. In 2006, CON states had about ten agencies per 1,000,000 Medicare beneficiaries, compared to twenty agencies in non-CON states.

Figure 1:

Total Number of agencies in CON states and non-CON states over time.

Next, we run an ordered-probit regression and examine how the number of agencies is associated with CON status, population, and population density at the county level. The results are shown in Appendix Table A1 and confirm that, on average, CON is associated with a smaller number of agencies after controlling for population and population density.

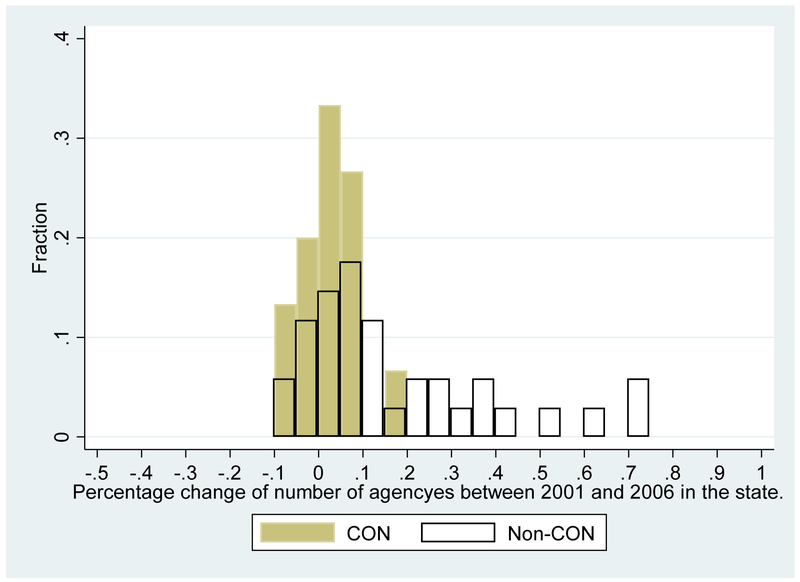

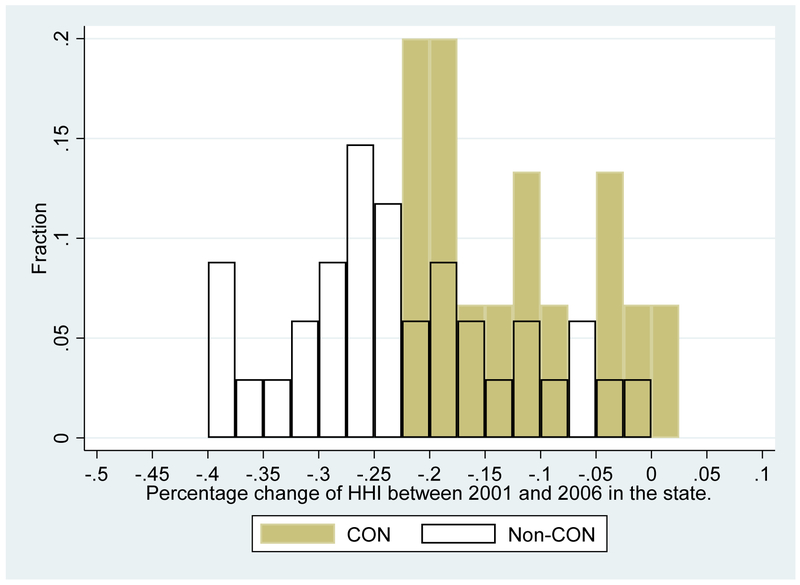

Furthermore, to test whether there is any outlier CON state where CON is not binding, we list in Appendix Table A2 the number of agencies in each state in 2001 and 2006, as well as the percentage change from 2001 to 2006. Figure A2.a plots the histogram of the percentage change for CON and non-CON states, respectively, suggesting that most CON states did not experience a dramatic increase in the number of agencies.11 The only CON state where the number of agencies increased by more than 10% is Georgia, suggesting that CON may not be binding in that state. We thus exclude HRRs that border Georgia from our main sample. (See Section 5.2 for the sample construction.)
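The screening rule described above can be sketched as follows. The helper names `pct_change` and `flag_nonbinding`, the placeholder state codes, and the agency counts are all hypothetical; they are not the values in Appendix Table A2.

```python
def pct_change(start, end):
    """Percentage change from start to end."""
    return 100.0 * (end - start) / start

def flag_nonbinding(counts, con_states, threshold=10.0):
    """Return CON states whose agency count grew by more than `threshold` percent.

    counts maps a state code to (agencies in 2001, agencies in 2006).
    """
    flagged = []
    for state, (n_2001, n_2006) in sorted(counts.items()):
        if state in con_states and pct_change(n_2001, n_2006) > threshold:
            flagged.append(state)
    return flagged

# Hypothetical counts for three placeholder states.
counts = {"AA": (100, 103), "BB": (80, 96), "CC": (50, 75)}
con_states = {"AA", "BB"}
print(flag_nonbinding(counts, con_states))
```

Only CON states are screened, so a fast-growing non-CON state such as the placeholder "CC" is never flagged.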

Finally, the assumption that CON is binding in CON states would be violated if agencies could cross the state boundary to provide care to patients. Polsky et al. (2014) suggest that this is unlikely to happen because nurses are normally restricted to practicing within the state in which they are licensed. We also test for potential “leakage” in our sample and find that only a small share of patients (less than 4%) receive care from agencies in a different state.
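A leakage check of this kind amounts to a simple share. The sketch below assumes each admission record carries the patient's state and the agency's state; the function name `leakage_share` and the placeholder records are hypothetical.

```python
def leakage_share(admissions):
    """Share of admissions served by an agency located in a different state.

    admissions is a list of (patient_state, agency_state) pairs.
    """
    if not admissions:
        raise ValueError("no admissions to evaluate")
    crossed = sum(1 for patient_state, agency_state in admissions
                  if patient_state != agency_state)
    return crossed / len(admissions)

# Hypothetical records with placeholder state codes.
admissions = [("S1", "S1"), ("S1", "S2"), ("S2", "S2"), ("S2", "S2")]
print(leakage_share(admissions))
```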

5. Data and Study Sample

5.1. Data Source

Our primary data source is the Outcome and Assessment Information Set (OASIS) from June 2001 to September 2006, covering the pre- and post-HHC periods. The data contain comprehensive information on patient risk factors, functional outcomes, and care utilization for all Medicare home health patients.12 The unit of observation is a home health admission, defined as home health care starting from the first day the patient receives care and ending on the day the patient is discharged from home health care.13 For each dimension of functional outcomes (e.g., bathing, ambulation, etc.), the OASIS evaluates the patient’s functional status at the beginning (i.e., admission) and the end (i.e., discharge) of care; it then reports whether the patient’s functional status has improved. For input measures, the OASIS reports the number of visits a patient received during the course of treatment.

We augment the OASIS data with Medicare enrollment data and Medicare claims data to add beneficiaries’ residence (i.e., zip code and county) and demographic characteristics. We also obtain each agency’s staffing level (i.e., the number of registered nurses and the number of home health aides) from the Provider of Services (POS) file. We include county characteristics from the Census data and the Area Health Resources Files (AHRF) to control for contemporaneous shocks that might affect quality over time.

5.2. Study Sample

To implement the cross-border strategy for empirical analysis and test Hypothesis 2, we follow Polsky et al. (2014) and construct our primary study sample using home health admissions in HRRs that cross state boundaries where state CON regulation varies at the boundary. Specifically, we focus on the 33 HRRs that cross state boundaries where one side is a CON state and the other side is a non-CON state, out of a total of 306 HRRs in the country.14 Appendix Table A3 shows the list of the 33 HRRs and their bordering states. As explained in Section 4.3, CON might not be binding in the state of Georgia. We, therefore, exclude the three HRRs bordering Georgia from the main sample (i.e., Dothan, Jacksonville, and Tallahassee). Next, since CMS launched public reporting programs on hospital and nursing home quality in November 2002, we exclude patients admitted in the year 2003 from our pre-HHC sample to mitigate the concern that agencies may have anticipated the release of HHC after these earlier programs. We also exclude patients in areas with a population density below the 5th percentile to rule out the potential impact of unobservable factors in highly rural/remote areas. The final main sample consists of 908,361 home health admissions, with 32% occurring in CON states.15
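The first two selection steps (keep HRRs that contain both a CON and a non-CON state, then drop HRRs touching an excluded state) can be sketched as below. The function name `select_border_hrrs`, the HRR labels, and the placeholder state codes are hypothetical and stand in for the actual HRR-to-state mapping in Appendix Table A3.

```python
def select_border_hrrs(hrr_states, con_states, excluded_states=frozenset()):
    """Return HRRs that span at least one CON state and one non-CON state.

    hrr_states maps an HRR label to the set of states it covers.
    HRRs covering any state in excluded_states are dropped.
    """
    selected = []
    for hrr, states in sorted(hrr_states.items()):
        has_con = any(s in con_states for s in states)
        has_non_con = any(s not in con_states for s in states)
        touches_excluded = any(s in excluded_states for s in states)
        if has_con and has_non_con and not touches_excluded:
            selected.append(hrr)
    return selected

# Hypothetical mapping with placeholder state codes.
hrr_states = {
    "hrr_a": {"S1", "S2"},  # CON and non-CON: kept
    "hrr_b": {"S1"},        # single state: dropped
    "hrr_c": {"S2", "S3"},  # both non-CON: dropped
    "hrr_d": {"S2", "S4"},  # crosses an excluded CON state: dropped
}
con_states = {"S1", "S4"}
print(select_border_hrrs(hrr_states, con_states, excluded_states={"S4"}))
```

The remaining filters in the text (dropping 2003 admissions from the pre-period and the lowest-density areas) operate on admissions rather than HRRs and are not shown here.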

Finally, to study the overall impact of HHC on home health care quality (Hypothesis 1), we use data from all HRRs but limit the sample to admissions in or after May 2003, when public reporting started in the eight pilot states. This analysis tests the overall HHC impact using nonpilot states as the experimental group. We define the time as “post” if it is after the national rollout in November 2003. There are 6,652,921 admissions in this second sample.

5.3. Quality Measures, Risk Adjusters, and Control Variables

Our dependent variables include four outcome measures and three input measures to capture different aspects of home health care quality, constructed from the OASIS and the POS data. We describe how we construct these measures in Appendix 2. Note that these quality measures are constructed from the raw measures reported in OASIS and POS and are not adjusted by patient risk factors. Therefore, to risk-adjust these measures, we follow the HHC risk-adjustment method as described in Murtaugh et al. (2007) and extract information from the OASIS data on the same categories of risk adjusters as used in the HHC.16 We also include a risk factor from the OASIS data that is not used by the HHC: patient race. This additional risk factor allows us to control for potential gaming behavior of the providers (e.g., patient selection) and evaluate the real quality change.17

The other control variables include the year-specific county characteristics, the number of discharges of the agency in the previous quarter, and a set of interactions between the agency’s for-profit status and the post dummy, as well as the interactions between hospital affiliation status and the post dummy, to capture potential influence of the agency type on the quality change. Table 2 reports summary statistics of the key variables.

Table 2:

Summary Statistics

| Variable | CON (n=286,257) Mean | CON (n=286,257) SD | Non-CON (n=622,104) Mean | Non-CON (n=622,104) SD |
|---|---|---|---|---|
| Panel 1: Patient Characteristics (Risk Adjusters) | | | | |
| Age | 79.8 | 7.66 | 80.3 | 7.57 |
| Male % | 33.3 | .47 | 33.7 | .47 |
| White % | 90.5 | 29.3 | 89.6 | 30.5 |
| Admitted from hospital discharges % | 58.1 | 49.3 | 57.1 | 49.5 |
| Alcohol dependency % | 1.25 | 11.1 | 1.22 | 11.0 |
| Drug dependency % | .40 | 6.28 | .32 | 5.68 |
| Heavy smoking % | 6.47 | 24.6 | 5.72 | 23.2 |
| Obesity % | 14.2 | 34.9 | 13.8 | 34.5 |
| Depression % | 20.0 | 40.0 | 19.6 | 39.7 |
| Having Behavioral Problems % | 47.9 | 139 | 43.8 | 133 |
| Having Cognitive Problem % | 32.7 | 46.9 | 35.7 | 47.9 |
| Need Caregiver % | 83.2 | 37.4 | 85.0 | 35.7 |
| Total number of days during the course of treatment | 42 | 38 | 41 | 37 |
| Panel 2: County Characteristics (time-varying) | | | | |
| Population (000) | 211 | 217 | 493 | 471 |
| Population Density (Population/sq miles) | 457 | 705 | 1689 | 2902 |
| Population 65+ % | 13.6 | 2.80 | 14.7 | 2.56 |
| Population African American % | 11.0 | 10.6 | 12.5 | 13.8 |
| Ln(Household Income) | 10.6 | .22 | 10.7 | .24 |
| # Hospital Beds per 10000 population | 39.4 | 22.6 | 39.2 | 29.7 |
| # Skilled Nursing Facility beds per 10000 population | 1.43 | 5.6 | 1.87 | 6.53 |
| Panel 3: Agency Characteristics | | | | |
| Hospital-Based % (no variation over time) | 34.2 | 47.6 | 35.0 | 47.7 |
| For-profit % (no variation over time) | 28.1 | 44.9 | 33.5 | 47.2 |
| Quarterly Discharges | 239 | 186 | 416 | 556 |
| Predicted HHI (zip code-level) | 3732 | 1866 | 2576 | 1596 |
| Predicted HHI (county-level) | 3746 | 2062 | 2556 | 1732 |
| Low baseline competition (zip code-level) | 0.83 | 0.37 | 0.57 | 0.50 |
| Low baseline competition (county-level) | 0.79 | 0.41 | 0.58 | 0.49 |
| Panel 4: Reported Measures | | | | |
| Functional improvement (reported in HHC) | .51 | .32 | .52 | .32 |
| Emergency department visit use without hospitalization % | 10.7 | 30.9 | 10.3 | 30.4 |
| Hospitalization % | 9.72 | 29.6 | 9.77 | 29.7 |
| Panel 5: Unreported Measures | | | | |
| Functional improvement (unreported in HHC) | 55.9 | 36.4 | 55.8 | 36.0 |
| Weighted number of visits during 60 days | 11.7 | 8.37 | 11.6 | 8.13 |
| Full-time employed registered nurses | 14.1 | 15.6 | 17.8 | 28.7 |
| Full-time employed home health aides | 8.78 | 17.9 | 10.1 | 22.0 |

Note: Means and standard deviations are reported. The sample includes patient admissions between June 2001 and September 2006 in HRRs that cross state boundaries.

6. Results

6.1. The overall impact of the HHC on quality: pilot vs. nonpilot states

In this section, we present results from the difference-in-differences estimation of Equation (1) in Table 3. The results show that agencies in nonpilot states increased reported and unreported functional outcomes more than agencies in pilot states and decreased the incidence of emergency department visits and hospitalizations, confirming that there is a positive overall impact of the HHC on quality.

Table 3:

The overall effect of HHC on home health care quality (non-pilot states vs. pilot states)

| | Reported Outcome Measures: Reported Functional Improvement (1) | Reported Outcome Measures: ER Visits (2) | Reported Outcome Measures: Hospitalizations (3) | Unreported Outcome Measures: Unreported Functional Improvement (4) |
|---|---|---|---|---|
| Non-Pilot*Post | 0.0049*** | −0.0024*** | −0.0024*** | 0.0064*** |
| | (0.0013) | (0.0009) | (0.0009) | (0.0017) |
| Agency fixed-effects | Y | Y | Y | Y |
| Year-Month fixed-effects | Y | Y | Y | Y |
| N | 6,652,921 | 6,652,921 | 6,652,921 | 6,652,921 |
| Mean of Dependent Variable in Pre-Period | 0.51 | 0.09 | 0.09 | 0.54 |

Note: The sample contains all home health patients admitted between May 2003 and Sep 2006. “Post” is one if the admission month is in or after Nov 2003. All regressions control for county characteristics (i.e., county population, population density, percent of 65+ population, percent of African American population, log of household income, # hospital beds per 10000 population and # SNF beds per 10000 population), patient characteristics (i.e., age, gender, race, admitting source, alcohol dependency, drug dependency, heavy smoking, obesity, depression, having behavioral problems, having cognitive problems and whether the patient needs a caregiver), the duration (number of days) of the treatment course, agency fixed effects, year-month fixed effects, and an HRR-specific quadratic time trend. Standard errors are clustered by home health agency.

*** Significant at 1%; ** significant at 5%; * significant at 10%.

6.2. Main results: entry regulation and quality improvement after the HHC

Table 4 shows the results of estimating Equations (2) and (3) on the reported and unreported outcome measures. Overall, quality improvement is more pronounced in non-CON states than in CON states, and the results are robust across different specifications.18 The increase in the reported functional improvement score was 1.4 percentage points higher in non-CON states than in CON states. This corresponds to a further increase of 2.9% relative to the pre-HHC average functional improvement rate (0.49). Similar effects are observed for the reductions in emergency department visit and hospitalization rates, as well as for the increase in the unreported functional improvement score.
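The relative magnitude quoted above follows from dividing the point estimate by the pre-period mean of the dependent variable, both taken from Table 4:

$$
\frac{0.014}{0.49} \approx 0.029 = 2.9\%
$$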

Table 4:

Main Result (Part I) – Information, CON regulation, and the effect on reported and unreported outcome measures

| | Reported Outcome Measures: Reported Functional Improvement | | | | Reported Outcome Measures: ER Visits | | | | Reported Outcome Measures: Hospitalizations | | | | Unreported Outcome Measures: Unreported Functional Improvement | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | (1) | (2) | (3) | (4) | (5) | (6) | (7) | (8) | (9) | (10) | (11) | (12) | (13) | (14) | (15) | (16) |
| Non-CON*Post | 0.014** | 0.014** | 0.014* | 0.014** | −0.009** | −0.008** | −0.008* | −0.009** | −0.004* | −0.004 | −0.003 | −0.005** | 0.017** | 0.018** | 0.022** | 0.016** |
| | (0.006) | (0.006) | (0.008) | (0.006) | (0.004) | (0.004) | (0.004) | (0.004) | (0.002) | (0.002) | (0.003) | (0.002) | (0.008) | (0.008) | (0.009) | (0.008) |
| Non-CON*Pre | | | −0.002 | | | | −0.001 | | | | 0.001 | | | | 0.006 | |
| | | | (0.005) | | | | (0.003) | | | | (0.002) | | | | (0.005) | |
| White | 0.029*** | 0.029*** | 0.020*** | 0.030*** | 0.001 | 0.001 | −0.000 | 0.001 | 0.001 | 0.001 | −0.000 | 0.001 | 0.046*** | 0.046*** | 0.037*** | 0.049*** |
| | (0.002) | (0.002) | (0.002) | (0.002) | (0.001) | (0.001) | (0.001) | (0.002) | (0.001) | (0.001) | (0.001) | (0.002) | (0.003) | (0.003) | (0.003) | (0.003) |
| State Fixed Effects | N | N | N | Y | N | N | N | Y | N | N | N | Y | Y | N | N | Y |
| Agency Fixed Effects | Y | Y | Y | N | Y | Y | Y | N | Y | Y | Y | N | N | Y | Y | N |
| For-profit*Post | N | Y | Y | N | N | Y | Y | N | N | Y | Y | N | N | N | Y | N |
| Facility-based*Post | N | Y | Y | N | N | Y | Y | N | N | Y | Y | N | N | N | Y | N |
| N | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 |
| Mean of Dependent Variable in Pre-Period | 0.49 | 0.49 | 0.49 | 0.49 | 0.11 | 0.11 | 0.11 | 0.11 | 0.10 | 0.10 | 0.10 | 0.10 | 0.52 | 0.52 | 0.52 | 0.52 |

Note: All regressions control for county characteristics (i.e., county population, population density, percent of 65+ population, percent of African American population, log of household income, # hospital beds per 10000 population and # SNF beds per 10000 population), patient characteristics (i.e., age, gender, race, admitting source, alcohol dependency, drug dependency, heavy smoking, obesity, depression, having behavioral problems, having cognitive problems and whether need a caregiver), the duration (number of days) of the treatment course, year-month fixed effects, and a HRR-specific quadratic time trend. Standard errors are clustered by each home health agency.

Significance levels: *** 1%, ** 5%, * 10%.

Across all quality metrics and specifications, the coefficients on the pre-trend control variable are insignificant, suggesting that our findings are not driven by differential pre-HHC trends in care quality between CON and non-CON areas.

To explore the mechanisms through which outcomes improve, we show the impacts on input measures in Table 5. The results suggest that there is no significant differential change in the number of home health visits between CON and non-CON states. For staffing levels, the results are at most marginally significant: agencies in non-CON states hired 2.5–2.7 more home health aides after the HHC, a 25–27% increase from the pre-HHC average level (10), yet there is no significant difference in the number of registered nurses.19
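The percentage figures quoted above follow from dividing each point estimate by the pre-period mean. As a quick arithmetic check, the sketch below uses the rounded range of 2.5 to 2.7 aides and the pre-HHC mean of 10 from the text; the function name is ours and purely illustrative.

```python
def percent_of_baseline(estimate: float, baseline_mean: float) -> float:
    """Express a level change as a percentage of the pre-period mean."""
    if baseline_mean == 0:
        raise ValueError("baseline mean must be non-zero")
    return 100.0 * estimate / baseline_mean


pre_hhc_mean_aides = 10.0  # pre-period mean of home health aides quoted in the text
for estimate in (2.5, 2.7):  # rounded range of the Non-CON*Post estimates
    pct = percent_of_baseline(estimate, pre_hhc_mean_aides)
    print(f"{estimate} additional aides = {pct:.0f}% of the pre-HHC mean")
```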

Table 5:

Main Result (Part II) – Information, CON regulation, and the effect on unreported input measures

| Number of visits (weighted) | Number of full-time employed registered nurses | Number of full-time employed home health aides | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| (1) | (2) | (3) | (4) | (5) | (6) | (7) | (8) | (9) | (10) | (11) | (12) | |

| Non-CON*Post | 0.062 | 0.061 | 0.010 | 0.162 | 0.948 | 0.644 | 0.714 | 0.766 | 2.725* | 2.449 | 2.508 | 2.619* |

| (0.184) | (0.186) | (0.221) | (0.179) | (0.882) | (1.055) | (1.055) | (0.835) | (1.562) | (1.583) | (1.600) | (1.537) | |

| Non-CON*Pre | −0.071 | 0.178 | 0.149 | |||||||||

| (0.112) | (0.118) | (0.143) | ||||||||||

| State Fixed Effects | N | N | N | Y | N | N | N | Y | N | N | N | Y |

| Agency Fixed Effects | Y | Y | Y | N | Y | Y | Y | N | Y | Y | Y | N |

| For-profit*Post | N | Y | Y | N | N | Y | Y | N | N | Y | Y | N |

| Facility-based*Post | N | Y | Y | N | N | Y | Y | N | N | Y | Y | N |

| N | 908,361 | 908,361 | 908,361 | 908,361 | 3,325 | 3,325 | 3,325 | 3,325 | 3,325 | 3,325 | 3,325 | 3,325 |

| Mean of Dependent Variable in Pre-Period | 11.7 | 11.7 | 11.7 | 11.7 | 18 | 18 | 18 | 18 | 10 | 10 | 10 | 10 |

Note: All regressions control for county characteristics (i.e., county population, population density, percent of 65+ population, percent of African American population, log of household income, # hospital beds per 10000 population and # SNF beds per 10000 population), patient characteristics (i.e., age, gender, race, admitting source, alcohol dependency, drug dependency, heavy smoking, obesity, depression, having behavioral problems, having cognitive problems and whether need a caregiver), the duration (number of days) of the treatment course, year-month fixed effects, and a HRR-specific quadratic time trend. Standard errors are clustered by each home health agency.

Significance levels: *** 1%, ** 5%, * 10%.

7. Extensions

7.1. Heterogeneity analysis by baseline competition and hospital affiliation

Our previous results focused on competition driven by new entrants. In this section, we explore the heterogeneity of the information disclosure effect by agencies’ baseline local concentration level, which is determined by incumbent providers. To measure the level of local concentration, we construct predicted HHI measures at the zip code and county levels, following the method proposed in Kessler & McClellan (2000). We stratify the sample into four groups: CON-low Concentration, CON-high Concentration, non-CON-low Concentration, and non-CON-high Concentration, representing patients in CON or non-CON states that face low or high baseline concentration, respectively. The procedure to construct the local concentration measure is described in Appendix 3. We then estimate the following regression, where the omitted comparison group is CON-high Concentration.

| (4) |

The results of estimating Equation (4) are presented in Table 6. Within both CON and non-CON states, the effect appears similar between the low- and high-concentration groups. This suggests that the baseline concentration level does not significantly influence quality improvement after information disclosure; the threat of entry by new firms plays a bigger role in incentivizing agencies to improve quality.

Table 6:

Heterogeneity analysis by baseline competition level.

| Competition Measure is based on Zip code-level Predicted HHI | Competition Definition is based on County-level Predicted HHI | |||||||

|---|---|---|---|---|---|---|---|---|

| Reported Functional Improvement | ER Visits | Hospitalizations | Unreported Functional Improvement | Reported Functional Improvement | ER Visits | Hospitalizations | Unreported Functional Improvement | |

| (1) | (2) | (3) | (4) | (5) | (6) | (7) | (8) | |

| CON, Low Concentration*Post | −0.003 | −0.002 | 0.005 | −0.004 | 0.001 | −0.001 | 0.000 | −0.004 |

| (0.008) | (0.005) | (0.003) | (0.008) | (0.008) | (0.006) | (0.003) | (0.008) | |

| NonCON, High Concentration*Post | 0.013* | −0.010** | −0.005* | 0.015* | 0.014* | −0.007* | −0.003 | 0.020** |

| (0.007) | (0.004) | (0.003) | (0.008) | (0.007) | (0.004) | (0.003) | (0.008) | |

| NonCON, Low Concentration*Post | 0.014* | −0.005 | −0.002 | 0.021** | 0.014** | −0.009** | −0.005* | 0.015* |

| (0.007) | (0.004) | (0.003) | (0.008) | (0.007) | (0.004) | (0.002) | (0.008) | |

| N | ||||||||

Note: All regressions control for county characteristics (i.e., county population, population density, percent of 65+ population, percent of African American population, log of household income, # hospital beds per 10000 population and # SNF beds per 10000 population), patient characteristics (i.e., age, gender, race, admitting source, alcohol dependency, drug dependency, heavy smoking, obesity, depression, having behavioral problems, having cognitive problems and whether need a caregiver), the duration (number of days) of the treatment course, year-month fixed effects, agency fixed effects and a HRR-specific quadratic time trend. Standard errors are clustered by each home health agency.

Significance levels: *** 1%, ** 5%, * 10%.

Second, we explore the heterogeneous response by whether the agency is affiliated with a hospital. We run separate analyses by agency type and show the results in Table 7. The results suggest that the complementarity between competition and information is stronger among freestanding agencies for functional improvement measures (the difference is statistically significant at the 10% level for the reported measure). This could be because hospital-based agencies serve more hospital-discharged patients, who are sensitive to the ER visit scores.20

Table 7:

Heterogeneity analysis by whether the home health agency is hospital-affiliated or freestanding.

| | Reported Functional Improvement | | | ER Visits | | | Hospitalizations | | | Unreported Functional Improvement | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Hospital-Based HHAs | Freestanding HHAs | Statistically Significant Difference Between Hospital-Based and Freestanding HHAs | Hospital-Based HHAs | Freestanding HHAs | Statistically Significant Difference Between Hospital-Based and Freestanding HHAs | Hospital-Based HHAs | Freestanding HHAs | Statistically Significant Difference Between Hospital-Based and Freestanding HHAs | Hospital-Based HHAs | Freestanding HHAs | Statistically Significant Difference Between Hospital-Based and Freestanding HHAs |
| | (1) | (2) | (3) | (4) | (5) | (6) | (7) | (8) | (9) | (10) | (11) | (12) |
| Non-CON*Post | 0.002 | 0.023** | | −0.013** | −0.004 | | −0.005 | −0.002 | | 0.014 | 0.019* | |
| | (0.008) | (0.009) | Yes* | (0.005) | (0.006) | No | (0.003) | (0.003) | No | (0.010) | (0.010) | No |
| N | 315,918 | 592,443 | | 315,918 | 592,443 | | 315,918 | 592,443 | | 315,918 | 592,443 | |

Note: All regressions control for county characteristics (i.e., county population, population density, percent of 65+ population, percent of African American population, log of household income, # hospital beds per 10000 population and # SNF beds per 10000 population), patient characteristics (i.e., age, gender, race, admitting source, alcohol dependency, drug dependency, heavy smoking, obesity, depression, having behavioral problems, having cognitive problems and whether need a caregiver), the duration (number of days) of the treatment course, year-month fixed effects, agency fixed effects and a HRR-specific quadratic time trend. Standard errors are clustered by each home health agency.

Significance levels: *** 1%, ** 5%, * 10%.

7.2. Dynamic effects

In this section, we explore the dynamics of the interaction effects to test whether the magnitudes of the effects increase over time. We replace the post-period dummy in Equation (2) with a set of year dummies. Table 8 presents the results with and without controls for a time trend by for-profit status and a time trend by hospital-affiliation status. The effects on quality improvement are larger and more significant in 2005 and 2006 than in 2004, suggesting that agencies may have learned over time to improve quality.
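To make the dynamic specification concrete, the sketch below builds the Non-CON by year indicators for a single observation. It is an illustration only: the variable names are ours, and the omitted reference years (2002 and 2003) are an assumption inferred from the fact that Table 8 reports coefficients only for 2001, 2004, 2005, and 2006.

```python
def year_interactions(non_con: int, year: int, reference_years=(2002, 2003)) -> dict:
    """Return Non-CON x year indicators for one observation.

    Years listed in `reference_years` (assumed, not stated in the text)
    get no indicator and therefore serve as the comparison period.
    """
    years = [y for y in range(2001, 2007) if y not in reference_years]
    return {f"NonCON_x_Year{y}": int(non_con == 1 and year == y) for y in years}


# A patient treated in 2005 by an agency in a non-CON state:
print(year_interactions(non_con=1, year=2005))
# The same year in a CON state switches every indicator off:
print(year_interactions(non_con=0, year=2005))
```

Each indicator then enters the regression in place of the single Non-CON*Post term, so that a separate coefficient is estimated for each year.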

Table 8:

The dynamic effects of the interaction between CON and HHC on quality

| Reported Functional Improvement | ER Visits | Hospitalizations | Unreported Functional Improvement | |||||

|---|---|---|---|---|---|---|---|---|

| (1) | (2) | (3) | (4) | (5) | (6) | (7) | (8) | |

| Non-CON*Year2001 | 0.001 | 0.001 | −0.001 | −0.001 | −0.001 | −0.001 | 0.001 | 0.001 |

| (0.005) | (0.005) | (0.004) | (0.004) | (0.003) | (0.003) | (0.006) | (0.006) | |

| Non-CON*Year2004 | 0.010* | 0.010* | −0.008** | −0.008* | −0.004* | −0.004 | 0.010 | 0.011 |

| (0.006) | (0.006) | (0.004) | (0.004) | (0.003) | (0.003) | (0.007) | (0.007) | |

| Non-CON*Year2005 | 0.017** | 0.017** | −0.008* | −0.007* | −0.004 | −0.005 | 0.021** | 0.022** |

| (0.008) | (0.008) | (0.004) | (0.004) | (0.003) | (0.003) | (0.009) | (0.009) | |

| Non-CON*Year2006 | 0.020** | 0.021* | −0.011** | −0.010** | −0.005 | −0.005 | 0.030** | 0.031** |

| (0.010) | (0.010) | (0.005) | (0.005) | (0.003) | (0.003) | (0.012) | (0.012) | |

| For-profit*Trend | N | Y | N | Y | N | Y | N | Y |

| Hospital-based*Trend | N | Y | N | Y | N | Y | N | Y |

| N | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 |

Note: All regressions control for county characteristics (i.e., county population, population density, percent of 65+ population, percent of African American population, log of household income, # hospital beds per 10000 population and # SNF beds per 10000 population), patient characteristics (i.e., age, gender, race, admitting source, alcohol dependency, drug dependency, heavy smoking, obesity, depression, having behavioral problems, having cognitive problems and whether need a caregiver), the duration (number of days) of the treatment course, year-month fixed effects and agency fixed effects. Standard errors are clustered by each home health agency.

Significance levels: *** 1%, ** 5%, * 10%.

7.3. Patient selection

Agencies in non-CON states may select patients with less severe health problems based on factors not included in the HHC risk-adjustment. If this were the case, then the improved outcome measures could be driven by patient selection instead of real quality improvement.

To rule out this possibility, we re-estimate our baseline specification, replacing the dependent variable with each of the risk factors. Among these risk factors, the only one not included in the HHC risk adjustment is race (measured by whether the patient is white). We focus in particular on race because, as Table 4 shows, being white significantly predicts better functional improvement outcomes.21 The results in Table 9 show no evidence of patient selection based on either patient race or the HHC-included risk factors.
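The logic of this test can be conveyed with a bare two-by-two difference in differences of group means, using a risk factor as the outcome. This is a deliberately stripped-down sketch: the estimates in the paper come from regressions with fixed effects and controls, and the toy records and field names below are invented for illustration.

```python
from statistics import mean


def did_of_means(rows, outcome):
    """2x2 difference in differences of group means for `outcome`."""
    def cell(non_con, post):
        values = [r[outcome] for r in rows
                  if r["non_con"] == non_con and r["post"] == post]
        if not values:
            raise ValueError(f"empty cell: non_con={non_con}, post={post}")
        return mean(values)

    change_non_con = cell(1, 1) - cell(1, 0)  # post minus pre, non-CON states
    change_con = cell(0, 1) - cell(0, 0)      # post minus pre, CON states
    return change_non_con - change_con


# Invented patient records: the share of white patients does not move
# differentially, so the estimate is zero (no sign of selection on race).
toy_patients = [
    {"non_con": 1, "post": 0, "white": 1}, {"non_con": 1, "post": 0, "white": 0},
    {"non_con": 1, "post": 1, "white": 1}, {"non_con": 1, "post": 1, "white": 0},
    {"non_con": 0, "post": 0, "white": 1}, {"non_con": 0, "post": 0, "white": 1},
    {"non_con": 0, "post": 1, "white": 1}, {"non_con": 0, "post": 1, "white": 1},
]
print(did_of_means(toy_patients, "white"))
```

An estimate close to zero for a risk factor is what the absence of selection on that factor would look like.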

Table 9:

Testing for patient selection

| Risk Factors Not Included in HHC | Risk Factors Included in HHC | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| White | Hospital Discharged | Alcohol Dependency | Drug Dependency | Heavy Smoking | Obesity | Depression | Any Cognitive Issue | Any Behavior Issue | Need Caregiver | |

| (1) | (2) | (3) | (4) | (5) | (6) | (7) | (8) | (9) | (10) | |

| Non-CON*Post | 0.001 | 0.013 | −0.000 | 0.002 | 0.003 | −0.008* | −0.009 | 0.007 | −0.003 | −0.007 |

| (0.005) | (0.008) | (0.001) | (0.007) | (0.003) | (0.004) | (0.009) | (0.012) | (0.053) | (0.007) | |

| N | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 | 908,361 |

| Mean of Dependent Variable in Pre-Period | 0.902 | 0.571 | 0.127 | 0.007 | 0.061 | 0.130 | 0.202 | 0.326 | 0.298 | 0.832 |

Note: All regressions control for county characteristics (i.e., county population, population density, percent of 65+ population, percent of African American population, log of household income, # hospital beds per 10000 population and # SNF beds per 10000 population), patient characteristics (i.e., age, gender, race, admitting source, alcohol dependency, drug dependency, heavy smoking, obesity, depression, having behavioral problems, having cognitive problems and whether need a caregiver), the duration (number of days) of the treatment course, year-month fixed effects, agency fixed effects and a HRR-specific quadratic time trend. Standard errors are clustered by each home health agency.

Significance levels: *** 1%, ** 5%, * 10%.

7.4. Falsification analysis

In this section, we conduct a falsification analysis to show there is no similar effect in HRRs where the CON law in CON states is not binding. Specifically, we use the three excluded HRRs that border GA to test whether similar results are found there. The results of estimating Equations (2) and (3) using the new sample are shown in Table 10. As expected, we do not observe additional quality improvement in non-CON states compared with the CON states.

Table 10:

Falsification analysis: Information, CON regulation, and the effect on reported and unreported outcome measures in HRRs where CON is not binding.

| Reported Outcome Measures | Unreported Outcome Measures | |||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Reported Functional Improvement | ER Visits | Hospitalizations | Unreported Functional Improvement | |||||||||||||

| (1) | (2) | (3) | (4) | (5) | (6) | (7) | (8) | (9) | (10) | (11) | (12) | (13) | (14) | (15) | (16) | |

| Non-CON*Post | −0.088* | −0.079 | −0.069* | −0.076* | −0.007 | −0.003 | −0.003 | −0.002 | 0.011 | 0.013 | 0.010 | 0.008 | −0.074 | −0.059 | −0.048 | −0.056 |

| (0.027) | (0.030) | (0.021) | (0.021) | (0.012) | (0.013) | (0.010) | (0.010) | (0.013) | (0.009) | (0.011) | (0.010) | (0.026) | (0.026) | (0.020) | (0.027) | |

| Non-CON*Pre | −0.011* | 0.002 | −0.002 | −0.010 | ||||||||||||

| (0.004) | (0.002) | (0.007) | (0.013) | |||||||||||||

| State Fixed Effects | Y | N | N | N | Y | N | N | N | Y | N | N | N | Y | N | N | N |

| Agency Fixed Effects | N | Y | Y | Y | N | Y | Y | Y | N | Y | Y | Y | N | Y | Y | Y |

| For-profit*Post | N | N | Y | Y | N | N | Y | Y | N | N | Y | Y | N | N | Y | Y |

| Facility-based*Post | N | N | Y | Y | N | N | Y | Y | N | N | Y | Y | N | N | Y | Y |

| N | 78,739 | 78,739 | 78,739 | 78,739 | 78,739 | 78,739 | 78,739 | 78,739 | 78,739 | 78,739 | 78,739 | 78,739 | 78,739 | 78,739 | 78,739 | 78,739 |

| Mean of Dependent Variable in Pre-Period | .50 | .50 | .50 | .50 | .11 | .11 | .11 | .11 | .10 | .10 | .10 | .10 | .53 | .53 | .53 | .53 |

Note: All regressions control for county characteristics (i.e., county population, population density, percent of 65+ population, percent of African American population, log of household income, # hospital beds per 10000 population and # SNF beds per 10000 population), patient characteristics (i.e., age, gender, race, admitting source, alcohol dependency, drug dependency, heavy smoking, obesity, depression, having behavioral problems, having cognitive problems and whether need a caregiver), the duration (number of days) of the treatment course, year-month fixed effects, and a HRR-specific quadratic time trend. Standard errors are clustered by each home health agency.

Significance levels: *** 1%, ** 5%, * 10%.

8. Discussions

Previous literature suggests that competition and information disclosure may be complementary tools to ensure quality in hospital and nursing home markets. In this paper, we examine this issue in home health care, where the relation between competition and quality differs from facility-based care due to easy market exit and entry and the absence of price competition. We focus on competition driven by new entry, and our results suggest that the threat of losing patients to new entrants may serve as an important mechanism through which providers improve quality under information disclosure. Moreover, we find that ex-ante market concentration has little impact on the effects of quality reporting in the home health industry. This differs from nursing home markets, where ex-ante market concentration plays an important role (Zinn, 1994). The entry mechanism is particularly important in labor-intensive industries such as home health care, where CON restricts entry rather than regulating capital investment. Our findings imply that the deregulation of CON in these markets may complement the public reporting policy and make information disclosure more effective in promoting quality.

We find that both reported and unreported quality improved after public reporting, suggesting that these measures could be complementary in the production of home health care. This finding indicates that the proper selection of quality measures is important to guaranteeing the effectiveness of public reporting and avoiding potential gaming behaviors associated with the production of multiple care measures that might harm the unreported quality.

The small magnitude of our results is consistent with the prior findings in Zhao (2016). It could be related to our findings that agencies in non-CON states did not hire more registered nurses after the HHC, which may be due to the nursing shortage throughout the nation during the study period (Carter 2009).

We note a few limitations of our study. First, our sample only covers 2001–2006 and does not include recently added HHC measures. Starting in 2012, CMS included patients’ experience, such as “communication between providers and patients,” “specific care issues,” and “overall satisfaction” in the HHC. CMS also created a five-star rating system in 2016, which is a summary measure of quality scores and is more user-friendly. The effect of information disclosure may become stronger when patient experience measures are added and quality information becomes more transparent. Second, our results may not be generalizable to other industries, such as hospitals and nursing homes, where capital investments are crucial. Third, our results show that outcome measures improved significantly, yet the observed input measures, such as staffing levels, did not significantly increase. Several unobservable factors may have contributed to the improvement in outcomes. These factors include the effort caregivers put into caring for patients during a home health visit, the average amount of time per caregiver devoted to direct patient care, caregivers’ compliance with industry guidelines, caregivers’ expertise level, and the management level of the home health agency (DeLellis 2009; DeLellis & Ozcan 2013; Grabowski et al. 2017). Since we do not observe these input factors, further studies are needed to examine the role of entry regulation and information on these input measures.

In summary, we find that the CON regulations in the home health industry attenuate the effectiveness of information disclosure in improving quality. This suggests that removing CON might have a positive impact on the quality of home health care under the HHC. Continuing work is needed to examine the complementarity between entry regulation and information transparency in other health sectors and to find effective ways to improve the quality of care under information disclosure.

Acknowledgements:

This work is supported by NIH/NHLBI grant # R01 HL088586–01 and NIH/NIA grant # 1R03AG035098–01. No conflicts of interest exist.

Appendix for “Entry regulation and the effect of public reporting: Evidence from Home Health Compare”

Appendix 1. Appendix Figures and Tables

Figure A1:

A Sample of Home Health Compare—Table Display

Note: The comparison table is displayed after a consumer visiting the HHC website types in a ZIP/city/state. Consumers can choose quality measures of interest and select up to three HHAs for comparison at a time.

Figure A2:

A Sample of Home Health Compare—Graphical Display

Note: The comparison graph is displayed after a consumer visiting the HHC website types in a ZIP/city/state. Consumers can choose quality measures of interest and select up to three HHAs for comparison at a time.

Figure A2.a:

Distribution of percentage change in the state-level number of agencies in CON and non-CON states between 2001 and 2006

Figure A2.b:

Distribution of percentage change in average zip code-level HHI in CON and non-CON states between 2001 and 2006

Figure A3:

Reported Functional Improvement by CON status, 2001–2006

Figure A4:

Unreported Functional Improvement by CON status, 2001–2006

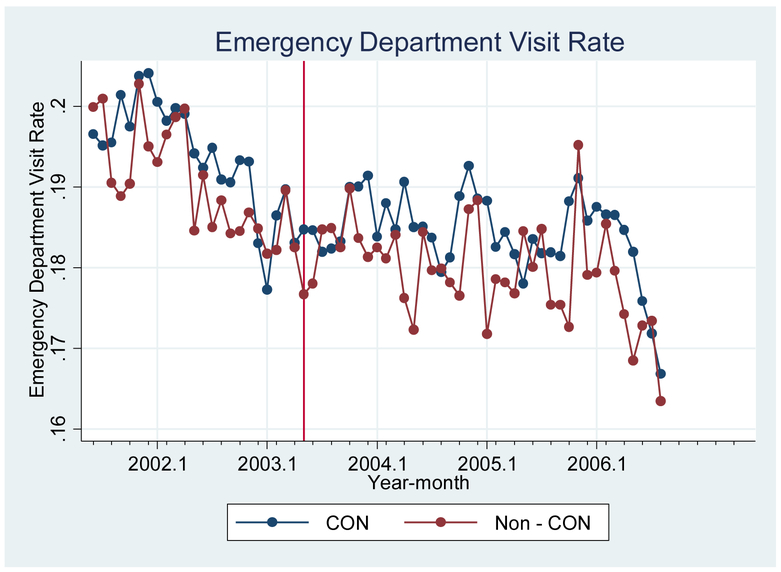

Figure A5:

Hospitalization Rate by CON status, 2001–2006

Figure A6:

Emergency Department Visit Rate by CON status, 2001–2006

Table A1:

The association between State CON status and county-level number of agencies in year 2006

| Dependent Variable=Number of agencies in a county. | |

|---|---|

| CON State | −1.12*** (.164) |

| Population/100000 | .106*** (.020) |

| Population Density | −.691 (.749) |

| N | 3080 |

| R-squared | .15 |

Note: The unit of observation is each county in the year 2006. Results are from an ordered-probit regression, weighted by county population (/100000). The regression also controls for percent of 65+ population, percent of African American population, percent of Medicare enrollment, # hospital beds per 10000 population and # SNF beds per 10000 population. Standard Errors are clustered by state.

Significance levels: *** 1%, ** 5%, * 10%.

Table A2:

Change in the number of agencies in CON and non-CON states between 2001 and 2006

| Number of agencies in 2001 | Number of agencies in 2006 | Percentage change in the number of agencies from 2001 to 2006 | |

|---|---|---|---|

| Panel 1: CON States | |||

| WV | 61 | 57 | −6.5% |

| MS | 59 | 56 | −5.0% |

| NJ | 51 | 49 | −3.9% |

| KY | 99 | 98 | −1.0% |

| NY | 187 | 185 | −1.0% |

| SC | 67 | 67 | 0 |

| VT | 12 | 12 | 0 |

| AR | 169 | 171 | 1.2% |

| TN | 131 | 135 | 3.0% |

| AL | 135 | 140 | 3.7% |

| WA | 56 | 59 | 5.3% |

| MT | 35 | 37 | 5.7% |

| NC | 154 | 163 | 5.8% |

| MD | 41 | 45 | 9.8% |

| GA | 81 | 94 | 16.0% |

| Panel 2: Non-CON States | |||

| ND | 29 | 26 | −10% |

| ME | 31 | 28 | −9.7% |

| SD | 41 | 39 | −4.9% |

| WY | 26 | 25 | −3.8% |

| LA | 216 | 214 | −0.9% |

| MN | 190 | 189 | −0.5% |

| MA | 104 | 104 | 0 |

| KS | 123 | 124 | 0.8% |

| NE | 59 | 60 | 1.7% |

| CT | 77 | 79 | 2.6% |

| IA | 166 | 171 | 3.0% |

| RI | 20 | 21 | 5% |

| NH | 34 | 36 | 5.9% |

| WI | 106 | 113 | 6.6% |

| OR | 55 | 59 | 7.3% |

| MO | 137 | 147 | 7.3% |

| IN | 140 | 152 | 8.6% |

| PA | 221 | 243 | 10% |

| ID | 43 | 48 | 11.6% |

| NM | 51 | 58 | 13.7% |

| VA | 134 | 153 | 14.2% |

| DE | 13 | 15 | 15.4% |

| CO | 97 | 117 | 20.6% |

| OK | 156 | 190 | 21.8% |

| UT | 35 | 44 | 25.7% |

| IL | 239 | 307 | 28.5% |

| OH | 263 | 344 | 30.8% |

| CA | 409 | 557 | 36.2% |

| AZ | 51 | 70 | 37.3% |

| MI | 175 | 245 | 40% |

| NV | 33 | 51 | 54.5% |

| TX | 694 | 1140 | 64.3% |

| FL | 293 | 504 | 72% |

| DC | 11 | 19 | 72.7% |

Table A3:

List of HRRs consisting of multiple states with variations of CON regulation across the states

| Hospital Referral Region | CON State(s) | Non-CON State(s) |

|---|---|---|

| Albany | NY | MA |

| Allentown | NJ | PA |

| Billings | MT | WY |

| Dothan | AL/GA | FL |

| Durham | NC | VA |

| Erie | NY | PA |

| Evansville | KY | IN/OH |

| Fort Smith | AR | OK |

| Jacksonville | GA | FL |

| Jonesboro | AR | MO |

| Kingsport | TN | VA |

| Lebanon | VT | NH |

| Louisville | KY | OH |

| Morgantown | WV | PA |

| New Haven | NY | CT |

| Norfolk | NC | VA |

| Paducah | KY | IN |

| Pensacola | AL | FL |

| Philadelphia | NJ | PA |

| Pittsburgh | WV | PA |

| Portland | WA | OR |

| Roanoke | WV | VA |

| Salisbury | MD | DE |

| Sayre | NY | PA |

| Slidell | MS | LA |

| Spokane | WA | ID |

| Springfield | AR | MO |

| Tallahassee | GA | FL |

| Texarkana | AR | OK/TX |

Appendix 2. Construction of Quality Measures

For outcome measures, we first construct a composite measure for functional improvement outcomes reported in the HHC. This measure is calculated using the average of the five reported functional improvement outcomes (i.e., whether the patient’s functional status improved for bathing, ambulation, transferring to bed, managing oral medication and whether the patient had less pain during activity after receiving care). We also obtain two measures on the incidence of adverse events from OASIS (i.e., whether the patient has any emergency department visit without hospitalization and whether the patient is hospitalized, both of which are reported in HHC). Since the HHC only reports a subset of OASIS outcomes, we also construct an unreported functional improvement measure from OASIS. This unreported measure is equal to the average of the following functional improvement outcomes observed in OASIS but unreported in HHC: whether the patient improves regarding grooming, phone use, preparing meals and housekeeping after receiving care. This measure is important for us to test whether agencies reduced quality along the unreported dimensions.
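As a minimal sketch of the two composite measures described above, the code below averages binary improvement indicators for one patient. The item keys are our own shorthand for the outcomes named in the text, and the sketch assumes every item is observed for the patient; how missing items are handled in the actual construction is not specified here.

```python
from statistics import mean

# Shorthand keys (ours) for the outcomes listed in the text.
REPORTED_ITEMS = ("bathing", "ambulation", "transferring", "oral_medication", "pain")
UNREPORTED_ITEMS = ("grooming", "phone_use", "meal_preparation", "housekeeping")


def composite(patient: dict, items) -> float:
    """Average of 0/1 improvement indicators over `items` (all assumed observed)."""
    return mean(patient[item] for item in items)


# Invented example: 1 means the patient improved on that item.
example_patient = {
    "bathing": 1, "ambulation": 0, "transferring": 1, "oral_medication": 1, "pain": 0,
    "grooming": 1, "phone_use": 1, "meal_preparation": 0, "housekeeping": 0,
}
print(composite(example_patient, REPORTED_ITEMS))    # reported composite
print(composite(example_patient, UNREPORTED_ITEMS))  # unreported composite
```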

In addition to the outcome measures, we also obtain two sets of input measures, which are not reported in the HHC. The first is the weighted number of home health visits of each admission (i.e., from the beginning to the end of care), constructed from the OASIS-reported number of visits for each type of care. The weights are developed by Welch et al. (1996) and represent the relative intensity and cost of resource use of each type of care. We use this measure to capture the intensity of the treatment. The other input measures are the staffing level of the agency each year, including the number of full-time equivalent registered nurses and the number of home health aides. These measures are obtained from the POS file.
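The weighted visit count can be illustrated as a weighted sum over visit types. The Welch et al. (1996) weights are not reproduced in this appendix, so the weights and visit-type names below are hypothetical placeholders chosen only to show the calculation.

```python
# Hypothetical placeholder weights, NOT the Welch et al. (1996) values.
HYPOTHETICAL_WEIGHTS = {
    "skilled_nursing": 1.0,
    "home_health_aide": 0.5,
    "physical_therapy": 1.5,
}


def weighted_visits(visit_counts: dict, weights: dict) -> float:
    """Sum visits per admission, weighting each visit type by its relative intensity."""
    missing = set(visit_counts) - set(weights)
    if missing:
        raise KeyError(f"no weight for visit type(s): {sorted(missing)}")
    return sum(count * weights[kind] for kind, count in visit_counts.items())


# Invented admission: 6 nursing, 4 aide, and 5 therapy visits.
admission = {"skilled_nursing": 6, "home_health_aide": 4, "physical_therapy": 5}
print(weighted_visits(admission, HYPOTHETICAL_WEIGHTS))
```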

Appendix 3. Construction of predicted HHI at the zip code and county level.

In this appendix, we describe how we construct the predicted HHI measure at the zip code-level and the county-level following the method described in Kessler & McClellan (2000). This measure was originally developed and used in Jung & Polsky (2014).

Step 1: We estimate a patient-level conditional choice model to predict each patient’s probability of choosing a specific agency, conditional on living in a zip code and then calculate the market share of the agency and a corresponding HHI measure. We model consumer choice based on agency characteristics, patient characteristics and the distance between the agency zip code and the patient’s zip code.1

Step 2: We construct an agency-level HHI using the weighted average of the HHIs from all zip codes the agency serves, where the weights are given by the predicted share of the agency’s patients coming from each zip code.

Step 3: We calculate a zip code- and a county-level HHI measure using the weighted average of the agency-specific HHIs from all agencies that serve the zip code or county, where the weights are the agency’s predicted share of patients.
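The three steps can be sketched as follows for the zip code level. This is an illustration under stated assumptions rather than the estimation code: the predicted choice probabilities are taken as given inputs (in the paper they come from the conditional choice model in Step 1), the HHI is put on a 0 to 10,000 scale to match the 2500 cutoff used later, and all names are ours. The county-level measure would aggregate in the same way over agencies serving a county.

```python
from collections import defaultdict


def predicted_hhi(patients):
    """patients: list of (zip_code, {agency: predicted choice probability})."""
    # Expected number of patients each agency draws from each zip code.
    expected = defaultdict(lambda: defaultdict(float))
    for zip_code, probs in patients:
        for agency, p in probs.items():
            expected[zip_code][agency] += p

    # Step 1: predicted market shares and HHI (0-10,000 scale) in each zip code.
    share, zip_hhi = {}, {}
    for z, by_agency in expected.items():
        total = sum(by_agency.values())
        share[z] = {a: n / total for a, n in by_agency.items()}
        zip_hhi[z] = sum((100 * s) ** 2 for s in share[z].values())

    # Step 2: agency HHI = zip HHIs weighted by the share of the agency's
    # predicted patients that come from each zip code.
    agency_total = defaultdict(float)
    for by_agency in expected.values():
        for a, n in by_agency.items():
            agency_total[a] += n
    agency_hhi = {
        a: sum(expected[z].get(a, 0.0) / agency_total[a] * zip_hhi[z] for z in expected)
        for a in agency_total
    }

    # Step 3: zip-level predicted HHI = agency HHIs weighted by each
    # agency's predicted share of patients in that zip code.
    return {z: sum(s * agency_hhi[a] for a, s in share[z].items()) for z in share}


# Invented data: zip "A" is split evenly between agencies X and Y; zip "B" is served only by X.
toy = [("A", {"X": 0.5, "Y": 0.5}), ("A", {"X": 0.5, "Y": 0.5}), ("B", {"X": 1.0})]
print(predicted_hhi(toy))
```

Because agency X also serves the monopolized zip "B", its agency-level HHI is pulled up, which in turn raises the predicted HHI of zip "A" above its own raw value of 5000.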

The summary statistics of the predicted HHI at the zip-code level and the county level are presented in Table 2 Panel 3. As expected, the mean predicted HHI is higher in CON states, suggesting lower competition on average, compared with that in non-CON states.

Next, we define an agency as facing “low baseline competition” if its predicted HHI in 2001 is greater than 2500, and “high baseline competition” if it is less than or equal to 2500. To estimate the heterogeneous response by local market competition, we stratify the sample into four groups: CON-low Competition, CON-high Competition, non-CON-low Competition, and non-CON-high Competition, representing patients in CON or non-CON states that face low or high baseline competition, respectively.
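The stratification rule is simple enough to state as a small function. The sketch below applies the 2500 cutoff from the text to the 2001 predicted HHI; the function and argument names are ours.

```python
def stratum(con_state: bool, predicted_hhi_2001: float, cutoff: float = 2500.0) -> str:
    """Assign one of the four groups from CON status and 2001 predicted HHI."""
    regulation = "CON" if con_state else "non-CON"
    # An HHI above the cutoff means a concentrated market, i.e. low competition.
    competition = "low Competition" if predicted_hhi_2001 > cutoff else "high Competition"
    return f"{regulation}-{competition}"


print(stratum(con_state=True, predicted_hhi_2001=3000))   # CON-low Competition
print(stratum(con_state=False, predicted_hhi_2001=2500))  # non-CON-high Competition
```

Note that a predicted HHI of exactly 2500 falls in the high-competition group, since the low-competition group requires a value strictly greater than the cutoff.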

Footnotes

Arrow (1963) first raised the issue that limited information creates inefficiency in the healthcare market.

Conceptually, information allows patients to identify high-quality providers and thus reward (punish) high-quality (low-quality) providers with a higher (lower) market share (Werner & Asch 2005; Dranove & Jin 2010). However, the ability of information to move market share is restricted in a monopoly or concentrated market where consumers have limited choices and market shares are unlikely to move.

We justify this key assumption in Section 4.3.

The average margin among Medicare home health care agencies was approximately 17% between 2001 and 2013 (MedPAC, 2015), while the corresponding number was −5.4% for Medicare hospitals. The number of agencies increased from 7,528 in 2001 to 12,613 in 2013 (MedPAC, 2015).

All home health services are free for Medicare beneficiaries except for durable medical equipment (DME), such as wheelchairs and walkers, which is covered by Medicare Part B. DME prices are fixed, as patients pay the Part B standard 20% coinsurance of DME fee schedules. DME is also standardized in terms of quality. We thus believe that Medicare Part B does not have a significant impact on how agencies compete on price.

By contrast, in markets where price competition is intense relative to quality competition, there might be unintended consequences of information disclosure on price (Huang & Hirth 2016; McCarthy & Darden 2017).

For example, states with a low quality of care might remove CON to promote competition and improve quality of care, and high home health spending states might retain CON to contain cost.

Quality disclosure may also allow providers to compare themselves with peers, and providers’ intrinsic motivation to be good improves quality (Kolstad 2013).

The intuition is that, with imperfect quality information, providers may compete on dimensions that boost revenue but hurt the quality of care.

The 2000 Medicare Prospective Payment System on home health care substantially increased the payment margin and attracted new entries in the industry (Kim & Norton 2015).

Figure A2.a shows that the change in the number of agencies in CON states between 2001 and 2006 is generally within the range of −10% to 10%. By contrast, the percentage change among non-CON states ranges from −10% to 75%. We also justify the assumption by showing in Figure A2.b the distribution of percentage change in state-specific average HHI (across all zip codes in the state) in CON vs. non-CON states. The method of constructing the zip code-level HHI is detailed in Section 6.3. Consistent with our assumption that entry occurred mostly in non-CON states, we observe in Figure A2.b that HHI decreased most dramatically in non-CON states.

The OASIS data includes patients who receive skilled services and excludes patients who receive only personal care, homemakers, or chore services. For more information on the OASIS data, please refer to the OASIS-C2 Guidance Manual: https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/HomeHealthQualityInits/Downloads/OASIS-C2-Guidance-Manual-Effective_1_1_18.pdf

Each admission consists of multiple visits by registered nurses and home health aides and may involve more than one 60-day episode of care. OASIS also records a Medicare provider identifier of each home health agency from which patients receive care.

For example, the HRR of Portland, Oregon (a non-CON state), includes parts of southern Washington (a CON state).

This sample contains 653,816 Medicare beneficiaries.

The adjusters include patient age, gender, admitting source, and physical and behavioral health conditions.

We also include the total number of days of treatment (i.e., from the beginning to the end of care) to absorb unobserved health conditions (e.g., chronic diseases). Results are robust when we exclude this control.

For functional improvement measures, higher values indicate better quality; for hospitalization rate and emergency department visit rate, lower values indicate better quality.

We use agency-year as the unit of analysis for staffing level because the staffing information is observed for each year. The estimations are weighted by the number of discharges by each agency in each year.
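To make the weighting concrete, here is a minimal sketch of a weighted least-squares fit with one regressor, where each agency-year observation is weighted by its number of discharges. It is only an illustration of the weighting idea: the single-regressor form, the variable names, and the toy numbers are assumptions, and the paper's actual specification is not reproduced here.

```python
def weighted_linear_fit(x, y, w):
    """Weighted least squares for y = a + b*x with observation weights w.

    Minimizes sum_i w_i * (y_i - a - b*x_i)**2 and returns (a, b).
    """
    if not (len(x) == len(y) == len(w)):
        raise ValueError("x, y and w must have the same length")
    w_total = sum(w)
    # Weighted means of x and y.
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / w_total
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / w_total
    # Weighted covariance and variance (common normalization cancels).
    s_xy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    s_xx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    if s_xx == 0:
        raise ValueError("x has no weighted variation")
    slope = s_xy / s_xx
    intercept = y_bar - slope * x_bar
    return intercept, slope


# Hypothetical agency-year rows: a regressor, a staffing outcome, and the
# number of discharges used as the weight.
regressor = [0.0, 1.0, 2.0]
staffing = [1.0, 3.0, 5.0]
discharges = [10, 20, 5]
intercept, slope = weighted_linear_fit(regressor, staffing, discharges)
```

With weights proportional to discharges, agency-years that treat more patients contribute more to the estimate, so the coefficients describe the experience of the average discharge rather than the average agency.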

This pattern is observed in Jung et al. (2015).

This method of using “unused observables” to test for potential patient selection has been adopted in Dranove et al. (2003), Werner & Asch (2005), Kolstad (2013) and Finkelstein & Poterba (2014).

As explained in Jung & Polsky (2014), although home health patients receive care at home and do not travel, they still compare and choose among agencies within a specific geographic area, such as a zip code. Furthermore, nurses need to travel to the patient’s home, and thus local distance matters for patient choice.

References

- Carter A, 2009. Nursing shortage predicted to be hardest on home healthcare. Home Healthcare Now 27, 198

- Chassin MR, 2002. Achieving and sustaining improved quality: lessons from New York State and cardiac surgery. Health Affairs 21, 40–51

- Chou S-Y, Deily ME, Li S, Lu Y, 2014. Competition and the impact of online hospital report cards. Journal of Health Economics 34, 42–58

- Conover CJ, Sloan FA, 1998. Does removing Certificate-of-Need regulations lead to a surge in health care spending? Journal of Health Politics, Policy and Law 23, 455–481

- Cutler DM, Huckman RS, Kolstad JT, 2010. Input constraints and the efficiency of entry: Lessons from cardiac surgery. American Economic Journal: Economic Policy 2, 51–76

- DeLellis N, 2009. Determinants of Nursing Home Performance: Examining the Relationship Between Quality and Efficiency. Virginia Commonwealth University Working Paper

- DeLellis NO, Ozcan YA, 2013. Quality outcomes among efficient and inefficient nursing homes: A national study. Health Care Management Review 38, 156–165

- Dranove D, Jin GZ, 2010. Quality disclosure and certification: Theory and practice. Journal of Economic Literature 48, 935–63

- Dranove D, Kessler D, McClellan M, Satterthwaite M, 2003. Is more information better? The effects of “report cards” on health care providers. Journal of Political Economy 111, 555–588

- Dranove D, Satterthwaite MA, 1992. Monopolistic competition when price and quality are imperfectly observable. The RAND Journal of Economics, 518–534

- Epstein RA, 2013. Fixing Obamacare: The Virtues of Choice, Competition and Deregulation. New York University Annual Survey of American Law 68, 493

- Finkelstein A, Poterba J, 2014. Testing for asymmetric information using “unused observables” in insurance markets: Evidence from the UK annuity market. Journal of Risk and Insurance 81, 709–734

- Grabowski DC, Ohsfeldt RL, Morrisey MA, 2003. The effects of CON repeal on Medicaid nursing home and long-term care expenditures. Inquiry 40, 146–157

- Grabowski DC, Stevenson DG, Caudry DJ, O’Malley AJ, Green LH, Doherty JA, Frank RG, 2017. The Impact of Nursing Home Pay-for-Performance on Quality and Medicare Spending: Results from the Nursing Home Value-Based Purchasing Demonstration. Health Services Research 52, 1387–1408

- Grabowski DC, Town RJ, 2011. Does information matter? Competition, quality, and the impact of nursing home report cards. Health Services Research 46, 1698–1719

- Gravelle H, Sivey P, 2010. Imperfect information in a quality-competitive hospital market. Journal of Health Economics 29, 524–535

- Hannan EL, Sarrazin MSV, Doran DR, Rosenthal GE, 2003. Provider profiling and quality improvement efforts in coronary artery bypass graft surgery: the effect on short-term mortality among Medicare beneficiaries. Medical Care, 1164–1172

- Ho V, Ku-Goto MH, Jollis JG, 2009. Certificate of need (CON) for cardiac care: controversy over the contributions of CON. Health Services Research 44, 483–500

- Horwitz JR, Polsky D, 2015. Cross Border Effects of State Health Technology Regulation. American Journal of Health Economics

- Huang SS, Hirth RA, 2016. Quality rating and private-prices: Evidence from the nursing home industry. Journal of Health Economics 50, 59–70

- Jung JK, Wu B, Kim H, Polsky D, 2015. The Effect of Publicized Quality Information on Home Health Agency Choice. Medical Care Research and Review

- Jung K, Polsky D, 2014. Competition and quality in home health care markets. Health Economics 23, 298–313

- Katz ML, 2013. Provider competition and healthcare quality: More bang for the buck? International Journal of Industrial Organization 31, 612–625

- Kessler DP, McClellan MB, 2000. Is hospital competition socially wasteful? The Quarterly Journal of Economics 115, 577–615

- Kim H, Norton EC, 2015. Practice patterns among entrants and incumbents in the home health market after the prospective payment system was implemented. Health Economics 24, 118–131

- Kolstad JT, 2013. Information and quality when motivation is intrinsic: Evidence from surgeon report cards. The American Economic Review 103, 2875–2910

- Lu FS, 2012. Multitasking, information disclosure, and product quality: Evidence from nursing homes. Journal of Economics & Management Strategy 21, 673–705

- McCarthy IM, Darden M, 2017. Supply-Side Responses to Public Quality Ratings: Evidence from Medicare Advantage. American Journal of Health Economics

- Murtaugh CM, Peng T, Aykan H, Maduro G, 2007. Risk adjustment and public reporting on home health care. Health Care Financing Review 28, 77

- Polsky D, David G, Yang J, Kinosian B, Werner RM, 2014. The effect of entry regulation in the health care sector: The case of home health. Journal of Public Economics 110, 1–14

- Salkever DS, 2000. Regulation of prices and investment in hospitals in the United States. Handbook of Health Economics 1, 1489–1535

- Shahian DM, Normand S-L, Torchiana DF, Lewis SM, Pastore JO, Kuntz RE, Dreyer PI, 2001. Cardiac surgery report cards: comprehensive review and statistical critique. The Annals of Thoracic Surgery 72, 2155–2168

- Werner RM, Asch DA, 2005. The unintended consequences of publicly reporting quality information. JAMA 293, 1239–1244

- Werner RM, Asch DA, Polsky D, 2005. Racial profiling: the unintended consequences of coronary artery bypass graft report cards. Circulation 111, 1257–1263

- Zhao X, 2016. Competition, information, and quality: Evidence from nursing homes. Journal of Health Economics 49, 136–152

- Zinn JS, 1994. Market competition and the quality of nursing home care. Journal of Health Politics, Policy and Law 19, 555–582