Abstract

Objective

To evaluate the dimensionality of hospital quality indicators treated as unidimensional in a prior publication.

Data Source/Study Design

Pooled cross‐sectional 2010‐2011 Hospital Compare data (10/1/10 and 10/1/11 archives) and the 2012 American Hospital Association Annual Survey.

Data Extraction

We used 71 indicators of structure, process, and outcomes of hospital care in a principal component analysis of Ridit scores to evaluate the dimensionality of the indicators. We also conducted an exploratory factor analysis using only the indicators in the Centers for Medicare & Medicaid Services' Hospital Value‐Based Purchasing Program.

Principal Findings

There were four underlying dimensions of hospital quality: patient experience, mortality, and two clinical process dimensions.

Conclusions

Hospital quality should be measured using a variety of indicators reflecting different dimensions of quality. Treating hospital quality as unidimensional leads to erroneous conclusions about the performance of different hospitals.

Keywords: hospitals, patient experience, Quality of care/patient safety (measurement)

1. INTRODUCTION

Ensuring that all Americans have equal access to high‐quality health care is a core goal of the US health care system, but the quality of care is difficult to measure. The National Academy of Medicine notes that there are multiple aspects of health care quality: care should be safe, timely, equitable, effective, efficient, and patient‐centered. Patient‐centered care is defined as care that is “respectful of and responsive to individual patient preferences, needs, and values, and [ensures] that patient values guide all clinical decisions”.1

Patient experience measures indicate the extent to which care is patient‐centered.2 Anhang Price et al.3 reviewed the literature and concluded that better patient experiences are positively associated with patient adherence, use of some recommended clinical processes, better hospital patient safety culture, and lower unnecessary utilization, especially in the inpatient setting. However, many of the reviewed studies reported weak or no associations between patient experiences and clinical outcomes, suggesting that patient experiences and clinical measures may reflect different dimensions of health care quality. Manary et al.4 concluded that patient experience represents a dimension of health care quality distinct from other dimensions. For example, Veterans Affairs (VA) hospitals have been found to perform better than non‐VA hospitals on most outcome measures, but VA hospitals did worse on patient experience and behavioral health measures.5

Donabedian provided a framework for examining health care quality that distinguishes between structural measures, process measures, and outcome measures.6 The Agency for Healthcare Research and Quality provides guidance for the selection of quality measures using both the Donabedian framework and the National Academy of Medicine's six domains of health care quality.7 A review of public reporting programs from 2010 found that most report quality measures from several different domains.8 The Centers for Medicare & Medicaid Services' (CMS') Hospital Value‐Based Purchasing Program takes a multidimensional approach to measuring hospital quality, rewarding hospitals based on four domains of quality: safety, clinical care, efficiency and cost reduction, and patient and caregiver‐centered experience of care.9, 10, 11

Using a principal component analysis of Ridit scores,12 Lieberthal and Comer13 created an overall measure of hospital quality by combining 71 variables that are a mixture of structural characteristics, patient readmission and mortality rates, clinical process measures, and patient experience measures. Ridit scores were originally developed to transform ordinal variables into a probability scale,14 but can be applied to continuous and dichotomous indicators, as was done in Lieberthal and Comer.13 Patient experience was measured using the Consumer Assessment of Healthcare Providers and Systems (CAHPS®) Hospital survey (HCAHPS).15, 16 Twenty measures of hospital characteristics that are not traditionally considered measures of quality, such as the number of beds and patient volume, were also included. While such structural characteristics may be correlated with quality, they do not directly reflect the quality of care provided. A preferable approach is to measure the quality of care directly and then assess how it varies by system characteristics. For example, the number of beds in a hospital has been shown to have different associations with HCAHPS and clinical quality indicators.17
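For reference, the Ridit scoring used in the PRIDIT approach can be written compactly. The notation below is ours and reflects our reading of Brockett et al.12 rather than a quotation from either paper: indicator t has ordered categories i = 1, …, k_t with sample proportions \hat{p}_{tj}; R_{ti} is Bross's ridit14 and B_{ti} is its rescaling to [−1, 1].

$$
R_{ti} = \sum_{j<i}\hat{p}_{tj} + \tfrac{1}{2}\hat{p}_{ti}, \qquad
B_{ti} = \sum_{j<i}\hat{p}_{tj} - \sum_{j>i}\hat{p}_{tj} = 2R_{ti} - 1, \qquad
\sum_{i=1}^{k_t}\hat{p}_{ti}\,B_{ti} = 0 .
$$

The last identity follows from \sum_i \hat{p}_{ti} R_{ti} = 1/2, so every rescaled indicator has mean zero in the sample. For continuous or dichotomous indicators, one natural reading is to treat each distinct observed value as an ordered category.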

A key assumption of Lieberthal and Comer13 is that all 71 measures used in their analysis are indicators of a single underlying hospital quality dimension, identified by the first principal component of the Ridit scores. However, no diagnostic information is presented from the principal component analysis to support the assumption of unidimensionality and the use of a single principal component. In other studies, quality indicators have been shown to be multidimensional.18, 19, 20, 21, 22 Based on their analysis, Lieberthal and Comer concluded that

patient satisfaction is a poor measure of quality…. the best hospitals were not the ones that were the quietest or that had the most responsive clinicians. Busier hospitals tended to have better performance… These hospitals scored high using process and outcome variables and indicators of volume, but only in the middle in terms of patient satisfaction (p. 32).13

We reanalyze the data of Lieberthal and Comer,13 using their analytic approach, to assess whether the 71 indicators reflect a single underlying construct. We also perform an exploratory factor analysis that limits the quality measures to a more conventional set, specifically, those included in CMS' Hospital Value‐Based Purchasing Program. This program does not include hospital structural characteristics (eg, patient volume) because, as noted above, delivery system characteristics are not considered indicators of care quality. The performance period for the 2013 Hospital Value‐Based Purchasing Program was July 1, 2011 through March 31, 2012, which partially overlaps with our study years. The domains covered by the 2013 program were patient experience and clinical processes.

2. METHODS

2.1. Measures

The 71 indicator variables used by Lieberthal and Comer13 included 20 structural measures, 26 clinical process measures, six patient outcome measures (patient readmission and mortality rates), and 10 patient experience measures. The 10 patient experience measures were used to create 19 indicators by calculating top‐box (percent of responses in the highest response category) and middle‐box scores. More typically, either only top‐box scores are used, as in the Hospital Value‐Based Purchasing Program, or the full range of top‐, middle‐, and bottom‐box scores is reported, as on the Hospital Compare website.23 When assessing Lieberthal and Comer's13 assumption of a single underlying construct, we use the top‐box and middle‐box representation of the patient experience measures to be consistent with their analyses. However, for the exploratory factor analysis, we use a more traditional representation of the patient experience measures by limiting the quality measures to those included in the Hospital Value‐Based Purchasing Program, which uses only top‐box scores.
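The following sketch makes the box-score distinction concrete. It computes top-, middle-, and bottom-box percentages for a single four-category HCAHPS-style item ("never", "sometimes", "usually", "always") at one hospital. The respondent counts and the function name are hypothetical. The grouping of "sometimes" and "never" into the bottom box reflects our understanding of Hospital Compare's presentation. The patient-mix and survey-mode adjustments that CMS applies to reported HCAHPS scores are not modeled.

```python
# Illustrative only: counts are hypothetical, and CMS's patient-mix and
# survey-mode adjustments are not modeled.
CATEGORIES = ("never", "sometimes", "usually", "always")  # lowest to highest


def box_scores(counts):
    """Return (top, middle, bottom) box percentages for one item at one hospital.

    Top box = "always", middle box = "usually", bottom box = "sometimes"
    or "never" (assumed grouping).
    """
    total = sum(counts.get(c, 0) for c in CATEGORIES)
    if total == 0:
        raise ValueError("no responses for this item")
    top = 100.0 * counts.get("always", 0) / total
    middle = 100.0 * counts.get("usually", 0) / total
    bottom = 100.0 * (counts.get("sometimes", 0) + counts.get("never", 0)) / total
    return top, middle, bottom


example = {"never": 5, "sometimes": 15, "usually": 60, "always": 120}
print(box_scores(example))  # (60.0, 30.0, 10.0)
```

A top-box-only analysis keeps the first element. The representation used to mirror Lieberthal and Comer keeps the first two.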

All clinical process, patient readmission and mortality, and patient experience measures were obtained from the Hospital Compare database (archives dated October 1, 2010 and October 1, 2011);24 a similar dataset was used in Lieberthal and Comer.13 Structural measures from Hospital Compare were supplemented with measures from the 2012 American Hospital Association Annual Survey. A complete list of indicators used in these analyses is in the Supporting Information.

2.2. Analysis

Principal component analysis of Ridit scores requires a complete dataset. While other imputation strategies are perhaps preferable,25 we used mean imputation to be consistent with Lieberthal and Comer.13 We developed an R function26 to conduct the principal component analyses of Ridit scores and confirmed the validity of our code by comparing the output to examples provided in the appendix of Brockett et al.12 and in Lieberthal and Comer.27 Our R code is available upon request from the first author.
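The authors' R function is not reproduced in the paper. As a rough illustration only, the Python sketch below chains the steps described here:

- column-wise mean imputation;
- a Brockett-style Ridit transform that treats each distinct value as an ordered category;
- weights taken from the leading eigenvector of F'F, where F is the hospital-by-indicator matrix of Ridit scores.

Power iteration finds that eigenvector. Because these Ridit scores have mean zero, F'F is proportional to their covariance matrix. The function names are hypothetical. Details of Brockett et al.'s method, such as any final normalization of the scores, are not reproduced.

```python
import math
from collections import Counter


def mean_impute(column):
    """Replace missing values (None) with the mean of the observed values."""
    observed = [x for x in column if x is not None]
    mean = sum(observed) / len(observed)
    return [mean if x is None else x for x in column]


def ridit(column):
    """Brockett-style Ridit score: P(X < x) - P(X > x), a value in [-1, 1].

    Each distinct observed value is treated as an ordered category, so the
    same function handles ordinal, dichotomous, and continuous indicators.
    """
    n = len(column)
    counts = Counter(column)
    below, score = 0.0, {}
    for value in sorted(counts):
        p = counts[value] / n
        score[value] = below - (1.0 - below - p)
        below += p
    return [score[x] for x in column]


def first_principal_component(F, iters=500, tol=1e-12):
    """Leading unit eigenvector of F'F (F: hospitals x indicators), by power iteration."""
    p = len(F[0])
    A = [[sum(row[j] * row[k] for row in F) for k in range(p)] for j in range(p)]
    w = [1.0 / math.sqrt(p)] * p
    for _ in range(iters):
        v = [sum(A[j][k] * w[k] for k in range(p)) for j in range(p)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        converged = max(abs(a - b) for a, b in zip(v, w)) < tol
        w = v
        if converged:
            break
    return w


def pridit(columns):
    """Weights and hospital scores from indicator columns that may contain None."""
    scored = [ridit(mean_impute(col)) for col in columns]
    F = [list(row) for row in zip(*scored)]  # rows = hospitals
    w = first_principal_component(F)
    scores = [sum(f * wj for f, wj in zip(row, w)) for row in F]
    return w, scores


weights, scores = pridit([[1, 2, 3, None], [10, 20, 30, 40]])
```

Because w and −w are equally valid eigenvectors, the orientation of the resulting scores must be fixed by a separate convention.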

Lieberthal and Comer's13 approach of combining the 71 indicators into a single measure of hospital quality assumes that the measures represent a single underlying construct of hospital quality. We reanalyze data similar to theirs and examine multiple principal components of the Ridit scores. We examine correlations of the principal components with a select set of measures from the 2011 Hospital Compare database. This allows us to assess whether hospital quality scores derived from 2010 data are predictive of hospital quality measures in 2011.

To be consistent with the existing literature on hospital quality measurement, an exploratory factor analysis was also performed on a set of indicators consistent with CMS' Hospital Value‐Based Purchasing Program. This set does not include structural measures or the middle‐box scores of the patient experience measures.

3. RESULTS AND DISCUSSION

Lieberthal and Comer13 commented that for the HCAHPS patient experience measures, “In many cases, the variable weight for the mid‐level response was of similar magnitude but was in the opposite direction of the corresponding high‐level response. Taken together, these variable weights largely cancel out. Thus, the contribution of patient satisfaction variables to scores is less than the individual variable ranks imply” (p. 24). This conclusion only holds if the “middle‐box” and “top‐box” responses occur at the same rate in most hospitals—that is, if the percentage of respondents who answer “always” is the same as the percentage who respond “usually” within most hospitals. However, this is not the case (Table 1) as “top‐box” responses (eg, “always”) are far more common than “middle‐box” responses (eg, “usually”). Further, the HCAHPS patient experience measures explain 88% of the variance in the first principal component of Ridit scores, compared to 7% for the clinical process measures, 2% for the structural measures, and less than 0.1% for the outcome measures. Hence, most of the variation in the first principal component is explained by the patient experience measures. That is, the weights corresponding to the “middle‐box” and “top‐box” patient experience measures do not cancel out.

Table 1.

Mean hospital‐level percent endorsing each HCAHPS response option from the Hospital Compare database dated October 1, 2011

| HCAHPS measure | Number of hospitals | Top box | Middle box |
|---|---|---|---|
| Hospital clean | 3829 | 71% | 19% |
| Hospital quiet | 3829 | 58% | 30% |
| Doctors communicated well | 3829 | 80% | 15% |
| Nurses communicated well | 3829 | 76% | 19% |
| Received help | 3829 | 64% | 25% |
| Pain well controlled | 3829 | 69% | 23% |
| Staff explained new medications | 3825 | 61% | 18% |
| Recommend hospital | 3828 | 70% | 25% |
| Staff gave discharge information | 3827 | 82% | 18% |
| Overall hospital rating (top box: 9 or 10; middle box: 7 or 8) | 3828 | 68% | 23% |

Lieberthal and Comer13 also concluded that “patient satisfaction is a poor measure of quality” (p. 32). They reached this conclusion by estimating the first principal component of Ridit scores using only patient experience and hospital structural measures and then correlating the resulting scores with heart attack, heart failure, and pneumonia mortality rates. They found that better patient experience was correlated with higher mortality rates. Because Lieberthal and Comer13 assume that hospital quality is unidimensional and evaluate the first principal component by its correlations with mortality and readmission rates, they implicitly assume that this single dimension of hospital quality is fully captured by mortality and readmission rates. In addition, a positive association between patient experience and mortality does not necessarily mean that patient experience is a poor quality measure. For example, sicker patients may receive more attention, especially near the end of life.28

We reexamined the correlation of the principal components of Ridit scores with mortality rates, but also considered the correlation with other measures of quality. We ran the analysis in two ways: (a) including the full set of indicators and (b) excluding the patient experience measures. This allowed us to examine the impact that the inclusion of patient experience measures has on the correlation between the principal component scores and various quality measures. Table 2 shows the product‐moment correlations between the first two principal components of Ridit scores from 2010 and a representative set of the indicators from 2011. Two years of data are used to assess how scores calculated in 2010 are correlated with indicators of quality in 2011. The sign of the correlations and the direction of the principal component scores are arbitrary because the direction of loadings on principal components is arbitrary. The first principal component of Ridit scores from 2010 that included all indicators is highly correlated with the patient experience measures from 2011 (correlations of −0.73 and 0.65 for always received help and usually received help, respectively). However, the correlations between the first principal component of Ridit scores from 2010 that excludes patient experience measures and the patient experience measures from 2011 are about half as large (correlations of −0.36 and 0.38 for always received help and usually received help, respectively). This indicates that patient experience is a dimension of quality not fully represented by the other measures.
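The point that the signs of these correlations are arbitrary can be checked directly. If w is an eigenvector, so is −w. Negating a component's scores therefore negates every correlation with that component while leaving each magnitude unchanged. The minimal Python sketch below uses made-up scores and indicator values for five hospitals.

```python
import math


def pearson(x, y):
    """Product-moment (Pearson) correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical 2010 component scores and a 2011 indicator for five hospitals.
scores_2010 = [-1.2, -0.4, 0.1, 0.6, 0.9]
indicator_2011 = [62.0, 66.0, 65.0, 71.0, 74.0]

r = pearson(scores_2010, indicator_2011)
r_flipped = pearson([-s for s in scores_2010], indicator_2011)
# Flipping the component's direction flips the sign of r but not its size.
assert math.isclose(r_flipped, -r)
```

For this reason, only the relative signs and magnitudes within a column of Table 2 carry information.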

Table 2.

Correlation of 1st and 2nd principal components of Ridit scores from 2010 with select 2011 Hospital Compare measures in 4629 hospitals

| Measure | Including patient experienceᵃ: 1st principal component | Including patient experienceᵃ: 2nd principal component | Excluding patient experienceᵇ: 1st principal component | Excluding patient experienceᵇ: 2nd principal component |
|---|---|---|---|---|
| Heart failure mortality (count) | 0.43 | 0.42 | 0.59 | −0.26 |
| Heart failure mortality rate | −0.15 | −0.03 | −0.12 | 0.07 |
| Always receive help | −0.73 | 0.12 | −0.36 | 0.02 |
| Usually receive help | 0.65 | 0.03 | 0.38 | −0.01 |
| Heart attack patients given aspirin at arrival | 0.17 | 0.19 | 0.25 | −0.01 |

ᵃ Includes all 71 indicator variables: 20 structural measures, 26 clinical process measures, 6 patient readmission and mortality rates, and 19 patient experience indicators.

ᵇ Includes 52 indicator variables: 20 structural measures, 26 clinical process measures, and 6 patient readmission and mortality rates.

To explore this further, we considered more than just the first principal component of Ridit scores. Eighteen of the eigenvalues for the 71 indicators exceeded one (Guttman's weakest lower bound).29 A scree plot of the eigenvalues also indicated multiple underlying dimensions (not shown). We restricted our attention to the first two principal components of the Ridit scores for three reasons: (a) ease of presentation, (b) the conclusions of Lieberthal and Comer13 can be refuted by including only one additional dimension of quality, and (c) we perform a more comprehensive exploratory factor analysis next.
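The eigenvalue-greater-than-one rule can be sketched in a few lines. The Python below is illustrative and is not the authors' code. It finds all eigenvalues of a symmetric correlation matrix with the classical cyclic Jacobi method and counts those above one.

The example matrix is made up. It has two uncorrelated groups of three items, with a within-group correlation of 0.6. Its eigenvalues are 2.2, 2.2, and 0.4 (four times), so the rule retains two components. In practice the count is read alongside a scree plot, as in the text.

```python
import math


def jacobi_eigenvalues(A, tol=1e-20, max_sweeps=100):
    """All eigenvalues of a symmetric matrix, largest first (cyclic Jacobi method)."""
    n = len(A)
    A = [list(row) for row in A]  # work on a copy
    for _ in range(max_sweeps):
        off = sum(A[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if A[p][q] == 0.0:
                    continue
                # Rotation chosen so that the (p, q) entry becomes zero.
                theta = (A[q][q] - A[p][p]) / (2.0 * A[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # A <- A J
                    akp, akq = A[k][p], A[k][q]
                    A[k][p], A[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # A <- J^T A
                    apk, aqk = A[p][k], A[q][k]
                    A[p][k], A[q][k] = c * apk - s * aqk, s * apk + c * aqk
                A[p][q] = A[q][p] = 0.0
    return sorted((A[i][i] for i in range(n)), reverse=True)


def block_correlation(sizes, within):
    """Hypothetical correlation matrix: uncorrelated blocks of equicorrelated items."""
    n = sum(sizes)
    R = [[0.0] * n for _ in range(n)]
    start = 0
    for size in sizes:
        for i in range(start, start + size):
            for j in range(start, start + size):
                R[i][j] = 1.0 if i == j else within
        start += size
    return R


def count_eigenvalues_above_one(R):
    """Eigenvalue-greater-than-one rule applied to a correlation matrix."""
    return sum(1 for ev in jacobi_eigenvalues(R) if ev > 1.0)


R = block_correlation([3, 3], within=0.6)
print(count_eigenvalues_above_one(R))  # 2
```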

Table 2 also includes the product‐moment correlations between the second principal component of Ridit scores from 2010 and a representative set of the indicators from 2011. Considering only the principal component model that includes all indicators, the second principal component of Ridit scores is only weakly correlated with the patient experience measures from 2011 (correlations of 0.12 and 0.03 for always received help and usually received help, respectively). Together with the previous results, this indicates that when all 71 indicators are included, the first dimension is defined by patient experience. The second principal component of Ridit scores is still correlated with mortality and process measures from 2011, indicating that it captures a dimension of quality that is not measured by patient experience. Again, the results of our principal component analysis of Ridit scores are consistent with multiple dimensions of hospital quality.

Next, we perform an exploratory factor analysis using only measures that are commonly considered indicators of care quality. We exclude all structural characteristics of hospitals and restrict our attention to the measures included in CMS' Hospital Value‐Based Purchasing Program for FY2014. CMS selected these measures to represent the domains on which hospitals are rewarded for high‐quality care. The set of indicators used in this analysis is shown in Table 3. As noted above, the middle‐box patient experience scores are not in this set.

Table 3.

Standardized factor loadings for 25 Commonly Used Indicators of Hospital Quality (loadings less than 0.150 in absolute value suppressed)

| Indicator | Factor 1 | Factor 2 | Factor 3 | Factor 4 |

|---|---|---|---|---|

| Heart attack patients given fibrinolytic agent within 30 minutes of arrival (AMI‐7a) | ||||

| Heart attack patients given PCI within 90 minutes of arrival (AMI‐8a) | 0.154 | |||

| Heart failure patients given discharge instructions (HF‐1) | 0.518 | |||

| Pneumonia patients whose initial emergency room blood culture was performed prior to the administration of the first hospital dose of antibiotics (PN‐3b) | 0.492 | |||

| Pneumonia patients given the most appropriate initial antibiotic (PN‐6) | 0.535 | |||

| Surgery patients who were given an antibiotic at the right time (within one hour before surgery) to help prevent infection (SCIP‐INF‐1) | 0.461 | |||

| Surgery patients who were given the right kind of antibiotic to help prevent infection (SCIP‐INF‐2) | 0.338 | 0.229 | ||

| Surgery patients whose preventive antibiotics were stopped at the right time (SCIP‐INF‐3) | 0.424 | |||

| Heart surgery patients whose blood sugar (blood glucose) is kept under good control in the days right after surgery (SCIP‐INF‐4) | ||||

| Surgery patients whose urinary catheters were removed on the first or second day after surgery (SCIP‐INF‐9) | 0.341 | |||

| Surgery patients on beta blockers before coming to the hospital who were kept on the beta blockers during the perioperative period (SCIP‐CARD‐2) | 0.37 | |||

| Surgery patients whose doctors ordered treatments to prevent blood clots after certain types of surgeries (SCIP‐VTE‐1) | 0.914 | |||

| Patients who got treatment at the right time to help prevent blood clots after certain types of surgery (SCIP‐VTE‐2) | 0.879 | |||

| Hospital always clean (H‐CLEAN‐HSP‐A‐P) | 0.744 | |||

| Hospital always quiet (H‐QUIET‐HSP‐A‐P) | 0.680 | −0.173 | ||

| Doctors always communicated well (H‐COMP‐2‐A‐P) | 0.812 | |||

| Nurses always communicated well (H‐COMP‐1‐A‐P) | 0.957 | |||

| Patients always received help (H_COMP_3_A_P) | 0.894 | |||

| Pain was always well controlled (H_COMP_4_A_P) | 0.832 | |||

| Staff gave discharge information (H_COMP_6_Y_P) | 0.538 | 0.334 | ||

| Staff always explained new medications (H_COMP_5_A_P) | 0.836 | |||

| Hospital rated 9‐10 (H_HSP_RATING_9_10) | 0.832 | 0.151 | ||

| PN mortality rate (MORT_30_PN) | 0.585 | |||

| HF mortality rate (MORT_30_HF) | 0.649 | |||

| HA mortality rate (MORT_30_AMI) | 0.457 |

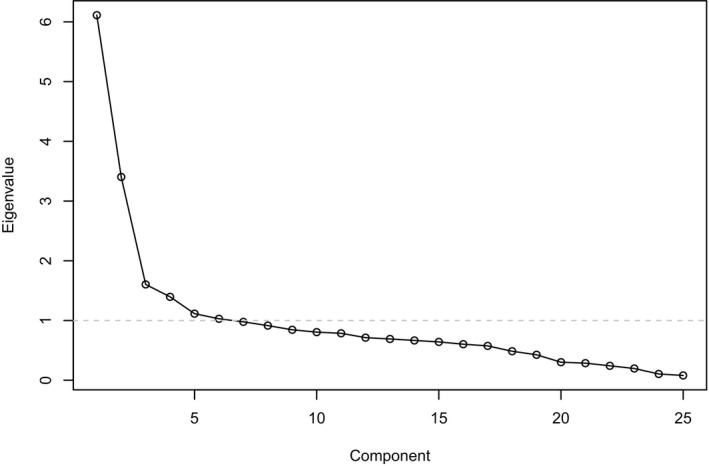

We performed an exploratory factor analysis on the reduced set of 25 quality indicators. There were 6 eigenvalues exceeding one in a principal component analysis of the correlation matrix. The scree plot of the eigenvalues (Figure 1) suggested up to 4 factors. We estimated a 4‐factor solution using Promax oblique rotation. Factor loadings are presented in Table 3. Patient experience measures loaded on the first factor. Several heart failure, pneumonia, and surgery clinical process measures loaded on the second factor. Two clinical processes measuring appropriate use and timing of venous thromboembolism prophylaxis loaded on the third factor. Heart failure, heart attack, and pneumonia mortality rates loaded on the fourth factor.

Figure 1.

Scree plot of eigenvalues from the exploratory factor analysis of 25 indicators of hospital quality

In summary, the exploratory factor analysis suggests that there are four underlying constructs: one measured by patient experience, two measured by clinical processes, and one measured by patient mortality.

4. CONCLUSIONS

The assumption that hospital quality is unidimensional is empirically testable and was rejected in the analyses reported here. Hence, our analyses support multidimensionality and indicate that an unwarranted assumption of unidimensionality can lead to incorrect inferences. In this instance, an incorrect assumption of unidimensionality led to incorrect conclusions about the validity of patient experience measures. Our findings highlight the need to measure quality using indicators that capture its multiple dimensions. Quality measurement that reflects these multiple underlying dimensions is necessary to identify performance on specific aspects of care and to improve quality. In addition, these findings highlight the importance of limiting the inputs to quality measures. Including structural correlates that are not themselves indicative of quality can reward structure and penalize hospitals whose performance is unusually good compared with other hospitals of similar structure.

Our analyses focused on the assessment and measurement of multiple dimensions of hospital quality, but it may still be of interest to produce a single overall quality measure. Such a measure should not be an arbitrary function of tangential aspects of the inputs, such as the number of quality measures from each domain included in the analysis. For example, if nine patient experience measures and one clinical process measure are included in a principal component analysis, the first principal component will tend to align with patient experience. A better approach is to measure and report each dimension of quality and, where needed, assign importance weights to the dimensions.
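The point about the number of measures per domain can be illustrated with a made-up correlation matrix. Suppose there are nine items from one domain that correlate 0.5 with one another, and a single item from a second domain that correlates 0.2 with each of them. The Python sketch below finds the leading eigenvector by power iteration. Under these assumed correlations, about 98% of the squared loadings of the first principal component fall on the nine-item domain. The correlations are illustrative assumptions, not estimates from the Hospital Compare data.

```python
import math


def leading_eigenvector(A, iters=1000, tol=1e-13):
    """Unit leading eigenvector of a symmetric positive semidefinite matrix (power iteration)."""
    n = len(A)
    w = [1.0 / math.sqrt(n)] * n
    for _ in range(iters):
        v = [sum(A[i][j] * w[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        converged = max(abs(a - b) for a, b in zip(v, w)) < tol
        w = v
        if converged:
            break
    return w


def two_domain_correlation(n_a, n_b, r_within, r_between):
    """Hypothetical correlations: n_a items in domain A, n_b items in domain B."""
    n = n_a + n_b
    return [[1.0 if i == j else (r_within if (i < n_a) == (j < n_a) else r_between)
             for j in range(n)] for i in range(n)]


# Nine patient experience items and one clinical process item (assumed values).
R = two_domain_correlation(n_a=9, n_b=1, r_within=0.5, r_between=0.2)
w = leading_eigenvector(R)
share_a = sum(x * x for x in w[:9])  # share of squared loadings on domain A
print(round(share_a, 3))  # 0.979
```

The single clinical item still receives a nonzero loading. However, the component is essentially a summary of whichever domain contributes more items, which is why the text recommends reporting each dimension separately.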

For example, CMS weights and combines different aspects of hospital quality to produce an overall star rating on its Hospital Compare website. In addition, CMS' Hospital Value‐Based Purchasing Program bases payment on 30% patient experience, 30% patient outcomes, 20% clinical processes, and 20% efficiency.30 Reporting on separate aspects of care while also combining them provides both specific information and an overall indication of hospital quality that reflects policy priorities.
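Combining separately reported domain scores with explicit importance weights is simple arithmetic. The Python sketch below applies the 30/30/20/20 domain weights cited in the text to hypothetical domain scores, assumed to be already on a common 0 to 100 scale. It is not CMS's actual scoring method, which first converts each measure result to achievement and improvement points before the domains are weighted. The domain names, function name, and scores are illustrative.

```python
# Domain weights as described in the text; domain names are hypothetical labels.
VBP_WEIGHTS = {
    "patient_experience": 0.30,
    "outcomes": 0.30,
    "clinical_process": 0.20,
    "efficiency": 0.20,
}


def weighted_composite(domain_scores, weights=VBP_WEIGHTS):
    """Weighted average of domain scores assumed to share a 0-100 scale."""
    if set(domain_scores) != set(weights):
        raise ValueError("domain scores and weights must cover the same domains")
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[d] * domain_scores[d] for d in weights)


hospital = {"patient_experience": 60.0, "outcomes": 80.0,
            "clinical_process": 90.0, "efficiency": 50.0}
print(weighted_composite(hospital))
```

Because the weights are explicit, the overall score no longer depends on how many measures happen to be available in each domain.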

Supporting information

ACKNOWLEDGMENTS

Joint Acknowledgment/Disclosure Statement: This research was supported through cooperative agreements with the Agency for Healthcare Research and Quality (AHRQ; U18 HS016980 and U18HS016978). We would like to thank Biayna Darabidian and Fergal McCarthy for assistance with manuscript preparation. All authors declare no conflict of interest. All authors have approved the final version of the manuscript. There was no requirement for prior approval of the manuscript by the sponsoring agency, but the authors solicited feedback and provided an advance copy of the manuscript as a courtesy. No other disclosures.

Cefalu MS, Elliott MN, Setodji CM, Cleary PD, Hays RD. Hospital quality indicators are not unidimensional: A reanalysis of Lieberthal and Comer. Health Serv Res. 2019;54:502–508. 10.1111/1475-6773.13056

REFERENCES

- 1. Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: The National Academies of Sciences, Engineering, and Medicine; 2001.

- 2. de Silva A, Valentine N. Measuring Responsiveness: Results of a Key Informants Survey in 35 Countries. Geneva, Switzerland: World Health Organization; 2000.

- 3. Anhang Price R, Elliott MN, Zaslavsky AM, et al. Examining the role of patient experience surveys in measuring health care quality. Med Care Res Rev. 2014;71(5):522–554.

- 4. Manary MP, Boulding W, Staelin R, Glickman SW. The patient experience and health outcomes. N Engl J Med. 2013;368(3):201–203.

- 5. Blay E Jr, DeLancey JO, Hewitt DB, Chung JW, Bilimoria KY. Initial public reporting of quality at veterans affairs vs non‐veterans affairs hospitals. JAMA Intern Med. 2017;177(6):882–885.

- 6. Donabedian A. The quality of care. How can it be assessed? JAMA. 1988;260(12):1743–1748.

- 7. Agency for Healthcare Research and Quality. Select Measures To Report. 2015; https://www.ahrq.gov/professionals/quality-patient-safety/talkingquality/create/gather.html.

- 8. O'Neil S, Schurrer J, Simon S. Environmental Scan of Public Reporting Programs and Analysis. Washington, DC: Mathematica Policy Research Inc.; 2010.

- 9. Centers for Medicare & Medicaid Services. Medicare program; Hospital inpatient value‐based purchasing program. Fed Reg. 2011;76(88):26490–26547.

- 10. Centers for Medicare & Medicaid Services. Medicare Program; Hospital Inpatient Prospective Payment Systems for Acute Care Hospitals and the Long‐Term Care Hospital Prospective Payment System and Fiscal Year 2015 Rates; Quality Reporting Requirements for Specific Providers; Reasonable Compensation Equivalents for Physician Services in Excluded Hospitals and Certain Teaching Hospitals; Provider Administrative Appeals and Judicial Review; Enforcement Provisions for Organ Transplant Centers; and Electronic Health Record (EHR) Incentive Program. Fed Reg. 2014;79(163):49853–50536.

- 11. Centers for Medicare & Medicaid Services. Hospital Value‐Based Purchasing Program. Outreach and Education; 2017. https://www.cms.gov/Outreach-and-Education/Medicare-Learning-Network-MLN/MLNProducts/MLN-Publications-Items/CMS1255514.html.

- 12. Brockett PL, Derrig RA, Golden LL, Levine A, Alpert M. Fraud classification using principal component analysis of RIDITs. J Risk Insur. 2002;69(3):341–371.

- 13. Lieberthal RD, Comer DM. What are the characteristics that explain hospital quality? A longitudinal Pridit approach. Risk Manag Ins Rev. 2013a;17(1):17–35.

- 14. Bross IDJ. How to use ridit analysis. Biometrics. 1958;14(1):18–38.

- 15. Keller S, O'Malley AJ, Hays RD, et al. Methods used to streamline the CAHPS Hospital Survey. Health Serv Res. 2005;40(6 Pt 2):2057–2077.

- 16. Darby C, Hays RD, Kletke P. Development and Evaluation of the CAHPS® Hospital Survey. Health Serv Res. 2005;40(6 Pt 2):1973–1976.

- 17. Lehrman WG, Elliott MN, Goldstein E, Beckett MK, Klein DJ, Giordano LA. Characteristics of hospitals demonstrating superior performance in patient experience and clinical process measures of care. Med Care Res Rev. 2010;67(1):38–55.

- 18. Farquhar M. AHRQ Quality Indicators. Rockville, MD: Agency for Healthcare Research and Quality; 2008.

- 19. Hardeep C, Neetu K. Development of multidimensional scale for healthcare service quality (HCSQ) in Indian context. J Indian Bus Res. 2010;2(4):230–255.

- 20. Gutacker N, Street A. Multidimensional performance assessment of public sector organisations using dominance criteria. Health Econ. 2018;27(2):e13–e27.

- 21. Schneider EC, Zaslavsky AM, Landon BE, Lied TR, Sheingold S, Cleary PD. National quality monitoring of Medicare health plans: the relationship between enrollees' reports and the quality of clinical care. Med Care. 2001;39(12):1313–1325.

- 22. Wilson IB, Landon BE, Marsden PV, et al. Correlations among measures of quality in HIV care in the United States: cross sectional study. BMJ. 2007;335(7629):1085.

- 23. Medicare.gov. Hospital Compare. https://www.medicare.gov/hospitalcompare/search.html?

- 24. Centers for Medicare & Medicaid Services. Hospital Compare datasets. 2017; https://data.medicare.gov/data/hospital-compare.

- 25. Little RJA, Rubin DB. Statistical Analysis with Missing Data. Hoboken, NJ: John Wiley & Sons; 2014.

- 26. R: A Language and Environment for Statistical Computing [computer program]. Vienna, Austria: R Foundation for Statistical Computing; 2015.

- 27. Lieberthal RD, Comer DM. Validating the PRIDIT Method for Determining Hospital Quality with Outcomes Data. Schaumburg, IL: Society of Actuaries; 2013b.

- 28. Elliott MN, Haviland AM, Cleary PD, et al. Care experiences of managed care Medicare enrollees near the end of life. J Am Geriatr Soc. 2013;61(3):407–412.

- 29. Guttman L. Some necessary conditions for common factor analysis. Psychometrika. 1954;19(2):149–161.

- 30. Elliott MN, Beckett MK, Lehrman WG, et al. Understanding the role played by Medicare's patient experience points system in hospital reimbursement. Health Affairs (Project Hope). 2016;35(9):1673–1680.
