Supplemental Digital Content is available in the text.

Keywords: communication, electronic health records, intensive care unit, medical education, medical errors, teaching rounds

Abstract

Objectives:

Incomplete patient data, whether due to difficulty gathering and synthesizing information or to inappropriate data filtering, can lead clinicians to misdiagnosis and medical error. How completely ICU interprofessional rounding teams appraise the patient data set that informs clinical decision-making is unknown. This study measures how frequently physician trainees omit data from prerounding notes (“artifacts”) and verbal presentations during daily rounds.

Design:

Observational study.

Setting:

Tertiary academic medical ICU with an established electronic health record and where physician trainees are the primary presenters during daily rounds.

Subjects:

Presenters (medical students or resident physicians) and the interprofessional rounding team.

Interventions:

None.

Measurements and Main Results:

We quantified the amount and types of patient data omitted from photocopies of physician trainees’ artifacts and from audio recordings of oral ICU rounds presentations, compared with source electronic health record data. An audit of 157 patient presentations including 6,055 data elements across nine domains revealed that 100% of presentations contained omissions. Overall, 22.9% of data were missing from artifacts and 42.4% from presentations. The interprofessional team supplied only an additional 4.1% of available data. Frequency of trainee data omission varied by data type and sociotechnical factors. The strongest predictor of trainee verbal omissions was a preceding failure to include the data on the artifact. Passive data gathering via electronic health record macros resulted in extremely complete artifacts but paradoxically predicted a greater likelihood of verbal omission compared with manual notation. Interns verbally omitted the most data, whereas medical students omitted the least.

Conclusions:

In an academic rounding model reliant on trainees to preview and select data for presentation during ICU rounds, verbal appraisal of patient data was highly incomplete. Additional trainee oversight and education, improved electronic health record tools, and novel academic rounding paradigms are needed to address this potential source of medical error.

Vast human effort and cost are spent in collecting patient data in service of diagnostic inquiry and clinical monitoring of a patient’s condition. In the ICU, the quantity of data generated is particularly immense and accumulates on a continuous, compounding basis (1). Every day on rounds, the interprofessional ICU team gathers to gain a shared understanding of the patient’s status and craft the treatment plan (2, 3). Numerous studies (4, 5) support the benefits of this team-based approach, including reduction in ICU patient mortality (6). To prepare for presenting on rounds, clinicians “pre-round,” that is, independently gather, review, and cognitively process the patient database (7). Integrating key diagnostic information and recognizing clinical trends allow clinicians to arrive at correct clinical diagnoses and formulate appropriate treatment plans.

Conversely, when clinicians make patient care decisions on the basis of faulty, outdated, or incomplete data, or when they fail to synthesize information, patients may be misdiagnosed and harmed (8). An estimated 15% of patients experience diagnostic error (9, 10) with potentially grave consequences in the ICU, where patients lack the physiologic reserve to survive added harms (11). Faulty information synthesis (8) and inappropriate data selectivity (12) are the most common manifestations of cognitive biases such as premature closure and availability bias that lead clinicians toward the wrong diagnosis.

In the era of widespread electronic health record (EHR) use (13), clinicians perform many of the prerounding tasks of acquiring, collating, and processing patient data by directly interfacing with the EHR. EHR rounding widgets, data graphing tools, and macros that automatically import data into progress notes can support more efficient data gathering (14) that is less prone to the transcription errors of paper charting (15). Conversely, studies of EHR navigation in both real-world (16) and simulation-based (17) ICU settings demonstrate that chart review requires switching among more than 25 unique screens, suggesting poor EHR usability and design for data retrieval in critically ill patients.

By providing a time-stamped, legible, and geographically centralized database that can be retrospectively viewed, EHRs create a novel opportunity to study clinicians’ information gathering and processing behaviors in a manner previously infeasible. In prior work (18), we audited the accuracy of 1) EHR laboratory data extraction to prerounding notes (“artifacts”) and 2) subsequent verbalization on ICU rounds by comparing both to the original source data in the EHR. Physician trainee presenters failed to gather and misrepresented (i.e., omitted, misinterpreted, gave outdated or incorrect information) 22% and 39% of audited laboratory data, respectively. The majority of misrepresentations were omissions, likely due to some combination of selective filtering and failure to find the data in the EHR. The strongest predictor of accurate data verbalization was presence of the data on presenters’ artifacts, suggesting that, although imperfect, the artifact was an effective intermediary between the EHR and patient rounds.

In this study, we seek to expand our understanding of physician trainees’ data gathering and processing by applying a more rigorous methodology that extends the audit to other ICU data domains. We hypothesize that omissions will also be common across other data domains, but that using macros to automatically populate artifacts with patient data, as opposed to manual transcription, will be associated with fewer verbal omissions on rounds.

MATERIALS AND METHODS

This study was approved by the institutional review board and conducted in our 16-bed medical ICU (MICU) at Oregon Health and Science University (Portland, OR), a tertiary academic medical center. A detailed description of the study methodology is included in the Supplementary Materials (Supplemental Digital Content 1, http://links.lww.com/CCM/E198). Institutional EHR use (Epic Care; Epic Systems, Verona, WI) is well established. The interprofessional ICU team rounds immediately outside patient rooms, accompanied by two or three mobile EHR-equipped computers assigned to resident and attending physicians. Before rounds, physician trainees (medical students and residents) gather patient data generated since the previous day’s rounds and collate them onto a paper-based “artifact” that serves as a presentation aid during rounds. Rounds follow a standardized script, including time allotted for input from nursing, pharmacy, and respiratory therapy.

Interprofessional ICU rounds were audio recorded once weekly from October through December 2015 by trained observers not participating in patients’ clinical care. Artifacts were photocopied at the conclusion of rounds, and audio recordings were professionally transcribed. Rounding teams knew that they were being recorded, but researchers did not give advance notice of audit dates, collected data as silent observers, and arrived only minutes before rounds began to avoid contaminating trainees’ prerounding process. Observers collected descriptive characteristics related to human and sociotechnical factors.

We analyzed how commonly physician trainees fail to gather (“artifact omissions”) and verbalize (“trainee verbal omissions”) EHR data by retrospectively comparing the data present in the EHR before each rounding presentation with the data extracted to the artifact and then verbalized during the presentation. The audit included data from nine domains (Supplementary Tables 1–8, Supplemental Digital Content 1, http://links.lww.com/CCM/E198). Individual data elements (e.g., blood pressure within the vital signs domain) were eligible for analysis if they were resulted in the patient chart between noon of the previous day and the onset of the patient’s rounding presentation. An element was scored as an artifact omission if it was absent from the artifact, and as a verbal omission if it was not specifically named or described during rounds such that a listener without prior knowledge of the patient’s case could ascertain that the data existed. Presenters were not penalized for summarizing rather than naming individual data. When presenters did not verbalize data, we observed whether any other ICU team member supplied the missing data and report the frequency of “team verbal omissions.” Because our previous work showed that omissions accounted for nearly 80% of all data misrepresentations (18), and to minimize observer subjectivity, data audits were limited to completeness without consideration of accuracy, timeliness, or correctness of interpretation. To ensure consistent methodology, the researchers first performed dual data entry on 10 patients, with an interobserver agreement rate of 91%.
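The scoring rules above can be expressed as a small per-element classifier. The following is a minimal sketch under stated assumptions, not the authors’ instrument (they captured data in REDCap): the `DataElement` record and its field names are hypothetical, the eligibility window assumes morning rounds (so “the previous noon” is noon of the prior calendar day) with inclusive boundaries, and interobserver agreement is modeled as simple percent agreement because the text reports a single agreement rate without naming the statistic.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class DataElement:
    """Hypothetical record for one audited EHR data element."""
    domain: str                  # e.g., "vital signs"
    name: str                    # e.g., "blood pressure"
    resulted_at: datetime        # time the element was resulted in the chart
    on_artifact: bool            # present on the trainee's prerounding artifact
    verbalized_by_trainee: bool  # named or described by the presenter
    verbalized_by_team: bool     # supplied by another ICU team member


def is_eligible(elem: DataElement, presentation_start: datetime) -> bool:
    """Assumes morning rounds: window runs from noon of the prior day to presentation onset."""
    prev_noon = (presentation_start - timedelta(days=1)).replace(
        hour=12, minute=0, second=0, microsecond=0)
    return prev_noon <= elem.resulted_at <= presentation_start


def score_presentation(elements, presentation_start):
    """Count the three omission types among eligible elements of one presentation."""
    eligible = [e for e in elements if is_eligible(e, presentation_start)]
    return {
        "n": len(eligible),
        # absent from the prerounding artifact
        "artifact_omissions": sum(not e.on_artifact for e in eligible),
        # not named or described by the presenter
        "trainee_verbal_omissions": sum(not e.verbalized_by_trainee for e in eligible),
        # not voiced by anyone on the team (presenter or other member)
        "team_verbal_omissions": sum(
            not (e.verbalized_by_trainee or e.verbalized_by_team) for e in eligible),
    }


def percent_agreement(rater_a, rater_b):
    """Share of elements on which two observers assigned the same omission score."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equal-length, non-empty score lists")
    return sum(x == y for x, y in zip(rater_a, rater_b)) / len(rater_a)
```

A team verbal omission is counted only when neither the presenter nor anyone else voiced the element, which mirrors the text’s definition of the team supplementing trainee omissions.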

We report, by data domain, how frequently presenters failed to extract and verbalize data and how commonly other team members caught these omissions. Bivariate (chi-square test) and multiple logistic regression analyses were performed to establish associations between categorical human and sociotechnical variables and data omission. Analyses were repeated after grouping data elements by patient presentation. Study data were collected and managed using REDCap (Vanderbilt University, Nashville, TN) (19) electronic data capture tools hosted at Oregon Health and Science University. Data were analyzed with GraphPad Prism (GraphPad Software Inc., La Jolla, CA), JMP Statistical Software (SAS Institute, Cary, NC), and Microsoft Office Excel 2010 (Microsoft Corporation, Redmond, WA).
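For a single binary factor (for example, macro vs manual extraction) against omitted vs not omitted, the bivariate chi-square test reduces to a 2×2 table. The sketch below is illustrative only: the analyses were actually run in GraphPad Prism and JMP, the text does not say whether a continuity correction was applied (none is applied here), and the function name and example counts are hypothetical. It uses only the standard library: with 1 degree of freedom the statistic equals z², so the p value is `erfc(sqrt(chi2 / 2))`.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square test (no continuity correction) for a 2x2 table.

    Layout (rows = factor present / absent, columns = omitted / not omitted):
        [[a, b],
         [c, d]]
    Returns (chi2, p) with 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("each row and column total must be positive")
    # Shortcut form of sum((observed - expected)**2 / expected) for a 2x2 table
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 df, chi2 = z**2, so P(X > chi2) = P(|Z| > sqrt(chi2)) = erfc(sqrt(chi2 / 2))
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


if __name__ == "__main__":
    # Hypothetical counts, not study data
    print(chi_square_2x2(10, 20, 20, 10))
```

The multivariable logistic regression step is not reproduced here; it would normally be fitted with a statistics package rather than by hand.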

RESULTS

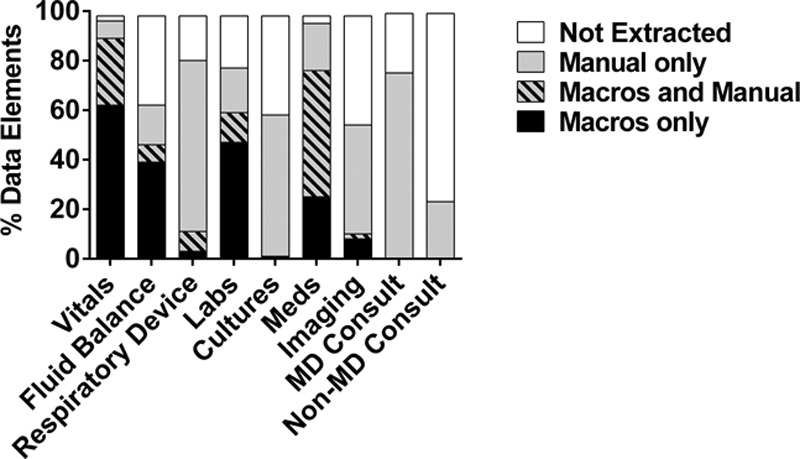

We audited 13 MICU rounding days, yielding 157 patient presentations and 6,055 data elements. The frequencies of audited data by data domain are shown in Supplementary Table 10 (Supplemental Digital Content 1, http://links.lww.com/CCM/E198). Laboratory data comprised the largest domain, with over 50% of all audited data elements. Descriptive characteristics of the rounds presentations, presenters, patients, attendings, and artifacts are listed in Supplementary Table 9 (Supplemental Digital Content 1, http://links.lww.com/CCM/E198). Interns presented most frequently, and 100% of presentations involved an artifact. Ninety-one percent of artifacts included data electronically imported from the EHR via macros, and most presenters used an incomplete version of the daily progress note as their artifact. The method and success of data extraction onto the artifact varied by data domain (Fig. 1 and Table 1). The vital signs and continuous infusion medication domains were distinguished by a high rate of successful extraction to the artifact and heavy reliance on macros for EHR data extraction (Fig. 1).

Figure 1.

Success and method of data extraction to presenter artifacts by data domain. “Manual” method of extraction indicates free-typed or handwritten data on the artifact, whereas “macros” refers to using electronic health record (EHR)-specific commands to electronically import data from the EHR in a prespecified format. MD Consult = physician consultant recommendations, non-MD Consult = non-physician consultant recommendations.

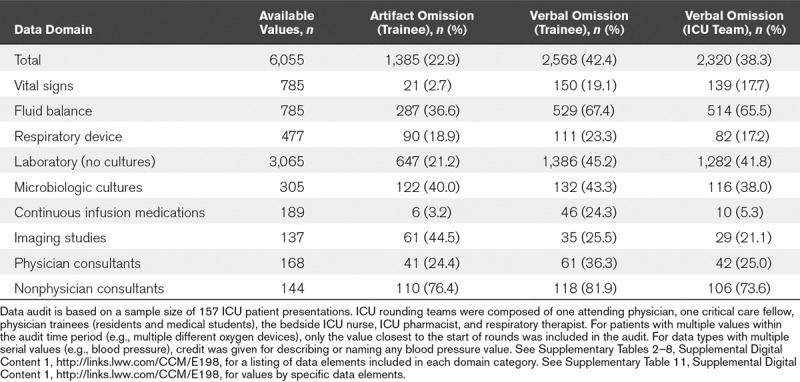

TABLE 1.

Incompleteness of Extracted and Verbalized Patient Data by Domain

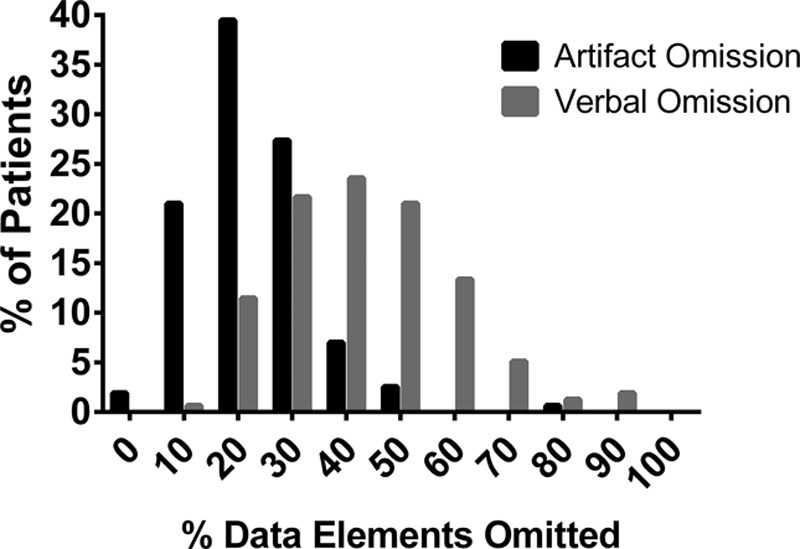

One hundred percent of trainee artifacts and presentations contained data omissions. However, the percentage of audited data omitted per patient varied widely, ranging from 3.2% to 82.5% for artifact omissions and from 6.1% to 94.6% for presentation omissions (Fig. 2). Trainee omission frequency also varied by data domain and individual data element type (Table 1; and Supplementary Table 11, Supplemental Digital Content 1, http://links.lww.com/CCM/E198). Of the complete audited data set, 22.9% of elements were absent from artifacts and 42.4% were not verbalized by trainees during rounds. With the exception of the imaging domain, trainee data completeness decayed from extraction to the artifact to subsequent verbalization during rounds. Other ICU team members supplied only an additional 4.1% of the available EHR data (Table 1). ICU team verbal omissions also varied by domain, with only 5.3% of continuous infusion medications omitted as opposed to 65.5% of fluid balance data.
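The per-patient ranges and the Figure 2 histogram come from grouping element-level scores by presentation. A minimal sketch of that grouping follows, assuming element-level records of the hypothetical form `(presentation_id, omitted)`; the 10-percentage-point bin width is an illustrative assumption, since the bins actually used in Figure 2 are not stated in the text.

```python
from collections import defaultdict


def per_presentation_omission(records):
    """records: iterable of (presentation_id, omitted: bool).

    Returns {presentation_id: percent of that presentation's elements omitted}.
    """
    totals = defaultdict(int)
    omitted = defaultdict(int)
    for pid, was_omitted in records:
        totals[pid] += 1
        omitted[pid] += int(was_omitted)
    return {pid: 100.0 * omitted[pid] / totals[pid] for pid in totals}


def histogram(percents, bin_width=10):
    """Count presentations per bin [0, 10), [10, 20), ..., [90, 100].

    A presentation with 100% omission is placed in the last bin.
    """
    n_bins = 100 // bin_width
    counts = [0] * n_bins
    for p in percents:
        idx = min(int(p // bin_width), n_bins - 1)
        counts[idx] += 1
    return counts
```

Reporting `min` and `max` of the per-presentation percentages gives ranges of the kind quoted above (for example, 3.2–82.5% for artifact omissions).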

Figure 2.

Histogram of prevalence of per patient data omissions at artifact creation (“artifact omission”) and rounds presentation (“trainee verbal omission”) steps.

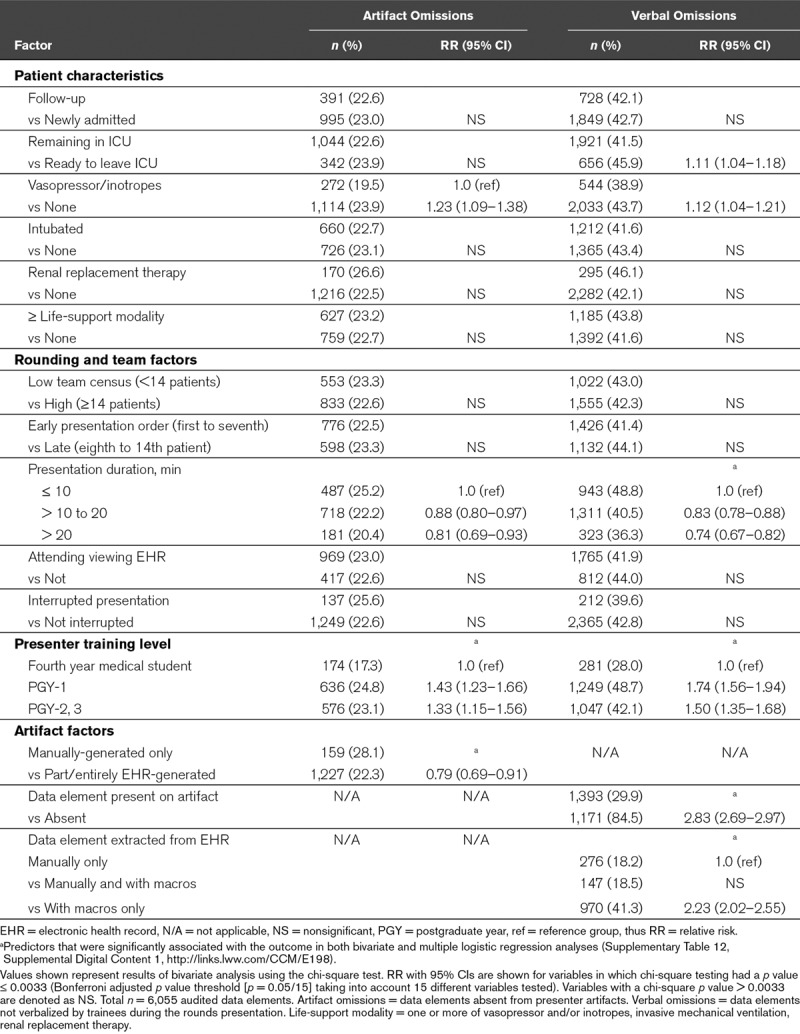

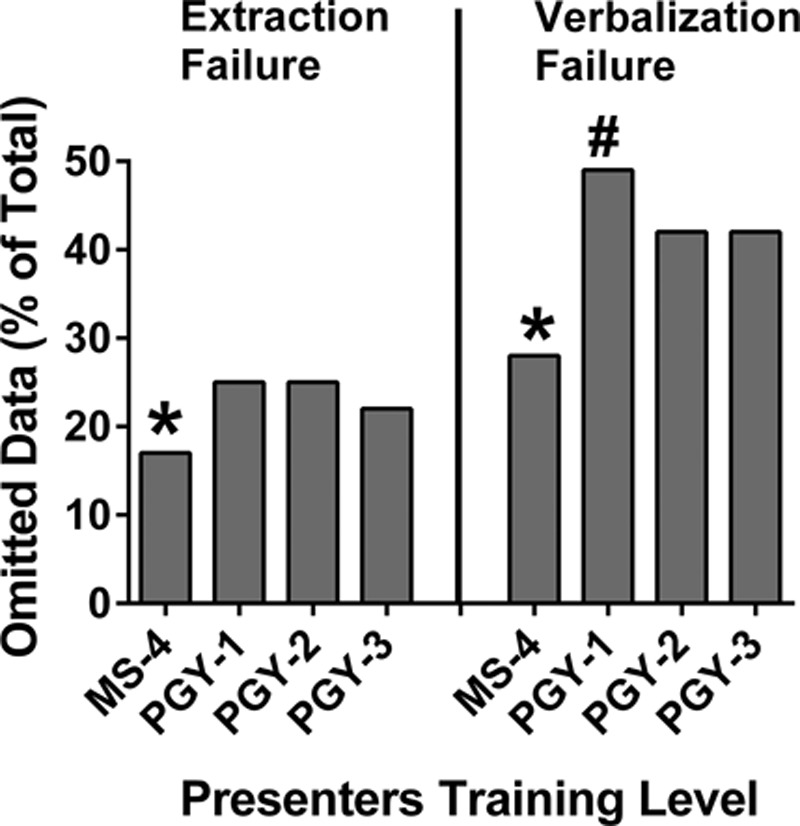

Human and sociotechnical factors associated with data extraction and verbalization failures are shown in Table 2 and Supplementary Table 12 (Supplemental Digital Content 1, http://links.lww.com/CCM/E198). Omission predictors did not significantly differ when data elements were grouped and analyzed by patient presentation (Supplementary Table 13, Supplemental Digital Content 1, http://links.lww.com/CCM/E198). The strongest predictor of failing to verbalize a data element was a preceding failure to extract the data element to the artifact (relative risk [RR], 2.83; 95% CI, 2.69–2.97; p < 0.0001) (Table 2; and Supplementary Fig. 4, Supplemental Digital Content 1, http://links.lww.com/CCM/E198). However, the method of EHR extraction was also predictive, with presenters more likely to verbally omit EHR data retrieved exclusively via macros (RR, 2.23; 95% CI, 2.02–2.55; p < 0.0001) than data noted manually on the artifact (Table 2; and Supplementary Table 12, Supplemental Digital Content 1, http://links.lww.com/CCM/E198). Significant differences related to presenter training level were observed (Table 2; and Supplementary Table 12, Supplemental Digital Content 1, http://links.lww.com/CCM/E198). Medical students created the most complete artifacts and omitted the least data during presentations, whereas interns omitted the most (Fig. 3; and Supplementary Fig. 3, Supplemental Digital Content 1, http://links.lww.com/CCM/E198). Presenters omitted less data in longer presentations than in shorter ones (Table 2; and Supplementary Fig. 2, Supplemental Digital Content 1, http://links.lww.com/CCM/E198).
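Relative risks such as those above compare the omission rate in an exposed group (for example, elements missing from the artifact) with that in an unexposed group. The sketch below assumes the common Wald interval on the log scale (Katz method); the text does not state which CI method the authors’ software used, and the example counts are hypothetical, not study data.

```python
import math


def relative_risk(a, b, c, d, z=1.96):
    """Relative risk of omission (exposed vs unexposed) with a log-scale Wald CI.

    a: exposed and omitted       b: exposed and not omitted
    c: unexposed and omitted     d: unexposed and not omitted
    Returns (rr, lower, upper); z=1.96 gives an approximate 95% interval.
    """
    if a == 0 or c == 0:
        raise ValueError("RR or its log-scale CI is undefined when a or c is zero")
    risk_exposed = a / (a + b)
    risk_unexposed = c / (c + d)
    rr = risk_exposed / risk_unexposed
    # Standard error of ln(RR)
    se_log = math.sqrt(1 / a - 1 / (a + b) + 1 / c - 1 / (c + d))
    lower = math.exp(math.log(rr) - z * se_log)
    upper = math.exp(math.log(rr) + z * se_log)
    return rr, lower, upper


if __name__ == "__main__":
    # Hypothetical: 60/100 omitted when absent from the artifact vs 30/100 when present
    print(relative_risk(60, 40, 30, 70))  # RR 2.0, CI roughly 1.42 to 2.81
```

Because the interval is symmetric on the log scale, its bounds multiply to RR squared, which is why reported intervals such as 2.69–2.97 sit slightly asymmetrically around the point estimate.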

TABLE 2.

Factors Associated With Data Omission by Physician Trainees (Bivariate Analysis)

Figure 3.

Frequency of omitted data on artifacts (“artifact omission”) and during rounds presentations (“trainee verbal omission”) stratified by physician trainee presenter training level. *Fourth year medical student (MS-4) group had fewer artifact and verbal omissions when compared with postgraduate year (PGY)-1 (p < 0.00001, p < 0.00001) and combined PGY-2/3 groups (p < 0.00015, p < 0.00001), respectively. #PGY-1 group had more verbal omissions compared with combined PGY-2/3 group (p < 0.00001).

DISCUSSION

In this study, we performed a comprehensive audit of the completeness of physician trainee EHR data gathering and subsequent data verbalization during interprofessional ICU rounds in order to quantify the ICU team information deficit yielded by our rounding paradigm. At our institution, data omissions occurred in 100% of patient rounds and involved 38% of the overall available data. Thus, despite several hours spent prerounding and the premise that rounds provide the entire team with a shared understanding of the patient’s condition, only 62% of patient data were ultimately reviewed collectively. Our results offer completeness of data appraisal as another metric for comparing the efficacy of novel rounding paradigms and, in the current EHR age, challenge the relevance of traditional academic rounding structures born of a paper-based era.
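The 38% and 62% figures can be reconstructed from the Results, assuming (as the Results wording suggests) that the 4.1% supplied by other team members is a share of all audited elements and therefore subtracts directly from the 42.4% trainee verbal omission rate:

```latex
\begin{aligned}
\text{team verbal omission} &= 42.4\% - 4.1\% = 38.3\% \approx 38\% \\
\text{data collectively reviewed} &= 100\% - 38.3\% = 61.7\% \approx 62\%
\end{aligned}
```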

In addition to quantifying the magnitude, our study describes the composition of the rounding team’s “data blind spot” and identifies data types that may warrant increased vigilance by the ICU team and more emphasis during trainee education. For example, presenters were more than four times more likely to omit respiratory rate than blood pressure and heart rate (Supplementary Table 11, Supplemental Digital Content 1, http://links.lww.com/CCM/E198). Disregard of respiratory rate is a well-known phenomenon on medical wards (20, 21) that appears to extend to the ICU, despite the availability of more credible readings via continuous external monitoring and evidence that tachypnea predicts clinical decompensation (20). Sixty-seven percent of fluid balance and 53% of glucose data were omitted despite established associations between positive fluid balance and increased mortality in critical illness (22, 23) and the accepted importance of glycemic control (24). Trainees also regularly omitted set tidal volumes and measured plateau pressures in patients on volume control ventilation despite their established importance in patients with and without acute respiratory distress syndrome (25, 26).

There are several potential explanations for frequent data omission during rounds. Unintended omissions result from failure at either of two steps: extraction from the EHR or subsequent verbalization during rounds. As in our previous study (18), data extracted to the artifact were more likely to be verbalized than data that were not; thus, difficulty navigating the EHR may explain some omissions. Domains in which presenters relied more heavily on macros were extracted to the artifact with an extremely high rate of completeness (Fig. 1) compared with those that were generally extracted manually. Thus, our work identifies types of EHR data collection that are currently manually intensive and for which the development of new macros might lessen providers’ workload and increase the success of EHR data retrieval.

However, although macros facilitated complete data extraction, manually handwriting or free-typing data elements on the artifact was, contrary to our hypothesis, more strongly associated with verbalization during rounds. This paradox, whereby macros facilitate more complete EHR data retrieval but not verbalization, suggests that greater incorporation of macros into artifacts will not by itself lead to complete data appraisal during rounds. In their current form, macros may be poorly designed to suit the visual display preferences of clinicians, resulting in clinicians retranscribing data into formats they prefer. Alternatively, clinicians may benefit from the time savings and cognitive off-loading of passive data collection, but, akin to the benefits of note-taking demonstrated in education research (27, 28), manual notation may be a necessary activity for learning patient information that facilitates verbalization on rounds.

Many omissions were likely intended and the result of selective data gathering and reporting. This is supported by the findings that most audited data domains demonstrated a decrement in completeness between the steps of extraction to the artifact and verbalization on rounds and some data elements (e.g., physician vs nonphysician consultant notes) were extracted and verbalized with vastly different omission rates despite co-location in the EHR. Selective reporting may take several forms such as presenting only data points directly related to the patient’s primary diagnosis, omitting data with values that fall in the normal range, or categorically omitting data elements that are perceived by one healthcare provider group to have low clinical value.

Although selective reporting may be motivated by the goal of increasing ICU rounds efficiency or reducing information overload (1, 29), it can be problematic. First, limiting discussion to data relevant to known diagnoses may blind the ICU team to early signs of new diagnoses, such as hospital-acquired sepsis. Excluding tests with normal or stable values can lead to misinterpretation of information, to failure to appreciate the acuity of clinical changes, or to loss of results that would narrow a differential diagnosis. Routinely omitting data that are continuously collected but provide little value is a lost opportunity to reduce wasteful care as per the Choosing Wisely Campaign (30). Allowing clinicians to maintain biases against certain information makes it more likely that they will overlook those data when they are critically relevant.

Additionally, our trainee-dependent academic rounding model places the most inexperienced clinician in charge of data selection. Interestingly, medical students omitted the least data, possibly a reflection of their expected clinical role as a data “reporter” rather than “interpreter” (31), greater scrutiny of their performance by the ICU team (18), or less cognitive burden compared with residents overseeing more patients. Residents may more successfully integrate and prioritize patient data, thereby appropriately excluding some data. However, the findings that senior residents excluded less data than interns (Fig. 3; and Supplementary Fig. 3, Supplemental Digital Content 1, http://links.lww.com/CCM/E198), that vital sign instability (32) and important physiologic trends (33) are routinely omitted in studies of physician handoff communication, and that physician trainees overlooked 60–70% of safety issues during EHR review of simulated ICU patients (34, 35) all argue that ICU teams should not blindly accept trainee decisions regarding data selectivity. Adding further complexity, attendings may differ in their data completeness preferences, and relevant data vary by patient diagnosis, for which there is currently no widely accepted standard. Ideally, the rounding team should collectively appraise the patient’s entire data set without exclusions, but do so in a way that is not overly tedious or overwhelming and that leverages EHR technology. Given that greater verbal completeness came at the expense of longer presentations, finding this balance remains an area for further study.

Our study has important limitations. First, our results reflect the experience of a single academic ICU with a specific rounding paradigm. We expect that other settings with different rounding practices will yield different results. However, this detailed evaluation of one rounding structure provides a benchmark against which alternate rounding methods and ICU environments can be compared. Second, we cannot exclude a Hawthorne effect, but we hypothesize that omissions may be even higher in the absence of rounding observers. Third, our study is limited to completeness of data; thus, the true quantity of accurate, correctly interpreted, and up-to-date verbalized data is probably even lower. Fourth, by design, our study did not weight the clinical relevance of individual data omissions nor attempt to link omissions with specific patient harms. Assigning importance or causality between individual data omissions and specific outcomes is highly subjective, even when done retrospectively. However, our work provides a broad survey of the types of data most prone to omission that may direct future studies for which there is consensus on the importance of specific data elements, such as exploring whether tidal volume omission during rounds contributes to clinicians’ failure to deliver lung-protective ventilation.

CONCLUSIONS

In an interprofessional rounding model reliant on physician trainees to preview patient data in the EHR, create a paper presentation aid, and then verbalize data during rounds, the ICU team routinely formulates patient care plans based on a remarkably incomplete data set. Extracting data from the EHR onto an artifact was associated with less data omission, but because more complete verbalization was associated with manual rather than passive electronic data transfer, this step may remain an inefficient and burdensome activity. Both deliberate and inadvertent data omissions may lead to cognitive errors and misdiagnoses; thus, additional study is needed to identify novel EHR tools, workflows, and rounding paradigms that result in efficient, yet complete, data appraisal during ICU rounds.

Supplementary Material

Footnotes

*See also p. 478.

Supplemental digital content is available for this article. Direct URL citations appear in the printed text and are provided in the HTML and PDF versions of this article on the journal’s website (http://journals.lww.com/ccmjournal).

Supported, in part, by grants from the National Institutes of Health and the Agency for Healthcare Research and Quality (R01 HS023793).

Dr. Artis received funding from the Agency for Healthcare Research and Quality (AHRQ) (partial salary support paid through the grant), and she received support for article research from the AHRQ. Drs. Mohan and Gold’s institutions received funding from the AHRQ. Dr. Bordley has disclosed that he does not have any potential conflicts of interest.

This work was performed at the Oregon Health and Science University, Portland, OR.

REFERENCES

- 1. Manor-Shulman O, Beyene J, Frndova H, et al. Quantifying the volume of documented clinical information in critical illness. J Crit Care 2008; 23:245–250.

- 2. Giri J, Ahmed A, Dong Y, et al. Daily intensive care unit rounds: A multidisciplinary perspective. Appl Med Inform 2013; 33:63–73.

- 3. Burger CD. Multidisciplinary rounds: A method to improve quality and safety in critically ill patients. Northeast Florida Med 2007; 58:16–19.

- 4. Lane D, Ferri M, Lemaire J, et al. A systematic review of evidence-informed practices for patient care rounds in the ICU. Crit Care Med 2013; 41:2015–2029.

- 5. Young MP, Gooder VJ, Oltermann MH, et al. The impact of a multidisciplinary approach on caring for ventilator-dependent patients. Int J Qual Health Care 1998; 10:15–26.

- 6. Kim MM, Barnato AE, Angus DC, et al. The effect of multidisciplinary care teams on intensive care unit mortality. Arch Intern Med 2010; 170:369–376.

- 7. Malhotra S, Jordan D, Shortliffe E, et al. Workflow modeling in critical care: Piecing together your own puzzle. J Biomed Inform 2007; 40:81–92.

- 8. Graber ML, Franklin N, Gordon R. Diagnostic error in internal medicine. Arch Intern Med 2005; 165:1493–1499.

- 9. Graber ML. The incidence of diagnostic error in medicine. BMJ Qual Saf 2013; 22(Suppl 2):ii21–ii27.

- 10. Tejerina E, Esteban A, Fernández-Segoviano P, et al. Clinical diagnoses and autopsy findings: Discrepancies in critically ill patients. Crit Care Med 2012; 40:842–846.

- 11. Winters B, Custer J, Galvagno SM, Jr, et al. Diagnostic errors in the intensive care unit: A systematic review of autopsy studies. BMJ Qual Saf 2012; 21:894–902.

- 12. Zwaan L, Thijs A, Wagner C, et al. Does inappropriate selectivity in information use relate to diagnostic errors and patient harm? The diagnosis of patients with dyspnea. Soc Sci Med 2013; 91:32–38.

- 13. Henry J, Pylypchuk Y, Searcy T, et al. Adoption of Electronic Health Record Systems Among U.S. Non-Federal Acute Care Hospitals: 2008–2015. ONC Data Brief, no. 35. Washington, DC, Office of the National Coordinator for Health Information Technology, 2016.

- 14. Van Eaton EG, McDonough K, Lober WB, et al. Safety of using a computerized rounding and sign-out system to reduce resident duty hours. Acad Med 2010; 85:1189–1195.

- 15. Black R, Woolman P, Kinsella J. Variation in the transcription of laboratory data in an intensive care unit. Anaesthesia 2004; 59:767–769.

- 16. Nolan ME, Siwani R, Helmi H, et al. Health IT usability focus section: Data use and navigation patterns among medical ICU clinicians during electronic chart review. Appl Clin Inform 2017; 8:1117–1126.

- 17. Gold JA, Stephenson LE, Gorsuch A, et al. Feasibility of utilizing a commercial eye tracker to assess electronic health record use during patient simulation. Health Informatics J 2016; 22:744–757.

- 18. Artis KA, Dyer E, Mohan V, et al. Accuracy of laboratory data communication on ICU daily rounds using an electronic health record. Crit Care Med 2017; 45:179–186.

- 19. Harris PA, Taylor R, Thielke R, et al. Research Electronic Data Capture (REDCap)–A metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform 2009; 42:377–381.

- 20. Goldhill DR, McNarry AF, Mandersloot G, et al. A physiologically-based Early Warning Score for ward patients: The association between score and outcome. Anaesthesia 2005; 60:547–553.

- 21. Cretikos MA, Bellomo R, Hillman K, et al. Respiratory rate: The neglected vital sign. Med J Aust 2008; 188:657–659.

- 22. Wang N, Jiang L, Zhu B, et al.; Beijing Acute Kidney Injury Trial (BAKIT) Workgroup: Fluid balance and mortality in critically ill patients with acute kidney injury: A multicenter prospective epidemiological study. Crit Care 2015; 19:371.

- 23. Sirvent JM, Ferri C, Baró A, et al. Fluid balance in sepsis and septic shock as a determining factor of mortality. Am J Emerg Med 2015; 33:186–189.

- 24. Jacobi J, Bircher N, Krinsley J, et al. Guidelines for the use of an insulin infusion for the management of hyperglycemia in critically ill patients. Crit Care Med 2012; 40:3251–3276.

- 25. Fan E, Del Sorbo L, Goligher EC, et al.; American Thoracic Society, European Society of Intensive Care Medicine, and Society of Critical Care Medicine: An official American Thoracic Society/European Society of Intensive Care Medicine/Society of Critical Care Medicine Clinical Practice Guideline: Mechanical ventilation in adult patients with acute respiratory distress syndrome. Am J Respir Crit Care Med 2017; 195:1253–1263.

- 26. Serpa Neto A, Cardoso SO, Manetta JA, et al. Association between use of lung-protective ventilation with lower tidal volumes and clinical outcomes among patients without acute respiratory distress syndrome: A meta-analysis. JAMA 2012; 308:1651–1659.

- 27. Mueller PA, Oppenheimer DM. The pen is mightier than the keyboard: Advantages of longhand over laptop note taking. Psychol Sci 2014; 25:1159–1168.

- 28. Stacy EM, Cain J. Note-taking and handouts in the digital age. Am J Pharm Educ 2015; 79:107.

- 29. Ahmed A, Chandra S, Herasevich V, et al. The effect of two different electronic health record user interfaces on intensive care provider task load, errors of cognition, and performance. Crit Care Med 2011; 39:1626–1634.

- 30. Halpern SD, Becker D, Curtis JR, et al.; Choosing Wisely Taskforce; American Thoracic Society; American Association of Critical-Care Nurses; Society of Critical Care Medicine: An official American Thoracic Society/American Association of Critical-Care Nurses/American College of Chest Physicians/Society of Critical Care Medicine policy statement: The Choosing Wisely® top 5 list in Critical Care Medicine. Am J Respir Crit Care Med 2014; 190:818–826.

- 31. Pangaro L. A new vocabulary and other innovations for improving descriptive in-training evaluations. Acad Med 1999; 74:1203–1207.

- 32. Venkatesh AK, Curley D, Chang Y, et al. Communication of vital signs at emergency department handoff: Opportunities for improvement. Ann Emerg Med 2015; 66:125–130.

- 33. Pickering BW, Hurley K, Marsh B. Identification of patient information corruption in the intensive care unit: Using a scoring tool to direct quality improvements in handover. Crit Care Med 2009; 37:2905–2912.

- 34. Sakata KK, Stephenson LS, Mulanax A, et al. Professional and interprofessional differences in electronic health records use and recognition of safety issues in critically ill patients. J Interprof Care 2016; 30:636–642.

- 35. Bordley J, Sakata KK, Bierman J, et al. Use of a novel, electronic health record-centered interprofessional ICU rounding simulation to understand latent safety issues. Crit Care Med 2018; 46:1570–1576.