Abstract

Social information processing is a critical mechanism underlying children’s socio-emotional development. Central to this process are patterns of activation associated with one of our most salient socioemotional cues, the face. In this study, we obtained fMRI activation and high-density ERP source data evoked by parallel face dot-probe tasks from 9-to-12-year-old children. We then integrated the two modalities of data to explore the neural spatial-temporal dynamics of children’s face processing. Our results showed that the tomography of the ERP sources broadly corresponded with the fMRI activation evoked by the same facial stimuli. Further, we combined complementary information from fMRI and ERP by defining fMRI activation as functional ROIs and applying them to the ERP source data. Indices of ERP source activity were extracted from these ROIs at three a priori ERP peak latencies critical for face processing. We found distinct temporal patterns among the three time points across ROIs. The observed spatial-temporal profiles converge with a dual-system neural network model for face processing: a core system (including the occipito-temporal and parietal ROIs) supports the early visual analysis of facial features, and an extended system (including the paracentral, limbic, and prefrontal ROIs) processes the socio-emotional meaning gleaned and relayed by the core system. Our results for the first time illustrate the spatial validity of high-density source localization of ERP dot-probe data in children. By directly combining the two modalities of data, our findings provide a novel approach to understanding the spatial-temporal dynamics of face processing. This approach can be applied in future research to investigate different research questions in various study populations.

Keywords: Face processing, Dot-probe, fMRI, ERP, Source analysis, Children

1. Introduction

Individual variation in processing socioemotional information (e.g., facial expressions) is a critical contributor to both typical and atypical socioemotional development in children (MacLeod et al., 1986). Experimental data and clinical insights both suggest that biases that enhance attention to social cues, such as angry faces, may exacerbate risk for psychopathology (particularly anxiety) and maintain clinical states (Britton et al., 2012; Pérez-Edgar et al., 2010; Pérez-Edgar et al., 2014; White et al., 2009). This study investigated the neural circuitry associated with the processing of threatening and neutral faces as presented in a standard attention paradigm. Specifically, we integrated event-related potential (ERP) and functional magnetic resonance imaging (fMRI) data collected from parallel dot-probe tasks in a sample of 9–12-year-old children. By using high-density ERP source localization techniques, we examined the spatial correspondence between the ERP source activity and fMRI activation evoked by the same faces. Further, by integrating the temporal information from the ERP data and the spatial information contributed by the fMRI data, we were able to better depict the spatial-temporal characteristics of face processing in school-age children. Coupling the spatial distribution and chronometry of processing may help us better understand the neural underpinnings of face-related processing, which is important for discerning typical developmental mechanisms and identifying individual variation that may relate to psychopathology.

The dot-probe paradigm has typically been used to measure attention processing biases, especially related to threat, in both children (Bar-Haim et al., 2007; Britton et al., 2012; Liu et al., 2018; Monk et al., 2006; Monk et al., 2008; Price et al., 2014; Telzer et al., 2008) and adults (Fani et al., 2012; Hardee et al., 2013; Mogg and Bradley, 1999). In this paradigm, each trial presents a pair of faces (threat-neutral or neutral-neutral) followed by an arrow-probe replacing one of the faces. The participant identifies the direction of the arrow as quickly and as accurately as possible via a button press. The processing bias is quantified by subtracting reaction times (RTs) in response to probes replacing the threat face (congruent trials) from RTs to probes replacing the neutral face (incongruent trials) in threat-neutral face pairs. This paradigm is also commonly combined with neuroscience approaches, such as ERP and fMRI, to provide biomarkers of threat-related processes (Britton et al., 2012; Monk et al., 2006, 2008; Price et al., 2014; Fu et al., 2017; Hardee et al., 2013; Liu et al., 2018; Thai et al., 2016). Indeed, biomarkers of attentional processing biases have proven to be more reliable psychometrically, and are better predictors of risk, than the initial RT-based bias measures (Brown et al., 2014; White et al., 2016).
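The RT-based bias score described above can be illustrated with a minimal Python sketch. The function name, the trial format, and the example RTs are hypothetical and chosen only for illustration; the sketch assumes the score is the mean incongruent RT minus the mean congruent RT, and it is not the scoring code used in any of the cited studies.

```python
from statistics import mean


def attention_bias_score(trials):
    """Mean RT on incongruent trials minus mean RT on congruent trials (ms).

    `trials` is an iterable of (condition, rt_ms) tuples. Only the two
    threat-neutral conditions ("congruent", "incongruent") enter the score;
    any other condition label (e.g. "neutral") is ignored.
    A positive score indicates faster responses when the probe replaced
    the threat face.
    """
    congruent = [rt for cond, rt in trials if cond == "congruent"]
    incongruent = [rt for cond, rt in trials if cond == "incongruent"]
    if not congruent or not incongruent:
        raise ValueError("need at least one trial in each threat-neutral condition")
    return mean(incongruent) - mean(congruent)


# Made-up RTs for illustration only.
example_trials = [
    ("congruent", 520.0), ("congruent", 540.0),
    ("incongruent", 560.0), ("incongruent", 580.0),
    ("neutral", 550.0),
]
bias = attention_bias_score(example_trials)
```

In practice, invalid trials (incorrect, missing, or outlier responses) would be removed before scoring.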

ERP/EEG and fMRI studies are typically carried out in parallel within the developmental neuroscience literature, with limited attempts to integrate data from the two units of analysis. Two studies have compared ERP source localization and fMRI activation data modulated by the same task, examining children’s early reading acquisition (Brem et al., 2009, 2010) and semantic processing (Schulz et al., 2008). Both studies reported significant spatial convergence between ERP and fMRI data. In addition, individual structural MRI images have been combined with EEG/ERP source localization data to construct individual-specific head models for more precise localization in children, adolescents, and adults (Bathelt et al., 2014; Buzzell et al., 2017; Ortiz-Mantilla et al., 2011). In a similar vein, source localization of resting-state EEG data has been combined with resting-state fMRI in epileptic children to locate the neural generators of epileptic spikes (Elshoff et al., 2012; Groening et al., 2009). To our knowledge, however, no study has taken the approach of combining ERP and fMRI to explore the neural correlates of face processing in children. By taking advantage of the high temporal resolution of ERP and the refined spatial characterization from fMRI, this novel approach could significantly advance our understanding of the neural mechanisms underlying children’s emotion-related processing.

In the dot-probe literature, several studies have used Low Resolution Electromagnetic Tomography to conduct source localization for task-generated ERP data from adults. One study found that the early visual components, C1, evoked by the face-pair (~90 ms post-stimulus), and P1, evoked by the face-pair or the probe (~130 ms), were localized within the striate and extrastriate visual cortices, respectively (Pourtois et al., 2004). Another study (Mueller et al., 2009) found that an enhanced P1 elicited by angry-neutral versus happy-neutral faces possibly originated from the right fusiform gyrus in anxious adults. Finally, a P1, which was larger when evoked by probes replacing neutral faces (incongruent) versus angry faces (congruent), originated from the anterior cingulate cortex (ACC; Santesso et al., 2008). These results are compatible with fMRI findings in dot-probe studies, noting that ACC activation or ACC-related connectivity were reported in the incongruent versus congruent condition in adults (Carlson et al., 2009) and youth (Fu et al., 2017; Price et al., 2014).

However, none of the current ERP source localization findings have been directly compared and integrated with fMRI activation evoked by the same paradigm. In addition, we do not know if localization patterns differ in children, even with the same task parameters used in adult studies. This is critical, as both attentional biases in processing socio-emotional information and anxiety symptoms typically first emerge in childhood (Dudeney et al., 2015; Kessler et al., 2005). Therefore, it is important to directly integrate ERP and fMRI data from a parallel dot-probe paradigm in the same sample to specify the spatial-temporal dynamics of children’s processing of emotional faces.

Studies linking fMRI to EEG/ERP are also important from a methodological perspective. ERP/EEG studies are more economical than fMRI research and much easier to implement in children, especially when studying task-modulated processes (Pérez-Edgar and Bar-Haim, 2010). Localizing the neural generators of high-density ERP data might provide a feasible alternative to fMRI when attempting to characterize the spatial dimension of neural functions. However, we must first establish the spatial validity of ERP source localization by examining convergence with the spatial distribution indicated by fMRI data. The question of method convergence has become more salient with the advent of the National Institute of Mental Health’s (NIMH) Research Domain Criteria (RDoC) initiative (Insel et al., 2010; Cuthbert and Kozak, 2013), which seeks to examine psychological constructs across multiple units of analysis (e.g., behavioural, neural, physiological, genetic, molecular). This work requires that we verify the coincidence (or lack thereof) of measures generated across units of analysis.

Consistent with the RDoC initiative, and building on the developmental neuroscience literature, this study has three research goals:

First, we examine the spatial correspondence between high-density ERP source localization and fMRI activation, by comparing the two units of data modulated by a parallel dot-probe paradigm from a sample of 9- to 12-year-old children.

Second, we aim to demonstrate the neural correlates of children’s face processing by synthesizing the spatial information from the fMRI data with temporal information from the ERP data. In particular, we initially defined fMRI activation evoked by the faces as functional ROIs. These ROIs were then applied to the source localization data time-locked to three a priori ERP peaks (i.e., time points) elicited by the same faces. We were then able to examine any differences in temporal dynamic patterns of activation between the three time points within each functional ROI.

Third, this study will provide a foundation for implementing a simultaneous ERP-fMRI approach in children, a more advanced tool for future research. Simultaneous data collection may help us better understand the spatial-temporal characteristics of the neural underpinnings of face processing and other psychological processes.

There is limited existing data on ERP-fMRI convergence with the dot-probe task in children. As such, this is an initial exploratory examination of convergence patterns. Nonetheless, given the previous ERP source localization findings in the adult dot-probe data (Pourtois et al., 2004; Santesso et al., 2009) and its compatibility with other fMRI dot-probe data, we expected good correspondence between the ERP sources and fMRI activation modulated by the dot-probe paradigm. Moreover, a previous MEG study exploring adults’ facial expression processing (Sato et al., 2015) found that the source of their MEG signals achieved maximum activity 150–200 ms after the face onset, in areas involving the middle temporal visual area, fusiform gyrus, and superior temporal sulcus. In contrast, maximum activity in the later window of 300–350 ms was observed in the inferior frontal areas. Based on these data, we expected that the temporal dynamic patterns characterized by the ERP source activity in children might also differ across functional ROIs defined by the fMRI activation. For instance, ERP source activity might achieve its initial maximum in the posterior, visual-sensory regions first, followed by later activation in the anterior, frontal regions.

2. Material and methods

2.1. Participants

Participants were a cohort of 118 9–12-year-old children (Mage = 10.98, SD = 1.04, 58 male) drawn from a larger attentional bias modification (ABM) training project examining the relations between temperament, ABM, and anxiety (Liu et al., 2018).1 All participants were recruited from the area surrounding State College, PA. The present study incorporated the baseline/pre-training data from the larger project. From this sample, 74 participants with useable dot-probe ERP data were selected for the source localization analysis (selection criteria specified below). For the dot-probe fMRI data, children with head motion exceeding 3 mm or behavioural accuracy <75% were excluded from analysis. Eventually, 99 children contributed useable fMRI data collected from two fMRI scanners (specified below). Fifty-four children provided data to both the ERP and fMRI sub-groups. This study was approved by the Institutional Review Board at The Pennsylvania State University. Prior to participation, written informed consent and assent were acquired from the participants and their parents. Portions of these data were previously published in studies examining the fMRI (Fu et al., 2017; Liu et al., 2018) and ERP (Thai et al., 2016) correlates of attentional bias to threat faces. Table 1 presents demographic information of the total sample, the ERP sub-group, the fMRI sub-group, and the overlap between the two.

Table 1.

Sample size, gender, and years of age of the total sample and each sub-group.

| | Total Sample | ERP sub-group | fMRI sub-group: Total | fMRI sub-group: New scanner | fMRI sub-group: Old scanner | Overlap Sample |
|---|---|---|---|---|---|---|
| N (F/M) | 118 (60/58) | 74 (35/39) | 99 (52/47) | 38 (19/19) | 61 (33/28) | 54 (26/28) |
| Mean years of age (SD) | 10.98 (1.04) | 10.94 (0.97) | 10.97 (1.06) | 10.51 (1.11) | 11.25 (0.92) | 10.94 (1.00) |

2.2. The emotion face dot-probe paradigm

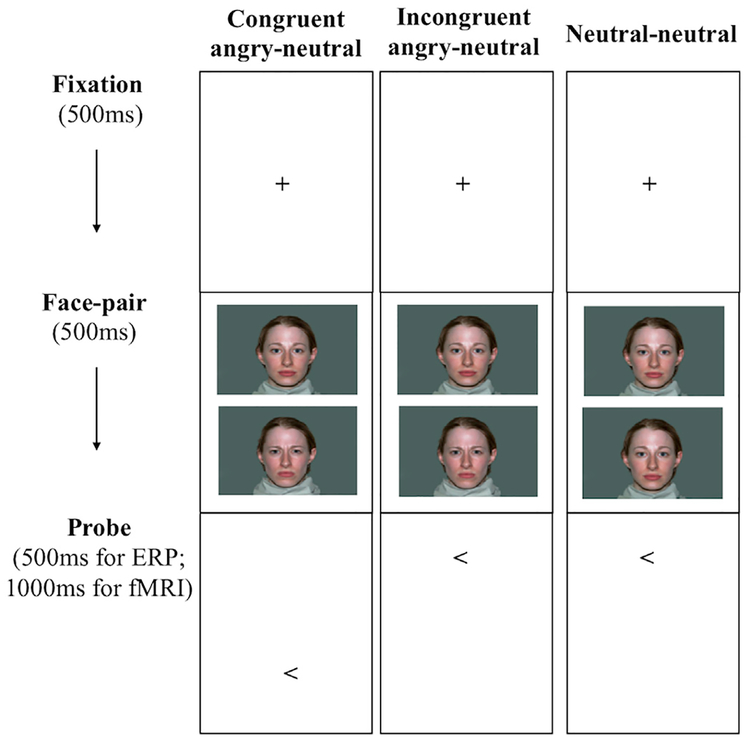

We used the standard dot-probe toolkit developed by the Tel Aviv University/NIMH ABMT Initiative for both the ERP and the fMRI tasks (Abend et al., 2012). Identical face stimuli were used in the two versions of tasks, including 20 colored face pictures of 10 adults (5 female, 5 male; 1 angry and 1 neutral face per actor; NimStim face set, Tottenham et al., 2009). Fig. 1 depicts the trial procedure for the dot-probe paradigm. In both the ERP and fMRI tasks, trials started with a 500 ms fixation cross, followed by a pair of identity-matched faces (angry-neutral or neutral-neutral), vertically displayed for 500 ms. The face pair was then replaced by an arrow-probe presented in the location of either face (500 ms for ERP, 1000 ms for fMRI). An inter-trial interval (ITI) of 1500 ms for ERP, or varying 250–750 ms for fMRI (to create jittered intervals; average 500 ms), was inserted in between trials.

Fig. 1.

Experimental conditions and trial procedure of the emotion face dot-probe paradigm for the ERP and fMRI tasks.

As shown in Fig. 1, both tasks incorporated three experimental conditions: (1) congruent angry-neutral trials, where the angry-neutral face pair was followed by a probe replacing the angry face; (2) incongruent angry-neutral trials, where the angry-neutral face pair was followed by a probe replacing the neutral face; and (3) neutral-neutral trials, with the probe presented in either location. The ERP task was composed of 180 trials, divided into 3 blocks with 60 trials each (20 congruent angry-neutral + 20 incongruent angry-neutral + 20 neutral-neutral). The fMRI task included 320 trials in total, divided into 2 blocks with 160 trials each (40 congruent angry-neutral + 40 incongruent angry-neutral + 40 neutral-neutral + 40 blank filler trials). Children were instructed to indicate whether the arrow pointed left or right by pressing one of two buttons as accurately and as quickly as possible. Angry-face location, arrow-probe location, arrow-probe direction, and face identities were counterbalanced across participants. For both the ERP and fMRI data collection, the task was administered using E-Prime software version 2.0 (Psychology Software Tools, Pittsburgh, PA).

Typically, the participants completed the ERP and fMRI versions of the task on the same day, with the fMRI task always preceding the ERP task. Due to scheduling concerns, 5 participants completed the two tasks on different days. Two children completed fMRI before ERP (interval = 1 and 21 days, respectively), and 3 children completed ERP before fMRI (interval = 35, 37, and 53 days, respectively).2

2.3. fMRI data acquisition, processing, and analysis

A scanner upgrade occurred during our data collection. As such, fMRI data were collected on a 3T Siemens Trio (pre-upgrade) and a 3T Siemens Prisma Fit (post-upgrade; Siemens Medical Solutions, Erlangen, Germany), using an identical scanning protocol: T2-weighted EPI, 3 × 3 × 3 mm voxel, 15° above AC–PC, TR = 2500 ms, TE = 25 ms, flip angle = 80°, FoV = 192 mm, 64 × 64 matrix; T1-weighted MP-RAGE, 1 × 1 × 1 mm voxel, TR = 1700 ms, TE = 2.01 ms, flip angle = 9°, FoV = 256 mm, 256 × 256 matrix.

The fMRI data were preprocessed and analyzed using the FreeSurfer Functional Analysis Stream Tool (FsFast; The Martinos Center for Biomedical Imaging, Boston). The preprocessing of fMRI data for each participant included 1) co-registration to the structural images, 2) motion correction by realigning to the first volume of each session, 3) slice-timing correction, 4) spatial smoothing by a 6 mm isotropic Gaussian kernel, and 5) normalization to the MNI305 space. Each individual’s brain images were then inflated and reconstructed by the cortical surface model based on the FreeSurfer average brain template (FSAverage, Fischl et al., 1999). The preprocessed fMRI data were subjected to a general linear model (GLM) using the surface-based stream. As the purpose of the fMRI analysis was to provide the most robust assessment of face processing dynamics available, we collapsed functional data across the 3 experimental conditions (congruent angry-neutral + incongruent angry-neutral + neutral-neutral) and focused on the contrast of Faces > Baseline (blank filler trials + ITI). Regressors for invalid trials (with missing, inaccurate, or outlier responses) and the 6 motion parameters (x, y, z for rigid body rotation and translation) were also included in the model.

Our goal was to inform and complement ERP source localization data by incorporating fMRI data to provide spatial information of neural activity from the same sample of children. Thus, we incorporated areas activated in either scanner as valid functional ROIs. For the group-level analysis, a whole-brain one-sample t-test was performed on data from each scanner, separately, to determine regions significantly activated in response to the Faces > Baseline contrast. To obtain functional ROIs large enough to capture sufficient information regarding ERP source dipoles, we used relatively liberal thresholding and correction criteria. Data were first thresholded at the whole-brain, voxel-wise level at uncorrected p < .05. Next, a cluster-wise correction, p < .05, was applied to correct for multiple comparisons (Hagler et al., 2006). The conjunct areas of significant activation across the two scanners were defined as functional ROIs in the surface space of the FSAverage template.
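As a rough illustration of how per-scanner significance maps can be combined into a single ROI mask on a common surface, the following Python sketch operates on per-vertex boolean arrays. The function name and the `mode` argument are hypothetical. Because the text refers both to areas activated "in either scanner" and to "conjunct" areas, the sketch offers both a union and an intersection and makes no claim about which one matches the original FreeSurfer workflow.

```python
import numpy as np


def combine_masks(sig_old, sig_new, mode="union"):
    """Combine two per-vertex significance masks defined on the same surface.

    sig_old, sig_new : array-like of bool, one entry per surface vertex
        True where the cluster-corrected map is significant for that scanner.
    mode : "union" keeps vertices significant in either map;
           "intersection" keeps vertices significant in both maps.
    """
    a = np.asarray(sig_old, dtype=bool)
    b = np.asarray(sig_new, dtype=bool)
    if a.shape != b.shape:
        raise ValueError("masks must be defined on the same set of vertices")
    if mode == "union":
        return a | b
    if mode == "intersection":
        return a & b
    raise ValueError("mode must be 'union' or 'intersection'")


# Toy four-vertex example.
old_scanner = [True, True, False, False]
new_scanner = [True, False, True, False]
either = combine_masks(old_scanner, new_scanner, mode="union")
both = combine_masks(old_scanner, new_scanner, mode="intersection")
```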

2.4. ERP data acquisition and processing

During the ERP dot-probe task, participants’ electroencephalogram (EEG) activity was recorded continuously using a 128-channel geodesic sensor net (Electrical Geodesics Inc., Eugene, Oregon). An analog filter of 6000 Hz and a sampling rate of 1000 Hz were applied to the EEG signals. The signals were referenced to Cz and grounded at PCz. Vertical electrooculography was calculated from four electrodes placed ~1 cm above and below each eye (right = channel 8 - channel 126, left = channel 25 - channel 127). Horizontal electrooculography was calculated as the difference between two electrodes placed ~1 cm from the outer canthus of each eye (channel 43 - channel 120). Impedances were kept below 50 kΩ. The raw EEG data were preprocessed in Brain Vision Analyzer (Brain Products GmbH, Germany). The data were first filtered (0.1–40 Hz), followed by visual identification and interpolation of extremely bad channels (Mean number of bad channels = 3.2, SD = 2.8, Min = 0, Max = 12). Ocular artifacts were corrected using the ICA decomposition method. Specifically, 128 independent components were generated, among which two ocular artifact components (one vertical, one horizontal) were located by visual inspection and removed from the EEG signals. The data were then re-referenced to the average across all channels and epoched from 200 ms before face onset to 500 ms after face onset, with the 200 ms pre-onset interval used for baseline correction. Segmented trials with invalid behavioural responses (incorrect or missing responses, irregular RTs exceeding the window of 150–2000 ms, or ±2SD of the individual’s mean RT) or artifact exceeding ±100 μV were excluded. Across subjects, approximately 40% of the excluded trials (SD = 29%) were due to invalid behavioural responses and 60% were due to ERP noise.
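The trial-exclusion rules in this section can be sketched in Python as a boolean mask over trials. This is an illustrative reading, not the Brain Vision Analyzer procedure that was actually used: the function name and array layout are hypothetical, and the sketch assumes that the individual RT mean and SD are computed from correct trials that already fall inside the 150–2000 ms window, a detail the text does not specify.

```python
import numpy as np


def valid_trial_mask(rts_ms, correct, epochs_uv,
                     rt_window=(150.0, 2000.0), n_sd=2.0, amp_limit_uv=100.0):
    """Return True for trials that pass the behavioural and artifact criteria.

    rts_ms    : (n_trials,) reaction times in ms, NaN for missing responses
    correct   : (n_trials,) bool, whether the response was correct
    epochs_uv : (n_trials, n_channels, n_samples) baseline-corrected EEG in microvolts
    """
    rts = np.asarray(rts_ms, dtype=float)
    correct = np.asarray(correct, dtype=bool)
    epochs = np.asarray(epochs_uv, dtype=float)

    # Behavioural criteria: a response was made and its RT lies in the fixed window.
    responded = ~np.isnan(rts)
    in_window = responded & (rts >= rt_window[0]) & (rts <= rt_window[1])

    # Assumption: the individual mean/SD come from correct, in-window trials.
    reference = rts[in_window & correct]
    mu, sd = reference.mean(), reference.std(ddof=1)
    within_sd = np.abs(rts - mu) <= n_sd * sd  # NaN RTs compare as False

    # Artifact criterion: no sample on any channel exceeds +/- amp_limit_uv.
    clean = np.abs(epochs).max(axis=(1, 2)) <= amp_limit_uv

    return correct & in_window & within_sd & clean


# Toy data: 8 trials, 2 channels, 5 samples per epoch.
rts = [500, 520, 480, 510, 100, float("nan"), 490, 505]
correct = [True, True, True, False, True, False, True, True]
epochs = np.zeros((8, 2, 5))
epochs[6, 0, 2] = 150.0  # one sample above the 100 microvolt limit
mask = valid_trial_mask(rts, correct, epochs)
```

In the toy data, trial 3 is rejected as incorrect, trial 4 for an RT below 150 ms, trial 5 for a missing response, and trial 6 for an amplitude artifact.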

2.5. ERP source localization analysis

In order to achieve sufficient power (i.e., number of valid trials) to conduct the source localization analysis, the artifact-free ERPs were aggregated across all three experimental conditions and time-locked to the onset of all faces for each participant. This is also in line with our previous work (Thai et al., 2016), which found no difference between angry-neutral and neutral-neutral faces in dot-probe elicited ERPs. Participants with at least 30 valid trials across the three conditions were considered for the subsequent source localization analysis.

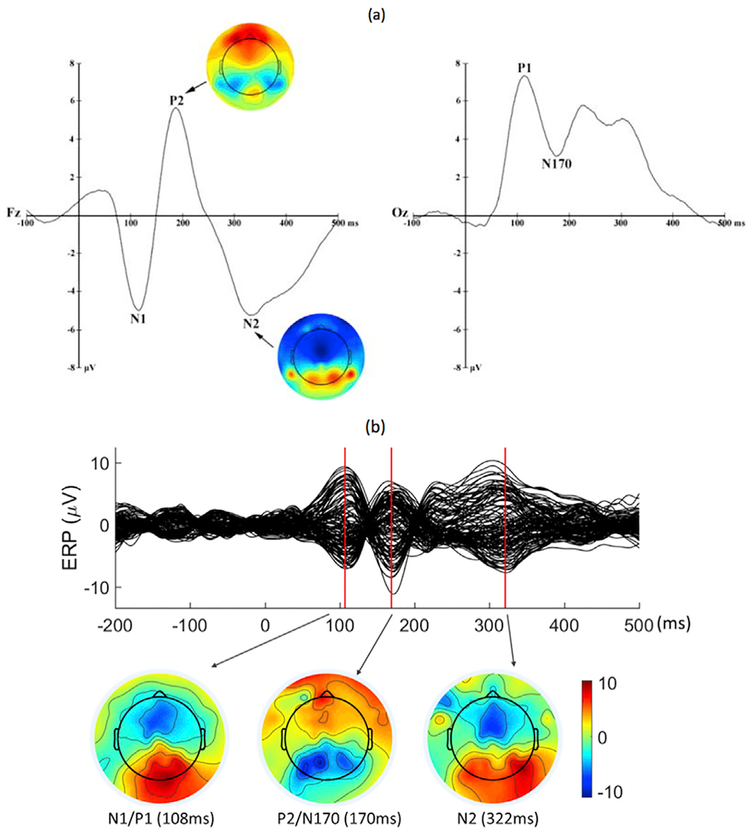

Next, we selected three time points within the 500 ms ERP segment for source localization, based on our recent ERP findings from the same dot-probe paradigm (Thai et al., 2016). The five ERP components included early visual processing indices P1 and N1, the face-specific N170, the attention-related P2, and the later, more cognitively tinged, N2. As illustrated in Fig. 2a, the P1 (over the occipital electrodes) and N1 (over the fronto-central electrodes) components were quantified within the 40–140 ms and 60–140 ms time windows, respectively, with similar peak latencies around 110 ms. N170 (occipital) and P2 (fronto-central) were quantified within the 140–240 ms and 120–220 ms windows, with close peak latencies around 180 ms. N2 was derived from the 260–360 ms time window and peaked at approximately 320 ms. In the current study, we took these components as a priori psychophysiological markers of face processing, and selected three time points that corresponded to the peak latencies of these components to localize their source activities: Peak 1 for the N1/P1 complex (Mean = 111 ms, SD = 12), Peak 2 for the P2/N170 complex (Mean = 182 ms, SD = 13), and Peak 3 for N2 (Mean = 317 ms, SD = 15). We then conducted the source localization analysis at these three peaks. Of the 74 children with useable ERP data (Mean number of trials = 109.9, SD = 35.3, Min = 31, Max = 170), 54 showed visible peaks at the three time points (Mean number of trials = 107.8, SD = 36.5, Min = 31, Max = 170). For these children, we used individual peak latencies to localize each participant’s ERP sources. For the other 20 children without all three visible peaks (Mean number of trials = 115.5, SD = 32.3, Min = 45, Max = 158), the averaged latencies across participants (111 ms, 182 ms, and 317 ms) were used for missing peaks. Fig. 2b presents the ERP waveforms time-locked to all faces at the 128 channels of an example participant.

Fig. 2.

(a) ERP waveforms at Fz and Oz elicited by all faces of the dot-probe task averaged across participants (Thai et al., 2016). (b) ERP waveforms of a single participant included in the current analysis at the 128 channels elicited by all faces of the dot-probe task.
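The latency-selection logic described in this section (individual peak latency where a peak is visible, group-mean latency otherwise) can be sketched in Python as follows. The automated criterion used here, a within-window extremum that does not sit on the window edge, is a hypothetical stand-in for the authors' judgment of a "visible" peak; the function names are likewise illustrative. The fallback latencies are the group means reported above.

```python
import numpy as np

# Group-mean peak latencies reported in the text (ms).
GROUP_MEAN_LATENCY_MS = {"N1/P1": 111.0, "P2/N170": 182.0, "N2": 317.0}


def peak_latency(times_ms, waveform, window_ms, polarity):
    """Latency of the largest peak inside `window_ms`, or None if none is found.

    polarity : "positive" searches for a maximum, "negative" for a minimum.
    An extremum on the first or last sample of the window is treated as
    "no visible peak" (an assumption of this sketch).
    """
    times = np.asarray(times_ms, dtype=float)
    wave = np.asarray(waveform, dtype=float)
    idx = np.flatnonzero((times >= window_ms[0]) & (times <= window_ms[1]))
    if idx.size < 3:
        return None
    sign = 1.0 if polarity == "positive" else -1.0
    k = int(np.argmax(sign * wave[idx]))
    if k == 0 or k == idx.size - 1:
        return None
    return float(times[idx[k]])


def latency_or_group_mean(latency, component):
    """Use the individual latency if available, else the group mean."""
    return GROUP_MEAN_LATENCY_MS[component] if latency is None else latency


# Synthetic waveforms sampled every 1 ms from -200 to 500 ms.
times = np.arange(-200.0, 501.0, 1.0)
p1_like = np.exp(-((times - 110.0) / 20.0) ** 2)     # positive peak at 110 ms
n170_like = -np.exp(-((times - 180.0) / 20.0) ** 2)  # negative peak at 180 ms
ramp = times.copy()                                  # no peak inside any window

p1_latency = peak_latency(times, p1_like, (40.0, 140.0), "positive")
n170_latency = peak_latency(times, n170_like, (140.0, 240.0), "negative")
missing = peak_latency(times, ramp, (40.0, 140.0), "positive")
used_latency = latency_or_group_mean(missing, "N1/P1")
```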

We conducted the ERP source localization analysis using the Minimum Norm Estimate (MNE) package (Gramfort et al., 2013, 2014). To achieve greater accuracy, we adopted age-appropriate MRI templates with averaged locations of the 128 channels to build the forward head model (Richards et al., 2016; Sanchez et al., 2012). Given the age range of our participants (9.16–12.93 years), nine age-appropriate MRI templates were used (ages 9, 9.5, 10, 10.5, 11, 11.5, 12, 12.5, and 13). For each participant, the template of the closest age was applied, with the 128 channel locations registered to the corresponding voxels in this individual’s structural MR images. On this basis, the three-compartment boundary element head model (BEM; skin, skull, brain; 5120 triangles) was generated using the MNE watershed tool.
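Choosing the closest-age template amounts to a nearest-neighbour lookup over the nine template ages listed above. A minimal Python sketch, with a hypothetical function name, is given below; ties are resolved toward the younger template here, which is an arbitrary choice of this sketch and not something the text specifies.

```python
# Template ages (years) listed in the text.
TEMPLATE_AGES = [9.0, 9.5, 10.0, 10.5, 11.0, 11.5, 12.0, 12.5, 13.0]


def closest_template_age(age_years):
    """Return the template age nearest to the participant's age.

    min() returns the first minimum it encounters, so an age exactly halfway
    between two templates maps to the younger one.
    """
    return min(TEMPLATE_AGES, key=lambda template: abs(template - age_years))


youngest = closest_template_age(9.16)   # youngest participant in the sample
oldest = closest_template_age(12.93)    # oldest participant in the sample
```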

Next, the dynamic Statistical Parametric Mapping (dSPM) method was applied to all participants to reconstruct the source activities of ERP responses at the three a priori time points (i.e., the peak latencies of N1/P1, P2/N170, and N2) on a 2D surface (Bai et al., 2007; Dale et al., 2000). Finally, we averaged the reconstructed source distributions across participants and superimposed them on the FSAverage brain template for illustration purposes.
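For readers unfamiliar with dSPM, the core idea (a minimum-norm current estimate divided by its projected noise standard deviation; Dale et al., 2000) can be written in a few lines of NumPy. This is a simplified sketch under stated assumptions, not the MNE package implementation used in the study: it assumes an identity source covariance, fixed source orientations, and a plain regularization parameter `lambda2`, and it omits whitening, depth weighting, and the package's scaling conventions. All names are hypothetical.

```python
import numpy as np


def dspm_estimate(gain, data, noise_cov, lambda2=1.0 / 9.0):
    """Noise-normalized minimum-norm estimate (simplified dSPM).

    gain      : (n_sensors, n_sources) forward (lead-field) matrix
    data      : (n_sensors,) or (n_sensors, n_times) sensor measurements
    noise_cov : (n_sensors, n_sensors) sensor noise covariance
    lambda2   : regularization parameter (here simply a scalar weight on noise_cov)
    """
    G = np.asarray(gain, dtype=float)
    y = np.asarray(data, dtype=float)
    C = np.asarray(noise_cov, dtype=float)

    # Minimum-norm inverse operator with identity source covariance:
    # W = G^T (G G^T + lambda2 * C)^-1
    W = G.T @ np.linalg.inv(G @ G.T + lambda2 * C)

    # Standard deviation of the noise projected to each source: sqrt(diag(W C W^T)).
    noise_sd = np.sqrt(np.einsum("ij,jk,ik->i", W, C, W))

    current = W @ y
    if current.ndim == 2:
        return current / noise_sd[:, None]
    return current / noise_sd


# Toy check: with an identity lead field and identity noise covariance,
# the normalized estimate reproduces the sensor data.
toy_dspm = dspm_estimate(np.eye(3), np.array([2.0, 0.0, 0.0]), np.eye(3))
```

Because each source estimate is divided by its own noise level, the resulting values are unitless and comparable across cortical locations.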

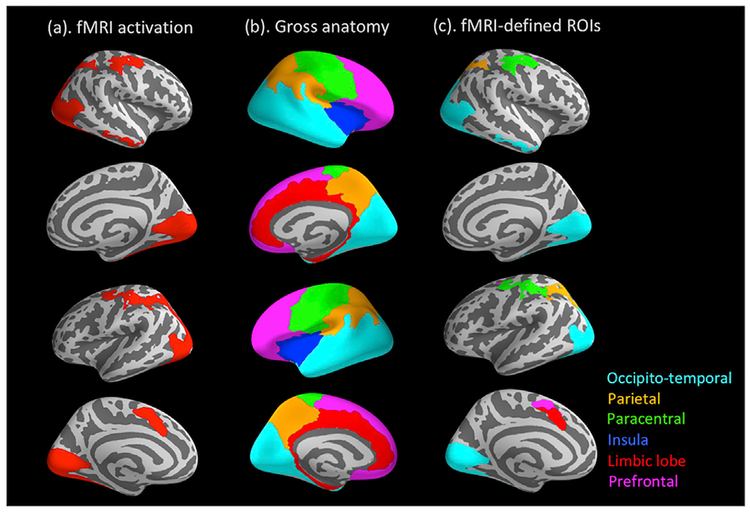

2.6. Integration of the fMRI and ERP data

Following the ERP source localization, we directly integrated the spatial information from the fMRI data into the ERP source data. As stated above, conjunct fMRI activation for the Faces > Baseline contrast across the two scanners was defined as functional ROIs. To reduce the number of ROIs, we further grouped these functionally activated regions across hemispheres according to the six gross anatomical regions defined by the Destrieux atlas in FreeSurfer: occipito-temporal, parietal, paracentral, limbic (including the cingulate cortex), insula, and prefrontal (Fig. 5b; Desikan et al., 2006). These functionally-defined, anatomically-grouped ROIs were then applied to the ERP source data, with the indices of source activity (i.e., averaged dSPM values) extracted for each of the three a priori time points from each ROI. Last, the extracted dSPM values were subjected to a linear mixed-effect model in SPSS (SPSS Inc., Chicago, IL), with Peak, ROI, and the Peak × ROI interaction as fixed-effect factors, and subject as the random-effect factor. As a repeated-measures design was involved here, which might incur correlated residuals within each subject, we included a first-order autoregressive covariance matrix in the mixed model to promote model fit (Peugh and Enders, 2005). For the purpose of the current study, we focused on probing the Peak × ROI interaction to see whether the temporal patterns across the three time points differed between ROIs.

Fig. 5.

(a) fMRI activation in response to the Faces > Baseline contrast; (b) six gross anatomical regions defined by the Destrieux Atlas in FreeSurfer; (c) fMRI activation grouped into the anatomical regions.
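The extraction step in Section 2.6, averaging dSPM values over the vertices of each ROI at each time point, can be sketched in Python as below. The function name and data layout (one ROI label per source vertex, one column per peak) are hypothetical, and the sketch takes a plain mean over vertices; it does not reproduce the FreeSurfer/MNE label handling used in the study, nor the subsequent mixed-effect model fitted in SPSS.

```python
import numpy as np


def roi_mean_dspm(dspm, vertex_roi, roi_names):
    """Average dSPM values over the vertices belonging to each ROI.

    dspm       : (n_vertices, n_peaks) dSPM value per source vertex and time point
    vertex_roi : length n_vertices sequence with the ROI name of each vertex
                 (None for vertices outside every ROI)
    roi_names  : ROI names to extract
    Returns a dict mapping ROI name to an (n_peaks,) array of mean dSPM values.
    """
    values = np.asarray(dspm, dtype=float)
    means = {}
    for name in roi_names:
        members = np.array([label == name for label in vertex_roi], dtype=bool)
        if not members.any():
            raise ValueError(f"no vertices labelled {name!r}")
        means[name] = values[members].mean(axis=0)
    return means


# Toy example: 4 vertices, 3 peaks (N1/P1, P2/N170, N2).
toy_dspm = [[1.0, 2.0, 3.0],
            [3.0, 4.0, 5.0],
            [10.0, 10.0, 10.0],
            [0.0, 0.0, 0.0]]
toy_labels = ["occipito-temporal", "occipito-temporal", "prefrontal", None]
roi_means = roi_mean_dspm(toy_dspm, toy_labels, ["occipito-temporal", "prefrontal"])
```

Each participant would contribute one such value per ROI and peak, giving the long-format table (subject, Peak, ROI, dSPM) that a mixed-effect model takes as input.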

3. Results

The supplement presents parallel analyses with (a) participants displaying clear peaks for all three ERP components and (b) participants with useable data for both the ERP and fMRI versions of the dot-probe task.

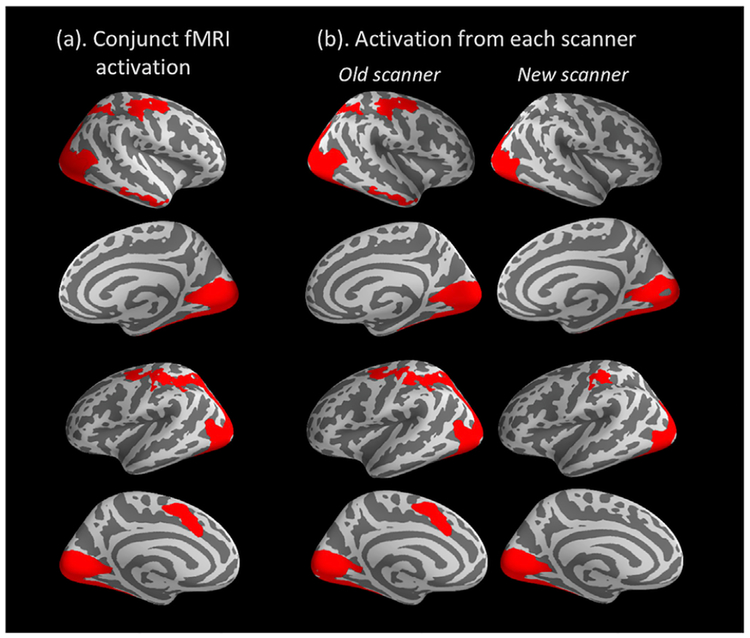

3.1. fMRI activation in response to the faces in the dot-probe task

Brain regions showing significant BOLD activation in response to Faces > Baseline are presented in Table 2 and Fig. 3. A distributed face-processing network was activated across the broad occipital, temporal, and parietal regions, as well as clusters in the central and frontal areas. As shown in Fig. 3, activation from the two scanners largely overlapped. However, data from the old scanner demonstrated more widely distributed activation than the new scanner, particularly in the right inferior temporal, right superior parietal and left superior frontal areas (Fig. 3b). This might be due to the fact that a larger subset of children was scanned in the old scanner (N = 61) versus the new one (N = 38). In line with our goal to define fMRI-informed ROIs for the ERP source data, we used the conjunct areas of activation across the two scanners (Fig. 3a) as functional ROIs for the following analyses.

Table 2.

Significant fMRI activation for the Faces > Baseline contrast for the two scanners.

| Max | X | Y | Z | Annotation | Cluster size (mm²) | # of vertices | Cluster-wise p | 90% CI for p | Hemisphere | Scanner |
|---|---|---|---|---|---|---|---|---|---|---|
| 19.6 | −8.8 | −102 | 5 | lateral occipital | 13479.48 | 23178 | 0.00 | [0.0000, 0.0002] | Left | Old |
| 8.65 | −8.4 | 8.6 | 49.3 | superior frontal | 790.61 | 1682 | 0.01 | [0.0046, 0.0065] | Left | Old |
| 23.4 | 36.4 | −64 | −16 | fusiform | 10889.48 | 16157 | 0.00 | [0.0000, 0.0002] | Right | Old |
| 7.84 | 35 | −18 | 51.6 | precentral | 2321.75 | 5176 | 0.00 | [0.0000, 0.0002] | Right | Old |
| 3.58 | 53.6 | −34 | −17 | inferior temporal | 663.45 | 1016 | 0.01 | [0.0118, 0.0148] | Right | Old |
| 3.41 | 25.9 | −56 | 48.9 | superior parietal | 879.87 | 2040 | 0.00 | [0.0009, 0.0018] | Right | Old |
| 16.3 | −4.5 | −88 | −7.8 | lingual | 9257.55 | 13470 | 0.00 | [0.0000, 0.0002] | Left | New |
| 4.32 | −42 | −31 | 47.1 | postcentral | 743.80 | 1845 | 0.01 | [0.0113, 0.0141] | Left | New |
| 16.2 | 36.8 | −51 | −18 | fusiform | 9755.91 | 14320 | 0.00 | [0.0000, 0.0002] | Right | New |

Max, X, Y, Z, and Annotation describe the peak vertex; cluster size, # of vertices, cluster-wise p, and 90% CI for p describe the cluster.

Max: the value of −log10(p) for the peak vertex in the cluster, where p is the significance level of the peak vertex.

X, Y, Z: coordinates of the peak vertex in the MNI305 space.

Fig. 3.

(a) Group-level significant fMRI activation for the Faces > Baseline contrast across the two scanners, cluster-wise corrected p < .05; (b) activation from the old scanner (Siemens 3T Trio, N = 61) and new scanner (Siemens 3T Prisma, N = 38), respectively.

3.2. ERP source activities at the three time points

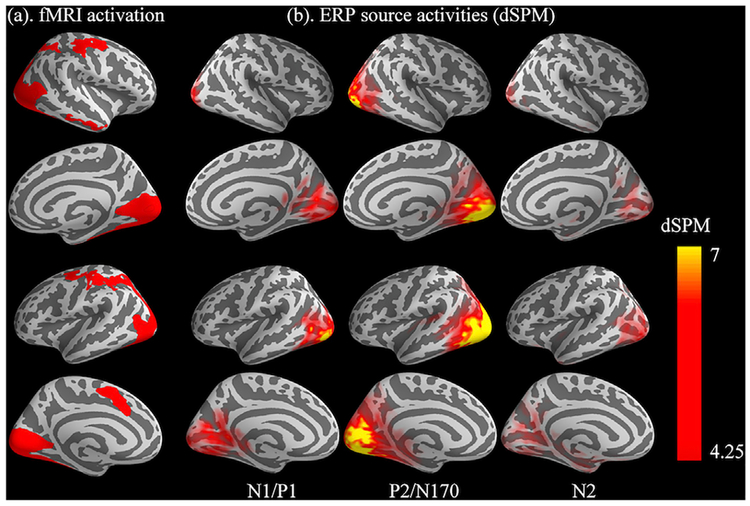

Fig. 4 presents the ERP source activity at the three a priori time points elicited by faces in the dot-probe task (Fig. 4b), in comparison with the BOLD activation evoked by the same faces (Fig. 4a, taken from Fig. 3a). Visual inspection suggested broad correspondence between the two units of analysis: (1) N1/P1 (~111 ms), early visual cortex activation; (2) P2/N170 (~182 ms), enhanced visual activation and a transition towards the anterior end; (3) N2 (~317 ms), late, weaker visual activation and a further anterior transition in both the dorsal and ventral directions.

Fig. 4.

(a) fMRI activation in response to the Faces > Baseline contrast; (b) ERP source activity in response to the same face stimuli at three peak latencies of a priori ERP complex (averaged latencies across participants: 111 ms, 182 ms, and 317 ms).

3.3. Temporal patterns of ERP source activities in different fMRI-defined ROIs

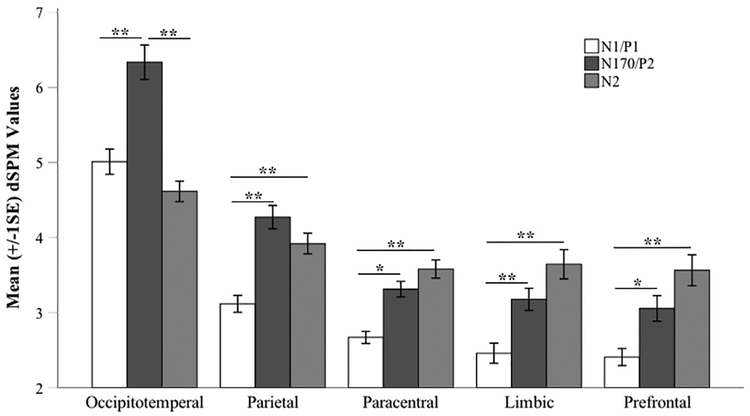

Fig. 5 illustrates how the functionally activated regions from the fMRI data (Fig. 5a) were further grouped into the six gross anatomical regions defined by the Destrieux atlas (Fig. 5b). This eventually generated five ROIs within these anatomical boundaries (Fig. 5c): occipito-temporal, parietal, paracentral, limbic, and prefrontal (no significant fMRI activation was found in the anatomical area of the insula). The averaged dSPM values were extracted from each of the five ROIs at the three a priori time points. These values were subjected to a linear mixed-effect model to investigate the temporal patterns of face-evoked activities in different spatial regions by probing the Peak × ROI interaction.

The overall linear mixed-effect model showed a good fit to the data, pseudo-R-squared3 = 0.42. Unsurprisingly, the main effects of Peak and ROI were significant: Peak, F(2, 217.72) = 38.11, p < .001; ROI, F(4, 749.65) = 154.46, p < .001. More importantly, a significant Peak × ROI interaction was also observed, F(8, 711.32) = 10.65, p < .001, pseudo-R-squared = 0.08. To decompose the patterns of interaction, pairwise comparisons were conducted between the 3 time points within each ROI, respectively. For each ROI, we used a significance level of Bonferroni-corrected p < .05 for pairwise comparisons between the time points. For the overall analysis, we further divided the Bonferroni-corrected 0.05 by 5 (the number of ROIs), and used p < .01 as the significance level to evaluate the results across ROIs. Fig. 6 presents the results of pairwise comparisons. In the occipito-temporal ROI, ERP source activity significantly increased from the first time point (N1/P1) to the second (P2/N170), p < .001, 95% CI = [−1.81, −0.85], then significantly decreased from P2/N170 to N2, p < .001, 95% CI = [1.24, 2.20]. A similar pattern was observed in the parietal ROI, showing a significant increase from N1/P1 to P2/N170, p < .001, 95% CI = [−1.64, −0.67]. However, the decline from P2/N170 to N2 in the parietal ROI was at trend-level only and did not achieve significance, p = .24, 95% CI = [−0.13, 0.83].

Fig. 6.

Mean (±1 SE) dSPM values extracted from each of the five fMRI-defined ROIs at the three a priori time points, N1/P1 (Mean = 111 ms), P2/N170 (Mean = 182 ms), and N2 (Mean = 317 ms). **p < 0.002; *p < 0.01; +p < 0.02.

Within the other three ROIs, interestingly, a distinct temporal pattern was observed across the time points: the ERP source activity continued to increase from the first time point (N1/P1) through the third (N2). First, activity significantly increased from the first peak (N1/P1) to the second (P2/N170) for the paracentral ROI, p < .005, 95% CI = [−1.13, −0.16], the limbic ROI, p < .002, 95% CI = [−1.20, −0.24], and the prefrontal ROI, p < .005, 95% CI = [−1.13, −0.17]. At the third peak (N2), activity was significantly higher than that at N1/P1 for the paracentral ROI, p < .001, 95% CI = [−1.39, −0.43], the limbic ROI, p < .001, 95% CI = [−1.67, −0.71], and the prefrontal ROI, p < .001, 95% CI = [−1.64, −0.67].

4. Discussion

By employing a high-density ERP source localization technique in a unique youth sample with both ERP and fMRI data available from the same emotional face dot-probe paradigm, we examined the source activity of face-sensitive ERP components in 9–12-year-old children. As expected, our results showed that the tomography of the ERP sources broadly corresponded with the fMRI activation evoked by a parallel dot-probe paradigm in the same sample of children. Further, we directly integrated the spatial information from the fMRI data into the ERP data by defining fMRI activation as functional ROIs and applying the ROIs to the ERP source data. Indices of ERP source activity were extracted from these ROIs at three a priori time points, the peak latencies of three ERP complexes that are critical for face processing. By combining complementary information from these two units of analysis, we found that children’s neural activity during face processing showed distinct temporal patterns in different brain regions. Our results are the first to illustrate the spatial validity of high-density source localization of ERP dot-probe data in children. By directly integrating two modalities of data, our findings provide a novel approach to understanding the spatial-temporal profiles of face processing. This approach can then be adapted to address additional questions of interest across tasks and study populations.

First, our fMRI and ERP results obtained in the dot-probe tasks replicated findings from these parallel literatures. In the fMRI task, children showed significant BOLD signal activation across a widely distributed face-processing network, including the lateral occipital areas, lingual gyrus, fusiform gyrus, inferior temporal areas, superior parietal areas, and superior frontal regions. The observed network is consistent with findings in the neuroimaging literature on face processing in both pediatric (Herba and Phillips, 2004; Taylor et al., 2004) and adult populations (Fusar-Poli et al., 2009). Similarly, in our ERP dot-probe data, a group of expected face-evoked components was identified: N1 and P1, reflecting initial, automatic attention allocation to visual-facial stimuli (Eimer and Holmes, 2007; Halit et al., 2000; Itier and Taylor, 2004); N170, capturing the unique structural processing of faces (Eimer and Holmes, 2007); P2, marking sustained visual processing (Eimer and Holmes, 2007); and N2, noting top-down conflict monitoring (Dennis and Chen, 2007, 2009) and inhibitory control (van Veen and Carter, 2002). These components were compatible with previous findings of face-elicited ERPs among adults in the dot-probe paradigm (see review by Torrence and Troup, 2018) and our recently published ERP data in children (Thai et al., 2016).

Next, our source localization analysis at the three peaks of the face-elicited ERP components found that the neural generators of the early ERP complex, N1/P1 (~111 ms), were localized primarily in the visual areas of the occipital cortex. Source activity of the P2/N170 complex (~182 ms) showed enhanced visual cortex activation and a transition towards the anterior end of the brain. Finally, sources of the higher-order N2 component (~317 ms) encompassed weaker visual activation and a further anterior transition, in both the dorsal and ventral directions. As expected, inspecting the tomography of these sources suggested good spatial correspondence with our fMRI data from a parallel dot-probe paradigm.

Our findings are also consistent with previous EEG (Batty and Taylor, 2003; Corrigan et al., 2009; Pizzagalli et al., 2002; Sprengelmeyer and Jentzsch, 2006; Wong et al., 2009) and MEG (Hung et al., 2010; Streit et al., 1999) source localization studies on face processing, although very few of them were conducted in children. Of note, in a group of 10–13-year-old children, Wong et al. (2009) reported dipole sources of face-evoked ERPs with spatio-temporal characteristics comparable to our observations. They found that the dipole sources of the early N1/P1 complex (peaking around 120 ms) were localized in the visual association cortex, including the lingual gyrus. Dipole sources of the face N170 and Vertex Positive Potential (160–200 ms; cf. our P2/N170 complex) were located in the inferior temporal/fusiform regions. Dipoles of the later P2/N2 complex (220–430 ms; cf. our N2) were localized to the superior parietal, inferior temporal, and prefrontal regions (Wong et al., 2009).

Comparable localization findings have been reported in adults as well. For instance, by conducting seeded source modeling on adults’ face-evoked ERP data, Trautmann-Lengsfeld et al. (2013) localized adults’ face-sensitive N170 (140–190 ms, cf. our P2/N170 complex) and posterior negativity (250–350 ms, cf. our N2) to brain regions including the middle occipital gyrus, fusiform gyrus, inferior temporal gyrus, insula, pre-central gyrus, and superior and inferior frontal areas. Likewise, an MEG localization study reported that adults’ face-elicited MEG responses during 150–200 ms originated from broad regions of the bilateral occipital and temporal cortices, while their later responses during 300–350 ms were localized to the right inferior frontal gyrus (Sato et al., 2015). Converging with the previous literature, our current findings further demonstrated the neural generators of face-elicited ERPs in children by means of a more advanced, high-density source imaging method, and provided evidence for the spatial validity of this approach in children.

More importantly, we directly integrated the spatial information from the BOLD signals into the ERP source activity by extracting dSPM values (i.e., indicators of ERP source activity) from fMRI-defined ROIs at three target time points that are known to be critical for face processing in the ERP literature. Examining the temporal patterns between the time points within each ROI allows us to better delineate the spatial-temporal profiles of children’s attentional processes.

First, as identified by the fMRI results, face stimuli activated a broad range of occipito-temporal regions in children, including the lateral occipital areas, lingual gyrus, fusiform gyrus, and inferior temporal areas. The dSPM values of source activity extracted from these regions indicated that these areas were activated predominantly during the early stage of face processing, reached a maximum at the second time point of interest (P2/N170, ~180 ms), and decreased after that. This spatial-temporal profile of the early processing of facial stimuli echoes a widely cited model for face processing, namely, the distributed neural system (Haxby et al., 2000, 2002). This model postulates that facial inputs first go through a “core system” of the human brain that primarily comprises the occipital, fusiform, and superior temporal regions (Haxby et al., 2000, p. 230). The main function of the core system is to conduct visual analysis of faces, including initial allocation of visual attention resources and analyses of the structural and expressive facial features. Our findings from integrating the fMRI and ERP source data supported the activation of such a “core system” in children, with visual analyses occurring during the early stage of face processing (N1/P1 to P2/N170) rather than later in the process (P2/N170 to N2).

A similar “rise and drop” pattern was observed in the superior parietal ROI, as source activity increased and peaked at P2/N170, and then reduced from P2/N170 to N2, although without achieving statistical significance. This region has been associated with visual-spatial attention, in particular attentional shifts (Corbetta, 1998; Corbetta et al., 1995; Haxby et al., 1994). Further, temporal-parietal connections may play a role in transferring expressive, changeable facial features (e.g., facial expressions, gaze directions) from the temporal to the parietal regions for spatial attention (Harries and Perrett, 1991). This might partially explain the difference noted between the parietal ROI and the occipito-temporal ROI. That is, both ROIs showed decreases in source activity from P2/N170 to N2. However, the magnitude was smaller and statistically non-significant in the parietal ROI, implying a directional relation from the occipito-temporal to the parietal regions.

Activated BOLD signals were also observed in the paracentral, limbic, and prefrontal ROIs. Interestingly, the ERP source activity extracted from these ROIs showed distinct temporal patterns in comparison with the occipito-temporal and parietal ROIs. Instead of the “rise and drop” pattern, activity in these ROIs increased across the three time points and reached its maximum at the last peak. These ROIs showed significant increases from N1/P1 to P2/N170 as well as from N1/P1 to N2. From P2/N170 to N2, there was a trend toward an increase, but it did not achieve statistical significance.

The paracentral, limbic, and prefrontal ROIs fit into the “extended system” of Haxby’s face processing model (Haxby et al., 2000, p. 230), noting the socio-emotional meaning of facial information gleaned and relayed by the core, visual-sensory system. The paracentral region, where the primary sensorimotor cortex is located, might be involved in facial expression processing when the viewer interprets the face by overtly or covertly simulating the perceived expression (Adolphs, 1999; Wild et al., 2001). A previous study on 10–13-year-old children observed dipole source activity in a similar area within the somatosensory cortex around 280 ms post face-onset (Wong et al., 2009). They speculated that the participants might be mentally simulating the perceived face to assist their interpretation of the facial cues. While we cannot directly capture this behavior in the current study, similar mechanisms may be at play.

The limbic ROI observed in the current data primarily encompasses an area within the ACC but not the amygdala (see Fig. 5c). Of note, our whole-brain analysis of the fMRI data did not yield significant amygdalar activation. This is not surprising, given that previous fMRI studies using a similar paradigm failed to observe amygdala activation in whole-brain analysis in children and adolescents (Fu et al., 2017; Monk et al., 2006). The ACC area and the other activated areas in the superior frontal region are critical functional regions for top-down attention control, conflict-monitoring, and maintenance of goal-directed executive function in processing socio-emotionally salient stimuli (Corbetta, 1998; Corbetta and Shulman, 2002; Fox and Pine, 2012). In our findings, the temporal patterns of activity for these two regions suggest that the later stage of face processing was dominated by the higher-order, attentional control processes sub-served by regions within the ACC and the prefrontal network. This is also consistent with the ERP literature localizing the generators of the control-related N2 to areas within the ACC (Hauser et al., 2014; van Veen and Carter, 2002), which was also reported in a sample of 6–10-year-olds (Jonkman et al., 2007).

In sum, two distinct spatial-temporal profiles emerged from our fMRI-informed ERP localization data: (1) activity within the occipito-temporal and parietal ROIs, which underlie the visual sensory and visual attention processes, predominates in the earlier stage of processing; (2) activity within ROIs (paracentral, limbic, and prefrontal) that primarily sub-serve top-down control processes predominates in later stages of processing. These two profiles can be mapped onto the “core system” and the “extended system” as proposed in a neural network model of face processing (Haxby et al., 2000, 2002). The correspondence between our child data and the general adult imaging literature suggests that a widely distributed neural network supporting the processing of facial expressions is already in place in the developing brain by late childhood or early adolescence.

This observation is compatible with the developmental literature, which includes substantial work exploring the development of the neural system for face processing (see review by Haist and Anzures, 2017). Ongoing debates center on whether a dedicated face processing system is present from birth or emerges over time, and which aspects of the system develop first. However, there is an emerging consensus that the face processing network presents as more general and distributed during infancy and becomes more specialized and pruned as the developing brain matures over time (Pascalis et al., 2011). For example, previous psychophysiological work comparing multiple age groups of children with adults suggested that the face-specific ERP component, N170, does not become “adult-like” until late childhood (Batty and Taylor, 2003, 2006). It was proposed that a qualitative change in N170 occurs around 10–13 years of age, stabilizing the shape, latency, and lateralization of the N170 (Chung and Thomson, 1995). An fMRI study comparing face processing in 10–12-year-old children and adults observed a developmental shift from a more distributed pattern of activation in children to a more concentrated pattern of activation in adults, suggesting that the neural systems supporting face processing may undergo development and fine-tuning well into late childhood (Passarotti et al., 2003). With only one age group, our current data cannot directly speak to the neural development of face processing. Future studies recruiting multiple age groups of children or adopting a longitudinal approach are needed to characterize the neural development in face processing during different time windows.

Certain limitations exist in our study. First, due to the limited number of trials per condition in the dot-probe task, we had to aggregate the ERP data across experimental conditions to obtain sufficient power for the source localization analysis. We were unable to contrast the neural responses between different conditions (i.e., incongruent versus congruent). Future study designs with more trials per condition will enable us to tap into the potential differences in ERP sources between conditions in the dot-probe paradigm. Examining these between-condition differences will better speak to the neural network underlying children’s attentional processing of threat in particular, and the related regulatory and control processes that are needed to sustain and shift attention. Second, due to the practical complexities of scheduling the data collection facilities, most participants completed the fMRI task prior to the ERP task. This might have potentiated an order effect, although comparing the behavioural performance of the two tasks yielded no significant difference. A more balanced testing order is preferred for future studies. Third, the extended time period needed to collect these complex data overlapped with a scheduled upgrade of the imaging facility.

Finally, in the fMRI analysis, we normalized the functional images of all participants to the MNI305 space but did not apply age-appropriate MRI templates. We chose this approach because our fMRI analysis in FsFast and ERP source analysis in MNE were conducted in the surface, but not the 3D volume, space. As a highly automated fMRI analysis stream tool, FsFast generates the inflated surface based on the FSAverage template for each participant at the group-level analysis, independent of the template used in the initial normalization in the 3D space. Thus, we consider it very difficult, if not technically impossible, to modify the analysis pipeline in FsFast (and MNE) to accommodate age-appropriate MRI templates. Future work focusing on method development will help resolve this issue.

Despite these limitations, our data reflect the RDoC initiative, illustrate the convergence of the two units of analysis, ERP and fMRI, and support the spatial validity of high-density source localization of ERP data in 9–12-year-old children. Given the many constraints of carrying out task-modulated fMRI research in children, conducting high-density ERP source analysis might be a feasible alternative to fMRI. Further, by directly integrating complementary information from these two units of data, we contribute novel evidence on the spatial-temporal dynamics of children’s neural processing of faces. Our findings also provide insights for the future implementation of the more advanced, simultaneous ERP-fMRI recordings (Bonmassar et al., 2001; Mulert et al., 2004) in children, which will help us better understand the development of the spatial-temporal neural profiles of (threat-related) attentional processes.

Acknowledgement and disclosure

This work is supported by a grant from the National Institute of Mental Health (BRAINS R01 MH094633) to Dr. Pérez-Edgar. The funding source had no involvement in study design, data collection and analysis, and preparation and submission of the manuscript. The authors thank the Social, Life, and Engineering Sciences Imaging Center at The Pennsylvania State University for their support in the EEG and 3T MRI facilities. The authors are indebted to the many individuals who contributed to the data collection and data processing. The authors especially gratefully acknowledge the families who participated in these studies.

Footnotes

Declarations of interest

None.

Appendix A. Supplementary data

Supplementary data to this article can be found online at https://doi.org/10.1016/j.neuroimage.2018.09.070.

1. Children in the larger study were characterized for the temperamental trait of behavioural inhibition (BI), ranging across the full BI spectrum from low to high. The current findings did not vary as a function of BI.

2. To test if the participants’ behavioural performance was comparable between the ERP and fMRI tasks, we ran paired-sample t-tests comparing the behavioural accuracy and the response time (RT)-based attentional bias score (RT of incongruent trials – RT of congruent trials) in the 54 subjects with useable data for both the ERP and fMRI tasks. There were no significant differences (p’s > .10).

3. pseudo-R-squared = 1 − [conditional residual variance/unconditional residual variance] (Bickel, 2007).
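The pseudo-R-squared definition above amounts to a proportional reduction in residual variance, which can be written as a one-line Python function. This is a sketch for illustration only: the function name `pseudo_r_squared` is hypothetical, and the variance values in the usage note are invented rather than taken from our models.

```python
def pseudo_r_squared(conditional_resid_var, unconditional_resid_var):
    """Proportional reduction in residual variance, following the definition above.

    conditional_resid_var: residual variance of the model with predictors.
    unconditional_resid_var: residual variance of the model without predictors.
    """
    if unconditional_resid_var <= 0:
        raise ValueError("unconditional residual variance must be positive")
    return 1.0 - conditional_resid_var / unconditional_resid_var
```

For example, with an invented unconditional residual variance of 4.0 and a conditional residual variance of 3.0, `pseudo_r_squared(3.0, 4.0)` gives 0.25, i.e., the predictors account for a quarter of the residual variance of the unconditional model.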

References

- Abend R, Pine DS, Bar-Haim Y, 2012. The TAU-NIMH Attention Bias Measurement Toolbox. Retrieved from http://people.socsci.tau.ac.il/mu/anxietytrauma/research/.

- Adolphs R, 1999. Social cognition and the human brain. Trends Cognit. Sci 3, 469–479.

- Bai X, Towle VL, He EJ, He B, 2007. Evaluation of cortical current density imaging methods using intracranial electrocorticograms and functional MRI. Neuroimage 35, 598–608.

- Bar-Haim Y, Lamy D, Pergamin L, Bakermans-Kranenburg MJ, Van Ijzendoorn MH, 2007. Threat-related attentional bias in anxious and nonanxious individuals: a meta-analytic study. Psychol. Bull 133 (1), 1–24.

- Bathelt J, O’Reilly H, de Haan M, 2014. Cortical source analysis of high-density EEG recordings in children. JoVE 88, e51705.

- Batty M, Taylor MJ, 2003. Early processing of the six basic facial emotional expressions. Cognit. Brain Res 17, 613–620.

- Batty M, Taylor MJ, 2006. The development of emotional face processing during childhood. Dev. Sci 9 (2), 207–220.

- Bickel R, 2007. Multilevel Analysis for Applied Research: It’s Just Regression! The Guilford Press, NY.

- Bonmassar G, Schwartz DP, Liu AK, Kwong KK, Dale AM, Belliveau JW, 2001. Spatiotemporal brain imaging of visual-evoked activity using interleaved EEG and fMRI recordings. Neuroimage 13 (6), 1035–1043.

- Brem S, Bach S, Kucian K, Kujala JV, Guttorm TK, Martin E, et al., 2010. Brain sensitivity to print emerges when children learn letter–speech sound correspondences. Proc. Natl. Acad. Sci. U. S. A 107 (17), 7939–7944.

- Brem S, Halder P, Bucher K, Summers P, Martin E, Brandeis D, 2009. Tuning of the visual word processing system: distinct developmental ERP and fMRI effects. Hum. Brain Mapp 30 (6), 1833–1844.

- Britton JC, Bar-Haim Y, Carver FW, Holroyd T, Norcross MA, Detloff A, et al., 2012. Isolating neural components of threat bias in pediatric anxiety. JCPP (J. Child Psychol. Psychiatry) 53 (6), 678–686.

- Brown HM, Eley TC, Broeren S, Macleod C, Rinck M, Hadwin JA, Lester KJ, 2014. Psychometric properties of reaction time based experimental paradigms measuring anxiety-related information-processing biases in children. J. Anxiety Disord 28 (1), 97–107.

- Buzzell GA, Richards JE, White LK, Barker TV, Pine DS, Fox NA, 2017. Development of the error-monitoring system from ages 9–35: unique insight provided by MRI-constrained source localization of EEG. Neuroimage 157, 13–26.

- Carlson JM, Reinke KS, Habib R, 2009. A left amygdala mediated network for rapid orienting to masked fearful faces. Neuropsychologia 47, 1386–1389.

- Chung M, Thomson DM, 1995. Development of face recognition. Br. J. Psychol 86, 55–87.

- Corbetta M, 1998. Frontoparietal cortical networks for directing attention and the eye to visual locations: identical, independent, or overlapping neural systems? Proc. Natl. Acad. Sci. U. S. A 95, 831–838.

- Corbetta M, Shulman GL, 2002. Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci 3 (3), 201–215.

- Corbetta M, Shulman GL, Miezin FM, Petersen SE, 1995. Superior parietal cortex activation during spatial attention shifts and visual feature conjunction. Science 270, 802–805.

- Corrigan NM, Richards T, Webb SJ, et al., 2009. An investigation of the relationship between fMRI and ERP source localized measurements of brain activity during face processing. Brain Topogr 22 (2), 83–96.

- Cuthbert BN, Kozak MJ, 2013. Constructing constructs for psychopathology: the NIMH research domain criteria. J. Abnorm. Psychol 122, 928–937.

- Dale AM, Liu AK, Fischl BR, Buckner RL, Belliveau JW, et al., 2000. Dynamic statistical parametric mapping: combining fMRI and MEG for high-resolution imaging of cortical activity. Neuron 26, 55–67.

- Dennis TA, Chen CC, 2007. Neurophysiological mechanisms in the emotional modulation of attention: the interplay between threat sensitivity and attentional control. Biol. Psychol 76 (1), 1–10.

- Dennis TA, Chen CC, 2009. Trait anxiety and conflict monitoring following threat: an ERP study. Psychophysiology 46 (1), 122–131.

- Desikan RS, Ségonne F, Fischl B, Quinn BT, Dickerson BC, Blacker D, Buckner RL, Dale AM, Maguire RP, Hyman BT, Albert MS, Killiany RJ, 2006. An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. Neuroimage 31 (3), 968–980.

- Dudeney J, Sharpe L, Hunt C, 2015. Attentional bias towards threatening stimuli in children with anxiety: a meta-analysis. Clin. Psychol. Rev 40, 66–75.

- Eimer M, Holmes A, 2007. Event-related brain potential correlates of emotional face processing. Neuropsychologia 45 (1), 15–31.

- Elshoff L, Groening K, Grouiller F, Wiegand G, Wolff S, Michel C, et al., 2012. The value of EEG-fMRI and EEG source analysis in the presurgical setup of children with refractory focal epilepsy. Epilepsia 53 (9), 1597–1606.

- Fani N, Jovanovic T, Ely TD, Bradley B, Gutman D, Tone EB, Ressler KJ, 2012. Neural correlates of attention bias to threat in post-traumatic stress disorder. Biol. Psychol 90 (2), 134–142.

- Fischl B, Sereno MI, Tootell RB, Dale AM, 1999. High-resolution intersubject averaging and a coordinate system for the cortical surface. Hum. Brain Mapp 8, 272–284.

- Fox NA, Pine DS, 2012. Temperament and the emergence of anxiety disorders. J. Am. Acad. Child Adolesc. Psychiatr 51 (2), 125–128.

- Fu X, Taber-Thomas B, Pérez-Edgar K, 2017. Frontolimbic functioning during threat-related attention: relations to early behavioral inhibition and anxiety in children. Biol. Psychol 122, 98–109.

- Fusar-Poli P, Placentino A, Carletti F, Landi P, Allen P, Surguladze S, et al., 2009. Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. J. Psychiatry Neurosci 34 (6), 418–432.

- Gramfort A, Luessi M, Larson E, Engemann D, Strohmeier D, Brodbeck C, Parkkonen L, Hämäläinen M, 2014. MNE software for processing MEG and EEG data. Neuroimage 86, 446–460.

- Gramfort A, Luessi M, Larson E, Engemann D, Strohmeier D, Brodbeck C, Goj R, Jas M, Brooks T, Parkkonen L, Hämäläinen M, 2013. MEG and EEG data analysis with MNE-Python. Front. Neurosci 7, 267.

- Groening K, Brodbeck V, Moeller F, Wolff S, van Baalen A, Michel CM, et al., 2009. Combination of EEG–fMRI and EEG source analysis improves interpretation of spike-associated activation networks in paediatric pharmacoresistant focal epilepsies. Neuroimage 46 (3), 827–833.

- Hagler DJ Jr., Saygin AP, Sereno MI, 2006. Smoothing and cluster thresholding for cortical surface-based group analysis of fMRI data. Neuroimage 33 (4), 1093–1103.

- Haist F, Anzures G, 2017. Functional development of the brain’s face-processing system. Wiley Interdisciplinary Reviews. Cognitive Science 8 (1–2). 10.1002/wcs.1423.

- Halit H, de Haan M, Johnson MH, 2000. Modulation of event-related potentials by prototypical and atypical faces. Neuroreport 11 (9), 1871–1875.

- Hardee JE, Benson BE, Bar-Haim Y, Mogg K, Bradley BP, Chen G, et al., 2013. Patterns of neural connectivity during an attention bias task moderate associations between early childhood temperament and internalizing symptoms in young adulthood. Biol. Psychiatry 74 (4), 273–279.

- Harries M, Perrett D, 1991. Visual processing of faces in temporal cortex: physiological evidence for a modular organization and possible anatomical correlates. J. Cognit. Neurosci 3, 9–24.

- Hauser TU, Iannaccone R, Stämpfli P, Drechsler R, Brandeis D, Walitza S, Brem S, 2014. The feedback-related negativity (FRN) revisited: new insights into the localization, meaning and network organization. Neuroimage 84 (1), 159–168.

- Haxby JV, Hoffman EA, Gobbini MI, 2000. The distributed human neural system for face perception. Trends Cognit. Sci 4 (6), 223–233.

- Haxby JV, Hoffman EA, Gobbini MI, 2002. Human neural systems for face recognition and social communication. Biol. Psychiatry 51 (1), 59–67.

- Haxby J, Horwitz B, Ungerleider LG, Maisog JM, Pietrini P, Grady CL, 1994. The functional organization of human extrastriate cortex: a PET–rCBF study of selective attention to faces and locations. J. Neurosci 14, 6336–6353.

- Herba C, Phillips M, 2004. Annotation: development of facial expression recognition from childhood to adolescence: behavioural and neurological perspectives. JCPP (J. Child Psychol. Psychiatry) 45 (7), 1185–1198.

- Hung Y, Smith ML, Bayle DJ, Mills T, Cheyne D, et al., 2010. Unattended emotional faces elicit early lateralized amygdala-frontal and fusiform activations. Neuroimage 50, 727–733.

- Insel T, Cuthbert B, Garvey M, Heinssen R, Pine DS, Quinn K, Wang P, 2010. Research domain criteria (RDoC): developing a valid diagnostic framework for research on mental disorders. Am. J. Psychiatry 167, 748–751.

- Itier RJ, Taylor MJ, 2004. Face recognition memory and configural processing: a developmental ERP study using upright, inverted, and contrast-reversed faces. J. Cognit. Neurosci 16 (3), 487–502.

- Jonkman LM, Sniedt FLF, Kemner C, 2007. Source localization of the Nogo-N2: a developmental study. Clin. Neurophysiol 118 (5), 1069–1077.

- Kessler RC, Berglund P, Demler O, Jin R, Merikangas KR, Walters EE, 2005. Lifetime prevalence and age-of-onset distribution of DSM-IV disorders in the national comorbidity survey replication. Arch. Gen. Psychiatr 62, 593–602.

- Liu P, Taber-Thomas B, Fu X, Perez-Edgar K, 2018. Biobehavioral markers of attention bias modification in temperamental risk for anxiety: a randomized control trial. J. Am. Acad. Child Adolesc. Psychiatr 57, 103–110.

- MacLeod C, Mathews AM, Tata P, 1986. Attentional bias in emotional disorders. J. Abnorm. Psychol 95, 15–20.

- Mogg K, Bradley BP, 1999. Some methodological issues in assessing attentional biases for threatening faces in anxiety: a replication study using a modified version of the probe detection task. Behav. Res. Ther 37 (6), 595–604.

- Monk CS, Nelson EE, McClure EB, Mogg K, Bradley BP, Leibenluft E, et al., 2006. Ventrolateral prefrontal cortex activation and attentional bias in response to angry faces in adolescents with generalized anxiety disorder. Am. J. Psychiatry 163 (6), 1091–1097.

- Monk CS, Telzer EH, Mogg K, Bradley BP, Mai X, Louro HM, et al., 2008. Amygdala and ventrolateral prefrontal cortex activation to masked angry faces in children and adolescents with generalized anxiety disorder. Arch. Gen. Psychiatr 65 (5), 568–576.

- Mueller EM, Hofmann SG, Santesso DL, Meuret AE, Bitran S, Pizzagalli DA, 2009. Electrophysiological evidence of attentional biases in social anxiety disorder. Psychol. Med 39 (7), 1141–1152.

- Mulert C, Jäger L, Schmitt R, Bussfeld P, Pogarell O, Möller HJ, et al., 2004. Integration of fMRI and simultaneous EEG: towards a comprehensive understanding of localization and time-course of brain activity in target detection. Neuroimage 22 (1), 83–94.

- Ortiz-Mantilla S, Hämäläinen JA, Benasich AA, 2011. Time course of ERP generators to syllables in infants: a source localization study using age-appropriate brain templates. Neuroimage 59 (4), 3275–3287.

- Pascalis O, de M. de Viviés X, Anzures G, Quinn PC, Slater AM, Tanaka JW, Lee K, 2011. Development of face processing. Wiley Interdisciplinary Reviews: Cognit. Sci 2 (6), 666–675. 10.1002/wcs.146.

- Passarotti AM, Paul BM, Bussiere JR, Buxton RB, Wong EC, Stiles J, 2003. The development of face and location processing: an fMRI study. Dev. Sci 6 (1), 100–117.

- Pérez-Edgar K, Bar-Haim Y, 2010. Application of cognitive-neuroscience techniques to the study of anxiety-related processing biases in children. In: Hadwin J, Field A (Eds.), Information Processing Biases in Child and Adolescent Anxiety. John Wiley & Sons, Chichester, UK, pp. 183–206.

- Pérez-Edgar K, Bar-Haim Y, McDermott JNM, Chronis-Tuscano A, Pine DS, Fox NA, 2010. Attention biases to threat and behavioral inhibition in early childhood shape adolescent social withdrawal. Emotion 10 (3), 349–357.

- Pérez-Edgar K, Taber-Thomas B, Auday E, Morales S, 2014. Temperament and attention as core mechanisms in the early emergence of anxiety. In: Lagattuta K (Ed.), Children and Emotion: New Insights into Developmental Affective Science, vol. 26. Karger Publishing, pp. 42–56.

- Peugh JL, Enders CK, 2005. Using the SPSS mixed procedure to fit cross-sectional and longitudinal multilevel models. Educ. Psychol. Meas 65 (5), 717–741.

- Pizzagalli DA, Lehmann D, Hendrick AM, Regard M, Pascual-Marqui RD, et al., 2002. Affective judgments of faces modulate early activity (approximately 160 ms) within the fusiform gyri. Neuroimage 16, 663–677.

- Pourtois G, Grandjean D, Sander D, Vuilleumier P, 2004. Electrophysiological correlates of rapid spatial orienting towards fearful faces. Cerebr. Cortex 14, 619–633.

- Price RB, Siegle GJ, Silk JS, Ladouceur CD, McFarland A, Dahl RE, Ryan ND, 2014. Looking under the hood of the dot-probe task: an fMRI study in anxious youth. Depress. Anxiety 31 (3), 178–187.

- Richards JE, Sanchez C, Phillips-Meek M, Xie W, 2016. A database of age-appropriate average MRI templates. Neuroimage 124 (Pt B), 1254–1259.

- Sanchez CE, Richards JE, Almli CR, 2012. Age-specific MRI templates for pediatric neuroimaging. Dev. Neuropsychol 37, 379–399.

- Santesso DL, Meuret AE, Hofmann SG, Mueller EM, Ratner KG, Roesch EB, Pizzagalli DA, 2008. Electrophysiological correlates of spatial orienting towards angry faces: a source localization study. Neuropsychologia 46 (5), 1338–1348.

- Sato W, Kochiyama T, Uono S, 2015. Spatiotemporal neural network dynamics for the processing of dynamic facial expressions. Sci. Rep 5, 12432.

- Schulz E, Maurer U, van der Mark S, Bucher K, Brem S, Martin E, Brandeis D, 2008. Impaired semantic processing during sentence reading in children with dyslexia: combined fMRI and ERP evidence. Neuroimage 41 (1), 153–168.

- Sprengelmeyer R, Jentzsch I, 2006. Event related potentials and the perception of intensity in facial expressions. Neuropsychologia 44, 2899–2906.

- Streit M, Ioannides AA, Liu L, Wölwer W, Dammers J, et al., 1999. Neurophysiological correlates of the recognition of facial expressions of emotion as revealed by magnetoencephalography. Cognit. Brain Res 7, 481–491.

- Taylor MJ, Batty M, Itier RJ, 2004. The faces of development: a review of early face processing over childhood. J. Cognit. Neurosci 16 (8), 1426–1442.

- Telzer EH, Mogg K, Bradley BP, Mai X, Ernst M, Pine DS, Monk CS, 2008. Relationship between trait anxiety, prefrontal cortex, and attention bias to angry faces in children and adolescents. Biol. Psychol 79 (2), 216–222.

- Thai N, Taber-Thomas BC, Pérez-Edgar KE, 2016. Neural correlates of attention biases, behavioral inhibition, and social anxiety in children: an ERP study. Developmental Cognitive Neuroscience 19 (6), 200–210.

- Torrence RD, Troup LJ, 2018. Event-related potentials of attentional bias toward faces in the dot-probe task: a systematic review. Psychophysiology (in press).

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, et al., 2009. The NimStim set of facial expressions: judgments from untrained research participants. Psychiatr. Res 168 (3), 242–249.

- Trautmann-Lengsfeld SA, Domínguez-Borràs J, Escera C, Herrmann M, Fehr T, 2013. The perception of dynamic and static facial expressions of happiness and disgust investigated by ERPs and fMRI constrained source analysis. PloS One 8 (6), e66997.

- van Veen V, Carter CS, 2002. The anterior cingulate as a conflict monitor: fMRI and ERP studies. Physiol. Behav 77 (4), 477–482.

- White LK, Britton JC, Sequeira S, Ronkin EG, Chen G, Bar-Haim Y, et al., 2016. Behavioral and neural stability of attention bias to threat in healthy adolescents. Neuroimage 136, 84–93.

- White LK, Helfinstein SM, Reeb-Sutherland BC, Degnan KA, Fox NA, 2009. Role of attention in the regulation of fear and anxiety. Dev. Neurosci 31, 309–317.

- Wild B, Erb M, Bartels M, 2001. Are emotions contagious? Evoked emotions while viewing emotionally expressive faces: quality, quantity, time course and gender differences. Psychiatr. Res 102, 109–124.

- Wong TKW, Fung PCW, McAlonan GM, Chua SE, 2009. Spatiotemporal dipole source localization of face processing ERPs in adolescents: a preliminary study. Behav. Brain Funct 5, 16.