Abstract

For objects larger than the depth of focus of an imaging system, one must account for wavefield propagation effects within the object as is done in diffraction tomography, diffraction microscopy, and multislice ptychographic tomography. We show here that if the imaging method used reconstructs Na planes along each viewing direction, one can reduce the number of illumination directions required to fill Fourier space by a factor of Na, relaxing the usual Crowther criterion for tomography. This provides a conceptual basis to explain two recent experiments where multiple axial planes were imaged per viewing direction, and tomographic images were obtained with good 3D spatial resolution even though fewer illumination directions were used than one would have expected from the Crowther criterion.

1. INTRODUCTION

Early in the development of tomographic imaging, Crowther, DeRosier, and Klug put forward [1, Sec. 6.2] a simple relationship of

$$N_\theta = \frac{\pi D}{d} \tag{1}$$

between the maximum size D of an object to be imaged and the number of illumination angles Nθ required to obtain full Fourier domain sampling to reconstruct a 3D object at an isotropic spatial resolution d. This relationship, discussed in Sec. 2, is commonly referred to as the Crowther criterion.

Multislice ptychographic tomography, based on multislice ptychography [2], has recently been introduced by Li and Maiden [3] as a way to obtain three-dimensional reconstructions of objects that extend beyond the depth of focus of an imaging system or its equivalent. Li and Maiden observed that one can obtain a high quality 3D reconstruction with fewer illumination angles than would be expected for conventional tomography based on the Crowther criterion, but provided no simple expression for how much the Crowther criterion could be relaxed. In a somewhat different approach, Hovden et al. used through-focal depth sectioning in a scanning transmission electron microscope, and they also obtained high resolution 3D reconstructions with fewer illumination angles than required by the Crowther criterion [4], yet again no specific expression was given for how much one can relax the Crowther criterion. We put forward here an explanation based on the fact that resolving objects into separate planes along one viewing direction fundamentally alters the transverse-axial Fourier space distribution of the information obtained, so that these observations are in fact expected for fundamental reasons. This reduction in the number of illumination angles required should also apply to other 3D reconstruction methods that allow for wavefield propagation effects within the specimen, such as diffraction microscopy [5–7].

2. THE CROWTHER CRITERION

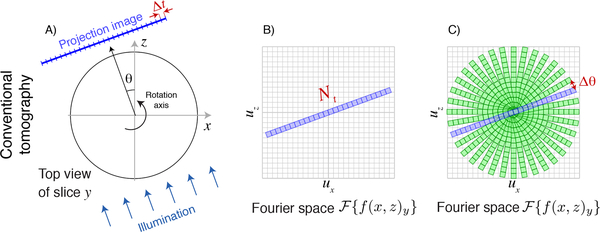

Tomography is based on the acquisition of pure projection images of an object as it is rotated, providing the data needed to obtain a 3D reconstruction. With a 2D imaging detector aligned to a specimen on a vertical rotation axis along y, each yth row of the detector separately obtains a series of 1D images of one vertical slice of the object (Fig. 1A) with Nt transverse pixels, each of size Δt. The transverse-axial Fourier or reciprocal space representation of an individual projection image will therefore have Nt complex pixels extending to a maximum transverse spatial frequency of ut = 1/(2 Δt) based on Nyquist-Shannon sampling [8, 9]. Because a pure projection image provides no information on the relative placement of different object features along the θ = 0 illumination axis direction, each projection image is only one pixel deep (the zero-spatial-frequency pixel) along the axial direction in Fourier space. Therefore, a 1D object slice image acquired at a rotation angle θ = 0 maps into Nx = Nt pixels in the ux direction and Nz = 1 pixel in the uz direction in transverse-axial Fourier space, as shown in Fig. 1B. When the illumination is rotated by an angle θ, so is the transverse-axial Fourier space representation of the information obtained. We note that this transverse-axial or (x, z) representation in Fourier space (ux, uz) is different from the bowtie-shaped support [10–12] of information obtained in the Fourier transform of the Radon transform p(θ, u), which is more analogous to a sinogram representation of tomographic data in (θ, x).
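The statement that a pure projection fills only the zero-frequency row of transverse-axial Fourier space is the discrete Fourier slice (projection-slice) theorem. The minimal NumPy sketch below checks it on a hypothetical random 64 × 64 object slice indexed as [z, x]. It confirms that the 1D transform of the projection along z equals the uz = 0 row of the 2D transform.

```python
import numpy as np

def projection_spectrum(obj_slice):
    """1D Fourier transform of the pure projection of a 2D slice indexed [z, x].

    Axis 0 is the axial (illumination) direction at theta = 0 and axis 1 is the
    transverse direction, so summing over axis 0 gives a pure projection.
    """
    projection = obj_slice.sum(axis=0)
    return np.fft.fft(projection)

rng = np.random.default_rng(0)
obj = rng.random((64, 64))          # hypothetical object slice with N_z = N_t = 64
spec_1d = projection_spectrum(obj)  # N_t complex pixels along u_x
spec_2d = np.fft.fft2(obj)          # full (u_z, u_x) Fourier space of the slice

# The projection supplies only the u_z = 0 row: N_x = N_t pixels wide, N_z = 1 deep.
print(np.allclose(spec_1d, spec_2d[0, :]))  # True
```

Rotating the object before projecting rotates this single row about the origin, which is the situation depicted in Fig. 1C.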

Fig. 1.

Schematic representation of the Crowther criterion in conventional tomography. In conventional tomography, each slice y of the object along the direction of the rotation axis is mapped onto one row of a detector. One then obtains one-dimensional pure projection images of the object with Nt transverse pixels of width Δt in the transverse direction (A), and a depth of precisely one pixel at zero spatial frequency in the axial direction (because there is no way to distinguish between different axial positions in a pure projection). For an angle θ = 0°, the Fourier transform of this image yields an array with Nx = Nt pixels in the transverse or ux direction and Nz = 1 pixel in the axial or uz direction in transverse-axial Fourier space (B). As the object is rotated, so is the information obtained in Fourier space, so the (ux, uz) Fourier space is filled in as shown in (C). The Crowther criterion of Eq. 3 is effectively a statement that one must provide complete, gap-free coverage of all pixels around the periphery in transverse-axial Fourier space.

As the illumination direction is rotated by θ about the object axis and object slice projection images are obtained, each of these Nt × 1 arrays rotated by θ can in principle be assembled in transverse-axial Fourier space as shown in Fig. 1C. (Note that care must be taken in how data are interpolated from the angular-transverse or (θ, t) array of each projection onto the transverse-axial or (x, z) grid [13–15], and one must apply the |u| ramp filter of filtered backprojection to compensate for the 1/|u| overweighting of information at low transverse-axial spatial frequencies, where there is much information overlap as shown in Fig. 1C.) Once this is done, one can simply take an inverse Fourier transform of the assembled complex information and obtain a transverse-axial real space image of the object slice. In practice, this conceptually simple procedure is not the usual approach in tomography experiments, due to the subtleties noted above of interpolating complex information from tilted projections into pixels in a regular 2D array in Fourier space. In addition, one can use algebraic reconstruction algorithms [16] and their variants, which can incorporate various constraints on the object to reduce artifacts in the reconstructed object slice that would otherwise appear due to incomplete information in Fourier space. However, no matter what reconstruction method is used, the information available in transverse-axial Fourier space is as shown in Fig. 1C.
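The ramp-filter step mentioned above can be sketched for one 1D projection. This minimal NumPy illustration makes simple assumptions: periodic boundaries, no apodization window, and unit pixel size by default. It is not a complete filtered backprojection code, and practical implementations usually zero-pad the data and window the filter.

```python
import numpy as np

def ramp_filter(projection, dt=1.0):
    """Multiply the spectrum of one projection by |u| (the ramp filter).

    Rotated projections overlap near the origin of Fourier space, so sample
    density falls off as 1/|u|; weighting by |u| compensates for that.
    """
    u = np.fft.fftfreq(projection.size, d=dt)   # spatial frequencies in cycles per unit length
    filtered = np.fft.ifft(np.fft.fft(projection) * np.abs(u))
    return filtered.real   # real input and an even filter give a real result

# A constant projection has only zero-frequency content, which the ramp removes.
print(np.allclose(ramp_filter(np.ones(32)), 0.0))  # True
```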

Examination of the assembled information in Fourier space as shown in Fig. 1C reveals the required number of illumination angles Nθ that should be used to obtain full information in transverse-axial Fourier space. The size of one pixel in Fourier space is

$$\Delta u_t = \frac{u_t}{N_t/2} = \frac{1}{N_t\,\Delta_t} \tag{2}$$

because there are Nt/2 pixels running from zero spatial frequency out to the maximum ut.

Because one projection image fills in pixels at both positive and negative maximum spatial frequencies, one needs to cover the circumference of a half-circle, or πut, with Nθ rotational samples at the spacing Δut. Therefore one requires Nθ Δut = πut, giving

$$N_\theta = \frac{\pi u_t}{\Delta u_t} = \frac{\pi}{2} N_t \tag{3}$$

to cause the angular spacing Δθ shown in Fig. 1C to be equal to the regular array pixel spacing of Δut and thus completely fill the circular region in transverse-axial Fourier space with information. Because the maximum object size is D = Nt Δt, and Nyquist-Shannon sampling requires that two half-period pixels be used to represent the finest object period d (giving d = 2 Δt), one has D/d = (Nt Δt)/(2 Δt) = Nt/2, and Eq. 3 reproduces the Crowther criterion [1, Sec. 6.2] of Nθ ≃ πD/d as given in Eq. 1.
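As a worked check of Eqs. 1 to 3, the short Python sketch below evaluates the Crowther criterion for a hypothetical 10 µm object imaged at 20 nm resolution. The numbers are illustrative only.

```python
import math

def crowther_angles(n_t):
    """Eq. 3: number of projection angles N_theta = (pi/2) * N_t."""
    return math.pi * n_t / 2

def fourier_pixel_size(n_t, dt):
    """Eq. 2: Fourier pixel size Delta u_t = u_t / (N_t/2) = 1/(N_t * dt)."""
    u_t = 1.0 / (2.0 * dt)      # maximum (Nyquist) transverse spatial frequency
    return u_t / (n_t / 2.0)

# Hypothetical example: object of diameter D = 10 um imaged at d = 20 nm resolution.
D, d = 10e-6, 20e-9
dt = d / 2                      # Nyquist-Shannon sampling: d = 2 * dt
n_t = round(D / dt)             # D = N_t * dt, so N_t = 1000 pixels
print(n_t, math.ceil(crowther_angles(n_t)))   # prints: 1000 1571
```

Note that crowther_angles(n_t) agrees with π D/d from Eq. 1, because D/d = Nt/2.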

3. MULTISLICE PTYCHOGRAPHIC TOMOGRAPHY

One way to obtain pure projection images of a sufficiently thin 3D object is through the use of ptychography. In 2D imaging via ptychography, one illuminates a larger object with a finite-extent coherent illumination spot, and then moves the spot to a series of overlapping positions on the object while collecting the far-field diffraction intensities [21, 22]. The fact that one object feature appears in many diffraction patterns due to the probe (illumination spot) overlap means that iterative phase retrieval algorithms [23, 24] can serve as the basis for robust reconstructions of the complex exit wave leaving the object [25, 26]. This has led to the development of x-ray ptychographic tomography [27] for nanoscale 3D imaging.
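The data collection just described can be sketched as a forward model. The minimal NumPy sketch below assumes the thin-object (pure projection) approximation, a hypothetical 96 × 96 phase object, and a circular probe inside a 32 × 32 array. It also assumes an 8-pixel raster step, so that neighboring illumination spots overlap. It simulates diffraction intensities only and does not perform phase retrieval.

```python
import numpy as np

def far_field_intensity(obj, probe, pos):
    """Far-field diffraction intensity for one ptychographic scan position.

    Thin-object assumption: the exit wave is the probe multiplied by the
    object patch it illuminates, and the detector records |FFT|^2.
    """
    r, c = pos
    m, n = probe.shape
    exit_wave = probe * obj[r:r + m, c:c + n]
    return np.abs(np.fft.fft2(exit_wave)) ** 2

rng = np.random.default_rng(1)
obj = np.exp(1j * rng.random((96, 96)))            # hypothetical pure phase object
yy, xx = np.mgrid[-16:16, -16:16]
probe = (xx**2 + yy**2 < 12**2).astype(complex)    # circular spot, radius 12 pixels
step = 8                                           # step < spot diameter: overlapping positions
positions = [(r, c) for r in range(0, 96 - 32 + 1, step)
             for c in range(0, 96 - 32 + 1, step)]
patterns = [far_field_intensity(obj, probe, p) for p in positions]
```

Each object pixel is illuminated at several scan positions, and it is this redundancy that iterative phase retrieval exploits.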

Because objects can in fact extend over a larger axial distance, the pure projection approximation does not always apply. In an imaging system, Fresnel propagation of the point spread function gives rise to a depth of field about the axial focus position beyond which the point spread or probe function undergoes significant change; this gives rise to an axial resolution [28] of δa = 1.22λ/(N.A.)² for a circular lens. If one writes the numerical aperture as being given by the maximum scattering angle detected of N.A. = λ/(2 Δt), one arrives at an axial resolution of

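$$\delta_a = \frac{1.22\,\lambda}{\left(\lambda/(2\Delta_t)\right)^2} = 4.88\,\frac{\Delta_t^2}{\lambda} \tag{4}$$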

Because a wavefield evolves in the axial direction due to the very same Fresnel propagation effect that alters the point spread function, this same limit applies to ptychography; in fact, all x-ray ptychographic tomography experiments have been carried out within this depth of focus limit [29].
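Eq. 4 can be evaluated numerically. The sketch below uses hypothetical values: 10 nm pixels and a wavelength of about 0.124 nm, which corresponds to roughly 10 keV x rays. For comparison, it also computes the empirical maximum plane separation of 5.2 (Δt)²/λ reported in [19].

```python
def depth_of_field(dt, wavelength):
    """Eq. 4: delta_a = 1.22*lambda/NA**2 with NA = lambda/(2*dt), i.e. 4.88*dt**2/lambda."""
    na = wavelength / (2.0 * dt)
    return 1.22 * wavelength / na**2

# Hypothetical example values.
dt, wavelength = 10e-9, 0.124e-9
delta_a = depth_of_field(dt, wavelength)
empirical = 5.2 * dt**2 / wavelength     # empirical maximum usable plane separation from [19]
print(f"Eq. 4: {delta_a * 1e6:.2f} um, empirical: {empirical * 1e6:.2f} um")
```

Both values come out at a few micrometers for these parameters, which illustrates the "fair agreement" noted in the text.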

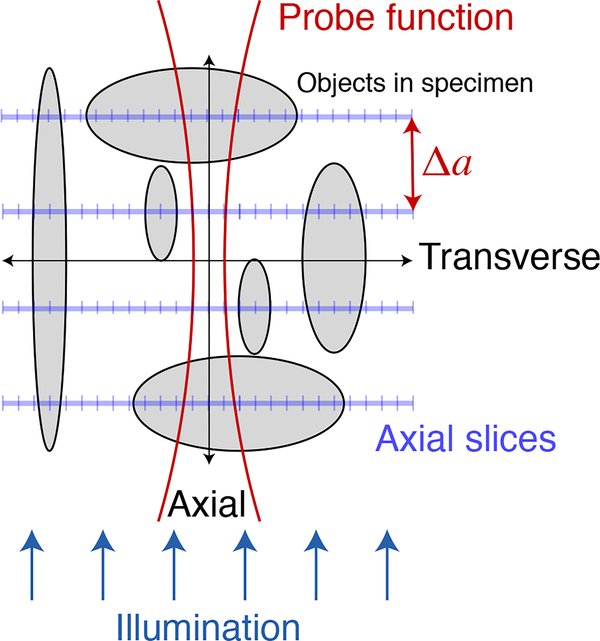

In order to employ the advantages of ptychography (where the image resolution is limited not by the resolution of a lens, but by the maximum angle at which one detects and phases coherent diffraction magnitudes) for objects that extend beyond the depth of focus limit, multislice ptychography was introduced [2] as shown in Fig. 2. This approach treats the object as being composed of several distinct axial planes, each of which contains a “slice” of optical modulation that can be applied to the incident wavefield, followed by free-space propagation to the plane of the next “slice” in a way reminiscent of multislice forward-modeling calculations developed for electron microscopy [30, 31]. This approach has been shown to be successful in visible light [2, 17], x-ray [18, 19], and electron microscopy [20]. (It accounts for multiple optical interaction planes, so from a wavefield propagation point of view it accounts for multiple scattering, though it does not allow for the complete loss of optical path length information due to plural elastic scattering of individual photons that occurs, for example, in fog or clouds.) In x-ray experiments [19], an empirical observation of the maximum usable separation between planes gave 5.2 (Δt)²/λ, which is in fair agreement with Eq. 4.
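The multislice forward model described above alternates between modulation by a slice and free-space propagation to the next slice. The minimal NumPy sketch below assumes a paraxial (Fresnel) angular-spectrum propagator, periodic boundaries, square pixels, and hypothetical parameter values. It illustrates the idea and is not the code used in [2] or [3].

```python
import numpy as np

def fresnel_propagate(wave, dz, wavelength, dx):
    """Free-space propagation over dz using the Fresnel transfer function."""
    ny, nx = wave.shape
    fy = np.fft.fftfreq(ny, d=dx)[:, None]
    fx = np.fft.fftfreq(nx, d=dx)[None, :]
    # Paraxial transfer function; the constant exp(i k dz) phase is omitted.
    h = np.exp(-1j * np.pi * wavelength * dz * (fx**2 + fy**2))
    return np.fft.ifft2(np.fft.fft2(wave) * h)

def multislice_exit_wave(probe, slices, dz, wavelength, dx):
    """Modulate by each slice, then propagate by dz to the next slice."""
    wave = probe
    for k, t in enumerate(slices):
        wave = wave * t                                  # optical modulation at this plane
        if k < len(slices) - 1:
            wave = fresnel_propagate(wave, dz, wavelength, dx)
    return wave

# Hypothetical example: 4 weak phase slices, 10 nm pixels, 4 um slice spacing.
rng = np.random.default_rng(2)
n = 64
yy, xx = np.mgrid[:n, :n] - n / 2
probe = np.exp(-(xx**2 + yy**2) / (2 * 8.0**2)).astype(complex)
slices = [np.exp(0.1j * rng.random((n, n))) for _ in range(4)]
exit_wave = multislice_exit_wave(probe, slices, dz=4e-6, wavelength=0.124e-9, dx=10e-9)
```

Phase-only slices and the propagator both have unit modulus, so total intensity is conserved. This gives a convenient sanity check on the implementation.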

Fig. 2.

Multislice ptychography [2] uses Fresnel propagation of the illumination probe to determine which of many axial slices an object is localized to. While propagating a single probe function between many axial planes (as well as carrying out the standard ptychographic action of translating the probe to multiple transverse positions), at each axial plane the net wavefield is decomposed into contributions from the probe and from the optical modulation provided by any specimen features at that plane. This is possible because the probe is modified as it propagates plane-to-plane, so that prior experimental demonstrations [2, 17–20] have clearly shown the ability to separate even broad, low-spatial-frequency objects into their respective planes along the illumination direction. Multislice ptychography (as well as through-focus imaging methods that succeed to various degrees in separating objects into their respective planes along the illumination direction) therefore delivers axial slice images which can have objects of all transverse sizes (and therefore content at all transverse spatial frequencies) reconstructed at separate axial planes.

Multislice ptychographic tomography has recently been used to image objects to which the pure projection approximation does not apply [3]. In this approach, for each illumination angle a number Na of axial planes were reconstructed through an extended object, as shown in Figs. 2 and 3A. These planes were separated by a distance Δa approximately equal to the depth resolution of Eq. 4. (The use of a much smaller plane separation distance Δa ≪ δa would lead to difficulties in the factorization of features to one plane versus another, and the Fourier plane power would not extend to high spatial frequencies in ua with too fine a plane separation distance.) In these experiments [3], the net optical effect from each plane was then added together (and phase unwrapping was applied) to synthesize a pure projection image from each illumination direction before using standard tomographic reconstruction algorithms to obtain a 3D image.
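The step of adding the per-plane contributions with phase unwrapping can be sketched for phase-only planes. This minimal NumPy sketch assumes one 1D row per plane, with phase varying slowly enough for np.unwrap along the transverse axis to succeed. It omits absorption and 2D unwrapping, and it is not the detailed procedure of [3].

```python
import numpy as np

def synthesize_projection_phase(slice_transmissions):
    """Sum the unwrapped phases of Na reconstructed planes into one projected phase.

    slice_transmissions: complex array of shape (Na, N), one transverse row per plane.
    """
    phases = np.angle(slice_transmissions)      # wrapped into (-pi, pi]
    phases = np.unwrap(phases, axis=-1)         # remove 2*pi jumps along the transverse axis
    return phases.sum(axis=0)                   # pure-projection phase of the whole object

# Hypothetical example: 4 planes whose phases ramp up to 3, 6, 9 and 12 radians.
true_phase = np.outer(np.arange(1, 5), np.linspace(0.0, 3.0, 200))
transmissions = np.exp(1j * true_phase)
projected = synthesize_projection_phase(transmissions)
```

Without unwrapping, the summed phase would contain artificial 2π jumps wherever any single plane's phase wrapped.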

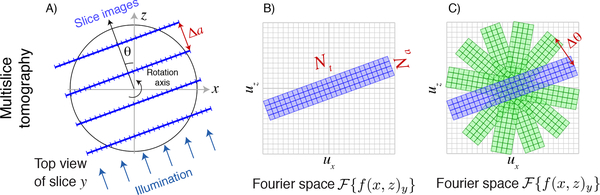

Fig. 3.

Schematic representation of the Crowther criterion in multislice ptychographic tomography. Because multislice ptychography obtains separate reconstructed images for each axial slice (A; see also Fig. 2), and because these objects at each plane can be larger or smaller and thus contain information at all transverse spatial frequencies, the information that a multislice ptychographic image delivers in (ux, uz) or transverse-axial Fourier space is as shown in (B). If one then carries out multislice ptychography from a variety of illumination angles, one fills in Fourier space as shown in (C). The equivalent of the Crowther criterion (Fig. 1C) for multislice ptychographic tomography is then determined by setting the angular separation Δθ between illumination directions to a value such that one provides complete, gap-free coverage of all pixels around the periphery in transverse-axial Fourier space, leading to Eq. 6.

While multislice ptychography provides an especially good way to separate complex objects into their different axial plane positions so that they can then be combined to synthesize a pure projection image, there are also a variety of approaches for taking through-focus image sequences in conventional imaging and assigning features to their plane of sharpest focus [32–36]. These approaches must contend with the nature of the 3D point spread function and its associated optical transfer function for the imaging mode and contrast mechanism chosen, so the per-axial-slice images they deliver may not provide full information at all transverse spatial frequencies. However, they do deliver some information on objects located at depth planes separated by about a depth of field (Eq. 4), again leading to image data as shown in Fig. 2. Such an approach has been used in high angle dark field electron microscopy [4], where it was shown empirically that one then needed fewer illumination angles for a high quality 3D reconstruction than one might have assumed from the Crowther criterion of Eq. 3.
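One generic way to assign features to their plane of sharpest focus is a per-pixel focus measure. The minimal NumPy sketch below uses local Laplacian energy. This is one common choice, but not necessarily the measure used in [32–36] or [4]. The test stack is hypothetical: it has three planes, and blurred copies of a random image stand in for the out-of-focus planes.

```python
import numpy as np

def laplacian(img):
    """Discrete 5-point Laplacian with periodic boundaries."""
    return (np.roll(img, 1, 0) + np.roll(img, -1, 0) +
            np.roll(img, 1, 1) + np.roll(img, -1, 1) - 4 * img)

def box_blur(img, r):
    """Mean over a (2r+1) x (2r+1) neighborhood, periodic boundaries."""
    out = np.zeros_like(img, dtype=float)
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            out += np.roll(np.roll(img, dy, 0), dx, 1)
    return out / (2 * r + 1) ** 2

def sharpest_plane(stack, r=2):
    """Per-pixel index of the plane with the largest local Laplacian energy."""
    focus = np.stack([box_blur(laplacian(im) ** 2, r) for im in stack])
    return np.argmax(focus, axis=0)

# Hypothetical through-focus stack in which plane 1 is in focus.
rng = np.random.default_rng(3)
sharp = rng.random((64, 64))
stack = np.stack([box_blur(sharp, 1), sharp, box_blur(sharp, 2)])
depth_map = sharpest_plane(stack)
```

As the text notes, real through-focus data are shaped by the 3D point spread function, so such a depth map may not carry full information at all transverse spatial frequencies.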

Let us then consider the information provided by multislice imaging along one illumination direction, with a transverse-axial Fourier plane representation as shown in Fig. 3B. The multislice reconstruction will produce Na axial pixels with a maximum axial spatial frequency of ua = 1/(2 Δa). How do these pixels map onto the same grid in Fourier space as the transverse information, given that the real space pixel sizes of Δt and Δa are quite different? These Na reconstruction planes (which extend axially by δa/2 on either side of the plane) must cover the object diameter D, so we have Na Δa = D, but also Nt Δt = D for the object to fit within the transverse field of view, giving Δa = Nt Δt/Na. Including the negative spatial frequency limit −ua, the axial planes are mapped onto Fourier space over a span of 2ua/Δut pixels, which when combined with Δa = Nt Δt/Na gives

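$$\frac{2u_a}{\Delta u_t} = \frac{1/\Delta_a}{1/(N_t\,\Delta_t)} = \frac{N_t\,\Delta_t}{\Delta_a} = N_a \tag{5}$$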

In other words, we arrive at the picture as shown in Fig. 3B where multislice imaging provides Fourier space information over Nt transverse pixels and Na axial pixels all on the same scale in Fourier space.
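The bookkeeping behind Eq. 5 can be checked numerically. The Python sketch below uses hypothetical values Nt = 1000, Δt = 10 nm and Na = 8, and follows the steps in the text. It returns the number of Fourier pixels (of size Δut) spanned axially.

```python
def axial_fourier_pixels(n_t, dt, n_a):
    """Eq. 5: axial span 2*u_a of Na planes, measured in Fourier pixels Delta u_t."""
    D = n_t * dt                 # object fits the transverse field of view
    da = D / n_a                 # Na * Delta_a = D
    u_a = 1.0 / (2.0 * da)       # maximum axial spatial frequency
    du_t = 1.0 / (n_t * dt)      # Fourier pixel size from Eq. 2
    return 2.0 * u_a / du_t      # span from -u_a to +u_a

print(round(axial_fourier_pixels(1000, 10e-9, 8), 6))   # prints: 8.0
```

The result equals Na whatever values are chosen for Nt and Δt, which is the content of Eq. 5.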

Now we return to the approach used earlier to calculate the Crowther criterion, but with multislice imaging instead of pure projections. As before, the size of one pixel in Fourier space is Δut as given by Eq. 2, and we need to cover the circumference of a half-circle, or πut, with Nθ,a rotational samples. However, each rotational sample now extends over not 1 pixel in the axial direction as shown in Fig. 1B, but Na pixels as shown in Fig. 3B. We therefore have

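$$N_{\theta,a}\,N_a\,\Delta u_t = \pi u_t \quad\Rightarrow\quad N_{\theta,a} = \frac{\pi}{2}\,\frac{N_t}{N_a} = \frac{N_\theta}{N_a} \tag{6}$$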

Compared to the standard Crowther criterion result of Nθ = (π/2)Nt as given in Eq. 3, we see in multislice ptychographic tomography (as well as in other multislice imaging methods) that we reduce the number of rotation angles Nθ,a for full Fourier space coverage by a factor of Na, the number of multislice planes reconstructed per view. This is illustrated in Fig. 3C.
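Eq. 6 and the gap-free coverage argument behind it can be checked with a simple geometric model. In the sketch below, distances are in Fourier pixels and the periphery is the circle of radius Nt/2. Each view is idealized as a strip Na pixels wide through the origin. The periphery is sampled at discrete points, so this is a numerical illustration rather than a proof.

```python
import math
import numpy as np

def relaxed_crowther_angles(n_t, n_a):
    """Eq. 6: N_theta,a = (pi/2) * N_t / N_a."""
    return math.pi * n_t / (2 * n_a)

def periphery_covered(n_t, n_a, n_views, samples=2000):
    """Sampled check for gap-free coverage of the Fourier-space periphery.

    Each view at angle theta covers a strip N_a pixels wide centred on a line
    through the origin (N_a = 1 corresponds to a pure projection).
    """
    radius = n_t / 2
    thetas = np.arange(n_views) * np.pi / n_views            # views evenly spaced over 180 deg
    phis = np.linspace(0, np.pi, samples, endpoint=False)    # points on the half circle
    # Perpendicular distance from each periphery point to each view's central line.
    dist = radius * np.abs(np.sin(phis[:, None] - thetas[None, :]))
    return bool(np.all(dist.min(axis=1) <= n_a / 2))

n_t, n_a = 1000, 8
n_views = math.ceil(relaxed_crowther_angles(n_t, n_a))
print(n_views, periphery_covered(n_t, n_a, n_views),
      periphery_covered(n_t, n_a, n_views // 2))   # prints: 197 True False
```

Setting n_a = 1 recovers the conventional case, in which about (π/2)Nt views are needed.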

In the first demonstration of multislice ptychographic tomography [3], it was shown in Li and Maiden’s Fig. 7 that one can synthesize projections from other viewing directions by calculating the shift of separate axial planes along the new synthesized viewing direction before plane addition. These synthesized viewing directions were added with actual viewing direction data to provide high quality 3D reconstructions using fewer actual illumination directions than one would have expected from the Crowther criterion. However, these synthesized projections involve the acquisition of no new image information, so the conclusion of Eq. 6 stands unchanged. Beyond-Crowther reconstruction quality was also observed when using learning algorithms to reconstruct diffraction microscopy data obtained using a limited number of actual illumination directions [7], as well as in the through-focal depth sectioning electron microscopy example described above [4]. Because all of these approaches intrinsically account for multislice propagation effects, the information mapping considerations outlined here and the result of Eq. 6 provide a firm conceptual reason for these observed improvements.
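The synthesis of projections along new viewing directions, by shifting planes before summation, can be sketched as follows. This minimal NumPy version makes several assumptions. The per-plane images are 1D and hold an additive quantity such as phase. The planes are centred about the rotation axis. Shifts are whole pixels with periodic boundaries. Li and Maiden's actual implementation may differ, for example by using sub-pixel shifts. As noted in the text, the result contains no newly measured information.

```python
import numpy as np

def synthesize_view(planes, plane_sep, dt, theta):
    """Synthesize a projection along a tilted direction from Na reconstructed planes.

    planes: real array (Na, Nt) of per-plane additive images (e.g. phase).
    A plane at axial position z is shifted by z*tan(theta), rounded to whole
    pixels, before all planes are summed.
    """
    n_a = planes.shape[0]
    z = (np.arange(n_a) - (n_a - 1) / 2) * plane_sep      # plane positions about the centre
    shifts = np.rint(z * np.tan(theta) / dt).astype(int)
    return sum(np.roll(p, s) for p, s in zip(planes, shifts))

# Hypothetical example: point features at x = 5 in the first and last of 3 planes.
planes = np.zeros((3, 16))
planes[0, 5] = planes[2, 5] = 1.0
view_45 = synthesize_view(planes, plane_sep=1.0, dt=1.0, theta=np.pi / 4)
```

At θ = 0 the shifts vanish, and the function reduces to the plain plane summation used to synthesize a pure projection.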

The approach of adding together the optical contributions of the multislice planes (along with phase unwrapping) has provided a successful demonstration [3] of tomographic imaging of objects that extend beyond the depth resolution limit of Eq. 4. However, it shifts the information mapping from what is shown in Fig. 3B back to a synthesized pure projection as shown in Fig. 1B, with additional synthesized illumination angle information obtained as described above. These additional but synthesized pure projection images obscure the reason why one can collect information from fewer illumination angles than one would expect from the conventional Crowther criterion. That is why we have proposed here modifying the conventional Crowther criterion of Eq. 3 with the multislice imaging version of Eq. 6.

4. ACKNOWLEDGEMENT

I thank Ming Du and Yudong Yao, Northwestern University, for helpful discussions.

5. FUNDING INFORMATION

This research used resources of the Advanced Photon Source, a U.S. Department of Energy (DOE) Office of Science User Facility operated for the DOE Office of Science by Argonne National Laboratory under Contract No. DE-AC02-06CH11357, and was also supported by the National Institutes of Health under grants R01 GM104530 and R01 MH115265.

REFERENCES

- 1. Crowther RA, DeRosier DJ, and Klug A, Proc. Royal Soc. Lond. A 317, 319 (1970).

- 2. Maiden AM, Humphry MJ, and Rodenburg JM, J. Opt. Soc. Am. A 29, 1606 (2012).

- 3. Li P and Maiden AM, Sci. Reports 8, 2049 (2018).

- 4. Hovden R, Ercius P, Jiang Y, Wang D, Yu Y, Abruña HD, Elser V, and Muller DA, Ultramicroscopy 140, 26 (2014).

- 5. Charrière F, Marian A, Montfort F, Kuehn J, Colomb T, Cuche E, Marquet P, and Depeursinge C, Opt. Lett 31, 178 (2006).

- 6. Popescu G, Quantitative phase imaging of cells and tissues (McGraw-Hill, 2011).

- 7. Kamilov US, Papadopoulos IN, Shoreh MH, Goy A, Vonesch C, Unser M, and Psaltis D, Optica 2, 517 (2015).

- 8. Nyquist H, Transactions Am. Inst. Electr. Eng 47, 617 (1928).

- 9. Shannon CE, Proc. Inst. Radio Eng 37, 10 (1949).

- 10. Rattey P and Lindgren A, IEEE Transactions on Acoust. Speech Signal Process. 29, 994 (1981).

- 11. Lindgren AG and Rattey PA, “The inverse discrete Radon transform with applications to tomographic imaging using projection data,” in Advances in Electronics and Electron Physics, vol. 56 Marton C, ed. (Academic Press, New York, 1981), pp. 359–410.

- 12. Lindgren AG and Rattey PA, Signal Process 7, 371 (1984).

- 13. Brouw WN, “Aperture synthesis,” in Radio Astronomy, vol. 14 of Methods in Computational Physics: Advances in Research and Applications Alder B, Fernbach S, and Rotenberg M, eds. (Academic Press, 1975), pp. 131–175.

- 14. O’Sullivan JD, IEEE Transactions on Med. Imaging 4, 200 (1985).

- 15. Dowd BA, Campbell GH, Marr RB, Nagarkar V, Tipnis S, Axe L, and Siddons DP, Proc. SPIE 3772, 224 (1999).

- 16. Gordon R, Bender R, and Herman GT, J. Theor. Biol 29, 471 (1970).

- 17. Godden TM, Suman R, Humphry MJ, Rodenburg JM, and Maiden AM, Opt. Express 22, 12513 (2014).

- 18. Suzuki A, Furutaku S, Shimomura K, Yamauchi K, Kohmura Y, Ishikawa T, and Takahashi Y, Phys. Rev. Lett 112, 053903 (2014).

- 19. Tsai EHR, Usov I, Diaz A, Menzel A, and Guizar-Sicairos M, Opt. Express 24, 29089 (2016).

- 20. Gao S, Wang P, Zhang F, Martinez GT, Nellist PD, Pan X, and Kirkland AI, Nat. Commun 8, 1 (2017).

- 21. Hoppe W, Acta Crystallogr. A 25, 495 (1969).

- 22. Hegerl R and Hoppe W, Berichte der Bunsengesellschaft für physikalische Chemie 74, 1148 (1970).

- 23. Gerchberg RW and Saxton WO, Optik 35, 237 (1972).

- 24. Fienup JR, Opt. Lett 3, 27 (1978).

- 25. Faulkner HML and Rodenburg J, Phys. Rev. Lett 93, 023903 (2004).

- 26. Rodenburg J, Hurst A, Cullis A, Dobson B, Pfeiffer F, Bunk O, David C, Jefimovs K, and Johnson I, Phys. Rev. Lett 98, 034801 (2007).

- 27. Dierolf M, Menzel A, Thibault P, Schneider P, Kewish CM, Wepf R, Bunk O, and Pfeiffer F, Nature 467, 436 (2010).

- 28. Born M and Wolf E, Principles of Optics (Cambridge University Press, Cambridge, 1999), seventh ed.

- 29. Deng J, Vine DJ, Chen S, Nashed YSG, Peterka T, Ross R, Vogt S, and Jacobsen C, Proc. SPIE 9592, 95920U (2015).

- 30. Cowley JM and Moodie AF, Proc. Phys. Soc. Lond. B 70, 486 (1957).

- 31. Cowley JM and Moodie AF, Acta Crystallogr 10, 609 (1957).

- 32. Sugimoto SA and Ichioka Y, Appl. Opt 24, 2076 (1985).

- 33. Schechner YY, Kiryati N, and Basri R, Int. J. Comput. Vis 39, 25 (2000).

- 34. Valdecasas AG, Marshall D, Becerra JM, and Terrero JJ, Micron 32, 559 (2001).

- 35. Piccinini F, Tesei A, Zoli W, and Bevilacqua A, Microsc. Res. Tech 75, 1582 (2012).

- 36. Ficker T, Optik 144, 289 (2017).