Abstract

Background

Barriers to cancer clinical trial participation have been the subject of frequent study, but the rate of trial participation has not changed substantially over time. Studies often emphasize patient-related barriers, but other types of barriers may have greater impact on trial participation. Our goal was to examine the magnitude of different domains of trial barriers by synthesizing prior research.

Methods

We conducted a systematic review and meta-analysis of studies that examined the trial decision-making pathway, using a uniform framework to characterize and quantify structural (trial availability), clinical (eligibility), and patient/physician barrier domains. The systematic review used the PubMed, Google Scholar, Web of Science, and Ovid Medline databases. We used random-effects meta-analysis to estimate the rates of the different barrier domains across studies, adjusting for academic vs community care settings.

Results

We identified 13 studies (nine in academic and four in community settings) with 8883 patients. A trial was unavailable for patients at their institution 55.6% of the time (95% confidence interval [CI] = 43.7% to 67.3%). Further, 21.5% (95% CI = 10.9% to 34.6%) of patients were ineligible for an available trial, 14.8% (95% CI = 9.0% to 21.7%) did not enroll, and 8.1% (95% CI = 6.3% to 10.0%) enrolled. Rates of trial enrollment differed between academic (15.9% [95% CI = 13.8% to 18.2%]) and community (7.0% [95% CI = 5.1% to 9.1%]) settings, but rates of trial unavailability, ineligibility, and nonenrollment did not.

Conclusions

These findings emphasize the enormous need to address structural and clinical barriers to trial participation, which combined make trial participation unachievable for more than three of four cancer patients.

Patient participation in clinical trials forms the backbone of cancer clinical research. Clinical trials are the key step in advancing new treatments and improving outcomes. With greater participation, trials can be conducted more rapidly and efficiently and new treatments can be discovered more quickly, benefitting all patients with cancer. Moreover, trials present patients the opportunity to access the newest available treatments, so access to trials should be equitable and easy for patients.

The vast majority of adult patients with cancer do not participate in clinical trials. It is commonly assumed that only 2%–3% of adult cancer patients participate in clinical trials (1–4), even though most Americans view clinical trial participation favorably (5). This gap between the willingness of patients to participate in trials and their actual participation rates suggests there are numerous barriers to trial participation, many of which are modifiable.

The identification of these barriers to trial participation and efforts to remove such barriers represent critical research objectives for cancer investigators. Barriers to trial participation have been the subject of much research, with a major emphasis on patient-related barriers (6–10). Because patients ultimately decide whether to participate in a trial, this is an appropriate avenue of research. However, this emphasis may be interpreted to indicate that patients themselves are the primary limiting factor to improving trial enrollment.

The rate of trial participation has not changed substantially over time (1–4). One reason could be that patient-related barriers represent only one of several domains of potential trial barriers. Structural factors also play a large role, especially the local availability of trials appropriate for a patient’s cancer type and stage. Efforts to modernize trial eligibility (exclusion) criteria have also been a recent focus (11,12). Physicians play a vital role in helping patients determine treatment choice, and patients often look to their physicians to inform them of clinical trials (13,14). Yet physicians may lack the appropriate support and incentives for participating in clinical research or may choose not to offer trial participation as an option to their patients out of concern that it would interfere with the physician-patient relationship (10,15). Patterns of trial barriers also vary by institutional setting, an important consideration given that about 85% of patients receive treatment in the community, compared with only about 15% in larger, generally urban academic centers (16–21).

Although many studies have examined the type and nature of barriers to clinical trials, few have characterized the treatment decision-making process about trial participation in the context of trial barrier domains. A clear understanding of these barrier domains and the magnitude of their contributions to nonparticipation in trials is important for guiding policy choices about how to direct research and resources to improve trial participation. Our goal was to examine the magnitude of different domains of trial barriers by synthesizing prior research under a uniform framework.

Methods

Conceptual Framework

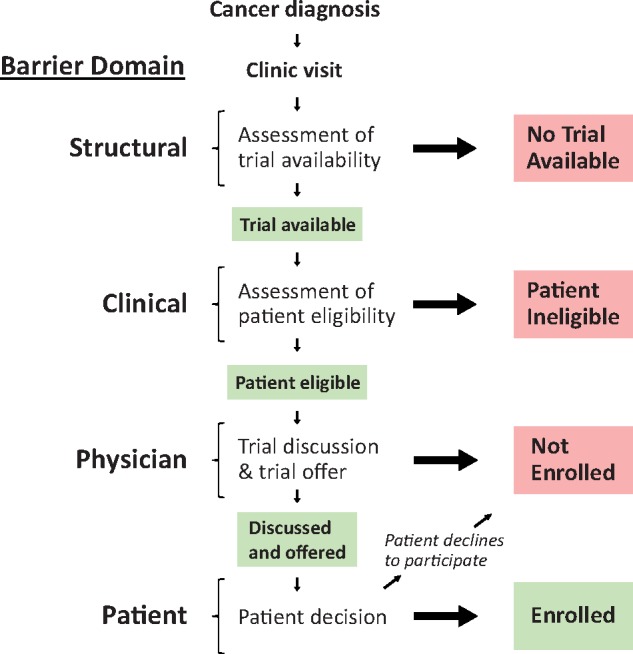

We specified a framework to characterize treatment decision-making about trial participation (Figure 1) (22). This framework stipulates that the treatment decision process is initiated at cancer diagnosis and clinic visit. A determination is made as to whether a trial is available for the patient’s cancer type and stage at the treating institution. The absence of an available trial represents a structural barrier at sites/institutions. If a trial is available, the patient is assessed for eligibility based on criteria that typically include performance status, prior treatments, and comorbidities. Trial eligibility exclusion criteria represent an aspect of trial design that is a potential clinical barrier for patients. If the patient is eligible, the physician may then discuss and offer trial participation to the patient. Only then does the patient have the opportunity to decide whether to participate, a determination made at the end of an otherwise long process. Thus, eligible patients may not enroll due to either not being asked or declining when they are asked. These barrier domains may also vary depending on demographic and socioeconomic attributes (1,6,22–25).

Figure 1.

Cancer clinical trial decision-making framework. A framework for describing the clinical trial decision-making pathway stipulates that the treatment decision process is initiated at cancer diagnosis and clinic visit. A determination is made as to whether a trial is available for the patient’s histology and stage of cancer. The absence of an available trial represents a structural domain barrier at sites or institutions. If a trial is available, the patient is assessed for eligibility, representing a potential clinical domain barrier of the trial design. If the patient is eligible, a trial is then discussed and trial participation is either offered or not offered to the patient; ultimately, the patient decides whether to participate in the trial and may decline (physician and patient domain barriers). Thus, eligible patients may not enroll due to either not being asked or declining when they are asked. Each of these types of barriers may also vary depending on demographic and socioeconomic attributes.

Literature Search

To identify studies that used this framework, we conducted a computerized literature search of the PubMed, Google Scholar, Web of Science, and Ovid Medline databases, following the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines, for articles published between January 1, 1999 and December 31, 2017 (26). We used the search terms “clinical trial accrual,” “clinical trial enrollment,” “enrollment in clinical trials,” “clinical trial enrollment barriers,” and “patient participation in clinical trials” in combination with the term “cancer.” Our full electronic search strategy was therefore: (“clinical trial accrual” OR “clinical trial enrollment” OR “clinical trial enrollment barriers” OR “enrollment in clinical trials” OR “patient participation in clinical trials”) AND “cancer,” filtered to publication dates from January 1, 1999 to December 31, 2017. The full search strategy is outlined in Supplementary Table 1 (available online). The search was conducted in October 2018.
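As a small illustration of how the Boolean strategy above combines its parts, the Python sketch below assembles the query string from the five trial phrases and the disease term. It is not the authors' tooling: the generic syntax (double quotes, OR, AND) is an assumption, and each database applies its own field tags and date filters, so the publication window is only recorded here rather than encoded in the string.

```python
# Phrases and date window transcribed from the Methods. The output syntax is
# generic and would need adapting to each database's own query language (assumption).
TRIAL_PHRASES = [
    "clinical trial accrual",
    "clinical trial enrollment",
    "clinical trial enrollment barriers",
    "enrollment in clinical trials",
    "patient participation in clinical trials",
]
DISEASE_TERM = "cancer"
# Inclusive publication window; applied as a database filter, not inside the string.
PUBLICATION_WINDOW = ("1999-01-01", "2017-12-31")


def build_query(phrases: list[str], disease: str) -> str:
    """OR together the quoted trial phrases, then AND the group with the quoted disease term."""
    any_phrase = " OR ".join(f'"{p}"' for p in phrases)
    return f'({any_phrase}) AND "{disease}"'


print(build_query(TRIAL_PHRASES, DISEASE_TERM))
print("Publication dates: {} to {}".format(*PUBLICATION_WINDOW))
```

Keeping the phrases in a list makes it easy to check that every term named in the text appears exactly once in the final query.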

Inclusion Criteria

The following criteria were used to identify studies included in our analysis. First, the studies were required to have been conducted in the United States. Second, they had to include complete data documenting the clinical trial decision-making process from initial evaluation of trial availability through the patient’s decision about whether to participate. Studies that only asked patients whether they participated in a clinical trial (without evaluating the other pathway elements, eg, trial availability) or about their willingness to participate in general (rather than their decision to participate) were excluded from our analysis. Third, studies had to be representative of the general population of patients in one or more cancer settings. Therefore, studies that examined only specific demographic subgroups were excluded, whereas studies that focused only on specific cancer types were included.

Study Selection, Quality Assessment, and Data Extraction

Titles, abstracts, and full studies were independently screened by two reviewers (JMU and RV) to ensure consistent data collection and to reduce the likelihood of subjective interpretation of study-level results. The same two reviewers extracted data from the selected studies using a predefined table based on the clinical trial decision-making framework described above. Any differences between reviewers about compliance of studies with the inclusion criteria and data extraction were resolved by consensus. Our analysis included both published abstracts and full articles that met the inclusion criteria. Search results from PubMed included only full articles, whereas those from Google Scholar, Web of Science, and Ovid Medline included published abstracts and posters in addition to full articles.

Statistical Analysis

To determine rates of trial unavailability, ineligibility, nonenrollment, and enrollment, we used meta-analysis of proportions with the R package “metafor” (27,28). Forest plots were generated to provide a visual representation of the study-specific effects for each domain. Both fixed and random effects approaches were considered for deriving summary rates. The fixed effects approach provides valid inference about the set of k studies included in the final sample under the assumption that the k studies share a common effect or rate (eg, the rate of patients enrolled) (29,30). In the fixed effects setting, differences in effect size are assumed to arise only from sampling error, so summary measures are weighted according to study sample size. This assumption can be examined using Cochran’s Q statistic, which tests whether the assumption of a common effect is violated; in the setting of multiple category rates, the maximum Q statistic is preferred because it represents the most conservative estimate of the common Q (31–33). We also calculated the proportion of total variation in study estimates due to heterogeneity using the I² statistic, with notable (ie, severe) heterogeneity defined as values greater than 50% (34). A statistically significant Q statistic or a high I² statistic would indicate substantial heterogeneity in the study effect sizes, motivating the use of a random effects approach to better account for between-study variation (29,30,33). A random effects approach takes into account both within- and between-study variance. Given its advantageous properties (it is both efficient and approximately unbiased), we used the restricted maximum-likelihood estimator (35,36).
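The pooling steps described above can be sketched in Python. This is a minimal, hypothetical re-implementation for illustration, not the authors' code (which used metafor in R). It applies the Freeman–Tukey double-arcsine transformation to each study's proportion, estimates the between-study variance τ² by fixed-point iteration on the REML estimating equation, and reports Cochran's Q and I². The back-transformation p ≈ sin²(t) is a simplifying assumption of this sketch; more exact inversions exist. The usage example pools the enrollment rates of the nine academic studies in Table 1 without the setting moderator, so its output is not expected to reproduce any reported estimate.

```python
import math


def ft_double_arcsine(x: int, n: int) -> tuple[float, float]:
    """Freeman-Tukey double-arcsine transform of x events in n patients, and its variance."""
    t = 0.5 * (math.asin(math.sqrt(x / (n + 1))) + math.asin(math.sqrt((x + 1) / (n + 1))))
    return t, 1.0 / (4 * n + 2)


def weighted_mean(y: list[float], w: list[float]) -> float:
    return sum(wi * yi for wi, yi in zip(w, y)) / sum(w)


def cochran_q(y: list[float], v: list[float]) -> float:
    """Inverse-variance weighted squared deviations from the fixed-effect mean."""
    w = [1.0 / vi for vi in v]
    mu = weighted_mean(y, w)
    return sum(wi * (yi - mu) ** 2 for wi, yi in zip(w, y))


def i_squared(q: float, k: int) -> float:
    """Share of total variation attributable to heterogeneity, truncated at 0."""
    return max(0.0, (q - (k - 1)) / q) if q > 0 else 0.0


def reml_tau2(y: list[float], v: list[float], tol: float = 1e-12, max_iter: int = 1000) -> float:
    """Fixed-point iteration: tau2 = sum w^2((y-mu)^2 - v) / sum w^2 + 1 / sum w."""
    tau2 = 0.0
    for _ in range(max_iter):
        w = [1.0 / (vi + tau2) for vi in v]
        mu = weighted_mean(y, w)
        sw2 = sum(wi ** 2 for wi in w)
        new = sum(wi ** 2 * ((yi - mu) ** 2 - vi) for wi, yi, vi in zip(w, y, v)) / sw2 + 1.0 / sum(w)
        new = max(0.0, new)  # a variance cannot be negative
        converged = abs(new - tau2) < tol
        tau2 = new
        if converged:
            break
    return tau2


def back_transform(t: float) -> float:
    """Approximate inverse of the double-arcsine transform (assumption: p ~ sin(t)^2)."""
    return math.sin(min(max(t, 0.0), math.pi / 2)) ** 2


def pool_proportions(events: list[int], sizes: list[int]) -> dict:
    y, v = map(list, zip(*(ft_double_arcsine(x, n) for x, n in zip(events, sizes))))
    tau2 = reml_tau2(y, v)
    w = [1.0 / (vi + tau2) for vi in v]  # random-effects weights
    mu = weighted_mean(y, w)
    se = math.sqrt(1.0 / sum(w))
    q = cochran_q(y, v)
    return {
        "estimate": back_transform(mu),
        "ci": (back_transform(mu - 1.96 * se), back_transform(mu + 1.96 * se)),
        "tau2": tau2,
        "Q": q,
        "I2": i_squared(q, len(y)),
    }


# Enrolled patients and analysis sample sizes for the nine academic studies (Table 1).
academic_enrolled = [39, 35, 37, 25, 142, 11, 141, 80, 150]
academic_n = [247, 199, 269, 253, 909, 88, 1029, 418, 771]
print(pool_proportions(academic_enrolled, academic_n))
```

The fixed-point form is used here only because it needs no optimization library; it solves the same REML estimating equation that a numerical optimizer would.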
Further, based on prior evidence that trial participation patterns differ between academic and community centers, we used meta-regression moderator analyses to examine whether institutional setting moderated the study estimates for any domain (37). If so, the final overall estimate for each domain was weighted according to the proportions of patients estimated to receive cancer care in academic (15%) vs community (85%) settings (16–21). Estimates for each domain were examined individually as proportions, using a double-arcsine transformation (38). Because back-transformed rates may not sum exactly to one, the rates were normalized after pooling and back-transformation, with the estimated 95% confidence intervals adjusted accordingly (ie, centered around the normalized estimates) (31).
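The setting weighting and normalization steps can be illustrated with a short sketch. The setting-specific rates below are hypothetical placeholders, not the study's estimates. The confidence-interval step assumes that "centered around the normalized estimates" means shifting each interval by the same amount as its point estimate while keeping its width; the text does not spell out the exact adjustment.

```python
# Hypothetical setting-specific pooled rates (back-transformed proportions).
# Placeholders for illustration only; they are NOT the study's estimates.
ACADEMIC = {"unavailable": 0.52, "ineligible": 0.22, "not_enrolled": 0.13, "enrolled": 0.16}
COMMUNITY = {"unavailable": 0.57, "ineligible": 0.21, "not_enrolled": 0.15, "enrolled": 0.07}


def weight_by_setting(acad: dict[str, float], comm: dict[str, float],
                      community_share: float = 0.85) -> dict[str, float]:
    """Overall rate per domain: weighted average of the academic and community rates."""
    return {d: (1.0 - community_share) * acad[d] + community_share * comm[d] for d in acad}


def normalize(rates: dict[str, float]) -> dict[str, float]:
    """Rescale the domain rates so that they sum to exactly one."""
    total = sum(rates.values())
    return {d: r / total for d, r in rates.items()}


def recenter_ci(ci: tuple[float, float], raw: float, normalized: float) -> tuple[float, float]:
    """Shift an interval by the change in its point estimate (assumed adjustment; width kept)."""
    shift = normalized - raw
    return ci[0] + shift, ci[1] + shift


overall = weight_by_setting(ACADEMIC, COMMUNITY)
final = normalize(overall)
for domain in final:
    print(f"{domain:<13} weighted={overall[domain]:.4f} normalized={final[domain]:.4f}")
```

Normalizing after weighting guarantees that the four domains partition all patients, which is what allows the unavailable and ineligible shares to be added together.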

To examine the sensitivity of the results to the influence of single studies, we used a “leave one out” procedure by iteratively excluding each of the individual studies and recalculating the overall domain-specific estimates. Results were presented using a tornado plot.
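A leave-one-out loop like the one described above can be sketched as follows. For brevity, this hypothetical sketch pools a single domain (enrollment, using all 13 studies in Table 1) with the double-arcsine and REML steps of a plain random-effects model, omitting the setting moderator, 15:85 weighting, and normalization used in the primary analysis; its numbers are therefore not expected to match Figure 5. The relative change is |estimate without study i − full estimate| / full estimate, and the back-transformation sin²(t) is again an approximation.

```python
import math


def ft(x: int, n: int) -> tuple[float, float]:
    """Freeman-Tukey double-arcsine transform and its sampling variance."""
    t = 0.5 * (math.asin(math.sqrt(x / (n + 1))) + math.asin(math.sqrt((x + 1) / (n + 1))))
    return t, 1.0 / (4 * n + 2)


def pooled_rate(events: list[int], sizes: list[int], tol: float = 1e-12) -> float:
    """REML random-effects pooled proportion, back-transformed with sin(t)^2 (approximation)."""
    y, v = map(list, zip(*(ft(x, n) for x, n in zip(events, sizes))))
    tau2 = 0.0
    for _ in range(1000):  # fixed-point iteration on the REML estimating equation
        w = [1.0 / (vi + tau2) for vi in v]
        mu = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
        sw2 = sum(wi ** 2 for wi in w)
        new = max(0.0, sum(wi ** 2 * ((yi - mu) ** 2 - vi)
                           for wi, yi, vi in zip(w, y, v)) / sw2 + 1.0 / sum(w))
        converged = abs(new - tau2) < tol
        tau2 = new
        if converged:
            break
    w = [1.0 / (vi + tau2) for vi in v]
    mu = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    return math.sin(mu) ** 2


def leave_one_out(events: list[int], sizes: list[int]) -> tuple[float, list[float], float]:
    """Full estimate, estimates with each study dropped in turn, and the largest relative change."""
    full = pooled_rate(events, sizes)
    loo = [pooled_rate(events[:i] + events[i + 1:], sizes[:i] + sizes[i + 1:])
           for i in range(len(events))]
    max_rel_change = max(abs(e - full) / full for e in loo)
    return full, loo, max_rel_change


# Enrolled patients and analysis sample sizes for all 13 studies, in Table 1 order.
enrolled = [39, 35, 37, 25, 142, 11, 141, 80, 150, 166, 40, 42, 78]
sizes = [247, 199, 269, 253, 909, 88, 1029, 418, 771, 2339, 490, 1012, 859]
full, loo, rel = leave_one_out(enrolled, sizes)
print(f"full = {full:.3f}; range = {min(loo):.3f} to {max(loo):.3f}; max relative change = {rel:.1%}")
```

The minimum and maximum of the leave-one-out estimates are exactly the two ends of each bar in a tornado plot.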

To address the potential for study bias to influence the results, we characterized the study profiles for each study included in the meta-analysis. Multiple sensitivity analyses were conducted accordingly. We examined whether the inclusion of studies focusing on specific cancers—rather than all cancers—influenced the results, by excluding this subset of studies. We also examined whether estimates differed by calendar time based on the completion date of study enrollment. We examined the sensitivity of the estimates to the assumed rate (85%) of patients receiving their cancer care in community settings by varying this rate from 75% to 95% in 5% increments. We also used the Begg rank correlation test to identify any evidence of publication bias for any of the domains using the ranktest function in R (27,39). All statistical tests were two-sided. P values of less than .05 were considered statistically significant.
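The Begg rank correlation test can be sketched in a few lines. Following the original Begg and Mazumdar formulation (metafor's ranktest may differ in details, so this is an assumption), each study's transformed estimate is standardized against the fixed-effect mean, and Kendall's tau between these standardized deviates and the sampling variances is tested with a normal approximation. This standard-library sketch uses Kendall's tau-a and applies no tie correction, which a production implementation would add. The usage example applies it to the enrollment counts in Table 1.

```python
import math


def ft(x: int, n: int) -> tuple[float, float]:
    """Freeman-Tukey double-arcsine transform and its sampling variance."""
    t = 0.5 * (math.asin(math.sqrt(x / (n + 1))) + math.asin(math.sqrt((x + 1) / (n + 1))))
    return t, 1.0 / (4 * n + 2)


def begg_rank_test(y: list[float], v: list[float]) -> tuple[float, float]:
    """Kendall's tau-a between standardized deviates and variances, with a two-sided P value."""
    w = [1.0 / vi for vi in v]
    sw = sum(w)
    mu = sum(wi * yi for wi, yi in zip(w, y)) / sw  # fixed-effect (inverse-variance) mean
    # Var(y_i - mu) = v_i - 1/sum(w), which is positive whenever there are >= 2 studies.
    z = [(yi - mu) / math.sqrt(vi - 1.0 / sw) for yi, vi in zip(y, v)]
    k = len(y)
    s = 0  # Kendall's S: concordant minus discordant pairs; tied pairs contribute 0
    for i in range(k):
        for j in range(i + 1, k):
            prod = (z[i] - z[j]) * (v[i] - v[j])
            s += (prod > 0) - (prod < 0)
    tau = s / (k * (k - 1) / 2)
    z_stat = s / math.sqrt(k * (k - 1) * (2 * k + 5) / 18)  # normal approximation
    return tau, math.erfc(abs(z_stat) / math.sqrt(2.0))


enrolled = [39, 35, 37, 25, 142, 11, 141, 80, 150, 166, 40, 42, 78]
sizes = [247, 199, 269, 253, 909, 88, 1029, 418, 771, 2339, 490, 1012, 859]
y, v = map(list, zip(*(ft(x, n) for x, n in zip(enrolled, sizes))))
tau, p = begg_rank_test(y, v)
print(f"Kendall tau = {tau:.3f}, P = {p:.2f}")
```

A strong correlation between effect size and variance would suggest that small studies with extreme rates are over-represented, which is the pattern publication bias tends to produce.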

Results

Identification of Studies

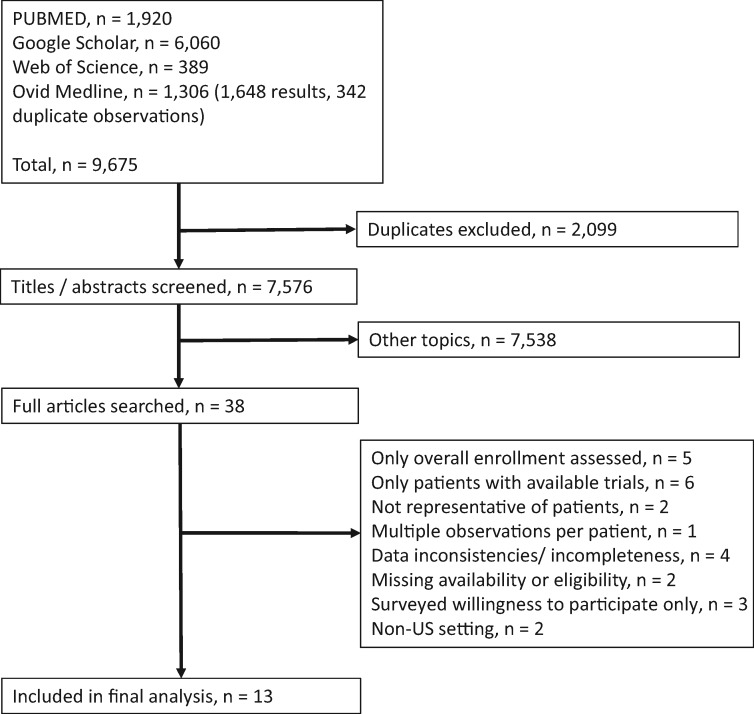

In total, 9675 studies were flagged by the four databases, and after duplicates were excluded, 7576 unique studies were identified (Figure 2). We reviewed the full articles for 38 studies. Twenty-five were excluded; the reasons for exclusion are noted briefly in Figure 2, with further details provided in Supplementary Table 2 (available online). Thirteen studies comprising 8883 patients met our inclusion criteria (Table 1; Figure 2) (40–52). Nine studies focused on academic care settings and four on community care settings. The majority of studies (7 of 13, 53.8%) examined patient decision-making patterns in all types of cancers; the remaining studies focused on breast only (n = 2), lung only (n = 2), prostate only (n = 1), and cervix/uterine cancers (n = 1) (Table 2). All studies included all stages of disease. Most studies focused on new patients or patients with a new diagnosis. The recruitment period across the 13 studies spanned 1997–2012.

Figure 2.

Selection of studies included in the analysis.

Table 1.

Calculations of trial unavailability, ineligibility, nonenrollment, and enrollment among 13 studies included in meta-analysis

| Study category | Lara, et al., 2001 (49) | Martel, et al., 2004 (50) | Umutyan, et al., 2008 (52) | Baggstrom, et al., 2010 (40) | Javid, et al., 2012 (46) | Kanarek, et al., 2012 (47) | Horn, et al., 2013 (45) | Swain-Cabriales, et al., 2013 (51) | Brooks, et al., 2015 (41) | Klabunde, et al., 1999 (48) | Guarino, et al., 2005 (44) | Go, et al., 2006 (42) | Guadagnolo, et al., 2009 (43) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Setting | Academic | Academic | Academic | Academic | Academic | Academic | Academic | Academic | Academic | Community | Community | Community | Community |
| Original sample size, No. | 276 | 270 | 389 | 263 | 1079 | 94 | 1043 | 418 | 771 | 2339 | 518 | 1012 | 903 |
| Excluded from denominator, No. | 29 | 71 | 120 | 10 | 170 | 6 | 14 | — | — | — | 28 | — | 44 |
| Missing data, No. | — | — | — | — | — | — | 14 | — | — | — | — | — | — |
| No evidence of disease, No. | 9 | — | — | — | — | — | — | — | — | — | — | — | 2 |
| Not returning, No. | 12 | 14 | 29 | — | 170 | 6 | — | — | — | — | — | — | 27 |
| No need for therapy, No. | — | 3 | — | 10 | — | — | — | — | — | — | — | — | 3 |
| No pathologic diagnosis, No. | 8 | 54 | 91 | — | — | — | — | — | — | — | 28 | — | 12 |
| Sample size for analysis, No. | 247 | 199 | 269 | 253 | 909 | 88 | 1029 | 418 | 771 | 2339 | 490 | 1012 | 859 |
| Trial unavailable, No. (%) | 116 (47.0) | 71 (35.7) | 102 (37.9) | 44 (17.4) | 421 (46.3) | 36 (40.9) | 416 (40.4) | 295 (70.6) | 481 (62.4) | 1403 (60.0) | 232 (47.3) | 656 (64.8) | 452 (52.6) |
| No trial available, No. | 80 | 56 | 43 | 44 | 421 | 33 | 416 | 255 | 481 | 1403 | 232 | 587 | 305* |
| No available protocol, No. | 22 | 10 | 49 | — | — | — | — | — | — | — | — | — | — |
| Unknown primary, No. | 5 | — | — | — | — | — | — | — | — | — | — | — | 20 |
| Other†, No. | 9 | 5 | 10 | — | — | 3 | — | 40 | — | — | — | 69 | 127 |
| Patient ineligible, No. (%) | 55 (22.3) | 77 (38.7) | 97 (36.1) | 127 (50.2) | 124 (13.6) | 17 (19.3) | 281 (27.3) | 29 (6.9) | 38 (4.9) | 363 (15.5) | 149 (30.4) | 94 (9.3) | 274 (31.9) |
| Ineligible, No. | 14 | 11 | 13 | 101 | 124 | 10 | 281 | 25 | 38 | 363 | 149 | 84 | 139‡ |
| Poor performance status, No. | 20 | 17 | 23 | — | — | — | — | — | — | — | — | — | 12 |
| Synchronous primary tumors, No. | 5 | 3 | — | — | — | — | — | — | — | — | — | — | 21 |
| Prior therapy, No. | 11 | 14 | 30 | — | — | — | — | — | — | — | — | — | 25 |
| Already receiving therapy, No. | — | 6 | — | 26 | — | 5 | — | — | — | — | — | — | — |
| Non-measurable disease, No. | — | 17 | 21 | — | — | — | — | — | — | — | — | — | — |
| Abnormal labs, No. | — | 3 | — | — | — | — | — | — | — | — | — | — | — |
| Incomplete ineligibility data, No. | 1 | — | — | — | — | — | — | — | — | — | — | — | — |
| Other†, No. | 4 | 6 | 10 | — | — | 2 | — | 4 | — | — | — | 10 | 77 |
| Not enrolled, No. (%) | 37 (15.0) | 16 (8.0) | 33 (12.3) | 57 (22.5) | 222 (24.4) | 24 (27.3) | 191 (18.6) | 14 (3.3) | 102 (13.2) | 407 (17.4) | 69 (14.1) | 220 (21.7) | 55 (6.4) |
| Enrolled, No. (%) | 39 (15.8) | 35 (17.6) | 37 (13.8) | 25 (9.9) | 142 (15.6) | 11 (12.5) | 141 (13.7) | 80 (19.1) | 150 (19.5) | 166 (7.1) | 40 (8.2) | 42 (4.2) | 78 (9.1) |

*Trial not available because of stage (171) and tumor (134) ineligibility.

†Includes unavailability and ineligibility listed as “other” or “physician decision” without additional explanation. Results were distributed to the unavailable and ineligible categories based on the observed distributions.

‡Including 19 ineligible due to comorbidities.

Em dash (—) indicates that no information for this category was provided or could be derived.
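The derived rows of Table 1 follow simple arithmetic: the analysis sample is the original sample minus exclusions (a dash in the exclusion row is treated as zero), the four domain counts partition the analysis sample, and each percentage is the domain count divided by the analysis sample. The sketch below re-derives these quantities from the table's counts as a consistency check; the study labels are shorthand introduced here.

```python
# Counts transcribed from Table 1:
# (original n, excluded, unavailable, ineligible, not enrolled, enrolled).
# A dash in the "Excluded from denominator" row is entered as 0.
TABLE1 = {
    "Lara 2001": (276, 29, 116, 55, 37, 39),
    "Martel 2004": (270, 71, 71, 77, 16, 35),
    "Umutyan 2008": (389, 120, 102, 97, 33, 37),
    "Baggstrom 2010": (263, 10, 44, 127, 57, 25),
    "Javid 2012": (1079, 170, 421, 124, 222, 142),
    "Kanarek 2012": (94, 6, 36, 17, 24, 11),
    "Horn 2013": (1043, 14, 416, 281, 191, 141),
    "Swain-Cabriales 2013": (418, 0, 295, 29, 14, 80),
    "Brooks 2015": (771, 0, 481, 38, 102, 150),
    "Klabunde 1999": (2339, 0, 1403, 363, 407, 166),
    "Guarino 2005": (518, 28, 232, 149, 69, 40),
    "Go 2006": (1012, 0, 656, 94, 220, 42),
    "Guadagnolo 2009": (903, 44, 452, 274, 55, 78),
}


def derive(row: tuple[int, ...]) -> tuple[int, list[float], bool]:
    """Analysis sample, domain percentages (1 decimal), and whether domains partition the sample."""
    original, excluded, *domains = row
    n_analysis = original - excluded
    percents = [round(100 * c / n_analysis, 1) for c in domains]
    return n_analysis, percents, sum(domains) == n_analysis


total = 0
for study, row in TABLE1.items():
    n, pct, ok = derive(row)
    total += n
    print(f"{study:<22} n={n:<5} %={pct} partition_ok={ok}")
print("Total analysis sample:", total)
```

The per-study totals add up to the 8883 patients analyzed, so the percentages in each column sum to 100% up to rounding.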

Table 2.

Included study characteristics*

| Study | Cancer type | Stage | Other restrictions | Recruitment period | Other site information |
|---|---|---|---|---|---|
| Academic | | | | | |
| Lara, et al., 2001 (49) | All types | All stages | None listed | Multiple periods, January 1997 – April 2000 | UC Davis Cancer Center |
| Martel, et al., 2004 (50) | All types | All stages | New patients (potentially) | August 2002 – November 2002 | UC Davis Cancer Center |
| Umutyan, et al., 2008 (52) | All types | All stages | None listed | October 2004 – December 2004 | UC Davis Cancer Center |
| Baggstrom, et al., 2010 (40) | NSCLC | All stages | None listed | January 2006 – December 2006 | Alvin J Siteman Cancer Center |
| Javid, et al., 2012 (46) | Breast | I to IV | New patients or new diagnosis; age ≥18 y; able to read and understand English | 2004–2008 | 8 SWOG sites |
| Kanarek, et al., 2012 (47) | Prostate | All stages | Patients seen for first visit | January 2010 – April 2010 | Sidney Kimmel Comprehensive Cancer Center |
| Horn, et al., 2013 (45) | Lung | All stages | New patients | November 2005 – November 2008 | Vanderbilt-Ingram Cancer Center |
| Swain-Cabriales, et al., 2013 (51) | Breast | All stages | Histologically confirmed breast cancer; patients presenting for second opinion but not treated at the site were excluded | 2009 | City of Hope Medical Center |
| Brooks, et al., 2015 (41) | Cervix, uterus | All stages | Newly diagnosed primary or recurrent | July 2010 – January 2012 | Multiple GOG institutions |
| Community | | | | | |
| Klabunde, et al., 1999 (48) | All types | All stages | Age ≥20 y | June 1997 – January 1998 | 15 sites in southeastern US |
| Guarino, et al., 2005 (44) | All types | All stages | None listed | April 2004 – August 2004 | Physician practice |
| Go, et al., 2006 (42) | All types | All stages | New cancer | November 2003 – October 2004 | Gundersen Lutheran Cancer Center |
| Guadagnolo, et al., 2009 (43) | All types | All stages | Patients presenting for initial evaluation | September 2006 – January 2008 | Rapid City Regional Hospital |

*All studies included all stages of disease. GOG = Gynecologic Oncology Group; NSCLC = non-small cell lung cancer; SWOG = Southwest Oncology Group; UC = University of California.

Evaluation of Heterogeneity and Moderation

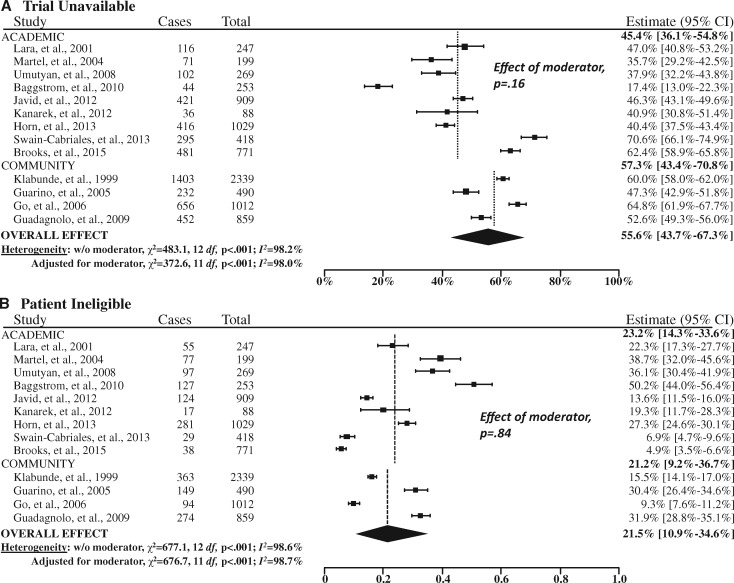

Both the estimated Q (P ≤ .001) and the I² (>95% for three of the four domains) statistics indicated a high degree of heterogeneity across the studies, so a random effects approach was used (Figure 3). In addition, institutional setting (academic vs community site) was a statistically significant moderator of the rate of trial participation (P < .001). Thus, our final random effects model also included institutional care setting as a covariate.
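With a single binary moderator (academic = 0, community = 1), a random-effects meta-regression reduces to two inverse-variance weighted group means that share one between-study variance τ², and the moderator test is a z test on their difference. The sketch below is a hypothetical illustration of that special case, applied to the Table 1 enrollment counts on the double-arcsine scale with τ² estimated by REML fixed-point iteration. It is not the authors' metafor model and is not expected to reproduce the reported P values exactly; the sin²(t) back-transformation in the printout is an approximation.

```python
import math


def ft(x: int, n: int) -> tuple[float, float]:
    """Freeman-Tukey double-arcsine transform and its sampling variance."""
    t = 0.5 * (math.asin(math.sqrt(x / (n + 1))) + math.asin(math.sqrt((x + 1) / (n + 1))))
    return t, 1.0 / (4 * n + 2)


def group_fit(y, v, group, tau2):
    """Weights, per-group weight sums, and per-group weighted means for a given tau2."""
    w = [1.0 / (vi + tau2) for vi in v]
    sums = {g: sum(wi for wi, gi in zip(w, group) if gi == g) for g in (0, 1)}
    means = {g: sum(wi * yi for wi, yi, gi in zip(w, y, group) if gi == g) / sums[g] for g in (0, 1)}
    return w, sums, means


def reml_binary_moderator(y, v, group, tol=1e-12, max_iter=1000):
    """Mixed-effects model y_i = b0 + b1 * group_i + u_i + e_i with a REML estimate of tau2."""
    tau2 = 0.0
    for _ in range(max_iter):
        w, sums, means = group_fit(y, v, group, tau2)
        resid = [yi - means[gi] for yi, gi in zip(y, group)]
        # Trace term of the REML equation; for a two-group design it equals
        # the sum over groups of (sum of w^2) / (sum of w).
        trace = sum(sum(wi ** 2 for wi, gi in zip(w, group) if gi == g) / sums[g] for g in (0, 1))
        sw2 = sum(wi ** 2 for wi in w)
        new = max(0.0, (sum(wi ** 2 * (ei ** 2 - vi) for wi, ei, vi in zip(w, resid, v)) + trace) / sw2)
        converged = abs(new - tau2) < tol
        tau2 = new
        if converged:
            break
    _, sums, means = group_fit(y, v, group, tau2)
    b1 = means[1] - means[0]                       # moderator coefficient
    se = math.sqrt(1.0 / sums[0] + 1.0 / sums[1])  # standard error of b1
    z = b1 / se
    return {"tau2": tau2, "mean_group0": means[0], "mean_group1": means[1],
            "b1": b1, "z": z, "p": math.erfc(abs(z) / math.sqrt(2.0))}


enrolled = [39, 35, 37, 25, 142, 11, 141, 80, 150, 166, 40, 42, 78]
sizes = [247, 199, 269, 253, 909, 88, 1029, 418, 771, 2339, 490, 1012, 859]
group = [0] * 9 + [1] * 4  # 0 = academic, 1 = community (Table 1 order)
y, v = map(list, zip(*(ft(x, n) for x, n in zip(enrolled, sizes))))
res = reml_binary_moderator(y, v, group)
print(f"academic ~{math.sin(res['mean_group0']) ** 2:.3f}, "
      f"community ~{math.sin(res['mean_group1']) ** 2:.3f}, P = {res['p']:.2g}")
```

Because the two groups share one τ², the moderator absorbs the part of the heterogeneity explained by setting, and the remaining τ² describes variation within settings.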

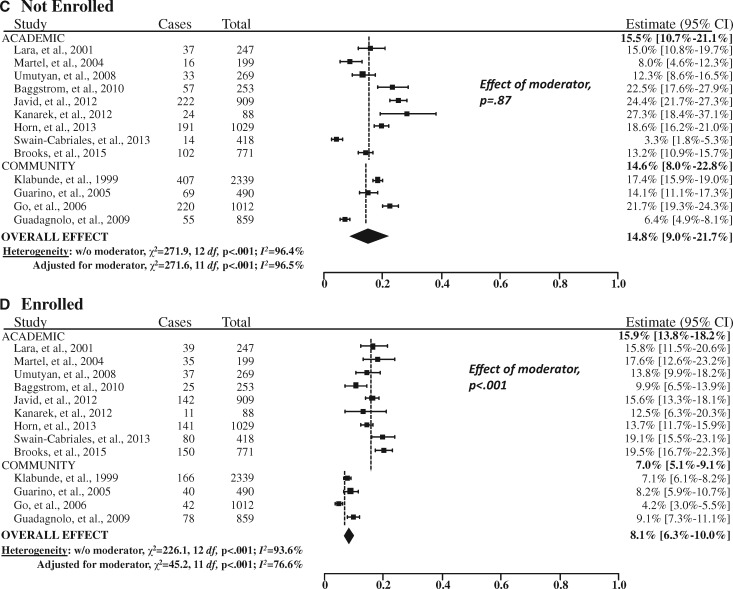

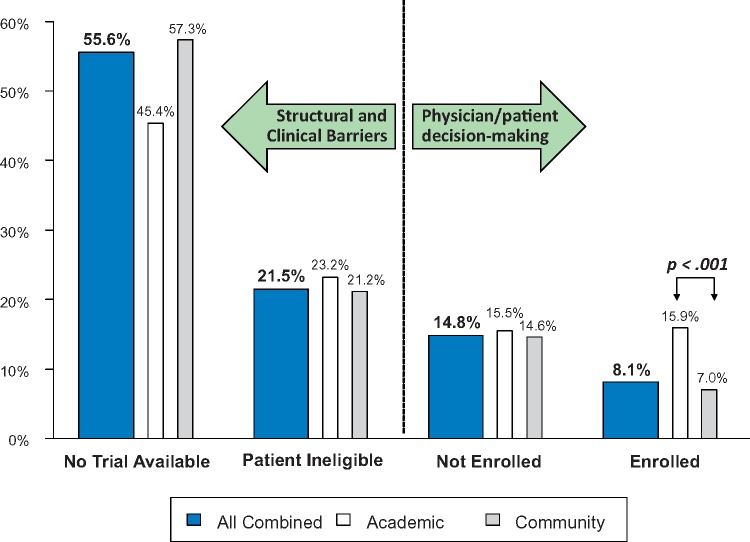

Figure 3.

Forest plots of the study-level and summary estimates for each domain. A) Trial unavailable. B) Patient ineligible. C) Not enrolled. D) Enrolled. The boxes show the study-level estimate and the 95% confidence intervals. The overall effect is a summary measure based on the meta-regression analysis accounting for institutional setting (academic vs community sites) as a moderator, weighted at a ratio of 15:85 based on the estimated proportion of cancer cases treated in the community setting (85%). The diamond shows the 95% confidence intervals (CIs) for the summary estimates. The P values were calculated from Cochran’s Q test; all statistical tests were two-sided. The dashed vertical lines indicate the derived estimate within academic and community sites, respectively.

Summary Estimates

For 55.6% (95% CI = 43.7% to 67.3%) of patients overall, no trial was available for the patient’s cancer type and stage (Figure 3). A further 21.5% (95% CI = 10.9% to 34.6%) of patients were not eligible for an available trial. Among the remaining patients, 14.8% (95% CI = 9.0% to 21.7%) did not enroll and 8.1% (95% CI = 6.3% to 10.0%) enrolled in a trial. In total, structural and clinical barriers are the reasons for more than three of four patients (77.1%) not enrolling in clinical trials (Figure 4).

Figure 4.

Magnitude of barriers for each domain for academic sites, community sites, and all sites combined. The P value was derived from a z score in a random effects model. A two-sided test was used.

Estimates by Institutional Setting

Trial participation was much greater at academic sites (15.9%, 95% CI = 13.8% to 18.2%) than at community sites (7.0%, 95% CI = 5.1% to 9.1%; P < .001; Figure 3). In contrast, there were no statistically significant differences by institutional setting in the rates of trial unavailability (P = .16), ineligibility (P = .84), or nonenrollment (P = .87).

Additional Analyses

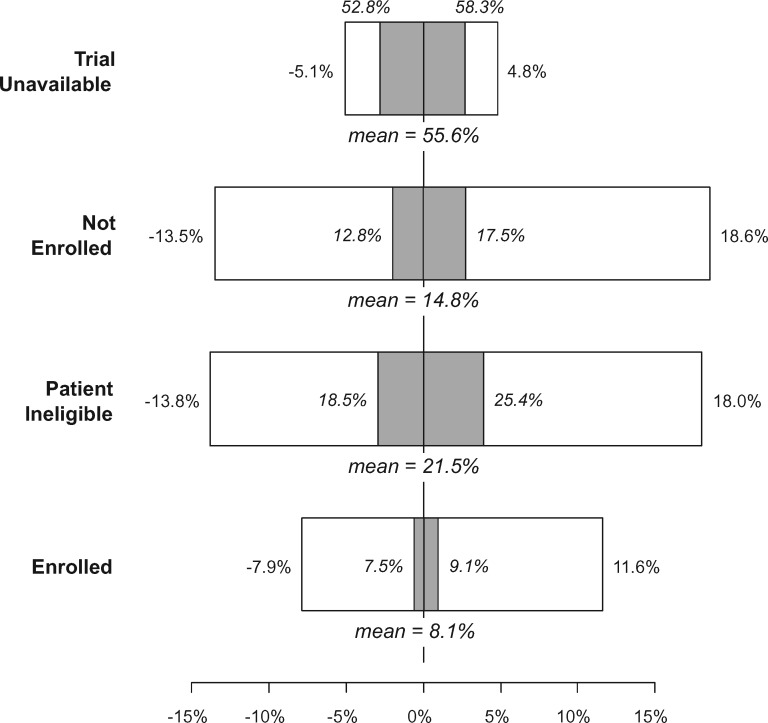

The exclusion of individual studies (“leave one out”) resulted in relative changes to the trial unavailable rate of at most 5.1% (range in absolute percentage estimates: 52.8%–58.3%), to the nonenrollment rate of at most 18.6% (range in absolute percentage estimates: 12.8%–17.5%), to the patient ineligibility rate of at most 18.0% (range in absolute percentage estimates: 18.5%–25.4%), and to the overall enrollment rate of at most 11.6% (range in absolute percentage estimates: 7.5%–9.1%; Figure 5). These results suggest that the influence of any particular study in our sample did not dramatically change the findings.

Figure 5.

Tornado plot showing sensitivity analysis results for the “leave one out” method. This approach excludes each of the 13 studies one at a time and recalculates the overall domain-specific estimates using the specified random-effects approach. Each box shows the range of relative (in white) and absolute percentage (in gray) increases or decreases in the overall estimated rate for each domain. The primary estimates are also shown.

We categorized the studies as older or newer according to the month and year in which recruitment was completed (before vs after 2006; see the recruitment periods in Table 2), separately within academic- and community-oriented studies. The estimated rates of trial unavailability, ineligibility, nonenrollment, and enrollment were 51.8%, 24.9%, 15.8%, and 8.5% for the older studies, and 58.7%, 19.1%, 13.9%, and 7.6% for the newer studies, respectively. Moderator analysis showed no statistical evidence that rates differed between older and newer studies for any of the domains (P > .05 in all cases).

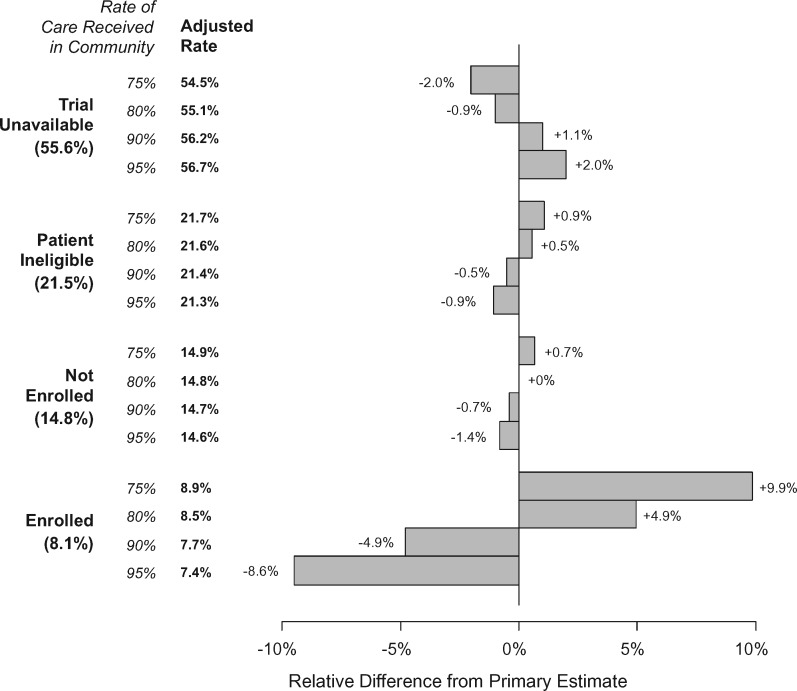

We allowed the assumed rate of care received in the community to vary (Figure 6). A 5-percentage-point deviation from the primary assumed rate of 85% (ie, 80% or 90%) resulted in minimal relative changes in the estimates for the trial unavailability rate (at most 1.1%), the ineligibility rate (0.5%), and the nonenrollment rate (at most 0.7%). Changes to the overall trial enrollment rate were larger but still small (4.9%). A 10-percentage-point deviation from the primary assumed rate (ie, 75% or 95%) generated relative differences twice those for a 5-point deviation.
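Because the overall estimate is a weighted average of the academic and community estimates, before normalization it changes linearly with the assumed community share, which is why a 10-point deviation produces twice the relative change of a 5-point deviation. The sketch below plugs in the reported enrollment estimates for academic (15.9%) and community (7.0%) sites. It skips the normalization step of the full analysis, so its relative differences are illustrative and will not exactly match Figure 6.

```python
# Reported setting-specific enrollment estimates (%) from the Results.
# The normalization step of the full analysis is deliberately skipped here.
ACADEMIC_ENROLLED = 15.9
COMMUNITY_ENROLLED = 7.0
BASELINE_PCT = 85  # primary assumed share of patients treated in the community


def overall_rate(community_pct: int, academic: float = ACADEMIC_ENROLLED,
                 community: float = COMMUNITY_ENROLLED) -> float:
    """Weighted overall rate for an assumed community share, given in percent."""
    share = community_pct / 100
    return (1 - share) * academic + share * community


def sensitivity(pcts: tuple[int, ...] = (75, 80, 85, 90, 95)) -> dict[int, float]:
    """Relative difference of each weighted estimate from the baseline (85%) estimate."""
    base = overall_rate(BASELINE_PCT)
    return {p: (overall_rate(p) - base) / base for p in pcts}


for pct, rel in sensitivity().items():
    print(f"community share {pct}%: overall {overall_rate(pct):.2f}%, relative difference {rel:+.1%}")
```

Lowering the assumed community share shifts weight toward the higher academic rate, so the overall enrollment estimate rises, and raising it has the mirror-image effect.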

Figure 6.

Sensitivity of results to the assumed rate of care received in the community. For each domain, we allowed the assumed rate of care in the community to vary from 75% to 95% (with 85% not shown in the bar graph because this represents the primary baseline against which the alternative estimates are compared). The adjusted percentage rate is shown, as well as the relative difference in the estimates in the bar graph.

No evidence of publication bias was identified according to the rank correlation test for trial unavailability (P = .31), ineligibility (P = .51), nonenrollment (P = .77), or enrollment (P = .51). Nearly all included studies (11 of 13, 84.6%) explicitly indicated that the assessment of trial availability was determined by cancer site (or “histologic type” or “disease site”) and stage (Supplementary Table 3, available online). However, one study defined trial availability more broadly, as a trial “appropriate to the patient’s condition,” which its authors further indicated could include either “cancer site and stage” or “patient class” as pertinent to prostate cancer (47,53,54). Another study was reported only as an abstract and contained no specific definition (42). With these two studies excluded, the overall trial unavailability rate increased modestly from 55.6% to 58.3%, a difference that was not statistically significant (P = .52) in meta-regression moderator analysis. There were also no differences in estimates of ineligibility (P = .75), nonenrollment (P = .37), or enrollment (P = .90).

Discussion

This is the first effort to both systematically define and quantify domains of clinical trial barriers using a meta-analytic approach. The results are striking in that more than half (55.6%) of all cancer patients do not participate in trials because no trial is available for the patient’s cancer type and stage at the treating center. When a trial was available, an additional 21.5% were ineligible. Taken together, these structural and clinical factors are the reasons more than three of four patients (77.1%) did not participate. At the same time, patient-related factors and patient choice, which occur only at the end of an extensive decision-making process, accounted for only a small portion of the barriers to trial participation overall.

The absence of an available trial represents a systemic, structural attribute of conducting trials in the United States that reflects two main causes. First, a trial may not be available where the patient is being treated, and having to travel to participate in a trial can be an overwhelming burden for patients. Second, for some cancers, there may not be any trials available because the cancer is curable, has a very favorable prognosis, or is rare. In these settings, investing in new treatment development may not be a priority given finite resources. The Orphan Drug Act of 1983 represented an attempt to provide an incentive to develop new treatments for rare cancers (55,56). Increasingly, eligibility criteria that extend beyond cancer type and stage to require specific biomarkers may also limit trial participation for some patients. Precision medicine-oriented umbrella trials, which allow treatment for patients of any biomarker profile, are intended in part to help alleviate this trial barrier (57,58).

Clinical trials exclude patients for many reasons related to the desire to maintain patient safety and to establish a study cohort with similar patient profiles, so that the response of patients to different treatments can be assessed more accurately (59). One criticism of trial designs is that eligibility criteria are too narrow, excluding many patients for minimal safety or research benefit and generating study results based on narrow populations of patients (60). A collaboration of stakeholders including the American Society of Clinical Oncology, Friends of Cancer Research, and the US Food and Drug Administration recently published recommendations to modernize eligibility criteria to ensure that patients are not unnecessarily excluded from trial participation (11,12). Efforts such as these are vital to reducing barriers to accessing trials for patients with baseline comorbid conditions, especially those conditions that have only limited impact on cancer outcomes.

Nonenrollment of eligible patients in clinical trials can be due to physician or patient factors. Physicians often decide not to ask eligible patients about trial participation due to institutional or clinic time/reimbursement constraints, treatment preference, or other reasons (42,43,46,61). This removes a key opportunity for patients to consider taking part in a trial. Surveys have shown that patients look to their doctors as a major source of information about clinical trials (13,14) and that when eligible patients are actually offered trial participation, they say yes more than 50% of the time (7,25,61–64). Top reasons patients decline participation include the desire to determine their own treatment, loss of control, fear of side effects, concerns about costs, and logistical barriers such as transportation (8,25,46,48,49,65,66).

Finally, our estimate of the overall trial participation rate was 8.1%, higher than the 2%–3% rates typically assumed. This is likely due to the contributions of industry-sponsored trials, whereas prior evaluations of trial participation emphasized participation in government-sponsored research. Indeed, our estimate is consistent with the observation that the ratio of industry- to government-sponsored trial participation is more than 2 to 1 (67–69). Our findings about the average participation rate are supported by at least one population-based survey, which showed an overall participation rate of 11% (70). Nonetheless, the rate remains low with numerous adverse consequences. Trials often fail to complete due to poor accrual or take a very long time to complete, generating less timely and less influential results (71–73). Additionally, differences by site type were evident for trial participation, wherein academic sites were more likely to have patients participate in trials (Figure 3). These results reflect the fact that academic centers are more often oriented towards clinical research.

The results of this analysis were robust in sensitivity analyses. There was limited evidence that any individual study influenced the results. Also, the results were not substantively changed under different assumptions about the proportion of patients receiving care in community settings, nor was there marked evidence of publication bias or of changes in patterns of trial decision-making over time.
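The influence check described above can be sketched for a meta-analysis of proportions. The example below assumes the Freeman–Tukey double-arcsine transformation (38) and the DerSimonian–Laird random-effects estimator (33), with an influence check that re-pools after dropping each study in turn. It omits the academic vs community adjustment, uses an approximate back-transformation, and runs on hypothetical study counts; it is not necessarily the estimator or software used in this analysis, and all function names are illustrative.

```python
import math
from typing import List, Tuple

Study = Tuple[int, int]  # (events, patients); hypothetical input format


def freeman_tukey(events: int, n: int) -> Tuple[float, float]:
    """Freeman-Tukey double-arcsine transform and its approximate sampling variance."""
    t = math.asin(math.sqrt(events / (n + 1))) + math.asin(math.sqrt((events + 1) / (n + 1)))
    return t, 1.0 / (n + 0.5)


def dersimonian_laird(effects: List[float], variances: List[float]) -> Tuple[float, float]:
    """Random-effects pooled mean using the DerSimonian-Laird estimate of tau^2."""
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))  # Cochran's Q
    c = sum_w - sum(wi * wi for wi in w) / sum_w
    tau2 = max(0.0, (q - (len(effects) - 1)) / c) if c > 0 else 0.0
    w_re = [1.0 / (v + tau2) for v in variances]  # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    return pooled, tau2


def pooled_proportion(studies: List[Study]) -> float:
    """Pool proportions on the double-arcsine scale, then back-transform."""
    transformed = [freeman_tukey(x, n) for x, n in studies]
    effects = [t for t, _ in transformed]
    variances = [v for _, v in transformed]
    pooled_t, _ = dersimonian_laird(effects, variances)
    # Approximate back-transform: for large n, t is close to 2*asin(sqrt(p)).
    return math.sin(pooled_t / 2) ** 2


def leave_one_out(studies: List[Study]) -> List[float]:
    """Re-pool after dropping each study in turn (influence check)."""
    return [pooled_proportion(studies[:i] + studies[i + 1:]) for i in range(len(studies))]


# Hypothetical (enrolled, screened) counts for five studies, not data from this review.
studies = [(40, 500), (25, 400), (90, 600), (15, 300), (60, 700)]
print(f"pooled: {pooled_proportion(studies):.3f}")
for i, p in enumerate(leave_one_out(studies)):
    print(f"without study {i + 1}: {p:.3f}")
```

If no leave-one-out estimate moves far from the full pooled value, no single study is driving the result.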

This study also has limitations. Most, but not all, studies specified how trial availability was determined. All included studies examined patients of any stage, an important element of consistency, but not all sampled a representative set of cancers; some relied instead on selected cancer types. More generally, the studies included in this analysis may have oversampled research-oriented sites. If so, the actual overall trial participation rate may be lower than we estimated and, conversely, the rates of structural and clinical barriers may be higher than estimated. Novel data sources that sample a broad spectrum of both research- and nonresearch-oriented centers, such as institutional accreditation data, may help clarify the rate of trial participation.
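The direction of this potential bias can be illustrated with a simple weighted average of the setting-specific enrollment rates reported in the abstract (15.9% academic, 7.0% community). The community shares in the loop are hypothetical values chosen only for illustration, and a plain weighted average is a simplification, not the random-effects adjustment used in the analysis.

```python
ACADEMIC_RATE = 0.159   # pooled enrollment rate, academic settings (from the abstract)
COMMUNITY_RATE = 0.070  # pooled enrollment rate, community settings (from the abstract)


def overall_rate(community_share: float) -> float:
    """Overall enrollment rate if a given share of patients is treated in community settings."""
    return community_share * COMMUNITY_RATE + (1.0 - community_share) * ACADEMIC_RATE


# Hypothetical community shares, for illustration only.
for share in (0.50, 0.70, 0.85, 0.95):
    print(f"community share {share:.0%}: overall enrollment {overall_rate(share):.1%}")
```

Because the community rate is lower, a sample that underrepresents community care pushes the overall estimate upward; as the community share rises, the overall rate falls toward 7.0%.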

These findings emphasize the enormous need to address structural and clinical barriers to trial participation, which together make trial participation impossible for more than 3 of 4 cancer patients. One focus should be to improve access to available trials, and some steps have already been taken. The NCI-sponsored network cancer research groups make their trials available to all groups through a central participation mechanism (the Cancer Trials Support Unit) and have expanded outreach to community oncologists and their patients. Numerous portals for identifying recruiting trials are available, including through cancer.gov. Only by addressing structural and clinical barriers will the participation rate in cancer treatment trials increase substantially.
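The 3-of-4 figure follows directly from the pooled pathway estimates in the abstract: trial unavailability (55.6%) plus ineligibility for an available trial (21.5%). The sketch below simply tabulates those published point estimates; the dictionary keys are illustrative labels.

```python
# Pooled point estimates (%) for each step of the trial decision pathway, from the abstract.
PATHWAY_PERCENT = {
    "no trial available (structural)": 55.6,
    "ineligible for available trial (clinical)": 21.5,
    "eligible but did not enroll (patient/physician)": 14.8,
    "enrolled": 8.1,
}

structural_plus_clinical = (
    PATHWAY_PERCENT["no trial available (structural)"]
    + PATHWAY_PERCENT["ineligible for available trial (clinical)"]
)
print(f"structural + clinical barriers: {structural_plus_clinical:.1f}%")
print(f"all four pathway outcomes: {sum(PATHWAY_PERCENT.values()):.1f}%")
```

Structural and clinical barriers together account for 77.1% of patients, which exceeds 75%; the four pathway outcomes sum to 100.0%.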

Funding

Research reported in this publication was supported by the National Cancer Institute of the National Institutes of Health under award number UG1CA189974.

Notes

Affiliations of authors: Fred Hutchinson Cancer Research Center, Seattle, WA (JMU, RV); SWOG Statistical Center, Seattle, WA (JMU, RV); Columbia University Medical Center, New York, NY (DLH); National Cancer Institute, Division of Cancer Prevention, Rockville, MD (LMM); American Cancer Society Cancer Action Network Inc., Washington, DC (MF).

The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. The funders had no role in the design of the study; the collection, analysis, and interpretation of the data; the writing of the manuscript; and the decision to submit the manuscript for publication.

The authors have no disclosures.

Supplementary Material

References

- 1. IOM (Institute of Medicine). Transforming Clinical Research in the United States: Challenges and Opportunities: Workshop Summary. Washington, DC: The National Academies Press; 2010.

- 2. Murthy VH, Krumholz HM, Gross CP. Participation in cancer clinical trials: race-, sex-, and age-based disparities. JAMA. 2004;291(22):2720–2726.

- 3. Sateren WB, Trimble EL, Abrams J, et al. How sociodemographics, presence of oncology specialists, and hospital cancer programs affect accrual to cancer treatment trials. J Clin Oncol. 2002;20(8):2109–2117.

- 4. Tejeda HA, Green SB, Trimble EL, et al. Representation of African-Americans, Hispanics, and whites in National Cancer Institute cancer treatment trials. J Natl Cancer Inst. 1996;88(12):812–816.

- 5. Comis RL, Miller JD, Aldigé CR, Krebs L, Stovall E. Public attitudes toward participation in cancer clinical trials. J Clin Oncol. 2003;21(5):830–835.

- 6. Ford JG, Howerton MW, Lai GY, et al. Barriers to recruiting underrepresented populations to cancer clinical trials: a systematic review. Cancer. 2008;112(2):228–242.

- 7. Kemeny MM, Peterson BL, Kornblith AB, et al. Barriers to clinical trial participation by older women with breast cancer. J Clin Oncol. 2003;21(12):2268–2275.

- 8. Meropol NJ, Buzaglo JS, Millard J, et al. Barriers to clinical trial participation as perceived by oncologists and patients. J Natl Compr Canc Netw. 2007;5(8):655–664.

- 9. Mills EJ, Seely D, Rachlis B, et al. Barriers to participation in clinical trials of cancer: a meta-analysis and systematic review of patient-reported factors. Lancet Oncol. 2006;7(2):141–148.

- 10. Ross S, Grant A, Counsell C, Gillespie W, Russell I, Prescott R. Barriers to participation in randomized controlled trials: a systematic review. J Clin Epidemiol. 1999;52(12):1143–1156.

- 11. American Society of Clinical Oncology. ASCO in Action: Initiative to Modernize Eligibility Criteria for Clinical Trials Launched May 17, 2016. https://www.asco.org/advocacy-policy/asco-in-action/initiative-modernize-eligibility-criteria-clinical-trials-launched. Accessed March 11, 2018.

- 12. Kim ES, Bruinooge SS, Roberts S, et al. Broadening eligibility criteria to make clinical trials more representative: American Society of Clinical Oncology and Friends of Cancer Research Joint Research Statement. J Clin Oncol. 2017;35(33):3737–3744.

- 13. Sood A, Prasad K, Chhatwani L, et al. Patients’ attitudes and preferences about participation and recruitment strategies in clinical trials. Mayo Clin Proc. 2009;84(3):243–247.

- 14. Research America. America Speaks: Poll Data Summary Vol 18. https://www.researchamerica.org/sites/default/files/RSAM_1801_PollDataSummary_FINALWEB.pdf. Accessed October 29, 2018.

- 15. Somkin CP, Altschuler A, Ackerson L, et al. Organization barriers to physician participation in cancer clinical trials. Am J Manag Care. 2005;11(7):413–421.

- 16. Cancer Support Community. Distress Screening. https://www.cancersupportcommunity.org/distress-screening. Accessed October 23, 2018.

- 17. Copur MS, Ramaekers R, Gönen M, et al. Impact of the National Cancer Institute Community Cancer Centers Program on clinical trial and related activities at a community cancer center in rural Nebraska. J Oncol Pract. 2016;12(1):67–68, e44–e51.

- 18. Johnson MR, O’Brien DM. Improving Cancer Care and Expanding Research in the Community: The NCI Community Cancer Centers Program. March 23, 2010. http://www.strategicvisionsinhealthcare.com/wp-content/uploads/2012/03/NCIJohnson-OBrienVHA-3-23-10final-3-17-10no-notes2.pdf. Accessed October 23, 2018.

- 19. Kincaid E. Advanced cancer treatments far from big-name hospitals. The Wall Street Journal. March 6, 2017.

- 20. Petrelli NJ. A community cancer center program: getting to the next level. J Am Coll Surg. 2010;210(3):261–270.

- 21. Pfister DG, Rubin DM, Elkin EB, et al. Risk adjusting survival outcomes in hospitals that treat patients with cancer without information on cancer stage. JAMA Oncol. 2015;1(9):1303–1310.

- 22. Unger JM, Cook E, Tai E, Bleyer A. The role of clinical trial participation in cancer research: barriers, evidence, and strategies. Am Soc Clin Oncol Educ Book. 2016;36:185–198.

- 23. Hutchins LF, Unger JM, Crowley JJ, Coltman CA, Albain KS. Underrepresentation of patients 65 years of age or older in cancer-treatment trials. N Engl J Med. 1999;341(27):2061–2067.

- 24. Stewart JH, Bertoni AG, Staten JL, Levine EA, Gross CP. Participation in surgical oncology clinical trials: gender-, race/ethnicity-, and age-based disparities. Ann Surg Oncol. 2007;14(12):3328–3334.

- 25. Unger JM, Hershman DL, Albain KS, et al. Patient income level and cancer clinical trial participation. J Clin Oncol. 2013;31(5):536–542.

- 26. Moher D, Liberati A, Tetzlaff J, et al. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009;6(7):e1000097.

- 27. Viechtbauer W. Conducting meta-analyses in R with the metafor package. J Stat Softw. 2010;36(3):1–48.

- 28. Wang N. How to conduct a meta-analysis of proportions in R: a comprehensive tutorial. 2018; doi:10.13140/RG.2.2.27199.00161.

- 29. Borenstein M, Hedges LV, Higgins JP, Rothstein HR. A basic introduction to fixed-effect and random-effects models for meta-analysis. Res Synth Methods. 2010;1(2):97–111.

- 30. Brockwell SE, Gordon IR. A comparison of statistical methods for meta-analysis. Stat Med. 2001;20(6):825–840.

- 31. Barendregt JJ, Doi SA, Lee YY, Norman RE, Vos T. Meta-analysis of prevalence. J Epidemiol Community Health. 2013;67(11):974–978.

- 32. Cochran WG. The combination of estimates from different experiments. Biometrics. 1954;10(1):101–129.

- 33. DerSimonian R, Laird NM. Meta-analysis in clinical trials. Control Clin Trials. 1986;7(3):177–188.

- 34. Higgins JPT, Thompson SG. Quantifying heterogeneity in a meta-analysis. Stat Med. 2002;21(11):1539–1558.

- 35. Langan D, Higgins JPT, Jackson D, et al. A comparison of heterogeneity variance estimators in simulated random-effects meta-analyses. Res Synth Methods. 2018; doi:10.1002/jrsm.1316.

- 36. Viechtbauer W. Bias and efficiency of meta-analytic variance estimators in the random-effects model. J Educ Behav Stat. 2005;30(3):261–293.

- 37. Borenstein M, Hedges LV, Higgins JPT, Rothstein HR. Introduction to Meta-Analysis. West Sussex, United Kingdom: John Wiley & Sons, Ltd; 2009.

- 38. Freeman MF, Tukey JW. Transformations related to the angular and the square root. Ann Math Stat. 1950;21(4):607–611.

- 39. Begg CB, Mazumdar M. Operating characteristics of a rank correlation test for publication bias. Biometrics. 1994;50(4):1088–1101.

- 40. Baggstrom MQ, Waqar SN, Sezhiyan AK, et al. Barriers to enrollment in non-small cell lung cancer therapeutic clinical trials. J Thorac Oncol. 2011;6(1):98–102.

- 41. Brooks SE, Carter RL, Plaxe SC, et al. Patient and physician factors associated with participation in cervical and uterine cancer trials: an NRG/GOG247 study. Gynecol Oncol. 2015;138(1):101–108.

- 42. Go RS, Frisby KA, Lee JA, et al. Clinical trial accrual among new cancer patients at a community-based cancer center. Cancer. 2006;106(2):426–433.

- 43. Guadagnolo BA, Petereit DG, Helbig P, et al. Involving American Indians and medically underserved rural populations in cancer clinical trials. Clin Trials. 2009;6(6):610–617.

- 44. Guarino MJ, Masters GA, Schneider CJ, et al. Barriers exist to patient participation in clinical trials. J Clin Oncol. 2005;23(16 suppl):6015.

- 45. Horn L, Keedy VL, Campbell N, et al. Identifying barriers associated with enrollment of patients with lung cancer into clinical trials. Clin Lung Cancer. 2013;14(1):14–18.

- 46. Javid SH, Unger JM, Gralow JR, et al. A prospective analysis of the influence of older age on physician and patient decision-making when considering enrollment in breast cancer clinical trials (SWOG S0316). Oncologist. 2012;17(9):1180–1190.

- 47. Kanarek NF, Kanarek MS, Olatoye D, Carducci MA. Removing barriers to participation in clinical trials, a conceptual framework and retrospective chart review study. Trials. 2012;13:237.

- 48. Klabunde CN, Springer BC, Butler B, et al. Factors influencing enrollment in clinical trials for cancer treatment. South Med J. 1999;92(12):1189–1193.

- 49. Lara PN Jr, Higdon R, Lim N, et al. Prospective evaluation of cancer clinical trial accrual patterns: identifying potential barriers to enrollment. J Clin Oncol. 2001;19(6):1728–1733.

- 50. Martel CL, Li Y, Beckett L, et al. An evaluation of barriers to accrual in the era of legislation requiring insurance coverage of cancer clinical trial costs in California. Cancer J. 2004;10(5):294–300.

- 51. Swain-Cabriales S, Bourdeanu L, Niland J, Stiller T, Somlo G. Enrollment onto breast cancer therapeutic clinical trials: a tertiary cancer center experience. Appl Nurs Res. 2013;26(3):133–135.

- 52. Umutyan A, Chiechi C, Beckett LA, et al. Overcoming barriers to cancer clinical trial accrual: impact of a mass media campaign. Cancer. 2008;112(1):212–219.

- 53. Bubley GJ, Carducci M, Dahut W, et al. Eligibility and response guidelines for phase II clinical trials in androgen-independent prostate cancer: recommendations from the Prostate-Specific Antigen Working Group. J Clin Oncol. 1999;17(11):3461–3467.

- 54. Scher HI, Eisenberger M, D’Amico AV, et al. Eligibility and outcomes reporting guidelines for clinical trials for patients in the state of a rising prostate-specific antigen: recommendations from the Prostate-Specific Antigen Working Group. J Clin Oncol. 2004;22(3):537–556.

- 55. Haffner ME. Adopting orphan drugs—two dozen years of treating rare diseases. N Engl J Med. 2006;354(5):445–447.

- 56. Orphan Drug Act of 1983. Pub L. No. 97–414, 96 Stat. 2049.

- 57. Mullard A. NCI-MATCH trial pushes cancer umbrella trial paradigm. Nat Rev Drug Discov. 2015;14(8):513–515.

- 58. Steuer CE, Papadimitrakopoulou V, Herbst RS, et al. Innovative clinical trials: the LUNG-MAP study. Clin Pharmacol Ther. 2015;97(5):488–491.

- 59. Green S, Benedetti J, Crowley J. Clinical Trials in Oncology. 3rd ed. Boca Raton, FL: CRC Press; 2003.

- 60. Newhouse JP, McClellan M. Econometrics in outcomes research: the use of instrumental variables. Annu Rev Public Health. 1998;19:17–34.

- 61. St Germain D, Denicoff AM, Dimond EP, et al. Use of the National Cancer Institute Community Cancer Centers Program screening and accrual log to address cancer clinical trial accrual. J Oncol Pract. 2014;10(2):e73–e80.

- 62. Avis NE, Smith KW, Link CL, et al. Factors associated with participation in breast cancer treatment clinical trials. J Clin Oncol. 2006;24(12):1860–1867.

- 63. Mannel RS, Walker JL, Gould N, et al. Impact of individual physicians on enrollment of patients into clinical trials. Am J Clin Oncol. 2003;26(2):171–173.

- 64. Wujcik D, Wolff SN. Recruitment of African Americans to National Oncology Clinical Trials through a clinical trial shared resource. J Health Care Poor Underserved. 2010;21(1 suppl):38–50.

- 65. American Cancer Society Cancer Action Network. Barriers to Patient Enrollment in Therapeutic Clinical Trials for Cancer: A Landscape Report. https://www.acscan.org/policy-resources/clinical-trial-barriers. Accessed June 19, 2018.

- 66. Zaleta AK, Miller MF, Johnson J, McManus S, Buzaglo JS. Perceptions of cancer clinical trials among racial and ethnic minority cancer survivors. In: American Psychological Association Annual Convention. Washington, DC: American Psychological Association; 2017.

- 67. Anderson ML, Chiswell K, Peterson ED, et al. Compliance with results reporting at ClinicalTrials.gov. N Engl J Med. 2015;372(11):1031–1039.

- 68. Ehrhardt S, Appel LJ, Meinert CL. Trends in National Institutes of Health funding for clinical trials registered in ClinicalTrials.gov. JAMA. 2015;314(23):2566–2567.

- 69. Hirsch BR, Califf RM, Cheng SK, et al. Characteristics of oncology clinical trials: insights from a systematic analysis of ClinicalTrials.gov. JAMA Intern Med. 2013;173(11):972–979.

- 70. Baquet CR, Commiskey P, Daniel Mullins C, et al. Recruitment and participation in clinical trials: socio-demographic, rural/urban, and health care access predictors. Cancer Detect Prev. 2006;30(1):24–33.

- 71. Djulbegovic B, Kumar A, Soares HP, et al. Treatment success in cancer: new cancer treatment successes identified in phase 3 randomized controlled trials conducted by the National Cancer Institute-sponsored cooperative oncology groups, 1955 to 2006. Arch Intern Med. 2008;168(6):632–642.

- 72. Soares HP, Kumar A, Daniels S, et al. Evaluation of new treatments in radiation oncology: are they better than standard treatments? JAMA. 2005;293(8):970–978.

- 73. Unger JM, Barlow WE, Ramsey SD, et al. The scientific impact of positive and negative phase 3 cancer clinical trials. JAMA Oncol. 2016;2(7):875–881.
