Abstract

In healthcare applications, temporal variables that encode movement, health status and longitudinal patient evolution are often accompanied by rich structured information such as demographics, diagnostics and medical exam data. However, current methods do not jointly optimize over structured covariates and time series in the feature extraction process. We present ShortFuse, a method that boosts the accuracy of deep learning models for time series by explicitly modeling temporal interactions and dependencies with structured covariates. ShortFuse introduces hybrid convolutional and LSTM cells that incorporate the covariates via weights that are shared across the temporal domain. ShortFuse outperforms competing models by 3% on two biomedical applications, forecasting osteoarthritis-related cartilage degeneration and predicting surgical outcomes for cerebral palsy patients, matching or exceeding the accuracy of models that use features engineered by domain experts.

1. Introduction

In biomedical applications, time series data frequently co-occur with structured information. These time series data vary widely in form and temporal resolution, from high-frequency vital signs to longitudinal health indicators in an electronic medical record to activity monitoring data recorded by accelerometers. Structured covariates, such as patient demographics and measures from clinical examinations, are common and complementary to these time series data. While large amounts of these types of data are available, they are in many cases challenging to integrate and analyze.

For instance, consider data from patients with cerebral palsy (CP), a condition that affects approximately 3 out of every 1000 children in the U.S. (Bhasin et al., 2006). Cerebral palsy makes walking inefficient and sometimes painful. Musculoskeletal surgeries can improve walking, but outcomes are highly variable. Extensive data is available to aid treatment planning, including gait analysis data that characterizes the motion of each joint (e.g., hip, knee, and ankle) during gait, along with a host of structured data such as strength and flexibility measures and birth history (e.g., number of weeks born premature). At many clinical centers, the number of structured covariates is comparable to the number of time series features, and the data range from high-resolution gait recordings to clinical visit records kept over several years. All these interconnected factors make treatment planning difficult.

Current methods for analyzing these types of data rely on extensive feature engineering, often modeling the time-series and structured information independently. Standard transformations such as Principal Component Analysis (PCA) can be insufficient for capturing all information in time series, requiring additional feature engineering by domain experts. Traditionally, when methods such as PCA, Multiple Kernel Learning (MKL), Dynamic Time Warping (DTW), neural networks or other transformations are used to extract features from time series, the structured covariates in the datasets have no impact on the learned temporal features. In most biomedical applications, there are interactions and correlations between time series and covariates that we would like to leverage. In the case of cerebral palsy, younger children or those with a more severe neural injury might have different gait features that help predict an appropriate surgical plan.

To address this issue, we introduce ShortFuse, a method that boosts the accuracy of deep learning models for time series by leveraging the structured covariates in the dataset. The key to learning relevant representations is to take the covariates into account. For instance, cerebral palsy subjects are seen and treated from toddlerhood to adulthood, and the temporal patterns in the joint motion waveforms depend on the subject’s stage of development: while walking on the toes is normal in toddlers, it is an abnormality in older children and adults. By definition, the structured information for a sample is constant along the temporal domain, so its corresponding weights should not be allowed to vary considerably over time; time-varying covariate weights would act as an additional, time-dependent intercept term and result in overfitting. As an illustration, two successive gait cycles of the same subject could map to vastly different representations if the weights of the covariates were allowed to change from one time step to the next, which is why this temporal variation of the weights should be prevented through parameter sharing. Finally, because clinicians try to keep records as complete as possible, there may be numerous fields denoting clinical tests and patient history that, unlike the time series data, are not relevant to the predictive task at hand, so there must be a mechanism to discount them without exploding the parameter space.

ShortFuse preserves the sequential structure of time series and explicitly models interactions and dependencies with structured covariates, allowing the latter to guide feature learning and improve predictive performance. Our approach introduces specialized structures, which we call ‘hybrid layers’, for fusing structured covariates with time series data. The hybrid layers use structured information as distinct inputs, which are used to parametrize, guide, and enrich the feature representations. The first type of layer uses convolutions parametrized by the covariates, where the weights of the structured covariates are shared across the convolutions. Second, we introduce an LSTM hybrid, which shares the covariates and their weights across the cells and uses them in the computation of the input gate, forget gate, state change and output gate. The LSTM hybrid is thus able, for instance, to adjust the length of the forget window according to the structured information.

We demonstrate, through two representative biomedical applications, the versatility of ShortFuse, which makes no assumptions regarding the structure, dimensionality, or sampling frequency of the time series. We also show that the method is flexible, in that it can be applied to RNN or CNN model architectures. Through the two biomedical examples, we show that adding structured covariates boosts the accuracy of a time-series-only deep learning model. In addition, ShortFuse matches or improves on results obtained through feature engineering performed by domain experts, achieving state-of-the-art accuracy with no manual feature engineering. While the focus here is on biomedical applications, ShortFuse is also applicable to vehicle monitoring, financial forecasting, activity recognition based on sensor arrays, and prediction of cyberattacks from network traffic.

2. Related Work

Several different approaches have been used to featurize time series for integration with structured information. A simple approach is to construct histograms of the values in the sequence and operate solely on count data. This is a common approach to extract features about physical activity intensity from accelerometer data, e.g. (Dunlop et al., 2011; Song et al., 2010). Principal Component Analysis (PCA) can summarize signals by extracting the linear combinations that account for most of the variance in the data. For example, previous investigators have used PCA to extract features from joint motion waveforms measured during walking and running and then appended the principal components to other structured information, e.g. (Astephen et al., 2008; Federolf et al., 2013). Segmentation of periodic signals into individual periods is also widely used for the processing of vital signs such as ECG (Keogh et al., 2001, 2004) to extract features such as peak-to-peak variability. Methods that account for time series similarity, such as Dynamic Time Warping (DTW), were previously applied to sensor data from an Inertial Measurement Unit (IMU) for gait recognition (Kale et al., 2003), in combination with age and gender information (Trung et al., 2012), and to the study of gait in subjects with Parkinson’s disease (Wang et al., 2016b). Other sophisticated methods such as Multiple Kernel Learning (MKL) (Aiolli and Donini, 2014) have been applied, for example, to classify, based on electroencephalography (EEG) signals, which parts of the brain are important for subjects performing gait movements (stop, walk, turn) (Zhang et al., 2017). Hand-engineered features, such as summary statistics, ranges of values, and spectral data extracted from the signal, are also frequently employed (e.g., for accelerometer-based activity data (Lee et al., 2015) and joint motion waveforms (Truong et al., 2011; Fukuchi et al., 2011)).
All these existing methods for combining covariates with time series are highly specialized for their intended application. Optimizing feature representations over all data, temporal and structured, could improve predictive performance by accounting for the interdependence between temporal and structured data.

Deep learning obviates the need for feature engineering and provides a general way to integrate time series and structured covariates, but approaches that jointly optimize over these data are largely unexplored. CNNs and LSTMs have proven apt at encoding temporal information. RNNs and LSTMs were shown to perform well for vital signs (Graves et al., 2013). Deep CNNs perform well for network traffic monitoring (Wang et al., 2016a), financial time series (Borovykh et al., 2017), audio (Zheng et al., 2014) and clinical diagnostics (Razavian and Sontag, 2015). Multiscale or Multiresolution CNNs were recently shown to perform well on time series benchmark tasks (Cui et al., 2016). Encoders can improve the performance of architectures, for example in the case of anomaly detection in vehicle sensors (Malhotra et al., 2016).

Given the wide range of available deep learning architectures, one could trivially introduce covariates at the bottom levels of existing models by replicating them along the temporal dimension, obtaining a constant sequence for each covariate that can be appended to the existing time series. This poses multiple problems. First, LSTM layers learn from variations along the temporal domain, which do not appear in the replicated covariates. Second, with convolutions there is no parameter sharing for the covariates: the replicated copies are treated as separate inputs, introducing parameters for each covariate at each time point. These parameters are unnecessary, as the covariates themselves do not change in time, and they can easily lead to overfitting. Third, there are no shortcut connections that link covariates to later stages in the network, which restricts the information flow. This misses the opportunity for something akin to skip connections (Sermanet et al., 2013), which can enrich representations by connecting arbitrary levels in the network. ShortFuse overcomes these issues by jointly learning representations over heterogeneous time series and structured data through the hybrid layers described in detail in the next section.
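The naive early-fusion baseline described above can be sketched in a few lines. This is a minimal illustration, assuming a single sample stored as an `(n, t)` array and a covariate vector of length `d`; the function name `replicate_covariates` is hypothetical. It makes the problem visible: every replicated channel has zero variance over time, so it carries no temporal signal for an LSTM to learn from.

```python
import numpy as np


def replicate_covariates(x, s):
    """Naive early fusion: tile each covariate along time and stack as extra channels.

    x: time series of shape (n, t); s: covariate vector of shape (d,).
    Returns an array of shape (n + d, t) whose last d rows are constant in time.
    """
    t = x.shape[1]
    s_tiled = np.tile(s[:, None], (1, t))  # (d, t): one constant row per covariate
    return np.concatenate([x, s_tiled], axis=0)


# Usage: two signals of length 3 plus three covariates.
x = np.arange(6, dtype=float).reshape(2, 3)
s = np.array([1.0, 0.0, 5.0])
z = replicate_covariates(x, s)
print(z.shape, z[2:].std(axis=1))  # replicated rows have zero temporal variance
```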

3. ShortFuse

In the sections below, we discuss the fusion of information from time series and structured data using deep neural networks, and introduce the technical contributions of ShortFuse.

3.1. Information Fusion

First, we discuss the cases in which fusing time series data and covariates leads to improved predictive performance. We assume that the input data has a set of $d$ structured covariates. $S$ is the design matrix, the structured information in the dataset. $X$ is a fixed-length multivariate time series. We refer to the temporal variables as sequences or signals. $Y$ is the univariate output. For a sample $i$, we use $s_i$, $x_i$ and $y_i$ to indicate the covariate vector, time series and label, respectively. $x_i \in \mathbb{R}^{n \times t}$ is a matrix, where $n$ is the number of recorded sequences, or time series signals, and $t$ is the number of points in time at which the records were captured. $y_i$ is an integer representing the class label.

Given that the covariates and the sequences typically record different clinical data, we expect a predictive model of $Y$ that uses both $X$ and $S$ to perform better than one using either $X$ or $S$ alone. A simple test of whether the covariates contain additional information is whether $Y$ is not conditionally independent of $S$ given $X$, in which case $I(Y;S \mid X) \neq 0$. Recent work in nonparametric estimation of mutual information (Reddi and Póczos, 2013) makes it possible to perform this test. Similarly, if $I(Y;X \mid S) \neq 0$, the time series carry information beyond the covariates, so the covariates alone are insufficiently informative. In other words, fusion of time series and covariates should be used when both conditional mutual information values are above 0.
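To make the test concrete, the sketch below computes a plug-in estimate of $I(Y;S \mid X)$ for discrete (or discretized) variables from empirical counts, using $I(Y;S \mid X) = \sum p(x,y,s) \log \frac{p(x)\,p(x,y,s)}{p(x,y)\,p(x,s)}$. This is a simpler stand-in, not the nonparametric estimator of Reddi and Póczos (2013); it assumes the time series have already been reduced to discrete codes, and it is biased upward for small samples, so in practice a threshold or permutation test would be needed rather than an exact comparison with 0.

```python
import math
from collections import Counter


def conditional_mutual_information(y, s, x):
    """Plug-in estimate of I(Y; S | X) in nats for equal-length discrete sequences."""
    n = len(y)
    c_xys = Counter(zip(x, y, s))
    c_xy = Counter(zip(x, y))
    c_xs = Counter(zip(x, s))
    c_x = Counter(x)
    cmi = 0.0
    for (xv, yv, sv), c in c_xys.items():
        # p(x,y,s) * log[ p(x) p(x,y,s) / (p(x,y) p(x,s)) ]; the 1/n factors cancel inside the log.
        cmi += (c / n) * math.log((c_x[xv] * c) / (c_xy[(xv, yv)] * c_xs[(xv, sv)]))
    return cmi


# Usage: swap arguments to test the other direction, I(Y; X | S).
y = [0, 1, 0, 1]
s = [0, 1, 0, 1]
x = [0, 0, 0, 0]
print(conditional_mutual_information(y, s, x))  # S fully determines Y here, X is uninformative
```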

The simplest approach to introducing covariates in the deep learning model is to replicate each covariate, and provide them as inputs appended to the time series. Alternatively, they could be introduced in one of the intermediate layers or only used in the final layer in the network. These choices impact the learned features, as the relevant temporal features often depend directly on the structured covariates.

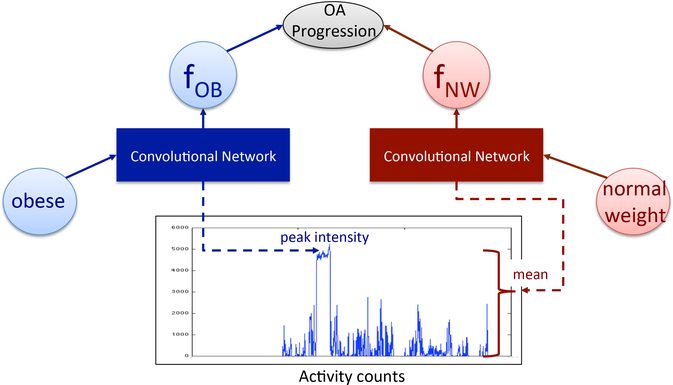

For instance, consider two subjects with osteoarthritis but different body mass index (BMI) values: one subject is obese and one is normal weight. The task is to determine whether osteoarthritis will progress given the subjects’ physical activity data (time series from accelerometers) and other structured covariates. For the obese subject, the important physical activity features may be different. For example, given the obese subject’s higher weight-to-height ratio, intense activity may cause detrimental loading of the joint, while for the normal-weight subject the same types of activity may not contribute to disease progression. Instead, for the normal-weight subject, the mean or minimum activity intensity is possibly more predictive. Thus, structured information present in the dataset has a direct impact on which features should be learned by the model. In this case, not considering the covariates runs the risk of producing less informative features.

Figure 1 illustrates a minimal structure that, via back-propagation, can leverage these dependencies to learn internal representations appropriate for the predictive task. Assume there are two binary structured covariates, ‘obese’ and ‘normal weight’, representing subjects’ BMI status. If ‘obese’ is active for a subject, their data are used to update the first feature $f_{OB}$. Over time, this feature becomes informative in determining whether osteoarthritis will progress in obese subjects; for instance, it might learn to encode maximum activity intensity. Because the covariate ‘obese’ is 0 for subjects with normal weight, feature $f_{OB}$ does not contribute to their predictions and receives no gradient from them. The second feature, $f_{NW}$, is updated in the same way using data from subjects with a normal BMI. Overall, each internal representation is influenced only by the samples for which it is relevant.
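The gating mechanism of Figure 1 can be checked numerically with a toy model. In this sketch (an assumption for illustration, not the exact network in the figure), each feature is a max-pooled "valid" convolution of the activity series multiplied by its binary covariate; the helper names `gated_features` and `numerical_grad` are hypothetical. The finite-difference gradient of $f_{OB}$ with respect to its filter is exactly zero for a normal-weight subject and nonzero for an obese one.

```python
import numpy as np


def gated_features(x, s, w_ob, w_nw):
    """Toy Figure-1 features.

    x: (t,) activity series; s: one-hot [obese, normal_weight]; w_ob, w_nw: (k,) filters.
    Each feature is a max-pooled 'valid' convolution of x, multiplied by its covariate.
    """
    f_ob = s[0] * np.max(np.convolve(x, w_ob, mode="valid"))
    f_nw = s[1] * np.max(np.convolve(x, w_nw, mode="valid"))
    return np.array([f_ob, f_nw])


def numerical_grad(fn, w, eps=1e-6):
    """Central finite-difference gradient of a scalar function fn at w."""
    g = np.zeros_like(w)
    for i in range(w.size):
        e = np.zeros_like(w)
        e[i] = eps
        g[i] = (fn(w + e) - fn(w - e)) / (2 * eps)
    return g


x = np.array([0.0, 2.0, 5.0, 1.0, 3.0])
w_ob = np.array([1.0, -1.0, 0.5])
w_nw = np.array([0.5, 0.5, 0.5])
normal, obese = np.array([0.0, 1.0]), np.array([1.0, 0.0])
# Gradient of f_OB w.r.t. its filter: zero for the normal-weight subject, nonzero for the obese one.
print(numerical_grad(lambda w: gated_features(x, normal, w, w_nw)[0], w_ob))
print(numerical_grad(lambda w: gated_features(x, obese, w, w_nw)[0], w_ob))
```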

Figure 1:

Feature learning mechanism in the presence of covariates. $f_{OB}$ and $f_{NW}$ are internal representations learned by the network. The two parts of the convolutional network learn features relevant for obese subjects and subjects with normal weight, respectively.

3.2. Fused Architectures

ShortFuse works on the premise that the earlier the covariates are introduced into a network, the more they will be able to direct feature construction. Given a deep network designed for time series, ShortFuse constructs hybrid layers that use the covariates in such a manner that the representational capabilities of LSTMs and CNNs are preserved while the hypothesis space for the learned features is expanded. The key novelty of the hybrid layers is the treatment of structured covariates as global features that are combined with the local temporal patterns encoded by the network. The following sections detail the hybrid CNNs and LSTMs used to obtain our results, while a complete list, based on commonly used deep learning layers, is summarized in Appendix A, Table 2.

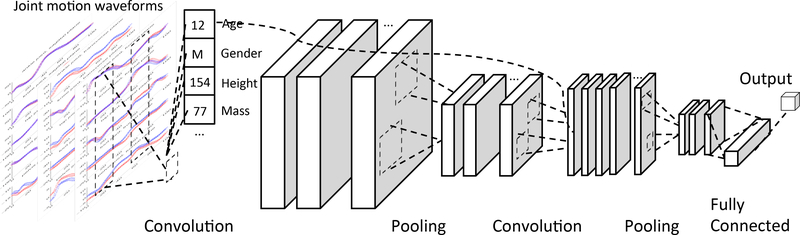

3.2.1. Hybrid Convolutions

The ShortFuse hybrid convolutional layers, used predominantly though not exclusively in the initial layers of the network, provide the covariates as parameters to every convolution function along the temporal dimension, together with the time series in that specific window. Figure 2 shows a network with two convolutional hybrid layers. Dropout is used such that not all the covariates are provided to all the convolutions.
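A single hybrid 1D convolution can be sketched as follows. This is a minimal NumPy forward pass under stated assumptions: the covariate contribution enters each window additively through one weight matrix `w_s` that is reused for every window (the parameter sharing described above), an independent inverted-dropout mask is drawn per window so that not every convolution sees every covariate, and a ReLU is applied at the end. The function name, argument layout and activation are hypothetical choices, not the authors' implementation; dropout would normally be disabled (`s_dropout=0.0`) at inference time.

```python
import numpy as np


def hybrid_conv1d(x, s, w_x, w_s, b, s_dropout=0.0, rng=None):
    """Hybrid 1D convolution sketch.

    x: (n, t) time series; s: (d,) covariates
    w_x: (f, n, k) temporal filters; w_s: (f, d) covariate weights shared over time; b: (f,)
    Returns (f, t - k + 1) activations.
    """
    f, n, k = w_x.shape
    t = x.shape[1]
    if s_dropout > 0.0 and rng is None:
        rng = np.random.default_rng()
    out = np.empty((f, t - k + 1))
    for j in range(t - k + 1):
        s_j = s
        if s_dropout > 0.0:
            # Independent mask per window, so not every convolution sees every covariate.
            keep = rng.random(s.shape) >= s_dropout
            s_j = s * keep / (1.0 - s_dropout)
        temporal = np.tensordot(w_x, x[:, j:j + k], axes=([1, 2], [0, 1]))  # (f,)
        out[:, j] = temporal + w_s @ s_j + b  # the same w_s is used for every window
    return np.maximum(out, 0.0)  # ReLU activation


x = np.ones((2, 5))
s = np.array([1.0, 2.0])
y = hybrid_conv1d(x, s, np.ones((3, 2, 2)), np.ones((3, 2)), np.zeros(3))
print(y.shape)
```

Compared with replicating the covariates as extra input channels, this layout adds only `f * d` covariate weights regardless of the kernel width `k` or the sequence length `t`.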

Figure 2:

Hybrid convolutional layers using structured covariates. The time series data is shown on the left. The convolutions, using dropout, are then applied to the sequences in a time window, with the covariates (age, gender, height, and mass) as parameters. There can be several convolutional filters, whose outputs are pooled, followed by another layer of convolutions that can, in turn, use the covariates. In this example, there is a second pooling layer followed by a fully connected layer and a softmax.

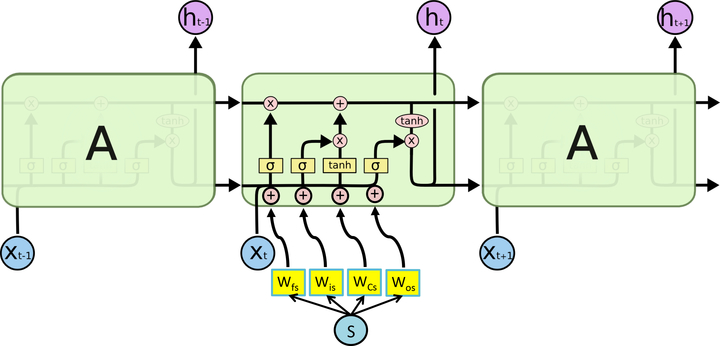

3.2.2. Hybrid LSTMs

For LSTM-based architectures, the structured covariates are used internally by the LSTM as part of additive terms in the computation of the LSTM’s nonlinearities, as shown in Figure 3. We introduce weights $W_{fs}$ (forget gate), $W_{is}$ (input gate), $W_{Cs}$ (state change) and $W_{os}$ (output gate). The added $s$ in the subscripts indicates that these weights correspond to the structured covariates $s$. The terms $W_{fs} \cdot s$, $W_{is} \cdot s$, $W_{Cs} \cdot s$ and $W_{os} \cdot s$ are added to the arguments of the four nonlinearities in the LSTM, respectively. The time series values $x_{t-1}$, $x_t$ and $x_{t+1}$ are provided as input to the cells. The structured covariates $s$ for a given sample are shared across the LSTM cells, together with the covariate weights $W_{fs}$, $W_{is}$, $W_{Cs}$ and $W_{os}$.
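Written out in the common LSTM formulation, one step of the hybrid cell would read as below. This is a sketch that assumes the standard parametrization with the concatenated input $[h_{t-1}, x_t]$ and bias terms $b_f, b_i, b_C, b_o$, which the text does not spell out; the only change relative to a standard LSTM is the covariate term inside each nonlinearity, and because $s$ and the $W_{\cdot s}$ are the same at every step, these terms act as sample-specific but time-invariant shifts of the gate pre-activations.

$$
\begin{aligned}
f_t &= \sigma\left(W_f \cdot [h_{t-1}, x_t] + W_{fs} \cdot s + b_f\right) \\
i_t &= \sigma\left(W_i \cdot [h_{t-1}, x_t] + W_{is} \cdot s + b_i\right) \\
\tilde{C}_t &= \tanh\left(W_C \cdot [h_{t-1}, x_t] + W_{Cs} \cdot s + b_C\right) \\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \\
o_t &= \sigma\left(W_o \cdot [h_{t-1}, x_t] + W_{os} \cdot s + b_o\right) \\
h_t &= o_t \odot \tanh(C_t)
\end{aligned}
$$

Setting $W_{fs} = W_{is} = W_{Cs} = W_{os} = 0$ recovers the standard LSTM, which is one way to see that the hybrid cell expands rather than replaces the original hypothesis space.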

Figure 3:

Hybrid LSTM layer. The structured covariate weights are shown in yellow. The dot product between these parameters, which are shared across cells, and the structured covariates is added to the original input of the LSTM nonlinearities. Within a cell, the symbols + and × in the small circles represent binary operations, while tanh in the oval is the activation applied to the output. The functions in the yellow rectangles, σ, σ, tanh and σ, represent the nonlinearities of the LSTM for the forget gate, input gate, state change and output gate, respectively. The outputs of the LSTM, $h_{t-1}$, $h_t$ and $h_{t+1}$, are the learned representations.
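A forward pass through a single hybrid LSTM layer can be sketched in NumPy. This assumes the standard LSTM formulation with concatenated `[h, x_t]` inputs and zero initial states; the parameter-dictionary layout and the helpers `init_params` and `hybrid_lstm` are hypothetical. The covariate terms `W_fs @ s`, `W_is @ s`, `W_Cs @ s` and `W_os @ s` are computed once per sample and reused in every cell, mirroring the weight sharing in Figure 3.

```python
import numpy as np

GATES = ("f", "i", "C", "o")  # forget gate, input gate, state change, output gate


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def init_params(n, d, hidden, rng):
    """Random weights: W_g acts on [h, x_t], W_gs on the covariates s, b_g is a bias."""
    params = {}
    for g in GATES:
        params["W_" + g] = rng.normal(scale=0.1, size=(hidden, hidden + n))
        params["W_" + g + "s"] = rng.normal(scale=0.1, size=(hidden, d))
        params["b_" + g] = np.zeros(hidden)
    return params


def hybrid_lstm(x, s, params):
    """x: (t, n) time series; s: (d,) covariates. Returns hidden states of shape (t, hidden)."""
    hidden = params["b_f"].shape[0]
    h = np.zeros(hidden)
    c = np.zeros(hidden)
    # Covariate contributions are computed once and shared by every cell.
    cov = {g: params["W_" + g + "s"] @ s for g in GATES}
    outputs = []
    for x_t in x:
        z = np.concatenate([h, x_t])
        f = sigmoid(params["W_f"] @ z + cov["f"] + params["b_f"])
        i = sigmoid(params["W_i"] @ z + cov["i"] + params["b_i"])
        c_tilde = np.tanh(params["W_C"] @ z + cov["C"] + params["b_C"])
        o = sigmoid(params["W_o"] @ z + cov["o"] + params["b_o"])
        c = f * c + i * c_tilde
        h = o * np.tanh(c)
        outputs.append(h)
    return np.stack(outputs)


rng = np.random.default_rng(0)
params = init_params(n=3, d=4, hidden=5, rng=rng)
H = hybrid_lstm(rng.normal(size=(7, 3)), rng.normal(size=4), params)
print(H.shape)
```

Zeroing the four `W_*s` matrices turns this into a plain LSTM whose output no longer depends on `s`.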

3.2.3. Late Fuse

A simple alternative for merging time series and covariate data applies a CNN to the structured covariates and merges its output with the output of the network on the time series data, right before the output layer (softmax, binary cross-entropy) of the network. We call this method LateFuse, as the covariates are only considered at the end.
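For contrast with the hybrid layers, the LateFuse merge can be sketched as a concatenation at the top of the network. In this minimal sketch the covariate branch is a single dense ReLU layer standing in for the covariate CNN described above, the output is a logistic unit for a binary label, and all names are hypothetical. The time-series embedding is computed without any access to `s`, which is exactly why the covariates cannot shape the learned temporal features.

```python
import numpy as np


def late_fuse(ts_embedding, s, W_s, b_s, w_out, b_out):
    """LateFuse-style merge: covariates meet the time-series features only at the top.

    ts_embedding: (m,) output of a time-series network (e.g., the last LSTM state)
    s: (d,) covariates; W_s: (p, d), b_s: (p,) covariate branch (one dense layer here)
    w_out: (m + p,), b_out: scalar. Returns P(y = 1).
    """
    cov_embedding = np.maximum(W_s @ s + b_s, 0.0)  # ReLU
    merged = np.concatenate([ts_embedding, cov_embedding])
    return 1.0 / (1.0 + np.exp(-(w_out @ merged + b_out)))
```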

4. Experimental Design

We developed an evaluation framework to compare ShortFuse (i.e., early use of covariates) to deep learning models that do not use covariates, along with LateFuse (covariates included only at the top level), and methods which train classifiers on separate time series representations appended to structured covariates. We selected two representative biomedical applications: predicting good candidates for surgical treatment of cerebral palsy-related gait disorders and predicting cartilage degeneration in patients at risk for osteoarthritis.

4.1. Candidate Models

For each application, we tested several deep learning models that have been shown to perform well for time series, as discussed in Section 2. The contenders include an LSTM (Appendix B, Figure 4a) (Graves et al., 2013), a Deep CNN (Conneau et al., 2016), a Multiresolution CNN (Cui et al., 2016), and a CNN with an Encoder (Appendix B, Figure 4b) (Malhotra et al., 2016). After tuning hyper-parameters for each application, we compared these CNN- and LSTM-based models against ShortFuse and LateFuse, both built on the top-performing deep learning model for the application.

We also ran Multiple Kernel Learning (MKL) and Dynamic Time Warping (DTW). MKL is a class of algorithms that uses linear combinations of a few predetermined kernels to predict the output (Aiolli and Donini, 2014). Lambda is a regularization hyperparameter of the MKL algorithm which represents the minimizer of the 2-norm of the vector of distances. DTW is a similarity measure that computes the distance between two time series; we predict the output using nearest neighbours, with DTW as the distance measure between two samples. We first used these methods with only the time series and then also provided the structured covariates as input by repeating them along the temporal dimension. Another baseline was Random Forests (RF) applied to the covariates together with the top principal components (PCs) extracted from the time series. Finally, we ran RF on the structured covariates alone and then with added features engineered by domain experts from the time series data.
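The DTW baseline can be sketched as textbook dynamic-programming DTW combined with 1-nearest-neighbour prediction. This is an illustration under assumptions: sequences are univariate, the local cost is the absolute difference, no warping window is used, and the helper names are hypothetical. The multivariate gait and activity data would need a per-channel local cost (for example, summed across channels), and the DTW+COV variant would append the replicated covariates as extra channels.

```python
import math


def dtw_distance(a, b):
    """Classic O(len(a) * len(b)) DTW distance between two 1-D sequences (absolute-difference cost)."""
    n, m = len(a), len(b)
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three admissible predecessor alignments.
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[n][m]


def nn_dtw_predict(train_series, train_labels, query):
    """1-nearest-neighbour classification with DTW as the distance."""
    best = min(range(len(train_series)), key=lambda k: dtw_distance(train_series[k], query))
    return train_labels[best]


# A time-shifted copy of the first training sequence is still matched to it.
print(nn_dtw_predict([[0, 1, 2], [5, 5, 5]], ["a", "b"], [0, 0, 1, 2]))
```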

4.2. Experimental Protocol

The data were split into training, validation, and test sets to perform hyper-parameter tuning and evaluation. We use a two-level sampling scheme. The outer loop consists of M iterations, each randomly splitting the data 90%/10% for training+validation and test. Model selection is performed within the inner loop, with the 90% set being randomly split once again 90/10 into training and validation sets. There are N rounds of subsampling for training+validation, with the average performance over the validation set being used to select the best performing model. The hyper-parameters with the best validation accuracy are chosen, and the model with these parameters is trained on the 90% training+validation dataset. The range of hyperparameters for each model is in Appendix C, Table 3. The model is evaluated on the 10% test set in the outer loop. We report the average accuracy over the M test sets. In this work, we use M = 5 and N = 10. We tested the models described in the previous sections, with hyperparameters obtained by our 2-level model selection.
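The two-level protocol can be sketched as a generic function. This is a minimal illustration assuming a hypothetical user-supplied callback `fit_and_score(config, train, evaluate_on)` that trains a model with a given hyper-parameter configuration and returns its accuracy; the same N inner splits are reused for every candidate configuration so that configurations are compared on identical data, which is a design choice rather than something the text specifies.

```python
import random


def nested_subsampling(samples, candidate_configs, fit_and_score, M=5, N=10, seed=0):
    """Two-level random subsampling: M outer 90/10 (train+val)/test splits, and within
    each, N inner 90/10 train/val splits for model selection. Returns mean test accuracy.

    fit_and_score(config, train, evaluate_on) -> accuracy is a hypothetical callback.
    """
    rng = random.Random(seed)

    def split(data, frac=0.9):
        data = data[:]
        rng.shuffle(data)
        cut = int(round(frac * len(data)))
        return data[:cut], data[cut:]

    test_scores = []
    for _ in range(M):
        trainval, test = split(samples)
        inner_splits = [split(trainval) for _ in range(N)]
        # Pick the configuration with the best mean validation accuracy over the N inner splits.
        best = max(candidate_configs,
                   key=lambda cfg: sum(fit_and_score(cfg, tr, va) for tr, va in inner_splits) / N)
        # Retrain on the full train+validation set and evaluate once on the held-out test split.
        test_scores.append(fit_and_score(best, trainval, test))
    return sum(test_scores) / M
```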

5. Discussion of Results

The two biomedical applications, osteoarthritis progression and cerebral palsy surgical outcome, are described in detail in the next sections. The majority class accounts for 63% of the samples for osteoarthritis progression and 65% for the psoas prediction. The results are summarized in Table 1. For both applications, the RF models trained exclusively on covariates improve over the default predictor by only 2%, indicating that time series should be used in the prediction. The results also show that ShortFuse, which introduces structured covariates into deep learning architectures built for processing time series data, is 3% more accurate than the best deep learning model that does not use covariates on both tasks. ShortFuse also matches or outperforms models trained on features engineered by domain experts. We also find that ShortFuse outperforms LateFuse by 1–4%: the structured covariates have a greater impact on representation learning if they are integrated into the network, as opposed to being merged right before the prediction. ShortFuse also outperforms MKL, DTW and PCA+RF by at least 2%, even when these methods use the structured covariates. Unlike for the deep learning models, providing covariates to DTW did not change accuracy, and for MKL it led to a substantial gain only on the cerebral palsy task (64% to 76%). This suggests that the hypothesis space expressed by these models is not rich enough to capture the underlying connections between the time series and the structured data.

Table 1:

Accuracy of the deep learning models and baseline methods on the two biomedical tasks. The checkmarks indicate which types of data, covariates or time series, are used by each model.

| Model | Cov. | Time series | CP Psoas Prediction | OAI Progression |
|---|---|---|---|---|
| Default predictor | − | − | 65% | 63% |
| COV+RF | ✓ | − | 67% | 65% |
| Engineered Features + RF | ✓ | ✓ | 78%* | 67% |
| PCA+COV+RF | ✓ | ✓ | 72% | 67% |
| MKL | − | ✓ | 64% | 67% |
| MKL+COV | ✓ | ✓ | 76% | 68% |
| DTW | − | ✓ | 72% | 71% |
| DTW+COV | ✓ | ✓ | 72% | 71% |
| RNN/LSTM | − | ✓ | 68% | 69% |
| Multiresolution CNN | − | ✓ | 75% | 71% |
| Encoder + CNN | − | ✓ | 75% | 68% |
| CNN | − | ✓ | 75% | 68% |
| LateFuse | ✓ | ✓ | 77% (base: CNN) | 70% (base: LSTM) |
| ShortFuse | ✓ | ✓ | 78% (base: CNN) | 74% (base: LSTM) |

*As obtained by Schwartz et al. (2013).

5.1. Forecasting osteoarthritis progression

Knee osteoarthritis (OA) is a leading cause of disability in older adults (CDC, 2009; Guccione et al., 1994), with 50% of the population at risk of developing symptoms at some point in their life (Murphy et al., 2008). Prevention, which could significantly reduce the burden of this incurable disease, hinges on a deeper understanding of modifiable risk factors, such as physical activity (Dunlop et al., 2014; Lee et al., 2015). Currently, clinicians lack the necessary evidence to make specific activity modification recommendations to patients. Some studies have reported that physical activity is associated with an increased risk of knee OA (Lin et al., 2013; Felson et al., 2013). Others have reported either no association or opposite findings (Racunica et al., 2007; Mansournia et al., 2012). Current suggestions are not fine-tuned to patient demographics, medical histories, and lifestyles. Similar types of activities are expected to have different effects on patients with different joint alignment angles or different levels of systemic inflammation (Griffin and Guilak, 2005). The interaction of these covariates with physical activity is thus important in predicting disease progression. In this example application, our task is to predict the progression of osteoarthritis, in terms of an objective measure of cartilage degeneration called Joint Space Narrowing (JSN).

We use a dataset of 1926 patients collected as part of the Osteoarthritis Initiative (OAI), an ongoing 7-year longitudinal observational study on the natural progression of knee OA that monitored patients yearly, collecting medical histories, nutritional information, medication usage, accelerometer-based physical activity data, and other data from OA-related questionnaires. As part of the study, subjects had radiographs (X-rays) of their knees taken yearly and their activity monitored for one week. Activity time series were provided as activity counts (acceleration time steps per minute). X-ray data had been previously processed to extract the joint space width, or the distance between the thigh and shank bones, which is representative of cartilage thickness. As cartilage degenerates, the joint space becomes narrower. If the joint space width decreases by more than 0.7 mm per year, the disease is said to have progressed. We used covariates from years 0–4 and physical activity time series from year 4 to predict whether the disease progressed from year 4 to year 6. The structured covariates include clinical features extracted from knee X-rays, while the time series data represent activity counts obtained over a week of monitoring. The human-engineered features for this task are based on histograms of the activity data.
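The progression label reduces to a simple rate computation. The sketch below assumes the threshold is applied to the average annual decrease in joint space width over the prediction interval (for example, year 4 to year 6); how the OAI measurements are actually annualized is an assumption here, and the function name is hypothetical.

```python
def jsn_progressed(jsw_start_mm, jsw_end_mm, years, threshold_mm_per_year=0.7):
    """Label a knee as progressed if its joint space width shrinks faster than the threshold.

    Assumes the rate is averaged over the whole interval (e.g., years = 2 for year 4 to year 6).
    """
    rate = (jsw_start_mm - jsw_end_mm) / years
    return rate > threshold_mm_per_year


print(jsn_progressed(4.0, 2.0, years=2))  # 1.0 mm per year of narrowing
```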

An RF model that featurizes the activity data using a histogram approach, where features are activity totals for 10 bins of activity intensity levels, obtains a 67% classification accuracy, which is only slightly above the 63% of the default predictor once class imbalance is accounted for. The best base deep learning architecture is the LSTM, which we found to perform well on the single-sequence, non-periodic activity data in this application. ShortFuse with a hybrid LSTM obtains an accuracy of 74%, a 7% increase over the histogram and RF approach and a 5% increase over a standard LSTM.
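The histogram featurization used by the engineered-feature baseline can be sketched with `numpy.histogram`. The bin edges below are illustrative only (the text does not list the intensity thresholds), and "activity totals" is ambiguous, so the sketch offers both readings: minutes spent in each intensity bin, or total activity counts accumulated in each bin (via the `weights` argument). The resulting vector, concatenated with the structured covariates, would then be passed to a random forest.

```python
import numpy as np


def activity_histogram_features(counts_per_minute, bin_edges, sum_counts=False):
    """Histogram features for an activity-count series.

    counts_per_minute: 1-D array of activity counts, one value per minute
    bin_edges: increasing edges defining len(bin_edges) - 1 intensity bins (the baseline used 10)
    sum_counts: False -> minutes spent in each bin; True -> total counts within each bin
    """
    x = np.asarray(counts_per_minute, dtype=float)
    weights = x if sum_counts else None
    hist, _ = np.histogram(x, bins=bin_edges, weights=weights)
    return hist


# Hypothetical edges giving 3 bins: [0, 10), [10, 20), [20, 30].
print(activity_histogram_features([0, 5, 15, 25, 25], [0, 10, 20, 30]))
```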

5.2. Predicting the outcome of surgery in patients with cerebral palsy

Cerebral palsy is a disorder of movement, muscle tone, and/or posture that is caused by damage that occurs to the immature, developing brain, most often before birth. The condition affects 500,000 people in the US (3.3 per 1,000 births), with 8,000 babies and infants diagnosed each year (Bhasin et al., 2006). Automated tools are needed to aid treatment planning and predict surgical outcomes given both the complexity of the disease (patients present with widely varying gait pathologies) and invasive nature of treatments, which include skeletal, muscular, and neural surgeries.

In this application, our task is to predict whether psoas lengthening surgery (a procedure to address a tight or overactive muscle in the pelvic region) will have a positive outcome. As in previous work (Truong et al., 2011), we define a positive outcome as (1) an improvement of more than 5 points in Pelvis and Hip Deviation Index (PHiDI), which is a gait-based measure of dysfunction of motion of the pelvis and hip during walking, or (2) a post-surgical Gait Deviation Index of more than 90, which indicates that the subject’s gait pattern is within one standard deviation of a typically-developing child. The time series in the data are joint angles obtained during the subject’s gait cycle from motion capture using markers. The computation of the human engineered features requires domain expertise such as knowledge of the stances in the gait cycle (i.e., whether the foot of the limb of interest is in contact with the ground or not).
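The outcome definition above is a simple disjunction of two criteria. The sketch assumes that an "improvement" in PHiDI means an increase in the index (higher values indicating less deviation, as for the Gait Deviation Index); the function and argument names are hypothetical.

```python
def psoas_positive_outcome(phidi_pre, phidi_post, gdi_post):
    """Positive outcome: PHiDI improves by more than 5 points, or the post-surgical GDI exceeds 90.

    Assumes a higher PHiDI is better, so an improvement is phidi_post - phidi_pre.
    """
    return (phidi_post - phidi_pre) > 5 or gdi_post > 90


print(psoas_positive_outcome(60, 66, 80))  # PHiDI improved by 6 points
```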

The current state of the art uses an RF model trained on clinical information as well as engineered features, which has an accuracy of 78% (Schwartz et al., 2013). The best performing deep learning architecture is the deep CNN, possibly because the gait time series consist of multiple (15) sequential variables representing joint angles, all of which have a shape that does not vary considerably across subjects. ShortFuse improves over the best deep learning model by 3%, matching the performance of the model trained on human-engineered features and covariates, thus obviating the need for human-designed features.

6. Conclusions

We introduce a new method, called ShortFuse, that incorporates structured covariates into deep learning for time series and can improve performance over current state-of-the-art models. The key contribution over previous work is that the covariates have a direct effect on the representations that are learned, which, as shown in our experiments, leads to more accurate models. Results indicate that the structured covariates have a greater impact on representation learning if they are integrated into the network early rather than merged right before the final layer. ShortFuse also outperforms other standard baselines, even when the baselines use covariates. For example, for the cerebral palsy task, MKL with covariates obtains 76% accuracy, whereas ShortFuse obtains 78%.

ShortFuse obtains a 3% improvement over all other approaches in forecasting osteoarthritis-related cartilage degeneration 3 years in advance. This is crucial in supporting clinicians in making informed recommendations for patients who present with joint pain. For surgery outcome prediction in cerebral palsy patients, ShortFuse matches the state of the art while eliminating the need for painstaking feature engineering.

Acknowledgments

We would like to acknowledge support from the National Institutes of Health (U54 EB020405). Many thanks to Dr. Michael Schwartz at the University of Minnesota and Gillette Children’s Specialty Healthcare Hospital for providing the cerebral palsy data. The OAI is a public-private partnership comprised of five contracts (N01-AR-2–2258; N01-AR-2–2259; N01-AR-2–2260; N01-AR-2–2261; N01-AR-2–2262) funded by the National Institutes of Health, a branch of the Department of Health and Human Services, and conducted by the OAI Study Investigators. Private funding partners include Merck Research Laboratories; Novartis Pharmaceuticals Corporation, GlaxoSmithKline; and Pfizer, Inc. Private sector funding for the OAI is managed by the Foundation for the National Institutes of Health. This manuscript was prepared using an OAI public use data set and does not necessarily reflect the opinions or views of the OAI investigators, the NIH, or the private funding partners. We also thank Prof. David Sontag for his helpful suggestions.

Appendix A. List of hybrid layers

Table 2: List of ShortFuse hybrid layers and connections with standard layers.

| Standard Layer | Hybrid Layer |
|---|---|
| Convolution 1D | Covariates provided to convolutions along the temporal dimension. |
| Convolution 2D | Covariates interleaved to obtain a sequence of the same periodicity and size as the time series data. |
| Fully Connected | Covariates input to each of the fully connected cells. |
| RNN/LSTM | Structured covariates used as additive terms in the computation of the LSTM parameters. |
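The RNN/LSTM row of Table 2 can be read as adding a covariate term to each gate pre-activation, e.g. i_t = σ(W_i x_t + U_i h_{t-1} + V_i c + b_i). The following minimal pure-Python sketch follows that reading; it is not the authors' implementation. We assume one covariate matrix V_g per gate that is created once and reused at every time step, which is our interpretation of weights "shared across the temporal domain". The class name `CovariateLSTMCell`, the random initialization, and the zero biases are hypothetical choices, and no training is shown.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


class CovariateLSTMCell:
    """LSTM cell whose four gate pre-activations each get an extra additive
    term V_g @ cov. The V_g matrices are reused at every time step."""

    GATES = ("input", "forget", "output", "candidate")

    def __init__(self, input_dim: int, hidden_dim: int, cov_dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.hidden_dim = hidden_dim
        self.W = {g: random_matrix(hidden_dim, input_dim, rng) for g in self.GATES}
        self.U = {g: random_matrix(hidden_dim, hidden_dim, rng) for g in self.GATES}
        self.V = {g: random_matrix(hidden_dim, cov_dim, rng) for g in self.GATES}
        self.b = {g: [0.0] * hidden_dim for g in self.GATES}

    def _preact(self, gate: str, x: Vector, h: Vector, cov: Vector) -> Vector:
        # W x_t + U h_{t-1} + V cov + b; the V cov term is the covariate contribution.
        terms = (matvec(self.W[gate], x), matvec(self.U[gate], h),
                 matvec(self.V[gate], cov), self.b[gate])
        return [sum(vals) for vals in zip(*terms)]

    def step(self, x: Vector, h: Vector, cell: Vector, cov: Vector):
        i = [sigmoid(z) for z in self._preact("input", x, h, cov)]
        f = [sigmoid(z) for z in self._preact("forget", x, h, cov)]
        o = [sigmoid(z) for z in self._preact("output", x, h, cov)]
        g = [math.tanh(z) for z in self._preact("candidate", x, h, cov)]
        new_cell = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, cell, i, g)]
        new_h = [oj * math.tanh(cj) for oj, cj in zip(o, new_cell)]
        return new_h, new_cell

    def run(self, series: List[Vector], covariates: Vector) -> Vector:
        """Process the whole series and return the final hidden state."""
        h = [0.0] * self.hidden_dim
        cell = [0.0] * self.hidden_dim
        for x in series:
            h, cell = self.step(x, h, cell, covariates)
        return h


lstm = CovariateLSTMCell(input_dim=2, hidden_dim=4, cov_dim=3)
series = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
h_a = lstm.run(series, covariates=[1.0, 0.0, 7.0])
h_b = lstm.run(series, covariates=[0.0, 1.0, 2.0])
print(len(h_a))  # 4
```

Because V_g c does not depend on t, it acts like a patient-specific bias on every gate; setting all V_g to zero recovers a standard LSTM.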

Appendix B. Figures of deep learning models

Figure 4: Deep learning candidate models.

Appendix C. Model parameters

Table 3: Hyperparameters used in the model training and the models they apply to.

| Hyperparameter | Model to which it applies | Parameter range for search |
|---|---|---|
| Learning rate | RNN / LSTM / all CNN models | 0.001–0.003 |
| Dropout | RNN / LSTM / all CNN models | 0.0–0.5 |
| Embedding size | LSTM | 16–64 |
| Number of filters | all CNN models | 3–13 |
| Number of layers | all CNN models | 1–10 |
| Resolutions | Multiresolution CNN | 256, 128, 64, 32, 16 |
| Kernel | Multiple Kernel Learning | RBF |
| Number of trees | Random Forests | 10–1000 |
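Table 3 gives search ranges but not the search strategy. As one possibility, the sketch below draws random configurations from the table's ranges for the neural models. Uniform sampling, inclusive integer bounds, and the label "CNN" standing in for all CNN variants are assumptions, as are the names `SEARCH_SPACE`, `sample_config`, and `random_search`; the multiresolution, MKL, and random forest settings are omitted.

```python
import random
from typing import Dict, List

# Ranges transcribed from Table 3; the sampling scheme itself is an assumption.
SEARCH_SPACE = {
    "learning_rate": (0.001, 0.003),
    "dropout": (0.0, 0.5),
    "embedding_size": (16, 64),   # LSTM only
    "num_filters": (3, 13),       # CNN models only
    "num_layers": (1, 10),        # CNN models only
}

APPLIES_TO = {
    "learning_rate": {"RNN", "LSTM", "CNN"},
    "dropout": {"RNN", "LSTM", "CNN"},
    "embedding_size": {"LSTM"},
    "num_filters": {"CNN"},
    "num_layers": {"CNN"},
}

INTEGER_PARAMS = {"embedding_size", "num_filters", "num_layers"}


def sample_config(model: str, rng: random.Random) -> Dict[str, float]:
    """Draw one value for every hyperparameter that applies to `model`."""
    config: Dict[str, float] = {}
    for name, (low, high) in SEARCH_SPACE.items():
        if model not in APPLIES_TO[name]:
            continue
        if name in INTEGER_PARAMS:
            config[name] = rng.randint(low, high)  # inclusive on both ends
        else:
            config[name] = rng.uniform(low, high)
    return config


def random_search(model: str, n_trials: int, seed: int = 0) -> List[Dict[str, float]]:
    """Return `n_trials` sampled configurations; a seeded RNG keeps them reproducible."""
    rng = random.Random(seed)
    return [sample_config(model, rng) for _ in range(n_trials)]


for cfg in random_search("CNN", n_trials=3):
    print(cfg)  # each configuration would then be trained and scored on validation data
```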

Contributor Information

Madalina Fiterau, Computer Science Department, Stanford University, MFITERAU@CS.STANFORD.EDU.

Suvrat Bhooshan, Computer Science Department, Stanford University, SUVRAT@STANFORD.EDU.

Jason Fries, Computer Science Department, Stanford University, JASON-FRIES@STANFORD.EDU.

Charles Bournhonesque, Institute for Computational and Mathematical Engineering, Stanford University, CBOURNHO@STANFORD.EDU.

Jennifer Hicks, Bioengineering Department, Stanford University, JENHICKS@STANFORD.EDU.

Eni Halilaj, Bioengineering Department, Stanford University, EHALILAJ@STANFORD.EDU.

Christopher Ré, Computer Science Department, Stanford University, CHRISMRE@CS.STANFORD.EDU.

Scott Delp, Bioengineering Department, Stanford University, DELP@STANFORD.EDU.

References

- Aiolli F and Donini M. Easy multiple kernel learning. In 22nd European Symposium on Artificial Neural Networks (ESANN), pages 289–294, 2014.

- Astephen JL, Deluzio KJ, Caldwell GE, Dunbar MJ, and Hubley-Kozey CL. Gait and neuromuscular pattern changes are associated with differences in knee osteoarthritis severity levels. Journal of Biomechanics, 41(4):868–876, 2008.

- Bhasin TK, Brocksen S, Avchen RN, and Braun KVN. Prevalence of four developmental disabilities among children aged 8 years: Metropolitan Atlanta Developmental Disabilities Surveillance Program, 1996 and 2000. US Department of Health and Human Services, Centers for Disease Control and Prevention, 2006.

- Borovykh A, Bohte S, and Oosterlee CW. Conditional time series forecasting with convolutional neural networks. Preprint arXiv:1703.04691, 2017.

- CDC. Prevalence and most common causes of disability among adults—United States, 2005. MMWR: Morbidity and Mortality Weekly Report, 58(16):421–426, 2009.

- Conneau A, Schwenk H, Barrault L, and LeCun Y. Very deep convolutional networks for text classification. Preprint arXiv:1606.01781, 2016.

- Cui Z, Chen W, and Chen Y. Multi-scale convolutional neural networks for time series classification. Preprint arXiv:1603.06995, 2016.

- Dunlop DD, Song J, Semanik PA, Chang RW, Sharma L, Bathon JM, Eaton CB, Hochberg MC, Jackson RD, Kwoh CK, et al. Objective physical activity measurement in the osteoarthritis initiative: are guidelines being met? Arthritis & Rheumatology, 63(11):3372–3382, 2011.

- Dunlop DD, Song J, Semanik PA, Sharma L, Bathon JM, Eaton CB, Hochberg MC, Jackson RD, Kwoh CK, Mysiw WJ, et al. Relation of physical activity time to incident disability in community dwelling adults with or at risk of knee arthritis: prospective cohort study. BMJ, 348:g2472, 2014.

- Federolf P, Boyer K, and Andriacchi T. Application of principal component analysis in clinical gait research: identification of systematic differences between healthy and medial knee-osteoarthritic gait. Journal of Biomechanics, 46(13):2173–2178, 2013.

- Felson DT, Niu J, Yang T, Torner J, Lewis CE, Aliabadi P, Sack B, Sharma L, Guermazi A, Goggins J, et al. Physical activity, alignment and knee osteoarthritis: data from MOST and the OAI. Osteoarthritis and Cartilage, 21(6):789–795, 2013.

- Fukuchi RK, Eskofier BM, Duarte M, and Ferber R. Support vector machines for detecting age-related changes in running kinematics. Journal of Biomechanics, 44(3):540–542, 2011.

- Graves A, Mohamed A-R, and Hinton G. Speech recognition with deep recurrent neural networks. In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 6645–6649. IEEE, 2013.

- Griffin TM and Guilak F. The role of mechanical loading in the onset and progression of osteoarthritis. Exercise and Sport Sciences Reviews, 33(4):195–200, 2005.

- Guccione AA, Felson DT, Anderson JJ, Anthony JM, Zhang Y, Wilson P, Kelly-Hayes M, Wolf PA, Kreger BE, and Kannel WB. The effects of specific medical conditions on the functional limitations of elders in the Framingham study. American Journal of Public Health, 84(3):351–358, 1994.

- Kale A, Cuntoor N, Yegnanarayana B, Rajagopalan A, and Chellappa R. Gait analysis for human identification. In International Conference on Audio- and Video-Based Biometric Person Authentication, pages 706–714. Springer, 2003.

- Keogh E, Chu S, Hart D, and Pazzani M. An online algorithm for segmenting time series. In Proceedings, IEEE International Conference on Data Mining (ICDM), pages 289–296. IEEE, 2001.

- Keogh E, Chu S, Hart D, and Pazzani M. Segmenting time series: A survey and novel approach. Data Mining in Time Series Databases, 57:1–22, 2004.

- Lee J, Chang RW, Ehrlich-Jones L, Kwoh CK, Nevitt M, Semanik PA, Sharma L, Sohn M-W, Song J, and Dunlop DD. Sedentary behavior and physical function: objective evidence from the osteoarthritis initiative. Arthritis Care & Research, 67(3):366–373, 2015.

- Lin W, Alizai H, Joseph G, Srikhum W, Nevitt M, Lynch J, McCulloch C, and Link T. Physical activity in relation to knee cartilage T2 progression measured with 3T MRI over a period of 4 years: data from the osteoarthritis initiative. Osteoarthritis and Cartilage, 21(10):1558–1566, 2013.

- Malhotra P, Ramakrishnan A, Anand G, Vig L, Agarwal P, and Shroff G. LSTM-based encoder-decoder for multi-sensor anomaly detection. Preprint arXiv:1607.00148, 2016.

- Mansournia MA, Danaei G, Forouzanfar MH, Mahmoodi M, Jamali M, Mansournia N, and Mohammad K. Effect of physical activity on functional performance and knee pain in patients with osteoarthritis: analysis with marginal structural models. Epidemiology, 23(4):631–640, 2012.

- Murphy L, Schwartz TA, Helmick CG, Renner JB, Tudor G, Koch G, Dragomir A, Kalsbeek WD, Luta G, and Jordan JM. Lifetime risk of symptomatic knee osteoarthritis. Arthritis Care & Research, 59(9):1207–1213, 2008.

- OAI. Osteoarthritis initiative, a knee health study, 2017. URL https://oai.epi-ucsf.org/datarelease/.

- Racunica TL, Teichtahl AJ, Wang Y, Wluka AE, English DR, Giles GG, O’Sullivan R, and Cicuttini FM. Effect of physical activity on articular knee joint structures in community-based adults. Arthritis Care & Research, 57(7):1261–1268, 2007.

- Razavian N and Sontag D. Temporal convolutional neural networks for diagnosis from lab tests. CoRR, abs/1511.07938, 2015. URL http://arxiv.org/abs/1511.07938.

- Reddi SJ and Póczos B. Scale invariant conditional dependence measures. In Proceedings of the 30th International Conference on Machine Learning (ICML), pages 1355–1363, 2013.

- Schwartz MH, Rozumalski A, Truong W, and Novacheck TF. Predicting the outcome of intramuscular psoas lengthening in children with cerebral palsy using preoperative gait data and the random forest algorithm. Gait & Posture, 37(4):473–479, 2013.

- Sermanet P, Kavukcuoglu K, Chintala S, and LeCun Y. Pedestrian detection with unsupervised multi-stage feature learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 3626–3633, 2013.

- Song J, Semanik P, Sharma L, Chang RW, Hochberg MC, Mysiw WJ, Bathon JM, Eaton CB, Jackson R, Kwoh CK, et al. Assessing physical activity in persons with knee osteoarthritis using accelerometers: data from the osteoarthritis initiative. Arthritis Care & Research, 62(12):1724–1732, 2010.

- Trung NT, Makihara Y, Nagahara H, Mukaigawa Y, and Yagi Y. Performance evaluation of gait recognition using the largest inertial sensor-based gait database. In 5th IAPR International Conference on Biometrics (ICB), pages 360–366. IEEE, 2012.

- Truong WH, Rozumalski A, Novacheck TF, Beattie C, and Schwartz MH. Evaluation of conventional selection criteria for psoas lengthening for individuals with cerebral palsy: a retrospective, case-controlled study. Journal of Pediatric Orthopaedics, 31(5):534–540, 2011.

- Wang W, Chen C, Wang W, Rai P, and Carin L. Earliness-aware deep convolutional networks for early time series classification. Preprint arXiv:1611.04578, 2016a.

- Wang X, Kyrarini M, Ristić-Durrant D, Spranger M, and Gräser A. Monitoring of gait performance using dynamic time warping on IMU-sensor data. In IEEE International Symposium on Medical Measurements and Applications (MeMeA), pages 1–6. IEEE, 2016b.

- Zhang Y, Prasad S, Kilicarslan A, and Contreras-Vidal JL. Multiple kernel based region importance learning for neural classification of gait states from EEG signals. Frontiers in Neuroscience, 11, 2017.

- Zheng Y, Liu Q, Chen E, Ge Y, and Zhao JL. Time series classification using multi-channels deep convolutional neural networks. In International Conference on Web-Age Information Management, pages 298–310. Springer, 2014.