Abstract

Background:

The study of learning in populations of subjects can provide insights into the changes that occur in the brain with aging, drug intervention, and psychiatric disease.

New Method:

We introduce a separable two-dimensional (2D) random field (RF) model for analyzing binary response data acquired during the learning of object-reward associations across multiple days. The method can quantify the variability of performance within a day and across days, and can capture abrupt changes in learning.

Results:

We apply the method to data from young and aged macaque monkeys performing a reversal-learning task. The method provides an estimate of performance within a day for each age group, and a learning rate across days for each monkey. We find that, as a group, the older monkeys require more trials to learn the object discriminations than do the young monkeys, and that the cognitive flexibility of the younger group is higher. We also use the model estimates of performance as features for clustering the monkeys into two groups. The clustering results in two groups that, for the most part, coincide with those formed by the age groups. Simulation studies suggest that clustering captures inter-individual differences in performance levels.

Comparison with Existing Method(s):

In comparison with generalized linear models, this method is better able to capture the inherent two-dimensional nature of the data and to find between-group differences.

Conclusions:

Applied to binary response data from groups of individuals performing multi-day behavioral experiments, the model discriminates between-group differences and identifies subgroups.

Keywords: behavior, learning, aging, separable random field model, change-point test, reversal learning task, EM algorithm, Markov Chain Monte Carlo, Laplace prior, Bayesian inference, Gibbs sampling, MAP estimation

1. Introduction

Understanding how learning differs between groups of subjects is important in many areas of neuroscience research. Obtaining an objective measure of performance is challenging because observations are often not continuous (e.g., binary) and learning is both dynamic and varies greatly across individuals.

Popular methods for quantifying performance changes in binary data include the change-point test [1] and moving averages [2, 3, 4]. These methods are easy to implement but can be problematic in that they rely on a predefined null hypothesis [1] or an arbitrary choice of window length [2, 3, 4], respectively. State-space and hidden Markov approaches [5, 6, 7, 8] have been found to be a practical alternative, since they use likelihood methods to fit the data, allowing accurate assessment of individual and group dynamics. These models are useful for the analysis of behavioral data because they can track the dynamics of learning (and forgetting) and provide confidence bounds on estimates, allowing for across-trial inference.

We propose a two-dimensional model of binary response data from multi-day behavioral experiments termed the SMuRF (separably Markov random field) model, first introduced in [9]. In the present study, we examine the binary response data from two age groups of animals learning multiple object-reward associations across days. Conventional one-dimensional methods for analyzing these data [5, 6, 7, 8] ignore their two-dimensional nature by aggregating the responses across object pairs or days, thus failing to capture subtle changes in learning dynamics. Two-dimensional state-space models have been applied to the analysis of neural spike rasters [10, 11], with the goal of simultaneously capturing the within- and cross-trial variability of the rasters. Their appeal, in the context of binary response data from behavioral experiments (e.g., repeated across multiple days), is the ability to simultaneously capture the within-day (across object pairs) and cross-day variability of learning. While classical two-dimensional state-space models [10, 11] obviate the need for aggregation, they lack the interpretability of one-dimensional state-space models. In contrast, the SMuRF model [9] is a two-dimensional model that inherits the interpretability of one-dimensional approaches. As we demonstrate formally in [9], the SMuRF model is not a two-dimensional state-space model in the classical sense. Applied to data from behavioral experiments, the SMuRF model expresses the probability of observing the binary outcomes as a function of two latent processes: a within-day learning process that reflects an animal’s item-dependent performance level during a day, and a cross-day learning process that captures the dynamics of proficiency across days. The two processes play the same role as the latent processes in one-dimensional methods [5, 6, 7, 8], thus inheriting the interpretability of these methods. 
Unlike in one-dimensional methods, we combine the two processes, thus capturing the two-dimensional nature of the behavioral raster data as in [10, 11]. In this context, the contribution of this paper is twofold. First, we substitute a Laplace random walk for the Gaussian random walk in [9], to allow for accurate and automatic modeling of sudden changes or jumps in behavioral data (e.g., due to reversal learning). We term the resulting model the Laplace SMuRF model, and that from [9], the Gaussian SMuRF model. The Laplace SMuRF model leads to algorithms for parameter estimation and inference (e.g., MAP inference) different from those derived in [9] for the Gaussian SMuRF model. Second, learning within a day is tracked over groups in addition to the individual learning across days. Using this formulation, we are able to obtain an accurate assessment of learning using a single model. We demonstrate that this model has superior predictive power, as measured by the WAIC [12], compared to one that aggregates the behavioral data within day, and is therefore better suited to perform inferences about the presence or absence of age differences.

We apply the Laplace SMuRF model to data acquired from young (mean 10.4 years, range 9.8 – 11.2) and aged (mean 23.2 years, range 20.2 – 27.7) female macaque monkeys performing a reversal learning task, which was designed to identify alterations in behavior after reinforcement contingencies change [13]. Using a linear mixed-effects model (LME), the analysis in [13] indicated that older animals were impaired at affective shifting. In addition, by dividing each day into three epochs, it was observed that, for both age groups, within-day learning/performance was generally better in the middle of the session than at the beginning or the end, an effect that may be termed a “middle-list” effect. No between-age difference was observed using this technique. Using the Laplace SMuRF model, we find that, as a group, the older monkeys have more difficulty with the object pairings than do the young monkeys, and that the ability to change behavior (“cognitive flexibility”) of the younger group is higher. We are also able to use the results of our analysis to create clusters that, for the most part, coincide with those formed by the age groups. Simulation studies suggest that clustering based on the model’s results captures inter-individual differences in performance levels, which allows us to identify exceptional behavior.

The remainder of our treatment begins in Section 2, where we describe the experimental behavioral paradigm that motivated the Laplace SMuRF model. We introduce the Laplace SMuRF model of learning, as well as algorithms for parameter estimation and inference, in Section 3. The interested reader can find the derivations relevant to Section 3 in the Appendix. In Section 4, we utilize the model from Section 3 to assess between-group differences in learning, and to predict group membership from behavior. We conclude in Section 5.

2. Experimental behavioral paradigm

We analyze data from 14 female macaque monkeys – 6 young (mean 10.4 years, range 9.8 – 11.2) and 8 old (mean 23.2 years, range 20.2 – 27.7) animals – performing a reversal learning task [14, 13]. This experiment was designed to identify differences in affective shifting between the two groups. Behavioral experiments followed guidelines established by the National Institutes of Health and were approved by the Institutional Animal Care and Use Committee at the University of Arizona.

In the first phase of the task, each animal was presented each day with the same 40 object pairs in the same order. By a process of trial and error the animal determined which object in the pair was associated with a food reward. The first phase of the task ended when a monkey reached a 90% performance criterion over 5 consecutive days (that is, 180/200 item pairs correct). After reaching criterion, the second phase began. In this part of the experiment, the reward for each object pair was switched to the previously unrewarded object and each animal was expected to learn these novel associations. The second phase ended when the same 90% criterion over 5 days was reached. Figure 1 presents a schematic depiction of the object reversal paradigm.
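The stopping rule can be made concrete with a small helper. This is a sketch under our own assumptions: the function name `criterion_day` is hypothetical, the input is taken to be the number of correct choices per day (out of 40 object pairs), and we assume the criterion is checked on a rolling window of 5 consecutive days, met when the window total reaches 180/200.

```python
def criterion_day(daily_correct, n_pairs=40, window=5, threshold=0.9):
    """Return the first (1-based) day on which the performance criterion is met.

    daily_correct[r] is the number of correct choices, out of n_pairs, on day r + 1.
    The criterion is met on a day when the total over that day and the preceding
    window - 1 days reaches threshold * window * n_pairs (180 of 200 by default).
    Returns None if the criterion is never met.
    """
    needed = threshold * window * n_pairs
    for end in range(window, len(daily_correct) + 1):
        if sum(daily_correct[end - window:end]) >= needed:
            return end
    return None


# Hypothetical daily scores for one animal (out of 40 object pairs per day).
scores = [20, 30, 36, 36, 36, 36, 36]
print(criterion_day(scores))  # 7: days 3-7 total 180/200
```

The same helper applies to the reversal phase, since that phase ended under the same 90% criterion over 5 days.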

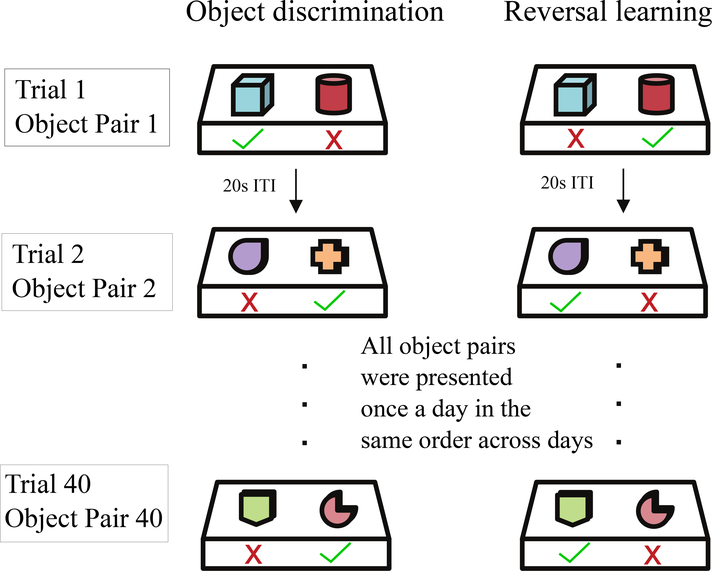

Figure 1: Depiction of the object reversal learning paradigm.

The object discrimination part of the learning task (left column) requires monkeys to learn 40 novel object pairs presented sequentially in the same order every session until they reach a 90% performance criterion. After reaching criterion, the object-reward associations are switched (reversal learning, right column), and the monkeys re-learn the new associations to the same criterion.

Existing analysis methods:

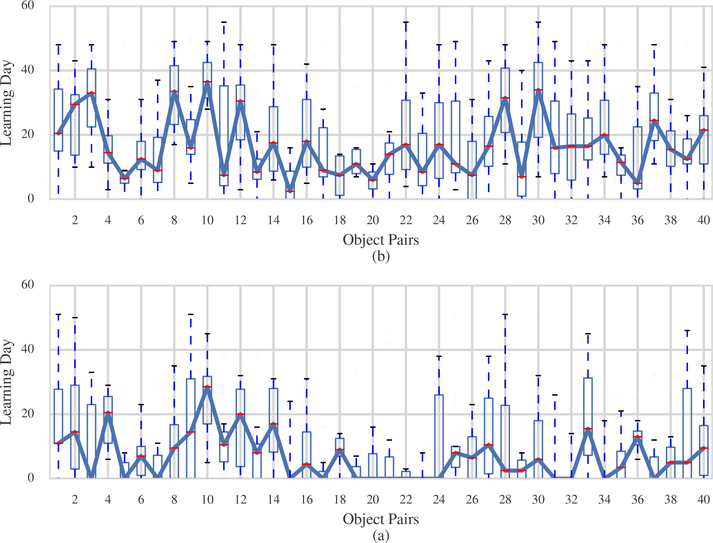

Conventional analyses of learning in this type of multi-day task collapse performance data within a day (i.e., ignore information regarding object pairs) [15, 16, 17] and use analysis of variance (ANOVA) to identify between-group learning differences. Because it was hypothesized in this experiment that pairs presented at the start and end of the 40 object pairings might be recalled more easily (primacy and recency effects), the original analysis in [13] of data from these experiments examined within-day effects by using a hybrid state-space linear mixed model. A three-step process was employed. First, for each animal and object pair, the across-day performance was summarized by computing one learning day for the object discrimination task and one learning day for the reversal learning task, using one-dimensional state-space models [5]. Figure 2(a) shows boxplots of the learning days during the object discrimination task as a function of object pair. Figure 2(b) shows the equivalent boxplots for the reversal learning task. For each object pair, as many state-space models were fit as there were animals. As can be observed (and at odds with the literature on typical primacy and recency effects), there is a general trend towards quicker learning of the object pairs in the middle of the 40-trial daily task. Second, as a consequence of this observation, the learning days of each animal were organized into three epochs: the first 14 object pairs (epoch 1), the second 12 object pairs (epoch 2), and the final 14 object pairs (epoch 3). Third, a linear mixed-effects model (LME) was applied with age, task, and epoch as factors. Results suggested that during both tasks, the second and third groups of objects were learned significantly faster than the first third of the objects. Also, the middle third of objects was learned significantly faster than the last third. There were no significant age interactions with task or epoch. 
We hypothesize that this is because the LME analysis, by partitioning every day of the experiment into three epochs, lacked the granularity needed to detect age differences at the level of object pairs. In contrast, we will see in Section 4 that the Laplace SMuRF model, which models the within-day dynamics in greater detail, is able to tease out between-group and inter-individual differences that are predictive of the group membership of the animals. In particular, we will show that a model that takes into account the within-day dynamics has better predictive power than one that does not, as measured by the Watanabe-Akaike Information Criterion (WAIC, [12]). The WAIC is a measure of predictive performance for Bayesian models that is analogous to the Akaike Information Criterion (AIC, [18]). The WAIC and AIC can each be thought of as an approximation to some form of cross-validation [19].

Figure 2: Summary of estimated learning day for each object pair.

Boxplots showing learning day estimated for all 40 object pairs in object discrimination (OD) (a) and reversal learning (RL) (b) parts of the task. Learning days are computed individually for each animal using a state-space approach. Boxes indicate the interquartile range (IQR) with whiskers extending to the most extreme data point that is no more than 1.5 IQR from the edge of the box. Blue line joins the median values.

3. Methods

3.1. Laplace SMuRF model

The goal is to model the probability, $p_{m,r,k}$, of the event that a given monkey $m$ picks the correct object in a given pair $k$ on a given day $r$. A precise assessment of this trial (object pair) and day-dependent probability would provide a complete characterization of the dynamics of learning of a monkey during the reversal learning task. We propose a group-dependent separably-Markov random field (SMuRF) model [9] for these data. For simplicity, we describe the SMuRF model for a single group, with the understanding that the dynamics of learning within each group (young or old) follow separate SMuRF models. The Laplace SMuRF model expresses the dynamics of learning of a monkey as

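$$
\begin{aligned}
y_{m,r,k} &\sim \mathrm{Bernoulli}(p_{m,r,k}), \\
\log \frac{p_{m,r,k}}{1 - p_{m,r,k}} &= x_{m,r} + d_k, \\
d_k &= d_{k-1} + \nu_k, \quad \nu_k \overset{\text{i.i.d.}}{\sim} \mathcal{N}(0, \sigma^2), \\
x_{m,r} &= x_{m,r-1} + \epsilon_{m,r}, \quad \epsilon_{m,r} \overset{\text{i.i.d.}}{\sim} \mathrm{Laplace}(\lambda),
\end{aligned}
\tag{1}
$$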

In Equation 1, $d_k$ is a group-level latent random walk process that represents the group performance on object pairs presented within a day (within-day learning), and the increments $\nu_k$ are independent and identically distributed (i.i.d.) Gaussian random variables with mean zero and variance $\sigma^2$. The process $x_{m,r}$ represents monkey m’s latent state of learning across days (cross-day learning). Across days, the noise variables $\epsilon_{m,r}$ are i.i.d. Laplace random variables [20] with parameter λ. Compared to a Gaussian prior, the Laplace prior can capture abrupt changes in cross-day learning due to the reversal of object-reward associations. The probability $p_{m,r,k}$ that monkey m picks the correct object in object pair k on day r is related to these latent processes via a logit link function, which constrains the probabilities to lie between 0 and 1. Responses are denoted by $y_{m,r,k}$, which is 1 if monkey m produces a correct response when presented with object pair k on day r, and 0 otherwise. Across days, each animal is allowed its own learning curve, which results in a latent state sequence $x_{m,1}, \ldots, x_{m,R_m}$ for each monkey. There are a total of K = 40 object pairs, and the total number of days Rm of the experiment depends on the animal [13].
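To make the generative structure of Equation 1 concrete, the sketch below draws a synthetic binary raster for one monkey. It is illustrative only: the function name `simulate_laplace_smurf`, the initial values `x0` and `d0`, and the default values of `sigma2` and `lam` are hypothetical, and we assume λ acts as a rate, so that the Laplace increments have density (λ/2)exp(-λ|ε|).

```python
import numpy as np


def simulate_laplace_smurf(n_days, n_pairs=40, sigma2=0.05, lam=2.0,
                           x0=-1.0, d0=0.0, d=None, seed=0):
    """Draw one monkey's binary raster from the model in Equation 1.

    Returns (y, p, x, d): y and p have shape (n_days, n_pairs),
    x has shape (n_days,) and d has shape (n_pairs,).
    Pass d to reuse a group-level within-day process across monkeys.
    """
    rng = np.random.default_rng(seed)
    if d is None:
        # Within-day process d_k: Gaussian random walk over object pairs.
        d = d0 + np.cumsum(rng.normal(0.0, np.sqrt(sigma2), size=n_pairs))
    else:
        d = np.asarray(d, dtype=float)
    # Cross-day process x_{m,r}: Laplace random walk over days.
    # NumPy parameterizes the Laplace by its scale b; b = 1/lam gives
    # the density (lam / 2) * exp(-lam * |e|).
    x = x0 + np.cumsum(rng.laplace(0.0, 1.0 / lam, size=n_days))
    # Logit link: p[r, k] = 1 / (1 + exp(-(x[r] + d[k]))).
    eta = x[:, None] + d[None, :]
    p = 1.0 / (1.0 + np.exp(-eta))
    y = rng.binomial(1, p)  # one Bernoulli draw per (day, pair)
    return y, p, x, d


y, p, x, d = simulate_laplace_smurf(n_days=30)
print(y.shape, y.mean())
```

Because d is shared within a group, a group can be simulated by drawing d once and passing it to every call through the `d` argument.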

3.2. Parameter estimation in the SMuRF model by maximum likelihood

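Let $\mathbf{x}_m = (x_{m,1}, \cdots, x_{m,R_m})^T$ be a vector that denotes the learning state of the mth monkey, $\mathbf{x} = (\mathbf{x}_1, \cdots, \mathbf{x}_M)$ be a vector of learning states for all monkeys, and $\mathbf{d} = (d_1, \cdots, d_K)^T$ be a vector that represents within-day group performance. We denote the response data from the mth monkey by $\mathbf{y}_m = \{y_{m,r,k}\}_{r,k}$, and the response data from all monkeys by $\mathbf{y} = (\mathbf{y}_1, \cdots, \mathbf{y}_M)$.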

The goal is to maximize, with respect to the unknown parameters $\theta = (\sigma^2, \lambda)$, the log-likelihood of the SMuRF model

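$$
\log p(\mathbf{y} \mid \theta) = \log \int p(\mathbf{d} \mid \sigma^2) \prod_{m=1}^{M} p(\mathbf{y}_m \mid \mathbf{x}_m, \mathbf{d})\, p(\mathbf{x}_m \mid \lambda)\, \mathrm{d}\mathbf{x}\, \mathrm{d}\mathbf{d}.
\tag{2}
$$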

This is a challenging problem because of the high-dimensional integral that must be evaluated in Equation 2. We propose to maximize this likelihood by Expectation-Maximization (EM). Given a candidate solution, EM [21] builds an approximation of the likelihood in its E-step. Maximizing this approximation in the M-step is guaranteed to yield a new candidate solution that does not decrease the value of the likelihood function. By iterating this process, EM generates a sequence of iterates that converges to a local optimum of the likelihood function. Let $\theta^{(\ell)}$ be the candidate solution in the ℓth iteration of EM. In the context of the Laplace SMuRF model, the key challenge of EM is to compute $Q(\theta \mid \theta^{(\ell)})$, defined as

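$$
Q(\theta \mid \theta^{(\ell)}) = \mathbb{E}\left[\log p(\mathbf{y}, \mathbf{x}, \mathbf{d} \mid \theta) \,\middle|\, \mathbf{y}, \theta^{(\ell)}\right],
\tag{3}
$$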

the expected value of the complete-data log-likelihood with respect to the joint posterior distribution of the missing data (x, d) conditioned on the observed data $\mathbf{y}$ and the candidate solution $\theta^{(\ell)}$. This expectation is not tractable, i.e., it cannot be computed in closed form. We propose to approximate the required expectations using Markov chain Monte Carlo (MCMC) samples from $p(\mathbf{x}, \mathbf{d} \mid \mathbf{y}, \theta^{(\ell)})$. In particular, we use Gibbs sampling [22], an MCMC technique that generates samples from a distribution by sampling from its so-called full conditionals (the conditional distribution of one variable given all others), thus producing a Markov chain that, under regularity conditions, can be shown to converge in distribution to the desired distribution.

Gibbs sampling is attractive when sampling from the full conditionals is simple. However, sampling from the full conditionals $p(\mathbf{x} \mid \mathbf{d}, \mathbf{y}, \theta)$ and $p(\mathbf{d} \mid \mathbf{x}, \mathbf{y}, \theta)$ is not trivial, for reasons detailed in [9]. We circumvent this difficulty by considering, instead of Equation 1, the following equivalent augmented SMuRF model

| (4) |

where are i.i.d. Polya-Gamma (PG) [23] random variables, m = 1, ⋯, M, and are i.i.d. exponential random variables with parameter . To simplify notation, let , , , and for m = 1, ⋯, M. The models in Equation 1 and Equation 4 are equivalent in the sense that the joint distribution of from the former is equivalent to that from the latter marginalized over and z. In what follows, it will be useful to let , and
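The equivalence of Equations 1 and 4 rests on the fact that a Laplace variable is a Gaussian scale mixture with an exponential mixing distribution, as in the Bayesian lasso. The sketch below checks this numerically and shows one way to draw the mixing variables z given the cross-day increments. It assumes the rate parameterization of the Laplace (density (λ/2)exp(-λ|ε|)) with exponential mixing rate λ²/2; under that assumption, 1/z given the increment is inverse Gaussian with mean λ/|x_{m,r} - x_{m,r-1}| and shape λ². The function names are hypothetical.

```python
import numpy as np


def laplace_mixture_moments(lam, n=200_000, seed=1):
    """Sample e via z ~ Exp(rate lam**2 / 2), e | z ~ N(0, z); return (E|e|, Var e)."""
    rng = np.random.default_rng(seed)
    z = rng.exponential(scale=2.0 / lam**2, size=n)  # NumPy uses scale = 1 / rate
    e = rng.normal(0.0, np.sqrt(z))
    return np.mean(np.abs(e)), np.var(e)


def sample_z(increments, lam, rng):
    """Gibbs update for the mixing variables given x_{m,r} - x_{m,r-1}.

    Draws 1/z ~ InverseGaussian(mean = lam / |increment|, shape = lam**2)
    with NumPy's Wald sampler and returns z. Increments must be nonzero.
    """
    inv_z = rng.wald(lam / np.abs(increments), lam**2)
    return 1.0 / inv_z


lam = 2.0
mean_abs, var = laplace_mixture_moments(lam)
# A Laplace(lam) variable has E|e| = 1/lam = 0.5 and Var(e) = 2/lam**2 = 0.5.
print(round(mean_abs, 2), round(var, 2))
```

NumPy's `wald(mean, scale)` is the inverse Gaussian with the given mean and shape (`scale`), which is why it can be used directly for this update.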

Block Gibbs sampling from the augmented SMuRF model:

The augmented SMuRF model of Equation 4 yields the following block Gibbs sampler, the derivation of which is in the Appendix. This Gibbs sampler forms the basis of the E-step of our Monte Carlo EM algorithm for maximizing the likelihood of the SMuRF model of the learning dynamics. The full conditionals (distributions from which one must obtain samples) for the Gibbs sampler are as follows:

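- $p(\mathbf{x}_1, \cdots, \mathbf{x}_M \mid \mathbf{d}, W, z, \mathbf{y}) = \prod_{m=1}^{M} p(\mathbf{x}_m \mid \mathbf{d}, W, z, \mathbf{y}_m)$. In other words, conditioned on group performance d, the auxiliary variables, and all of the data $\mathbf{y}$, the learning states x1, ⋯, xM of the M monkeys in the group are independent. We show in the Appendix that, for each m = 1, ⋯, M, $p(\mathbf{x}_m \mid \mathbf{d}, W, z, \mathbf{y}_m)$ is the posterior distribution of the state sequence in a linear-Gaussian state-space model. This leads to a very efficient forward-filtering backward-sampling algorithm.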

- $p(\mathbf{d} \mid \mathbf{x}, W, \mathbf{y})$. This represents the full conditional of within-day group performance, which, by symmetry, has properties similar to those of the previous full conditional.

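- $p(z \mid \mathbf{x})$. A result from [20] tells us that $1/z_{m,r} \mid \mathbf{x} \sim \mathrm{IG}\left(\cdot\,; \lambda / |x_{m,r} - x_{m,r-1}|, \lambda^2\right)$, where IG(·; μ, s) denotes the inverse Gaussian distribution with mean μ and shape s. The full conditional for z is the product of independent inverse Gaussians, where the independence is across animals and days of the task.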

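- $p(W \mid \mathbf{x}, \mathbf{d}, \mathbf{y})$. From [23], each element $\omega_{m,r,k}$ of W satisfies $\omega_{m,r,k} \mid \mathbf{x}, \mathbf{d} \sim \mathrm{PG}(1, x_{m,r} + d_k)$; that is, the full conditional for W is the product of independent Polya-Gammas, where the independence is across animals, days of the task, and object pairs within a day.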

Derivations of the form of the first two full conditionals are in the Appendix. The forms of the last two full conditionals follow from the form of the SMuRF model, and results from [20] and [23] respectively.
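To make the first full conditional concrete, here is a minimal sketch of forward-filtering backward-sampling for a single monkey. It assumes that Equation 4 uses the standard Polya-Gamma construction of [23], so that, given the Polya-Gamma draws ω and d, the data for day r collapse to one Gaussian pseudo-observation of x_{m,r}, and that, given z, the cross-day increments are Gaussian with variance z_{m,r}. The function and argument names (`pseudo_observations`, `ffbs`, `m0`, `v0`) and the diffuse initial prior are hypothetical choices; this is not the authors' implementation.

```python
import numpy as np


def pseudo_observations(y, omega, d):
    """Collapse one monkey's augmented data into one Gaussian observation per day.

    y, omega: arrays of shape (R, K) (omega > 0); d: array of shape (K,).
    Given omega[r, k], kappa / omega is a Gaussian observation of x[r] + d[k]
    with variance 1 / omega, where kappa = y - 1/2. Combining the K pairs of
    day r gives precision h[r] and mean u[r] for x[r].
    """
    kappa = y - 0.5
    h = omega.sum(axis=1)
    u = (kappa - omega * d[None, :]).sum(axis=1) / h
    return u, h


def ffbs(u, h, q, m0=0.0, v0=10.0, rng=None):
    """Draw x[0..R-1] from p(x | u) for the linear-Gaussian model

        x[r] = x[r-1] + e[r],  e[r] ~ N(0, q[r]),  u[r] ~ N(x[r], 1 / h[r]),

    with an initial state x_0 ~ N(m0, v0) preceding day 1. Cost is O(R).
    """
    rng = np.random.default_rng() if rng is None else rng
    R = len(u)
    m, V = np.empty(R), np.empty(R)
    prev_m, prev_V = m0, v0
    for r in range(R):                                 # forward Kalman filter
        P = prev_V + q[r]                              # predictive variance
        V[r] = 1.0 / (1.0 / P + h[r])                  # filtered variance
        m[r] = V[r] * (prev_m / P + h[r] * u[r])       # filtered mean
        prev_m, prev_V = m[r], V[r]
    x = np.empty(R)
    x[-1] = rng.normal(m[-1], np.sqrt(V[-1]))
    for r in range(R - 2, -1, -1):                     # backward sampling
        gain = V[r] / (V[r] + q[r + 1])
        mean = m[r] + gain * (x[r + 1] - m[r])
        var = V[r] * q[r + 1] / (V[r] + q[r + 1])
        x[r] = rng.normal(mean, np.sqrt(var))
    return x


# Usage with placeholder inputs (omega would come from the Polya-Gamma step).
rng = np.random.default_rng(0)
R, K = 20, 40
y = rng.integers(0, 2, size=(R, K))
u, h = pseudo_observations(y, np.ones((R, K)), np.zeros(K))
x_draw = ffbs(u, h, q=np.full(R, 0.1), rng=rng)
```

Both passes touch each day once, which is consistent with the linear cost in the number of days discussed below; the sampler for d has the same structure with the roles of days and object pairs exchanged.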

In the M-step, we update the parameters $\sigma^2$ and λ by maximizing $Q(\theta \mid \theta^{(\ell)})$ with respect to θ. Following relatively simple algebra detailed in the Appendix, we obtain

| (5) |

| (6) |

where we approximate the necessary expectations using the Monte Carlo samples from the E-step.

Computational Complexity of Block Gibbs Sampling:

Sampling from the full conditional of the learning states x1, ⋯, xM of the M monkeys is the most computationally expensive step of the block Gibbs sampler. For each Gibbs sampling iteration, the complexity of this step is linear in R, the number of days the slowest animal (young or old) takes to complete the experiment. This is because the cost of the forward-filtering backward-sampling algorithm is the same as that of a Kalman smoother, which, for a given animal m, is linear in the number of days Rm it takes the animal to complete the experiment. Since the full conditional factorizes over animals, we sample the states of each animal independently, and therefore the cost per Gibbs iteration is also linear in the number of animals M in the group. To obtain the cost of the EM algorithm, we multiply the cost per Gibbs iteration by the number of Gibbs samples per EM iteration and by the number of EM iterations.

We note that, compared to the block Gibbs sampler for the Gaussian SMuRF [9], the Laplace SMuRF requires an additional step (the third bullet point above), making it slightly more computationally expensive than the Gaussian SMuRF.

3.3. Assessing the dynamics of learning from binary response data

Bayesian estimation of the Laplace SMuRF model enables us to infer detailed changes in learning dynamics, in particular to extract the within-day and cross-day components of performance dynamics that accompany the learning of a task by an animal. This is because, following estimation, inference in the Laplace SMuRF model yields the joint posterior distribution of the within and cross-day components of learning conditioned on the observed data. We can use this posterior distribution, in turn, to assess between group differences in performance within a day, as well as inter-individual differences in learning across days. If the model fits the data better than competing ones, then it forms a better basis for performing inference (e.g. assessing the effect of age on performance).

We let $p(\mathbf{x}, \mathbf{d} \mid \mathbf{y}, \hat{\theta})$ denote the posterior distribution of x and d, given the binary response data and the maximum likelihood estimate $\hat{\theta}$ of θ. It is understood that we use Gibbs samples from $p(\mathbf{x}, \mathbf{d} \mid \mathbf{y}, \hat{\theta})$ to obtain an empirical estimate of the distribution. To compare the within-day group performance and the cross-day individual performances, we compute the maximum a posteriori (MAP) estimate, i.e., the mode of the posterior distribution. For the derivation of the MAP estimator, we refer the reader to the Appendix.

Model comparison:

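The Watanabe-Akaike Information Criterion (WAIC) [12] is a measure of predictive accuracy that balances the goodness-of-fit of a model against its effective number of parameters. Following [12], it is computed as

$$
\mathrm{WAIC} = -2\left(\widehat{\mathrm{lppd}} - \hat{p}_{\mathrm{WAIC}}\right),
\tag{7}
$$

where $\widehat{\mathrm{lppd}}$ is the computed log pointwise predictive density

$$
\widehat{\mathrm{lppd}} = \sum_{m=1}^{M} \sum_{r=1}^{R_m} \sum_{k=1}^{K} \log\left(\frac{1}{n} \sum_{i=1}^{n} p\left(y_{m,r,k} \mid x^{i}_{m,r}, d^{i}_{k}\right)\right),
\tag{8}
$$

and $\hat{p}_{\mathrm{WAIC}}$ is a measure of model complexity

$$
\hat{p}_{\mathrm{WAIC}} = \sum_{m=1}^{M} \sum_{r=1}^{R_m} \sum_{k=1}^{K} \mathbb{V}_{i=1}^{n}\left[\log p\left(y_{m,r,k} \mid x^{i}_{m,r}, d^{i}_{k}\right)\right],
\tag{9}
$$

where $\mathbb{V}_{i=1}^{n}$ denotes the sample variance over the n posterior samples, and $(\mathbf{x}^{i}, \mathbf{d}^{i})$, $i = 1, \ldots, n$, are Gibbs samples from $p(\mathbf{x}, \mathbf{d} \mid \mathbf{y}, \hat{\theta})$. Because the models for young and old animals are independent, we can compute the WAIC (Equation 7) for each age group separately (for a given age group, M is understood as the number of animals in that group) and add the results to obtain the cumulative WAIC. A model with a lower WAIC has better estimated out-of-sample predictive accuracy and therefore forms a better basis for inference about the data.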
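A minimal sketch of Equations 7 to 9 follows. It assumes the pointwise log-likelihoods log p(y_{m,r,k} | x^i_{m,r}, d^i_k) have already been evaluated at each of the n Gibbs samples and stacked into an (n, N) array, one column per observation. We take the sample variance with `ddof=1`; the helper names `bernoulli_loglik` and `waic` are hypothetical.

```python
import numpy as np


def bernoulli_loglik(y, eta):
    """Pointwise log p(y | eta) for y in {0, 1} under a logit link."""
    return y * eta - np.logaddexp(0.0, eta)


def waic(loglik):
    """WAIC (Equations 7-9) from an (n_samples, n_obs) array of log p(y_j | sample i)."""
    loglik = np.asarray(loglik, dtype=float)
    # lppd: log of the Monte Carlo average likelihood, computed stably per observation.
    top = loglik.max(axis=0)
    lppd = np.sum(top + np.log(np.mean(np.exp(loglik - top), axis=0)))
    # p_WAIC: sample variance over posterior samples, summed over observations.
    p_waic = np.sum(np.var(loglik, axis=0, ddof=1))
    return -2.0 * (lppd - p_waic)


# Hypothetical posterior draws for one monkey: x_draws (n, R), d_draws (n, K), y (R, K).
rng = np.random.default_rng(0)
n, R, K = 500, 10, 40
x_draws = rng.normal(0.0, 0.1, size=(n, R))
d_draws = rng.normal(0.0, 0.1, size=(n, K))
y = rng.integers(0, 2, size=(R, K))
eta = x_draws[:, :, None] + d_draws[:, None, :]       # (n, R, K)
ll = bernoulli_loglik(y[None], eta).reshape(n, -1)    # (n, R * K)
print(waic(ll))
```

Since the two age groups are fit independently, the cumulative WAIC is simply the sum of `waic` evaluated on each group's log-likelihood array.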

Within-day learning differences:

We can use our models to compute, for any of the object pairs, the posterior probability that young monkeys would perform better on that pairing than old monkeys. This probability is

$$
\Pr\left(d^{\mathrm{young}}_k > d^{\mathrm{old}}_k \mid \mathbf{y}\right),
\tag{10}
$$

where we approximate it by its frequency of occurrence in the posterior samples from the model.
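The frequency estimate of Equation 10 can be sketched as follows, assuming the posterior draws of d for each group are stored as (n_samples, K) arrays; the function name `prob_young_better` and the default threshold argument are hypothetical.

```python
import numpy as np


def prob_young_better(d_young, d_old, level=0.95):
    """Monte Carlo estimate of Equation 10 for every object pair.

    d_young, d_old: arrays of shape (n_samples, K) holding posterior draws of the
    within-day process d for each group. Because the two groups are fit with
    separate models, pairing draw i of one group with draw i of the other is a
    valid Monte Carlo estimate of the probability for independent posteriors.
    """
    prob = np.mean(np.asarray(d_young) > np.asarray(d_old), axis=0)
    return prob, prob > level  # probabilities and pairs exceeding the threshold
```

The boolean mask corresponds to the pairs that would be flagged when the probability exceeds 0.95, as in the results section.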

Cross-day learning differences:

We define a monkey’s cognitive flexibility measure as the difference between its learning rate before and after the reversal. Assuming reversal for monkey m occurs on day Dm, the monkey’s cognitive flexibility is

| (11) |

In practice, each Gibbs sample $(\mathbf{x}^{i}, \mathbf{d}^{i})$ yields one number

| (12) |

Repeating this calculation for all n Gibbs samples yields an empirical posterior distribution for the cognitive flexibility of the mth animal.

Armed with this definition of cognitive flexibility, we can compare animals by computing the probability that animal m is more cognitively flexible than animal m′:

| (13) |
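Given per-sample flexibility values (Equation 12) for every animal, the probabilities in Equation 13 for all pairs of animals can be estimated at once. This is a minimal sketch: it assumes the values are stored as an (n_animals, n_samples) array and estimates each probability by the fraction of draws in which one animal's value exceeds the other's; the function name `flexibility_matrix` is hypothetical, and the learning-rate definition inside Equation 11 is not reproduced here.

```python
import numpy as np


def flexibility_matrix(samples):
    """Pairwise Monte Carlo estimates of Equation 13.

    samples: array of shape (n_animals, n_samples); row a holds the per-sample
    cognitive flexibility values of animal a (Equation 12). Entry [a, b] of the
    result estimates the probability that animal a is more flexible than animal b.
    """
    s = np.asarray(samples, dtype=float)
    # Broadcast to (n_animals, n_animals, n_samples) and average the indicator.
    return np.mean(s[:, None, :] > s[None, :, :], axis=2)
```

The resulting matrix has the layout of a heatmap like the one in Figure 6(a), with zeros on the diagonal.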

4. Results

In what follows, we will consider two classes of models of the data from the reversal learning task. Model Class 1 comprises two separate Laplace SMuRF models, one for young monkeys and another for old monkeys. It assumes that animals within a group follow the same within-day learning profile. We consider two subclasses, Model Class 1A and Model Class 1B. In subclass A, we assume there are no dynamics within a day, i.e., the random walk for $d_k$ defined in Equation 1 is set to have a negligibly small variance. In subclass B, we allow the possibility of dynamics by estimating the random walk variance from the data. Comparing the predictive accuracy of Model Class 1A, which assumes no within-day dynamics, to that of Model Class 1B, which assumes the presence of within-day dynamics, lets us determine the importance of these dynamics for the purpose of making inferences in this experiment (e.g., the effect of age on performance). In Model Class 2, we assume that each animal is in a group of its own. That is, Model Class 2 comprises 14 separate Laplace SMuRF models, one for each animal.

4.1. Model comparison to assess importance of within-day dynamics

We use the WAIC to assess the predictive accuracy of Model Class 1A compared to 1B (see Table 1). The WAIC values reported in the first and second columns confirm that incorporating within-day dynamics leads to a lower WAIC, i.e., to a model of the multi-day behavioral experiment that better predicts animal performance across the experiment. For the remainder of the paper, references to Model Class 1 should be understood as the dynamic version (Model Class 1B).

Table 1:

Comparison of the predictive accuracy of the three models of the multi-day behavioral experiment based on the WAIC. Lower values of the WAIC signify better predictive accuracy. The WAIC values reported in the first (Model Class 1A) and second (Model Class 1B) columns confirm that incorporating within-day dynamics (second column) leads to a model of the multi-day behavioral experiment that better predicts animal performance across the experiment. The third column compares the predictive accuracy of the dynamic Laplace SMuRF model (Model Class 1B) to that of one that employs the Student-t distribution instead, termed Student-t SMuRF. The Student-t distribution is a popular choice to capture abrupt changes in data. The WAIC values indicate that the Laplace SMuRF model is a better fit to the data than the Student-t SMuRF.

| | Laplace SMuRF (Static, Model Class 1A) | Laplace SMuRF (Dynamic, Model Class 1B) | Student-t SMuRF (Dynamic) |
|---|---|---|---|
| WAIC | 36929 | 35988 | 37959 |

4.2. Student-t alternative to Laplace prior

The Student-t distribution is a popular choice to capture abrupt changes in data. The third column in Table 1 compares the predictive accuracy of the dynamic Laplace SMuRF model to that of one that employs the Student-t distribution instead, termed Student-t SMuRF. The WAIC values indicate that the Laplace SMuRF model (Model Class 1B) is a better fit to the data than the Student-t SMuRF. We hypothesize that this is because the Student-t distribution does not capture the abrupt changes in performance due to the object-pair reversals as accurately as the Laplace distribution does.

4.3. Comparing learning between groups

Two objectives of the study in [13] were to identify learning effects within a daily session and establish age differences in ability to learn tasks known to rely on different brain networks. Using Model Class 1, we can perform these comparisons easily with our paradigm because it provides a summary for learning within the day for old and young animals, and a learning curve across days for each animal.

As described above, we can use the Gibbs samples obtained at convergence of the Monte Carlo EM algorithm to compare the groups’ performance on the different object pairs (Equation 10). We consider the performance of the young group on a given object pair to be greater than that of the old group if this probability is greater than 0.95. Learning across days can be analyzed using the probability distribution of each animal’s learning curve.

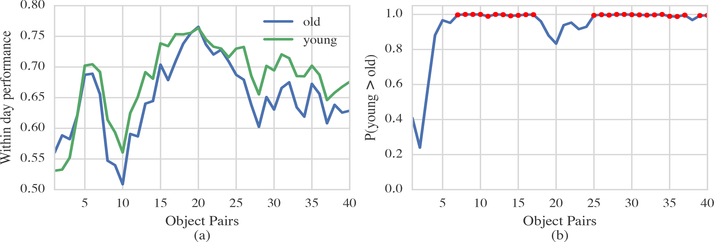

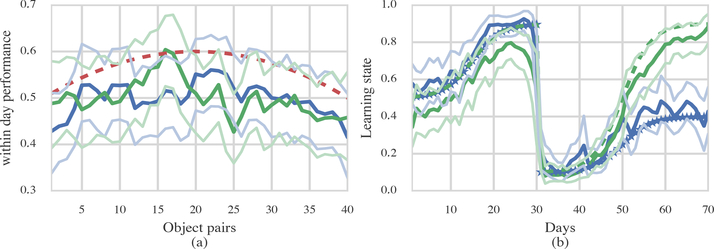

Within-day performance for young and old animals is shown in Figure 3(a). Both groups show similar trends. Performance was low for two distinct periods near the start of each day (item pairs 0–3 and 7–11), reached a peak near item pair 20, and tailed off at the end of the session (item pairs 26 onwards).

Figure 3: Within-day performance comparison between young and old animals.

(a) MAP estimates of the within-day performance of young (green) and old (blue) animals across all 40 object pairs. (b) Probability that the young animals’ performance is greater than the old animals’ (Equation 10). Values where the probability is greater than 0.95 are indicated with red dots.

In general, the older animals perform equivalently to or worse than the younger animals. A comparison between the underlying distributions is shown in Figure 3(b). The curve shows the item-by-item probability that the young animals’ performance is better than the old animals’. When the curve lies above 0.95, we can be fairly confident that the young animals perform better than the old animals. Thus, for pairs 6–16 and pairs 24–39 (with the exception of pair 37), the model indicates, with posterior probability above 0.95, that the younger animals perform better than the older ones.

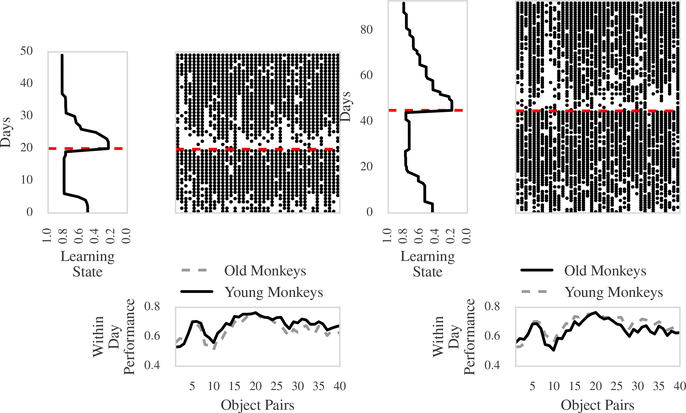

Figures 4(a) and (b) show the individual learning curves for the young and old monkeys, respectively, aligned by the day of reversal. In general, the older monkeys took longer to learn both before and after reversal. Although the model is not given the reversal day a priori, in all cases it accurately identifies the change point.

Figure 4: MAP estimates of cross-day performance for all 14 animals.

(a) Young animals (y1,..,y6). (b) Old animals (o1, …, o8). Note that older animals tend to take longer to learn both before and after reversal and that the model clearly captures the sharp change in behavior after reversal.

Figure 5 shows raw data in the form of a raster and a summary of our analyses for one young monkey and one old monkey. The black dots in the raster plots indicate rewarded, correct behavior, whereas a space indicates unrewarded, incorrect behavior. The red dashed line highlights the day when reversal of reward occurs. The bottom panels depict the within-day performance curve for both the young and the old group. The left panels of Figures 5(a) and (b) show the MAP estimates of the learning curves for the corresponding young and old monkey, respectively. This figure demonstrates that the Laplace SMuRF model can provide a succinct summary of the dynamics of learning in this behavioral experiment across multiple days, and with multiple observations within a day.

Figure 5: Summary of analyses of representative young and old monkeys.

(a) Young animal. (b) Old animal. The raster plots indicate performance within day (x-axis) and across days (y-axis). A black dot indicates rewarded, correct behavior, and a space indicates unrewarded, incorrect behavior. The red dashed line highlights the day when reversal of reward occurs. In each panel, the plot on the left represents the MAP estimate of the learning state for the animal. Both bottom panels show the within-day performance curves from Figure 3(a).

4.4. Predicting age-group membership from behavior

In this section, we assume the subjects’ ages are not known and investigate whether their behavior naturally falls into two or more groups using clustering. Three metrics (described below) are considered: a single cognitive flexibility measure and two simple daily performance measures (i.e., averaged response data across days and model-based across-day performance).

Clustering based on cognitive flexibility:

Each monkey’s cognitive flexibility measure is defined as the difference between its learning rates before and after the reversal (Equation 11). Computing this difference for each Gibbs sample (Equation 12) yields an empirical cognitive flexibility distribution for each animal. We chose this measure because each animal performed the task over a different number of days. We used Model Class 1 to compute the empirical cognitive flexibility distribution of each animal.

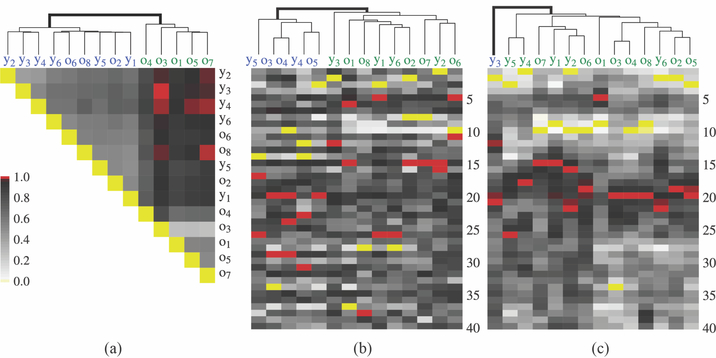

To compare this measure across monkeys, we plot a cognitive flexibility heatmap (Figure 6(a)). Each block in the heatmap reflects the probability that the monkey on the y-axis is more cognitively flexible than the monkey on the x-axis (Equation 12). These probabilities are computed by comparing samples from each animal’s cognitive flexibility posterior distribution. A monkey is defined here to be more flexible than another monkey if, with high probability, its cognitive flexibility is higher than that of the other monkey. We cluster the individual monkeys into groups using the hierarchical clustering algorithm from [24]. The clusters are indicated by the gray lines above the heatmap in Figure 6. The clustering yields two main clusters: one with five old animals (o1, o3, o4, o5, o7) and a second with all young animals and three old animals (o2, o6, o8). This result is consistent with other aging studies, which indicate considerable individual variation in the effects of aging on learning and memory [25, 26, 13].
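The pairwise probabilities and the clustering step can be sketched as follows, assuming each animal’s flexibility posterior is represented by an equal number of independent draws. With independent draws, comparing them index by index gives a Monte Carlo estimate of the probability that one animal is more flexible than another. SciPy’s agglomerative `linkage` stands in here for the algorithm of [24]. Using each animal’s row of the probability matrix as its feature vector, with average linkage and Euclidean distance, is a hypothetical choice. The function names are also illustrative.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage


def flexibility_probability_matrix(flex):
    """flex: (n_animals, n_draws) cognitive-flexibility draws.

    Returns P with P[i, j] ~= Pr(flexibility of i > flexibility of j),
    estimated by comparing the animals' draws index by index.
    """
    F = np.asarray(flex, dtype=float)
    P = (F[:, None, :] > F[None, :, :]).mean(axis=2)
    np.fill_diagonal(P, 0.5)  # hypothetical convention for self-comparison
    return P


def two_group_clusters(P, method="average"):
    """Agglomerative clustering with each animal's row of P as its features."""
    Z = linkage(P, method=method, metric="euclidean")
    return fcluster(Z, t=2, criterion="maxclust")


# Toy draws: two clearly more flexible animals and two clearly less flexible ones.
flex = np.array([[1.0, 1.1, 0.9],
                 [1.05, 0.95, 1.0],
                 [-1.0, -1.1, -0.9],
                 [-1.0, -0.95, -1.05]])
P = flexibility_probability_matrix(flex)
labels = two_group_clusters(P)
```

Cutting the dendrogram with `criterion="maxclust"` and `t=2` yields the two-group partition that is used to color the labels in the heatmap.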

Figure 6: Clustering of animal responses based on raw data without knowledge of age.

(a) Heatmap of relative cognitive flexibility for each animal (Equation 13). Shading indicates the probability that one animal’s flexibility is higher than another’s. Values greater than 0.95 are indicated in red. Individual animals are labeled by their actual age group (old are o1, …, o8 and young are y1, …, y6), although this information is not used for clustering. (b) Heatmap indicating the average response across days (y-axis) for each animal. (c) Empirical posterior average of the within-day performance for each animal, obtained by fitting a separate Laplace SMuRF model to each animal. This leads to estimates of daily performance that are smoother than those in Panel (b). In each panel, the gray lines above the heatmap indicate the hierarchical clusters, and the bold line indicates the partition into two groups that is used to color the labels.

Clustering based on simple measures:

The clustering method described above is based on a probabilistic definition of cognitive flexibility derived from the Laplace SMuRF model. We compare it with two alternative methods based on mean daily measures. The first uses the response data averaged across days, which gives one 40-dimensional vector (one entry per object pair) for each animal. The second is based on Model Class 2, which yields empirical posterior averages of the within-day performance for each animal, m = 1, ⋯, 14. This method also gives one 40-dimensional vector for each animal, and it produces a smoother estimate of daily performance than averaging the response data across days.
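The first simple measure can be sketched as below. The sketch assumes that each animal’s raster is stored as a (days × object pairs) binary array. The number of days may differ between animals, but the number of object pairs K is shared. Average linkage is an assumed choice, and the toy data are synthetic.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage


def average_response_features(responses):
    """responses: list with one (n_days_m, K) binary array per animal.

    n_days_m may differ across animals (e.g., because of a stopping
    criterion), but K must be shared. Returns an (n_animals, K) matrix
    of per-object-pair averages across days.
    """
    return np.vstack([np.asarray(r, dtype=float).mean(axis=0) for r in responses])


rng = np.random.default_rng(2)
K = 40
# Synthetic animals: (probability of a correct choice, number of days).
responses = [rng.binomial(1, p, size=(n_days, K))
             for p, n_days in [(0.9, 20), (0.85, 25), (0.3, 35), (0.35, 30)]]
X = average_response_features(responses)
labels = fcluster(linkage(X, method="average"), t=2, criterion="maxclust")
```

Because the average runs over days, each feature vector keeps the object-pair profile of an animal and discards when, across days, that performance was reached.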

Figures 6(b) and 6(c) show the corresponding heatmaps. For the first metric, which is based on raw data (Figure 6(b)), the two main groups are mixtures of old and young animals (Group 1: y4, y5, o3, o4, o5; Group 2: y1, y2, y3, y6, o1, o2, o8). For the second metric, which is based on the latent process (Figure 6(c)), the two main groups consist of a single young animal (y3) and all remaining animals. These two methods are harder to interpret than the one based on cognitive flexibility, and the group memberships they predict are less consistent with the true age groups.

4.5. Simulation study: predicting performance-group membership from behavior

In the previous analysis, we used cognitive flexibility (Equation 12) to cluster the animals into groups that are, for the most part, consistent with the young and old age groups. In agreement with their learning curves (Figure 4), monkeys o2, o6 and o8 were assigned to the cluster containing all young animals, which suggests that they are high-performing old animals. Such animals are commonly observed in aging studies. Ideally, we would cluster animals by performance, that is, use the binary response data to assign every animal to one of two groups: a low-performing group and a high-performing one.

In an experiment, we cannot assess whether a model such as the Laplace SMuRF model produces clusters consistent with ground-truth performance levels, because the only information available is age. In this section, we therefore design a simulated reversal-learning experiment comprising two groups of monkeys that differ in their intrinsic performance levels rather than in age. We begin by assessing the extent to which cognitive flexibility computed from Model Class 2 can produce clusters consistent with the low- and high-performing groups. We then assess how well the learning state can be used for clustering when all monkeys have the same number of trials. We cannot do this for the experiment from Section 2, because the 90% stopping criterion leads to learning-state vectors of different lengths for different animals. We use sensitivity and positive predictive value (PPV) curves to quantify the performance of each clustering method as we vary the extent to which the groups can be differentiated.

Simulation paradigm for binary response data from a reversal-learning experiment:

We propose a framework for simulating binary response data in a reversal learning experiment. In the first phase of the task, we assume each simulated animal is presented each day with the same 40 object pairs (K = 40) in the same order. The first phase of the task ends after 30 days, followed by a second phase (the reversal phase), which lasts 40 days, for a total of R = 70 days for all simulated animals.

Model of binary response data from simulated experiment:

The simulated data are binary outcomes encoding the occurrence of the event that a simulated animal picks the correct object in a given pair, on a given day. We express the probability of this event as a function of a within-day learning process that reflects the dependence of an animal’s performance on object pairs, and a cross-day learning process that captures the dynamics of proficiency across days.

We consider two groups of animals: a high-performing (H) group and a low-performing (L) group. We assume that all animals, irrespective of their group membership, follow the same within-day learning process. The two groups therefore differ only in the evolution of their cross-day learning process.

Motivated by the preceding analysis (Figure 3), we assume that the evolution of the within-day learning process follows the parabola

| (14) |

The cross-day learning process for the high-performing group follows the piecewise logistic function

| (15) |

The cross-day learning process for the low-performing group follows the piecewise logistic function

| (16) |

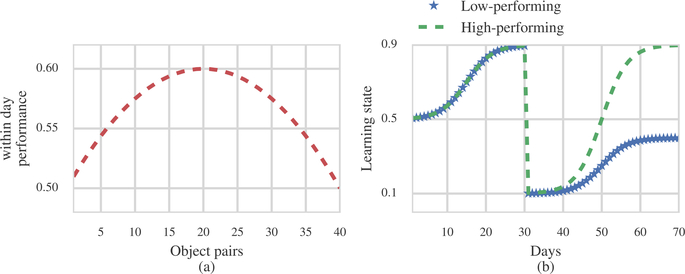

The goal of the simulation is to determine how well we can distinguish the two groups as we vary the similarity of their cross-day learning processes. We therefore consider 9 different values, ranging from 0.1 to 0.9, for the final probability level of the low-performing group. Figure 7 shows our models for the within-day and cross-day performance curves.

Figure 7: Within and cross-day performance curves from simulation.

(a) Within-day performance. (b) Cross-day performance.

Simulating from the model:

We simulate binary response data from the reversal-learning task by generating Bernoulli random variables for each object pair k and day r as follows:

| (17) |

We refer to dk and xr as the “generating curves” of the simulation. Note that xr is given by either Equation 15 or Equation 16, depending on whether we are simulating data from a high-performing or a low-performing animal. In the latter case, we vary the final probability level of the low-performing group as described above.
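The simulation schedule can be sketched as follows. The sketch uses K = 40 object pairs, 30 pre-reversal days and 40 post-reversal days, as in the text. Equations 14–17 are not reproduced in this text, so several elements are hypothetical placeholders: the parabola coefficients, the logistic parameters, the post-reversal restart level and, most importantly, the rule P(correct) = x_r · d_k that combines the two generating curves.

```python
import numpy as np

K = 40                   # object pairs per day (from the text)
R_PRE, R_POST = 30, 40   # days before and after the reversal (from the text)
R = R_PRE + R_POST       # 70 days in total


def within_day_curve(K=K, edge=0.6, peak=0.95):
    """Hypothetical parabola over object-pair index (stand-in for Eq. 14)."""
    k = np.arange(K)
    mid = (K - 1) / 2
    return peak - (peak - edge) * ((k - mid) / mid) ** 2


def piecewise_logistic(final_post, final_pre=0.9, start=0.05, slope=0.3):
    """Hypothetical stand-in for Eqs. 15-16: a logistic rise within each
    phase, restarting from `start` on the first post-reversal day."""
    def phase(n_days, top):
        t = np.arange(n_days)
        return start + (top - start) / (1 + np.exp(-slope * (t - n_days / 4)))
    return np.concatenate([phase(R_PRE, final_pre), phase(R_POST, final_post)])


def simulate_animal(d, x, rng):
    """One (R, K) binary raster under the ASSUMED rule P(correct) = x_r * d_k."""
    p = np.outer(x, d)          # rows: days r, columns: object pairs k
    return rng.binomial(1, p)   # independent Bernoulli draws


rng = np.random.default_rng(0)
d = within_day_curve()
x_high = piecewise_logistic(final_post=0.9)
x_low = piecewise_logistic(final_post=0.5)   # one of the varied final levels
high = [simulate_animal(d, x_high, rng) for _ in range(8)]
low = [simulate_animal(d, x_low, rng) for _ in range(8)]
```

Sweeping `final_post` for the low-performing group over 0.1, …, 0.9 reproduces the structure of the experiment described above. The actual generating curves would replace the placeholder functions.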

Simulation results:

We simulated binary response data from 8 animals in each group. Figure 8 shows the result of applying Model Class 2 to the simulated data. The estimates of within-day performance for the two groups (Figure 8(a)) overlap and are not significantly different. This is consistent with the fact that the generating within-day performance curve is the same for both groups. Figure 8(b) shows that the generating learning-state curves are accurately reconstructed for two example animals, one low-performing and one high-performing.

Figure 8: Results from one example simulation.

(a) The red dashed line shows true performance within days and green and blue lines show the estimated performance within days for simulated young and old animals. (b) The dashed lines indicate true performance across days. 95% confidence bounds are indicated with the lighter shaded lines.

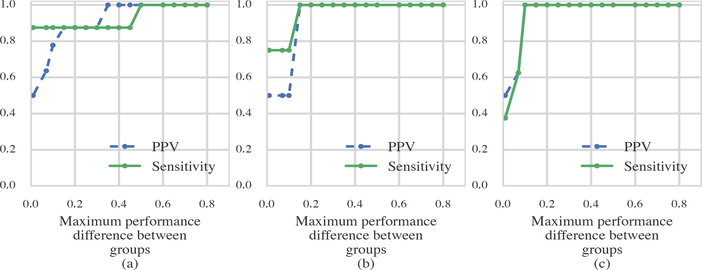

We quantify our ability to cluster the monkeys into two groups using the cognitive flexibility measures from Section 3 (Equations 11, 12, 13) as we vary how close the cross-day learning processes of the two groups are to each other. We use pH−L as a scalar measure of the closeness of the cross-day learning curves of the two groups. For each of the 9 values of pH−L, we performed clustering as in Section 4 and computed the positive predictive value (PPV) and sensitivity (Figure 9). In Figure 9(a), for instance, we see that, as expected, the PPV and sensitivity are 0.5 when there is no group difference (pH−L = 0). We distinguish the groups perfectly when pH−L ≥ 0.5, and performance is already good when pH−L ≥ 0.1. The values in Figure 9 are averages over 10 simulations. We observed very little variation between simulations, so we do not show confidence bounds.
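The PPV and sensitivity of a two-cluster assignment can be computed as sketched below. The sketch treats the high-performing group as the positive class. Cluster ids are arbitrary, so it adopts a hypothetical matching convention: the cluster called “high” is the one whose assignment agrees best with the ground truth. Under this convention, a chance-level split gives values of 0.5, which matches the no-difference case described above.

```python
import numpy as np


def ppv_and_sensitivity(is_high, labels):
    """PPV and sensitivity of a two-cluster assignment, with the
    high-performing group as the positive class.

    Cluster ids are arbitrary, so the cluster called "high" is the one whose
    assignment agrees best with the ground truth (hypothetical convention).
    """
    is_high = np.asarray(is_high, dtype=bool)
    labels = np.asarray(labels)
    ids = np.unique(labels)
    if ids.size != 2:
        raise ValueError("expected exactly two clusters")
    pred = max((labels == c for c in ids),
               key=lambda mask: np.mean(mask == is_high))
    tp = np.sum(pred & is_high)    # high animals placed in the "high" cluster
    fp = np.sum(pred & ~is_high)   # low animals placed in the "high" cluster
    fn = np.sum(~pred & is_high)   # high animals placed in the other cluster
    return tp / (tp + fp), tp / (tp + fn)


truth = np.array([True] * 8 + [False] * 8)             # 8 animals per group
perfect = ppv_and_sensitivity(truth, [2] * 8 + [1] * 8)
chance = ppv_and_sensitivity(truth, [1, 2] * 8)
```

Averaging these two numbers over repeated simulations at each value of pH−L yields curves of the kind shown in Figure 9.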

Figure 9: Positive predictive value and sensitivity results for three clustering methods as a function of overall difference in cross-day performance (pH−L).

(a) Clustering based on cognitive flexibility. (b) Clustering based on raw data. (c) Clustering based on across day performance.

The results in Figure 9(a) suggest that the clustering of the monkeys using Model Class 2 (Section 4, Figure 6) reflects differences in performance between the two groups identified. These two groups do not coincide exactly with the age groups. This underscores the utility of the Laplace SMuRF model, and of our approach, for identifying groups of similar monkeys solely on the basis of performance, irrespective of age.

Figures 9(b) and 9(c) show the PPV and sensitivity curves obtained by clustering with the simple measures described in Section 4 (Figures 6(b) and (c)). They further demonstrate the strength of clustering based on differences in cognitive flexibility (Equation 13) when the group differences are very small. Indeed, this method can identify the two groups even when pH−L ≈ 0.1, whereas the methods based on raw averages across days (Figure 9(b)) or on the model’s estimates of the learning states (Figure 9(c)) cannot. This result is consistent with the fact that averaging across days preserves information about within-day performance, which is the same for both groups, and thus reduces the ability to cluster. In contrast, our model separates the learning state from within-day performance.

5. Discussion

At present, there is no consensus on the best method for analyzing population behavioral data that span trials within a day and across many days. We have proposed a two-dimensional state-space approach for the analysis of data from two groups of animals performing multiple object pairings across days [13]. The model, termed the Laplace SMuRF model, expresses the probability of observing the binary outcomes as a function of two latent processes. The first is a within-day learning process that reflects an animal’s item-dependent performance level during a day. The second is a cross-day learning process that captures the dynamics of proficiency across days.

The standard approach to analyzing behavioral data is to use linear mixed-effects (LME) models. These are preferred over t-tests because, being model based, they are amenable to probabilistic inference, i.e., to computing the posterior probability of events of interest under the model (e.g., the probability of an age difference given the data). Applied to behavioral data, LME models typically require aggregating the data over user-defined epochs, which can obscure group differences as well as detailed changes in the learning dynamics. The Laplace SMuRF model has several advantages over LME models. First, the Laplace SMuRF model is a generative model that obviates the need for aggregating the behavioral data into groups (either over days or within a day [13]). Our analysis demonstrates that this model, which preserves object-pair information (Model Class 1B; i.e., it does not aggregate the behavioral data within a day), describes the experimental data more predictively, as measured by the WAIC [12], than a model that aggregates the behavioral data within a day (Model Class 1A). Second, its superior predictive power suggests that the Laplace SMuRF model is closer to the underlying true model that generated the data, and therefore better suited to inference. In hypothesis testing, the need to correct for multiple hypotheses stems from the absence of a model for the joint distribution of the data. By modeling this joint distribution and demonstrating the predictive power of the model, we put ourselves in a position to perform inferences, with the understanding that “all models are wrong, but some are useful”. Last, the Laplace SMuRF model provides a detailed characterization of the learning dynamics underlying behavioral data, which leads to a more precise characterization of between-group differences. Indeed, we found that, across object pairs, young animals performed better than older animals. This finding was not apparent from previous analyses [13] based on aggregating the data into “beginning”, “middle” and “end” object-pair epochs. It should be emphasized that the model does not assume a priori that age differences are present; these differences are uncovered after fitting the model. When we define cognitive flexibility as the difference between the learning rate of an animal following reversal and that preceding reversal, we find that, as a group, younger monkeys are more cognitively flexible than older ones. The model can also tease out inter-individual differences in cognitive flexibility. This is demonstrated by our ability to cluster the animals post hoc into two groups that are consistent with the animals’ a priori unknown performance levels. We used simulation studies to validate these findings and conclude that the clustering indeed reflects the animals’ performance. This suggests that the Laplace SMuRF model is a powerful tool for classifying animals across the spectrum of performance in old age and provides a basis for evaluating individual differences.
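The WAIC comparison referred to above can be computed from posterior draws using the definitions in Vehtari et al. [12]. The sketch below is a generic implementation. It assumes that each binary response counts as one pointwise observation; the exact pointwise partition used to compare Model Classes 1A and 1B is not specified here. A higher elpd_waic, equivalently a lower WAIC, indicates better expected predictive accuracy.

```python
import numpy as np
from scipy.special import logsumexp


def waic(log_lik):
    """WAIC from an (S, N) array of pointwise log-likelihoods
    log p(y_i | theta_s): S posterior draws, N observations."""
    log_lik = np.asarray(log_lik, dtype=float)
    S = log_lik.shape[0]
    # Log pointwise predictive density: log of the mean likelihood per observation.
    lppd = np.sum(logsumexp(log_lik, axis=0) - np.log(S))
    # Effective number of parameters: sample variance over draws, summed.
    p_waic = np.sum(np.var(log_lik, axis=0, ddof=1))
    elpd = lppd - p_waic
    return {"elpd_waic": elpd, "p_waic": p_waic, "waic": -2.0 * elpd}


# Example: Bernoulli responses y and illustrative posterior draws of p.
rng = np.random.default_rng(3)
y = rng.binomial(1, 0.7, size=50)
p_draws = rng.beta(36, 16, size=(1000, 1))
log_lik = y * np.log(p_draws) + (1 - y) * np.log1p(-p_draws)
result = waic(log_lik)
```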

Our analyses were facilitated by the derivation of efficient algorithms for estimation and inference in the Laplace SMuRF model. Compared with its Gaussian counterpart [9], the Laplace prior explicitly models the abrupt change in performance at the onset of reversal learning. However, inference in the Laplace SMuRF model is computationally more expensive. We derived a Monte Carlo EM algorithm for parameter estimation that uses a Gibbs sampler. We also derived an efficient algorithm for MAP estimation that automatically captures abrupt changes (e.g., those due to reversal learning).

Overall, the Laplace SMuRF model provides a simple, yet powerful, tool for the analysis of binary response data from behavioral experiments.

Highlights.

Behavioral experiments enable the quantification of changes in the brain due to aging.

We propose a method to analyze binary data from multi-object, multi-day experiments.

We apply it to data from young and aged monkeys in an object-reward association task.

Unlike existing methods, it captures differences in group and individual performance.

The method can discriminate the age groups with high accuracy solely using behavior.

Acknowledgments

Demba Ba thanks the Alfred P. Sloan Foundation for its generous support. C A Barnes, D T Gray, S N Burke, and A C Smith thank the NIH (Grant R01 AG050548) and the McKnight Brain Research Foundation.

Appendix: Supporting derivations for the Methods section

Complete-data likelihood from augmented Laplace SMuRF model

We derive the expression for , the log of the complete-data likelihood. The following derivations are parallel to those in the Appendix of [9]. We sketch only the main steps here.

By definition of the augmented SMuRF model, the complete-data likelihood is

| (18) |

Therefore,

| (19) |

where log and

Without loss of generality, we assume that d0 = 0, and .

Derivation of full conditionals of learning states

From Equation 19, we see that

| (20) |

Therefore, conditioned on , are independent. For sampling, we can restrict ourselves to a single m. By completing the square, we can simplify as follows

| (21) |

It follows that sampling from is equivalent to sampling from the posterior of the state in the following linear-Gaussian state-space model

Derivation of full conditionals of group performance

From Equation 19, we see that

| (22) |

Therefore, sampling from is equivalent to sampling from the posterior of the state in the following linear-Gaussian state-space model

This form leads to an efficient forward-filtering backward-sampling algorithm (see [9]).
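A forward-filtering backward-sampling (FFBS) pass for the simplest model of this type is sketched below. The specific state-space models obtained above are not reproduced in this text, so the sketch assumes a scalar Gaussian random walk observed through Gaussian pseudo-observations with known, possibly time-varying, variances r_t. In Pólya–Gamma schemes [23], conditioning on the latent ω_t yields pseudo-observations of this kind, namely κ_t/ω_t with variance 1/ω_t. The function name and argument names are illustrative.

```python
import numpy as np


def ffbs(y, r, q, m0=0.0, P0=1.0, rng=None):
    """Draw one sample of x_{1:T} from p(x_{1:T} | y_{1:T}) for
        x_t = x_{t-1} + w_t,  w_t ~ N(0, q)
        y_t = x_t + v_t,      v_t ~ N(0, r_t)
    with x_0 ~ N(m0, P0)."""
    rng = np.random.default_rng() if rng is None else rng
    y = np.asarray(y, dtype=float)
    r = np.broadcast_to(np.asarray(r, dtype=float), y.shape)
    T = y.size
    m, P = np.empty(T), np.empty(T)
    m_prev, P_prev = m0, P0
    # Forward pass: Kalman filter, storing filtered means and variances.
    for t in range(T):
        P_pred = P_prev + q                  # random walk: predicted mean is m_prev
        gain = P_pred / (P_pred + r[t])
        m[t] = m_prev + gain * (y[t] - m_prev)
        P[t] = (1.0 - gain) * P_pred
        m_prev, P_prev = m[t], P[t]
    # Backward pass: sample x_T, then x_t | x_{t+1}, y_{1:t} for t = T-1, ..., 1.
    x = np.empty(T)
    x[-1] = rng.normal(m[-1], np.sqrt(P[-1]))
    for t in range(T - 2, -1, -1):
        J = P[t] / (P[t] + q)
        x[t] = rng.normal(m[t] + J * (x[t + 1] - m[t]), np.sqrt((1.0 - J) * P[t]))
    return x
```

Both passes are linear in T, which makes this step cheap relative to the rest of each Gibbs sweep.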

M-step of EM for augmented SMuRF model

In the M-step, we obtain new values and by maximizing . We first derive the update equation for . From Equation 19, taking terms in λ, and writing ,

| (23) |

Taking the derivative of the objective with respect to α and setting equal to zero yields

| (24) |

where we approximate the expectations in the denominator from the Monte-Carlo samples computed in the E-step.

Similarly,

| (25) |

which yields

| (26) |

Derivation of algorithm for computing MAP estimates of d and x

The Monte-Carlo EM iterative algorithm for maximizing the likelihood of the SMuRF model results in estimates of the model parameters and λ. At convergence of the EM algorithm, samples from the joint posterior are also available. From these samples, we can approximate the posterior means of x and d. The use of the Laplace distribution in the SMuRF model allows us to automatically capture abrupt changes, due to reversal learning, in the learning states of the animals. This, however, is a property of the mode of the posterior distribution and not necessarily its mean. Indeed, due to the potentially complex nature of the posterior distribution, its mean and its mode may not coincide. Computing the mode of the posterior distribution is very challenging, which motivates the iterative algorithm we derive below. In what follows, we denote the posterior by , leaving implicit its dependence on and λ.
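A scalar toy problem shows why the mode rather than the mean exhibits abrupt behavior under a Laplace prior. This is an illustrative analogue and not the SMuRF posterior itself. Take y ~ N(θ, σ²) and a Laplace(0, b) prior on θ. The MAP estimate is then the soft-thresholded observation, which is exactly zero whenever |y| ≤ σ²/b, whereas the posterior mean is never exactly zero for y ≠ 0. The grid-based integration is an assumed numerical shortcut.

```python
import numpy as np


def map_estimate(y, sigma2, b):
    """Mode of p(theta | y) with y ~ N(theta, sigma2), theta ~ Laplace(0, b):
    soft-thresholding at sigma2 / b, so a small |y| gives exactly 0."""
    return float(np.sign(y) * max(abs(y) - sigma2 / b, 0.0))


def posterior_mean(y, sigma2, b, grid=np.linspace(-20.0, 20.0, 200001)):
    """Posterior mean of the same model, by numerical integration on a grid."""
    log_post = -(y - grid) ** 2 / (2 * sigma2) - np.abs(grid) / b
    w = np.exp(log_post - log_post.max())   # unnormalized, numerically stable
    return float(np.sum(grid * w) / np.sum(w))


mode = map_estimate(0.5, 1.0, 1.0)       # exactly 0.0: no "change" in the mode
mean = posterior_mean(0.5, 1.0, 1.0)     # about 0.24: the mean is shrunk, not zeroed
```

By analogy, a posterior mean computed from Gibbs samples tends to smear a jump across neighboring days, while the mode can represent it as a sharp change.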

Finding the maximum of the posterior distribution corresponds to solving the following optimization problem:

| (27) |

Invoking Equation 19, one can see that

| (28) |

with

It is possible to show that

is a minorizer [27] of the objective function in Equation 27 at the current candidate solution. That is, if (x(n), d(n)) is a candidate solution for the maximizer of Equation 27, we can generate a new candidate solution (x(n+1), d(n+1)) by solving

| (29) |

After some algebra,

| (30) |

We first define

| (31) |

| (32) |

and

| (33) |

We can maximize this function by alternating maximization. First, given (x(n), d(n)), we fix d(n) and find x(n+1) by maximizing:

| (34) |

Using an argument similar to the derivation of the full conditional of the learning states in the Gibbs sampler, we can solve this problem in parallel (across animals) using a Kalman smoother for each animal.
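For a linear-Gaussian state-space model the posterior is Gaussian, so its mode coincides with its mean. A Kalman (Rauch-Tung-Striebel) smoother therefore returns the maximizer. The sketch below assumes the same scalar random-walk model with Gaussian pseudo-observations y_t of variance r_t. The actual per-animal objective in Equation 34 is not reproduced here, and the function name is illustrative.

```python
import numpy as np


def rts_smoother(y, r, q, m0=0.0, P0=1.0):
    """Posterior means and variances of x_{1:T} for the scalar model
    x_t = x_{t-1} + w_t, w_t ~ N(0, q);  y_t = x_t + v_t, v_t ~ N(0, r_t);
    x_0 ~ N(m0, P0). For this linear-Gaussian model the posterior mean is
    also the posterior mode (the MAP estimate)."""
    y = np.asarray(y, dtype=float)
    r = np.broadcast_to(np.asarray(r, dtype=float), y.shape)
    T = y.size
    m, P, P_pred = np.empty(T), np.empty(T), np.empty(T)
    m_prev, P_prev = m0, P0
    for t in range(T):                       # forward Kalman filter
        P_pred[t] = P_prev + q               # random walk: predicted mean is m_prev
        gain = P_pred[t] / (P_pred[t] + r[t])
        m[t] = m_prev + gain * (y[t] - m_prev)
        P[t] = (1.0 - gain) * P_pred[t]
        m_prev, P_prev = m[t], P[t]
    xs, Ps = m.copy(), P.copy()
    for t in range(T - 2, -1, -1):           # backward Rauch-Tung-Striebel pass
        J = P[t] / P_pred[t + 1]
        xs[t] = m[t] + J * (xs[t + 1] - m[t])
        Ps[t] = P[t] + J ** 2 * (Ps[t + 1] - P_pred[t + 1])
    return xs, Ps
```

Each animal’s subproblem is independent given d(n), so one such smoother per animal can run in parallel, as described above.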

Lastly, given x(n+1), we solve for d(n+1) by computing

| (35) |

Using an argument similar to the derivation of the full conditional of group performance d in the Gibbs sampler, we can solve this problem using a Kalman smoother.

In this manner, we can generate a sequence that is provably convergent to the maximizer of the posterior (Equation 27). We omit the proof here (see [27]).
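The monotonicity behind this argument is the standard minorize-maximize ascent property [27]. It is stated below in assumed notation: θ = (x, d), f is the objective of Equation 27, and g(· | θ^(n)) is the surrogate of Equation 29. The surrogate lies below the objective and touches it at the current iterate. The middle inequality only requires that the update does not decrease the surrogate. It therefore still holds when Equations 34 and 35 are solved by a single pass of alternating maximization rather than by a full joint maximization.

```latex
% Assumed notation: \theta = (x, d); f = objective of Eq. (27);
% g(\cdot \mid \theta^{(n)}) = surrogate of Eq. (29).
\begin{align*}
  &g(\theta \mid \theta^{(n)}) \le f(\theta) \quad \forall \theta,
   \qquad g(\theta^{(n)} \mid \theta^{(n)}) = f(\theta^{(n)}), \\
  &f(\theta^{(n+1)}) \;\ge\; g(\theta^{(n+1)} \mid \theta^{(n)})
   \;\ge\; g(\theta^{(n)} \mid \theta^{(n)}) \;=\; f(\theta^{(n)}).
\end{align*}
```

The objective values therefore never decrease from one iterate to the next. The conditions under which the iterates themselves converge are discussed in [27].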

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- [1] Siegel S, Castellan NJ, Nonparametric statistics for the behavioral sciences, McGraw-Hill, New York, 1988.

- [2] Kim S, Frank L, Hippocampal lesions impair rapid learning of a continuous spatial alternation task, PLoS ONE 4 (5) (2009) e5494. doi: 10.1371/journal.pone.0005494.

- [3] Stefani M, Groth K, Moghaddam B, Glutamate receptors in the rat medial prefrontal cortex regulate set-shifting ability, Behavioral Neuroscience 117 (4) (2003) 728–737. doi: 10.1037/0735-7044.117.4.728.

- [4] Wise S, Murray E, Role of the hippocampal system in conditional motor learning: mapping antecedents to action, Hippocampus 9 (2) (1999) 101–117.

- [5] Smith A, Frank L, Wirth S, Yanike M, Hu D, Kubota Y, Graybiel A, Suzuki W, Brown E, Dynamic analysis of learning in behavioral experiments, Journal of Neuroscience 24 (2004) 447–461.

- [6] Smith A, Stefani M, Moghaddam B, Brown E, Analysis and design of behavioral experiments to characterize population learning, Journal of Neurophysiology 93 (2005) 1776–1792.

- [7] Smith A, Wirth S, Suzuki W, Brown E, Bayesian analysis of interleaved learning and response bias in behavioral experiments, Journal of Neurophysiology 97 (2007) 2516–2524.

- [8] Eldar E, Morris G, Niv Y, The effects of motivation on response rate: A hidden semi-Markov model analysis of behavioral dynamics, Journal of Neuroscience Methods 201 (1) (2011) 251–261. doi: 10.1016/j.jneumeth.2011.06.028.

- [9] Zhang Y, Malem-Shinitski N, Allsop SA, Tye KM, Ba D, Estimating a separably Markov random field from binary observations, Neural Computation 30 (4) (2018) 1046–1079. doi: 10.1162/neco_a_01059.

- [10] Czanner G, Eden U, Wirth S, Yanike M, Suzuki W, Brown E, An analysis of between-trial and within-trial neural spiking dynamics, J. Neurophysiol. 99 (5) (2008) 2672–2693.

- [11] Rad KR, Paninski L, Efficient, adaptive estimation of two-dimensional firing rate surfaces via Gaussian process methods, Network: Computation in Neural Systems 21 (3–4) (2010) 142–168.

- [12] Vehtari A, Gelman A, Gabry J, Practical Bayesian model evaluation using leave-one-out cross-validation and WAIC, Statistics and Computing 27 (5) (2017) 1413–1432.

- [13] Gray D, Smith A, Burke S, Gazzaley A, Barnes C, Attentional updating and monitoring and affective shifting are impacted independently by aging in macaque monkeys, Behavioural Brain Research (2016). doi: 10.1016/j.bbr.2016.06.056. URL http://www.sciencedirect.com/science/article/pii/S016643281630420X

- [14] Burke SN, Thome A, Plange K, Engle JR, Trouard TP, Gothard KM, Barnes CA, Orbitofrontal cortex volume in area 11/13 predicts reward devaluation, but not reversal learning performance, in young and aged monkeys, Journal of Neuroscience 34 (30) (2014) 9905–9916. doi: 10.1523/JNEUROSCI.3918-13.2014. URL http://www.jneurosci.org/content/34/30/9905

- [15] Shamy JL, Habeck C, Hof PR, Amaral DG, Fong SG, Buonocore MH, Stern Y, Barnes CA, Rapp PR, Volumetric correlates of spatiotemporal working and recognition memory impairment in aged rhesus monkeys, Cerebral Cortex 21 (7) (2010) 1559–1573.

- [16] Burke SN, Thome A, Plange K, Engle JR, Trouard TP, Gothard KM, Barnes CA, Orbitofrontal cortex volume in area 11/13 predicts reward devaluation, but not reversal learning performance, in young and aged monkeys, Journal of Neuroscience 34 (30) (2014) 9905–9916.

- [17] Rapp PR, Amaral DG, Evidence for task-dependent memory dysfunction in the aged monkey, Journal of Neuroscience 9 (10) (1989) 3568–3576.

- [18] Akaike H, Information theory and an extension of the maximum likelihood principle, in: Selected Papers of Hirotugu Akaike, Springer, 1998, pp. 199–213.

- [19] Stone M, An asymptotic equivalence of choice of model by cross-validation and Akaike’s criterion, Journal of the Royal Statistical Society. Series B (Methodological) 39 (1) (1977) 44–47.

- [20] Eltoft T, Kim T, Lee T, On the multivariate Laplace distribution, IEEE Signal Processing Letters 13 (5) (2006) 300–303.

- [21] Dempster A, Laird N, Rubin D, Maximum likelihood from incomplete data via the EM algorithm, Journal of the Royal Statistical Society. Series B (Methodological) (1977) 1–38.

- [22] Casella G, George E, Explaining the Gibbs sampler, The American Statistician 46 (3) (1992) 167–174.

- [23] Polson N, Scott J, Windle J, Bayesian inference for logistic models using Pólya–Gamma latent variables, Journal of the American Statistical Association 108 (504) (2013) 1339–1349.

- [24] Müllner D, Modern hierarchical, agglomerative clustering algorithms, arXiv preprint arXiv:1109.2378.

- [25] Roberts JM, Ly M, Murray E, Yassa MA, Temporal discrimination deficits as a function of lag interference in older adults, Hippocampus 24 (10) (2014) 1189–1196. doi: 10.1002/hipo.22303.

- [26] Spiegel AM, Koh MT, Vogt NM, Rapp PR, Gallagher M, Hilar interneuron vulnerability distinguishes aged rats with memory impairment, Journal of Comparative Neurology 521 (15) (2013) 3508–3523.

- [27] Hunter DR, Lange K, A tutorial on MM algorithms, The American Statistician 58 (1) (2004) 30–37.