Abstract

Background:

Electronic Health Records (EHRs) provide clinically rich data for research and quality improvement work. However, the data are often unstructured text and may be inconsistently recorded and extracted into centralized databases, making them difficult to use for research.

Objectives:

We sought to quantify the variation in how key labs are recorded in the Department of Veterans Affairs (VA) Corporate Data Warehouse (CDW) across hospitals and over time. We included six labs commonly drawn within the first 24 hours of hospital admission (albumin, bilirubin, creatinine, hemoglobin, sodium, white blood cell count (WBC)) from fiscal years 2005 – 2015.

Results:

We assessed laboratory test capture for 5,454,411 acute hospital admissions at 121 sites across the VA. The mapping of standardized laboratory nomenclature (Logical Observation Identifiers Names and Codes, LOINCs) to test results in CDW varied within hospital by laboratory test. The relationship between LOINCs and laboratory test names improved over time; by FY2015, 109 (95.6%) hospitals had more than 90% of the six labs mapped to an appropriate LOINC. All fields used to classify test results are provided in an appendix.

Conclusions:

The use of EHR data for research requires assessing data consistency and quality. Using laboratory test results requires the use of both unstructured text fields and the identification of appropriate LOINCs. When using data from multiple facilities, the results should be carefully examined by facility and over time to maximize capture of data fields.

Introduction/Background

Electronic Health Record (EHR) systems provide vast amounts of clinically rich data that can, in principle, be used in research and evaluation activities to improve patient care and outcomes.1 However, these data are typically organized to meet the diverse exigencies of ongoing clinical work and are not easy to use for research and evaluation purposes.2,3,4 EHR data are often recorded in the form of unstructured text fields, which require programming and clinical expertise to clean and classify the data prior to analysis.5 Failing to capture results may threaten 1) reliable performance measurement that requires all relevant values for risk adjustment; 2) clinical decision support based on laboratory values; and 3) the “big data” agenda that seeks to use laboratory values to discover new patterns of pathophysiology.

The Department of Veterans Affairs (VA) includes diverse nationwide medical centers that have been using a unified EHR since the 1990s. VA EHR data are extracted nightly and uploaded into the VA’s Corporate Data Warehouse (CDW), where they are organized into relational data tables and can be accessed for research and quality improvement. The extraction process from the EHR to the CDW involves only rudimentary data checks centered on data quantity (not data quality), such as algorithms to confirm that the number of rows extracted is within one standard deviation of previous extractions and that missing fields do not exceed a pre-specified threshold.6 Lab results appear in the CDW as test names and may also be mapped to Logical Observation Identifiers Names and Codes (LOINCs).7 LOINCs were developed and are maintained by the Regenstrief Institute and provide a common coding scheme to identify lab tests. The LOINC database is updated bi-annually.8

Identifying all relevant test results is critical for any project using EHR data, whether identifying clinical specimens or conducting system-level risk-adjustment.9 However, few systematic analyses have been published on the identification or validity of test results extracted from EHR data.10 Instead, such work is usually assumed to have been done correctly “behind the scenes” on large data sets with the same felicity as if values were being hand-extracted by trained research coordinators. Yet there have been several calls for increased transparency regarding data cleaning and methods to assess EHR data quality,11 as well as greater reporting and sharing of methods for selecting clinical codes when using EHR data for research.12,13 In this work, we sought to describe and quantify the variation in how six key laboratory measures are recorded across hospitals and over time to assess the degree to which reliable and transparent data could be validly assumed in studies using large EHR data sets.

Methods

Cohort and Data Sources

Our cohort included all VA acute care hospitalizations during FY2005 – 2015 (October 1, 2004 – September 30, 2015). We used the VA CDW inpatient domain to identify acute care hospitalizations based on treating specialty.14 A complete list of specialties that were classified as acute is available in Appendix Table 1. We used the CDW Laboratory Chemistry domain to identify laboratory measures. We included the first laboratory blood draw within 24 hours of hospital admission for albumin, bilirubin, creatinine, hemoglobin, sodium, and white blood cell count (WBC). These represent commonly used tests relevant to both clinical work and risk adjustment.
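The "first draw within 24 hours of admission" rule can be illustrated with a minimal Python sketch. It is not the study's code: the function name, the tuple layout, and the choice to count only draws at or after the admission timestamp are assumptions made for illustration.

```python
from datetime import datetime, timedelta

def first_lab_within_24h(admit_time, labs):
    """Return the earliest (collection_time, value) drawn within 24 hours
    of admission, or None if no draw falls in that window.

    Assumption: the window starts at the admission timestamp, so draws
    taken before admission are not counted.
    """
    window_end = admit_time + timedelta(hours=24)
    in_window = [lab for lab in labs if admit_time <= lab[0] <= window_end]
    return min(in_window, key=lambda lab: lab[0]) if in_window else None

# Hypothetical creatinine draws for one hospitalization.
admit = datetime(2010, 3, 1, 8, 0)
creatinine = [
    (datetime(2010, 3, 1, 14, 30), 1.4),
    (datetime(2010, 3, 1, 9, 15), 1.1),
    (datetime(2010, 3, 2, 10, 0), 1.3),  # more than 24 hours after admission
]
first = first_lab_within_24h(admit, creatinine)
```

Here `first` is the 09:15 draw. A hospitalization with no draw in the window returns `None` and counts as not having that lab captured.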

Identifying Laboratory Tests

Laboratory test names appear as unstructured text with associated fields for specimen type and units of measurement and may be linked with a standardized LOINC.7 However, reports suggest that some laboratory tests are not linked with a LOINC or may be linked with an incorrect LOINC.15 The VA began using LOINC mapping in 2005. Missing LOINCs may result when the mapping is not done at the time of the laboratory test verification, when a site has not mapped a newly created test to a LOINC, or when the LOINC is not available in the EHR. The laboratory test names may also vary over time. For instance, when a facility changes to a new manufacturer or different equipment, the test name may change.16 Thus, relying only on LOINCs or only on test names to select laboratory results may not capture all relevant labs.

We identified laboratory tests of interest using both LOINCs and test names. The LOINCs associated with the labs of interest were identified in two ways: 1) searching the Regenstrief Institute’s searchable website and 2) identifying all LOINCs linked to labs with a test name corresponding to a lab of interest. We identified test names of interest by reviewing the list of unique test names associated with hospitalizations. Test names that appeared to represent the lab of interest were flagged for inclusion. We excluded records where the LOINC and test names did not match, unless one was missing, in which case we kept the record. We included results where the specimen type was blood, plasma, or serum. A complete list of the included specimen types is available in Appendix Table 2.
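The inclusion rule described above can be sketched in Python as follows. This is one possible reading of the rule, not the authors' implementation: the function name, the field layout, and the lookup sets are hypothetical, and the study's actual LOINC and test-name lists are those in Appendix Table 3.

```python
# Illustrative lookup sets only; the study's actual lists are in Appendix Table 3.
SODIUM_LOINCS = {"2951-2"}       # Sodium [Moles/volume] in Serum or Plasma
SODIUM_NAMES = {"SODIUM", "NA"}  # hypothetical local test names
BLOOD_SPECIMENS = {"BLOOD", "PLASMA", "SERUM"}

def keep_record(loinc, test_name, specimen, target_loincs, target_names):
    """Keep a record if the specimen is blood, plasma, or serum and its
    identifiers point to the lab of interest.

    A missing identifier (None or empty) is tolerated as long as the other
    one matches; a present identifier that does not match excludes the record.
    """
    if not specimen or specimen.strip().upper() not in BLOOD_SPECIMENS:
        return False
    loinc_ok = None if not loinc else loinc in target_loincs
    name_ok = None if not test_name else test_name.strip().upper() in target_names
    if loinc_ok is None and name_ok is None:
        return False  # nothing identifies the test
    return loinc_ok is not False and name_ok is not False

# Matching LOINC and name: kept.
both = keep_record("2951-2", "Sodium", "SERUM", SODIUM_LOINCS, SODIUM_NAMES)
# Missing LOINC but matching name: kept.
name_only = keep_record(None, "SODIUM", "plasma", SODIUM_LOINCS, SODIUM_NAMES)
# LOINC and name disagree: excluded.
mismatch = keep_record("2951-2", "POTASSIUM", "SERUM", SODIUM_LOINCS, SODIUM_NAMES)
```

Treating a missing identifier differently from a mismatched one is what lets the rule recover labs that were never mapped to a LOINC while still rejecting records whose LOINC and test name disagree.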

We extracted all laboratory results associated with our list of LOINCs and test names. To validate, we examined descriptive statistics and distributions by LOINC, test name, and unit of measurement. We further excluded test names where the laboratory results did not fit with the expected distribution for that lab. Our final list of LOINC codes and test names is included in Appendix Table 3.
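A validation pass of this kind might look like the sketch below, which summarizes results by LOINC, test name, and unit and flags groups whose median falls outside a reviewer-supplied range. The record layout, the function names, and the 100 to 180 mmol/L range are assumptions for illustration, not values or criteria reported in this study.

```python
from collections import defaultdict
from statistics import median

def summarize_by_group(results):
    """Group numeric results by (loinc, test_name, unit) and summarize each group."""
    groups = defaultdict(list)
    for r in results:
        groups[(r["loinc"], r["test_name"], r["unit"])].append(r["value"])
    return {
        key: {"n": len(vals), "min": min(vals), "median": median(vals), "max": max(vals)}
        for key, vals in groups.items()
    }

def flag_implausible(summary, low, high):
    """Return the group keys whose median lies outside [low, high]."""
    return [key for key, s in summary.items() if not (low <= s["median"] <= high)]

# Hypothetical sodium results: one well-behaved group and one suspicious test name.
results = [
    {"loinc": "2951-2", "test_name": "SODIUM", "unit": "mmol/L", "value": v}
    for v in (138, 141, 135)
] + [
    {"loinc": None, "test_name": "SODIUM (OLD)", "unit": "mmol/L", "value": v}
    for v in (40, 55, 62)
]
summary = summarize_by_group(results)
# The 100-180 range is an illustrative placeholder, not a study criterion.
flagged = flag_implausible(summary, low=100, high=180)
```

Flagged groups are candidates for manual review rather than automatic exclusion; a test name whose distribution looks wrong may reflect a different analyte, specimen, or unit.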

The study was approved by the IRB of the VA Ann Arbor Healthcare System with a waiver of informed consent.

Statistical Analysis

We examined the proportion of acute hospitalizations with each lab in the first 24 hours of admission and the proportion of tests associated with the correct LOINC. Variation between sites and over time and correlations between hospital-level LOINC use for the different labs were assessed. We identified the proportion of sites with less than 90% of tests with a LOINC over time. An a priori threshold of 90% was used to classify sites as problematic based on the importance of missing more than 10% of labs when using retrospective data for research or evaluation. Results are presented as means and 95% confidence intervals or medians and interquartile ranges.
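The site-level summary and the 90% threshold can be sketched as follows. This is a minimal illustration under assumed inputs: each record is a hypothetical `(site, fiscal_year, has_loinc)` tuple for one lab type, and the function names are not from the study.

```python
from collections import defaultdict

def loinc_share_by_site_year(records):
    """Map (site, fiscal_year) to the proportion of tests carrying a correct LOINC."""
    counts = defaultdict(lambda: [0, 0])  # [tests with LOINC, all tests]
    for site, year, has_loinc in records:
        counts[(site, year)][0] += int(has_loinc)
        counts[(site, year)][1] += 1
    return {key: with_loinc / total for key, (with_loinc, total) in counts.items()}

def share_of_sites_below(shares, year, threshold=0.90):
    """Proportion of sites in `year` whose LOINC share is below `threshold`."""
    site_shares = [p for (_, y), p in shares.items() if y == year]
    if not site_shares:
        raise ValueError(f"no sites with data in {year}")
    return sum(p < threshold for p in site_shares) / len(site_shares)

# Hypothetical data: site A maps 19 of 20 tests, site B maps 8 of 10.
records = (
    [("A", 2005, True)] * 19 + [("A", 2005, False)]
    + [("B", 2005, True)] * 8 + [("B", 2005, False)] * 2
)
shares = loinc_share_by_site_year(records)
below = share_of_sites_below(shares, 2005)
```

In this toy example site A has a share of 0.95 and site B a share of 0.80, so half of the sites fall below the threshold in 2005.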

Results

Between FY 2005 and 2015, there were 5,454,411 acute hospital admissions at 121 sites across the VA. We excluded sites with fewer than 10 acute hospitalizations annually. The median number of hospitalizations each fiscal year was 495,809 (interquartile range (IQR): 481,674–512,546). The median number of admissions at a single site was 4,057 (IQR: 1,780–5,913) per year.

There were between 4 and 8 LOINCs and between 61 and 114 test names identified for each of the six laboratory tests; see Appendix Table 3. Of the 5,454,411 hospitalizations, 76.5% had an albumin in the first 24 hours of admission, 75.7% had a bilirubin, 94.6% had a creatinine, 95.2% had a hemoglobin, 94.5% had a sodium, and 94.5% had a WBC. Table 1 displays these proportions, as well as the proportion of tests that could be identified using LOINCs or test names. Overall, more than 90% of laboratory tests (range: 90.1%−94.6%) had an associated LOINC, while a slightly higher percent (range: 92.8%−97.0%) of laboratory tests could be identified using test name.

Table 1.

Overall lab frequencies

| Lab | Hospitalizations with lab collected within 24 hours, N (%) | Labs with correct LOINC code, N (%) | Labs with correct Test Names, N (%) |
|---|---|---|---|
| Albumin | 4,174,664 (76.5%) | 3,933,610 (94.2%) | 3,974,919 (95.2%) |
| Bilirubin | 4,128,887 (75.7%) | 3,825,679 (92.7%) | 3,927,150 (95.1%) |
| Creatinine | 5,158,971 (94.6%) | 4,646,143 (90.1%) | 4,788,384 (92.8%) |
| Hemoglobin | 5,192,475 (95.2%) | 4,748,276 (91.4%) | 4,923,084 (94.8%) |
| Sodium | 5,155,181 (94.5%) | 4,850,240 (94.1%) | 4,996,554 (96.9%) |
| White Blood Cell | 5,151,851 (94.5%) | 4,875,813 (94.6%) | 4,998,665 (97.0%) |

Note: The percent of hospitalizations with the lab collected within the first 24 hours are based on N=5,454,411 VA hospitalizations from 2005 – 2015. The percent of labs with correct LOINC and Test Names are based on data presented in the first column; i.e., the number of hospitalizations with the lab collected.

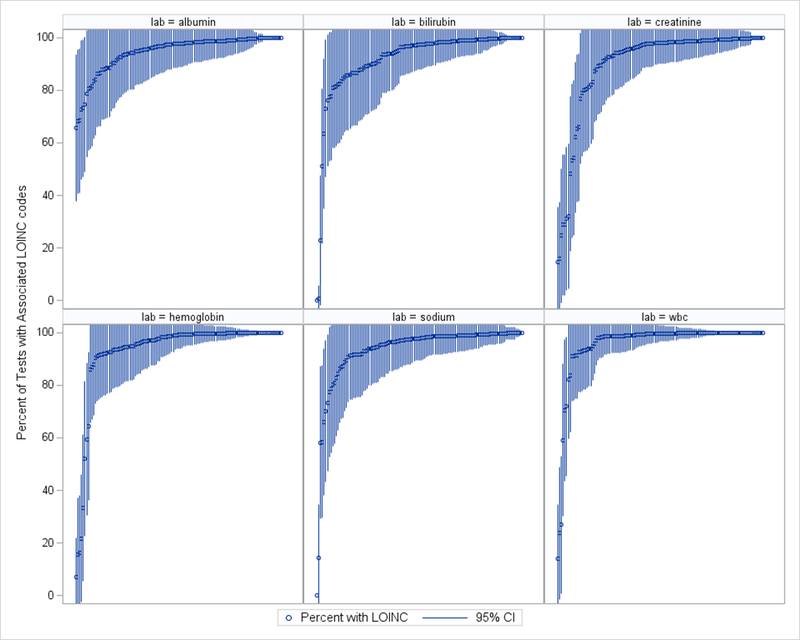

There was wide variation in LOINC use across sites for each lab. Across all eligible laboratory tests, 92.8% had a LOINC during our period of interest. Figure 1 displays the proportion of laboratory tests identified using LOINCs across site for each test. Ninety-four sites (77.7%) had 90% or higher use of the LOINCs for all six laboratory tests during this period.

Figure 1.

Distribution of the percent of tests with an associated LOINC for N=5,454,411 acute hospitalizations among VA hospitals from 2005 – 2015. Each line represents a VA hospital; the circle represents the average proportion across years of laboratory tests associated with a correct LOINC for that test at that site, and the lines represent the 95% confidence interval.

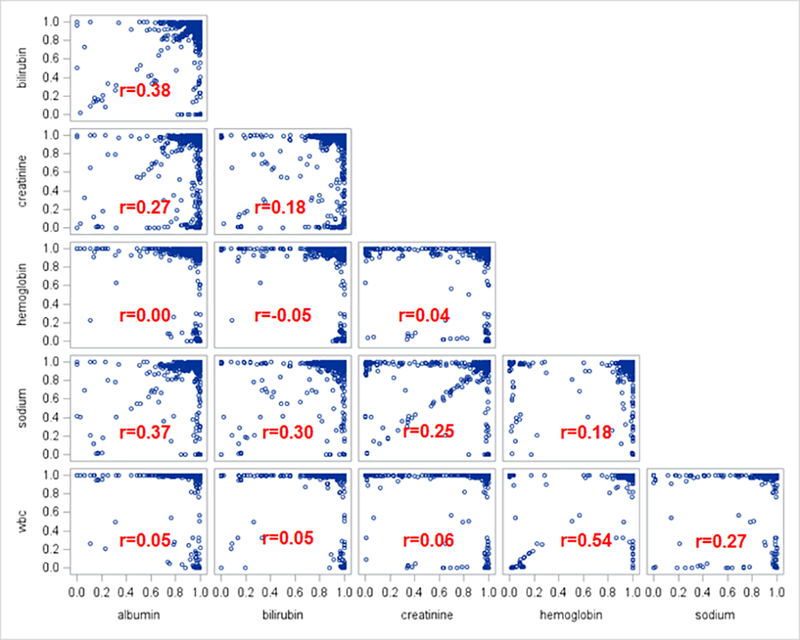

The use of LOINCs at a given site was only weakly to moderately correlated across the six laboratory tests (range of Pearson correlations: r=−0.05 to 0.54), suggesting that sites have mapped LOINCs to some labs and not others. Figure 2 displays the correlation in the proportion of tests with an associated LOINC between each laboratory test, where each point represents the proportion of laboratory tests with an associated LOINC at a given site. The strongest correlation for the use of LOINCs was between hemoglobin and WBC (r=0.54); the weakest was between hemoglobin and albumin (r=0.00).
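For readers who want to reproduce this kind of comparison, a Pearson correlation between site-level LOINC proportions for two labs can be computed as in the sketch below. The site values are hypothetical and the function is a plain textbook implementation, not the code used in this study.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length of at least 2")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when one sequence is constant")
    return sxy / sqrt(sxx * syy)

# Hypothetical proportions of tests with a LOINC at five sites (one entry per site).
hemoglobin_share = [0.95, 0.80, 0.99, 0.60, 0.92]
wbc_share = [0.97, 0.85, 0.98, 0.70, 0.90]
r = pearson_r(hemoglobin_share, wbc_share)
```

Each pair of values corresponds to one point in a panel of Figure 2; repeating the calculation for every pair of labs gives the full set of pairwise correlations.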

Figure 2.

Correlation in the use of LOINC by site between laboratory tests for N=5,454,411 acute hospitalizations among VA hospitals from 2005 – 2015. Each blue circle represents a VA hospital’s proportion of labs with a LOINC mapping for the corresponding two labs. For instance, the relationship between hemoglobin and WBC suggests that across VA hospitals, a higher proportion of hemoglobin tests mapped to a LOINC is moderately associated with a higher proportion of WBC tests mapped to a LOINC (r=0.54).

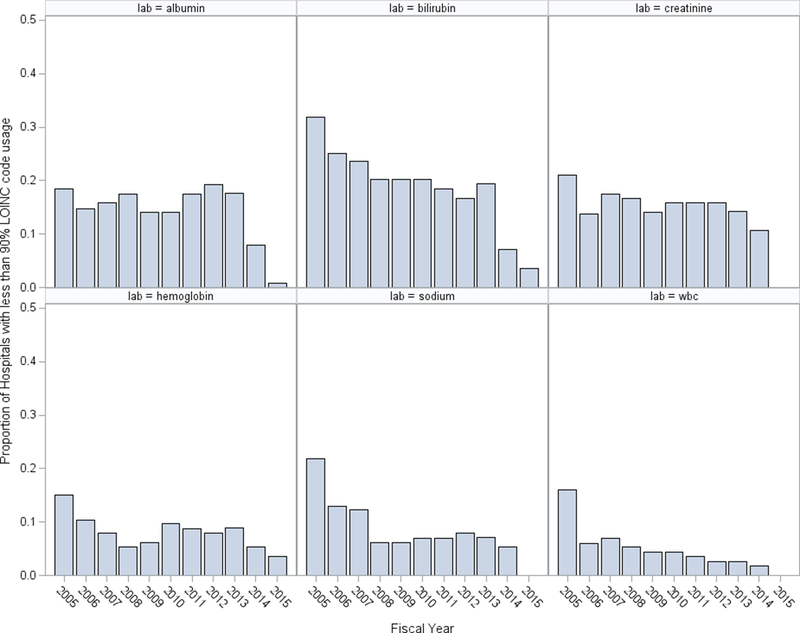

There was some improvement in the overall use of LOINCs over time. Although the overall mean use of LOINCs remained consistently high over time, individual sites used LOINCs inadequately. Figure 3 displays the proportion of hospitals with less than 90% of tests captured using LOINCs each year. WBC and hemoglobin were captured most consistently, with fewer than 10% of hospitals falling below this 90% threshold after 2006. Conversely, for bilirubin, more than 20% of hospitals fell below the threshold from 2005 to 2013.

Figure 3.

Proportion of hospitals with less than 90% of tests with an associated LOINC by year for N=5,454,411 acute hospitalizations among VA hospitals from 2005 – 2015.

Discussion

Our results show that LOINCs are not consistently mapped to laboratory tests at VA hospitals. In addition, the use of LOINCs varied within site by laboratory test. Although a hospital may have LOINCs mapped for most of one laboratory test, other tests at that hospital may not be consistently mapped. Finally, we observed improvement in LOINC mapping over time, with more than 90% of laboratory results mapped to a LOINC for most hospitals by FY2015. This is consistent with recent findings from the VA’s data quality review on labs, in which data inconsistencies were partially addressed by a patch to the VA’s EHR in March/April 2014.15 Lab entries after this date should be more reliably mapped to appropriate LOINCs. However, these updates typically affect only active test results and results prior to this time will have more inconsistencies. Researchers seeking to use retrospective data will need to utilize both test names and LOINCs to identify laboratory results.

In the era of big data, many researchers are taking advantage of the vast amounts of data available and conducting analysis on large cohorts of patients using data from several years at a time. In these instances, it is easy for data problems to remain “hidden”. Missing one test name may result in excluding all values of interest from a hospital for a period. Consistent use of LOINCs would ease the abstraction of test results and allow for increased standardization of labs utilized in retrospective data analysis. In this paper, we quantified this variability to help researchers understand the impact and provide additional insight into using EHR data for research and quality improvement purposes.

We focused our study on the capture of laboratory test results during the first 24 hours of admission to a VA hospital. It is possible that laboratory results are available outside of this period, and expanding this window may allow for increased capture of results. We focused on the first 24 hours, which are often used for risk-adjustment. We selected six common labs for examination; further study is needed to examine the variation in lab capture by site and over time for other laboratory tests. Finally, we included all acute care hospitalizations. It is possible that admissions for specific conditions would have different patterns of capture.

These findings are unlikely to be unique to the VA. Other multi-hospital systems that utilize EHRs for patient care will likely face similar challenges to creating structured data tables that can more easily and reliably be used for research and evaluation purposes. The desire for structure to allow easy research and organizational intelligence must be balanced against the need for rapid reporting in a clinical context. Scientific journals should consider requiring that authors report in some detail the process by which specific laboratory values were identified (usually in an Appendix), with some evidence of their completeness and specificity, as without this the scientific reproducibility and validity of data may be limited.

Although there are challenges associated with transforming EHR data into research-ready datasets, EHRs offer a large amount of clinically rich information that can be used to improve patient care and outcomes. As additional healthcare professionals, administrators, and clinical researchers seek to tap into the potential of EHRs to better understand performance measures, decision making, and patterns of patient care, new guidelines are also being offered to improve the standardization and structure of EHR data.4,9,10,11,17 Along with these increased recommendations, researchers will benefit from collaborating with other clinicians, data scientists, and informaticians to efficiently and effectively use EHR data.

Supplementary Material

Acknowledgments

Funding sources: This work was supported by VA Health Services Research & Development IIR #109. Dr. Prescott is supported in part by K08 GM115859. Dr. Waljee is supported in part by VA Health Services Research & Development IIR #16–024.

Footnotes

All authors participated in the preparation of the manuscript. This paper does not necessarily represent the position or policy of the US government or the Department of Veterans Affairs.

Conflicts of interest: None

References

- 1. Safran C, Bloomrosen M, Hammond WE, Labkoff S, Markel-Fox S, Tang PC, Detmer DE. Toward a national framework for the secondary use of health data: an American medical informatics association white paper. J Am Med Inform Assoc 2007; 14(1): 1–9. doi: 10.1197/jamia.M2273

- 2. Abhyankar S, Demner-Fushman D, McDonald CJ. Standardizing clinical laboratory data for secondary use. J Biomed Inform 2012; 45(4): 642–650. doi: 10.1016/j.jbi.2012.04.012

- 3. Overhage JM, Overhage LM. Sensible use of observational clinical data. Stat Methods Med Res 2011; 22(1): 7–13. doi: 10.1177/0962280211403598

- 4. Hripcsak G, Albers DJ. Next-generation phenotyping of electronic health records. J Am Med Inform Assoc 2013; 20: 117–121. doi: 10.1136/amiajnl-2012-001145

- 5. Bayley KB, Belnap T, Savitz L, Masica AL, Shah N, Fleming NS. Challenges in using electronic health record data for CER: Experience of 4 learning organizations and solutions applied. Med Care 2013; 51: S80–S86.

- 6. VA Information Resource Center. VIReC Resource Guide: VA Corporate Data Warehouse. Hines, IL: U.S. Dept. of Veterans Affairs, Health Services Research and Development Service, VA Information Resource Center, March 2012.

- 7. Gonsoulin Margaret. VIReC Factbook: Corporate Data Warehouse (CDW) Laboratory Chem Domain. Hines, IL: U.S. Department of Veterans Affairs, Health Services Research & Development Service, VA Information Resource Center; August 2017.

- 8. LOINC Website. https://loinc.org

- 9. Kharrazi H, Chi W, Chang HY, Richards TM, Gallagher JM, Knudson SM, Weiner JP. Comparing population-based risk-stratification model performance using demographic, diagnosis and medication data extracted from outpatient electronic health records versus administrative claims. Med Care 2017; 55(8): 789–796.

- 10. Hersh WR, Weiner MG, Embi PJ, Logan JR, Payne PRO, Bernstam EV, Lehmann HP, Hripcsak G, Hartzog TH, Cimino JJ, Saltz JH. Caveats for the use of operational electronic health record data in comparative effectiveness research. Med Care 2013; 51 (803): S30–S37. doi: 10.1097/MLR.0b013e31829b1dbd

- 11. Weiskopf NG, Weng C. Methods and dimensions of electronic health record data quality assessment: enabling reuse for clinical research. J Am Med Inform Assoc 2013; 20: 144–151. doi: 10.1136/amiajnl-2011-000681

- 12. Williams R, Kontopantelis E, Buchan I, Peek N. Clinical code set engineering for reusing EHR data for research: A review. J Biomed Inform 2017; 70: 1–13. doi: 10.1016/j.jbi.2017.04.010

- 13. Gulliford MC, Charlton J, Ashworth M, Rudd AG, Toschke AM, Delaney B, Grieve A, Heuschmann PU, Little P, Redfern J, van Staa T, Wolfe C, Yardley L, McDermott L. Selection of medical diagnostic codes for analysis of electronic patient records: application to stroke in a primary care database. PLoS ONE 4 (2009). doi: 10.1371/journal.pone.0007168

- 14. Vincent BM, Wiitala WL, Burns JA, Iwashyna TJ, Prescott HC. Using Veterans Affairs Corporate Data Warehouse to identify 30-day hospital readmissions. Health Serv Outcomes Res Method 2018. doi: 10.1007/s10742-018-0178-3

- 15. VHA Data Quality Program Laboratory Reports. VHA OIA Data Quality Program. http://vaww.vhadataportal.med.va.gov/Resources/DataReports.aspx. Last accessed 09/21/2017.

- 16. Render ML, Freyberg RW, Hasselbeck R, Hofer TP, Sales AE, Deddens J, Levesque O, Almenoff PL. Infrastructure for quality transformation: measurement and reporting in Veterans administration intensive care units. BMJ Qual Saf 2011; 20: 498–507. doi: 10.1136/bmjqs.2009.037218

- 17. Fridsma DB, Payne TH. AMIA response to FDA draft guidance on using EHR data in clinical investigations. July 18, 2016. Available at: https://www.amia.org/sites/default/files/AMIA-Response-to-FDA-Draft-Guidance-on-Using-EHR-Data-in-Clinical%20Investigations.pdf Last accessed September 27, 2017.
